Abstract

Object detection has become increasingly important in Internet-of-Things (IoT) systems, and ship detection is an essential application in this field. In low-illumination scenes, conventional ship detection algorithms often struggle because RGB video streams suffer from poor visibility and blurred details. To address this weakness, we construct the Lowship dataset and propose YOLO-SAR, a framework based on the You Only Look Once (YOLO) architecture. For ship detection under such challenging conditions, the main contributions of this work are as follows: (i) a low-illumination image-enhancement module that adaptively improves multi-scale feature perception in low-illumination scenes; (ii) receptive-field attention convolution, which compensates for weak long-range modeling; and (iii) an Adaptively Spatial Feature Fusion head that refines the multi-scale learning of ship features. Experiments show that our method achieves 92.9% precision and raises mAP@0.5 to 93.8%, outperforming mainstream approaches. These results demonstrate the practical value of our approach.

1. Introduction

With the steady advancement of deep learning, object detection built on this technology has made significant progress across a wide range of scenarios, and this momentum has extended to the specialized field of ship target detection [1]. Ship detection is central to intelligent shipping systems [2] and remains a core safeguard for canal operations and navigational safety. In real-world low-illumination scenes or at night [3], ship images often suffer from poor visibility and blurred details. Recent studies show that applying generic detectors directly to low-illumination ship images typically reduces accuracy because vessels appear faint [4]; in such images, many essential visual cues are missing. Achieving stable, high-precision ship detection under low-illumination conditions therefore remains a significant challenge [5].

To meet recognition demands in low-illumination scenes, researchers increasingly combine image enhancement techniques with network optimizations to improve detection performance. Wang Y.T. [6] incorporated RetinexFormer into the detection backbone to estimate illumination and reflectance maps, thereby improving brightness and contrast. Zhang Y.H. [7] combined Retinex enhancement with receptive-field modules in YOLOv4, achieving effective detection of maritime targets in foggy conditions. Building on Retinex and saliency theory, Pengcheng Hao [8] devised a low-light enhancement framework that fuses predicted salient regions with the enhanced image, boosting detection under dim lighting. Zhang et al. [9] proposed a lightweight CNN equipped with a CA-Ghost module and hybrid training, which strengthens shallow features and raises accuracy in foggy and nighttime scenes. Hong et al. [10] streamlined YOLOv4 by introducing residual blocks, easing network degradation and markedly improving detection in low-illumination scenes. Finally, Sen Li [11] used contrastive-learning pre-training to mitigate nighttime feature degradation without adding significant complexity. The methods in [6,7,8] highlight target cues through enhancement or fusion strategies; however, they have limited capacity for global modeling and do not rank feature importance, which leads to missed detections, and a missed vessel can become a navigational hazard. Common remedies include non-local attention mechanisms that strengthen long-range dependency modeling, dual-path channel-spatial attention that dynamically reweights feature maps, and multi-scale feature pyramid networks that mitigate cross-level semantic conflicts. The methods in [9,10,11] optimize network features for low-illumination scenes, yet semantic conflicts across feature levels remain unresolved.
In low-illumination scenes, the misalignment between high-level abstract representations and low-level contour cues prevents existing models from balancing multi-scale perception. Methods designed for other complex settings do adaptively weight textures and outlines for their specific contexts; however, the prior distributions and characteristics of multi-scale features in those settings differ substantially from those of low-light ship imagery, so these schemes lose their advantage here. In monocular scenes, high-level cues extracted by deep models can also conflict with low-level cues such as ship outlines, which further hampers ship detection in low-illumination scenes.

As smart maritime systems and intelligent shipping technologies continue to advance, object detection algorithms are increasingly required to demonstrate high adaptability in complex real-world environments—particularly regarding multi-scale feature representation and fine-grained perception of vessel targets. In nighttime or low-illumination scenarios, vessel imagery often suffers from insufficient luminance, degraded contrast, ambiguous boundaries, and loss of structural details, which collectively undermine the capability of conventional detection algorithms to effectively delineate object contours and morphology. Representative application domains include canal port surveillance, nighttime maritime security patrols, and maritime supervision under adverse weather conditions. The targets encompass heterogeneous vessel types—such as cargo ships, fishing vessels, and passenger ferries—which frequently exhibit visual challenges including large scale variation, partial occlusion, low-texture surfaces, and significant background–boundary fusion. These characteristics commonly result in performance degradation of existing detection models, manifesting as false negatives and misclassifications.

Although the YOLO series exhibits strong real-time performance and overall effectiveness, its capacity to extract and represent low-level features is significantly constrained under low-light conditions. These limitations often induce semantic inconsistencies and multi-scale feature imbalance during the fusion process, consequently impairing detection responsiveness and degrading boundary localization accuracy for maritime targets. To tackle these challenges, this paper proposes YOLO-SAR, a specialized low-light ship detection framework that integrates an expanded receptive field with an adaptive spatial feature fusion mechanism. Built upon YOLO11, the proposed architecture incorporates a SCINet module to improve input image quality and employs a cross-level perception structure coupled with a dynamic weighting fusion strategy, thereby enhancing detection precision and robustness under conditions involving low illumination, multiple targets, and scale variance. The major contributions of this study are as follows:

(1) A method for constructing a low-light ship dataset is proposed, and its effectiveness is validated through comparative experiments based on gray value and HSV color space analysis. This approach partially addresses the limitations of existing open-source datasets.

(2) To address the challenges of low-light ship detection, a dedicated framework named YOLO-SAR is proposed for inland river scenarios. This framework alleviates semantic conflicts and enhances multi-scale feature learning by integrating a Self-Calibrated Illumination Network, which recovers multi-scale feature details of ships in low-light images. Additionally, it introduces attentional convolution with expanded receptive fields to improve global context modeling and incorporates an adaptive spatial feature fusion strategy to overcome feature insufficiency. Together, these components significantly improve detection accuracy in such challenging environments.

(3) Extensive experiments on the self-constructed Lowship dataset and a real-world low-light vessel dataset demonstrate that YOLO-SAR achieves higher detection accuracy than existing methods, including YOLO11. This framework lays a solid foundation for robust vessel recognition and tracking in canal scenarios and contributes to the advancement of intelligent shipping systems.

The remainder of this paper is organized as follows: Section 2 reviews related work, focusing on advanced object-detection methods applied to ship detection and on the baseline YOLO11. Section 3 details the proposed YOLO-SAR framework. Section 4 presents extensive experiments validating its effectiveness, and Section 5 concludes this study and discusses future research directions.

2. Related Work

2.1. Related Research

Research on object detection is usually categorized into traditional pipelines and deep-learning-based algorithms [12]. Early approaches heavily relied on hand-crafted features: the Viola–Jones detector [13] employed a sliding window technique to scan the image for potential targets, while the Deformable Part Model [14] combined enhanced Histogram of Oriented Gradient features [15] with a Support Vector Machine classifier [16] and bounding-box regression [17]. These conventional pipelines require substantial computational resources and struggle to meet today’s demands for low-illumination scenes and real-time operation. Deep learning detectors can be broadly classified into two categories: two-stage and one-stage methods. Two-stage methods first generate region proposals and then perform classification and regression with a convolutional network. Classic examples include R-CNN [18] and Faster R-CNN [19]. One-stage methods make end-to-end predictions on the whole image, typified by the Single Shot MultiBox Detector [20] and You Only Look Once [21]. Two-stage approaches often yield higher accuracy but run slowly, whereas one-stage algorithms such as YOLO drastically improve real-time detection [22].

In recent years, many studies have applied the YOLO model family to ship detection. Shouwen Cai et al. [23] used YOLOv7 as their baseline and developed an end-to-end feature fusion and enhancement scheme that integrates channel attention with a spatial channel pooling block, strengthening the network's understanding of multi-scale cues. Sun X.R. et al. [24] refined YOLOv5 with a task-specific module designed to suppress conflicting information, which improves the detection of fine ship details. Yuan M. et al. [25] introduced an adaptive multi-scale YOLO variant combined with a multi-granularity adaptive feature enhancement module; grouped weighting and other adaptive mechanisms enrich detail extraction and fuse multi-scale features with contextual information, increasing applicability in dynamic maritime environments. Zhang Y. et al. [26] proposed YOLO-Ships, a lightweight yet effective method that upgrades the Ghost module to strengthen feature extraction and adds a ship-feature enhancement block, balancing compactness with detection accuracy.

For low-light conditions, existing YOLO models (such as YOLOv3, YOLOv4, YOLOv5, and YOLOv7) show notable detection capability but also several limitations. First, they often struggle to extract features in low light, particularly fine details in real-world scenes: low illumination increases image noise and reduces contrast, so critical features are lost and detection accuracy suffers. Second, their performance can be unstable in complex low-light scenarios. Third, many models cannot adapt to changes in lighting conditions, which calls for optimization that responds to diverse illumination. Finally, adding complex computations to raise accuracy can erode the real-time performance for which these models are valued. Collectively, the works above preserve YOLO's speed while boosting ship-detection capability through attention, custom modules, and multi-scale fusion, yet deficiencies remain in low-illumination scenes. Further architectural improvements are therefore warranted for such conditions.

Notably, this framework incorporates three key modules, SCINet, RFAConv, and ASFF, into the YOLO11 framework to enhance detection performance in low-light maritime scenarios. SCINet, initially proposed by Ma et al. [27], was designed for self-calibrated enhancement of low-illumination images. It has since been widely adopted in low-light object detection tasks. For instance, Jie Yang [28] integrated the Self-Calibrated Illumination Network (SCINet) into the early layers of the YOLOv8 backbone, effectively improving image contrast and revealing fine-grained details in dim environments. The residual mapping mechanism within SCINet facilitates the restoration of contrast and texture. However, its original architecture was tailored for static scenes and lacks the capability to model multi-scale target information, limiting its applicability in dynamic or complex environments. RFAConv, proposed by Zhang et al. [29], is a convolutional module designed to enhance spatial perceptual capacity. In the context of small-object detection in aerial remote sensing imagery, Zhao et al. [30] proposed an improved YOLO-RLDW framework by combining RFAConv with the C2f feature fusion module, thereby forming the C2f-RFA block. This integration significantly boosted the detection of multi-scale aerial targets in cluttered backgrounds, enhancing both robustness and accuracy. ASFF, introduced by Liu et al. [31], addresses the inconsistency of multi-scale feature representations by spatially filtering conflicting information. This mechanism improves the scale-invariance of features across different detection layers. Building upon this, Qiu et al. [32] developed ASFF-YOLOv5 by embedding a receptive-field-aware ASFF strategy, which demonstrated improved performance in multi-scale fusion and small object detection. 
However, its performance under low-light conditions remains constrained, particularly in aligning blurred contours and extracting low-contrast edge information—an inherent bottleneck in dim scenes. In light of the limitations in scene adaptability and architectural integration of these modules in their original implementations, this paper proposes a unified integration of SCINet, RFAConv, and ASFF within the YOLO11 framework. This fusion strategy simultaneously preserves model robustness and significantly enhances the detection of maritime targets with low texture and indistinct contours. Empirical results confirm the effectiveness of this structural design, demonstrating its superior performance in low-illumination environments.

2.2. YOLO11

Object detection algorithms play a critical role in the field of computer vision. As the requirements for object detection in real-world applications continue to increase, the limitations of conventional approaches that primarily rely on handcrafted features have become more apparent. Consequently, algorithms based on deep learning models are increasingly gaining prominence. Within this domain, the You Only Look Once (YOLO) frameworks stand out as a significant contribution. YOLO11, released by Ultralytics in September 2024, introduces several architectural optimizations and innovations that further enhance feature extraction and inference speed.

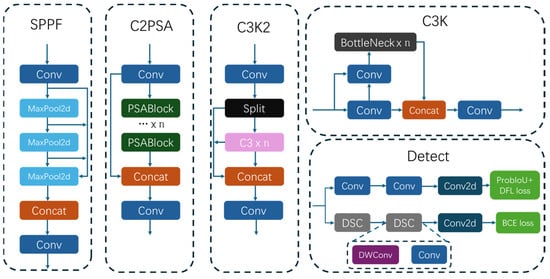

In the backbone and neck of the YOLO11 architecture, a novel C3K2 module is introduced [33]; it streamlines computation, reduces redundant parameters, and enhances feature representation. After the Spatial Pyramid Pooling Fast (SPPF) module [34], a Cross Stage Partial Self-Attention (C2PSA) block [35] is added to focus the model's attention on target regions and increase the granularity of feature extraction. In the head, YOLO11 adopts an anchor-free decoupled head, which separates box regression from classification. These modifications improve efficiency and allow the model to maintain high throughput even on high-resolution inputs.

Collectively, these enhancements give YOLO11 stronger real-time detection performance and make it a faster and more accurate solution for computer vision applications. The enhanced modules of YOLO11 are illustrated in Figure 1.

Figure 1.

YOLO11 optimized network module diagram.

3. Methods

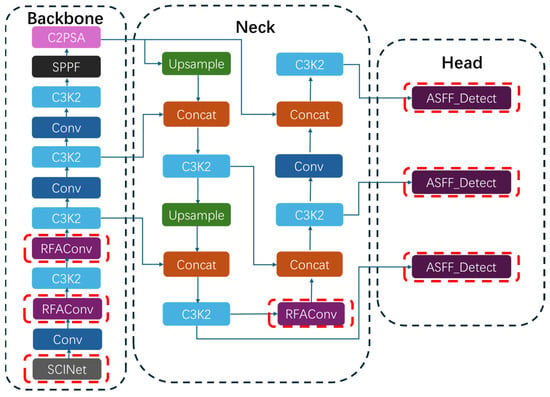

Built on the YOLO11 architecture, YOLO-SAR addresses key limitations of the baseline model by enhancing its backbone, neck, and detection head. The backbone integrates an illumination enhancement module (SCINet), multiple convolutional layers, and a Spatial Pyramid Pooling Fast (SPPF) module. SCINet uses a cascaded illumination learning process with weight sharing to improve visual clarity in low-light images, thereby recovering fine-grained, multi-scale ship features. Standard convolutions are replaced with the receptive-field attention convolution (RFAConv) module, which dynamically recalibrates feature weights within each receptive field to strengthen global context modeling and compensate for the limited capture of long-range dependencies. The SPPF module aggregates multi-scale context into a single output resolution, improving the detection of ships of varying sizes. In the neck, the model combines a Feature Pyramid Network (FPN) with a Path Aggregation Network (PANet) for hierarchical feature fusion. The backbone outputs are first channel-aligned and then refined by RFAConv, whose dynamic receptive-field attention enhances salient feature representations. Top-down and bottom-up fusion through upsampling and downsampling then integrates multi-scale features into robust fused representations, enhancing detection across scales. The detection head adopts the Adaptively Spatial Feature Fusion (ASFF) module, which dynamically assigns spatial attention weights to resolve semantic conflicts during cross-scale feature aggregation. By adaptively modulating the contribution of each layer, ASFF mitigates contour ambiguity and feature degradation in low-light conditions. Finally, a decoupled anchor-free head optimizes bounding box regression and object classification separately, improving both precision and robustness in challenging environments.
Collectively, these architectural enhancements strengthen the model's capability for reliable vessel detection in complex low-illumination scenarios.

In low-illumination ship detection, SCINet exploits pixel-level cues from low-light images: by learning illumination and calibrated feature representations, it extracts low-light characteristics and enables the detector to capture ship features more accurately. RFAConv then restores missing global context and focuses on edge (contour) representations, reducing the likelihood of missed detections of small ships. Finally, ASFF in the head adaptively fuses cross-scale cues, facilitating multi-scale learning and precise ship recognition. In summary, the improved YOLO11 framework consists of the SCINet, RFAConv, and ASFF modules: SCINet emphasizes low-contrast and low-saturation ship patterns, RFAConv strengthens context and contour cues, and ASFF dynamically reweights features across the cascade, tuning them and filtering out conflicting responses to ensure robust detection. The complete architecture is illustrated in Figure 2.

Figure 2.

Architecture of the YOLO-SAR network. The blue module (Conv) denotes standard convolution used for basic feature extraction; light blue (C3K2) for feature refinement; purple (C2PSA) for enhancing feature representations; black (SPPF) for multi-scale context modeling via spatial pyramid pooling; green (Upsample) for resolution recovery; and orange (Concat) for multi-layer feature fusion. The modules highlighted by red dashed rectangles—SCINet, RFAConv, and ASFF_Detect—are the key improvements proposed in this study.

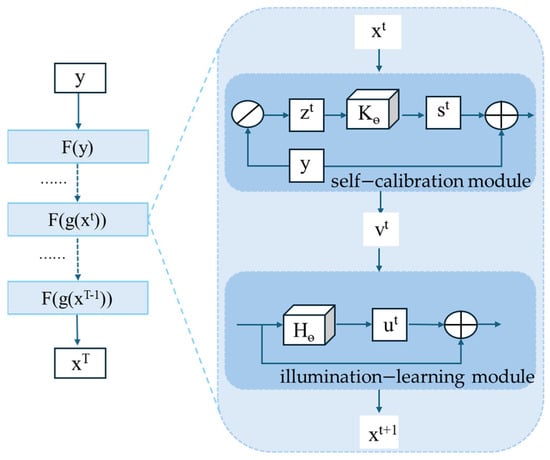

3.1. SCINet Module

To address the poor discriminability of multi-scale features and the significant spatio-temporal fragmentation in low-illumination scenarios, this framework introduces a Self-Calibrated Illumination Network (SCINet). SCINet is a deep learning model that integrates spatial and channel representations; it adaptively enhances multi-scale feature perception in low-illumination scenes characterized by low saturation, low contrast, and weak discriminability. Built from several convolutional and deconvolutional layers, SCINet consists of two main components, an illumination learning module and a self-calibration module, which are illustrated in Figure 3 and described below.

Figure 3.

SCINet module architecture.

The illumination learning module progressively refines the illumination estimate through residual learning: each stage learns only the residual between the current illumination estimate and the low-illumination input, which reduces computational cost while stabilizing model performance. Following the Retinex formulation, a low-illumination observation $y$ is modeled as the element-wise product of a clean image $z$ and its illumination map $x$, that is, $y = z \otimes x$. A learnable mapping then tracks illumination variations stage by stage, adapting dynamically to changing lighting and enabling precise image enhancement:

$$
\mathcal{F}(x^t):\quad u^t = \mathcal{H}_{\theta}(x^t), \qquad x^{t+1} = x^t + u^t, \qquad x^0 = y \tag{1}
$$

In Equation (1), $u^t$ and $x^t$ denote the residual term and the illumination at stage $t$, respectively, and $\mathcal{H}_{\theta}$ represents the learnable mapping function with parameters $\theta$.

For low-illumination observations, the self-calibration module constrains the output of each stage through an unsupervised training loss. It also reduces the model's computational cost and makes it adaptable to diverse scenarios. The module is defined in Equation (2):

$$
\mathcal{G}(x^t):\quad z^t = y \oslash x^t, \qquad s^t = \mathcal{K}_{\vartheta}(z^t), \qquad v^t = y + s^t \tag{2}
$$

In Equation (2), $\oslash$ denotes element-wise division, $z^t$ is the image estimated at stage $t$, $v^t$ denotes the calibrated input to the next stage, and $\mathcal{K}_{\vartheta}$ represents a learnable parametric operator. On this basis, the input to the illumination learning process becomes this calibrated output, and the basic unit of the illumination optimization process can be expressed as Equation (3):

$$
\mathcal{F}(x^t) \rightarrow \mathcal{F}(\mathcal{G}(x^t)):\quad u^t = \mathcal{H}_{\theta}(v^t), \qquad x^{t+1} = v^t + u^t \tag{3}
$$
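To make the stage-wise update concrete, the following is a minimal NumPy sketch of a cascaded, weight-shared illumination update with self-calibration, assuming the stage update $x^{t+1} = v^t + \mathcal{H}_{\theta}(v^t)$ with $v^t = y + \mathcal{K}_{\vartheta}(y \oslash x^t)$ and $x^0 = y$. It is not the authors' implementation: `h_theta` and `k_vartheta` are hypothetical fixed functions standing in for the learned convolutional operators, and images are assumed to be single-channel arrays scaled to [0, 1].

```python
import numpy as np

def h_theta(v):
    # Hypothetical stand-in for the learnable mapping H_theta (a small CNN in SCINet).
    # For v in [0, 1] it returns a non-negative residual that lifts dark values.
    return 0.5 * (np.sqrt(v) - v)

def k_vartheta(z):
    # Hypothetical stand-in for the learnable calibration operator K_vartheta.
    return 0.05 * (z - z.mean())

def illumination_unit(v):
    """Residual illumination learning: u = H_theta(v), x_next = v + u."""
    v = np.clip(v, 0.0, 1.0)
    u = h_theta(v)
    return np.clip(v + u, 1e-4, 1.0)   # keep the illumination map in (0, 1]

def self_calibrate(y, x_t):
    """Self-calibration: z = y / x, s = K(z), v = y + s."""
    z_t = y / x_t                      # x_t >= 1e-4, so the division is safe
    s_t = k_vartheta(z_t)
    return y + s_t                     # calibrated input for the next stage

def scinet_enhance(y, stages=3):
    """Cascade of weight-shared stages; returns (illumination x, enhanced image z)."""
    v_t = y                            # x^0 = y: the first stage sees the raw observation
    for _ in range(stages):
        x_next = illumination_unit(v_t)    # the same h_theta at every stage (weight sharing)
        v_t = self_calibrate(y, x_next)
    z = np.clip(y / x_next, 0.0, 1.0)      # Retinex: clean image z = y / x
    return x_next, z
```

Because the illumination estimate never exceeds 1 in this sketch, the recovered image $z = y \oslash x$ is never darker than the input, which mirrors the role of the illumination map in the Retinex model.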

3.2. RFAConv Module

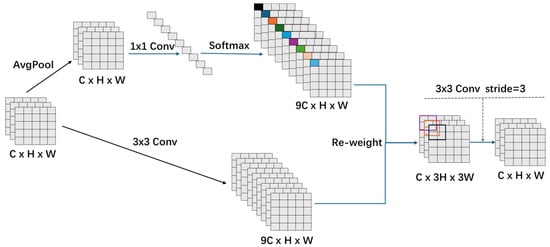

Standard convolutions use fixed, shared kernels that ignore both spatial heterogeneity and variations in feature importance, which leads to suboptimal feature extraction and limits the robustness of ship detection. To address these limitations, we introduce receptive-field attention convolution (RFAConv). Unlike standard convolution, RFAConv emphasizes spatial differences within each receptive field, which helps mitigate the shortcomings of conventional convolution in capturing long-range dependencies. It also adaptively adjusts weights across scales to enhance the extraction of key features, and it exploits dynamic interactions between receptive-field features and kernels, improving the model's overall detection accuracy. The architecture of the RFAConv module is illustrated in Figure 4.

Figure 4.

RFAConv module architecture.

RFAConv transforms spatial features into receptive-field features: for every sliding-window position, the values covered by the k × k kernel are expanded into a non-overlapping k × k patch, so each patch corresponds to one receptive field. Average pooling aggregates the features of each receptive field to capture global context, and a 1 × 1 convolution then enables interaction across receptive-field features, strengthening feature correlation and global expressiveness. A softmax function generates attention weights that reflect the importance of each feature within its receptive field. Multiplying these weights element-wise with the receptive-field features emphasizes salient cues and improves extraction effectiveness. A convolution maps the receptive-field features to the same shape as the attention map, and after the attention weights are applied, the result is rearranged and resized to yield the final RFAConv output.
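The data flow just described can be illustrated with a toy single-channel NumPy sketch. It assumes stride 1, zero padding, and k = 3, and it simplifies RFAConv: the learned group convolution of the feature branch is replaced by a plain unfold of each k × k neighborhood, and the 1 × 1 convolution of the attention branch is replaced by a per-position linear map with hypothetical parameters `att_w` and `att_b`; `kernel` is a hypothetical k × k weight for the final stride-k convolution.

```python
import numpy as np

def unfold_rf(x, k=3):
    """Collect the k*k receptive-field values around every pixel: shape (k*k, H, W)."""
    pad = k // 2
    xp = np.pad(x, pad, mode="constant")
    h, w = x.shape
    return np.stack([xp[i:i + h, j:j + w] for i in range(k) for j in range(k)])

def softmax(a, axis=0):
    a = a - a.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def rfaconv_single_channel(x, att_w, att_b, kernel, k=3):
    """Toy RFAConv: receptive-field attention followed by a k x k, stride-k convolution."""
    rf = unfold_rf(x, k)                              # receptive-field features, (k*k, H, W)
    pooled = rf.mean(axis=0)                          # k x k average pooling, (H, W)
    logits = att_w[:, None, None] * pooled + att_b[:, None, None]  # 1 -> k*k channels
    att = softmax(logits, axis=0)                     # weights sum to 1 in each receptive field
    weighted = att * rf                               # emphasize salient positions
    h, w = x.shape
    # Rearrange (k*k, H, W) into a (k*H, k*W) map in which each pixel becomes a k x k tile.
    tiles = weighted.reshape(k, k, h, w).transpose(2, 0, 3, 1).reshape(k * h, k * w)
    out = np.empty((h, w))
    for i in range(h):                                # stride-k convolution: one tile per output
        for j in range(w):
            out[i, j] = np.sum(tiles[i * k:(i + 1) * k, j * k:(j + 1) * k] * kernel)
    return out, att
```

With zero attention parameters the weights become uniform (1/k²) and the operator collapses to an ordinary convolution with the kernel scaled by 1/k². The position-dependent attention is therefore what gives RFAConv its non-shared, receptive-field-specific weights.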

RFAConv thus addresses two limitations of standard convolutions in convolutional neural networks, namely weights shared across positions and spatial insensitivity, by accounting for the significance of features within each receptive field. Because distinct attention weights are generated dynamically for each receptive field, RFAConv amounts to a convolution with non-shared weights, which improves feature extraction precision. Integrating these convolutional operations at the backbone, neck, and head levels enables the capture of multi-scale ship features, allowing the YOLO-SAR framework to detect vessels efficiently across varying scales. As a result, RFAConv improves the framework's ship recognition, detail comprehension, and perception capabilities without introducing substantial computational overhead or parameters.

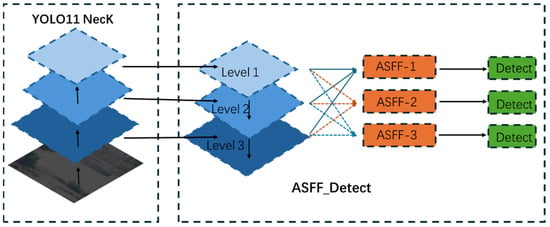

3.3. ASFF Module

In the YOLO11 network, conventional multi-scale feature fusion can cause conflicts across feature levels: when a target is labeled positive at one level, the same region may be treated as background at other levels, producing significant semantic gaps between scales. In ship detection under low-illumination conditions, poor lighting and occlusion frequently blur contours, exacerbate scale differences, and obscure critical features. To address these challenges, we incorporate an Adaptively Spatial Feature Fusion (ASFF) strategy in the detection head. ASFF first resizes the features of all levels to a common resolution and then learns spatially adaptive fusion weights that mitigate inter-level semantic conflicts and filter out conflicting responses that would destabilize backpropagation, thereby resolving scale inconsistencies and allowing each level to contribute its strengths. The architecture of the ASFF module is illustrated in Figure 5.

Figure 5.

ASFF module architecture.

The ASFF module contains Level 1, Level 2, and Level 3 branches, which implement fusion at three different layers and scales. Level 1 emphasizes the finest scale and uses high-resolution feature maps to capture the intricate details of small objects. Level 2 operates at medium resolution to identify medium-sized targets at low computational cost. Level 3 concentrates on the coarsest scale, using the lowest-resolution feature maps to delineate entire large objects. Within ASFF, the fusion weights adapt to reflect each level's contribution and capture the spatial relationships among multi-scale features, and a spatial filtering mechanism suppresses mismatched responses, making feature fusion more effective. The final fusion multiplies each resized feature map by one of three spatial weight maps, $\alpha^{l}$, $\beta^{l}$, and $\gamma^{l}$, which reflect its importance at each position. The computation is given in Equation (4):

$$
y_{ij}^{l} = \alpha_{ij}^{l} \cdot x_{ij}^{1 \rightarrow l} + \beta_{ij}^{l} \cdot x_{ij}^{2 \rightarrow l} + \gamma_{ij}^{l} \cdot x_{ij}^{3 \rightarrow l} \tag{4}
$$

Here, $y_{ij}^{l}$ denotes the new feature map produced by the ASFF module at level $l$, and $x_{ij}^{n \rightarrow l}$ represents the feature vector at position $(i, j)$ that is resized from level $n$ to level $l$. The spatial weights $\alpha_{ij}^{l}, \beta_{ij}^{l}, \gamma_{ij}^{l} \in [0, 1]$ are assigned to position $(i, j)$ on level $l$ and satisfy $\alpha_{ij}^{l} + \beta_{ij}^{l} + \gamma_{ij}^{l} = 1$. They are obtained with a softmax over control parameters $\lambda_{\alpha,ij}^{l}$, $\lambda_{\beta,ij}^{l}$, and $\lambda_{\gamma,ij}^{l}$, which are computed from the resized feature maps by 1 × 1 convolutions, as shown in Equation (5):

$$
\alpha_{ij}^{l} = \frac{e^{\lambda_{\alpha,ij}^{l}}}{e^{\lambda_{\alpha,ij}^{l}} + e^{\lambda_{\beta,ij}^{l}} + e^{\lambda_{\gamma,ij}^{l}}} \tag{5}
$$

with $\beta_{ij}^{l}$ and $\gamma_{ij}^{l}$ defined analogously.
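As an illustration of Equations (4) and (5), the following NumPy sketch fuses three feature maps at the medium level (Level 2). It is a simplified stand-in rather than the ASFF_Detect implementation: nearest-neighbor repetition and strided slicing are hypothetical substitutes for ASFF's learned upsampling and downsampling layers, and `lambda_map` is a hypothetical 1 × 1 convolution (a channel-weighted sum) that produces each control map λ. Feature maps are assumed to have shape (C, H, W).

```python
import numpy as np

def upsample_nearest(x, f):
    """Nearest-neighbor upsampling of a (C, H, W) map by an integer factor f."""
    return x.repeat(f, axis=1).repeat(f, axis=2)

def downsample_stride(x, f):
    """Strided subsampling (a stand-in for ASFF's stride-2 convolutions)."""
    return x[:, ::f, ::f]

def lambda_map(x, w):
    """Hypothetical 1x1 convolution to one channel: channel-weighted sum, (H, W)."""
    return np.tensordot(w, x, axes=(0, 0))

def asff_fuse(x1, x2, x3, lam_a, lam_b, lam_g):
    """Eq. (5): softmax over the three control maps; Eq. (4): weighted sum of the maps."""
    logits = np.stack([lam_a, lam_b, lam_g])            # (3, H, W)
    logits = logits - logits.max(axis=0, keepdims=True) # numerical stability
    w = np.exp(logits)
    w = w / w.sum(axis=0, keepdims=True)                # alpha + beta + gamma = 1 at each (i, j)
    alpha, beta, gamma = w
    # Each spatial weight map is broadcast over the channel axis.
    y = alpha[None] * x1 + beta[None] * x2 + gamma[None] * x3
    return y, (alpha, beta, gamma)
```

A usage example at Level 2 (all shapes are illustrative): with `f1` of shape (4, 8, 8), `f2` of shape (4, 4, 4), and `f3` of shape (4, 2, 2), the inputs `downsample_stride(f1, 2)`, `f2`, and `upsample_nearest(f3, 2)` all have shape (4, 4, 4) and can be passed to `asff_fuse`. When all control maps are equal, the fusion reduces to a plain average of the three levels. Learned, position-dependent λ values let each location favor the level whose features are most reliable there.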

The adaptive spatial feature fusion head described above effectively alleviates the feature loss that often arises during cross-scale fusion. This mechanism enriches the core representations across network levels and markedly improves the model's robustness across diverse detection scenarios.

4. Results

4.1. Dataset Preprocessing

Due to the limited availability of low-light ship datasets, this study uses images captured independently in the Yujiang River Basin, Guilin, Guangxi, with a Hikvision DS-2DC7423IW-AE surveillance camera. All images were recorded at 1920 × 1080 resolution in H.264 format, with a fixed shutter speed of 1/1000 s and automatic ISO. To enrich data diversity, additional samples were selected from the publicly available SeaShips dataset. The collected and selected images primarily depict open inland waterways and feature vessels with varying viewing angles, scales, and background complexities. The vessels fall into five types: bulk carriers, container ships, fishing vessels, ore carriers, and passenger ships. Because all of these images were captured under normal lighting (several examples are shown in Figure 6), a low-light simulation method is proposed to transform them into corresponding low-illumination scenes. The construction pipeline of the resulting low-light dataset is described below.

Figure 6.

Selected sample images (samples of various categories of vessels under normal daylight conditions in open inland waterways).

To simulate the underexposure of sensors in low-illumination scenes, each pixel is multiplied by a randomly sampled exposure coefficient $\alpha_{\text{exposure}} \in [0.1, 0.4]$, which darkens the image while retaining visible details. Gaussian sensor noise is then added to the exposure-adjusted image to simulate the additional quality degradation caused by hardware limitations. The Gaussian noise model uses an intensity of $\sigma_{\text{sensor}} = 0.03$, meaning that the noise amounts to 3% of the 0 to 255 gray-level range (about 7.65 gray levels).

Next, gamma correction is applied to reshape the luminance distribution curve. This correction models the nonlinear response of sensors in low-illumination environments, with the gamma value $\gamma$ drawn randomly from [1.0, 1.5]. The computation is given in Equation (6):

$$
I_{\text{out}} = 255 \left( \frac{I_{\text{in}}}{255} \right)^{\gamma} \tag{6}
$$

where $I_{\text{in}}$ and $I_{\text{out}}$ denote the pixel values before and after correction, respectively. The image contrast is then adjusted slightly to emulate the compressed dynamic range typical of low-illumination scenes; the contrast coefficient must be kept within a reasonable range to avoid unnaturally strong or weak contrast [36]. Finally, to enhance nighttime realism, a mild tone adjustment decreases the intensity of the red channel and increases that of the blue channel, replicating the cool tint commonly observed in nocturnal images [37].
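The five degradation steps can be chained as in the following NumPy sketch. Only the exposure range, the 3% noise level, and the gamma range come from the text; the sketch assumes an RGB channel order (OpenCV loads images as BGR), interprets the noise intensity as a standard deviation, and uses a contrast coefficient in [0.8, 1.0] and tint factors of 0.9 (red) and 1.1 (blue), which are hypothetical values because the paper does not state them.

```python
import numpy as np

def simulate_low_light(img, rng):
    """Degrade an HxWx3 uint8 RGB image into a low-light version (uint8)."""
    x = img.astype(np.float64)
    # 1) Underexposure: scale every pixel by a random exposure factor in [0.1, 0.4].
    x = x * rng.uniform(0.1, 0.4)
    # 2) Gaussian sensor noise, std = 3% of the 0-255 range (about 7.65 gray levels).
    x = x + rng.normal(0.0, 0.03 * 255.0, size=x.shape)
    x = np.clip(x, 0.0, 255.0)
    # 3) Gamma correction, Eq. (6), with gamma in [1.0, 1.5] (gamma >= 1 darkens).
    gamma = rng.uniform(1.0, 1.5)
    x = 255.0 * (x / 255.0) ** gamma
    # 4) Mild contrast compression around the mean (hypothetical coefficient range).
    c = rng.uniform(0.8, 1.0)
    mean = x.mean()
    x = mean + c * (x - mean)
    # 5) Cool night tint: weaken red, strengthen blue (hypothetical factors).
    x[..., 0] *= 0.9
    x[..., 2] *= 1.1
    return np.clip(x, 0.0, 255.0).astype(np.uint8)
```

Passing an explicit `numpy.random.Generator` (for example `np.random.default_rng(seed)`) makes the random exposure, noise, gamma, and contrast draws reproducible for each image.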

After this multi-stage processing, images captured under normal lighting exhibit the hallmarks of low-illumination scenes: reduced brightness, higher noise levels, weaker contrast, and a slight color cast. These operations approximate a sensor's response to external light in low-illumination scenes, bringing the images closer to real-world capture conditions.

The same procedure is used to create a low-illumination ship dataset called Lowship, which contains 12,606 images belonging to a single class. The dataset is randomly split at a ratio of 8:2 into 10,084 training images and 2,522 validation images. Several samples from the dataset are presented in Figure 7.
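A random 8:2 split that reproduces the reported counts can be written with the standard library alone. This is a sketch, not the authors' script: the seed is a hypothetical choice, and rounding the training count down (int(12,606 × 0.8) = 10,084) is an assumption that happens to match the reported numbers.

```python
import random

def split_dataset(items, train_ratio=0.8, seed=42):
    """Shuffle a copy of `items` and split it into (train, val) lists."""
    items = list(items)
    random.Random(seed).shuffle(items)   # seeded shuffle for a reproducible split
    n_train = int(len(items) * train_ratio)
    return items[:n_train], items[n_train:]
```

For example, `split_dataset(range(12606))` returns 10,084 training items and 2,522 validation items with no overlap.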

Figure 7.

Sample images from the Lowship dataset (the images obtained from Figure 6 after preprocessing).

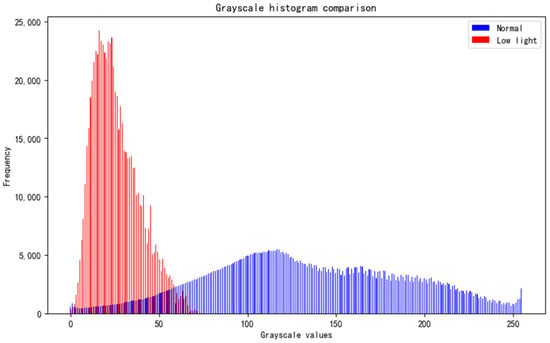

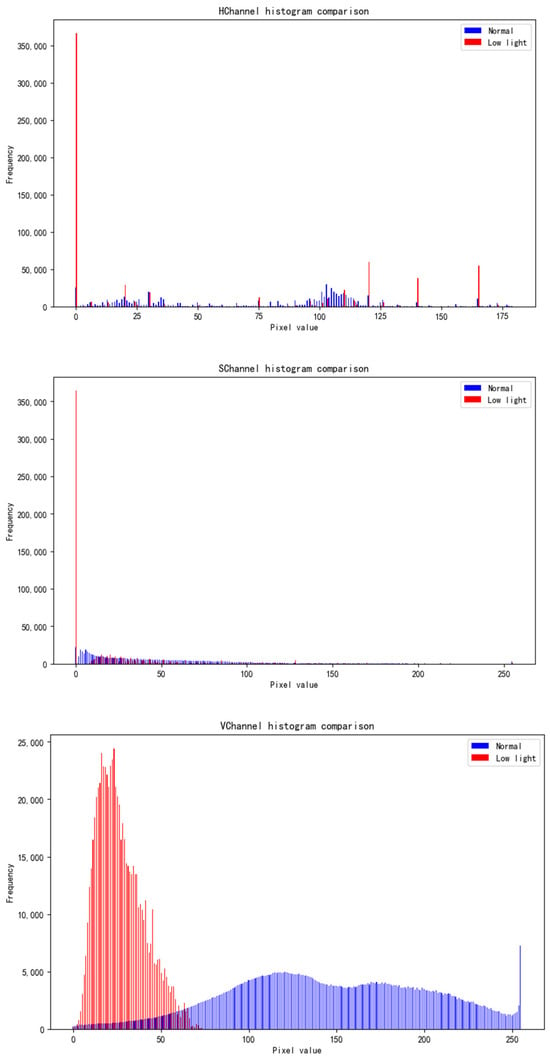

To evaluate the proposed dataset-preprocessing strategy, we compare the original dataset with the low-illumination ship dataset generated by this method in the grayscale and HSV color spaces. Grayscale values and the three HSV channels, hue (H), saturation (S), and value (V), are extracted from the ship images in both datasets and plotted as histograms.

As shown in Figure 8, the grayscale values of images captured under normal lighting are spread evenly across the higher range, whereas those of the low-illumination images shift toward lower values, indicating that preprocessing reduces brightness.

Figure 8.

Comparison of grayscale histograms under normal light and low light.

Figure 9 illustrates a comparison of histograms for pixel distributions across the HSV channels under normal lighting and low-illumination scenes. In the H channel (hue), the hue values of low-illumination images cluster in the lower range, with most frequencies approaching zero. By contrast, the hue distribution in the original dataset under normal lighting is more uniform. The histogram of the S channel (saturation) shows that low-illumination images possess lower saturation compared to images captured under normal lighting. In the V channel (value), it is evident that the brightness values of low-illumination images predominantly fall within the lower range, further confirming the decrease in luminance.

Figure 9.

Comparison of pixel distribution histograms of HSV color gamut under normal light and low light.

This analysis shows that the processed dataset differs markedly from the original dataset in brightness, hue, saturation, and grayscale characteristics, supporting the effectiveness of the preprocessing strategy in simulating low-illumination environments.
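The histogram comparison can be sketched in NumPy as follows. This is an illustrative helper, not the authors' analysis script. It assumes RGB `uint8` input, uses the ITU-R BT.601 luma weights (0.299, 0.587, 0.114) for grayscale, and uses the standard HSV definitions V = max(R, G, B) and S = (max − min) / max rescaled to 0 to 255. Hue is omitted for brevity, and the bin count of 32 is a hypothetical choice.

```python
import numpy as np


def brightness_histograms(img, bins=32):
    """Normalized histograms of grayscale, HSV saturation (S), and HSV value (V)."""
    rgb = img.astype(np.float64)
    gray = rgb @ np.array([0.299, 0.587, 0.114])  # BT.601 luma, shape (H, W)
    v = rgb.max(axis=-1)                          # HSV value = max(R, G, B)
    mn = rgb.min(axis=-1)
    # HSV saturation = (max - min) / max, defined as 0 for black pixels, scaled to 0..255.
    s = np.divide(v - mn, v, out=np.zeros_like(v), where=v > 0) * 255.0
    hists = {}
    for name, channel in (("gray", gray), ("S", s), ("V", v)):
        counts, _ = np.histogram(channel, bins=bins, range=(0.0, 256.0))
        hists[name] = counts / counts.sum()
    return hists


normal = np.full((8, 8, 3), 180, dtype=np.uint8)
dark = normal // 4
for label, image in (("normal", normal), ("dark", dark)):
    h = brightness_histograms(image)
    # Share of pixels whose V value falls in the lower half of the 0..255 range.
    print(label, h["V"][:16].sum())
```

A shift of histogram mass into the lower bins of the grayscale and V channels corresponds to the brightness reduction visible in Figures 8 and 9.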

4.2. Evaluation Metrics

To objectively evaluate the detection framework's performance, we use precision (P), recall (R), mean average precision (mAP), giga floating-point operations (GFLOPs), frames per second (FPS), and the number of parameters as evaluation metrics.

Precision (P) is the ratio of correct detections to the total number of detections; for ship detection, it is the number of correctly identified ships divided by the total number of predicted bounding boxes. Recall (R) is the ratio of correctly detected objects to the actual number of objects, that is, the number of correctly detected ships divided by the ground-truth ship count. Higher precision indicates fewer false detections, while higher recall indicates fewer missed targets. The exact computations are provided in Equations (7) and (8).
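The standard definitions P = TP / (TP + FP) and R = TP / (TP + FN) can be illustrated with the following sketch. It assumes a common matching convention that the text does not specify: boxes are (x1, y1, x2, y2), predictions are matched greedily in descending confidence order, a match requires IoU ≥ 0.5, and each ground-truth box can be matched only once. The function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, iou_thr=0.5):
    """Single-class P and R. preds: list of (score, box); gts: list of boxes.

    Predictions are matched greedily in descending score order; each ground-truth
    box can be matched at most once, so duplicate boxes count as false positives.
    """
    matched, tp = set(), 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = None, iou_thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if j not in matched and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    fp, fn = len(preds) - tp, len(gts) - tp
    precision = tp / (tp + fp) if preds else 0.0  # TP / (TP + FP)
    recall = tp / (tp + fn) if gts else 0.0       # TP / (TP + FN)
    return precision, recall


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31)), (0.3, (50, 50, 60, 60))]
print(precision_recall(preds, gts))  # (0.6666666666666666, 1.0)
```

Here two of the three predictions overlap a ground-truth ship (the second with IoU 81/119 ≈ 0.68), so P = 2/3 and R = 1.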

Average precision (AP) is the area under the precision–recall curve, and mean average precision (mAP) is the mean of AP over all classes. mAP combines precision and recall into a single metric and is the most widely used measure in object detection; a higher mAP indicates better detection accuracy. mAP@0.5 denotes mAP at an IoU threshold of 0.5, and mAP@0.5:0.95 denotes mAP averaged over IoU thresholds from 0.5 to 0.95 in increments of 0.05. These two metrics evaluate performance at different levels of localization strictness, with higher values indicating better detection capability. Detailed formulations of these metrics can be found in Equations (9) and (10).
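AP and the two mAP variants can be sketched as follows. This sketch uses all-point interpolation, which takes the area under the monotone (right-to-left maximum) envelope of the precision–recall curve. Some toolkits, such as COCO-style evaluation, instead sample 101 recall points, so reported values may differ slightly, and the text does not state which variant was used. Because Lowship has a single class, mAP equals AP here. The function names and the `ap_at_iou` callable are hypothetical.

```python
def average_precision(scores, is_tp, n_gt):
    """All-point interpolated AP for one class.

    scores: confidence of each detection; is_tp: whether each detection matched a
    ground-truth box at the chosen IoU threshold; n_gt: number of ground-truth boxes.
    """
    if n_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    rec, prec = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        rec.append(tp / n_gt)
        prec.append(tp / (tp + fp))
    mrec = [0.0] + rec + [1.0]
    mpre = [0.0] + prec + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1] for k in range(len(mrec) - 1))


def map_50_95(ap_at_iou):
    """Average AP over IoU thresholds 0.50, 0.55, ..., 0.95 (ten thresholds)."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(ap_at_iou(t) for t in thresholds) / len(thresholds)


print(average_precision([0.9, 0.8, 0.7], [True, False, True], n_gt=2))
```

For this toy input, recall reaches 0.5 at precision 1 and then 1.0 at precision 2/3, giving AP = 0.5 × 1 + 0.5 × 2/3 = 5/6.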

4.3. Comparative Experiments

All comparisons in this paper are absolute differences, reported in percentage points. The experiments were run on 64-bit Windows 11 with Python 3.9, PyTorch 2.5.0, CUDA 12.1, and an RTX 3070 GPU with 8 GB of VRAM. The model was trained from scratch with early stopping: training was halted when validation performance ceased to improve, which helps reduce overfitting.
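Patience-based early stopping, as described above, can be sketched as follows. The paper does not report the monitored metric or the patience value. This sketch assumes a higher-is-better validation metric such as mAP, and the class name, `patience=3`, and the metric history are hypothetical values chosen only for the demonstration.

```python
class EarlyStopping:
    """Stop when the validation metric has not improved for `patience` epochs."""

    def __init__(self, patience, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("-inf")
        self.best_epoch = 0

    def step(self, epoch, metric):
        """Record this epoch's metric (higher is better); return True to stop."""
        if metric > self.best + self.min_delta:
            self.best, self.best_epoch = metric, epoch
        return epoch - self.best_epoch >= self.patience


stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, val_map in enumerate([0.60, 0.70, 0.72, 0.71, 0.72, 0.70]):
    if stopper.step(epoch, val_map):
        stopped_at = epoch
        break
print(stopped_at, stopper.best, stopper.best_epoch)  # 5 0.72 2
```

Training stops three epochs after the best score (epoch 2), and the checkpoint from that epoch would normally be kept.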

To compare the proposed YOLO-SAR low-illumination ship-detection framework with existing methods, we benchmarked it on the Lowship dataset against other YOLO variants and several representative object-detection models. The experimental results for all key metrics are presented in Table 1.

Table 1.

Comparison results for the Lowship dataset.

The experimental results show that YOLO-SAR outperforms the compared methods across multiple evaluation metrics, indicating improved robustness and accuracy in low-light object detection. Earlier detectors such as Faster R-CNN and SSD are less effective under low-illumination conditions because their convolutional backbones struggle to capture weak textures and low-contrast features. These methods are highly sensitive to illumination degradation and loss of edge information, which often produces false negatives and false positives when images are blurred or poorly lit, making them poorly suited to low-light ship detection. Although YOLOv3-tiny achieves very high inference speed, its lightweight architecture limits its feature representation capability and lacks an effective multi-scale feature perception mechanism, which degrades performance on small or low-texture targets. Compared with YOLOv3-tiny, YOLO-SAR improves precision by 11.1, recall by 10.2, and mAP@0.5 by 9.3 percentage points, highlighting its ability to maintain high detection performance under complex low-light conditions.

Although recent mainstream YOLO models such as YOLOv5, YOLOv8n, YOLOv9t, and YOLOv10n achieve a good trade-off between accuracy and speed under general lighting conditions, their architectures are not specifically optimized for the characteristics of low-light imagery. In particular, they show clear limitations in shallow-level edge representation and cross-scale semantic alignment. Most of these models use static feature aggregation, which cannot adjust multi-scale feature weights according to image content; as a result, they struggle with the scale-level semantic inconsistencies prevalent in low-light environments. Under identical testing conditions, YOLO-SAR outperforms these models by 3.2, 2.6, 4.7, and 3.5 percentage points in mAP@0.5, respectively, demonstrating superior overall performance in low-light object detection. Although the added modules introduce some computational overhead, YOLO-SAR maintains an inference speed of 51.7 FPS (about 19.3 ms per frame), which keeps its real-time performance within acceptable limits for many practical applications.

To further evaluate the generalization capability and practical applicability of YOLO-SAR under varying low-light conditions, we collected a real-world ship image dataset at dusk in the Yujiang River Basin in Guilin, Guangxi. The dataset consists of 8529 images captured in representative inland open-water scenes. The ship targets vary in viewpoint, scale, and background complexity, and include representative vessel classes such as cargo ships and ore carriers. Because the images were acquired under dusk lighting, they approximate real nighttime application environments. The data acquisition setup and parameter configurations are consistent with those used for the Lowship dataset. Example images are provided in Figure 10.

Figure 10.

Sample images from the real dataset (multi-category vessel samples captured in the evening from the real-world ship image dataset collected in the open waters of the Yu River, Guilin, Guangxi, China).

The experiments used the same hardware platform and hyperparameter configuration as the main experiment. The real-world dataset was split in an 8:2 ratio into a training set (6823 images) and a validation set (1706 images). All baseline models were trained from scratch with consistent evaluation and testing procedures.

The experimental results on the real-world dataset are summarized in Table 2. YOLO-SAR improves all key detection metrics, achieving a precision of 96.4%, a recall of 93.6%, and an mAP@0.5 of 94.5%, and outperforms state-of-the-art detectors such as YOLOv5, YOLOv8n, and YOLOv10n. These results indicate its robustness and generalization capability under real-world low-light conditions. By combining an image enhancement module, a receptive-field attention mechanism, and a multi-scale feature fusion strategy, YOLO-SAR perceives low-texture targets better, detects fine-grained details more reliably, and adapts to complex environments, making it a promising candidate for nighttime maritime surveillance in challenging inland waterway scenarios.

Table 2.

Comparison results for the real dataset.

4.4. Ablation Experiments

Ablation experiments were conducted on the Lowship dataset using the YOLO11n model as the baseline to assess each module’s effectiveness in ship detection tasks. All ablation experiments employed consistent hyperparameter settings, and the results are summarized in Table 3.

Table 3.

The ablation experiment results for the Lowship dataset.

As shown in Table 3, each individual modification improves detection accuracy. Compared with the YOLO11n baseline, integrating the Self-Calibrated Illumination Network (SCINet) increases mAP@0.5 by 0.8 percentage points, as the cascaded low-illumination enhancement mitigates multi-scale perception deficits and uneven feature contrast. Introducing receptive-field attention convolution (RFAConv) alone increases mAP@0.5 by 0.6 percentage points, indicating that the model captures global context more effectively and adaptively reweights multi-scale features. Incorporating the Adaptively Spatial Feature Fusion (ASFF) module in the head yields a 1.1 percentage-point increase in mAP@0.5, reflecting its capacity to fuse multi-scale features adaptively and balance information across levels through learnable weights. While all three modifications improve detection performance, each also increases the parameter count and computational overhead.
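The core of ASFF's learnable weighting can be sketched as follows: at each spatial position, one logit per pyramid level is turned into a softmax weight, and the weighted level features are summed. This NumPy sketch simplifies the original design. It assumes the level features have already been resized to the target resolution, and it stands in a single per-level channel weight vector (a 1×1 convolution without bias) for the learned layers that produce the weight maps. The function name and argument layout are hypothetical.

```python
import numpy as np


def asff_fuse(features, logit_weights):
    """Fuse L same-shape feature maps (C, H, W) with per-pixel softmax weights.

    features: list of L arrays already resized to the target level's resolution.
    logit_weights: list of L vectors of shape (C,), standing in for learnable
    1x1 convolutions that map each level to a one-channel weight-logit map.
    """
    # One (H, W) logit map per level -> stacked shape (L, H, W).
    logits = np.stack([np.tensordot(w, f, axes=([0], [0]))
                       for w, f in zip(logit_weights, features)])
    logits -= logits.max(axis=0, keepdims=True)  # numerical stability
    alpha = np.exp(logits)
    alpha /= alpha.sum(axis=0, keepdims=True)    # softmax over levels at each pixel
    fused = sum(a[None] * f for a, f in zip(alpha, features))  # shape (C, H, W)
    return fused, alpha


rng = np.random.default_rng(0)
feats = [rng.normal(size=(4, 5, 5)) for _ in range(3)]
weights = [rng.normal(size=4) for _ in range(3)]
fused, alpha = asff_fuse(feats, weights)
print(fused.shape, alpha.shape)  # (4, 5, 5) (3, 5, 5)
```

Because the weights at every pixel sum to 1, each position can draw mainly on whichever level carries the most useful information there, which is what allows ASFF to resolve conflicts between levels instead of using a fixed fusion rule.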

The combined-module experiments show that merging the SCINet and RFAConv modules improves mAP@0.5 by 1.5 and mAP@0.5:0.95 by 1.6 percentage points, further enhancing detection performance compared with single-module upgrades. Combining the SCINet module with the ASFF module increases mAP@0.5 by 2.0 percentage points. Finally, integrating all three enhancements improves precision, recall, mAP@0.5, and mAP@0.5:0.95 by 2.6, 3.8, 2.7, and 2.4 percentage points, respectively. These findings confirm that the full YOLO-SAR framework achieves a notable gain in detection accuracy.

4.5. Data Visualization Analysis

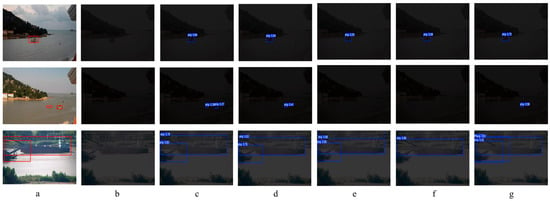

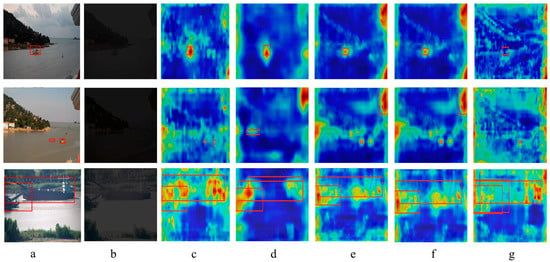

To visually assess YOLO-SAR's detection performance, we selected representative images from the Lowship dataset and compared YOLO-SAR with YOLOv3-tiny, YOLOv5, YOLOv9t, YOLOv10n, and YOLO11n. The detection comparison is shown in Figure 11. Heatmaps are also used to highlight the feature-map regions each algorithm attends to; the blue-to-red gradient indicates attention level, from lowest (deep blue) to highest (deep red). The heatmap results are presented in Figure 12.

Figure 11.

The detection comparison: (a) ship image with normal lighting and ground-truth labels; (b) low-illumination image; (c) YOLO-SAR; (d) YOLOv3-tiny; (e) YOLOv5; (f) YOLOv10n; (g) YOLO11n.

Figure 12.

Heatmap comparison results: (a) ship image with normal lighting and ground-truth labels; (b) low-illumination image; (c) YOLO-SAR; (d) YOLOv3-tiny; (e) YOLOv5; (f) YOLOv10n; (g) YOLO11n.

As the detection results in Figure 11 show, YOLO-SAR identifies all ship targets in the images. It reduces missed detections by strengthening long-range relationship modeling and recognizes occluded ships accurately through adaptive weighting of ship features. YOLOv9t and YOLOv10n fail to detect small ships in the second image, mainly because target features are lost under low illumination. In the third image, which contains occluded ships, all algorithms except YOLO-SAR perform poorly, producing redundant detection boxes and missing targets; this deficiency likely stems from multi-level feature conflicts that arise under occlusion. These findings indicate that YOLO-SAR performs strongly in complex environments. The heatmaps in Figure 12 illustrate its advantages more clearly: with a wider receptive field, YOLO-SAR locates ship targets accurately and misses fewer of them. Furthermore, its adaptive spatial feature fusion mechanism facilitates interaction between high-level semantics and low-level contours, improving overall detection accuracy.

5. Conclusions and Future Directions

Ship detection in low-light environments faces challenges such as degraded target features and limited image contrast, which significantly hinder the deployment of existing detection algorithms. To address them, this paper proposes YOLO-SAR, an enhanced detection framework for low-light ship imagery. Extensive evaluations on both a custom-built dataset and a real-world dataset demonstrate its effectiveness and practical potential under challenging illumination conditions.

Because low-light ship imagery is scarce, this paper presents a preprocessing approach based on image degradation modeling and introduces the Lowship dataset. The method simulates realistic nighttime conditions through exposure suppression, noise injection, gamma correction, contrast adjustment, and tone adjustment, and its effectiveness is validated quantitatively by comparing grayscale and HSV histograms. Architecturally, YOLO-SAR integrates the SCINet module to enhance perceptual image quality, incorporates receptive-field attention convolution (RFAConv) to strengthen global contextual representation, and adopts an adaptive spatial feature fusion (ASFF) mechanism in the detection head to address semantic inconsistencies across feature hierarchies.

Comparative experiments on the Lowship dataset show that YOLO-SAR substantially improves detection performance, surpassing YOLOv5, YOLOv8n, YOLOv9t, and YOLOv10n by 3.2, 2.6, 4.7, and 3.5 percentage points in mAP@0.5, respectively. Ablation studies confirm the individual contribution of each module and the cumulative benefit of the full architecture. To assess real-world applicability and cross-domain generalization, a low-light ship image dataset was collected in the Yujiang River Basin in Guilin, Guangxi. YOLO-SAR also achieves state-of-the-art performance on this real-world dataset, demonstrating strong generalization and promising potential for practical deployment.

Although YOLO-SAR achieves notable gains in low-light object detection, several limitations remain for real-world applications. First, under extreme darkness, complex occlusion, or severe noise, the model still produces false negatives and false positives, which compromises detection robustness. Second, its computational cost, including a substantial parameter count and inference latency, restricts deployment on resource-constrained embedded or edge platforms. Third, although the proposed low-light preprocessing method can generate synthetic datasets and has been validated initially through grayscale and HSV comparisons, it lacks a systematic analysis of parameter configurations and a comprehensive quantitative evaluation of its effectiveness.

To address these limitations, future work will focus on the following directions: (i) exploring structural pruning, lightweight attention mechanisms, and knowledge distillation to improve inference efficiency while maintaining accuracy; (ii) designing a multi-scale fusion architecture with scalability and pruning flexibility to enhance real-time performance and deployment adaptability on embedded platforms; (iii) systematically evaluating the impact of image degradation parameters on both image quality and detection accuracy, and establishing a quantitative correlation framework between preprocessing strategies and detection outcomes, thereby enabling automated preprocessing selection and scenario-specific optimization.

Author Contributions

Conceptualization, Z.X. and M.W.; software, Z.X.; experiment analysis, Z.X. and J.Z.; writing—review and editing, Z.X., M.W., and R.K.; funding acquisition, M.W. and R.K.; investigation, Z.X.; visualization, Z.X.; supervision, Z.X. and M.W.; project administration, Z.X. and M.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Guangxi Science and Technology Major Program under Grant No. GuikeAA23062035 and Grant No. GuikeAD23026032.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The research data and key code mentioned in this manuscript are available on request. Please contact Zi-Hang Xiong by email (2120231261@glut.edu.cn) to obtain the Baidu Netdisk (Baidu Cloud) link from which the files can be downloaded.

Acknowledgments

We are all very grateful to the volunteers and staff from GLUT and GUET for their selfless assistance during our experiments.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wang, Y.; Zeng, W.; Xu, H.; Jiang, Y.; Liu, M.; Xiao, C.; Zhao, K. Multi-Type Ship Target Detection in Complex Marine Background Based on YOLOv11. Processes 2025, 13, 249.

- Xiao, G.; Wang, Y.; Wu, R.; Li, J.; Cai, Z. Sustainable maritime transport: A review of intelligent shipping technology and green port construction applications. J. Mar. Sci. Eng. 2024, 12, 1728.

- Wang, Y.; Wang, B.; Fan, Y. PPGS-YOLO: A lightweight algorithms for offshore dense obstruction infrared ship detection. Infrared Phys. Technol. 2025, 145, 105736.

- Ma, R.; Bao, K.; Yin, Y. Improved ship object detection in low-illumination environments using RetinaMFANet. J. Mar. Sci. Eng. 2022, 10, 1996.

- Chen, Y.; Ren, J.; Li, J.; Shi, Y. SD HFFM: A Network Shallow-Deep Improvement Mechanism for Ship Detection in Low-Light Navigational Channel Scenarios. J. Indian Soc. Remote Sens. 2024, 53, 1–16.

- Wang, Y.; Kou, Z.; Han, C.; Qin, Y. RRBM-YOLO: Research on Efficient and Lightweight Convolutional Neural Networks for Underground Coal Gangue Identification. Sensors 2024, 24, 6943.

- Zhang, Y.; Ge, H.; Lin, Q.; Zhang, M.; Sun, Q. Research of maritime object detection method in foggy environment based on improved model SRC-YOLO. Sensors 2022, 22, 7786.

- Hao, P.; Wang, S.; Li, S.; Yang, M. Low-light image enhancement based on retinex and saliency theories. In Proceedings of the 2019 Chinese Automation Congress (CAC), Hangzhou, China, 22–24 November 2019; IEEE: New York, NY, USA, 2019; pp. 2594–2597.

- Zhang, M.; Rong, X.; Yu, X. Light-SDNet: A lightweight CNN architecture for ship detection. IEEE Access 2022, 10, 86647–86662.

- Hong, X.; Cui, B.; Chen, W.; Rao, Y.; Chen, Y. Research on multi-ship target detection and tracking method based on camera in complex scenes. J. Mar. Sci. Eng. 2022, 10, 978.

- Li, S.; Cao, X.; Zhou, Z. Research on inshore ship detection under nighttime low-visibility environment for maritime surveillance. Comput. Electr. Eng. 2024, 118, 109310.

- Ishaque, N.B.M.; Florence, S.M. An Intelligent Deep Learning based Classification with Vehicle Routing Technique for municipal solid waste management. J. Hazard. Mater. Adv. 2025, 18, 100655.

- Choi, C.H.; Han, J.; Oh, H.W.; Cha, J.; Shin, J. EOS: Edge-Based Operation Skip Scheme for Real-Time Object Detection Using Viola-Jones Classifier. Electronics 2025, 14, 397.

- Felzenszwalb, P.; McAllester, D.; Ramanan, D. A discriminatively trained, multiscale, deformable part model. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, Alaska, 23–28 June 2008; IEEE: New York, NY, USA, 2008; pp. 1–8.

- Xie, H.; He, J.; Lu, Z.; Hu, J. Two-level feature-fusion ship recognition strategy combining HOG features with dual-polarized data in SAR images. Remote Sens. 2023, 15, 4393.

- Wu, S.; Chen, X.; Shi, C.; Fu, J.; Yan, Y.; Wang, S. Ship detention prediction via feature selection scheme and support vector machine (SVM). Marit. Policy Manag. 2022, 49, 140–153.

- Ma, S.; Xu, Y. FPDIoU Loss: A loss function for efficient bounding box regression of rotated object detection. Image Vis. Comput. 2025, 154, 105381.

- Yuan, M.; Meng, H.; Wu, J.; Cai, S. Global Recurrent Mask R-CNN: Marine ship instance segmentation. Comput. Graph. 2025, 126, 104112.

- Tang, X.; Zhang, J.; Xia, Y.; Cao, K.; Zhang, C. PEGNet: An Enhanced Ship Detection Model for Dense Scenes and Multi-scale Targets. IEEE Geosci. Remote Sens. Lett. 2025, 22, 4004405.

- Cui, K.; Huang, M.; Lv, W.; Liu, S.; Zhou, W.; You, Q. Research on Intelligent Recognition Algorithm of College Students’ Classroom behavior based on improved Single Shot Multibox Detector. IEEE Access 2024, 12, 168106–168119.

- Wu, C.M.; Lei, J.; Li, Z.Q.; Ren, M.L. Ship_YOLO: General ship detection based on mixed distillation and dynamic task-aligned detection head. Ocean. Eng. 2025, 323, 120616.

- Ansari, S.; Desai, D.V.; Saad, A.; Stahl, A. Implications of single-stage deep learning networks in real-time zooplankton identification. Curr. Sci. 2023, 125, 1259–1266.

- Cai, S.; Meng, H.; Wu, J. FE-YOLO: YOLO ship detection algorithm based on feature fusion and feature enhancement. J. Real-Time Image Process 2024, 21, 61.

- Sun, X.; Wu, H.; Yu, G.; Zheng, N. Research on the Automatic Detection of Ship Targets Based on an Improved YOLO v5 Algorithm and Model Optimization. Mathematics 2024, 12, 1714.

- Yuan, M.; Meng, H.; Wu, J. AM YOLO: Adaptive multi-scale YOLO for ship instance segmentation. J. Real-Time Image Process 2024, 21, 100.

- Zhang, Y.; Chen, W.; Li, S.; Liu, H.; Hu, Q. YOLO-Ships: Lightweight ship object detection based on feature enhancement. J. Vis. Commun. Image Represent. 2024, 101, 104170.

- Ma, L.; Ma, T.; Liu, R.; Fan, X.; Luo, Z. Toward fast, flexible, and robust low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5637–5646.

- Yang, J.; Yue, Z.; Hu, Y.; Wang, M.; Zheng, B. Thermal Defect Detection in Power Equipment Based on YOLOv8s-SCINet-GAM Model with Low-Light Image Enhancement algorithm. In Proceedings of the 2024 4th International Conference on Energy, Power and Electrical Engineering (EPEE), Wuhan, China, 20–22 September 2024; IEEE: New York, NY, USA, 2024; pp. 365–368.

- Zhang, X.; Liu, C.; Yang, D.; Song, T.; Ye, Y.; Li, K.; Song, Y. RFAConv: Innovating spatial attention and standard convolutional operation. arXiv 2023, arXiv:2304.03198.

- Zhao, L.; Liang, G.; Hu, Y.; Xi, Y.; Ning, F.; He, Z. YOLO-RLDW: An Algorithm for Object Detection in Aerial Images Under Complex Backgrounds. IEEE Access 2024, 12, 128677–128693.

- Liu, S.; Huang, D.; Wang, Y. Learning spatial fusion for single-shot object detection. arXiv 2019, arXiv:1911.09516.

- Qiu, M.; Huang, L.; Tang, B.H. ASFF-YOLOv5: Multielement detection method for road traffic in UAV images based on multiscale feature fusion. Remote Sens. 2022, 14, 3498.

- Zhang, Q.; Guo, W.; Lin, M. LLD-YOLO: A multi-module network for robust vehicle detection in low-light conditions. Signal Image Video Process. 2025, 19, 271.

- Hu, M.; Liu, J.; Liu, J. DRR-YOLO: A study of Small Target Multi-modal Defect Detection for Multiple Types of Insulators Based on Large Convolution Kernel. IEEE Access 2025, 13, 26331–26344.

- Zhang, M.; Ye, S.; Zhao, S.; Wang, W.; Xie, C. Pear Object Detection in Complex Orchard Environment Based on Improved YOLO11. Symmetry 2025, 17, 255.

- Qiu, J.; Wang, Z.; Huang, Y.; Huang, H. Infrared Image Dynamic Range Compression based on Adaptive Contrast Adjustment and Structure Preservation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5006512.

- Yu, C.; Han, G.; Pan, M.; Wu, X.; Deng, A. Zero-TCE: Zero Reference Tri-Curve Enhancement for Low-Light Images. Appl. Sci. 2025, 15, 701.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).