The Role of Artificial Intelligence in Sports Analytics: A Systematic Review and Meta-Analysis of Performance Trends

Abstract

1. Introduction

2. Materials and Methods

2.1. Eligibility Criteria and Search Strategy

2.2. Exclusion Criteria

2.3. Text Screening and PRISMA Search

2.4. Data Extraction and Study Coding

2.5. Quality Assessment

3. Results

3.1. Quality Assessment

- Experimental Studies

- Computer Vision and Predictive Modeling Studies

3.2. Systematic Review and Meta-Analysis

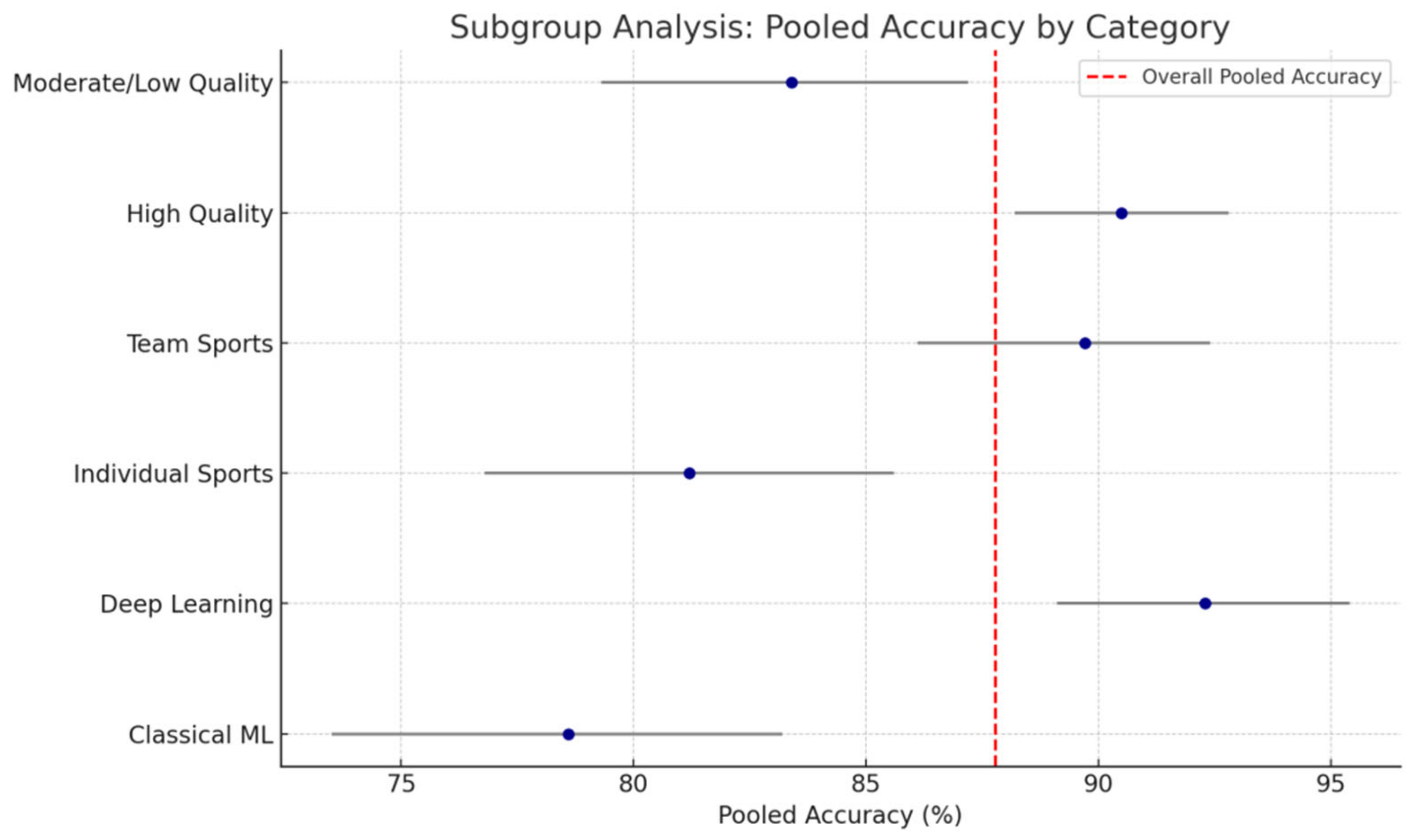

- Subgroup Analyses

- Sensitivity Analysis

- Pooled Effects

- Heterogeneity Analysis

- Risk of Bias and Reporting Considerations

- Certainty of Evidence (GRADE)
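The GRADE summary reports a pooled classification accuracy of 87.8% (95% CI 82.7–92.9%) across 16 studies. The abbreviations list I² and τ², which are the heterogeneity statistics usually reported alongside a random-effects pooled estimate. This section does not state the authors' exact estimator. The sketch below therefore assumes the DerSimonian–Laird method, which is a common choice but not necessarily the one used here. The function name and the study inputs are hypothetical.

```python
import math


def dersimonian_laird(estimates, variances, z=1.96):
    """Pool study-level estimates with a DerSimonian-Laird random-effects model.

    estimates: per-study effect estimates (e.g., accuracies as proportions)
    variances: matching within-study sampling variances
    Returns (pooled, ci_low, ci_high, tau2, i2_percent).
    """
    k = len(estimates)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies and one variance per study")
    # Inverse-variance (fixed-effect) weights and pooled estimate
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum_w
    # Cochran's Q: weighted dispersion of studies around the fixed-effect estimate
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    df = k - 1
    # Method-of-moments between-study variance tau^2, truncated at zero
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)
    # Higgins' I^2: percentage of total variability due to between-study heterogeneity
    i2 = 100.0 * max(0.0, (q - df) / q) if q > 0 else 0.0
    # Random-effects weights add tau^2 to each study's within-study variance
    w_re = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return pooled, pooled - z * se, pooled + z * se, tau2, i2


# Hypothetical (accuracy, test-set size) pairs, not data from this review
studies = [(0.95, 200), (0.88, 150), (0.76, 80)]
accs = [p for p, _ in studies]
variances = [p * (1 - p) / n for p, n in studies]  # binomial sampling variance
pooled, lo, hi, tau2, i2 = dersimonian_laird(accs, variances)
print(f"pooled={pooled:.3f} (95% CI {lo:.3f}-{hi:.3f}), tau2={tau2:.4f}, I2={i2:.1f}%")
```

Meta-analyses of proportions are often run on a logit or double-arcsine scale. Pooling raw proportions, as in this sketch, is a simplification.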

4. Discussion

4.1. AI Performance

- Most Popular Metrics in AI-Based Sports Analysis

- Chatterjee et al. [7] developed an AI-driven, pose-based sports activity classification framework (Detectron2 pose estimation combined with CNNs). It classified athletes' dynamic postures in tennis with 98.60% accuracy, which suggests improved biomechanical assessment over traditional methods.

- Salim et al. [10] integrated advanced sensing modalities with convolutional and recurrent neural networks in volleyball training, enabling real-time action recognition and delivering immediate, data-driven feedback to both athletes and coaches.

- Li [14] applied supervised machine learning to player movement trajectories for defensive strategy analysis in basketball, showing how AI models can reveal tactical patterns and support in-game decision-making.

- García-Aliaga et al. [16] employed ensemble machine learning techniques on player statistics to derive composite key performance indicators in football, blending individual and team metrics to refine talent evaluation.

- Krstić et al. [1] conducted a systematic review of AI applications in sports, mapping out implementation contexts from training optimization and performance monitoring to health management and injury risk prediction.
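Pose-based classifiers such as the one described for Chatterjee et al. [7] begin with body keypoints produced by a pose estimator. A joint angle is one common biomechanical feature derived from such keypoints. The sketch below is illustrative only and does not reproduce Chatterjee et al.'s pipeline. The keypoint names, the `joint_angle` and `elbow_angle_series` helpers, and the coordinates are all hypothetical.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b between segments b->a and b->c.

    a, b, c are (x, y) image coordinates, e.g. shoulder, elbow, wrist
    as returned by a 2D pose estimator.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("keypoints must be distinct to define an angle")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to [-1, 1] so floating-point drift cannot push acos out of its domain
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def elbow_angle_series(frames):
    """Per-frame elbow angles from hypothetical keypoint dicts (one dict per video frame)."""
    return [joint_angle(f["shoulder"], f["elbow"], f["wrist"]) for f in frames]


# Hypothetical keypoints for two frames: arm bent at a right angle, then fully extended
frames = [
    {"shoulder": (0.0, 0.0), "elbow": (1.0, 0.0), "wrist": (1.0, 1.0)},
    {"shoulder": (0.0, 0.0), "elbow": (1.0, 0.0), "wrist": (2.0, 0.0)},
]
print([round(a, 1) for a in elbow_angle_series(frames)])  # [90.0, 180.0]
```

A per-frame series like this can serve as an input feature to a downstream classifier. A CNN-based approach may instead learn such relationships directly from the keypoints or images.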

4.2. Implementation Contexts Across Sports

4.3. Ethical and Societal Considerations

4.4. Data Quality, Granularity, and Ethical Constraints

4.5. Study Limitations

5. Conclusions

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ML | Machine Learning |
| DL | Deep Learning |
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory |
| RL | Reinforcement Learning |
| GPS | Global Positioning System |
| IMU | Inertial Measurement Unit |
| IoT | Internet of Things |
| PCA | Principal Component Analysis |
| PICO(S) | Population, Intervention, Comparison, Outcome (±Study design) |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| PROSPERO | Prospective Register of Systematic Reviews |
| MMAT | Mixed Methods Appraisal Tool |
| JBI | Joanna Briggs Institute |
| PROBAST | Prediction model Risk Of Bias ASsessment Tool |
| TRIPOD+AI | Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (extended for AI) |
| AMSTAR 2 | A Measurement Tool to Assess Systematic Reviews |
| CI | Confidence Interval |
| I² | Higgins’ I-squared statistic (measure of heterogeneity) |
| τ² | Tau-squared (between-study variance estimate) |
| SVM | Support Vector Machine |

References

1. Krstić, D.; Vučković, T.; Dakić, D.; Ristić, S.; Stefanović, D. The application and impact of artificial intelligence on sports performance improvement: A systematic literature review. In Proceedings of the 4th International Conference on Communications, Information, Electronic and Energy Systems (CIEES), Plovdiv, Bulgaria, 23–25 November 2023; pp. 1–8.

2. Mateus, N.; Abade, E.; Coutinho, D.; Gómez, M.-Á.; Peñas, C.L.; Sampaio, J. Empowering the Sports Scientist with Artificial Intelligence in Training, Performance, and Health Management. Sensors 2025, 25, 139.

3. Çavuş, Ö.; Biecek, P. Explainable expected goal models in football: Enhancing transparency in AI-based performance analysis. arXiv 2022, arXiv:2206.07212.

4. Musat, C.L.; Mereuta, C.; Nechita, A.; Tutunaru, D.; Voipan, A.E.; Voipan, D.; Mereuta, E.; Gurau, T.V.; Gurău, G.; Nechita, L.C. Diagnostic Applications of AI in Sports: A Comprehensive Review of Injury Risk Prediction Methods. Diagnostics 2024, 14, 2516.

5. Seçkin, A.Ç.; Ateş, B.; Seçkin, M. Review on Wearable Technology in Sports: Concepts, Challenges and Opportunities. Appl. Sci. 2023, 13, 10399.

6. Tang, J. An Action Recognition Method for Volleyball Players Using Deep Learning. Sci. Program. 2021, 2021, 3934443.

7. Chatterjee, R.; Roy, S.; Islam, S.H.; Samanta, D. An AI approach to pose-based sports activity classification. In Proceedings of the 8th International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 26–27 August 2021; pp. 156–161.

8. Ferraz, A.; Duarte-Mendes, P.; Sarmento, H.; Valente-Dos-Santos, J.; Travassos, B. Tracking devices and physical performance analysis in team sports: A comprehensive framework for research-trends and future directions. Front. Sports Act. Living 2023, 5, 1284086.

9. Yu, X.; Chai, Y.; Chen, M.; Zhang, G.; Fei, F.; Zhao, Y. AI-Embedded Motion Sensors for Sports Performance Analytics. In Proceedings of the 2024 IEEE 3rd International Conference on Micro/Nano Sensors for AI, Healthcare, and Robotics (NSENS), Shenzhen, China, 2–3 March 2024; pp. 116–119.

10. Salim, F.A.; Postma, D.B.W.; Haider, F.; Luz, S.; van Beijnum, B.F.; Reidsma, D. Enhancing volleyball training: Empowering athletes and coaches through advanced sensing and analysis. Front. Sports Act. Living 2024, 6, 1326807.

11. Mehta, S.; Kumar, A.; Dogra, A.; Hariharan, S. The art of the stroke: Machine learning insights into cricket shot execution with convolutional neural networks and SVM. In Proceedings of the 2nd World Conference on Communication & Computing (WCONF) 2024, Raipur, India, 12–14 July 2024.

12. Quinn, E.; Corcoran, N. Automation of computer vision applications for real-time combat sports video analysis. In Proceedings of the European Conference on the Impact of Artificial Intelligence and Robotics, Oxford, UK, 1–2 December 2022.

13. Zhao, L. A Hybrid Deep Learning-Based Intelligent System for Sports Action Recognition via Visual Knowledge Discovery. IEEE Access 2023, 11, 46541–46549.

14. Li, J. Machine learning-based analysis of defensive strategies in basketball using player movement data. Sci. Rep. 2025, 15, 13887.

15. Hu, W. The application of artificial intelligence and big data technology in basketball sports training. ICST Trans. Scalable Inf. Syst. 2023, 10, e2.

16. García-Aliaga, A.; Marquina, M.; Coterón, J.; Rodríguez-González, A.; Luengo-Sanchez, S. In-game behaviour analysis of football players using machine learning techniques based on player statistics. Int. J. Sports Sci. Coach. 2020, 16, 148–157.

17. Román-Gallego, J.Á.; Pérez-Delgado, M.; Cofiño-Gavito, F.J.; Conde, M.Á.; Rodríguez-Rodrigo, R. Analysis and parameterization of sports performance: A case study of soccer. Appl. Sci. 2023, 13, 12767.

18. Fernández, J. From training to match performance: An exploratory and predictive analysis on F. C. Barcelona GPS data. In Proceedings of the IEEE 16th International Conference on Data Mining Workshops (ICDMW), Barcelona, Spain, 12–15 December 2016.

19. Yunus, M.; Aditya, R.S.; Wahyudi, N.T.; Razeeni, D.M.; Almutairi, R.I. Talent Scouting and standardizing fitness data in football club: Systematic review. Retos 2024, 62, 1382–1389.

20. Gu, C.; Varuna, D.S. Deep generative multi-agent imitation model as a computational benchmark for evaluating human performance in complex interactive tasks: A case study in football. arXiv 2023, arXiv:2303.13323.

21. Collins, G.S.; Moons, K.G.; TRIPOD Group. TRIPOD+AI: Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis, updated for artificial intelligence and machine learning. 2025, manuscript in preparation.

22. Hong, Q.N.; Fàbregues, S.; Bartlett, G.; Boardman, F.; Cargo, M.; Dagenais, P.; Pluye, P. The Mixed Methods Appraisal Tool (MMAT) version 2018 for information professionals and researchers. Educ. Inf. 2018, 34, 285–291.

23. Shea, B.J.; Reeves, B.C.; Wells, G.; Thuku, M.; Hamel, C.; Moran, J.; Henry, D.A. AMSTAR 2: A critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ 2017, 358, j4008.

24. Leddy, C.; Bolger, R.; Byrne, P.J.; Kinsella, S.; Zambrano, L. The application of Machine and Deep Learning for technique and skill analysis in swing and team sport-specific movement: A systematic review. Int. J. Comput. Sci. Sport 2024, 23, 110–145.

25. Val Vec, S.; Tomažič, S.; Kos, A.; Umek, A. Trends in real-time artificial intelligence methods in sports: A systematic review. J. Big Data 2024, 11, 148.

26. Sampaio, T.; Oliveira, J.P.; Marinho, D.A.; Neiva, H.P.; Morais, J.E. Applications of Machine Learning to Optimize Tennis Performance: A Systematic Review. Appl. Sci. 2024, 14, 5517.

27. Barker, T.H.; Habibi, N.; Aromataris, E.; Stone, J.C.; Leonardi-Bee, J.; Sears, K.; Munn, Z. The revised JBI critical appraisal tool for the assessment of risk of bias for quasi-experimental studies. JBI Evid. Synth. 2024, 22, 378–388.

28. Wolff, R.F.; Moons, K.G.; Riley, R.D.; Whiting, P.F.; Westwood, M.; Collins, G.S.; Mallett, S.; PROBAST Group. PROBAST: A tool to assess the risk of bias and applicability of prediction model studies. Ann. Intern. Med. 2019, 170, 51–58.

29. Moola, S.; Munn, Z.; Tufanaru, C.; Aromataris, E.; Sears, K.; Sfetcu, R.; Mu, P.F. Chapter 7: Systematic reviews of etiology and risk. In JBI Manual for Evidence Synthesis; JBI Global: North Adelaide, Australia, 2020.

30. Biró, A.; Cuesta-Vargas, A.I.; Szilágyi, L. AI-Assisted Fatigue and Stamina Control for Performance Sports on IMU-Generated Multivariate Times Series Datasets. Sensors 2024, 24, 132.

31. Campaniço, A.T.; Valente, A.; Serôdio, R.; Escalera, S. Data’s hidden data: Qualitative revelations of sports efficiency analysis brought by neural network performance metrics. Motricidade 2018, 14, 94–102.

32. Chen, M.; Szu, H.; Lin, H.Y.; Liu, Y.; Chan, H.; Wang, Y.; Zhao, Y.; Zhang, G.; Yao, J.D.; Li, W.J. Phase-based quantification of sports performance metrics using a smart IoT sensor. IEEE Internet Things J. 2023, 10, 15900–15911.

33. Demosthenous, G.; Kyriakou, M.; Vassiliades, V. Deep reinforcement learning for improving competitive cycling performance. Expert Syst. Appl. 2022, 203, 117311.

34. Nagovitsyn, R.; Valeeva, L.; Latypova, L. Artificial intelligence program for predicting wrestlers’ sports performances. Sports 2023, 11, 196.

35. Rodrigues, A.C.N.; Pereira, A.S.; Mendes, R.M.S.; Araújo, A.G.; Couceiro, M.S.; Figueiredo, A.J. Using Artificial Intelligence for Pattern Recognition in a Sports Context. Sensors 2020, 20, 3040.

36. Yu, A.; Chung, S. Automatic identification and analysis of basketball plays: NBA on-ball screens. In Proceedings of the International Conference on Big Data, Cloud Computing, Data Science & Engineering 2019, Honolulu, HI, USA, 29–31 May 2019.

37. Marquina, M.; Lozano, D.; García-Sánchez, C.; Sánchez-López, S.; de la Rubia, A. Development and Validation of an Observational Game Analysis Tool with Artificial Intelligence for Handball: Handball.ai. Sensors 2023, 23, 6714.

38. Ramanayaka, D.H.; Parindya, H.S.; Rangodage, N.S.; Gamage, N.; Marasinghe, G.M.; Lokuliyana, S. Spinperform–A cricket spin bowling performance analysis model. In Proceedings of the International Conference on Awareness Science and Technology 2023, Taichung, Taiwan, 9–11 November 2023.

39. European Commission. Ethics Guidelines for Trustworthy AI. High-Level Expert Group on AI; European Commission: Brussels, Belgium, 2019.

40. OECD. OECD AI Principles; OECD: Paris, France, 2019.

41. UNESCO. Recommendation on the Ethics of Artificial Intelligence; UNESCO: Paris, France, 2021.

42. IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems; IEEE: New York, NY, USA, 2019.

43. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

| Component | Description |
|---|---|
| Population (P) | Competitive athletes in team and individual sports (e.g., soccer, basketball, tennis) at both amateur and professional levels. |
| Intervention (I) | Application of artificial intelligence techniques, such as machine learning, deep learning, computer vision, and reinforcement learning, to analyze and enhance sports performance (e.g., real-time feedback, predictive analytics, automated motion analysis). |
| Comparison (C) | Traditional performance analysis methods (e.g., manual assessments, conventional statistical techniques) or comparisons among different AI-based methodologies. |
| Outcomes (O) | Quantitative metrics (e.g., accuracy, precision, recall, F1-score, mean absolute error) and qualitative outcomes (e.g., tactical decision support, improved training strategies, enhanced performance monitoring). |
| Study Design (S) | Empirical studies including experimental, observational, and quasi-experimental designs, as well as systematic reviews and meta-analyses that report on AI applications in sports performance analysis. |
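The quantitative outcomes named in the PICO framework have standard definitions. The following minimal sketch uses hypothetical function names and data. It computes accuracy, precision, recall, and F1-score for a single positive class from confusion-matrix counts, and it computes mean absolute error for a continuous target such as speed.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1-score for one positive class."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1  # predicted positive, actually negative
        elif t == positive:
            fn += 1  # true positive case that the model missed
        else:
            tn += 1
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def mean_absolute_error(y_true, y_pred):
    """Average absolute deviation, in the units of the target (e.g., km/h)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical labels and predictions, not data from the reviewed studies
m = binary_metrics([1, 1, 1, 0, 0, 0, 0, 1], [1, 0, 1, 0, 1, 1, 0, 1])
print(m)
print(mean_absolute_error([4.0, 5.0, 6.0], [4.5, 4.0, 6.0]))  # 0.5
```

Multi-class studies usually macro- or micro-average their per-class values. For that reason, a reported F1-score need not equal the harmonic mean of the reported precision and recall.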

| Study | MMAT Score (Out of 5) | JBI Quasi-Experimental Score (Out of 5) | Overall Quality Rating |
|---|---|---|---|
| Biró et al. (2023) [30] | 4 | 3 | Moderate |
| Campaniço et al. (2018) [31] | 5 | Not Applicable | High |
| Chen et al. (2023) [32] | 4 | 4 | High |
| Demosthenous et al. (2022) [33] | 3 | 3 | Moderate |
| Nagovitsyn et al. (2023) [34] | 4 | 3 | Moderate |
| Rodrigues et al. (2020) [35] | 4 | 3 | Moderate |
| Román-Gallego et al. (2023) [17] | 5 | Not Applicable | High |
| Yu et al. (2024) [9] | 4 | 4 | High |

| Study | JBI Score (Out of 8) | Overall Quality Rating |
|---|---|---|
| Fernández et al. (2016) [18] | 7 | High |
| Marquina et al. (2023) [37] | 6 | Moderate |

| Study | PROBAST Risk | TRIPOD+AI Adherence | Overall Quality Rating |
|---|---|---|---|
| Chatterjee et al. (2021) [7] | Low | High | High |
| Hu (2023) [15] | Moderate | Moderate | Moderate |
| Quinn and Corcoran (2022) [12] | Low | Moderate | High |
| Ramanayaka et al. (2023) [38] | Moderate | Moderate | Moderate |
| Yu and Chung (2019) [36] | Moderate | Low | Moderate |

| Study | Sport | AI Used | Performance Measured | Study Design | Performance Metrics |
|---|---|---|---|---|---|
| Campaniço et al., 2018 [31] | Fencing | Neural Network, Dynamic Time Warping | Prediction accuracy | Experimental study using inertial sensors | 76.6% accuracy |
| Chatterjee et al., 2021 [7] | Tennis | Detectron2, Pose Estimation, Convolutional Neural Networks (CNNs) | Classification accuracy | Computer vision study | 98.60% accuracy |
| Chen et al., 2023 [32] | Volleyball | Machine Learning | Accuracy in identifying skill levels | Experimental study using wearable sensors | Up to 95% accuracy |
| Yu and Chung, 2019 [36] | Basketball | Motion tracking | Sensitivity | Machine learning study | 90% sensitivity; 8% improvement compared with the existing literature |
| Yu et al., 2024 [9] | Gymnastics | AI-embedded Inertial Measurement Units, Visual Analysis | Segmentation of vaulting phases, evaluation of detailed movements | Experimental study | 4.57% estimation error in flight height |
| Yunus et al., 2024 [19] | Football | Machine Learning, Data Mining, Classification Models, Regression Models | Accuracy in performance score for forward positions | Review | Classification and regression models: up to 94% accuracy |
| Román-Gallego et al., 2023 [17] | Soccer | Fuzzy Logic | Agreement with actual rankings | Experimental study | Fuzzy Logic System: 75% agreement with actual top team rankings; 87.5% agreement with lower-ranked teams |
| Ramanayaka et al., 2023 [38] | Cricket | Deep Learning, Convolutional Neural Network | Accuracy in detecting player in danger area, position of front leg, angle of bowling arm, and no-ball delivery | Computer vision study | Overall accuracy above 95% |
| Demosthenous et al., 2022 [33] | Cycling | Model-based Reinforcement Learning, Deep Reinforcement Learning, Deep Q-Learning, Stochastic Gradient Boosting, Random Forests, Symbolic Regression | Mean absolute error | Experimental study | Average MAE for speed prediction: Random Forest 4.34 km/h; Neural Network 4.24 km/h |
| Rodrigues et al., 2020 [35] | Futsal | Artificial Neural Networks (ANNs), Long Short-Term Memory Network, Dynamic Bayesian Mixture Model (DBMM) | Accuracy, precision, recall, F1-score | Experimental study | ANN: 90.03% accuracy, 16.06% precision, 67.87% recall, 14.74% F1-score; LSTM: 60.92% accuracy, 29.89% precision, 57.61% recall, 36.31% F1-score; DBMM: 96.47% accuracy, 77.70% precision, 84.12% recall, 80.54% F1-score |
| Nagovitsyn et al., 2023 [34] | Wrestling | Deep Neural Networks, Logistic Regression, Random Forest | Error probability in predicting competitive performance | Experimental study | 11% error probability in predictions (89% accuracy); 100% prediction efficiency when three specific trait categories are identified; under specific conditions, the probability of achieving high sports performance rises to 92% (or of not achieving it, to 89%) |
| Quinn and Corcoran, 2022 [12] | Combat sports | Computer Vision, YOLOv5, Human Action Recognition (HAR), Object Tracking, Deep Learning | Mean average precision, F1-score | Computer vision study | Mean average precision: 95.5% at a confidence threshold of 50%; F1-score: 0.95 for sample one (confidence threshold 0.489), 0.99 for all classes (confidence 0.684) |
| Fernández et al., 2016 [18] | Football | Machine Learning, Feature Selection Techniques, Principal Component Analysis (PCA) | Predictive models of locomotor, metabolic, and mechanical variables | Observational study using tracking data | Successful prediction of 11 out of 17 total variables |
| Marquina et al., 2023 [37] | Handball | Machine Learning, Natural Language Processing (NLP) | Intraclass correlation coefficient, Cohen’s kappa | Observational study | Automatic variables: ICC = 0.957 (intra-observer), 0.937 (inter-observer); manual variables: ICC = 0.913 (intra-observer), 0.904 (inter-observer); Cohen’s kappa = 0.889 (expert agreement) |
| Hu, 2023 [15] | Basketball | Whale Optimized Artificial Neural Network (WO-ANN), Convolutional Random Forest (ConvRF), Attention Random Forest (AttRF), Convolutional Long Short-Term Memory (ConvLSTM), Attention Long Short-Term Memory (AttLSTM), 3D Convolutional Neural Network, Posture Normalized CNN | Accuracy, mean average precision (mAP) | Computer vision study | ARBIGNet: 98.8% accuracy, 95.5% mAP; alternative configuration: 96.5% accuracy, 90.5% mAP; ConvRF unit improvement: +1.3% accuracy, +1.1% mAP; AttRF unit improvement: +1.7% accuracy, +1.5% mAP |
| Biró et al., 2023 [30] | Running | Random Forest, Gradient Boosting Machines, Long Short-Term Memory Network (LSTM) | Accuracy, precision, recall, F1-score | Experimental study using Inertial Measurement Units (IMUs) | Accuracy / F1-score (%): Extra Trees Classifier 50.75 / 50.22; Random Forest Classifier 50.51 / 49.57; Quadratic Discriminant Analysis 48.98 / 52.76; K-Nearest Neighbor Classifier 48.65 / 47.22; Decision Tree Classifier 50.66 / 51.15; Gradient Boosting Classifier 47.13 / 45.90; Logistic Regression 48.96 / 51.09; AdaBoost Classifier 48.40 / 48.95; Linear Discriminant Analysis 48.81 / 51.08; Ridge Classifier 48.81 / 51.08; Light Gradient Boosting Machine 49.58 / 47.16; SVM (Linear Kernel) 49.12 / 54.39; Naive Bayes 48.12 / 52.09; Dummy Classifier 51.30 / 67.78; LSTM Model 59.00 / 59.00 |
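The Biró et al. [30] row reports a Dummy Classifier alongside the trained models. A dummy baseline makes its predictions without learning from the input features, so it sets a level that any useful model should exceed. The sketch below transcribes the accuracies from that row and lists the models that beat the baseline. The helper name is hypothetical, and the check is illustrative rather than part of the original analysis.

```python
# Accuracy (%) values transcribed from the Biró et al. [30] row of the table above
BIRO_ACCURACY = {
    "Extra Trees Classifier": 50.75,
    "Random Forest Classifier": 50.51,
    "Quadratic Discriminant Analysis": 48.98,
    "K-Nearest Neighbor Classifier": 48.65,
    "Decision Tree Classifier": 50.66,
    "Gradient Boosting Classifier": 47.13,
    "Logistic Regression": 48.96,
    "AdaBoost Classifier": 48.40,
    "Linear Discriminant Analysis": 48.81,
    "Ridge Classifier": 48.81,
    "Light Gradient Boosting Machine": 49.58,
    "SVM (Linear Kernel)": 49.12,
    "Naive Bayes": 48.12,
    "LSTM Model": 59.00,
}
DUMMY_ACCURACY = 51.30  # Dummy Classifier accuracy from the same row


def models_beating_baseline(scores, baseline):
    """Return (name, margin in percentage points) for models above the baseline, best first."""
    better = [(name, round(acc - baseline, 2)) for name, acc in scores.items() if acc > baseline]
    return sorted(better, key=lambda item: item[1], reverse=True)


print(models_beating_baseline(BIRO_ACCURACY, DUMMY_ACCURACY))  # [('LSTM Model', 7.7)]
```

On these transcribed figures, only the LSTM model (59.00%) exceeds the Dummy Classifier's 51.30% accuracy.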

| Domain/Outcome | Classification Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Risk of Bias | 0 | 0 | 0 | 0 |
| Inconsistency | 1 | 1 | 1 | 1 |
| Indirectness | 0 | 0 | 0 | 0 |
| Imprecision | 0 | 0 | 0 | 0 |
| Publication Bias | 0 | 0 | 0 | 0 |
| Total Downgrades | 1 | 1 | 1 | 1 |
| Score (4 − downgrades) | 3 | 3 | 3 | 3 |
| Grade | Moderate | Moderate | Moderate | Moderate |
| Effect Estimate | 87.8% (95% CI 82.7–92.9%) | 48–97% | 47–96% | 45–95% |
| Number of Studies | 16 | 13 | 13 | 13 |
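The "Score" row applies a simple rule: it starts at 4, subtracts one point per downgrade, and maps the result onto the GRADE certainty levels (4 High, 3 Moderate, 2 Low, 1 Very low). The sketch below implements that rule under stated assumptions. The domain identifiers and the function name are hypothetical. As in GRADE, a domain may be downgraded by up to two levels. Upgrading criteria, such as a large effect, are omitted.

```python
# GRADE certainty levels indexed by the table's "4 - downgrades" score
GRADE_LABELS = {4: "High", 3: "Moderate", 2: "Low", 1: "Very low"}
DOMAINS = ("risk_of_bias", "inconsistency", "indirectness", "imprecision", "publication_bias")


def grade_certainty(downgrades):
    """Return (score, label) from per-domain downgrades of 0, 1 (serious) or 2 (very serious)."""
    unknown = set(downgrades) - set(DOMAINS)
    if unknown:
        raise ValueError(f"unknown GRADE domains: {sorted(unknown)}")
    if any(v not in (0, 1, 2) for v in downgrades.values()):
        raise ValueError("each domain may be downgraded by 0, 1 or 2 levels")
    total = sum(downgrades.get(d, 0) for d in DOMAINS)
    score = max(1, 4 - total)  # certainty cannot fall below "Very low"
    return score, GRADE_LABELS[score]


# Mirrors the table: only inconsistency is downgraded, for every outcome
for outcome in ("Classification Accuracy", "Precision", "Recall", "F1-Score"):
    print(outcome, grade_certainty({"inconsistency": 1}))  # (3, 'Moderate')
```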

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pietraszewski, P.; Terbalyan, A.; Roczniok, R.; Maszczyk, A.; Ornowski, K.; Manilewska, D.; Kuliś, S.; Zając, A.; Gołaś, A. The Role of Artificial Intelligence in Sports Analytics: A Systematic Review and Meta-Analysis of Performance Trends. Appl. Sci. 2025, 15, 7254. https://doi.org/10.3390/app15137254
