2.1. Emotion-Based Recommendations

Recently, with the growth of the OTT market and the production of diverse types of content, technologies that recommend customized content to users have advanced. As user participation in service and product development increases alongside recommendation technology, the emotions users experience become crucial factors in their service usage and purchasing decisions [4]. Consequently, research in emotional engineering, which analyzes and evaluates the relationship between human emotions and products, is actively progressing. Emotional engineering comprises engineering technologies aimed at faithfully reflecting human characteristics and emotions, with emotional design, emotional content, and emotional computing as its core research fields [4]. Moreover, with the proliferation of mobile devices and the increased use of social networking services, individuals can share their thoughts and opinions anytime and anywhere. As a result, user emotions are increasingly analyzed through social media platforms, and numerous studies on recommendations based on user emotions are underway.

Content such as music, movies, and books can significantly change users' emotions, which makes emotion an important factor in content recommendation. Existing emotion-based recommendation studies analyze the emotions of both content and users to suggest content that aligns with the user's emotional state.

Emotion-aware music recommendation methods [5,44,45,46] recognize facial expressions to infer the user's emotions and then recommend suitable music using content-based filtering (CBF), collaborative filtering (CF), and similarity techniques. Other studies have recommended music by estimating emotions from brainwave data, a type of user biosignal [47], by analyzing the user's music listening history [48], and even by analyzing the user's keyboard input and mouse click patterns [49]. Existing emotion-aware music recommendation research thus mainly recommends music based on the user's current emotional state.
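The studies above match music to a user's current emotion through CBF, CF, or similarity measures. As a minimal sketch of the similarity step only, assuming that the user and each track are already represented as emotion vectors of equal length (the track identifiers, the four dimensions, and the values below are hypothetical), tracks can be ranked by cosine similarity to the user's vector:

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(user_vec: list[float], tracks: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the k track ids whose emotion vectors are most similar to the user's vector."""
    ranked = sorted(tracks, key=lambda t: cosine_similarity(user_vec, tracks[t]), reverse=True)
    return ranked[:k]


# Hypothetical 4-dimensional emotion vectors (one weight per emotion category).
tracks = {
    "track_a": [0.9, 0.1, 0.0, 0.0],
    "track_b": [0.0, 0.2, 0.8, 0.0],
    "track_c": [0.6, 0.4, 0.0, 0.0],
}
print(recommend([1.0, 0.2, 0.0, 0.0], tracks))
```

Cosine similarity compares only the direction of the vectors, so a track matches when its emotion profile has the same shape as the user's, regardless of overall intensity.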

The emotion-based movie recommendation method [6] maps each color to a single emotion and recommends movies based on the color the user selects. It combines content-based filtering, collaborative filtering, and emotion detection algorithms to incorporate the user's emotional state into movie recommendations. However, because each color represents a single emotion and only the emotion corresponding to the selected color is considered, the accuracy of the recommendations is expected to be low. To provide recommendations that better suit the user, the diverse characteristics and intensities of the user's emotions must be expressed.

The emotion-based tourist destination recommendation method [7] combines content-based and collaborative filtering to recommend tourist destinations based on the user's emotions. It collects data on tourist attractions and quantifies their emotions by calculating the term frequencies of words belonging to eight emotion groups. Furthermore, studies have recommended artwork by considering emotions [8], recommended fonts by analyzing emotions in text entered by users [9], and recommended emoticons [10].
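The term-frequency quantification in [7] can be illustrated with a minimal sketch. The word lists below are hypothetical: the cited study uses eight emotion groups whose vocabularies are not given here, so only three illustrative groups appear, and each group's score is its share of all matched emotion words.

```python
import re
from collections import Counter

# Hypothetical emotion-group lexicons (the cited study uses eight groups).
LEXICON = {
    "joy": {"happy", "fun", "delightful"},
    "calm": {"quiet", "peaceful", "relaxing"},
    "sadness": {"lonely", "gloomy", "sad"},
}


def emotion_term_frequencies(text: str) -> dict[str, float]:
    """Share of matched emotion words per group (all zeros if no word matches)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    counts = Counter()
    for token in tokens:
        for group, words in LEXICON.items():
            if token in words:
                counts[group] += 1
    total = sum(counts.values())
    return {group: (counts[group] / total if total else 0.0) for group in LEXICON}


review = "A quiet, peaceful beach. Fun for kids and very relaxing, never gloomy."
print(emotion_term_frequencies(review))
```

Plain word counting ignores negation ("never gloomy" still counts toward sadness), which is one reason lexicon-based frequencies give only a coarse estimate of emotion.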

A common limitation of these emotion-based content recommendation studies is that they consider only the user's current emotions. An individual's emotions change over time, and even the same emotion can be felt differently depending on how it changes. A current emotional state may transition to a different state over time or as the situation changes, and the speed of these changes may vary with an individual's emotional highs and lows. Research has shown that individuals experience regular changes in their emotions over time [11,12], suggesting that certain emotions turn into other emotions with some regularity and that emotional changes in individual users can therefore be predicted. In addition, individuals experience a variety of positive, negative, and mixed emotions in their daily lives [13,14], so their emotions need to be expressed as complex emotions. Thus, a user's future emotional state can be predicted from their emotional history up to the current point in time. Predicting the user's emotional state allows us to anticipate their satisfaction with recommended items, enabling a recommendation system that aligns closely with the user's preferences and needs.

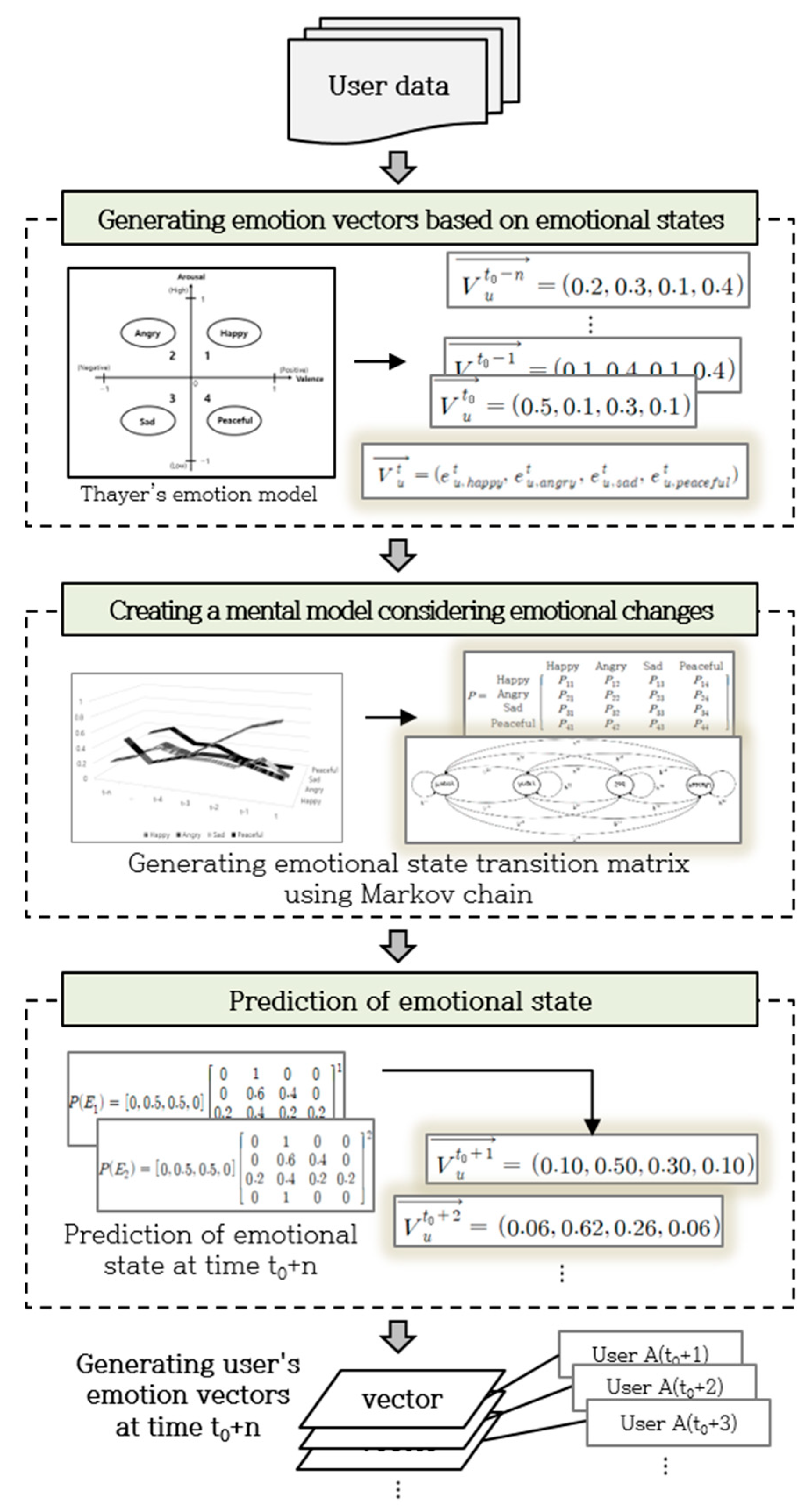

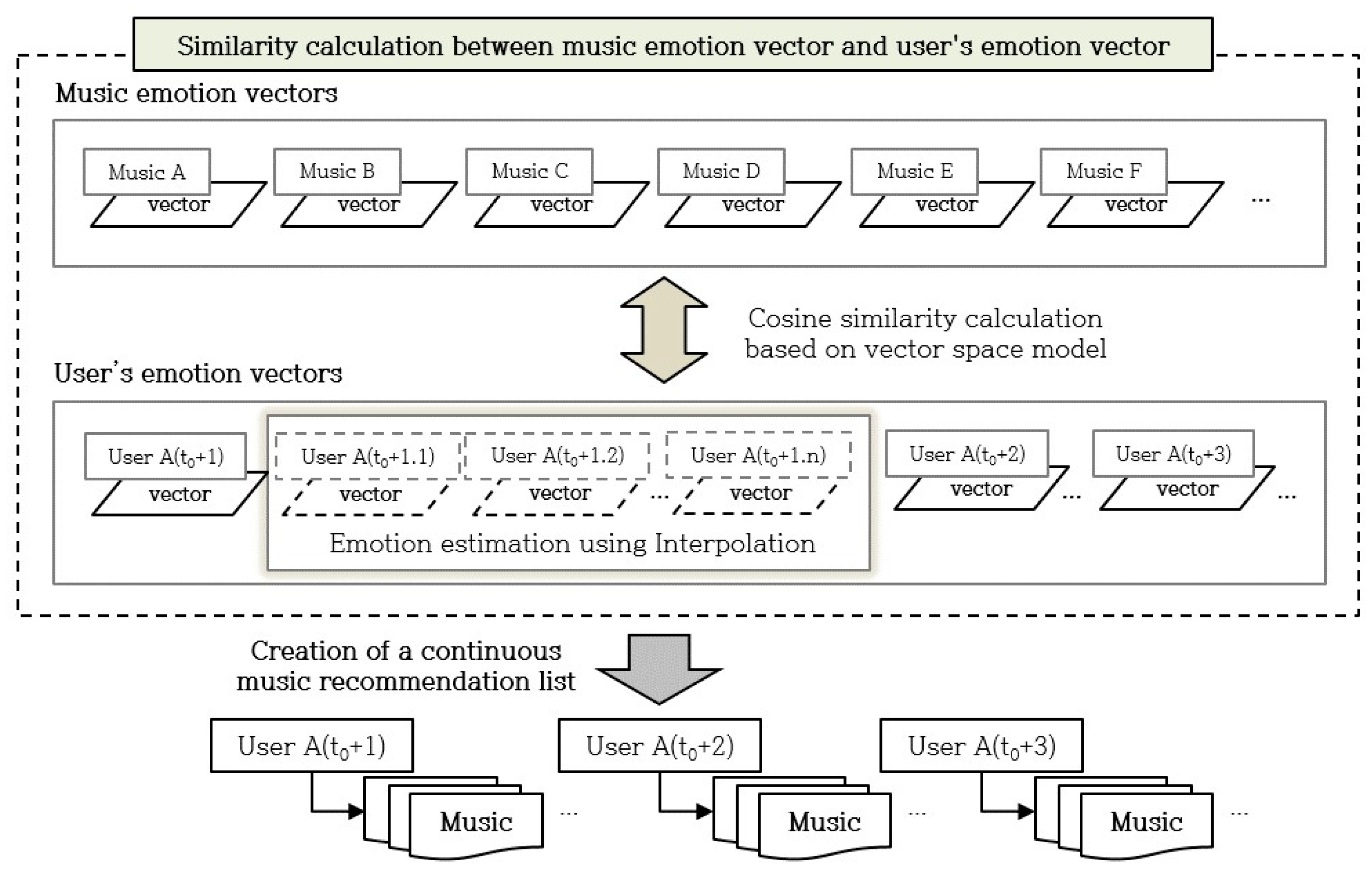

Therefore, in this study, we focus on music, which among the various types of content can significantly affect users' emotions, and propose a continuous music recommendation method that considers emotional changes over time.

2.2. Emotion Model

Psychological research on emotions has been widely applied by computer scientists and artificial intelligence researchers aiming to develop computational systems capable of expressing emotions similarly to humans [23]. Emotion models are primarily categorized into individual emotion, dimensional, and evaluation models. The dimensional theory of emotion uses dimensions rather than individual categories to represent the structure of emotions [24]. It argues that emotions are not independent categories but lie in a space defined by two bipolar axes: valence and arousal [25]. The valence axis represents the degree to which an emotion is positive or negative, and the arousal axis represents its intensity. Valence and arousal are correlated elements that can effectively express emotions [22,26,27]. Two representative figures in emotion model research are Russell [28] and Thayer [29].

Russell [28] argued that emotion is a linguistic interpretation of a comprehensive expression involving various cognitive processes, suggesting that specific theories can explain what appears to be emotion.

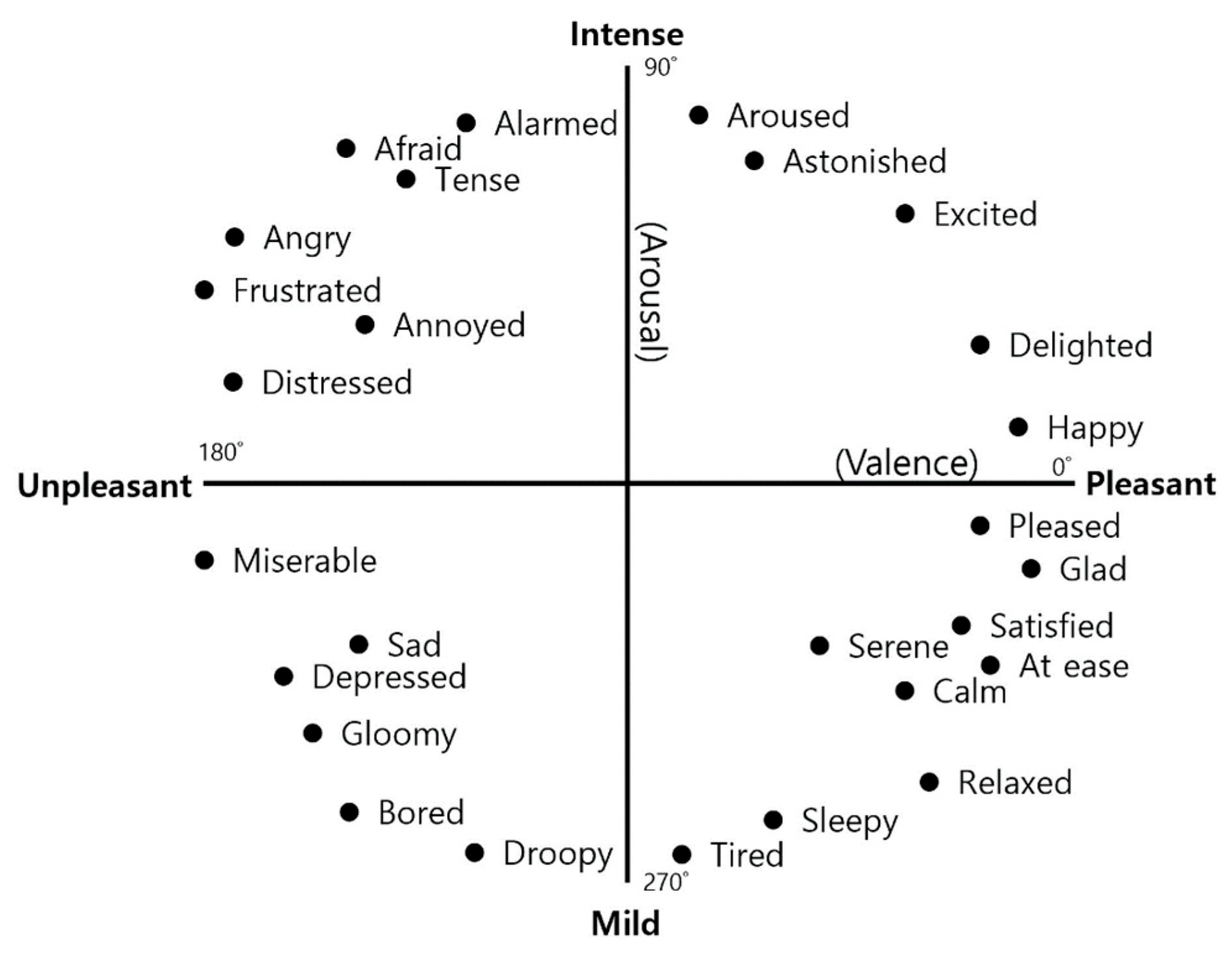

Figure 1 illustrates Russell's emotion model. The horizontal axis represents valence, ranging from unpleasant to pleasant, with pleasantness increasing toward the right. The vertical axis represents arousal, ranging from mild to intense, with intensity increasing upward. According to Russell's notion of internal sensibility, individuals can experience specific feelings through internal processes alone, independent of external factors, and he argued that these experiences can be understood at a simpler level than commonly recognized emotions such as happiness, fear, and anger. However, Russell's model has been criticized as poorly compatible with modern emotions because its adjective-based approach involves overlapping meanings and ambiguous expressions [1]. Thayer's model was proposed to compensate for these shortcomings.

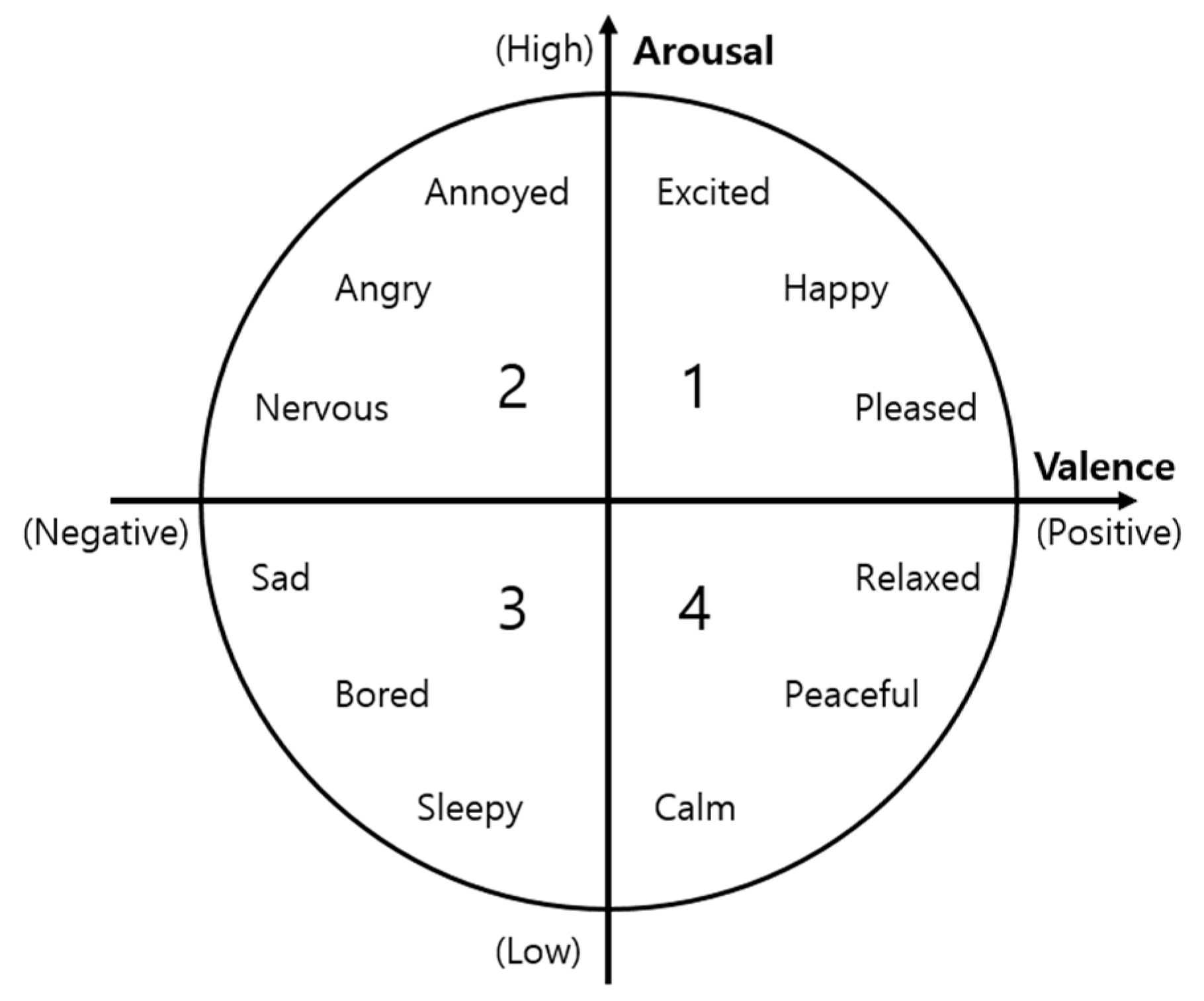

The emotion model presented by Thayer [

29] is illustrated in

Figure 2. This model compensates for the shortcomings of Russell’s emotion model by providing a simplified and structured emotion distribution. It organizes the boundaries between emotions, making it easy to quantify and clearly express the location of emotions [

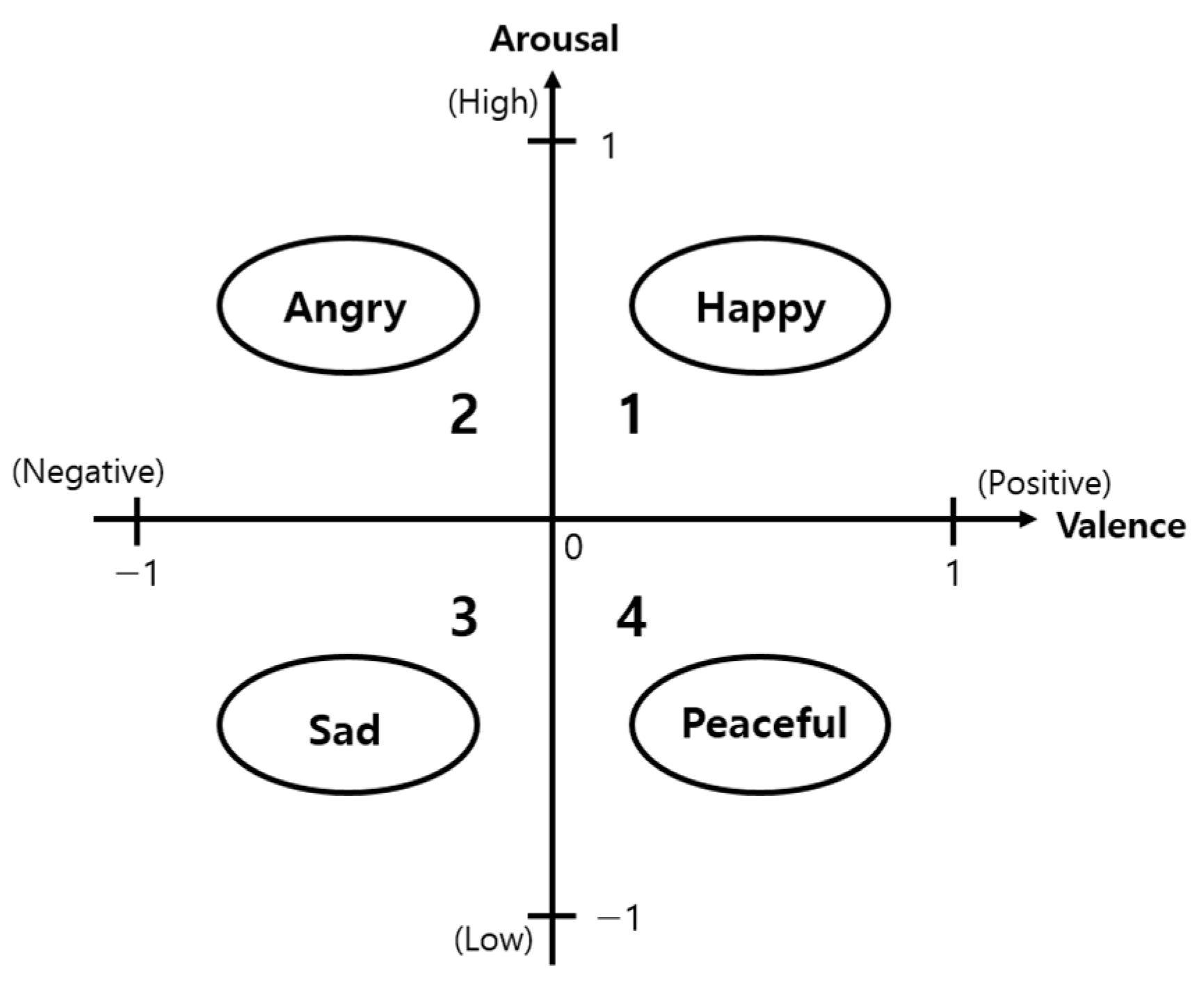

30]. Thayer’s emotion model employs 12 emotion words. In this model, the horizontal axis represents valence, indicating the intensity of positive and negative emotions at the extremes. The vertical axis represents arousal, reflecting the intensity of emotional response from low to high. Positivity increases toward the right on the horizontal axis, while emotional intensity increases upwards on the vertical axis. Each axis value is expressed as a real number between −1 and 1. Thayer’s model is primarily used to express emotions in emotion-based studies.

Based on Thayer's emotion model, emotions have been classified into quadrants according to ranges of valence and arousal values [23]. A model that classifies emotions into eight types according to the characteristics of the content has also been proposed [30]. Like Thayer's established model, these studies place valence on the horizontal axis and arousal on the vertical axis.
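Because each axis of Thayer's model takes a real value between −1 and 1, assigning an emotion to a quadrant reduces to checking the signs of its valence and arousal. The following is a minimal sketch under two assumptions of our own, not taken from the cited studies: quadrants are numbered counterclockwise starting from the positive-valence, high-arousal quadrant, and points lying exactly on an axis go to the non-negative side.

```python
def thayer_quadrant(valence: float, arousal: float) -> int:
    """Return quadrant 1-4 for a point in the valence-arousal plane.

    Assumed numbering: 1 = positive/high arousal, 2 = negative/high arousal,
    3 = negative/low arousal, 4 = positive/low arousal.
    """
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("valence and arousal must lie in [-1, 1]")
    if arousal >= 0.0:
        return 1 if valence >= 0.0 else 2
    return 4 if valence >= 0.0 else 3


print(thayer_quadrant(0.7, 0.5), thayer_quadrant(-0.4, -0.5))
```

Finer schemes, such as the eight-type model in [30], would subdivide the plane further, for example by angle, instead of relying on signs alone.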

Moon et al. [32] conducted an experiment in which users rated their emotions toward music using Thayer's emotion model. To express emotions as a single point, each emotional term was rated on a five-point scale, and users could select at most three terms. If multiple terms were selected, their total score could not exceed five points, and opposite extreme terms could not be rated simultaneously. Because emotions can be felt in complex ways and their intensity varies among individuals, this constrained evaluation method may not capture users' emotions accurately.
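The rating constraints described for Moon et al. can be stated precisely as a validity check on one user's input. This is a minimal sketch, assuming a rating is a mapping from term to level where 0 means "not selected"; the pairs of opposite terms below are hypothetical placeholders, since the study's exact pairs are not listed here.

```python
# Hypothetical pairs of opposite ("extreme") terms.
OPPOSITE_PAIRS = [frozenset({"happy", "sad"}), frozenset({"excited", "sleepy"})]


def is_valid_rating(rating: dict[str, int]) -> bool:
    """Check one user's term ratings against the constraints described above."""
    if any(level < 0 for level in rating.values()):
        return False
    selected = {term for term, level in rating.items() if level > 0}
    if len(selected) > 3:  # at most three emotional terms
        return False
    if sum(rating[term] for term in selected) > 5:  # combined score capped at five
        return False
    # Opposite terms may not be rated together.
    return not any(pair <= selected for pair in OPPOSITE_PAIRS)


print(is_valid_rating({"happy": 3, "excited": 2}), is_valid_rating({"happy": 2, "sad": 1}))
```

The check makes the limitation concrete: a listener who feels strong happiness and strong excitement at the same time cannot report both at full intensity, because the combined cap forces a trade-off.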

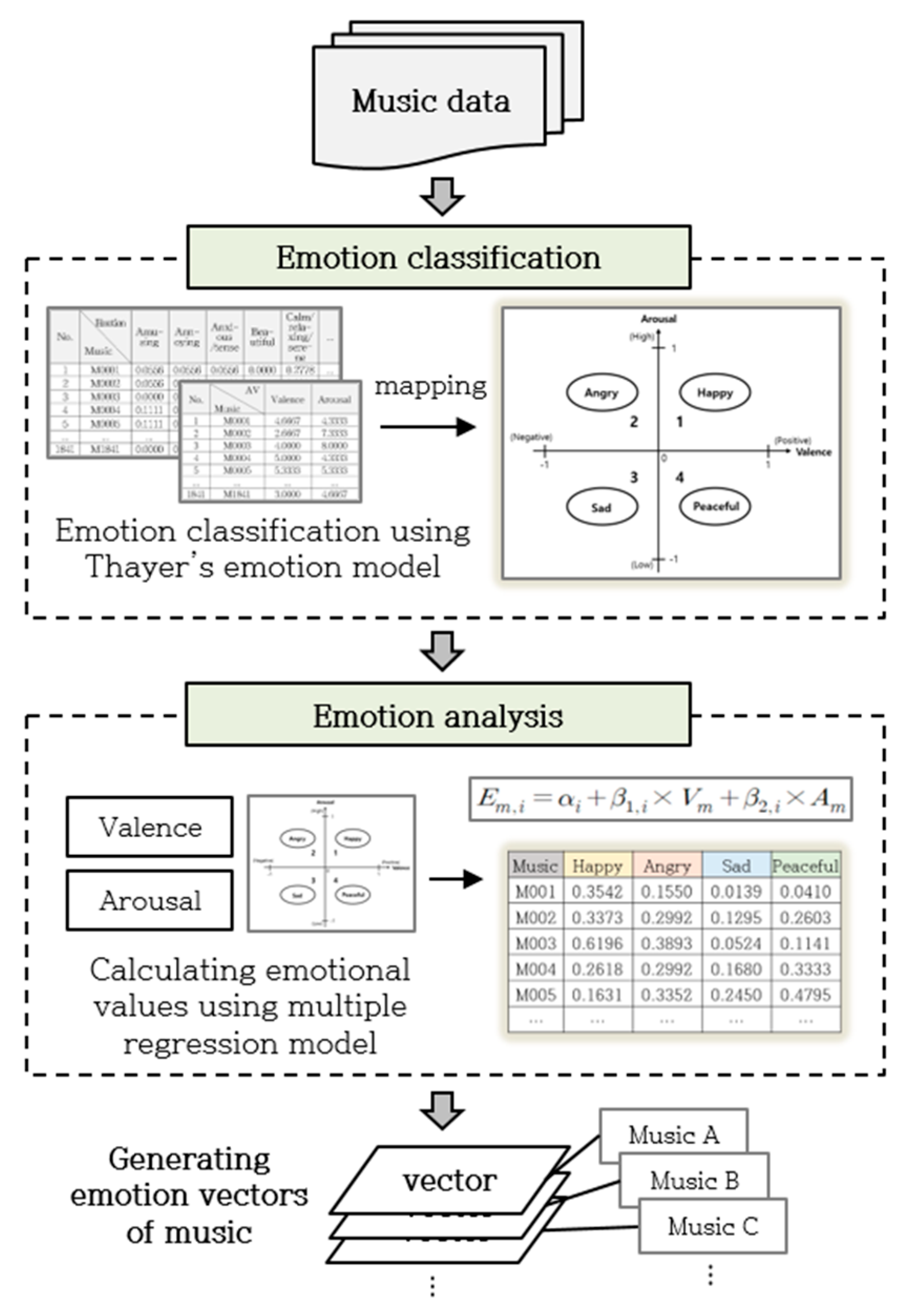

In this study, we generated music and user emotion vectors by dividing Thayer's emotion space into four quadrant-based emotion areas. Music emotion vectors were created from valence and arousal values, and user emotion vectors were generated from users' ratings of the emotional terms in each quadrant.

2.3. Emotion Analysis

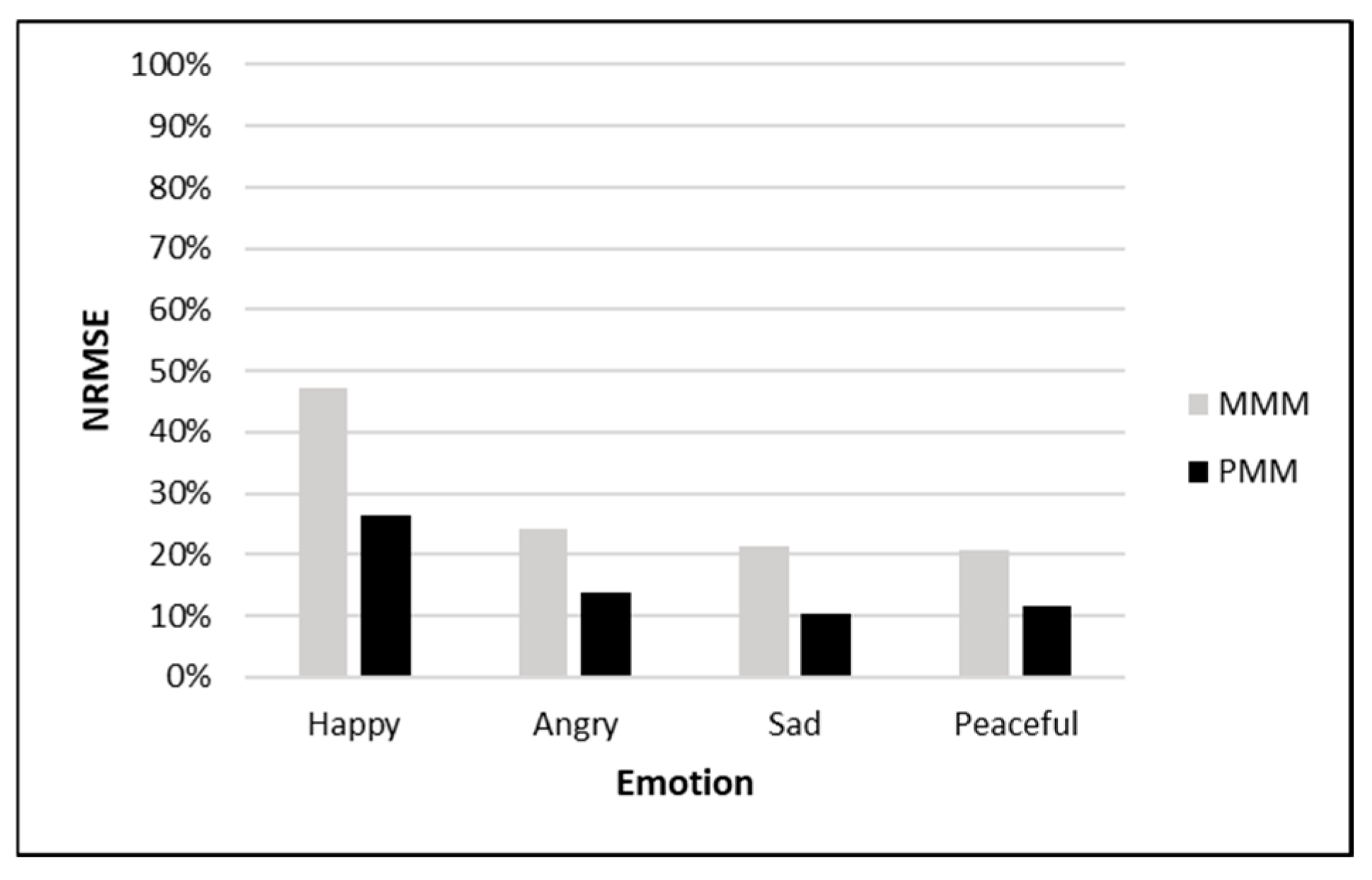

Various studies have analyzed the emotions conveyed by content. Laurier et al. [33] classified music emotions using audio information and lyrics, selecting four emotion categories: angry, happy, sad, and relaxed. Emotion analysis performed better when audio and lyrics were used together than when lyrics were used alone. Hu et al. [34] also classified music emotions using audio and lyrics. They created emotion categories from tag information and compared three inputs: audio, lyrics, and audio plus lyrics. Performance varied by category: audio performed best for categories such as happy and calm, whereas lyrics performed best for the romantic and angry categories.

A previous study [20] on visualizing music based on emotion analysis of lyric text linked the lyrics to video visualization rules using Google's natural language processing API. The emotion analysis extracted score and magnitude values, which were mapped to valence and arousal to locate each emotion. The score represents the polarity of the emotion (positive or negative), and the magnitude represents its intensity. On this basis, six emotions (happy, calm, sad, angry, anticipated, and surprised) were further classified into ten emotions according to their intensity levels, and a rule was proposed for setting emotion ranges from the values returned by Google's natural language processing emotion analysis API.
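Google's Natural Language sentiment analysis returns a score in [−1, 1] and a non-negative, unbounded magnitude, so placing a result in Thayer's plane requires a rescaling step for magnitude. The sketch below is one such mapping and not the rule from [20]: it assumes valence equals the score, and arousal is the magnitude clipped at a hypothetical ceiling `max_magnitude` and rescaled linearly to [−1, 1].

```python
def sentiment_to_av(score: float, magnitude: float, max_magnitude: float = 4.0) -> tuple[float, float]:
    """Map a (score, magnitude) sentiment pair to (valence, arousal) in [-1, 1].

    ASSUMPTION: max_magnitude is a hypothetical ceiling; magnitudes above it
    are treated as maximal arousal.
    """
    if not -1.0 <= score <= 1.0:
        raise ValueError("score must lie in [-1, 1]")
    if magnitude < 0.0:
        raise ValueError("magnitude must be non-negative")
    valence = score  # score already encodes polarity on [-1, 1]
    arousal = 2.0 * min(magnitude / max_magnitude, 1.0) - 1.0  # [0, ceiling] -> [-1, 1]
    return valence, arousal


print(sentiment_to_av(0.8, 3.0))
```

Magnitude tends to grow with text length, so a fixed ceiling is a simplification; a per-corpus ceiling (for example, a high percentile of observed magnitudes) is another option.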

In a previous study by Moon et al. [32] analyzing the relationship between mood and color according to music preference, multiple users were asked to select mood words for a piece of music and the colors associated with those moods. Emotional coordinates were calculated with Thayer's model from the emotional intensity values that several users entered while listening to the music. For a specific piece of music, the intensities of each emotion were summed across users using Equation (1).

In Equation (1), the left-hand side is the sum of the levels of a given emotion for the music, and each summand is the level of that emotion entered by one user for the music. Using the results from Equation (1), the coordinate values for each emotion in the arousal-valence (AV) space were computed, as shown in Equation (2).

Here, $x_i$ represents the valence value of emotion $i$ in the AV space, $y_i$ represents the arousal value of emotion $i$ in the AV space, and $\theta_i$ represents the angle of emotion $i$ in the AV space. $\theta_i$ is defined recursively in terms of the preceding angle, with 15° as the constant in this definition. The average of the coordinate values computed for the emotions was then obtained using Equation (3) to identify the representative emotion of the music.
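The three steps can be sketched in code under explicit assumptions, since the exact formulas are not reproduced here. This sketch assumes that Equation (1) sums each emotion's level over users, that Equation (2) places that sum at angle $\theta_i$ as $(S_i\cos\theta_i, S_i\sin\theta_i)$, that the 12 Thayer terms are spaced evenly, with $\theta_1 = 15°$ and each subsequent angle 30° larger (one possible reading of the recursive definition), and that Equation (3) is the arithmetic mean of the per-emotion points.

```python
import math


def moon_style_coordinates(levels_by_user, start_deg=15.0, step_deg=30.0):
    """Sketch of Equations (1)-(3) under assumed angles; returns sums, points, mean point."""
    n_emotions = len(levels_by_user[0])
    # Eq. (1) analogue: total level of each emotion over all users.
    sums = [sum(user[i] for user in levels_by_user) for i in range(n_emotions)]
    # Eq. (2) analogue: place each sum on its own angle in the AV plane.
    # ASSUMPTION: theta_1 = start_deg and theta_i = theta_(i-1) + step_deg.
    points = []
    for i, total in enumerate(sums):
        theta = math.radians(start_deg + step_deg * i)
        points.append((total * math.cos(theta), total * math.sin(theta)))
    # Eq. (3) analogue: arithmetic mean of the per-emotion coordinates.
    x_mean = sum(x for x, _ in points) / n_emotions
    y_mean = sum(y for _, y in points) / n_emotions
    return sums, points, (x_mean, y_mean)


# Two hypothetical users; only the first of the 12 terms receives a rating.
users = [[3] + [0] * 11, [2] + [0] * 11]
sums, points, (vx, ay) = moon_style_coordinates(users)
print(sums[0], round(math.degrees(math.atan2(ay, vx)), 6))
```

With evenly spaced angles, identical ratings on all 12 terms cancel out to the origin, so the mean point carries both the dominant direction and the strength of agreement among the ratings.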

In a previous study, Kim et al. [30] designed a method to predict emotions by analyzing various elements of music. They derived an emotion formula that weights five music elements (tempo, dynamics, amplitude change, brightness, and noise) and mapped the result onto a two-dimensional emotion coordinate system as X and Y coordinates. These X and Y values were then plotted on a two-dimensional plane, and a circle was drawn to determine the minimum and maximum radii that could encompass all eight emotions. The probability of each emotion was calculated from the proportion of that emotion's area within the circle, and these probabilities were compared with the emotions that actual users reported in a survey. However, because individuals conceptualize emotions differently, this method is limited in how accurately it can quantify emotions.
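The area-proportion step can be illustrated with a Monte Carlo estimate. This is a minimal sketch under an assumption of our own: the eight emotions occupy equal 45° sectors around the origin, counted counterclockwise from the positive X axis, and each emotion's probability is the fraction of the circle's area that falls inside its sector. The circle's center and radius stand in for the values produced by the cited emotion formula.

```python
import math
import random


def sector_probabilities(cx, cy, radius, n_sectors=8, samples=100_000, seed=0):
    """Estimate the share of a circle's area lying in each equal angular sector."""
    rng = random.Random(seed)
    counts = [0] * n_sectors
    width = 360.0 / n_sectors
    for _ in range(samples):
        # Uniform point inside the circle: sqrt keeps the density uniform in area.
        r = radius * math.sqrt(rng.random())
        phi = 2.0 * math.pi * rng.random()
        px, py = cx + r * math.cos(phi), cy + r * math.sin(phi)
        angle = math.degrees(math.atan2(py, px)) % 360.0
        # The extra modulo guards against rounding that yields exactly 360.0.
        counts[int(angle // width) % n_sectors] += 1
    return [c / samples for c in counts]


print(sector_probabilities(0.8, 0.3, 0.05))
```

A small circle far from the origin falls entirely within one sector, whereas a circle centered at the origin spreads its area evenly, so the radius directly controls how mixed the predicted emotions are.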

Analyzing emotions from music lyrics or audio information alone has limited accuracy. Lyrics sometimes express emotions explicitly, but because they are generally poetic and carry deep meanings, predicting emotions from them accurately is difficult; likewise, depending on the genre, audio information alone has limited power to convey the emotions of modern music. Because the emotions experienced when listening to music may vary with individual emotional fluctuations and contexts, it is crucial to analyze how users themselves experience emotions while listening.

Moon et al. [36] aimed to enhance music search performance by employing folksonomy-based mood tags and music AV tags. A folksonomy is a classification system built from user-assigned tags. They first developed an AV prediction model for music based on Thayer's emotion model to predict the AV values of songs and assign internal tags to them. They then established a mapping between the constructed music folksonomy tags and the AV values of the music, which enables music search using tags, AV values, and their mapping information.
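A tag-to-AV mapping of this kind lets a text tag act as a query point in the AV plane. The sketch below is illustrative only: the tags, track identifiers, and all AV values are hypothetical, each tag is represented by a single AV point, and a search returns the tracks whose predicted AV values lie closest in Euclidean distance.

```python
import math

# Hypothetical tag-to-AV mapping and per-track predicted (valence, arousal) values.
TAG_AV = {"chill": (0.5, -0.6), "party": (0.8, 0.8), "melancholy": (-0.6, -0.4)}
TRACK_AV = {"t1": (0.6, -0.5), "t2": (0.7, 0.9), "t3": (-0.5, -0.3), "t4": (0.3, -0.7)}


def search_by_tag(tag: str, k: int = 2) -> list[str]:
    """Return the k tracks whose predicted AV point is closest to the tag's AV point."""
    target = TAG_AV[tag]
    return sorted(TRACK_AV, key=lambda t: math.dist(target, TRACK_AV[t]))[:k]


print(search_by_tag("chill"))
```

Because tags and tracks share one coordinate space, a track can be retrieved for a tag it was never explicitly labeled with.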

Cowen et al. [22] classified the emotions evoked by music into 13 categories: amusement (fun), irritation (displeasure), anxiety (worry), beauty, peace (relaxation), dreaminess, vitality, sensuality, rebellion (anger), joy, sadness (depression), fear, and victory (excitement). American and Chinese participants listened to music of various genres, selected the emotions they felt, and rated their intensity; analysis of these responses yielded the 13 categories. The study also revealed a correlation between these emotions and the dimensions of valence and arousal.

Beyond music, a study [37] on the emotions evoked by paintings asked users to indicate the ratio of positive to negative emotions they felt toward artworks. Additionally, a study [38] on emotions in movies derived emotions from movie review data.

Individual emotions have been analyzed with various methods across many fields. Psychology, the discipline dedicated to emotion, actively researches human emotions; people infer emotions from social cues, facial expressions, and voices [39,40,41]. Research has advanced from inferring emotions to predicting behavior based on other people's emotions and behavior [42,43]. These findings indicate that humans can predict the emotions of others and that current emotions can also predict future emotional states [26].

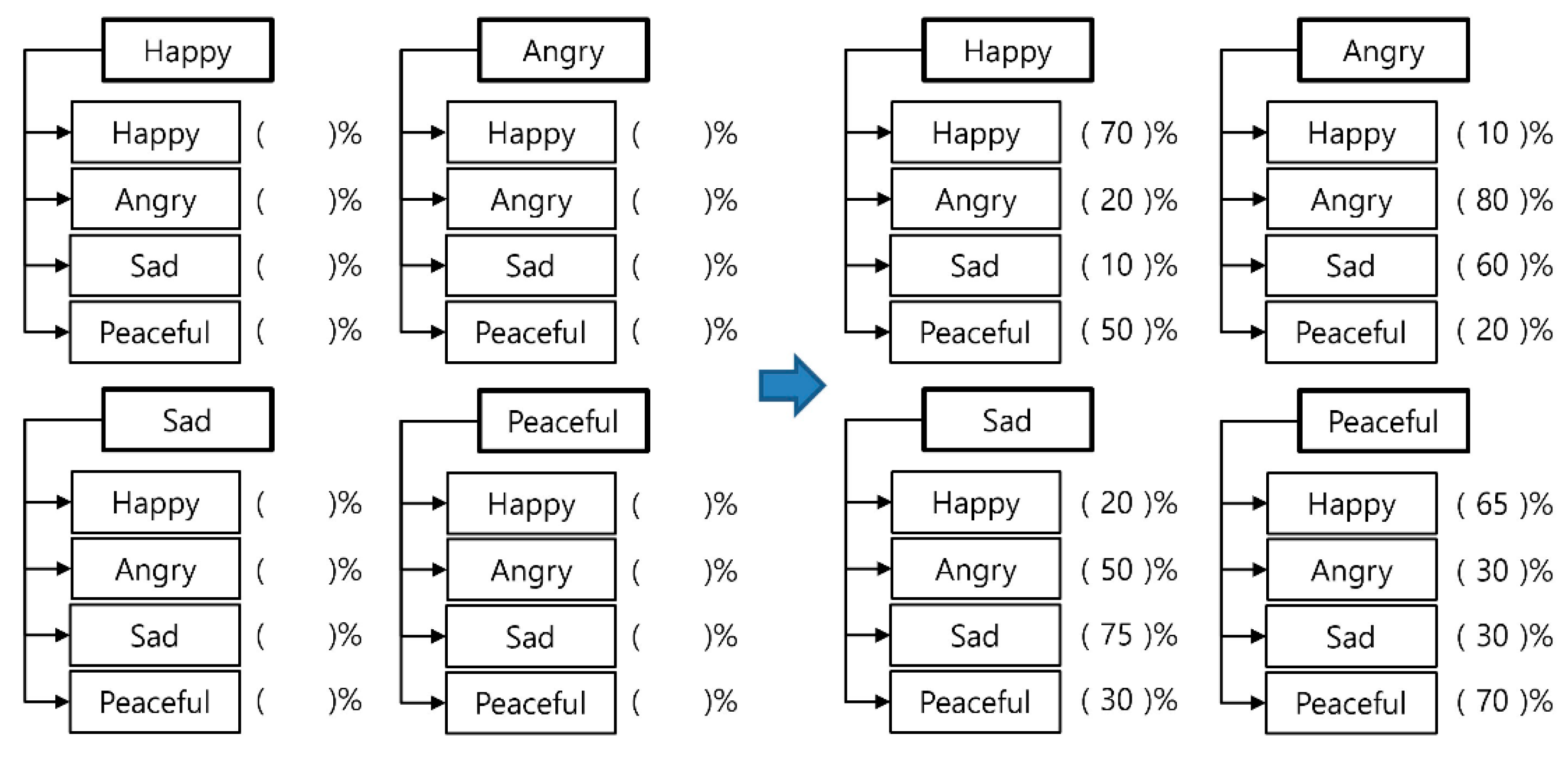

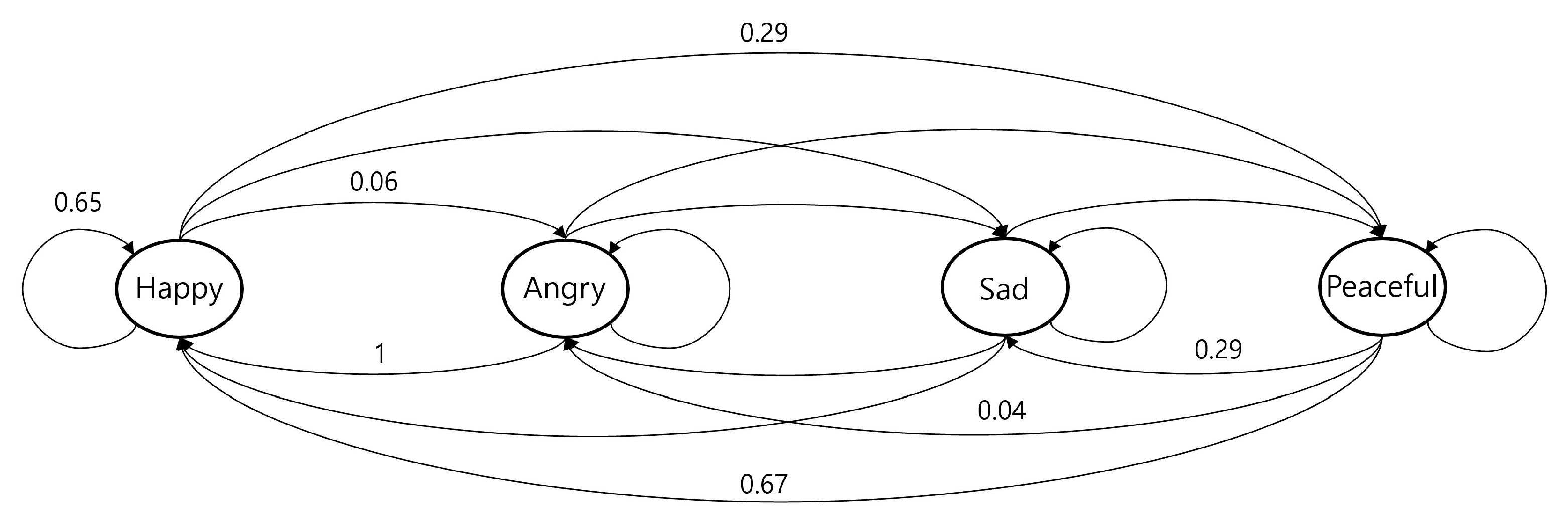

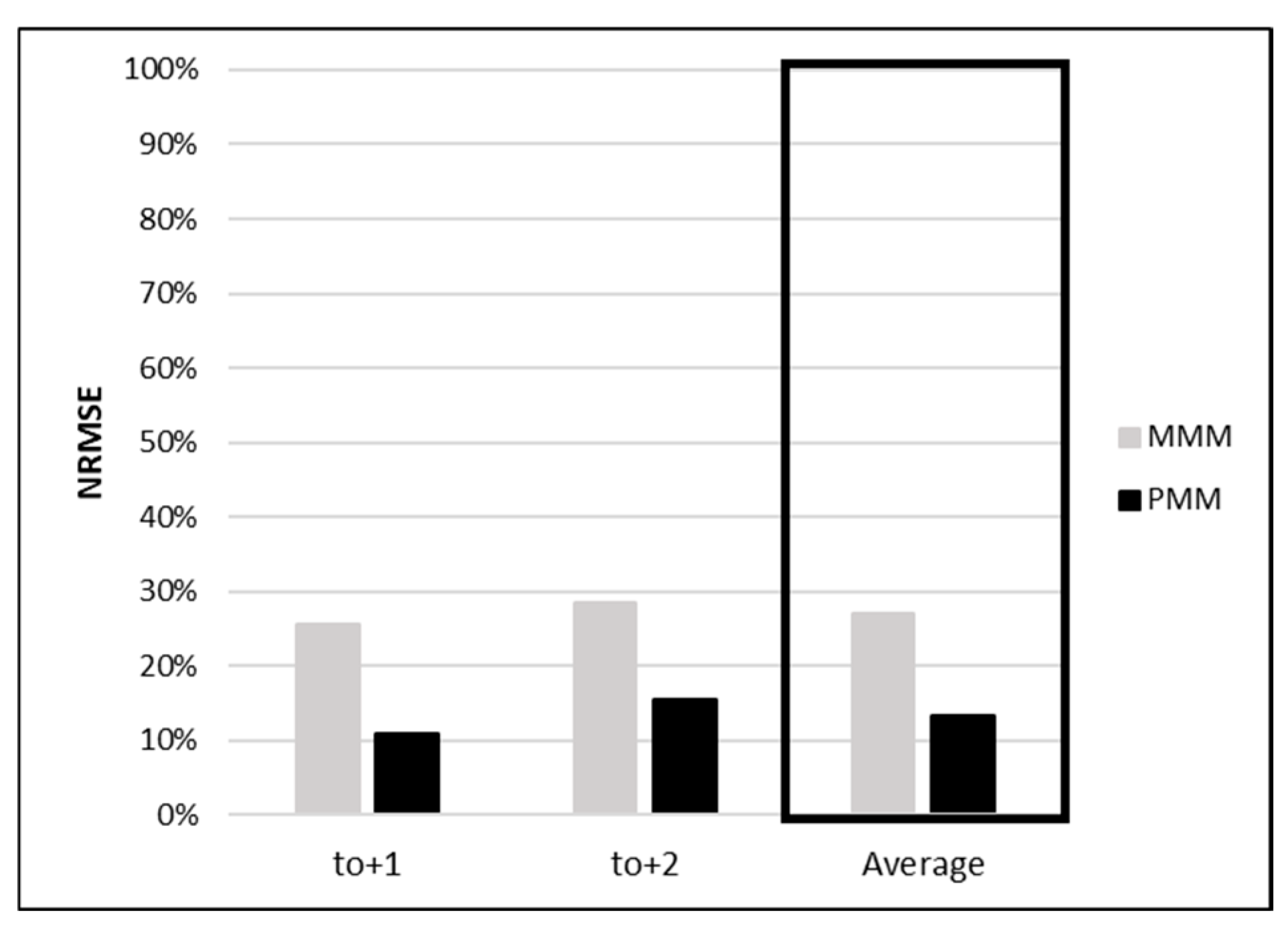

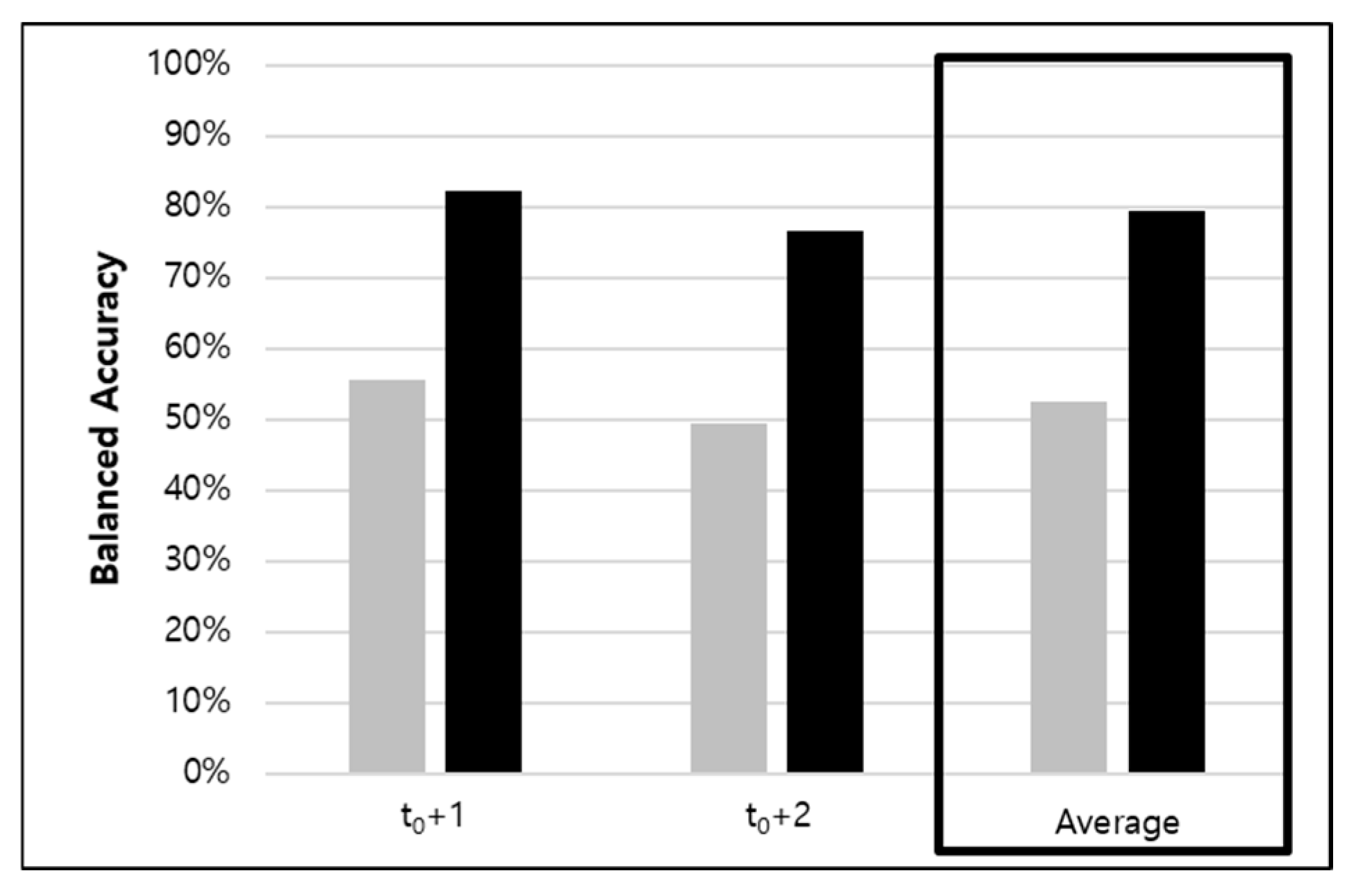

Thornton et al. [26] investigated how accurately mental models predict emotional transitions. Participants rated the probability of transitioning between the 18 emotion words used in the study by Trampe et al. [13] on a scale from 0 to 100, and the average transition ratings formed the mental model.

To assess the accuracy of this mental model, they used a Markov chain to measure how well the participants' ratings predicted real emotional transitions. Using actual emotional history data, the experiment showed significant predictive ability for emotions up to two transitions ahead.
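The Markov chain step can be sketched as follows. This assumes that the averaged 0 to 100 ratings are row-normalized into transition probabilities and that predicting n transitions ahead means multiplying the current state distribution by the transition matrix n times. The three emotions and their ratings are hypothetical; the cited study uses 18 emotion words.

```python
EMOTIONS = ["happy", "calm", "sad"]  # hypothetical subset of emotion words
RATINGS = [  # hypothetical averaged 0-100 transition ratings (row = current emotion)
    [60, 30, 10],
    [30, 50, 20],
    [10, 30, 60],
]


def normalize_rows(ratings):
    """Turn nonnegative transition ratings into row-stochastic probabilities."""
    probs = []
    for row in ratings:
        total = sum(row)
        probs.append([r / total for r in row])
    return probs


def predict(dist, P, steps):
    """Propagate a distribution over emotions `steps` transitions ahead."""
    n = len(P)
    for _ in range(steps):
        # One Markov step: new[j] = sum over i of dist[i] * P[i][j].
        dist = [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]
    return dist


P = normalize_rows(RATINGS)
print([round(p, 2) for p in predict([1.0, 0.0, 0.0], P, 2)])
```

Starting from "happy", the two-step prediction gives 0.46, 0.36, and 0.18 for happy, calm, and sad. Each entry sums over every intermediate emotion the user might pass through.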

However, because this mental model generalizes across participants' individual emotions, it is prone to errors. Furthermore, because each transition was evaluated in isolation as a one-to-one pairwise probability, the model does not consider a user's complete emotional history or its temporal aspects. More accurate predictions could be achieved with each individual's personalized emotional transition probabilities. Beyond such ratings, brainwave data, facial expressions, and psychological tests have also been used to analyze user emotions.
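Personalized transition probabilities can be estimated directly from a single user's emotion history by counting consecutive pairs. In this minimal sketch, the history and emotion labels are hypothetical, and additive smoothing (`alpha`) is our assumption: it keeps transitions the user has not yet exhibited at a small nonzero probability and avoids dividing by zero for emotions with no observed outgoing transitions.

```python
def personal_transition_matrix(history, emotions, alpha=1.0):
    """Estimate P[i][j] = P(next = emotions[j] | current = emotions[i]) from one history."""
    index = {e: k for k, e in enumerate(emotions)}
    n = len(emotions)
    counts = [[alpha] * n for _ in range(n)]  # additive smoothing for unseen transitions
    for current, nxt in zip(history, history[1:]):
        counts[index[current]][index[nxt]] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        matrix.append([c / total for c in row])
    return matrix


history = ["happy", "happy", "calm", "sad", "calm", "happy"]
print(personal_transition_matrix(history, ["happy", "calm", "sad"]))
```

Unlike the averaged mental model, the resulting matrix reflects only this user's own sequence and can be updated as new emotion records arrive.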

In this study, we selected music because it is commonly used in emotion analysis research and is known to affect emotions. To analyze music emotions, we used the music emotion data of Cowen et al. [22] and applied a multiple regression model to analyze the relationship between valence and arousal within each emotional domain. To analyze user emotions, we used the data of Trampe et al. [13], taking into account the limitations identified in the mental model proposed by Thornton et al. [26].