A Deep Learning-Driven CAD for Breast Cancer Detection via Thermograms: A Compact Multi-Architecture Feature Strategy

Abstract

1. Introduction

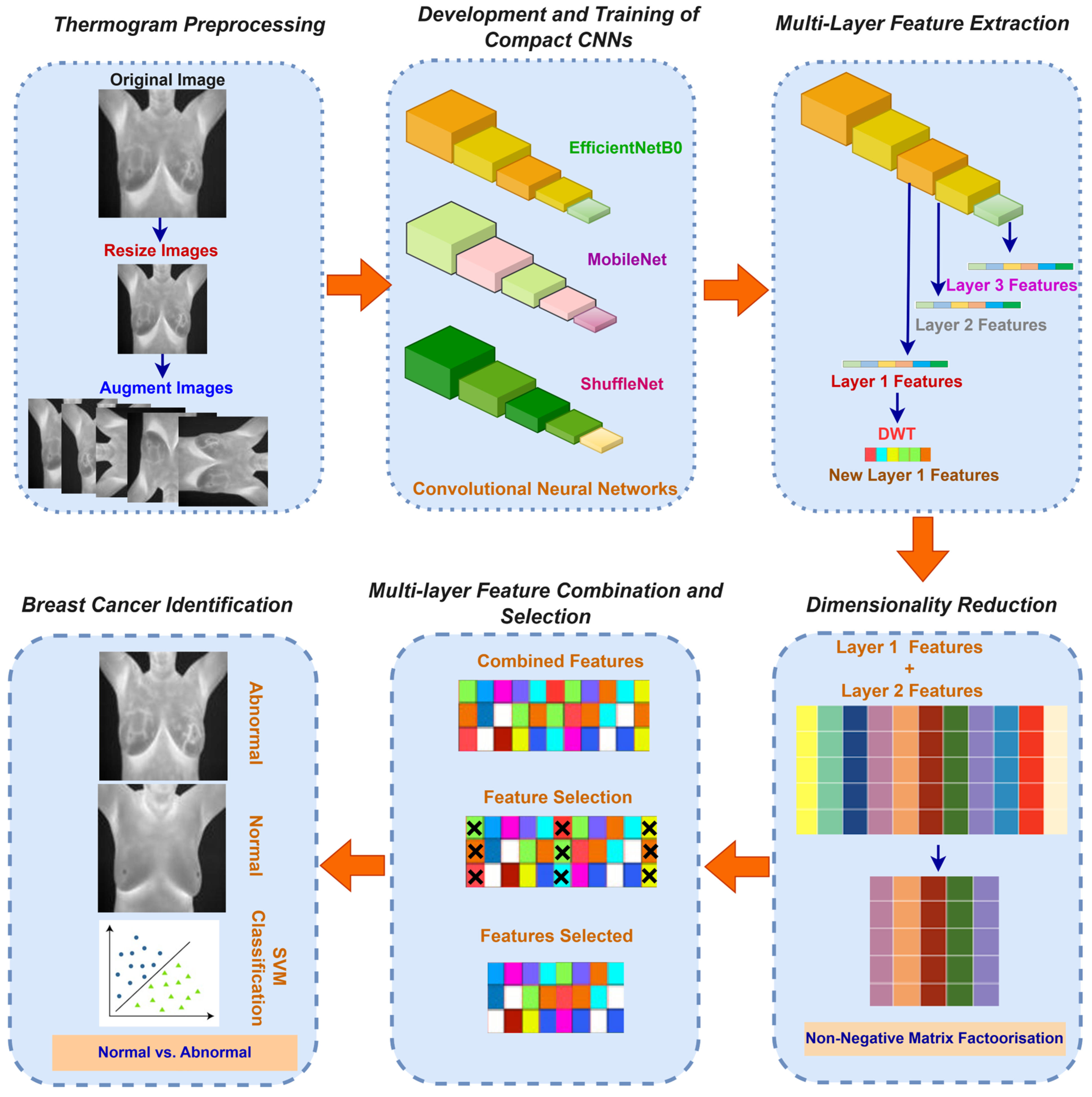

- Constructing a CAD paradigm based on compact CNN models instead of deep learning models with complex architectures and large numbers of layers and parameters.

- Implementing multi-level, multi-model feature fusion: deep features are extracted from several layers of three distinct CNN architectures and merged, and a feature selection (FS) technique then retains the features with the greatest impact on classification performance.

- Using feature transformation approaches, namely the discrete wavelet transform (DWT) and non-negative matrix factorization (NNMF), to lower feature dimensionality and thereby reduce classification complexity.

- Streamlining the detection workflow by removing redundant processing and segmentation stages, thus reducing the computational burden.

2. Previous Works

3. Materials and Methods

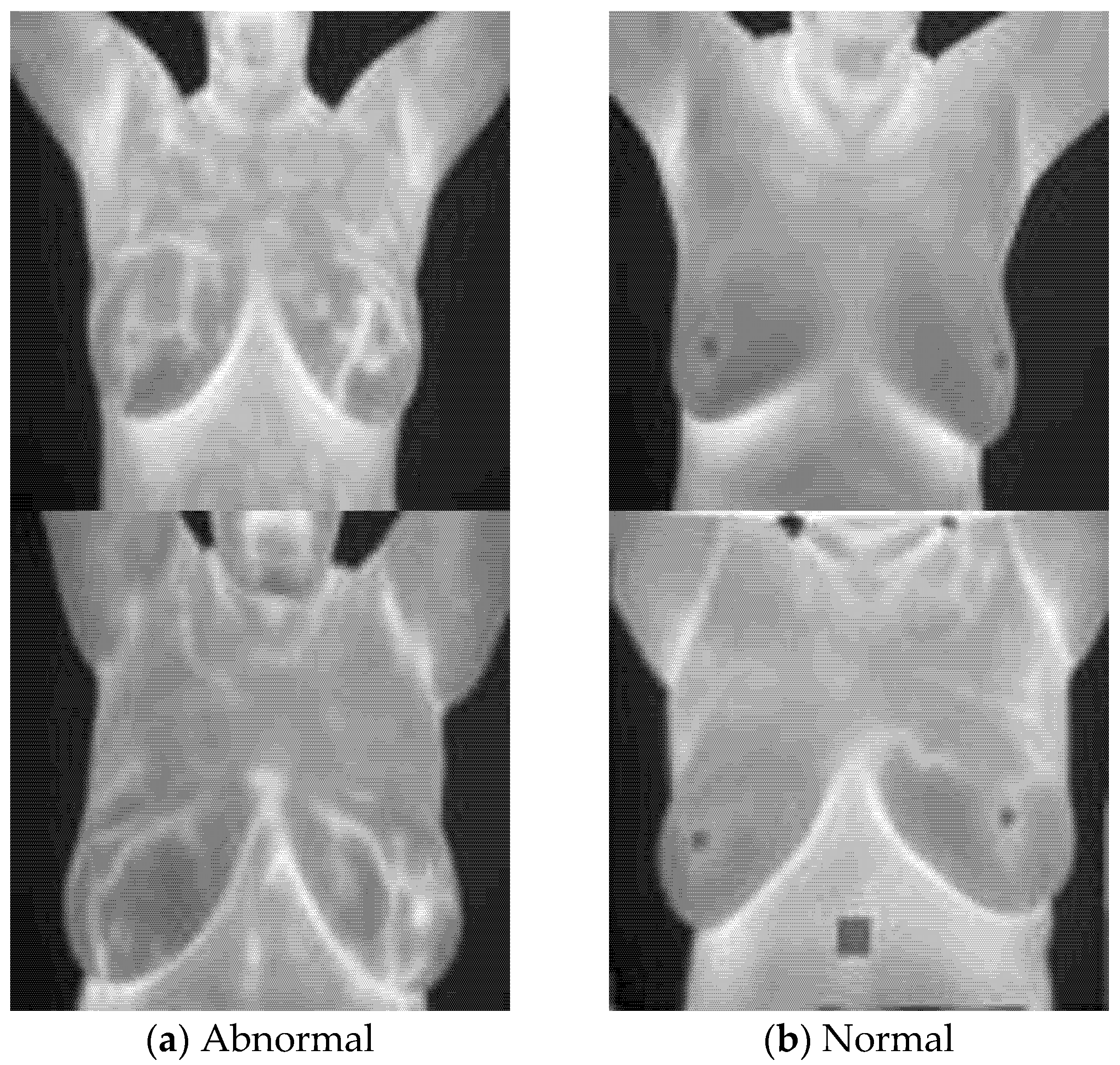

3.1. Breast Cancer Thermograms Dataset

3.2. Feature Transformation and Reduction Approaches

3.2.1. Discrete Wavelet Transform

3.2.2. Non-Negative Matrix Factorization

- W: A matrix of size m × r that serves as a basis for the data, where each column represents a distinct component or part.

- H: A matrix of size r × n containing the coefficients or weights corresponding to the components in W.
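With W (m × r) and H (r × n) as defined above, NNMF approximates the nonnegative m × n data matrix by their product. A minimal way to fit such a factorization is the multiplicative update rule of Lee and Seung [52] for the Frobenius-norm objective. The NumPy sketch below is an illustration under stated assumptions: the function name `nnmf`, the random initialization, the iteration count, and the choice to store features as rows and thermograms as columns (so that the columns of H act as the reduced feature vectors) are all hypothetical, not settings reported for the proposed CAD.

```python
import numpy as np

def nnmf(V: np.ndarray, r: int, n_iter: int = 300, eps: float = 1e-10, seed: int = 0):
    """Factorize a nonnegative m x n matrix V as W @ H (W: m x r basis, H: r x n coefficients).

    Lee-Seung multiplicative updates for ||V - W H||_F^2. Each update multiplies
    by a ratio of nonnegative terms, so W and H stay nonnegative throughout.
    """
    if np.any(V < 0):
        raise ValueError("NNMF requires a nonnegative input matrix")
    rng = np.random.default_rng(seed)
    m, n = V.shape
    W = rng.random((m, r)) + eps
    H = rng.random((r, n)) + eps
    for _ in range(n_iter):
        H *= (W.T @ V) / ((W.T @ W) @ H + eps)
        W *= (V @ H.T) / (W @ (H @ H.T) + eps)   # parenthesized to keep the products small
    return W, H

# Hypothetical data: 1280 nonnegative deep features (rows) for 100 thermograms (columns).
rng = np.random.default_rng(42)
V = rng.random((1280, 100))
W, H = nnmf(V, r=50)
reduced = H.T                     # one 50-D coefficient vector per thermogram
print(W.shape, H.shape, reduced.shape)   # (1280, 50) (50, 100) (100, 50)
rel_err = np.linalg.norm(V - W @ H) / np.linalg.norm(V)
print(f"relative reconstruction error: {rel_err:.3f}")
```

The rank r plays the role of the reduced dimensionality; in practice it would be tuned, as in the parameter analysis of the number of NNMF features.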

3.3. Presented CAD

3.3.1. Thermogram Preprocessing

3.3.2. Development and Training of Compact CNNs

3.3.3. Multi-Layer Feature Extraction

3.3.4. Dimensionality Reduction

3.3.5. Multi-Layer Feature Combination and Selection

3.3.6. Breast Cancer Identification

4. Experimental Settings

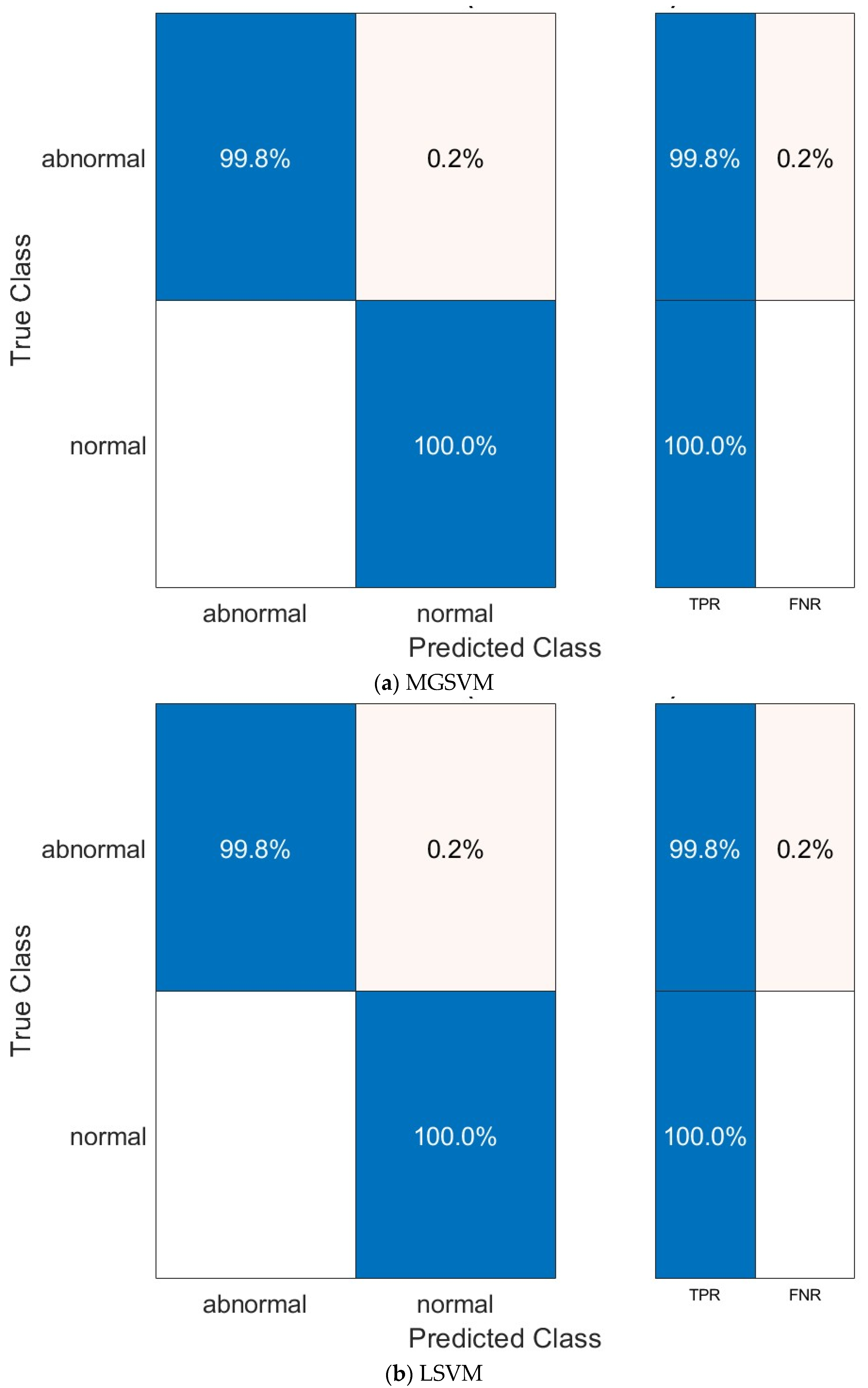

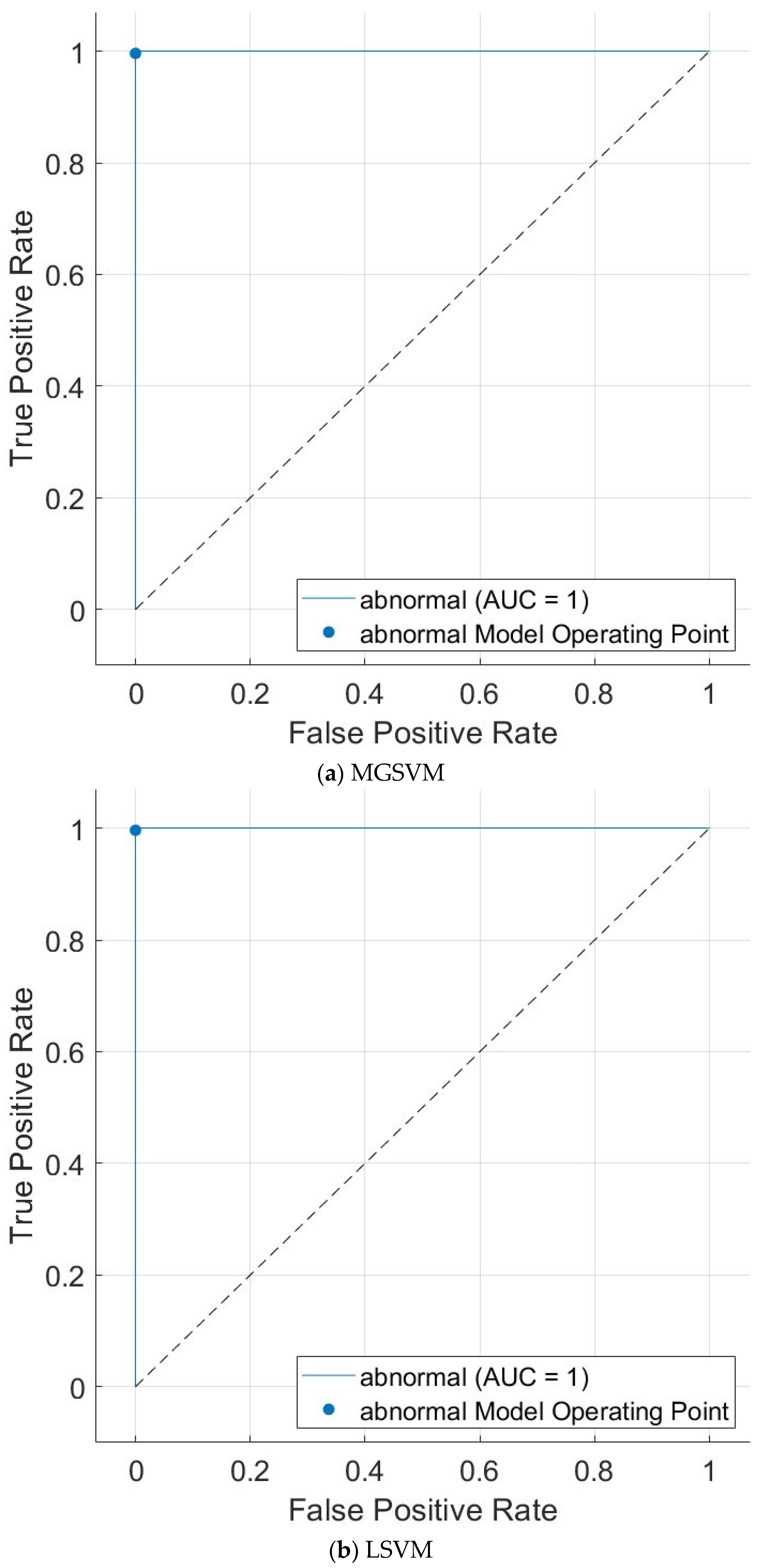

5. Results

5.1. Ablation Study

5.2. Parameter Analysis

5.2.1. Reduced Dimensionality

5.2.2. Feature Fusion

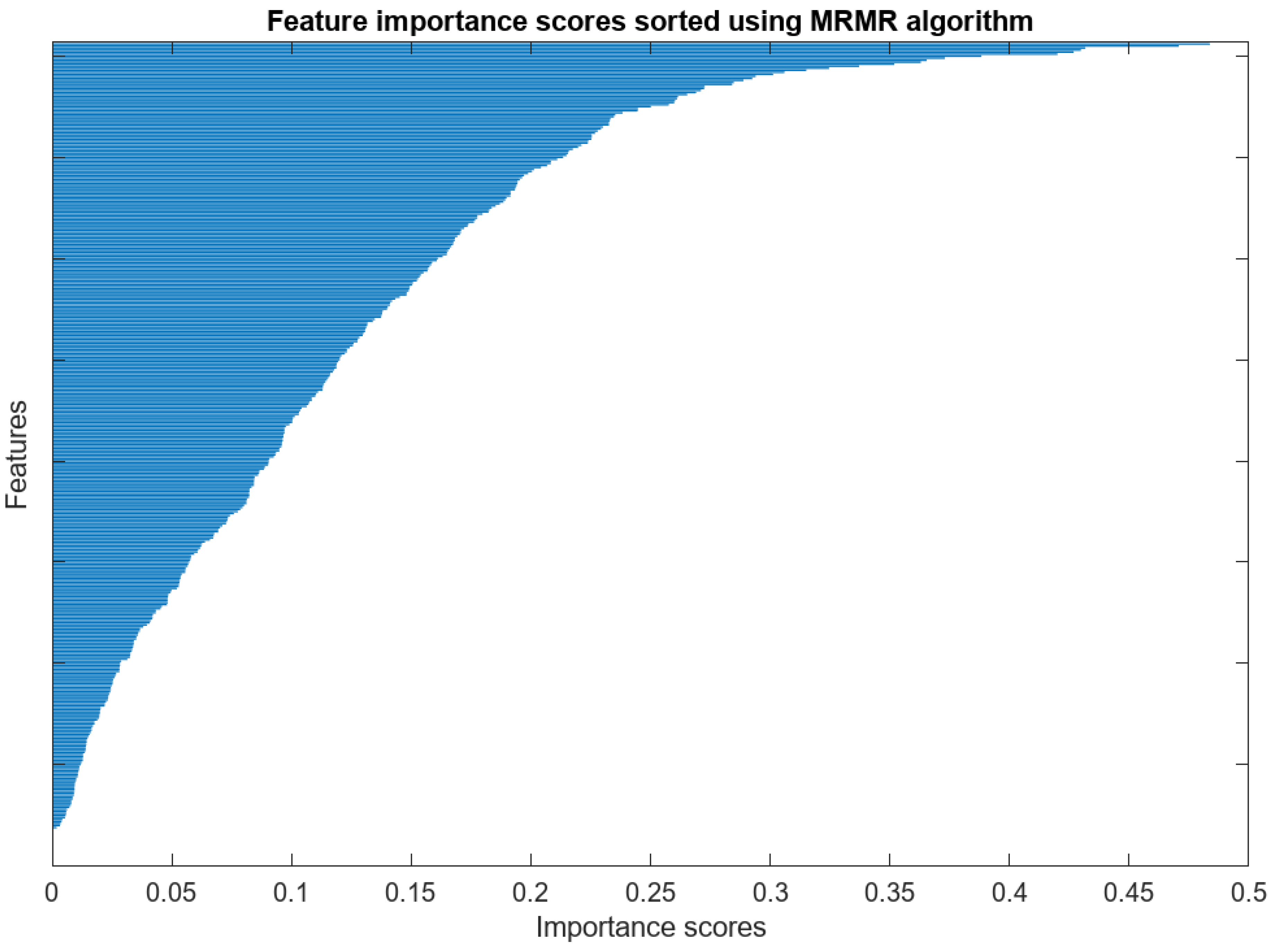

5.2.3. Feature Selection

5.3. Cutting-Edge Comparisons

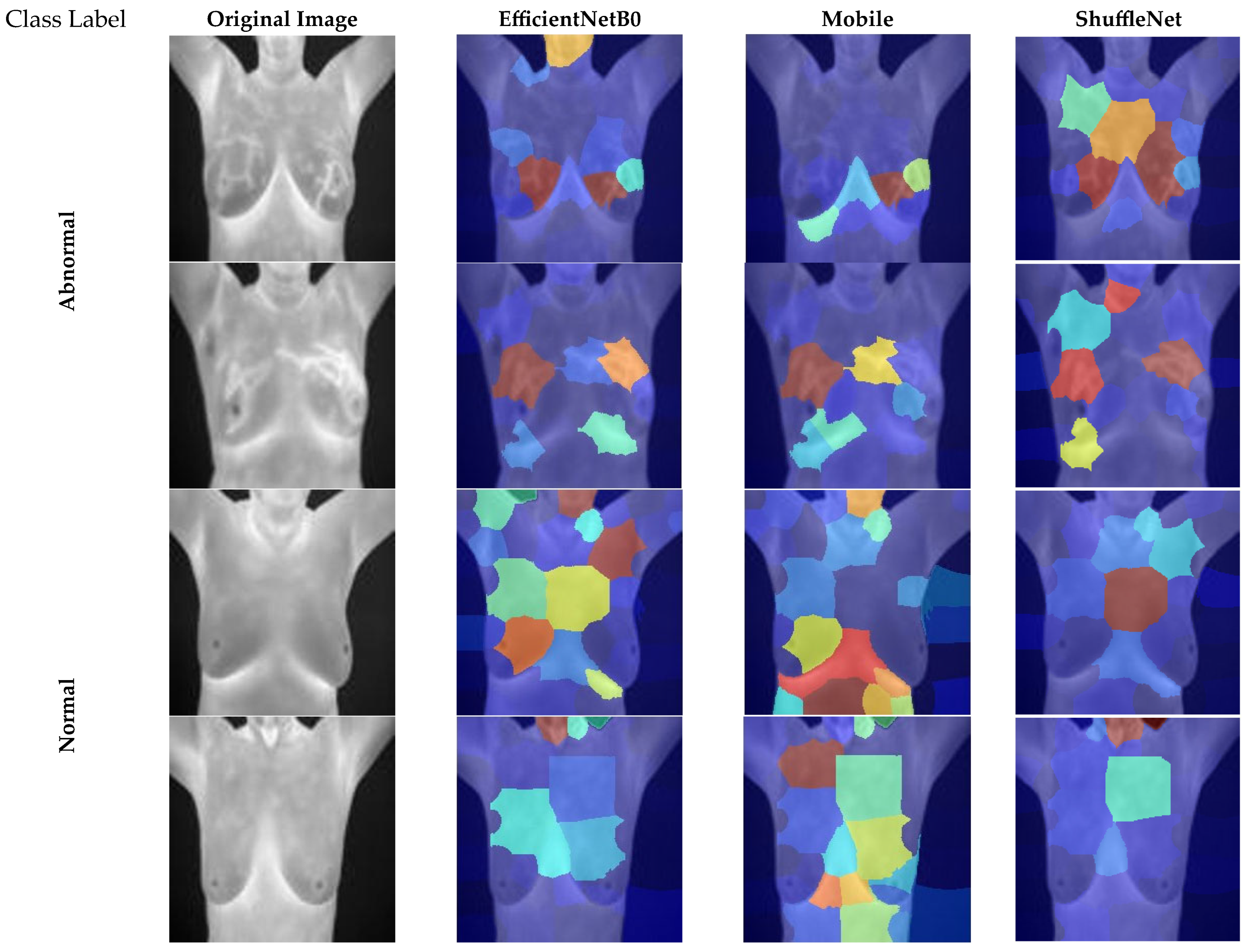

5.4. Explainability Analysis

6. Discussion

6.1. Comparative Performance Evaluation of Feature Reduction Approaches

- PCA: generally effective for linear dimensionality reduction; however, it implicitly assumes approximately Gaussian data with linear correlations, which may not hold for the nonlinear and heterogeneous thermal patterns in breast thermography.

- t-SNE/UMAP: these techniques are best suited to visualization because they preserve local structure. However, they are computationally expensive and poorly suited to learning an effective feature subspace for subsequent classification. Their stochastic nature also introduces run-to-run variability, which can affect reproducibility.

- Autoencoders: although powerful at producing nonlinear embeddings, they require large amounts of training data and significant computational resources to perform well, which conflicts with the goal of a lightweight, efficient deployment in resource-limited settings.
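For reference, the PCA baseline discussed above amounts to projecting mean-centred features onto the leading right-singular vectors of the data matrix. The NumPy sketch below shows that linear projection; the function name `pca_reduce`, the random placeholder data, and the choice of 80 components (matching the size NNMF produced for ShuffleNet Layer 2) are illustrative assumptions, since the PCA configuration used in the comparison is not specified in this section. Unlike NNMF, PCA loadings can be negative, and every reduced feature is a signed linear combination of all inputs.

```python
import numpy as np

def pca_reduce(X: np.ndarray, k: int):
    """Project rows of X (samples x features) onto the top-k principal components."""
    Xc = X - X.mean(axis=0)                        # PCA operates on centred data
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:k]                            # k x features, orthonormal rows
    explained = (S[:k] ** 2) / np.sum(S ** 2)      # fraction of variance per component
    return Xc @ components.T, components, explained

rng = np.random.default_rng(0)
X = rng.random((120, 544))        # hypothetical nonnegative 544-D deep features for 120 thermograms
Z, comps, ev = pca_reduce(X, k=80)
print(Z.shape, comps.shape)       # (120, 80) (80, 544)
print("any negative loadings:", bool((comps < 0).any()))
print("variance retained:", round(float(ev.sum()), 3))
```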

6.2. Complexity Analysis of the Proposed CAD

6.3. Shortcomings and Possible Future Directions

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Atrey, K.; Singh, B.K.; Bodhey, N.K.; Pachori, R.B. Mammography and Ultrasound Based Dual Modality Classification of Breast Cancer Using a Hybrid Deep Learning Approach. Biomed. Signal Process. Control 2023, 86, 104919.

- [2] Atrey, K.; Singh, B.K.; Bodhey, N.K. Multimodal Classification of Breast Cancer Using Feature Level Fusion of Mammogram and Ultrasound Images in Machine Learning Paradigm. Multimed. Tools Appl. 2024, 83, 21347–21368.

- [3] Yaffe, M.J. Mammographic Density. Measurement of Mammographic Density. Breast Cancer Res. 2008, 10, 209.

- [4] Nazari, S.S.; Mukherjee, P. An Overview of Mammographic Density and Its Association with Breast Cancer. Breast Cancer 2018, 25, 259–267.

- [5] Boca, I.; Ciurea, A.I.; Ciortea, C.A.; Dudea, S.M. Pros and Cons for Automated Breast Ultrasound (ABUS): A Narrative Review. J. Pers. Med. 2021, 11, 703.

- [6] Heller, S.L.; Moy, L. Breast MRI Screening: Benefits and Limitations. Curr. Breast Cancer Rep. 2016, 8, 248–257.

- [7] Dar, R.A.; Rasool, M.; Assad, A. Breast Cancer Detection Using Deep Learning: Datasets, Methods, and Challenges Ahead. Comput. Biol. Med. 2022, 149, 106073.

- [8] Pokharel, A.; Luitel, N.; Khatri, A.; Khadka, S.; Shrestha, R. Review on the Evolving Role of Infrared Thermography in Oncological Applications. Infrared Phys. Technol. 2024, 140, 105399.

- [9] Yoshida, S.; Nakagawa, S.; Yahara, T.; Koga, T.; Deguchi, H.; Shirouzu, K. Relationship Between Microvessel Density and Thermographic Hot Areas in Breast Cancer. Surg. Today 2003, 33, 243–248.

- [10] Mashekova, A.; Zhao, Y.; Ng, E.Y.; Zarikas, V.; Fok, S.C.; Mukhmetov, O. Early Detection of the Breast Cancer Using Infrared Technology–A Comprehensive Review. Therm. Sci. Eng. Prog. 2022, 27, 101142.

- [11] Singh, D.; Singh, A.K. Role of Image Thermography in Early Breast Cancer Detection-Past, Present and Future. Comput. Methods Programs Biomed. 2020, 183, 105074.

- [12] Gonzalez-Hernandez, J.-L.; Recinella, A.N.; Kandlikar, S.G.; Dabydeen, D.; Medeiros, L.; Phatak, P. Technology, Application and Potential of Dynamic Breast Thermography for the Detection of Breast Cancer. Int. J. Heat Mass Transf. 2019, 131, 558–573.

- [13] Yousuff, M.; Babu, R.; Ramathulasi, T. Artificial Intelligence in Medical Image Processing. In Artificial Intelligence for Health 4.0: Challenges and Applications; River Publishers: Aalborg, Denmark, 2023; pp. 269–302.

- [14] Chan, H.; Hadjiiski, L.M.; Samala, R.K. Computer-aided Diagnosis in the Era of Deep Learning. Med. Phys. 2020, 47, e218–e227.

- [15] Attallah, O. Acute Lymphocytic Leukemia Detection and Subtype Classification via Extended Wavelet Pooling Based-CNNs and Statistical-Texture Features. Image Vis. Comput. 2024, 147, 105064.

- [16] Pacal, I. MaxCerVixT: A Novel Lightweight Vision Transformer-Based Approach for Precise Cervical Cancer Detection. Knowl.-Based Syst. 2024, 289, 111482.

- [17] Attallah, O. RADIC: A Tool for Diagnosing COVID-19 from Chest CT and X-Ray Scans Using Deep Learning and Quad-Radiomics. Chemom. Intell. Lab. Syst. 2023, 233, 104750.

- [18] Attallah, O. Multi-Domain Feature Incorporation of Lightweight Convolutional Neural Networks and Handcrafted Features for Lung and Colon Cancer Diagnosis. Technologies 2025, 13, 173.

- [19] Attallah, O. Skin-CAD: Explainable Deep Learning Classification of Skin Cancer from Dermoscopic Images by Feature Selection of Dual High-Level CNNs Features and Transfer Learning. Comput. Biol. Med. 2024, 178, 108798.

- [20] Attallah, O. A Hybrid Trio-Deep Feature Fusion Model for Improved Skin Cancer Classification: Merging Dermoscopic and DCT Images. Technologies 2024, 12, 190.

- [21] Attallah, O. GabROP: Gabor Wavelets-Based CAD for Retinopathy of Prematurity Diagnosis via Convolutional Neural Networks. Diagnostics 2023, 13, 171.

- [22] Elkorany, A.S.; Elsharkawy, Z.F. Efficient Breast Cancer Mammograms Diagnosis Using Three Deep Neural Networks and Term Variance. Sci. Rep. 2023, 13, 2663.

- [23] Pacal, I.; Attallah, O. InceptionNeXt-Transformer: A Novel Multi-Scale Deep Feature Learning Architecture for Multimodal Breast Cancer Diagnosis. Biomed. Signal Process. Control 2025, 110, 108116.

- [24] Anwar, F.; Attallah, O.; Ghanem, N.; Ismail, M.A. Automatic Breast Cancer Classification from Histopathological Images. In Proceedings of the 2019 International Conference on Advances in the Emerging Computing Technologies (AECT), Al Madinah Al Munawwarah, Saudi Arabia, 10 February 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6.

- [25] Chougrad, H.; Zouaki, H.; Alheyane, O. Deep Convolutional Neural Networks for Breast Cancer Screening. Comput. Methods Programs Biomed. 2018, 157, 19–30.

- [26] Shahid, A.H.; Singh, M.P. Computational Intelligence Techniques for Medical Diagnosis and Prognosis: Problems and Current Developments. Biocybern. Biomed. Eng. 2019, 39, 638–672.

- [27] Dharani, N.P.; Govardhini Immadi, I.; Narayana, M.V. Enhanced Deep Learning Model for Diagnosing Breast Cancer Using Thermal Images. Soft Comput. 2024, 28, 8423–8434.

- [28] Tello-Mijares, S.; Woo, F.; Flores, F. Breast Cancer Identification via Thermography Image Segmentation with a Gradient Vector Flow and a Convolutional Neural Network. J. Healthc. Eng. 2019, 2019, 9807619.

- [29] Ahmed, F.; Rahman, M.; Akter Shukhy, S.; Mahmud Sisir, A.; Alam Rafi, I.; Khan, R.K. Breast Cancer Detection with VGG16: A Deep Learning Approach with Thermographic Imaging. Int. J. Intell. Syst. Appl. Eng. 2024, 12.

- [30] Ekici, S.; Jawzal, H. Breast Cancer Diagnosis Using Thermography and Convolutional Neural Networks. Med. Hypotheses 2020, 137, 109542.

- [31] Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- [32] Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Proceedings of Machine Learning Research (PMLR); pp. 6105–6114.

- [33] Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 6848–6856.

- [34] Rasti, R.; Teshnehlab, M.; Phung, S.L. Breast Cancer Diagnosis in DCE-MRI Using Mixture Ensemble of Convolutional Neural Networks. Pattern Recognit. 2017, 72, 381–390.

- [35] Du, C.; Wang, Y.; Wang, C.; Shi, C.; Xiao, B. Selective Feature Connection Mechanism: Concatenating Multi-Layer CNN Features with a Feature Selector. Pattern Recognit. Lett. 2020, 129, 108–114.

- [36] Mohamed, E.A.; Rashed, E.A.; Gaber, T.; Karam, O. Deep Learning Model for Fully Automated Breast Cancer Detection System from Thermograms. PLoS ONE 2022, 17, e0262349.

- [37] Dihmani, H.; Bousselham, A.; Bouattane, O. A New Computer Aided Diagnosis for Breast Cancer Detection of Thermograms Using Metaheuristic Algorithms and Explainable AI. Algorithms 2024, 17, 462.

- [38] Mirasbekov, Y.; Aidossov, N.; Mashekova, A.; Zarikas, V.; Zhao, Y.; Ng, E.Y.K.; Midlenko, A. Fully Interpretable Deep Learning Model Using IR Thermal Images for Possible Breast Cancer Cases. Biomimetics 2024, 9, 609.

- [39] Yadav, S.S.; Jadhav, S.M. Thermal Infrared Imaging Based Breast Cancer Diagnosis Using Machine Learning Techniques. Multimed. Tools Appl. 2022, 81, 13139–13157.

- [40] Sánchez-Cauce, R.; Pérez-Martín, J.; Luque, M. Multi-Input Convolutional Neural Network for Breast Cancer Detection Using Thermal Images and Clinical Data. Comput. Methods Programs Biomed. 2021, 204, 106045.

- [41] Nissar, I.; Alam, S.; Masood, S. Computationally Efficient LC-SCS Deep Learning Model for Breast Cancer Classification Using Thermal Imaging. Neural Comput. Appl. 2024, 36, 16233–16250.

- [42] Tsietso, D.; Yahya, A.; Samikannu, R.; Tariq, M.U.; Babar, M.; Qureshi, B.; Koubaa, A. Multi-Input Deep Learning Approach for Breast Cancer Screening Using Thermal Infrared Imaging and Clinical Data. IEEE Access 2023, 11, 52101–52116.

- [43] Nogales, A.; Perez-Lara, F.; García-Tejedor, Á.J. Enhancing Breast Cancer Diagnosis with Deep Learning and Evolutionary Algorithms: A Comparison of Approaches Using Different Thermographic Imaging Treatments. Multimed. Tools Appl. 2024, 83, 42955–42971.

- [44] Pramanik, R.; Pramanik, P.; Sarkar, R. Breast Cancer Detection in Thermograms Using a Hybrid of GA and GWO Based Deep Feature Selection Method. Expert Syst. Appl. 2023, 219, 119643.

- [45] Munguía-Siu, A.; Vergara, I.; Espinoza-Rodríguez, J.H. The Use of Hybrid CNN-RNN Deep Learning Models to Discriminate Tumor Tissue in Dynamic Breast Thermography. J. Imaging 2024, 10, 329.

- [46] Kaddes, M.; Ayid, Y.M.; Elshewey, A.M.; Fouad, Y. Breast Cancer Classification Based on Hybrid CNN with LSTM Model. Sci. Rep. 2025, 15, 4409.

- [47] Silva, L.F.; Saade, D.C.M.; Sequeiros, G.O.; Silva, A.C.; Paiva, A.C.; Bravo, R.S.; Conci, A. A New Database for Breast Research with Infrared Image. J. Med. Imaging Health Inform. 2014, 4, 92–100.

- [48] Chakraborty, J.; Nandy, A. Discrete Wavelet Transform Based Data Representation in Deep Neural Network for Gait Abnormality Detection. Biomed. Signal Process. Control 2020, 62, 102076.

- [49] Zhang, D. Wavelet Transform. In Fundamentals of Image Data Mining; Springer: Berlin/Heidelberg, Germany, 2019; pp. 35–44.

- [50] Bahoura, M.; Ezzaidi, H.; Méthot, J.-F. Filter Group Delays Equalization for 2D Discrete Wavelet Transform Applications. Expert Syst. Appl. 2022, 200, 116954.

- [51] Alickovic, E.; Kevric, J.; Subasi, A. Performance Evaluation of Empirical Mode Decomposition, Discrete Wavelet Transform, and Wavelet Packed Decomposition for Automated Epileptic Seizure Detection and Prediction. Biomed. Signal Process. Control 2018, 39, 94–102.

- [52] Lee, D.D.; Seung, H.S. Learning the Parts of Objects by Non-Negative Matrix Factorization. Nature 1999, 401, 788–791.

- [53] Berry, M.W.; Browne, M.; Langville, A.N.; Pauca, V.P.; Plemmons, R.J. Algorithms and Applications for Approximate Nonnegative Matrix Factorization. Comput. Stat. Data Anal. 2007, 52, 155–173.

- [54] Févotte, C.; Idier, J. Algorithms for Nonnegative Matrix Factorization with the β-Divergence. Neural Comput. 2011, 23, 2421–2456.

- [55] Wang, Y.-X.; Zhang, Y.-J. Nonnegative Matrix Factorization: A Comprehensive Review. IEEE Trans. Knowl. Data Eng. 2012, 25, 1336–1353.

- [56] Gillis, N. The Why and How of Nonnegative Matrix Factorization. Regul. Optim. Kernels Support Vector Mach. 2014, 12, 257–291.

- [57] Hoyer, P.O. Non-Negative Matrix Factorization with Sparseness Constraints. J. Mach. Learn. Res. 2004, 5, 1457–1469.

- [58] Lu, J.; Behbood, V.; Hao, P.; Zuo, H.; Xue, S.; Zhang, G. Transfer Learning Using Computational Intelligence: A Survey. Knowl.-Based Syst. 2015, 80, 14–23.

- [59] Attallah, O. Deep Learning-Based CAD System for COVID-19 Diagnosis via Spectral-Temporal Images. In Proceedings of the 2022 The 12th International Conference on Information Communication and Management, London, UK, 13–15 July 2022; pp. 25–33.

- [60] Attallah, O. ADHD-AID: Aiding Tool for Detecting Children’s Attention Deficit Hyperactivity Disorder via EEG-Based Multi-Resolution Analysis and Feature Selection. Biomimetics 2024, 9, 188.

- [61] Radovic, M.; Ghalwash, M.; Filipovic, N.; Obradovic, Z. Minimum Redundancy Maximum Relevance Feature Selection Approach for Temporal Gene Expression Data. BMC Bioinform. 2017, 18, 9.

- [62] Ershadi, M.M.; Seifi, A. Applications of Dynamic Feature Selection and Clustering Methods to Medical Diagnosis. Appl. Soft Comput. 2022, 126, 109293.

- [63] Peng, H.; Long, F.; Ding, C. Feature Selection Based on Mutual Information Criteria of Max-Dependency, Max-Relevance, and Min-Redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

- [64] Nguyen Chi, T.; Le Thi Thu, H.; Doan Quang, T.; Taniar, D. A Lightweight Method for Breast Cancer Detection Using Thermography Images with Optimized CNN Feature and Efficient Classification. J. Imaging Inform. Med. 2024, 38, 1434–1451.

- [65] Madhavi, V.; Thomas, C.B. Multi-View Breast Thermogram Analysis by Fusing Texture Features. Quant. InfraRed Thermogr. J. 2019, 16, 111–128.

- [66] Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; ACM: San Francisco, CA, USA, 2016; pp. 1135–1144.

| CNN Model | Number of Layer 3 Features | Number of Layer 2 Features | Number of Layer 1 Features (Before Reduction) | Number of Layer 1 Features (After Reduction) |
|---|---|---|---|---|
| MobileNet | 2 | 1280 | 62,720 | 980 |
| ShuffleNet | 2 | 544 | 26,656 | 417 |
| EfficientNetB0 | 2 | 1280 | 62,720 | 980 |
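The Layer 1 sizes in this table are consistent with flattened 7 × 7 feature maps (7 × 7 × 1280 = 62,720 and 7 × 7 × 544 = 26,656) shrunk by a factor of about 2^6 = 64 (62,720 / 64 = 980, and 26,656 / 64 = 416.5, rounded up to 417). One configuration that reproduces these sizes exactly is six successive levels of a one-dimensional Haar DWT that keep only the approximation coefficients and extend odd-length inputs by one sample. The wavelet, the number of levels, the padding rule, and the helper names below are assumptions inferred from this arithmetic, not settings stated in the text.

```python
import numpy as np

def haar_approx(x: np.ndarray) -> np.ndarray:
    """One level of a 1D Haar DWT, keeping only the approximation (low-pass) coefficients."""
    if x.size % 2:                      # odd length: extend symmetrically by repeating the last sample
        x = np.append(x, x[-1])
    return (x[0::2] + x[1::2]) / np.sqrt(2.0)

def dwt_reduce(x: np.ndarray, levels: int = 6) -> np.ndarray:
    """Apply `levels` successive Haar approximation steps; the length becomes ceil(len / 2) each time."""
    for _ in range(levels):
        x = haar_approx(x)
    return x

rng = np.random.default_rng(0)
for name, shape in [("MobileNet", (7, 7, 1280)), ("ShuffleNet", (7, 7, 544)), ("EfficientNetB0", (7, 7, 1280))]:
    layer1 = rng.random(shape).ravel()  # hypothetical flattened Layer 1 activations
    print(name, layer1.size, "->", dwt_reduce(layer1).size)
# MobileNet 62720 -> 980
# ShuffleNet 26656 -> 417
# EfficientNetB0 62720 -> 980
```

Keeping only the approximation branch halves the vector at every level while retaining its low-frequency content, which is why the reduction factor is a power of two.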

| CNN Model | CNN Layer | LSVM | QSVM | CSVM | MGSVM | CGSVM |
|---|---|---|---|---|---|---|
| MobileNet | Layer 1 | 98.2 | 98.2 | 98.4 | 98.5 | 98.1 |
| | Layer 2 | 97.2 | 96.9 | 96.6 | 97.2 | 96.1 |
| | Layer 3 | 91.4 | 91.4 | 73.5 | 90.1 | 90.2 |
| EfficientNetB0 | Layer 1 | 98.2 | 98.6 | 98.6 | 98.4 | 98.0 |
| | Layer 2 | 99.4 | 99.4 | 99.4 | 99.3 | 99.2 |
| | Layer 3 | 88.7 | 89.3 | 88.5 | 89.0 | 88.5 |
| ShuffleNet | Layer 1 | 95.6 | 96.1 | 96.3 | 96.4 | 92.8 |
| | Layer 2 | 96.0 | 96.5 | 96.5 | 97.2 | 93.6 |
| | Layer 3 | 90.6 | 85.9 | 37.4 | 89.3 | 89.1 |

| CNN Model and Layer | Number of Features | LSVM | QSVM | CSVM | MGSVM | CGSVM |
|---|---|---|---|---|---|---|
| EfficientNetB0 Layer 1 Deep Features | 10 | 96.8 | 97.8 | 97.7 | 98.1 | 96.1 |
| | 20 | 97.7 | 97.9 | 97.6 | 97.8 | 97.5 |
| | 30 | 97.9 | 97.7 | 97.5 | 97.9 | 97.7 |
| | 40 | 97.8 | 98.0 | 97.9 | 97.8 | 98.0 |
| | 50 | 97.8 | 97.9 | 97.8 | 98.0 | 97.8 |
| | 60 | 98.1 | 97.9 | 97.7 | 98.0 | 98.0 |
| | 70 | 97.6 | 97.9 | 97.8 | 98.0 | 98.0 |
| | 80 | 98.1 | 98.1 | 97.9 | 97.4 | 97.8 |
| | 90 | 97.9 | 98.0 | 97.5 | 97.5 | 98.0 |
| | 100 | 97.3 | 97.2 | 97.2 | 97.2 | 97.6 |
| MobileNet Layer 1 Deep Features | 10 | 97.4 | 98.2 | 97.7 | 98.1 | 96.5 |
| | 20 | 98.2 | 98.2 | 98.5 | 97.9 | 95.9 |
| | 30 | 97.9 | 98.1 | 98.1 | 97.9 | 97.8 |
| | 40 | 98.3 | 98.6 | 98.1 | 98.3 | 98.2 |
| | 50 | 97.7 | 98.1 | 97.7 | 97.4 | 97.7 |
| | 60 | 97.8 | 98.0 | 98.0 | 97.9 | 97.9 |
| | 70 | 97.8 | 97.6 | 97.7 | 97.6 | 97.6 |
| | 80 | 97.8 | 97.6 | 97.7 | 97.6 | 97.6 |
| | 90 | 98.0 | 98.0 | 98.0 | 97.4 | 97.9 |
| | 100 | 97.7 | 97.8 | 97.7 | 97.7 | 97.7 |
| ShuffleNet Layer 1 Deep Features | 10 | 92.4 | 95.0 | 94.2 | 95.3 | 91.0 |
| | 20 | 95.5 | 96.4 | 96.2 | 96.1 | 96.3 |
| | 30 | 95.6 | 95.7 | 95.4 | 96.3 | 94.4 |
| | 40 | 95.3 | 96.3 | 95.9 | 96.2 | 94.0 |
| | 50 | 95.2 | 96.2 | 95.6 | 96.2 | 93.4 |
| | 60 | 95.2 | 95.8 | 95.5 | 96.0 | 94.2 |
| | 70 | 96.3 | 96.4 | 96.1 | 96.3 | 94.5 |
| | 80 | 96.0 | 96.2 | 96.5 | 96.5 | 94.2 |
| | 90 | 95.0 | 96.6 | 96.0 | 96.6 | 94.5 |
| | 100 | 95.4 | 94.7 | 94.6 | 95.7 | 93.9 |

| CNN Model and Layer | Number of Features | LSVM | QSVM | CSVM | MGSVM | CGSVM |
|---|---|---|---|---|---|---|
| EfficientNetB0 Layer 2 Deep Features | 10 | 97.2 | 97.8 | 97.6 | 98.1 | 96.2 |
| | 20 | 98.6 | 98.7 | 98.7 | 98.8 | 98.5 |
| | 30 | 98.7 | 99.0 | 99.0 | 98.4 | 98.1 |
| | 40 | 98.7 | 98.4 | 98.5 | 98.5 | 98.6 |
| | 50 | 98.9 | 99.1 | 99.0 | 99.0 | 98.3 |
| | 60 | 98.5 | 98.2 | 98.0 | 98.5 | 98.6 |
| | 70 | 98.7 | 99.0 | 98.7 | 98.9 | 98.7 |
| | 80 | 99.1 | 98.8 | 98.8 | 98.6 | 98.8 |
| | 90 | 99.2 | 99.1 | 98.9 | 98.8 | 98.8 |
| | 100 | 98.5 | 98.8 | 98.7 | 98.2 | 98.2 |
| MobileNet Layer 2 Deep Features | 10 | 96.4 | 97.2 | 97.5 | 97.2 | 94.7 |
| | 20 | 97.0 | 97.2 | 97.4 | 97.5 | 96.9 |
| | 30 | 97.0 | 97.2 | 97.4 | 97.5 | 96.9 |
| | 40 | 97.4 | 97.4 | 97.3 | 97.3 | 97.4 |
| | 50 | 97.1 | 97.2 | 97.0 | 97.3 | 97.3 |
| | 60 | 97.1 | 96.9 | 96.7 | 96.9 | 97.1 |
| | 70 | 97.5 | 96.9 | 97.0 | 97.0 | 97.2 |
| | 80 | 97.2 | 97.6 | 97.3 | 97.5 | 97.2 |
| | 90 | 97.3 | 96.9 | 97.5 | 97.1 | 97.7 |
| | 100 | 97.6 | 97.5 | 97.6 | 97.2 | 97.0 |
| ShuffleNet Layer 2 Deep Features | 10 | 93.9 | 96.2 | 96.2 | 96.8 | 93.0 |
| | 20 | 95.1 | 97.0 | 96.5 | 96.2 | 93.9 |
| | 30 | 96.3 | 97.0 | 96.4 | 96.2 | 95.5 |
| | 40 | 96.0 | 95.5 | 95.5 | 96.4 | 95.8 |
| | 50 | 96.4 | 96.5 | 96.2 | 96.3 | 96.1 |
| | 60 | 96.7 | 96.0 | 96.0 | 96.4 | 95.5 |
| | 70 | 96.1 | 95.8 | 95.8 | 95.9 | 95.9 |
| | 80 | 96.7 | 97.0 | 96.8 | 96.9 | 95.8 |
| | 90 | 96.4 | 96.8 | 96.1 | 96.4 | 96.1 |
| | 100 | 97.2 | 96.7 | 95.7 | 96.5 | 96.0 |

| CNN Layer | CNN Model | LSVM | QSVM | CSVM | MGSVM | CGSVM |
|---|---|---|---|---|---|---|
| Layer 1 Features | MobileNet | 98.2 | 98.2 | 98.4 | 98.5 | 98.1 |
| | EfficientNetB0 | 98.2 | 98.6 | 98.6 | 98.4 | 98.0 |
| | ShuffleNet | 95.6 | 96.1 | 96.3 | 96.4 | 92.8 |
| | Combined Features | 98.6 | 98.7 | 98.7 | 98.8 | 98.7 |
| Layer 2 Features | MobileNet | 97.2 | 96.9 | 96.6 | 97.2 | 96.1 |
| | EfficientNetB0 | 99.4 | 99.4 | 99.4 | 99.3 | 99.2 |
| | ShuffleNet | 96.0 | 96.5 | 96.5 | 97.2 | 93.6 |
| | Combined Features | 99.4 | 99.5 | 99.5 | 99.8 | 99.5 |
| Layer 3 Features | MobileNet | 91.4 | 91.4 | 73.5 | 90.1 | 90.2 |
| | EfficientNetB0 | 88.7 | 89.3 | 88.5 | 89.0 | 88.5 |
| | ShuffleNet | 90.6 | 85.9 | 37.4 | 89.3 | 89.1 |
| | Combined Features | 94.7 | 94.7 | 95.1 | 94.1 | 94.1 |
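The complexity analysis lists fused sizes of 6, 230, and 170 features for Layers 3, 2, and 1, and these equal the sums of the per-CNN sizes given there (2 + 2 + 2, 80 + 100 + 50, and 70 + 40 + 60). That is consistent with serial fusion, i.e. concatenating the reduced feature vectors of the three CNNs. The sketch below illustrates this reading with random placeholder arrays; the per-CNN sizes come from the complexity table, whereas the sample count, the dictionary layout, and the assumption that Scenario III pools all three fused layers (giving 6 + 230 + 170 = 406 candidate features, from which 350 are selected) are hypothetical.

```python
import numpy as np

n_samples = 8  # hypothetical number of thermograms
# Reduced feature sizes per CNN and layer, as listed in the complexity-analysis table.
sizes = {
    "layer3": {"ShuffleNet": 2, "MobileNet": 2, "EfficientNetB0": 2},
    "layer2": {"ShuffleNet": 80, "MobileNet": 100, "EfficientNetB0": 50},   # after NNMF
    "layer1": {"ShuffleNet": 70, "MobileNet": 40, "EfficientNetB0": 60},    # after DWT + NNMF
}
rng = np.random.default_rng(0)
# Placeholder feature matrices (rows = samples); real ones would come from the trained CNNs.
features = {layer: {cnn: rng.random((n_samples, d)) for cnn, d in per_cnn.items()}
            for layer, per_cnn in sizes.items()}

# Scenario II (as read here): fuse the three CNNs at each layer by horizontal concatenation.
fused = {layer: np.hstack(list(per_cnn.values())) for layer, per_cnn in features.items()}
for layer, mat in fused.items():
    print(layer, mat.shape)       # layer3 (8, 6), layer2 (8, 230), layer1 (8, 170)

# Scenario III (assumed): pool all fused layers into one vector before feature selection.
all_features = np.hstack([fused["layer3"], fused["layer2"], fused["layer1"]])
print("all", all_features.shape)  # all (8, 406)
```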

| Number of Features | LSVM | QSVM | CSVM | MGSVM | CGSVM |
|---|---|---|---|---|---|
| 50 | 96.9 | 97.7 | 98.4 | 96.8 | 96.1 |
| 100 | 97.8 | 98.9 | 98.9 | 97.9 | 97.2 |
| 150 | 98.6 | 99.3 | 99.4 | 99.0 | 97.8 |
| 200 | 99.4 | 99.4 | 99.5 | 99.5 | 98.2 |
| 250 | 99.4 | 99.6 | 99.5 | 99.5 | 98.8 |
| 300 | 99.4 | 99.6 | 99.6 | 99.5 | 99.4 |
| 350 | 99.6 | 99.7 | 99.7 | 99.9 | 99.6 |
| 400 | 99.9 | 99.8 | 99.8 | 99.9 | 99.8 |
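The complexity analysis states that the final subset is chosen with mRMR. A simplified greedy mRMR in the spirit of Peng et al. [63] adds, at each step, the feature that maximizes its mutual information with the class label minus its mean mutual information with the features already chosen (the difference, or MID, criterion). The NumPy sketch below estimates mutual information on quantile-binned features; the binning scheme, the number of bins, the MID variant, and all function names are assumptions, because the exact mRMR implementation is not specified in this section.

```python
import numpy as np

def discretize(X: np.ndarray, n_bins: int = 10) -> np.ndarray:
    """Quantile-bin each column of X into integer codes 0..n_bins-1."""
    out = np.empty(X.shape, dtype=int)
    for j in range(X.shape[1]):
        edges = np.quantile(X[:, j], np.linspace(0, 1, n_bins + 1)[1:-1])
        out[:, j] = np.searchsorted(edges, X[:, j], side="right")
    return out

def mutual_info(a: np.ndarray, b: np.ndarray) -> float:
    """Mutual information (in nats) between two nonnegative integer-coded vectors."""
    joint = np.zeros((a.max() + 1, b.max() + 1))
    np.add.at(joint, (a, b), 1)
    joint /= joint.sum()
    outer = joint.sum(axis=1, keepdims=True) @ joint.sum(axis=0, keepdims=True)  # p(a) p(b)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log(joint[nz] / outer[nz])))

def mrmr(X: np.ndarray, y: np.ndarray, k: int, n_bins: int = 10) -> list[int]:
    """Greedy mRMR (MID): maximize I(f; y) - mean over selected s of I(f; s)."""
    Xd = discretize(X, n_bins)
    relevance = np.array([mutual_info(Xd[:, j], y) for j in range(X.shape[1])])
    selected = [int(np.argmax(relevance))]
    redundancy = np.zeros(X.shape[1])          # running sum of I(f; s) over selected s
    while len(selected) < k:
        last = selected[-1]
        redundancy += np.array([mutual_info(Xd[:, j], Xd[:, last]) for j in range(X.shape[1])])
        score = relevance - redundancy / len(selected)
        score[selected] = -np.inf              # never pick a feature twice
        selected.append(int(np.argmax(score)))
    return selected

# Hypothetical data: 3 label-related columns followed by 7 pure-noise columns.
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=200)
X = np.hstack([y[:, None] + 0.3 * rng.standard_normal((200, 3)), rng.standard_normal((200, 7))])
print(mrmr(X, y, k=3))
```

Applied to the 406 pooled features with k = 350, a routine of this kind would return a ranked subset; the table above shows how accuracy varies as that subset grows.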

| Metric | LSVM | QSVM | CSVM | MGSVM | CGSVM |
|---|---|---|---|---|---|
| Sensitivity | 0.9980 | 0.9960 | 0.9960 | 0.9980 | 0.9960 |
| Specificity | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 |
| Precision | 1.000 | 1.000 | 1.000 | 1.000 | 1.000 |
| F1-score | 0.9990 | 0.9980 | 0.9980 | 0.9990 | 0.9980 |
| MCC | 0.9980 | 0.9960 | 0.9960 | 0.9980 | 0.9960 |
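These metrics follow the usual confusion-matrix definitions. As an arithmetic check, a hypothetical test split of 500 cancer and 500 healthy thermograms with one false negative and no false positives (TP = 499, FN = 1, TN = 500, FP = 0) reproduces the LSVM and MGSVM columns to four decimals, together with the 0.9990 accuracy reported for the proposed CAD. These counts are illustrative only; they are not the study's actual test-set composition, and the helper `binary_metrics` is hypothetical.

```python
import math

def binary_metrics(tp: int, fn: int, tn: int, fp: int) -> dict[str, float]:
    """Standard binary-classification metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)            # recall / true-positive rate
    specificity = tn / (tn + fp)            # true-negative rate
    precision = tp / (tp + fp)              # positive predictive value
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    mcc = (tp * tn - fp * fn) / math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {"Accuracy": accuracy, "Sensitivity": sensitivity, "Specificity": specificity,
            "Precision": precision, "F1-score": f1, "MCC": mcc}

# Hypothetical counts (not from the paper).
for name, value in binary_metrics(tp=499, fn=1, tn=500, fp=0).items():
    print(f"{name}: {value:.4f}")
# Accuracy: 0.9990
# Sensitivity: 0.9980
# Specificity: 1.0000
# Precision: 1.0000
# F1-score: 0.9990
# MCC: 0.9980
```

Because specificity and precision are both 1 here, a single false negative is enough to pull sensitivity, F1-score, and MCC just below 1.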

| Study | Segmentation | Feature Selection | Methods | Accuracy | Sensitivity | Specificity | Precision | F1-Score |
|---|---|---|---|---|---|---|---|---|
| [36] | U-Net | No | Customized CNN | 0.9933 | 1.00 | 0.9867 | | |
| [27] | Fuzzy C-means Clustering | No | Customized EDCNN | 0.9680 | 0.9370 | | | |
| [43] | Temperature Ranges | No | Customized CNN | 0.9385 | 0.9053 | 0.9700 | 0.9666 | |
| [29] | No | No | VGG16 + Sequential Classifier | 0.9940 | 1.00 | 0.9750 | 0.9890 | 0.9980 |
| [40] | No | No | Customized CNN | 0.970 | 1.00 | 0.830 | | |
| [65] | Level-set segmentation | No | GLCM + GLRM + GLSZM + NGTDM + PCA + SVM | 0.960 | 1.00 | 0.920 | | |
| [42] | Canny Edge Detector + Morphological Operations + | No | AlexNet | 0.9048 | 0.9333 | 0.8333 | 0.9333 | |
| [30] | Morphological operation + object-oriented segmentation | No | Customized CNN | 0.9895 | 0.9828 | 0.9959 | 0.9956 | |
| [64] | No | Yes | ResNet34 + Chi-square + SVM | 0.9962 | 0.9963 | 0.9963 | 0.9963 | |
| Proposed | No | Yes | MobileNet, EfficientNetB0, and ShuffleNet + NNMF + SVM | 0.9990 | 0.9980 | 1.000 | 1.000 | 0.9990 |

| CNN Model | CNN Layer | Dimensionality Reduction | LSVM | QSVM | CSVM | MGSVM | CGSVM |
|---|---|---|---|---|---|---|---|
| EfficientNetB0 | Layer 1 Features | NNMF | 98.1 | 97.9 | 97.7 | 98.0 | 98.0 |
| | | PCA | 97.2 | 97.8 | 97.3 | 97.1 | 97.6 |
| | | Autoencoders | 97.9 | 97.8 | 98.0 | 98.3 | 97.6 |
| | Layer 2 Features | NNMF | 98.9 | 99.1 | 99.0 | 99.0 | 98.3 |
| | | PCA | 98.7 | 98.5 | 98.1 | 98.7 | 99.1 |
| | | Autoencoders | 98.7 | 99.0 | 98.7 | 98.9 | 98.7 |
| MobileNet | Layer 1 Features | NNMF | 98.3 | 98.6 | 98.1 | 98.3 | 98.2 |
| | | PCA | 98.1 | 97.8 | 97.2 | 97.9 | 97.9 |
| | | Autoencoders | 98.1 | 97.9 | 97.7 | 98.1 | 98.2 |
| | Layer 2 Features | NNMF | 97.6 | 97.5 | 97.6 | 97.2 | 97.0 |
| | | PCA | 97.6 | 97.1 | 96.9 | 97.3 | 97.0 |
| | | Autoencoders | 97.1 | 96.8 | 96.8 | 97.0 | 95.8 |
| ShuffleNet | Layer 1 Features | NNMF | 96.3 | 96.4 | 96.1 | 96.3 | 94.5 |
| | | PCA | 94.9 | 94.7 | 93.5 | 95.1 | 94.6 |
| | | Autoencoders | 95.7 | 95.2 | 95.7 | 96.2 | 94.9 |
| | Layer 2 Features | NNMF | 96.7 | 97.0 | 96.8 | 96.9 | 95.8 |
| | | PCA | 95.9 | 96.0 | 95.8 | 96.6 | 96.1 |
| | | Autoencoders | 96.8 | 96.8 | 96.6 | 96.9 | 96.3 |

| Model | Input Data/Feature Size to Classifier | Number of Deep Network Parameters | Number of Layers | Classification Time (s) | Classification Complexity (O) |
|---|---|---|---|---|---|
| CNN Models of the Proposed CAD (End-to-End Deep Learning Classification) | | | | | |
| ShuffleNet | 224 × 224 × 3 | ~1.3 M | ~30 | 6525 | k: kernel length; n: overall length of the pattern (number of input entries); d: dimensionality of the representation |
| MobileNet | 224 × 224 × 3 | 3.5 M | 28 | 4194 | |
| EfficientNetB0 | 224 × 224 × 3 | 5.3 M | 82 | 15,870 | |
| Layer-Level Features (Scenario I of the Proposed CAD) | | | | | |
| Layer 3 Features | ShuffleNet = 2 | – | – | 0.99779 | MGSVM; s: number of support vectors; p: number of features; n: number of input samples |
| | MobileNet = 2 | | | 2.5092 | |
| | EfficientNetB0 = 2 | | | 0.9251 | |
| Layer 2 Features | ShuffleNet = 544 | | | 1.8921 | |
| | MobileNet = 1280 | | | 3.3620 | |
| | EfficientNetB0 = 1280 | | | 3.3868 | |
| Layer 1 Features after DWT | ShuffleNet = 417 | | | 1.4029 | |
| | MobileNet = 980 | | | 2.3265 | |
| | EfficientNetB0 = 980 | | | 2.3946 | |
| Layer-Level Features after NNMF | | | | | |
| Layer 2 after NNMF | ShuffleNet = 80 | – | – | 0.9910 | MGSVM; s: number of support vectors; p: number of features; n: number of input samples |
| | MobileNet = 100 | | | 1.0079 | |
| | EfficientNetB0 = 50 | | | 0.9373 | |
| Layer 1 after DWT and NNMF | ShuffleNet = 70 | | | 1.1128 | |
| | MobileNet = 40 | | | 0.8532 | |
| | EfficientNetB0 = 60 | | | 0.8762 | |
| Layer-Level Fusion (Scenario II of the Proposed CAD) | | | | | |
| Layer 3 Features of the three CNNs | 6 | – | – | 0.96421 | MGSVM; s: number of support vectors; p: number of features; n: number of input samples |
| Layer 2 Features of the three CNNs | 230 | | | 0.95033 | |
| Layer 1 Features of the three CNNs | 170 | | | 0.95719 | |
| Multi-CNN Multi-Layer Level Fusion (Scenario III of the Proposed CAD) | | | | | |
| Features selected using mRMR | 350 | – | – | 1.4045 | MGSVM; s: number of support vectors; p: number of features; n: number of input samples |
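The complexity column defines s (number of support vectors), p (number of features), and n (number of input samples), but the expressions themselves did not survive extraction. For orientation, the textbook decision rule of a Gaussian-kernel SVM is shown below; it is offered as an assumption about what the column summarizes, not as a restoration of the paper's formula. Here α_i are the learned dual coefficients, y_i ∈ {−1, +1} the labels of the support vectors x_i, b the bias, and σ a kernel-width parameter:

$$
f(\mathbf{x}) = \operatorname{sign}\!\left( \sum_{i=1}^{s} \alpha_i \, y_i \exp\!\left( -\frac{\lVert \mathbf{x}_i - \mathbf{x} \rVert^2}{\sigma^2} \right) + b \right)
$$

Each of the s kernel evaluations costs O(p), so classifying one sample costs O(s·p), while forming the full n × n kernel matrix during training costs O(n²·p). For a fixed number of support vectors, shrinking p from 1280 (MobileNet Layer 2) to 100 (after NNMF) cuts the per-evaluation cost by a factor of 12.8; the measured times in the table also include overheads that this operation count ignores.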

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Attallah, O. A Deep Learning-Driven CAD for Breast Cancer Detection via Thermograms: A Compact Multi-Architecture Feature Strategy. Appl. Sci. 2025, 15, 7181. https://doi.org/10.3390/app15137181