1. Introduction

Haptic feedback is critical in a virtual environment (VE), enabling users to experience more realistic physical sensations [1]. Simulating real-world tactile sensations in virtual environments, such as rendering haptic feedback for virtual surface textures, is a central research objective [2]. The expansion of the haptic device market is largely fueled by advancements in entertainment and the experience economy, as seen in the widespread use of compact devices such as game controllers and VR headsets equipped with linear resonant actuators (LRAs) [3]. As demand for haptic experiences grows, many studies have explored advanced acquisition devices and haptic actuators [4]. While these technologies enhance user experiences, they also raise costs. Additionally, we observed that current haptic design workflows, such as Apple’s Core Haptics (Apple Inc., Cupertino, CA, USA) [5] and RichTap Creator (AAC Technologies Holdings Inc., Shenzhen, China) [6], often require designers to rely on proprietary editors or to have programming expertise. Although some of these tools support audio-based haptic feedback generation, creating haptic feedback that matches visual texture information remains mainly a case-by-case process, resulting in a significant workload.

With the widespread adoption of generative artificial intelligence technologies, knowledge can now be embedded into the design process through generative methods. As a result, leveraging generative techniques to support haptic design has become an inevitable trend and holds great potential for enhancing the quality of haptic experiences. Generative methods have been applied in haptic design to generate matching vibrotactile feedback from visual and auditory modalities [

7,

8,

9,

10,

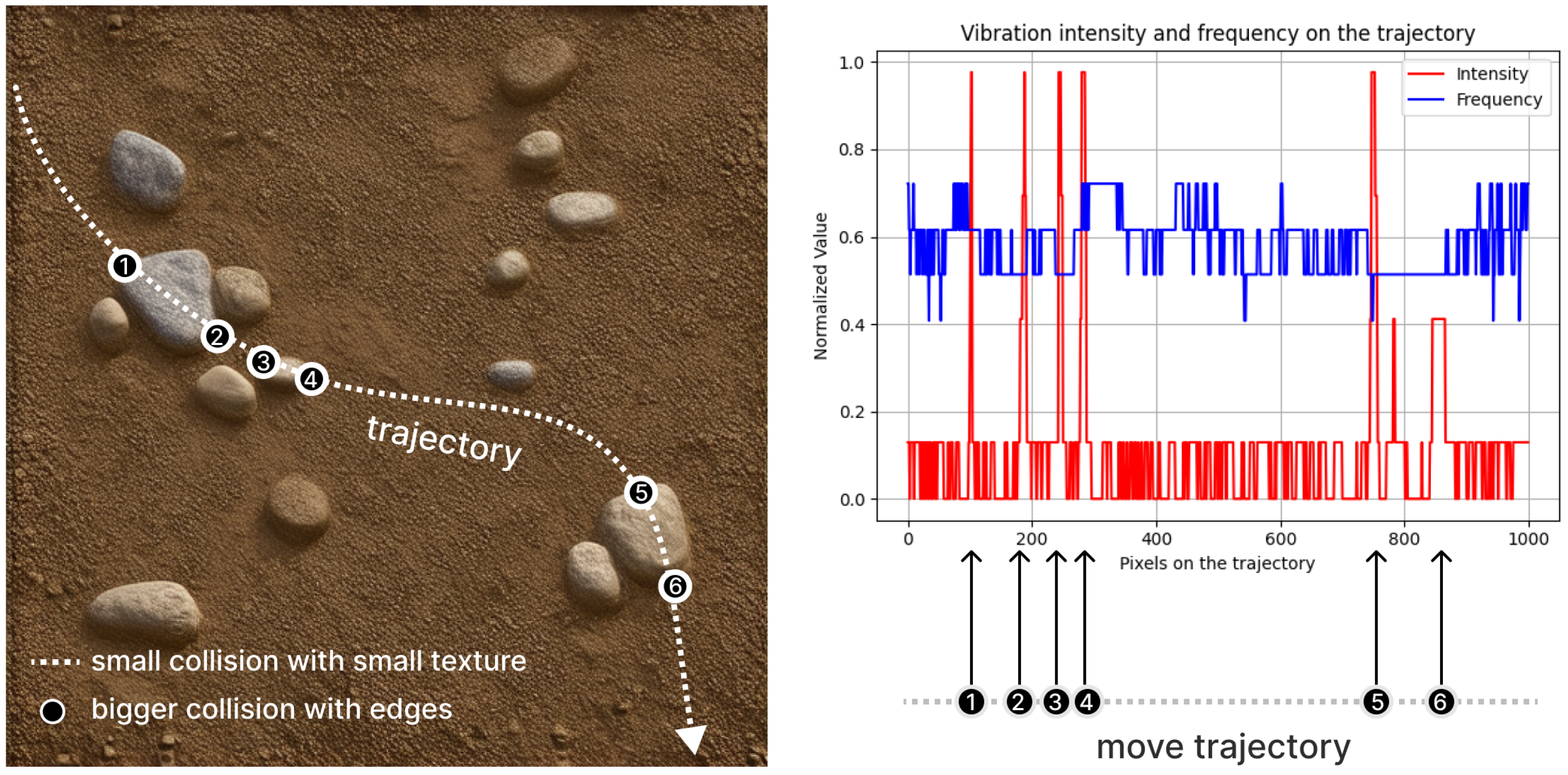

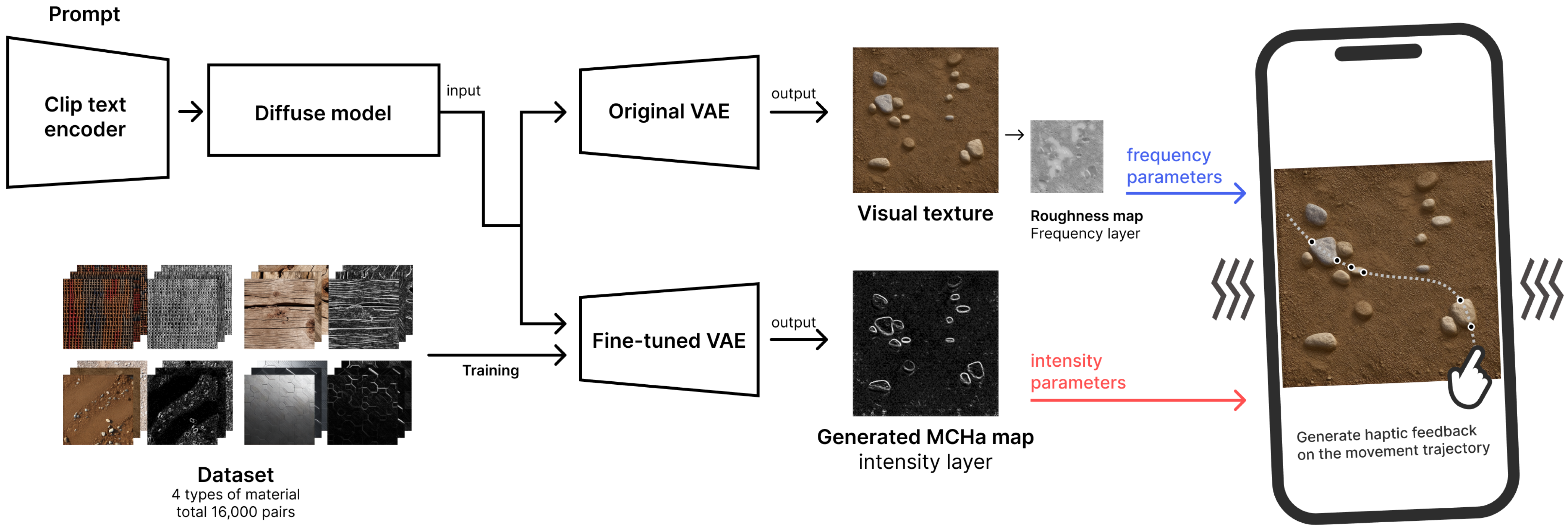

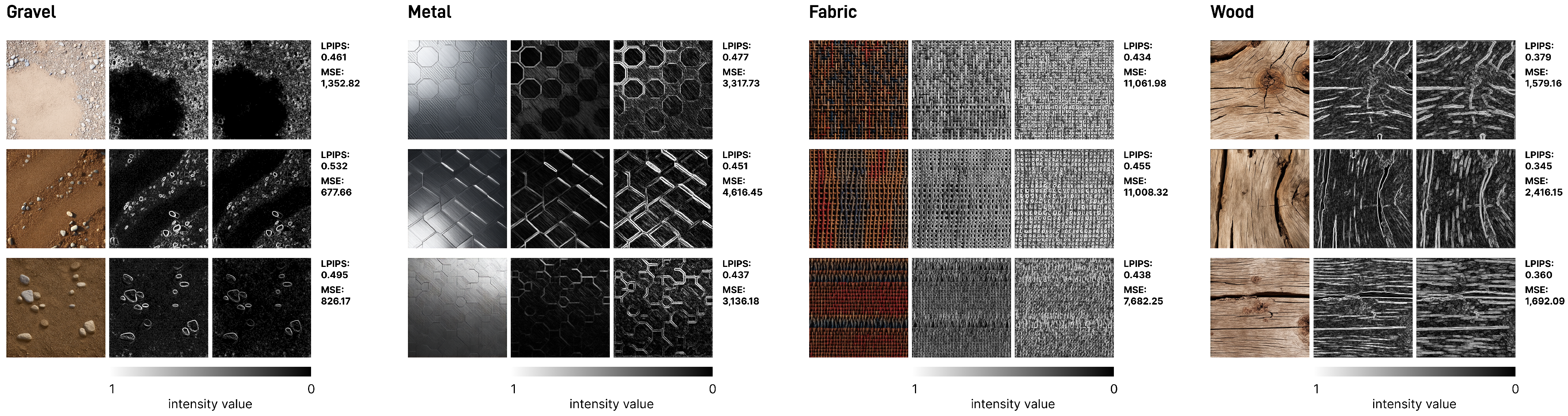

11]. To streamline the haptic design workflow, enabling high-fidelity haptic feedback on widely available devices, we introduce micro-collision haptic (MCHa) maps and use a fine-tuned variational autoencoder (VAE) decoder of Stable Diffusion. This method simultaneously generates visual textures and matching MCHa maps. Visual texture maps define surface contact characteristics via displacement and roughness information. User interactions with micro surface dimples can be modeled as a trajectory composed of sequential collisions. Haptic feedback for virtual texture enables users to sense material properties through micro texture collisions [

12], with pronounced feedback over significant height variations. Thus, height and roughness data from 2D visual texture maps can be utilized to derive a corresponding 2D haptic map.
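The idea of deriving a 2D haptic map from height and roughness data can be illustrated with a minimal sketch. It is not the MCHa generation procedure itself, which relies on the fine-tuned VAE decoder; the function name `haptic_map_from_texture`, the `roughness_weight` parameter, and the rule that collision strength equals the local height step scaled by roughness are all assumptions made for illustration.

```python
def haptic_map_from_texture(height, roughness, roughness_weight=0.5):
    """Return a 2D map in [0, 1]; larger values mean stronger micro-collisions.

    Illustrative assumption: collision strength grows with the local height
    step towards the right and lower neighbours, scaled up by local roughness.
    """
    rows, cols = len(height), len(height[0])
    raw = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Forward differences; border cells compare with themselves (zero step).
            dx = height[r][min(c + 1, cols - 1)] - height[r][c]
            dy = height[min(r + 1, rows - 1)][c] - height[r][c]
            step = (dx * dx + dy * dy) ** 0.5
            raw[r][c] = step * (1.0 + roughness_weight * roughness[r][c])
    peak = max(max(row) for row in raw)
    if peak == 0.0:
        return raw  # perfectly flat surface: no collisions anywhere
    return [[value / peak for value in row] for row in raw]


# A single ridge edge between the second and third columns.
ridge = haptic_map_from_texture([[0, 0, 1, 1]], [[0, 0, 0, 0]])
```

In this toy case only the cell just before the height step receives a non-zero value, which matches the intuition that feedback is concentrated where the surface height changes.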

To further understand the impact of our generative tool on the haptic design workflow, we conducted a user study. We designed a haptic creation task in which designers were asked to create haptic feedback for four materials—gravel, metal, fabric, and wood—and assign them randomly to a 4 × 4 tactile square. Eight professional haptic designers, each with over 3 years of experience, first completed the task using a traditional workflow, and then repeated it using our generative haptic tool. In parallel, ten non-haptic interaction designers were recruited. After a brief training, they completed the same task using both the conventional workflow and our generative tool. After each task, the designers provided subjective evaluations of their experience. To ensure the practical applicability and relevance of our approach, all experiments were conducted on an iPhone 13 mini with LRA, representing one of the most widely accessible consumer-level platform types for tactile interaction.

To assess the output quality, 30 users were recruited to evaluate the haptic feedback from all four groups. The participants also performed a material identification task on the 4 × 4 tactile square to assess the recognizability of the designed textures. The NASA-TLX results revealed no significant differences in overall user preference, but the designers reported significantly lower workload and improved efficiency when using our generative tool. Subjective evaluations showed that our method significantly improved the overall ratings and consistency of the haptic designs, and many materials were perceived to be more realistic.

Overall, our work contributes to the field of haptic design in the following ways:

Lowering the expertise threshold: Our generative workflow enables novice designers to create effective haptic feedback without extensive technical training.

Reducing professional workload: It streamlines the design process, allowing expert designers to work more efficiently and focus on creative tasks rather than manual implementation.

Promoting broader adoption: By simplifying the workflow, our method encourages the integration of haptic design in various media platforms, including gaming, streaming, and other interactive applications.

Enhancing user experience: The tool supports the generation of realistic, material-consistent tactile feedback, contributing to more immersive multisensory experiences in virtual environments.

4. User Study: Streamlining Haptic Design with Generative MCHa Map

We evaluated how the generative MCHa map simplifies the haptic design workflow. Specifically, we assessed the quality of haptic designs produced by both professional and non-professional designers, with and without the use of our method. Finally, we conducted interviews with participating designers to gather qualitative feedback on their experiences with our tool.

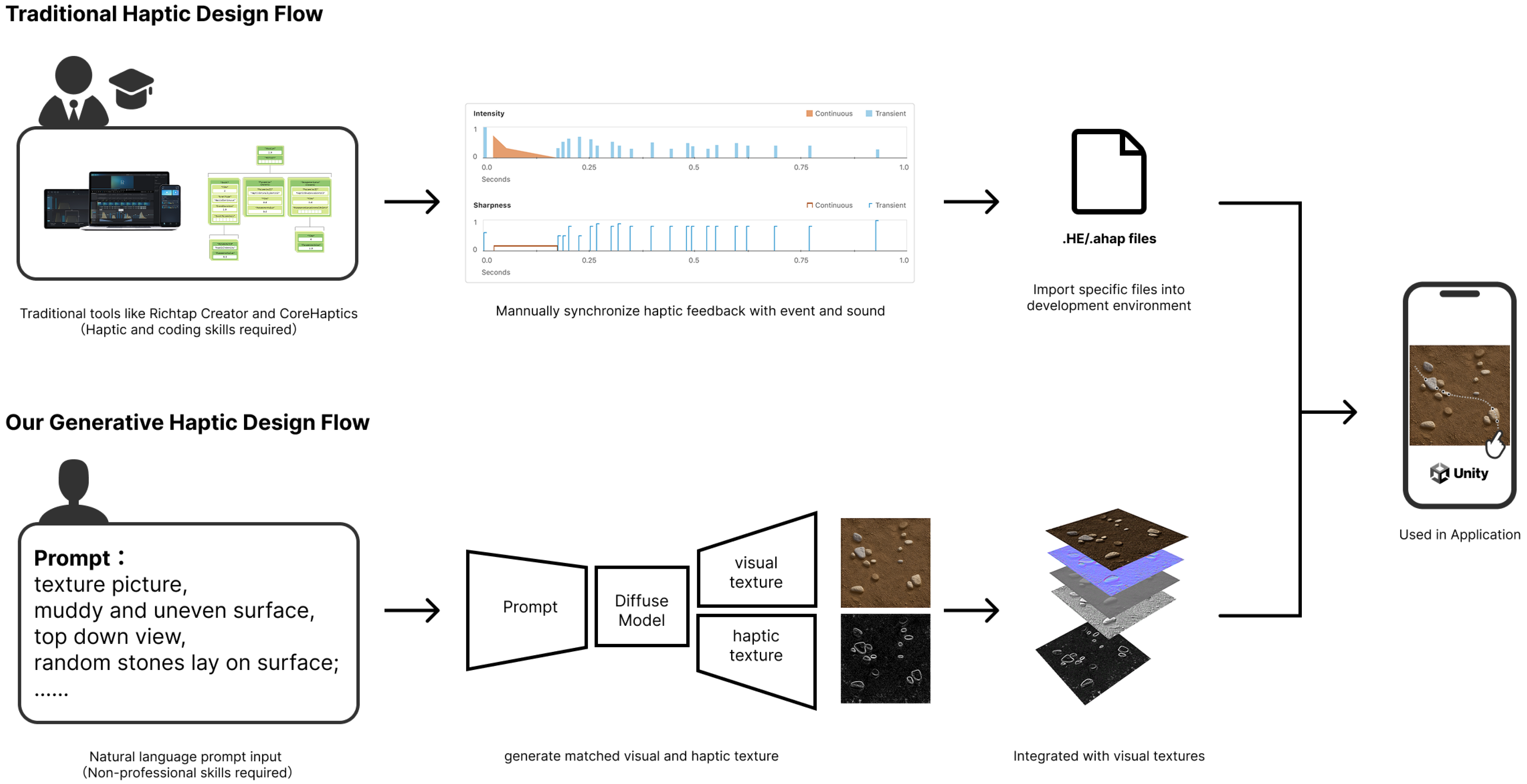

4.1. Haptic Design Flow

Haptic content creation typically involves interdisciplinary collaboration among engineers, designers, and domain experts. The process generally begins with the definition of design goals based on specific application scenarios. First, the workflow includes identifying key interaction events, such as gameplay elements or multisensory video segments requiring haptic augmentation (Figure 4). Subsequently, designers develop sensory mapping models that associate these events with appropriate vibrotactile feedback, for instance, simulating impacts, explosions, or heartbeats. Finally, based on the specifications of the employed vibration actuator, we determined the design parameters and used the corresponding development environments and software tools, such as RichTap Creator and Apple’s Core Haptics, to design vibration patterns. The patterns were then exported as .ahap (Apple Haptic and Audio Pattern) and .he (Haptic Effect) files. A prototype system was subsequently built to enable rapid iteration and testing. Engineers are responsible for characterizing actuator behavior, such as voltage-to-acceleration curves, to support parametric design. Designers, on the other hand, conduct contextual analyses and tune multisensory synchronization to deliver cohesive user experiences.
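To make the export step more concrete, the following sketch assembles a small .ahap document in Python. The key names follow Apple’s published AHAP JSON structure as we understand it (a continuous haptic event plus an intensity control curve); the helper name `build_ahap` and the sample envelope values are hypothetical, and the .he format is not sketched because its structure is specific to RichTap Creator.

```python
import json


def build_ahap(duration_s, intensity_points, sharpness=0.5):
    """Build an AHAP-style dictionary from an intensity envelope.

    intensity_points: list of (time_s, value) pairs with value in [0, 1].
    """
    pattern = [
        {
            "Event": {
                "Time": 0.0,
                "EventType": "HapticContinuous",
                "EventDuration": duration_s,
                "EventParameters": [
                    {"ParameterID": "HapticIntensity", "ParameterValue": 1.0},
                    {"ParameterID": "HapticSharpness", "ParameterValue": sharpness},
                ],
            }
        },
        {
            # The control curve scales the event intensity over time.
            "ParameterCurve": {
                "ParameterID": "HapticIntensityControl",
                "Time": 0.0,
                "ParameterCurveControlPoints": [
                    {"Time": t, "ParameterValue": v} for t, v in intensity_points
                ],
            }
        },
    ]
    return {"Version": 1.0, "Pattern": pattern}


# Hypothetical envelope for a 1 s sweep: weak start, strong impact, decay.
ahap_doc = build_ahap(1.0, [(0.0, 0.2), (0.5, 1.0), (1.0, 0.1)])
ahap_text = json.dumps(ahap_doc, indent=2)
```

Writing `ahap_text` to a file with the .ahap extension would give a pattern that a Core Haptics project can attempt to load; whether it feels right on a given device still has to be checked by hand.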

4.2. Experiment Design

To evaluate the effectiveness of our generative MCHa map tool in supporting haptic design tasks, we conducted a controlled experiment targeting the following questions:

Can non-haptic designers, without a background in haptics, utilize our tool to create compelling haptic experiences effectively?

Can professional haptic designers benefit from our tool in terms of quality and efficiency?

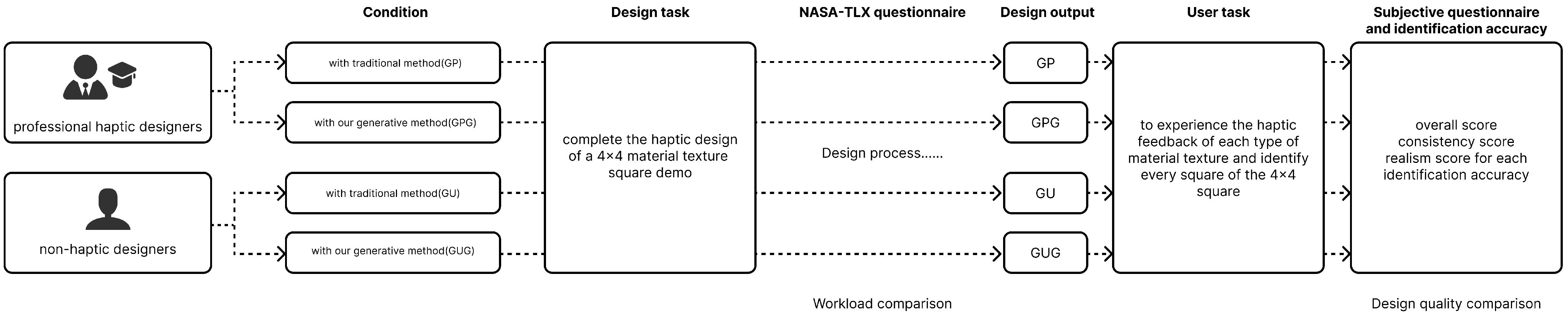

The experimental procedure is briefly summarized in Figure 5.

We invited 8 professional haptic designers, each with over 3 years of experience in haptic design. They were highly familiar with tools such as Apple Core Haptics, RichTap Creator, and the design of vibration feedback for gaming controllers like those from Xbox and Sony. Additionally, we recruited 10 designers without a haptic design background but with expertise in interaction design and user experience design.

The participants were assigned to the following experimental groups:

GP: Professional haptic designers used traditional tools (RichTap Creator) to complete the design task.

GPG: The same professionals repeated the task using our generative tool; they were allowed to tune the output with their specialty.

GU: Non-haptic expert designers used traditional tools; they were taught to use the tools before the experiment.

GUG: Non-haptic expert designers completed the task solely using our tool.

Since visual texture design is not the primary focus of this study, we used Stable Diffusion to generate consistent visual textures for all materials.

For professional haptic designers, we first asked them to complete the task using traditional tools (GP): they used RichTap Creator by AAC Technologies to design vibration-based haptic feedback corresponding to each texture, simulating the sensation of brushing across the surface. The designers were first provided with a unified set of visual textures. Based on these textures, they sketched the expected finger-surface interaction profiles while sweeping from left to right across the texture, focusing on surface height variations and potential collision events. They then estimated a vibration intensity envelope according to the magnitude of undulations and the severity of perceived impacts. Next, they assessed the perceived surface smoothness and marked expected changes in roughness along the sweep trajectory, leading to a sketched frequency envelope representing perceived vibration frequency variations. Finally, these envelopes were implemented in RichTap Creator, where the designers generated corresponding vibration fragments by editing the intensity and frequency curves. The resulting vibration patterns were exported as .he files, containing the complete LRA drive signals. Then, we asked the same designers to use our proposed tool to complete the same task (GPG). They used Stable Diffusion in combination with a VAE model trained by us to directly generate haptic texture maps for the four materials. After generation, they fine-tuned the parameters for each texture’s haptic feedback using our method.

For non-haptic designers, we first guided them through the traditional design process. Before starting the formal design task, we provided step-by-step instructions on how to use conventional methods, along with multiple practice sessions. This was intended to reduce unfamiliarity with the tool and mitigate any potential learning effects due to repeated design tasks. After the practice phase, they completed the haptic feedback design for the texture stimuli (GU). Afterwards, we instructed them to use our tool, which allowed them to directly generate the four material textures using Stable Diffusion and our trained VAE model. After the practice phase, they completed the haptic feedback design for the texture stimuli (GUG).

We did not impose a strict time limit for the design task. All participating designers completed the task within 40 min. After completing each round of the design task, the designers filled out a modified version of the NASA-TLX questionnaire to assess their perceived workload.

The comparison between GP and GPG illustrates how our method improves both the quality and efficiency of haptic design for professionals. The comparison between GU and GUG demonstrates how our tool simplifies the design process and enhances usability for non-experts. The comparison between GPG and GUG further reflects the extent to which our tool enhances design quality across different user groups.

4.3. Design Task

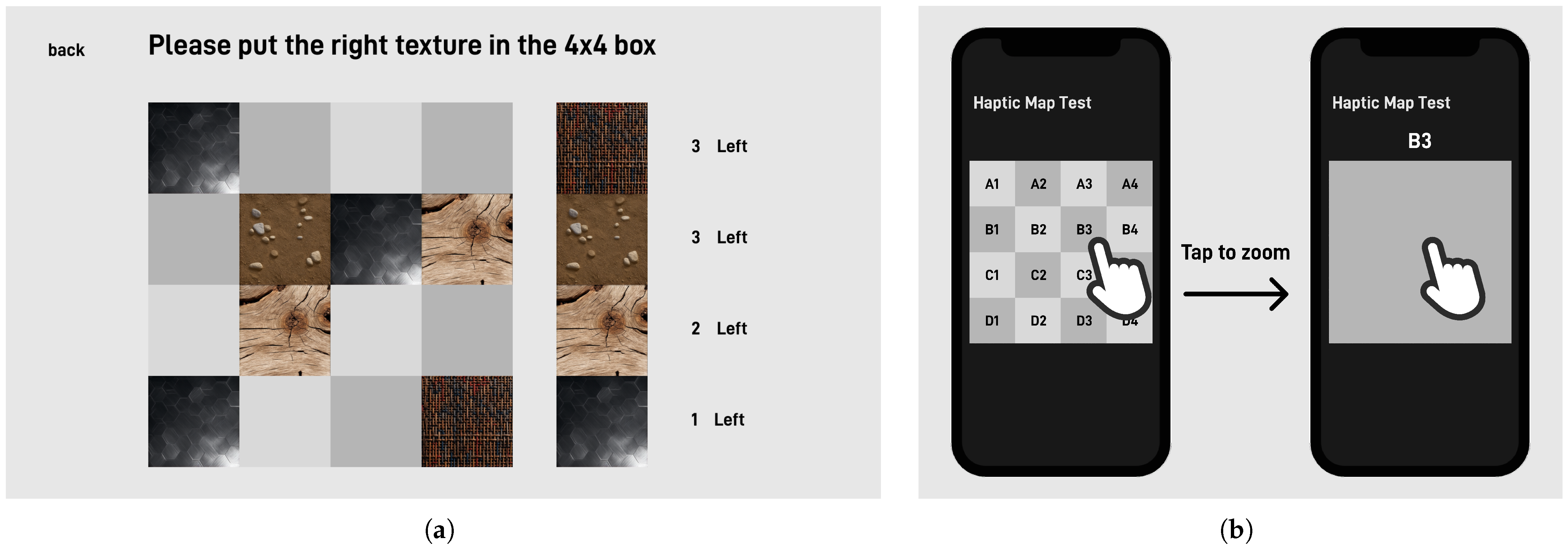

We designed a haptic design task in which the participants were asked to create haptic feedback for a demo. The terrain consisted of a 4 × 4 square composed of sixteen textures (Figure 6). These textures were made up of four different texture types: gravel, metal, fabric, and wood. Each texture type shared a consistent visual texture. The pointer could freely move across the square and triggered vibration-based haptic feedback corresponding to the underlying texture.

This demo was used for user testing. In the testing phase, players were asked to explore the 4 × 4 square while their visual input was blocked. Their goal was to identify and reconstruct the material distribution of the terrain using only the haptic feedback (Figure 6). The design task was entirely text-guided and consisted of the following steps:

Design one visual texture for each of the four types of textures: gravel, metal, fabric, and wood.

Create matching haptic feedback for each of the visual textures.

Randomly assign each material to four textures in the square. All tiles of the same material shared the same visual texture.
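The random assignment in the last step, together with the lookup of the texture under the pointer, can be sketched as follows. The names `random_layout`, `material_at`, and the tile size `tile_px` are hypothetical; the demo itself was built in Unity, so this Python version only illustrates the logic.

```python
import random

MATERIALS = ["gravel", "metal", "fabric", "wood"]


def random_layout(seed=None, size=4):
    """Return a size x size grid in which every material appears equally often."""
    rng = random.Random(seed)
    per_material = size * size // len(MATERIALS)
    tiles = [m for m in MATERIALS for _ in range(per_material)]
    rng.shuffle(tiles)
    return [tiles[r * size:(r + 1) * size] for r in range(size)]


def material_at(layout, x, y, tile_px):
    """Return the material under pointer position (x, y), given square tiles."""
    col = min(int(x // tile_px), len(layout[0]) - 1)
    row = min(int(y // tile_px), len(layout) - 1)
    return layout[row][col]


layout = random_layout(seed=1)
under_pointer = material_at(layout, x=250, y=120, tile_px=100)
```

With a fixed seed the layout is reproducible, which is convenient when the same terrain has to be shown to several participants.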

4.4. Apparatus

Our demo and all completed designs were presented on an iPhone 13 mini (Apple Inc., Cupertino, CA, USA). The iPhone 13 mini supports a maximum vibration intensity of 1.3 g and a vibration frequency range of 80–260 Hz. The demo was developed using Unity (Unity Technologies, San Francisco, CA, USA), version 2022.3.10f1c1.
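The reported actuator limits can be used to translate normalized design parameters into physical units. The sketch below assumes a simple linear mapping from normalized intensity and sharpness in [0, 1] to acceleration and frequency; this mapping and the function name `to_device_units` are illustrative assumptions, not a characterization of the device.

```python
MAX_ACCEL_G = 1.3              # maximum vibration intensity reported above
FREQ_RANGE_HZ = (80.0, 260.0)  # vibration frequency range reported above


def to_device_units(intensity, sharpness):
    """Map normalized (intensity, sharpness) to (acceleration in g, frequency in Hz).

    Inputs outside [0, 1] are clamped. The linear mapping is an assumption.
    """
    i = min(max(intensity, 0.0), 1.0)
    s = min(max(sharpness, 0.0), 1.0)
    low, high = FREQ_RANGE_HZ
    return i * MAX_ACCEL_G, low + s * (high - low)


accel_g, freq_hz = to_device_units(0.5, 0.5)
```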

4.5. User Study

We conducted a user study with 30 participants (aged 18–35, mean: 24.1) who reported no sensory impairments. Each participant experienced the haptic textures designed in conditions of GP, GPG, GU, and GUG in randomized order to counterbalance potential sequencing effects. This experiment was approved by the Ethics Review Committee of Shanghai Jiao Tong University (E2022741), and each participant was informed about the study and signed a consent form.

In each demo, the texture square was visually occluded. The participants were asked to explore the space using vibrotactile feedback and reconstruct the spatial texture layout. In GPG and GUG, the designers created entirely novel textures from scratch using our generative tool. Given that conventional vibration fragments cannot provide real-time feedback based on the user’s finger position, inconsistent sweeping speeds may lead to mismatched haptic sensations. To ensure fair comparison across trials, a visual speed indicator was presented during each touch of haptic texture, guiding participants to sweep across the texture at a consistent and appropriate speed (200 pixels per second). In addition, the participants were allowed to freely explore each tactile texture without time or interaction constraints.
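The reason a fixed sweeping speed matters for pre-rendered vibration fragments can be shown with a short calculation. A fragment designed for 200 pixels per second plays each collision at a fixed time, whereas the finger reaches the corresponding surface feature at a time that depends on its actual speed. The function names and the example positions below are hypothetical.

```python
GUIDED_SPEED_PX_S = 200.0  # sweeping speed shown by the visual indicator


def collision_times(positions_px, speed_px_s=GUIDED_SPEED_PX_S):
    """Times (s) at which a finger moving at constant speed reaches each position."""
    return [x / speed_px_s for x in positions_px]


def timing_error(positions_px, actual_speed_px_s,
                 designed_speed_px_s=GUIDED_SPEED_PX_S):
    """Per-collision delay (s) of the finger relative to the pre-rendered fragment.

    Positive values mean the fragment plays the collision before the finger
    has reached the feature.
    """
    designed = collision_times(positions_px, designed_speed_px_s)
    actual = collision_times(positions_px, actual_speed_px_s)
    return [a - d for a, d in zip(actual, designed)]


features_px = [0, 100, 200]
designed_times = collision_times(features_px)
errors_at_half_speed = timing_error(features_px, actual_speed_px_s=100.0)
```

At half the guided speed the mismatch grows linearly along the sweep, which is why a position-driven map does not need this constraint while a time-based fragment does.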

Following the task, the participants completed a subjective questionnaire evaluating the following:

Overall quality of haptic design (1 (very poor)–7 (very good) Likert scale).

Perceived consistency across textures (1 (very poor)–7 (very good) Likert scale).

Realism of individual textures: gravel, metal, fabric, and wood (1 (very poor)–7 (very good) Likert scale).

Additionally, the accuracy of reconstruction was recorded to examine the discriminability of the generated haptic textures.
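One straightforward way to score a reconstruction is the proportion of tiles whose reported material matches the true layout. The exact scoring rule is not specified here, so the per-tile proportion below, along with the function name `identification_accuracy`, should be read as an assumption.

```python
def identification_accuracy(true_layout, reported_layout):
    """Fraction of tiles for which the reported material equals the true one."""
    pairs = [
        (truth, reported)
        for true_row, reported_row in zip(true_layout, reported_layout)
        for truth, reported in zip(true_row, reported_row)
    ]
    return sum(truth == reported for truth, reported in pairs) / len(pairs)


# Toy 2 x 2 example: one of four tiles is misidentified.
truth = [["gravel", "metal"], ["fabric", "wood"]]
answer = [["gravel", "metal"], ["wood", "wood"]]
accuracy = identification_accuracy(truth, answer)
```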

4.6. Result

All statistical analyses were conducted using Python (v3.13) with the scipy and scikit-posthocs libraries. As the data did not meet normality assumptions (assessed visually and confirmed via Shapiro–Wilk tests), non-parametric methods were adopted. For NASA-TLX workload scores, we used Wilcoxon signed-rank tests for within-subject comparisons (GP vs. GPG; GU vs. GUG) and Mann–Whitney U tests for between-group comparisons (GPG vs. GUG), as these involved independent samples. For user ratings across four design conditions (GP, GPG, GU, and GUG) and identification accuracy, Friedman tests were employed to account for repeated measures, followed by Nemenyi post hoc tests to identify pairwise differences when significance was found.
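The analysis pipeline described above can be outlined as follows. All numbers in this sketch are synthetic and are not data from the study; group sizes mirror the design (8 professionals, 10 non-haptic designers, 30 raters). The scipy calls are standard, and the Nemenyi step uses `posthoc_nemenyi_friedman` from scikit-posthocs when that package is installed.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)

# Synthetic NASA-TLX scores for one subscale (NOT study data).
gp = rng.normal(60, 10, size=8)        # professionals, traditional tool
gpg = gp - rng.uniform(5, 20, size=8)  # same professionals, generative tool
gug = rng.normal(45, 10, size=10)      # non-haptic designers, generative tool

# Normality check that motivates the non-parametric tests.
normality = stats.shapiro(gp)

# Within-subject comparison (paired samples): GP vs. GPG.
paired = stats.wilcoxon(gp, gpg)

# Between-group comparison (independent samples): GPG vs. GUG.
independent = stats.mannwhitneyu(gpg, gug, alternative="two-sided")

# Synthetic 1-7 ratings: 30 raters (rows) x 4 conditions (GP, GPG, GU, GUG).
ratings = rng.integers(1, 8, size=(30, 4))
friedman = stats.friedmanchisquare(*ratings.T)

# Pairwise post hoc comparisons, only meaningful if the Friedman test is significant.
try:
    import scikit_posthocs as sp
    nemenyi_p = sp.posthoc_nemenyi_friedman(ratings)
except ImportError:
    nemenyi_p = None  # scikit-posthocs is optional in this sketch
```

Because every synthetic professional score decreases with the generative tool, the paired test is significant by construction; with real data, each NASA-TLX subscale would be tested separately in the same way.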

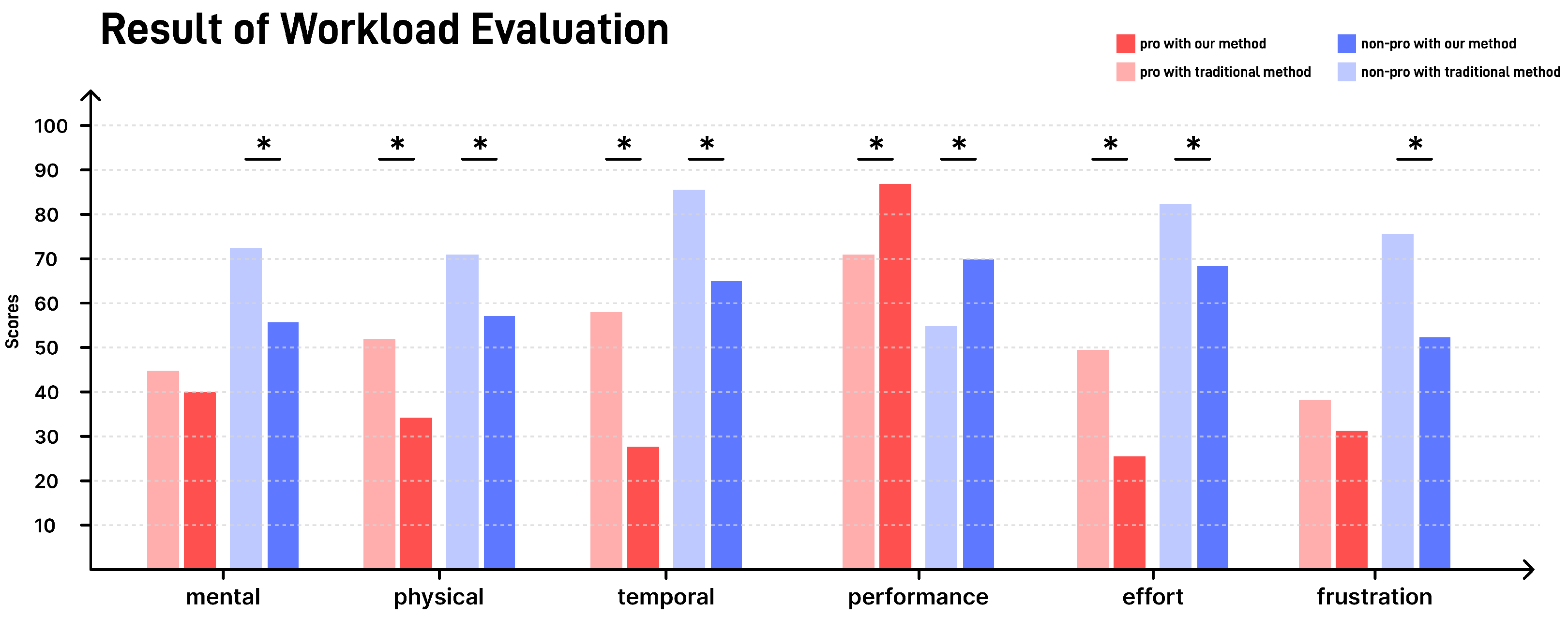

4.6.1. Workload of Design Workflow

We conducted statistical analyses on six NASA-TLX subscales to evaluate perceived workload across three group comparisons: GP vs. GPG (professional designers using traditional vs. generative tools), GU vs. GUG (non-professional designers using traditional vs. generative tools), and GPG vs. GUG (professional vs. non-professional designers using generative tools) (Figure 7).

Results from Wilcoxon signed-rank tests revealed significant reductions in physical demand, temporal demand, performance, and effort workloads when professional designers used the generative tool (GP vs. GPG, all p < 0.05), with no significant differences found for mental demand and frustration. For non-professional designers, the generative tool significantly reduced perceived workload across all subscales (GU vs. GUG, all p < 0.01). Furthermore, Mann–Whitney U tests comparing professional and non-professional users of the generative tool showed that GPG consistently reported lower workload than GUG in all subscales except mental demand, with statistical significance across five subscales (all p < 0.05).

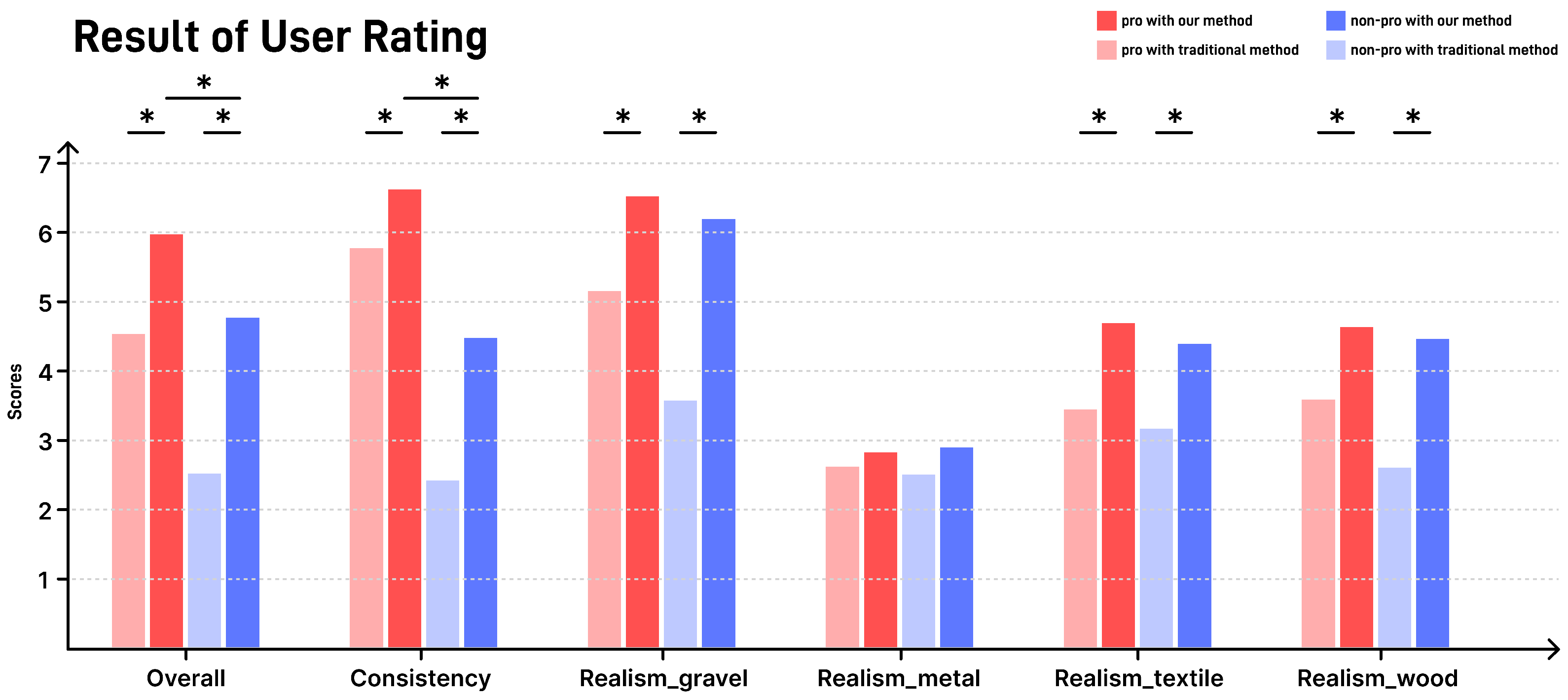

4.6.2. User Evaluation

To assess the quality of the design outputs, we conducted Friedman tests followed by Nemenyi post hoc comparisons focusing on two key comparisons: (1) professional haptic designers using traditional tools (GP) versus our method (GPG), and (2) professional haptic designers (GPG) versus non-haptic designers (GUG) using our method (Figure 8).

For Overall ratings, the Friedman test revealed a significant difference. Post hoc analysis showed that designs created with the intelligent tool by professional designers (GPG) were rated significantly higher than those created with traditional tools (GP), while no significant difference was found between GPG and GUG. For Consistency, a significant difference was observed. GPG designs were rated significantly more consistent than GUG, although no significant difference was found between GP and GPG.

Regarding Gravel Realism, the Friedman test indicated a significant difference. GPG designs achieved significantly higher realism ratings compared with GP, whereas no significant difference was observed between GPG and GUG. For Metal Realism, no significant difference was found among groups. For Fabric Realism, a significant difference was detected. GPG designs outperformed GP with a significant difference, and there was no significant difference between GPG and GUG. For Wood Realism, the Friedman test again showed significant differences. GPG designs were significantly preferred to GP, while no significant difference was found between GPG and GUG.

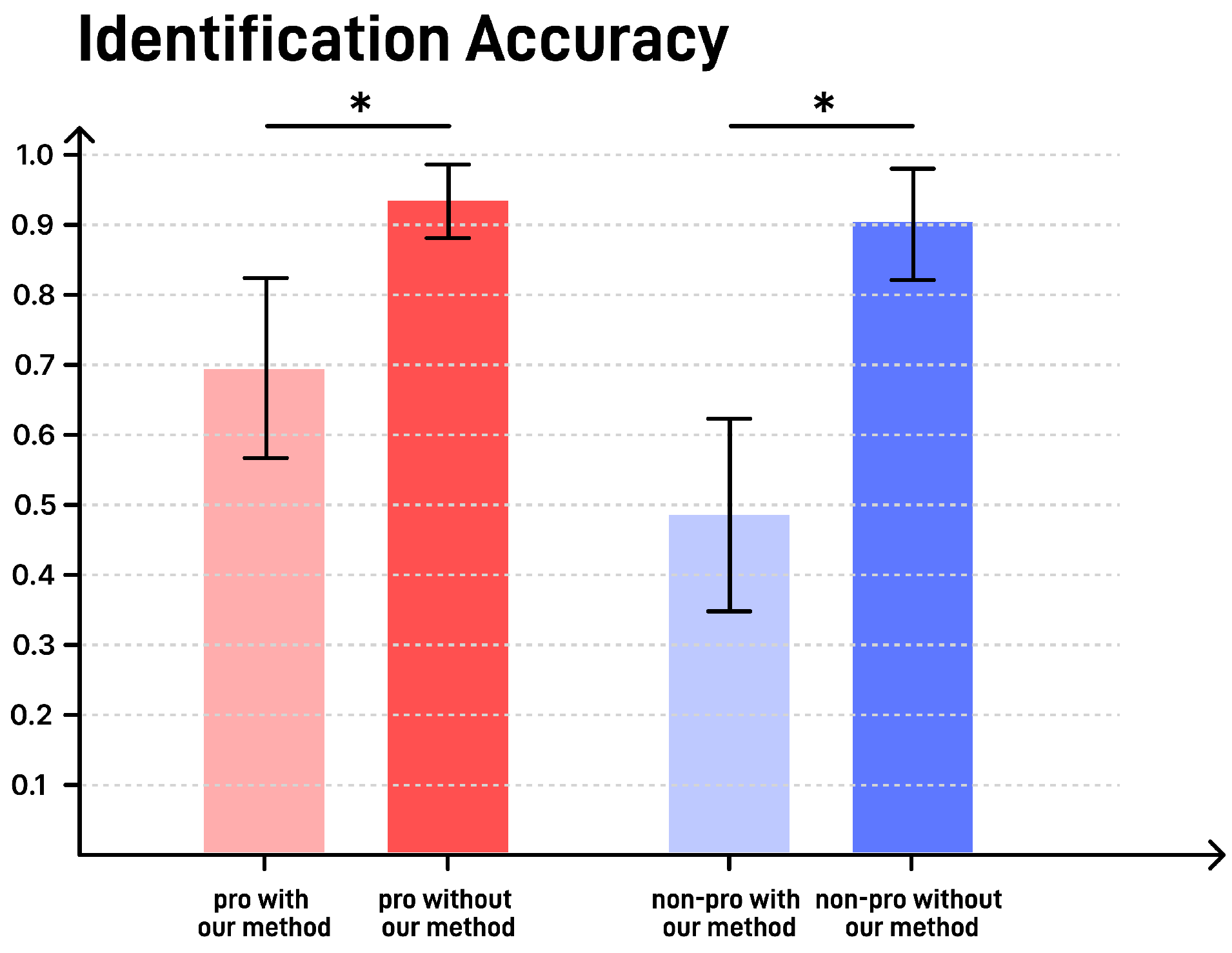

Finally, for Discriminability (identification accuracy) (Figure 9), a highly significant difference was observed. Designs produced by professional designers with the intelligent tool (GPG) achieved significantly higher identification accuracy than those produced using traditional methods (GP), and no significant difference was observed between GPG and GUG.

5. Discussion

5.1. Generative MCHa Map

First, our MCHa map offers a structural advantage for real-time interaction. Although traditional methods can achieve good performance in Consistency and in the Realism of certain materials when the user’s brushing speed is controlled, due to carefully designed feedback that matches the expected collision patterns, our method shows greater advantages during free exploration. This may explain why our method received higher Overall ratings (Figure 8). Unlike traditional approaches that rely on pre-designed, unidirectional tactile sequences, our method supports interactive feedback during free-form exploration. It accommodates touches in any direction, providing a more realistic and immersive haptic experience.

In particular, our method demonstrates a significant advantage in the discriminability of fine textures. Such textures often require users to explore them repeatedly from multiple directions to be recognized, and our approach clearly outperforms traditional methods in this aspect (Figure 9). Compared with existing methods, our approach demonstrates superior performance in both mechanistic rationale and practical implementation. The work of Ujitoko et al. [8] also realized end-to-end haptic feedback, but their method cannot achieve free exploration of haptic textures like MCHa due to the limitations of the haptic data. The work of Chan et al. [10] is based on micro-contact mechanics, where vibration feedback signals are mapped from the height map of virtual textures. Their method shows good discrimination for simple textures when using specific actuators. However, in free exploration and an absolute identification experiment, the recognition accuracy of users is lower than that of the MCHa map. Fang et al. [11] also used a VAE model to generate haptic data and proposed a visual–tactile cross-modal generative algorithm to enhance the consistency between visual and tactile modalities, but the generated output cannot be applied directly to the actuator input, as it can in our method. Compared with those prior works, the novelty of our work lies in the micro-collision-based haptic feedback. We treat the interaction with virtual textures as a sequence of collision feedback on a virtual plane, simplifying the generation of tactile textures while retaining the texture’s tactile features. Additionally, by integrating Stable Diffusion, we enable direct generation of haptic textures from natural language prompts, which can directly drive vibration actuators, significantly simplifying the haptic interaction design process.

In summary, our method offers an elegant and efficient way for designers to generate both visual texture maps and matching MCHa maps directly from textual prompts. This not only helps professional haptic designers improve efficiency and reduce workload but also enables non-experts to quickly create usable haptic feedback.

5.2. Design with Generative Haptic Methods

Our method offers a convenient and efficient workflow for haptic design. Professional haptic designers using our method are relieved from spending extensive time and effort on aligning visual textures with haptic feedback. Our system directly transforms the visual texture’s surface features into corresponding haptic responses, eliminating the need for manual calibration and fine-tuning of vibration intensity envelopes typically required in traditional workflows (Figure 7). This enables professional haptic designers to focus their efforts on more critical aspects of the design process, such as expressive articulation and the creative nuances of haptic feedback, thereby making a more substantial impact on the overall user experience. In our experiments, professional designers using our method tended to invest more energy in refining the details of the haptic feedback. This post-generation fine-tuning contributed to significantly higher scores in both overall quality and consistency compared with designs from non-professional users (Figure 8). These findings suggest that our approach can further enhance the quality of expert-level haptic design.

Our method offers an intuitive and user-friendly tool for novice and non-haptic-specialist designers. For non-haptic designers, one of the main challenges lies in accurately describing the desired texture using precise language, even though they are typically experienced in working with generative AI tools. Unlike other visual or interaction design assets, texture generation demands careful consideration of camera angles, surface patterns, lighting effects, and fine-grained texture features. The unfamiliar technical vocabulary can increase the cognitive load when using generative haptic tools (Figure 7). Another challenge involves adjusting the generated outputs. Although our method provides highly usable haptic feedback directly, users without knowledge of haptic parameters or tactile dynamics often struggle to make efficient and meaningful adjustments based on their subjective impressions. They typically require multiple rounds of fine-tuning before finalizing a satisfactory design. Nevertheless, compared with using traditional tools, non-professional designers reported a significantly reduced workload (Figure 7). This indicates that tools based on generative AI offer a more user-friendly experience for novice designers. The reduced reliance on specialized knowledge and the simplified workflow allow them to complete design tasks with greater ease.

Our method significantly enhances the quality of haptic design, particularly in terms of perceived realism. In addition, designers without a professional background in haptics were also able to generate tactile feedback with a high degree of realism using our method. In the single-task design scenario of our experiment, the tactile feedback they produced for the four material types did not differ significantly in perceived realism compared with that of professional haptic designers (Figure 8). This suggests that our approach can substantially lower the barrier to entry for tactile design, improving overall quality. For basic and straightforward design tasks, non-haptic designers can achieve high-quality results with ease using our method.

5.3. The Streamlining of the Haptic Design Process

These results suggest that the generative tool effectively reduces perceived workload across most dimensions, particularly in terms of temporal demand, effort, and performance, for both professional and non-professional designers. The consistent improvement observed in the GPG vs. GP and GUG vs. GU comparisons indicates that the generative tool provides ergonomic and cognitive benefits regardless of design expertise. Interestingly, while mental demand did not show a significant difference for professionals, non-professional users experienced notable reductions. This may imply that the generative tool offers more cognitive scaffolding for less experienced users, potentially by automating or simplifying complex design processes. The frustration level only significantly decreased among non-professional users, suggesting that experienced designers may require more control or transparency to feel satisfied with AI-supported workflows. Overall, the results reinforce the value of intelligent tools in democratizing design by lowering cognitive barriers, especially for novices, while still delivering measurable efficiency gains for experts.

5.4. Limitations

However, the micro-collision-based approach still has room for improvement. Our method is particularly well suited for textures with pronounced surface contact features, such as the granular surface of gravel or the ridged structures typical of wood. In contrast, for relatively smooth textures like metal, which lack significant surface height variation, our method may struggle to convey distinct tactile cues. To address this limitation, we suggest augmenting the MCHa map with auditory feedback—such as metallic friction sounds—to provide a more realistic and immersive multisensory experience.

Although our method provides support for novice designers, professional haptic designers still need to invest considerable cognitive effort in formulating design strategies—for example, ensuring consistency in perceived intensity across interactions. To address this challenge, our ongoing work explores the integration of large language models (LLMs). We are currently constructing a structured knowledge base of haptic design principles and techniques. By leveraging retrieval-augmented generation or fine-tuning approaches, we aim to enable LLMs to offer expert-level guidance in haptic design workflows.

Moreover, responsiveness to the user’s movement speed across the surface is also crucial. Due to current device limitations—specifically, the relatively low touchscreen sampling rates (typically below 240 Hz) and the response latency of vibration actuators (ranging from 1 to 5 ms)—the system may fail to provide immediate and accurate feedback for each micro-collision during fast swiping. This limitation suggests the need to prioritize surface features by importance, allowing the system to respond to the most salient micro-collisions first. Such prioritization can help ensure recognition accuracy under real-time interaction constraints. We plan to address this issue in future work.
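The proposed prioritization can be sketched as a simple selection rule: at a given swipe speed, each touch sample covers a fixed distance on the surface, and only the most salient micro-collision within that distance is rendered. This is an illustration of the idea rather than an implemented component; the function name `prioritize_collisions`, the salience values, and the use of 240 Hz as the sampling rate are assumptions.

```python
def prioritize_collisions(collisions, speed_px_s, sample_rate_hz=240.0):
    """Keep the most salient collision within each touch-sample window.

    collisions: list of (position_px, salience) pairs along the swipe path.
    Returns the selected pairs ordered by position.
    """
    window_px = speed_px_s / sample_rate_hz  # distance travelled per touch sample
    best = {}
    for position, salience in collisions:
        index = int(position // window_px)
        if index not in best or salience > best[index][1]:
            best[index] = (position, salience)
    return [best[index] for index in sorted(best)]


collisions = [(1, 0.2), (4, 0.9), (12, 0.5), (18, 0.1)]
fast_swipe = prioritize_collisions(collisions, speed_px_s=2400.0)  # 10 px per sample
slow_swipe = prioritize_collisions(collisions, speed_px_s=240.0)   # 1 px per sample
```

During the fast swipe two collisions share each sample window, so only the stronger one of each pair is kept, whereas the slow swipe leaves every collision in its own window.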

5.5. Implication for Design and Future Research

We plan to further develop the MCHa map tool by creating plugins for development platforms such as Unity, enabling fast and high-quality haptic texture generation. Building on our work, the tool can be integrated with haptic design SDKs such as Apple Core Haptics or RichTap Creator. Since different vibration actuators have varying amplitude and frequency characteristics, incorporating device-specific control parameters can help produce more perceptually consistent haptic feedback. Our method also has potential for further development in media applications such as games and videos. By combining content understanding, haptic knowledge systems, and our generative texture framework, the tool could deliver real-time, expressive, and realistic haptic feedback for dynamic scenes—such as surface contact and friction in racing games or cinematic sequences. For future research, one direction is to build a structured database of expert haptic design terminology. This would include strategies, guidelines, and methods extracted from the literature, along with psychophysical data on human tactile perception. A clean, accurate, and professionally curated database would greatly benefit generative haptic design tools. Since haptic design is often used in conjunction with visual and auditory modalities, future models will need strong cross-modal integration capabilities. Research into multimodal information processing and cross-modal generation involving haptics will be essential in advancing this field.

6. Conclusions

In this study, we introduce MCHa maps, a simple and user-friendly representation for vibrotactile textures. We also train a generator that enables developers and designers to efficiently produce visual textures along with precisely matched MCHa maps using latent diffusion models (LDMs), such as Stable Diffusion, driven by natural language prompts. Our fine-tuned VAE model exhibits high performance in texture generation, especially for stochastic and irregular patterns, such as gravel and wood surfaces. We further evaluated the proposed generative haptic design tool, and found that it significantly reduces designers’ workload compared with traditional tools, particularly in terms of physical effort. In addition, we assessed the quality of the generated haptic feedback. Our method delivers superior performance during free exploration tasks and more faithfully captures the tactile characteristics of surface interactions. The proposed method and tool substantially lower the workload and expertise barrier in haptic design, facilitating broader accessibility and contributing to the democratization of tactile content creation. Moreover, our approach improves the overall quality of haptic design, particularly by enabling the creation of realistic tactile feedback in applications such as gaming and immersive media.