Off-Cloud Anchor Sharing Framework for Multi-User and Multi-Platform Mixed Reality Applications

Abstract

1. Introduction

- It details the design and development of a novel spatial anchor sharing system: a system that locally stores and synchronizes spatial anchors between devices, removing reliance on third-party or cloud services.

- It provides cross-platform compatibility. The proposed solution seamlessly integrates diverse devices, including Microsoft HoloLens 2, desktop computers and IoT devices, enabling real-time multi-user collaboration.

- To illustrate the use of the proposed solution, a practical implementation is described: a multiplayer collaborative game that showcases the framework's capabilities and serves as a reference for future XR applications. To facilitate the reproducibility of the experiments and the extension of the proposed framework, the development is available as open-source code [25].

2. State of the Art

3. Design and Implementation of the System

3.1. Main Features of the Proposed System

- It provides smooth user interaction within the virtual environment for both desktop and MR devices.

- The system allows clients to either connect to an external server or to host the server themselves (i.e., the server can be executed on the device of a specific client).

- It allows multiple users to connect to a server and to experience the same scenario in real time.

- The system is able to keep the status of all the virtual elements synchronized across all the connected devices.

- Specifically for MR devices:

  - Spatial anchors are used to align virtual objects across the different devices and to keep them synchronized.

  - The system is designed to share spatial anchors among MR devices as quickly as possible.

  - An efficient local storage system saves spatial anchors in local memory.

  - To reduce communication overhead, the anchor exchange protocol is designed to minimize the number of times a spatial anchor is exchanged between devices over the LAN, which also decreases loading times at scene launch.

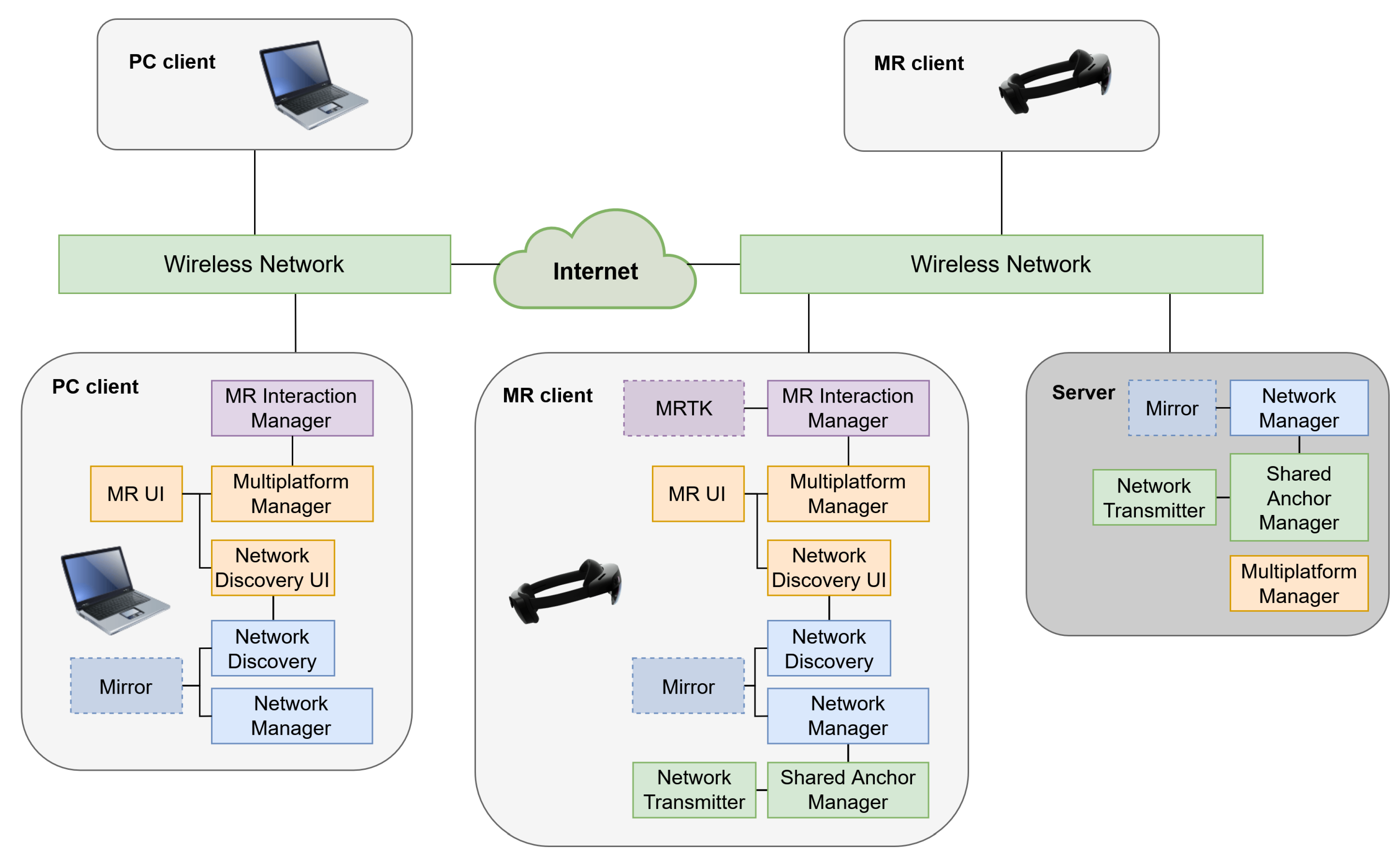

3.2. Communication Architecture

- Network Manager: uses the Mirror library to exchange messages between the clients and the server, and is also responsible for keeping all the objects synchronized.

- Network Discovery: also uses the Mirror library and manages the automatic connection of users to local servers.

- Shared Anchor Manager: handles all aspects related to the sharing and synchronization of anchors between MR devices.

- Network Transmitter: works together with the Shared Anchor Manager to divide anchors into smaller segments and send them through the network.

- Multiplatform Manager: manages the platform-dependent components, enabling and disabling those specific to each runtime environment. This is implemented using conditional compilation directives (e.g., #if UNITY_WSA, #if UNITY_STANDALONE) and runtime logic, ensuring that only the necessary elements are loaded and active on each platform. This approach allows for a clean separation of platform-dependent resources while maintaining a unified codebase.

- Interaction Manager: uses MRTK on MR devices and manages the different user inputs depending on the platform on which the application is running.
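The runtime half of the Multiplatform Manager idea can be illustrated with a minimal sketch. It is written in Python rather than in the Unity C# used by the system, and the component names, platform labels and the `active_components` helper are all hypothetical; the actual project relies on compilation directives plus its own runtime logic.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Component:
    """A platform-dependent component and the platforms it should run on."""
    name: str
    platforms: FrozenSet[str]


# Hypothetical component registry; names are illustrative only.
COMPONENTS = [
    Component("HandInteraction", frozenset({"hololens"})),
    Component("KeyboardMouseInput", frozenset({"desktop"})),
    Component("NetworkManager", frozenset({"hololens", "desktop"})),
]


def active_components(platform: str, components=COMPONENTS) -> List[str]:
    """Return the names of the components that should be enabled on a platform."""
    return [c.name for c in components if platform in c.platforms]


print(active_components("hololens"))
print(active_components("desktop"))
```

Shared components appear in both lists, while input handling differs per platform, which mirrors the single-codebase approach described above.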

3.3. System Design

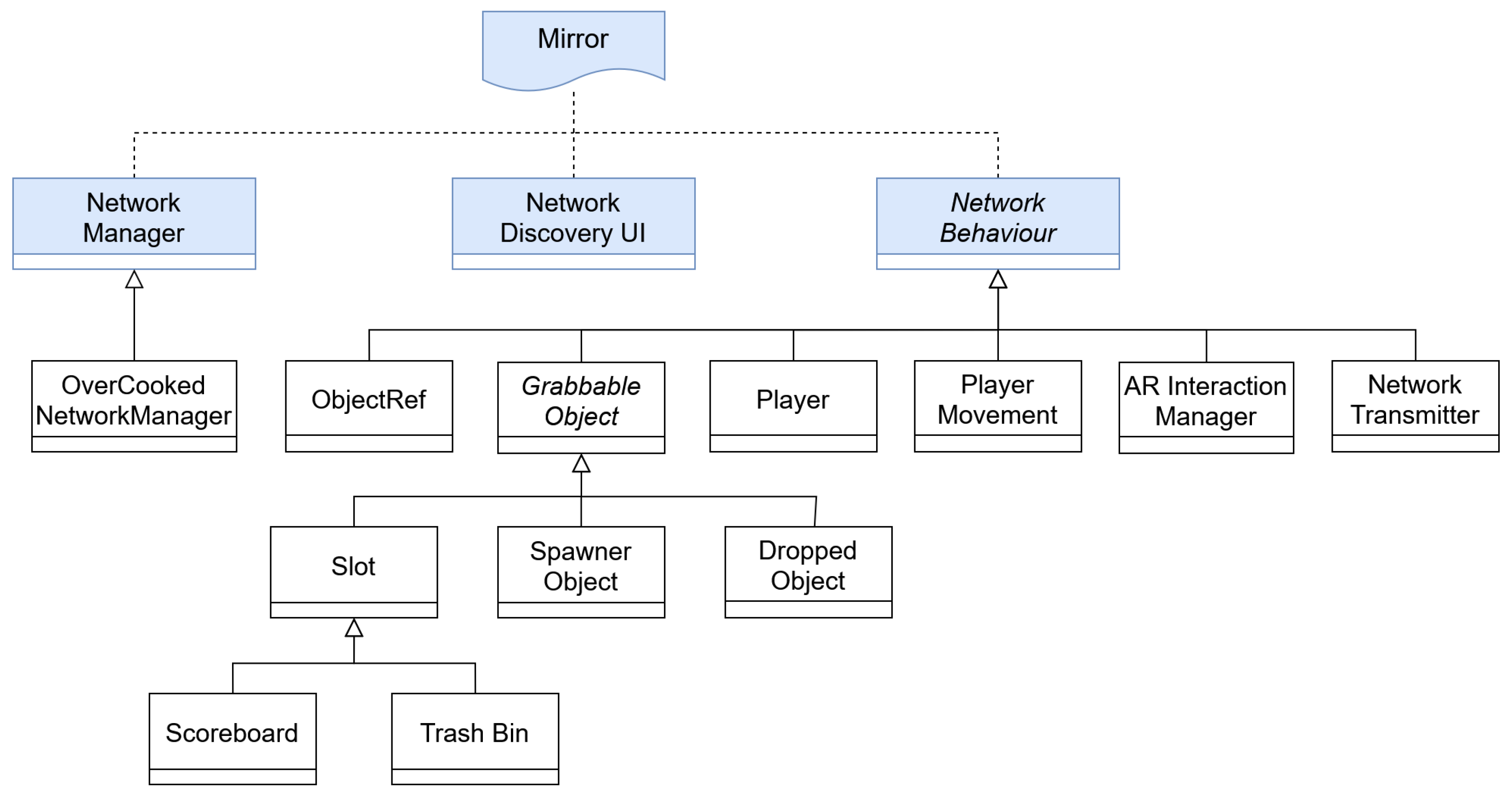

- OverCookedNetworkManager: Mirror requires a NetworkManager implementation. This class manages and delegates the methods and events that are triggered when, for example, a server is started or a client connects.

- Player: This class is responsible for handling everything related to the connection, interactions with the environment and movement of the players. It is also used to exchange information with the server through Mirror commands.

- ObjectRef: This class represents an element shared by the network to reference an object (in this case, an ingredient or dish). It stores a reference to the represented GameObject (Prefab).

- ARInteractionManager: This class captures and manages all the MRTK events that are triggered when an MR user makes hand gestures or interacts with elements of the virtual environment. Examples of these interactions include clicks, button presses and the use of the cutting board.

- Slot: This class represents a point at which users can drop objects (in this particular case, countertops). It could also be extended to create special Slots such as the trash can or the counter where finished dishes are placed for delivery.

- WorldAnchorSharedManager: This class manages all aspects related to anchor sharing and synchronization. It offers methods that are used, for example, from the Player, allowing both local synchronization in each device and global synchronization between server and clients. It uses the “ARAnchorManager” system from the Unity XR ARFoundation library and also OpenXR “XRAnchorStore”.

- NetworkTransmitter: This class sends anchors between devices. It is an adaptation of the NetworkTransmitter provided by Unity in one of its example projects [37], modified to fit the needs of this system. It is used when the size of the serialized anchor exceeds the transmission limit imposed by the Mirror networking library (298,449 bytes), in which case the data must be split into smaller segments. Mirror internally uses a KCP-based transport layer whose default window size is 4096 bytes; the maximum payload size per packet, however, is determined by Mirror and calculated to be 298,449 bytes. The anchor is therefore divided into chunks that fit within this limit, ensuring reliable and ordered delivery across the network.

- NetworkDiscoveryUI: This class is responsible for the automatic connection of users to the network, thus facilitating the connection between devices and avoiding the need to enter the server IP manually. Its methods are executed when the application connection panel is used, that is, when a user opens the application for the first time and connects to the network as a host or client. This is where Mirror’s “NetworkDiscovery” component is encapsulated and used transparently to the user and developer, regardless of the platform on which the application is running.
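The chunking performed by NetworkTransmitter can be sketched as follows. This is a minimal Python illustration, not the project's C# code: only the 298,449-byte limit comes from the text, while the function names and the 1,000,000-byte anchor are hypothetical. It also assumes that the transport delivers chunks reliably and in order, as stated above.

```python
from typing import List

# Maximum payload size reported in the text for Mirror (bytes).
MAX_PAYLOAD_BYTES = 298_449


def split_into_chunks(data: bytes, chunk_size: int = MAX_PAYLOAD_BYTES) -> List[bytes]:
    """Split a serialized anchor into consecutive chunks of at most chunk_size bytes."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def reassemble(chunks: List[bytes]) -> bytes:
    """Rebuild the anchor on the receiver; assumes ordered, lossless delivery."""
    return b"".join(chunks)


# Hypothetical 1,000,000-byte anchor (not a measured value).
anchor = bytes(1_000_000)
chunks = split_into_chunks(anchor)

print(len(chunks))        # number of chunks needed for this anchor
print(len(chunks[-1]))    # size of the final, partially filled chunk
```

A real transmitter would likely reserve a few bytes of each message for metadata such as a transfer identifier and a chunk index, so the usable chunk size would be somewhat smaller than the raw limit.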

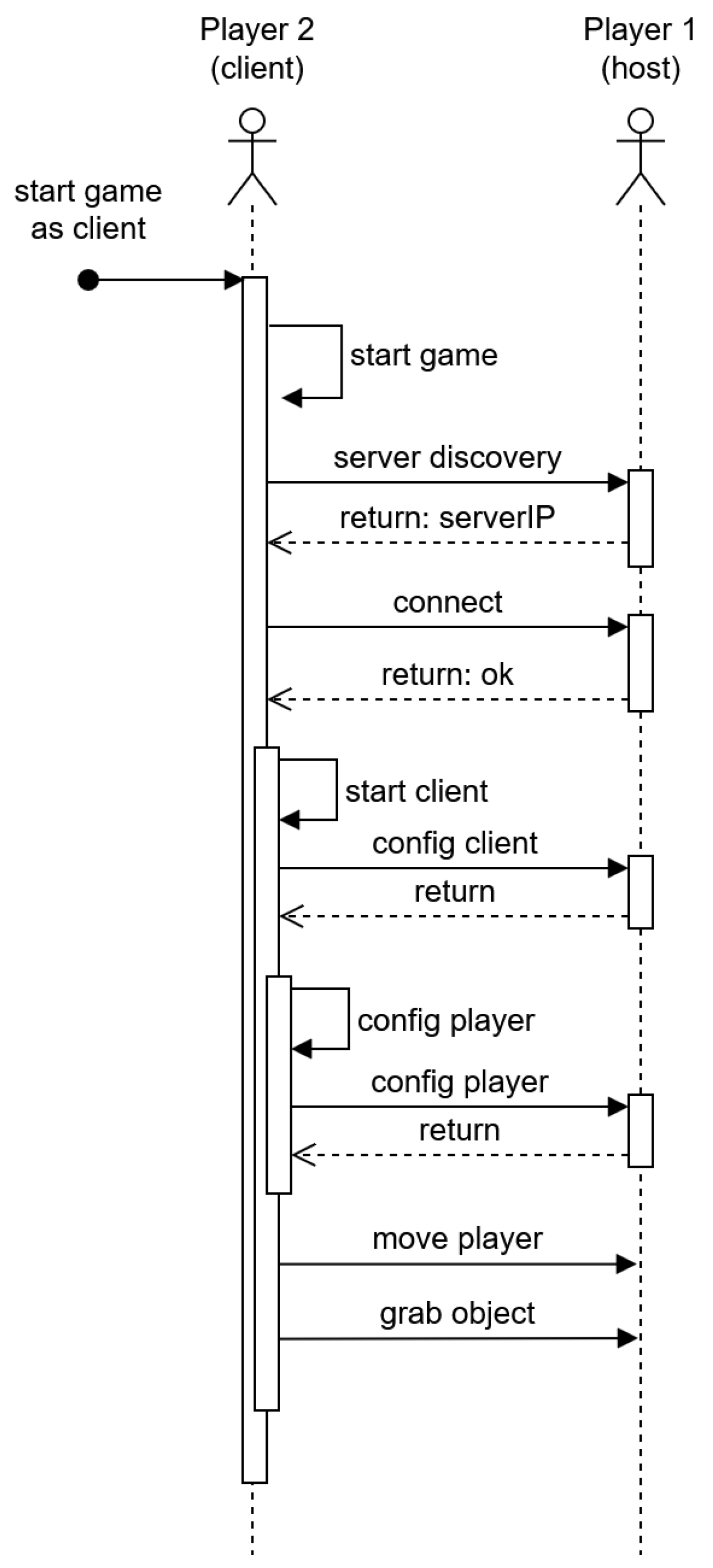

3.3.1. Automatic User Connection Module

1. A person (Player 2) starts the application and selects the menu button to connect as a client. This is the only action performed by Player 2; the rest of the process is executed automatically by the system.

2. The system executes the method “networkDiscovery.StartDiscovery()” to search for available servers on the network. The active server (in this case, Player 1 acting as host) responds by sending its IP address.

3. The system configures the received IP address as the connection endpoint and connects to that server.

4. The system calls the “networkManager.StartClient()” method, and configures and synchronizes the scene with the server.

5. The system then invokes the “OnStartClient()” and “Start()” methods, where all the internal variables required for the correct operation of Player 2 and the system are configured.

6. From this moment on, Player 2 can play freely. The system synchronizes the player's movements and actions with the server, which broadcasts them to the remaining clients on the network, if there are any.

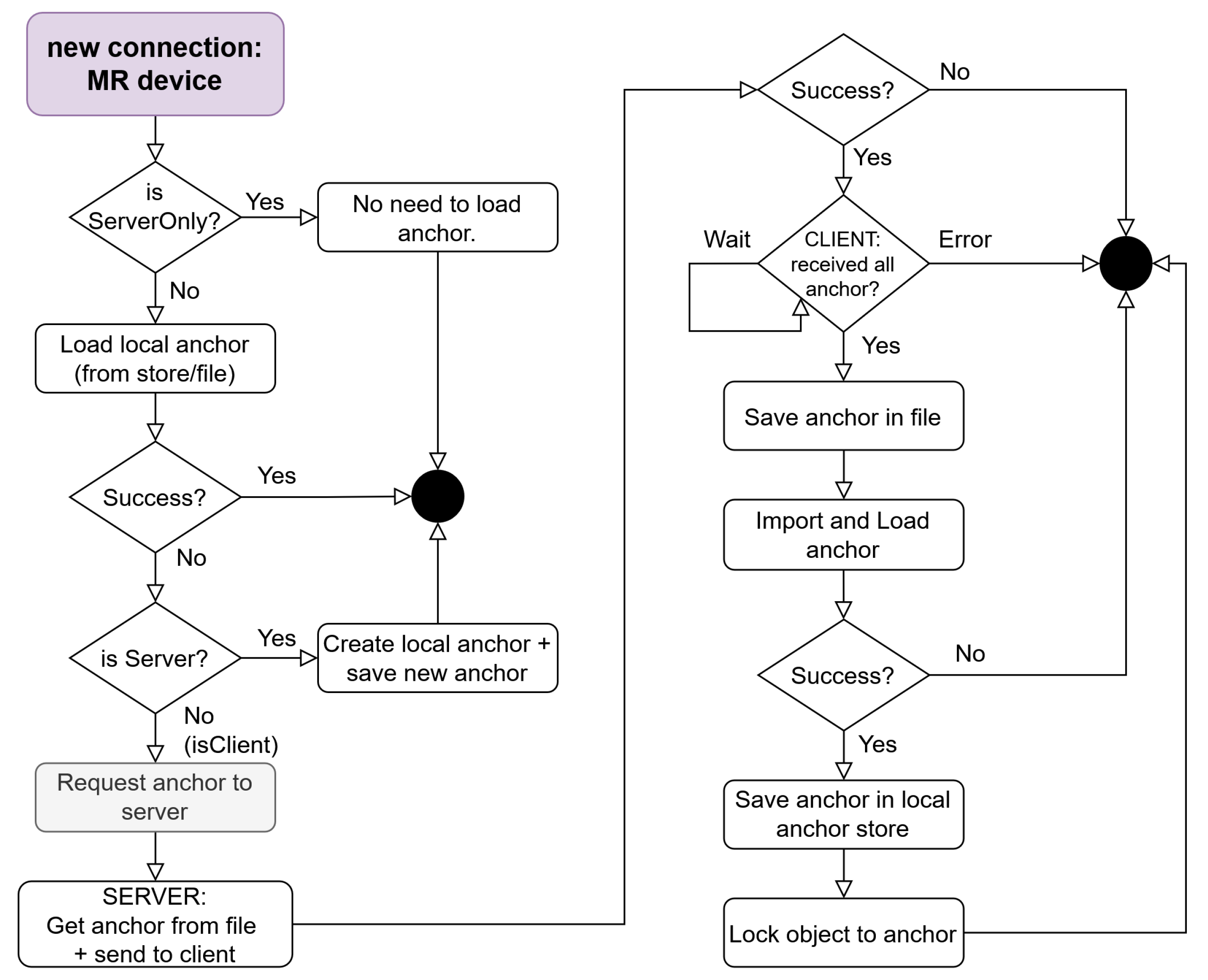

3.3.2. Anchor Synchronization Module

1. Load the local anchor (either from the HoloLens anchor store or from a file in local memory). If this anchor exists and is loaded successfully, the process ends.

2. If there is no local anchor, two situations may occur:

   - (a) If the device is acting as a host (server+client), a new anchor is created and stored locally (either in the anchor store or in a file in local memory), and the process ends.

   - (b) If the device acts as a client, it sends a request to the server asking for an anchor.

3. Once the server receives the request, it prepares the anchor to be sent (if the anchor is too large, the NetworkTransmitter component is used to divide it into smaller pieces and transmit them one at a time).

4. Finally, when the client has received the whole anchor, it is saved to a file in local memory and loaded into the scene (it is imported into Unity’s internal anchor management system and stored in the local anchor store). The anchor is stored in local memory as a plain text file containing the serialized byte array that is generated after exporting the anchor using the MRTK libraries. After the anchor is loaded, the 3D model is moved to the anchor position in order to synchronize the scenes of the two devices (server and client), so that both see the 3D objects at the same physical position.
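The decision flow of the steps above can be summarized in a short Python sketch. The dictionary standing in for the local anchor store, the two callbacks and the `synchronize_anchor` name are hypothetical placeholders; the real system works with the HoloLens anchor store, local files and Mirror messages.

```python
from typing import Callable, Dict


def synchronize_anchor(
    store: Dict[str, bytes],
    is_host: bool,
    create_anchor: Callable[[], bytes],
    request_from_server: Callable[[], bytes],
    anchor_id: str = "shared-anchor",
) -> str:
    """Run the synchronization flow and report which branch was taken."""
    # Step 1: a local anchor exists, so nothing has to be exchanged.
    if anchor_id in store:
        return "loaded"
    # Step 2(a): the host creates a new anchor and stores it locally.
    if is_host:
        store[anchor_id] = create_anchor()
        return "created"
    # Steps 2(b) to 4: the client requests the anchor and stores what it receives.
    store[anchor_id] = request_from_server()
    return "received"


host_store: Dict[str, bytes] = {}
print(synchronize_anchor(host_store, True, lambda: b"new", lambda: b"remote"))
print(synchronize_anchor(host_store, True, lambda: b"new", lambda: b"remote"))
```

The second call finds the anchor stored by the first one, which is the case in which no network transfer is needed at all.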

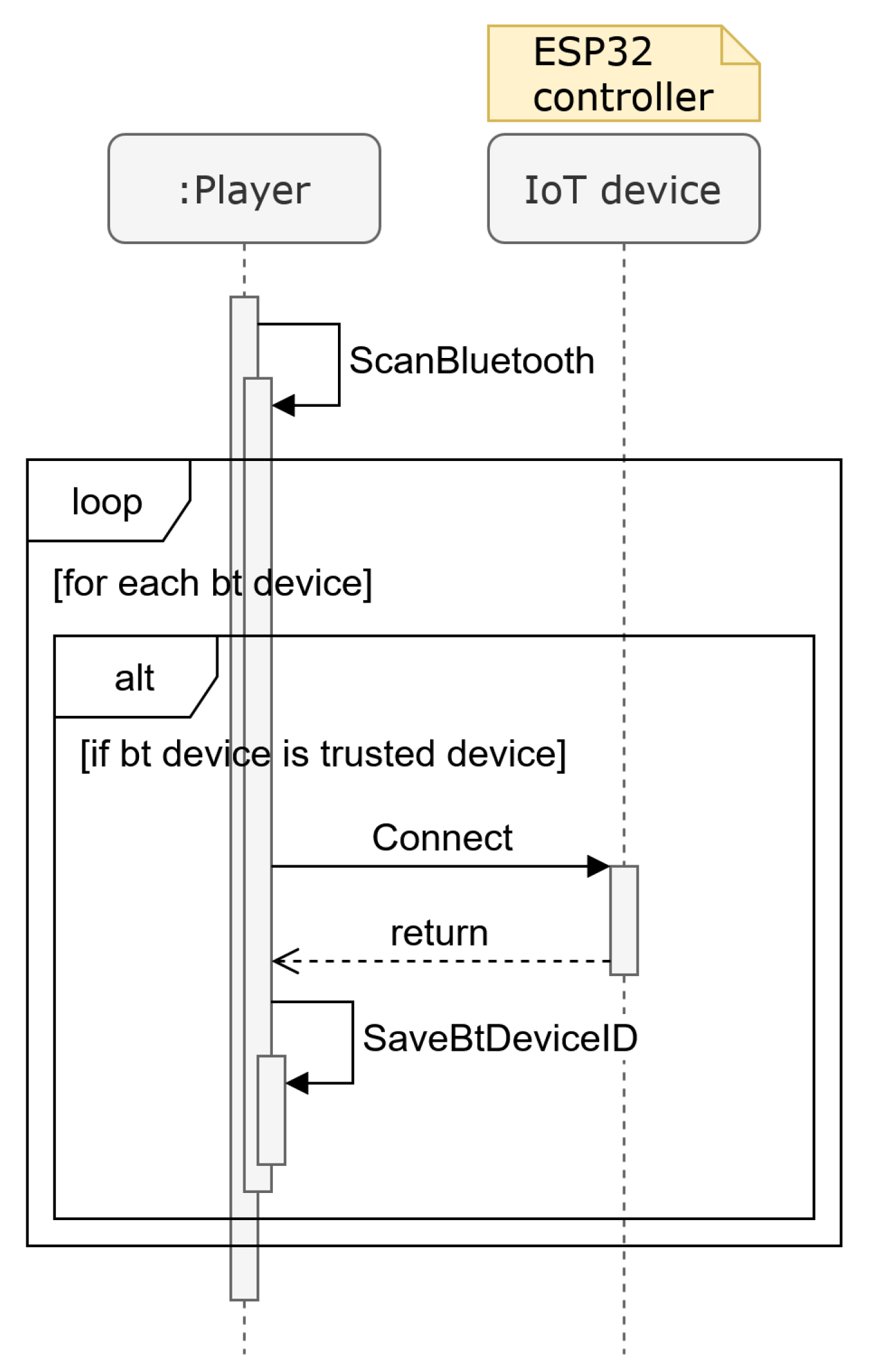

3.3.3. IoT Interaction Module

1. Initial Connection: When the application is first started on the HoloLens 2, it automatically scans the environment for compatible Bluetooth devices (i.e., devices that are included in a previously defined white list of trusted devices). Once a device is detected, the connection is established without the need for manual intervention.

2. Physical Action: Pressing the physical button connected to the ESP32 sends a message to the HoloLens 2 device.

3. Virtual Action: The HoloLens 2 receives the message and executes the assigned function, ensuring a fast and consistent response to the user’s physical interaction.
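The white-list filtering and message-to-function mapping described in these steps can be sketched in Python as follows. The device addresses, the message string and all class and function names are hypothetical, and no real Bluetooth API is used; the sketch only shows the control logic.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical white list of trusted device addresses.
TRUSTED_DEVICES = frozenset({"AA:BB:CC:DD:EE:01"})


def select_trusted(discovered: Iterable[str], whitelist=TRUSTED_DEVICES) -> List[str]:
    """Keep only the discovered devices that appear in the white list."""
    return [address for address in discovered if address in whitelist]


class ButtonDispatcher:
    """Maps messages received from an IoT device to virtual actions."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[], None]] = {}

    def register(self, message: str, handler: Callable[[], None]) -> None:
        self._handlers[message] = handler

    def on_message(self, message: str) -> bool:
        """Run the assigned function; return False for unknown messages."""
        handler = self._handlers.get(message)
        if handler is None:
            return False
        handler()
        return True


dispatcher = ButtonDispatcher()
dispatcher.register("BUTTON_PRESSED", lambda: print("virtual action executed"))
dispatcher.on_message("BUTTON_PRESSED")
```

Ignoring unknown messages rather than raising an error keeps the application responsive if a device sends something unexpected.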

4. Practical Use Case

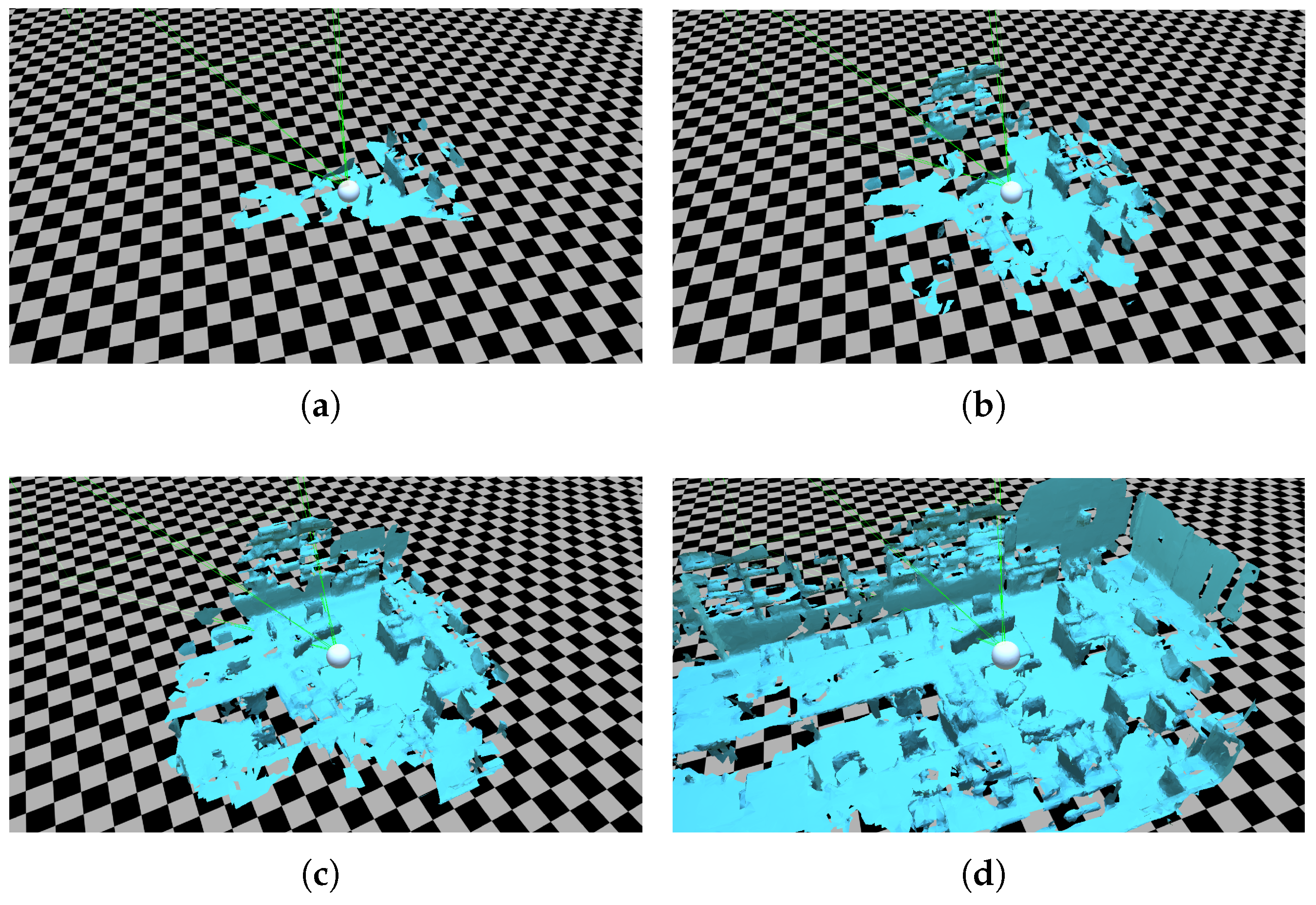

5. Experiments

1. Small anchor: while standing, the user slowly rotates the head and the Head-Mounted Display (HMD) 180° on the vertical and horizontal axes multiple times to capture a small, focused area.

2. Medium anchor: similar to the small anchor setup, but the user, while standing, performs full 360° head rotations with the HMD, capturing a slightly larger spatial environment.

3. Big anchor: the user stands on each corner of a 2 m by 2 m square and performs slow 360° rotations on the vertical and horizontal axes multiple times to scan a broader area.

4. Room-sized anchor: the user walks around the room, scanning walls and surrounding objects by rotating the head and HMD, generating a large and detailed spatial map.

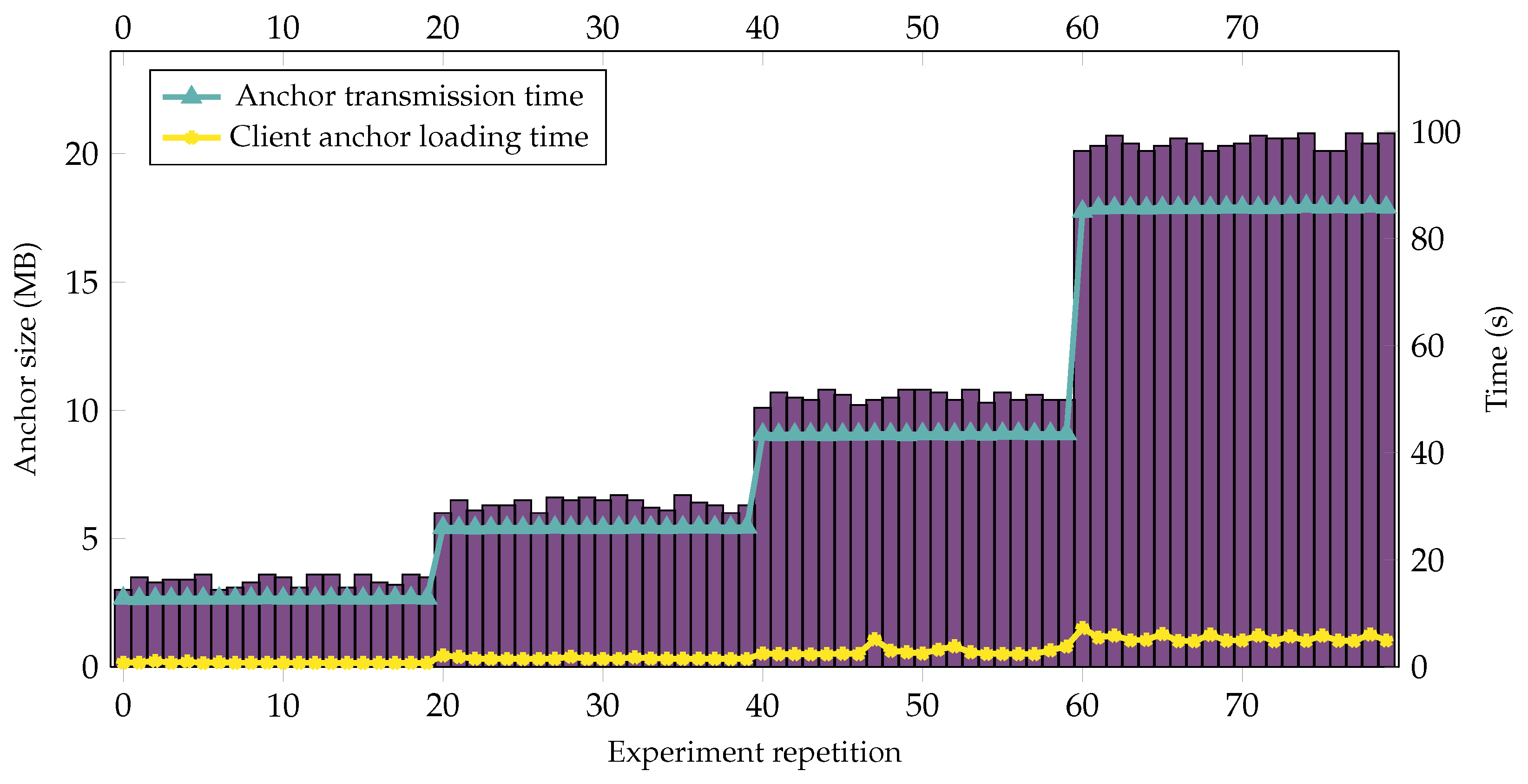

5.1. First Experiment: Local HoloLens–HoloLens Communication

- Microsoft HoloLens 2 (Qualcomm Snapdragon 850, IEEE 802.11ac 2×2 Wi-Fi, 4 GB of LPDDR DRAM).

- Router: TP-LINK Archer C5400 (IEEE 802.11ac), 2.4 GHz connection.

5.2. Second Experiment: Remote HoloLens–PC Communication

- Client-side:

  - Microsoft HoloLens 2 (Qualcomm Snapdragon 850, IEEE 802.11ac 2×2 Wi-Fi, 4 GB of LPDDR DRAM).

  - Router: TP-LINK Archer C5400 (IEEE 802.11ac), 2.4 GHz connection.

- Host-side:

  - Desktop computer: Windows 10 (Intel Core i7-960 3.20 GHz (4 cores) CPU, 12 GB of RAM and NVIDIA GeForce GTX 660 graphics card).

  - Router: ZTE H3600P (IEEE 802.11 a/x), Ethernet connection.

6. Key Findings

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Chengoden, R.; Victor, N.; Huynh-The, T.; Yenduri, G.; Jhaveri, R.H.; Alazab, M.; Bhattacharya, S.; Hegde, P.; Maddikunta, P.K.R.; Gadekallu, T.R. Metaverse for healthcare: A survey on potential applications, challenges and future directions. IEEE Access 2023, 11, 12765–12795.

2. Vidal-Balea, A.; Blanco-Novoa, Ó.; Fraga-Lamas, P.; Fernández-Caramés, T.M. Developing the Next Generation of Augmented Reality Games for Pediatric Healthcare: An Open-Source Collaborative Framework Based on ARCore for Implementing Teaching, Training and Monitoring Applications. Sensors 2021, 21, 1865.

3. Wedel, M.; Bigné, E.; Zhang, J. Virtual and augmented reality: Advancing research in consumer marketing. Int. J. Res. Mark. 2020, 37, 443–465.

4. Von Itzstein, G.S.; Billinghurst, M.; Smith, R.T.; Thomas, B.H. Augmented reality entertainment: Taking gaming out of the box. In Encyclopedia of Computer Graphics and Games; Springer: Berlin/Heidelberg, Germany, 2024; pp. 162–170.

5. Li, X.; Yi, W.; Chi, H.L.; Wang, X.; Chan, A.P. A critical review of virtual and augmented reality (VR/AR) applications in construction safety. Autom. Constr. 2018, 86, 150–162.

6. Yu, J.; Wang, T.; Shi, Y.; Yang, L. MR meets robotics: A review of mixed reality technology in robotics. In Proceedings of the 2022 6th International Conference on Robotics, Control and Automation (ICRCA), Xiamen, China, 26–28 February 2022; pp. 11–17.

7. Nguyen, H.; Bednarz, T. User experience in collaborative extended reality: Overview study. In Proceedings of the International Conference on Virtual Reality and Augmented Reality; Springer: Berlin/Heidelberg, Germany, 2020; pp. 41–70.

8. Doolani, S.; Wessels, C.; Kanal, V.; Sevastopoulos, C.; Jaiswal, A.; Nambiappan, H.; Makedon, F. A review of extended reality (XR) technologies for manufacturing training. Technologies 2020, 8, 77.

9. Speicher, M.; Hall, B.D.; Yu, A.; Zhang, B.; Zhang, H.; Nebeling, J.; Nebeling, M. XD-AR: Challenges and opportunities in cross-device augmented reality application development. Proc. ACM Hum.-Comput. Interact. 2018, 2, 1–24.

10. Tümler, J.; Toprak, A.; Yan, B. Multi-user Multi-platform xR collaboration: System and evaluation. In Proceedings of the International Conference on Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2022; pp. 74–93.

11. The Khronos Group Inc. OpenXR Overview. Available online: https://www.khronos.org/openxr/ (accessed on 17 June 2025).

12. Soon, T.J. QR code. Synth. J. 2008, 2008, 59–78.

13. Garrido-Jurado, S.; Muñoz-Salinas, R.; Madrid-Cuevas, F.J.; Marín-Jiménez, M.J. Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recognit. 2014, 47, 2280–2292.

14. Zhou, F.; Duh, H.B.L.; Billinghurst, M. Trends in Augmented Reality tracking, interaction and display: A review of ten years of ISMAR. In Proceedings of the 2008 7th IEEE/ACM International Symposium on Mixed and Augmented Reality, Cambridge, UK, 15–18 September 2008; pp. 193–202.

15. Microsoft. Spatial Anchors - Mixed Reality | Microsoft Learn. Available online: https://learn.microsoft.com/en-us/windows/mixed-reality/design/spatial-anchors (accessed on 17 June 2025).

16. Hübner, P.; Clintworth, K.; Liu, Q.; Weinmann, M.; Wursthorn, S. Evaluation of HoloLens tracking and depth sensing for indoor mapping applications. Sensors 2020, 20, 1021.

17. Vassallo, R.; Rankin, A.; Chen, E.C.; Peters, T.M. Hologram stability evaluation for Microsoft HoloLens. In Proceedings of the Medical Imaging 2017: Image Perception, Observer Performance, and Technology Assessment; SPIE: Orlando, FL, USA, 2017; Volume 10136, pp. 295–300.

18. Microsoft Azure. Azure Spatial Anchors Retirement. Available online: https://azure.microsoft.com/es-es/updates/azure-spatial-anchors-retirement/ (accessed on 17 June 2025).

19. Vidal-Balea, A.; Blanco-Novoa, O.; Fraga-Lamas, P.; Fernández-Caramés, T.M. A Multi-Platform Collaborative Architecture for Multi-User eXtended Reality Applications. In Proceedings of the 5th XoveTIC Conference, A Coruña, Spain, 5–6 October 2023; pp. 148–151.

20. Unity. Unity Real-Time Development Platform | 3D, 2D, VR & AR Engine. Available online: https://unity.com/ (accessed on 17 June 2025).

21. Mirror. Mirror Networking Documentation. Available online: https://mirror-networking.gitbook.io/docs (accessed on 17 June 2025).

22. Microsoft Learn. MRTK2-Unity Developer Documentation - MRTK 2. Available online: https://learn.microsoft.com/en-us/windows/mixed-reality/mrtk-unity/mrtk2/ (accessed on 17 June 2025).

23. Ghost Town Games. Overcooked | Cooking Video Game | Team17. Available online: https://www.team17.com/games/overcooked (accessed on 17 June 2025).

24. Hernández-Rojas, D.L.; Fernández-Caramés, T.M.; Fraga-Lamas, P.; Escudero, C.J. A Plug-and-Play Human-Centered Virtual TEDS Architecture for the Web of Things. Sensors 2018, 18, 2052.

25. ORBALLO Project. UNDERCOOKED - ORBALLO Extended Reality Framework. Available online: https://gitlab.com/Orballo-project/orballo-extended-reality (accessed on 17 June 2025).

26. Dong, T.; Churchill, E.F.; Nichols, J. Understanding the challenges of designing and developing multi-device experiences. In Proceedings of the 2016 ACM Conference on Designing Interactive Systems, Brisbane, Australia, 4–8 June 2016; pp. 62–72.

27. Akyildiz, I.F.; Guo, H. Wireless communication research challenges for extended reality (XR). ITU J. Future Evol. Technol. 2022, 3, 1–15.

28. Van Damme, S.; Sameri, J.; Schwarzmann, S.; Wei, Q.; Trivisonno, R.; De Turck, F.; Torres Vega, M. Impact of latency on QoE, performance, and collaboration in interactive Multi-User virtual reality. Appl. Sci. 2024, 14, 2290.

29. Ren, P.; Qiao, X.; Huang, Y.; Liu, L.; Pu, C.; Dustdar, S.; Chen, J.L. Edge AR X5: An edge-assisted multi-user collaborative framework for mobile web augmented reality in 5G and beyond. IEEE Trans. Cloud Comput. 2020, 10, 2521–2537.

30. Ayyanchira, A.; Mahfoud, E.; Wang, W.; Lu, A. Toward cross-platform immersive visualization for indoor navigation and collaboration with augmented reality. J. Vis. 2022, 25, 1249–1266.

31. Warin, C.; Seeger, D.; Shams, S.; Reinhardt, D. PrivXR: A Cross-Platform Privacy-Preserving API and Privacy Panel for Extended Reality. In Proceedings of the 2024 IEEE International Conference on Pervasive Computing and Communications Workshops and other Affiliated Events (PerCom Workshops), Biarritz, France, 11–15 March 2024; pp. 417–420.

32. Bao, L.; Tran, S.V.T.; Nguyen, T.L.; Pham, H.C.; Lee, D.; Park, C. Cross-platform virtual reality for real-time construction safety training using immersive web and industry foundation classes. Autom. Constr. 2022, 143, 104565.

33. Islam, A.; Masuduzzaman, M.; Akter, A.; Shin, S.Y. MR-Block: A blockchain-assisted secure content sharing scheme for multi-user mixed-reality applications in internet of military things. In Proceedings of the 2020 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 21–23 October 2020; pp. 407–411.

34. Hubs Foundation. Hubs Foundation - We’ll Take It from Here. Available online: https://hubsfoundation.org/ (accessed on 17 June 2025).

35. VRChat Inc. VRChat. Available online: https://hello.vrchat.com/ (accessed on 17 June 2025).

36. Mozilla. End of Support for Mozilla Hubs | Hubs Help. Available online: https://support.mozilla.org/en-US/kb/end-support-mozilla-hubs (accessed on 17 June 2025).

37. Unity-Technologies. NetworkTransmitter.cs. Available online: https://github.com/Unity-Technologies/SharedSpheres/blob/f054cdd832b1f0d575cab5469a7dd6454fd4dcc2/Assets/CaptainsMess/Example/NetworkTransmitter.cs (accessed on 17 June 2025).

38. Espressif Systems. ESP32 Wi-Fi & Bluetooth SoC. Available online: https://www.espressif.com/en/products/socs/esp32 (accessed on 17 June 2025).

39. Unity Technologies. AR Foundation | AR Foundation. Available online: https://docs.unity3d.com/Packages/com.unity.xr.arfoundation@5.0/manual/index.html (accessed on 17 June 2025).

40. Vidal-Balea, A.; Fraga-Lamas, P.; Fernández-Caramés, T.M. Advancing NASA-TLX: Automatic User Interaction Analysis for Workload Evaluation in XR Scenarios. In Proceedings of the 2024 IEEE Gaming, Entertainment, and Media Conference (GEM), Turin, Italy, 5–7 June 2024; pp. 1–6.

| Experiment | Anchor Size (MB) | Server Exporting Time (s) | Anchor Transmission Time (s) | Client Loading Time (s) |
|---|---|---|---|---|
| 1: Small anchors | 3.40 (±0.21) | 0.4479 (±0.27) | 12.8068 (±0.04) | 0.8019 (±0.10) |
| 2: Medium anchors | 6.40 (±0.22) | 0.3765 (±0.20) | 26.0065 (±0.05) | 1.5944 (±0.18) |
| 3: Big anchors | 10.5 (±0.20) | 0.9910 (±0.53) | 43.4579 (±0.07) | 2.7862 (±0.70) |
| 4: Room-sized anchors | 20.4 (±0.25) | 1.2923 (±0.31) | 85.7326 (±0.19) | 5.3937 (±0.64) |
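As a reading aid for the table that includes the server exporting time, the mean anchor sizes and transmission times can be combined into an effective throughput (size divided by transmission time). The short Python calculation below uses only the mean values reported in that table; the variable names are illustrative, and the result is a derived figure rather than a measurement reported by the authors.

```python
# Mean anchor size (MB) and mean transmission time (s) per experiment,
# copied from the table with the server exporting time column.
rows = {
    "Small": (3.40, 12.8068),
    "Medium": (6.40, 26.0065),
    "Big": (10.5, 43.4579),
    "Room-sized": (20.4, 85.7326),
}

# Effective throughput in MB/s: size divided by transmission time.
throughput_mb_per_s = {
    name: size_mb / seconds for name, (size_mb, seconds) in rows.items()
}

for name, value in throughput_mb_per_s.items():
    print(f"{name}: {value:.3f} MB/s")
```

The four values fall in a narrow band of roughly 0.24 to 0.27 MB/s, which is consistent with the transmission time growing approximately linearly with the anchor size.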

| Experiment | Anchor Size (MB) | Transmission Time (s) | Client Loading Time (s) |
|---|---|---|---|
| 1: Small anchors | 3.00 | 12.969 (±0.55) | 0.890 (±0.22) |
| 2: Medium anchors | 6.00 | 25.682 (±0.03) | 1.472 (±0.18) |
| 3: Big anchors | 10.10 | 43.477 (±0.09) | 2.639 (±0.38) |
| 4: Room-sized anchors | 20.10 | 85.845 (±0.38) | 5.265 (±0.58) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vidal-Balea, A.; Blanco-Novoa, O.; Fraga-Lamas, P.; Fernández-Caramés, T.M. Off-Cloud Anchor Sharing Framework for Multi-User and Multi-Platform Mixed Reality Applications. Appl. Sci. 2025, 15, 6959. https://doi.org/10.3390/app15136959
