Corner Enhancement Module Based on Deformable Convolutional Networks and Parallel Ensemble Processing Methods for Distorted License Plate Recognition in Real Environments

Abstract

1. Introduction

2. Related Work

2.1. Deep Learning Based Real-Time License Plate Detection

2.2. License Plate Recognition in Real Road Environments

2.3. Test-Time Augmentation

3. Proposed Method

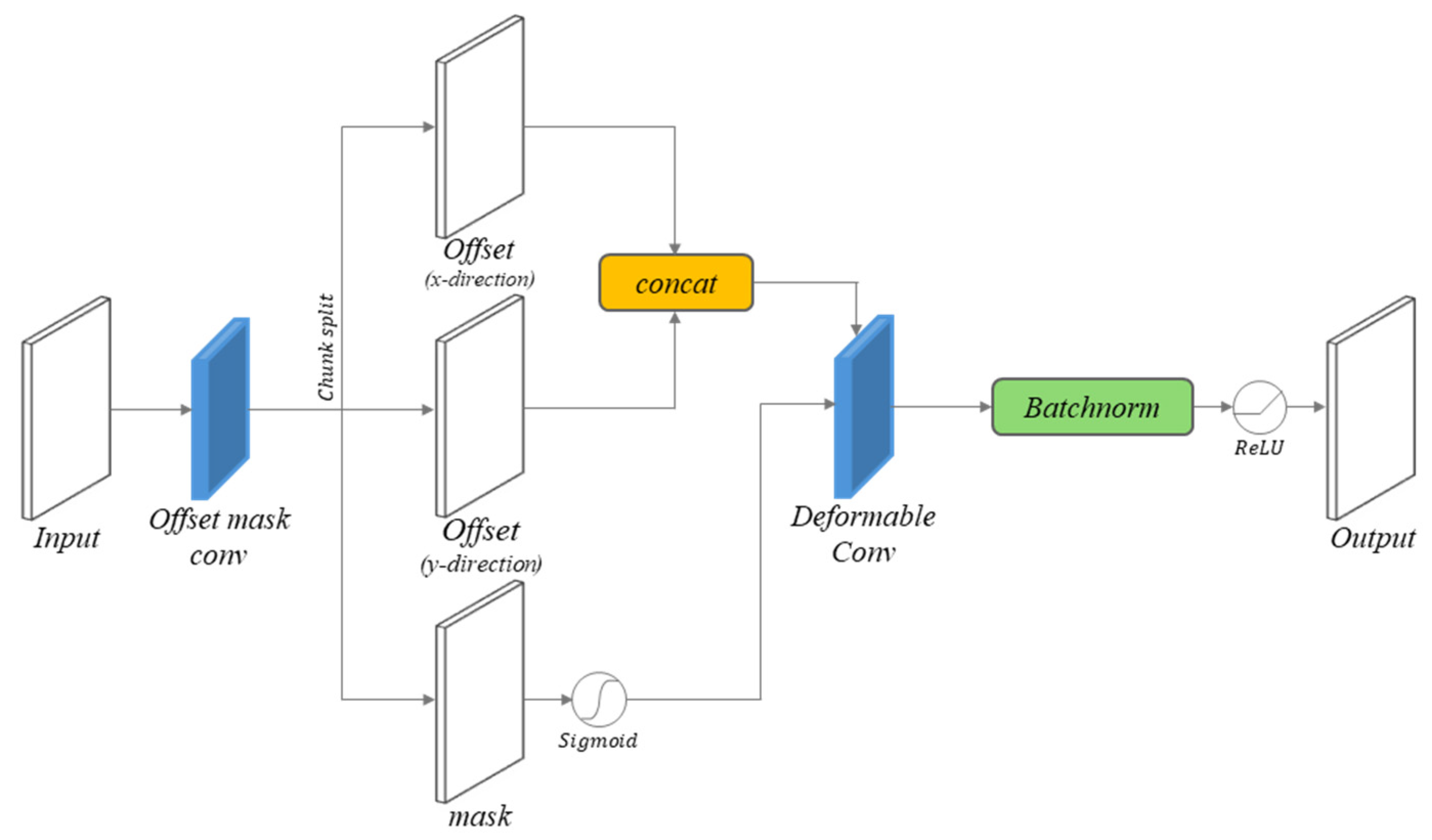

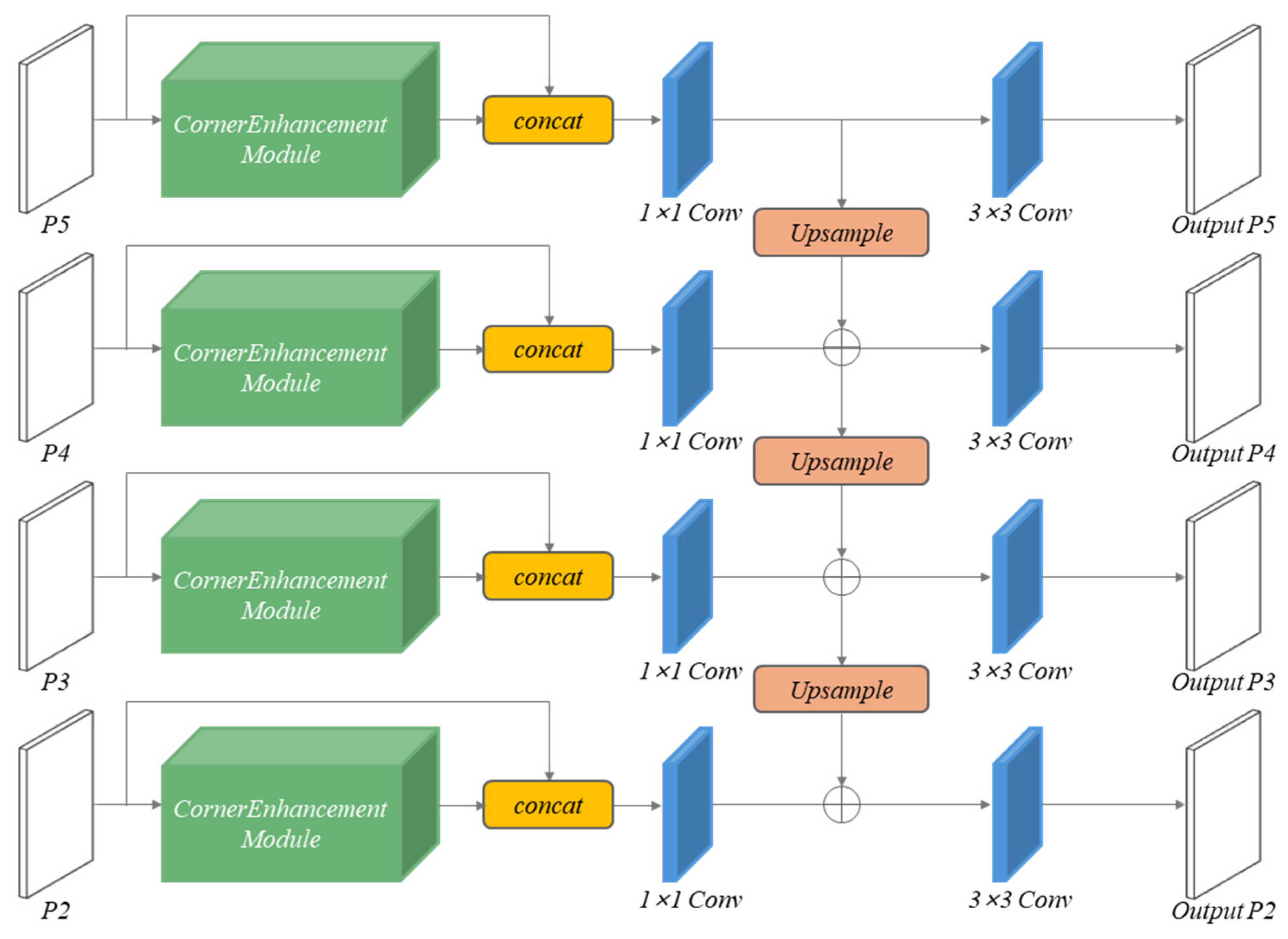

3.1. Corner Enhancement Module

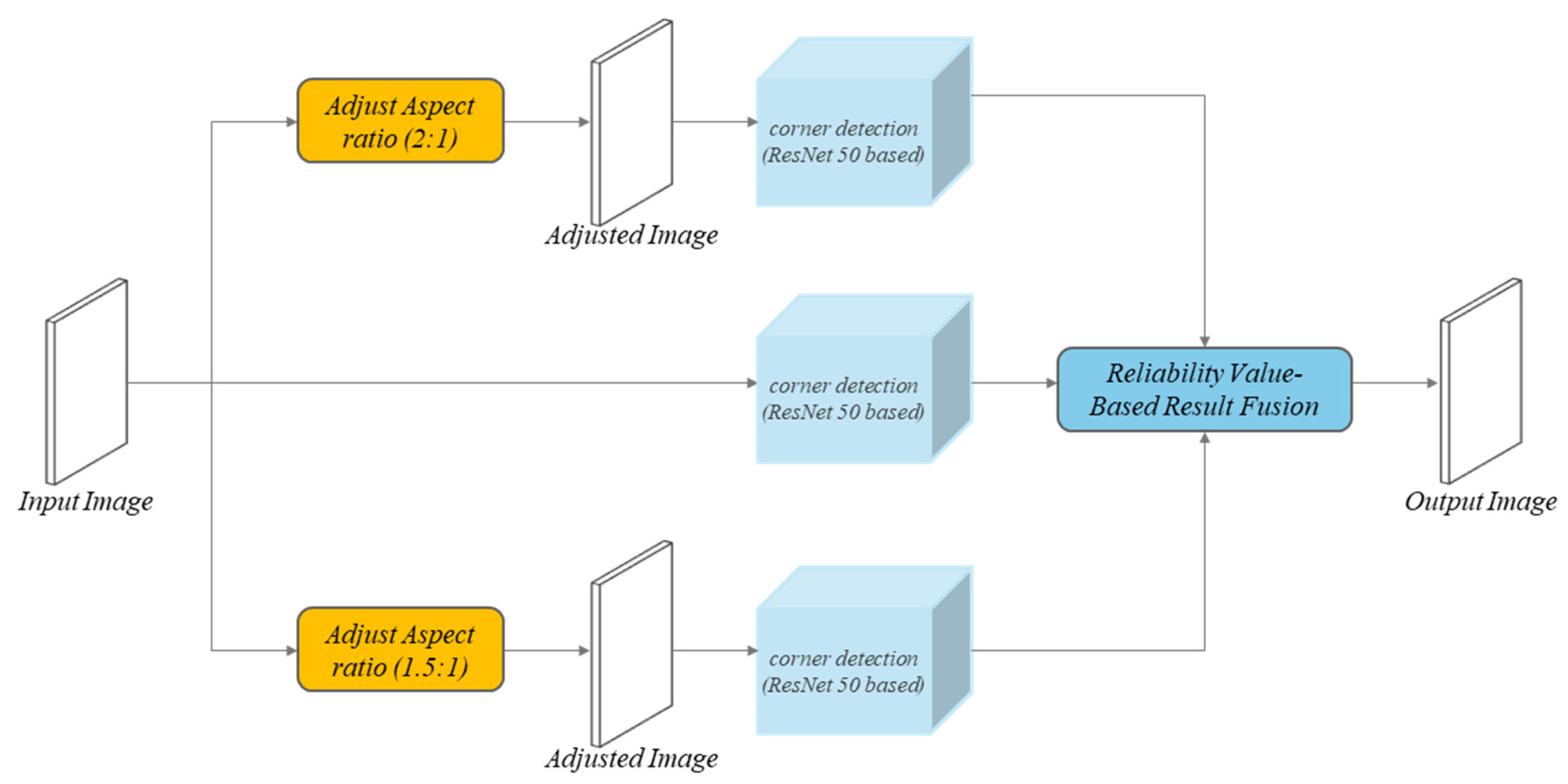

3.2. Test-Time Augmentation-Based Parallel Ensemble Processing Technique

3.3. Homography and Image Correction

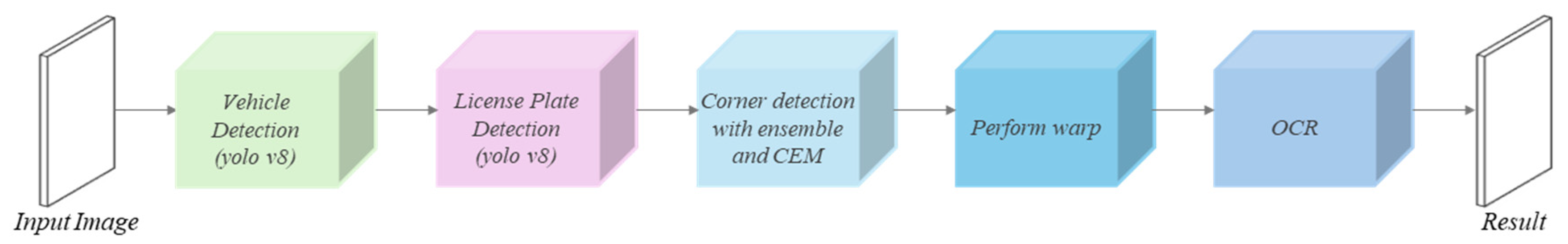
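As a minimal sketch of the idea named in this section's heading, the following pure-Python example estimates a planar homography from four plate corners and maps them onto an upright rectangle. It is an illustration under stated assumptions and not the authors' implementation: the corner coordinates, the 200 × 50 output size, and the helper names `solve_linear`, `homography_from_corners`, and `apply_homography` are all hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix [a | b] as floats.
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography_from_corners(src: Sequence[Point], dst: Sequence[Point]) -> List[List[float]]:
    """Return the 3x3 matrix H (with H[2][2] = 1) that maps each src point to dst."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), and likewise for v.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h: Sequence[Sequence[float]], p: Point) -> Point:
    """Map a point through H using homogeneous coordinates."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (
        (h[0][0] * x + h[0][1] * y + h[0][2]) / w,
        (h[1][0] * x + h[1][1] * y + h[1][2]) / w,
    )


# Hypothetical detected corners (top-left, top-right, bottom-right, bottom-left).
SRC: List[Point] = [(120.0, 80.0), (310.0, 95.0), (300.0, 160.0), (115.0, 140.0)]
# Hypothetical target rectangle for the rectified plate (200 x 50 pixels).
DST: List[Point] = [(0.0, 0.0), (200.0, 0.0), (200.0, 50.0), (0.0, 50.0)]

H = homography_from_corners(SRC, DST)
```

In practice the same matrix would typically be handed to an image-warping routine to resample the plate region; that step is omitted here to keep the sketch dependency-free.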

3.4. Overall Integrated System

4. Results

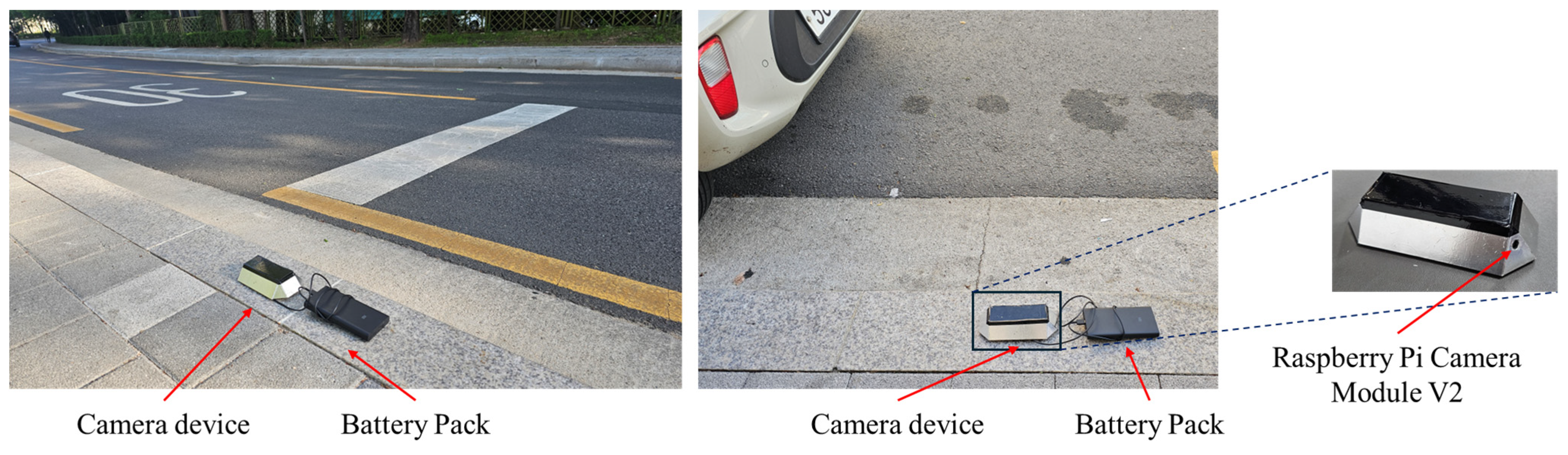

4.1. Experimental Environment and Dataset

4.2. Evaluation Metrics and Methodology

4.3. Experimental Results and Analysis

4.3.1. Vehicle and License Plate Detection Performance

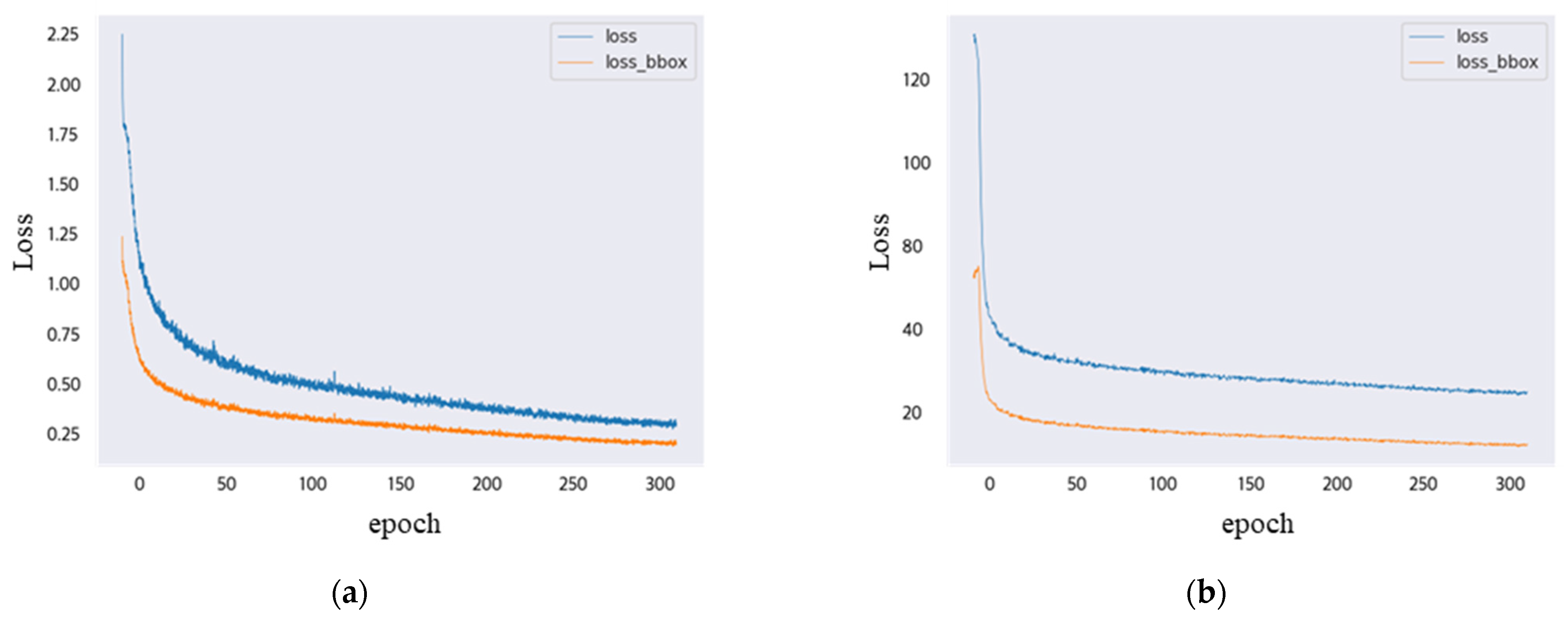

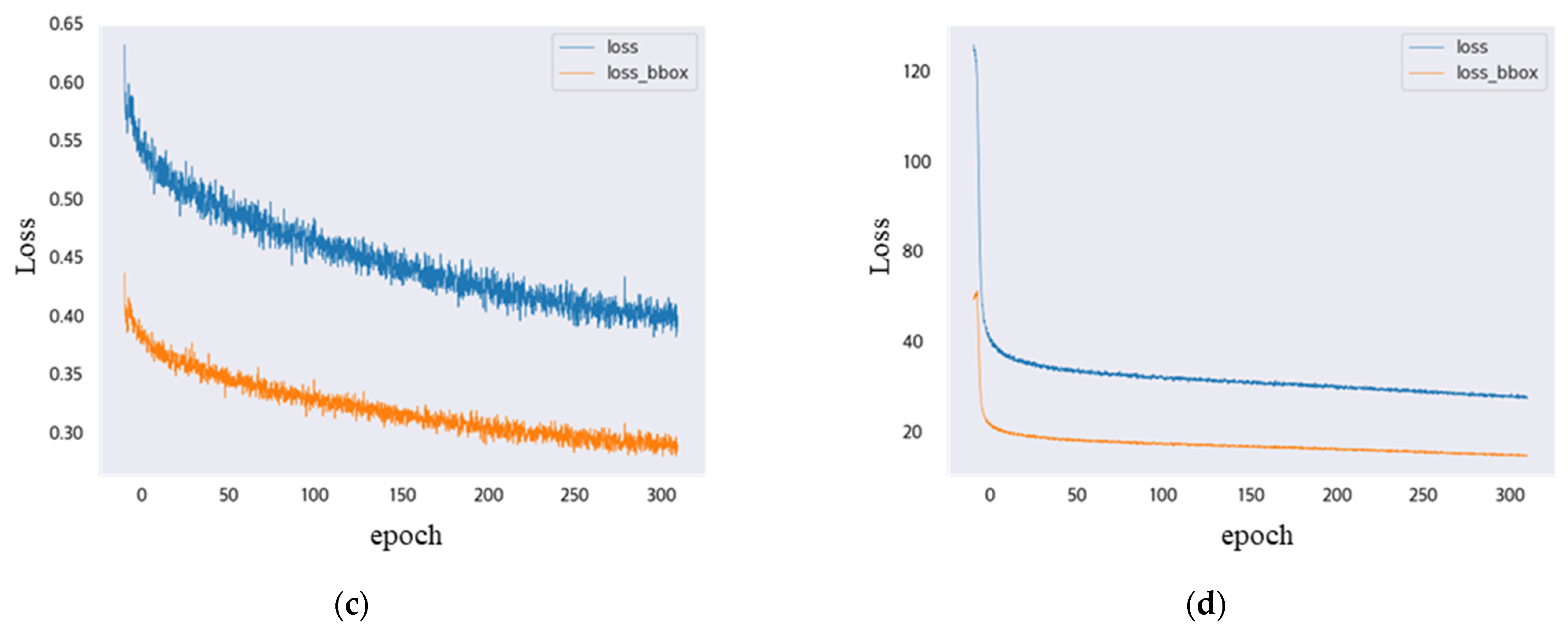

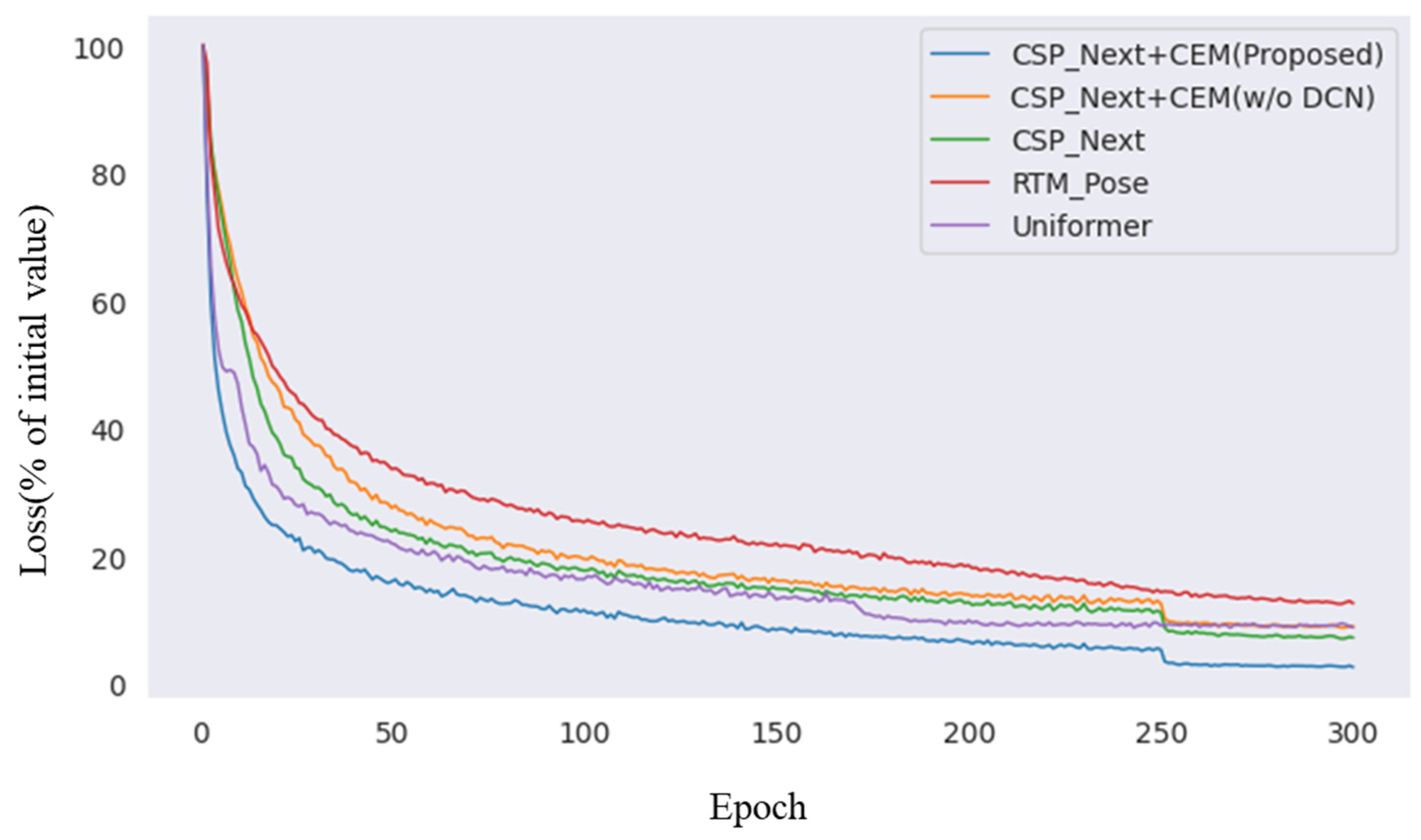

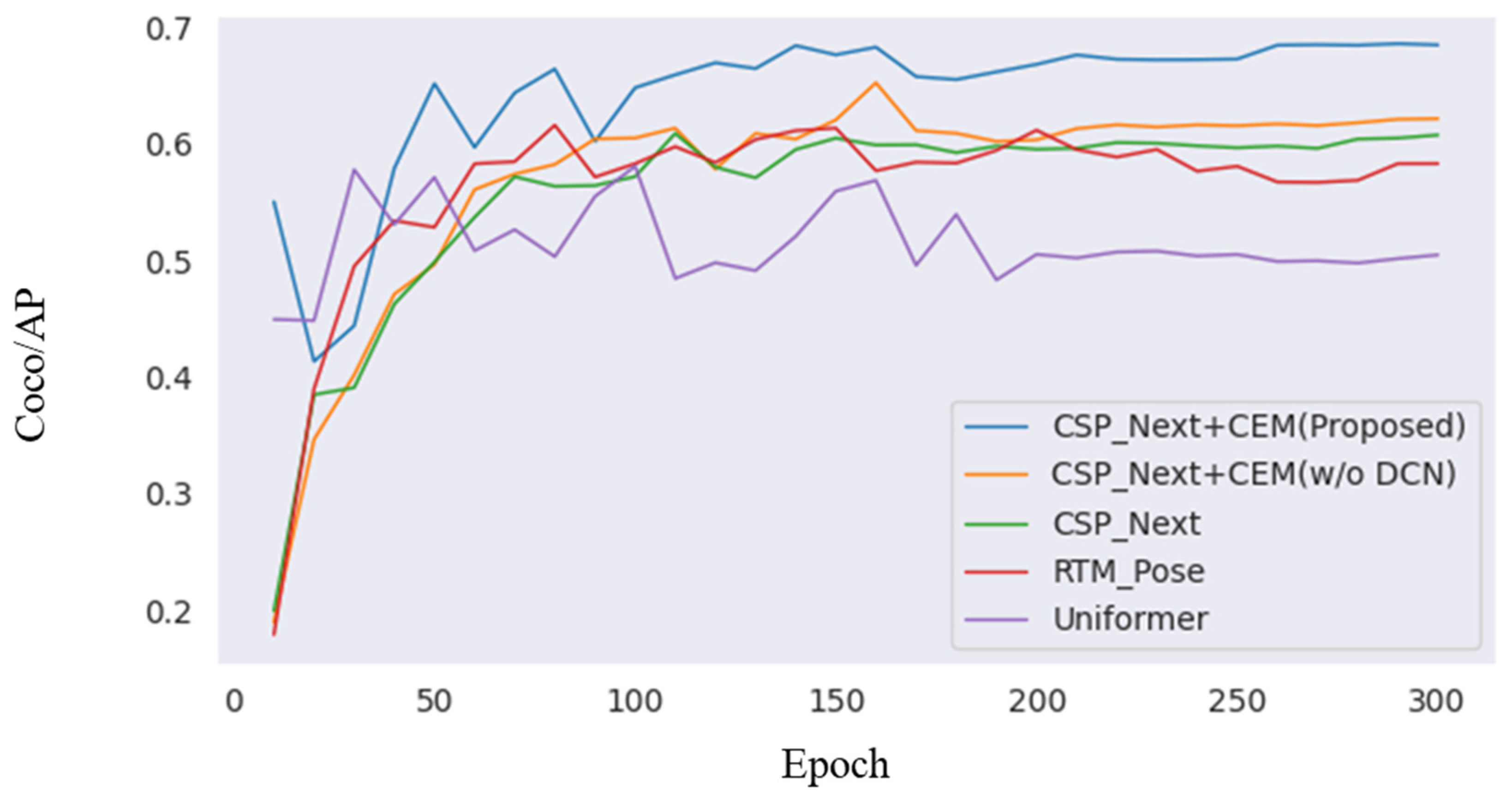

4.3.2. License Plate Corner Detection Performance

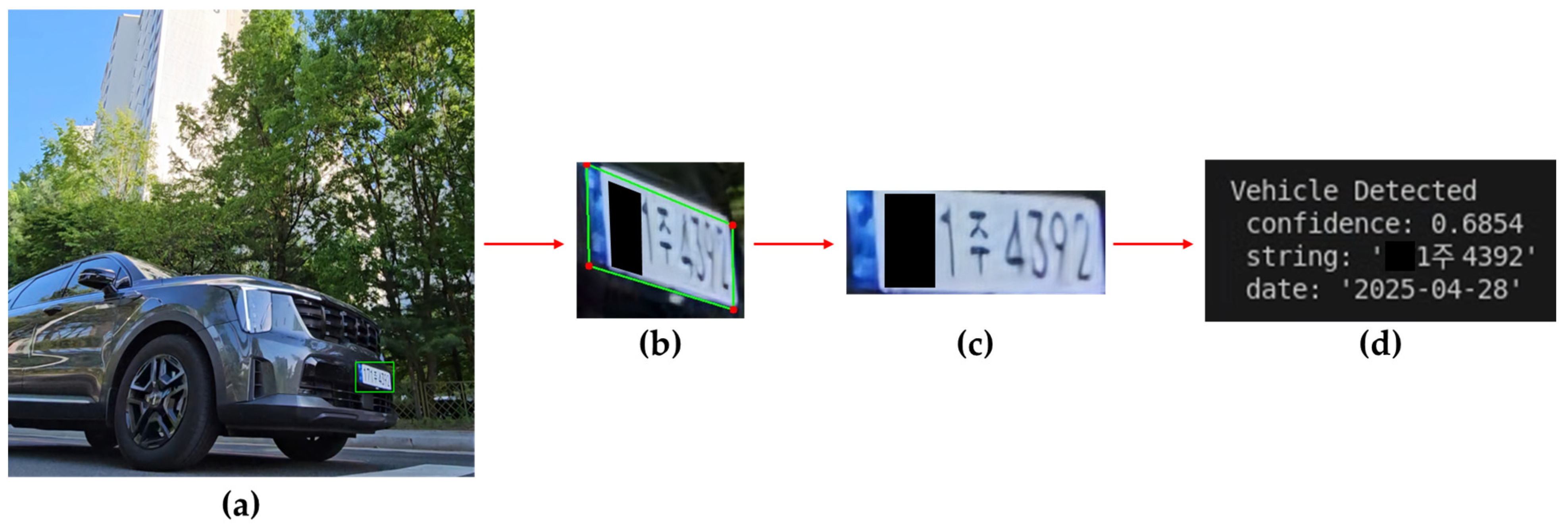

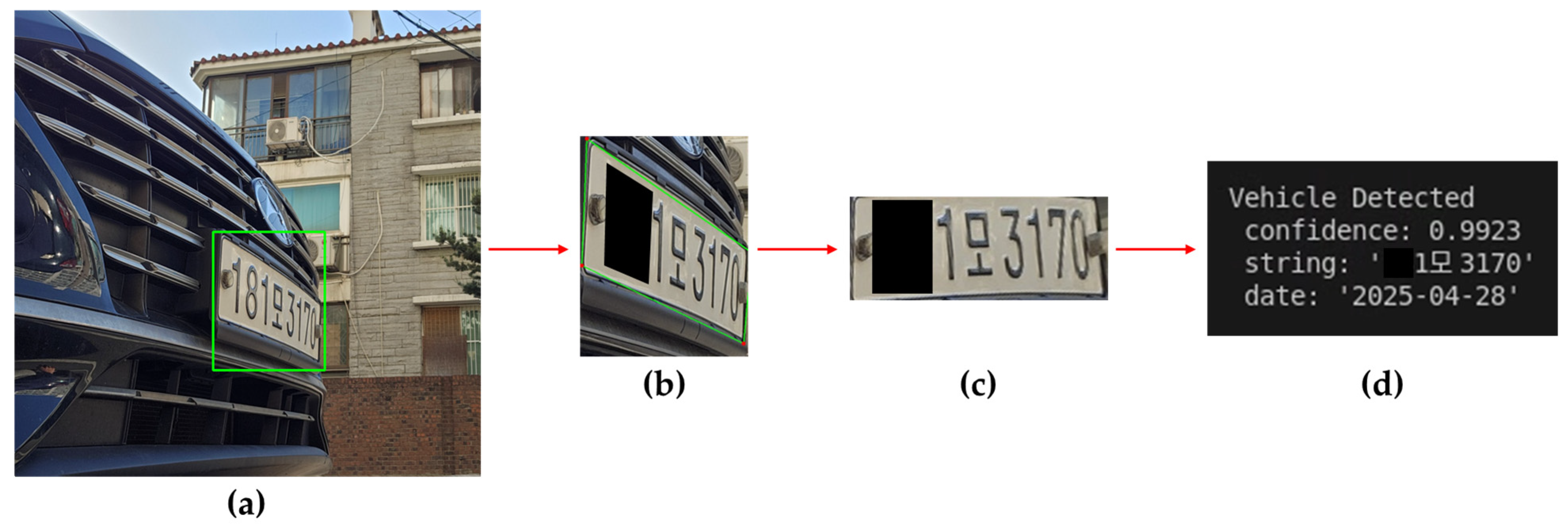

4.3.3. Validation in Real Road Environments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Corner Detection Dataset | License Plate Detection Dataset | Vehicle Detection Dataset |
|---|---|---|---|
| Number of Images | 3619 | 16,188 | 24,914 |
| Training Set | 3319 | 16,060 | 23,575 |
| Validation Set | 301 | 128 | 1339 |
| Resolution Range (pixels) | 302 × 192 to 600 × 450 | 640 × 640 | 640 × 640 |

| Model | Object | mAP | mAP_50 | mAP_75 | mAP_s | mAP_m | mAP_l | Latency (ms) |
|---|---|---|---|---|---|---|---|---|
| YOLOv7 | Vehicle | 0.661 | 0.871 | 0.769 | 0.361 | 0.716 | 0.749 | 0.0168 |
| YOLOv8 | Vehicle | 0.689 | 0.890 | 0.807 | 0.474 | 0.704 | 0.725 | 0.0146 |

| Model | Object | mAP | mAP_50 | mAP_75 | mAP_s | mAP_m | mAP_l | Latency (ms) |
|---|---|---|---|---|---|---|---|---|
| YOLOv7 | License Plate | 0.692 | 0.874 | 0.790 | 0.161 | 0.609 | 0.807 | 0.0156 |
| YOLOv8 | License Plate | 0.704 | 0.886 | 0.791 | 0.373 | 0.631 | 0.797 | 0.0152 |

| Model | AP | AP.5 | AP.75 | AR | Latency (ms) |
|---|---|---|---|---|---|
| CSPNeXt + CEM + Ensemble (Proposed) | 0.721 | 0.789 | 0.737 | 0.778 | 32.6 |
| CSPNeXt + CEM (Proposed) | 0.685 | 0.750 | 0.700 | 0.739 | 21.3 |
| CSPNeXt + Ensemble | 0.692 | 0.759 | 0.708 | 0.745 | 29.8 |
| CSPNeXt + CEM (w/o DCN) | 0.652 | 0.735 | 0.686 | 0.729 | 19.8 |
| CSPNeXt | 0.608 | 0.769 | 0.680 | 0.812 | 17.0 |
| RTMPose | 0.615 | 0.731 | 0.636 | 0.729 | 21.0 |
| UniFormer | 0.608 | 0.713 | 0.710 | 0.658 | 18.8 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, S.; Cho, S.; Kim, J.; Son, K. Corner Enhancement Module Based on Deformable Convolutional Networks and Parallel Ensemble Processing Methods for Distorted License Plate Recognition in Real Environments. Appl. Sci. 2025, 15, 6550. https://doi.org/10.3390/app15126550