Time Series Anomaly Detection Using Signal Processing and Deep Learning

Abstract

1. Introduction

2. Literature Review

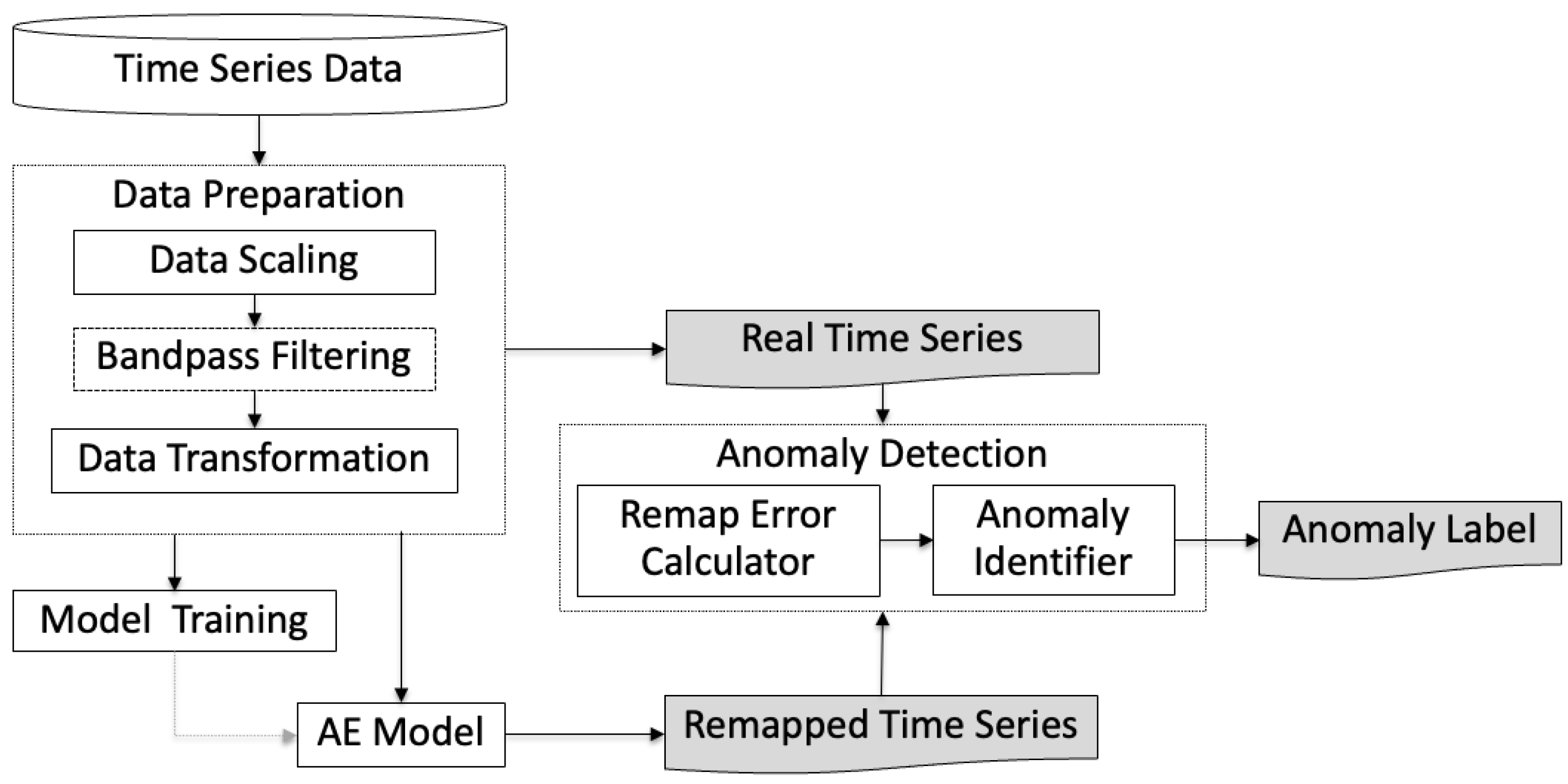

3. Methodology

3.1. Data Preparation

- Low cutoff frequency: removes components with frequencies below it (e.g., trends or baseline drift).

- High cutoff frequency: removes components with frequencies above it (e.g., random noise).
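The effect of the two cutoffs can be illustrated with a minimal sketch. It uses an ideal FFT mask rather than a designed filter (such as a Butterworth filter), so it is not necessarily the filter used in this work. The function name `fft_bandpass`, the sampling rate, and the cutoff values are assumptions chosen for illustration only.

```python
import numpy as np

def fft_bandpass(x, fs, low_hz, high_hz):
    """Keep only spectral components inside [low_hz, high_hz] (ideal FFT mask)."""
    x = np.asarray(x, dtype=float)
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    keep = (freqs >= low_hz) & (freqs <= high_hz)
    return np.fft.irfft(spectrum * keep, n=x.size)

# Synthetic example (all values hypothetical): slow drift + band of interest + fast component
fs = 100.0                                       # sampling rate in Hz
t = np.arange(2000) / fs                         # 20 seconds of samples
drift = 2.0 * np.sin(2 * np.pi * 0.05 * t)       # below the low cutoff
signal = np.sin(2 * np.pi * 5.0 * t)             # inside the passband
fast = 0.5 * np.sin(2 * np.pi * 40.0 * t)        # above the high cutoff

filtered = fft_bandpass(drift + signal + fast, fs, low_hz=1.0, high_hz=10.0)
max_error = np.max(np.abs(filtered - signal))    # small: drift and fast component are removed
```

In this toy case every component falls on an exact FFT bin, so the mask separates them cleanly. On real sensor data a designed filter with a gradual roll-off usually behaves better near the cutoffs.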

3.2. Model Training

- Encoder: Compresses the R-dimensional input time series $X(t) \in \mathbb{R}^{R}$, where $t \in \mathcal{T}$ and $\mathcal{T}$ is a compact time interval, into a latent, lower-dimensional representation $Z$ in the subsequent layers. This process aims to retain the most relevant features of the data.

- Decoder: Reconstructs the original input as $\hat{X}$ from the latent representation $Z$.

3.2.1. MLP

- Encoder: The input x, a vector formed by concatenating all time points and features, passes through multiple fully connected layers of conventional neurons. The latent representation z is given as follows: $z = \sigma(W h + b)$, where b and W are the bias and weight parameters, h is the output of the previous layer, and $\sigma$ is the activation function.

- Decoder: The decoder mirrors the encoder with fully connected layers, reconstructing the input from z: $\hat{x} = \sigma\left(W^{(l)} h^{(l-1)} + b^{(l)}\right)$, where $h^{(l-1)}$ is the output of the last layer before the output layer and l denotes the total number of layers in the network.
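The encoder and decoder passes described above can be sketched as an untrained forward pass in NumPy. The layer widths, the `tanh` activation on every layer, the random initialization, and the helper names `init_layer` and `dense_stack` are assumptions made for illustration; they are not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_layer(n_in, n_out):
    """Random weight matrix W and zero bias b for one fully connected layer."""
    return rng.normal(0.0, 0.1, size=(n_out, n_in)), np.zeros(n_out)

def dense_stack(h, layers, activation=np.tanh):
    """Apply h <- activation(W h + b) for each (W, b) pair in order."""
    for W, b in layers:
        h = activation(W @ h + b)
    return h

S, R, latent_dim = 10, 3, 4            # hypothetical window length, features, latent size
window = rng.normal(size=(S, R))       # one multivariate window
x = window.reshape(-1)                 # stitch time points and features into one vector

encoder = [init_layer(S * R, 16), init_layer(16, latent_dim)]
decoder = [init_layer(latent_dim, 16), init_layer(16, S * R)]   # mirrors the encoder

z = dense_stack(x, encoder)            # latent representation
x_hat = dense_stack(z, decoder)        # reconstruction of the input vector
reconstruction_mae = np.mean(np.abs(x - x_hat))
```

With trained weights, a large `reconstruction_mae` on a window would suggest that the window deviates from the patterns seen during training.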

3.2.2. LSTM

- Encoder: The input data $X = (x_1, x_2, \ldots, x_S)$, where S is the sequence length and $x_t \in \mathbb{R}^{R}$ (t = 1, 2, …, S), are passed through the LSTM layers. The LSTM processes the sequence step by step, updating its hidden states $h_t$ and cell states $c_t$: $(h_t, c_t) = \mathrm{LSTM}(x_t, h_{t-1}, c_{t-1})$. The final hidden and cell states $(h_S, c_S)$ serve as the latent representation Z, which summarizes the entire multivariate input sequence in two compact representations, each with dimensions (num_layers, hidden_size).

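- Decoder: The hidden and cell states of the latent representation Z are used to initialize the decoder LSTM layers with $\hat{h}_0 = h_S$ and $\hat{c}_0 = c_S$, together with a zero input tensor. The decoder then generates a reconstructed sequence of hidden states using $(\hat{h}_t, \hat{c}_t) = \mathrm{LSTM}(0, \hat{h}_{t-1}, \hat{c}_{t-1})$ for t = 1, 2, …, S.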
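The encode-then-decode flow above can be sketched with a hand-written single-layer LSTM cell in NumPy, which corresponds to num_layers = 1. The gate ordering, the random untrained weights, the sizes, and the linear read-out `W_out` that maps decoder hidden states back to the input dimension are all assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def init_lstm(input_size, hidden_size):
    """Stacked weights for the input, forget, candidate, and output gates."""
    return {
        "W": rng.normal(0.0, 0.1, size=(4 * hidden_size, input_size)),
        "U": rng.normal(0.0, 0.1, size=(4 * hidden_size, hidden_size)),
        "b": np.zeros(4 * hidden_size),
    }

def lstm_step(p, x_t, h_prev, c_prev):
    """One LSTM update: (x_t, h_{t-1}, c_{t-1}) -> (h_t, c_t)."""
    gates = p["W"] @ x_t + p["U"] @ h_prev + p["b"]
    i, f, g, o = np.split(gates, 4)
    c_t = sigmoid(f) * c_prev + sigmoid(i) * np.tanh(g)
    h_t = sigmoid(o) * np.tanh(c_t)
    return h_t, c_t

S, R, hidden_size = 12, 3, 8                 # hypothetical sizes
X = rng.normal(size=(S, R))                  # one input sequence
enc, dec = init_lstm(R, hidden_size), init_lstm(R, hidden_size)
W_out = rng.normal(0.0, 0.1, size=(R, hidden_size))  # hypothetical read-out layer

# Encoder: step through the sequence; the final states form the latent Z.
h, c = np.zeros(hidden_size), np.zeros(hidden_size)
for x_t in X:
    h, c = lstm_step(enc, x_t, h, c)
Z = (h, c)

# Decoder: initialize with Z and feed a zero input at every step.
h_d, c_d = Z
decoded = []
for _ in range(S):
    h_d, c_d = lstm_step(dec, np.zeros(R), h_d, c_d)
    decoded.append(h_d)
H_hat = np.stack(decoded)                    # reconstructed hidden states, shape (S, hidden_size)
X_hat = H_hat @ W_out.T                      # mapped back to input space, shape (S, R)
```

Because the decoder input is all zeros, everything the decoder knows about the sequence has to pass through Z, which is what makes Z a bottleneck.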

3.2.3. FNN

- Encoder: The input layer, which consists of the time series data, is transformed into a latent representation at an intermediate continuous hidden layer. This constitutes the encoder part of the FNN autoencoder architecture, referred to as the functional encoder. The continuous hidden layer holds the reduced-dimensional representation of the input, where the input function is observed at a finite number of time points.

- Decoder: From this latent representation, the network proceeds to the output layer, reconstructing the functional input values through continuous neurons in the subsequent hidden layers. This constitutes the decoder part of the network, referred to as the functional decoder. The output layer yields the reconstructed values of the input.
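The idea of a continuous neuron can be illustrated with a small numerical sketch. It assumes the common functional-network form h(s) = σ(b(s) + ∫ w(s, t) x(t) dt), with the integral approximated by the trapezoidal rule on the observation grid. This form, the weight and bias functions, the grids, and the helper names are assumptions for illustration and are not the equations of this paper.

```python
import numpy as np

def trapezoid_weights(t):
    """Quadrature weights q so that sum(q * f(t)) approximates the integral of f over t."""
    q = np.zeros_like(t)
    dt = np.diff(t)
    q[:-1] += dt / 2.0
    q[1:] += dt / 2.0
    return q

def continuous_layer(x_vals, t_in, s_out, weight_fn, bias_fn, activation=np.tanh):
    """Evaluate h(s) = activation(b(s) + integral of w(s, t) x(t) dt) on the grid s_out."""
    q = trapezoid_weights(t_in)
    W = weight_fn(s_out[:, None], t_in[None, :])      # shape (len(s_out), len(t_in))
    return activation(bias_fn(s_out) + W @ (q * x_vals))

t = np.linspace(0.0, 1.0, 101)        # time points at which the input function is observed
x = np.sin(2 * np.pi * t)             # one observed input curve
s = np.linspace(0.0, 1.0, 5)          # coarser grid for the latent function (assumption)

# Functional encoder: hypothetical smooth weight and bias functions.
latent = continuous_layer(x, t, s,
                          weight_fn=lambda a, b: np.cos(np.pi * (a - b)),
                          bias_fn=lambda a: 0.1 * a)

# Functional decoder: maps the latent function back onto the observation grid.
recon = continuous_layer(latent, s, t,
                         weight_fn=lambda a, b: np.sin(np.pi * (a + b)),
                         bias_fn=np.zeros_like)
```

In a trained network the weight and bias functions would be learned, for example through basis expansions, rather than fixed by hand as they are here.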

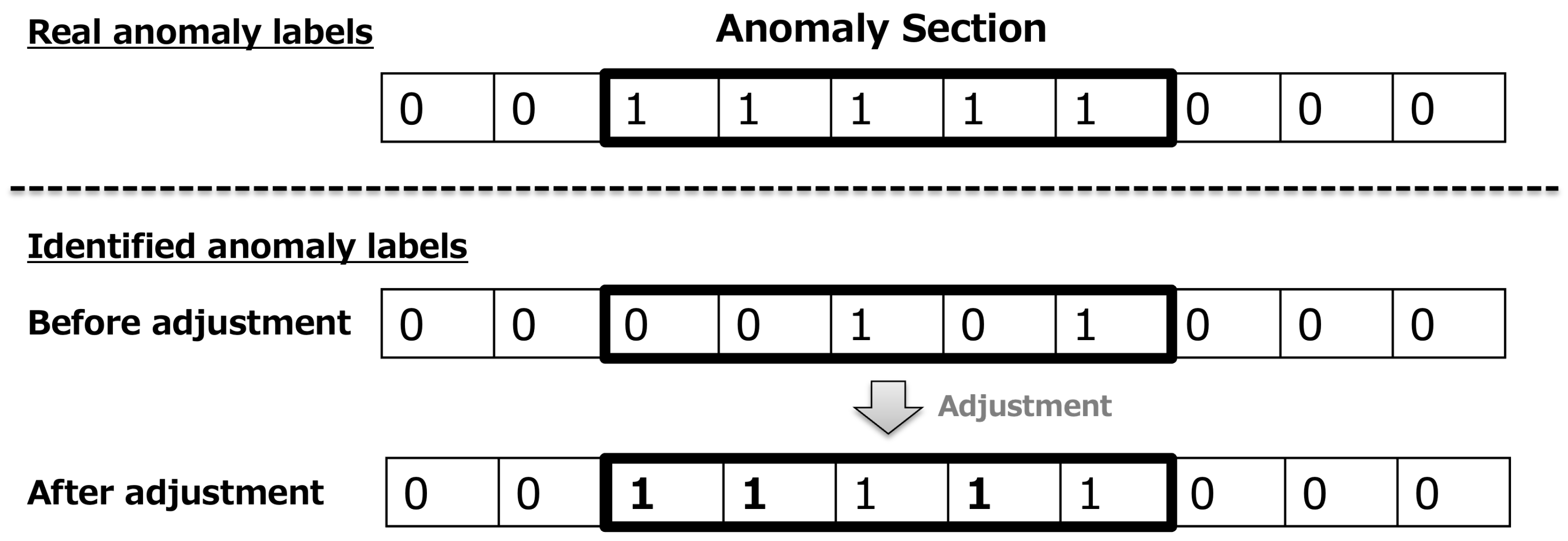

3.3. Anomaly Detection

4. Experiment and Results

4.1. Real-World Datasets

4.2. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DNN | Deep Neural Network |
| FDA | Functional Data Analysis |
| FFT | Fast Fourier Transform |
| STFT | Short-Time Fourier Transform |
| AE | Autoencoder |
| BP | Bandpass |
| MLP | Multi-Layer Perceptron |
| FNN | Functional Neural Network |
| MAE | Mean Absolute Error |
| TS | Time Series |
| CNN | Convolutional Neural Network |
| BFAE | Bi-Functional Autoencoder |
| LSTM | Long Short-Term Memory |
| RNN | Recurrent Neural Network |

References

1. Darban, Z.Z.; Webb, G.I.; Pan, S.; Aggarwal, C.C.; Salehi, M. Deep Learning for Time Series Anomaly Detection: A Survey. arXiv 2022, arXiv:2211.05244.
2. Kim, S.M.; Kim, Y.S. Enhancing Sound-Based Anomaly Detection Using Deep Denoising Autoencoder. IEEE Access 2024, 12, 84323–84332.
3. Wang, C.; Wang, B.; Liu, H.; Qu, H. Anomaly Detection for Industrial Control System Based on Autoencoder Neural Network. Wirel. Commun. Mob. Comput. 2020, 2020, 8897926:1–8897926:10.
4. Lee, X.Y.; Kumar, A.; Vidyaratne, L.; Rao, A.R.; Farahat, A.; Gupta, C. An ensemble of convolution-based methods for fault detection using vibration signals. In Proceedings of the 2023 IEEE International Conference on Prognostics and Health Management (ICPHM), Montreal, QC, Canada, 5–7 June 2023; pp. 172–179.
5. Roy, S.S.; Chatterjee, S.; Roy, S.; Bamane, P.; Paramane, A.; Rao, U.M.; Nazir, M.T. Accurate Detection of Bearing Faults Using Difference Visibility Graph and Bi-Directional Long Short-Term Memory Network Classifier. IEEE Trans. Ind. Appl. 2022, 58, 4542–4551.
6. Abdallah, M.; Joung, B.G.; Lee, W.J.; Mousoulis, C.; Sutherland, J.W.; Bagchi, S. Anomaly Detection and Inter-Sensor Transfer Learning on Smart Manufacturing Datasets. Sensors 2022, 23, 486.
7. Kozitsin, V.O.; Katser, I.D.; Lakontsev, D. Online Forecasting and Anomaly Detection Based on the ARIMA Model. Appl. Sci. 2021, 11, 3194.
8. Yang, Y.; Zhang, C.; Zhou, T.; Wen, Q.; Sun, L. DCdetector: Dual Attention Contrastive Representation Learning for Time Series Anomaly Detection. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Long Beach, CA, USA, 6–10 August 2023.
9. Xu, J.; Wu, H.; Wang, J.; Long, M. Anomaly Transformer: Time Series Anomaly Detection with Association Discrepancy. arXiv 2021, arXiv:2110.02642.
10. Wagner, D.; Michels, T.; Schulz, F.C.F.; Nair, A.; Rudolph, M.R.; Kloft, M. TimeSeAD: Benchmarking Deep Multivariate Time-Series Anomaly Detection. Trans. Mach. Learn. Res. 2023, 2023.
11. Yin, C.; Zhang, S.; Wang, J.; Xiong, N.N. Anomaly Detection Based on Convolutional Recurrent Autoencoder for IoT Time Series. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 112–122.
12. Wei, Y.; Jang-Jaccard, J.; Xu, W.; Sabrina, F.; Çamtepe, S.A.; Boulic, M. LSTM-Autoencoder-Based Anomaly Detection for Indoor Air Quality Time-Series Data. IEEE Sens. J. 2022, 23, 3787–3800.
13. Tuli, S.; Casale, G.; Jennings, N.R. TranAD: Deep Transformer Networks for Anomaly Detection in Multivariate Time Series Data. Proc. VLDB Endow. 2022, 15, 1201–1214.
14. Jin, M.; Koh, H.Y.; Wen, Q.; Zambon, D.; Alippi, C.; Webb, G.I.; King, I.; Pan, S. A Survey on Graph Neural Networks for Time Series: Forecasting, Classification, Imputation, and Anomaly Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 10466–10485.
15. Yan, P.; Abdulkadir, A.; Luley, P.P.; Rosenthal, M.; Schatte, G.A.; Grewe, B.F.; Stadelmann, T. A Comprehensive Survey of Deep Transfer Learning for Anomaly Detection in Industrial Time Series: Methods, Applications, and Directions. IEEE Access 2023, 12, 3768–3789.
16. Schmidl, S.; Wenig, P.; Papenbrock, T. Anomaly Detection in Time Series: A Comprehensive Evaluation. Proc. VLDB Endow. 2022, 15, 1779–1797.
17. Kim, B.; Alawami, M.A.; Kim, E.; Oh, S.; Park, J.H.; Kim, H. A Comparative Study of Time Series Anomaly Detection Models for Industrial Control Systems. Sensors 2023, 23, 1310.
18. Rao, A.R.; Reimherr, M.L. Nonlinear Functional Modeling Using Neural Networks. J. Comput. Graph. Stat. 2021, 32, 1248–1257.
19. Rao, A.R.; Wang, H.; Gupta, C. Functional approach for Two Way Dimension Reduction in Time Series. In Proceedings of the 2022 IEEE International Conference on Big Data (Big Data), Osaka, Japan, 17–20 December 2022; pp. 1099–1106.
20. Zhang, C.; Zhou, T.; Wen, Q.; Sun, L. TFAD: A Decomposition Time Series Anomaly Detection Architecture with Time-Frequency Analysis. In Proceedings of the 31st ACM International Conference on Information & Knowledge Management, Atlanta, GA, USA, 17–21 October 2022.
21. Giovannelli, A.; Lippi, M.; Proietti, T. Band-Pass Filtering with High-Dimensional Time Series. SSRN Electron. J. 2023.
22. Yaacob, A.H.; Tan, I.K.; Chien, S.F.; Tan, H.K. ARIMA Based Network Anomaly Detection. In Proceedings of the 2010 Second International Conference on Communication Software and Networks, Singapore, 26–28 February 2010; pp. 205–209.
23. Pena, E.H.M.; de Assis, M.V.O.; Proença, M.L. Anomaly Detection Using Forecasting Methods ARIMA and HWDS. In Proceedings of the 2013 32nd International Conference of the Chilean Computer Science Society (SCCC), Temuco, Chile, 11–15 November 2013; pp. 63–66.
24. Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.
25. Kingma, D.P. Auto-encoding variational bayes. arXiv 2013, arXiv:1312.6114.
26. He, S.; Du, M.; Jiang, X.; Zhang, W.; Wang, C. VAEAT: Variational AutoeEncoder with adversarial training for multivariate time series anomaly detection. Inf. Sci. 2024, 676, 120852.
27. Vincent, P.; Larochelle, H.; Bengio, Y.; Manzagol, P.A. Extracting and composing robust features with denoising autoencoders. In Proceedings of the 25th International Conference on Machine Learning (ICML ’08), Helsinki, Finland, 5–9 July 2008; pp. 1096–1103.
28. Thill, M.; Konen, W.; Wang, H.; Bäck, T. Temporal convolutional autoencoder for unsupervised anomaly detection in time series. Appl. Soft Comput. 2021, 112, 107751.
29. Malhotra, P.; Ramakrishnan, A.; Anand, G.; Vig, L.; Agarwal, P.; Shroff, G. LSTM-based encoder-decoder for multi-sensor anomaly detection. arXiv 2016, arXiv:1607.00148.
30. Kanarachos, S.; Christopoulos, S.R.G.; Chroneos, A.; Fitzpatrick, M.E. Detecting anomalies in time series data via a deep learning algorithm combining wavelets, neural networks and Hilbert transform. Expert Syst. Appl. 2017, 85, 292–304.
31. Oppenheim, A.V.; Schafer, R.W. Discrete-Time Signal Processing, 3rd ed.; Pearson: London, UK, 2009.
32. Meyer, Y. Wavelets: Algorithms and Applications; SIAM: Philadelphia, PA, USA, 1993.
33. Golgowski, M.; Osowski, S. Anomaly detection in ECG using wavelet transformation. In Proceedings of the 2020 IEEE 21st International Conference on Computational Problems of Electrical Engineering (CPEE), Online Conference, Poland, 16–19 September 2020; pp. 1–4.
34. Shang, L.; Zhang, Z.; Tang, F.; Cao, Q.; Pan, H.; Lin, Z. CNN-LSTM Hybrid Model to Promote Signal Processing of Ultrasonic Guided Lamb Waves for Damage Detection in Metallic Pipelines. Sensors 2023, 23, 7059.
35. Lu, Y.X.; Jin, X.B.; Chen, J.; Liu, D.J.; Geng, G.G. F-SE-LSTM: A Time Series Anomaly Detection Method with Frequency Domain Information. arXiv 2024, arXiv:2412.02474.
36. Yao, Y.; Ma, J.; Ye, Y. Regularizing autoencoders with wavelet transform for sequence anomaly detection. Pattern Recognit. 2023, 134, 109084.
37. Kokoszka, P.; Reimherr, M.L. Introduction to Functional Data Analysis; Chapman and Hall/CRC: New York, NY, USA, 2017.
38. Shi, L.; Cao, L.; Chen, Z.; Chen, B.; Zhao, Y. Nonlinear subspace clustering by functional link neural networks. Appl. Soft Comput. 2024, 167, 112303.
39. Wang, Q.; Zheng, S.; Farahat, A.K.; Serita, S.; Gupta, C. Remaining Useful Life Estimation Using Functional Data Analysis. In Proceedings of the 2019 IEEE International Conference on Prognostics and Health Management (ICPHM), San Francisco, CA, USA, 17–20 June 2019; pp. 1–8.
40. Hsieh, T.Y.; Sun, Y.; Wang, S.; Honavar, V.G. Functional Autoencoders for Functional Data Representation Learning. In Proceedings of the SDM, Virtual Event, 29 April–1 May 2021.
41. Shi, L.; Shen, L.; Zakharov, Y.V.; de Lamare, R.C. Widely Linear Complex-Valued Spline-Based Algorithm for Nonlinear Filtering. In Proceedings of the 2023 31st European Signal Processing Conference (EUSIPCO), Helsinki, Finland, 4–8 September 2023; pp. 1908–1912.
42. Feng, C.; Tian, P. Time Series Anomaly Detection for Cyber-physical Systems via Neural System Identification and Bayesian Filtering. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Virtual Event, Singapore, 14–18 August 2021.
43. Shi, L.; Lu, R.; Liu, Z.; Yin, J.; Chen, Y.; Wang, J.; Lu, L. An Improved Robust Kernel Adaptive Filtering Method for Time-Series Prediction. IEEE Sens. J. 2023, 23, 21463–21473.
44. Shi, L.; Tan, J.; Wang, J.; Li, Q.; Lu, L.; Chen, B. Robust kernel adaptive filtering for nonlinear time series prediction. Signal Process. 2023, 210, 109090.
45. Singh, R.K.; Sinha, V.S.P.; Joshi, P.K.; Kumar, M. Use of Savitzky-Golay Filters to Minimize Multi-temporal Data Anomaly in Land use Land cover mapping. Indian J. For. 2019, 42, 362–368.
46. Cleveland, R.B.; Cleveland, W.S.; McRae, J.E.; Terpenning, I. STL: A seasonal-trend decomposition. J. Off. Stat. 1990, 6, 3–73.
47. Tan, J.; Li, Z.; Zhang, C.; Shi, L.; Jiang, Y. A multiscale time-series decomposition learning for crude oil price forecasting. Energy Econ. 2024, 136, 107733.
48. Hou, S.; Liang, M.; Zhang, Y.; Li, C. Vibration signal demodulation and bearing fault detection: A clustering-based segmentation method. Proc. Inst. Mech. Eng. Part C J. Mech. Eng. Sci. 2014, 228, 1888–1899.
49. Selamat, N.A.; Ali, S.H.M. A Novel Approach of Chewing Detection based on Temporalis Muscle Movement using Proximity Sensor for Diet Monitoring. In Proceedings of the 2020 IEEE-EMBS Conference on Biomedical Engineering and Sciences (IECBES), Langkawi Island, Malaysia, 1–3 March 2021; pp. 12–17.
50. Shen, L.; Li, Z.; Kwok, J. Timeseries anomaly detection using temporal hierarchical one-class network. Adv. Neural Inf. Process. Syst. 2020, 33, 13016–13026.
51. Podder, P.; Hasan, M.M.; Islam, M.R.; Sayeed, M. Design and Implementation of Butterworth, Chebyshev-I and Elliptic Filter for Speech Signal Analysis. arXiv 2020, arXiv:2002.03130.
52. Hochreiter, S. Long Short-term Memory. In Neural Computation; MIT-Press: Cambridge, MA, USA, 1997.
53. Malhotra, P.; Vig, L.; Shroff, G.M.; Agarwal, P. Long Short Term Memory Networks for Anomaly Detection in Time Series. In Proceedings of the European Symposium on Artificial Neural Networks, Bruges, Belgium, 22–24 April 2015.
54. Rao, A.R.; Reimherr, M.L. Modern non-linear function-on-function regression. Stat. Comput. 2023, 33, 130.
55. Xu, H.; Feng, Y.; Chen, J.; Wang, Z.; Qiao, H.; Chen, W.; Zhao, N.; Li, Z.; Bu, J.; Li, Z.; et al. Unsupervised Anomaly Detection via Variational Auto-Encoder for Seasonal KPIs in Web Applications. In Proceedings of the 2018 World Wide Web Conference on World Wide Web—WWW ’18, Lyon, France, 23–27 April 2018; ACM Press: New York, NY, USA, 2018; pp. 187–196.
56. Su, Y.; Zhao, Y.; Niu, C.; Liu, R.; Sun, W.; Pei, D. Robust Anomaly Detection for Multivariate Time Series through Stochastic Recurrent Neural Network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, KDD ’19, Anchorage, AK, USA, 4–8 August 2019; pp. 2828–2837.
57. Hundman, K.; Constantinou, V.; Laporte, C.; Colwell, I.; Söderström, T. Detecting Spacecraft Anomalies Using LSTMs and Nonparametric Dynamic Thresholding. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018.
58. Abdulaal, A.; Liu, Z.; Lancewicki, T. Practical Approach to Asynchronous Multivariate Time Series Anomaly Detection and Localization. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, KDD ’21, Virtual Event, Singapore, 14–18 August 2021; pp. 2485–2494.
59. Angryk, R.A.; Martens, P.C.; Aydin, B.; Kempton, D.J.; Mahajan, S.S.; Basodi, S.; Ahmadzadeh, A.; Cai, X.; Boubrahimi, S.F.; Hamdi, S.M.; et al. Multivariate time series dataset for space weather data analytics. Sci. Data 2020, 7, 227.
60. Mathur, A.P.; Tippenhauer, N.O. SWaT: A water treatment testbed for research and training on ICS security. In Proceedings of the 2016 International Workshop on Cyber-physical Systems for Smart Water Networks (CySWater), Vienna, Austria, 11 April 2016; pp. 31–36.
61. Ahmed, C.; Palleti, V.; Mathur, A. WADI: A water distribution testbed for research in the design of secure cyber physical systems. In Proceedings of the 3rd International Workshop on Cyber-Physical Systems for Smart Water Networks, Pittsburgh, PA, USA, 21 April 2017; pp. 25–28.
62. Lai, K.H.; Zha, D.; Xu, J.; Zhao, Y.; Wang, G.; Hu, X. Revisiting Time Series Outlier Detection: Definitions and Benchmarks. In Proceedings of the Thirty-Fifth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (Round 1), Virtual, 6–14 December 2021.

| Dataset | # of Training Data | # of Test Data | Dimensions | Anomaly % |
|---|---|---|---|---|
| MSL [57] | 58,317 | 73,729 | 55 | 10.5 |
| SMAP [57] | 135,183 | 427,617 | 25 | 12.8 |
| SMD [56] | 58,317 | 73,729 | 38 | 10.72 |
| PSM [58] | 132,481 | 87,841 | 25 | 27.8 |
| SWAN [59] | 60,000 | 60,000 | 38 | 32.6 |
| SWAT [60] | 99,000 | 89,984 | 26 | 12.2 |
| WADI [61] | 1,048,571 | 172,801 | 123 | 5.99 |

| Dataset | MLP (No BP) | MLP (BP) | LSTM (No BP) | LSTM (BP) | FNN (No BP) | FNN (BP) |
|---|---|---|---|---|---|---|
| MSL | 0.871 | 0.863 | 0.886 | 0.863 | 0.849 | 0.846 |
| SMAP | 0.700 | 0.706 | 0.699 | 0.706 | 0.698 | 0.706 |
| SMD | 0.709 | 0.758 | 0.765 | 0.789 | 0.792 | 0.816 |
| PSM | 0.936 | 0.977 | 0.962 | 0.980 | 0.945 | 0.987 |
| SWAN | 0.791 | 0.795 | 0.789 | 0.793 | 0.801 | 0.819 |
| SWAT | 0.755 | 0.901 | 0.821 | 0.910 | 0.878 | 0.927 |
| WADI | 0.797 | 0.799 | 0.826 | 0.875 | 0.838 | 0.893 |
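As a reading aid for the table above, the following sketch computes how much each model's score changes when the bandpass (BP) filter is applied, averaged over the seven datasets. The metric is not named in this excerpt, so the values are simply called scores. The variable names are illustrative, and the numbers are copied from the table.

```python
# Columns per row: MLP (No BP), MLP (BP), LSTM (No BP), LSTM (BP), FNN (No BP), FNN (BP)
scores = {
    "MSL":  (0.871, 0.863, 0.886, 0.863, 0.849, 0.846),
    "SMAP": (0.700, 0.706, 0.699, 0.706, 0.698, 0.706),
    "SMD":  (0.709, 0.758, 0.765, 0.789, 0.792, 0.816),
    "PSM":  (0.936, 0.977, 0.962, 0.980, 0.945, 0.987),
    "SWAN": (0.791, 0.795, 0.789, 0.793, 0.801, 0.819),
    "SWAT": (0.755, 0.901, 0.821, 0.910, 0.878, 0.927),
    "WADI": (0.797, 0.799, 0.826, 0.875, 0.838, 0.893),
}
models = ("MLP", "LSTM", "FNN")

mean_gain = {}       # average (BP - No BP) score difference per model
datasets_worse = {}  # datasets where the BP variant scores lower
for k, model in enumerate(models):
    diffs = {name: row[2 * k + 1] - row[2 * k] for name, row in scores.items()}
    mean_gain[model] = sum(diffs.values()) / len(diffs)
    datasets_worse[model] = [name for name, d in diffs.items() if d < 0]

for model in models:
    print(f"{model}: mean gain {mean_gain[model]:+.3f}, lower with BP on {datasets_worse[model]}")
```

In these numbers the BP variant scores higher on every dataset except MSL, for all three models.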

| Dataset | MLP (No BP) | MLP (BP) | LSTM (No BP) | LSTM (BP) | FNN (No BP) | FNN (BP) |
|---|---|---|---|---|---|---|
| MSL | 0.930 | 0.928 | 0.940 | 0.934 | 0.925 | 0.922 |
| SMAP | 0.850 | 0.855 | 0.852 | 0.857 | 0.848 | 0.849 |
| SMD | 0.840 | 0.852 | 0.875 | 0.875 | 0.893 | 0.904 |
| PSM | 0.956 | 0.977 | 0.978 | 0.987 | 0.981 | 0.995 |
| SWAN | 0.861 | 0.865 | 0.860 | 0.865 | 0.870 | 0.881 |
| SWAT | 0.837 | 0.949 | 0.892 | 0.958 | 0.931 | 0.962 |
| WADI | 0.890 | 0.895 | 0.915 | 0.944 | 0.925 | 0.958 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Backhus, J.; Rao, A.R.; Venkatraman, C.; Gupta, C. Time Series Anomaly Detection Using Signal Processing and Deep Learning. Appl. Sci. 2025, 15, 6254. https://doi.org/10.3390/app15116254
