Abstract

Event types classify Chinese verbs according to the internal temporal structure of the events they denote. The categorization of verb event types is the most fundamental classification of the concept types that verbs represent in the human brain. Event types are also strong predictors of collocational patterns between words, which makes them crucial for teaching Chinese. This work focuses on constructing a statistically validated gold-standard dataset, which forms the foundation for recognizing verb event types with high accuracy. Using a manually annotated dataset of co-occurrence features between verbs and aspectual markers, we perform hierarchical clustering of Chinese verbs. The resulting dendrogram indicates that, based on semantic distance, verbs fall into three event types: state, activity and transition. Two approaches are used to construct vector matrices: a supervised method that derives word vectors from linguistic features, and an unsupervised method that extracts embedding vectors from four models, namely Word2Vec, FastText, BERT and ChatGPT. Verb event types are then classified with three classifiers: multinomial logistic regression, support vector machines and artificial neural networks. The experimental results show that embedding vectors perform best. Combining the pre-trained FastText model with an artificial neural network classifier, our model achieves an accuracy of 98.37% in predicting the event types of 3133 verbs. This enables the automatic identification of event types for Chinese verbs and validates the accuracy and practical value of embedding vectors for complex semantic relationships and classification tasks.
This work also constructs semantically complex datasets comprising a large number of verbs together with their feature vectors and situation type labels, which can be used to evaluate large language models in future work.

1. Introduction

The relationship between language and cognition has long been a central concern of linguists. Among human cognitive concepts, the event types represented by verbs are particularly important for our understanding of the world, because this classification shapes the basic patterns by which humans form and integrate concepts. Moreover, the way humans classify verbs reflects how we cognize our embodied experience of the real world.

From the perspective of Chinese language research, the study and prediction of event types matter in two main respects. On the one hand, collocational patterns in Chinese revolve primarily around verbs and the relationships between verbs and other components. Both the syntactic relations between a verb and its subject and object and the dominance relations among semantic roles are influenced by the verb’s event type. In addition, aspectual auxiliaries are an important category of function words in Chinese, and event types provide crucial clues to how verbs collocate with them. The two differ in focus: event types concern the temporal structure formed by a verb and its arguments, whereas aspectual auxiliaries focus on particular facets of the event process and thus offer different perspectives from which to view an event. Nevertheless, the interaction between the two is the key to studying their collocational relationships.

On the other hand, the basic classification of verbs is of considerable importance for linguistic research. Chinese verbs have long been a prominent research topic, and linguists have classified them in various ways depending on their research objectives. For instance, Zhu [1] posited that verbs are not uniform in their grammatical functions and can be divided into subcategories according to different criteria, including transitive verbs, intransitive verbs, nominal-object verbs, verbal-object verbs, nominal verbs and auxiliary verbs. Other scholars, such as Kong [2], classified verbs in terms of grammatical functions and semantic features. Based on how verbs relate to the dynamic auxiliary “GUO”, Kong divided them into four categories: repetitive verbs, stative verbs, non-repeatable verbs and verbs with judgmental or evaluative properties. Whatever the method, these classifications essentially concern event types, and their ultimate aim is to clarify the collocational patterns between verbs and other sentence constituents, which has important implications for both linguistic research and teaching. There are exceptions: the SUMO (Suggested Upper Merged Ontology) [3] classification system, for example, does not distinguish vocabulary by part of speech but instead aims to construct a universal knowledge system for humanity. Even so, SUMO’s foundational conceptual system still includes concepts such as state and activity. A comprehensive and thorough study of verb event types is therefore almost unavoidable.

From an applied perspective, collocational patterns between words are strongly influenced by event types. The event types of predicates, particularly verbs, are the foundation for studying the rules of Chinese and a crucial node in both learning and teaching the language. The use of aspectual markers is a key focus and a major difficulty in Chinese language instruction. With a clear understanding of event types, students can more easily grasp collocational patterns between words, especially between verbs and aspectual markers.

Event type, often referred to as situation type, is the classification of a verb according to the event structure it exhibits across temporal phases. Verbs differ in which aspectual markers they can co-occur with, and these differences in co-occurrence reveal the distinct event structures of the verbs, which is what distinguishes one event type from another. Although various terms are used to describe verb event types, the types can generally be grouped into three classes: state, activity and transition.

The study of aspect has long been a focal point in linguistics, and research on situation types has by now been productive. Some controversies remain, however, chiefly in three respects. The first is the diversity of classifications. For example, Vendler [4] proposed a classic classification of verb temporal structure that divides verbs into four classes (state, activity, accomplishment and achievement) based on three semantic features. Building on Vendler’s work, Smith introduced the semelfactive category, yielding a five-way classification of situation types [5]. Scholars clearly hold divergent views on how situation types should be classified, and the lack of objective classification criteria hinders the establishment of a scientific classification system. The second is compositionality. Compositionality is an inherent property of aspect, yet its theoretical value has not been fully appreciated in existing research. Although a thorough exploration of compositionality inevitably involves complex semantic interactions among different syntactic structures, research on aspect in any language should not reject compositionality and confine its analysis to the sentence level. Doing so obscures the construction and analysis of lexical situation type systems, impedes a comprehensive explanation of how aspectual markers interact with verbs, and ultimately limits the depth and explanatory power of research on aspect. The third concerns application: there is little literature on situation type prediction, and existing work suffers from non-standardized data annotation and outdated model training methods. For instance, studies by Liu [6,7] show how difficult it is to predict situation types with high accuracy. These are the pressing issues in situation type research that this article aims to address.

A review of Liu [6,7] reveals several problems, including the limited size of the dataset, the use of heterogeneous training corpora and reliance on outdated Word2vec embeddings. Together, these factors may account for the lower accuracy of situation type prediction. To address these problems, and especially to improve prediction accuracy, this paper builds on Liu [6,7] and therefore shares much of its methodology. The definition of aspect and cross-linguistic comparisons of aspect have already been addressed systematically in Liu [6,7], so we do not repeat them here and instead keep the focus on the current research objectives. This paper addresses two research questions: first, how to construct a verb situation type classification system using a more scientific approach; and second, how to predict verb situation types with high accuracy. The following sections elaborate on these questions through a review of the relevant literature.

2. Literature Review

2.1. Research Based on Traditional Linguistic Methods

Significant progress has been made in the study of verb situation types. Research based on traditional linguistic methods has focused mainly on two topics: the classification of situation types, and the interaction between situation types and aspectual markers. Some studies address both. We review the two topics in turn.

First, we examine research on the classification and construction of situation type systems. The most influential framework in the study of verb situation types is Vendler’s four-way classification [4], which categorizes verbs by three semantic features: [durative], [dynamic] and [telic]. According to Vendler [4], verbs can be classified into state verbs, activity verbs, accomplishment verbs and achievement verbs. Vendler’s theory has been widely adopted and has influenced many studies of Chinese, such as that of Teng [8], who argued that Chinese situation types must be divided into four categories following Vendler’s framework. Smith’s five-way classification [5] has also had a significant impact on subsequent research. Building on Vendler’s work [4], Smith [5] introduced the semelfactive category, which she described as atelic achievements with the features [-static], [-durative] and [-telic], exemplified by verbs such as “tap”, “knock” and “cough”. Smith’s two-component theory [5] clearly distinguishes situation type and viewpoint aspect as two independent yet interacting components, a significant contribution to the construction of aspect systems. Many scholars, including Xiao and McEnery [9] and Chen [10], have adopted Smith’s analytical approach. Views on the classification of situation types nonetheless remain diverse, and some scholars propose even finer-grained categorizations. For instance, Chen [11] argued that Chinese sentences can be divided into five situation types based on their temporal structure, with predicate verbs further classified into ten categories. Similarly, Guo [12] classified the situation types of verbs into five major classes and ten subclasses based on their behavior in tense and aspect structures.
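To make the feature system concrete, the sketch below encodes Smith’s five situation types [5] as bundles of the binary features [static], [durative] and [telic]; Vendler’s four classes [4] correspond to the same table without the semelfactive, with his [dynamic] read as the negation of [static]. The feature values for the four Vendler classes follow the standard presentation in Smith [5] rather than anything stated in this section, and the boolean encoding and the function name `situation_type` are illustrative choices.

```python
from typing import Optional

# Smith's five situation types [5] as (static, durative, telic); True = "+", False = "-".
# Vendler's four classes [4] are this table minus "semelfactive";
# his [dynamic] feature corresponds to not-[static] here.
SITUATION_FEATURES = {
    "state": (True, True, False),
    "activity": (False, True, False),
    "accomplishment": (False, True, True),
    "semelfactive": (False, False, False),
    "achievement": (False, False, True),
}


def situation_type(static: bool, durative: bool, telic: bool) -> Optional[str]:
    """Return the situation type whose feature bundle matches exactly, or None."""
    for name, bundle in SITUATION_FEATURES.items():
        if bundle == (static, durative, telic):
            return name
    return None  # no class in the system uses this combination of features


print(situation_type(False, False, False))
```

Looking up [-static, -durative, -telic] returns the semelfactive, the cell that Vendler’s four-way grid leaves empty; combinations that no class uses, such as [+static, -durative], return None.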

Next, we turn to research on the interaction between situation types and aspectual markers. Aspect is a crucial grammatical category, and the grammaticalized means used to express it are called aspectual markers. Comrie’s Aspect [13] was the first comprehensive work to examine aspect from the perspective of linguistic universals. He argued that among languages that express aspectual oppositions by morphological means, two types can be distinguished: in one type, aspect is expressed by clearly defined aspectual markers and the form of the verb itself does not change with aspect; the other type lacks such markers. Chinese belongs to the first type described by Comrie [13], since it uses numerous aspectual markers realized as grammaticalized or semi-grammaticalized auxiliary words. These markers attach to predicate verbs to express different aspectual meanings. Research on the relationship between situation types and aspectual markers in Chinese dates back to the 1920s. Li [14] proposed that the auxiliary “LE” indicates completion, although a clear category of aspect had not yet been established at that time. Subsequent research falls into two phases, with the late 1970s as the dividing line. Early studies focused mainly on summarizing and distinguishing the meanings of aspectual markers, with notable contributions from Lyu [15], Wang [16] and Gao [17]. From the 1980s onward, research developed and deepened, placing greater emphasis on the distinctive characteristics of Chinese. Discussions in this period increasingly addressed the interaction between situation types and aspectual markers, although whether a verb could co-occur with a given marker was often decided by the researchers’ intuition. Prominent scholars in this phase include Tai [18], Chen [11], Guo [12,19], Dai [20] and Chen [10].
For instance, Dai’s A Study on the Tense and Aspect System in Mandarin [20] is the first comprehensive and systematic monograph on aspect in modern Chinese, and its theoretical framework is influenced by Comrie [13]. Dai argued that situation types should be examined at both the verb level and the sentence level. He proposed that Chinese distinguishes perfective from imperfective aspect and constructed an aspect system of two major categories and six subcategories, each associated with specific aspectual markers that express its meaning. The book also discusses in detail which situation types can and cannot combine with which aspectual markers.

In recent years, new research perspectives have emerged. For example, Yin et al. [21] explored the situation type of the Chinese construction yige+V and the mechanism behind its eventualization interpretation. Zhang [22] investigated the neurocognitive mechanisms underlying the syntactic processing of verbs with different situation types and aspectual markers, testing native Chinese speakers and learners of Chinese as a second language from diverse linguistic backgrounds. Zhu et al. [23] examined the correspondence between the Korean morpheme “-eoteot-” and the Chinese perfective markers “LE” and “GUO”.

Overall, scholars studying the classification of situation types have generally discussed situation types and aspectual markers together, implicitly adopting Smith’s two-component theory [5].

2.2. Research Based on Computational Methods

In recent years, numerous scholars have studied situation types in depth by combining statistical methods with computational algorithms. These studies fall into two main strands.

Firstly, there are studies of the prediction of situation types. In the field of computational linguistics, the prediction of situation types can be divided into sentence-level and verb-level studies.

At the sentence level, relevant research includes Siegel and McKeown [24], Cao et al. [25] and Xu [26]. These studies emphasize linguistic features and have achieved relatively high prediction accuracy. For example, Xu [26] reached 77.95% accuracy in classifying mid-level event types defined by static, dynamic, achievement and accomplishment features. However, these scholars mapped the situation types of sentences onto the composition of verbs, thereby denying the compositionality and recursiveness of situation types. In addition, Li et al. [27] made the first attempt to apply collaborative bootstrapping, which involves two heterogeneous classifiers, in a natural language processing application, and their model yielded good results in analyzing temporal relations in Chinese multi-clause sentences. Zarcone and Lenci [28] used both supervised and unsupervised methods to train two models that automatically identify situation types in Italian sentences.

At the verb level, research on predicting situation types includes studies by Pustejovsky et al. [29], Kazantseva and Szpakowicz [30], Saurí and Pustejovsky [31], Costa and Branco [32], Meyer et al. [33], Falk and Martin [34] and Liu [6,7], among others. However, the prediction accuracy in these studies is relatively modest. For instance, Liu [7] trained Word2vec embeddings on a combined Sinica and Chinese Gigaword corpus to predict the situation types of verbs, yet the best-performing classifier reached only 72.05% accuracy. Several factors likely contribute to this low accuracy. For instance, although the Chinese Gigaword corpus contains 1.12 billion words, its scale is not comparable to that of the corpus of the Centre for Chinese Linguistics PKU (CCL). This disparity leaves insufficient contextual co-occurrence information, which prevents models from capturing deep semantic relationships between words and thus weakens the Word2vec embeddings trained on the corpus. In addition, as new large language models continue to emerge, Word2vec embeddings are becoming less competitive, and situation type prediction experiments should now explore more advanced embedding vectors with stronger semantic representation.

In recent years, some scholars have combined situation type theory with statistical analysis to further investigate Chinese aspectual markers. Jin and Li [35] used multiple correspondence analysis to examine the temporal characteristics of verbs and the aspectual marker “ZHE” in the Chinese “V ZHE V ZHE” structure. They concluded that, in this structure, the imperfective marker “ZHE” is characterized as both dynamic and static, durative and atelic. This study provides quantitative support for earlier introspective research on the classification of aspectual markers and situation types.

Secondly, some studies extend situation type theory to practical applications, including Kazantseva and Szpakowicz [30], Costa and Branco [32], Meyer et al. [33] and Grillo et al. [36], among others. For instance, Kazantseva and Szpakowicz [30] discussed methods for automatically summarizing literary short stories. Reviewing the views of scholars such as Dorr and Olsen [37], they argued that verbs fall into different situation classes according to the features dynamic, durative and telic; that these semantic features help explain the behavior and meaning of verbs in sentences; and that situation type is a key factor in understanding short stories and generating their summaries. Drawing on event structure theory, Grillo et al. [36] were the first to explore the processing of unambiguous verbal passives in German. On the basis of two self-paced reading experiments, they concluded that “In German, the comprehension accuracy with unambiguous verbal passives is independent of the event structure associated with the underlying predicate”.

In summary, these studies significantly contribute to reducing the subjectivity in situation type classification and quantifying the interaction between verbs and aspectual markers, while also demonstrating their practical value for tasks such as information retrieval, text summarization and machine translation.

2.3. Comments on Previous Research

Based on the research status reviewed above, we can at least identify the following issues.

First, and most critically, scholars disagree on how situation types should be classified. The disagreement has two main aspects: there is no consensus on how many categories situation types should be divided into, and the hierarchical level of situation types is unsettled. Vendler [4] placed verbs, verb phrases and even sentences on the same level and attempted to classify them all at once, so that verb situation types could not be treated independently of the larger structure. Influenced by Vendler [4], many scholars include verb-object phrases in the accomplishment category, whereas their other situation types consist of verbs alone. To avoid such conflicts, many researchers have repeatedly raised the level at which situation types are assigned, but this makes it difficult to investigate the essence of lexical aspect.

Second, the classification of situation types often rests on scholars’ experience and intuition and is therefore somewhat subjective. A crucial issue is the lack of explicit annotation standards: many scholars categorize situation types by verb semantics rather than by formal syntactic criteria. Only a few scholars, such as Guo [12], assign verb situation types based on the co-occurrence of verbs with aspectual markers. Whether semantic or formal criteria are used, however, consistency checks have been overlooked, and such checks are a focal point of this paper. Moreover, the proposed classification systems currently lack validation on large-scale data. It is therefore essential to adopt scientific, statistical methods.

Third, most scholars predict situation types only at the sentence level because verb-level situation types are difficult to analyze thoroughly. Only a few scholars have studied and predicted the situation types of verbs in depth. Yet if situation types cannot be predicted at the verb and phrase levels, predicting them at the sentence level is of limited value: the situation type of a verb may change with the situation type of the sentence in which it appears, and the analysis becomes circular. Most scholars avoid predicting verb situation types, and the main reason is not, as they often claim, that “situation type can only be assigned to a verb phrase or sentence [5,38]” or that “situation types can be shifted by the influence of other sentence components [4,5,39]”. Rather, verbs are numerous, and each has multiple senses that may shift in context. Predicting their situation types therefore requires weighing many complex factors, which makes comprehensive research extremely laborious. As a result, only a small number of scholars have achieved a thorough classification. Among studies of situation types in China, only Guo [12] has classified the situation types of more than 1000 verbs, drawn from the Verb Usage Dictionary [40].

Fourth, many scholars avoid the issue of situation type compositionality. By acknowledging event types only at the sentence level, they oversimplify the semantic model underlying situation types and fail to recognize that verb situation types are independent. Compositionality allows verbs, verb phrases and sentences to be placed at different levels, reflecting the recursiveness of aspectual information. If we accept the compositionality and recursiveness of aspect, constructing a verb situation type system becomes an unavoidable task. Because compositionality involves relatively complex factors, however, we will address it in a separate paper.

Fifth, existing research on predicting situation types is published mainly in English and focuses mostly on English. A few studies address other languages such as Italian, but research on predicting Chinese situation types remains scarce. There is thus considerable room for further work on predicting Chinese verb situation types with high accuracy.

Given the current state of situation type research, this paper will focus on the following issues:

First, how can we classify verb situation types in a more scientifically grounded manner to avoid controversies in classification research? Scholars have primarily relied on intuitive or introspective methods when constructing situation type systems, which have consistently lacked a unified foundation. Our goal is to establish a verb situation type classification system that can be measured by objective standards. Therefore, the use of statistical methods is crucial.

Second, can verb situation types be predicted with high accuracy? Current prediction methods struggle to achieve this. To address the problem, we need not only better methods for constructing training datasets but also more advanced and complex algorithms for automatically recognizing verb situation types. The specific research methods are detailed in the next section.

Only by predicting verb situation types with high accuracy can we hope to predict the situation types of phrases and sentences, thereby further verifying the compositionality and recursiveness of situation types and exploring the collocation rules between situation types and aspectual markers. Accurate prediction of verb situation types also makes it possible to label unseen language resources, helping researchers categorize verb types more clearly. This, in turn, has significant practical value for understanding the world, exploring collocation patterns between words and advancing Chinese language teaching.

In summary, this paper will focus on solving the problem of improving situation type prediction accuracy from an engineering perspective, aiming to train a practically useful situation type automatic recognition model.

3. Procedure, Data and Methodology

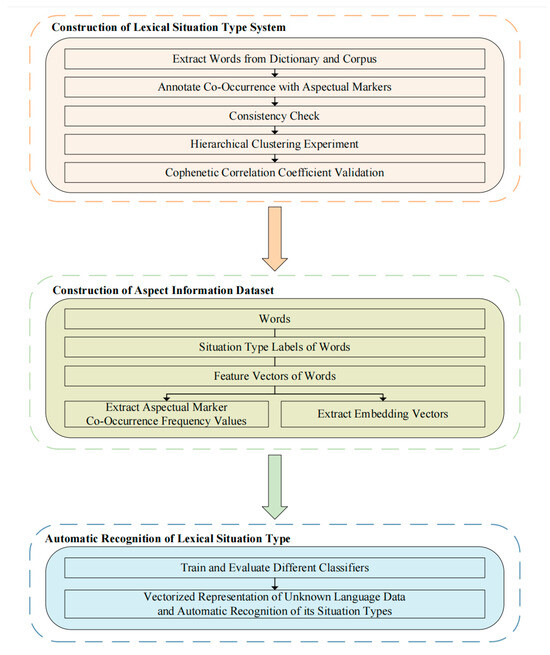

To resolve the discrepancies among classification systems for verb situation types and reduce subjectivity in classification research, we took three measures to ensure that the resulting classification system is scientifically rigorous. First, we used objective syntactic criteria as the basis for classification. Second, to decide whether a verb can co-occur with an aspectual marker, we used multiple annotators and checked their consistency instead of relying on individual experience or intuition. Third, we performed hierarchical clustering on the validated matrix, so that the situation type system is generated automatically from the semantic distances between verbs rather than constructed by hand from intuition. To achieve these objectives, the experiment was divided into three steps, as illustrated in Figure 1.
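The clustering step can be sketched as agglomerative clustering over a binary verb-by-marker matrix. This section does not specify the distance measure or linkage method, so the sketch assumes Jaccard distance between co-occurrence vectors and average linkage; the matrix, the verb names v1 to v6 and the column set are toy placeholders, not the paper’s data.

```python
from itertools import combinations

# Toy verb-by-marker matrix (hypothetical values, not the paper's data).
# Columns: LE, ZHE, ZAI/ZHENGZAI, GUO; 1 = annotators judged co-occurrence possible.
MATRIX = {
    "v1": (1, 1, 0, 0),
    "v2": (1, 1, 1, 0),
    "v3": (0, 0, 1, 1),
    "v4": (0, 1, 1, 1),
    "v5": (1, 0, 0, 1),
    "v6": (1, 0, 0, 1),
}


def jaccard_distance(u, v):
    """1 - |u AND v| / |u OR v| for 0/1 vectors; 0.0 when both vectors are all zeros."""
    both = sum(1 for a, b in zip(u, v) if a and b)
    either = sum(1 for a, b in zip(u, v) if a or b)
    return 0.0 if either == 0 else 1.0 - both / either


def average_linkage(vectors, n_clusters):
    """Repeatedly merge the two clusters with the smallest mean pairwise distance."""
    clusters = [[name] for name in vectors]

    def cluster_distance(c1, c2):
        dists = [jaccard_distance(vectors[a], vectors[b]) for a in c1 for b in c2]
        return sum(dists) / len(dists)

    while len(clusters) > n_clusters:
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: cluster_distance(clusters[ij[0]], clusters[ij[1]]),
        )
        merged = clusters[i] + clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return clusters


print(average_linkage(MATRIX, n_clusters=3))  # [['v5', 'v6'], ['v1', 'v2'], ['v3', 'v4']]
```

With real data one would more likely call `scipy.cluster.hierarchy.linkage(X, method="average", metric="jaccard")` and cut the tree with `fcluster(..., criterion="maxclust")`; the pure-Python loop only makes the merge logic explicit.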

Figure 1.

Flowchart of verb situation type prediction experiment.

Next, we will provide a detailed introduction to the data and methods involved in each part of the experiment.

3.1. Construction of Lexical Situation Type System

3.1.1. Verb Extraction

Constructing a situation type classification system is a bottom-up process. The verbs at the foundational level must be numerous enough, and cover a broad enough range, for the resulting classification system to be representative. The first task is therefore to build a large-scale verb lexicon, whose verbs come from two sources.

The first source is Meng’s Verb Usage Dictionary [40], which we chose for three reasons. First, the dictionary divides verb senses in detail and explains verb meanings, which provides a reference for identifying primary senses during manual annotation. Second, it contains extensive information on verb functions, such as whether a verb can co-occur with aspectual markers like “LE”, “ZHE” and “GUO” and with temporal quantifiers, which can be used to check the accuracy of our co-occurrence annotations. Third, Guo [12] annotated the verbs in Meng’s dictionary, so our annotations can be cross-checked against his to ensure their reliability.

The second source is high-frequency verbs extracted from the CCL corpus, for two reasons. First, verbs in the corpus directly reflect actual language use, so raw corpus data are more authentic and representative. Verbs in dictionaries, by contrast, have been manually standardized and may not accurately reflect actual usage; the corpus verbs thus complement those from Meng’s dictionary. Second, the corpus contains more verbs and therefore covers the co-occurrence features of verbs and aspectual markers more broadly, which helps avoid data sparsity during statistical validation.
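A minimal sketch of merging the two sources, assuming the corpus has already been segmented and POS-tagged so that verb tokens can be counted directly. The frequency threshold `min_freq` and the function name are hypothetical, since this section does not state the cut-off used for high-frequency verbs, and the pinyin strings in the usage example are placeholders.

```python
from collections import Counter


def build_verb_lexicon(dictionary_verbs, corpus_verb_tokens, min_freq):
    """Merge dictionary verbs with corpus verbs whose frequency is at least min_freq.

    Returns a dict mapping each verb to the set of sources ("dictionary", "corpus")
    it was drawn from, so the overlap between the two sources stays visible.
    """
    lexicon = {verb: {"dictionary"} for verb in dictionary_verbs}
    for verb, freq in Counter(corpus_verb_tokens).items():
        if freq >= min_freq:  # keep only high-frequency corpus verbs (threshold assumed)
            lexicon.setdefault(verb, set()).add("corpus")
    return lexicon


lexicon = build_verb_lexicon(
    ["shi2xian4", "hai4xiu1"],                              # placeholder dictionary entries
    ["zhi2xing2"] * 5 + ["shi2xian4"] * 3 + ["rare_verb"],  # placeholder corpus verb tokens
    min_freq=3,
)
print(lexicon)
```

Here “zhi2xing2” enters from the corpus alone, “shi2xian4” is attested in both sources, and the single occurrence of “rare_verb” falls below the threshold.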

3.1.2. Manual Annotation of Co-Occurrence Information

The classification of verb situation types is based on the co-occurrence features of verbs with different aspectual markers. To ensure the scientific rigor of the situation type classification system, we need to establish objective annotation criteria and manually judge and annotate the relevant features.
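The consistency check on multi-annotator judgments could be implemented with an agreement statistic. This section does not name the statistic used; the sketch below assumes Fleiss’ kappa, which suits a fixed number of annotators per item, applied to binary can/cannot co-occur judgments for verb and marker pairs.

```python
from collections import Counter


def fleiss_kappa(ratings):
    """Fleiss' kappa for items that were each labelled by the same number of annotators.

    ratings: list of per-item label lists, e.g. [[1, 1, 0], [0, 0, 0], ...],
    where 1/0 could mean "can / cannot co-occur with the marker".
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(item) != n_raters for item in ratings):
        raise ValueError("every item needs the same number (>= 2) of ratings")
    category_totals = Counter()
    per_item_agreement = []
    for item in ratings:
        counts = Counter(item)
        category_totals.update(counts)
        # proportion of annotator pairs that agree on this item
        agreeing_pairs = sum(c * (c - 1) for c in counts.values())
        per_item_agreement.append(agreeing_pairs / (n_raters * (n_raters - 1)))
    observed = sum(per_item_agreement) / n_items
    total = n_items * n_raters
    expected = sum((c / total) ** 2 for c in category_totals.values())
    if expected == 1:
        raise ValueError("kappa is undefined when every rating is the same category")
    return (observed - expected) / (1 - expected)


print(fleiss_kappa([[1, 1, 1], [0, 0, 0], [1, 1, 0], [0, 0, 1]]))
```

For these four judgments by three annotators, observed agreement is 2/3 and chance agreement is 0.5, so kappa is 1/3, a level that would usually prompt re-annotation before the matrix is used for clustering.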

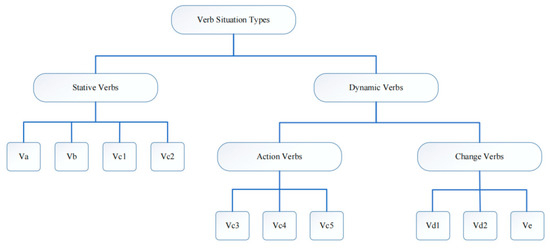

We use Guo’s principles for classifying verb situation types [12] to determine whether a verb can co-occur with a given aspectual marker. Guo [12,19] focused on the event structure that verbs exhibit across temporal stages and classified Chinese verbs in terms of process structure, which essentially corresponds to the mainstream notion of situation type. Guo [12] holds that the signified of a verb, as a declarative component, involves an internal process that unfolds over time. This internal process has three elements: inception, finish and duration, and the presence and intensity of these elements determine a verb’s situation type. On this basis, Guo classified Chinese verb situation types into five major categories and ten subcategories, treating the subcategories as a gradual continuum with three prototypical categories, Va, Vc4 and Ve, representing state, activity and transition, respectively [12]. Guo [19] later revised this view, proposing that the subcategories stand in a hierarchical relationship, and introduced a three-tier situation type hierarchy of stative verbs, action verbs and change verbs, as shown in Figure 2.

Figure 2.

Guo’s verb situation type hierarchy system [19].

We adopt Guo’s annotation method [12] because his annotation of Meng’s dictionary is comprehensive and meticulous: he annotated nearly all of its verbs, 1328 verbs with 2117 senses in total. Moreover, Guo classifies situation types mainly by the grammatical behavior of verbs, specifically whether they can co-occur with various aspectual markers, rather than by their intrinsic semantics. This makes the approach both scientifically rigorous and operationally practical, in line with our principles. The specific criteria are summarized in Table 1.

Table 1.

Guo standard for annotating verb situation types [12].

As Table 1 shows, classifying the situation type of a verb requires applying two criteria in sequence: first, which aspectual markers the verb can co-occur with; and second, what meaning the verb conveys when it co-occurs with each of these markers. For the aspectual marker “LE”, for example, we first determine whether the verb can co-occur with “LE”. If it can, there are two possibilities: if the combination indicates the beginning of an action, the verb has both inception and duration; if it indicates the completion of an action, the verb has finish. A verb that allows both readings has all three elements. For instance:

(1) hai4xiu1 le

feel_shy LE

start to feel shy

[+inception, +duration]

(2) shi2xian4 le

achieve LE

have achieved

[+finish]

(3) zhi2xing2 le

execute LE

start to execute/have executed

[+inception, +duration, +finish]

Furthermore, if a verb can co-occur with the progressive markers “ZHE” or “ZAI/ZHENGZAI”, the verb possesses duration; if a verb can co-occur with the experiential marker “GUO”, the verb has finish. Note that if a verb cannot co-occur with any of these five aspectual markers but can still indicate an ongoing action, the verb nevertheless has duration.
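
The rules in the last two paragraphs can be read as a small decision procedure. The sketch below encodes only the rules stated here (it omits the time-span object and any further Table 1 criteria); the `Judgments` record and its field names are hypothetical and are not part of Guo's scheme.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Judgments:
    """Hypothetical record of one annotator's judgments for one verb sense."""
    le_reading: Optional[str]  # None, "inception", "finish" or "both"
    zhe: bool = False          # can co-occur with ZHE
    zai: bool = False          # can co-occur with ZAI/ZHENGZAI
    guo: bool = False          # can co-occur with GUO
    ongoing: bool = False      # denotes an ongoing action with no marker

def temporal_elements(j: Judgments) -> dict:
    """Derive inception/duration/finish from the rules stated in the text."""
    inception = duration = finish = False
    if j.le_reading in ("inception", "both"):
        inception = duration = True   # LE marks the beginning of an action
    if j.le_reading in ("finish", "both"):
        finish = True                 # LE marks completion
    if j.zhe or j.zai:
        duration = True               # progressive markers
    if j.guo:
        finish = True                 # experiential marker
    # No marker modeled here applies, yet the verb can denote an ongoing action.
    if not any([j.le_reading, j.zhe, j.zai, j.guo]) and j.ongoing:
        duration = True
    return {"inception": inception, "duration": duration, "finish": finish}
```

For example, `temporal_elements(Judgments(le_reading="inception"))` yields inception and duration without finish, matching example (1), while `le_reading="both"` yields all three elements, matching example (3).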

The elements of inception, duration and finish are therefore crucial in determining the situation type of a verb. Based on the presence and intensity of these three elements, verbs can be classified into ten distinct subcategories.

There are several points that require special clarification:

(1) Many aspectual markers in Chinese have homographs. The aspectual marker “LE” can be divided into two separate words: “LE1”, which functions as a modal particle at the end of sentences indicating the emergence of a new situation, and “LE2”, which is an auxiliary word placed after the verb to indicate the completion of an action. Although Guo [12] did not explicitly distinguish between the two, he noted that the “LE” in the above table should form a direct constituent with the verb. This means that the aspectual marker “LE” includes two cases: “LE” functioning as “LE2” and “LE” combining both “LE1” and “LE2”. It does not include “LE” functioning solely as “LE1”.

(2) In fact, many scholars argue that the time adverbs “ZAI” and “ZHENGZAI” differ in their semantics and co-occurrence capabilities with verbs. However, since both can indicate the progression of an action or the continuation of a state, we adhere to Guo’s standard [12] and treat them as a single feature for the time being.

(3) The aspectual marker “GUO” here is an auxiliary word. Some scholars argue that it should be divided into “GUO1” and “GUO2”. “GUO1” follows a predicate to indicate the completion of an action, while “GUO2” follows a predicate to indicate a past experience [41,42]. We do not make this distinction here, but it is important to clarify that the aspectual marker “GUO” does not include other homographs such as the directional verb “GUO”.

Next, we employ a manual annotation approach to label the co-occurrence capabilities of verbs with five aspectual markers. The annotators are required to make judgments based on intuition and introspection, avoiding interference from corpus data.

Additionally, to check the internal consistency of the data, we used systematic sampling to select 20 verbs from the lexicon as repeated items. The annotators re-annotated these verbs some time after the initial annotation. Because systematic sampling spreads the selected verbs evenly across the lexicon, it prevents the repeated items from cueing one another’s co-occurrence features, which could otherwise undermine the internal consistency check.
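
A minimal sketch of systematic sampling, assuming the lexicon is an ordered list. The interval k = len(lexicon) // n and the seeded random start are standard textbook choices, not details reported in the paper.

```python
import random

def systematic_sample(lexicon, n, seed=None):
    """Select n items at a fixed interval k = len(lexicon) // n from a random start."""
    if not 0 < n <= len(lexicon):
        raise ValueError("n must be between 1 and len(lexicon)")
    k = len(lexicon) // n
    start = random.Random(seed).randrange(k)  # random start in [0, k)
    # The last index is at most (k - 1) + (n - 1) * k < len(lexicon).
    return [lexicon[start + i * k] for i in range(n)]
```

With a toy lexicon of 1823 entries and n = 20, the interval is k = 91, so consecutive repeated items lie 91 positions apart.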

3.1.3. Consistency Check

The annotation task was completed by four annotators with specialized training in linguistics. We then conducted a multi-level cross-validation using Cohen’s Kappa consistency test [43]. The Kappa coefficient measures the agreement between annotators after correcting for the agreement expected by chance. It ranges from −1 to 1, with values closer to 1 indicating higher agreement. We set a threshold of 0.75: if the Kappa value exceeded this threshold, the annotated data were considered to have passed the consistency check.
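
For reference, Cohen’s Kappa for two annotators is usually written as below, where $p_o$ is the observed proportion of items on which they agree and $p_e$ is the agreement expected by chance from each annotator’s label frequencies. Here $n_{1c}$ and $n_{2c}$ (notation introduced for illustration) count how often annotators 1 and 2 assign category $c$ among $N$ items.

```latex
\kappa = \frac{p_o - p_e}{1 - p_e},
\qquad
p_e = \sum_{c} \frac{n_{1c}}{N} \cdot \frac{n_{2c}}{N}
```

For example, with $p_o = 0.90$ and $p_e = 0.50$, $\kappa = 0.40 / 0.50 = 0.80$, which would clear the 0.75 threshold.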

The consistency verification was conducted at three levels:

(1) Intra-annotator consistency: For the 20 repeated verbs, we performed intra-annotator consistency checks by comparing each annotator’s initial and subsequent annotations. This was performed to evaluate the stability of the judgments formed by each annotator through intuition and introspection.

(2) Inter-annotator consistency: We conducted inter-annotator consistency checks across the four annotators to measure the degree of agreement among them in performing the annotation task.

(3) Cross-validation with existing resources: After finalizing the annotation results, we performed consistency checks between our annotations and those of Guo [12]. Cross-validating against pre-annotated linguistic resources in this way helped ensure the scientific rigor of the annotation results.
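
A dependency-free sketch of the pairwise check in level (2); the same `cohen_kappa` function applies to level (1) by comparing an annotator’s two rounds, and to level (3) by comparing against Guo’s labels. The dictionary layout and annotator names are assumptions for illustration; the paper itself reports using pandas and scikit-learn.

```python
import math
from collections import Counter
from itertools import combinations

def cohen_kappa(a, b):
    """Cohen's Kappa between two annotators' label lists (same item order)."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n            # observed agreement
    ca, cb = Counter(a), Counter(b)
    p_e = sum(ca[c] * cb[c] for c in ca.keys() | cb.keys()) / n**2  # chance
    if p_e == 1.0:
        return math.nan  # undefined: both used one identical label throughout
    return (p_o - p_e) / (1 - p_e)

def pairwise_kappa(annotations, threshold=0.75):
    """annotations: dict annotator -> label list. Returns (kappa, passed) per pair."""
    report = {}
    for x, y in combinations(sorted(annotations), 2):
        k = cohen_kappa(annotations[x], annotations[y])
        report[(x, y)] = (k, k > threshold)  # NaN never passes
    return report
```

For instance, two annotators who disagree on one of ten binary items, with label counts 6/4 and 7/3, get $p_o = 0.90$, $p_e = 0.54$ and $\kappa \approx 0.78$, just above the threshold.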

Only annotations that passed the consistency checks were used in the hierarchical clustering experiments. These checks improve on the annotation methods of previous researchers and reduce, to some extent, the subjectivity of situation type classification.

3.1.4. Automatic Generation of Lexical Situation Type System

Based on the annotation results, verbs exhibit distinct distribution patterns in terms of aspectual marker features. Although Guo [12] classified the situation type system of verbs according to objective grammatical criteria, his approach remains insufficiently thorough. This is because, when determining the presence and intensity of the three elements—inception, finish and duration—he merely aggregated these features without employing a more scientific method to examine the impact of differences in element intensity. Therefore, to address the limitations of previous research and further measure the similarity and structural relationships among verbs, we transformed the categorical variables in the original matrix into dummy variables. Based on these dummy variables, we performed hierarchical clustering on the verbs and visualized the clustering results using a dendrogram. Finally, we evaluated the clustering outcome using the Cophenetic Correlation Coefficient (CPCC) metric.
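
A minimal sketch of the dummy-variable step with pandas (which the paper reports using). The feature columns, category values and verb identifiers are invented for illustration and do not reproduce the paper’s actual coding scheme.

```python
import pandas as pd

# Hypothetical annotation matrix: one row per verb sense, one categorical
# column per aspectual-marker feature (values are illustrative only).
raw = pd.DataFrame(
    {
        "LE": ["inception", "finish", "both", "none"],
        "ZHE": ["yes", "no", "yes", "no"],
        "GUO": ["no", "yes", "yes", "no"],
    },
    index=["verb_1", "verb_2", "verb_3", "verb_4"],
)

# One 0/1 indicator column per (feature, category) pair, e.g. "LE_both".
dummies = pd.get_dummies(raw, columns=["LE", "ZHE", "GUO"], dtype=int)
print(dummies)
```

Each row then contains exactly one 1 per original feature, and the resulting binary matrix is what the distance computations in hierarchical clustering operate on.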

Before hierarchical clustering, the distance between each pair of samples must be calculated and assembled into a distance matrix, which guides the merging of clusters during the clustering process. Because there are multiple distance metrics and linkage methods, different parameter choices can yield different clustering results and dendrograms. To obtain a better hierarchical clustering outcome, we first used grid search to identify the optimal parameters.

Grid search exhaustively evaluates every combination of candidate parameters and selects the best-performing one. Here, the combination that yields the highest CPCC is selected, and the corresponding dendrogram is chosen as the optimal dendrogram.
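
A sketch of this grid search, assuming SciPy’s `pdist`, `linkage` and `cophenet` (the paper does not name the library it used for clustering). The candidate metrics and linkage methods are illustrative; because centroid, median and Ward linkage are only well defined for Euclidean distances, those combinations are skipped for other metrics.

```python
from itertools import product

import numpy as np
from scipy.cluster.hierarchy import cophenet, linkage
from scipy.spatial.distance import pdist

METRICS = ["euclidean", "cityblock", "hamming"]
METHODS = ["single", "complete", "average", "weighted", "centroid", "median", "ward"]
EUCLIDEAN_ONLY = {"centroid", "median", "ward"}  # require Euclidean distances

def grid_search_cpcc(X):
    """Score every (metric, method) pair by CPCC; return the best and all scores."""
    scores = {}
    for metric, method in product(METRICS, METHODS):
        if method in EUCLIDEAN_ONLY and metric != "euclidean":
            continue
        d = pdist(X, metric=metric)        # condensed pairwise distances
        Z = linkage(d, method=method)      # agglomerative clustering
        cpcc, _ = cophenet(Z, d)           # correlation with cophenetic distances
        scores[(metric, method)] = float(cpcc)
    best = max(scores, key=scores.get)
    return (scores[best], *best), scores
```

Usage: `best, scores = grid_search_cpcc(X)` on a binary verb-by-feature matrix returns a tuple such as `(cpcc, metric, method)`; the full `scores` dictionary is worth keeping, since several combinations may score almost equally.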

After determining the parameters, we employ agglomerative hierarchical clustering to process the matrix, automatically generating the hierarchical structure of situation types from the bottom up. In hierarchical clustering, each sample initially forms its own cluster. Based on the distance information in the distance matrix, the similarity between clusters is calculated, and at each step, the two closest clusters are merged. This process continues until all samples are merged into a single cluster. The system of situation types is then automatically generated based on the semantic distances between verbs and is presented in the form of a dendrogram.
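
To make the bottom-up procedure concrete, here is a deliberately naive centroid-linkage loop in NumPy. It is O(n³) and meant for illustration only; in practice a library routine such as SciPy’s `linkage` would be used. Each cluster is represented by the mean of its members, and at each step the two clusters with the closest centroids are merged.

```python
import numpy as np

def naive_centroid_agglomeration(X):
    """Bottom-up centroid-linkage clustering; returns the merge history.

    Each entry is (sorted member indices of the new cluster, merge distance).
    """
    X = np.asarray(X, dtype=float)
    clusters = {i: [i] for i in range(len(X))}    # cluster id -> member rows
    centroids = {i: X[i] for i in range(len(X))}  # cluster id -> centroid
    merges, next_id = [], len(X)
    while len(clusters) > 1:
        ids = list(clusters)
        best = None
        for pos, a in enumerate(ids):             # find the closest pair
            for b in ids[pos + 1:]:
                d = float(np.linalg.norm(centroids[a] - centroids[b]))
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        members = clusters.pop(a) + clusters.pop(b)   # merge the pair
        del centroids[a], centroids[b]
        clusters[next_id] = members
        centroids[next_id] = X[members].mean(axis=0)  # mean of all members
        merges.append((sorted(members), d))
        next_id += 1
    return merges
```

The loop always performs n − 1 merges, and its final entry contains every sample, which corresponds to the root of the dendrogram.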

Finally, based on the results of hierarchical clustering, the verb situation types are mapped to the senses of the verbs. We adhere to the principle of a one-to-one correspondence between verb senses and situation types, meaning that each sense of a verb is assigned to a specific situation type in the hierarchical clustering, thereby obtaining the corresponding situation type labels.

3.2. Construction of the Training Dataset

With the verb situation type system established by statistical methods, the first research question of this paper has been addressed. The next step is to achieve high-accuracy prediction of verb situation types.

The second research question can be broken down into two specific issues: first, how to construct a gold-standard dataset for model training. To improve the prediction accuracy of situation types, it is essential to have a large-scale, high-quality validation dataset as a foundation. We will attempt to extract verb vectors using both supervised and unsupervised methods to build the situation type dataset and compare the effectiveness of different vectors in prediction experiments. Second, what methods can be employed during model training to achieve better performance and improve the accuracy of automatic verb situation type identification? We will integrate statistical methods and computational algorithms to explore approaches for improving model performance. Specifically, we will experiment with different classifiers and conduct tenfold cross-validation to select the best-performing model, which will then serve as the model for large-scale automatic identification of verb situation types. The detailed processes and methodologies related to these two issues will be elaborated on in this section and the following section.

This section primarily introduces the data and methods used to construct the gold-standard dataset. Each dataset mainly consists of the following components: verb entries, verb situation type labels, and linguistic feature vectors or embedding vectors of the verbs. Each of these components will be described in detail below.

After the automatic generation of the situation type system, the pairing patterns between verb senses and situation types have been established, and the verb entries along with their corresponding situation type labels are naturally preserved. It is worth noting that not all verb sense entries used in the clustering process are retained in the training dataset. This is because some senses occur with extremely low frequency in the corpus, which could lead to a data sparsity problem during classification experiments. Therefore, only the most frequent sense of each verb and its corresponding situation type label are retained in the dataset.

We primarily rely on the research by Yuan [44] as a reference for selecting high-frequency verb senses. Yuan [44] examined 1220 verbs from Levels A and B of The outline of the Graded Vocabulary for HSK, using the State Language Affairs Commission’s Modern Chinese General Corpus as the basis. She comprehensively and meticulously analyzed the distribution of verb senses and established a three-level system comprising verbs, their frequencies and the proportion of each sense in polysemous verbs. Based on this system, we selected the primary sense for each verb according to the proportion of senses in polysemous verbs within the corpus. Additionally, for a small number of verbs not covered in Yuan’s study [44], we referred to the Modern Chinese Dictionary (7th Edition) and the dictionary by Meng [40] for sense explanations, combined with frequency statistics from the CCL corpus, and identified the most frequent sense as the primary sense.
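
Once sense proportions are available, selecting the primary sense reduces to an argmax for each verb. The dictionary layout below is hypothetical; in the paper the proportions come from Yuan [44] or, for verbs she did not cover, from CCL frequency counts.

```python
def primary_senses(sense_shares):
    """Map each verb to the sense with the largest corpus proportion.

    sense_shares: dict verb -> dict sense_id -> proportion (hypothetical layout).
    Ties are broken by the smaller sense id so the choice is deterministic.
    """
    chosen = {}
    for verb, shares in sense_shares.items():
        chosen[verb] = min(shares, key=lambda s: (-shares[s], s))
    return chosen
```

The tie-breaking rule is an arbitrary choice for the sketch; in a real pipeline, ties would more likely be flagged for manual inspection.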

As for the verb vector data, we used both supervised and unsupervised methods to construct features for situation type classification. The former extracts linguistic feature vectors from large corpora, while the latter extracts embedding vectors of verbs from Word2vec, FastText, BERT and ChatGPT. Linguistic feature vectors not only validate the effectiveness of the proposed linguistic model but also reveal the co-occurrence relationships between situation types and linguistic features, offering strong explanatory power. Embedding vectors were selected because they can capture more complex semantic information and, at the same time, verify that the classification system is applicable in practice, including in language teaching. The following sections describe the sources of these word vectors and the methods used to obtain them.

3.2.1. Extraction of Linguistic Feature Vectors

Guo [12] used the co-occurrence capability of verbs with aspectual markers as the criterion for classifying verb situation types. Correspondingly, we can also rely on relevant linguistic features to construct a vector matrix for verbs to achieve the prediction of situation types.

In this study, we selected the aspectual markers “LE”, time-span object, “ZHE”, “ZAI”, “ZHENGZAI” and “GUO”, together with the degree adverb “HEN”, as linguistic features. This is because, in the prediction experiments, we determine the linguistic features of verbs primarily from their ability to co-occur with aspectual markers, rather than using the semantic differences conveyed by that co-occurrence as classification features. Therefore, for “LE” and the time-span object, we do not distinguish whether co-occurrence with a verb indicates inception or finish. Additionally, following Tai [18], Teng [8] and Chen [11], we include the co-occurrence capability of verbs with “HEN” as a feature. In general, the more features that contribute to situation type classification are included, the better the prediction results are expected to be.

Unlike the clustering experiment, we extracted co-occurrence frequency information between verbs and the selected linguistic features from both the CCL and Sinica corpora separately to construct the vector matrices. This allows for cross-validation between corpora by comparing the results of classification experiments. We did not use manually annotated co-occurrence features for prediction because our goal is to accurately reflect the real-world collocation patterns of verbs and aspectual markers in actual usage through frequency information, thereby simulating the co-occurrence capability of verbs with aspectual markers. We do not aim to verify what effect the linguistic features used in annotation can have on prediction. In this way, the pitfalls of circularity can be avoided.
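
The paper does not specify its extraction rules, so the following is only a hypothetical sketch of how co-occurrence counts might be gathered from a word-segmented corpus. The adjacency offsets in `RULES` are assumptions, and the sketch ignores both the homograph issues discussed above (such as sentence-final LE1 or directional GUO) and the time-span object, all of which a real extractor would need to handle.

```python
from collections import Counter

# Hypothetical adjacency rules: marker -> token offset relative to the verb.
RULES = {"了": 1, "着": 1, "过": 1, "在": -1, "正在": -1, "很": -1}

def cooccurrence_vector(verb, sentences, rules=RULES):
    """Count how often `verb` appears with each marker at the given offset.

    sentences: iterable of token lists (already word-segmented).
    Returns raw counts in the order of `rules`.
    """
    counts = Counter()
    for tokens in sentences:
        for i, tok in enumerate(tokens):
            if tok != verb:
                continue
            for marker, offset in rules.items():
                j = i + offset
                if 0 <= j < len(tokens) and tokens[j] == marker:
                    counts[marker] += 1
    return [counts[m] for m in rules]
```

Raw counts like these would typically be normalized (for example, by the verb’s total frequency) before being used as a feature vector; whether and how the paper normalized them is not stated here.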

3.2.2. Extraction of Embedding Vectors

While the vector matrix constructed through supervised methods effectively reflects the collocation patterns of verbs with aspectual markers in actual contexts, this approach still has certain limitations when applied to large-scale situation type prediction. This is because the linguistic features of verbs we selected are based on specific contextual information, which overlooks broader contextual cues; thus, the information contained is still not comprehensive enough. Therefore, we will next leverage semantic vectors to conduct further experiments on situation type prediction.

Semantic vector representation is a general term for techniques that map linguistic units to real-valued vectors for modeling semantic features. Traditional methods, such as Word2Vec and FastText, generate static word vectors through unsupervised learning based on the Distributional Hypothesis [45]. This hypothesis posits that a word’s semantics are determined by its context, such that words occurring in similar contexts should obtain proximate vector representations. In contrast, pre-trained language models, such as BERT and ChatGPT, leverage deep neural networks to capture dynamic contextual features. Although the two types of models differ in their technical approaches, they both essentially achieve semantic representation through vector space mapping. For ease of comparative analysis, we refer to the vectors generated by these models as “embedding vectors” and construct multi-source datasets to comprehensively evaluate the impact of different semantic representation methods on verb situation type prediction.

(1) Word2vec Vectors

Word2vec is a classic word embedding model. We used Word2vec to construct word embedding vectors in an unsupervised manner; the resulting vectors capture semantic correlations based on word co-occurrence information while also preserving the influence of word frequency. Because the CCL corpus is significantly larger than the Sinica corpus, and larger corpora generally yield higher-quality word vectors that help the model learn subtle semantic distinctions, we pre-trained the Word2vec model on the CCL corpus to obtain the word vectors.

(2) FastText Vectors

Although Word2vec has achieved relatively good results in previous prediction experiments, it still has certain limitations. The Word2vec model generates a vector for each individual word but overlooks the internal morphological features of words. The FastText model effectively addresses this limitation. It is suitable for training on large-scale text, adapts better to multilingual text, and performs quickly and accurately on categorization tasks. We trained the FastText model on the CCL corpus to obtain the corresponding word vectors.
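
In FastText, a word’s vector is built from the vectors of its character n-grams, so verbs that share characters also share information, and an unseen verb can still receive a vector. The toy sketch below illustrates only this idea. It is not Gensim’s implementation (which, among other things, hashes n-grams into buckets), and the n-gram range of 1 to 2 characters is an assumption chosen for short Chinese words.

```python
import numpy as np

def char_ngrams(word, min_n=1, max_n=2):
    """Character n-grams of a word wrapped in boundary markers, FastText-style."""
    padded = f"<{word}>"
    grams = []
    for n in range(min_n, max_n + 1):
        for i in range(len(padded) - n + 1):
            g = padded[i:i + n]
            if g not in ("<", ">"):  # skip lone boundary markers
                grams.append(g)
    return grams

def compose_vector(word, ngram_vectors, dim):
    """Average the vectors of the word's known n-grams (zeros if none are known)."""
    vecs = [ngram_vectors[g] for g in char_ngrams(word) if g in ngram_vectors]
    return np.mean(vecs, axis=0) if vecs else np.zeros(dim)
```

For example, `char_ngrams("执行")` gives `["执", "行", "<执", "执行", "行>"]`, so 执行 shares its character units with other verbs containing 执 or 行.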

(3) BERT Vectors

The BERT model, released by Google in 2018, is a pre-trained language model based on the Transformer architecture that uses bidirectional self-attention. Compared with Word2vec and FastText, BERT typically has stronger context awareness. We used the Chinese pre-trained BERT-wwm model, released by the Joint Laboratory of Harbin Institute of Technology and iFLYTEK Research and based on the Whole Word Masking technique, to extract verb vectors.

(4) ChatGPT Vectors

Word2vec and FastText are traditional static word vector models whose vectors do not change once training is completed. In contrast, ChatGPT’s representations are dynamic and based on the Transformer architecture. Compared with the previous three models, ChatGPT is trained on a larger corpus, which makes it a promising candidate for tasks such as predicting verb situation types. We extracted the corresponding word vectors from ChatGPT to construct a dataset for the automatic recognition of verb situation types.

With this, all four datasets for the automatic recognition of verb situation types have been fully constructed.

We used only these four types of embedding vectors for the classification experiments and did not select more advanced large language models trained on Chinese corpora. Although the training scale of Chinese large language models continues to grow, access to their pre-trained embedding parameters remains limited, apart from some open-source architectures. Constrained by commercial confidentiality agreements and parameter access permissions, it is difficult for us to obtain the corresponding resources systematically across models. We therefore selected the four more accessible types of word vectors for the experiments.

3.3. Automatic Recognition of Lexical Situation Types

Next, we train models on these datasets to predict situation types. This section introduces the models, classifiers and related methods used in the prediction experiments.

Salton et al. [46] proposed the Vector Space Model (VSM) for information retrieval. In computational linguistics, the VSM has been widely applied and handles tasks involving large-scale textual data robustly. Following the VSM, we use the constructed linguistic feature vector matrices and embedding vector matrices as our data and adopt three common classifiers: multinomial logistic regression (MNLogit), support vector machine (SVM) and artificial neural network (ANN). These classifiers were chosen for the following reasons:

MNLogit is a relatively simple traditional classifier. As a linear model, it extends binary logistic regression to multiple classes by modeling the log-odds of each class relative to a reference class, and it has been widely applied to multi-class classification and prediction problems.

SVM is among the best-performing traditional classifiers. This algorithm, based on the maximum margin principle, constructs a maximum margin hyperplane in the feature space to maximally separate data of different classes.

ANN is a widely used, high-performance computational model. By mimicking the behavior of biological neural networks and using engineering techniques to simulate the structure and function of the human brain, ANN is capable of handling complex prediction problems.

These classifiers have demonstrated advantages in processing high-dimensional data and in performing feature selection and classification tasks. We therefore evaluate all three with tenfold cross-validation. For each classifier, we tune the hyperparameters by grid search, using the F1 score as the optimization criterion. Finally, we train the models on the gold-standard dataset to evaluate the aspect information dataset.
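
A sketch of this tuning-and-evaluation loop with scikit-learn, which the paper reports using. The hyperparameter grids, the feature-scaling step and the use of macro-averaged F1 (`f1_macro`) are assumptions for illustration; the settings actually used are those reported in the paper’s parameter tables. With its default `lbfgs` solver, scikit-learn’s `LogisticRegression` fits a multinomial model when there are more than two classes.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Illustrative search spaces; step names follow make_pipeline's lowercase naming.
CANDIDATES = {
    "MNLogit": (LogisticRegression(max_iter=2000),
                {"logisticregression__C": [0.1, 1, 10]}),
    "SVM": (SVC(), {"svc__C": [0.1, 1, 10], "svc__kernel": ["linear", "rbf"]}),
    "ANN": (MLPClassifier(max_iter=2000, random_state=0),
            {"mlpclassifier__hidden_layer_sizes": [(32,), (64,)]}),
}

def tune_and_score(X, y, seed=0):
    """Grid-search each classifier by macro-F1 under stratified tenfold CV."""
    cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=seed)
    results = {}
    for name, (clf, grid) in CANDIDATES.items():
        pipe = make_pipeline(StandardScaler(), clf)
        search = GridSearchCV(pipe, grid, scoring="f1_macro", cv=cv)
        search.fit(X, y)
        results[name] = (search.best_score_, search.best_params_)
    return results
```

Note that reusing the same folds for tuning and scoring gives a somewhat optimistic estimate; nested cross-validation (an outer loop around `GridSearchCV`) would avoid this at extra computational cost.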

The classification experiments in this paper consist of two parts: prediction experiments based on linguistic features and based on embedding vectors. The following sections will separately introduce the data and methods used in these two experiments.

3.3.1. Prediction Experiments Based on Linguistic Feature Vectors

As mentioned earlier, we extracted co-occurrence frequencies between verbs and aspectual markers from both the CCL and Sinica corpora to simulate the collocational capacity between verbs and aspectual markers. Using the aspectual marker features of verbs as independent variables and the situation type of verbs as the dependent variable, we constructed two distinct vector matrices. Based on these matrices, we will, respectively, employ three classifiers to perform tenfold cross-validation. Through comparative analysis of model reports, we aim to evaluate the predictive effectiveness achieved by feature vectors derived from different corpora while simultaneously assessing performance differences among the three classifiers.

3.3.2. Prediction Experiments Based on Embedding Vectors

The experimental procedure for prediction based on embedding vectors follows a similar framework to the aforementioned approach. We extracted four types of embedding vectors for verbs, thereby constructing four distinct datasets for model training. Using verb embedding vectors as independent variables and situation type labels as the dependent variable, we conducted tenfold cross-validation with three classifiers across the four vector matrices, respectively. Through comparative analysis of experimental results, the model achieving the highest prediction accuracy was selected as the optimized model for automatic situation type identification.

3.3.3. Prediction Experiments for Situation Types of Unknown Language Data

Subsequently, we used this optimized model in further prediction experiments to expand the dataset of verb situation types. Specifically, we extracted 2000 unannotated verbs from the CCL corpus and, using the same type of embedding vectors as the optimized model, constructed a corresponding verb embedding dataset as a new prediction set. After training, the optimized model automatically identified the situation types in this prediction set, yielding labels for all 2000 verbs. Finally, we manually verified these automatically assigned labels, performed consistency checks and incorporated the confirmed results into the dataset. The expanded dataset then underwent another round of tenfold cross-validation to evaluate the model’s accuracy and generalization capability.
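
The expansion procedure can be sketched as follows, assuming precomputed embedding matrices. An MLP is used because the abstract reports the FastText plus ANN combination as the best model; the `verify` callback is a hypothetical stand-in for the manual verification step, and the hyperparameters are illustrative.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neural_network import MLPClassifier

def expand_and_revalidate(X_gold, y_gold, X_new, verify, seed=0):
    """Label new verbs, keep human-verified labels, and re-run tenfold CV.

    `verify` is a hypothetical stand-in for manual checking: it receives the
    predicted labels and returns the final (possibly corrected) labels.
    """
    model = MLPClassifier(max_iter=2000, random_state=seed).fit(X_gold, y_gold)
    y_new = np.asarray(verify(model.predict(X_new)))
    if len(y_new) != len(X_new):
        raise ValueError("verify() must return one label per new verb")
    X_all = np.vstack([X_gold, X_new])
    y_all = np.concatenate([y_gold, y_new])
    cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=seed)
    fresh = MLPClassifier(max_iter=2000, random_state=seed)
    accuracy = cross_val_score(fresh, X_all, y_all, cv=cv, scoring="accuracy")
    return y_all, float(accuracy.mean())
```

Re-running cross-validation on the merged data, rather than only scoring the new items, checks that the verified labels remain learnable together with the original gold standard.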

4. Results and Discussion

4.1. Construction of Lexical Situation Type System

We selected verbs from the Verb Usage Dictionary [40] together with high-frequency verbs from the CCL corpus and analyzed them by sense, yielding a total of 1823 entries.

After the four annotators had annotated the co-occurrence capacity of all verbs with the aspectual marker features, we aggregated the four sets of annotations and performed the three-level Cohen’s Kappa consistency check [43], using the default settings of the pandas (v2.2.0) and scikit-learn (v1.6.0) libraries in Python (3.12.1). The intra-annotator consistency results are presented in Table 2.

Table 2.

Intra-group Kappa consistency test results for four annotators.

The above table demonstrates that the consistency of annotations provided by the four annotators for 20 repeated verbs across two rounds is relatively high (Kappa > 0.75), indicating that the annotation results based on intuition and introspection are stable for each annotator.

Next, we present the inter-group consistency test results for the four annotators, as shown in Table 3.

Table 3.

Inter-group Kappa consistency test results for four annotators.

As shown in the table above, we conducted consistency tests between each pair of annotators. The results reveal a high level of agreement in the annotation task (Kappa > 0.75), which also reflects the scientific rigor and operational feasibility of the annotation rules. For the few inconsistent cases, we held detailed discussions and adopted as final the labels on which at least 75% of the annotators (i.e., three of the four) agreed, thereby refining the annotated data.
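
The 75% consensus rule amounts to a thresholded majority vote. A minimal sketch, in which returning `None` marks an item left for discussion (that convention is an assumption of the sketch):

```python
from collections import Counter

def consensus_label(labels, min_share=0.75):
    """Return the label chosen by at least `min_share` of annotators, else None."""
    label, count = Counter(labels).most_common(1)[0]
    return label if count / len(labels) >= min_share else None
```

With four annotators, a 3-to-1 split (3/4 = 0.75) is accepted, while a 2-to-2 split returns `None` and would go to discussion.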

Finally, a consistency check was conducted between our unified annotation and the annotations by Guo [12]. Our annotations passed the consistency test (Kappa = 0.95 > 0.75), demonstrating that the unified annotation results are highly consistent with previously annotated linguistic resources. For the few discrepancies, we verified the cases manually against the CCL corpus, correcting some inaccuracies in the earlier resources. This process produced a dataset capturing the co-occurrence features of verbs and aspectual markers, which forms the data foundation for the clustering experiment.

It should be noted that some verbs exhibit distinctive grammatical behavior. These include verbs such as shi4 (be), ren4wei2 (consider) and deng3yu2 (equal), which express absolute states and cannot combine with most aspectual markers. Guo [12] classified these verbs separately as Va, treating them as infinite structures and grouping them under stative verbs. He [47] referred to them as “absolute stative verbs”. Following these scholars, we directly classify these verbs as the state situation type and exclude them from the hierarchical clustering experiment.

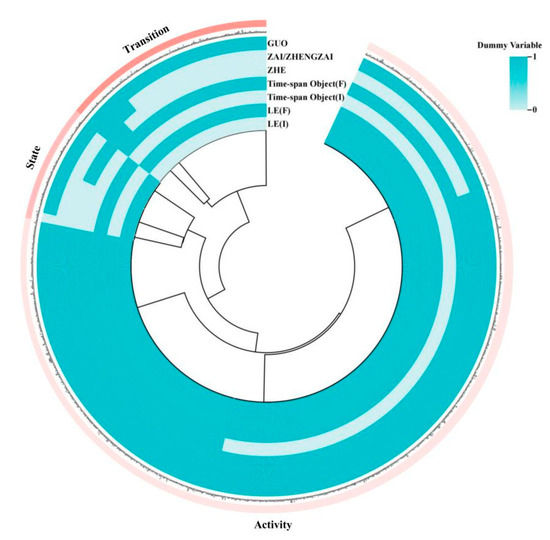

Next, we performed a grid search on the co-occurrence feature dataset. In this dataset, a dummy value of 1 indicates that a verb has the corresponding aspectual marker feature, that is, it can co-occur with that marker, while a value of 0 indicates that it does not. Based on the grid search results, we selected Euclidean distance as the distance metric and centroid linkage to compute inter-cluster distances. The hierarchical clustering results are shown in Figure 3.

Figure 3.

Hierarchical clustering dendrogram of verb situation types [48].

As shown in the figure above, the distribution patterns of verbs based on their co-occurrence features with aspectual markers exhibit significant differences. Through semantic distance-based clustering analysis, the verbs are categorized into nine subclasses, which are further merged into three main categories from the bottom up. Observing the figure clockwise from the top, the first main category consists of three subclasses, which include verbs such as zhuo1 (catch), ben1pao3 (run) and gui4 (kneel), representing typical physical actions. These verbs describe dynamic and durative situations, which we classify as activity situation types. The second main category also comprises three subclasses, including verbs such as tao3yan4 (hate), ming2bai2 (understand) and ren4shi2 (recognize), which denote mental activities or attributive relations. While these verbs also exhibit a durative feature, unlike activity situation types, they are strongly static in nature, and we classify them as state situation types. The third main category similarly consists of three subclasses, containing verbs such as tong2yi4 (agree), shi2xian4 (achieve) and ti2gao1 (improve). These verbs all indicate changes in state and possess inherent endpoints, which we classify as transition situation types.

CPCC measures how faithfully a dendrogram preserves the original pairwise distances: it is the correlation between the cophenetic distances implied by the dendrogram (the height at which two samples are first merged) and the original pairwise distances. A CPCC closer to 1 indicates a better fit between the clustering and the data. The CPCC of the hierarchical clustering shown in Figure 3 is 0.97, indicating that the clustering results are satisfactory.
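
For reference, the standard definition: CPCC is the Pearson correlation, over all sample pairs $i < j$, between the original distance $d_{ij}$ and the cophenetic distance $t_{ij}$ (the dendrogram height at which $i$ and $j$ are first joined), with $\bar{d}$ and $\bar{t}$ the respective means.

```latex
c = \frac{\sum_{i<j} (d_{ij} - \bar{d})(t_{ij} - \bar{t})}
         {\sqrt{\sum_{i<j} (d_{ij} - \bar{d})^{2} \; \sum_{i<j} (t_{ij} - \bar{t})^{2}}}
```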

The above presents the automatically generated verb situation types based on objective semantic distances. The hierarchical clustering results suggest that the tripartite classification of situation types into activity, state and transition is a scientifically sound approach. In fact, many previous scholars, such as Kenny [49], Tai [18], Pustejovsky [50] and Guo [19], have proposed similar tripartite classifications, and their categories largely correspond to the three situation types mentioned above. Building on these theoretical foundations, this study further validates the rationality of the tripartite classification of situation types through statistical methods.

4.2. Construction of the Training Dataset

Through hierarchical clustering, each verb entry has been assigned a corresponding situation type label. However, based on previous research, it is necessary to filter the data rather than retain all of it. Liu [6,7] attached situation types to the senses of verbs and retained each sense along with its situation type label. Nevertheless, the prediction accuracy of situation types using selected linguistic feature vectors was only 68.44%, and even with embedding vectors, the accuracy reached merely 72.05%. These experimental results indicate that the methods previously used to construct training datasets require improvement.

We attempted to predict situation types by retaining only the primary senses of verbs, based on the following rationale: first, primary senses are used more frequently and can better reflect the core meaning of verbs. By associating each verb’s primary sense with its corresponding situation type, more distinct feature vectors can be formed. Second, focusing on primary senses can make the dataset more concise, thereby enhancing the specificity and accuracy of the classification model. After manually selecting the primary senses, the retained data included a total of 1133 verbs, comprising 823 activity verbs, 136 state verbs and 174 transition verbs.

We employed both supervised and unsupervised methods to extract verb vectors for constructing the situation type datasets. The supervised approach included linguistic feature vectors extracted from the CCL corpus and the Sinica corpus. The unsupervised approach involved embedding models, specifically Word2vec, FastText, BERT and ChatGPT vectors.

The following Table 4 shows the parameter configurations for training Word2vec and FastText embeddings on the CCL corpus:

Table 4.

Parameter settings for different word embedding models.

Both the Word2Vec and FastText models described above were trained using the Gensim library (v4.3.3) in Python (3.12.1). Additionally, we used the pre-trained language model BERT-wwm to construct 768-dimensional feature vectors for each verb. Finally, we extracted word vectors from ChatGPT (GPT-4 version), with each verb vector comprising 1536 dimensions.

Ultimately, we obtained two linguistic feature vector matrices and four embedding vector matrices. These are used in the subsequent grouped experiments for predicting verb situation types.

4.3. Automatic Recognition of Lexical Situation Types

In this section, we present in turn the results of situation type prediction based on linguistic feature vectors and on embedding vectors, and select the best-performing model. We then evaluate the accuracy of this model on unseen language data to validate its practical value for classification.

4.3.1. Classification Experiments Based on Linguistic Feature Vectors

To evaluate how well verb linguistic feature vectors extracted from different corpora predict situation types, we fed the two linguistic feature vector matrices into three classifiers. Data processing used the pandas library (v2.2.0) and classification used models from the scikit-learn library (v1.6.0); classifier parameters were optimized by grid search, and the classification experiments used tenfold cross-validation. Table 5 and Table 6 give the parameter settings and experimental results for each classifier, respectively.
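The grid-search and tenfold cross-validation procedure can be sketched with scikit-learn as follows. The synthetic, imbalanced three-class data stand in for the linguistic feature matrices, and the parameter grids, scaler and seeds are illustrative assumptions rather than the settings in Table 5. With its default lbfgs solver, `LogisticRegression` fits a multinomial model when the target has more than two classes.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for a verb-by-feature matrix with three situation types.
X, y = make_classification(n_samples=300, n_features=20, n_informative=8,
                           n_classes=3, weights=[0.7, 0.15, 0.15],
                           random_state=0)

cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=42)

# Illustrative grids only; "clf__" routes each parameter to the pipeline step.
searches = {
    "MLR": (LogisticRegression(max_iter=2000), {"clf__C": [0.1, 1.0, 10.0]}),
    "SVM": (SVC(random_state=42),
            {"clf__C": [1.0, 10.0], "clf__kernel": ["linear", "rbf"]}),
    "ANN": (MLPClassifier(max_iter=2000, random_state=42),
            {"clf__hidden_layer_sizes": [(64,), (128,)]}),
}

results = {}
for name, (clf, grid) in searches.items():
    pipe = Pipeline([("scale", StandardScaler()), ("clf", clf)])
    search = GridSearchCV(pipe, grid, cv=cv, scoring="accuracy")
    search.fit(X, y)
    # best_score_ is the mean tenfold accuracy of the best parameter setting.
    results[name] = (search.best_params_, search.best_score_)
    print(f"{name}: {search.best_score_:.4f} {search.best_params_}")
```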

Table 5.

Parameter settings for different classifiers based on linguistic feature vectors.

Table 6.

Classification experiment results based on linguistic feature vectors.

Overall, the ANN classifier outperforms the other two classifiers in both sets of experiments. The highest accuracy, 77.23%, is achieved by the ANN classifier on the CCL feature vectors, which suggests that the nonlinear feature learning of the ANN can improve situation type prediction on complex linguistic features to some extent.

As a baseline, we used the majority class method, which always predicts the most frequent category (activity verbs, 823 of the 1133 instances in the training set, or 72.64%) in every iteration of the tenfold cross-validation. The best classifier exceeds this baseline by fewer than 5 percentage points (77.23% vs. 72.64%), indicating that linguistic feature vectors alone cannot predict situation types with high accuracy. We therefore turned to classification based on embedding vectors, aiming to find the dataset and classifier best suited to improving prediction accuracy.
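A minimal sketch of this baseline with scikit-learn's `DummyClassifier` follows, assuming stratified folds (the text does not say how folds were drawn). Only the class counts come from the text; the features are dummies because a majority-class predictor ignores them.

```python
import numpy as np
from sklearn.dummy import DummyClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Class counts reported for the primary-sense dataset.
counts = {"activity": 823, "state": 136, "transition": 174}
y = np.array([label for label, n in counts.items() for _ in range(n)])
X = np.zeros((len(y), 1))  # features are irrelevant to a majority-class baseline

# Always predicts the most frequent class of each training fold.
baseline = DummyClassifier(strategy="most_frequent")
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=42)
fold_acc = cross_val_score(baseline, X, y, cv=cv, scoring="accuracy")

print(f"majority share: {counts['activity'] / len(y):.4f}")
print(f"10-fold baseline accuracy: {fold_acc.mean():.4f}")
```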

4.3.2. Classification Experiments Based on Embedding Vectors

Given the complexity of predicting situation types, we explored semantic representations based on embedding vectors, aiming to capture the latent deep features in the data and thereby improve the model's accuracy across situation types. Of the four models, Word2Vec and FastText are early word vector models that have played a significant role in natural language processing research. With the rapid advancement of deep learning, more advanced models have emerged and become mainstream, so we also included BERT and ChatGPT for comparison. The experimental framework followed the procedure in Section 3.3.1: pandas DataFrame structures standardized feature processing, and scikit-learn's GridSearchCV class optimized the classifier parameters. To obtain a rigorous estimate of generalization performance and to guard against overly optimistic results caused by overfitting, we used tenfold cross-validation, and random seeds were fixed for all three classifiers for reproducibility. Table 7 and Table 8 present the classifier parameter settings for each dataset and the experimental results, respectively.
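The DataFrame-based feature processing can be sketched as below. The helper `build_feature_frame`, the 8-dimensional random toy vectors (real matrices have 100, 768 or 1536 columns) and the column names are hypothetical; the point is that each embedding row stays aligned with its situation type label and that verbs lacking an embedding are dropped.

```python
import numpy as np
import pandas as pd


def build_feature_frame(vectors: dict[str, np.ndarray],
                        labels: dict[str, str]) -> pd.DataFrame:
    """Align verb embeddings with situation type labels in one DataFrame.

    Verbs without an embedding are dropped so that X and y stay aligned.
    """
    verbs = [v for v in labels if v in vectors]
    frame = pd.DataFrame(np.vstack([vectors[v] for v in verbs]), index=verbs)
    frame.columns = [f"dim_{i}" for i in range(frame.shape[1])]
    frame["situation_type"] = [labels[v] for v in verbs]
    return frame


rng = np.random.default_rng(42)  # fixed seed, mirroring the fixed classifier seeds
toy_vectors = {v: rng.normal(size=8) for v in ["看", "知道", "死"]}
toy_labels = {"看": "activity", "知道": "state", "死": "transition",
              "跑": "activity"}  # "跑" has no vector and will be dropped

frame = build_feature_frame(toy_vectors, toy_labels)
X = frame.drop(columns="situation_type").to_numpy()
y = frame["situation_type"].to_numpy()
```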

Table 7.

Parameter settings for different classifiers based on embedding vectors.

Table 8.

Classification experiment results based on embedding vectors.

Comparing Table 6 with Table 8 shows that embedding vectors yield substantially higher accuracy than linguistic feature vectors. Table 8 shows that FastText word vectors give the best tenfold cross-validation results, achieving higher accuracy with both the SVM and ANN classifiers than the other three embedding matrices. In particular, the ANN classifier reaches an accuracy of 99.56%, 26.92 percentage points above the majority class baseline (72.64%). This performance may be attributable to FastText's use of subword (character n-gram) information, which captures word morphology.

The unusually high accuracy obtained with FastText word vectors made it necessary to test whether the model was overfitting. We first took a portion of the data out of the original training set and removed its situation type labels to form a small unlabeled prediction set. The remaining data formed a new training set, on which we trained the classifier on FastText vectors and predicted the situation types of the prediction set; we then evaluated these predictions manually as a preliminary check for overfitting. In addition, we extracted unlabeled verbs from dictionaries and collected commonly used colloquial words to create several more challenging prediction sets without situation type labels. Using the original FastText vector dataset as the training set, we ran multiple rounds of prediction on these sets. The model's accuracy on the small prediction sets was generally about 20 percentage points above the baseline, consistent with the classification experiments on the complete original dataset and showing no apparent signs of overfitting. This suggests that the FastText-based model generalizes well in situation classification while maintaining relatively high accuracy, and it supports the effectiveness of the dataset we used.
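The held-out check can be approximated as follows. In the study, predictions on the held-out set were evaluated manually; in this sketch, which uses synthetic data and an assumed 10% split, network size and seeds, the hidden labels are simply compared with the predictions. The quantity of interest is the gap between cross-validated accuracy and held-out accuracy.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import (StratifiedKFold, cross_val_score,
                                     train_test_split)
from sklearn.neural_network import MLPClassifier

# Synthetic stand-in for the FastText vector dataset.
X, y = make_classification(n_samples=400, n_features=30, n_informative=10,
                           n_classes=3, weights=[0.7, 0.15, 0.15],
                           random_state=0)

# Hold out 10% as a "prediction set" whose labels are hidden from training.
X_train, X_hold, y_train, y_hold = train_test_split(
    X, y, test_size=0.1, stratify=y, random_state=42)

clf = MLPClassifier(hidden_layer_sizes=(64,), max_iter=2000, random_state=42)
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=42)
cv_accuracy = cross_val_score(clf, X_train, y_train, cv=cv).mean()

# Refit on the whole remaining training set, then predict the held-out verbs.
clf.fit(X_train, y_train)
holdout_accuracy = (clf.predict(X_hold) == y_hold).mean()

# A large positive gap (CV accuracy far above held-out accuracy) would
# suggest overfitting to the training data.
gap = cv_accuracy - holdout_accuracy
print(f"CV: {cv_accuracy:.3f}  held-out: {holdout_accuracy:.3f}  gap: {gap:.3f}")
```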

Although the other models also performed well, none matched the accuracy of the FastText word vectors, which indicates that the models differ in feature extraction capability and depth of contextual understanding for this task. Our objective is not to test whether more sophisticated models yield higher accuracy, but to select the model that identifies situation types most accurately. Taking all of the above into account, we chose the FastText vector dataset as the training set, combined with an ANN classifier, for the automatic recognition of verb situation types in unseen language resources.

4.3.3. Automatic Recognition of Situation Types of Unknown Language Data

We extracted 2000 high-frequency verbs from the CCL corpus for the prediction experiments and obtained their word vectors from the pre-trained FastText model to form the prediction set. The prediction set was divided into several batches for automatic recognition. After each batch was predicted, four annotators verified the predicted situation types and then checked their agreement; once confirmed, the batch was merged into the training set. By expanding the training set gradually in this way, we continuously improved the model's predictive performance.
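The batch-wise predict, verify and merge loop can be sketched as below. The function `expand_training_set`, the `verify` callback, the batch size and the network size are hypothetical; the four annotators and their consistency checks are simulated by a toy function that returns the known true labels and records how many predictions it had to correct.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.neural_network import MLPClassifier


def expand_training_set(X_train, y_train, X_new, batch_size, verify, seed=42):
    """Predict X_new batch by batch, have each batch verified, then merge it.

    `verify(start, predicted)` stands in for the annotators: it receives the
    batch's start index and predicted labels, and returns confirmed labels.
    """
    X_train, y_train = np.asarray(X_train), np.asarray(y_train)
    for start in range(0, len(X_new), batch_size):
        batch = X_new[start:start + batch_size]
        # Retrain on everything confirmed so far before predicting the batch.
        clf = MLPClassifier(hidden_layer_sizes=(64,), max_iter=2000,
                            random_state=seed)
        clf.fit(X_train, y_train)
        predicted = clf.predict(batch)
        confirmed = verify(start, predicted)
        X_train = np.vstack([X_train, batch])
        y_train = np.concatenate([y_train, confirmed])
    return X_train, y_train


X, y = make_classification(n_samples=300, n_features=20, n_informative=8,
                           n_classes=3, random_state=0)
X_seed, y_seed, X_new, y_new = X[:200], y[:200], X[200:], y[200:]

corrections = []  # number of labels the toy "annotators" corrected per batch


def oracle_verify(start, predicted):
    """Toy annotator: replaces predictions with the known true labels."""
    truth = y_new[start:start + len(predicted)]
    corrections.append(int((predicted != truth).sum()))
    return truth


X_full, y_full = expand_training_set(X_seed, y_seed, X_new, batch_size=25,
                                     verify=oracle_verify)
```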

After prediction was complete, we had situation type labels for all 2000 verbs. Merging these data with the original training set produced the complete dataset for this experiment: 3133 verbs with their FastText word vectors and situation type labels, comprising 1839 activity verbs, 349 state verbs and 945 transition verbs.

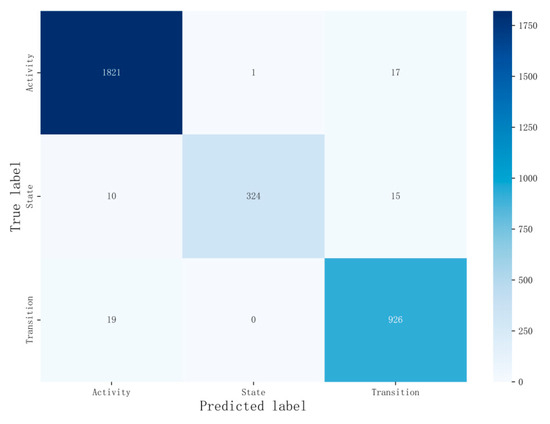

To verify that the model still recognizes situation types with high accuracy on the expanded dataset, we again ran tenfold cross-validation with an ANN classifier on the merged dataset. Table 9 and Figure 4 present the detailed results.

Table 9.

Parameter configuration and experimental results of verb prediction task on 3133 samples.

Figure 4.

Confusion matrix analysis for verb classification on 3133 linguistic samples.

Table 9 shows that the model performs very well on all evaluation metrics. Precision is 98.61% and Recall is 96.84%, indicating that the model is reliable and effective in recognizing situation types. The F1 score, which combines Precision and Recall, measures how balanced the model's performance is; its value of 97.68% indicates strong overall performance on the situation type prediction task. Accuracy is 98.37%: of the 3133 verb samples, fewer than 2% were assigned the wrong situation type. For this dataset, the majority class baseline is 58.70% (1839 of 3133 verbs are activity verbs), so accuracy rises by nearly 40 percentage points, demonstrating the model's strong predictive ability for verb situation types and supporting the chosen combination of dataset and algorithm.
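Table 9 does not state how Precision, Recall and F1 were averaged over the three classes. Assuming macro averaging, the sketch below computes these metrics with scikit-learn's `precision_recall_fscore_support` on ten toy labels (not the paper's predictions). Under macro averaging, F1 is the unweighted mean of the per-class F1 scores, which in general differs from the harmonic mean of macro Precision and macro Recall.

```python
import numpy as np
from sklearn.metrics import accuracy_score, precision_recall_fscore_support

LABELS = ["activity", "state", "transition"]

# Toy gold labels and predictions, for illustration only.
y_true = ["activity"] * 6 + ["state"] * 2 + ["transition"] * 2
y_pred = (["activity"] * 5 + ["transition"]
          + ["state", "activity"] + ["transition", "transition"])

# Per-class scores (average=None) and their unweighted means (average="macro").
per_p, per_r, per_f1, support = precision_recall_fscore_support(
    y_true, y_pred, labels=LABELS, average=None)
macro_p, macro_r, macro_f1, _ = precision_recall_fscore_support(
    y_true, y_pred, labels=LABELS, average="macro")
accuracy = accuracy_score(y_true, y_pred)

# Harmonic mean of macro P and macro R, shown for comparison with macro F1.
harmonic_of_macros = 2 * macro_p * macro_r / (macro_p + macro_r)
print(f"P={macro_p:.4f} R={macro_r:.4f} F1={macro_f1:.4f} "
      f"2PR/(P+R)={harmonic_of_macros:.4f} Acc={accuracy:.4f}")
```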

The confusion matrix in Figure 4 gives the detailed classification performance. The model predicts activity verbs very well: only 1 activity verb is misclassified as a state verb and 17 as transition verbs. Accuracy on state verbs is lower than on activity verbs, with 10 samples misclassified as activity verbs and 15 as transition verbs. Performance on transition verbs is relatively good, with 19 samples misclassified as activity verbs, although it still falls below the performance on activity verbs. Overall, the model recognizes activity and transition situations well, while its performance on state situations lags slightly behind. Nevertheless, the results confirm the model's high overall accuracy and provide reliable support for the automatic recognition of situation types.
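A pooled confusion matrix of the kind shown in Figure 4 can be built from out-of-fold predictions. The sketch below assumes `cross_val_predict` with stratified tenfold splits and uses synthetic, imbalanced data; the text does not specify how Figure 4 was aggregated. Dividing the diagonal by the row sums gives per-class recall, the quantity behind the observation that state verbs are recognized less reliably.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import StratifiedKFold, cross_val_predict
from sklearn.neural_network import MLPClassifier

LABELS = np.array(["activity", "state", "transition"])

# Synthetic, imbalanced stand-in for the 3133-verb FastText dataset.
X, y_idx = make_classification(n_samples=600, n_features=30, n_informative=10,
                               n_classes=3, weights=[0.6, 0.1, 0.3],
                               random_state=0)
y = LABELS[y_idx]

clf = MLPClassifier(hidden_layer_sizes=(64,), max_iter=2000, random_state=42)
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=42)
# Each sample is predicted by the model trained on the other nine folds.
y_pred = cross_val_predict(clf, X, y, cv=cv)

cm = confusion_matrix(y, y_pred, labels=LABELS)  # rows: true, columns: predicted
per_class_recall = cm.diagonal() / cm.sum(axis=1)
for label, row, rec in zip(LABELS, cm, per_class_recall):
    print(f"{label:<10} {row}  recall={rec:.3f}")
```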

At this point, a practical model for high-accuracy prediction of Chinese verb situation types has been trained. Many scholars have previously proposed models for the automatic recognition of situation types in various languages; compared with these systems, our model improves on several key aspects:

Firstly, at the level of prediction, previous research focused mainly on whole sentences, which lets models exploit the rich contextual features within sentences to reach high accuracy easily. This approach, however, does not work for basic lexical units, and predicting the situation types of verbs is considerably harder. This study starts from verbs and successfully predicts their situation types, which opens the possibility of extending prediction to higher-level situation types in the future. Moreover, the accuracy of the models in previous studies was not high and did not exceed that achieved in our verb-level experiments.

Secondly, regarding dataset construction, previous researchers did not take sufficient measures to reduce subjectivity during annotation. For instance, the annotation of Zarcone and Lenci [28] was carried out by a single annotator, while Xu [26], although conducting Kappa consistency tests after annotation, involved only two annotators. Previous datasets also suffered from limited transparency in their construction and from small size. In contrast, the annotation process in this study followed a more standardized workflow and methodology, involving four professional annotators and multidimensional consistency checks to ensure accuracy. We have also documented the construction of the training dataset for our prediction experiments in detail, which facilitates reproduction by other researchers.

Thirdly, research on predicting situation types has been conducted predominantly on English, with a few studies on other languages such as Italian [28]. Chinese, the language with the largest number of native speakers, has received relatively little attention in related studies. To our knowledge, the only other researcher predicting Chinese situation types is Xu [26], whose study addresses only sentence-level situation types. Our paper thus extends research on predicting the situation types of Chinese verbs.

Fourthly, the methods adopted in previous studies are relatively outdated. On the one hand, the classifiers used in earlier aspectual classification work are not advanced enough; Cao [25] and Xu [26], for instance, mainly employed SVM classifiers. ANN classifiers often perform better in classification tasks and played a crucial role in our prediction experiments, yet they are rarely used in existing prediction studies. On the other hand, previous approaches to constructing vector matrices are rather simplistic, relying mostly on linguistic features and co-occurrence frequency matrices as training data, with limited use of high-dimensional embedding vectors; they therefore cannot effectively assess how well large language models capture semantic information.

In conclusion, the Chinese verb situation type prediction model trained in this paper represents an advance over previous approaches in several respects.

5. Conclusions

This paper presents a study aimed at high-accuracy prediction of verb situation types, detailing the entire workflow: constructing a verb lexicon, annotating co-occurrence features, automatically generating a situation type system and implementing automatic situation type identification. Rigorous methods, including consistency checks, hierarchical clustering and tenfold cross-validation, ensured the scientific validity of the experimental process and ultimately answered the two research questions raised in this paper.