Abstract

Conveyor belts are critical in many industries, particularly in the barrier eddy current separator (BECS) systems used in recycling. However, hidden issues, such as belt misalignment, excessive heat that can lead to fire, and the presence of sharp or irregularly shaped materials, reduce operational efficiency and pose serious threats to the health and safety of personnel on the production floor. This study presents an intelligent monitoring and protection system for BECS conveyor belts designed to safeguard both machinery and workers. A thermal camera continuously monitors the surface temperature of the conveyor belt, especially in the area above the magnetic drum, where unwanted ferromagnetic materials can cause abnormal heating and potential fire, and detects temperature anomalies in this critical zone. Detecting these risks early triggers audio–visual alerts and IoT-based warning messages to technicians, helping to prevent fire-related injuries and minimize emergency response time. Simultaneously, a machine vision module autonomously detects and corrects belt misalignment, eliminating the need for manual intervention and reducing worker exposure to moving mechanical parts. Additionally, a line-scan camera integrated with the YOLOv11 AI model analyzes the shape of materials on the conveyor belt, distinguishing between rounded and sharp-edged objects. This improves the accuracy of material separation and reduces the likelihood of injuries caused by the impact or ejection of sharp fragments during maintenance or handling. The YOLOv11n-seg model implemented in this system achieved a segmentation mask precision of 84.8% and a recall of 84.5% in industrial evaluations.
Based on this high segmentation accuracy and consistent detection of sharp particles, the system is expected to substantially reduce the frequency of sharp object collisions with the BECS conveyor belt, thereby minimizing mechanical wear and potential safety hazards. By integrating these intelligent capabilities into a compact, cost-effective solution suitable for real-world recycling environments, the proposed system contributes significantly to improving workplace safety and equipment longevity. This project demonstrates how digital transformation and artificial intelligence can play a pivotal role in advancing occupational health and safety in modern industrial production.

1. Introduction

Metal recycling plays a crucial role in industrial waste management and sustainable development by minimizing environmental pollution and conserving natural resources [1]. Efficient separation of non-ferrous metals, such as aluminum and copper, from mixed waste is essential for maintaining high recycling quality and economic viability. One widely used method is the barrier eddy current separator (BECS), which relies on electromagnetic induction: the rotating magnetic drum induces eddy currents in non-ferrous metals, producing repulsive forces that eject them away from non-conductive materials, such as plastic and wood [2,3,4,5,6,7]. In BECS systems, the conveyor belt not only transports materials but also influences separation accuracy, as even minor disruptions can affect the material trajectory and its interaction with the magnetic drum.

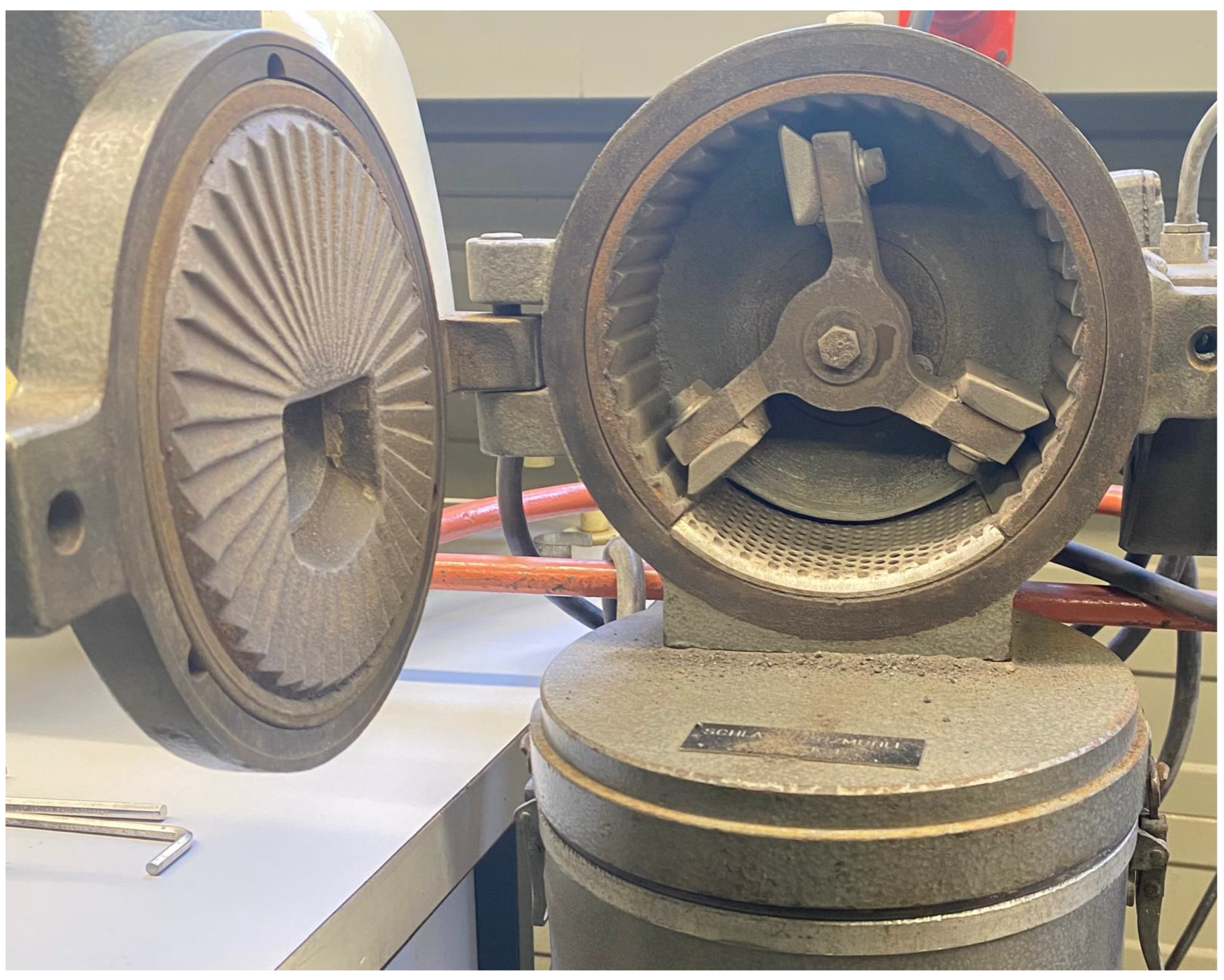

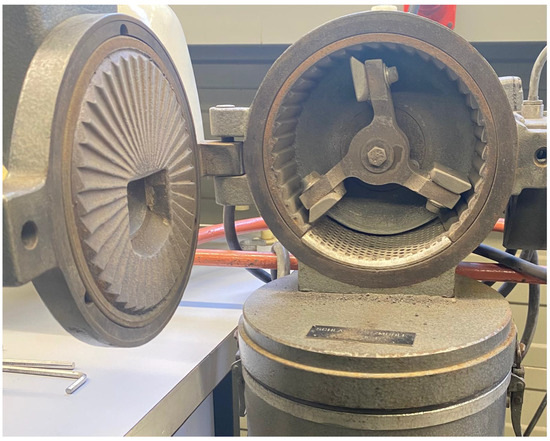

Industrial conveyor belts are exposed to multiple challenges, including the presence of sharp-edged or irregular materials, which typically result from malfunctioning upstream processes, such as milling [8,9,10]. These sharp fragments can damage belt surfaces, reduce separation accuracy, and pose hazards during handling and maintenance. Additionally, irregular material shapes disrupt magnetic field uniformity, lowering eddy current efficiency [11]. Therefore, the early identification of such anomalies helps optimize system performance and enhances workplace safety. Figure 1 illustrates the internal structure of the milling machine used in the preprocessing stage to grind and round metal particles. Its internal blades are designed to eliminate sharp edges, ensuring smoother fragments are conveyed to the eddy current separator.

Figure 1.

Internal view of the industrial milling machine that is used in the upstream process. The image shows the rotating blades and grooved chamber responsible for grinding and smoothing sharp-edged metal fragments before they enter the eddy current separator. The presence of sharp particles on the conveyor belt may indicate a malfunction or wear in this unit, highlighting its relevance to the proposed monitoring system.

This study presents an intelligent monitoring and protection system for BECS conveyor belts, utilizing image processing and artificial intelligence (AI) to address the challenges above. Integrating AI into safety-critical systems reflects a growing trend in recent research, such as the development of AI-based workbenches for testing methodologies in occupational health and safety contexts [12].

The proposed system includes a thermal camera for detecting abnormal heat spots and preventing fire hazards, a low-power image processing unit for automatic belt misalignment correction, and a line-scan camera integrated with the YOLOv11 model [13] to evaluate the quality of materials transported on the belt. The system aims to protect industrial equipment and human health by reducing manual intervention and enabling the early detection of risks [14].

The aim of this research was to design and implement an intelligent, multi-module monitoring system that improves the safety, efficiency, and separation performance of conveyor belts in BECS systems.

The specific objectives of this study were as follows:

- To detect and classify material shapes (sharp vs. smooth) using a YOLOv11-based vision system to identify upstream process anomalies and reduce conveyor damage.

- To design and test a misalignment detection and correction module using image processing and stepper motors to maintain accurate belt alignment.

- To implement a thermal monitoring module using an MLX90640 thermal camera to detect abnormal temperature rises caused by trapped ferrous materials.

- To integrate an IoT-based alert system for notification and remote response to safety hazards.

1.1. Background and Problem

In the recycling industry, conveyor belt safety is essential for both optimal performance and workplace security. Conveyor belts are not simply transport systems; in many industrial applications, such as BECS, they are integral to separating non-ferrous metals from other materials. The proper functioning of a conveyor belt in such systems directly affects the accuracy of material separation, production efficiency, and operational costs.

One of the most critical risks in barrier eddy current separator (BECS) systems is the accidental presence of ferromagnetic materials, such as iron, on the conveyor belt. These systems are designed to process non-ferromagnetic materials, and even a single iron-bearing object can disrupt the separation process, generate excessive friction, and create sparks when exposed to the magnetic drum. Figure 2 illustrates an actual instance of this hazard: a sizeable iron object mistakenly entered the system and became a concentrated metallic hotspot, posing a direct fire threat. This situation is especially hazardous because typical BECS systems lack automated mechanisms to detect and address such anomalies. Furthermore, since these machines often operate continuously, the magnetic drum accumulates significant heat over time, increasing the likelihood of fire. Combined with common issues, such as belt misalignment and surface wear, this creates a hazardous environment that threatens operational efficiency and technician safety [15,16,17,18]. A robust temperature monitoring and anomaly detection system is therefore essential for maintaining system stability and preventing dangerous incidents.

Figure 2.

A ferromagnetic object (highlighted with an arrow) is mistakenly present on the conveyor belt, posing a potential fire risk due to its interaction with the magnetic drum. Detecting such hazardous objects is a key function of the proposed thermal monitoring system.

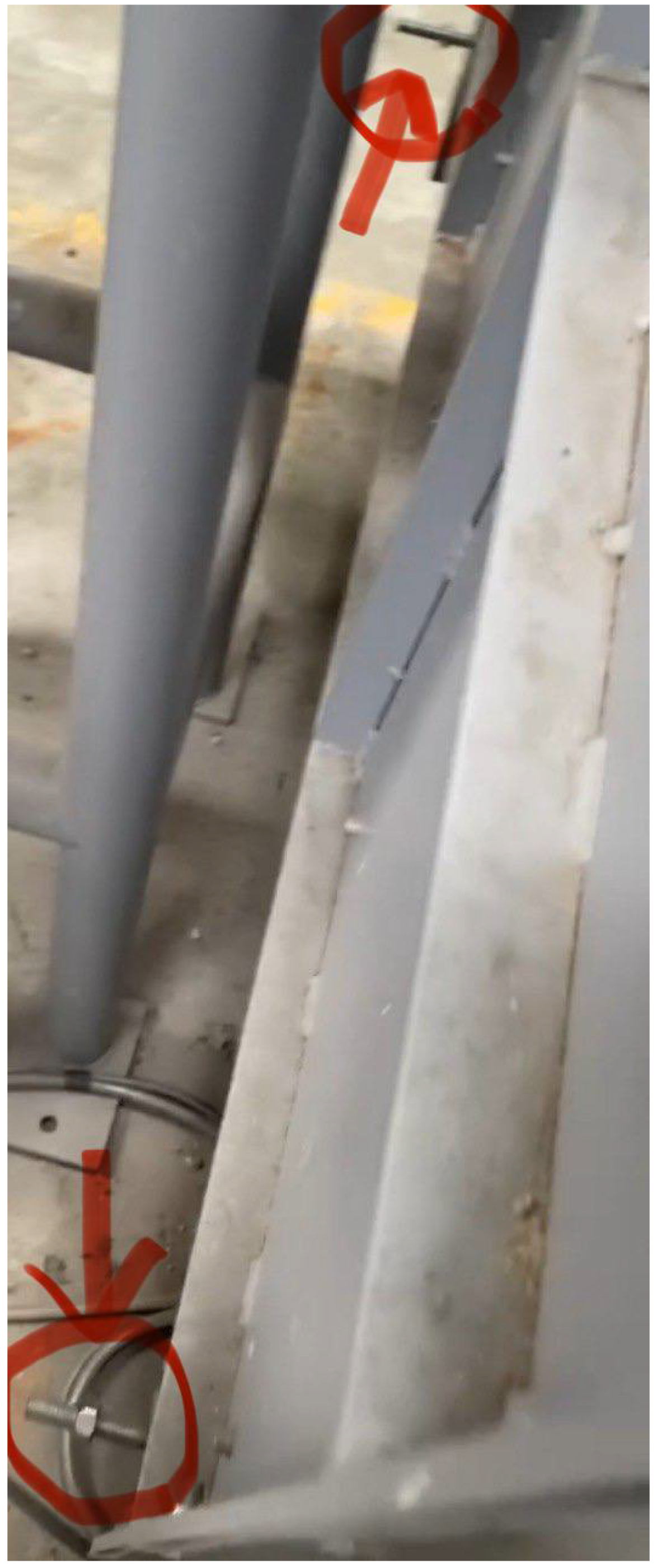

Ensuring conveyor belt safety extends beyond simple material transportation. In advanced recycling systems like barrier eddy current separators, the conveyor belt is a critical component of a complex sorting process, where any malfunction can impact the overall system's efficiency and precision. For instance, belt misalignment can lead to inaccurate material separation and increased waste generation [18,19,20,21,22,23]. Misalignment in BECS systems does not merely threaten mechanical damage, such as belt wear or rupture; it also disrupts the material separation process itself. This is because the conveyor belt in this context is not a passive transport mechanism but one of the three core components of the BECS machine, and it actively contributes to separation performance. Misalignment changes the material trajectory and alters the interaction timing between the materials and the rotating magnetic drum, reducing separation quality. There is currently no automated detection system for belt misalignment in BECS machines, so correction is done manually: if technicians on site visually notice the deviation, they adjust two external metal levers connected to one of the conveyor rollers. As shown in Figure 3, these levers require considerable manual force and can only be adjusted while the system is running. This increases physical strain on technicians and often requires more than one person to realign the belt properly. This limitation highlights the urgent need for an intelligent misalignment detection and correction system to improve safety, reduce physical effort, and preserve separation efficiency.

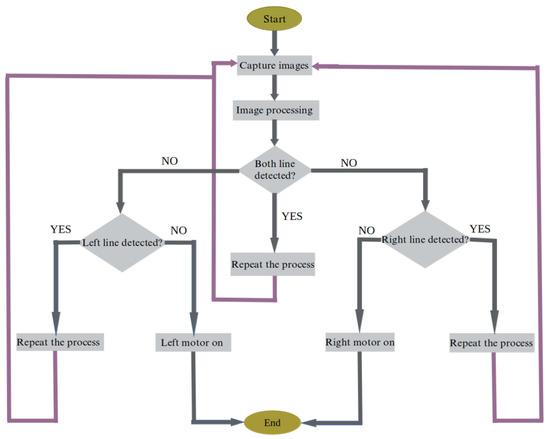

Figure 3.

View of the mechanical levers and support screws at the base of the conveyor belt system. These components (highlighted with red arrows) are responsible for manual alignment adjustments. In the proposed system, this mechanism is replaced with a machine vision-driven motorized correction module to ensure automatic belt realignment, thereby reducing manual intervention and operational delays.

One of the significant challenges in recycling systems is the damage caused by sharp-edged materials [18,20,21,23,24,25,26,27,28,29,30,31,32,33,34]. The magnetic drum separates smooth, rounded materials considerably more efficiently, because their interaction with the magnetic field is more predictable and easier to model. In contrast, sharp or angular fragments damage the conveyor belt and upstream equipment, and they reduce the drum's separation accuracy because their trajectory, rotation, and magnetic response are more complex and less predictable. During recycling, metallic fragments and industrial plastic waste with sharp edges can tear or excessively wear down the conveyor belt.

If this damage is not detected early, it can eventually lead to complete belt failure, increased replacement costs, and reduced overall system efficiency. Additionally, sharp materials often indicate inefficiencies in upstream machinery, such as shredders or crushers, which may require further optimization. Failure to detect and address these issues can result in unexpected downtime, lower productivity, and increased operational costs. Figure 4 shows a sharp metal blade that was accidentally placed or mistakenly left on the conveyor belt. Such objects can have serious consequences, including surface damage to the belt, disruption of the separation process, and potential safety hazards for operators. This highlights the importance of quickly detecting and removing sharp materials from the conveyor path.

Figure 4.

Sharp object on the conveyor belt.

These risks make intelligent monitoring and control of conveyor belts essential, using technologies such as machine vision, deep learning, and the Internet of Things (IoT). An intelligent system can detect and correct belt misalignment, monitor surface temperatures to prevent overheating and fires, and analyze material properties to optimize separation processes. By integrating these technologies, potential failures can be identified early, downtime is minimized, and worker safety risks are significantly reduced. Ultimately, a well-maintained and intelligent conveyor belt system not only extends equipment lifespan and lowers maintenance costs, but also prevents severe risks, such as fire hazards, mechanical failures, and inefficient material sorting.

1.2. Existing Body of Knowledge and the State of the Art

Conveyor belts are fundamental to industrial applications, such as recycling, mining, and material handling systems. In BECS systems, conveyor belts are not merely transport mechanisms but integral elements in effectively separating non-ferrous metals. Their reliability, efficiency, and durability are often compromised due to mechanical failures, misalignment, material-induced damage, and fire hazards, making monitoring and maintenance critical for industrial operations.

Several studies have examined different material separation techniques to optimize sorting efficiency. Sivamohan and Forssberg (1991) [35] explored electronic separation methods, including optical sorting, radiometric techniques, and X-ray-based methods, to enhance mineral separation efficiency. Their research emphasized preconcentration strategies to reduce processing costs and improve recovery rates.

However, their approach focused on electrical and density-based properties rather than materials’ physical shape and size, which is a critical factor in BECS performance. Another study, Elemental Characterization of Electronic Waste (2013–2023), reviewed various chemical analysis techniques, such as ICP-MS, ICP-OES, and XRF, to determine the elemental composition of e-waste. These methods are essential for identifying and extracting rare metals, but they fail to address how the physical attributes of materials affect separation efficiency.

Unlike these studies, the proposed project integrates machine vision and deep learning (YOLOv11) to analyze material shape and size, with the aim of improving sorting precision and efficiency in BECS systems.

Multiple studies have investigated the structural integrity and damage mechanisms affecting conveyor belts. The study Failure Analysis of Rubber–Textile Conveyor Belts analyzed the irreversible internal damage caused by sharp-edged materials. Researchers used computerized microtomography to examine belt deterioration, demonstrating how repeated impact loads lead to progressive structural weakening. However, this approach is reactive as it only examines failures after they occur.

The present study offers a proactive solution, leveraging AI-based detection to prevent damage before it happens. Similarly, Longitudinal Tear Early-Warning in Conveyor Belts Using Infrared Vision introduced a system that detects early signs of belt tears using thermal imaging and Gaussian filtering. While effective in damage detection, it does not prevent hazardous materials from entering the system, a gap that the proposed research aims to address. This project can reduce maintenance costs and operational downtime by identifying and filtering out problematic materials before they reach the belt.

Another study, Failure Analysis of Belt Conveyor Damage from Falling Materials, used microtomographic imaging to examine the hidden structural damage caused by heavy impacts. While useful for post-damage analysis, this technique lacks industrial applicability. In contrast, the proposed system employs deep learning and machine vision to prevent material-induced damage before it affects the conveyor belt, ensuring continuous, safe operation.

Recent advancements in AI-powered monitoring have led to new conveyor belt maintenance solutions. The study Proactive Measures to Prevent Conveyor Belt Failures Using Deep Learning introduced an AI-based detection system utilizing a lightweight version of YOLOv4 to identify foreign objects on conveyor belts. While similar to the current project's use of machine vision and deep learning, it focused primarily on detecting large objects, such as metal rods and coal fragments.

The present research, however, is tailored for fine material sorting in BECS systems, particularly analyzing shape and size for optimal separation. Another relevant study, Types and Causes of Conveyor Belt Damage, classified various failure types, including misalignment, wear, and delamination, and proposed predictive maintenance strategies.

While valuable for long-term belt monitoring, this research does not offer an intervention strategy. In contrast, the proposed project implements a proactive filtering mechanism, preventing the root causes of belt degradation by eliminating problematic materials before they interact with the conveyor.

Fire detection and prevention in industrial conveyor belts have also been widely studied. Traditional fire detection systems, such as smoke and gas sensors, are commonly used along conveyor belts to detect dangerous gases or smoke and trigger alarms [36,37,38,39].

However, these systems are prone to false alarms in dusty environments, reducing worker trust and potentially delaying responses during actual fire incidents. Alternative fire suppression techniques, such as water misting and anti-fire foams [37,39], mainly focus on firefighting rather than early detection. Additionally, Distributed Temperature Sensing (DTS) systems [40,41] provide accurate temperature monitoring but lack visual data, making them unsuitable for smaller BECS conveyor setups due to their complex installation and high costs.

In contrast, recent studies [42,43] highlight the advantages of thermal cameras for fire detection. Unlike traditional sensors, thermal cameras generate heat maps that enable precise detection of abnormal heat patterns. The proposed project leverages thermal imaging to detect overheating from ferromagnetic contamination, integrating intelligent remote notifications via Telegram to ensure timely intervention, even in unmanned industrial setups.
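As a rough illustration of the heat-map approach described above, the sketch below scans only the drum zone of a thermal frame and, when enough pixels exceed a threshold, builds a Telegram Bot API `sendMessage` request. It is a minimal sketch under stated assumptions, not the system's implementation: the 24 × 32 frame matches the MLX90640's native resolution, but the zone boundaries, the 60 °C threshold, the minimum hot-pixel count, and the names `check_drum_zone` and `telegram_alert_url` are hypothetical. The URL is only constructed here; sending it would require an HTTP client and a real bot token.

```python
from dataclasses import dataclass
from typing import List, Tuple
from urllib.parse import urlencode

Frame = List[List[float]]  # rows x columns of temperatures in degrees Celsius


@dataclass
class HotspotResult:
    max_temp: float   # hottest pixel inside the drum zone (°C)
    hot_pixels: int   # pixels at or above the threshold
    alarm: bool       # True when hot_pixels >= min_pixels


def check_drum_zone(frame: Frame, rows: Tuple[int, int], cols: Tuple[int, int],
                    threshold_c: float = 60.0, min_pixels: int = 2) -> HotspotResult:
    """Scan only the drum zone (half-open row/column ranges) of one thermal frame.

    The threshold and min_pixels defaults are hypothetical placeholders.
    """
    r0, r1 = rows
    c0, c1 = cols
    zone = [t for row in frame[r0:r1] for t in row[c0:c1]]
    if not zone:
        raise ValueError("drum zone is empty")
    hot = sum(1 for t in zone if t >= threshold_c)
    return HotspotResult(max_temp=max(zone), hot_pixels=hot, alarm=hot >= min_pixels)


def telegram_alert_url(token: str, chat_id: str, result: HotspotResult) -> str:
    """Build (but do not send) a Telegram Bot API sendMessage request URL."""
    text = (f"BECS drum-zone alarm: {result.hot_pixels} hot pixels, "
            f"max {result.max_temp:.1f} C")
    query = urlencode({"chat_id": chat_id, "text": text})
    return f"https://api.telegram.org/bot{token}/sendMessage?{query}"
```

Requiring at least two hot pixels, rather than one, is a simple guard against single-pixel sensor noise; in practice the threshold would need to be calibrated against the drum's normal operating temperature.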

Studies on conveyor belt misalignment have explored various deep learning models, such as YOLOv5 and ARIMA-LSTM hybrid models, for deviation detection [44,45]. While highly accurate, these deep learning models demand substantial computational resources, requiring high-power GPUs, which may be cost-prohibitive in industrial environments.

Additionally, most studies provide only detection mechanisms without automated correction, whereas the proposed project incorporates deviation detection and correction to maintain optimal belt alignment. The study Analysis of Devices to Detect Longitudinal Tear on Conveyor Belts reviewed mechanical, electromagnetic, optical, and magnetic field-based solutions for tear detection [45,46]. While effective for monitoring belt conditions, these systems are expensive, complex, and lack intervention capabilities. The present project, however, offers a cost-effective, AI-powered alternative with material sorting and belt protection.

A recent study also introduced PiVisionSort, a cost-effective material recognition system for conveyor belts that integrates image processing and lightweight machine learning techniques. The system, built on a Raspberry Pi, employs OpenCV, K-means clustering, and decision trees to identify and distinguish metals, such as aluminum, copper, and brass, under industrial conditions. Its reported accuracy of up to 100% in metal detection on low-cost Raspberry Pi hardware underlines its relevance as a benchmark for lightweight, edge-based solutions in smart recycling environments [47].

Most existing studies have focused on elemental analysis, post-damage assessment, and predictive maintenance. The proposed project advances the field by integrating AI-driven monitoring and prevention for conveyor belts in BECS systems. The key contributions of this study include:

- Machine vision-based belt misalignment correction.

- Thermal imaging for early detection of overheating and fire hazards.

- Deep learning-based material analysis (YOLOv11) to identify shape and size, enhancing separation efficiency and belt longevity.

- IoT-enabled remote alerts for proactive system monitoring.

By combining machine vision, deep learning, and IoT-based automation, this research offers a cost-effective, lightweight solution that improves conveyor belt durability and enhances BECS separation system efficiency. Unlike traditional reactive approaches, this study provides a preventive mechanism, ensuring continuous industrial operations, reduced downtime, and enhanced worker safety.

1.3. Gap Detection

Previous research on the performance and safety of industrial conveyor belts has primarily focused on analyzing physical damage and predicting failures. Many studies have examined traditional methods, such as microtomographic analysis, magnetic sensors, X-ray imaging, and infrared monitoring systems, to detect conveyor belt failures [44,45].

While these methods effectively identify physical damage after it has occurred, they do not provide a preventive approach to stop harmful materials from reaching the conveyor belt in the first place. This represents a significant research gap as the primary focus of existing studies has been on damage identification and analysis rather than proactive prevention.

In several industries, particularly those using eddy current separator (ECS) systems, preventing such failures could reduce maintenance costs and enhance system efficiency, yet this aspect remains largely unexplored. Studies that have investigated intelligent monitoring systems have primarily focused on detecting conveyor belt misalignment or identifying large foreign objects, such as screws and metal rods [44,45]. These research efforts have employed deep learning models like YOLOv4 and YOLOv5 for object detection.

However, they do not address the critical issue of small material characteristics and their impact on non-ferrous metal separation accuracy. In ECS systems, improperly shaped or oversized materials (such as sharp-edged or irregularly shaped pieces) negatively affect separation efficiency and can cause mechanical damage to the conveyor belt and other system components [22].

While previous research has advanced object detection models, no study has specifically targeted a shape and size analysis of trim materials and their influence on the ECS process. Another significant research gap in conveyor belt safety relates to fire hazard detection and temperature monitoring. Existing studies have utilized gas sensors, smoke detectors, and Distributed Temperature Sensing (DTS) systems for monitoring conveyor belt temperatures [36,37,38,39,40,41].

However, these systems are expensive, require frequent maintenance, and often underperform in dusty industrial environments. Additionally, these methods only record temperature data without identifying the cause of temperature increases. Moreover, the use of thermal cameras as a monitoring tool in industrial conveyor belt systems has been relatively underexplored. In prior research, thermal cameras were mainly employed for fire detection in open environments, such as forests [48].

In contrast, this project applied thermal imaging to continuously monitor conveyor belt temperatures and prevent the fire hazards caused by ferromagnetic contamination in ECS systems [42,43].

Overall, the primary research gaps addressed in this project include the lack of an intelligent system for preventing conveyor belt damage instead of merely detecting it, the absence of studies focusing on the impact of material shape and size in ECS systems, and the need for a comprehensive solution to identify and prevent temperature increases in industrial conveyor belts. By combining computer vision, deep learning, and IoT-based automation, this project introduces an approach that reduces physical damage and maintenance costs and enhances separation accuracy and overall system efficiency.

1.4. Research Questions

RQ: How can an intelligent system be designed to optimize conveyor belt performance in BECS machines, enhancing separation accuracy while reducing potential damage, mitigating risks, and improving workplace safety?

RQ1: What advanced visual analysis methods can be utilized to identify conveyor belt materials based on their shape, enhancing separation accuracy in BECS systems and preventing belt wear and tear?

RQ2: What method can detect and correct conveyor belt misalignment in an industrial environment, and how can its accuracy be improved under different operating conditions?

RQ3: What role does thermal imaging technology play in detecting and preventing the abnormal temperature increases in the conveyor belt that are potentially caused by ferrous materials in the BECS system?

RQ4: What are the benefits of an IoT-based alert system in improving conveyor belt monitoring and reducing response time to potential issues?

2. Supplementary Literature and Related Work

Eddy current separators (ECSs) have become indispensable in industrial recycling because they can separate non-ferrous metals, such as aluminum and copper, from waste streams containing plastic, wood, and glass [4]. A critical component of this system is the conveyor belt, which not only moves materials but also significantly influences the quality and precision of the separation process. Any deviation or misalignment in the belt can alter the trajectory of materials, reducing separation efficiency and damaging equipment.

Prior research has addressed this problem using various sensor-based approaches. For instance, studies have implemented displacement sensors [49], LEM ATO-B10 current sensors [50], optical and acoustic sensors [51], and distributed optical fiber sensors (DOFS) [52] to detect belt deviations. While effective to some degree, these sensor systems are often limited by single functionality, sensitivity to environmental noise (dust, vibration, and heat), high maintenance demands, and an inability to handle multiple fault types, such as identifying sharp objects or overheating. Most notably, they lack corrective mechanisms for real-time belt alignment.

More advanced machine vision methods have emerged to overcome these limitations. Wang et al. [53] applied OpenCV for misalignment detection; however, their correction method is incompatible with BECS systems because of the unique BECS belt structure, which involves four non-tensionable rollers. Similarly, Zhang [54] and Wojtkowiak [51] designed correction mechanisms tailored to mining systems, which are mechanically and functionally different from BECS conveyor setups, so their applicability remains restricted.

Deep learning-based misalignment detection has also been explored. For example, Sun et al. [55] and Zhang et al. [44] implemented YOLO-based and ARIMA-LSTM models for fault diagnosis. Although they reported high accuracy (up to 99.45%), these systems depend on GPU resources, are expensive to deploy in real-time industrial environments, and offer detection only, without active correction. In contrast, the proposed study introduces a lightweight, real-time misalignment detection and correction module using a Raspberry Pi camera and stepper motors. This module combines detection with correction, requires minimal calibration, and can be adapted to multiple conveyor-related anomalies. Moreover, it runs without GPU support, making it well suited to embedded industrial environments.

Fire detection in BECS systems is another concern, as overheating due to trapped ferromagnetic materials poses a significant risk. Traditional smoke- and gas-based detection systems [36,39,40] are prone to false alarms in dusty environments, undermining safety protocols. Distributed Temperature Sensing (DTS) [41] offers high accuracy but is cost-prohibitive for small BECS setups and lacks visual confirmation of fire risks. More recently, thermal cameras have been proposed as an effective alternative [42,43], as they provide pixel-level temperature maps that facilitate early detection of heat anomalies. The present study integrates thermal imaging with IoT-based Telegram notifications, enabling remote alerts and reducing reliance on human presence. Unlike conventional systems [37], this method supports visual confirmation, early intervention, and real-time monitoring tailored to BECS environments.

Sharp foreign objects, such as iron scraps, can damage conveyor belts, disrupt separation, and cause injury. Traditional object detection systems relying on fiber-optic [37], infrared [40], or electromagnetic sensors [39] often lack adaptability, precision, or remote alert features. Deep learning models such as a lightweight YOLOv4 have shown promise, achieving 93.73% accuracy at 70.1 FPS in foreign object detection [56]. However, they require high-power GPUs and are usually designed for large-scale systems. In contrast, the current study applied YOLOv11 to classify small materials (1–4 mm) as sharp or smooth, thus combining shape-based material classification with foreign object detection.
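The per-object sharp/smooth labels produced by the YOLOv11 model could also be aggregated over time, since a rising share of sharp particles may point to wear in the upstream milling unit. The sketch below is a hypothetical post-processing step, not part of the reported system. It assumes detections arrive as `(class_name, confidence)` pairs with the class names `"sharp"` and `"smooth"`, and the class name `SharpRateMonitor`, the window length, the confidence cut-off, and the warning ratio are all placeholder choices.

```python
from collections import deque
from typing import Deque, Iterable, Tuple

Detection = Tuple[str, float]  # (class name, confidence) for one segmented particle


class SharpRateMonitor:
    """Track the share of 'sharp' particles over the most recent detections."""

    def __init__(self, window: int = 200, min_conf: float = 0.5,
                 warn_ratio: float = 0.1) -> None:
        self.labels: Deque[bool] = deque(maxlen=window)  # True means "sharp"
        self.min_conf = min_conf      # hypothetical confidence cut-off
        self.warn_ratio = warn_ratio  # hypothetical warning level

    def update(self, detections: Iterable[Detection]) -> None:
        for cls, conf in detections:
            # Ignore low-confidence masks and any class other than sharp/smooth.
            if conf >= self.min_conf and cls in ("sharp", "smooth"):
                self.labels.append(cls == "sharp")

    def sharp_ratio(self) -> float:
        return sum(self.labels) / len(self.labels) if self.labels else 0.0

    def upstream_warning(self) -> bool:
        # Only warn once the window is full, so a few early particles cannot trigger it.
        return len(self.labels) == self.labels.maxlen and self.sharp_ratio() >= self.warn_ratio
```

A sliding window keeps the metric responsive to recent changes in mill behavior while smoothing out frame-to-frame fluctuations in the detections.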

3. Methods

This research develops an intelligent system for detecting and correcting conveyor belt misalignment in an eddy current separator. The primary objective was to improve the efficiency and accuracy of separating non-ferrous metals, such as aluminum and copper, from non-metallic materials without requiring any physical modifications to the conveyor belt structure.

3.1. Automated Detection and Correction of Conveyor Belt Misalignment in Industrial Environments

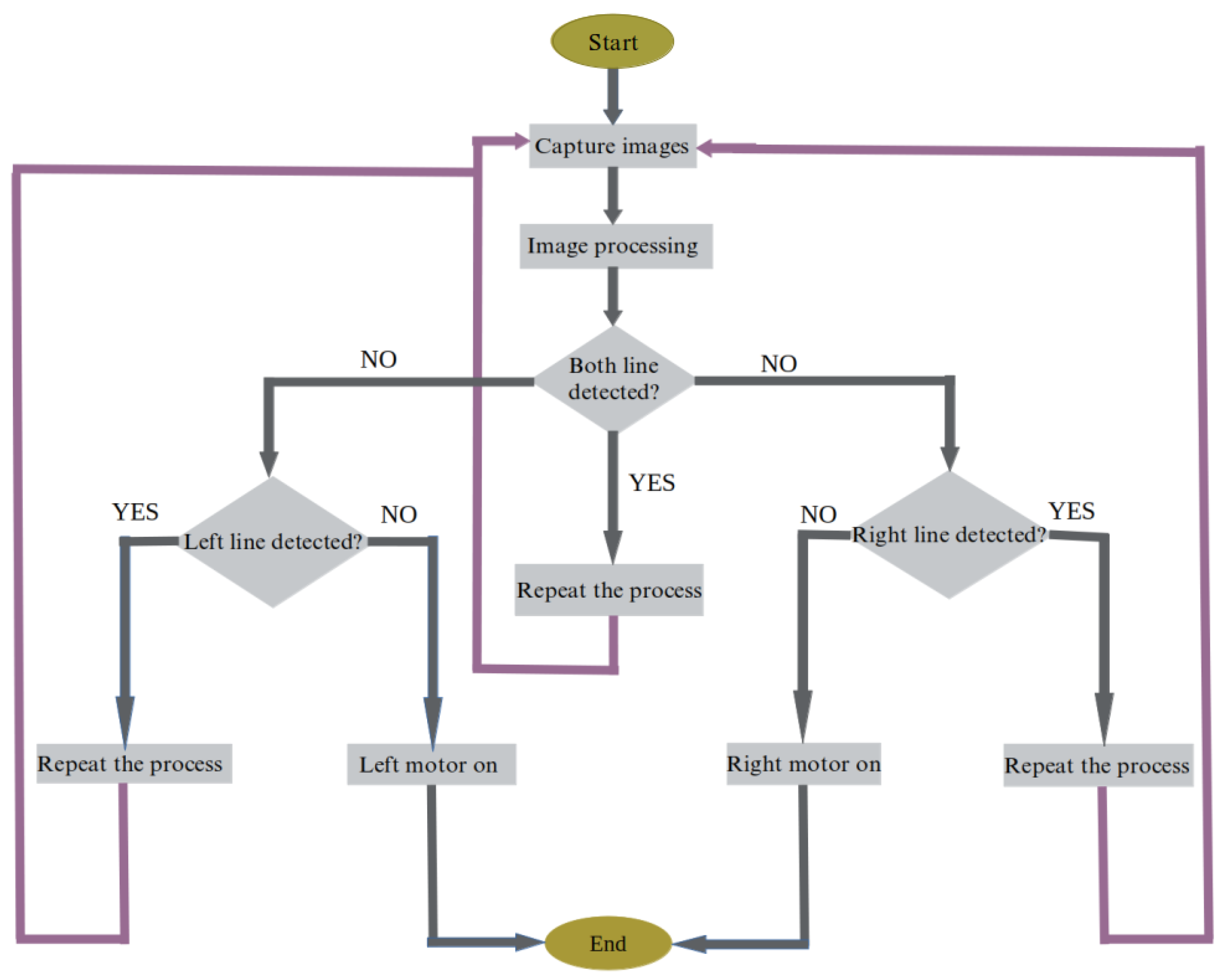

Using machine vision and image processing, the system detects belt misalignment precisely and corrects it automatically. A camera mounted above the conveyor and stepper motors installed on the conveyor system allow the correction to take place without interrupting the separation operation. This study addresses the growing need, driven by increasing electronic and industrial waste production, for more intelligent technologies in recycling and material processing industries that require higher operational efficiency. Image processing techniques such as Canny edge detection and the Hough transform, implemented with the OpenCV library [57,58], enable precise detection and automatic correction of conveyor belt misalignment. This is particularly important in industrial environments, where environmental factors affect the image data and quick responses are required.

Figure 5 illustrates a flowchart of the system's operating steps. The process begins by capturing an image of the conveyor belt with a Raspberry Pi camera. The captured image is sent to the Raspberry Pi for processing with the OpenCV library, which provides extensive image analysis and processing capabilities. In the processing stage, Canny edge detection [59] is first applied to find the edges of the white lines on the belt; this algorithm identifies prominent edges by calculating the intensity gradients in the image. The Hough transform is then used to identify the white lines themselves from these edge pixels.
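To show the voting idea behind the Hough transform, the following plain-Python sketch accumulates votes in (ρ, θ) space for a set of edge pixels, using the standard line parameterization ρ = x cos θ + y sin θ. It is a simplified teaching example, not OpenCV's implementation (which the system itself uses); the function name `hough_lines`, the one-pixel ρ bins, and the one-degree θ resolution are illustrative choices.

```python
import math
from collections import Counter
from typing import Iterable, List, Tuple


def hough_lines(edge_points: Iterable[Tuple[int, int]], theta_steps: int = 180,
                top_k: int = 1) -> List[Tuple[int, float, int]]:
    """Return the top_k accumulator cells as (rho, theta_degrees, votes)."""
    votes: Counter = Counter()
    for x, y in edge_points:
        for i in range(theta_steps):
            theta = math.pi * i / theta_steps                       # theta sampled in [0, pi)
            rho = round(x * math.cos(theta) + y * math.sin(theta))  # 1-pixel rho bins
            votes[(rho, i)] += 1                                    # one vote per cell per point
    # The cell with the most votes corresponds to the dominant straight line.
    return [(rho, 180.0 * i / theta_steps, n) for (rho, i), n in votes.most_common(top_k)]
```

For a vertical white line at x = 5, every edge pixel votes for ρ = 5 at θ = 0°, so that cell collects one vote per pixel. Neighboring angle bins can tie with the peak after rounding, which is one reason practical implementations apply vote thresholds and suppress near-duplicate lines.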

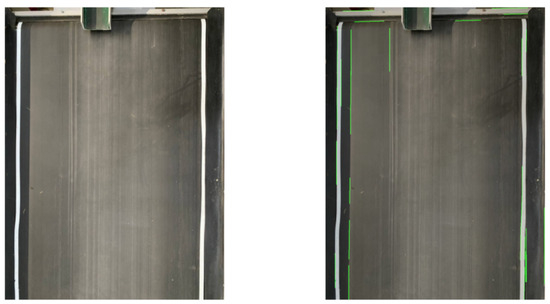

Figure 5.

Flowchart of the conveyor belt misalignment detection and correction algorithm using Raspberry Pi and image processing. Purple arrows indicate return to the beginning of the process under specific conditions, while grey arrows represent normal forward progress toward the end of the process.

As Figure 5 illustrates, after processing the image, the system checks whether the left and right lines are detected. If both lines are detected, the process repeats, and no action is taken. However, if one of the lines is not detected, the system determines that the belt has shifted towards the side where the line was missing. In such a case, the corresponding stepper motor (left or right) is activated to rectify the misalignment.
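The decision rule in this paragraph can be written as a small function. The sketch below is illustrative only: the names `Action` and `decide` are hypothetical, and because the text does not cover the case in which neither line is detected, treating that case as a fault that halts automatic correction is an assumption.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"                          # both lines visible: belt aligned
    LEFT_MOTOR = "activate_left_motor"     # left line missing: belt shifted left
    RIGHT_MOTOR = "activate_right_motor"   # right line missing: belt shifted right
    FAULT = "raise_fault"                  # assumption: not covered by the flowchart


def decide(left_detected: bool, right_detected: bool) -> Action:
    """Map the left/right line detections to a correction action."""
    if left_detected and right_detected:
        return Action.NONE
    if not left_detected and not right_detected:
        return Action.FAULT  # hypothetical handling; camera or lighting problem likely
    # Exactly one line is missing: drive the stepper motor on that side.
    return Action.LEFT_MOTOR if not left_detected else Action.RIGHT_MOTOR
```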

The motor continues to drive the belt until the missing line is detected again, indicating that the belt has been realigned. This approach improves the accuracy and efficiency of misalignment detection and correction, which reduces costs and improves safety. By removing the need for manual monitoring and adjustment of the conveyor belt, the system provides a safer working environment.
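The decision rule in Figure 5 reduces to a small control loop. In the sketch below, `read_lines` and `step_motor` are hypothetical callables standing in for the camera pipeline and the stepper-motor driver. The `max_steps` limit and the `ALARM` outcome for the case where both lines disappear are assumptions added for safety; the flowchart does not describe that case.

```python
from enum import Enum
from typing import Callable, Tuple


class Action(Enum):
    NONE = "none"
    STEP_LEFT_MOTOR = "step_left_motor"
    STEP_RIGHT_MOTOR = "step_right_motor"
    ALARM = "alarm"  # hypothetical: both lines lost (occlusion, camera fault, large drift)


def decide(left_found: bool, right_found: bool) -> Action:
    """Map line visibility to an action: the missing side's motor is activated."""
    if left_found and right_found:
        return Action.NONE
    if right_found:  # only the left line is missing
        return Action.STEP_LEFT_MOTOR
    if left_found:  # only the right line is missing
        return Action.STEP_RIGHT_MOTOR
    return Action.ALARM


def correct(read_lines: Callable[[], Tuple[bool, bool]],
            step_motor: Callable[[Action], None],
            max_steps: int = 200) -> int:
    """Step the motor on the missing side until both lines are visible again.

    Returns the number of motor steps taken.
    """
    for steps in range(max_steps + 1):
        action = decide(*read_lines())
        if action is Action.NONE:
            return steps
        if action is Action.ALARM:
            raise RuntimeError("both guide lines lost; manual check required")
        if steps == max_steps:
            break
        step_motor(action)
    raise RuntimeError("belt not realigned within max_steps")
```

A simulated belt offset (negative meaning drift to the left, so the left line leaves the frame) is enough to exercise the loop without hardware.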

Optimization Techniques

This work uses two techniques, Gaussian blur and region of interest (ROI) selection [60,61], to improve image processing accuracy. Both play a significant role in system performance, increasing the speed and precision of conveyor belt misalignment detection.

Gaussian blur is applied as a noise-reduction filter before Canny edge detection so that the edges Canny detects are more accurate and stable. The two-dimensional Gaussian function [60] used to build the blur kernel is as follows:

$$G(x, y) = \frac{1}{2\pi\sigma^{2}} \exp\left(-\frac{x^{2} + y^{2}}{2\sigma^{2}}\right)$$

In this formula, G(x, y) is the weight assigned to the pixel at offset (x, y) from the center of the kernel (the filtered pixel value is the G-weighted sum of its neighborhood), and σ is the standard deviation, which determines the level of smoothing. A higher value of σ results in a more blurred image.
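To make the role of σ concrete, the sketch below samples the standard 2D Gaussian G(x, y) = exp(-(x² + y²) / (2σ²)) / (2πσ²) on a small grid and convolves a grayscale image with it. It is a plain-Python illustration, not what runs on the device (in practice `cv2.GaussianBlur` does this); the function names are hypothetical, and the edge-replication border rule is a simplification that differs from OpenCV's default border handling.

```python
import math
from typing import List

Kernel = List[List[float]]


def gaussian_kernel(size: int, sigma: float) -> Kernel:
    """Sample G(x, y) on a size x size grid centered at (0, 0), normalized to sum to 1.

    The 1 / (2*pi*sigma**2) factor cancels during normalization, so it is omitted.
    """
    if size < 1 or size % 2 == 0:
        raise ValueError("size must be a positive odd integer")
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    r = size // 2
    k = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma)) for x in range(-r, r + 1)]
         for y in range(-r, r + 1)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]


def blur(image: List[List[float]], kernel: Kernel) -> List[List[float]]:
    """Convolve a grayscale image with a symmetric kernel, replicating edge pixels."""
    h, w = len(image), len(image[0])
    r = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    yy = min(max(y + dy, 0), h - 1)  # clamp to the nearest valid row
                    xx = min(max(x + dx, 0), w - 1)  # clamp to the nearest valid column
                    acc += kernel[dy + r][dx + r] * image[yy][xx]
            out[y][x] = acc
    return out
```

A larger σ spreads the weight away from the center pixel, so an isolated noise spike (for example, a dust speck) is flattened more strongly while uniform regions are left unchanged.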

In addition, the region of interest (ROI) [62] defines the portion of the image that is processed. In this project, the ROI is restricted to the part of the conveyor belt where misalignment must be detected. Processing only this area reduces the computational load, which considerably increases processing speed and decreases latency, while precision is maintained because the essential parts of the image are still analyzed. Figure 6 shows the ROI before Gaussian blur is applied, and Figure 7 shows it afterwards.
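ROI selection amounts to slicing the frame before any further processing. The sketch below assumes the ROI is specified as fractions of the frame size; the default band (full width, 40–80% of the height) and the function names are hypothetical. With OpenCV frames, which are NumPy arrays, the crop is a view, so no pixel data are copied.

```python
from typing import Tuple

Bounds = Tuple[int, int, int, int]  # (x0, y0, x1, y1), end-exclusive


def roi_bounds(width: int, height: int,
               x_frac: Tuple[float, float] = (0.0, 1.0),
               y_frac: Tuple[float, float] = (0.4, 0.8)) -> Bounds:
    """Convert fractional ROI limits into pixel bounds inside the frame."""
    x0, x1 = int(round(x_frac[0] * width)), int(round(x_frac[1] * width))
    y0, y1 = int(round(y_frac[0] * height)), int(round(y_frac[1] * height))
    if not (0 <= x0 < x1 <= width and 0 <= y0 < y1 <= height):
        raise ValueError("ROI must lie inside the frame and be non-empty")
    return x0, y0, x1, y1


def crop(frame, bounds: Bounds):
    """Crop a NumPy image (H x W or H x W x C), e.g. a frame returned by OpenCV."""
    x0, y0, x1, y1 = bounds
    return frame[y0:y1, x0:x1]


def pixel_fraction(bounds: Bounds, width: int, height: int) -> float:
    """Share of the frame's pixels that downstream steps still have to process."""
    x0, y0, x1, y1 = bounds
    return (x1 - x0) * (y1 - y0) / (width * height)
```

For a 640 × 480 frame, the default band keeps 40% of the pixels, so the blur, Canny, and Hough steps handle well under half of the original image.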

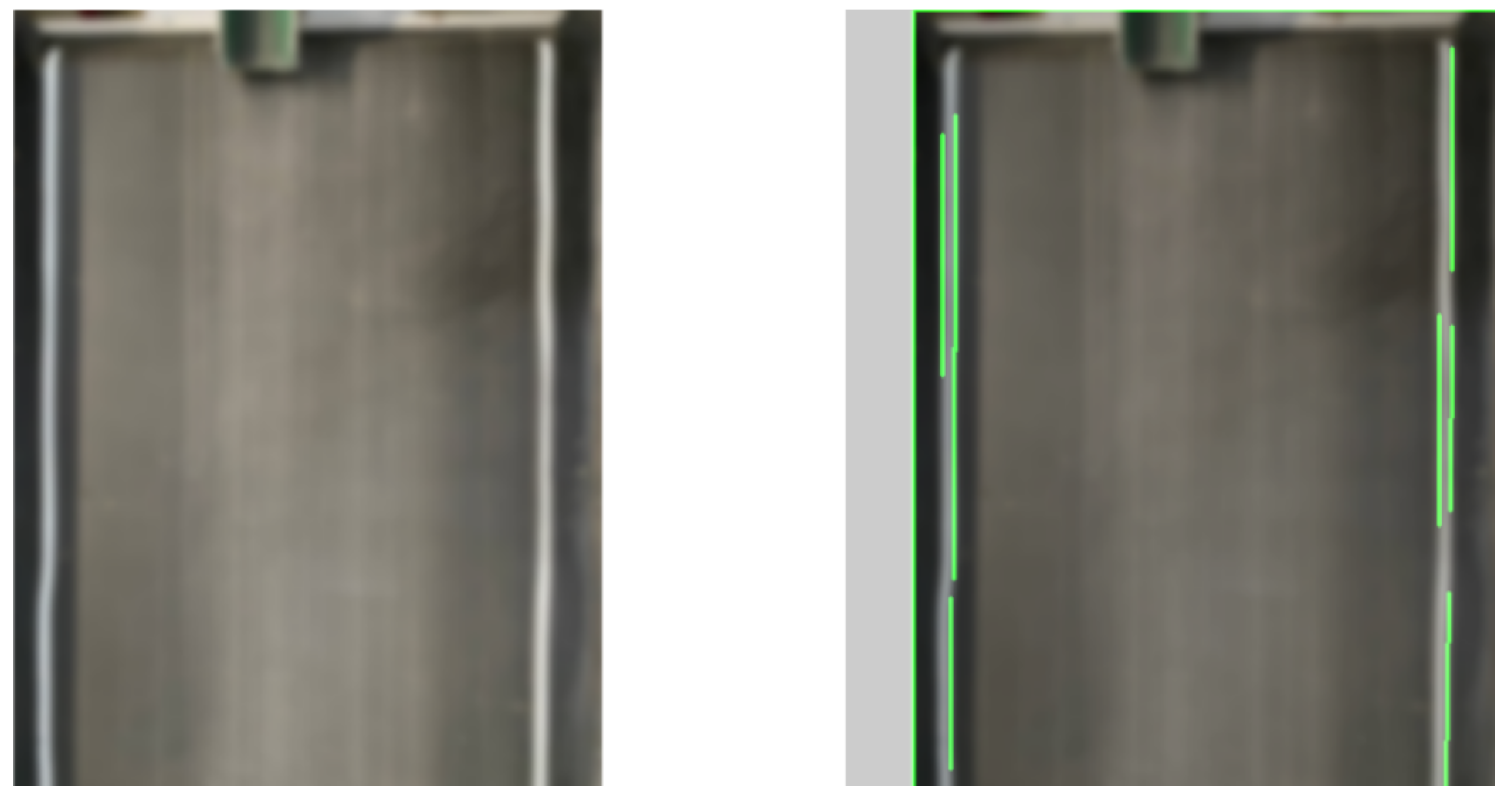

Figure 6.

Region of interest before Gaussian blur.

Figure 7.

Region of interest after Gaussian blur.

In image processing, particularly in industrial environments, noise and disturbances such as dust can cause irrelevant lines to be mistaken for the target lines. In this project, where the goal is to detect the white lines on the conveyor belt, disturbances such as sudden lighting changes or environmental noise may produce detected lines that either do not exist or do not contribute to accurate detection.

As shown in Figure 6, disturbances at this stage may cause lines other than the intended white lines to be detected. This reduces detection accuracy and can lead to diagnostic errors, as the system may struggle to distinguish actual lines from noise. In Figure 7, Gaussian blur was applied first to smooth the image. This removed the slight noise and left only the genuine lines relevant for detection, which improved the accuracy of white line detection.

3.2. Thermal Imaging for Early Detection and Prevention of Conveyor Belt Overheating

The Internet of Things (IoT) [63,64,65] plays a crucial role in modern industrial safety systems, particularly in mitigating fire hazards in BECS conveyors. These separators recover non-ferrous metals, such as aluminum and copper, from non-metallic materials.

However, they are susceptible to fire when ferromagnetic contaminants such as iron inadvertently enter the system. Such materials can become trapped and heated by friction and the high-speed rotation of the magnetic drum, leading to potentially hazardous thermal buildup. To address this risk, an IoT-enhanced thermal monitoring system that combines thermal cameras, a Raspberry Pi, and IoT connectivity is integrated into the conveyor for proactive fire prevention.

The thermal cameras in this system operate continuously, capturing high-resolution thermal maps of the conveyor belt with pixel-level accuracy. These cameras generate grayscale thermal images, where intensity variations correlate with temperature fluctuations. The OpenCV library [66,67,68] is utilized for image preprocessing, including grayscale conversion, Gaussian blurring, and adaptive thresholding, to enhance hotspot detection.

A dynamic region-of-interest (ROI) selection mechanism ensures that only critical zones of the conveyor belt are analyzed, which keeps the computational load low. To establish a fire-risk threshold, normal operating temperatures are first analyzed empirically: the average conveyor belt temperature is 27 °C, with occasional minor fluctuations within safe operating limits. A critical threshold of 40 °C is then set, above which automated alarms are triggered.
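The threshold check can be illustrated with a short sketch. It assumes the thermal camera delivers an 8-bit grayscale frame whose intensity maps linearly onto a known temperature span; the 20–60 °C span and the function names are hypothetical, and a radiometric camera that reports temperatures directly would skip the conversion. Only the 40 °C threshold comes from the text. For simplicity the sketch uses the hottest ROI pixel instead of OpenCV's adaptive thresholding.

```python
from typing import List, Sequence, Tuple

T_MIN_C = 20.0       # hypothetical temperature mapped to gray level 0
T_MAX_C = 60.0       # hypothetical temperature mapped to gray level 255
THRESHOLD_C = 40.0   # critical threshold stated in the text

Roi = Tuple[int, int, int, int]  # (x0, y0, x1, y1), end-exclusive, must be non-empty


def gray_to_celsius(value: int, t_min: float = T_MIN_C, t_max: float = T_MAX_C) -> float:
    """Linear mapping from an 8-bit gray level to degrees Celsius."""
    return t_min + (value / 255.0) * (t_max - t_min)


def max_roi_temperature(frame: Sequence[Sequence[int]], roi: Roi) -> float:
    """Temperature of the hottest pixel inside the ROI (frame is a list of rows)."""
    x0, y0, x1, y1 = roi
    hottest = max(max(row[x0:x1]) for row in frame[y0:y1])
    return gray_to_celsius(hottest)


def check_frame(frame: Sequence[Sequence[int]], roi: Roi,
                threshold: float = THRESHOLD_C) -> Tuple[float, bool]:
    """Return (peak ROI temperature, alarm flag) for one thermal frame."""
    t = max_roi_temperature(frame, roi)
    return t, t > threshold
```

With a NumPy frame, `frame[y0:y1, x0:x1].max()` replaces the nested `max` calls.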

The system also integrates historical temperature data analysis, allowing for the identification of progressive overheating trends that indicate potentially hazardous conditions before they escalate into fires.
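The text does not specify how overheating trends are identified. One simple option, shown here as an assumption, is to fit a least-squares slope to the most recent ROI temperatures and flag a sustained rise even while readings are still below 40 °C. The class name, window length, and slope limit are hypothetical.

```python
from collections import deque


class TrendMonitor:
    """Flags a sustained temperature rise using a least-squares slope over a sliding window."""

    def __init__(self, window: int = 30, max_slope_c_per_sample: float = 0.2):
        self.samples = deque(maxlen=window)
        self.max_slope = max_slope_c_per_sample

    def add(self, temp_c: float) -> bool:
        """Record one reading and report whether the trend is now alarming."""
        self.samples.append(temp_c)
        return self.is_rising()

    def slope(self) -> float:
        """Least-squares slope in degrees Celsius per sample."""
        n = len(self.samples)
        if n < 2:
            return 0.0
        mean_x = (n - 1) / 2
        mean_y = sum(self.samples) / n
        num = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(self.samples))
        den = sum((i - mean_x) ** 2 for i in range(n))
        return num / den

    def is_rising(self) -> bool:
        # Only judge once the window is full, so a single noisy reading cannot trigger it.
        return len(self.samples) == self.samples.maxlen and self.slope() > self.max_slope
```

Fitting a slope over a window, rather than comparing two consecutive readings, makes the check less sensitive to frame-to-frame sensor noise.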

A two-tiered alarm system is implemented to enhance situational awareness and rapid response capabilities:

- Local Alarm with Buzzer:

  - When an overheating event is detected, a high-decibel buzzer is immediately activated on site.

  - This gives factory personnel an instant warning, allowing them to inspect and address the issue before it escalates.

  - The system prioritizes early intervention, preventing small anomalies from evolving into critical failures.

- Remote Notification:

  - A Telegram bot [69,70] is integrated with the system through Telegram's Bot API.

  - The bot sends alerts, including temperature readings, thermal snapshots, and timestamped event logs, directly to designated technicians and safety supervisors.

  - This approach allows remote monitoring from any location, reducing reliance on on-site personnel and enhancing operational efficiency.

  - No personal data are stored, and messages are sent to Telegram over encrypted (HTTPS/TLS) connections.
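The two-tier alarm can be sketched as a single function that switches on the local buzzer and then queues one Telegram message per recipient. The request targets the Telegram Bot API `sendMessage` method with a JSON body. `buzzer_on` and `send` are injected callables standing in for the GPIO driver and the HTTP client (for example `lambda req: urllib.request.urlopen(req, timeout=10)`). The function names and message wording are hypothetical, and sending thermal snapshots (`sendPhoto`, which needs a multipart upload) is omitted.

```python
import json
import urllib.request
from datetime import datetime, timezone
from typing import Callable, Iterable, Optional

TELEGRAM_SEND_URL = "https://api.telegram.org/bot{token}/sendMessage"


def build_alert_text(temp_c: float, threshold_c: float, when: datetime) -> str:
    return (f"OVERHEAT ALERT: belt ROI at {temp_c:.1f} °C "
            f"(threshold {threshold_c:.1f} °C) at {when.isoformat(timespec='seconds')}")


def build_telegram_request(token: str, chat_id: int, text: str) -> urllib.request.Request:
    """POST request for the Bot API sendMessage method (not sent here)."""
    body = json.dumps({"chat_id": chat_id, "text": text}).encode("utf-8")
    return urllib.request.Request(TELEGRAM_SEND_URL.format(token=token), data=body,
                                  headers={"Content-Type": "application/json"},
                                  method="POST")


def raise_alarm(temp_c: float, threshold_c: float,
                buzzer_on: Callable[[], None],
                send: Callable[[urllib.request.Request], object],
                token: str, chat_ids: Iterable[int],
                when: Optional[datetime] = None) -> int:
    """Tier 1: local buzzer. Tier 2: one Telegram message per recipient.

    Returns the number of remote notifications issued (0 if below threshold).
    """
    if temp_c <= threshold_c:
        return 0
    buzzer_on()  # local alarm first, so on-site staff are warned even if the network is down
    when = when or datetime.now(timezone.utc)
    text = build_alert_text(temp_c, threshold_c, when)
    sent = 0
    for chat_id in chat_ids:
        send(build_telegram_request(token, chat_id, text))
        sent += 1
    return sent
```

Injecting `send` keeps the alert logic testable without network access or a real bot token.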

Beyond immediate fire prevention, this system supports predictive maintenance. Because detected anomalies are logged over time, the thermal imaging data can reveal recurring hotspots that may serve as early indicators of mechanical issues. Such hotspots may signal friction-induced wear in conveyor components and excessive stress on certain parts of the system.

Additionally, thermal patterns can reveal misaligned conveyor belts, where uneven tension distribution leads to localized heat buildup, which, if left unaddressed, could degrade the belt material and disrupt operational stability. Another critical issue identified through this system is the accumulation of ferromagnetic debris near the magnetic drum, which can interfere with the efficiency of the eddy current separation process and pose further overheating risks.

By integrating machine learning models in future iterations, the system could refine its ability to anticipate mechanical failures more accurately, predicting failure points before they result in costly breakdowns. This would enable the implementation of a preemptive maintenance strategy, where servicing and adjustments are scheduled based on condition monitoring rather than reactive repairs. As a result, downtime would be minimized, operational efficiency would be maximized, and the lifespan of critical components would be significantly extended.

This IoT-driven thermal monitoring solution provides a comprehensive fire prevention system for BECS conveyors that addresses both immediate safety concerns and long-term operational reliability. Thermal imaging continuously monitors the belt for heat anomalies, image processing detects deviations before they escalate into hazardous conditions, and a two-tier alert mechanism promptly delivers local and remote notifications to the responsible personnel.

In addition to fire prevention, the system lays the groundwork for predictive maintenance that supports the long-term stability of the BECS. This proactive approach reduces response time, improves safety compliance, and helps prevent equipment failures, operational downtime, and workplace hazards. Through IoT connectivity, industrial facilities gain an automated safety framework that supports continuous, high-performance operation of the eddy current separator conveyor, even under challenging industrial conditions.

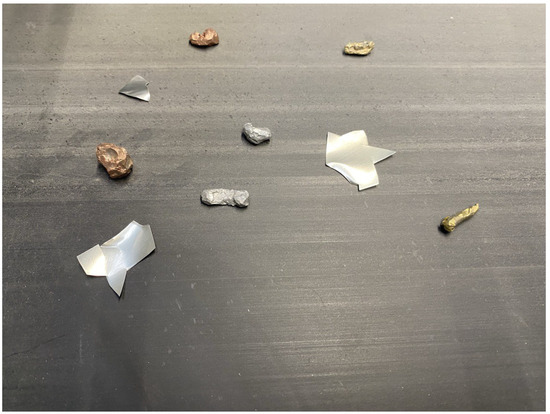

3.3. Machine Vision for Shape-Driven Conveyor Belt Material Recognition

This research employed a lightweight deep learning model, YOLOv11n-seg [71], to recognize and classify materials moving on the conveyor belt according to their shape. Two classes were defined for object identification: sharp and smooth. These classes are defined not by material type but by the geometric characteristics of the object's outline. The sharp class covers objects with angular sides, pointed edges, and broken or jagged contours, which often result from poor shredding or mechanical damage. The smooth class covers objects with rounded edges, continuous curves, and elliptical or circular shapes, which typically indicate that the grinders or cutters in the recycling line are working properly. During the training phase, these class definitions were applied manually and carefully on the Roboflow platform: each object in the dataset was labeled with a segmentation mask based on human visual judgment and relevant engineering criteria. This careful annotation enabled the model to learn the distinction between sharp and smooth objects from their visual and geometric features.

Although the YOLOv11n-seg model can perform accurate segmentation on its own, a geometric post-processing step was added after inference to make the results more explainable and to reduce misclassifications, especially in ambiguous cases. In this step, the predicted segmentation masks were processed with contour extraction functions from the OpenCV library, and for each detected region the number of sharp corners was counted using polygon approximation (cv2.approxPolyDP). Under a predefined rule, a segmented region containing more than three acute angles or jagged edges was reclassified as sharp during post-processing, even if the model had initially predicted it as smooth. This strategy proved particularly effective for low-confidence predictions (e.g., confidence scores below 0.5), where it helped correct sharp particles that had been predicted as smooth.

A key factor in the success of this hybrid approach was the relatively low variability of materials in the current dataset, which consisted primarily of three types of visually and texturally similar substances. This consistency made it easier for the segmentation model to generalize and for the geometric rule to make reliable decisions. For future projects involving more diverse materials, such as transparent plastics, aluminum, glass, or colored fragments, the post-processing module may need enhancements, such as per-class pixel density analysis, confidence-weighted classification logic, or network attention maps to better interpret internal feature patterns.

The shape of these particles directly affects BECS performance. Sharp fragments disrupt the even distribution of the magnetic field and reduce separation accuracy, whereas smooth particles support stable eddy currents and lead to more precise separation. A monochrome line-scan camera was installed above the conveyor to ensure reliable detection under high-speed industrial conditions; this type of camera can capture high-resolution images at high conveyor speeds. Although the images are grayscale, the edge details remain precise enough for accurate shape classification.
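The geometric post-processing rule can be sketched as follows. This is an illustration under assumptions, not the authors' implementation. `mask_to_polygon`, `count_sharp_corners`, and `refine_label` are hypothetical names, the `approxPolyDP` tolerance of 2% of the contour perimeter is a placeholder, an "acute angle" is taken to be a convex polygon vertex whose interior angle is below 90°, and jagged-edge detection is not modeled. The optional confidence gate mirrors the 0.5 example in the text. `cv2.findContours` is called with the OpenCV 4.x two-value return signature.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def mask_to_polygon(mask_u8, eps_frac: float = 0.02) -> List[Point]:
    """Largest external contour of a single-channel uint8 mask, simplified with approxPolyDP."""
    import cv2  # lazy import: the geometry below is testable without OpenCV
    contours, _ = cv2.findContours(mask_u8, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    if not contours:
        return []
    contour = max(contours, key=cv2.contourArea)
    approx = cv2.approxPolyDP(contour, eps_frac * cv2.arcLength(contour, True), True)
    return [(float(p[0][0]), float(p[0][1])) for p in approx]


def count_sharp_corners(poly: Sequence[Point], max_angle_deg: float = 90.0) -> int:
    """Count convex vertices whose interior angle is below max_angle_deg."""
    n = len(poly)
    if n < 3:
        return 0
    # Twice the signed area gives the winding direction, so y-up and y-down both work.
    area2 = sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
                for i in range(n))
    orientation = 1.0 if area2 > 0 else -1.0
    count = 0
    for i in range(n):
        (px, py), (cx, cy), (nx, ny) = poly[i - 1], poly[i], poly[(i + 1) % n]
        turn = (cx - px) * (ny - cy) - (cy - py) * (nx - cx)
        if turn * orientation <= 0:  # concave or collinear vertex: not a corner tip
            continue
        ax, ay, bx, by = px - cx, py - cy, nx - cx, ny - cy
        norm = math.hypot(ax, ay) * math.hypot(bx, by)
        if norm == 0:
            continue
        cos_angle = max(-1.0, min(1.0, (ax * bx + ay * by) / norm))
        if math.degrees(math.acos(cos_angle)) < max_angle_deg:
            count += 1
    return count


def refine_label(label: str, confidence: float, poly: Sequence[Point],
                 max_acute: int = 3, only_below_conf: Optional[float] = None) -> str:
    """Reclassify 'smooth' as 'sharp' when the outline has more than max_acute acute corners."""
    if label != "smooth":
        return label
    if only_below_conf is not None and confidence >= only_below_conf:
        return label  # optional gate: trust confident predictions
    return "sharp" if count_sharp_corners(poly) > max_acute else label
```

Skipping concave vertices prevents the inner notches of a star-like fragment from being counted twice, so only the outward-pointing tips contribute to the count.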

The YOLOv11n-seg model provides both object detection and segmentation capabilities. It was trained using a mixed dataset of RGB and grayscale images collected from the line scan camera. Five hundred and nineteen labeled images containing 1105 object instances (461 sharp and 644 smooth) were used for training. The model was trained over 100 epochs and showed successful convergence.

Custom Dataset Preparation

A custom dataset was created using actual industrial samples to develop an effective detection and segmentation model for identifying sharp-edged and smooth materials on a conveyor belt. Approximately 45 sharp samples were manually crafted from aluminum energy drink cans. These materials were selected due to their reflective surface, industrial relevance, and ease of shaping.

They were intentionally manipulated to exhibit prominent and multiple edges, simulating hazardous or damaging materials that may harm the conveyor belt or interfere with the separation process.

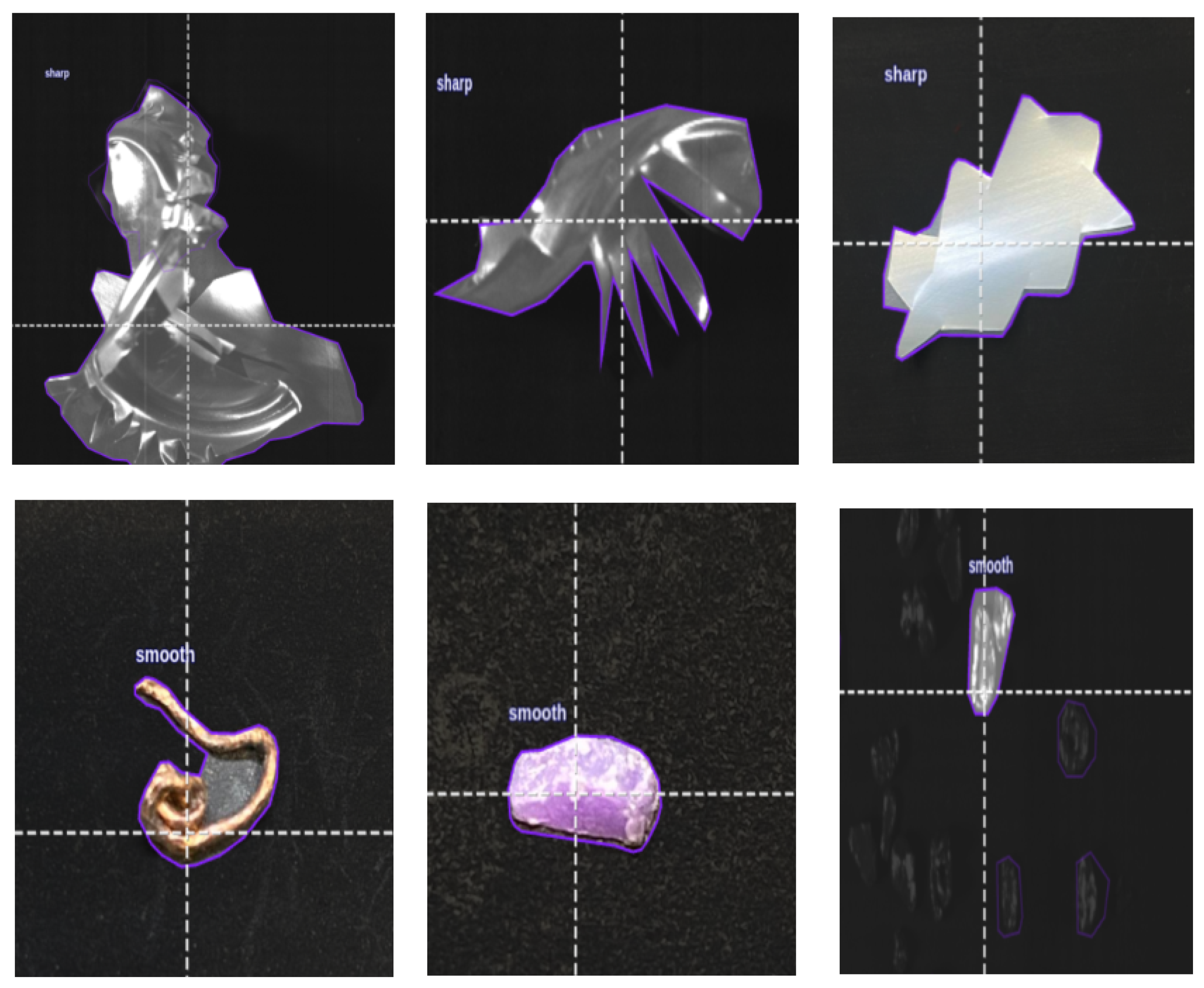

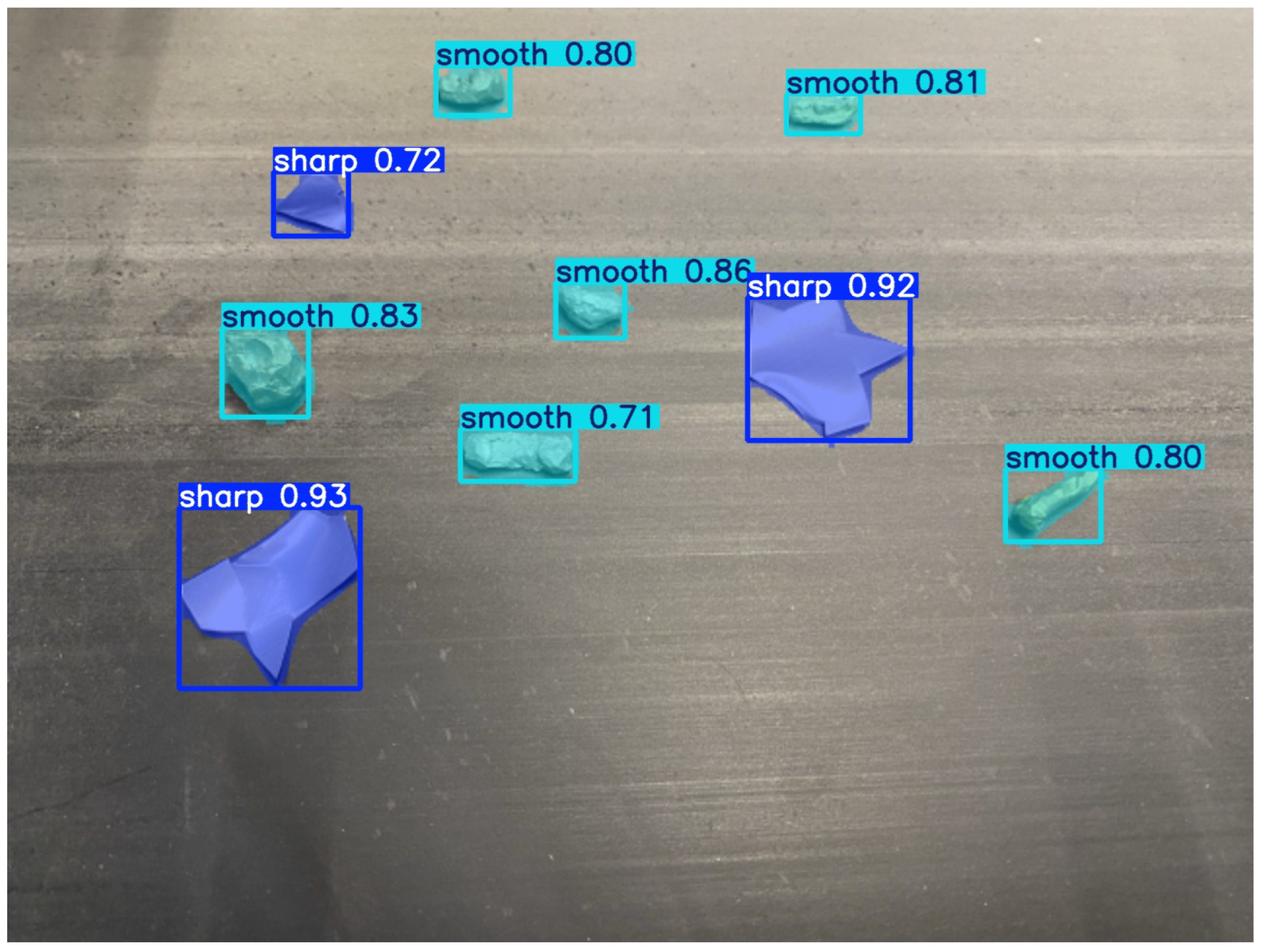

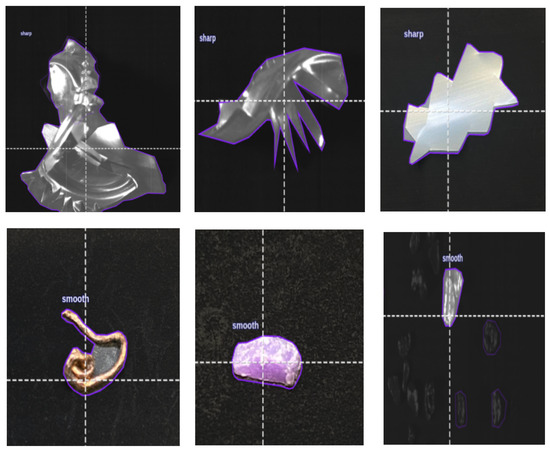

As shown in Figure 8, several sharp-edged samples were segmented with purple outlines for training purposes. All the materials were collected after passing through milling and industrial screening machines. As a result, the initial dataset contained numerous noisy or incomplete samples, which had to be manually filtered and discarded due to upstream processing errors. Only after ensuring image quality did the final imaging process begin.

Figure 8.

Roboflow-labeled samples: sharp (top) and smooth (bottom) materials.

Annotation was performed using the Roboflow platform [72], where sharp and smooth materials were labeled precisely with polygon-based segmentation tools. Two thousand five hundred and ninety-one images were segmented, yielding 2348 sharp and 3888 smooth object annotations. Figure 8 (bottom) shows examples of smooth, rounded objects that the model recognizes as non-hazardous items.

Every corner and contour of each object was carefully traced to teach the model the difference in edge complexity, on the assumption that the more numerous and sharper the edges, the more hazardous the material. This handcrafted, balanced dataset helped the model learn to distinguish hazardous from safe conveyor belt materials based on visual appearance, including the density and sharpness of their edges.

3.4. YOLOv11 Working Principle

YOLO (You Only Look Once) is a single-stage object detection model that eliminates the need for separate steps for feature extraction, region proposal, and classification by combining them into one compact convolutional neural network (CNN). This makes YOLO highly efficient for many applications.

YOLOv11 is an advanced version that integrates detection and segmentation in one framework. This project used YOLOv11n-seg (nano version), which is optimized for edge devices and lightweight hardware. Despite having fewer parameters, it provides an excellent balance between accuracy and speed [73,74,75]. The detection process includes the following steps:

- The input image passes through convolutional layers to extract features.

- The image is divided into a grid, where each cell predicts bounding boxes and class labels.

- The model outputs the location (x, y, w, and h), class label (e.g., “sharp” or “smooth”), and confidence score.

- The model also produces a pixel-wise mask for each detected object in the segmentation version.

Each output prediction includes the following:

- Bounding box coordinates.

- Class label (e.g., sharp or smooth).

- Segmentation mask.

- Confidence score (e.g., 0.93).

The model is trained using a composite loss function [76]:

$$\text{Loss} = \text{BoxLoss} + \text{ClassLoss} + \text{DFLLoss} + \text{SegmentationLoss}$$

where:

- BoxLoss: Bounding box regression loss.

- ClassLoss: Classification loss (cross-entropy).

- DFLLoss: Distribution focal loss for localization precision.

- SegmentationLoss: Pixel-wise segmentation mask loss.

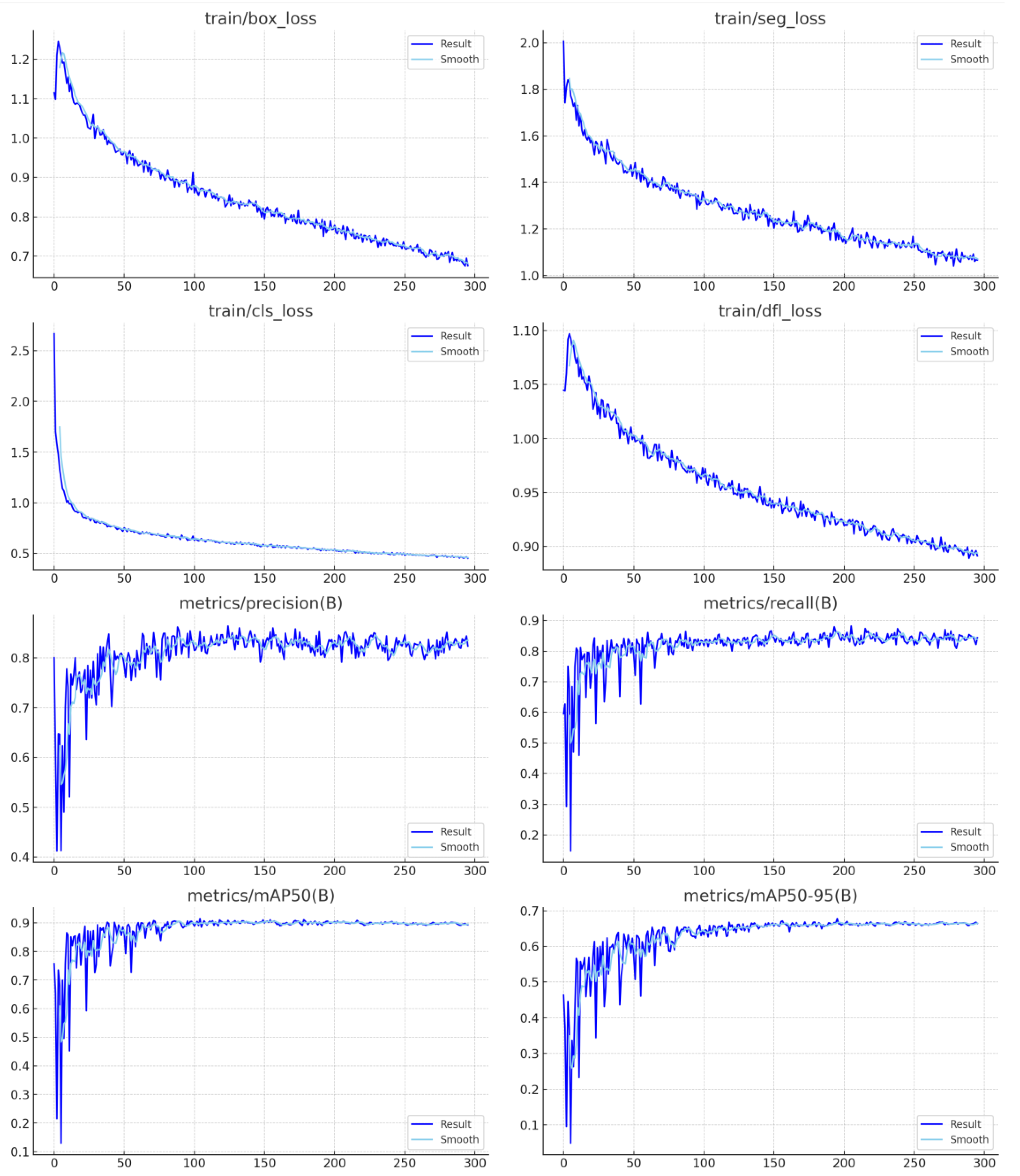

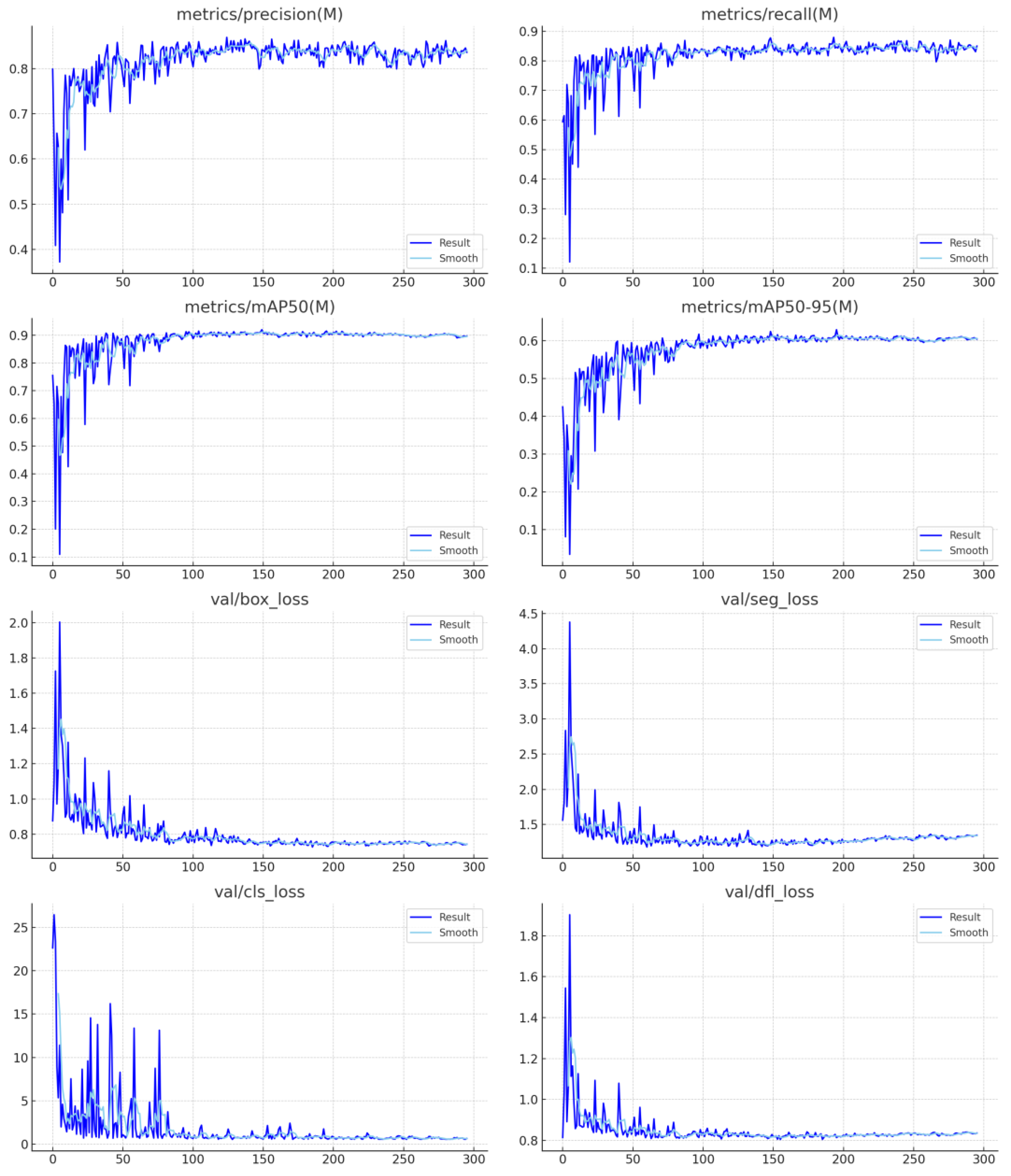

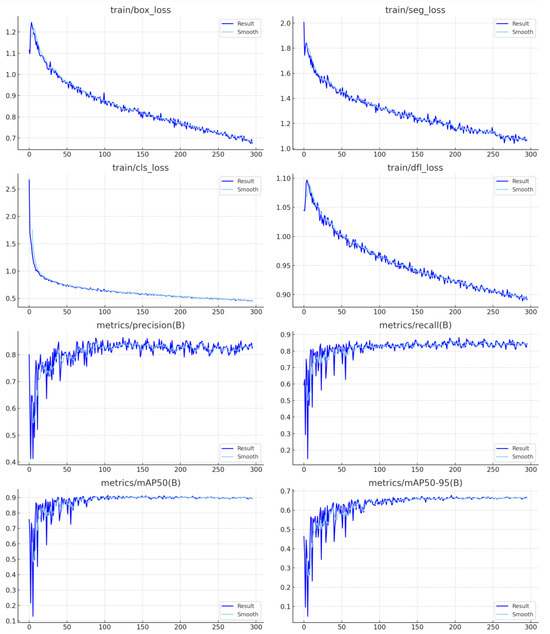

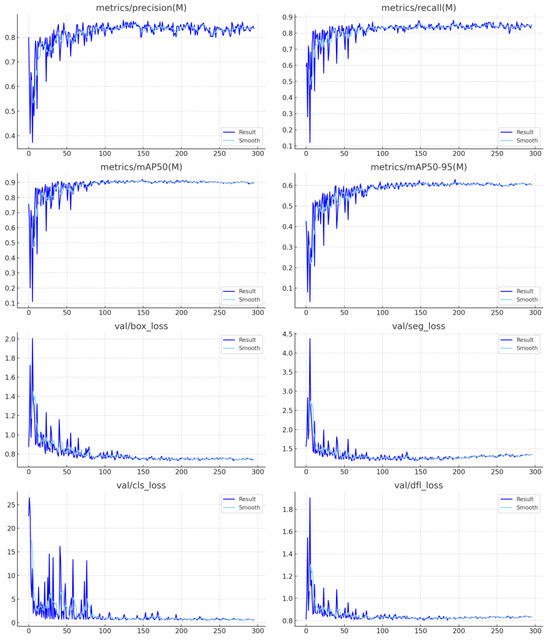

All of these loss components decreased steadily during training, as shown in Figure 9 and Figure 10. The consistent downward trend of the box, class, segmentation, and DFL losses over 100 epochs indicates stable learning. The model's inference time was approximately 4.9 ms per image, with preprocessing and postprocessing times of 0.5 ms and 1.3 ms, respectively. These timings indicate that the model is fast enough for real-time deployment in industrial settings.
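As a rough check on the real-time claim, and assuming the three stages run sequentially for each image without batching on the same hardware, the reported timings give the end-to-end latency and the corresponding throughput:

$$t_{\text{total}} = t_{\text{pre}} + t_{\text{inf}} + t_{\text{post}} = 0.5 + 4.9 + 1.3 = 6.7\ \text{ms}, \qquad \frac{1000\ \text{ms/s}}{6.7\ \text{ms/image}} \approx 149\ \text{images/s}$$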

Figure 9.

The training and validation loss curves for a multi-task learning model (YOLOv11 with segmentation and detection).

Figure 10.

The evaluation metrics over 100 epochs for the bounding box (B) and mask (M) outputs in a multi-task detection and segmentation model.

Model Analysis and Label Distribution

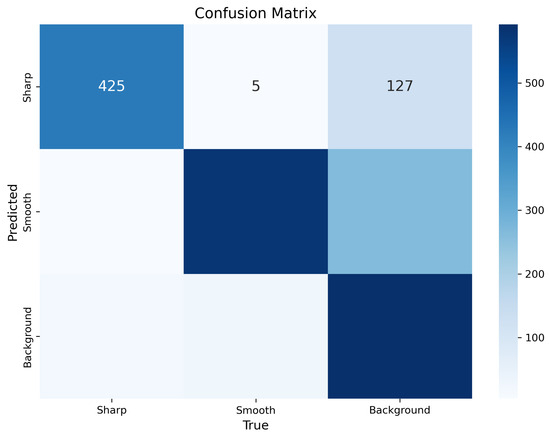

A set of analytical visualizations was generated to thoroughly assess the YOLOv11n-seg model’s classification performance and to understand the distribution of labeled objects within the dataset. These include confusion matrices (both raw and normalized), label distribution histograms, spatial density heatmaps, and a bounding box attribute correlogram.

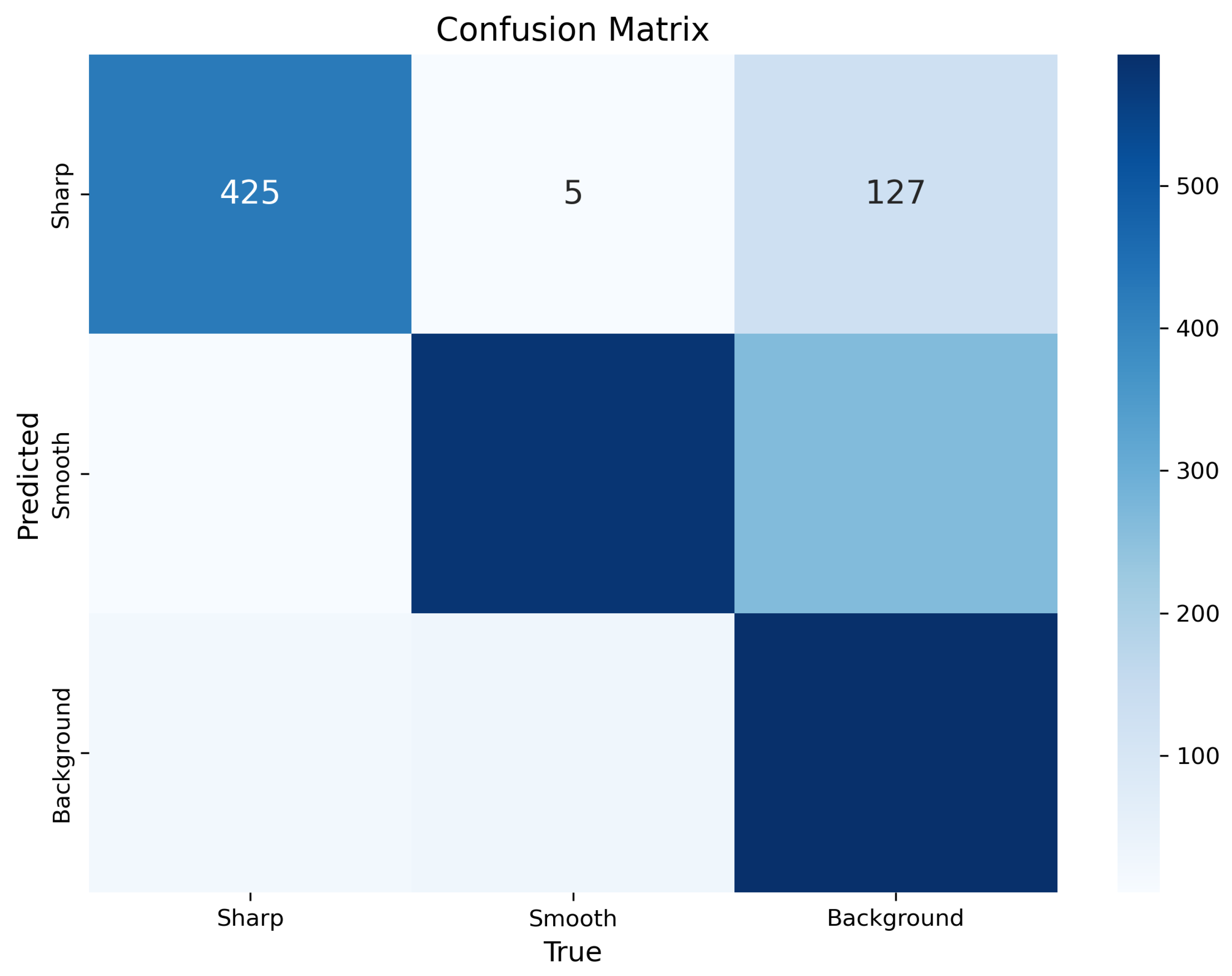

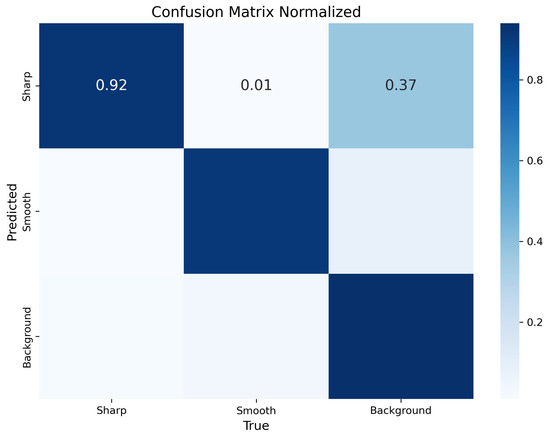

Figure 11 presents the raw confusion matrix, which illustrates the model's classification performance across three categories: sharp, smooth, and background. Of the 557 objects predicted as sharp, 425 were truly sharp, 127 were background regions, and five were smooth objects. The smooth class also performed strongly: its dark diagonal cell shows that most smooth objects were identified correctly, although a small portion was misclassified.

Figure 11.

Confusion matrix showing the raw classification counts for the YOLOv11n-seg model.

The background class appeared to be the most challenging as a significant portion of background instances (approximately 127) were incorrectly classified as sharp. This may be due to the visual similarity in texture or brightness between background noise and fine, sharp materials. Overall, the matrix reveals that, while the model performs strongly in distinguishing between sharp and smooth objects, it struggles more when dealing with background filtering, highlighting a potential direction for future model improvement.

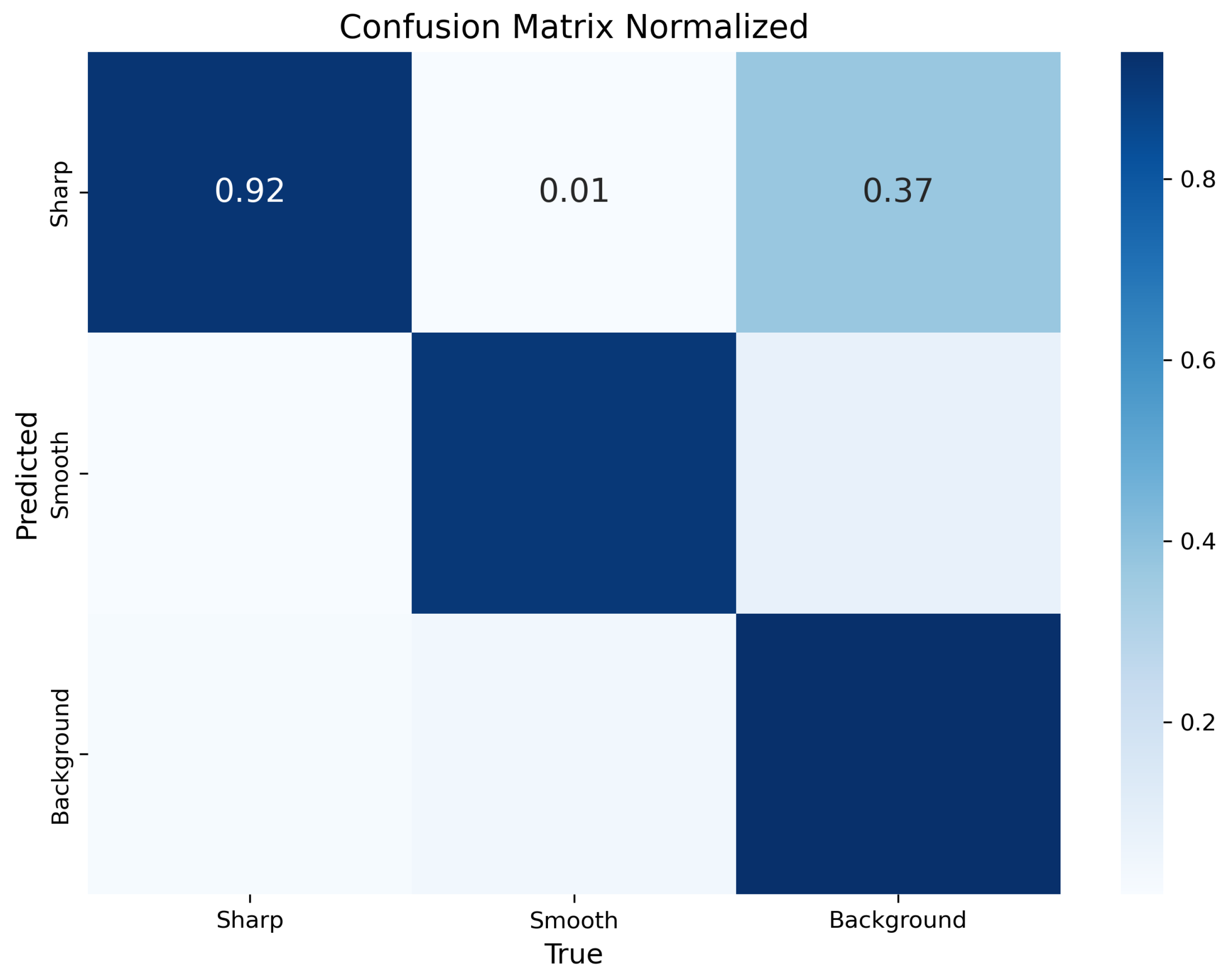

Figure 12 shows the normalized confusion matrix, providing a clearer understanding of class-wise accuracy and error proportions. The normalized values reveal that 92% of sharp samples and 89% of smooth samples were accurately classified.

Figure 12.

Normalized confusion matrix for the YOLOv11n-seg shape classification model.

Notably, 37% of the background instances were misclassified as either sharp or smooth, most of them as sharp. This indicates a tendency of the model to overpredict object presence, possibly because of the high sensitivity required to detect small, detailed particles. This trade-off could be improved through better background sampling and regularization strategies in future versions.
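The raw and normalized matrices in Figures 11 and 12 are related by simple arithmetic. The sketch below assumes the Ultralytics layout (rows are predicted classes, columns are true classes, background in the last row and column) and column normalization, so each normalized diagonal entry is the recall of that class. The function names are hypothetical, and the code is checked only on a small synthetic matrix, not on the paper's counts.

```python
from typing import Dict, List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def column_normalize(cm: Matrix) -> List[List[float]]:
    """Divide each column by its total (true-class count); empty columns become 0."""
    cols = len(cm[0])
    totals = [sum(row[j] for row in cm) for j in range(cols)]
    return [[row[j] / totals[j] if totals[j] else 0.0 for j in range(cols)] for row in cm]


def per_class_precision_recall(cm: Matrix, n_classes: int) -> Dict[int, Tuple[float, float]]:
    """(precision, recall) for the first n_classes; the final row/column is background."""
    out = {}
    for k in range(n_classes):
        tp = cm[k][k]
        predicted_k = sum(cm[k])            # row total: everything predicted as class k
        true_k = sum(row[k] for row in cm)  # column total: every true instance of class k
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / true_k if true_k else 0.0
        out[k] = (precision, recall)
    return out
```

Under this layout, background instances predicted as sharp (the 127 count) reduce the precision of the sharp class but leave its recall unchanged.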

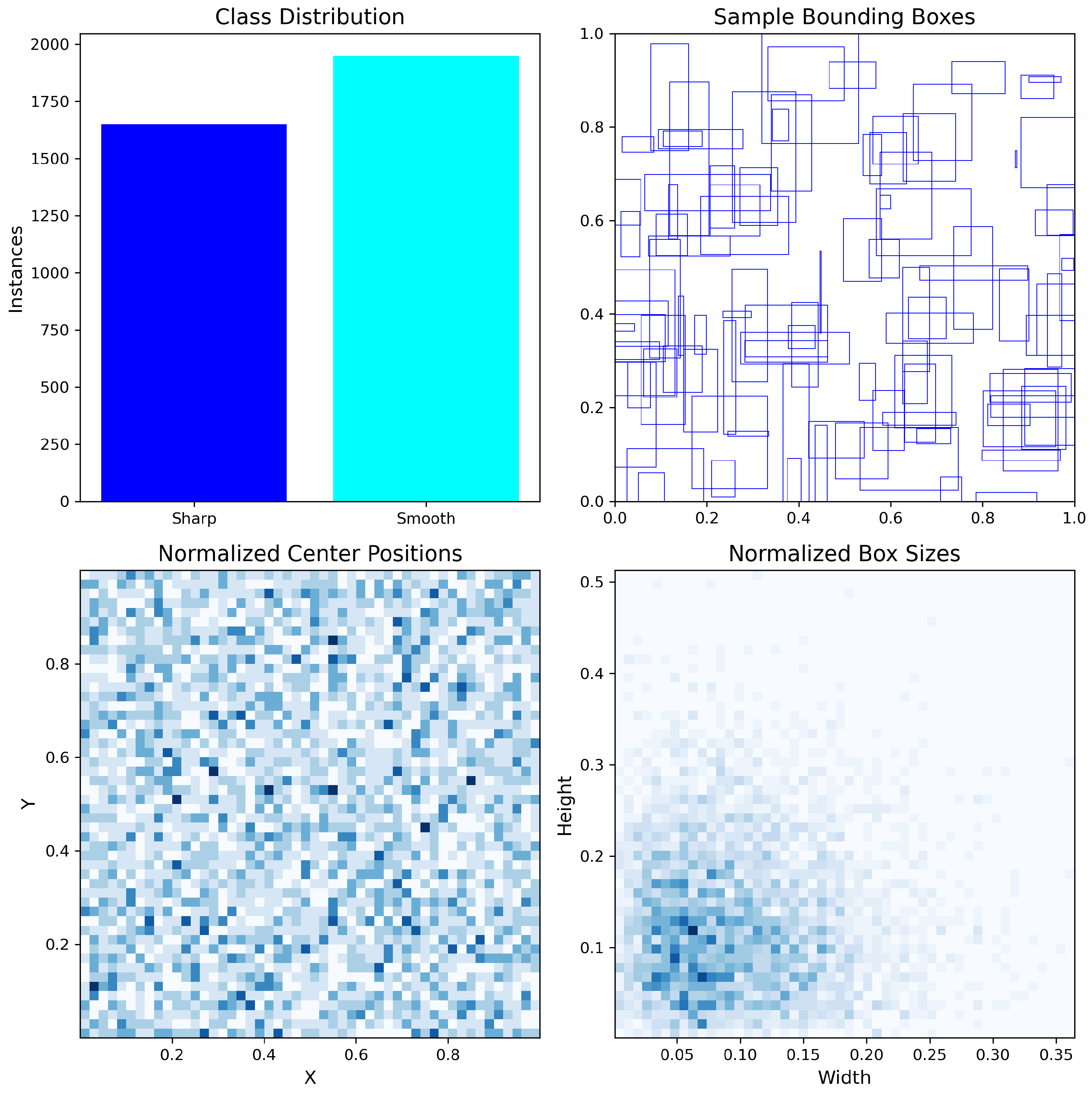

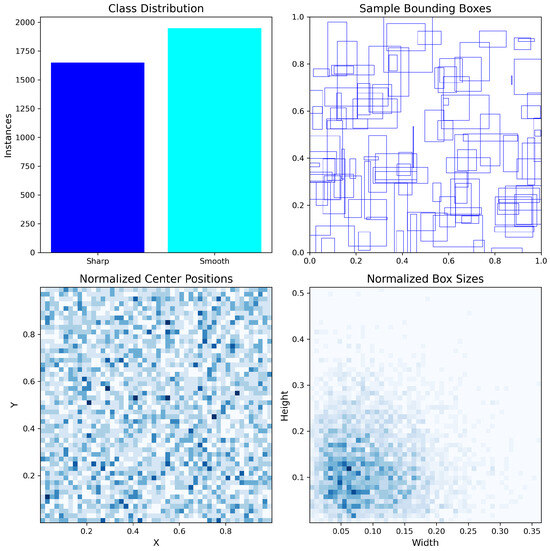

In Figure 13, a collection of label-based statistical visualizations is displayed. The top-left bar plot shows the instance count per class: approximately 1700 instances were labeled as sharp and over 2000 were labeled as smooth.

Figure 13.

Statistical overview of the labeled bounding boxes and shape categories in the training dataset.

This relatively balanced dataset helps ensure the model receives adequate learning opportunities from both classes. The top-right plot overlays all bounding boxes within the dataset, revealing that most labeled objects are concentrated toward the center of the frame. This pattern is consistent with the experimental setup, where materials flow along the center of the conveyor belt.

The bottom row of Figure 13 shows the distribution heatmaps of the bounding box coordinates. The location heatmap shows that objects were mostly centered horizontally (x ≈ 0.5) and vertically (y ≈ 0.5), confirming the conveyor alignment assumption.

The bounding box size heatmap revealed that most objects were relatively small and clustered in the lower width and height ranges. This was expected given the fine particle sizes (1–4 mm) processed in this system.

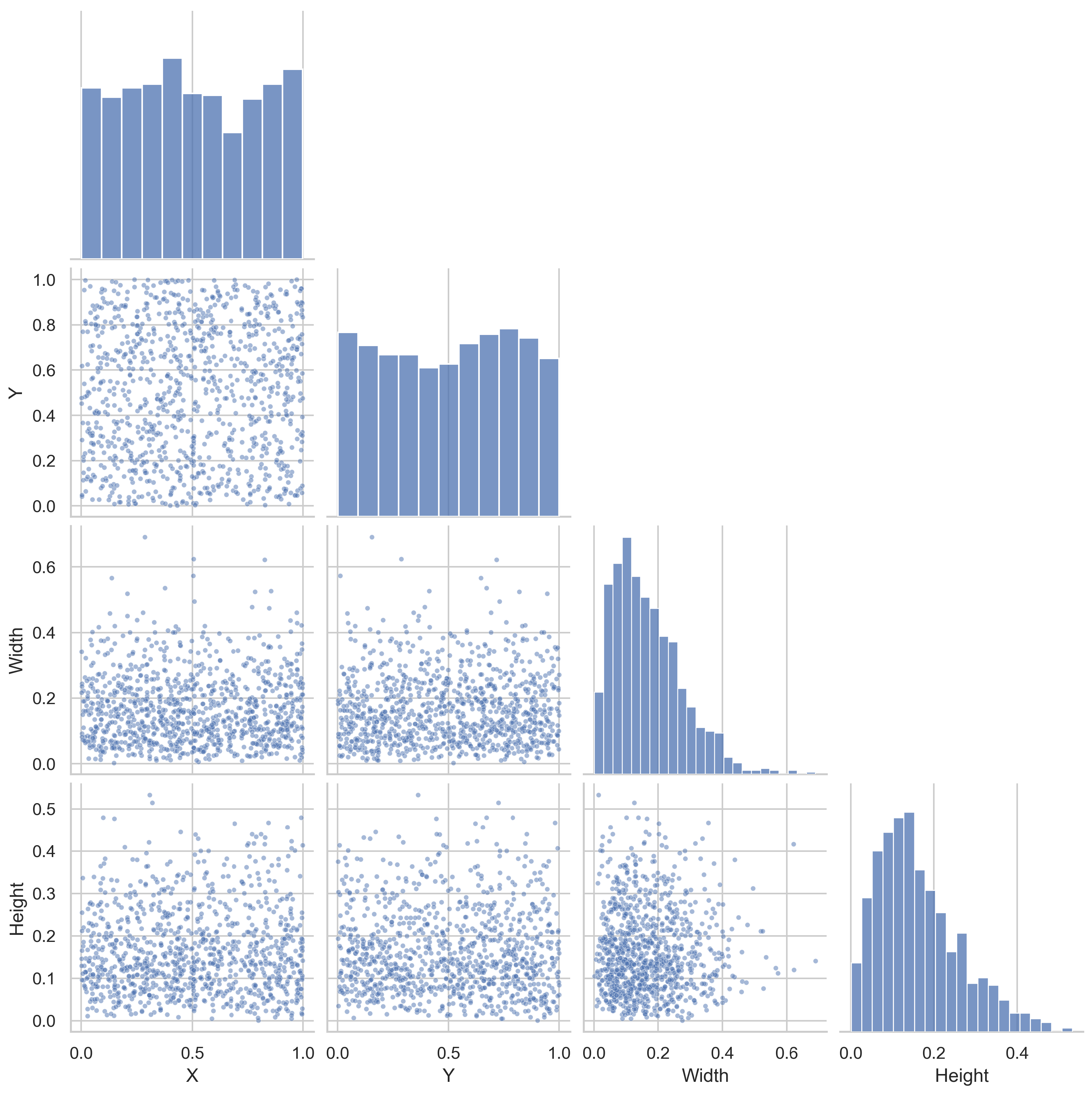

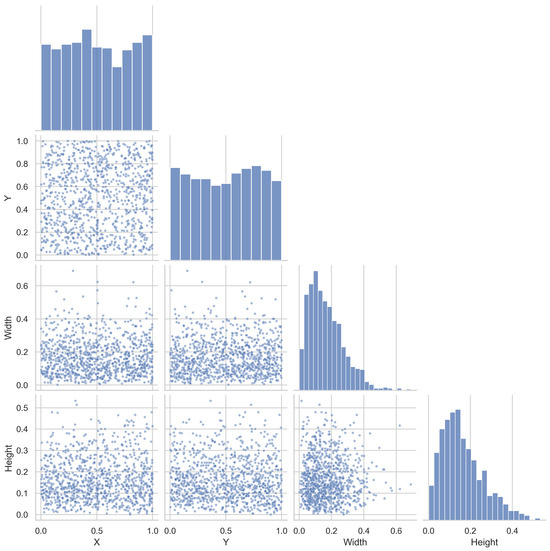

Figure 14 offers a correlogram that was used to evaluate the interdependencies between the bounding box features. The diagonal plots illustrate the marginal distributions for x, y, width, and height, where both x and y are firmly centered and both size features are skewed toward smaller values.

Figure 14.

Visualization of the statistical distribution and correlations among the normalized coordinates and dimensions of the bounding boxes used for particle detection on the conveyor belt. The diagonal plots represent the marginal histograms of each variable (x, y, width, and height), revealing that most detected objects are centered horizontally (x ≈ 0.5) but spread vertically (y varies uniformly). The lower triangles display 2D histograms that highlight the relationships between features. For instance, width and height show a mild positive correlation, while most bounding boxes tend to be relatively narrow and short, as shown by the concentration of values around lower ranges. These insights help validate dataset balance and guide preprocessing strategies for shape-aware detection models in the BECS system.

This confirms that the dataset primarily contains small objects in the central region of the image. In addition, the scatterplots in the lower triangle of the matrix show no strong correlation between object location and size, suggesting that object scale does not depend systematically on position in the frame.

Together with the confusion matrices, these visualizations indicate that the YOLOv11n-seg model distinguishes well between sharp and smooth materials.

The instance balance across classes and the centered spatial distribution of objects reinforce the quality of the training dataset. However, the tendency to confuse the background with sharp objects suggests a potential over-sensitivity that could be addressed by refining negative sampling, enhancing background annotations, or incorporating additional background-focused training samples.

3.5. Enhancing Conveyor Belt Monitoring with IoT-Based Alert Systems

In the recycling industry and separation processes, particularly in BECS systems, the conveyor belt plays a critical role in material transportation and improving separation accuracy. However, challenges, such as belt misalignment, abnormal temperature increases, and unwanted objects like ferromagnetic materials, can disrupt system performance, cause production downtime, or even lead to fire hazards [77].

Implementing an IoT-based alert system enables conveyor belt monitoring, automatic issue detection, and reduced response time. This system leverages smart sensors, computer vision, image processing, and communication technologies to analyze collected data and send immediate alerts upon detecting anomalies.

One of the most effective ways to monitor conveyor belt conditions is by integrating smart sensors and industrial cameras into an IoT network, which processes and analyses operational data [78,79]. In our project, combining various technologies has enabled more precise monitoring.

Thermal cameras have been employed to identify hot spots on the conveyor belt, which may result from friction, belt misalignment, or ferromagnetic material accumulation. Additionally, a line scan camera has been used to examine the shape and type of materials transported on the conveyor, allowing for the detection of sharp objects that could damage the belt.

Alongside these hardware components, Raspberry Pi and IoT modules [80] handle edge computing for local data processing and send immediate alerts via communication channels when anomalies are detected. The system integrates OpenCV and lightweight machine-learning models [81,82,83] for anomaly detection.

For instance, belt misalignment is detected by analyzing the movement pattern of a reference white line on the belt. Similarly, thermal data captured by cameras are processed to analyze temperature fluctuations, triggering alerts when abnormal hot spots are detected, which could indicate excessive friction or the presence of unwanted materials.

The system can also identify unauthorized objects, such as iron fragments or angular debris, which could cause belt abrasion or physical damage. These capabilities transform the system from a simple alert mechanism into an advanced analytical and preventive tool, optimizing operations and reducing unexpected failures.

Instead of relying on traditional monitoring methods, which require manual inspection and lead to delayed problem detection, the IoT-based alert system sends instantaneous notifications upon detecting anomalies. This system includes two levels of alerts. Local alerts activate an on-site alarm buzzer and warning lights, allowing operators to respond immediately. In addition, remote notifications are sent via a Telegram bot to technicians, providing thermal images and temperature analysis of the conveyor belt.

If the temperature exceeds a critical threshold (e.g., 40 °C), the system sends a text alert and initiates an automatic call to ensure that the responsible personnel receive the warning. This approach significantly improves reaction speed, minimizes the risk of equipment damage, and helps prevent sudden production halts.

A key challenge in implementing intelligent industrial systems is dealing with hardware limitations and harsh working environments (e.g., dust, environmental noise, and high energy consumption). In our project, multiple strategies were adopted to optimize system performance and increase stability.

One of these strategies is hybrid processing (edge computing + cloud computing), where local processing is performed on the Raspberry Pi and only essential data are sent to high-performance computing systems (such as a GPU-equipped laptop). Additionally, power management techniques have been applied to adjust the frame rate of cameras and regulate data transmission based on emergency levels. For instance, if the conveyor belt temperature remains safe, high-load processing is disabled, thus saving computational resources.
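The power-management policy could be expressed as a lookup from the latest ROI temperature to a processing mode. In this sketch the mode names, frame rates, and the 35 °C "watch" level are hypothetical; only the 40 °C critical threshold comes from the text. In the critical mode, the sketch also forwards data to the GPU-equipped host, reflecting the hybrid edge/cloud split described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessingMode:
    name: str
    camera_fps: int            # hypothetical capture rate for this level
    full_analysis: bool        # run the heavier image-processing chain on the Pi
    offload_to_gpu_host: bool  # forward frames to the GPU-equipped machine


SAFE = ProcessingMode("safe", camera_fps=1, full_analysis=False, offload_to_gpu_host=False)
WATCH = ProcessingMode("watch", camera_fps=4, full_analysis=True, offload_to_gpu_host=False)
CRITICAL = ProcessingMode("critical", camera_fps=8, full_analysis=True, offload_to_gpu_host=True)


def select_mode(temp_c: float, watch_c: float = 35.0, critical_c: float = 40.0) -> ProcessingMode:
    """Choose the processing level from the most recent ROI temperature."""
    if temp_c > critical_c:
        return CRITICAL
    if temp_c > watch_c:
        return WATCH
    return SAFE  # belt temperature is safe: heavy processing stays disabled
```

A frozen dataclass keeps each level immutable, so the capture loop can compare modes cheaply and only reconfigure the camera when the level changes.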

Techniques such as Gaussian filtering and region of interest (ROI) selection are used to eliminate noise and to focus on critical areas to improve image processing accuracy.

4. Results and Discussion

This section presents and analyzes the results obtained from implementing each core module of the proposed system, which were deployed on the conveyor belt of the eddy current separator (ECS). These modules include the fire and smoke detection and alert system, the conveyor belt misalignment correction mechanism, the particle shape detection model for classifying round and sharp-edged materials, and the AI-based optimization model for controlling the speed of system components. For each module, qualitative and quantitative results are reported to evaluate the system's performance, accuracy, and stability under various industrial conditions.

4.1. Evaluation of Conveyor Belt Misalignment Detection and Correction

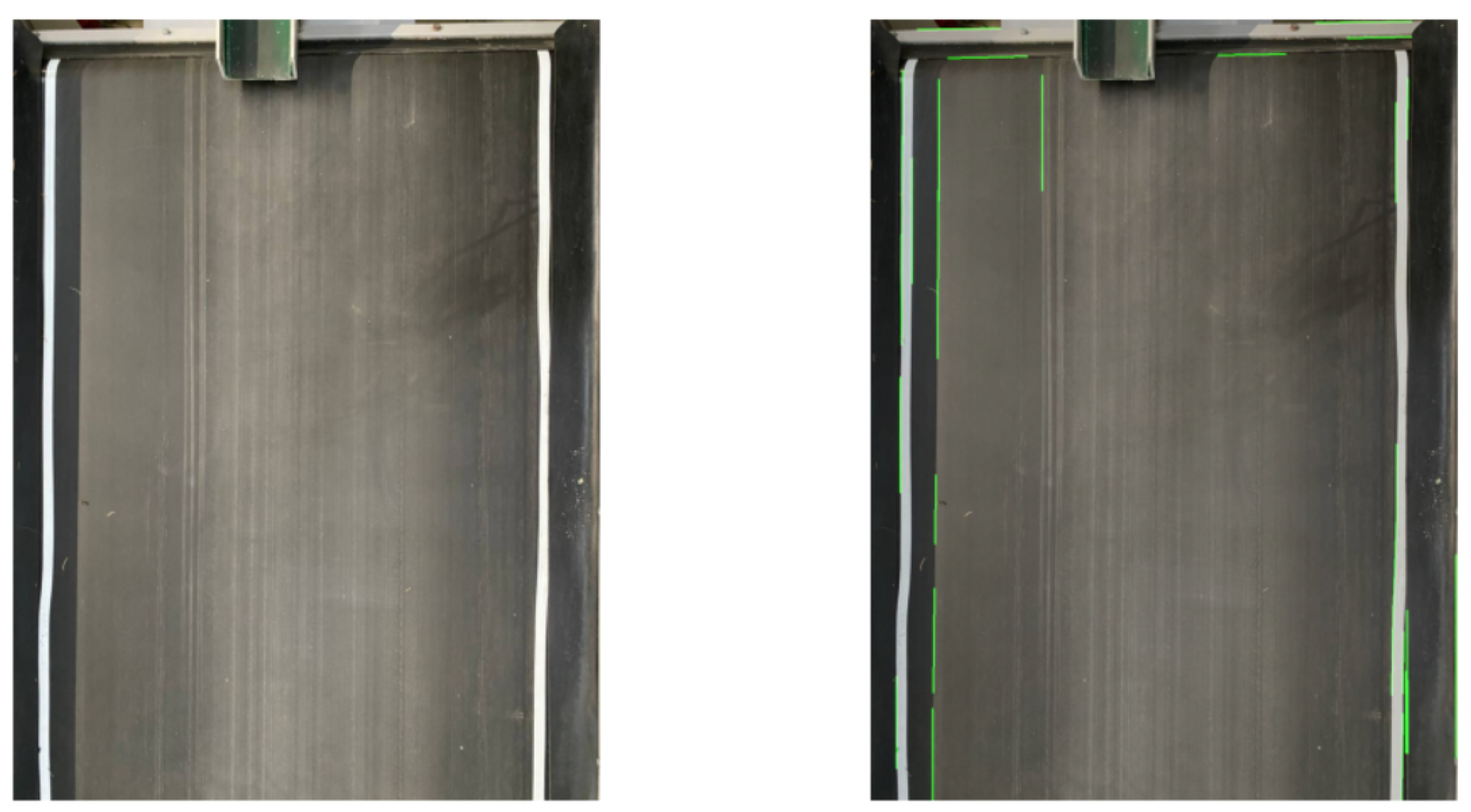

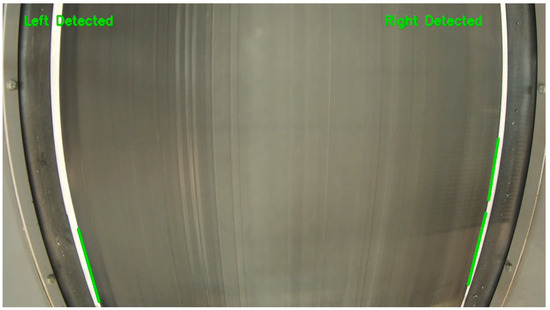

Because the structure of the industrial BECS could not be modified, line detection was tested on the actual conveyor belt, while the misalignment correction module was tested on a simulated model. A top-mounted camera captured the conveyor's edge regions, and OpenCV was used for image processing, combining Canny edge detection and the Hough line transform to detect both the left and right edge lines.

To improve detection robustness under industrial conditions (such as varying lighting or machine-induced vibration), pre-processing techniques, including Gaussian blur and region of interest (ROI) cropping, were implemented.

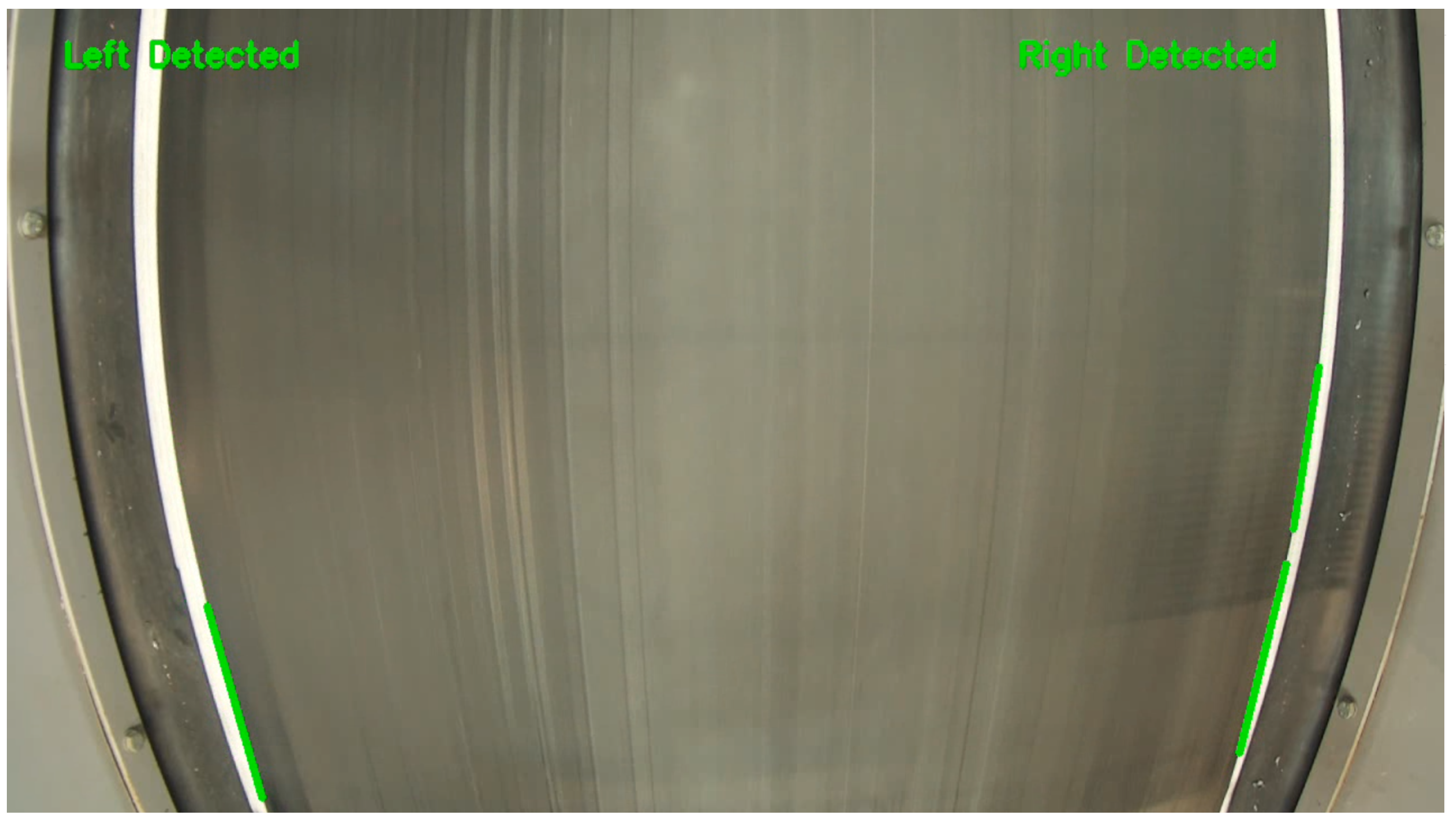

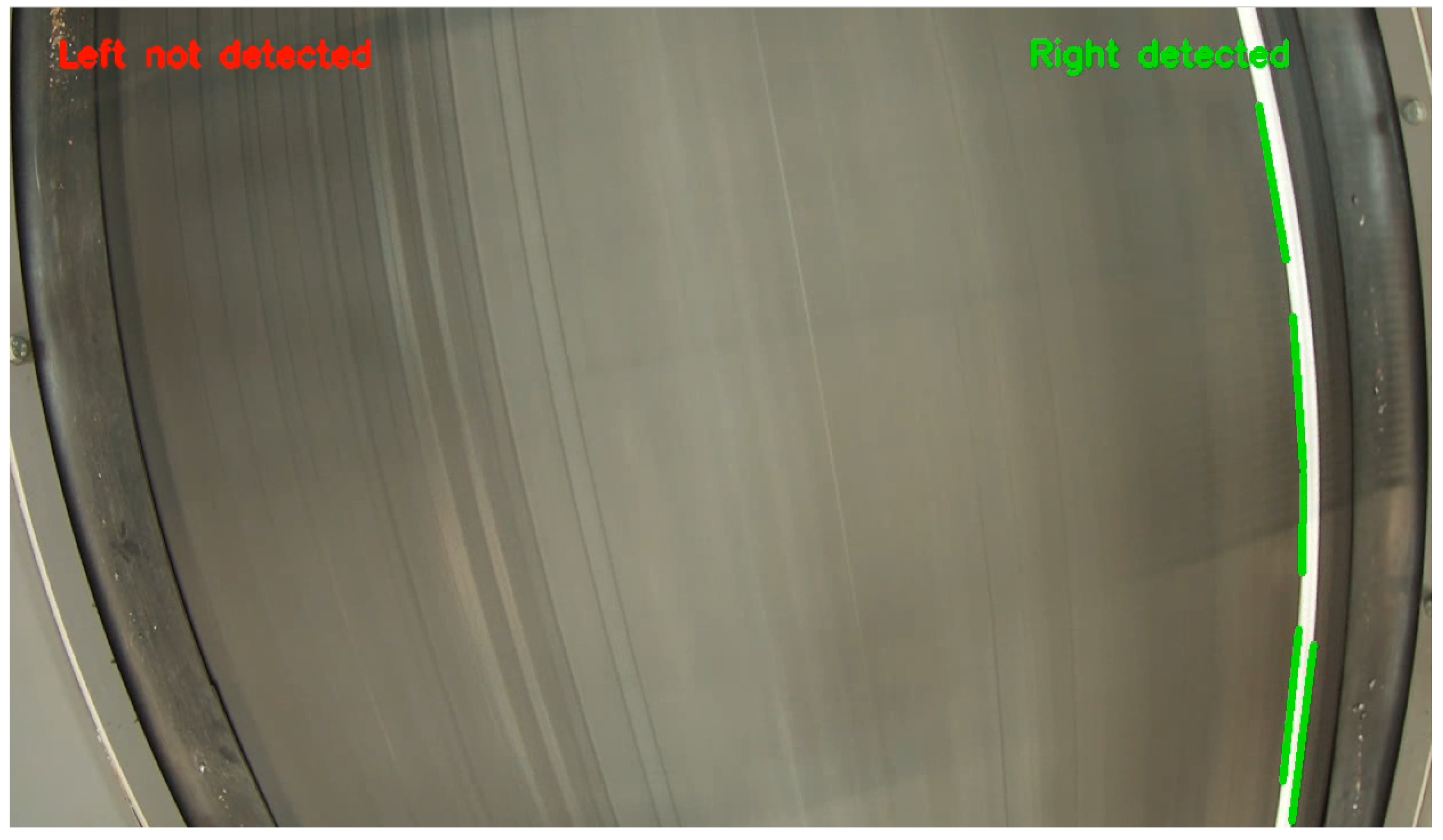

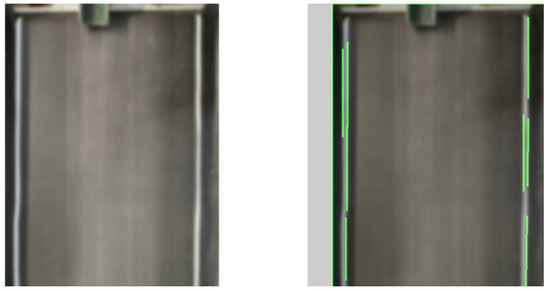

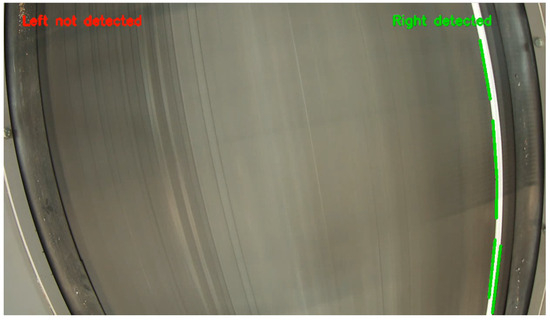

Figure 15 and Figure 16 show the visual output of the misalignment detection algorithm under two real conditions. In Figure 15, both the left and right edges are detected and marked by green vertical lines, with the on-screen indicators "Left Detected" and "Right Detected".

Figure 15.

Edge detection results: Both edges detected.

Figure 16.

Edge detection results: The left edge has not been detected.

In contrast, Figure 16 shows a misalignment event in which the left edge of the conveyor is no longer visible within the frame. The system detects this event and displays a red warning message, "Left not detected", which triggers the left-side motor for correction. This closed feedback loop is critical for keeping the conveyor centered without human intervention.

To quantify the system’s performance, four experimental conditions were defined:

- Normal Lighting (Stable Environment).

- Low Lighting (Dim Factory Conditions).

- Normal Lighting with Induced Vibration (Simulated Machine Activity).

- Low Lighting with Vibration (Worst-Case Industrial Scenario).

Despite minor degradations, the system maintained an average accuracy above 90% in all conditions, which is considered highly reliable for industrial operations. The error cases were primarily due to transient motion blur or temporary occlusion of the line markers, which were mitigated by frame-by-frame recovery logic.

The image processing time per frame was measured at approximately 0.028 s, and the mechanical response time for activating the stepper motors and correcting the conveyor alignment ranged between 0.03 and 0.05 s depending on the severity of the belt displacement. The misalignment detection system demonstrated high sensitivity, rapid response time, and robust performance under real-world constraints.
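Assuming image processing and motor actuation run sequentially, and ignoring frame-capture time, the reported figures give the latency of one detect-and-correct cycle and the maximum analysis rate:

$$t_{\text{cycle}} = t_{\text{proc}} + t_{\text{motor}} = 0.028\ \text{s} + (0.03\ \text{to}\ 0.05)\ \text{s} = 0.058\ \text{to}\ 0.078\ \text{s}, \qquad f_{\text{max}} = \frac{1}{0.028\ \text{s}} \approx 35.7\ \text{frames/s}$$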

4.2. Evaluation of Temperature Detection and Fire Prevention System

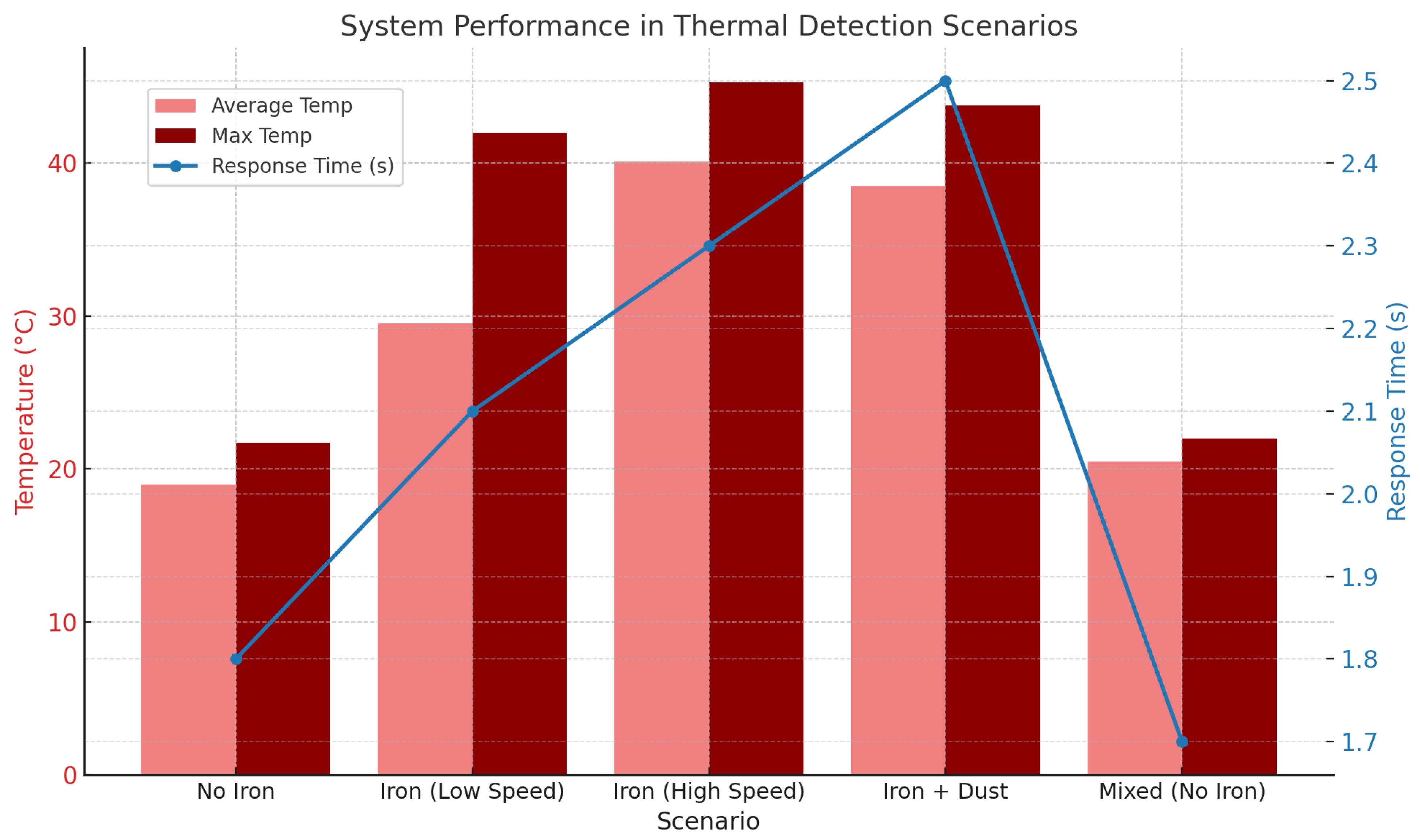

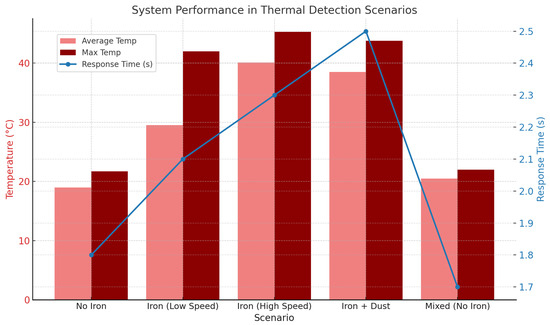

Five controlled experimental scenarios were designed to evaluate the system’s effectiveness in detecting abnormal temperature rises and preventing fire-related incidents. These included variations in material composition, conveyor belt speed, and environmental conditions, such as dust. The primary goal was to determine the system’s sensitivity, response speed, and reliability in triggering early alerts based on thermal camera data.

As summarized in Table 1, each test scenario recorded the average and maximum temperature readings and the system’s response time. These values were measured from when the temperature exceeded a predefined safety threshold to the activation of local or remote alerts.
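The response-time measurement described above, from the first reading above the safety threshold to the moment an alert fires, could be computed from a timestamped log as follows. The log format (a time-ordered list of timestamp and temperature pairs) and the function name are assumptions for illustration; the 40 °C default matches the threshold used elsewhere in the text.

```python
from typing import Iterable, Optional, Tuple


def response_time(samples: Iterable[Tuple[float, float]], alert_time: float,
                  threshold: float = 40.0) -> Optional[float]:
    """Seconds from the first above-threshold reading to the alert.

    samples: time-ordered (timestamp_s, temp_c) pairs.
    Returns None if the threshold was never exceeded.
    """
    for t, temp in samples:
        if temp > threshold:
            if alert_time < t:
                raise ValueError("alert precedes the threshold crossing")
            return alert_time - t
    return None
```

Returning `None` for scenarios that never cross the threshold keeps those runs separate from genuine response-time measurements.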

Table 1.

The final performance results of the YOLOv11n-seg model.

The results indicate that, in scenarios involving iron—particularly under high-speed or dusty conditions—the temperature rose significantly, reaching a maximum of 45.3 °C in Scenario 2. This suggests a heightened fire risk due to potential sparks or friction-generated heat.

The system’s response time remained fast, ranging from 1.7 to 2.5 s. This quick reaction window is crucial in halting processes, activating alarms, and reducing the likelihood of fire escalation.

Figure 17 presents a comparative bar graph illustrating the average and peak temperatures across scenarios, along with the system’s response time (blue line). The graph shows that scenarios with iron content resulted in higher temperatures and longer response times, while scenarios without iron maintained safe temperature ranges and faster system reactions.

Figure 17.

Performance of the temperature detection system across five test scenarios, showing the average and maximum temperatures (bar graph) and system response time (blue line).

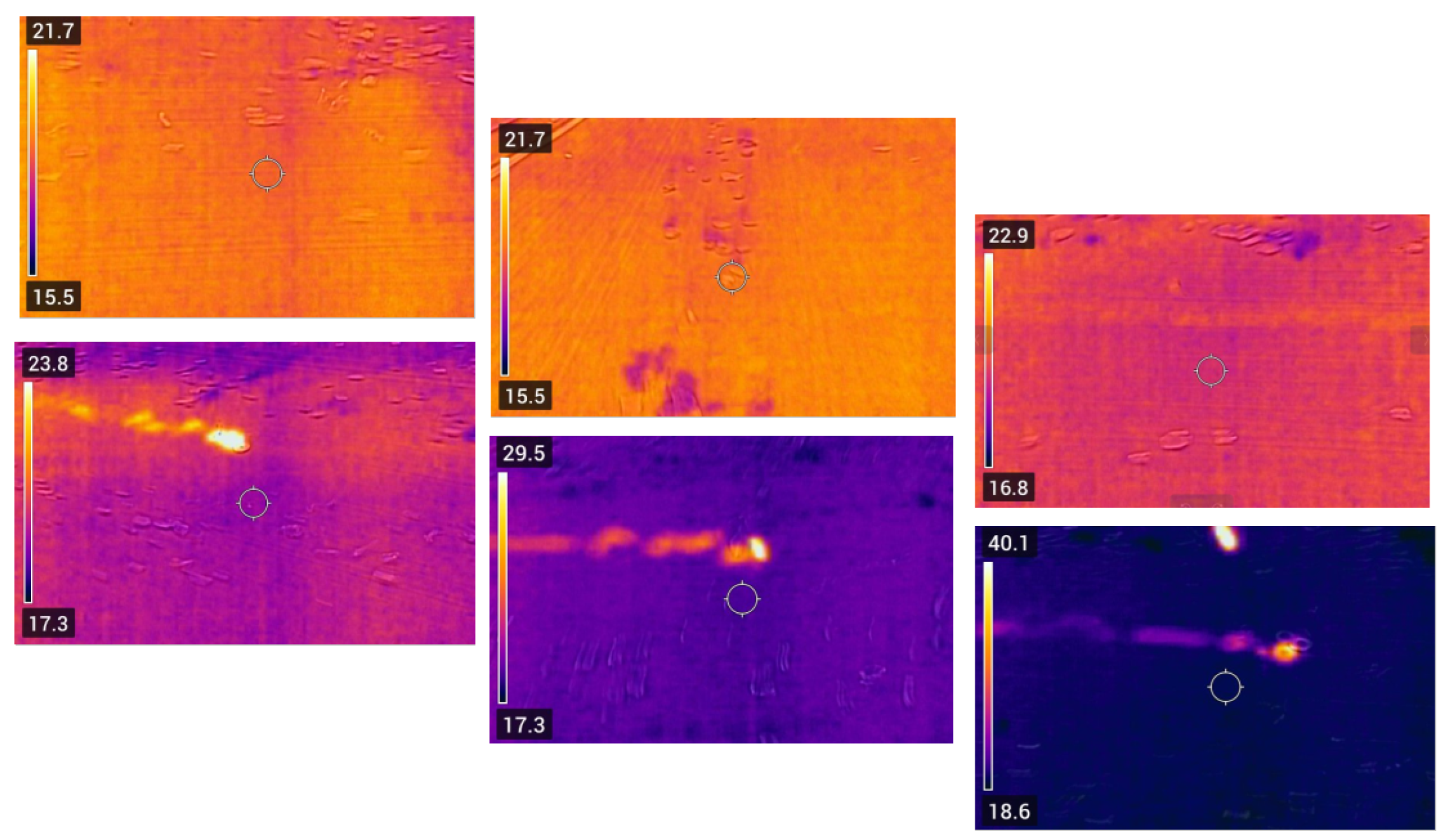

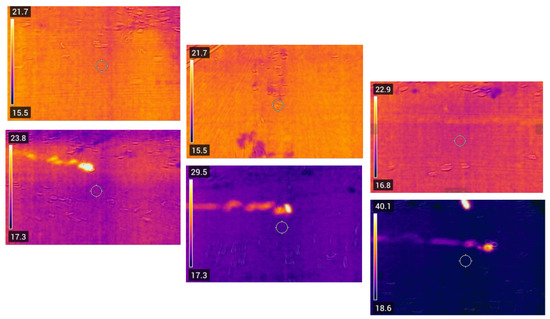

To further validate the performance under real-world industrial conditions, an additional test was conducted with a small iron object placed on the running conveyor belt of the BECS while the magnetic drum was active. The sequence of thermal frames captured during this test is shown in Figure 18.

Figure 18.

The thermal image sequence showing a real iron particle being trapped under the magnetic drum during ECS operation. A sudden temperature spike was observed due to friction and magnetic retention, triggering alert mechanisms.

As the iron particle approached the drum, it was magnetically trapped beneath the belt, resulting in a rapid, localized temperature increase to over 40 °C. The thermal detection system identified this anomaly and triggered alerts before any damage occurred. This scenario demonstrates the system’s capability to detect dangerous temperature spikes in realistic operational environments.

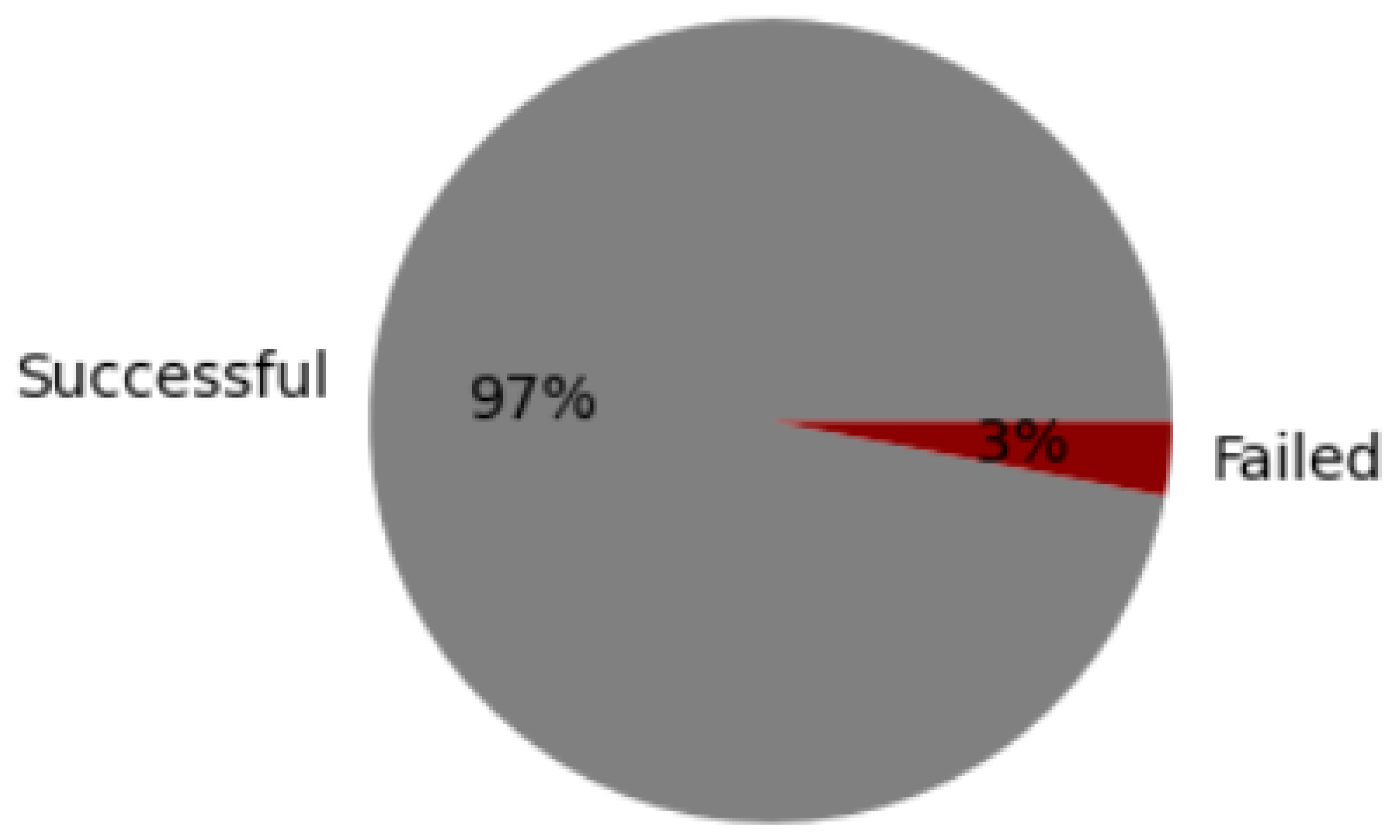

To further evaluate reliability, an aggregated analysis was conducted across all test cases. Of all detection events, 97% were correctly identified and triggered the appropriate alert mechanisms, while 3% failed, mainly due to momentary sensor latency or transitional errors. This overall success rate is illustrated in Figure 19, which visualizes the distribution of successful versus failed detections.

Figure 19.

Overall success rate of the fire detection system across all experimental scenarios.

The fire detection system demonstrated high accuracy, rapid response, and reliability in identifying early signs of hazardous conditions. The 97% success rate and response times of 1.7 to 2.5 s support the system’s suitability for real-time industrial deployment. These findings support its role as a proactive safety mechanism within the BECS conveyor setup.

4.3. Evaluation of the YOLOv11n-seg Model Performance for Material Shape Detection

To evaluate the system’s ability to detect material shapes (sharp-edged vs. rounded), an industrial line-scan camera and the lightweight YOLOv11n-seg model were employed. As a nano-scale model, YOLOv11n-seg is suited to low-power hardware such as the Raspberry Pi and other edge devices; in this project, however, it was deployed on a laptop equipped with an NVIDIA RTX 3500 Ada Generation GPU with 12 GB of memory.

- YOLO Version: Ultralytics 8.3.53

- Software Environment: Python 3.11.7, PyTorch 2.5.1 + CUDA 12.1

- Number of Layers: 265.

- Parameters: 2,834,958.

- Computational Load: 10.2 GFLOPs.

The dataset consisted of 519 labeled real-world images from the industrial line, containing a total of 1105 objects:

- 237 images with 461 sharp objects.

- 176 images with 644 rounded objects.
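As a quick check on class balance, the per-class counts listed above can be converted into object shares and average objects per image. The sketch below uses only the numbers stated in the text; the variable names are illustrative.

```python
# Per-class counts from the dataset description above.
counts = {
    "sharp": {"images": 237, "objects": 461},
    "rounded": {"images": 176, "objects": 644},
}

total_objects = sum(c["objects"] for c in counts.values())

for name, c in counts.items():
    share = c["objects"] / total_objects      # fraction of all labeled objects
    per_image = c["objects"] / c["images"]    # average objects per image
    print(f"{name}: {share:.1%} of objects, {per_image:.2f} objects per image")
```

The split is roughly 42% sharp to 58% rounded objects, a moderate imbalance, while rounded objects are about twice as densely packed per image (around 3.66 versus 1.95).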

The model was trained for 100 epochs and achieved stable convergence. Key performance metrics are summarized in Table 2.

Table 2.

The YOLOv11n-seg model’s performance metrics.

Model processing speed:

- Pre-processing time: 0.5 ms.

- Inference time: 4.9 ms.

- Post-processing time: 1.3 ms.

- Total per image: ∼6.7 ms.
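The per-image total above is the sum of the three stages, and its reciprocal gives an upper bound on model throughput. This short calculation assumes that only model time matters; camera acquisition, data transfer, and actuation are not included.

```python
# Per-image timing of YOLOv11n-seg reported above (milliseconds).
stages_ms = {"pre-processing": 0.5, "inference": 4.9, "post-processing": 1.3}

total_ms = sum(stages_ms.values())    # end-to-end model time per image
throughput_fps = 1000.0 / total_ms    # upper bound on images processed per second

print(f"total: {total_ms:.1f} ms per image, about {throughput_fps:.0f} images/s")
```

With 6.7 ms per image, the model can process roughly 149 images per second. Applied to the timings reported in Section 4.4.1 (0.7 + 6.1 + 2.1 ms), the same calculation gives 8.9 ms, or about 112 images per second.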

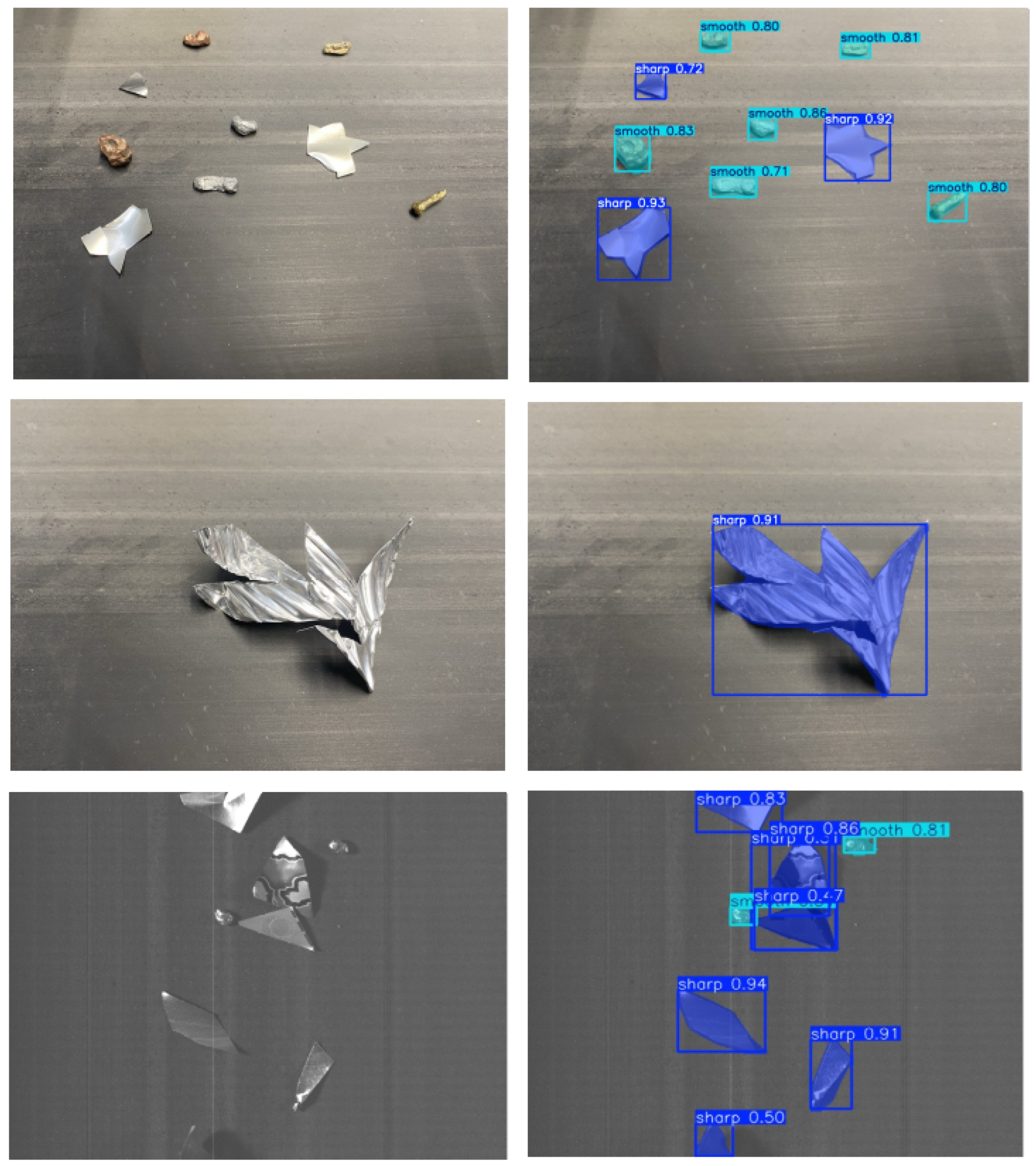

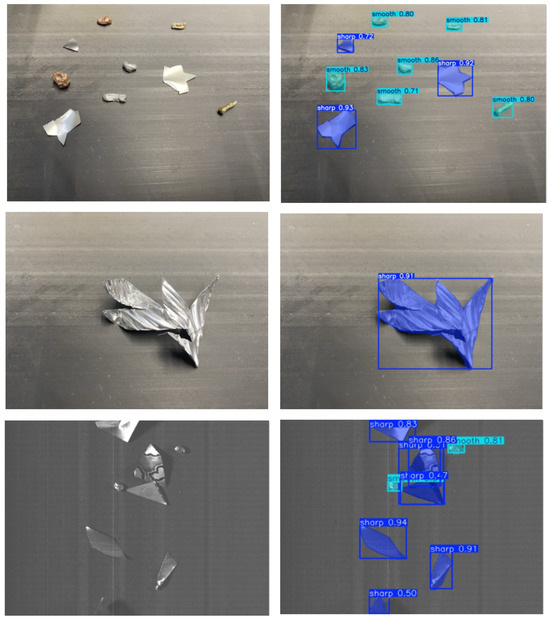

To demonstrate the model’s performance under real-world conditions, inference results were captured on multiple samples. Figure 20 shows the detection outputs on both RGB and grayscale images. This dual-mode testing was essential for evaluating the model’s adaptability to different camera settings (color and monochrome line-scan imaging).

Figure 20.

Original (left) and segmented (right) images of mixed sharp and smooth objects captured with an RGB camera.

Additionally, Table 3 presents a summary of all the training and evaluation metrics, highlighting consistency across epochs and balanced performance across classes.

Table 3.

The final performance results of the YOLOv11n-seg model.

The YOLOv11n-seg model demonstrated robust, high-precision classification of small industrial particles. Its ability to distinguish between sharp and smooth objects in both RGB and grayscale images suggests that the model generalizes across different vision systems. This is expected to reduce the risk of conveyor belt damage and to improve the quality of automated material sorting in BECS systems.

4.4. YOLOv11n-seg Model Selection and Comparison with Other Models

This study selected the YOLOv11n-seg model as the primary algorithm for identifying the shapes of fine recycled materials moving on a high-speed conveyor belt. The main reason for this choice was its high processing speed combined with high segmentation accuracy. To validate this choice, the YOLOv8n-seg model was also trained on the same dataset, and the outputs of both models were analyzed and compared.

4.4.1. YOLOv11n-seg Model Architecture

The YOLOv11n-seg model used in this project contains 265 layers and approximately 2.8 million parameters, has a computational load of 10.2 GFLOPs, and was trained using PyTorch version 2.1.1 with CUDA 12.1. Its average inference time was measured at 6.1 ms, with approximately 0.7 ms for preprocessing and 2.1 ms for postprocessing, keeping the total per-image processing time under 9 ms and making the model well suited to real-time industrial systems.

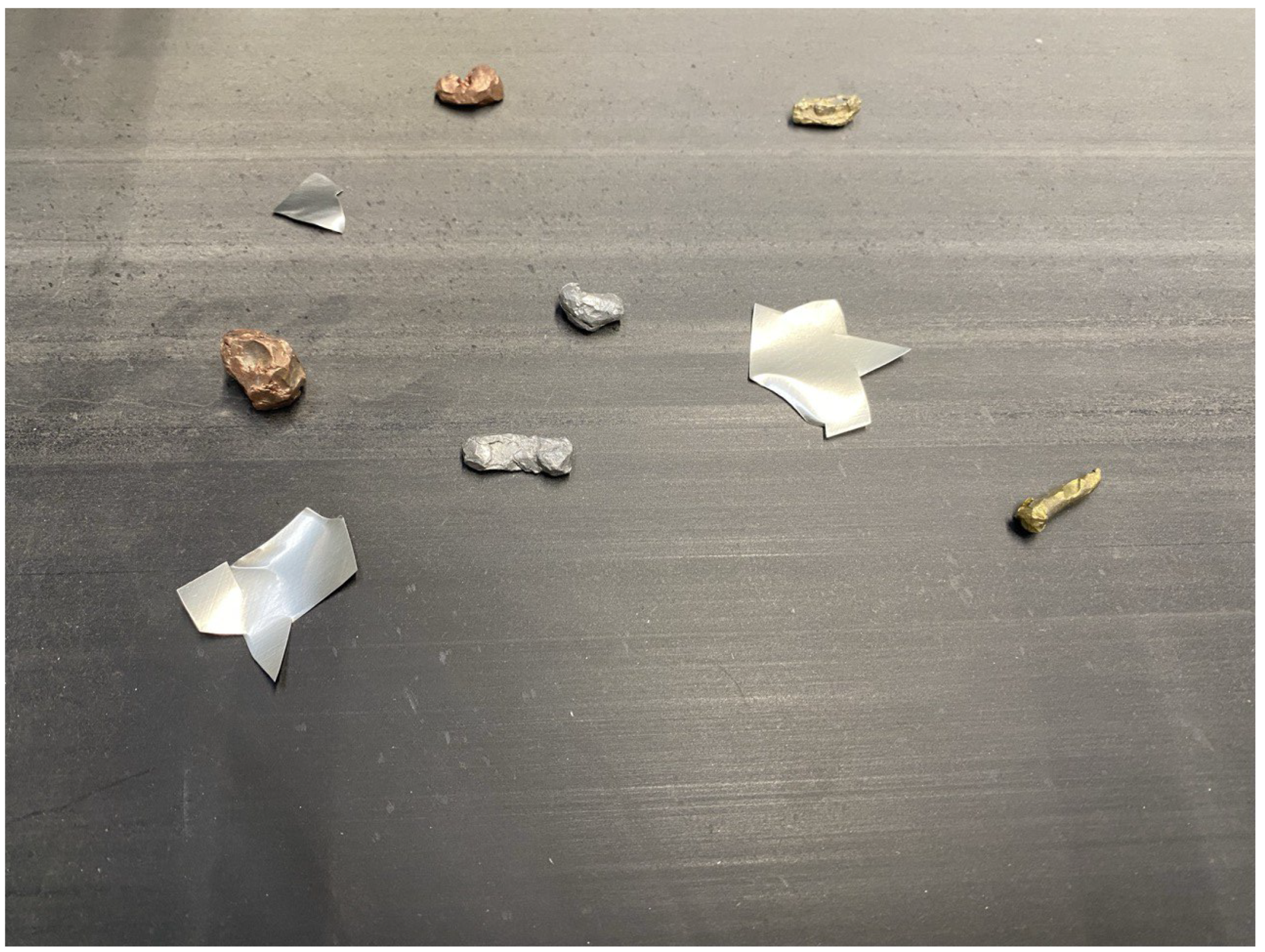

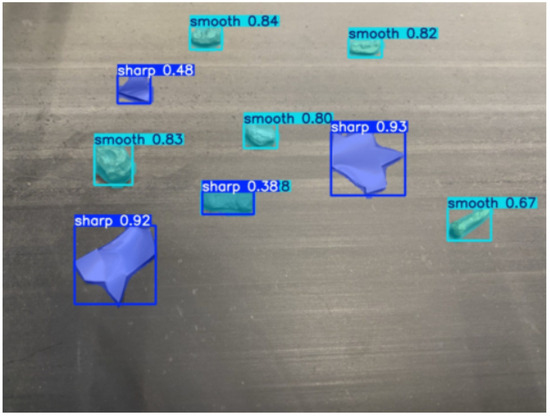

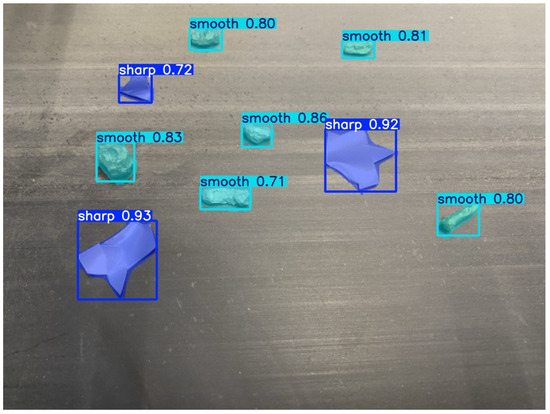

As shown in Figure 21, the sharp-edged fragments were visually distinguishable by their angular outlines and reflective surfaces, while smoother objects appeared more rounded and compact. This image served as the ground truth reference for evaluating segmentation model performance.

Figure 21.

Original test image showing a mixture of metallic and plastic particles with varying geometries.

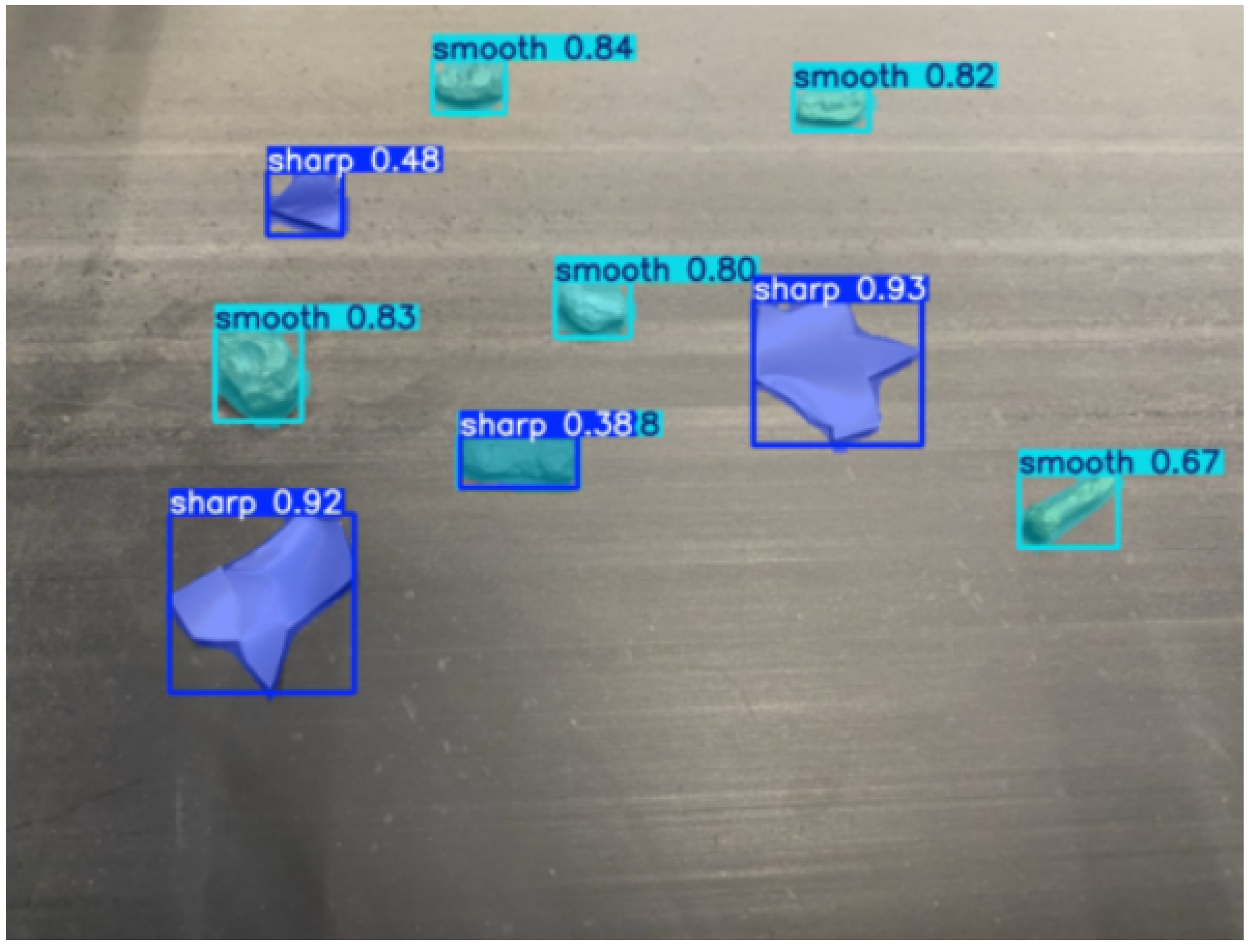

Figure 22 shows the output of the YOLOv8n-seg model on a test image of recycled materials moving on a conveyor belt. The model classifies the objects into two categories: the sharp class (angular or pointed edges), shown with blue masks, and the smooth class (rounded or soft edges), shown with cyan masks. Each detected object is assigned a confidence score that indicates how certain the model is about its classification.

At the bottom of the image, two large aluminum fragments with clearly defined sharp edges were correctly classified as sharp, with high confidence scores of 0.92 and 0.93, respectively. Several smaller, more rounded stone-like objects were correctly labeled as smooth, with confidence scores between 0.80 and 0.84. However, the image also contains two borderline cases that the model struggled to classify. One object in the center of the image, which visually appears smooth with curved edges, was misclassified as sharp with a low confidence score of 0.388. Another object near the top-left corner was also labeled as sharp, but with a low confidence score of 0.48, indicating the model’s uncertainty.

This image therefore plays an important role in evaluating YOLOv8n-seg. While the model performs well in most cases, its weaker performance on borderline examples, especially those with confidence scores below 0.5, indicates reduced reliability when edge patterns are complex or when lighting and shadow variations diminish visual distinctions. This highlights the value of additional post-processing steps or more advanced models, such as YOLOv11, for industrial scenarios that demand high classification precision.

Figure 22.

The YOLOv8n-seg model output on the test image. The model identified sharp and smooth objects with varying confidence scores. One misclassification was observed—an object with smooth edges was labeled as sharp with a low confidence of 0.388.
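One simple form of the post-processing step suggested above is to route low-confidence predictions to a separate review path instead of acting on them directly. The sketch below is hypothetical and not part of the authors’ pipeline: the `Detection` class and `split_by_confidence` helper are illustrative, the scores are a subset of those read from Figure 22, and the 0.5 review threshold is assumed from the text’s remark that predictions below 0.5 are unreliable.

```python
from dataclasses import dataclass

# Assumed review threshold: the text flags YOLOv8n-seg predictions below 0.5 as unreliable.
REVIEW_THRESHOLD = 0.5


@dataclass
class Detection:
    label: str         # "sharp" or "smooth"
    confidence: float  # model confidence score in [0, 1]


def split_by_confidence(detections, threshold=REVIEW_THRESHOLD):
    """Separate confident predictions from ones that should be re-checked."""
    accepted = [d for d in detections if d.confidence >= threshold]
    uncertain = [d for d in detections if d.confidence < threshold]
    return accepted, uncertain


# A subset of the confidence scores described for Figure 22.
detections = [
    Detection("sharp", 0.92), Detection("sharp", 0.93),
    Detection("smooth", 0.80), Detection("smooth", 0.84),
    Detection("sharp", 0.388), Detection("sharp", 0.48),
]
accepted, uncertain = split_by_confidence(detections)
for d in uncertain:
    print(f"re-check: {d.label} @ {d.confidence:.3f}")
```

In a BECS setting, one conservative policy (an assumption, not evaluated in this study) would be to treat uncertain detections as sharp, since missing a sharp fragment is the more damaging error for the belt.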

The model output in Figure 23 shows that all materials in the image were correctly classified into the two categories (sharp and smooth) with high confidence: sharp objects were identified with confidence scores of 0.92 and 0.93, and smooth objects with scores of 0.83, 0.80, and 0.84. No misclassifications were observed, and all objects were successfully detected.

Figure 23.

Model output from YOLOv11n-seg when applied to the test image. All visible particles were correctly segmented and classified into sharp (blue masks) and smooth (cyan masks) categories, with high confidence scores. The results demonstrate the model’s precise shape-based discrimination, which is essential for real-time separation and protection tasks in BECS systems.

In terms of evaluation metrics, the following results were obtained:

- mAP@50: 0.824 for sharp, and 0.820 for smooth.

- mAP@50–95: 0.646 for sharp, and 0.655 for smooth.

- Mask precision: 0.848.

- Mask recall: 0.845.

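These values indicate that the YOLOv11n-seg model segments and classifies objects accurately and in a balanced way, with mask precision and recall of about 0.85 and nearly identical mAP scores for the sharp and smooth classes.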
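Two summary figures follow directly from the metrics above: the mask F1 score (the harmonic mean of precision and recall) and the unweighted mean mAP over the two classes. The sketch below recomputes them from the reported values; it does not reproduce any figure from Tables 2 or 3.

```python
# YOLOv11n-seg validation metrics reported above.
mask_precision = 0.848
mask_recall = 0.845
map50 = {"sharp": 0.824, "smooth": 0.820}
map50_95 = {"sharp": 0.646, "smooth": 0.655}

# F1: harmonic mean of mask precision and recall.
f1 = 2 * mask_precision * mask_recall / (mask_precision + mask_recall)

# Unweighted (class-averaged) means over the two classes.
mean_map50 = sum(map50.values()) / len(map50)
mean_map50_95 = sum(map50_95.values()) / len(map50_95)

print(f"mask F1   = {f1:.3f}")
print(f"mAP@50    = {mean_map50:.3f}")
print(f"mAP@50-95 = {mean_map50_95:.4f}")
```

This gives a mask F1 of about 0.846, a class-averaged mAP@50 of 0.822, and a class-averaged mAP@50–95 of about 0.65.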

4.4.2. Comparison with YOLOv8n-seg

In the initial phase, the YOLOv8n-seg model was also trained on the same dataset (519 training and 176 validation images). This model contains 85 layers and approximately 3.2 million parameters; with more parameters, it also has a higher computational load than YOLOv11n-seg.

For YOLOv8n-seg, the following results were obtained:

- mAP@50: 0.902 for sharp, and 0.919 for smooth.

- mAP@50–95: 0.692 for sharp, and 0.642 for smooth.

However, the sample outputs of YOLOv8n-seg show that one object with smooth edges was misclassified as sharp with a confidence of only 0.388, and another object was labeled as sharp with a low confidence of 0.48.

Although YOLOv8n-seg achieved higher mAP@50 values on this dataset, a comparison of the output images of both models suggests that YOLOv11n-seg performs more reliably, especially when object shapes are similar or edges slightly overlap.

4.4.3. Industrial Constraints and Model Suitability

The conveyor belt in this project operated at high speed (over 1 m/s), demanding immediate and precise inference. In addition, the materials to be identified were very small (1 to 4 mm). High detection accuracy and fast processing were therefore essential criteria in model selection.

Considering its processing speed of under 10 milliseconds and high segmentation accuracy, the YOLOv11n-seg model was highly suitable for this industrial application.

4.4.4. Limitations of Using Mask R-CNN for Real-Time Industrial Segmentation Tasks

A preliminary study was also conducted on Mask R-CNN, where it was compared with other segmentation architectures. While this model is well known for its accurate pixel-wise segmentation, it was found to be unsuitable for our high-speed industrial application due to several critical limitations.

First, Mask R-CNN is built upon the Faster R-CNN architecture and uses a two-stage processing pipeline: the first stage generates region proposals, and the second stage classifies and segments them. This architecture significantly increases computational complexity compared with one-stage models such as YOLOv8 or YOLOv11, leading to higher image processing latency. As a result, the model is generally inappropriate for real-time or near-real-time systems [84].

Moreover, the original Mask R-CNN paper reported an inference speed of only five frames per second (FPS) using a ResNet-101-FPN backbone. In our scenario—where a conveyor belt moves at over 1 m per second—such a low speed is insufficient to segment and classify small, fast-moving objects effectively [84]. Additionally, efficient training and inference with Mask R-CNN require high-end GPUs, particularly when handling high-resolution images or large datasets. This imposes limitations in terms of deployment on resource-constrained industrial hardware, such as embedded systems (e.g., Jetson Nano or Raspberry Pi) [85].
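A rough way to see why frame rate matters here is to compute how far the belt advances while a single frame is being processed (distance = speed × time). The sketch below assumes the lower-bound belt speed of 1 m/s and compares the ~6.7 ms per-image time measured for YOLOv11n-seg in Section 4.3 with the ~5 FPS (200 ms per frame) reported for Mask R-CNN. Because the two timings come from different hardware, the comparison is indicative only.

```python
# Belt travel while one frame is processed: distance = speed x time.
BELT_SPEED_M_S = 1.0        # lower bound; the text states "over 1 m/s"
PARTICLE_MM = (1.0, 4.0)    # particle size range from Section 4.4.3

# Per-frame processing time in seconds (different hardware, so order of magnitude only).
latency_by_model = {
    "YOLOv11n-seg": 6.7e-3,  # ~6.7 ms per image (Section 4.3)
    "Mask R-CNN": 1 / 5,     # ~5 FPS reported in the original Mask R-CNN paper
}


def travel_mm(speed_m_s, latency_s):
    """Belt displacement in millimetres during one processing interval."""
    return speed_m_s * latency_s * 1000.0


for model, latency in latency_by_model.items():
    d = travel_mm(BELT_SPEED_M_S, latency)
    smallest, largest = PARTICLE_MM
    print(f"{model}: {d:.1f} mm per frame, "
          f"{d / largest:.0f} to {d / smallest:.0f} particle lengths")
```

At 1 m/s, the belt advances about 6.7 mm per YOLOv11n-seg frame but about 200 mm per Mask R-CNN frame, that is, 50 to 200 times the size of the target particles.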

Another primary concern is that Mask R-CNN performs relatively poorly on small objects. Given that our target materials often range from 1 to 4 mm in size, even minor inaccuracies in segmentation can lead to significant degradation in overall system performance [86].

Finally, although Mask R-CNN performs well in static domains, such as medical imaging (e.g., tumor or organ segmentation), document layout analysis, and static labeled datasets (like Cityscapes in autonomous driving), it is not optimized for fast-paced environments. It is therefore ill suited to dynamic recycling systems, where conveyor belts run at high speed and decisions must be made with low latency.

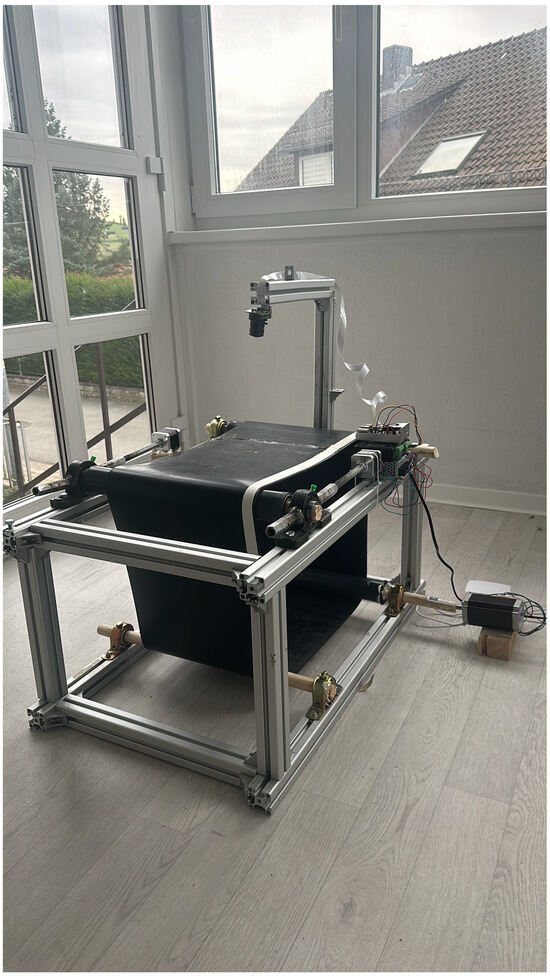

4.5. Prototyping and System Implementation

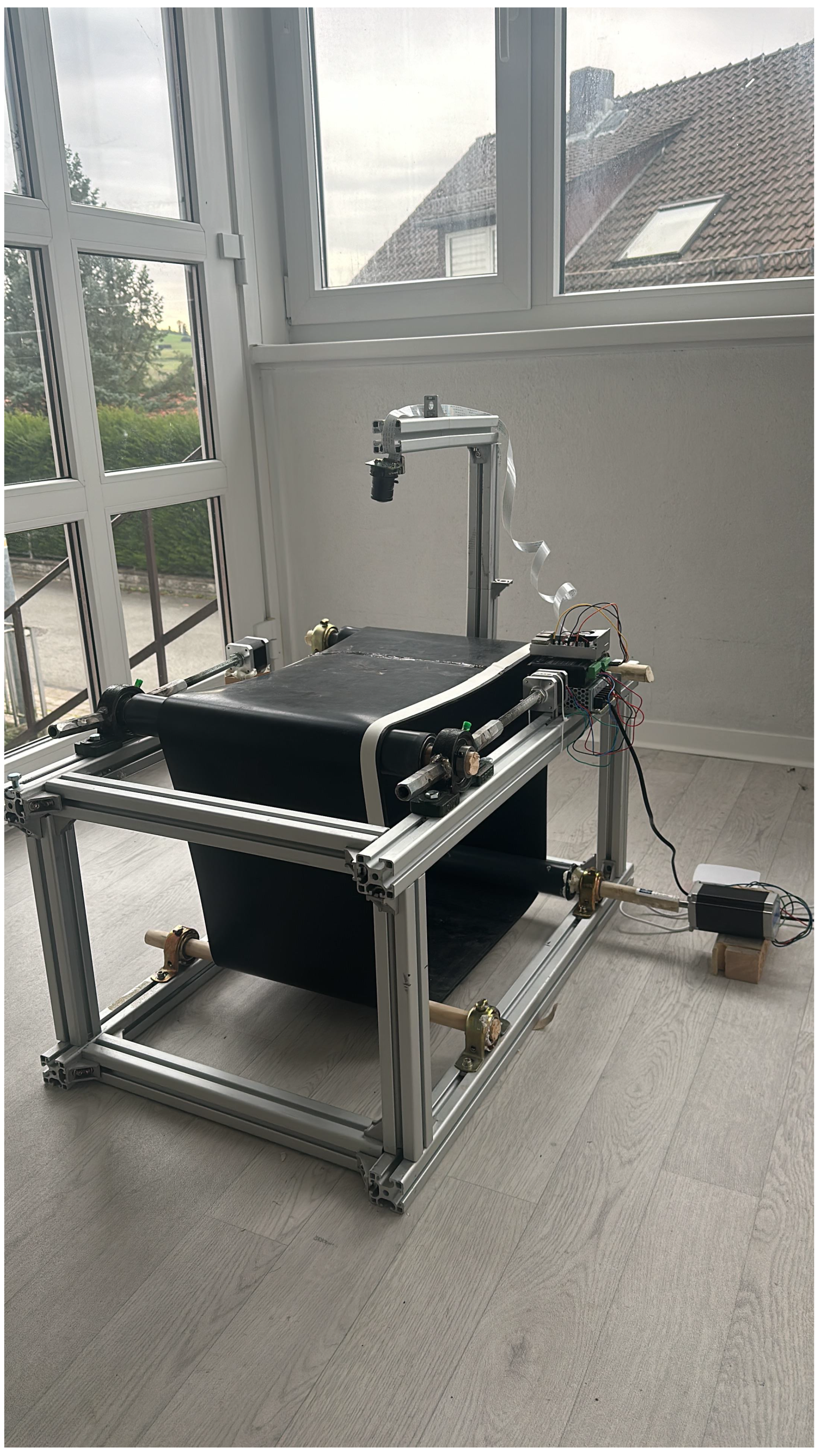

Due to operational constraints and safety regulations, deploying the proposed system directly on the full-size BECS was not feasible. The machine was shared with other research projects, which made extended experimentation impractical. To overcome this, a scaled-down prototype of the BECS conveyor belt was constructed to test and validate the proposed subsystems: conveyor misalignment detection and correction, thermal hazard detection, and material shape classification.

The primary goal of the prototype was to automate the manual correction of conveyor belt misalignment. On the original BECS, technicians used two mechanical levers to re-align the belt manually after it shifted due to load imbalance, prolonged operation, or physical displacement. These levers are visible on the scaled prototype in Figure 24, where two stepper motors were installed to replace the manual adjustments.

Figure 24.

The scaled-down prototype of the BECS conveyor belt. Stepper motors were used to replace the manual correction levers, and a vertical camera was mounted for misalignment detection.

The mini conveyor belt system replicated the original BECS structure using a lightweight aluminum-profile frame and a custom plastic belt. A vertically mounted camera was used for visual line detection, and a white band along the belt’s edge helped to detect the left and right boundaries. A compact but powerful stepper motor drove the main belt, and Raspberry Pi controllers handled both belt movement and misalignment correction. The total cost of this modular test setup was approximately EUR 350.
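The white-band detection described above can be illustrated with a minimal sketch that thresholds a grayscale camera frame, takes the mean column of the bright band pixels, and compares it with a reference position. This is not the authors’ algorithm: the brightness threshold, reference column, tolerance, and the helper names `band_center_x` and `correction` are hypothetical, and mapping "left"/"right" to stepper-motor directions depends on the mechanics of the setup.

```python
import numpy as np

# Hypothetical parameters: the text describes a white band along the belt edge seen
# by an overhead camera, but gives no thresholds or reference positions.
WHITE_LEVEL = 200      # grayscale value above which a pixel is treated as band
REFERENCE_X = 320.0    # band centre column (px) when the belt is aligned
TOLERANCE_PX = 5.0     # dead band so that pixel noise does not trigger the motors


def band_center_x(gray, white_level=WHITE_LEVEL):
    """Mean column index of the bright band pixels, or None if none are visible."""
    _, cols = np.nonzero(np.asarray(gray) > white_level)
    if cols.size == 0:
        return None
    return float(cols.mean())


def correction(gray):
    """Return (offset_px, action) where action is 'aligned', 'left', 'right' or None."""
    x = band_center_x(gray)
    if x is None:
        return None, None  # band not found: do not move the motors
    offset = x - REFERENCE_X
    if abs(offset) <= TOLERANCE_PX:
        return offset, "aligned"
    # Band drifted right in the image -> steer back left, and vice versa.
    return offset, ("left" if offset > 0 else "right")


# Example: a 480x640 frame whose 10-px band sits at columns 340-349.
frame = np.zeros((480, 640), dtype=np.uint8)
frame[:, 340:350] = 255
print(correction(frame))  # band centre 344.5 px -> offset 24.5 px, action "left"
```

The dead band keeps the stepper motors from oscillating around the reference position; a larger measured offset could also be mapped to a larger correction step, consistent with the severity-dependent response times reported earlier.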

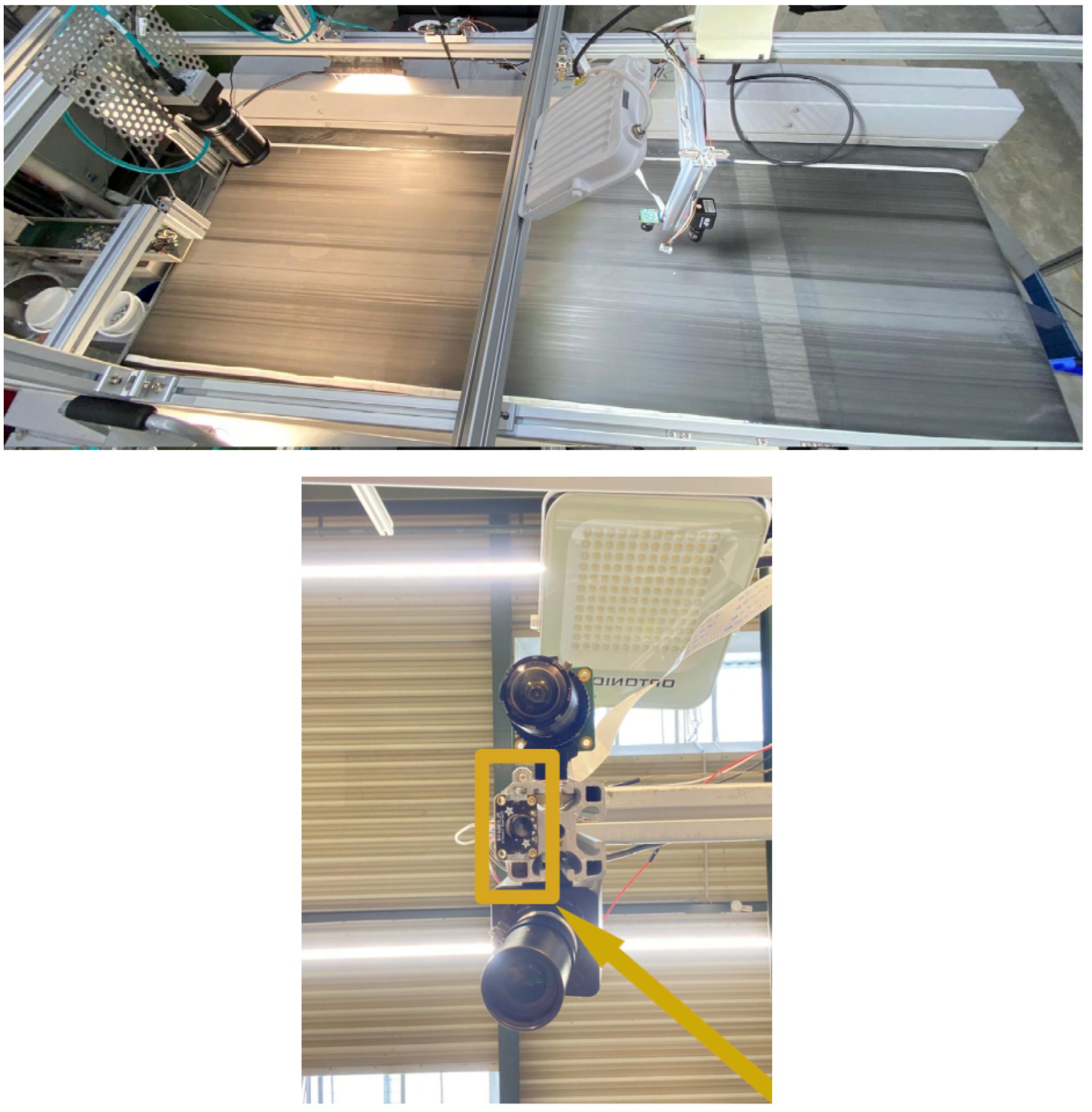

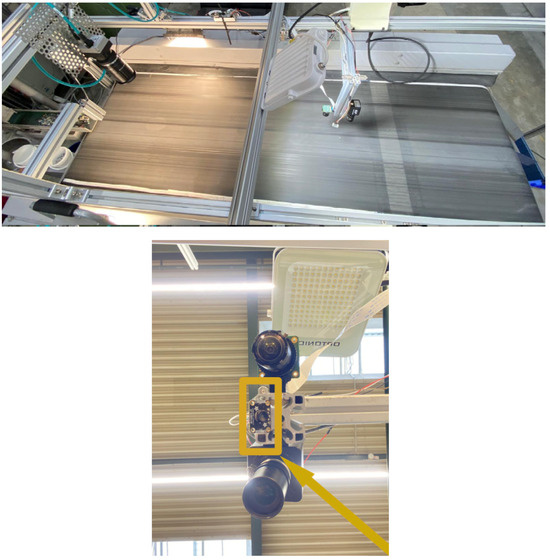

An industrial-grade line-scan camera was installed on the real BECS conveyor system to detect the material shapes on the belt. As shown in Figure 25, the camera was mounted on a rigid perforated metal bracket to allow flexible adjustment and alignment. The optical assembly included adjustable focus and aperture rings to fine-tune image capture based on conveyor speed and lighting conditions. Uniform illumination was provided by LED spotlights mounted on either side.

Figure 25.

Full BECS conveyor integration: left—line-scan camera for material detection, center—thermal camera and lighting for heat monitoring, and right—Raspberry Pi with sensors and an alert system.