1. Introduction

Diabetes mellitus (DM) is a condition affecting millions of people worldwide, and its global prevalence has increased rapidly over the past three decades. Diabetic foot is a common complication of DM, characterized by ulceration, infection, and/or destruction of deep tissues of the foot. It results from the interaction of multiple factors, primarily diabetic peripheral neuropathy (DPN) and peripheral arterial disease (PAD), both of which affect the lower extremities of diabetic patients [1].

Diabetic peripheral neuropathy is a loss of sensory function that begins in the lower extremities and is often accompanied by pain and significant morbidity. Over time, at least 50% of individuals with diabetes develop diabetic neuropathy [2].

There are various methods to assess diabetic peripheral neuropathy. In a busy clinical environment, it is often sufficient to determine whether a patient has symptoms, particularly painful DPN, and to assess their risk of foot ulceration using a monofilament examination. However, for evaluating early nerve damage and providing a more precise phenotyping of somatic and autonomic neuropathy, specialized screening and diagnostic tests are available, such as quantitative sensory testing, sudomotor function tests, neurophysiology, and skin punch biopsy. Current neuropathy endpoints lack sensitivity to detect early abnormalities before overt neuropathy develops, are invasive, or have repeatedly failed as surrogate endpoints of therapeutic efficacy in clinical trials for DPN [3].

Peripheral arterial disease is the lower-limb manifestation of systemic atherosclerotic disease. PAD may initially present with intermittent claudication, whereas chronic limb-threatening ischemia, the end stage of PAD, is characterized by rest pain and/or tissue loss. PAD is age-related and is present in over 10% of individuals aged ≥ 65 years in high-income countries [4].

Diagnosis of PAD relies on clinical assessment and diagnostic tests. The most commonly used diagnostic test is the ankle–brachial pressure index (ABPI), which compares systolic blood pressure at the ankle with that at the arm to evaluate blood flow to the limbs. However, using the ABPI to assess PAD in diabetic individuals can be challenging because of medial sclerosis, a calcification and hardening of the arterial wall. Calcified arteries can become incompressible by the blood pressure cuff, resulting in falsely elevated ABPI values [5].
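To make the index concrete, a minimal Python sketch follows. It assumes the common convention of dividing the higher of the two ankle pressures (dorsalis pedis, posterior tibial) by the higher of the two brachial pressures, and the interpretation bands used in widely cited guidelines (≤ 0.90 abnormal, 0.91–0.99 borderline, 1.00–1.40 normal, > 1.40 non-compressible). The function names and example pressures are hypothetical and are not taken from this study.

```python
from typing import Sequence


def abpi(ankle_systolic_mmhg: Sequence[float], brachial_systolic_mmhg: Sequence[float]) -> float:
    """Ankle-brachial pressure index for one leg.

    ankle_systolic_mmhg: systolic pressures at that ankle (e.g. dorsalis pedis, posterior tibial).
    brachial_systolic_mmhg: systolic pressures at both arms.
    """
    denominator = max(brachial_systolic_mmhg)
    if denominator <= 0:
        raise ValueError("brachial pressure must be positive")
    return max(ankle_systolic_mmhg) / denominator


def interpret_abpi(index: float) -> str:
    """Map an ABPI value to the conventional interpretation bands."""
    if index > 1.40:
        return "non-compressible"  # falsely elevated; the value cannot rule PAD out
    if index >= 1.00:
        return "normal"
    if index > 0.90:
        return "borderline"
    return "abnormal"  # <= 0.90 is consistent with PAD


# Hypothetical readings for illustration only.
calcified = abpi([180, 190], [120, 125])  # 190 / 125
stenotic = abpi([100, 95], [140, 135])    # 100 / 140
print(f"{calcified:.2f} {interpret_abpi(calcified)}")
print(f"{stenotic:.2f} {interpret_abpi(stenotic)}")
```

The first example shows how an incompressible, calcified artery produces an index above 1.40 that cannot be read as normal, which is the diagnostic difficulty described above for diabetic patients.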

For both DPN and PAD, the diagnostic accuracy of these standard tests is limited. The monofilament test has a reported sensitivity of 53% and a specificity of 88% for diagnosing DPN [6], while the ankle–brachial index has a reported sensitivity of 35.48% and a specificity of 97.55% for PAD [7].
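Sensitivity and specificity are ratios taken from a 2 × 2 confusion matrix: sensitivity = TP / (TP + FN) and specificity = TN / (TN + FP). The sketch below applies these definitions to a hypothetical cohort of 100 patients with DPN and 100 without, chosen only so that the counts reproduce the monofilament figures cited above; the counts are not data from [6], and the class name is illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    tp: int  # diseased, test positive
    fn: int  # diseased, test negative (missed cases)
    tn: int  # healthy, test negative
    fp: int  # healthy, test positive (false alarms)

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)


# Hypothetical cohort: 100 patients with DPN, 100 without.
monofilament = ConfusionMatrix(tp=53, fn=47, tn=88, fp=12)
print(f"sensitivity={monofilament.sensitivity:.2f}, specificity={monofilament.specificity:.2f}")
print(f"missed DPN cases: {monofilament.fn} of {monofilament.tp + monofilament.fn}")
```

Read this way, a sensitivity of 53% means that nearly half of the affected patients in such a cohort would screen negative.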

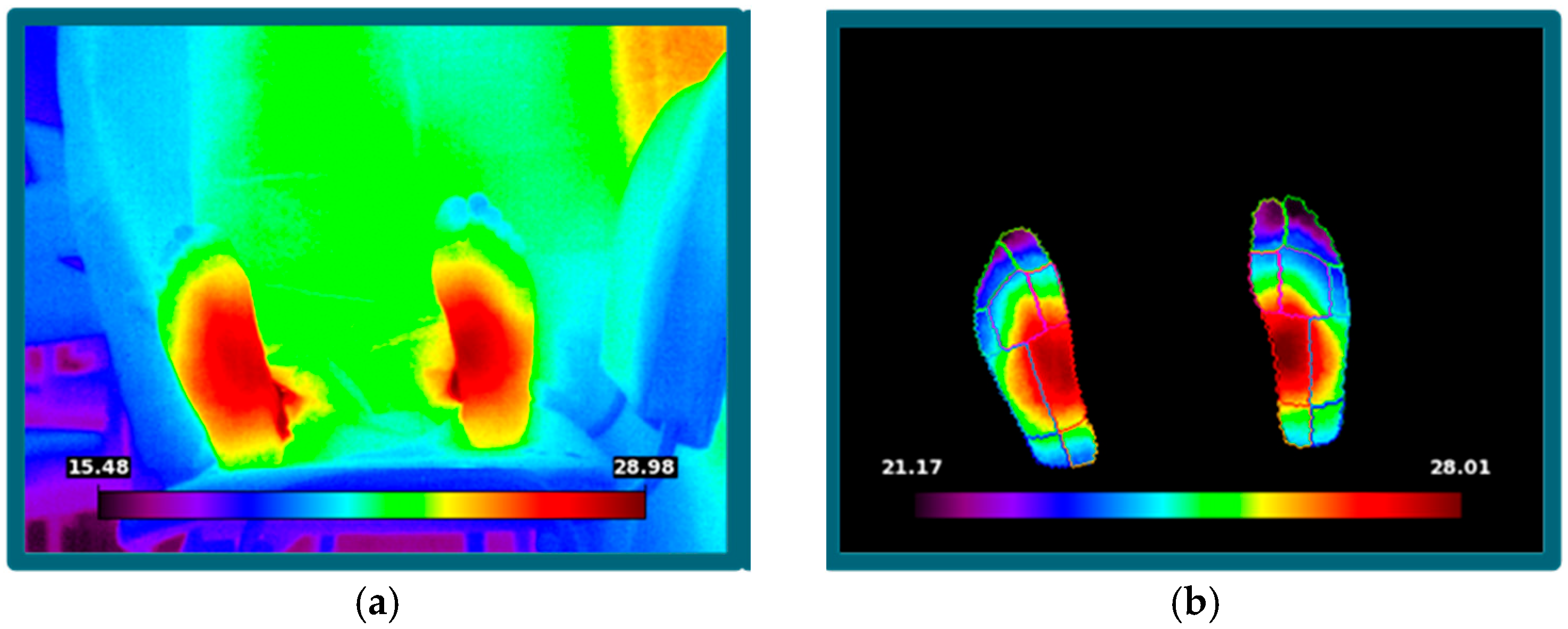

Within this context, infrared thermography (IR) is emerging as a promising method for supporting diagnostic processes: it detects the infrared radiation emitted by the body and visualizes the corresponding changes in skin temperature.

Hardy [8] proposed that, because the skin plays a role in regulating core body temperature, its physiological processes and thermal properties are influenced by various factors. These factors change in the presence of disease, making infrared measurements useful for diagnostic purposes.

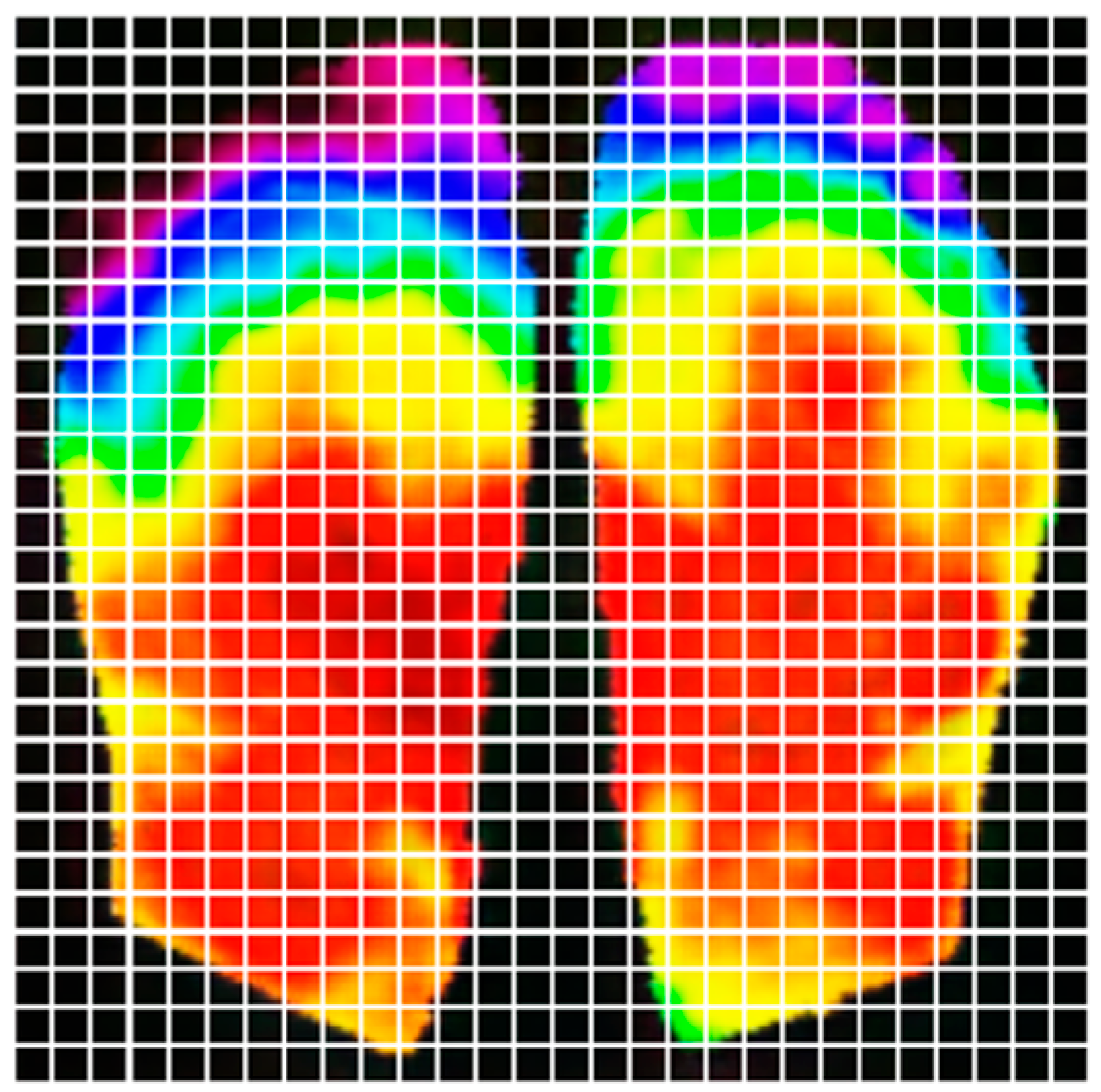

Since then, numerous studies have investigated the use of thermographic imaging in diagnosing diabetes-related complications. Zhou et al. [9] explored the application of infrared thermal imaging technology for the early diagnosis of diabetic peripheral neuropathy. Their study identified temperature changes in plantar blood vessels associated with mild DPN, proposing a non-invasive, reliable, and early diagnostic method. They concluded that infrared thermal imaging is a dependable tool for detecting early microvascular abnormalities linked to DPN.

Padierna et al. [10] developed a non-invasive methodology using infrared thermography and machine learning models to characterize peripheral arterial disease in patients with type 2 diabetes mellitus. Their findings indicated that infrared thermography, combined with machine learning, is a reliable tool for PAD detection in diabetic patients.

Infrared thermography could therefore complement existing tests and improve diagnostic performance. An infrared camera captures the infrared radiation emitted by the human body through its lens and converts it into temperature data via photoelectric conversion. The technique is non-invasive, contact-free, cost-effective, reliable, and rapid, and it is safe for both patients and healthcare professionals.
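The conversion from radiation to temperature can be illustrated with the Stefan–Boltzmann law. The sketch below is an idealized model, not the processing of any particular camera: it assumes a grey-body skin surface with emissivity ε = 0.98 (a value commonly used for human skin) and accounts for the fraction (1 − ε) of ambient radiation reflected by the skin, so that the flux leaving the skin is M = εσT_skin⁴ + (1 − ε)σT_amb⁴. Real thermal cameras measure only a limited wavelength band and rely on factory calibration; the function names are hypothetical.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4
KELVIN = 273.15


def received_exitance(skin_c: float, ambient_c: float, emissivity: float = 0.98) -> float:
    """Radiant flux density (W/m^2) leaving the skin: emitted part plus reflected ambient part."""
    t_skin = skin_c + KELVIN
    t_amb = ambient_c + KELVIN
    return emissivity * SIGMA * t_skin**4 + (1 - emissivity) * SIGMA * t_amb**4


def skin_temperature(exitance: float, ambient_c: float, emissivity: float = 0.98) -> float:
    """Invert the model above to recover skin temperature in degrees Celsius."""
    t_amb = ambient_c + KELVIN
    emitted = exitance - (1 - emissivity) * SIGMA * t_amb**4  # remove reflected ambient radiation
    return (emitted / (emissivity * SIGMA)) ** 0.25 - KELVIN


flux = received_exitance(skin_c=30.0, ambient_c=22.0)
print(f"{flux:.1f} W/m^2 -> {skin_temperature(flux, ambient_c=22.0):.2f} °C")
```

Subtracting the reflected term before taking the fourth root is what makes the ambient (reflected) temperature a parameter that must be set correctly during acquisition.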

Artificial intelligence (AI), particularly deep learning, can offer substantial advantages over traditional statistical analysis in interpreting thermographic images for the diagnosis of DPN and PAD. Whereas statistical methods rely on predefined features and thresholds, AI models learn complex, non-linear patterns directly from the data. This can yield higher sensitivity and specificity, especially in early-stage or subclinical cases. Furthermore, AI models can integrate spatial and temporal information across images, which may further enhance diagnostic accuracy.

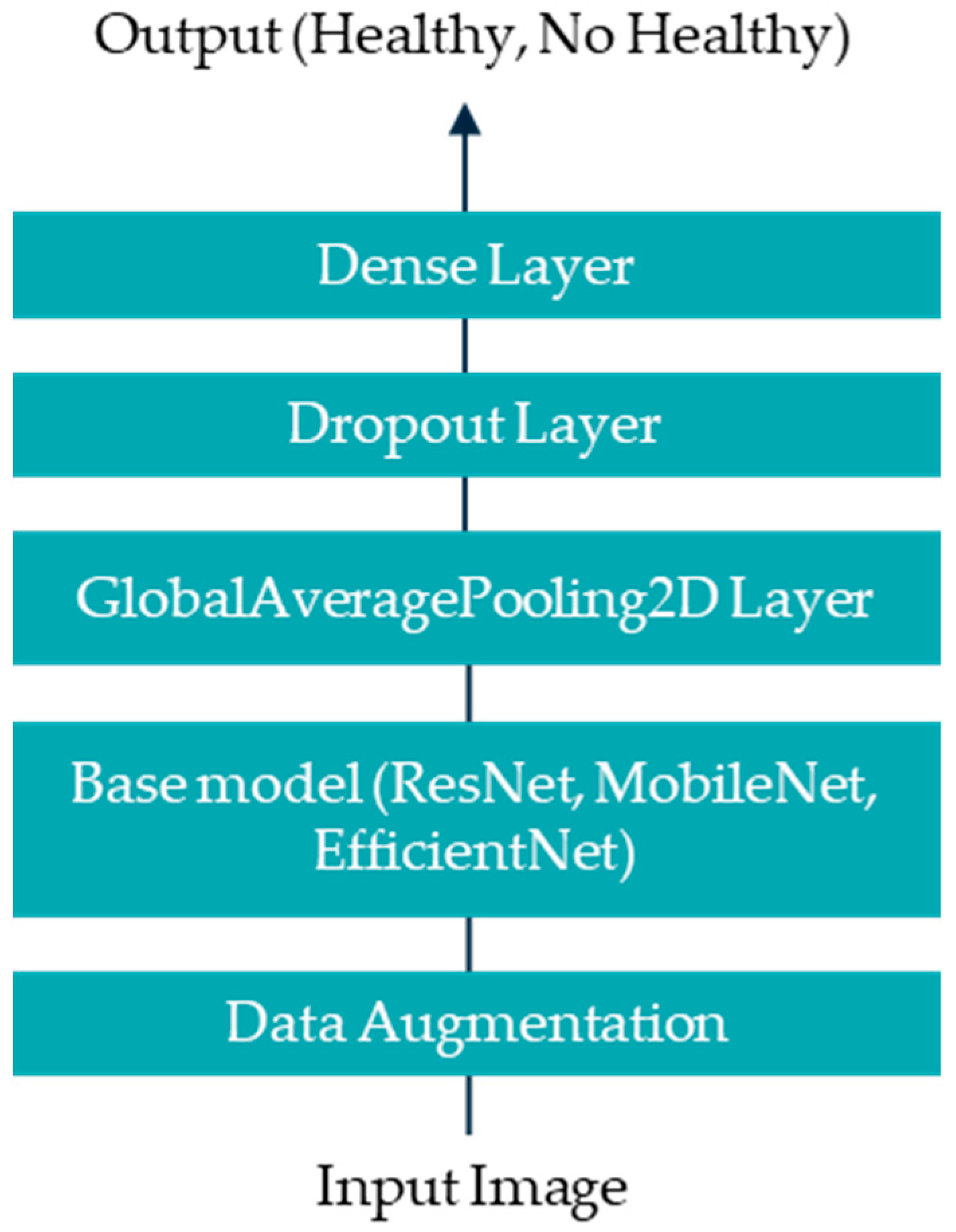

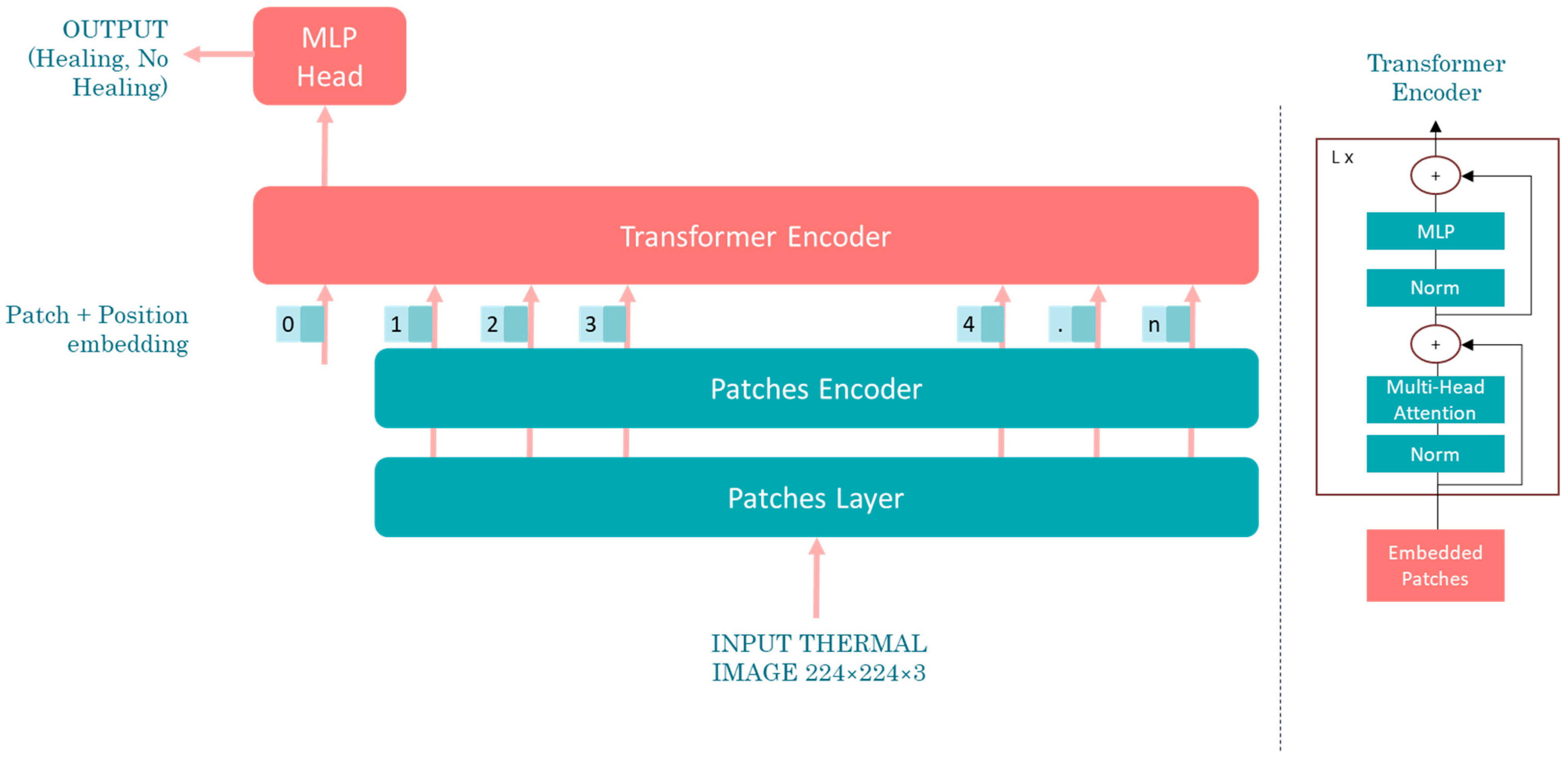

This study aims to develop a deep learning-based methodology for the identification of diabetic peripheral neuropathy (DPN) and peripheral arterial disease (PAD) using infrared thermographic features extracted from the plantar surface of the feet. To achieve this, we trained and evaluated multiple convolutional neural network (CNN) architectures and a vision transformer (ViT) model, specifically adapted to small datasets. The models were designed to automatically classify patients as either healthy or affected by DPN and/or PAD based on subtle temperature distribution patterns. Ultimately, this approach seeks to support the development of non-invasive, accessible, and objective diagnostic tools that can enhance early detection and the personalized management of diabetes-related foot complications such as DPN and PAD.
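As an illustration of what simple plantar thermographic features can look like, the sketch below computes per-foot temperature statistics and a contralateral asymmetry score from two temperature maps. It assumes the left and right plantar images are already segmented (boolean masks) and aligned, so that mirroring one foot maps it onto the other. These hand-crafted features are shown only for intuition and are not the models' inputs; the CNN and ViT models learn their features directly from the images. All function names are hypothetical.

```python
import numpy as np


def foot_statistics(temps_c: np.ndarray, mask: np.ndarray) -> dict[str, float]:
    """Summary statistics of the plantar temperature inside a binary foot mask."""
    values = temps_c[mask]
    return {
        "mean": float(values.mean()),
        "std": float(values.std()),
        "max": float(values.max()),
    }


def mean_asymmetry(left_c: np.ndarray, left_mask: np.ndarray,
                   right_c: np.ndarray, right_mask: np.ndarray) -> float:
    """Mean absolute left-right temperature difference after mirroring the right foot.

    Assumes both images share the same shape and are aligned, so that a
    horizontal flip maps each right-foot pixel onto the matching left-foot site.
    """
    right_mirrored = np.fliplr(right_c)
    overlap = left_mask & np.fliplr(right_mask)  # pixels inside both feet
    return float(np.abs(left_c - right_mirrored)[overlap].mean())


# Synthetic 4x4 maps, for illustration only.
mask = np.ones((4, 4), dtype=bool)
left = np.full((4, 4), 30.0)
right = np.full((4, 4), 31.5)
print(foot_statistics(left, mask), mean_asymmetry(left, mask, right, mask))
```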

4. Discussion

This study demonstrated the integration of advanced artificial intelligence models, including convolutional neural networks (CNNs) and vision transformers (ViTs), with thermographic imaging for the diagnosis of diabetic peripheral neuropathy and peripheral arterial disease.

The comparative analysis revealed that ResNet-50 outperformed the other CNN architectures (MobileNet V1 and EfficientNetV2 B0) and the vision transformer, achieving an accuracy of 95.00%, a sensitivity of 100.00%, and a specificity of 90.00%. These results also compare favorably with the reported performance of existing diagnostic methods: a sensitivity of 53% and a specificity of 88% for the monofilament test (DPN), and a sensitivity of 35.48% and a specificity of 97.55% for the ankle–brachial index (PAD), although those figures come from different studies and populations.
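Accuracy depends on class balance: accuracy = p · sensitivity + (1 − p) · specificity, where p is the proportion of affected cases in the test set. The sketch below (function name hypothetical) shows that the three reported ResNet-50 figures are mutually consistent for a balanced test set (p = 0.5), and how the same sensitivity and specificity would give a different accuracy at another prevalence. The actual class proportions of the test set are not restated in this section, so p here is an assumption.

```python
def accuracy_from_rates(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Expected accuracy given sensitivity, specificity, and the share of positive cases."""
    if not 0.0 <= prevalence <= 1.0:
        raise ValueError("prevalence must lie in [0, 1]")
    return prevalence * sensitivity + (1.0 - prevalence) * specificity


# Reported ResNet-50 rates: sensitivity 1.00, specificity 0.90.
for p in (0.5, 0.2):
    print(f"prevalence={p:.1f}: accuracy={accuracy_from_rates(1.00, 0.90, p):.3f}")
```

Because accuracy shifts with prevalence, sensitivity and specificity are the more directly comparable figures when setting the model against the monofilament test and the ankle–brachial index.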

Despite the promising results, the study is constrained by certain limitations. First, the relatively small size of the dataset—despite the inclusion of healthy cases from the INAOE public database—may limit the generalizability of the results. Although acquisition protocols between datasets were harmonized, subtle domain shifts cannot be entirely ruled out. Second, the lack of explainability in the AI predictions may hinder clinical adoption. Employing explainability techniques could address this issue by providing clinicians with insights into the decision-making process of the models.
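One widely used explainability technique for CNNs is Gradient-weighted Class Activation Mapping (Grad-CAM), which weights each feature map of the last convolutional layer by the spatially averaged gradient of the class score and keeps only the positive part of the weighted sum. The NumPy sketch below shows only this weighting step, as a possible direction rather than a method used in this study. It assumes the activations and gradients (shape H × W × K) have already been extracted with a deep learning framework, for example from the last convolutional block of ResNet-50, and it omits upsampling the heatmap to the thermogram's resolution.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap from one image's last-layer activations and class-score gradients.

    activations, gradients: arrays of shape (H, W, K) for K feature maps.
    Returns an (H, W) map scaled to [0, 1].
    """
    if activations.shape != gradients.shape:
        raise ValueError("activations and gradients must have the same shape")
    weights = gradients.mean(axis=(0, 1))               # alpha_k: global-average-pooled gradients
    cam = np.einsum("hwk,k->hw", activations, weights)  # weighted sum of feature maps
    cam = np.maximum(cam, 0.0)                          # ReLU keeps regions that raise the class score
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Random stand-ins with the 7 x 7 x 2048 shape of ResNet-50's last block at 224 x 224 input.
rng = np.random.default_rng(0)
heatmap = grad_cam(rng.random((7, 7, 2048)), rng.standard_normal((7, 7, 2048)))
print(heatmap.shape, float(heatmap.min()), float(heatmap.max()))
```

Overlaying such a heatmap on the plantar thermogram would let clinicians check whether a prediction is driven by anatomically plausible regions.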

5. Conclusions

The findings of this research underscore the efficacy of integrating infrared thermography with advanced artificial intelligence models—specifically convolutional neural networks (CNNs) and vision transformers (ViTs)—for the diagnosis of DPN and PAD. This approach offers a non-invasive, cost-effective, and scalable alternative to traditional diagnostic methods, facilitating timely interventions and reducing the risk of severe complications, such as ulcers and amputations. Furthermore, the use of transfer learning and public datasets demonstrates the potential of leveraging existing resources to overcome challenges related to data scarcity.

Future work should focus on exploring more sophisticated AI architectures, such as vision transformers with enhanced transfer learning capabilities, to improve diagnostic accuracy. Additionally, incorporating explainability techniques into these models will be essential for building trust and adoption among clinicians. Finally, future research should also prioritize the expansion of the clinical dataset to improve model generalizability and reduce the risk of overfitting, especially in real-world scenarios with diverse patient populations.

In conclusion, the combination of infrared thermography and AI represents a transformative step toward modernizing diabetic foot care, offering efficient, patient-friendly diagnostic solutions with the potential to significantly improve global health outcomes for individuals with diabetes.