Abstract

Voice conversion (VC) transforms source speech into high-quality audio resembling a target speaker’s voice while preserving the original linguistic content and prosodic patterns. In this study, we propose a voice conversion algorithm, Multi-Dimensional Perception Flow Matching (MPFM-VC). Unlike traditional approaches that directly generate waveform outputs, MPFM-VC models the evolutionary trajectory of mel spectrograms with a flow-matching framework and incorporates a multi-dimensional feature perception network to enhance the stability and quality of speech synthesis. Additionally, we introduce a content perturbation method during training to improve the model’s generalization ability and reduce inference-time artifacts. To further increase speaker similarity, an adversarial training mechanism on speaker embeddings is employed to disentangle content from speaker identity representations, thereby enhancing the timbre consistency of the converted speech. Experimental results for both speech and singing voice conversion tasks show that MPFM-VC achieves competitive performance compared to existing state-of-the-art VC models in both subjective and objective evaluation metrics. The synthesized speech shows improved naturalness, clarity, and timbre fidelity, suggesting the potential effectiveness of the proposed approach.

1. Introduction

Voice conversion (VC) [1] is an advanced speech processing technique that transforms a source speaker’s voice into that of a target speaker while preserving the original linguistic content and prosody. This technology has gained significant traction in applications such as personalized speech synthesis, voice editing for film and television, and speech anonymization.

Recent advances in voice conversion (VC) have largely been built upon the development of text-to-speech (TTS) frameworks [2,3,4,5,6]. Current VC models can be broadly classified into two categories. The first category includes end-to-end systems [7,8,9], which directly map input speech to output speech within a unified architecture. These systems typically outperform traditional models [10] in terms of speaker similarity and robustness. The second category follows a cascaded architecture, in which automatic speech recognition (ASR) models [11,12,13] are used to extract content features; these features are then passed to an acoustic model based on a generative neural network [14,15,16,17,18] to produce a mel spectrogram, which is subsequently converted into a waveform by a neural vocoder [19,20,21]. While cascaded models often achieve superior audio quality compared with end-to-end systems, they are generally more vulnerable to noise and less robust under domain-shift conditions.

Despite progress in the field, several challenges remain, particularly in real-world applications. First, VC systems are highly sensitive to noise, especially when handling low-quality or degraded speech inputs. Second, distributional shifts between the training data and inference environment can lead to severe performance degradation. Third, many current methods struggle to disentangle speaker identity from linguistic content, resulting in feature entanglement and information leakage, which can compromise speaker anonymity or target similarity.

To guide our investigation, we formulate the following research hypotheses: (1) Incorporating flow matching into the voice conversion framework is hypothesized to enhance naturalness and stability due to its capacity for continuous distribution alignment. (2) Augmenting training with perturbations in content representations may support improved generalization and robustness, especially under mismatched or noisy conditions. (3) Introducing adversarial disentanglement of speaker features within the flow-matching architecture is expected to help suppress residual identity leakage more effectively, thereby improving speaker similarity in the converted speech.

To address these limitations, we propose MPFM-VC, a novel Multi-dimensional Perception Flow Matching-based voice conversion algorithm. Instead of directly generating speech features or waveforms, MPFM-VC explicitly models the dynamic distribution transfer of speech representations, thereby improving robustness and audio quality under mismatched or noisy conditions. Specifically, MPFM-VC introduces the following core contributions:

- Multi-dimensional perception flow matching. We propose a flow-matching-based generation framework for voice conversion that integrates multi-dimensional acoustic features—such as pitch, energy, and prosody—into the flow trajectory modeling process. This design is expected to improve robustness and facilitate more fine-grained and stable mel-spectrogram synthesis.

- Content perturbation training. A robustness-aware training scheme is introduced by injecting controlled noise into the content representation, which is designed to improve generalization to out-of-distribution data and reduce artifacts.

- Voiceprint disentanglement based on adversarial learning. We integrate an adversarial speaker disentanglement strategy into the flow-matching-based voice conversion architecture to suppress residual voiceprint in content representations, with the aim of improving timbre consistency and reducing interference in multi-speaker conversion tasks.

Experimental results suggest that our model offers improvements in speech quality, robustness, and speaker similarity over existing variational and diffusion-based VC methods.

2. Related Work

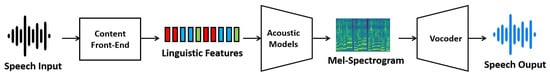

As shown in Figure 1, recent VC models typically follow a general architecture [1] that comprises a content front-end, an acoustic model, and a vocoder. The content front-end extracts linguistic features from the input speech, which are then transformed into a mel spectrogram by the acoustic model. Finally, the vocoder synthesizes the speech waveform based on the mel spectrogram.

Figure 1.

General architecture of VC models.

2.1. End-to-End VC Based on Variational Autoencoder

End-to-end VC approaches integrate all stages of the speech generation pipeline (content front-end, acoustic model, and vocoder) into a unified architecture. Recent studies have increasingly adopted the variational autoencoder (VAE) framework as the foundation for such models. A major breakthrough in this direction was the introduction of VITS by Kakao Enterprise [22,23], which unified VAE-based representation learning, stochastic duration modeling, and adversarial training into a single architecture. This integration eliminated the need for explicit alignments and has been shown to improve synthesis quality, efficiency, and style transfer.

Building upon the VAE framework, FreeVC [24] enhances alignment strategies to achieve higher-quality voice conversion, while Glow-WaveGAN2 [25] improves prosodic control and timbre consistency. DINO-VITS [26] incorporates semi-supervised learning to boost robustness under low-resource conditions. The effectiveness of VITS-based models has been validated against various competitive benchmarks. For instance, in the 2023 Singing Voice Conversion Challenge [27,28,29], models based on the VITS architecture achieved competitive rankings in naturalness and similarity evaluations. Despite their robustness and controllability, current VAE-based systems still face challenges in capturing fine-grained timbral variations and complex semantic representations typical of natural human speech.

2.2. Cascaded VC with Diffusion Models

With the rapid advancement of diffusion models in the field of generative modeling, they have become one of the mainstream choices of acoustic model within cascaded VC frameworks. DiffWave [30], introduced in 2020, pioneered the use of denoising diffusion probabilistic models (DDPMs) for waveform generation from Gaussian noise. Grad-TTS [31] extended that approach to TTS tasks by applying the diffusion process to spectrum generation, achieving notable improvements in naturalness, prosody control, and temporal variability.

Building upon this foundation, Diff-VC [32] employed diffusion-based denoising to enhance speech naturalness and timbral accuracy, while DDDM-VC [33] further improved clarity and stylistic consistency. ComoSpeech [34] integrated conditional diffusion with a hybrid autoregressive/non-autoregressive decoding strategy, allowing for finer control over emotional expression, prosodic variation, and speaking rate. Models derived from ComoSpeech, including our improved variant, have reported subjective evaluation results approaching human-level performance.

Despite these advancements, diffusion-based VC models still face several limitations compared with variational approaches. These include high computational complexity, slower inference speed, and reduced robustness under conditions of distribution shifts.

2.3. Emerging Flow Matching Models

A novel generative paradigm, flow matching, was recently introduced in image synthesis [35] and has gained attention for its efficiency and robustness. Conceptually related to diffusion models, flow matching solves ordinary differential equations (ODEs) to map input noise to output features, enabling faster generation and potentially improved stability with far fewer sampling steps. Unlike diffusion processes that rely on stepwise denoising, flow matching enables the linear evolution of features along a predefined trajectory, significantly improving inference speed and reducing computational overhead. Its applicability to multimodal generation, including audio and text, has opened up new opportunities for efficient, high-fidelity speech synthesis.

Recently, flow matching has emerged as a promising alternative to diffusion models in speech generation due to its improved efficiency and generative quality. Meta AI introduced Voicebox [36], the first non-autoregressive speech synthesis system that leverages flow matching to enable robust and controllable generation across multiple tasks. Building on this, VoiceFlow [37] proposes an acoustic model that replaces diffusion sampling with a rectified flow matching algorithm to synthesize mel spectrograms efficiently, achieving superior quality with significantly fewer sampling steps. In the domain of singing voice synthesis, TechSinger [38] employs flow matching to enable multilingual synthesis with fine-grained control over vocal techniques via natural language prompts, enabling increased expressiveness and realism in generated singing. To address the stability and control limitations of flow-based models, PitchFlow [39] incorporates speaker scoring and pitch guidance, improving both pitch controllability and timbre consistency during generation. Most recently, StableVC [40] extended these advancements to zero-shot voice conversion by combining dual-attention mechanisms with conditional flow matching, allowing independent control of timbre and style from unseen speakers while achieving high-quality conversion at real-time speeds.

In contrast, our MPFM-VC integrates flow matching into a cascaded VC framework with several novel components: a multi-dimensional perception network that fuses diverse acoustic features (pitch, energy, prosody, and voiceprint), a content perturbation strategy to improve robustness under domain shifts, and a voiceprint adversarial module to enforce clean speaker–content disentanglement. These innovations support high-quality and robust voice conversion in both speech and singing scenarios. Comparative evaluations suggest that MPFM-VC offers performance improvements over prior flow-based approaches in multiple subjective and objective metrics.

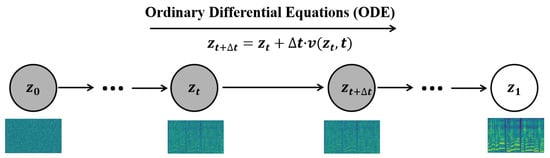

3. Flow Matching in Voice Conversion

As illustrated in Figure 2, in this study, we adopt a conditionally guided flow matching method based on optimal transport to learn the distribution of mel spectrograms and generate samples from this distribution conditioned on a set of acoustic features. Compared with diffusion probabilistic models [18], the proposed optimal transport-based conditional flow matching approach eliminates the need for reverse processes and complex mathematical derivations. Instead, it generates speech by learning a direct linear mapping between distributions, achieving results comparable to those of diffusion models. This method not only offers better generation performance but also provides a simpler gradient formulation, improved training efficiency, and significantly higher inference speed.

Figure 2.

The process of flow matching.

In this work, data representations are denoted by $x_0 \sim p_0(x)$ and $x_1 \sim p_1(x)$, where $p_0$ and $p_1$ represent the prior distribution and the target mel spectrogram distribution, respectively. The subscripts indicate temporal positions, with zero denoting the starting point and one denoting the endpoint. In the flow-matching framework, a continuous path of probability densities $p_t(x)$ is constructed from the initial prior distribution $p_0(x)$ to the mel spectrogram distribution $p_1(x)$. Notably, the prior distribution in this formulation differs from that of many existing flow-matching models in that it is independent of any acoustic-related features. Instead, it is randomly initialized from a standard normal distribution. This acoustically agnostic initialization helps reduce entanglement among the feature representations within the dataset. The entire process can be formally described by the ordinary differential equation (ODE) shown in Equation (1).

$$\frac{\mathrm{d}x_t}{\mathrm{d}t} = v_\theta(x_t, t), \quad t \in [0, 1] \tag{1}$$

where $t \in [0, 1]$ denotes the normalized time, and $x_t$ represents the data point at time t. The function $v_\theta$ is a vector field that defines the direction and magnitude of change for each data point in the state space over time. In the flow-matching framework, this vector field is parameterized and predicted by a neural network.

Once the predicted vector field is obtained, a continuous transformation path from the initial distribution to the target distribution can be constructed by solving the corresponding ordinary differential equation (ODE). This ODE can be numerically solved by using the Euler method, as shown in Equation (2).

$$x_{t+\Delta t} = x_t + \Delta t \, v_\theta(x_t, t), \quad \Delta t = \frac{1}{N} \tag{2}$$

where $\Delta t$ denotes the step size, t is the sampled time point, N is the total number of discretization steps, and $x_t$ represents the approximate solution at time t.
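The Euler integration described above can be illustrated with a minimal NumPy sketch. The function name `euler_sample` and the toy vector field are illustrative assumptions, not the paper's implementation; in MPFM-VC the vector field would come from the trained network.

```python
import numpy as np

def euler_sample(vector_field, x0, num_steps):
    """Integrate dx/dt = v(x, t) from t = 0 to t = 1 with fixed-step Euler."""
    x = np.array(x0, dtype=np.float64)
    dt = 1.0 / num_steps                      # step size, 1 / N
    for n in range(num_steps):
        t = n * dt                            # current time point
        x = x + dt * vector_field(x, t)       # one Euler update
    return x

# Toy stand-in for the learned network (assumption): a constant field
# pointing from the start sample to a fixed target.
rng = np.random.default_rng(0)
x_start = rng.standard_normal((4, 100))       # prior sample, 100 mel channels
x_target = np.ones((4, 100))
toy_field = lambda x, t: x_target - x_start
x_end = euler_sample(toy_field, x_start, num_steps=10)
```

Because the toy field is constant along the path, Euler integration reaches the target regardless of the number of steps; a learned field is generally curved, so the step count trades accuracy for speed.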

Overall, the core idea of flow matching lies in enforcing consistency between the predicted vector field and the ground-truth vector field corresponding to the target mel spectrogram. This ensures that the transformed probability distribution accurately aligns with the desired mel spectrogram distribution. The optimization objective can be formulated as the following loss function:

$$\mathcal{L}_{\mathrm{FM}}(\theta) = \mathbb{E}_{t,\, x_0,\, x_1} \left\| v_\theta(x_t, t) - u_t(x_t \mid x_1) \right\|^2 \tag{3}$$

where $\theta$ denotes the parameters of the neural network, $t \sim \mathcal{U}[0, 1]$, and $x_1$ represents a sample from the target mel spectrogram distribution. $u_t$ denotes the ground-truth vector field, while $v_\theta$ represents the predicted vector field to be learned.
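To make the training objective concrete, the following sketch builds one training pair and evaluates the loss. It assumes the straight-line path x_t = (1 - t) x_0 + t x_1, for which the target field is x_1 - x_0; the paper's exact path parameterization may differ, and the function names are hypothetical.

```python
import numpy as np

def cfm_training_pair(x0, x1, t):
    """Return the interpolated sample x_t and the target field u_t.

    Assumption: straight-line path x_t = (1 - t) * x0 + t * x1,
    whose time derivative is x1 - x0.
    """
    t = np.asarray(t, dtype=np.float64).reshape(-1, 1, 1)  # broadcast over (frames, mels)
    x_t = (1.0 - t) * x0 + t * x1
    u_t = x1 - x0
    return x_t, u_t

def flow_matching_loss(v_pred, u_t):
    """Mean squared error between predicted and target vector fields."""
    return float(np.mean((v_pred - u_t) ** 2))

rng = np.random.default_rng(0)
x1 = rng.standard_normal((2, 50, 100))   # stand-in for target mel spectrograms
x0 = rng.standard_normal(x1.shape)       # prior sample from N(0, I)
t = rng.uniform(0.0, 1.0, size=2)        # one time value per batch item
x_t, u_t = cfm_training_pair(x0, x1, t)

loss_oracle = flow_matching_loss(u_t, u_t)                  # perfect prediction
loss_zero = flow_matching_loss(np.zeros_like(u_t), u_t)     # untrained-like prediction
```

In actual training, `v_pred` would be the network output for `x_t`, `t`, and the conditioning features.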

4. Methods

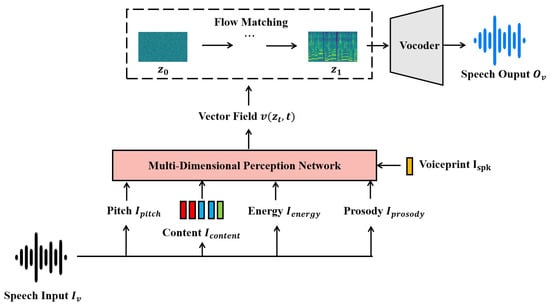

This section introduces the proposed voice conversion algorithm, Multi-Dimensional Perception Flow Matching. The overall architecture of the MPFM-VC model is illustrated in Figure 3. In addition to the content features extracted from the input speech, the model also incorporates auxiliary features, such as pitch, energy, and prosody, to generate the target mel spectrogram that corresponds to the speaker’s voiceprint. We provide a detailed explanation of the core components of the proposed model in the following, including flow matching for multi-dimensional perception, the multi-dimensional perception network, the content perturbation-based training enhancement method, and the adversarial training mechanism based on the voiceprint.

Figure 3.

The overall framework of MPFM-VC. Notably, the proposed network, which serves as the acoustic model, does not directly predict the mel spectrogram; instead, it predicts the vector field used in the ODE formulation of flow matching.

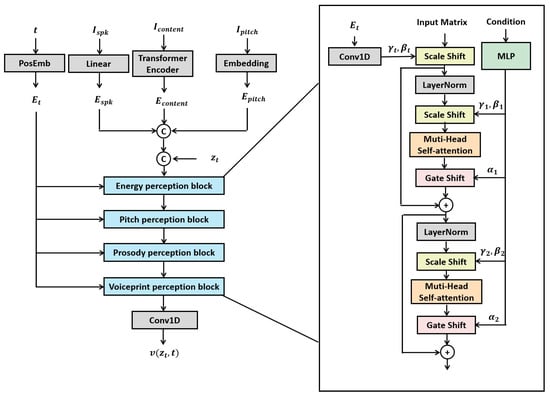

4.1. Multi-Dimensional Perception Network

Existing flow-matching models typically adopt a U-Net-based Transformer architecture to predict the vector field [17,41]. In this work, we propose a novel multi-dimensional feature perception network, as illustrated in Figure 4, which is designed to improve the model’s adaptability to varying acoustic conditions. Prior to being fed into the proposed feature perception blocks, all inputs undergo an encoding process as follows: First, a Transformer [17]-based sinusoidal positional encoding layer is introduced to generate the time embeddings. Second, speaker identity features are projected onto a latent space via a linear layer to produce the speaker embeddings. For the complex content representation, a Transformer encoder is employed to obtain the content embedding. Simultaneously, pitch sequences are processed through a Transformer embedding layer to yield the pitch embeddings. Additionally, at each time step t in the flow-matching process, the intermediate sample $x_t$ is computed by using linear interpolation: $x_t = (1 - t)\,x_0 + t\,x_1$. Finally, the above multimodal embeddings are concatenated along the feature dimension and are used as inputs to the feature perception block.

Figure 4.

The architecture of the multi-dimensional feature perception network.

The multi-dimensional perception network proposed in this study adopts a modular architecture composed of multiple feature-awareness blocks. While all blocks share the same structural design, they utilize distinct condition matrices to capture diverse contextual information. Each block integrates conditional information through two complementary mechanisms:

(1) Scale Shift, defined in Equation (4), modulates the feature distribution by applying learnable scaling and bias transformation.

(2) Gated Shift, formulated in Equation (5), employs a gated unit to dynamically regulate the flow of information through adaptive feature reweighting.

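The computations of these mechanisms are given by

$$\mathrm{ScaleShift}(x) = \gamma \odot x + \beta \tag{4}$$

$$\mathrm{GatedShift}(x) = \alpha \odot x \tag{5}$$

where $\gamma$, $\beta$, and $\alpha$ are learnable parameters of the network, and x denotes the input matrix.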

Specifically, the embedding of conditional information is carried out through a hierarchical processing mechanism. First, the time encoding is transformed by a one-dimensional convolutional layer to produce two learnable parameters, which are used for time-specific feature modulation. Next, the condition matrix is passed through a multi-layer perceptron (MLP) and mapped to six adaptive parameters, enabling conditional feature encoding. The network then executes the following steps sequentially: (1) the application of layer normalization to the input feature matrix followed by conditional feature modulation; (2) cross-dimensional feature interaction based on a multi-head self-attention mechanism; (3) the application of a gated control mechanism to regulate the flow of information. To ensure training stability, a residual connection is introduced at the end of the block. This not only preserves the original feature information but also effectively mitigates issues related to gradient instability.
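The sequence of steps in a perception block (normalize, modulate, mix, gate, residual) can be sketched as below. The multiplicative forms of the scale shift and the gate are assumptions in the style of adaptive layer normalization, and `mix` is a hypothetical placeholder for the multi-head self-attention layer, so this shows only the data flow, not the actual network.

```python
import numpy as np

def scale_shift(x, gamma, beta):
    """Modulate features with a scale and a bias (assumed form)."""
    return gamma * x + beta

def gated_shift(x, alpha):
    """Reweight features element-wise with a gate (assumed form)."""
    return alpha * x

def layer_norm(x, eps=1e-5):
    """Normalize each frame to zero mean and unit variance."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def perception_block(x, gamma, beta, alpha, mix):
    """Normalize -> modulate -> mix -> gate -> residual connection.

    `mix` is a placeholder callable standing in for self-attention.
    """
    h = scale_shift(layer_norm(x), gamma, beta)
    h = mix(h)
    return x + gated_shift(h, alpha)

rng = np.random.default_rng(0)
x = rng.standard_normal((50, 400))                 # (frames, hidden size)
gamma, beta = np.ones(400), np.zeros(400)
identity = lambda h: h
out_closed = perception_block(x, gamma, beta, np.zeros(400), identity)  # gate shut
out_open = perception_block(x, gamma, beta, np.ones(400), identity)     # gate open
```

With the gate at zero the block reduces to the identity through the residual path, which illustrates how the gate controls how much conditional information each block injects.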

In the proposed multi-dimensional perception network, the condition matrix is used to embed auxiliary information, including energy, prosody, pitch, and speaker voiceprint. These conditioning features are incorporated into different feature-awareness blocks, each responsible for modeling a specific type of information.

Specifically, the embedding method of each feature is as follows:

- Energy is calculated as the root-mean-square (RMS) energy of each frame in the speech signal. The first perception block utilizes the energy embedding to keep the energy of the input and output consistent.

- Pitch information is extracted by using the pre-trained neural pitch estimation model RMVPE [42], which directly derives pitch features from raw audio. The second block employs the pitch embedding to keep the output pitch faithful to the source and coherent over time.

- Prosodic features are extracted by using the neural prosody encoder HuBERT-Soft [43], which captures rhythm, intonation, and other prosodic cues in speech. The third block uses the prosody embedding to control the stress and openness of the output speech.

- Speaker identity features are obtained by using the pre-trained speaker verification model Camplus [44]. The final block uses the speaker embedding to capture speaker-dependent characteristics.
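Of the four features listed above, frame-level RMS energy is simple enough to show directly. The sketch below assumes the energy frames use the same frame length (1024) and frame shift (320) as the mel spectrogram configuration; the text does not state the framing used for energy, and `frame_rms_energy` is a hypothetical helper name.

```python
import numpy as np

def frame_rms_energy(signal, frame_length=1024, hop_length=320):
    """Root-mean-square energy of each frame of a 1-D signal."""
    signal = np.asarray(signal, dtype=np.float64)
    n_frames = 1 + (len(signal) - frame_length) // hop_length
    # Index matrix of shape (n_frames, frame_length) selecting each frame
    idx = np.arange(frame_length)[None, :] + hop_length * np.arange(n_frames)[:, None]
    frames = signal[idx]
    return np.sqrt(np.mean(frames ** 2, axis=1))

sr = 32000
time_axis = np.arange(sr) / sr
tone = 0.5 * np.sin(2 * np.pi * 250.0 * time_axis)   # 1 s sine, amplitude 0.5
energy = frame_rms_energy(tone)
```

For a pure sine of amplitude 0.5, every frame that spans whole periods has an RMS of 0.5 divided by the square root of 2, which gives a quick sanity check on the framing.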

These encoded features are fused through a one-dimensional convolutional layer and are ultimately used to predict the vector field. The core design principle behind this structure is that the closer a block is to the output layer, the stronger its sensitivity to conditional information. Therefore, in acoustic modeling tasks, the speaker’s timbre should be assigned greater importance, while energy-related information can be considered relatively less influential. Nonetheless, each feature-awareness block is capable of adaptively learning and adjusting its sensitivity to various types of conditioning through dynamic parameterization, enabling the model to flexibly control the contribution of each feature and optimize feature fusion strategies based on task-specific requirements.

Furthermore, we adopted the Classifier-Free Diffusion Guidance [45] (CFG) approach, which allowed manual adjustment of the contribution of each condition during inference. Traditionally, as shown in Equation (6), the guidance vector field is predicted using a weighted combination of the full condition matrix and its fully masked counterpart. In this work, we introduced an additional term containing only the prosody matrix, as shown in Equation (7), to enable fine-grained control over prosodic features such as stress and openness in the synthesized speech. In this paper, our base inference method did not use CFG; prosodic CFG is discussed in the ablation experiments.

$$\hat{v}_\theta(x_t, t) = w_1\, v_\theta(x_t, t, c) + w_2\, v_\theta(x_t, t, \varnothing) \tag{6}$$

$$\hat{v}_\theta(x_t, t) = w_1\, v_\theta(x_t, t, c) + w_2\, v_\theta(x_t, t, c_{\mathrm{pro}}) + w_3\, v_\theta(x_t, t, \varnothing) \tag{7}$$

where c denotes the conditional state, $c_{\mathrm{pro}}$ denotes the condition containing only the prosody matrix, ⌀ denotes the unconditional state, $w_i$ denotes the weight coefficients, and the sum of all the weight coefficients is 1.
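The weighted combination used for guidance can be sketched as follows. The three arrays stand in for three forward passes of the same network (full conditions, prosody only, everything masked); the function name and the example weights are illustrative assumptions, with the unconditional weight derived so that all weights sum to 1.

```python
import numpy as np

def guided_field(v_full, v_prosody, v_uncond, w_full, w_prosody):
    """Blend three vector-field predictions with weights that sum to 1."""
    w_uncond = 1.0 - w_full - w_prosody
    return w_full * v_full + w_prosody * v_prosody + w_uncond * v_uncond

# Stand-ins for three forward passes of the same network (assumption)
v_full = np.full((50, 100), 1.0)      # all conditions present
v_prosody = np.full((50, 100), 0.5)   # only the prosody condition kept
v_uncond = np.zeros((50, 100))        # all conditions masked

# Standard two-term guidance: prosody weight set to zero
v_standard = guided_field(v_full, v_prosody, v_uncond, w_full=1.5, w_prosody=0.0)
# Three-term guidance with an extra prosody-only term
v_prosodic = guided_field(v_full, v_prosody, v_uncond, w_full=1.2, w_prosody=0.4)
# No guidance: the full-condition prediction is used unchanged
v_plain = guided_field(v_full, v_prosody, v_uncond, w_full=1.0, w_prosody=0.0)
```

Setting the full-condition weight to 1 and the others to 0 recovers inference without guidance, which matches the base inference setting described above.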

In conventional flow-matching models, the vector field is typically trained uniformly across all time steps. However, in practice, predictions at intermediate time steps tend to be more challenging. Specifically, when t approaches 0, the optimal prediction tends to align with the mean of the target distribution ($p_1$), while for t close to 1, it tends to align with the mean of the prior distribution ($p_0$). In contrast, predictions around the midpoint ($t = 0.5$) are often more ambiguous and unstable due to increased distributional uncertainty. To more accurately learn the ground-truth vector field, $u_t$, we introduced a log-normal weighting scheme in the time dimension to reweight the loss function within the optimal transport-based flow-matching framework. This adjustment emphasizes the more difficult training samples at intermediate time steps, thereby improving the learning of vector dynamics during these transitions. The weighted loss function was defined as follows:

$$\mathcal{L}_{\mathrm{w}}(\theta) = \mathbb{E}_{t,\, x_0,\, x_1} \left[ w(t) \left\| v_\theta(x_t, t) - u_t(x_t \mid x_1) \right\|^2 \right]$$

where $w(t)$ denotes the log-normal weight at time t.

The log-normal weighting assigned higher loss weights to intermediate time steps, where prediction is hardest, and lower loss weights to time steps near 0 and 1, where the optimal prediction is comparatively easy to learn.
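One way to realize a time weight that peaks at intermediate steps is the logit-normal density, sketched below. This is an assumption made for illustration: the text names a log-normal scheme but its exact form and parameters are not recoverable here, so the `mean` and `std` defaults are hypothetical.

```python
import numpy as np

def logit_normal_weight(t, mean=0.0, std=1.0):
    """Logit-normal density on (0, 1); peaks at t = 0.5 when mean = 0."""
    t = np.asarray(t, dtype=np.float64)
    logit = np.log(t) - np.log1p(-t)
    norm = 1.0 / (std * np.sqrt(2.0 * np.pi) * t * (1.0 - t))
    return norm * np.exp(-0.5 * ((logit - mean) / std) ** 2)

def weighted_fm_loss(v_pred, u_t, t):
    """Per-item squared error reweighted by the time-dependent weight."""
    per_item = np.mean((v_pred - u_t) ** 2, axis=(1, 2))
    return float(np.mean(logit_normal_weight(t) * per_item))

t_grid = np.array([0.05, 0.5, 0.95])
weights = logit_normal_weight(t_grid)

rng = np.random.default_rng(0)
u_t = rng.standard_normal((3, 50, 100))
loss_mid_error = weighted_fm_loss(u_t + np.array([0.0, 1.0, 0.0])[:, None, None], u_t, t_grid)
loss_edge_error = weighted_fm_loss(u_t + np.array([1.0, 0.0, 0.0])[:, None, None], u_t, t_grid)
```

The same unit error costs more when it occurs at the midpoint than near the endpoints, which is the emphasis on intermediate time steps described above.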

4.2. Content Perturbation-Based Training Enhancement Method

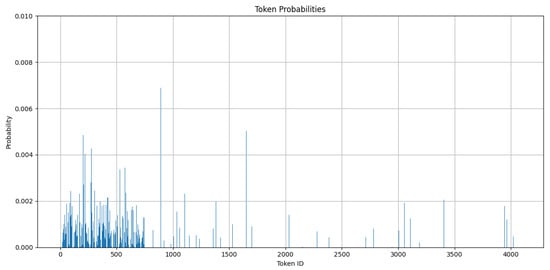

Due to distributional discrepancies between the training data and the inference environment, the model may produce degraded audio quality during inference, such as unnatural artifacts or plosive distortions. We computed the empirical probability distribution of the content tokens extracted by SenseVoice [46] from the dataset used in this paper. As shown in Figure 5, only about 20% of the 4096-entry vocabulary was effectively used; the remaining entries were redundant.

Figure 5.

Token probability distribution over the 4096-entry vocabulary.

To improve generalization and robustness, we propose a content perturbation-based training enhancement method. This method is designed to enhance the model’s contextual generalization capability and improve its stability in real-world deployment scenarios.

As illustrated in Figure 6, random perturbations are applied to the input content representations during the training phase to enhance model robustness. Given an input content representation vector $z$, a certain proportion of its dimensions are randomly selected and masked. The selected dimensions are replaced with blank tokens, and the perturbation strategy is defined as follows:

$$\tilde{z} = m \odot z + (1 - m) \odot z_{\mathrm{blank}}$$

where $m$ denotes a binary masking vector in which a subset of elements is randomly set to 0 according to a predefined masking ratio, while the remaining elements are set to 1. $z_{\mathrm{blank}}$ refers to the blank token used for replacement during perturbation, which is generated based on a roulette-wheel sampling strategy. Specifically, the value distribution of the content representation is first estimated from the training data by computing the frequency of each value within the embedding space. Then, values are randomly sampled in proportion to their observed frequencies to construct the blank representation used for masking.
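The perturbation step can be sketched on a discrete token sequence as follows. The masking ratio of 0.15, the toy corpus, and the function name are assumptions for illustration, and each position is masked independently with the given probability rather than masking an exact fraction.

```python
import numpy as np

def perturb_content(tokens, token_freq, mask_ratio, rng):
    """Replace a random subset of content tokens with frequency-sampled ones."""
    tokens = np.asarray(tokens)
    probs = token_freq / token_freq.sum()                 # empirical token distribution
    keep = rng.random(tokens.shape) >= mask_ratio         # True = keep, False = replace
    # Roulette-wheel sampling: draw replacements in proportion to frequency
    blanks = rng.choice(len(probs), size=tokens.shape, p=probs)
    return np.where(keep, tokens, blanks), keep

rng = np.random.default_rng(0)
vocab_size = 4096
# Toy corpus that only uses about 20% of the vocabulary (assumption)
train_tokens = rng.integers(0, 800, size=100_000)
token_freq = np.bincount(train_tokens, minlength=vocab_size).astype(np.float64)

content = rng.integers(0, 800, size=200)
perturbed, keep = perturb_content(content, token_freq, mask_ratio=0.15, rng=rng)
```

Because replacements are drawn from the observed frequencies, unused vocabulary entries are never injected, so the perturbed sequence stays within the range of tokens the model actually encounters.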

Figure 6.

Content perturbation-based training enhancement method.

Through this training strategy, the model is expected to generate high-quality speech even when partial content representations are missing. This enables the model to maintain strong robustness and generalization during inference, particularly in scenarios involving incomplete feature inputs or noise-corrupted conditions, thereby ensuring stable and natural speech synthesis.

4.3. Adversarial Training Mechanism Based on Voiceprints

In voice conversion (VC) models, a core goal of the content encoder is to extract speaker-independent linguistic representations. However, due to the frequent reuse of the same speaker for both target and reference signals during training, the encoder often inadvertently captures speaker-specific cues. This speaker information leakage can result in generated speech that retains residual timbral characteristics of the reference speaker, ultimately reducing naturalness and target speaker fidelity.

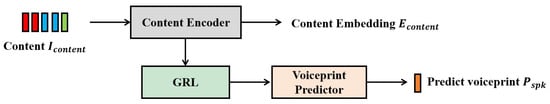

To mitigate this issue, we adopted an adversarial disentanglement strategy to explicitly suppress voiceprint information in the content representation. This approach, originally introduced in prior VC models such as StarGAN-VC [47] and CycleGAN-VC [48], has proven effective in decoupling speaker identity from linguistic content. In our work, we adapted this established technique to the flow-matching framework, integrating a speaker classifier trained adversarially against the content encoder. This enabled the model to better isolate linguistic information while preserving control over the target speaker’s timbre in the final generation stage.

As illustrated in Figure 7, the overall adversarial training framework consisted of three main components: the content encoder from the flow-matching module, a gradient reversal layer (GRL), and a speaker classifier. Specifically, within the acoustic model, the input content representation was first processed by the content encoder to generate a speaker-independent content embedding.

Figure 7.

Adversarial training mechanism based on voiceprint.

To disentangle speaker-related information from the content encoder, we designed a speaker classifier composed of three linear layers with ReLU activation functions. This classifier aimed to predict the speaker identity from the content embedding and guided the training process by comparing the prediction with the ground-truth speaker embedding.

To enforce feature disentanglement, a gradient reversal layer (GRL) was inserted before the speaker classifier. During forward propagation, the GRL passed the content representation unchanged. However, during backpropagation, it reversed the gradient direction and scaled it by a tunable adversarial coefficient $\lambda$:

$$\mathrm{GRL}(x) = x, \qquad \frac{\partial\, \mathrm{GRL}(x)}{\partial x} = -\lambda I$$

where $\lambda$ denotes the adversarial coefficient, which controls the strength of gradient reversal.
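The behavior of a gradient reversal layer can be shown with a small framework-free sketch. The class below is illustrative only: in practice the layer would be written as a custom autograd function in the training framework, and the coefficient value 0.5 is an arbitrary assumption.

```python
import numpy as np

class GradientReversal:
    """Identity in the forward pass; negated, scaled gradient in the backward pass."""

    def __init__(self, lam):
        self.lam = lam                      # adversarial coefficient

    def forward(self, x):
        return x                            # content embedding passes through unchanged

    def backward(self, grad_output):
        return -self.lam * grad_output      # reverse and scale the incoming gradient

grl = GradientReversal(lam=0.5)
content_embedding = np.array([[0.2, -1.0, 3.0]])
classifier_input = grl.forward(content_embedding)

# Gradient of the speaker-classification loss w.r.t. the classifier input
grad_from_classifier = np.array([[1.0, 2.0, -4.0]])
grad_to_encoder = grl.backward(grad_from_classifier)
```

The classifier still receives ordinary gradients and learns to predict the speaker, while the encoder receives the reversed gradient and is pushed to remove the cues the classifier relies on.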

The speaker classifier was optimized based on this mechanism to accurately predict the speaker identity by minimizing its classification loss, while the content encoder was trained adversarially to maximize that loss, effectively preventing the classifier from extracting speaker-specific cues. This adversarial game facilitates the disentanglement of speaker information from content embeddings. The final training objective combined the flow-matching loss with this speaker classification loss.

4.4. The Training and Inference Processes of MPFM-VC

For inference, we use MPFM to predict the vector field, generate the mel spectrogram by solving the ordinary differential equation, and finally generate the speech waveform with the vocoder. The specific inference process is as follows (Algorithm 1):

Algorithm 1. The inference process of MPFM-VC.

During training, MPFM does not predict the mel spectrogram directly; instead, it predicts the vector field at a randomly sampled time step. The specific training process is as follows (Algorithm 2):

Algorithm 2. The training process of MPFM.

5. Results

5.1. Dataset

We conducted experiments on a Mandarin multi-speaker speech dataset, AISHELL-3 [49], and a Mandarin multi-singer singing dataset, M4Singer [50], to evaluate the effectiveness of the proposed method in both speech and singing voice conversion tasks.

(1) AISHELL-3

AISHELL-3 is a high-quality multi-speaker Mandarin speech synthesis dataset released by AISHELL Foundation. It contains 218 speakers (where the male-to-female ratio is balanced) and more than 85,000 speech sentences; the total duration is about 85 h, and the sampling rate is 44.1 kHz. The recordings are 16-bit and were made in a professional recording environment, yielding clear sound quality. To construct the speech test set, we randomly selected 10 speakers to be excluded from training and then randomly selected 10 speech samples from each, for a total of 100 speech samples.

(2) M4Singer

M4Singer is a large-scale Chinese singing voice dataset with multiple styles and multiple singers released by Zhejiang University. The dataset contains 30 professional singers (where the male-to-female ratio is balanced) and a total of 16,000 singing sentences; the total duration is 18.8 h, and the sampling rate is 44.1 kHz. It was recorded in 16-bit in a clean, noise-free environment and covers pop, folk, rock, and other styles. As with AISHELL-3, 10 singers were randomly selected to be excluded from training, and 10 singing samples were selected for each singer, for a singing test set totaling 100 samples.

5.2. Data Processing

In this study, multiple key features were extracted from the original speech data, including the content representation, speaker voiceprint, pitch, energy, prosody, and the target mel spectrogram. The content representation was extracted by using a pre-trained automatic speech recognition model, SenseVoice [46], which provides high-precision linguistic features. Speaker identity features were obtained by using the pre-trained speaker verification model Camplus [44], which captures speaker-dependent characteristics. Pitch information was extracted by using the pre-trained neural pitch estimation model RMVPE [42], which directly derives pitch features from raw audio, ensuring high accuracy and robustness. Energy was calculated as the root-mean-square (RMS) energy of each frame in the speech signal. Prosodic features were extracted by using the neural prosody encoder HuBERT-Soft [43], which captures rhythm, intonation, and other prosodic cues in speech. The target mel spectrogram was computed with a standard signal processing pipeline consisting of pre-emphasis, framing, windowing, Short-Time Fourier Transform (STFT), power spectrum calculation, and mel filterbank projection. The configuration details were as follows: a sampling rate of 32,000 Hz, a pre-emphasis coefficient of 0.97, a frame length of 1024, a frame shift of 320, the Hann window function, and 100 mel filterbank channels.
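The mel spectrogram pipeline with the stated configuration can be sketched in NumPy as below. The triangular filter shape, the mel-scale formula, and the absence of padding or log compression are assumptions, since the text only lists the processing stages; the function names are hypothetical.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters with edges spaced evenly on the mel scale (assumed design)."""
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    edges = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    fb = np.zeros((n_mels, len(fft_freqs)))
    for i in range(n_mels):
        lo, mid, hi = edges[i], edges[i + 1], edges[i + 2]
        rising = (fft_freqs - lo) / (mid - lo)
        falling = (hi - fft_freqs) / (hi - mid)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def mel_spectrogram(signal, sr=32000, n_fft=1024, hop=320, n_mels=100, preemph=0.97):
    """Pre-emphasis -> framing -> Hann window -> STFT -> power -> mel projection."""
    signal = np.asarray(signal, dtype=np.float64)
    emphasized = np.append(signal[0], signal[1:] - preemph * signal[:-1])
    n_frames = 1 + (len(emphasized) - n_fft) // hop
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = emphasized[idx] * np.hanning(n_fft)          # windowed frames
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2      # power spectrum per frame
    return power @ mel_filterbank(sr, n_fft, n_mels).T    # shape (n_frames, n_mels)

sr = 32000
tone = np.sin(2 * np.pi * 1000.0 * np.arange(sr) / sr)    # 1 s test tone at 1 kHz
mel = mel_spectrogram(tone)
```

One second of audio at 32,000 Hz yields 97 frames of 100 mel channels under this framing, which matches the 100-dimensional vector field predicted by the network.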

5.3. Model Parameters

Regarding feature input parameters, the speaker voiceprint had a dimension of 192 and was first projected into a 100-dimensional speaker embedding with a linear layer. The content representation was a one-dimensional sequence of length T with a vocabulary size of 4096. It was embedded into a 512-channel matrix with an embedding layer and then fed into a Transformer-based encoder consisting of six blocks. Each block contained eight attention heads and a feed-forward layer with a hidden dimension of 2048. The output was the content embedding. The pitch feature was first mapped to a discrete sequence with a vocabulary size of 256, which was then transformed into a 512-channel matrix by using an embedding layer. The energy and prosody features were both projected into 100-dimensional embeddings by using linear layers.

In the multi-dimensional perception network, the time encoding was processed by a one-dimensional convolutional layer to generate two modulation parameters. The multi-head self-attention module used four attention heads with a hidden dimension of 400. The conditional embedding network consisted of a multi-layer perceptron with two linear layers, where the hidden layer had a dimensionality of 400 and the output layer produced six conditional parameters. Finally, the entire multi-dimensional feature perception network output a 100-dimensional predicted vector field via a one-dimensional convolutional layer.
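For reference, the dimensions given in this subsection can be gathered into a single configuration object. The sketch below is only a convenience summary: the class name, the field names, and the per-head dimension check (which assumes standard multi-head attention with an even split across heads) are hypothetical and do not come from the authors' code.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class MPFMVCConfig:
    """Hyperparameters quoted in Section 5.3 (field names are illustrative)."""
    speaker_in_dim: int = 192        # voiceprint dimension
    speaker_embed_dim: int = 100     # after the linear projection
    content_vocab_size: int = 4096
    content_channels: int = 512
    encoder_blocks: int = 6
    encoder_heads: int = 8
    encoder_ffn_dim: int = 2048
    pitch_vocab_size: int = 256
    pitch_channels: int = 512
    energy_embed_dim: int = 100
    prosody_embed_dim: int = 100
    mdpn_heads: int = 4
    mdpn_hidden_dim: int = 400
    vector_field_dim: int = 100      # matches the 100 mel channels

    def head_dims(self) -> Tuple[int, int]:
        """Per-head widths for the content encoder and the perception network."""
        if self.content_channels % self.encoder_heads:
            raise ValueError("content_channels must be divisible by encoder_heads")
        if self.mdpn_hidden_dim % self.mdpn_heads:
            raise ValueError("mdpn_hidden_dim must be divisible by mdpn_heads")
        return (self.content_channels // self.encoder_heads,
                self.mdpn_hidden_dim // self.mdpn_heads)
```

Under the even-split assumption, the content encoder would use 64 channels per head and the perception network 100 channels per head.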

5.4. Training Setup

The experiments were conducted on both speech and singing datasets, with training and testing performed separately for the speech conversion and singing voice conversion tasks. The model was trained for 100 epochs with the Adam optimizer until convergence. A dynamic batch size strategy was adopted, where the batch size was determined by the total frame length of the content representation, with a maximum of 10,000 frames per batch. The learning rate was set by using a warm-up strategy, with an initial learning rate and a warm-up step count of 2500. The learning rate at step t was computed as follows:
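Separately from the learning-rate schedule, the dynamic batching rule described in this subsection can be sketched as follows. The sketch assumes that the 10,000-frame budget applies to the sum of unpadded utterance lengths and that utterances are packed greedily in the order given; both points, as well as the function name, are assumptions rather than details stated in the text.

```python
from typing import Iterable, Iterator, List, Tuple

MAX_FRAMES_PER_BATCH = 10_000  # frame budget stated in the text


def dynamic_batches(
    utterances: Iterable[Tuple[str, int]],
    max_frames: int = MAX_FRAMES_PER_BATCH,
) -> Iterator[List[str]]:
    """Greedily group (utterance_id, n_frames) pairs under a total frame budget."""
    batch: List[str] = []
    total = 0
    for utt_id, n_frames in utterances:
        if n_frames > max_frames:
            raise ValueError(f"{utt_id} alone exceeds the frame budget")
        # Close the current batch if adding this utterance would exceed the budget.
        if batch and total + n_frames > max_frames:
            yield batch
            batch, total = [], 0
        batch.append(utt_id)
        total += n_frames
    if batch:
        yield batch
```

For example, utterances of 4000, 5000, and 3000 frames would be split into a batch holding the first two (9000 frames) and a second batch holding the third. Sorting utterances by length before packing is a common refinement that is not shown here.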

5.5. Baseline Models and Evaluation Metrics

(1) Baseline models

(1) Free-VC [24] (2022, ICASSP) is a voice conversion model that adopts the variational autoencoder architecture of VITS for high-quality waveform reconstruction; it is widely used in voice conversion tasks due to its efficient feature modeling capabilities.

(2) Diff-VC [32] (2022, ICLR) is a diffusion model-based voice conversion method which can generate high-quality converted speech based on noise reconstruction; it is the representative diffusion model for VC tasks.

(3) DDDM-VC [33] (2024, AAAI) is a newly proposed feature decoupling speech conversion method based on a diffusion model which improves the quality of converted speech and speaker consistency while maintaining the consistency of speech features.

(4) Voiceflow [37] (2024, ICASSP) is a recent speech generation method based on flow matching that achieves high synthesis quality with a limited number of sampling steps.

(2) Evaluation metrics

(1) Mean Opinion Score (MOS): the naturalness of the synthesized speech was rated by a panel of 10 student listeners with good Mandarin proficiency and good auditory perception.

(2) Mel Cepstral Distortion (MCD): it measures the spectral distance between the converted speech and the target speech, where a lower value indicates higher-quality conversion.

(3) Word Error Rate (WER): the intelligibility of the converted speech was evaluated by using automatic speech recognition, where a lower WER indicated higher speech intelligibility.

(4) Similarity Mean Opinion Score (SMOS): a listener panel (10 students with good Mandarin proficiency and good auditory perception) rated the timbre similarity of the synthesized speech, providing a subjective measure of timbre similarity after conversion.

(5) Speaker Embedding Cosine Similarity (SECS): the cosine similarity between the speaker embeddings of the target speech and the converted speech was calculated to objectively measure timbre similarity. The higher the value, the closer the converted speech was to the target timbre.
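The three objective metrics can be made concrete with a short, dependency-free sketch. It uses the commonly cited definitions: MCD in dB averaged over time-aligned frames, WER as the word-level edit distance divided by the reference length, and SECS as a plain cosine similarity. The alignment procedure, the cepstral order, the ASR system, and the tokenization used in the experiments are not specified in this section, so the function names and inputs below are assumptions for illustration.

```python
import math
from typing import Sequence


def mel_cepstral_distortion(ref: Sequence[Sequence[float]],
                            conv: Sequence[Sequence[float]]) -> float:
    """Mean MCD in dB over already time-aligned frames of mel-cepstral coefficients."""
    if len(ref) != len(conv) or not ref:
        raise ValueError("inputs must be non-empty and time-aligned")
    scale = 10.0 / math.log(10.0) * math.sqrt(2.0)
    total = 0.0
    for r, c in zip(ref, conv):
        total += math.sqrt(sum((a - b) ** 2 for a, b in zip(r, c)))
    return scale * total / len(ref)


def word_error_rate(reference: Sequence[str], hypothesis: Sequence[str]) -> float:
    """Levenshtein distance between token sequences divided by the reference length."""
    if not reference:
        raise ValueError("reference must not be empty")
    prev = list(range(len(hypothesis) + 1))
    for i, ref_tok in enumerate(reference, start=1):
        cur = [i]
        for j, hyp_tok in enumerate(hypothesis, start=1):
            substitution = prev[j - 1] + (0 if ref_tok == hyp_tok else 1)
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, substitution))
        prev = cur
    return prev[-1] / len(reference)


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two speaker embeddings."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)
```

For Mandarin transcripts, the token sequences passed to `word_error_rate` could be characters rather than space-separated words; which unit was used here is not stated.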

5.6. Experimental Results

(1) Quality evaluation of voice conversion

The primary goals of the voice conversion (VC) task are to transform the speaker’s timbre while preserving the original linguistic content and to maximize the naturalness and intelligibility of the generated speech. To this end, we evaluated the proposed method on the speech test set by using both subjective and objective metrics. Subjective evaluation was based on Mean Opinion Score (MOS) tests, while objective performance was quantified by using the Mel Cepstral Distortion (MCD) and Word Error Rate (WER). This combination provided a comprehensive assessment of the effectiveness of different voice conversion approaches.

As shown in Table 1, the proposed MPFM-VC algorithm achieved the best results among the evaluated methods in terms of naturalness (MOS, higher is better), audio fidelity (MCD, lower is better), and speech clarity (WER, lower is better). Compared with existing models, MPFM-VC exhibited relatively consistent performance across these evaluation metrics, suggesting improved robustness under varying data conditions.

Table 1.

Results of voice conversion quality evaluation.

In particular, MPFM-VC showed an 11.57% increase in MOS compared to Free-VC, which may reflect better handling of speech continuity and prosodic variation while reducing artifacts commonly associated with end-to-end VITS-based frameworks. Compared with diffusion-based models such as Diff-VC and DDDM-VC, MPFM-VC reported lower MCD and WER values, indicating that it may better preserve semantic content and enhance the intelligibility of the converted speech. Furthermore, compared to Voiceflow-VC, a recent baseline based on rectified flow matching, MPFM-VC achieved marginal improvements across all three evaluation metrics. Although Voiceflow-VC already produces competitive results in terms of synthesis quality and efficiency, the inclusion of multi-dimensional feature modeling and content perturbation in MPFM-VC may contribute to enhanced spectral accuracy and prosodic consistency, which could lead to improved intelligibility and speaker similarity.

Overall, these results suggest that the proposed architectural components in MPFM-VC—particularly the integration of auxiliary acoustic features and robustness-aware training strategies—may enhance the model’s adaptability to various acoustic conditions and speaker characteristics.

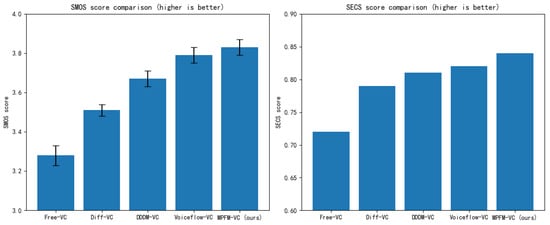

(2) Timbre similarity evaluation of voice conversion

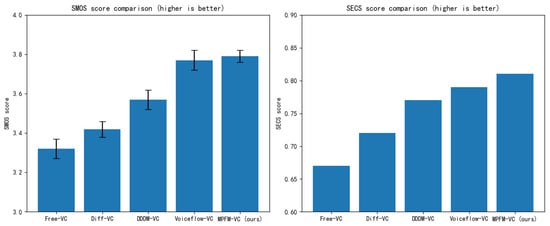

The goal of speaker similarity evaluation is to assess a voice conversion method’s ability to preserve the timbre consistency of the target speaker. In this study, we adopted two metrics for analysis: Similarity MOS (SMOS) and Speaker Embedding Cosine Similarity (SECS).

As shown in Table 2 and Figure 8, MPFM-VC exhibited relatively high speaker consistency in the speaker similarity evaluation task, achieving the highest SMOS (3.83) and SECS (0.84) scores. This suggests that MPFM-VC may better preserve the timbral characteristics of the target speaker during conversion. Compared with Free-VC, MPFM-VC enhanced the model’s adaptability to target speaker embeddings through multi-dimensional feature perception modeling, thereby improving post-conversion timbre similarity. Although Diff-VC benefited from diffusion-based generation, which improved overall audio quality to some extent, it failed to sufficiently disentangle speaker identity features, resulting in residual characteristics from the source speaker in the converted speech. While DDDM-VC introduced feature disentanglement mechanisms that improved speaker similarity, it still fell short compared with MPFM-VC. MPFM-VC showed slightly improved results over Voiceflow-VC by incorporating adversarial training tailored to speaker embeddings, which more effectively suppressed residual speaker leakage and refined speaker identity alignment.

Table 2.

Results of voice conversion timbre similarity evaluation.

Figure 8.

Results of voice conversion timbre similarity evaluation.

These findings suggest that the combination of flow-based modeling and speaker-guided adversarial training in MPFM-VC may support improved speaker similarity while maintaining acceptable naturalness and synthesis stability in voice conversion tasks.

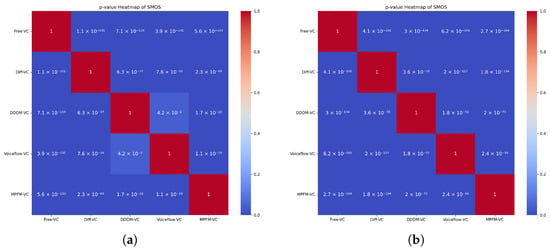

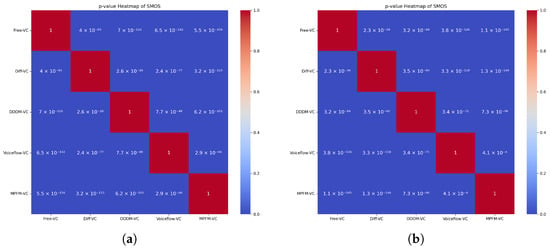

(3) Student’s t-test of voice conversion

To validate the reliability of the subjective evaluation results, we conducted two-sample Student’s t-tests between each pair of evaluated systems for both MOS and SMOS metrics. In the p-value heatmaps, lower (blue) values indicate stronger statistical significance.
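A pairwise comparison of this kind can be sketched with the standard library alone. The example below implements the classical pooled-variance two-sample Student's t-test and obtains the two-sided p-value by numerically integrating the t density with Simpson's rule. Whether the authors pooled variances or used a statistics package is not stated, so this is an illustrative assumption, and all function names are hypothetical.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def student_t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p_value(t: float, df: int, n_intervals: int = 2000) -> float:
    """P(|T| >= |t|), using Simpson's rule on [0, |t|] (n_intervals must be even)."""
    upper = abs(t)
    if upper == 0.0:
        return 1.0
    h = upper / n_intervals
    acc = student_t_pdf(0.0, df) + student_t_pdf(upper, df)
    for k in range(1, n_intervals):
        acc += (4 if k % 2 else 2) * student_t_pdf(k * h, df)
    central_mass = 2.0 * acc * h / 3.0  # probability of |T| < |t|
    return max(0.0, 1.0 - central_mass)


def student_t_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int, float]:
    """Return (t statistic, degrees of freedom, two-sided p-value) with pooled variance."""
    n_a, n_b = len(a), len(b)
    df = n_a + n_b - 2
    pooled = ((n_a - 1) * variance(a) + (n_b - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(pooled * (1 / n_a + 1 / n_b))
    return t, df, two_sided_p_value(t, df)
```

Because the p-value is computed as one minus a numerically integrated mass, this sketch loses precision for extremely small p-values; in practice, a library routine such as `scipy.stats.ttest_ind` would be a more robust choice.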

As shown in Figure 9, for MOS, our proposed method MPFM-VC exhibited statistically significant improvements (p < 0.05) over all baseline models, including Free-VC, Diff-VC, DDDM-VC, and Voiceflow-VC. The p-values were all extremely small (e.g., 2.3 × between MPFM-VC and DDDM-VC), confirming that the perceived improvements in naturalness were not due to chance.

Figure 9.

Student’s t-test of voice conversion. (a) p-value heatmap of MOS, (b) p-value heatmap of SMOS.

Similarly, for SMOS, MPFM-VC also achieved statistically significant differences against all other systems, with p-values often smaller than 1 × . This reinforces the claim that our method provides more consistent and perceptually closer timbre to the target speaker than competing approaches.

Notably, even between Voiceflow-VC and other strong baselines like DDDM-VC, the differences were statistically significant, which highlighted the effectiveness of flow-matching-based methods in general. However, MPFM-VC still surpassed Voiceflow-VC with clear statistical confidence. These results demonstrated that the performance gains observed in MOS and SMOS were both consistent and statistically significant, further supporting the effectiveness of our proposed multi-dimensional perception flow-matching strategy.

(4) Quality evaluation of singing voice conversion

In the context of voice conversion, singing voice conversion is generally more challenging than standard speech conversion due to its inherently richer pitch variations, stricter timbre stability requirements, and greater prosodic complexity. We adopted the same set of evaluation metrics used in speech conversion to comprehensively evaluate the performance of different models on the singing voice conversion task, including the subjective Mean Opinion Score (MOS) and objective indicators such as Mel Cepstral Distortion (MCD) and the Word Error Rate (WER).

As shown in Table 3, MPFM-VC achieved strong results on the singing voice conversion task, with a MOS of 4.12, an MCD of 6.32, and a WER of 4.86%. Through multi-dimensional feature perception modeling, MPFM-VC adapted to the melodic variations and pitch fluctuations typically found in singing voices, producing outputs that exhibited improved fluency and preserved audio clarity. Compared with Free-VC, MPFM-VC achieved slightly better naturalness scores, possibly due to its use of flow matching, which may enhance dynamic feature modeling. Diffusion-based methods such as Diff-VC and DDDM-VC tended to introduce some degree of over-smoothing, which may affect the retention of fine acoustic detail. Voiceflow-VC, as a recent flow-matching approach, achieved competitive performance (MOS: 3.93, WER: 5.56%), showing effective modeling of singing voices. However, MPFM-VC reported consistently better results across all metrics, potentially due to the integration of multi-dimensional conditioning and perturbation training.

Table 3.

Results of singing voice conversion quality evaluation.

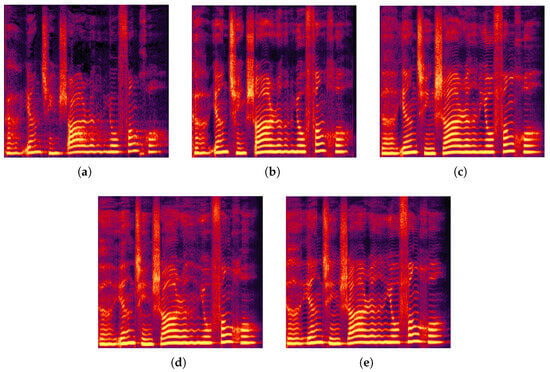

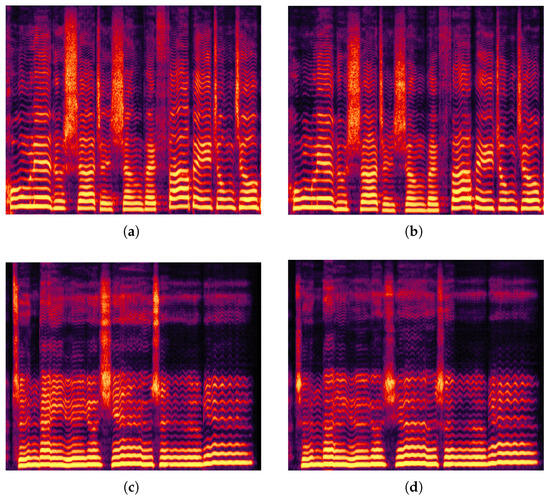

Additionally, we randomly selected a singing voice segment for spectrogram comparison, as shown in Figure 10. The spectrogram generated by MPFM-VC appeared sharper and more structured, which may be attributed to the multi-dimensional perception network that enabled a more accurate reconstruction of detailed features such as vibrato and articulation. Voiceflow-VC also showed strong performance in maintaining temporal and spectral structure, outperforming Free-VC and diffusion-based models in some aspects. However, it captured slightly fewer high-frequency harmonics and transient variations compared to MPFM-VC, which may be important for expressive singing voice synthesis. These findings support the potential of the proposed method in producing perceptually improved results in singing voice conversion.

Figure 10.

Spectrogram comparison for the same voice input. (a) Free-VC, (b) Diff-VC, (c) DDDM-VC, (d) Voiceflow-VC, (e) MPFM-VC.

(5) Timbre similarity evaluation of singing voice conversion

Timbre similarity in singing voices is a critical metric for evaluating a model’s ability to preserve the target singer’s vocal identity during conversion. Compared with normal speech, singing involves more complex pitch variations, formant structures, prosodic patterns, and timbre continuity, which pose additional challenges for accurate speaker similarity modeling. In this study, we performed a comprehensive evaluation by using both subjective SMOS (Similarity MOS) and objective SECS (Speaker Embedding Cosine Similarity) to assess the effectiveness of different methods in capturing and preserving timbral consistency in singing voice conversion.

As shown in Table 4 and Figure 11, MPFM-VC achieved superior performance in singing voice timbre similarity evaluation. It achieved higher scores in both SMOS (3.79) and SECS (0.81) than the compared baselines, suggesting improved preservation of the target singer’s timbral identity. In singing voice conversion, Free-VC and Diff-VC showed limitations in disentangling content and speaker representations, which may result in timbre mismatches with the target voice. Although the diffusion-based DDDM-VC model partially solved this issue, it continued to exhibit timbre smoothing effects, which may reduce the perceptual uniqueness of the generated singing voices. Voiceflow-VC showed improved timbre consistency over these baselines and achieved competitive SMOS and SECS scores. However, it achieved slightly lower scores than MPFM-VC, especially in cases requiring preservation of fine speaker-specific details during dynamic singing phrases.

Table 4.

Results of singing voice conversion timbre similarity evaluation.

Figure 11.

Results of singing voice conversion timbre similarity evaluation.

In contrast, MPFM-VC incorporated an adversarial speaker disentanglement strategy, which helped reduce residual source speaker cues and contributed to improved alignment with the target singer’s timbre. Additionally, MPFM-VC enabled the fine-grained modeling of timbral variation with a multi-dimensional feature-aware flow-matching mechanism, potentially supporting more stable and consistent timbre rendering throughout the conversion process.

(6) Student’s t-test of singing voice conversion

To further validate the significance of our model’s performance in the singing voice conversion task, we conducted pairwise Student’s t-tests on the subjective evaluation metrics (MOS and SMOS). As shown in Figure 12, most p-values were well below 0.05, indicating that the differences in perceived quality and timbre similarity between MPFM-VC and the baselines were statistically significant. Notably, MPFM-VC achieved significantly better results than all compared methods in both metrics.

Figure 12.

Student’s t-test of singing voice conversion. (a) p-value heatmap of MOS, (b) p-value heatmap of SMOS.

(7) Robustness evaluation under low-quality conditions

In real-world applications, voice conversion systems must exhibit robustness to low-quality input data in order to maintain reliable performance under adverse conditions such as background noise, limited recording hardware, or unclear articulation by the speaker. To assess this capability, we additionally collected a set of 30 low-quality speech samples that incorporated common noise-related challenges, including mumbling, background reverberation, ambient noise, signal clipping, and low-bitrate encoding.

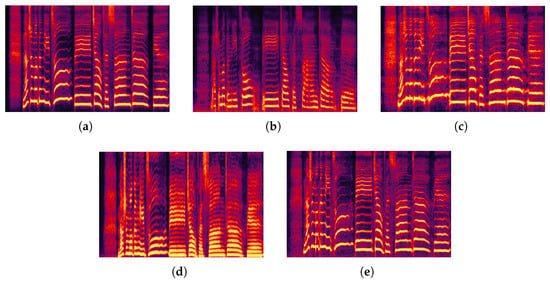

As shown in Table 5 and the spectrograms in Figure 13, MPFM-VC maintained stable performance even under low-quality speech conditions. The generated spectrograms retained clear structural patterns, suggesting preserved synthesis quality despite degraded inputs, whereas other systems showed more degradation in terms of naturalness and timbre consistency.

Table 5.

Results of voice conversion under low-quality conditions.

Figure 13.

Spectrograms under low-quality conditions. (a) Free-VC, (b) Diff-VC, (c) DDDM-VC, (d) Voiceflow-VC, (e) MPFM-VC.

Diffusion-based models, such as Diff-VC and DDDM-VC, exhibited reduced performance under noise perturbation. Their spectrograms appeared blurred, and metrics such as MCD and WER indicated degradation. For instance, Diff-VC resulted in the highest WER (35.20%) and MCD (8.85), pointing to its sensitivity to noisy inputs. Voiceflow-VC, using rectified flow matching, showed relatively better robustness compared to diffusion-based baselines, achieving higher MOS (3.12) and SMOS (3.34) than Free-VC and DDDM-VC. However, its performance remained slightly lower than that of MPFM-VC in terms of intelligibility and timbre similarity.

MPFM-VC achieved the best results across all evaluation metrics, including WER (15.37%), MCD (6.89), SMOS (3.53), and SECS (0.73). These results suggest that multi-dimensional feature modeling and content perturbation strategies may enhance resilience to noisy conditions and support more consistent and intelligible voice conversion in practical scenarios.

It is worth noting that although Free-VC showed relatively weaker performance in previous experiments, it still performed more reliably than diffusion-based architectures under low-quality speech conditions. Its generated spectrograms exhibited limited blurring, which may suggest some noise tolerance associated with the end-to-end variational autoencoder (VAE)-based modeling approach. However, its SECS score remained notably lower than that of MPFM-VC, suggesting possible challenges in preserving accurate timbre.

Voiceflow-VC, which leverages rectified flow matching for generation, showed improved robustness compared to diffusion-based models such as Diff-VC and DDDM-VC. It achieved competitive intelligibility and timbre similarity scores, and its spectrograms appeared more consistent under noise. Nevertheless, its overall performance was slightly lower than MPFM-VC, particularly in preserving speaker-specific features during more complex singing phrases, as reflected in the SECS and WER scores.

MPFM-VC maintained relatively high speech quality and speaker similarity under low-quality input conditions. It yielded the best results across the reported evaluation metrics, including MOS, MCD, WER, and SMOS, and its spectrograms retained structural clarity. This outcome may be related to the integration of multi-dimensional feature-aware flow matching, which enables more detailed modeling of speech under varying noise conditions. Additionally, the content perturbation-based training augmentation may improve the model’s robustness to missing or degraded input features, and the adversarial training on speaker embeddings may contribute to better timbre preservation under noisy conditions.

5.7. Ablation Experiments

(1) Prosodic feature verification in multi-dimensional perception network

In this study, we refined the existing Classifier-Free Guidance (CFG) inference strategy, as shown in Equation (7), by increasing the weight of prosodic features in the MDPN. This adjustment enabled a more effective analysis of the impact of prosody on the generated speech through spectrogram-based evaluations.

We set the weight of the prosodic matrix in the prosody-based CFG to 0.5 and compared the result with the version without prosody CFG. As illustrated in Figure 14, we randomly selected two pairs of singing samples for a more intuitive comparison. In the versions with prosody CFG, the high-frequency regions appeared more enriched, indicating greater vocal openness and increased vocal brightness. Additionally, the spectrograms showed brighter formant structures for each syllable, suggesting that the prosody CFG enhanced the emphasis and articulation of stressed syllables.
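The idea of re-weighting one condition at inference time can be illustrated with a generic classifier-free guidance step. Equation (7) is not restated in this section, so the guidance form used below, in which the prediction with prosody is pushed further away from the prediction without prosody, is a commonly used variant assumed for illustration. The toy predictor, the function names, and the argument layout are hypothetical.

```python
from typing import Callable, List, Optional, Sequence

Vector = List[float]
Predictor = Callable[[Sequence[float], float, Optional[Sequence[float]]], Vector]


def prosody_guided_field(predict: Predictor,
                         x_t: Sequence[float],
                         t: float,
                         prosody: Sequence[float],
                         weight: float = 0.5) -> Vector:
    """Combine vector fields predicted with and without the prosody condition.

    Assumed form: v = v_with + weight * (v_with - v_without).
    A weight of 0 recovers the ordinary conditional prediction.
    """
    v_with = predict(x_t, t, prosody)
    v_without = predict(x_t, t, None)
    return [vw + weight * (vw - vo) for vw, vo in zip(v_with, v_without)]


def toy_predict(x_t: Sequence[float], t: float,
                prosody: Optional[Sequence[float]]) -> Vector:
    """Stand-in for the trained network: a simple field shifted by the prosody input."""
    base = [-x for x in x_t]
    if prosody is None:
        return base
    return [b + p for b, p in zip(base, prosody)]
```

In this toy setting, a weight of 0.5 amplifies the contribution of the prosody condition by 50% relative to the unguided conditional prediction, which mirrors the weight reported above.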

Figure 14.

Prosodic feature verification in multi-dimensional perception network. (a) With prosodic CFG voice 1, (b) without prosodic CFG voice 1, (c) with prosodic CFG voice 2, (d) without prosodic CFG voice 2.

(2) Content perturbation-based training enhancement method

In the voice conversion task, the content perturbation-based training augmentation strategy was designed to improve model generalization by introducing controlled perturbations to content representations during training. This approach aimed to reduce inference-time artifacts such as unexpected noise and to improve the overall stability of the converted speech. To validate its effectiveness, we conducted an ablation study by removing the content perturbation mechanism and observing the impact on speech conversion performance. In addition, we applied the augmentation methods of two related works to MPFM-VC as baselines. SpecAugment [51] applies random masks to the input mel spectrogram, and MaskCycleGAN-VC [52] uses a filling in frames (FIF) method to mask the mel spectrogram, which is similar to the method in this paper. The difference is that FIF augments the spectrum, whereas the method in this paper augments the content representation. A test set consisting of 100 out-of-distribution audio samples with varying durations was used to simulate real-world scenarios under complex conditions. The following evaluation metrics were reported: MOS, MCD, WER, SMOS, SECS, and the frequency of plosive artifacts per 10 s in converted speech (Badcase).
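To make the distinction between spectrum-level and content-level augmentation more tangible, the sketch below perturbs a discrete content token sequence rather than a mel spectrogram, and also shows how a Badcase rate per 10 s can be normalized. The specific perturbation rule (independent random replacement of tokens), the replacement probability, and the function names are assumptions for illustration; they are not claimed to match the perturbation procedure used in MPFM-VC.

```python
import random
from typing import List, Optional, Sequence

CONTENT_VOCAB_SIZE = 4096  # vocabulary size of the content representation


def perturb_content(tokens: Sequence[int],
                    vocab_size: int = CONTENT_VOCAB_SIZE,
                    replace_prob: float = 0.1,
                    rng: Optional[random.Random] = None) -> List[int]:
    """Replace each content token with a random token id with probability replace_prob."""
    rng = rng or random.Random()
    return [rng.randrange(vocab_size) if rng.random() < replace_prob else tok
            for tok in tokens]


def badcase_rate(n_artifacts: int, duration_s: float) -> float:
    """Number of plosive artifacts normalized to a 10 s window."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return 10.0 * n_artifacts / duration_s
```

For example, three artifacts detected in 60 s of converted speech correspond to a Badcase rate of 0.5 per 10 s.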

As shown in Table 6, the content perturbation-based training augmentation appeared to contribute to improved stability and robustness in the voice conversion model. When this component was removed, the Badcase rate increased noticeably (from 0.39 to 1.52 per 10 s), suggesting a higher occurrence of artifacts such as plosive noise or interruptions in more acoustically challenging cases. Additionally, MCD and WER both rose slightly, which may indicate reduced fidelity and intelligibility.

Table 6.

Results of ablation experiments for content perturbation-based training enhancement method.

Interestingly, the MOS improved slightly in the absence of perturbation, possibly due to better in-domain fitting, though this came at the cost of generalization across longer or mismatched utterances. Meanwhile, the SMOS and SECS remained largely stable, implying that the perturbation strategy primarily contributed to robustness rather than timbre consistency.

Compared to the traditional SpecAugment method, which yielded inferior results likely due to random masking, the proposed strategy offered more structured perturbation. Similarly, the FIF approach from MaskCycleGAN-VC also enhanced robustness, though it resulted in slight reductions in MOS and MCD. Nevertheless, FIF performed comparably to our approach in content preservation and timbre similarity under certain conditions.

Overall, the results suggest that the content perturbation strategy provides meaningful improvements in stability and generalization, particularly under noisy or out-of-domain scenarios, and can be a valuable addition for real-world voice conversion systems.

(3) Adversarial training mechanism based on voiceprint

In the voice conversion task, the strategy of adversarial training on speaker embeddings was designed to enhance the timbre similarity to the target speaker while suppressing residual speaker identity information from the source speaker. This ensured that the converted speech better matched the target speaker’s voice without compromising the overall speech quality.

To evaluate the effectiveness of that strategy, we conducted an ablation study by removing the adversarial training module and comparing its impact on timbre similarity and overall speech quality in the converted outputs. Moreover, we incorporated the conditioning strategy from Voicebox [36] into our baseline model MPFM-VC. The method involved using the mel spectrogram of a target speaker as a prompt, which was injected into each perceptual block to enhance speaker similarity.

As shown in Table 7, the speaker adversarial training module appeared to contribute substantially to timbre matching in the voice conversion task. When this component was removed, both SMOS and SECS scores decreased—from 3.83 to 3.62 and from 0.84 to 0.73, respectively—suggesting reduced alignment with the target speaker’s timbre and an increase in residual characteristics from the source speaker.

Table 7.

Ablation experimental results of adversarial training method for voiceprint features.

In contrast, the MOS and MCD metrics remained largely unchanged, indicating that the adversarial mechanism had a limited impact on overall audio quality. Interestingly, WER improved slightly when the module was removed, which may reflect enhanced intelligibility. However, this change could be associated with a loss of timbre specificity, as the model could default to producing more neutral or averaged vocal characteristics.

While higher speaker similarity could be achieved with Voicebox-based methods, they relied on the mel spectrogram of a reference utterance, which might introduce unrelated acoustic information and affect both quality and intelligibility.

Overall, adversarial training helped improve timbre consistency by suppressing residual speaker identity cues in the content representation. While some perceptual quality scores improved when this component was omitted, these gains came at the expense of precise speaker identity transfer. Therefore, in practical applications, adversarial training remains essential to achieving high-quality voice conversion, ensuring that the generated speech is not only intelligible but also accurately timbre-matched to the intended target speaker.

6. Conclusions

To address the challenges of achieving high-quality and robust speech conversion, we proposed MPFM-VC, a novel model that utilized ordinary differential equations (ODEs) to model the dynamic evolution of speech features. A multi-dimensional perception mechanism was introduced to improve the stability and naturalness of speech synthesis. In addition, a content perturbation-based training augmentation strategy was employed to enhance the model’s generalization ability by introducing controlled perturbations to content features during training, helping reduce undesirable artifacts during inference. To further improve timbre matching, a voiceprint adversarial training strategy was adopted to better disentangle content and speaker identity features, minimizing feature entanglement and reducing residual speaker information. Experimental results indicated that the proposed MPFM-VC model achieved competitive performance across multiple evaluation metrics—including MOS, MCD, and SECS—demonstrating improvements in naturalness, speaker similarity, and robustness in real-world scenarios.

Limitations and Future Work

While MPFM-VC demonstrated superior performance in both clean and noisy voice conversion scenarios, several limitations remain to be addressed in future work.

First, although the use of flow matching provides a non-autoregressive and parallelizable generation scheme, the overall inference pipeline—particularly the multi-dimensional feature perception module—still introduces non-negligible computational overhead. This may pose challenges for deployment in resource-constrained or real-time streaming applications, especially when dealing with longer input sequences or high-resolution acoustic representations. We hypothesize that a knowledge distillation approach—similar to that used in ComSpeech [34]—could be leveraged to approximate the one-step solution of the ODE, thereby enhancing inference efficiency.
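The link between the number of ODE solver steps and inference cost can be illustrated with a toy Euler sampler. The vector field below is a hand-written straight-line field toward a fixed target, not the trained MPFM-VC network, and all names are hypothetical. It only shows that each solver step costs one evaluation of the vector field and that, when trajectories are straight, a single step already reaches the endpoint.

```python
from typing import Callable, List, Sequence

VectorField = Callable[[Sequence[float], float], List[float]]


def euler_sample(vector_field: VectorField,
                 x0: Sequence[float],
                 n_steps: int) -> List[float]:
    """Integrate dx/dt = v(x, t) from t = 0 to t = 1 with fixed-step Euler."""
    x = list(x0)
    dt = 1.0 / n_steps
    for k in range(n_steps):
        t = k * dt
        v = vector_field(x, t)  # one network evaluation per step in a real model
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def make_straight_line_field(target: Sequence[float]) -> VectorField:
    """Toy field whose trajectories are straight lines ending at target at t = 1."""
    def field(x: Sequence[float], t: float) -> List[float]:
        return [(ti - xi) / (1.0 - t) for ti, xi in zip(target, x)]
    return field
```

With this toy field, one Euler step and ten Euler steps end at the same point, but the latter requires ten times as many field evaluations. A learned vector field is generally not perfectly straight, which is why several steps, or a distilled one-step approximation as hypothesized above, would be needed in practice.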

Moreover, to further advance research on enhancing speaker similarity and robustness within the flow-matching framework, we believe that a recent study on flow matching [53] offers valuable insights. This work proposes guiding the training of the flow-matching vector field using an auxiliary speech representation. Inspired by this, we suggest that such a strategy could be adapted to continuously guide the content encoder, effectively disentangling speaker-related information while enriching the completeness of content representations. This, in turn, has the potential to significantly improve the robustness and speaker similarity of voice conversion systems.

In future work, we also plan to explore lightweight alternatives to reduce model complexity, improve streaming compatibility, and investigate more efficient flow-based generation methods for low-latency deployment.

Author Contributions

Y.W.: methodology, software, validation, formal analysis, investigation, resources, and data curation; Y.W. and X.H.: writing—original draft preparation; Y.W., X.H., S.L., T.Z. and Y.C.: writing—review and editing; Y.W.: visualization; X.H.: supervision and project administration. All authors have read and agreed to the published version of the manuscript.

Funding

This research study received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are contained in this paper. Further inquiries (including but not limited to trained models and code) can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| TTS | Text to speech |
| VC | Voice conversion |
| ODE | Ordinary differential equation |
| MOS | Mean Opinion Score |
| MCD | Mel Cepstral Distortion |
| WER | Word Error Rate |
| SMOS | Similarity Mean Opinion Score |
| SECS | Speaker Embedding Cosine Similarity |
| ICASSP | IEEE International Conference on Acoustics, Speech and Signal Processing |
| ICLR | International Conference on Learning Representations |
| AAAI | Association for the Advancement of Artificial Intelligence Conference |

References

- Kaur, N.; Singh, P. Conventional and contemporary approaches used in text to speech synthesis: A review. Artif. Intell. Rev. 2023, 56, 5837–5880.

- Ren, Y.; Ruan, Y.; Tan, X.; Qin, T.; Zhao, S.; Zhao, Z.; Liu, T.Y. Fastspeech: Fast, robust and controllable text to speech. In Advances in Neural Information Processing Systems, Proceedings of the Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; Curran Associates Inc.: Red Hook, NY, USA, 2019; Volume 32.

- Ren, Y.; Hu, C.; Tan, X.; Qin, T.; Zhao, S.; Zhao, Z.; Liu, T.Y. Fastspeech 2: Fast and high-quality end-to-end text to speech. arXiv 2020, arXiv:2006.04558.

- Wang, Y.; Skerry-Ryan, R.; Stanton, D.; Wu, Y.; Weiss, R.J.; Jaitly, N.; Yang, Z.; Xiao, Y.; Chen, Z.; Bengio, S.; et al. Tacotron: Towards end-to-end speech synthesis. arXiv 2017, arXiv:1703.10135.

- Shen, J.; Pang, R.; Weiss, R.J.; Schuster, M.; Jaitly, N.; Yang, Z.; Chen, Z.; Zhang, Y.; Wang, Y.; Skerry-Ryan, R.; et al. Natural tts synthesis by conditioning wavenet on mel spectrogram predictions. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 4779–4783.

- Li, N.; Liu, S.; Liu, Y.; Zhao, S.; Liu, M. Neural speech synthesis with transformer network. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 6706–6713.

- Saito, Y.; Ijima, Y.; Nishida, K.; Takamichi, S. Non-parallel voice conversion using variational autoencoders conditioned by phonetic posteriorgrams and d-vectors. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 5274–5278.

- Qian, K.; Zhang, Y.; Chang, S.; Yang, X.; Hasegawa-Johnson, M. Autovc: Zero-shot voice style transfer with only autoencoder loss. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 5210–5219.

- Chou, J.C.; Yeh, C.C.; Lee, H.Y. One-shot voice conversion by separating speaker and content representations with instance normalization. arXiv 2019, arXiv:1904.05742.

- Jia, Y.; Zhang, Y.; Weiss, R.; Wang, Q.; Shen, J.; Ren, F.; Nguyen, P.; Pang, R.; Lopez Moreno, I.; Wu, Y.; et al. Transfer learning from speaker verification to multispeaker text-to-speech synthesis. In Advances in Neural Information Processing Systems, Proceedings of the Annual Conference on Neural Information Processing Systems 2018, NeurIPS 2018, Montréal, QC, Canada, 3–8 December 2018; Curran Associates Inc.: Red Hook, NY, USA, 2018; Volume 31.

- Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust speech recognition via large-scale weak supervision. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 28492–28518.

- Baevski, A.; Zhou, Y.; Mohamed, A.; Auli, M. wav2vec 2.0: A framework for self-supervised learning of speech representations. In Advances in Neural Information Processing Systems, Proceedings of the Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, Online, 6–12 December 2020; Curran Associates Inc.: Red Hook, NY, USA, 2020; Volume 33.

- Qian, K.; Zhang, Y.; Gao, H.; Ni, J.; Lai, C.I.; Cox, D.; Hasegawa-Johnson, M.; Chang, S. Contentvec: An improved self-supervised speech representation by disentangling speakers. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 18003–18017.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Advances in Neural Information Processing Systems, Proceedings of the Annual Conference on Neural Information Processing Systems 2014, NeurIPS 2014, Montréal, QC, Canada, 8–13 December 2014; Curran Associates Inc.: Red Hook, NY, USA, 2014; Volume 27.

- Rezende, D.J.; Mohamed, S.; Wierstra, D. Stochastic backpropagation and approximate inference in deep generative models. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 1278–1286.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems, Proceedings of the Annual Conference on Neural Information Processing Systems 2017, NeurIPS 2017, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Sohl-Dickstein, J.; Weiss, E.; Maheswaranathan, N.; Ganguli, S. Deep unsupervised learning using nonequilibrium thermodynamics. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2256–2265.

- Kong, J.; Kim, J.; Bae, J. Hifi-gan: Generative adversarial networks for efficient and high fidelity speech synthesis. In Advances in Neural Information Processing Systems, Proceedings of the Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, Online, 6–12 December 2020; Curran Associates Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 17022–17033.

- Lee, S.G.; Ping, W.; Ginsburg, B.; Catanzaro, B.; Yoon, S. Bigvgan: A universal neural vocoder with large-scale training. arXiv 2022, arXiv:2206.04658.

- Kaneko, T.; Tanaka, K.; Kameoka, H.; Seki, S. iSTFTNet: Fast and lightweight mel-spectrogram vocoder incorporating inverse short-time Fourier transform. In Proceedings of the ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 6207–6211.

- Kim, J.; Kong, J.; Son, J. Conditional variational autoencoder with adversarial learning for end-to-end text-to-speech. In Proceedings of the International Conference on Machine Learning, Online, 18–24 July 2021; pp. 5530–5540.

- Kong, J.; Park, J.; Kim, B.; Kim, J.; Kong, D.; Kim, S. Vits2: Improving quality and efficiency of single-stage text-to-speech with adversarial learning and architecture design. arXiv 2023, arXiv:2307.16430.

- Li, J.; Tu, W.; Xiao, L. Freevc: Towards high-quality text-free one-shot voice conversion. In Proceedings of the ICASSP 2023–2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Lei, Y.; Yang, S.; Cong, J.; Xie, L.; Su, D. Glow-wavegan 2: High-quality zero-shot text-to-speech synthesis and any-to-any voice conversion. arXiv 2022, arXiv:2207.01832.

- Pankov, V.; Pronina, V.; Kuzmin, A.; Borisov, M.; Usoltsev, N.; Zeng, X.; Golubkov, A.; Ermolenko, N.; Shirshova, A.; Matveeva, Y. DINO-VITS: Data-Efficient Zero-Shot TTS with Self-Supervised Speaker Verification Loss for Noise Robustness. arXiv 2023, arXiv:2311.09770.

- Huang, W.C.; Violeta, L.P.; Liu, S.; Shi, J.; Toda, T. The singing voice conversion challenge 2023. In Proceedings of the 2023 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Taipei, Taiwan, 16–20 December 2023; pp. 1–8.

- Zhou, Y.; Chen, M.; Lei, Y.; Zhu, J.; Zhao, W. VITS-based Singing Voice Conversion System with DSPGAN post-processing for SVCC2023. In Proceedings of the 2023 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Taipei, Taiwan, 16–20 December 2023; pp. 1–8.

- Ning, Z.; Jiang, Y.; Wang, Z.; Zhang, B.; Xie, L. Vits-based singing voice conversion leveraging whisper and multi-scale f0 modeling. In Proceedings of the 2023 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), Taipei, Taiwan, 16–20 December 2023; pp. 1–8.

- Kong, Z.; Ping, W.; Huang, J.; Zhao, K.; Catanzaro, B. Diffwave: A versatile diffusion model for audio synthesis. arXiv 2020, arXiv:2009.09761.

- Popov, V.; Vovk, I.; Gogoryan, V.; Sadekova, T.; Kudinov, M. Grad-tts: A diffusion probabilistic model for text-to-speech. In Proceedings of the International Conference on Machine Learning, Online, 18–24 July 2021; pp. 8599–8608.

- Popov, V.; Vovk, I.; Gogoryan, V.; Sadekova, T.; Kudinov, M.S.; Wei, J. Diffusion-Based Voice Conversion with Fast Maximum Likelihood Sampling Scheme. In Proceedings of the International Conference on Learning Representations, Online, 25–29 April 2022.

- Choi, H.Y.; Lee, S.H.; Lee, S.W. Dddm-vc: Decoupled denoising diffusion models with disentangled representation and prior mixup for verified robust voice conversion. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 17862–17870.

- Ye, Z.; Xue, W.; Tan, X.; Chen, J.; Liu, Q.; Guo, Y. Comospeech: One-step speech and singing voice synthesis via consistency model. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 1831–1839.

- Esser, P.; Kulal, S.; Blattmann, A.; Entezari, R.; Müller, J.; Saini, H.; Levi, Y.; Lorenz, D.; Sauer, A.; Boesel, F.; et al. Scaling rectified flow transformers for high-resolution image synthesis. In Proceedings of the Forty-First International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.

- Le, M.; Vyas, A.; Shi, B.; Karrer, B.; Sari, L.; Moritz, R.; Williamson, M.; Manohar, V.; Adi, Y.; Mahadeokar, J.; et al. Voicebox: Text-guided multilingual universal speech generation at scale. In Advances in Neural Information Processing Systems, Proceedings of the Annual Conference on Neural Information Processing Systems 2023, NeurIPS 2023, New Orleans, LA, USA, 10–16 December 2023; Curran Associates Inc.: Red Hook, NY, USA, 2023; Volume 36.

- Guo, Y.; Du, C.; Ma, Z.; Chen, X.; Yu, K. Voiceflow: Efficient text-to-speech with rectified flow matching. In Proceedings of the ICASSP 2024–2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 11121–11125.

- Guo, W.; Zhang, Y.; Pan, C.; Huang, R.; Tang, L.; Li, R.; Hong, Z.; Wang, Y.; Zhao, Z. TechSinger: Technique Controllable Multilingual Singing Voice Synthesis via Flow Matching. arXiv 2025, arXiv:2502.12572.

- Sadekova, T.; Kudinov, M.; Popov, V.; Yermekova, A.; Khrapov, A. PitchFlow: Adding pitch control to a Flow-matching based TTS model. In Proceedings of the Interspeech 2024, Kos Island, Greece, 1–5 September 2024; pp. 4418–4422.

- Yao, J.; Yuguang, Y.; Pan, Y.; Ning, Z.; Ye, J.; Zhou, H.; Xie, L. Stablevc: Style controllable zero-shot voice conversion with conditional flow matching. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 27 February–4 March 2025; Volume 39, pp. 25669–25677.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Lecture Notes in Computer Science, Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings Part III 18; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Wei, H.; Cao, X.; Dan, T.; Chen, Y. RMVPE: A robust model for vocal pitch estimation in polyphonic music. arXiv 2023, arXiv:2306.15412.

- Van Niekerk, B.; Carbonneau, M.A.; Zaïdi, J.; Baas, M.; Seuté, H.; Kamper, H. A comparison of discrete and soft speech units for improved voice conversion. In Proceedings of the ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 6562–6566.

- Wang, H.; Zheng, S.; Chen, Y.; Cheng, L.; Chen, Q. Cam++: A fast and efficient network for speaker verification using context-aware masking. arXiv 2023, arXiv:2303.00332.

- Ho, J.; Salimans, T. Classifier-free diffusion guidance. arXiv 2022, arXiv:2207.12598.

- An, K.; Chen, Q.; Deng, C.; Du, Z.; Gao, C.; Gao, Z.; Gu, Y.; He, T.; Hu, H.; Hu, K.; et al. Funaudiollm: Voice understanding and generation foundation models for natural interaction between humans and llms. arXiv 2024, arXiv:2407.04051.

- Kameoka, H.; Kaneko, T.; Tanaka, K.; Hojo, N. Stargan-vc: Non-parallel many-to-many voice conversion using star generative adversarial networks. In Proceedings of the 2018 IEEE Spoken Language Technology Workshop (SLT), Athens, Greece, 18–21 December 2018; pp. 266–273.

- Kaneko, T.; Kameoka, H. Cyclegan-vc: Non-parallel voice conversion using cycle-consistent adversarial networks. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Roma, Italy, 3–7 September 2018; pp. 2100–2104.

- Shi, Y.; Bu, H.; Xu, X.; Zhang, S.; Li, M. Aishell-3: A multi-speaker mandarin tts corpus and the baselines. arXiv 2020, arXiv:2010.11567.

- Zhang, L.; Li, R.; Wang, S.; Deng, L.; Liu, J.; Ren, Y.; He, J.; Huang, R.; Zhu, J.; Chen, X.; et al. M4singer: A multi-style, multi-singer and musical score provided mandarin singing corpus. In Advances in Neural Information Processing Systems, Proceedings of the Annual Conference on Neural Information Processing Systems 2022, NeurIPS 2022, New Orleans, LA, USA, 28 November–9 December 2022; Curran Associates Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 6914–6926.

- Park, D.S.; Chan, W.; Zhang, Y.; Chiu, C.C.; Zoph, B.; Cubuk, E.D.; Le, Q.V. Specaugment: A simple data augmentation method for automatic speech recognition. arXiv 2019, arXiv:1904.08779.

- Kaneko, T.; Kameoka, H.; Tanaka, K.; Hojo, N. Maskcyclegan-vc: Learning non-parallel voice conversion with filling in frames. In Proceedings of the ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 5919–5923.

- Yu, S.; Kwak, S.; Jang, H.; Jeong, J.; Huang, J.; Shin, J.; Xie, S. Representation Alignment for Generation: Training Diffusion Transformers Is Easier Than You Think. In Proceedings of the Thirteenth International Conference on Learning Representations, Singapore, 24–28 April 2025.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).