A Versatile Tool for Haptic Feedback Design Towards Enhancing User Experience in Virtual Reality Applications

Abstract

1. Introduction

1.1. Challenges and Opportunities for Haptic Feedback Implementation in VR

1.2. Gamepad as an Input and Haptic Device in VR

2. Materials and Methods

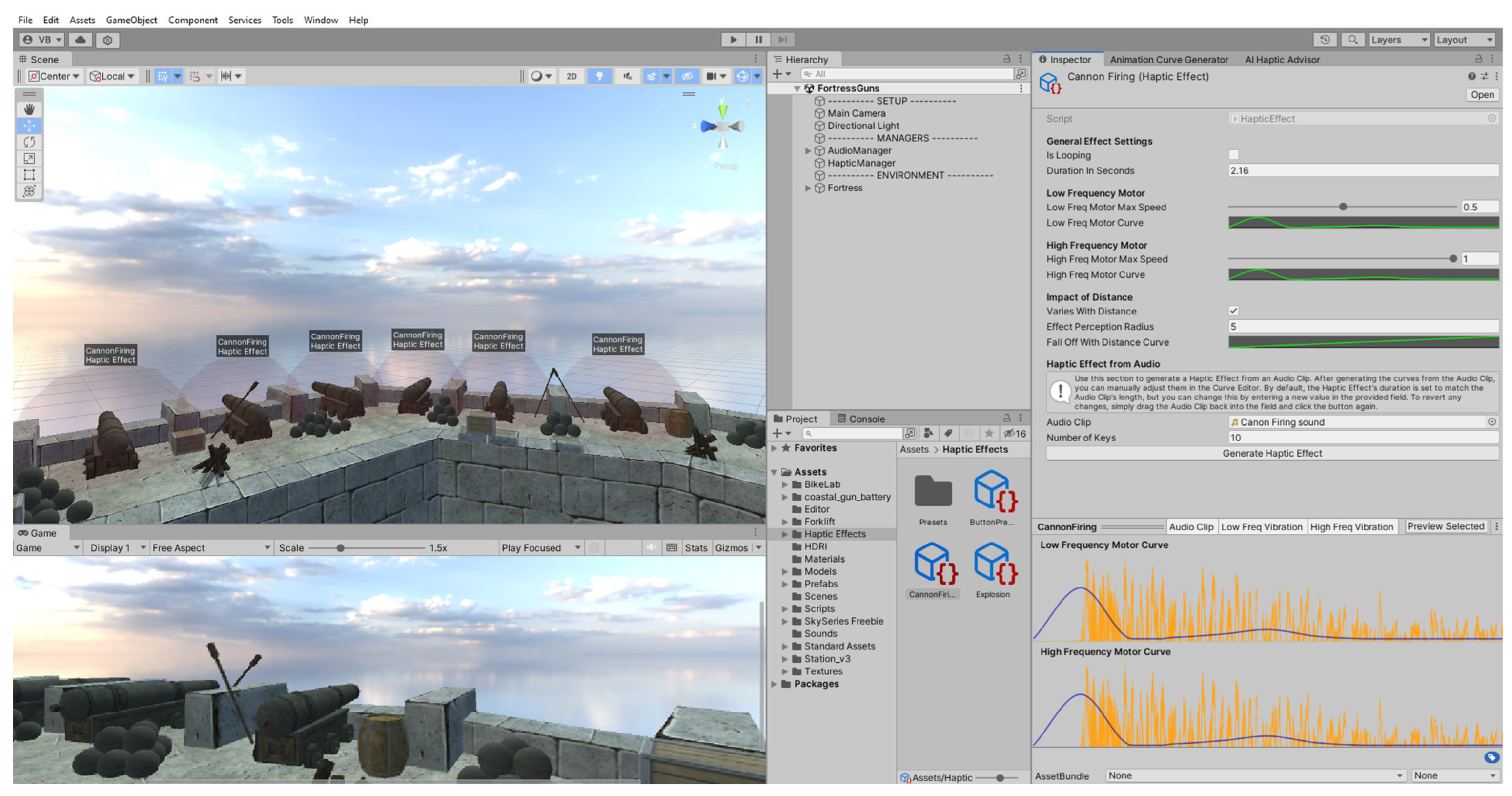

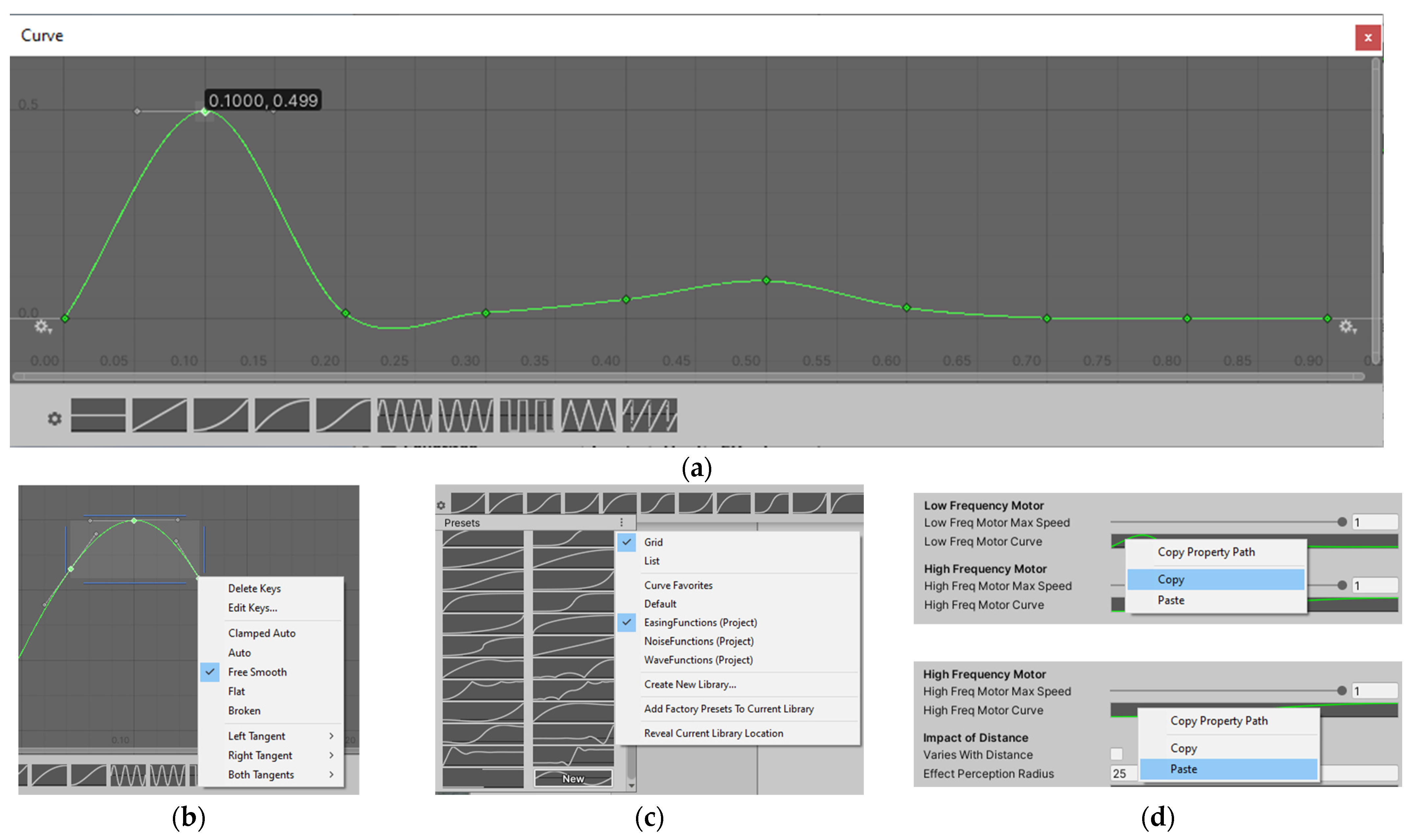

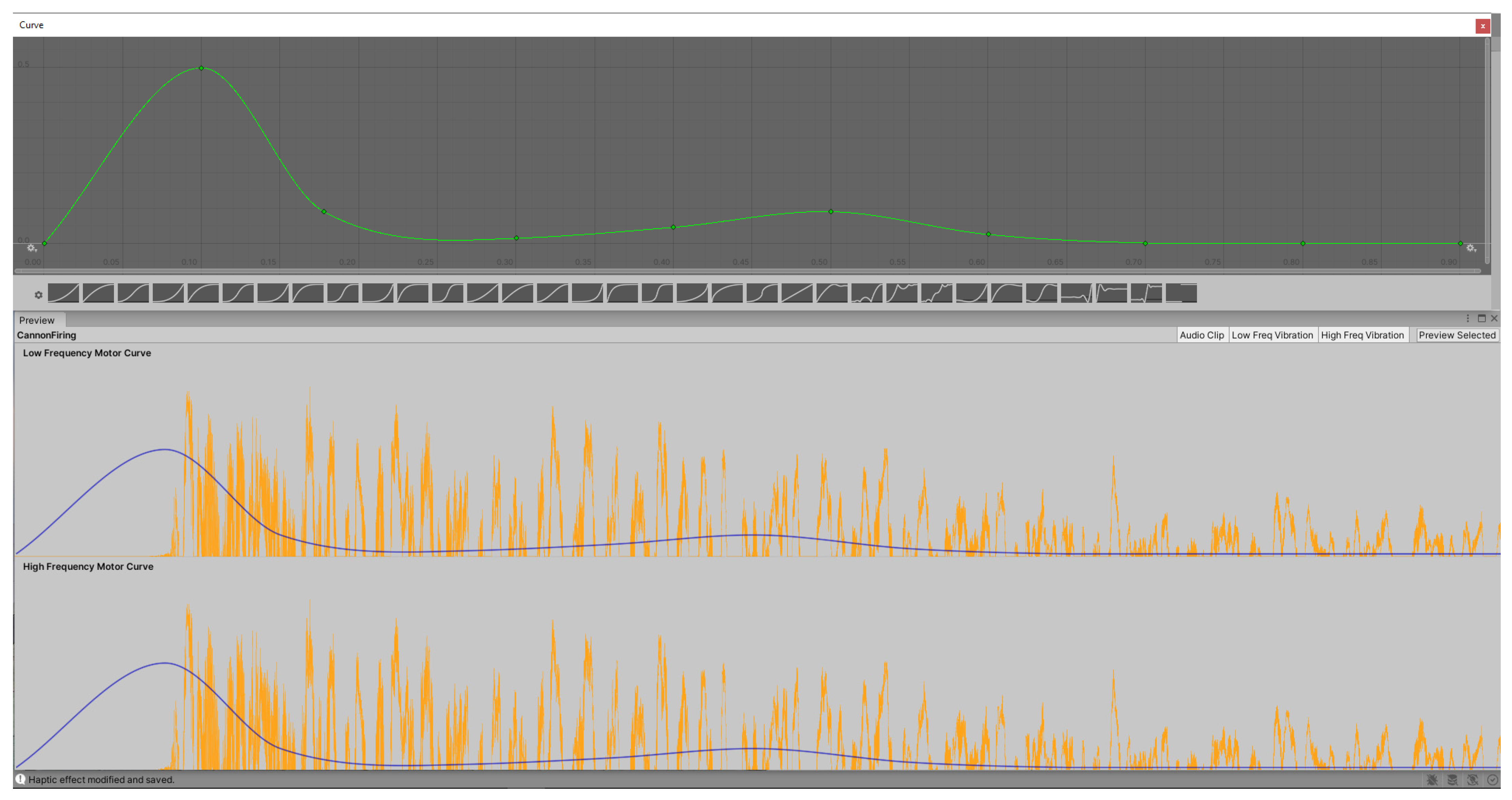

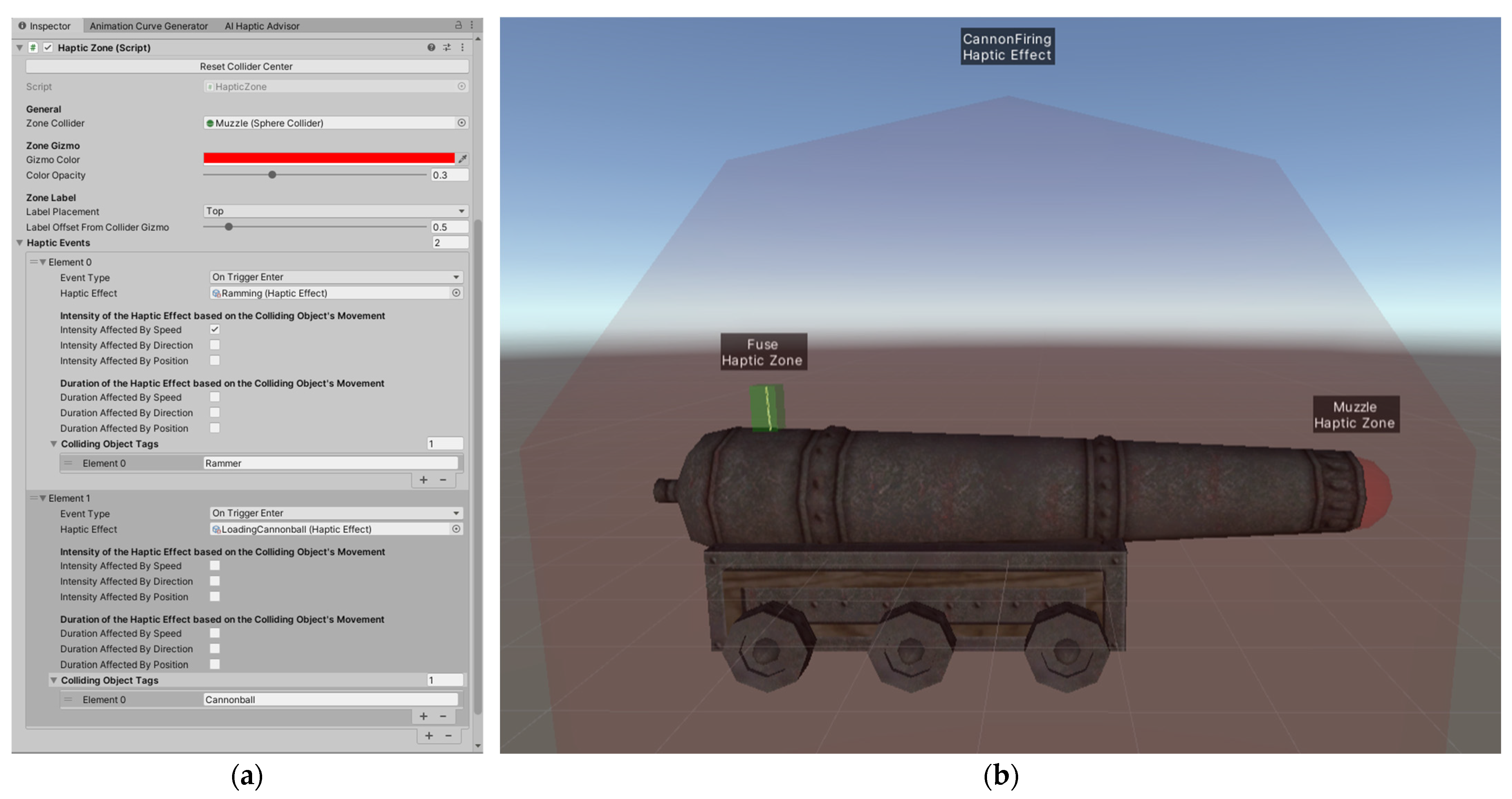

2.1. Key Features of the Haptic Feedback Design Tool

2.2. Participants

2.3. Procedure

2.4. Data Collection

- How familiar are you with haptic feedback in interactive experiences?

- How important do you believe haptic feedback is compared to visual and audio feedback in interactive experiences?

- How confident do you feel about implementing haptic feedback in your projects?

General questions about the use of haptic feedback:

1. How familiar are you with the use of haptic feedback in interactive experiences?

2. How comfortable do you find the haptic feedback?

3. How significant is the difference in user experience and immersion with and without haptic feedback?

4. How much did your experiences in the virtual environment seem natural and consistent with your real-world experiences?

5. How much does haptic feedback enhance your connection to characters, objects, and actions within the virtual world?

6. How effective do you find haptic feedback compared to visual and audio feedback in interactive experiences?

7. How satisfied are you with the use of haptic feedback in existing games, simulations, VR apps, and other interactive experiences you’ve tried?

8. How likely are you to use haptic feedback in future projects?

9. How challenging do you find implementing haptic feedback in interactive experiences, compared to other feedback mechanisms (e.g., visual or audio)?

Questions specific to the proposed haptic feedback design tool:

10. How much time did it take you to learn how to use the haptic feedback design tool effectively?

11. How easy and intuitive did you find the tool to use?

12. How satisfied are you with your overall experience using the haptic feedback design tool, including its features, functionality, interface, and responsiveness?

13. How closely does the haptic feedback perceived on the device align with your expectations and design in the tool?

14. How much did the haptic feedback design tool help you in implementing your ideas during the project?

15. How likely would you use the haptic feedback design tool in future projects?

16. How confident do you feel in implementing haptic feedback in future projects after using this tool?

2.5. Data Analysis

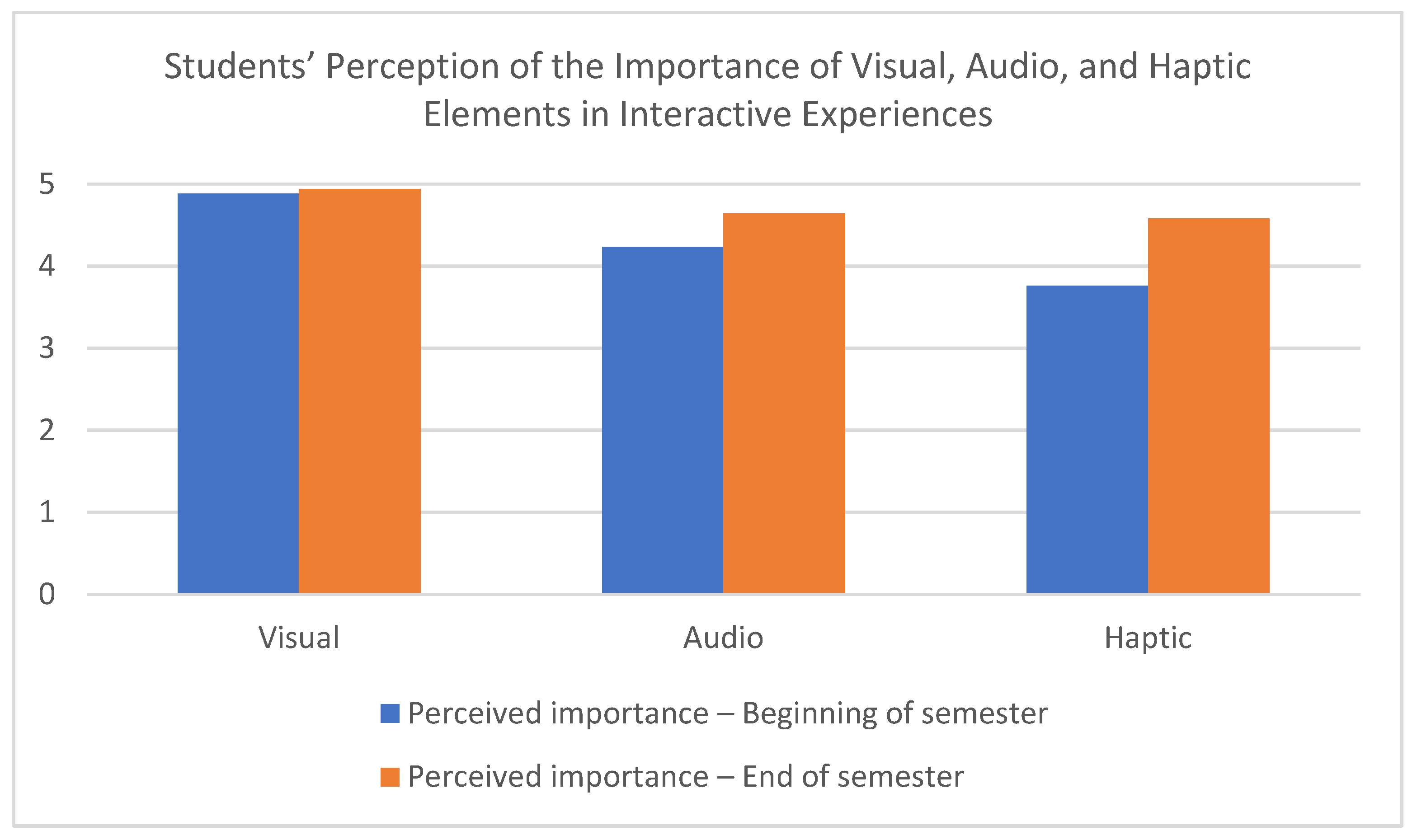

3. Results

3.1. Student Practical Experiences with the Haptic Feedback Design Tool

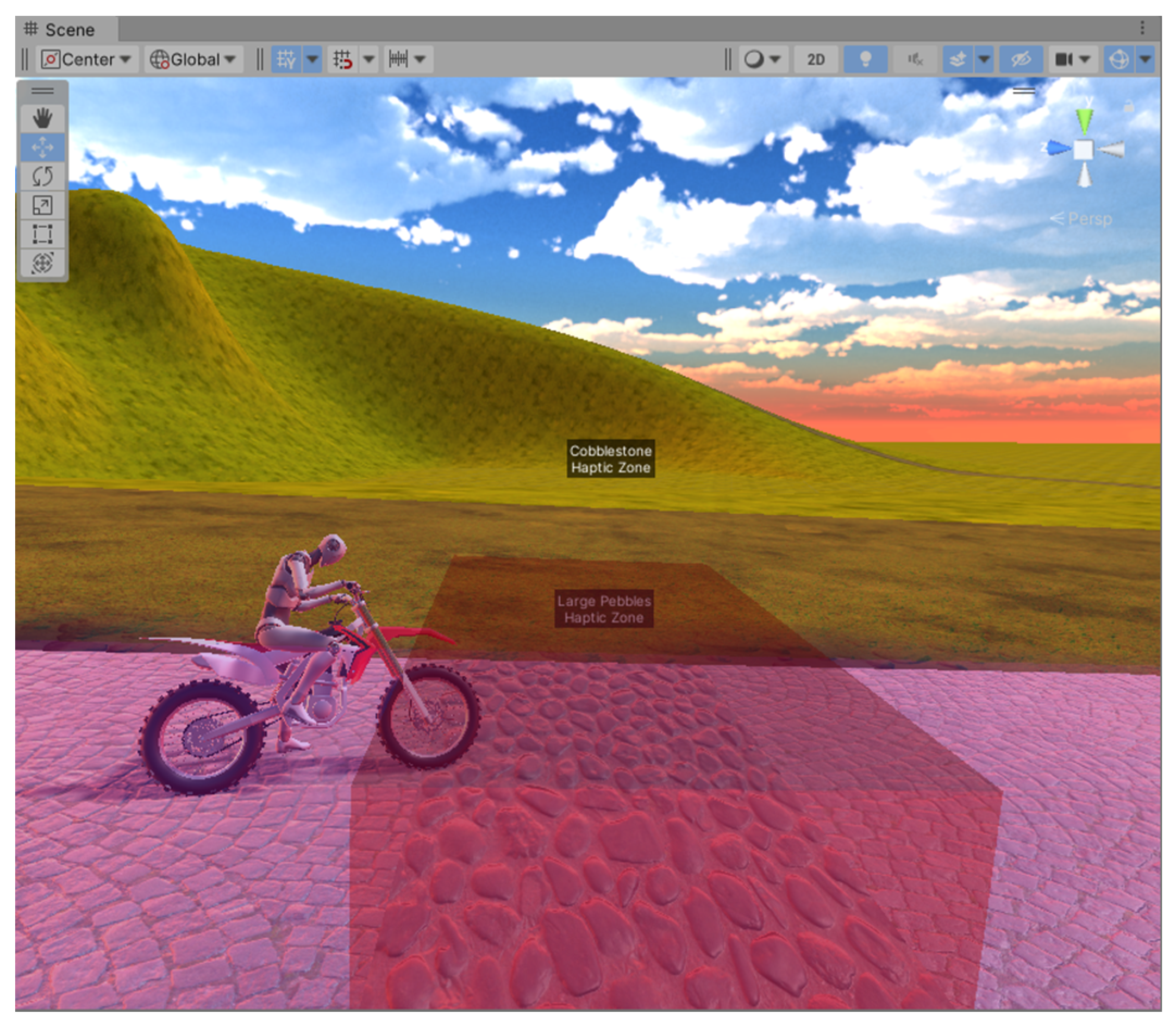

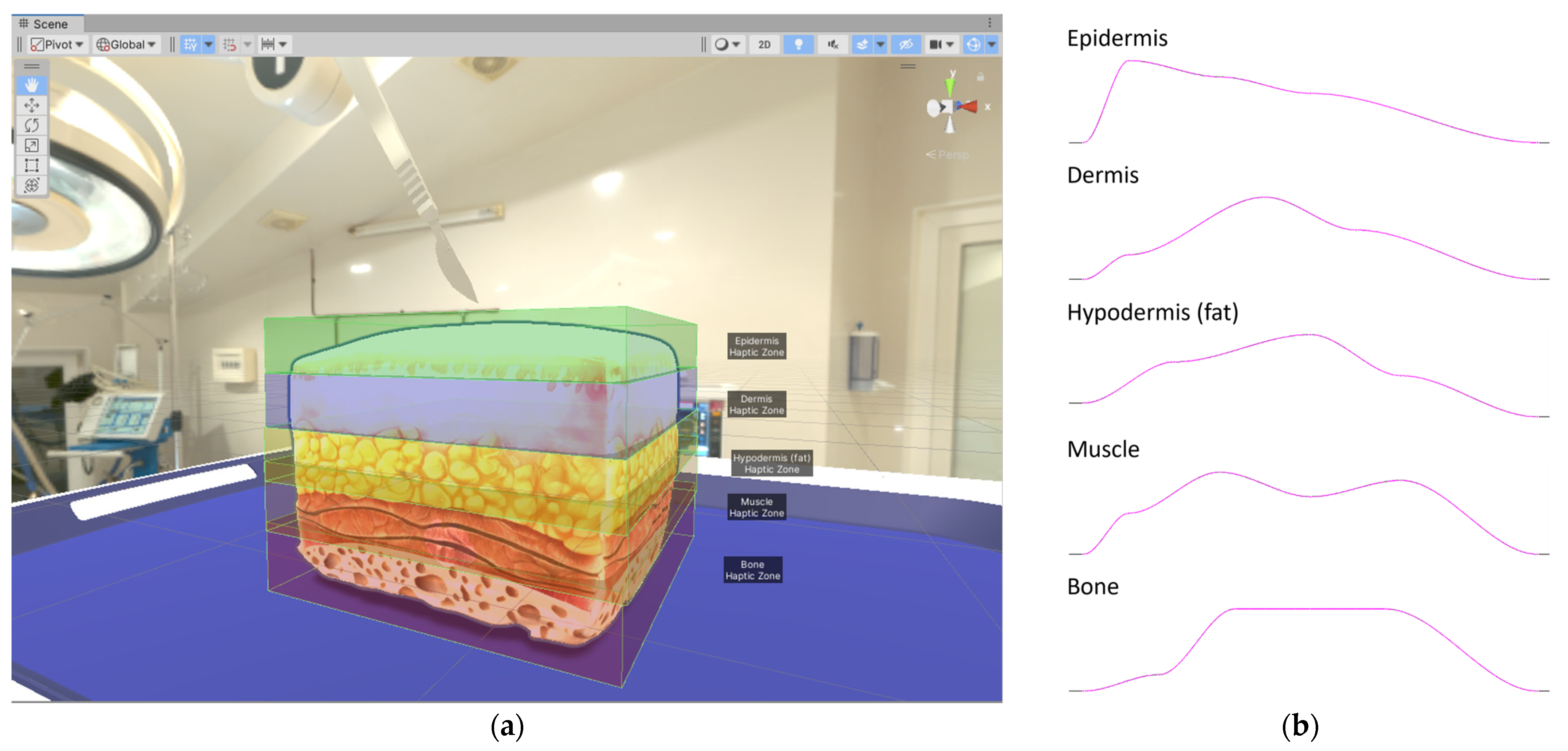

3.2. Outcomes and Examples of Student Projects

3.3. Student Feedback and Reflections

4. Discussion

4.1. Comparison with Existing Tools

4.2. Educational Framework Evaluation

4.3. Use Cases/Scenarios

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Use Case | Scenario | Activity | Learning Outcome |
|---|---|---|---|
| Introduction to Haptic Design | Students are introduced to basic haptic concepts and parameters (intensity, duration, rhythmic patterns, frequency, latency). | Students create simple haptic effects for basic events (e.g., button presses, character movements) and adjust parameters to feel the changes. | Students grasp fundamental haptic principles and how to manipulate them. |
| Design of Advanced Haptic Effects for Mechanics and Events | Students design complex haptic feedback linked to mechanics and events. | Students create haptic patterns (e.g., an accelerating heartbeat when health is low) and environmental feedback (e.g., vibrations for different surfaces), synchronizing them with other feedback systems such as audio and visual effects. | Students understand the relationship between haptic feedback, other feedback systems, and user experience, and how it enhances immersion. |
| Collaborative Projects and Peer Review | Students work in teams to design and integrate haptic feedback into existing application prototypes. | Students collaboratively design haptic effects using the tool, integrate them into the application, and take part in discussions to give and receive peer feedback for refinement. | Students develop teamwork skills and the ability to evaluate, critique, and improve haptic designs. |
| Home Assignments and Remote Learning | Students continue working on haptic designs outside the lab. | Students use the tool on personal devices to explore its features, refine projects, and access online resources and tutorials. | Students learn independently and reinforce concepts learned in the lab. |
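
The "accelerating heartbeat when health is low" activity in the table can be illustrated with a minimal Python sketch. It is not the tool's implementation: the `Pulse` type, the `heartbeat_pattern` function, the 60 to 150 bpm range, and the pulse durations and intensities are all hypothetical values chosen for illustration. It shows only how intensity, duration, and rhythm can be combined into a pattern that speeds up as a normalized health value drops.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pulse:
    start_s: float     # onset time in seconds
    duration_s: float  # how long the motor stays on
    intensity: float   # normalized motor strength in [0, 1]


def heartbeat_pattern(health: float, length_s: float = 3.0,
                      rest_bpm: float = 60.0,
                      max_bpm: float = 150.0) -> list[Pulse]:
    """Build a "lub-dub" vibration pattern that speeds up as health drops.

    health is normalized: 1.0 = full health, 0.0 = no health left.
    The bpm range and pulse shapes are illustrative assumptions.
    """
    health = min(max(health, 0.0), 1.0)            # clamp to [0, 1]
    bpm = max_bpm - (max_bpm - rest_bpm) * health  # linear interpolation
    period = 60.0 / bpm                            # seconds per beat
    pulses: list[Pulse] = []
    beat = 0
    while beat * period < length_s:
        t = beat * period
        pulses.append(Pulse(t, 0.08, 1.0))                  # strong "lub"
        pulses.append(Pulse(t + 0.25 * period, 0.06, 0.5))  # weaker "dub"
        beat += 1
    return pulses


if __name__ == "__main__":
    for h in (1.0, 0.5, 0.0):
        print(f"health={h}: {len(heartbeat_pattern(h))} pulses in 3 s")
```

Playing such a pattern on a gamepad would mean mapping each `Pulse` to the rumble call of the target engine, which is deliberately left out here because it depends on the runtime.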

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bursać, V.; Ivetić, D. A Versatile Tool for Haptic Feedback Design Towards Enhancing User Experience in Virtual Reality Applications. Appl. Sci. 2025, 15, 5419. https://doi.org/10.3390/app15105419
