Abstract

This paper analyzes and evaluates different types of interactions in educational software that uses three-dimensional (3D) interactions and visualization technologies, with a focus on accessibility in such environments. To determine the accessibility parameters based on 3D user interactions, a two-phase pilot study was conducted. In the first phase, the GeometryGame research instrument was tested and objective metrics were collected. In the second phase, subjective metrics were collected in the form of participants’ opinions on certain aspects of accessibility and interactions implemented in the GeometryGame. The most important interaction parameters were evaluated, including the size of the objects, the presence of distractions, the intuitiveness of the user interface, and the size and distance of the interaction elements from the virtual hand. The results provide insight into user preferences and highlight the importance of customizing the user interface to ensure effective and accessible 3D interactions. Based on objective measurements and subjective user feedback, recommendations were developed to improve the accessibility of educational software that supports 3D interactions; to increase usability, reliability, and user comfort; and to enhance future research in this area.

1. Introduction

Advances in digital technology have led to the widespread use of 3D graphical objects on desktop computers and mobile devices, allowing users to gain a deeper understanding of specific content and topics [1]. One of the most important applications of visualization technologies is in education, where they allow students to better acquire complex concepts from different domains such as mathematics, physics, biology, and history through exploration and interaction with materials such as 3D models, simulations, and interactive displays in an innovative way [2].

Introducing these technologies into the educational process encourages students’ active participation, allows them to visualize abstract concepts, and provides them with the opportunity to experiment in a safe environment, which greatly contributes to their understanding and engagement in learning. Students with learning difficulties or learning disabilities face challenges in the educational process, such as a limited ability to sustain attention and a corresponding inability to focus on what is required in the educational process for an extended period. Learning visualization technologies can engage their interest and direct their attention over an extended period to ensure effective learning. The use of interactive 3D models, simulations, and immersive technologies can enable them to actively participate and better understand abstract concepts through hands-on and visually engaging approaches, contributing to their cognitive development and motivation to learn [3].

Extended reality (XR) is often used as an umbrella term for all current and future immersive technologies that combine the real and virtual worlds [4]. In line with this view, the ITU describes XR as an environment composed of real, virtual, or mixed elements, where the “X” stands for any emerging type of reality, such as augmented, assisted, mixed, virtual, or diminished reality [5].

The two currently most influential XR technologies are virtual reality (VR) and augmented reality (AR). VR is a technology that completely immerses the user in a digital environment and prevents them from seeing the physical world around them [4]. In contrast, AR augments the real world by superimposing virtual elements on it, allowing the user to perceive both at the same time [6]. Depending on the degree of perceived presence, AR can be further subdivided into assisted reality and mixed reality (MR). In assisted reality, virtual content appears clearly artificial and is simply overlaid on the user’s view, whereas in MR, digital elements are seamlessly integrated into the physical space and allow the user to interact with them as if they were part of the real world [4]. MR enables more complex interactions by allowing virtual objects to respond to real-world stimuli and vice versa [7]. The W3C Note on XR Accessibility User Requirements outlines various user accessibility requirements for XR and the associated architecture [7,8].

XR technologies have numerous benefits for the educational process, especially in terms of increasing student engagement and learning efficiency. They enable interactive teaching methods that give students the opportunity to actively participate and thus lead to greater motivation. Empirical studies suggest that immersive experiences contribute to better cognitive processing of information and longer retention [9,10,11,12]. The needs and requirements of users often depend on the context of use [8]. To support this, such technologies could be adapted to different educational contexts and user profiles and thus contribute to an inclusive educational process. In addition to the benefits of XR technologies in education, there are also some limitations. For example, AR displays can be affected by occlusion, i.e., objects such as hands in the field of view that negatively impact the quality of interaction. Furthermore, current AR applications face challenges such as limited display of information due to relatively small smartphone screens that require constant holding and aligning of the device, which limits user interaction and content sharing [13]. In addition, many existing applications, such as holographic pyramids, lack interactivity, which reduces their effectiveness in enhancing the learning experience [14].

One of the most important features of the learning experience is the interaction with the learning software system [15]. Traditional user interfaces (UIs) were designed for the use of the mouse, keyboard, and touchscreen as input devices. Advances in computer technology have led to the development of new interfaces in areas where traditional devices are often inadequate. Visualization technologies in such environments require more intuitive forms of interaction and therefore call for new UIs that support such interactions.

Another important aspect of educational software systems is accessibility. Digital accessibility refers to the extent to which a computer program, website, or device is acceptable and suitable for use by people of all groups, including people with disabilities and older people, leading to a higher degree of their inclusion in society. Despite the rapid development of visualization technology, research into the accessibility and integration of these technologies still lags behind the needs of the market, and solutions need to be found that can overcome the barriers that these technologies present to users.

The goals of this research were to investigate and define the basic accessibility parameters by evaluating 3D user interactions in educational software and to formulate recommendations for improving the accessibility of existing and future educational software solutions. The accessibility guidelines defined in this study are specifically tailored to the needs of 3D interaction. They enable the creation of UIs that not only fulfill the basic accessibility requirements but also improve the user experience. Based on previous research findings and the theoretical framework, three hypotheses were formulated and then tested against the results of the study.

The sections of this paper are organized as follows. After the introduction, the second section describes previous research in the field of 3D user interaction and accessibility of educational software systems with a focus on natural interactions. The third section describes the research conducted, focusing on the research instrument used in the form of an educational application and the metrics used to define the accessibility parameters. The research hypotheses are also formulated in the third section. The quantitative and qualitative research results are described in detail in the fourth section, while the fifth section summarizes the findings, highlights the limitations of the research conducted, presents the testing of the previously formulated hypotheses, and gives recommendations for adapting the representation of 3D objects and 3D interactions in educational software systems. Conclusions and plans for future research are presented in the sixth section.

2. Related Work

In this section, we provide an overview of user interactions with digital systems, including natural interactions, and summarize the research findings on defining accessibility parameters with a focus on environments with 3D interactions.

2.1. User Interactions with Digital Learning Systems

A digital learning system is basically a collection of digital tools and resources, such as texts, videos, quizzes, and simulations, that work together to enhance learning. These systems include hardware, software, and digital materials that allow students to access educational content, monitor their progress, and interact with teachers and other students [16]. The ability to interact with such a system is a fundamental requirement for the learning process, regardless of whether it takes place in a traditional or online context [17].

Usability factors have an important influence on user attitudes toward e-learning applications and indirectly on the results achieved. Previous studies have shown that the user’s satisfaction when using an application is influenced not only by the quality of the information but above all by the user’s attitude toward the application and the interface [18]. Users initially explore the various functions of the system to familiarize themselves with its capabilities. Over time, they focus on a stable set of functionalities that best suit their tasks. The increase in usage functionality has a positive effect on the perception of current performance, but also on objective performance measurements in later phases of use [19]. If the task is clearly defined and users are using a familiar tool, previous experience can help them to solve tasks with new technologies. In practice, however, it has been shown that users’ habits and previous experiences can reduce the effectiveness of use if the task is open and the new technology works differently from what the user is used to [20].

User interaction latency in an XR system refers to the time delay between the user’s physical action and the corresponding system response [21]. This latency is an important factor that affects the user experience, especially in terms of perceived realism, immersion, and task performance [13]. High latency can disrupt the sense of presence, cause motion sickness, and reduce the accuracy of the task. This is particularly problematic for applications that require fine motor control or fast reactions. Experiments in non-VR environments suggest that a latency of less than 100 ms has no impact on the user experience. Other research suggests that latency in VR environments should be less than 50 ms to feel responsive, while the recommended latency should be less than 20 ms [21].
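As a minimal illustration of how the thresholds cited above could be applied when profiling an application, the following sketch maps a measured latency to a coarse rating. The function name and the rating labels are hypothetical and are not taken from [21]; only the 100 ms, 50 ms, and 20 ms limits come from the text.

```python
def classify_latency(latency_ms: float, is_vr: bool) -> str:
    """Map a measured action-to-response latency to a coarse rating.

    Thresholds follow the values cited in the text; the labels are
    illustrative only.
    """
    if latency_ms < 0:
        raise ValueError("latency must be non-negative")
    if not is_vr:
        # Non-VR: latency below 100 ms is reported to have no impact.
        return "no noticeable impact" if latency_ms < 100 else "may degrade experience"
    if latency_ms < 20:
        # VR: recommended upper bound.
        return "recommended"
    if latency_ms < 50:
        # VR: still expected to feel responsive.
        return "responsive"
    return "may degrade experience"


if __name__ == "__main__":
    for ms, vr in [(15, True), (30, True), (80, True), (80, False)]:
        print(ms, "ms", "VR" if vr else "non-VR", "->", classify_latency(ms, vr))
```

Note that the same 80 ms measurement is rated differently in the two settings, which reflects the stricter limits reported for VR.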

Estimating the size of objects that users interact with is a particular challenge in XR environments. The accuracy of such estimates can vary considerably depending on the research context, the technological setup, and the specific characteristics of the virtual environment. A consistent finding of most studies dealing with the estimation of object size in VR environments is a tendency to underestimate, which often depends on the actual size of the objects being evaluated. One study reports that the size of virtual objects is underestimated by about 5%, regardless of their position in space [22]. Other research shows that when using VR devices with binocular disparity, such as head-mounted displays (HMDs), users tend to perceive virtual objects as 7.7% to 11.1% smaller than their actual size, regardless of the shape of the object [23]. In AR environments with handheld controllers, the detection threshold for size changes ranged from 3.10% to 5.18% [24]. In contrast, other studies reported even finer perception thresholds—less than 1.13% of the height of the target object and less than 2% of the width. These values are usually considered as the point of subjective equality (PSE), which indicates the deviation at which users have a 50% probability of correctly estimating the size of a virtual object [25].
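To make the reported underestimation figures concrete, the following sketch computes how large a 10 cm virtual object would appear under each reported bias, and the scale factor that would offset it. It assumes, purely for illustration, that the bias acts as a constant multiplicative factor; the cited studies do not claim that such a compensation is perceptually valid, and the function names are hypothetical.

```python
def perceived_size(actual: float, underestimation: float) -> float:
    """Size as perceived when it is underestimated by the given fraction."""
    return actual * (1.0 - underestimation)


def compensating_scale(underestimation: float) -> float:
    """Scale factor that would make the perceived size match the intended size,
    under the simplifying assumption of a constant multiplicative bias."""
    return 1.0 / (1.0 - underestimation)


if __name__ == "__main__":
    actual_cm = 10.0
    reported = [("about 5% [22]", 0.05), ("7.7% [23]", 0.077), ("11.1% [23]", 0.111)]
    for label, u in reported:
        print(
            f"{label}: perceived {perceived_size(actual_cm, u):.2f} cm, "
            f"compensating scale {compensating_scale(u):.3f}"
        )
```

For the largest reported bias (11.1%), a 10 cm object would be perceived as roughly 8.9 cm under this assumption.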

User interaction encompasses the actions and reactions of a user in a digital environment. It describes the user’s activity when using a computer or applications. Most people are used to interacting with computers through standard 2D interfaces using a mouse and keyboard, with the screen being the output device. However, with the advancement of technology, especially in virtual environments, conventional input is not always practical [26]. This raises the question of what the most natural way to interact with these virtual systems is and which devices are best suited for this.

In contrast, 3D interaction is a type of interaction between humans and computers in which users can move and interact freely in a 3D space. The interaction itself involves information processing by both humans and machines, where the physical position of elements within the environment is critical to achieving the desired results. The space in such a system can be defined as a real physical environment, a virtual environment created by computer simulation, or a hybrid combination of both environments. If the real physical space is used for data input, the user interacts with the machine via an input device that recognizes the user’s gestures. If, on the other hand, it is used for data output, the simulated virtual 3D scene is projected into the real world using a suitable output device (hologram, VR glasses, etc.). It is important to note that interactive systems that display 3D graphics do not necessarily require 3D interaction. For example, if a user views a building model on their desktop computer by selecting different views from a classic menu, this is not a form of 3D interaction. However, if the user clicks on a target object within the same application to navigate to it, the 2D input is converted directly into a virtual 3D location, and this type of interaction is considered 3D interaction [27].
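The conversion of a 2D click into a virtual 3D location can be sketched with a simple ray cast. The example below is not taken from [27]; it assumes a hypothetical pinhole camera that looks along the negative Z axis with Y pointing up, pixel coordinates with the origin in the top-left corner, and a ground plane at y = 0 as the only clickable surface.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def click_to_ray(px: float, py: float, width: int, height: int,
                 fov_y_deg: float) -> Vec3:
    """Turn a pixel position into a unit view-ray direction in camera space."""
    aspect = width / height
    tan_half = math.tan(math.radians(fov_y_deg) / 2.0)
    ndc_x = 2.0 * px / width - 1.0    # -1 (left) .. +1 (right)
    ndc_y = 1.0 - 2.0 * py / height   # +1 (top)  .. -1 (bottom)
    d = (ndc_x * aspect * tan_half, ndc_y * tan_half, -1.0)
    n = math.sqrt(sum(c * c for c in d))
    return (d[0] / n, d[1] / n, d[2] / n)


def ray_ground_hit(eye: Vec3, direction: Vec3) -> Optional[Vec3]:
    """Intersect the ray with the plane y = 0; None if there is no hit in front."""
    if direction[1] >= 0.0:
        return None  # ray is parallel to the ground or points upward
    t = -eye[1] / direction[1]
    if t <= 0.0:
        return None  # camera is on or below the plane
    return (eye[0] + t * direction[0],
            eye[1] + t * direction[1],
            eye[2] + t * direction[2])


if __name__ == "__main__":
    eye = (0.0, 2.0, 0.0)  # camera 2 units above the ground
    ray = click_to_ray(400, 600, 800, 600, fov_y_deg=90.0)  # bottom-center click
    print(ray_ground_hit(eye, ray))
```

A click at the exact center of the viewport yields a ray parallel to the ground in this setup, so no 3D location is returned; a real application would typically test the ray against scene geometry rather than a single plane.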

Most 3D interactions are usually more complex than 2D interactions because they require new interface components, which are usually realized with special devices. These types of devices offer many opportunities for designing new user experience-based interactions [28]. The main categories of these devices are standard input devices, tracking, control, navigation and gesture interfaces, 3D mice, and brain–computer interfaces.

Two different methods of interaction in the XR environment are direct and indirect. When considering their impact on user experience, engagement, and learning outcomes, both offer different benefits that can complement each other in a learning context. Direct interaction in XR environments mimics real-life activities, increasing physical involvement and potentially boosting intrinsic motivation. Indirect interactions with classic UI elements provide greater precision through an interface, although they usually require more cognitive effort and affect usability and motivation in different ways. A combination of both interaction methods can create a balanced and effective learning environment, as this approach supports hands-on learning in the initial phase and facilitates precision tasks in more advanced phases [29].

Various approaches have been developed to overcome the limitations of mapping 2D inputs to 3D spaces. One of the earliest methods is the triad cursor, which allows the manipulation of objects using a mouse relative to their projected axes [30], while another method uses two cursors to perform translation, rotation, and scaling simultaneously [31]. Alternatives include a handle box [32] and virtual handles [33] for applying transformations to virtual objects, while Shoemake presented a technique that allows object rotation by drawing arcs on a virtual sphere with the mouse [34]. Although these techniques are more than 30 years old, they are still used in the form of widgets in modern tools such as Unity3D. Other tools, such as AutoCAD, 3D Studio Max, and Blender, use orthogonal views instead of widgets for more precise manipulation of 3D objects [35].
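The virtual-sphere rotation technique can be illustrated with a short sketch in the spirit of Shoemake's arcball. This is a simplified reconstruction, not code from [34]: it assumes that mouse positions have already been normalized to the range [-1, 1] relative to the sphere's center and radius, and all function names are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z)


def to_sphere(x: float, y: float) -> Vec3:
    """Project a normalized screen point onto the unit sphere."""
    r2 = x * x + y * y
    if r2 <= 1.0:
        return (x, y, math.sqrt(1.0 - r2))
    r = math.sqrt(r2)  # outside the sphere: snap to its silhouette
    return (x / r, y / r, 0.0)


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def arcball_quaternion(start: Tuple[float, float],
                       end: Tuple[float, float]) -> Quat:
    """Rotation for a drag from start to end: q = (p0 . p1, p0 x p1).

    The resulting unit quaternion rotates by twice the arc angle between
    the two sphere points.
    """
    p0, p1 = to_sphere(*start), to_sphere(*end)
    w = sum(a * b for a, b in zip(p0, p1))
    x, y, z = cross(p0, p1)
    return (w, x, y, z)


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q."""
    w, u = q[0], (q[1], q[2], q[3])
    uv = cross(u, v)
    uuv = cross(u, uv)
    return (v[0] + 2.0 * (w * uv[0] + uuv[0]),
            v[1] + 2.0 * (w * uv[1] + uuv[1]),
            v[2] + 2.0 * (w * uv[2] + uuv[2]))


if __name__ == "__main__":
    # Drag from the sphere's center to its right edge.
    q = arcball_quaternion((0.0, 0.0), (1.0, 0.0))
    print(q, rotate(q, (0.0, 0.0, 1.0)))
```

Dragging from the center of the sphere to its right edge spans a 90° arc and therefore produces a 180° rotation about the vertical axis, which is why a full turn of the object requires only a short mouse movement.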

Numerous studies have investigated different approaches for the selection and manipulation of objects in virtual 3D spaces. One of the basic techniques is the use of a “virtual hand”, which enables direct object manipulation by mirroring the user’s real hand movements onto a virtual counterpart. This type of interaction is very intuitive for humans [35]. The size of the object and the goals of the task influence the grasping kinematics in 3D interactions, so both should be considered when designing a VR assessment, as they affect participants’ performance [36]. The user’s perception of object size in XR environments is important for natural and intuitive interaction, as it influences the feeling of presence and navigation, and, indirectly, learning efficiency. Misperception of object size can lead to reduced efficiency and frustration for the user when interacting with virtual content. The point of subjective equality (PSE) is the point at which a user perceives two objects to be the same size, even if they are physically different sizes. In XR environments, the PSE is often used to quantify perceptual errors in size estimation, which helps to optimize the display of objects so that the user experience is as realistic and intuitive as possible [25].
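As an illustration of how a PSE can be read from response data, the sketch below interpolates the comparison size at which a participant judges the comparison object as larger than the reference in 50% of trials. The data are invented for the example, and linear interpolation is a simplification; studies such as [25] may fit a full psychometric function instead.

```python
from typing import Sequence


def estimate_pse(sizes: Sequence[float], p_larger: Sequence[float]) -> float:
    """Linearly interpolate the size at which 'comparison looks larger'
    responses reach 50%.

    sizes    -- comparison sizes relative to the reference (1.0 = equal)
    p_larger -- proportion of 'comparison looks larger' responses per size
    """
    if len(sizes) != len(p_larger) or len(sizes) < 2:
        raise ValueError("need at least two matching size/response pairs")
    pairs = sorted(zip(sizes, p_larger))
    for (s0, p0), (s1, p1) in zip(pairs, pairs[1:]):
        if p0 <= 0.5 <= p1 and p1 > p0:
            return s0 + (0.5 - p0) * (s1 - s0) / (p1 - p0)
    raise ValueError("responses never cross the 50% level")


if __name__ == "__main__":
    # Hypothetical data: a comparison object shown at 90% to 110% of the reference.
    sizes = [0.90, 0.95, 1.00, 1.05, 1.10]
    p_larger = [0.05, 0.20, 0.40, 0.70, 0.95]
    print(f"PSE = {estimate_pse(sizes, p_larger):.4f}")
```

With these invented responses, the PSE lies slightly above 1.0, meaning that the comparison object would have to be rendered somewhat larger than the reference to be perceived as equal in size.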

The distractibility of objects in 3D interactions can affect task performance and decision making. Distraction can slow down behavior and increase costly body movements. Most importantly, distraction increases the cognitive load required for encoding, slows visual search processes, and decreases reliance on working memory. While the impact of visual distraction during natural interactions may appear localized, it can still trigger a range of downstream effects [37].

There are various devices, such as wearable sensors, touch screens, and computer vision-based interaction devices, that allow users to interact realistically in 3D [38,39]. Among the sensors that successfully recognize natural hand gestures, the Leap Motion controller [40] stands out. Leap Motion is a small peripheral device that is primarily used to recognize hand gestures and finger positions of the user. The device uses three infrared LEDs and two CCD sensors. According to the manufacturer of Leap Motion, the accuracy of the sensor in detecting the position of the fingertip is 0.01 mm. The latency of the Leap Motion Controller is influenced by various factors, including hardware, software, and display systems. As discussed in Leap Motion’s latency analysis, the latency of the overall system can be reduced to less than 30 milliseconds with specific adjustments and under optimal conditions [41]. In practice, however, latency can vary, and the reported average motion time error is around 40 milliseconds [42]. Due to its high recognition accuracy and fast processing speed, Leap Motion is often used by researchers for gesture-based interactions [27,43].

One of the challenges in implementing hand gesture interaction is the fact that there is no standard, model, or scientifically proven prototype for how the user can interact with a 3D object [26]. Although webcams are one of the most important devices for exploring possible interactions with 3D objects, no solution has yet been found for the optimal estimation of hand positions considering environmental and circuit constraints [44]. In [45], a system for hand tracking in VR and AR using a web camera was presented. In contrast to the cameras built into VR devices, which restrict the positioning of the hand and are uncomfortable for some users, this system allows greater freedom of hand movement and a more natural position. The system achieves a high gesture prediction accuracy of 99% and enables simultaneous interaction of multiple users, which significantly improves collaboration in 3D VR/AR environments. The use of markers as one of the possible solutions for realizing interactions via webcams is presented in [46].

2.2. Natural Interactions

Natural interaction, where humans interact with machines in the same way as in human communication—through movements, gestures, or speech—is one of the possible solutions for intuitive interaction with 3D interfaces [14].

A natural user interface (NUI) allows users to interact with digital systems through intuitive, human-like actions, such as speech, hand gestures, and body movements that are similar to the way humans interact with the physical world. This approach moves away from traditional input devices such as keyboard, mouse, or touchpad [47]. Natural interactions are not necessarily 3D interactions, even though they are often used in 3D interfaces. The term NUI is often associated with natural interactions. NUIs have the advantage that the user can draw on a wider range of basic skills when interacting than in conventional GUIs (i.e., UIs that mainly rely on the mouse and keyboard for interaction) [48].

Much of the concept of natural interactions and interfaces is based on Microsoft’s definitions and guidelines. Bill Buxton, a senior researcher at Microsoft, points out that NUI “exploits skills that we have acquired through a lifetime of living in the world, which minimizes the cognitive load and therefore minimizes the distraction” [49]. He also emphasizes that NUI should always be developed with the context of use in mind [49].

Gestures are seen as a technique that can provide more natural and creative ways of interacting with different software solutions. An important reason for this is the fact that hands are the primary choice for gestures compared to other body parts and serve as a natural means of communication between people [50]. In general, it is assumed that a UI becomes natural through the use of gestures as a means of interaction. However, when looking at multi-touch gestures, e.g., on the Apple iPad [51], which are generally considered an example of natural interaction, it becomes clear that the reality is somewhat more complex. Some gestures on the iPad are natural and intuitive to the user, such as swiping left or right with one finger on the screen, which turns pages or moves content from one side of the screen to the other, simulating the analog world. However, some gestures need to be learned, such as swiping left or right with four fingers to switch between applications. Such gestures are not intuitive for users, as it is not obvious how the interaction should be performed, and they require an understanding of the relationship between the gesture and the action being performed [49].

By interaction types, NUIs can be divided into four groups: multi-touch (the use of hand gestures on the touch screen), voice (the use of speech), gaze (the use of visual interaction), and gestures (the use of body movements) [52]. Another new group of interfaces based on electrical biosignal sensing technologies can be added to this classification [53].

The emergence of multi-touch displays represents a promising form of natural interaction that offers a wide range of degrees of freedom beyond the expressive capabilities of a standard mouse. Unlike traditional 2D interaction models, touch-based NUIs go beyond flat surfaces by incorporating depth, enhancing immersion, and simulating the behavior of real 3D objects. This allows users to interact and navigate in fully spatial, multi-dimensional environments [54]. Touchscreen interaction is considered the most widely used form of natural interaction, as it is available on ubiquitous devices such as smartphones and tablets.

Voice user interfaces (VUIs) have experienced significant growth due to their ability to enable natural, hands-free interactions. They are usually supported by machine learning models for automatic speech recognition. However, they still face major challenges when it comes to accommodating the enormous diversity and complexity of human languages [55]. A major problem is the limited support for numerous global languages and dialects, many of which are underrepresented in existing language datasets. This underrepresentation can lead to misinterpretation and a lack of inclusivity in VUI applications [56]. In addition, nuances such as context, ambiguity, intonation, and cultural differences pose difficulties for current natural language processing systems. Addressing these issues is critical to the broader adoption and effectiveness of VUIs, especially in multilingual and multicultural environments [57]. Recent advances in VUI technology include emotion recognition, where the system can recognize and respond to the user’s emotional tone of voice, and multilingual support, which enables interactions in multiple languages [58]. In two-way voice communication, natural language processing enables the system to understand the meaning of the spoken words, while the speech synthesis system generates responses in the form of human-like speech. Modern VUIs often integrate systems to handle complex conversations with multi-round interactions [59].

Gaze-tracking UIs use eye movements, in particular the direction of the user’s gaze, to control or manipulate digital environments. They interpret eye movements and translate them into commands or actions on a screen or in a virtual environment [60]. As hands-free interfaces, they are particularly beneficial for assistive technologies, XR, and public installations where traditional input methods can be impractical [52]. To ensure that the device works as accurately as possible, it must be individually calibrated for each user to calculate the gaze vector [61]. The two main types of gaze-based interaction are gaze pointing and gaze gestures. Gaze pointing uses the user’s gaze as a pointer and allows the user to select or interact with objects on a screen without having to physically touch the device. The UI responds to the location on the screen or in space that the user is looking at, often with a cursor or focus indicator [62]. Gaze gestures recognize and interpret complex eye movement patterns such as blinking or switching gaze between targets as commands, enabling a wider range of gestures and interactions [63,64]. Gaze-aware systems can adapt the content or interface depending on where the user is looking [65]. Advances in this area aim to improve accuracy and overcome interaction errors such as the “Midas touch”, which occurs when the system’s response to a user action does not match the outcome the user expected, for example, when merely looking at an item triggers an unintended command [68]. Recent multimodal approaches that combine gaze with speech or gestures aim to mitigate these issues and improve the user experience [66], while others combine gaze with technologies that measure brain activity to create a brain–computer interface [67]. New findings in the field of deep learning have also influenced gaze-tracking technologies, so that more and more authors are working on the application of machine and deep learning in this field.
Numerous authors have created and used their own annotated datasets for deep learning, some of which are publicly available, such as the Open EDS Dataset [69] or Gaze-in-Wild [70]. Among the approaches used, convolutional neural networks (CNNs) showed the best results in eye tracking and segmentation [71,72].

Gesture-based NUIs allow users to interact with devices by using certain gestures, such as hand or body movements or facial expressions, as input commands. These technologies rely on users’ body movements to interact with virtual objects and perform tasks, and they rely heavily on the ergonomics associated with various interactions [73]. One form is hand gesture recognition, where systems use cameras and different sensors (e.g., Leap Motion or Kinect) to capture hand movements and interpret them as commands for tasks such as selecting objects or navigating menus [44,74]. Wearables such as data gloves, video cameras, and sensors such as infrared or radar sensors [75] are also used for gesture recognition. Wearables provide more accurate and reliable results and can give haptic feedback, which gives them an advantage over visual systems. In addition, there is a third category for systems that combine both approaches. Nowadays, sensors and cameras are preferred for hand tracking as they offer greater freedom of movement [76]. Another form of gesture-based NUIs is whole-body gesture recognition, which captures body movements for more immersive interactions, as often seen in VR or AR applications [77,78]. Similar to hand gesture recognition, cameras and depth sensors are often used to recognize full-body gestures. Sensors are also used in the form of suits that track body movements via various sensors such as accelerometers, gyroscopes, and magnetometers that detect limb movements, posture, and orientation [44]. On a larger scale, ultrasonic or radar sensors are used to recognize the position and movement of the body in space [75], as well as computer vision algorithms that enable the recognition of whole-body gestures through video analysis [79,80]. Facial gesture recognition focuses on recognizing facial expressions as input so that devices can respond to emotions or gaze, which is common in accessibility technologies [81]. 
Finally, multimodal gestures combine different types of inputs, such as hand, voice, and gaze inputs, to create a more intuitive and efficient interaction experience [82].

Technologies for recording electrical biosignals, such as brain–computer interfaces (BCIs), which are based on electroencephalography (EEG) and electromyography (EMG), are often referred to under the collective term ExG [53]. With BCIs, it is possible to send commands to a computer using only the power of thought. A standard non-invasive BCI device that does not require brain implants is usually available in the form of an EEG headset or a VR headset with EEG sensors. The design of the device has evolved so that today smaller devices are produced in the form of EEG headbands that measure brain activity and other data such as blood flow in the brain. However, the mapping of the collected signals to the user’s gestures is complex and relies heavily on a learned model [83]. EMG signals are used for various purposes, independently or in combination with other sensors, for example, for interpreting the user’s intentions when interacting with virtual objects [84], for device management [85,86], for analyzing muscle mass during rehabilitation [87], or for speech recognition [88,89]. The application of electrical interfaces to capture biosignals faces a number of problems, such as the detection of biological signals and the difficulty of precise localization of activity. Biological signals are variable, and errors in detection and classification increase the error rate, resulting in a lower speed of information processing compared to classical interaction methods. An additional problem is the so-called “Midas touch” effect, which has already been mentioned for UIs with eye tracking. In addition to the technical challenges, there are also ethical problems, as, for example, the EEG data used during processing can reveal personal information of the user [53].
The scope of such interfaces in mobile and XR applications is limited due to performance accuracy as well as stability between sessions [83], but they are essential in certain areas such as rehabilitation, prosthetics, and assistive technologies, especially for people with severe disabilities for whom such interfaces are the only possible form of interaction with systems [53].

Achieving naturalness in every context of use and for all users is a challenge. While gestures, speech, and touch play an important role in many NUIs, they only feel truly natural to users if they match their abilities and specific usage scenarios as well as the context of the application and gestures. Given the technology available at the beginning of the 21st century, it is almost impossible to create a 3D UI that feels natural to all users. Instead of trying to make NUIs universal, one should focus on tailoring each of these interfaces to specific users and contexts [49].

2.3. Accessibility Parameters

UIs for gesture-based interaction should be designed according to established principles to ensure an intuitive and effective user experience. The system must be able to determine exactly when gesture recognition should begin and end to ensure that unintended movements are not misinterpreted. The order in which actions are performed is also crucial. Designers must clearly define each interaction step required to complete a process. In addition, the UI should be context-aware and provide immediate feedback to the user to confirm a successful action [38], while individual spatial abilities should be considered, as these abilities significantly affect the user’s performance [90]. In a global and culturally diverse environment, it is important to consider cultural differences, as they can influence the meaning, pace, and execution style of gestures [8].

Universal design aims to ensure that interactive systems can be used by as many users as possible, regardless of their abilities or experience [91]. In this context, in 3D environments, usability parameters such as object size, UI responsiveness, and intuitiveness of interaction must be adaptable to different physical, cognitive, and perceptual needs. A flexible design that allows users to customize interaction modes or change spatial layouts improves accessibility and supports user autonomy. This inclusive approach not only improves the overall user experience but also promotes equity in accessing immersive 3D technologies.

The most neglected accessibility principles in serious games are “operability” and “robustness”, where the principle of operability concerns user control and interaction, while robustness can be improved through assistive technologies. To improve accessibility, it is recommended to include features such as automatic transcriptions, sign language, photosensitivity control, external VR devices, and contextual help. It is emphasized that developers of serious games must make considerable efforts to improve accessibility [92].

As the field of accessibility matures, there are many studies that focus on specific subfields of accessibility research (e.g., people with autism or visual impairment) or on accessibility in different types of applications (e.g., websites, mobile applications, and VR games) [93]. Most existing accessibility guidelines for applications are based on the W3C Web Content Accessibility Guidelines [94], the W3C Mobile Accessibility Guidelines [95], and the Guide to Applying WCAG 2 to Non-Web-based Information and Communication Technologies [96]. They offer a series of recommendations to make digital content more accessible for all users, based on four basic principles: Perceivable, Operable, Understandable, and Robust (POUR). Applying these principles in development ensures that all users, including those with different types of impairments, can access, navigate, and understand the content. Certain industries, such as the gaming industry, have recently invested considerable resources in improving the accessibility of their products.

Previous research on accessibility in AR/VR environments has shown that these technologies present significant barriers to accessibility, which can lead to problems with the use of their features or to the exclusion of certain user groups, particularly people with certain forms of impairment. Issues such as limited evaluation of the effectiveness of solutions, lack of standardized testing methods, and technical barriers such as limited device resources and the need for environmental monitoring were identified. Successful systems emphasize UI adaptability and user involvement in the design process to ensure accessibility [97]. These findings could be used to identify key areas where further work is needed to develop immersive platforms that are more accessible to people with certain forms of disabilities [98].

When designing immersive applications for accessibility, there are some important principles to consider. Immersive applications need to provide redundant output options such as audio descriptions or subtitles to ensure that users can interact with the content in a way that best suits their abilities. They should also support redundant input methods and allow the user to interact with the environment by either changing the orientation of the head or using a controller. These applications need to be compatible with a wide range of assistive technologies so that users can use their own way of interacting between the physical world and the virtual space [99]. In addition, customization options for content presentation and input modalities should allow users to tailor the experience to their specific needs [100]. Applications should also offer direct assistance or facilitate support when the user encounters difficulties, through dynamic customization of the UI, intelligent agents, or the possibility of external support from friends or caregivers [99]. Finally, implementing the principles of inclusive design will ensure that text content and controls are accessible to a broad population (e.g., by displaying text in an appropriate size and color) [101].

The 3D interactions can be defined by three aspects: usability, reliability, and comfort [102]. Usability describes the ease of learning, understanding, and remembering interactions, where high ease of use means simplicity compared to other interactions. Reliability refers to the likelihood that the tracking device will interpret the interactions correctly. It is expected that interactions with high reliability will be recognized most of the time. Comfort describes the physical effort and discomfort of performing gestures, while interactions with high comfort can be performed with ease and minimal effort. To make their devices more usable, manufacturers of devices that offer 3D interaction claim to study human behavior and apply evidence-based design practices to identify the gestures, interactions, and haptic sensations that work best for their users [103]. They apply this knowledge in the design of their hardware and software, which programmers can use to control their devices.

Moving from 2D accessibility to accessibility in 3D environments is a major step. Users are confronted with new types of interface components and interaction styles that may seem strange, unintuitive, or unnatural. In addition to the challenge of adapting to the new elements, additional complexities arise as accessibility principles from the 2D world need to be merged with those of real-world interaction. While expertise in 2D interactions is fundamental to translating accessibility to 3D, a perfect 1:1 conversion is often not possible.

As far as the authors are aware, there is no comprehensive research in the literature on the accessibility parameters of 3D user interactions in educational software systems that include 3D visualization.

3. Research Design and Instruments

At the beginning of this section, we outline the research and put forward the hypotheses to be tested. In the following subsections, we describe the research instrument and the metrics we used to identify the key parameters that influence the accessibility of 3D user interactions.

To create better XR interfaces, it is important to recognize the influence of certain accessibility parameters on task performance and user experience. This research is a pilot study that aims to collect initial data to identify the key parameters that influence the accessibility of 3D user interactions in educational software solutions based on 3D visualization technologies.

Based on previous research findings and theoretical foundations [20,35,36,37,42], we formulate three hypotheses that we evaluate using the results of the analysis of 3D user interactions in educational software:

H1:

In precision-oriented XR applications, smaller 3D objects relative to the virtual hand will improve task accuracy and user satisfaction.

H2:

Visual distractions reduce task performance and user satisfaction.

H3:

Indirect interaction methods lead to better task efficiency, accuracy, and satisfaction than direct interaction.

By empirically evaluating these hypotheses, this study contributes to the development of the accessibility of XR interfaces. The defined guidelines will be specifically tailored to the needs of 3D interaction. They will enable the creation of UIs that not only fulfill the basic accessibility requirements but also improve the user experience.

The study consisted of two phases. In the first phase, a research instrument called GeometryGame was used to collect quantitative data. The second phase collected qualitative data related to participants’ attitudes toward certain aspects of accessibility and interaction implemented in the GeometryGame. Figure 1 illustrates the experimental environment used in the first phase of the study.

Figure 1.

Schematic representation of the experimental environment used in the study. The layout includes the position of the participant, the sensor, the display, and the interaction area. The user interface of the application is in Croatian. In the upper left corner of each screen, the blue robot in the bubble displays the instructions for the user for a particular sublevel (e.g., “The goal of this game is to place a cube, a sphere and a pyramid in the appropriate space corresponding to their shape.”). In the upper right corner of the screen, the options “Skip sublevel” (hr. Preskoči razinu) and “Exit” (hr. IZLAZ) are displayed.

The quantitative data provide a basis for analyzing the effectiveness and accessibility during the interaction with the research tool, while the qualitative data provide information about the users’ satisfaction with the achieved precision, the ease of interaction, and the general subjective impressions during the interaction (e.g., the users’ frustration).

3.1. Description of Study

In 2023, a pilot study was conducted at the University of Dubrovnik and at the Faculty of Electrical Engineering and Computing at the University of Zagreb. Participants took part individually under the supervision of an examiner. Before the study began, each participant was verbally informed about the context, the research procedure, and the purpose and objectives of the study. Each participant gave informed consent before taking part in the user tests.

The hardware on which the test was carried out consisted of an Acer laptop, model Nitro AN515-46, AMD Ryzen 7 6800H 3.20 GHz processor, NVIDIA GeForce RTX 3050 graphics card, 16 GB RAM, and a first-generation Leap Motion device.

The Leap Motion Controller was chosen as the primary input device for this study as it offers a combination of precision, accessibility, and suitability for natural 3D interaction. Its tracking accuracy allows for precise detection of hand movements, which is important in experiments evaluating the speed of task execution and fine motor control. The controller requires no additional external cameras and allows for natural interaction with the bare hand. In terms of practical application, it is available, easy to set up, compact, and portable, which was important for us as the research took place in various remote locations. The device easily connects to the most common XR development environments via its official SDK, making it easy to create applications and develop interactions [102].

Due to its characteristics, the Leap Motion Controller has attracted a lot of attention in the field of hand gesture recognition and has drawn interest from researchers in various fields. All these features make the device particularly suitable for use in NUI research in 3D environments [104].

Each test subject had 15 min to complete the first phase of the study. Due to the nature of the study, the specifics of the hardware used, the duration of the study per test subject, and the desire for each participant to use the application under identical conditions, the number of participants was limited, so that ultimately 53 people took part in the study.

Since the time required to complete the tasks is an important aspect in evaluating the usability and efficiency of the UI, a statistical analysis of the time required to complete the tasks in the first part of the study was performed. The measures used in the analysis were the mean (M), median (C), standard deviation (σ), minimum (min) and maximum (max) values, range (R), lower (Q1) and upper (Q3) quartiles, interquartile range (IQR), coefficient of skewness (S), and kurtosis (K). All time-based results are reported in seconds.
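The measures listed above can be computed with a few lines of standard-library Python. The following is only a minimal sketch under stated assumptions, not the procedure used in the study: the function name `describe`, the quartile interpolation method, the use of the sample standard deviation, and the moment-based definitions of skewness and excess kurtosis are all illustrative choices that the text does not specify.

```python
import statistics


def describe(times_s):
    """Summarize task completion times given in seconds (needs >= 2 distinct values)."""
    n = len(times_s)
    mean = statistics.fmean(times_s)
    # Quartiles via linear interpolation over the observed data (an assumption).
    q1, median, q3 = statistics.quantiles(times_s, n=4, method="inclusive")
    # Central moments for moment-based skewness and excess kurtosis (an assumption).
    m2 = sum((t - mean) ** 2 for t in times_s) / n
    m3 = sum((t - mean) ** 3 for t in times_s) / n
    m4 = sum((t - mean) ** 4 for t in times_s) / n
    return {
        "M": mean,
        "C": median,
        "sd": statistics.stdev(times_s),  # sample standard deviation (an assumption)
        "min": min(times_s),
        "max": max(times_s),
        "R": max(times_s) - min(times_s),
        "Q1": q1,
        "Q3": q3,
        "IQR": q3 - q1,
        "S": m3 / m2 ** 1.5,     # 0 for a symmetric distribution
        "K": m4 / m2 ** 2 - 3,   # 0 for a normal distribution
    }
```

Calling `describe` on the list of completion times of one sublevel returns all eleven measures at once, which makes it easy to build one table row per sublevel.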

In the second part of the research, participants completed a survey created in Google Forms. This part of the research was not time-limited. The survey statements evaluate the participants’ subjective experience of interacting with 3D objects and focus on the experience of ease, precision, intuitiveness, and frustration when handling the objects.

3.2. Research Tool

GeometryGame is the prototype of an educational software for teaching geometric solids in the form of a 3D game, in which four 3D interactions (grab-and-release, pinch, swipe, and 3D button press with a virtual finger) are implemented. The UI of this game is in Croatian.

The development of this tool is based on concepts and guidelines previously defined and implemented in collaboration with a student of Applied/Business Computing at the University of Dubrovnik as part of her master’s thesis [105]. The game was developed using the cross-platform game development framework Unity, version 2020.2.6f1. A first-generation Leap Motion device manufactured by Ultraleap, San Francisco, CA, United States, was chosen as the input device to implement the 3D user interactions.

The game was designed to focus on the user’s interactions, and users could always see their virtual hands on the screen. The relationships between distance and size were defined relative to these virtual hands. The tasks in the game were simple and required no prior knowledge on the part of the participants. The interactions are characterized by three aspects: usability, reliability, and comfort, as described in the documentation of the manufacturer of Leap Motion [102]. Each aspect is rated with the qualitative descriptors “high”, “medium”, and “low”, and for this tool, we chose interactions rated high or medium in usability and comfort.

The game consists of four levels divided into sublevels, and the types of interactions used on each level are listed in Table 1.

Table 1.

Description of interactions by game level.

The definition of the accessibility parameters focuses on a detailed analysis of the four elements:

1. The size of the object in relation to the user’s virtual hand, analyzed by the grab-and-release interaction;

2. The presence of distracting 3D elements, analyzed by the pinch interaction with both hands;

3. The intuitiveness of the use of UI elements, analyzed by the swipe interaction;

4. The size of UI elements and their distance from the user’s virtual hand, analyzed by pressing 3D buttons with a virtual finger.

These interactions allow for easier and more natural handling of objects in the virtual space, as they are as similar as possible to the user’s natural movements. This is intended to make interactions accessible and simple for as many users as possible, promoting universality and inclusivity in the digital environment. By adapting the accessibility guidelines to the specific requirements of 3D interactions, interfaces can be created that not only fulfill the basic accessibility requirements but also improve the user experience. Graphical interface elements, such as sliders to control rotation, were integrated with the aim of enabling precise manipulation of objects without causing unnecessary effort or frustration for the user. This approach sought not only to meet existing accessibility standards but also to extend them by introducing new parameters relevant to 3D interactions.

3.2.1. Level 1: Retrieval of Object

The goal of the interactions on the first level, shown in Figure 2, is to place three geometric solids (sphere, cube, and pyramid) in the correct boxes. Participants must perform a certain interaction to progress in the game, regardless of the result. In situations where they cannot perform the required interaction, the alternative option is to skip the sublevel.

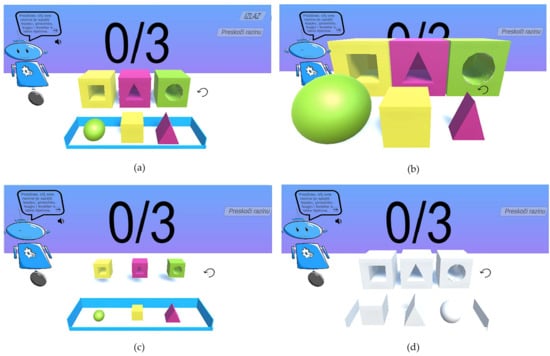

Figure 2.

Design of the screen on the first level: (a) objects of basic size; (b) enlarged objects; (c) shrunken objects; (d) objects of basic size with reduced contrast.

At this level, we want to investigate the optimal size of the object in relation to the user’s virtual hand and evaluate the user’s interaction under conditions of reduced visual contrast. The distance between the object and the virtual hand is defined by the Unity transformation component, which determines the position, rotation, and scaling of objects in 3D space. All calculations related to the size of the virtual hand and the object are expressed in the form of ratios. This level is divided into four sublevels:

- Basic: objects are the same size as the virtual hand (Figure 2a);

- Shrunken: objects are 50% smaller than the virtual hand (Figure 2c);

- Enlarged: objects are 100% larger than the virtual hand (Figure 2b);

- Reduced contrast: the contrast between the background color and the color of the 3D objects is reduced, while the ratio of the virtual hand size to object size is the same as in the basic sublevel (Figure 2d).
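Read as ratios, the sublevel definitions above correspond to simple scale factors relative to the virtual hand. The sketch below only illustrates that arithmetic; the names `SCALE_BY_SUBLEVEL` and `object_size` are hypothetical, and it assumes that "50% smaller" and "100% larger" refer to a single linear size measure, which the text does not state explicitly.

```python
# Scale factor of the object relative to the virtual hand, per sublevel.
# Assumption: the percentages refer to one linear size measure.
SCALE_BY_SUBLEVEL = {
    "basic": 1.0,             # same size as the virtual hand
    "shrunken": 0.5,          # 50% smaller than the virtual hand
    "enlarged": 2.0,          # 100% larger than the virtual hand
    "reduced_contrast": 1.0,  # same ratio as the basic sublevel
}


def object_size(hand_size, sublevel):
    """Return the object size for a sublevel, in the same unit as hand_size."""
    return hand_size * SCALE_BY_SUBLEVEL[sublevel]
```

Expressing sizes this way keeps the conditions comparable across devices, since only the ratio to the tracked hand matters and not the absolute size in scene units.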

3.2.2. Level 2: Resizing the Object

The second level, shown in Figure 3, implements interactions that involve grabbing a ball and enlarging it with a pinch gesture. The pinch gesture requires participants to bring their index fingertip and thumb together while keeping their other fingers open.

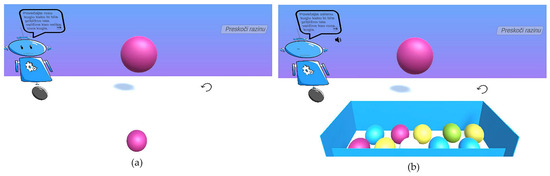

Figure 3.

Design of the screen on the second level: (a) without distractors; (b) with distractors.

This level was divided into two sublevels, differentiated by the use of distractors in the form of other objects. The aim of this approach was to relate the results obtained to the level of complexity of the interaction and to assess how distracting elements affect performance.

The goal of the first sublevel (without distractors) was for the subject to reach for the ball and enlarge it to approximately the same size as the large pink ball.

The second sublevel was more challenging, as the subject had to select a specific ball described in text on the screen (e.g., green ball) and enlarge it to approximately the same size as the pink ball. In this scenario, distractors were placed to investigate how such elements affect the subject’s performance during the interaction.

Given the shape of the object whose size has changed, the resizing was limited to a proportional resizing. The original aspect ratio was retained, and the assessment was based solely on the overall scale factor in relation to the target object. To minimize the influence of the inaccuracy of the sensor and the output device used, we opted for a relatively safe threshold value of ±5% of the given object size. This value was chosen based on findings from previous research on UIs, where such tolerances were considered sufficiently accurate but at the same time realistically achievable for users to balance the natural variability of human interaction and the need for precision.
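The ±5% acceptance rule can be expressed as a one-line check on the overall scale factor. This is a minimal sketch, assuming the tolerance is applied relative to the target object's size; the function name `within_tolerance` and its parameters are hypothetical and not taken from the GeometryGame source.

```python
def within_tolerance(scale, target_scale, tolerance=0.05):
    """Return True if a uniformly scaled object is within the tolerance of the target.

    scale and target_scale are overall scale factors in the same unit;
    tolerance is a fraction of the target size (0.05 means +/-5%).
    """
    if target_scale <= 0:
        raise ValueError("target_scale must be positive")
    return abs(scale - target_scale) <= tolerance * target_scale
```

Because the resizing is proportional, a single scale factor is enough to compare the manipulated ball with the target. A value such as 1.04 against a target of 1.0 would be accepted, while 1.06 would not.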

3.2.3. Level 3: Rotating the Object

The third level, shown in Figure 4, is divided into two sublevels to explore whether users prefer direct interaction with a 3D object or indirect interaction via a slider UI element.

Figure 4.

Design of the screen on the third level: (a) direct interaction; (b) indirect interaction.

In the direct interaction (Figure 4a), the participants had the task of picking up a die, holding it in one hand, and rotating it with hand movements. A grab-and-release interaction was implemented, which, together with the rotation using a swipe motion, aimed to encourage exploration of the die and to convey a 3D spatial perception to the participants as they searched for a specific number.

In the indirect interaction (Figure 4b), the participants used a standard slider UI element. In this scenario, the participants had to rotate the die using the slider until they reached a position in which the given number was clearly visible.

3.2.4. Level 4: Pressing the Button Using Virtual Finger

The fourth level, shown in Figure 5, is designed like a trivia quiz. The questions were simple and required no prior knowledge from the participants. Interaction at this level is performed by tapping buttons at different positions with a virtual finger. This structure allows for a consistent quiz experience while exploring how variations in button size and button distance affect user interaction.

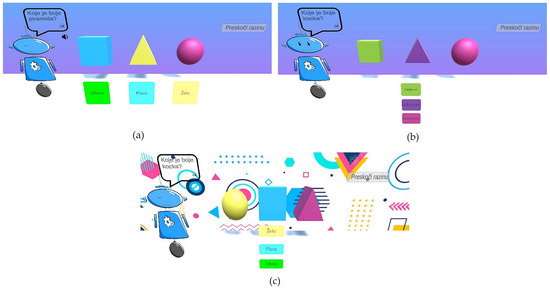

Figure 5.

Design of the screen on the fourth level: (a) horizontal orientation of buttons; (b) vertical orientation of buttons; (c) vertical orientation of buttons with distracting background.

Each sublevel retains the same concept but varies the size of the buttons and the distance of the buttons from the user’s virtual hand. The first two sublevels differ in the position of the buttons that the test subject must press: horizontal (Figure 5a) and vertical (Figure 5b). In the last sublevel, a distracting background is implemented in addition to the buttons in the vertical position (Figure 5c).

On the sublevel with horizontal alignment, the buttons have a size of 0.24 × 0.17 and are placed at a spatial distance of −10. On the sublevel with vertical alignment, the size of the buttons is reduced to 0.14 × 0.13, and the spatial distance between the buttons and the virtual hand is increased to −15. The sublevel with distracting background uses the smallest buttons (0.12 × 0.17) placed closer to the participants (−5).

The size of the buttons was chosen by experimenting with different sizes and applying Fitts’s law [105]. Fitts’s law is a predictive model for human movement used in human–computer interaction. This law states that the time required to move quickly to a target area is a function of the ratio between the distance to the target and the width of the target [106]. It is often used in UI design to optimize the size and position of elements to improve the speed and accuracy of user interactions.
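As an illustration of how Fitts's law relates distance and target width to movement time, the sketch below uses the widely used Shannon formulation, MT = a + b · log2(D/W + 1). This is an assumption: the text does not say which formulation was applied to the buttons. The constants `a` and `b` are empirical and must be fitted to measured data; the values in the usage note are placeholders, not results from this study.

```python
import math


def index_of_difficulty(distance, width):
    """Index of difficulty in bits, Shannon formulation: log2(D / W + 1)."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1)


def movement_time(distance, width, a, b):
    """Predicted movement time MT = a + b * ID.

    a (intercept) and b (slope) are empirical constants obtained by
    regression on measured movement times; they are not given in the text.
    """
    return a + b * index_of_difficulty(distance, width)
```

For example, with placeholder constants `a=0.1` and `b=0.2`, a target at distance 3 with width 1 has an index of difficulty of 2 bits and a predicted movement time of 0.5 s. Halving the width or increasing the distance raises the index of difficulty, which is why smaller and more distant buttons are expected to be harder to hit.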

3.3. Design of the Survey Questionnaire

In addition to the demographic questions (age, gender, education, and occupation), the questionnaire contained repeated statements for each level of GeometryGame. These statements assess the participants’ subjective experience of interacting with objects and focus on the experience of ease, precision, intuitiveness, and frustration when handling the objects. Participants rated their attitude to the statements on a Likert scale from 1 to 5, with 1 indicating complete disagreement and 5 indicating complete agreement. User satisfaction with the interactions is defined in this context as the degree to which the user’s needs, expectations, and preferences are met by the interaction. The statements for the first level were as follows:

- It was very easy for me to reach the 3D object.

- I think precision is very important when reaching the 3D object.

- The interaction with the 3D object was intuitive for me.

- While reaching the 3D object, I was frustrated.

- I am satisfied with the precision I achieved while reaching the 3D object.

- I had no difficulty reaching the 3D object.

The statements differed only slightly in terms of the levels and tasks performed by the participants, with the basic structure of the statements remaining constant and the only change being the type of interaction with the object.

3.4. Metrics Used for Evaluation of Results

To objectively analyze the data collected during the user’s interaction with the GeometryGame, the relevant information was systematically recorded and stored in a database. Table 2 shows a system that was used to quantify the results. For each level, the activities and the corresponding result types stored in the database are shown.

Table 2.

Participants’ activities and associated metrics.

4. Results

In this section, we describe the results of the pilot study conducted. First, we describe the demographic data of the participants. In the following subsection, we present and analyze the quantitative data collected with GeometryGame. The qualitative data from the questionnaire is presented and analyzed in the third subsection.

4.1. Demographic Data

A total of 53 participants took part in this research. Of these, 32.08% (17 participants) were between 18 and 25 years old, 26.42% (14 participants) were between 25 and 35 years old, 22.64% (12 participants) were between 35 and 45 years old, 9.43% (5 participants) were between 45 and 55 years old, and 9.43% (5 participants) were over 55 years old.

By gender, 47.20% of participants were female, while 52.80% were male. Other options, such as “other” and “do not want to specify”, were not selected by any of the participants.

Since most participants were students, a relatively high proportion of participants, 35.80%, had a secondary school degree. A total of 5.70% had a bachelor’s degree, while 39.60% of the participants had a master’s degree. A total of 1.90% of participants had completed postgraduate professional studies. A notable proportion of participants, 17%, had a doctorate.

In terms of profession, 77.40% of participants were from technical sciences, while 9.40% were interdisciplinary. Social sciences accounted for 7.50% of participants, natural sciences accounted for 3.80%, and biomedicine and health accounted for 1.90% of the total number of participants.

4.2. Quantitative Data Analysis

For the quantitative analysis, the data collected during the testing of GeometryGame were exported from the database and statistically analyzed. The analysis is based on the interaction parameters defined in the metrics for evaluating the results, described in Section 3.4.

The first metric used in the analysis at all levels was the time taken to perform the activities of the level. The indicators used in this analysis were the mean (M), median (C), standard deviation (σ), minimum (min) and maximum value (max), range (R), lower (Q1) and upper (Q3) quartiles, interquartile range (IQR), coefficient of skewness (S), and kurtosis (K).

The second parameter in the analysis was the use of the object-return option in the tasks. It was used at all levels except the fourth (quiz) level, where participants did not have this option because they could answer each question only once.

The third parameter used in the analysis is the accuracy of the participants in performing the tasks. The data for this parameter were collected at the first and fourth levels.

At all sublevels, participants were offered the option to skip the task, and this was the fourth parameter used in the quantitative data analysis.
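The four parameters described above can be pictured as one logged record per participant and sublevel. The sketch below is purely illustrative: the class `SublevelRecord`, its field names, and the definition of accuracy as the share of correct attempts are assumptions and do not reproduce the actual database schema of GeometryGame.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SublevelRecord:
    """One hypothetical log entry per participant and sublevel."""
    participant_id: int
    level: int
    sublevel: str
    time_s: float                   # execution time in seconds
    skipped: bool                   # True if the skip option was used
    returns: Optional[int] = None   # object-return uses; not available at level 4
    correct: Optional[int] = None   # accuracy data exist for levels 1 and 4 only
    attempts: Optional[int] = None


def accuracy(record):
    """Share of correct attempts, or None where accuracy was not collected."""
    if record.correct is None or not record.attempts:
        return None
    return record.correct / record.attempts


def skip_rate(records):
    """Fraction of records in which the participant skipped the sublevel."""
    records = list(records)
    if not records:
        return 0.0
    return sum(r.skipped for r in records) / len(records)
```

Keeping the optional fields empty where a parameter does not apply makes it explicit that, for instance, object returns are undefined at the quiz level rather than zero.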

4.2.1. Execution Time

Table 3 shows the statistical analysis of the time required to complete the tasks at the first level. The unit of measurement for all results listed in the table is seconds.

Table 3.

Statistical analysis of the time required to complete tasks on sublevels of the first level.

At the first level, users must pick a specific object and place it in the correct box by grabbing and releasing it. When analyzing the data of the first metric for all sublevels of the first level, significant differences were found in the time spent on the tasks. The mean and median vary between the sublevels, indicating that the tasks were of different difficulty or complexity for the participants. Since the same interaction was implemented in all sublevels, the size of the 3D object that the participants had to reach had a significant influence on the difficulty of the tasks. Participants completed the tasks fastest in the reduced-contrast sublevel, where the mean completion time was 28.72 s, while they completed the tasks slowest in the sublevel with a shrunken object, with a mean time of 52.90 s.

At the basic sublevel, greater variability was observed in the time spent on the tasks, indicating that the tasks were more challenging or complex for the participants. This variability is reflected in the range, which is 142.74 s. In the sublevel with enlarged objects, the mean and median are larger, but the range and standard deviation show less variability than in the basic sublevel. This could indicate that the participants’ movements were more consistent at this object size.

The sublevel with the shrunken objects shows the highest variability in task completion time, suggesting that it was challenging for participants to reach small 3D objects. Interestingly, participants completed the tasks in the reduced-contrast sublevel almost 1.5 times faster than in the basic sublevel. This difference in speed occurred even though the geometric solids were the same size in both sublevels and the only difference was the reduced contrast. A plausible explanation is that participants had become familiar with the interaction, which suggests that it is important to first familiarize users with the interactions they will use in the application. Familiarity can give users the necessary confidence and understanding of the interactions, which can help them overcome challenges more easily, even under worse conditions. Therefore, it is important to consider the learning process of the participants in addition to the design aspects to ensure an optimal user experience.

The distribution of time within the individual sublevels shows variability, with the data clustering relatively closely around the central values. This suggests that most participants had similar experiences with the speed of task completion within each sublevel. However, there are also individuals who need significantly more time for the same tasks, which could be due to different abilities or personal preferences of the participants.

On the second level of the game, the user must select a geometric solid and enlarge it with both hands using the pinch gesture. The results of the statistical analysis of this level by sublevels can be found in Table 4.

Table 4.

Statistical analysis of the time needed to complete tasks on sublevels of the second level.

The execution time of the tasks at the sublevel with distractors was significantly higher, indicating an increased complexity of the tasks due to the presence of distracting 3D elements. The standard deviation is also higher on the sublevel with distractors (37.98 s) than on the sublevel without distractors (29.59 s), indicating greater variability in execution times between participants. This could be due to the different methods used by the participants to overcome the challenges posed by the distractors. The minimum and maximum times confirm this variability and indicate a wide range of participant experience. The IQR was significantly higher on the sublevel with distractors (44.82 s) than on the sublevel without distractors (23.86 s), providing further evidence that participants approached and completed the tasks with greater variability. The coefficients of skewness and kurtosis show higher values on the first sublevel, indicating the presence of more extreme values.

Overall, these results show that the distractors had a significant impact on the participants’ experience by increasing the time required to complete the tasks and leading to greater variability in performance. This suggests that the presence of 3D distractors increases complexity and challenge, causing participants to focus and exert more effort to successfully complete the tasks.
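The descriptive measures reported for each sublevel can be reproduced from raw completion times with a few lines of code. The following is a minimal sketch, not the procedure used in the study: it assumes that the indices of symmetry and flatness correspond to moment-based skewness and excess kurtosis, it uses Python's default quartile method (other statistical packages may compute quartiles and these indices slightly differently), and the function name `describe_times` and the sample values are hypothetical.

```python
import statistics

def describe_times(times):
    """Return descriptive statistics for a list of completion times in seconds."""
    n = len(times)
    mean = statistics.mean(times)
    # Quartile cut points (Python's default "exclusive" method).
    q1, _, q3 = statistics.quantiles(times, n=4)
    # Central moments used for moment-based skewness and excess kurtosis.
    m2 = sum((t - mean) ** 2 for t in times) / n
    m3 = sum((t - mean) ** 3 for t in times) / n
    m4 = sum((t - mean) ** 4 for t in times) / n
    return {
        "mean": mean,
        "median": statistics.median(times),
        "sd": statistics.stdev(times),  # sample standard deviation
        "min": min(times),
        "max": max(times),
        "iqr": q3 - q1,
        "skewness": m3 / m2 ** 1.5,
        "kurtosis": m4 / m2 ** 2 - 3.0,  # excess kurtosis (0 for a normal distribution)
    }

# Hypothetical completion times in seconds, not data from the study.
without_distractors = [20.0, 25.0, 30.0, 35.0, 40.0]
with_distractors = [30.0, 45.0, 60.0, 90.0, 150.0]

stats_without = describe_times(without_distractors)
stats_with = describe_times(with_distractors)
for label, stats in (("without", stats_without), ("with", stats_with)):
    print(label, {key: round(value, 2) for key, value in stats.items()})
```

In this illustrative sample, the times with distractors have a larger standard deviation and IQR and a positive skewness, which is the kind of pattern the comparison between the two sublevels describes.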

The third level of the game focuses on the manipulation of 3D objects and offers participants the challenge of reaching and rotating a virtual die. The results of the statistical analysis at this level can be found in Table 5.

Table 5.

Statistical analysis of the time needed to complete tasks on sublevels of the third level.

On the first sublevel, the participants use direct interaction with a 3D object (cube) to find the specified number. On the second sublevel, an indirect interaction with a slider is used to rotate the cube and find the corresponding number. The statistical data show that participant performance differs between the sublevels, which is partly due to the differences in the interactions implemented in each sublevel.

In the direct interaction, participants had to manage a combination of interactions, including grabbing and releasing as well as rotating a 3D object, resulting in an average processing time of 17.83 s. The complexity of this combination of interactions explains why the participants needed more time for this task in the direct interaction. In contrast, the indirect interaction used a simplified approach with only one type of interaction—a hand movement to one side, using a graphical slider to rotate the 3D object. This made the task easier for the participants, which is reflected in a significantly shorter average processing time of 7.83 s. The median, standard deviation, minimum, and maximum execution times confirm this conclusion and indicate greater consistency in task execution time between participants in indirect interaction. The lower variability in this sublevel suggests that the simplified approach with only one type of interaction allowed participants to solve the task more easily and faster. The direct interaction has a higher IQR, indicating a greater time span, while the lower IQR at the indirect interaction sublevel indicates a more consistent performance of the participants in task execution. The indices of symmetry and flatness also show differences between the sublevels.

The fourth level of the game was designed to test participants’ interaction with a virtual 3D surface in different contexts. Each sublevel of this level retains the basic concept of a quiz, but varies in the dimensions and placement of the interactive graphical elements, while the third sublevel adds distracting elements. Table 6 shows the results of the statistical analysis at this level.

Table 6.

Statistical analysis of the time needed to complete tasks on sublevels of the fourth level.

On the sublevel with horizontal orientation, the buttons were 0.24 × 0.17 in size and placed at a spatial distance of −10. The results show an average task time of 7.06 s with a standard deviation of 2.99 s. This shows that the participants became accustomed to the task relatively quickly. The median of 5.98 s and a relatively small IQR of 4.13 s indicate a consistent performance of the participants in the task. On the sublevel with vertical orientation, the size of the button is reduced to 0.14 × 0.13, and the spatial distance between the button and the virtual hand is increased to −15, resulting in a significantly higher mean processing time of 16.81 s. The large standard deviation of 13.09 s and a larger IQR of 13.88 s indicate fluctuations in the participants’ performance, which points to difficulties in solving the task.

These difficulties are a consequence of the increased task complexity caused by the smaller buttons and the larger spatial distance, which required more precision from the participants. Although the distracting background sublevel uses the smallest buttons (0.12 × 0.17), it places them closer to the participants (−5), possibly facilitating interaction. The average time to complete the task is similar to that of the first sublevel (7.24 s with a standard deviation of 3.39 s). The smaller IQR of 3.67 s indicates that the participants completed the task consistently despite the challenge posed by the smaller buttons. This means that the smaller distance can compensate for the smaller size of the buttons, allowing participants to interact with the buttons more successfully. In the sublevel with vertical orientation, higher values of the flatness index indicate the presence of several extreme values that reflect the challenges the participants faced.
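One simple way to make the trade-off between button size and distance concrete is to compare the ratio of button size to distance across the three sublevels. The sketch below is only an illustration under stated assumptions: the source does not give units for the button dimensions or the distances, so the reported values are assumed to share one unit, the sign of the distance is assumed to encode direction only, and the helper `size_to_distance_ratio` is hypothetical rather than a metric used in the study.

```python
def size_to_distance_ratio(width, height, distance):
    """Return (width, height) divided by the absolute distance."""
    d = abs(distance)  # assumption: the sign only encodes direction
    return width / d, height / d

# Button sizes (width, height) and distances as reported for the three sublevels.
sublevels = {
    "horizontal": (0.24, 0.17, -10),
    "vertical": (0.14, 0.13, -15),
    "distracting background": (0.12, 0.17, -5),
}

ratios = {name: size_to_distance_ratio(*spec) for name, spec in sublevels.items()}
for name, (width_ratio, height_ratio) in ratios.items():
    print(f"{name}: width/distance = {width_ratio:.4f}, "
          f"height/distance = {height_ratio:.4f}")
```

Under these assumptions, the width-to-distance ratio is the same on the horizontal and distracting background sublevels (0.024) and less than half of that on the vertical sublevel, which is consistent with the similar completion times on the first and third sublevels.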

4.2.2. Use of the “Object Return” Option

The second parameter in the analysis is the use of the object return option in the tasks. The number of times participants used this option is shown in Table 7.

Table 7.

Number of times the option to return the object to the scene was used.

If we analyze the number of objects returned at the first level per sublevel in relation to the size of the object the participants were trying to reach, we find a significant correlation between these two parameters. For example, the number of objects returned on the basic sublevel was significant (73), and this option was used by 66.04% of the participants, while on the sublevel with enlarged objects, no subject used this option. However, further analysis of the data revealed that this result was due to difficulties in accurately grasping the 3D object. This is reflected in the increased number of incorrectly inserted objects, suggesting that the tasks were completed with less precision due to difficulties in handling larger 3D objects relative to the size of the hand. The sublevel with the shrunken objects has the highest number of objects returned (100). This indicates that the precision and handling of small 3D objects were a challenge for the participants. At the reduced contrast sublevel, a total of 51 objects were returned, and this option was used by 47.17% of participants.

On the second level, there is a significant difference in the use of the return object option between the sublevels, as participants used this option almost twice as often on the distractor sublevel. This can be explained by the presence of 3D distractor elements, which made the task considerably more difficult. If we look at the percentage of participants who successfully solved this task on the first attempt, we assume that the threshold used to determine the achieved size equality of the objects was well chosen considering the details of the task they were asked to solve (58.49% of participants at the sublevel without distractors and 35.85% of participants at the sublevel with distractors solved this task on the first attempt).

On the third level, on the sublevel with direct interaction, a lower number of uses of the object return option was recorded compared to the previous levels. The return option was used a total of 24 times, with each participant returning an object a maximum of 4 times, and 28.30% of participants used this option. This result indicates a relatively high level of success and adaptability of participants in solving this task, which means that participants were able to manage the combination of interactions effectively.

In the indirect interaction sublevel, this option is not applicable. The reason for this is the nature of indirect interaction using a slider, which is designed so that it cannot go beyond the reach of the participant’s virtual hand, eliminating the need to return the 3D object.
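The three figures reported per sublevel for this option (total number of returns, maximum per participant, and share of participants who used it at least once) can all be derived from per-participant counts. The following is a minimal sketch, assuming one integer count per participant; the function name `summarize_returns` and the sample counts are hypothetical and are not data from the study.

```python
def summarize_returns(returns_per_participant):
    """Summarize use of the object return option on one sublevel."""
    n = len(returns_per_participant)
    # Participants who used the option at least once.
    users = sum(1 for count in returns_per_participant if count > 0)
    return {
        "total_returns": sum(returns_per_participant),
        "max_per_participant": max(returns_per_participant),
        "share_of_users_pct": round(100 * users / n, 2),
    }

# Hypothetical counts for ten participants, not data from the study.
example_counts = [0, 0, 2, 1, 0, 4, 0, 0, 1, 0]
summary = summarize_returns(example_counts)
print(summary)
```

Reporting the share of users alongside the total helps distinguish a sublevel where many participants each needed one return from one where a few participants needed many.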

4.2.3. Accuracy of Performing Tasks

Table 8 shows the results of solving tasks on the first level, grouped into accuracy categories, with the percentage of participants who achieved each result.

Table 8.

Categorization of results on the first level based on accuracy in performing tasks.

On the first level, the accuracy is represented by the number of correctly and incorrectly inserted 3D objects in four categories: correct (all objects are correctly inserted), mostly correct (two objects are correctly inserted), mostly incorrect (only one object is correctly inserted), and incorrect (none of the objects are correctly inserted).

At the basic sublevel, 62.26% of participants managed to insert all three 3D objects correctly, while a smaller number of participants (30.19%) inserted one object incorrectly. Only a few participants (7.55%) inserted two objects incorrectly. This indicates that objects of basic size are relatively easy to access for most participants. On the other hand, at the sublevel with the enlarged objects, there is a significantly higher number of participants who inserted two objects incorrectly (43.40%) or who were unable to insert a single object correctly (16.98%). This result indicates an increased level of difficulty of this sublevel compared to the basic sublevel, which is probably because the 3D objects are twice the size of a virtual fist.

The sublevel with shrunken objects shows an improvement, with a higher number of participants correctly inserting all three 3D objects (83.02%). This improvement indicates that the task on this sublevel was better adapted to the participants’ expectations and abilities. On the reduced contrast sublevel, most participants (64.15%) managed to insert all three objects correctly, a smaller number (35.85%) of participants had one error, and no one had two or three errors. Better results on the last two sublevels could indicate that the participants had adapted to the implemented interactions, which enabled them to perform better by repeating the interactions.
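The four accuracy categories used on the first level map directly onto the number of correctly inserted objects out of three. The sketch below illustrates how per-participant results could be turned into the category percentages discussed above; the names `CATEGORIES`, `categorize`, and `category_shares` and the sample data are hypothetical.

```python
from collections import Counter

# Number of correctly inserted objects (out of three) mapped to its category.
CATEGORIES = {
    3: "correct",
    2: "mostly correct",
    1: "mostly incorrect",
    0: "incorrect",
}

def categorize(correct_count):
    """Return the accuracy category for one participant on one sublevel."""
    return CATEGORIES[correct_count]

def category_shares(correct_counts):
    """Return the percentage of participants in each accuracy category."""
    counts = Counter(categorize(c) for c in correct_counts)
    n = len(correct_counts)
    return {label: round(100 * counts[label] / n, 2) for label in CATEGORIES.values()}

# Hypothetical results for eight participants, not data from the study.
example_results = [3, 3, 3, 2, 2, 1, 0, 3]
shares = category_shares(example_results)
print(shares)
```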

The accuracy of the participants at the fourth level, represented by the percentage of correctly solved tasks, is shown in Table 9.

Table 9.

Percentage of correct answers to questions on the fourth level.

On the sublevel with horizontal alignment of the buttons, most participants achieved a high accuracy of 98.11%, which means that this sublevel was relatively easy to solve. The high success rate indicates that the size of the objects and the distance were optimally adapted to the abilities of most participants, so that they were able to interact precisely. The sublevel with vertical orientation shows a slight decrease in the success rate, at 92.45%. Although this sublevel is still very successful, the small decrease in the percentage of correct responses indicates a slightly higher level of task difficulty compared to the first sublevel due to the changes in object size and distance. The sublevel with the distracting background shows a significant decrease in success, with only 62.26% correct answers. This decrease indicates that the task conditions in this sublevel were significantly more difficult for the participants, resulting in a more challenging interaction due to the smaller object size and the introduction of distractions that affected the participants’ ability to focus and be precise.

Analysis of all three sublevels shows that variations in task design, such as changes in object size and distance, have a direct impact on participant performance and satisfaction.

4.2.4. Use of the “Skip Sublevel” Option

At all sublevels, participants were offered the option to skip the sublevel, but they rarely used it. At the first and second levels, this option was only used once. At the first level, the participant who used this option gave up after 28.96 s on the sublevel with enlarged objects. On the second level, this option was used on the sublevel without distractors by one participant, who gave up after 64.81 s. The option to skip was used most frequently on the third level (5 times), on the sublevel with direct interaction. On average, these participants gave up after 17.69 s. On the fourth level, no participant used the option to skip a sublevel.

4.3. Qualitative Data Analysis

4.3.1. Simplicity of Implemented Interactions

In the second part of the research, participants completed a survey consisting of six questions to assess their satisfaction with the integrated interactions. The design of this survey is described in Section 3.3.

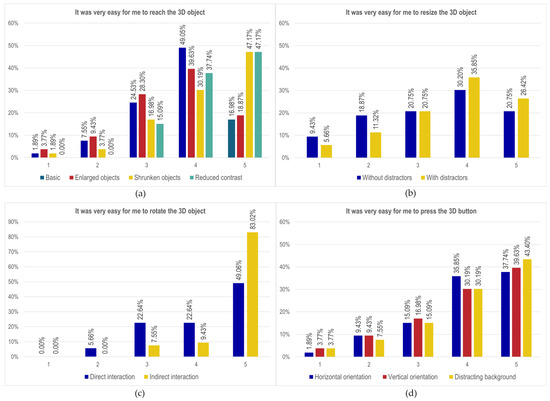

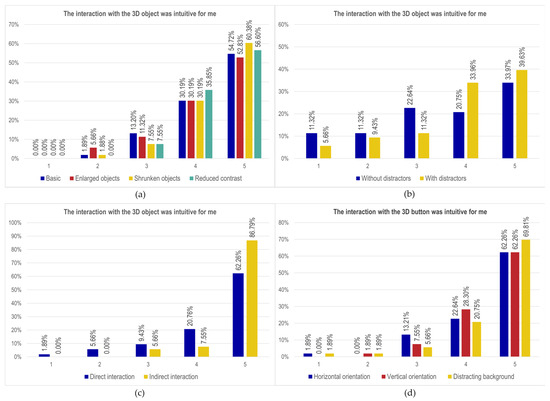

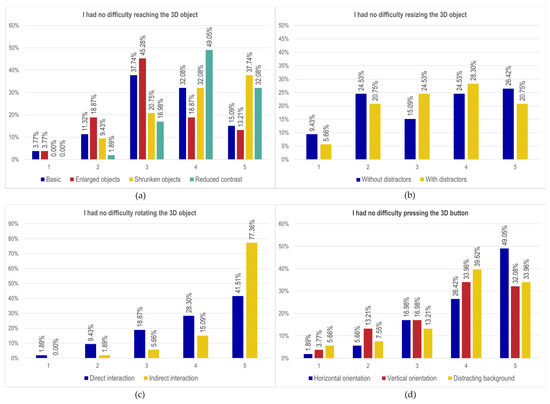

Figure 6a shows that most participants had no problem reaching objects at the first level. At the second level (Figure 6b), participants at the sublevel without distractors generally found that enlarging the 3D object was easy, as 50.95% of participants gave scores of 4 and 5. The interactions at the sublevel with distractors were rated even better, as 62.26% of participants gave the same scores. At the sublevel with distractors, a decrease in ratings of 1 and 2 can also be observed. This pattern could indicate that the experience gained in the first sublevel, without distractors, helped the participants to develop skills that allowed them to solve the tasks in the next sublevel more easily, despite the presence of distracting elements.

Figure 6.

Participants’ ratings for ease of (a) 3D object retrieval; (b) resizing a 3D object; (c) rotating a 3D object; (d) pressing a 3D button with a virtual finger.

The participants’ subjective ratings of the ease of rotating the 3D object at the third level of the game show a significant difference between the two sublevels (Figure 6c). On the sublevel with direct interaction, the largest share of participants (49.06%) thought that rotating the 3D object was very easy. The remaining distribution of ratings shows a balanced number of participants with ratings of 3 and 4, while very few gave a rating of 2, and none gave a rating of 1. For indirect interaction, 83.01% of participants gave a rating of 5, a much smaller number of participants gave ratings of 3 and 4 (16.98%), and no participant gave ratings of 1 and 2. This could indicate that rotating the object, regardless of the type of interaction, was very easy, but that participants generally preferred indirect interaction with the slider.

The results of the ratings at the fourth level (Figure 6d) show a tendency for positive ratings to increase as progress is made through the sublevels. This result is consistent with previous observations on other types of interactions at the first and second levels. It can also confirm that the learning process had a positive effect on the participants’ ability to adapt to the interaction in the virtual space.
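The rating summaries used in this subsection (the percentage of participants per score and the combined share of scores 4 and 5) can be computed as follows. This is a minimal sketch, assuming a five-point scale from 1 to 5; the function names `rating_distribution` and `top_two_share` and the sample ratings are hypothetical.

```python
from collections import Counter

def rating_distribution(ratings, scale=(1, 2, 3, 4, 5)):
    """Return the percentage of participants who gave each score."""
    counts = Counter(ratings)
    n = len(ratings)
    return {score: round(100 * counts[score] / n, 2) for score in scale}

def top_two_share(ratings):
    """Return the percentage of participants who gave a score of 4 or 5."""
    return round(100 * sum(1 for r in ratings if r >= 4) / len(ratings), 2)

# Hypothetical ratings from ten participants, not data from the study.
example_ratings = [5, 4, 3, 5, 2, 4, 5, 1, 3, 4]
distribution = rating_distribution(example_ratings)
positive_share = top_two_share(example_ratings)
print(distribution, positive_share)
```

Comparing the combined share of scores 4 and 5 between consecutive sublevels gives a single number for the trend toward more positive ratings described above.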

4.3.2. Importance of Precision in Implemented Interactions

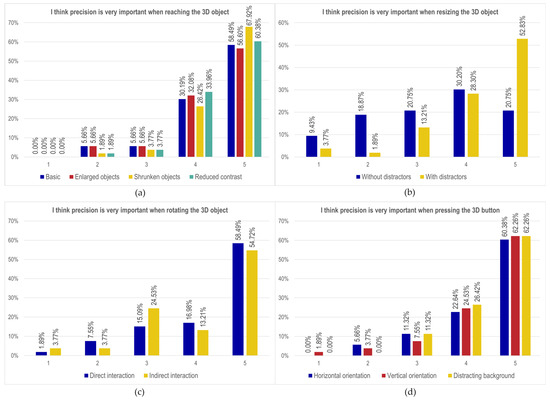

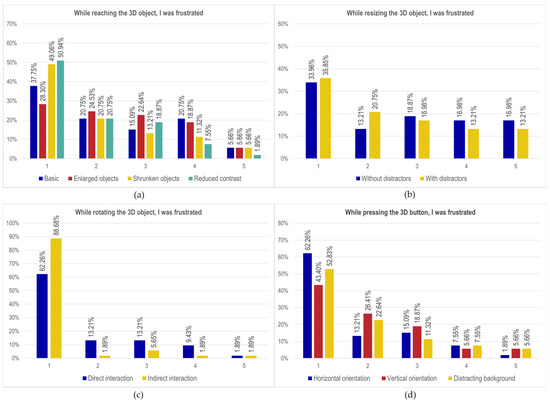

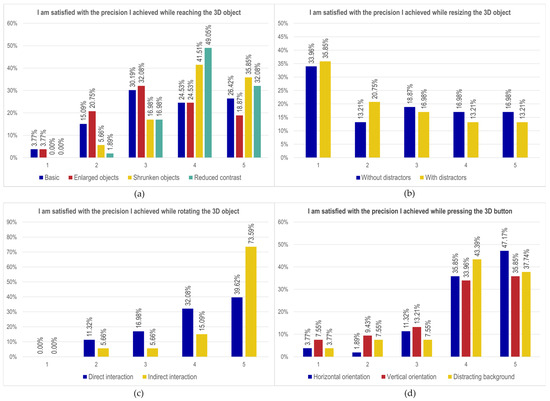

Participants at the first level generally consider precision in reaching 3D objects to be important, with most participants giving a score of 5, as shown in Figure 7a.

Figure 7.

Participants’ ratings of the importance of precision in (a) 3D object retrieval; (b) resizing a 3D object; (c) rotating a 3D object; (d) pressing a 3D button with a virtual finger.