Abstract

Background/Objectives: Open-source artificial intelligence models (OSAIMs), such as BiomedCLIP, hold great potential for medical image analysis. While OSAIMs are increasingly utilized for general image interpretation, their adaptation for specialized medical tasks, such as evaluating scoliosis on posturographic X-ray images, is still developing. This study aims to evaluate the effectiveness of BiomedCLIP in detecting and classifying scoliosis types (single-curve and double-curve) and in assessing scoliosis severity. Methods: The study was conducted using a dataset of 262 anonymized posturographic X-ray images from pediatric patients (ages 2–17) with diagnosed scoliosis. The images were collected between January 2021 and July 2024. Two neurosurgical experts manually analyzed the Cobb angles and scoliosis stages (mild, moderate, severe). BiomedCLIP’s performance in detecting scoliosis and its type was evaluated using metrics such as accuracy, sensitivity, specificity, and AUC (Area Under the Curve). Statistical analyses, including Pearson correlation and ROC curve analysis, were applied to assess the model’s performance. Results: BiomedCLIP demonstrated moderate sensitivity in detecting scoliosis, with stronger performance in severe cases (AUC = 0.87). However, its predictive accuracy was lower for mild and moderate stages (AUC = 0.75 and 0.74, respectively). The model struggled to identify single-curve scoliosis correctly (sensitivity = 0.35, AUC = 0.53), while its sensitivity was higher for double-curve cases (sensitivity = 0.78, specificity = 0.35, AUC = 0.53). Overall, the model’s predictions correlated moderately with observed Cobb angles (r = 0.37, p < 0.001). Conclusions: BiomedCLIP shows promise in identifying advanced scoliosis, but its performance is limited in early-stage detection and in distinguishing between scoliosis types, particularly single-curve scoliosis.
Further model refinement and broader training datasets are essential to enhance its clinical applicability in scoliosis assessment.

1. Introduction

Open-source artificial intelligence models (OSAIMs) are free, publicly available tools that have found applications in various fields, including computer science and medicine [1,2]. While OSAIMs offer advanced capabilities for processing and interpreting visual data, most of them are not specifically tailored for medical image analysis, such as X-ray images. In response to this gap, dedicated models like BiomedCLIP have been developed, combining natural language processing with medical image analysis, providing new opportunities for the evaluation and interpretation of radiological data.

The evaluation of scoliosis, a complex, three-dimensional deformity of the spinal column, traditionally relies on manual radiological interpretation by clinicians. This process includes the assessment of curvature, vertebral rotation, and spinal dynamics, typically using X-ray images in anterior–posterior (AP) and lateral views [3,4]. Identifying the degree and type of scoliosis requires significant expertise and can be time-consuming, especially when differentiating between single-curve and double-curve forms or determining the severity based on the Cobb angle [5]. Challenges such as interobserver variability and the high volume of radiological data underline the need for automated tools that could enhance accuracy and reduce workload in clinical practice. Advanced AI models such as BiomedCLIP may help address these challenges by providing automated analysis tailored to medical data.

Over the past year, there has been a global increase in interest in OSAIMs, partly driven by the release of advanced language models such as OpenAI’s ChatGPT. OpenAI also developed Contrastive Language–Image Pretraining (CLIP), a model that integrates natural language and images. CLIP can perform a wide range of tasks, including zero-shot image classification, image captioning, and visual question answering [6]. It is trained on a large, diverse dataset of images paired with textual descriptions and learns a shared embedding space in which matching image–text pairs lie close together and non-matching pairs lie far apart, which allows the model to relate text and images directly.
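To make the idea of a shared embedding space more concrete, the following is a minimal NumPy sketch of a symmetric contrastive (InfoNCE-style) objective of the kind CLIP is trained with. It assumes a batch of N already-computed image and text embeddings in which row i of each matrix belongs to the same image–text pair; the encoders are omitted, and the fixed `logit_scale` of 100 is an illustrative assumption (in CLIP the temperature is a learned parameter).

```python
import numpy as np

def log_softmax_rows(logits: np.ndarray) -> np.ndarray:
    """Numerically stable row-wise log-softmax."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def clip_style_loss(img_emb: np.ndarray, txt_emb: np.ndarray, logit_scale: float = 100.0) -> float:
    """Symmetric contrastive loss over N matched image-text pairs.

    Row i of img_emb and row i of txt_emb are assumed to describe the same pair.
    """
    # L2-normalize so that dot products are cosine similarities.
    img = img_emb / np.linalg.norm(img_emb, axis=1, keepdims=True)
    txt = txt_emb / np.linalg.norm(txt_emb, axis=1, keepdims=True)
    logits = logit_scale * img @ txt.T               # (N, N); matched pairs on the diagonal
    loss_img_to_txt = -np.mean(np.diag(log_softmax_rows(logits)))    # pick the right caption
    loss_txt_to_img = -np.mean(np.diag(log_softmax_rows(logits.T)))  # pick the right image
    return float(0.5 * (loss_img_to_txt + loss_txt_to_img))

pairs = np.eye(4)                                   # four orthogonal, perfectly matched pairs
print(clip_style_loss(pairs, pairs))                # approximately 0
print(clip_style_loss(pairs, pairs[::-1]))          # mismatched pairs: approximately 100
```

Minimizing this loss pulls each image toward its own description and away from the other descriptions in the batch, which is what later makes zero-shot classification with text prompts possible.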

Based on CLIP, several zero-shot models have already been developed, including medical models such as SDA-CLIP, a domain adaptation method for surgical tasks based on CLIP. It enables the recognition of surgical actions across different domains, such as virtual reality and real-life operations [7]. Another study utilizing this model was SleepCLIP, which demonstrated that CLIP could be effectively adapted to sleep staging tasks, showing potential for improving the diagnosis of sleep disorders [8].

BiomedCLIP, whose full name is BiomedCLIP-PubMedBERT_256-vit_base_patch16_224, is a vision–language foundation model that combines natural language processing with medical image analysis to perform complex tasks. It was trained on PMC-15M, a dataset of 15 million image–description pairs from scientific articles available in PubMed Central. As a result, BiomedCLIP can interpret both medical text and images (Figure 1).

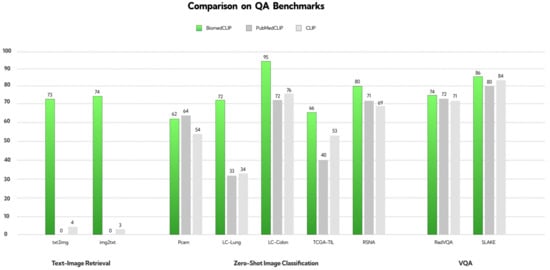

Figure 1.

Performance comparison of the BiomedCLIP, PubMedCLIP, and CLIP models across various benchmarks, reconstructed from the figure published by the model’s creators [9]. BiomedCLIP outperforms the other models on most tasks, particularly cross-modal (text–image) retrieval, zero-shot image classification, and visual question answering (VQA).

BiomedCLIP stands out due to its architecture, which combines the capabilities of PubMedBERT, a model dedicated to text analysis, with a Vision Transformer optimized for working with biomedical data. This combination allows it to achieve outstanding results across many benchmark datasets, making it one of the top models in its category.

The applications of BiomedCLIP are highly versatile. The model can be used for cross-modal retrieval (information search based on images or text), medical image classification, and answering questions related to those images. With its advanced technology, BiomedCLIP is becoming a powerful tool supporting diagnostics, scientific research, and medical education, opening new possibilities in the analysis and understanding of complex biomedical information [9].

Although BiomedCLIP demonstrates superior performance across most evaluated benchmarks, Figure 1 highlights an exception. In the PCam benchmark, PubMedCLIP outperformed BiomedCLIP, showcasing higher accuracy in this specific task. This observation suggests that while BiomedCLIP is a robust and versatile model, certain domain-specific datasets may still favor more specialized approaches like PubMedCLIP. This underscores the need for continued research to optimize vision–language models for diverse biomedical tasks.

A previous study [10] applied the base (non-medical) CLIP model to posturographic X-ray images in the anteroposterior projection showing severe single-curve scoliosis. Because CLIP is available in nine variants with different architectures, all nine were tested. That study partially confirmed its initial research hypothesis: only seven of the nine CLIP models effectively recognized scoliosis on the radiographic images. The second hypothesis was not confirmed, as only four of the nine models correctly answered questions regarding single-curve scoliosis. Assumptions regarding accurate AI estimation of curvature degrees were also not confirmed, as none of the selected models achieved high sensitivity in assessing Cobb angles [10].

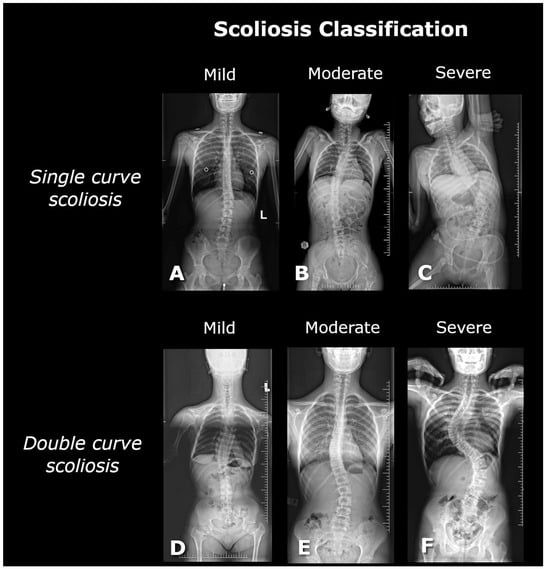

Scoliosis is a complex, three-dimensional deformity of the spinal column, identified by a curvature greater than 10 degrees, as determined by the Cobb method [11]. The diagnosis of scoliosis typically involves a comprehensive radiological posturographic examination of the entire spine in both anterior–posterior (AP) and lateral views, which facilitates the assessment of curvature, vertebral rotation, spinal dynamics, and trunk deformity, and aids in planning appropriate therapy [3,4]. Scoliosis is classified based on factors such as the patient’s age, degree of curvature, underlying cause, location, and number of curves present. In this study, scoliosis is categorized into single-curve (C-shaped) and double-curve (S-shaped) forms [5]. The severity of scoliosis, as indicated by the Cobb angle, is classified into mild (10–20 degrees), moderate (20–40 degrees), and severe (greater than 40 degrees) [5,12] (Figure 2).

Figure 2.

The figure shows posturographic X-ray images of single-curve scolioses at different stages: (A) mild (17° Cobb, L3/L4–Th12/Th11), (B) moderate (29° Cobb, L3/L4–Th7/Th6), (C) severe (56° Cobb, L4/L5–Th10/Th9), and double-curve scolioses: (D) mild (17° Cobb, L1/L2–Th9/Th8; 18° Cobb, Th8/Th7–Th2/Th1), (E) moderate (26° Cobb, L4/L5–L1/Th12; 25° Cobb, L1/Th12–Th8/Th7), and (F) severe (75° Cobb, L4/L5–Th12/Th11; 78° Cobb, Th11/Th10–Th5/Th4).

This study aimed to assess how a dedicated medical AI model, BiomedCLIP, performs in analyzing posturographic X-ray images that display both single-curve and double-curve scoliosis. To investigate this, three sets of questions were developed, partially building on prior research. The questions addressed whether the model detects the presence of scoliosis, correctly identifies its single-curve or double-curve form, and evaluates the severity of scoliosis, categorized as mild, moderate, or severe. Questions regarding Cobb angles were deliberately excluded, as earlier research [10] indicated that the CLIP model could not determine these angles accurately and often performed no better than chance. The following hypotheses (H) were formulated:

H1:

BiomedCLIP will detect the presence of scoliosis on all posturographic images with high sensitivity.

H2:

BiomedCLIP will correctly identify the type of scoliosis on the provided images with high sensitivity.

H3:

BiomedCLIP will accurately categorize the severity of scoliosis on the provided images.

2. Materials and Methods

The study was conducted as part of the scientific research activities at the Polish Mother’s Memorial Hospital Research Institute in Poland. The bioethics committee determined that no formal consent was required for the analysis of the obtained radiological images. Anteroposterior posturographic images of patients aged 2 to 17 years diagnosed with scoliosis were collected between January 2021 and July 2024. Because X-ray examinations require a medical indication, posturographic studies of patients without spinal deformities were not included; only images of patients with a prior diagnosis of scoliosis were used in this study. All images were anonymized, and consent was obtained from the legal guardians of the patients for the use of the X-ray images in this research. Inclusion criteria encompassed technically accurate images displaying either single-curve or double-curve scoliosis. Image quality assessment included verifying image readability and ensuring there were no errors in collimation or cropping. Exclusion criteria included scoliosis with co-occurring pathologies, such as spina bifida or vertebral hump, images that did not cover the entire spine, and scoliosis after surgical correction with visible implants. From the X-ray image database, 262 posturographic images with visible scoliosis were included in the study. All examinations were performed using the same equipment. The X-ray images were not modified in any way and were saved in JPEG format with a resolution of 2663 × 1277 px.

2.1. Manual Measurements

Analysis of the posturographic X-ray images was conducted independently by two neurosurgery specialists. RadiAnt software (version 2023.1) was used to evaluate the posturographic images.

2.2. BiomedCLIP Methodology

Model Selection and Fine-Tuning

In this study, the BiomedCLIP model was employed. This model is a fine-tuned version of the CLIP (Contrastive Language–Image Pretraining) model, specifically adapted for classifying X-ray images of children’s spines into three scoliosis categories: mild, moderate, and severe. BiomedCLIP was selected due to its superior zero-shot classification performance and proven ability to interpret complex biomedical imagery, surpassing other multimodal models such as DALL-E, MedCLIP, and PubMedCLIP. The open-source availability of BiomedCLIP further facilitated its use in this research.

The model was fine-tuned using the PMC-15M dataset, which includes 15 million biomedical image–text pairs derived from 4.4 million scientific articles. This broad and diverse dataset helped adapt the model to the specific demands of pediatric spinal imagery and enhanced its ability to interpret medical images.

Hyperparameters

The fine-tuning of BiomedCLIP followed the same hyperparameters as those used in training the original CLIP ViT-B/16 model; they are listed in Table 1.

Table 1.

Hyperparameters used for fine-tuning BiomedCLIP, following the same setup as the original CLIP ViT-B/16 model.

Hardware and Software Configuration

All computational tasks were carried out on two NVIDIA L40S GPUs, supported by 16 vCPUs and 124 GB of RAM, with 96 GB of VRAM on the RunPod platform. The software environment utilized a Docker image (pytorch:2.1.0-py3.10-cuda11.8.0-devel-ubuntu22.04) containing the necessary CUDA and PyTorch modules to support model training and evaluation.

Model Architecture

The BiomedCLIP model uses the Vision Transformer (ViT-B/16) architecture for its vision encoder, which divides input images into 16 × 16-pixel patches. This architecture was chosen for its ability to capture long-range dependencies in medical images. Each patch is treated as a token and processed using a self-attention mechanism. The model supports images of 224 × 224 pixels, although experiments with a higher resolution (384 × 384) suggested improved performance for certain tasks, at the cost of increased training time.

The ViT-B/16 model features approximately 86 million trainable parameters. To handle the specific demands of medical text, the text encoder was adjusted from the standard 77 tokens to a context size of 256 tokens, accommodating longer and more detailed biomedical descriptions.
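The patch arithmetic described above can be checked with a short NumPy sketch. It is an illustration only, not BiomedCLIP’s own code (the real encoder additionally applies a learned linear projection to each patch): a 224 × 224 input split into 16 × 16 patches yields (224/16)² = 196 patches, each flattening to 3 × 16 × 16 = 768 values for a three-channel image. The function name `patchify` is hypothetical.

```python
import numpy as np

def patchify(image: np.ndarray, patch: int = 16) -> np.ndarray:
    """Split a (C, H, W) image into non-overlapping patch x patch tiles.

    Returns an array of shape (num_patches, C * patch * patch), with patches
    ordered row by row: the token sequence a ViT then embeds.
    """
    c, h, w = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be multiples of the patch size")
    gh, gw = h // patch, w // patch                      # size of the patch grid
    tiles = image.reshape(c, gh, patch, gw, patch)       # split both spatial axes
    tiles = tiles.transpose(1, 3, 0, 2, 4)               # (gh, gw, C, patch, patch)
    return tiles.reshape(gh * gw, c * patch * patch)

x = np.random.rand(3, 224, 224).astype(np.float32)       # one preprocessed image
tokens = patchify(x)
print(tokens.shape)                                       # (196, 768)
```

In the standard ViT-B/16, each flattened patch is then projected to a 768-dimensional embedding and a learnable class token is prepended, giving 197 tokens per 224 × 224 image.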

Training Setup

Training was performed on large-scale hardware configurations using 16 NVIDIA A100 or V100 GPUs. To optimize memory usage and computational efficiency, techniques such as gradient checkpointing and automatic mixed precision were employed. The fine-tuning process was carried out for 32 epochs, with a batch size of 32,768, and the Adam optimizer was used with weight decay and a learning rate of 4 × 10⁻⁴.

Data Handling and Evaluation

The evaluation was performed using a database of anonymized X-ray images, enabling iterative assessments of the model’s classification performance across various stages of scoliosis. For this task, descriptive text labels were prepared to represent the classification of each category:

- First Category:
    - a. “This is an image of scoliosis”.
    - b. “This is not an image of scoliosis”.
- Second Category:
    - a. “This is an image of single curve scoliosis”.
    - b. “This is an image of double curve scoliosis”.
- Third Category:
    - a. “This is an image of mild scoliosis”.
    - b. “This is an image of moderate scoliosis”.
    - c. “This is an image of severe scoliosis”.

Each X-ray image was preprocessed through normalization before being input into the model. The model then computed the probability of the image belonging to each scoliosis category, with a confidence score ranging from 0 to 1. This score was used as a quantitative measure of the model’s certainty in its predictions.
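The step from text prompts to a 0–1 confidence score can be sketched as CLIP-style zero-shot scoring: the image embedding is compared with the embedding of each prompt in a category by cosine similarity, and a softmax over those similarities gives probabilities that sum to one within the category. The sketch below assumes the embeddings have already been produced by the model’s image and text encoders (for example, through the open_clip library); the random vectors, the 512-dimensional size, and the fixed `logit_scale` of 100 are placeholders, not values from this study.

```python
import numpy as np

def zero_shot_probs(image_emb: np.ndarray, prompt_embs: np.ndarray, logit_scale: float = 100.0) -> np.ndarray:
    """Softmax over scaled cosine similarities between one image and K prompts."""
    img = image_emb / np.linalg.norm(image_emb)
    txt = prompt_embs / np.linalg.norm(prompt_embs, axis=1, keepdims=True)
    logits = logit_scale * (txt @ img)            # one logit per prompt
    logits = logits - logits.max()                # numerical stability
    probs = np.exp(logits)
    return probs / probs.sum()                    # probabilities sum to 1 within the category

severity_prompts = [
    "This is an image of mild scoliosis.",
    "This is an image of moderate scoliosis.",
    "This is an image of severe scoliosis.",
]
rng = np.random.default_rng(0)
prompt_embs = rng.normal(size=(len(severity_prompts), 512))   # placeholder text embeddings
image_emb = prompt_embs[2] + 0.1 * rng.normal(size=512)       # placeholder image embedding
for prompt, p in zip(severity_prompts, zero_shot_probs(image_emb, prompt_embs)):
    print(f"{p:.3f}  {prompt}")
```

Because the softmax is taken within each category, the scores for the detection, type, and severity questions are computed independently of one another.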

The evaluation emphasized accuracy in image classification and the ability to generalize to unseen medical image data, testing both the robustness and the real-world applicability of BiomedCLIP in clinical diagnostics.

Detection and Classification Framework

Detection of Scoliosis

The detection process focused on identifying the presence of spinal deformities in posturographic X-ray images. The BiomedCLIP model was utilized to automatically determine whether a given image depicted scoliosis. The steps involved in detection included:

- Approach: Detection was conducted using descriptive text labels, assigning each image to one of two categories: “This is an image of scoliosis” or “This is not an image of scoliosis”.
- Parameters:
    - The model’s vision encoder, based on the ViT-B/16 architecture, divided the input images into 16 × 16-pixel patches for analysis.
    - Images were normalized and resized to 224 × 224 pixels prior to being fed into the model.
    - The text encoder was extended to handle up to 256 tokens, accommodating detailed biomedical descriptions.
- Outcome: The model computed the probability of the image belonging to the scoliosis category, with confidence scores ranging from 0 to 1, providing a quantitative measure of detection.
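The resizing and normalization listed under Parameters can be sketched in NumPy as follows. This is a simplified stand-in, not the model’s own preprocessing pipeline: it resizes directly to 224 × 224 with nearest-neighbour sampling (ignoring aspect ratio), replicates a single-channel radiograph into three channels, and assumes the per-channel mean and standard deviation published with the original OpenAI CLIP. The values actually used by BiomedCLIP should be taken from the preprocessing configuration distributed with the checkpoint, and the helper names are hypothetical.

```python
import numpy as np

# Assumed per-channel statistics (those published with the original OpenAI CLIP);
# check them against the checkpoint's own preprocessing configuration.
CLIP_MEAN = np.array([0.48145466, 0.4578275, 0.40821073])
CLIP_STD = np.array([0.26862954, 0.26130258, 0.27577711])

def resize_nearest(img: np.ndarray, size: int = 224) -> np.ndarray:
    """Nearest-neighbour resize of an (H, W, C) array to (size, size, C)."""
    h, w = img.shape[:2]
    rows = (np.arange(size) * h) // size         # source row for each output row
    cols = (np.arange(size) * w) // size         # source column for each output column
    return img[rows][:, cols]

def preprocess(img_uint8: np.ndarray, size: int = 224) -> np.ndarray:
    """Resize, scale to [0, 1], normalize per channel; return a (C, size, size) array."""
    if img_uint8.ndim == 2:                      # grayscale radiograph -> 3 identical channels
        img_uint8 = np.repeat(img_uint8[..., None], 3, axis=-1)
    x = resize_nearest(img_uint8, size).astype(np.float64) / 255.0
    x = (x - CLIP_MEAN) / CLIP_STD               # broadcasts over the channel axis
    return x.transpose(2, 0, 1)                  # channels-first, as vision encoders expect

# Synthetic 8-bit image with the study's pixel dimensions (orientation assumed: H x W).
xray = np.random.default_rng(0).integers(0, 256, size=(2663, 1277)).astype(np.uint8)
print(preprocess(xray).shape)                    # (3, 224, 224)
```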

Classification of Scoliosis

Once scoliosis was detected, the images were further classified into two additional dimensions: the type of scoliosis (single-curve or double-curve) and the severity of the condition (mild, moderate, or severe).

1. Classification of Scoliosis Type:
    - Approach: Images were categorized based on their curvature type:
        - “This is an image of single-curve scoliosis”.
        - “This is an image of double-curve scoliosis”.
    - Parameters:
        - The same preprocessing steps as in the detection process were applied, with images resized to 224 × 224 pixels and normalized.
        - Textual prompts tailored to the classification task were used to improve model specificity.
    - Outcome: The model assigned a probability to each type category.
2. Classification of Scoliosis Severity:
    - Approach: Severity classification divided images into three categories:
        - Mild scoliosis (“This is an image of mild scoliosis”).
        - Moderate scoliosis (“This is an image of moderate scoliosis”).
        - Severe scoliosis (“This is an image of severe scoliosis”).
    - Parameters:
        - Severity levels were defined based on the Cobb angle measurements:
            - Mild: <20°.
            - Moderate: 20°–40°.
            - Severe: >40°.
        - The model’s vision encoder leveraged detailed geometric features of the X-ray images to differentiate between severity levels.
    - Outcome: Probabilities were calculated for each severity category and used to classify the scoliosis stage.
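The Cobb-angle thresholds used for the reference severity labels can be written as a small labelling function. Two details are assumptions not fixed by the text: an angle of exactly 20° or 40° is treated as moderate (following the 20°–40° range), and for double-curve scoliosis the larger of the two curves is taken to determine the stage. Angles of 10° or less fall below the diagnostic threshold for scoliosis. The helper names are hypothetical; applied to the examples in Figure 2, the sketch reproduces the stages given in that caption.

```python
def cobb_stage(angle_deg: float) -> str:
    """Map a Cobb angle in degrees to the severity stage used in this study."""
    if angle_deg <= 10:
        return "below scoliosis threshold"   # scoliosis requires a curve > 10 degrees
    if angle_deg < 20:
        return "mild"
    if angle_deg <= 40:                        # assumption: 20 and 40 degrees count as moderate
        return "moderate"
    return "severe"

def stage_from_curves(angles_deg) -> str:
    """Assumption: the largest curve determines the stage of a multi-curve deformity."""
    return cobb_stage(max(angles_deg))

# Cobb angles of the single-curve (A-C) and double-curve (D-F) examples in Figure 2.
figure2_examples = {"A": [17], "B": [29], "C": [56], "D": [17, 18], "E": [26, 25], "F": [75, 78]}
for panel, angles in figure2_examples.items():
    print(panel, angles, stage_from_curves(angles))
```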

Integration of Detection and Classification

Both detection and classification were performed using the BiomedCLIP model, fine-tuned to handle pediatric spinal imagery. Although these processes shared a common model architecture, they were treated as distinct stages in the analysis pipeline. The detection stage established the presence of scoliosis, while the classification stages refined the analysis by identifying the specific type and severity.

By addressing detection and classification as separate processes, this methodology ensured a clear, structured workflow and optimized the model’s performance for each task. The parameters and technical solutions implemented for both stages are detailed in the corresponding sections of the methodology.

2.3. Statistical Analysis

In the present analysis, the significance level was set at α = 0.05. Descriptive statistics were employed to summarize the data. For continuous variables, the median (Mdn) served as the central tendency measure due to its robustness against outliers and distributional skewness. Furthermore, the first (Q1) and third (Q3) quartiles were reported to delineate the central 50% range of the data. Additionally, the inclusion of minimum (Min) and maximum (Max) values offers a complete view of the data’s range, highlighting the extremes. Categorical variables were quantified by counts (n) and the proportion of each category. The relationship between two numerical variables was investigated using Pearson correlation. The 95% confidence intervals (CI 95%) and p-values were computed through the asymptotic approximation of the t-test statistic.
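For the correlation analyses, the test statistic follows from r and the sample size n as t = r√(n − 2)/√(1 − r²), with n − 2 degrees of freedom. The sketch below computes it together with a 95% confidence interval. It uses the Fisher z-transformation for the interval, which is a common default; this is an assumption about the exact method, since the text only mentions an asymptotic approximation. Applied to the rounded overall values reported in Section 3.1 (r = 0.37, n = 262), it gives an interval of about 0.26–0.47, matching the reported one, and t ≈ 6.4 (the reported t = 6.39 presumably reflects the unrounded r).

```python
import math

def pearson_t_and_ci(r: float, n: int, z_crit: float = 1.96):
    """t statistic (df = n - 2) and Fisher-z confidence interval for a Pearson r."""
    t = r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)
    z = math.atanh(r)                        # Fisher z-transformation
    se = 1.0 / math.sqrt(n - 3)              # standard error on the z scale
    lo, hi = math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)
    return t, (lo, hi)

t, (lo, hi) = pearson_t_and_ci(0.37, 262)    # overall sample, Section 3.1
print(f"t = {t:.2f}, 95% CI: {lo:.2f} to {hi:.2f}")
```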

The predictive performance of the BiomedCLIP model was evaluated by determining the optimal cutpoints for dichotomous outcomes (positive class: occurrence of the event; negative class: absence of the event) using a method that maximizes the sum of sensitivity and specificity (equivalent to maximizing Youden’s J statistic). The model’s discriminative ability was further assessed by estimating accuracy, sensitivity, specificity, and the Area Under the Curve (AUC), with results visualized as Receiver Operating Characteristic (ROC) curves. To determine whether the model predicted better than chance, 95% confidence intervals for the AUC were estimated using the DeLong method [13]. High sensitivity was defined as a value >90%, reflecting optimal performance for early detection and classification tasks.
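The cutpoint search described above can be sketched in plain NumPy. The analysis itself used the R package cutpointr; the function below is a simplified, hypothetical equivalent that tries every observed probability as a threshold, predicts the positive class when the model’s probability is at or above it, and keeps the threshold with the largest sensitivity + specificity. It assumes both classes are present in the labels.

```python
import numpy as np

def youden_cutpoint(probs, labels):
    """Threshold maximizing sensitivity + specificity (Youden's J).

    A case is predicted positive when its probability is >= the threshold.
    Returns (threshold, sensitivity, specificity, accuracy).
    """
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    best = None
    for c in np.unique(probs):                 # every observed value is a candidate
        pred = probs >= c
        tp = np.sum(pred & labels)
        fn = np.sum(~pred & labels)
        tn = np.sum(~pred & ~labels)
        fp = np.sum(pred & ~labels)
        sens = tp / (tp + fn)
        spec = tn / (tn + fp)
        acc = (tp + tn) / labels.size
        if best is None or sens + spec > best[1] + best[2]:
            best = (float(c), float(sens), float(spec), float(acc))
    return best

# Toy, perfectly separable example (hypothetical data).
print(youden_cutpoint([0.1, 0.2, 0.3, 0.6, 0.7, 0.8], [0, 0, 0, 1, 1, 1]))
```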

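The performance metrics, the AUC, and its confidence interval were calculated as follows:

$$\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad \text{Specificity} = \frac{TN}{TN + FP}, \qquad \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP is the number of true positives, FN the number of false negatives, TN the number of true negatives, and FP the number of false positives.

$$\text{AUC} = \frac{1}{n_1 n_2} \sum_{i=1}^{n_1} \sum_{j=1}^{n_2} \psi(d_{ij}), \qquad \psi(d) = \begin{cases} 1, & d > 0 \\ \tfrac{1}{2}, & d = 0 \\ 0, & d < 0 \end{cases}$$

where $d_{ij} = X_i - Y_j$ is the difference in scores between the $i$-th positive and the $j$-th negative instance.

The variance of the AUC is estimated following DeLong et al. [13]:

$$V_{10}(X_i) = \frac{1}{n_2} \sum_{j=1}^{n_2} \psi(d_{ij}), \qquad V_{01}(Y_j) = \frac{1}{n_1} \sum_{i=1}^{n_1} \psi(d_{ij})$$

$$\operatorname{Var}(\text{AUC}) = \frac{1}{n_1 (n_1 - 1)} \sum_{i=1}^{n_1} \left( V_{10}(X_i) - \text{AUC} \right)^2 + \frac{1}{n_2 (n_2 - 1)} \sum_{j=1}^{n_2} \left( V_{01}(Y_j) - \text{AUC} \right)^2$$

where $\psi(d_{ij})$ is the value assigned to each pair of observations; $n_1$ is the number of positive instances, $n_2$ the number of negative instances, $i$ the index of positive instances, and $j$ the index of negative instances.

$$\text{CI}_{95\%} = \text{AUC} \pm z \cdot SE, \qquad SE = \sqrt{\operatorname{Var}(\text{AUC})}$$

where CI is the confidence interval for the AUC, z is the critical value from the standard normal distribution (z = 1.96 for 95% confidence), and SE is the standard error.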
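The AUC and its DeLong-based 95% confidence interval can likewise be sketched in NumPy. The sketch scores every positive–negative pair with ψ (1 if the positive case has the higher score, 1/2 for a tie, 0 otherwise) and derives the per-observation structural components from those pair scores. It is an illustrative re-implementation for a single ROC curve, not the pROC code used in the analysis, which may handle edge cases differently.

```python
import numpy as np

def auc_delong(pos_scores, neg_scores, z_crit: float = 1.96):
    """AUC (Mann-Whitney form) with a DeLong standard error and normal-approximation CI."""
    x = np.asarray(pos_scores, dtype=float)      # scores of positive instances (n1)
    y = np.asarray(neg_scores, dtype=float)      # scores of negative instances (n2)
    n1, n2 = x.size, y.size
    d = x[:, None] - y[None, :]                  # d_ij = X_i - Y_j for every pair
    psi = (d > 0) + 0.5 * (d == 0)               # 1 if X_i > Y_j, 1/2 if tied, 0 otherwise
    auc = psi.mean()                             # average over all n1 * n2 pairs
    v10 = psi.mean(axis=1)                       # structural component of each positive
    v01 = psi.mean(axis=0)                       # structural component of each negative
    var = v10.var(ddof=1) / n1 + v01.var(ddof=1) / n2
    se = np.sqrt(var)
    return float(auc), float(se), (float(auc - z_crit * se), float(auc + z_crit * se))

# Toy scores (hypothetical): identical distributions give AUC = 0.5.
auc, se, ci = auc_delong([1, 2, 3], [1, 2, 3])
print(auc, round(se, 3), ci)
```

A confidence interval whose lower bound lies above 0.5 indicates discrimination better than chance; an interval that contains 0.5 does not.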

Characteristics of the applied statistical tool and external packages

Analyses were conducted using the R statistical language (version 4.3.3; [14]) on Windows 11 Pro 64-bit (build 22631), using the packages see (version 0.9.0; [15]), report (version 0.5.8; [16]), correlation (version 0.8.5; [17,18]), patchwork (version 1.2.0; [19]), pROC (version 1.18.5; [20]), gtsummary (version 1.7.2; [21]), cutpointr (version 1.1.2; [22]), ggplot2 (version 3.5.0; [23]), readxl (version 1.4.3; [24]), dplyr (version 1.1.4; [25]), and psych (version 2.4.6.26; [26]).

Characteristics of the observed data

An analysis was conducted on 262 radiographic images, all showing scoliosis. These images were assessed by two independent neurosurgical experts, who evaluated the Cobb angles, scoliosis type, and stage. The results of these assessments are presented in Table 2 and are treated as the observed data in further analyses.

Table 2.

Distribution of Cobb angle measurements, types, and stages of scoliosis in a study sample of radiographic images.

The data show that the median Cobb angle was 42.0 degrees, with observed values ranging from 12 to 115 degrees. The first and third quartiles were 25.0 and 66.8 degrees, respectively, reflecting substantial variability in curvature severity across the studied sample. In terms of scoliosis type, the majority of cases were classified as single-curve (56.87%), with double-curve scoliosis observed in 43.13% of the images. Regarding the stage of scoliosis, severe scoliosis was the most common, accounting for 51.15% of cases, followed by moderate (32.44%) and mild (16.41%) scoliosis.
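Because only percentages are given for the categorical variables, the underlying counts can be recovered from N = 262 as a quick arithmetic check. The stage counts obtained this way agree with the subgroup sizes reported in Section 3.1 (n = 43, 85, and 134), and each group of categories sums to 262.

```python
N = 262
reported_pct = {
    "single-curve": 56.87, "double-curve": 43.13,
    "mild": 16.41, "moderate": 32.44, "severe": 51.15,
}
# Nearest integer count consistent with each reported percentage.
counts = {k: round(p / 100 * N) for k, p in reported_pct.items()}
for k, n in counts.items():
    # Recompute the percentage from the integer count to confirm the rounding.
    print(f"{k:13s} n = {n:3d}  ({100 * n / N:.2f}%)")
```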

3. Results

3.1. Analyzing the Correlation Between Observed Cobb Angles and Predictive Probabilities of Scoliosis Using the BiomedCLIP Model

The analysis utilizing the BiomedCLIP model to predict the likelihood of scoliosis from radiographic images yielded probabilities ranging from 0.097 to 0.818, demonstrating a considerable variation in the model’s certainty across the spectrum of scoliosis severities and types. A correlation analysis was conducted to evaluate the relationship between the observed Cobb angles and the predictive probabilities generated by the model.

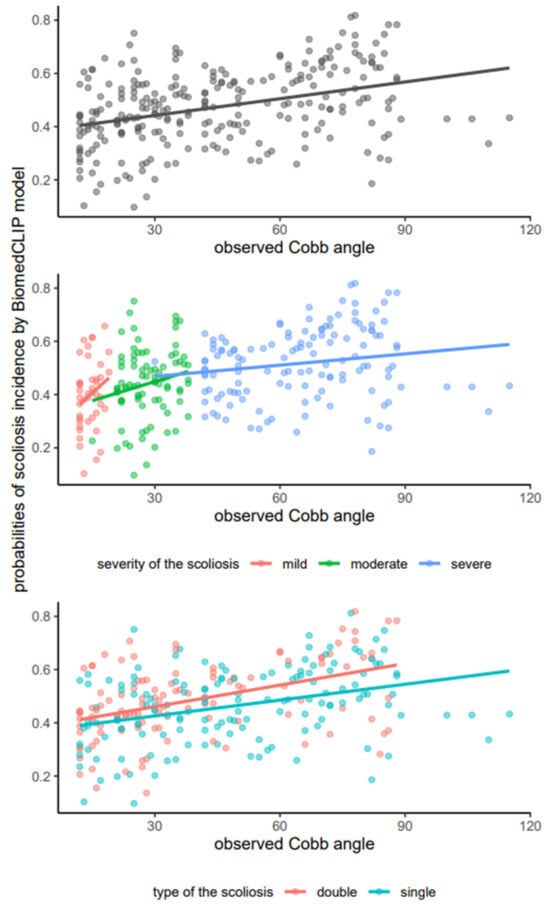

Based on the results in Table 3, the overall sample of 262 images showed a moderate correlation (r = 0.37; CI 95%: 0.26–0.47) that was statistically significant (t = 6.39, p < 0.001). This suggests that, in general, the model’s predictions align moderately well with the clinically observed Cobb angles across all cases.

However, when the data were stratified by the stage of scoliosis, the strength of correlation varied. In the mild stage group (n = 43), the correlation was weaker (r = 0.24) and not statistically significant (p = 0.118), indicating a lower predictive accuracy of the model in this subgroup. The moderate stage group (n = 85) also showed a weaker, non-significant correlation (r = 0.19, p = 0.077). The severe stage group (n = 134), however, displayed a slight yet statistically significant correlation (r = 0.18, p = 0.041), suggesting some level of predictive reliability in more advanced stages of scoliosis.

Within scoliosis types, the correlation between the observed Cobb angle and the model’s predicted probability of scoliosis was stronger than in the stage-based groups and statistically significant for both single-curve (r = 0.36, p < 0.001) and double-curve (r = 0.44, p < 0.001) scoliosis. This indicates that, within each curve type, the model’s probability tracks curvature magnitude more closely than within a single severity stage, where the range of Cobb angles is restricted by definition; it does not by itself show that the model distinguishes between the two types.

The results of the correlation analyses were depicted through scatter plots with linear regression lines, as illustrated in Figure 3.

Figure 3.

Scatterplots illustrating the relationship between observed Cobb angles and predicted probabilities of scoliosis incidence as determined by the BiomedCLIP model, with fitted lines for the overall sample (upper), scoliosis stage (middle), and scoliosis type (lower).

The varying degrees of correlation across different stages and types suggest that the model’s predictive capabilities may benefit from further refinement, particularly for early-stage scoliosis detection where its current predictive certainty is lower (Table 3).

Table 3.

Correlation analysis between observed Cobb angles and predictive probabilities of scoliosis using the BiomedCLIP model, stratified by scoliosis stage and type.

3.2. Estimating Optimal Cutpoints and Performance Metrics for Predictions of Scoliosis Severity and Types by BiomedCLIP Model

Analogous to the findings presented in Section 3.1, the results of the BiomedCLIP model were quantified on a numeric scale ranging from 0 to 1, representing the probability of event incidence. Descriptive statistics detailing the probabilities for events studied independently, including the occurrence of mild, moderate, and severe scoliosis, as well as single and double scoliosis types, are displayed in Table 4.

Table 4.

Distribution of the probabilities of the specified event incidence by BiomedCLIP model, N = 262.

Based on the results in Table 4, the BiomedCLIP model demonstrates differing predictive capabilities across severity levels and types of scoliosis. The higher median probabilities and narrower interquartile ranges for single-curve scoliosis and moderate severity suggest that the model is more certain and consistent in these predictions. In contrast, severe scoliosis and double-curve scoliosis exhibit broader ranges of probabilities, indicating higher variability and less certainty in these predictions. This variability could stem from the inherent complexity and diverse manifestations of severe and double-curve scoliosis, which may challenge the model’s predictive accuracy.

The data presented in Table 5 provide a comprehensive analysis of the BiomedCLIP model’s capability to predict scoliosis severity stages and types using estimated optimal cutpoints and associated performance metrics.

Table 5.

Estimated optimal cutpoints and corresponding performance metrics for the prediction of scoliosis severity stages and types via the BiomedCLIP model.

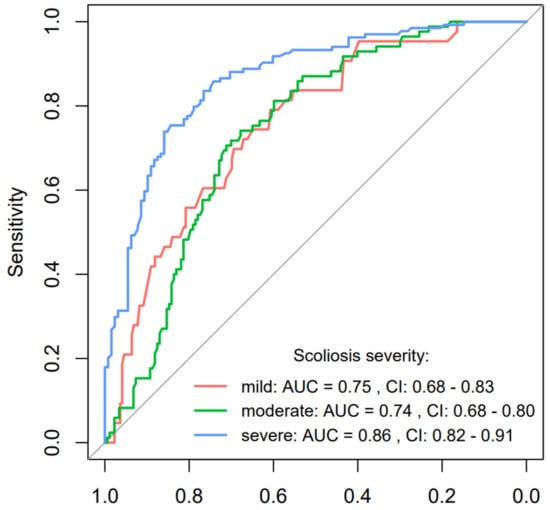

For the scoliosis severity forms, the model demonstrates varying degrees of effectiveness. In predicting mild scoliosis, the model achieves a moderate accuracy = 0.64, with sensitivity = 0.79 and specificity = 0.61, supported by an AUC = 0.75, suggesting reasonable discriminative ability but limited specificity. Prediction of moderate scoliosis shows a slightly higher accuracy = 0.70, with sensitivity = 0.74 and specificity = 0.68, indicating a balanced ability to identify true positives and true negatives, and an AUC = 0.74. Predictions for severe scoliosis deliver the highest accuracy = 0.80, with sensitivity = 0.84 and specificity = 0.77, reflected in the highest AUC = 0.87 in the group. This indicates a strong predictive capability of the model in identifying severe cases, which is crucial for prioritizing medical interventions. This could be attributed to more pronounced radiographic features that are easier to detect and differentiate at severe stages. The performance of the model, as visualized through ROC curves for specific scoliosis severity stages, is detailed in Figure 4. The lower bound of the 95% confidence interval for each curve was clearly above 0.50, indicating that the model discriminates better than chance.

Figure 4.

ROC curve analysis for scoliosis severity forms prediction using the BiomedCLIP model with AUC and 95% confidence interval.

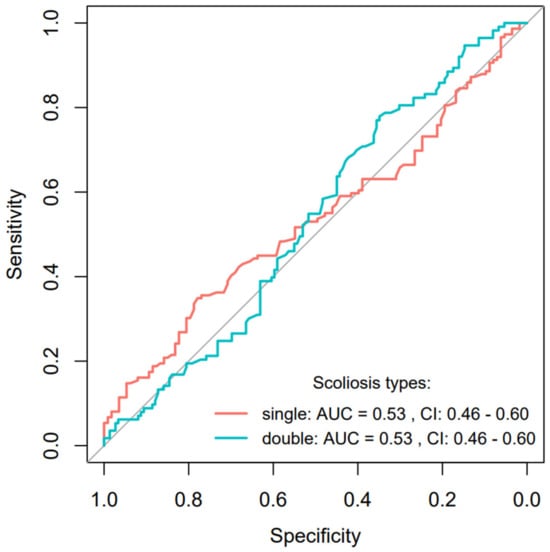

On the other hand, the performance metrics for scoliosis types reveal a different challenge. Prediction of single-curve scoliosis results in a lower accuracy = 0.53, with a notably low sensitivity = 0.35, although the specificity is higher at 0.78. This indicates considerable difficulty in correctly identifying cases with single-curve scoliosis, as many actual cases might go undetected. The AUC = 0.53 suggests that the model performs only slightly better than chance in this scenario. In contrast, predictions for double-curve scoliosis also show an accuracy = 0.53 but with a reversed profile of higher sensitivity = 0.78 and low specificity = 0.35, resulting in an AUC = 0.53. This suggests that while the model is better at detecting the presence of double-curve scoliosis, it also misclassifies many single-curve cases as double-curve. The ROC curves for the scoliosis types are shown in Figure 5. The 95% CI for both curves encompassed 0.50, indicating that the predictive capability was no better than random guessing.

Figure 5.

ROC curve analysis for scoliosis types prediction using the BiomedCLIP model with AUC and 95% confidence interval.

In conclusion, the BiomedCLIP model exhibits a robust predictive power for severe scoliosis stages, crucial for effective clinical prioritization and intervention. However, the challenges in predicting scoliosis types, particularly in distinguishing between single- and double-curve scoliosis with high accuracy and balanced sensitivity and specificity, highlight areas for potential model refinement and improvement.

4. Discussion

Our study found that the hypotheses regarding the effectiveness of the BiomedCLIP model in detecting scoliosis were not fully confirmed. Although the model performed best in recognizing severe scoliosis (sensitivity = 0.84, AUC = 0.87), its effectiveness in identifying mild cases and different curvature types was limited. Particularly for single-curve scoliosis, the model’s sensitivity (0.35) was much lower than expected.

4.1. Evaluation of BiomedCLIP Sensitivity in Scoliosis Detection Across All Images

The results of the BiomedCLIP model showed an overall correlation of r = 0.37 (p < 0.001) between Cobb angles and the probabilities predicted by the model, indicating moderate agreement with actual clinical findings. Despite its statistical significance, this result does not indicate that the model’s predictions are highly sensitive in all cases. When the analysis was stratified by scoliosis stage, the correlation in mild cases was low and not statistically significant (r = 0.24, p = 0.118), indicating limited effectiveness of the model in detecting mild scoliosis. Similarly, in the moderate stage the correlation was weak and not significant (r = 0.19, p = 0.077). In the severe stage the correlation was statistically significant but also weak (r = 0.18, p = 0.041). These findings suggest that the model struggles to predict scoliosis in mild and moderate stages, which challenges the assumption that it is highly sensitive in all situations. Hypothesis H1 was therefore not confirmed: BiomedCLIP does not achieve high sensitivity in predicting the presence of scoliosis across all posturographic images. The model shows moderate effectiveness in more advanced cases. Its performance is weaker for mild and moderate stages and for distinguishing scoliosis types, which emphasizes the need for further optimization of the model.
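Reported r and p values are related through a t statistic with n − 2 degrees of freedom. The sketch below computes Pearson’s r from raw pairs and tests H0: ρ = 0. It assumes the overall correlation was computed on all 262 images. It also replaces the exact t distribution with a normal approximation for the p-value, which is adequate only for samples in the hundreds. It is an illustration, not the R code used in the study.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def pearson_test(r, n):
    """t statistic for H0: rho = 0 and an approximate two-sided p-value.

    The exact test uses a t distribution with n - 2 degrees of freedom;
    the normal tail used here is an approximation suited to large n.
    """
    t = r * math.sqrt((n - 2) / (1 - r * r))
    p = math.erfc(abs(t) / math.sqrt(2))  # two-sided standard normal tail
    return t, p

# Assumed inputs: overall r = 0.37 computed on n = 262 images
t, p = pearson_test(0.37, 262)
print(f"t = {t:.2f}, approximate p = {p:.1e}")
```

The stratum-level p-values (for example, p = 0.118 for mild cases) also depend on each stratum’s sample size. That is why similar r values can differ in significance.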

The limitations in distinguishing scoliosis types highlight the need for improved training datasets and more advanced image processing techniques to enhance the model’s precision [27]. Studies suggest that integrating diverse imaging data could enhance AI performance in clinical environments [28]. Despite these challenges, ongoing advances in AI and imaging techniques may ultimately lead to more reliable diagnostic tools for scoliosis.

4.2. Performance of BiomedCLIP in Detecting Single-Curve and Double-Curve Scoliosis

The results of the BiomedCLIP model demonstrated limited effectiveness in predicting both single-curve and double-curve scoliosis. For single-curve scoliosis, the model achieved low accuracy (0.53) and sensitivity (0.35), indicating difficulty in detecting actual cases. Specificity was higher (0.78), but the AUC of 0.53 indicates performance only slightly better than random guessing. For double-curve scoliosis, the model showed the reverse pattern: higher sensitivity (0.78) but low specificity (0.35), resulting in numerous false positives. Accuracy remained at 0.53, and the AUC again indicated near-random performance (0.53). These consistent AUC values underscore the model’s difficulty in distinguishing between single-curve and double-curve scoliosis. Hypothesis H2 posited that BiomedCLIP would accurately identify scoliosis types (single-curve and double-curve) with high sensitivity. It was therefore not fully confirmed. The model frequently failed to detect single-curve cases despite its higher specificity in this category. This suggests that the subtle radiographic features of single-curve scoliosis present a greater challenge for the model, likely because the curvature is less visible in some mild or intermediate stages. The overlap of the two ROC curves near the middle of the sensitivity range reflects the complementary nature of the probabilities the model assigns to a feature with two categories (single-curve and double-curve scoliosis). This behavior suggests that the model treats the two scoliosis types as interdependent, mutually exclusive alternatives.

This indicates that the AI model likely assigns probabilities based on a shared feature set, with the probabilities for the two categories summing to 1. The complementary probabilities reflect a balancing act: as the likelihood assigned to one category increases, the prediction for the other decreases accordingly. Such complementarity is inherent to two-class probabilistic classification. The problem here is that the features the model relies on do not strongly distinguish between the two categories.
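The sum-to-one behavior can be illustrated with CLIP-style zero-shot classification. An image embedding is compared with the text embedding of each candidate prompt, and a softmax over the scaled cosine similarities turns them into probabilities. The sketch below is hypothetical throughout. It uses toy three-dimensional embeddings, made-up prompt names, and a logit scale of 100 (CLIP caps its learned scale at this value). It does not reproduce BiomedCLIP’s real embeddings or the prompts used in the study.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def zero_shot_probs(image_emb, text_embs, logit_scale=100.0):
    """Softmax over scaled image-text cosine similarities (CLIP-style zero-shot)."""
    logits = [logit_scale * cosine(image_emb, t) for t in text_embs]
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical embeddings for one radiograph and two candidate prompts
image = [0.20, 0.90, 0.40]
prompts = {
    "single-curve scoliosis": [0.25, 0.85, 0.45],
    "double-curve scoliosis": [0.30, 0.80, 0.50],
}
probs = zero_shot_probs(image, list(prompts.values()))
for name, p in zip(prompts, probs):
    print(f"{name}: {p:.3f}")
print("sum:", round(sum(probs), 6))
```

With exactly two prompts, P(double-curve) = 1 − P(single-curve). Any evidence the model finds for one scoliosis type therefore counts equally against the other.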

This complementary relationship in probability assessment is reflected in the nearly identical AUC values (0.53 for both single and double scoliosis) and the overlapping confidence intervals (CI: 0.46–0.60). These metrics suggest that the model’s performance in distinguishing between the two categories is limited and heavily influenced by shared characteristics of the feature space.
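The mirrored metrics in the results (sensitivity 0.35 vs. 0.78, specificity 0.78 vs. 0.35, identical AUCs) follow mechanically from this complementarity. In the sketch below, the double-curve score is defined as 1 minus the single-curve score and the labels are flipped. The AUC is unchanged, and at a threshold of 0.5 sensitivity and specificity simply trade places. All values are invented for illustration.

```python
def auc(scores, labels):
    """Mann-Whitney estimate of the ROC AUC (ties count as 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

def sens_spec(scores, labels, threshold=0.5):
    """Sensitivity and specificity when 'score > threshold' counts as positive."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s > threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s <= threshold)
    n_pos = sum(labels)
    return tp / n_pos, tn / (len(labels) - n_pos)

# Hypothetical P(single-curve) for ten radiographs and true type (1 = single-curve)
p_single = [0.62, 0.41, 0.35, 0.58, 0.44, 0.30, 0.66, 0.39, 0.47, 0.52]
is_single = [1, 1, 1, 0, 1, 0, 1, 0, 0, 0]

# Two-prompt softmax: P(double-curve) is the complement, and the labels flip
p_double = [1 - p for p in p_single]
is_double = [1 - y for y in is_single]

print("AUC single:", round(auc(p_single, is_single), 3))
print("AUC double:", round(auc(p_double, is_double), 3))
print("single sens/spec:", sens_spec(p_single, is_single))
print("double sens/spec:", sens_spec(p_double, is_double))
```

Because the two rankings are exact mirror images, the two ROC curves carry the same information. Reporting both mainly restates a single underlying discrimination ability.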

The model was more sensitive to double-curve scoliosis (0.78), but this came at the cost of reduced specificity (0.35): a notable number of non-double-curve cases were misclassified as positive. The AUC of 0.53 again indicates a lack of strong predictive capability. Taken together, low sensitivity for single-curve scoliosis and low specificity for double-curve scoliosis mean that the model frequently failed to identify single-curve cases and instead labeled many of them as double-curve. This difficulty likely stems from the subtle imaging features of single-curve scoliosis, which are harder for the model to detect. Although BiomedCLIP flags double-curve scoliosis more readily than single-curve scoliosis, its overall ability to distinguish scoliosis types remains limited. These findings undermine the hypothesis of high sensitivity in recognizing scoliosis types. They highlight the need for further optimization, for example by adding well-annotated training examples of each scoliosis type, particularly to improve sensitivity for single-curve scoliosis and specificity for double-curve scoliosis.

Detecting and classifying scoliosis using artificial intelligence (AI) models remains a significant challenge, especially in differentiating between curvature types. Studies indicate that, despite generally high accuracy, the sensitivity and specificity of AI models vary considerably depending on curvature type and grade. For example, a recent study reported that an AI system achieved high sensitivity (0.97) and specificity (0.88) in detecting scoliosis, particularly in mild and moderate grades, although certain cases showed Cobb angle discrepancies exceeding 5° [29]. This suggests that such cases may be more difficult for models to assess accurately. Models have also shown better sensitivity for double-curve scoliosis at the expense of more false alarms, leading to misclassification and reduced specificity [30].

These results underscore the need for further optimization of AI models to improve sensitivity in cases of single-curve scoliosis and increase specificity for double-curve scoliosis cases [31]. Techniques such as deep learning and automated ultrasound imaging are being studied to enhance diagnostic accuracy and reduce human error in measurements [31,32]. Although AI shows potential in detecting scoliosis, current limitations in sensitivity and specificity require further research and model optimization to achieve reliable clinical results.

4.3. Assessment of BiomedCLIP Accuracy in Classifying Scoliosis Severity

The results of the BiomedCLIP model in predicting scoliosis severity showed varying effectiveness depending on disease stage. For mild scoliosis, the model achieved moderate accuracy (0.64), with a sensitivity of 0.79 and a specificity of 0.61. The low specificity indicates a relatively high rate of false positives. For moderate scoliosis, accuracy improved slightly to 0.70, with sensitivity at 0.74 and specificity at 0.68, indicating a more balanced performance in identifying true cases and avoiding false positives. The best results were observed for severe scoliosis, where accuracy reached 0.80, sensitivity 0.84, and specificity 0.77, reflecting strong effectiveness in recognizing advanced cases.
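Accuracy, sensitivity, and specificity all derive from the same one-versus-rest confusion matrix. Accuracy equals a prevalence-weighted average of the other two: accuracy = prevalence × sensitivity + (1 − prevalence) × specificity. This helps explain why, for a stage that covers a minority of images, accuracy sits close to specificity, as with mild scoliosis (0.64 vs. 0.61). The sketch below uses hypothetical counts, not the study’s confusion matrices.

```python
from dataclasses import dataclass

@dataclass
class Confusion:
    tp: int  # stage present, model says present
    fn: int  # stage present, model says absent
    tn: int  # stage absent, model says absent
    fp: int  # stage absent, model says present

    @property
    def total(self) -> int:
        return self.tp + self.fn + self.tn + self.fp

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    @property
    def prevalence(self) -> float:
        return (self.tp + self.fn) / self.total

    @property
    def accuracy(self) -> float:
        return (self.tp + self.tn) / self.total

# Hypothetical one-vs-rest counts for a minority stage (not the study's data)
cm = Confusion(tp=18, fn=2, tn=50, fp=30)
weighted = cm.prevalence * cm.sensitivity + (1 - cm.prevalence) * cm.specificity
print(f"sens={cm.sensitivity:.2f} spec={cm.specificity:.2f} acc={cm.accuracy:.2f}")
print("accuracy equals weighted mean:", abs(cm.accuracy - weighted) < 1e-12)
```

Here sensitivity is high (0.90), but because only 20% of images are positive, accuracy (0.68) is pulled toward specificity (0.625).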

The AUC analysis corroborates these findings. For mild scoliosis, the AUC was 0.75, suggesting moderate discrimination ability, and for moderate scoliosis it was 0.74. For severe scoliosis, the AUC peaked at 0.87, indicating strong discrimination. The ROC curves further illustrate that the model consistently performed better than random guessing, particularly in severe cases. BiomedCLIP therefore performed markedly better in detecting severe scoliosis than in detecting mild and moderate stages. This stronger performance in advanced cases can likely be attributed to the more pronounced deformity visible on radiographs. The subtler changes in mild scoliosis are harder for the model to discern, especially when it must also maintain specificity. Hypothesis H3 proposed that BiomedCLIP would accurately categorize scoliosis severity into mild, moderate, and severe stages. It was only partially confirmed, because the model’s performance varied by stage. For severe scoliosis, an accuracy of 0.80, sensitivity of 0.84, specificity of 0.77, and AUC of 0.87 confirm the model’s robustness in detecting advanced cases, likely owing to the more pronounced radiographic markers in severe stages.
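Reported sensitivity and specificity depend on the probability threshold used to dichotomize the model’s output. A common choice is the threshold that maximizes Youden’s J = sensitivity + specificity − 1. The cutpointr package cited in the references supports this kind of metric-maximizing cutpoint search. Using this exact criterion here is an assumption, not necessarily the study’s procedure, and the scores below are hypothetical.

```python
def youden_cutpoint(scores, labels):
    """Threshold maximizing Youden's J, where 'score >= t' is called positive.

    Returns (J, threshold, sensitivity, specificity); ties keep the lowest threshold.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    best = None
    for t in sorted(set(scores)):  # each observed score is a candidate threshold
        tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= t)
        tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < t)
        sens, spec = tp / n_pos, tn / n_neg
        j = sens + spec - 1
        if best is None or j > best[0]:
            best = (j, t, sens, spec)
    return best

# Hypothetical P(severe) scores and true labels (1 = severe scoliosis)
scores = [0.91, 0.85, 0.40, 0.77, 0.66, 0.35, 0.52, 0.28, 0.70, 0.45]
labels = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
j, t, sens, spec = youden_cutpoint(scores, labels)
print(f"cutpoint={t:.2f} J={j:.2f} sensitivity={sens:.2f} specificity={spec:.2f}")
```

A different criterion, such as fixing a minimum sensitivity for screening, would select a different threshold from the same ROC curve. The sensitivity and specificity values would change, but the AUC would not.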

In comparison, the model’s performance was more moderate for mild and moderate scoliosis. For mild cases, sensitivity reached 0.79, but accuracy was lower at 0.64, and specificity was limited to 0.61, indicating a tendency for false positives. Similarly, moderate cases showed a balanced performance with an accuracy of 0.70, sensitivity of 0.74, and specificity of 0.68, yet still fell short of the hypothesis’s expectation for high predictive precision. These findings highlight that while BiomedCLIP demonstrates notable success in identifying severe scoliosis, its ability to reliably classify mild and moderate stages remains limited.

The observed variability underscores the need for further refinement of the model and expansion of training datasets. Including more annotated examples of mild and moderate scoliosis, as well as enhancing image preprocessing techniques, could improve performance across all severity stages. Advanced AI models, although promising, still face limitations in accurately assessing Cobb angles and classifying less advanced cases of scoliosis [33]. The persistent difficulty of diagnosing mild scoliosis therefore remains a key target for improving AI diagnostic capabilities across all severity stages.

In conclusion, the hypothesis regarding the model’s ability to classify scoliosis severity was only partially confirmed. BiomedCLIP demonstrated strong performance in detecting severe scoliosis but moderate effectiveness for mild and moderate cases. Further optimization is necessary to improve detection in early and intermediate stages of scoliosis.

4.4. Comparison with CLIP

When comparing the results obtained by the CLIP and BiomedCLIP models, a key question arises. Why did CLIP, a general-domain model not trained specifically on medical data, achieve 100% accuracy in detecting severe single-curve scoliosis, while BiomedCLIP, which was pretrained on biomedical data, performed less effectively in similar cases? Notably, CLIP was tested on only 23 images of severe single-curve scoliosis. BiomedCLIP analyzed a larger and more diverse dataset of 262 images covering various severities and scoliosis types.

One explanation for this paradox is the simplicity of the task given to CLIP. Severe single-curve scoliosis presents clear and easily detectable radiographic changes, enabling even a model without medical-domain training to identify these cases. The prominence of severe scoliosis on X-ray images likely allowed CLIP to succeed without training on specialized medical data. In contrast, BiomedCLIP faced a more complex task. It had to differentiate not only severe scoliosis but also mild and moderate cases, and it also had to classify scoliosis types. This broader scope introduced additional challenges for the model.

Domain-specific models such as BiomedCLIP may also be more prone to errors because of the diversity of the data on which they are trained. Learning to recognize subtle and inconsistent nuances across a wide range of cases may dilute the model’s predictive accuracy, making it less effective in straightforward tasks such as detecting severe scoliosis. This diversity is necessary for generalization, but it can reduce performance on highly specific or obvious changes.

4.5. Model Adaptation and Generalization

Models like BiomedCLIP have shown improved performance through domain-specific adaptations that help them focus on relevant features in medical images [34]. Multi-level adaptation frameworks increase a model’s ability to generalize across different types of medical data by addressing domain discrepancies [35].

4.6. Challenges with False Features

Research indicates that models like CLIP can learn spurious correlations, which reduces performance when the data distribution shifts [36]. Relying on such spurious features may limit effectiveness in scenarios with obvious but less specific changes, as the model may misinterpret the signals. However, data diversity also broadens the model’s learning context, potentially increasing robustness in real-world applications.

Comparing the results of CLIP and BiomedCLIP is not entirely fair, as they were tested under different conditions. CLIP was evaluated on a simple task—detecting severe single-curve scoliosis—and performed well without specialized training. BiomedCLIP, on the other hand, had to handle a wider range of cases, including differentiating scoliosis types and severities, introducing complexities that likely impacted its performance. The differing test scenarios underline that the results of these models are not directly comparable. CLIP’s strong performance stemmed from a simple, focused task, while BiomedCLIP’s specialization required it to navigate more challenging diagnostic cases, affecting its accuracy in severe scoliosis detection.

The performance of BiomedCLIP can also be partially attributed to limitations in the dataset used for its pretraining. The PMC-15M dataset contains 15 million image-text pairs from PubMed Central and includes a wide variety of biomedical image types, such as microscopy and histology images. However, this breadth does not guarantee suitability for scoliosis-specific tasks. A 2023 PubMed search for “scoliosis” yielded 31,226 articles, a small fraction of the PMC database. Many of these articles may lack relevant X-ray images and focus instead on genetic, molecular, or clinical topics. Limited access to appropriate clinical scoliosis images likely constrained BiomedCLIP’s ability to detect mild cases and differentiate curvature types effectively.

BiomedCLIP’s use of the Vision Transformer (ViT) architecture also presents challenges for medical data. Transformers were originally developed for large-scale language tasks and typically require extensive datasets to learn meaningful patterns. Medical data, by contrast, are often highly specialized and context-dependent. Limited and variable datasets make it difficult to identify consistent patterns, potentially leading to inaccurate predictions. Additionally, the interpretation of medical images often depends on expert clinical context, which adds further difficulty. These limitations underscore the need for more targeted datasets and tailored architectural adjustments to improve performance in medical imaging tasks.
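The model identifier in the BiomedCLIP reference (vit_base_patch16_224) indicates a ViT-Base image encoder that takes 224 × 224 inputs split into 16 × 16 patches. The arithmetic below shows how many patch tokens that produces. For a hypothetical full-spine radiograph size, it also shows roughly how many original pixels each patch summarizes after a plain resize. The radiograph dimensions are an assumption. The actual BiomedCLIP preprocessing, which may crop or pad rather than simply resize, is not reproduced here.

```python
def vit_patch_grid(input_size=224, patch_size=16):
    """Patches per side and total patch tokens for a square ViT input."""
    if input_size % patch_size:
        raise ValueError("input size must be divisible by patch size")
    per_side = input_size // patch_size
    return per_side, per_side * per_side

def source_pixels_per_patch(orig_h, orig_w, input_size=224, patch_size=16):
    """Approximate original-image area (pixels) covered by one patch after a plain resize."""
    scale_h, scale_w = orig_h / input_size, orig_w / input_size
    return (patch_size * scale_h) * (patch_size * scale_w)

per_side, n_tokens = vit_patch_grid()
print(f"{per_side} x {per_side} grid = {n_tokens} patch tokens (plus one class token)")

# Hypothetical full-spine radiograph size in pixels (assumption, not study data)
area = source_pixels_per_patch(orig_h=3000, orig_w=1200)
print(f"each patch summarizes roughly {area:,.0f} original pixels")
```

Each vertebra therefore occupies only a few patch tokens. Fine curvature details must be learned from relatively coarse inputs, which adds to the demand for large, relevant training sets.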

A further challenge arises from contrastive learning, the pretraining objective of CLIP-style vision-language models, including BiomedCLIP. Contrastive learning can exacerbate such misinterpretations, potentially leading to incorrect classifications. Moreover, the limited availability of data presents another obstacle. The creators of BiomedCLIP themselves acknowledged that small datasets are insufficient for fine-tuning models like CLIP [34]. For instance, PubMedCLIP was trained on the ROCO dataset, which contains 81,000 images [37]. For scoliosis, there are far fewer relevant articles and even fewer high-quality, annotated scoliosis images, which further restricts the data available for model training.
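CLIP-style contrastive pretraining scores every image in a batch against every caption. It then applies a symmetric cross-entropy that rewards the matching pairs on the diagonal and penalizes all other pairings. The pure-Python sketch below computes this loss for a toy batch of hypothetical, unit-length embeddings. It uses a fixed temperature of 0.07, CLIP’s initial value. It is a simplified illustration, not BiomedCLIP’s training code.

```python
import math

def log_softmax(row):
    """Numerically stable log-softmax of a list of logits."""
    m = max(row)
    log_z = m + math.log(sum(math.exp(v - m) for v in row))
    return [v - log_z for v in row]

def clip_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE loss; pair i (image i, caption i) is the positive."""
    n = len(image_embs)
    # Similarity matrix: logits[i][j] = <image_i, text_j> / temperature
    logits = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in text_embs]
              for img in image_embs]
    # Image-to-text direction: each row should select its own caption
    loss_i2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-image direction: each column should select its own image
    cols = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2i = -sum(log_softmax(cols[j])[j] for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2

# Hypothetical unit-length embeddings for a batch of three image-caption pairs
images = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]
texts_matched = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]]
texts_shuffled = [texts_matched[1], texts_matched[2], texts_matched[0]]
print("loss, aligned pairs: ", round(clip_loss(images, texts_matched), 4))
print("loss, shuffled pairs:", round(clip_loss(images, texts_shuffled), 4))
```

Each image learns only from its own caption and the other captions in its batch. A condition that is rare in the pretraining corpus therefore contributes few positive pairs from which condition-specific distinctions can be learned.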

A further issue with the PMC-15M dataset is that it consists primarily of figures whose accompanying text is mainly caption-style annotation. Such annotations can be misleading and may provide incorrect cues about the overall clinical context. Additionally, many medical conditions require a highly specific set of parameters for diagnosis, making it difficult to classify them correctly without sufficient and accurate data. Another limitation arises from the structure of the dataset itself, which includes images and descriptions from a wide range of biomedical fields. This diversity can make the model’s predictions overly general and therefore less accurate for specific conditions such as scoliosis. Furthermore, many PubMed articles are not open access, so their complete imaging data are unavailable, which may have affected the quality and diversity of the training data available for BiomedCLIP. It is also important to note that our analysis was limited to radiographic images, which could restrict the generalizability of the results to other imaging techniques, such as MRI or CT. The lack of relevant data from other biomedical imaging modalities may have further constrained the model’s effectiveness in the broader diagnostic context of scoliosis.

Recent advancements in deep learning (DL) have significantly improved scoliosis detection and quantification, particularly in tasks such as axial vertebral rotation (AVR) estimation and Cobb angle (CA) measurement. For example, Zhao et al. proposed an automatic AVR measurement method that combines vertebra landmark detection and pedicle segmentation using an improved High-Resolution Network (HR-Net) with CoordConv layers and self-attention mechanisms. Their approach demonstrated high accuracy in estimating AVR by precisely extracting pedicle center coordinates and vertebral landmarks. This landmark-based approach provides detailed structural information, which is critical for assessing three-dimensional spinal deformities, particularly in adolescent idiopathic scoliosis (AIS) [38].
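CoordConv, mentioned above as part of Zhao et al.’s network, appends channels containing normalized x and y coordinates to a feature map so that subsequent convolutions can condition on position. The minimal pure-Python sketch below shows only that coordinate-augmentation step, with coordinates scaled to [−1, 1]. It is not the authors’ implementation. The feature map is a nested list of shape channels × height × width.

```python
def add_coord_channels(feature_map):
    """Append normalized x and y coordinate channels (range -1..1) to a C x H x W map."""
    height = len(feature_map[0])
    width = len(feature_map[0][0])

    def norm(i, size):
        # Map index 0..size-1 linearly onto -1..1 (a single row/column maps to 0)
        return 0.0 if size == 1 else -1.0 + 2.0 * i / (size - 1)

    x_channel = [[norm(c, width) for c in range(width)] for _ in range(height)]
    y_channel = [[norm(r, height) for _ in range(width)] for r in range(height)]
    return feature_map + [x_channel, y_channel]

# Hypothetical single-channel 3 x 4 feature map
fmap = [[[0.1, 0.2, 0.3, 0.4],
         [0.5, 0.6, 0.7, 0.8],
         [0.9, 1.0, 1.1, 1.2]]]
out = add_coord_channels(fmap)
print(len(out), "channels")  # original channel plus x and y
print("x row:", out[1][0])
print("y column:", [row[0] for row in out[2]])
```

Because the extra channels encode absolute position, a landmark detector can more easily learn where along the spine a given vertebra is expected to appear.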

Similarly, a recent systematic review of DL methods for CA measurement highlights various approaches, such as U-Net variants, Capsule Neural Networks (CapsNets), and multi-stage pipelines, that achieve notable accuracy in estimating spinal curvature. For instance, CapsNet achieved a Pearson correlation coefficient (PCC) of 0.93, while LocNet and RegNet models demonstrated robust vertebral localization and CA prediction performance. These approaches rely on precise manual annotations of vertebral landmarks and demonstrate strong capabilities in quantifying curvature. Cobb angle measurement remains the gold standard for assessing scoliosis severity [39].
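Landmark-based pipelines of this kind typically end with a geometric step. Each vertebra’s endplate is represented by a line through two detected corner landmarks, and the Cobb angle is the largest angle between any two endplate lines. The sketch below shows that step on hypothetical landmark coordinates. It is a simplification: real pipelines differ in which endplates they compare and in how they handle multiple curves.

```python
import math

def endplate_tilt(left, right):
    """Tilt (degrees) of the line through two endplate corner landmarks (x, y)."""
    dx, dy = right[0] - left[0], right[1] - left[1]
    return math.degrees(math.atan2(dy, dx))

def max_cobb_angle(endplates):
    """Largest angle between any two endplate lines, as a simplified Cobb angle.

    endplates: list of (left_corner, right_corner) pairs ordered top to bottom.
    Returns (angle_degrees, upper_index, lower_index).
    """
    tilts = [endplate_tilt(l, r) for l, r in endplates]
    best = (0.0, 0, 0)
    for i in range(len(tilts)):
        for j in range(i + 1, len(tilts)):
            angle = abs(tilts[i] - tilts[j]) % 180.0
            angle = min(angle, 180.0 - angle)  # lines, not vectors: fold into 0..90
            if angle > best[0]:
                best = (angle, i, j)
    return best

# Hypothetical endplate corners (pixels) for five vertebrae, top to bottom
endplates = [
    ((100, 100), (140, 90)),   # tilted one way
    ((102, 150), (142, 148)),
    ((105, 200), (145, 200)),  # roughly horizontal
    ((103, 250), (143, 254)),
    ((100, 300), (140, 312)),  # tilted the other way
]
angle, upper, lower = max_cobb_angle(endplates)
print(f"Cobb angle = {angle:.1f} degrees between endplates {upper} and {lower}")
```

Small landmark errors at the most tilted endplates translate directly into angle errors, which is one reason these methods are sensitive to annotation and image quality.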

Our study differs from these methods by addressing scoliosis classification tasks, specifically severity stages (mild, moderate, severe) and curvature types (single-curve, double-curve), using the BiomedCLIP model. Unlike landmark-dependent AVR or CA measurement methods, BiomedCLIP leverages a contrastive learning framework to embed image–text relationships, enabling classification without requiring explicit anatomical markers or angle calculations. This approach is particularly advantageous for automating scoliosis triage and broad classification in clinical settings.

However, similar challenges are observed across studies, including our own. While AVR- and CA-based methods achieve high precision in quantifying spinal deformities, they often require extensive manual annotations and are sensitive to image quality and data variability. Our findings, such as the lower sensitivity for mild scoliosis and reduced specificity for double-curve detection, align with these limitations. Moreover, subtle imaging features in early-stage scoliosis remain a significant challenge for both CA-based measurement and classification approaches.

Integrating precise AVR and CA measurement techniques with models like BiomedCLIP represents a promising direction for future research. By combining detailed anatomical quantification with classification-based predictions, a more comprehensive AI-driven framework could be developed, enhancing scoliosis diagnosis, severity assessment, and treatment planning.

Overall, these comparisons reinforce the need, discussed earlier, for further optimization of the model to improve its sensitivity to subtle cases of scoliosis and its ability to differentiate curvature types accurately.

5. Conclusions

Our study found that the initial hypotheses regarding the performance of the BiomedCLIP model in detecting scoliosis were not fully confirmed. While the model showed high sensitivity in recognizing advanced scoliosis, its effectiveness in identifying mild cases and different types of scoliosis was limited, particularly in detecting single-curve scoliosis.

BiomedCLIP performed well in classifying advanced scoliosis, but struggled with early-stage detection and distinguishing between different types of scoliosis, especially when compared to previous results with CLIP models. Though BiomedCLIP demonstrated better differentiation of scoliosis severity, its accuracy in detecting specific types of scoliosis remained inconsistent.

In conclusion, BiomedCLIP excelled in detecting advanced scoliosis but requires further refinement to improve early-stage detection and the distinction between scoliosis types. Further optimization and training on broader datasets will be essential to enhance the model’s overall accuracy and clinical applicability.

Author Contributions

Conceptualization, A.F., R.F. and A.Z.-F.; methodology, A.F., R.F., A.Z.-F. and B.P.; investigation, A.F., B.P., A.Z.-F. and R.F.; data curation, A.F., R.K. and B.P.; writing—original draft preparation, A.F., A.Z.-F., B.P., R.K. and R.F.; writing—review and editing, A.F., A.Z.-F., R.F., B.P., R.K. and E.N.; supervision, B.P. and E.N.; funding acquisition, A.F., E.N. and B.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data are contained within the article.

Acknowledgments

Many thanks are extended to Agnieszka Strzała for linguistic proofreading and to Robert Fabijan for the substantive support in the field of AI and for assistance in designing the presented study.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Daeschler, S.C.; Bourget, M.H.; Derakhshan, D.; Sharma, V.; Asenov, S.I.; Gordon, T.; Cohen-Adad, J.; Borschel, G.H. Rapid, automated nerve histomorphometry through open-source artificial intelligence. Sci. Rep. 2022, 12, 5975.

2. Hentschel, S.; Kobs, K.; Hotho, A. CLIP knows image aesthetics. Front. Artif. Intell. 2022, 5, 976235.

3. Yang, F.; He, Y.; Deng, Z.S.; Yan, A. Improvement of automated image stitching system for DR X-ray images. Comput. Biol. Med. 2016, 71, 108–114.

4. Hwang, Y.S.; Lai, P.L.; Tsai, H.Y.; Kung, Y.C.; Lin, Y.Y.; He, R.J.; Wu, C.T. Radiation dose for pediatric scoliosis patients undergoing whole spine radiography: Effect of the radiographic length in an auto-stitching digital radiography system. Eur. J. Radiol. 2018, 108, 99–106.

5. Hey, H.W.D.; Ramos, M.R.D.; Lau, E.T.; Tan, J.H.J.; Tay, H.W.; Liu, G.; Wong, H.K. Risk Factors Predicting C- Versus S-shaped Sagittal Spine Profiles in Natural, Relaxed Sitting: An Important Aspect in Spinal Realignment Surgery. Spine 2020, 45, 1704–1712.

6. OpenAI. CLIP: Connecting Text and Images. Available online: https://openai.com/research/clip (accessed on 29 July 2024).

7. Li, Y.; Jia, S.; Song, G.; Wang, P.; Jia, F. SDA-CLIP: Surgical visual domain adaptation using video and text labels. Quant. Imaging Med. Surg. 2023, 13, 6989–7001.

8. Yang, W.; Wang, Y.; Hu, J.; Yuan, T. Sleep CLIP: A Multimodal Sleep Staging Model Based on Sleep Signals and Sleep Staging Labels. Sensors 2023, 23, 7341.

9. BiomedCLIP-PubMedBERT_256-Vit_Base_Patch16_224. Available online: https://huggingface.co/microsoft/BiomedCLIP-PubMedBERT_256-vit_base_patch16_224 (accessed on 2 September 2024).

10. Fabijan, A.; Fabijan, R.; Zawadzka-Fabijan, A.; Nowosławska, E.; Zakrzewski, K.; Polis, B. Evaluating Scoliosis Severity Based on Posturographic X-ray Images Using a Contrastive Language–Image Pretraining Model. Diagnostics 2023, 13, 2142.

11. Maharathi, S.; Iyengar, R.; Chandrasekhar, P. Biomechanically designed Curve Specific Corrective Exercise for Adolescent Idiopathic Scoliosis gives significant outcomes in an Adult: A case report. Front. Rehabil. Sci. 2023, 4, 1127222.

12. Horng, M.H.; Kuok, C.P.; Fu, M.J.; Lin, C.J.; Sun, Y.N. Cobb Angle Measurement of Spine from X-Ray Images Using Convolutional Neural Network. Comput. Math. Methods Med. 2019, 2019, 6357171.

13. DeLong, E.R.; DeLong, D.M.; Clarke-Pearson, D.L. Comparing the areas under two or more correlated receiver operating characteristic curves: A nonparametric approach. Biometrics 1988, 44, 837–845.

14. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2021; Available online: https://www.R-project.org/ (accessed on 2 September 2024).

15. Lüdecke, D.; Patil, I.; Ben-Shachar, M.; Wiernik, B.; Waggoner, P.; Makowski, D. see: An R Package for Visualizing Statistical Models. J. Open Source Softw. 2021, 6, 3393.

16. Makowski, D.; Lüdecke, D.; Patil, I.; Thériault, R.; Ben-Shachar, M.; Wiernik, B. Automated Results Reporting as a Practical Tool to Improve Reproducibility and Methodological Best Practices Adoption. CRAN 2023. Available online: https://easystats.github.io/report/ (accessed on 2 September 2024).

17. Makowski, D.; Wiernik, B.; Patil, I.; Lüdecke, D.; Ben-Shachar, M. Correlation: Methods for Correlation Analysis. Version 0.8.3. 2022. Available online: https://CRAN.R-project.org/package=correlation (accessed on 2 September 2024).

18. Makowski, D.; Ben-Shachar, M.; Patil, I.; Lüdecke, D. Methods and Algorithms for Correlation Analysis in R. J. Open Source Softw. 2020, 5, 2306.

19. Pedersen, T. Patchwork: The Composer of Plots. R Package Version 1.2.0. 2024. Available online: https://CRAN.R-project.org/package=patchwork (accessed on 2 September 2024).

20. Robin, X.; Turck, N.; Hainard, A.; Tiberti, N.; Lisacek, F.; Sanchez, J.; Müller, M. pROC: An open-source package for R and S+ to analyze and compare ROC curves. BMC Bioinform. 2011, 12, 77.

21. Sjoberg, D.; Whiting, K.; Curry, M.; Lavery, J.; Larmarange, J. Reproducible Summary Tables with the gtsummary Package. R. J. 2021, 13, 570–580.

22. Thiele, C.; Hirschfeld, G. cutpointr: Improved Estimation and Validation of Optimal Cutpoints in R. J. Stat. Softw. 2021, 98, 1–27.

23. Wickham, H. Ggplot2: Elegant Graphics for Data Analysis; Springer: New York, NY, USA, 2016; ISBN 978-3-319-24277-4.

24. Wickham, H.; Bryan, J. Readxl: Read Excel Files. R Package Version 1.4.3. 2023. Available online: https://CRAN.R-project.org/package=readxl (accessed on 2 September 2024).

25. Wickham, H.; François, R.; Henry, L.; Müller, K.; Vaughan, D. Dplyr: A Grammar of Data Manipulation. R Package Version 1.1.2. 2023. Available online: https://CRAN.R-project.org/package=dplyr (accessed on 2 September 2024).

26. Revelle, W. Psych: Procedures for Psychological, Psychometric, and Personality Research, R Package Version 2.3.9; Northwestern University: Evanston, IL, USA, 2023; Available online: https://CRAN.R-project.org/package=psych (accessed on 2 September 2024).

27. Fabijan, A.; Zawadzka-Fabijan, A.; Fabijan, R.; Zakrzewski, K.; Nowosławska, E.; Polis, B. Assessing the Accuracy of Artificial Intelligence Models in Scoliosis Classification and Suggested Therapeutic Approaches. J. Clin. Med. 2024, 13, 4013.

28. Maaliw, R.R.; Susa, J.A.B.; Alon, A.S.; Lagman, A.C.; Ambat, S.C.; Garcia, M.B.; Piad, K.C.; Raguro, M.C.F. A Deep Learning Approach for Automatic Scoliosis Cobb Angle Identification. In Proceedings of the 2022 IEEE World AI IoT Congress (AIIoT), Seattle, WA, USA, 6–9 June 2022; pp. 111–117.

29. Kassab, D.K.I.; Kamyshanskaya, I.G.; Trukhan, S.V. A new artificial intelligence program for the automatic evaluation of scoliosis on frontal spinal radiographs: Accuracy, advantages and limitations. Digit. Diagn. 2024, 5, 243–254.

30. Xie, K.; Lei, W.; Zhu, S.; Chen, Y.; Lin, J.; Li, Y.; Yan, Y. Application of Deep Learning to Diagnose and Classify Adolescent Idiopathic Scoliosis. Chin. J. Med. Instrum. 2024, 48, 126–131.

31. Banerjee, S.; Huang, Z.; Lyu, J.; Leung, F.H.F.; Lee, T.; Yang, D.; Zheng, Y.; McAviney, J.; Ling, S.H. Automatic Assessment of Ultrasound Curvature Angle for Scoliosis Detection Using 3-D Ultrasound Volume Projection Imaging. Ultrasound Med. Biol. 2024, 50, 647–660.

32. Gardner, A.; Berryman, F.; Pynsent, P. How Accurate are Anatomical Surface Topography Parameters in Indicating the Presence of a Scoliosis? Spine 2024, 49, 1645–1651.

33. Fabijan, A.; Zawadzka-Fabijan, A.; Fabijan, R.; Zakrzewski, K.; Nowosławska, E.; Polis, B. Artificial Intelligence in Medical Imaging: Analyzing the Performance of ChatGPT and Microsoft Bing in Scoliosis Detection and Cobb Angle Assessment. Diagnostics 2024, 14, 773.

34. Zhang, S.; Xu, Y.; Usuyama, N.; Xu, H.; Bagga, J.; Tinn, R.; Preston, S.; Rao, R.; Wei, M.; Valluri, N.; et al. BiomedCLIP: A multimodal biomedical foundation model pretrained from fifteen million scientific image-text pairs. arXiv 2023, arXiv:2303.00915.

35. Huang, C.; Jiang, A.; Feng, J.; Zhang, Y.; Wang, X.; Wang, Y. Adapting Visual-Language Models for Generalizable Anomaly Detection in Medical Images. arXiv 2024, arXiv:2403.12570.

36. Wang, Q.; Lin, Y.; Chen, Y.; Schmidt, L.; Han, B.; Zhang, T. Do CLIPs Always Generalize Better than ImageNet Models? arXiv 2024, arXiv:2403.11497.

37. Pelka, O.; Koitka, S.; Rückert, J.; Nensa, F.; Friedrich, C.M. Radiology Objects in COntext (ROCO): A Multimodal Image Dataset. In Intravascular Imaging and Computer Assisted Stenting and Large-Scale Annotation of Biomedical Data and Expert Label Synthesis; Stoyanov, D., Taylor, Z., Balocco, S., Sznitman, R., Martel, A., Maier-Hein, L., Duong, L., Zahnd, G., Demirci, S., Albarqouni, S., et al., Eds.; LABELS CVII STENT 2018; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 11043.

38. Zhao, Y.; Zhang, J.; Li, H.; Wang, Q. Automatic axial vertebral rotation estimation on radiographs for adolescent idiopathic scoliosis by deep learning. Biomed. Signal Process. Control. 2024, 88, 105711.

39. Kumar, R.; Gupta, M.; Abraham, A. A Critical Analysis on Vertebra Identification and Cobb Angle Estimation Using Deep Learning for Scoliosis Detection. IEEE Access 2024, 12, 11170–11184.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).