Self-Configurable Centipede-Inspired Rescue Robot

Abstract

1. Introduction

2. Materials and Methods

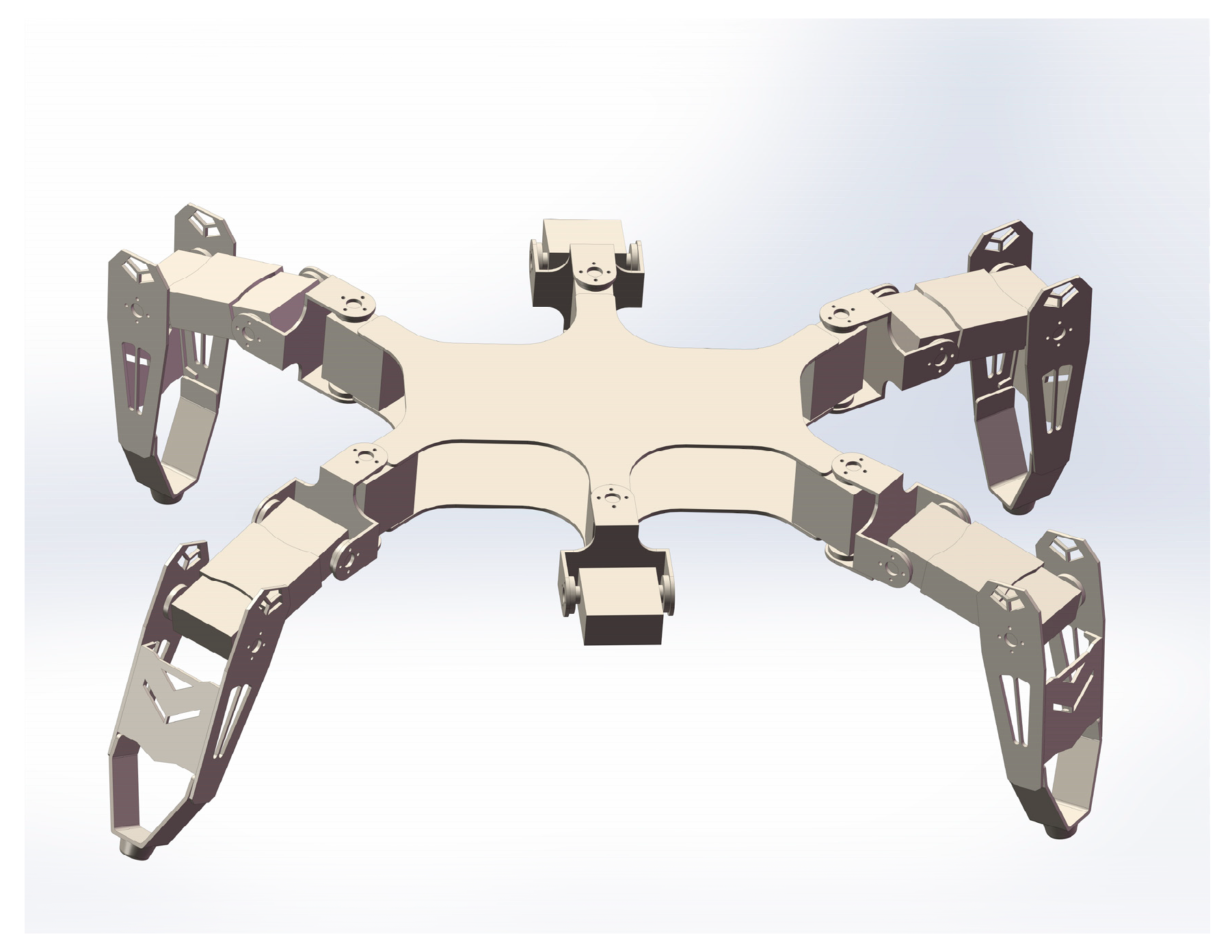

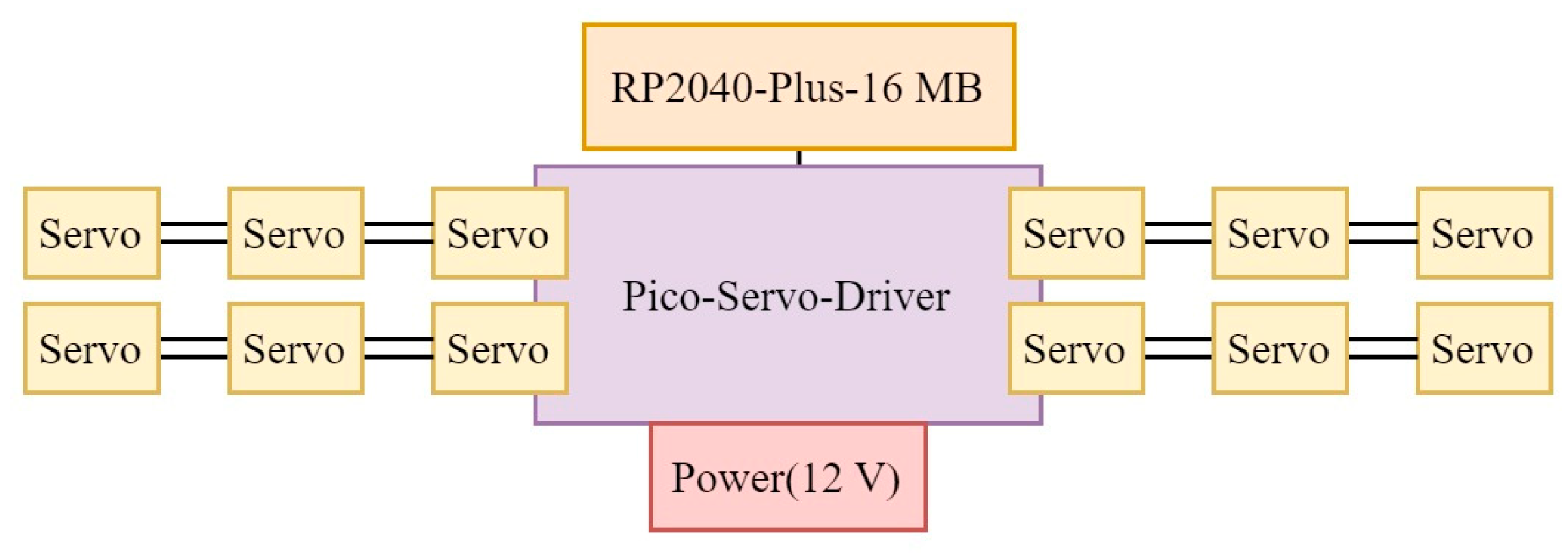

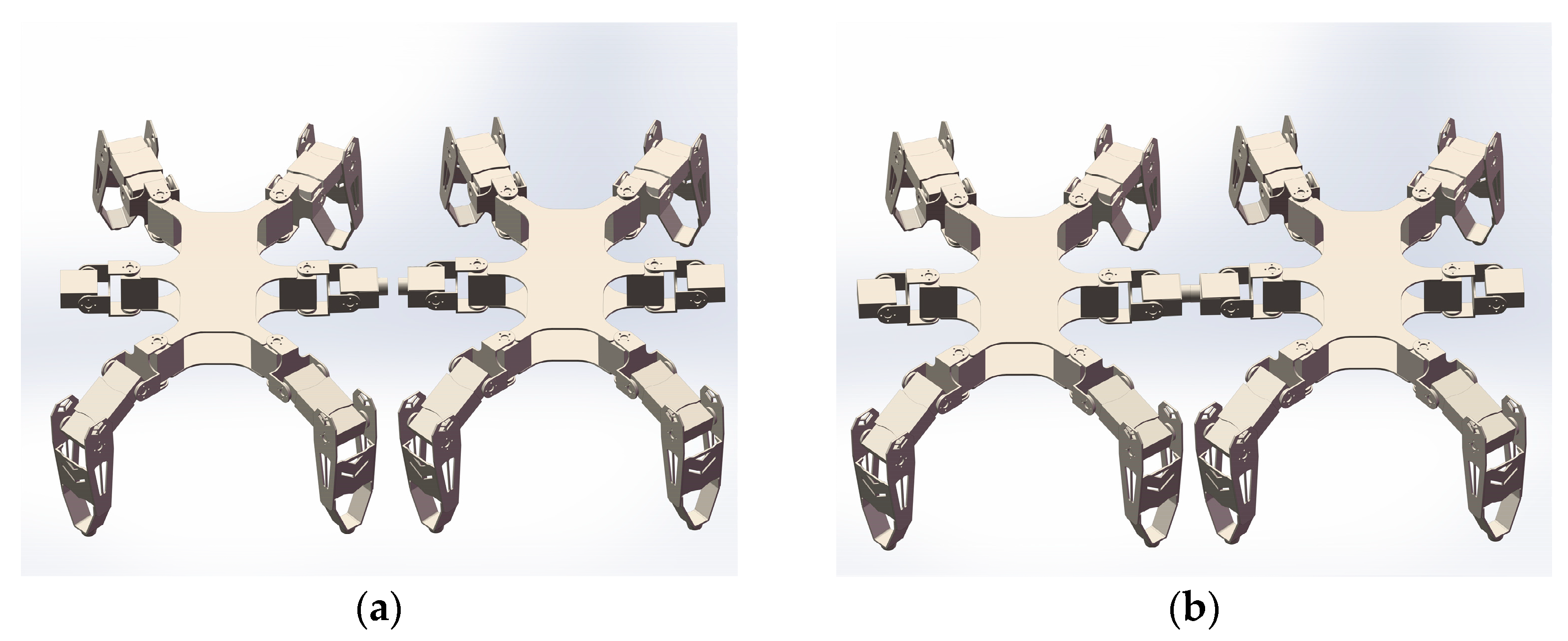

2.1. Mechanical Structure Design

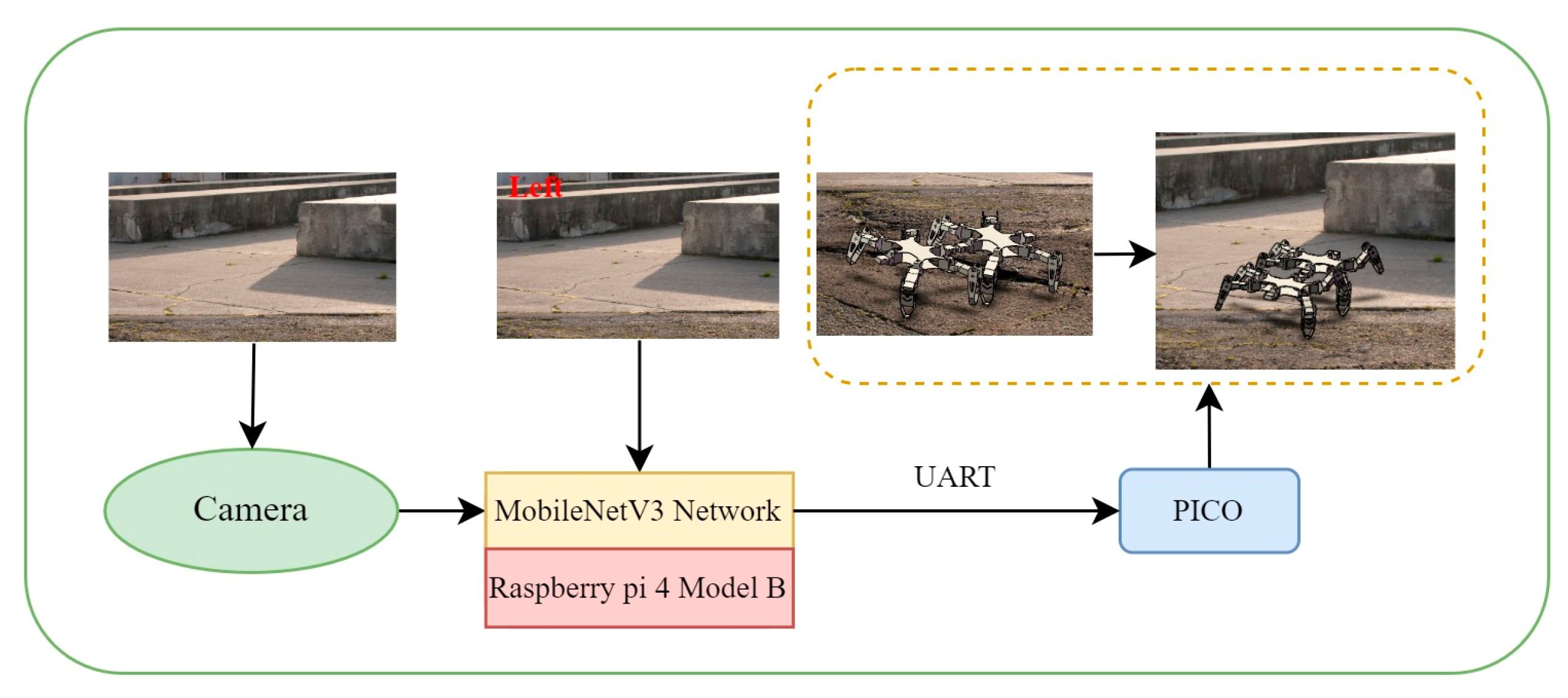

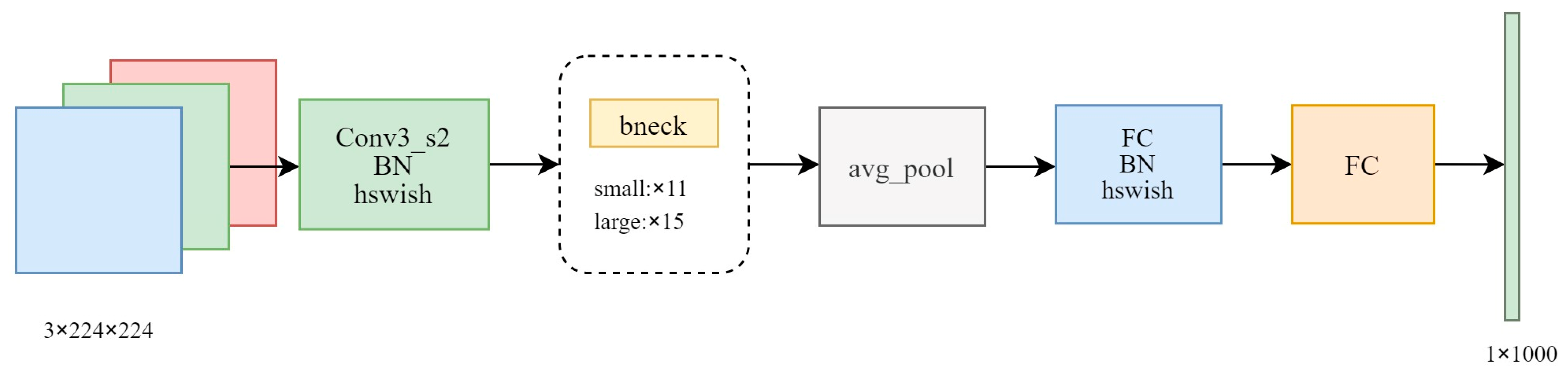

2.2. Machine Vision Design

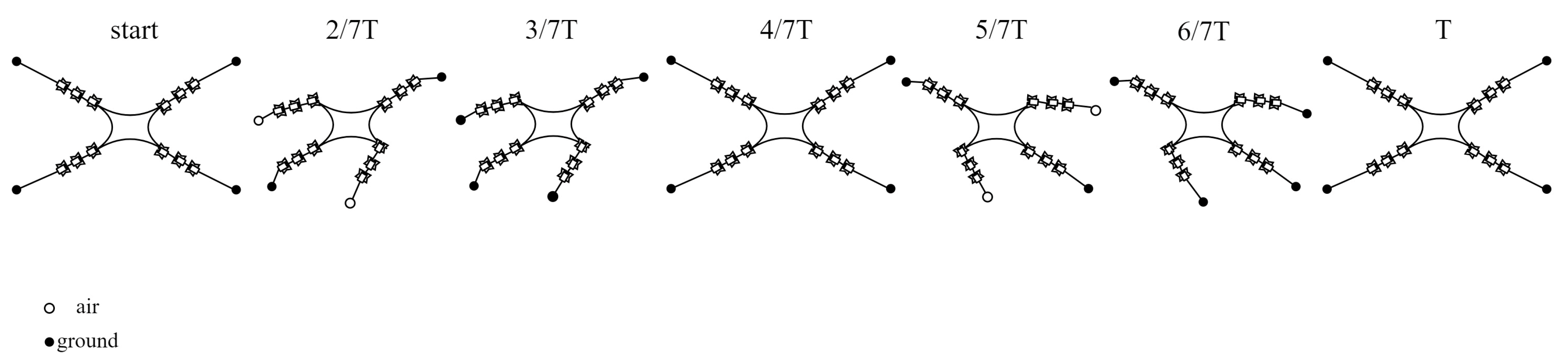

2.3. Machine Gait Design

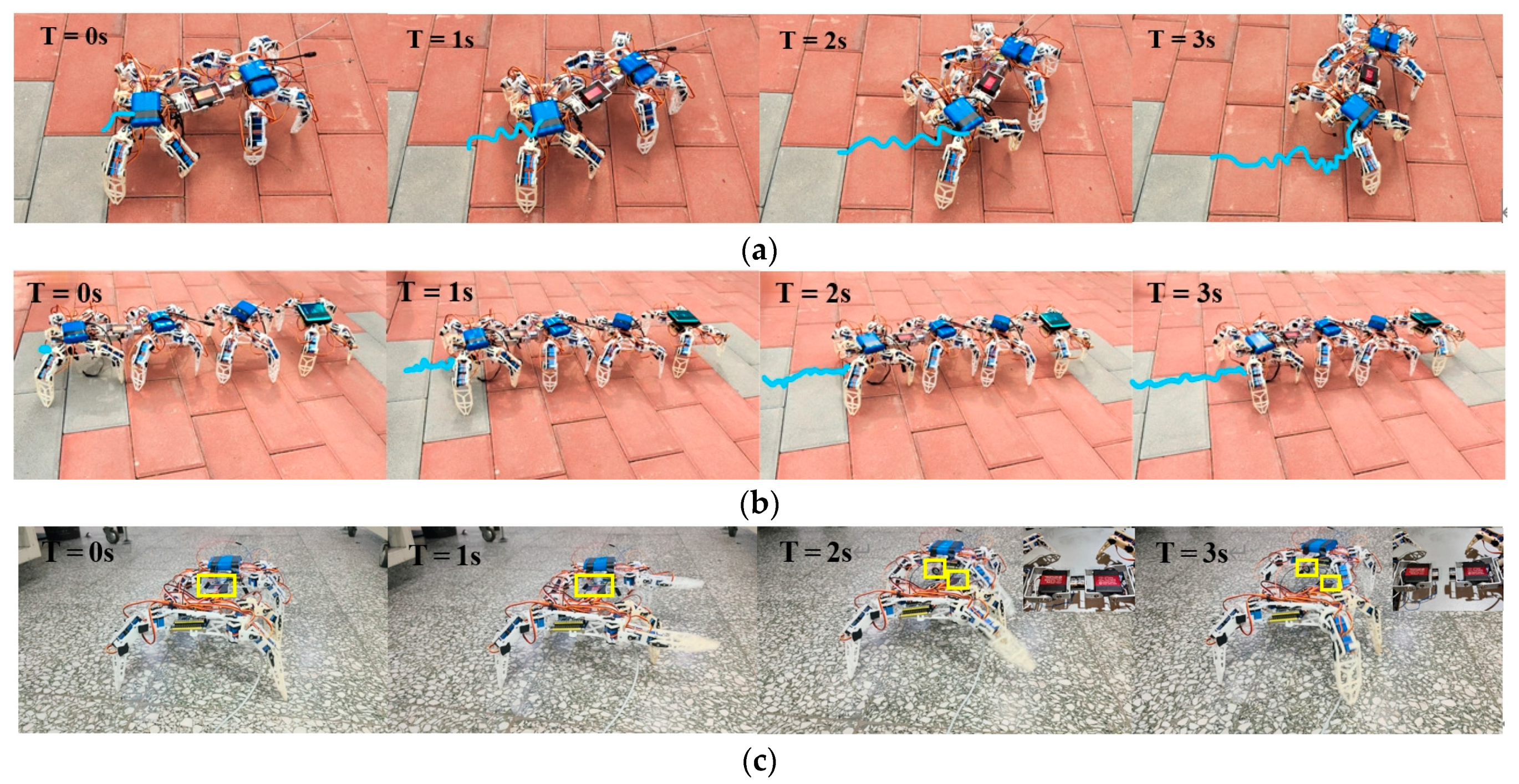

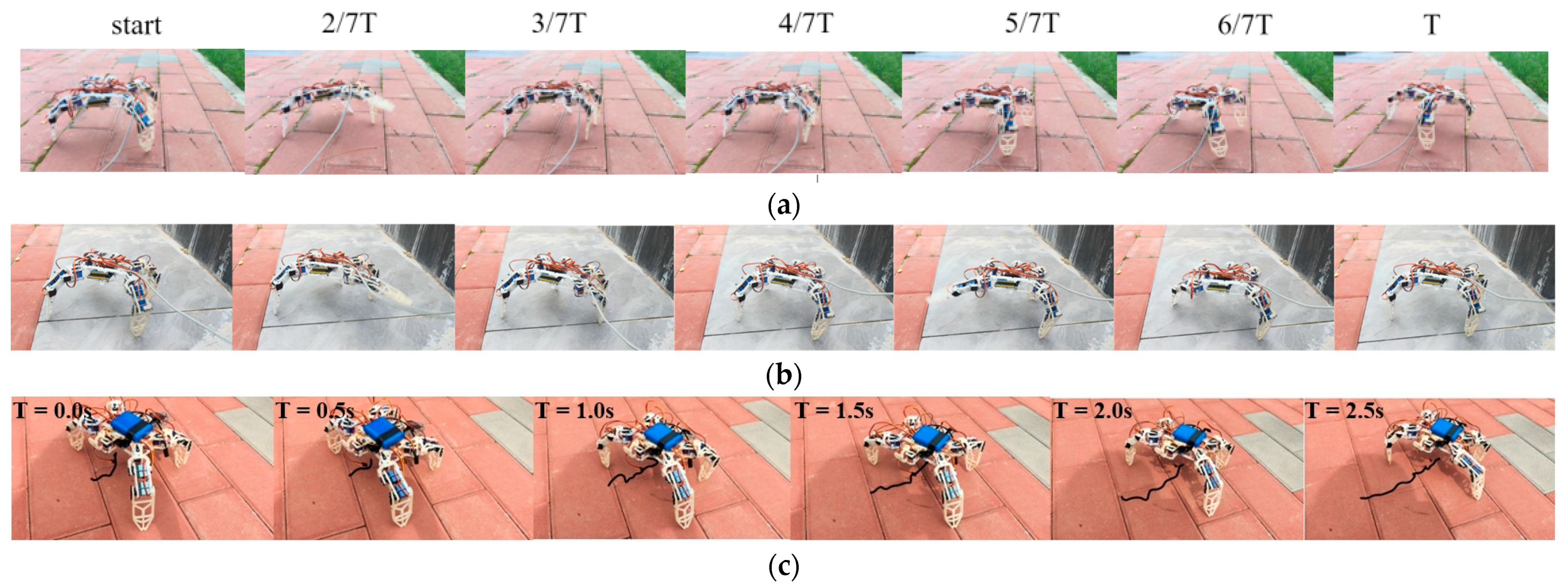

3. System Testing

3.1. Mechanical Structure Design

3.2. Machine Vision Design

3.3. Machine Gait Analysis

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Class | Recall (%) | F1 Score (%) | Average Precision (%) | Precision (%) |
|---|---|---|---|---|
| left | 92.75 | 94.81 | 99.21 | 96.97 |
| right | 96.15 | 93.46 | 98.20 | 90.91 |
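The F1 score is the harmonic mean of precision and recall, F1 = 2PR/(P + R), so the F1 column can be recomputed from the Precision and Recall columns as a consistency check. The sketch below assumes that all table values are percentages and that F1 was computed per class from the reported precision and recall. Average Precision is the area under the precision-recall curve and cannot be recovered from a single (P, R) pair, so it is not checked. The names `METRICS`, `f1_score`, and `check_table` are illustrative and do not come from the paper.

```python
# Per-class values copied from the table (assumed to be percentages).
METRICS = {
    "left": {"precision": 96.97, "recall": 92.75, "f1": 94.81},
    "right": {"precision": 90.91, "recall": 96.15, "f1": 93.46},
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall: F1 = 2PR / (P + R)."""
    if precision + recall == 0:
        return 0.0  # convention: F1 is 0 when both P and R are 0
    return 2 * precision * recall / (precision + recall)


def check_table(metrics: dict, tol: float = 0.01) -> dict:
    """Recompute F1 for each class and compare it with the reported value.

    Returns a mapping: class -> (recomputed F1 rounded to 2 dp, within tol?).
    The tolerance absorbs the two-decimal rounding of P and R in the table.
    """
    results = {}
    for cls, m in metrics.items():
        f1 = f1_score(m["precision"], m["recall"])
        results[cls] = (round(f1, 2), abs(f1 - m["f1"]) <= tol)
    return results


if __name__ == "__main__":
    for cls, (f1, ok) in check_table(METRICS).items():
        status = "matches" if ok else "differs"
        print(f"{cls}: recomputed F1 = {f1:.2f} ({status} reported value)")
```

For both classes the recomputed F1 agrees with the reported value to within the two-decimal rounding of the table.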

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hou, J.; Xue, Z.; Liang, Y.; Sun, Y.; Zhao, Y.; Chen, Q. Self-Configurable Centipede-Inspired Rescue Robot. Appl. Sci. 2024, 14, 2331. https://doi.org/10.3390/app14062331

Hou J, Xue Z, Liang Y, Sun Y, Zhao Y, Chen Q. Self-Configurable Centipede-Inspired Rescue Robot. Applied Sciences. 2024; 14(6):2331. https://doi.org/10.3390/app14062331

Chicago/Turabian Style: Hou, Jingbo, Zhifeng Xue, Yue Liang, Yipeng Sun, Yu Zhao, and Qili Chen. 2024. "Self-Configurable Centipede-Inspired Rescue Robot" Applied Sciences 14, no. 6: 2331. https://doi.org/10.3390/app14062331

APA Style: Hou, J., Xue, Z., Liang, Y., Sun, Y., Zhao, Y., & Chen, Q. (2024). Self-Configurable Centipede-Inspired Rescue Robot. Applied Sciences, 14(6), 2331. https://doi.org/10.3390/app14062331