1. Introduction

Human–Robot Collaboration (HRC) has emerged as a critical area in the engineering and social sciences domains. This paper focuses on environments where collaboration centres on routine tasks involving the manipulation of components on recycling disassembly lines, as well as the management and classification of various electronic devices. We particularly explore collaboration with robotic arms capable of a spectrum of autonomous actions under the guidance of a computer program.

In any kind of collaboration, including HRC, trust has been identified as a significant factor that can either motivate or hinder cooperation, especially in scenarios characterized by incomplete or uncertain information. Despite the ubiquitous understanding of the concept of “trust”, its definition has proven to be complex due to the range of fields it applies to and the individual context in which it is studied. Several perspectives contribute to the understanding of trust, depending on the theoretical focus and the specific field of study it is applied to. While interpersonal trust is the most studied, there is also an increasing focus on the trust between humans and technology, which is essential in HRC.

This paper aims to delve into significant research questions about the function of trust in HRC: (1) What are the fundamental factors influencing trust in HRC? (2) Is it possible to identify demographic or contextual variables that affect the nature and dynamics of trust? and (3) Can trust in HRC be measured using psychophysiological signals?

The paper is organized as follows:

Section 1 introduces the concept of trust in HRC, highlighting its general framework and outlining the major research questions this paper intends to explore;

Section 2 provides a theoretical background, covering current research and explaining the importance of defining trust in HRC;

Section 3 describes the Materials and Methods, with a detailed explanation of the experimental design and methodologies used in the data analysis;

Section 4 presents the Results, providing the empirical findings from the experimental process;

Section 5 provides a comprehensive Discussion, exploring the implications of the research findings for trust in HRC; and

Section 6 offers the Conclusion and provides answers to the research questions.

2. Background

2.1. Theoretical Foundations of Trust

Trust is a crucial determinant of effective collaboration, in both human-to-human and human-to-machine interactions. Consequently, studies on trust modelling and measurement span a variety of disciplines, including psychology, sociology, biology, neuroscience, economics, management, and computer science [1,2,3,4,5,6,7,8,9,10]. These two approaches, modelling and measuring, share common knowledge but differ in purpose and considerations. Trust modelling aims to depict human trust behaviour, extrapolating individual responses to a universal level, whereas trust measurement encompasses various methods. These methods include subjective ratings, which involve individuals’ personal assessments of trustworthiness based on their perceptions and experiences, and objective approaches that quantify involuntary body responses to varying trust-related stimuli. While subjective ratings provide insights into individuals’ perceptions of trust, objective measurements offer quantifiable data that can be analysed and compared across different contexts.

The multidisciplinary nature of human trust research signifies that defining a unique modelling approach is complex. Various disciplines offer distinct perspectives on trust with specific views that, when integrated, offer a holistic perspective on the definition of trust. Sociology views trust as a subjective probability that another party will act in a manner that does not harm one’s interests amid uncertainty and ignorance [1], while philosophy frames trust as a risky action deriving from personal and moral relationships between entities [3]. Economics defines trust as the expectation of a beneficial outcome of a risky action taken under uncertain circumstances and based on informed and calculated incentives [4], and psychology characterizes trust as a cognitive learning process shaped by social experiences and the consequences of trusting behaviours [5]. Organizational management perceives trust as the willingness to take risks and be vulnerable in a relationship, predicated on factors like ability, integrity, and benevolence [6], and trust in international relations is viewed as the belief that the other party is trustworthy, with a willingness to reciprocate cooperation [7]. Within the realm of automation, trust is conceptualized as the attitude that one agent will achieve another agent’s goal in situations characterized by imperfect knowledge, uncertainty, and vulnerability [8], while in computing and networking contexts, trust is understood as the estimated subjective probability that an entity will exhibit reliable behaviour for specific operations under conditions of potential risk [9].

In general terms, trust is perceived as a relationship where a subject (trustor) interacts with an actor (trustee) under uncertain conditions to attain an anticipated goal. In this scenario, trust is manifested as the willingness of the trustor to take risks based on a subjective belief and a cognitive assessment of past experiences that a trustee will demonstrate reliable behaviour to optimize the trustor’s interest under uncertain situations [2].

This definition emphasizes several issues concerning the nature of trust:

A subjective belief: Trust perception heavily relies on individual interactions and the preconceived notion of the other’s behaviour.

To optimize the trustor’s interest: Profit or loss implications for both the trustor and trustee through their interactions reveal the influence of trust/distrust dynamics.

Interaction under uncertain conditions: The trustor’s actions rely on the expected behaviour of the trustee to optimize the anticipated outcome, but they may yield suboptimal or even harmful results.

Cognitive assessment of past experiences: Trust is dynamic in nature, initially influenced by preconceived subjective beliefs but evolving with ongoing interactions.

Authors have proposed varying dimensions of trust to explain different elements of trust development. Some differentiate between moralistic trust, based on previous beliefs about behaviour, and strategic trust, based on individual experiences [10]. Other perspectives delineate dispositional, situational, and learned trust as distinct categories, where dispositional traits (such as age, race, education, and cultural background) influence an individual’s predisposition to trust, situational factors acknowledge conditions influencing trust formation (e.g., variations in risk), and learned trust reflects iterative development shaped by past interactions [11]. Another approach categorizes the nature of trust into three facets: Referents of Trust, which encompass phenomena to which trust pertains, such as individual attitudes and social influences; Components of Trust, which encompass the sentiments inherent in trust, including consequential and motivational aspects; and Dimensions of Trust, outlining the evaluative aspects of trust, including its incremental and symmetrical nature. To avoid ambiguity between the terms “Trust dimensions” and “Dimensions of Trust”, we will use the denominations “Phenomenon-based”, “Sentiment-based”, and “Judgement-based” [12]. Trust is also described as a combination of individual trust (derived from one’s own personal characteristics and comprising logical trust and emotional trust) and relational trust, referring to the dimensions of trust that arise from the relationship with other entities [2].

Even if different authors use different classifications, it is possible to map similarities between different approaches. Despite omitting minor particularities, Table 1 shows the convergence of these classifications. For convenience, we will use the nomenclature defined in [11] (dispositional, situational, and learned trust) without loss of generality.

In essence, trust relies on multiple complex factors, encompassing both individual and relational aspects. Notwithstanding the diverse disciplinary perspectives and methodologies in researching trust, there is considerable consensus on the fundamental concept and dimensions of trust. However, understanding, modelling, and measuring trust, particularly in human-to-machine contexts, continue to pose considerable challenges.

Tackling these challenges requires an in-depth understanding of multifaceted trust dynamics. The primary challenge lies in encapsulating the complexities of human trust in a computational model, given its subjectivity and dynamic nature. Quantifying trust is another significant obstacle, as trust is an internal and deeply personal emotion. The novelty of trust in the Human–Robot Collaboration domain implies a lack of historical data and testing methodologies to build the trust models upon. Furthermore, implementing these models in real-world scenarios is another challenge due to constraints related to resources, variability in responses and the need for instantaneous adaptation. Despite these hurdles, the potential rewards of successfully modelling and measuring trust in Human–Robot Collaborations—including enhanced efficiency, increased user acceptance and improved safety—are immense.

2.2. Trust in Human–Robot Collaboration

Within the field of Human–Robot Collaboration, trust plays a crucial role and is considered a significant determining factor. Various studies, including [11,13], have dedicated efforts to investigate and identify the factors that influence trust in this collaborative context. These factors have not only been structured within a single matrix but also classified based on their origins and dimensions of influence, which are instrumental in facilitating trust and designing experimental protocols.

Authors in [14] provide a series of controllable factors with correlation to trust:

Robot behaviour: This factor relates to the necessity for robot companions to possess social skills and be capable of real-time adaptability, taking into account individual human preferences [15,16]. In the manufacturing domain, trust variation has been studied in correlation to changes in robot performance based on the human operator’s muscular fatigue [17].

Reliability: An experiential correlation between subjective and objective trust measures was demonstrated through a series of system–failure diagnostic trials [18].

Level of automation: Consistent with task difficulty and complexity and corresponding automation levels, alterations in operator trust levels were noted [19].

Proximity: The physical or virtual presence of a robot significantly influences human perceptions and task execution efficiency [20].

Adaptability: A robot teammate capable of emulating the behaviours and teamwork strategies observed in human teams has a positive influence on trustworthiness and performance [21].

Anthropomorphism: Research in [22] showed that anthropomorphic interfaces are prone to greater trust resilience. However, the uncanny valley phenomenon described in [23] states that a person’s reaction to a humanlike robot would suddenly transition from empathy to repulsion as it neared, but did not achieve, a lifelike appearance.

Communication: Trust levels fluctuated based on the transparency and detail encapsulated within robot-to-human communication [24,25].

Task type: The task variability was recorded to influence interaction performance, preference, engagement, and satisfaction [26].

Less controllable dimensions of trust include dispositional trust that is influenced exclusively by human traits and the organizational factors linked to the human–robot team [11,13]. These factors exhibit limited flexibility as they depend directly on the individual or the organizational culture. On the other hand, situational trust is controllable and heavily dependent on various factors, such as the characteristics of the task being developed, making it possible to manipulate it based on the experiment’s objective [11,13].

Moreover, trust manifests through brainwave patterns and physiological signals, making their use in assessing trust crucial [27,28]. Biologically driven, these elements foster a more symbiotic interaction, allowing machines to adapt to human trust levels.

Notably, trust in HRC is dynamic and influenced by a myriad of factors. Understanding the various dimensions of trust and the controllable and uncontrollable factors encompassed allows for the creation of experimental protocols and strategies to enhance trust, a hypothesis evident in studies looking into the triad of operator, robot, and environment [13]. The importance of fostering and maintaining trust in the HRC domain is clear, especially considering the complexity of trust in the ever-evolving landscape of human–robot interaction.

2.3. Trust Measuring Using Different and Combined Psychophysiological Signals

Studies on trust have traditionally been situated within the context of interpersonal relationships, primarily utilizing various questionnaires to evaluate levels of trust [29,30,31]. However, due to the arrival of automatic systems and the decreased cost of acquiring and analysing psychophysiological signals, focus has shifted towards examining these types of signals in response to specific stimuli in a bid to lower the subjectivity and potential biases associated with questionnaire-based approaches. Recent studies, like [9,32,33,34], have been centred on the usage of psychophysiological measurements in the study of human trust.

The choice of these psychophysiological sensors can differ, depending on which human biological systems (central and peripheral nervous systems) they are applied to. A common pattern has emerged from studies in which EEG is the most used signal to measure central nervous system activity, with fMRI closely behind it, the latter being more extensively used in the context of interpersonal trust [35]. EEG analysis is increasingly being utilized in human–robot interaction evaluation and brain–computer interfaces [36], as it provides the means to create real-time non-interruptive evaluation systems enabling the assessment of human mental states such as attention, workload, and fatigue during interaction [37,38,39]. Additionally, attempts have been made to study trust through EEG measurements which only look at event-related potentials (ERPs), but ERP has proven to be unsuitable for real-time trust level sensing during human–machine interaction due to the difficulty in identifying triggers [33,39].

Similarly, sensors measuring signals from the peripheral nervous system, notably ECG (electrocardiography) and GSR (galvanic skin response), have been frequently used in assessing trust [35]. GSR is a classic psychophysiological signal that captures arousal based on the conductivity of the skin’s surface, which is not under conscious control but is modulated by the sympathetic nervous system; it has seen use in measuring stress, anxiety, and cognitive load [40]. Some research revealed that the net phasic component, as well as the maximum value of phasic activity in GSR, might play a critical role in trust detection [33].

In contrast, the use of single signals most commonly involves only EEG, followed by fMRI [35]. However, some studies have proposed that combining different psychophysiological signals (like GSR, ECG, EEG, etc.) improves the depth, breadth, and temporal resolution of results [41,42]. Interestingly, pupillometry has been recently highlighted as a viable method for detecting human trust, revealing that trust levels may be influenced by changes in partners’ pupil dilation [43,44,45].

In short, the current state of the art exhibits an increasing trend towards the use of psychophysiological signals emanating from both central and peripheral nervous systems. Also, it showcases an interest in combining these signals to create more robust trust detection mechanisms with an improved breadth and depth of results.

3. Materials and Methods

The study of the dynamics that determine trust is a topic of great interest, although there is limited collected data available for analysis to draw conclusions from. In our case, the objective of the experimentation is to design and implement a process that can identify in real time if there has been a breach of trust between the operator and their robot companion (cobot) and to understand the factors that cause these variations in trust and identify the biological reactions related to it.

To minimize the inclusion of excessive variables that could obscure the true nature of trust, we assume that the cobot is a non-humanoid robotic arm with limited interaction capabilities. Thus, the only interaction with the human counterpart is reduced to the effectiveness and performance of the tasks under its control. Additionally, in order to avoid contextual randomness, we decided that every participant should interact with the system in a similar environment, and thus, we determined that the experimental study would focus solely on the dispositional and learned dimensions of trust.

Consequently, in the context of this research, trust is defined as the human’s expectation that the robot will exhibit appropriate behaviour, following the established guidelines for the performance of its work in an orderly manner, without failure or errors that could be interpreted as dangerous to the person, the robot itself, or its work environment.

Our strategic planning for the experimental process aimed to achieve the following objectives:

Systematically collect a diverse array of relevant psychophysiological signals, emphasizing signal cleanliness and minimizing signal randomness;

Investigate the influence of human traits on various aspects of human–machine trust, specifically in the context of dispositional and learned trust dimensions;

Examine the role of the system’s capabilities, especially predictability and reliability, in shaping the evolutionary process of trust.

In the following subsections, the conceptual design of the experimentation, as well as the equipment and method used for this research, are described in detail.

3.1. Conceptual Design of the Experiment

Considering the objectives to be satisfied and to expose participants to different trust stimuli towards machines, this experimental stage has been designed as a game following a variant of the Prisoner’s Dilemma, known as the Inspection Game. These types of games are mathematical models that represent a non-cooperative situation where an inspector must identify whether their counterpart adheres to the established guidelines or, on the contrary, shirks work duties. In this case, the participants take the role of the inspector, and their mission is to detect if their robotic counterpart is carrying out its job adequately.

Simplicity has been kept to a maximum during the design of this experiment; thus, participants interact with a single screen that provides them with the sensor feedback. The trust decision process is implemented via a single command button. Participants are exposed to 120 iterations during an approximate time of 30 min. However, the first 20 iterations are part of the learning and familiarization process and, thus, only 100 iterations per participant are considered in the experimental analysis.

Unknown to the participants, two experimental models were performed. Both had the same trustworthiness (the virtual sensor provided a correct answer 75 out of the 100 iterations), but in one of the cases the machine worked perfectly during the first 20 iterations, whereas the second model presented 50% success rate during the same first 20 iterations.
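As a rough illustration of the two reliability schedules described above, the following minimal sketch builds a sequence of 100 sensor outcomes with 75 correct readings overall, where only the opening 20 iterations differ between models. The function name `build_schedule`, its parameters, and the random placement of the remaining correct readings are assumptions made for illustration; the source does not specify how correct and incorrect readings were ordered after the opening block.

```python
import random


def build_schedule(first_block_correct, total=100, total_correct=75,
                   first_block=20, seed=0):
    """Return a list of booleans, True where the virtual sensor is correct.

    Hypothetical helper: only the totals (75 of 100) and the behaviour of
    the first 20 iterations come from the text; the ordering is assumed.
    """
    rng = random.Random(seed)
    # Opening block with the requested number of correct readings.
    head = ([True] * first_block_correct
            + [False] * (first_block - first_block_correct))
    rng.shuffle(head)
    # The remaining iterations make up the difference so that the
    # overall number of correct readings stays fixed.
    rest_len = total - first_block
    rest_correct = total_correct - first_block_correct
    tail = [True] * rest_correct + [False] * (rest_len - rest_correct)
    rng.shuffle(tail)
    return head + tail


# One model starts with a perfectly working sensor, the other at 50%.
perfect_start = build_schedule(first_block_correct=20)
chaotic_start = build_schedule(first_block_correct=10)
```

Both schedules share the same overall reliability, so any difference in participant behaviour can be attributed to the order of events rather than to the total number of errors.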

3.2. Experimental Sample

The experimental sample must be selected according to the population in which it is desired to validate the experimentation. The experimentation was conducted with a total of 55 individuals, of which only 51 are considered valid (the remainder were lost due to purely technical reasons such as poor data recording).

In this case, since the final objective is still oriented to an industrial work environment, the target population is very broad, since it covers all individuals of active working age. To refine the profile of the desired sample, its implications within the broader research context must be considered. Specifically, the demographic distribution of the sample has a direct impact on the collection of human traits and their eventual influence in the dispositional dimension of trust.

Taking into account these considerations, three factors are determined with which to compose the selected sample: Gender (Male/Female), Age (Under and over 40 years old) and Role in the work team (Technical/Non-technical). The first two belong to the category of human traits, while the third falls into the scope of the work environment.

Figure 1 shows the distribution of the final 51 participants in this experimental stage according to the indicated categories, revealing balanced samples, with the exception of the age segment.

3.3. Equipment Used

Given the specific objectives of this experimental phase, it may seem prudent to acquire as many psychophysiological signals as possible and subsequently discriminate which ones are truly significant for the study of trust. However, this approach presents two significant challenges. Firstly, the acquisition of a multitude of signals for subsequent treatment without prior consideration could pose serious logistical problems for the experimental design and significant difficulties in their subsequent analysis. Secondly, some signals could, due to their acquisition methods, be incompatible with real activity in industrial environments and would therefore not align with the approach of this research.

After analysing the different bibliographic sources referring to previous studies, as well as the signals used during their execution and the acquisition systems used in each case, it is determined that the most appropriate signals to propose are those linked to:

Brain activity (EEG): Rigid headband with twelve dry electrodes for the reading of brain activity in anterior frontal regions AF[7-8], frontopolar Fp[1-2], frontal F[3-4], parietal P[3-4], parieto-occipital PO[7-8] and occipital O[1-2]. The numbers in brackets are referring to specific locations on the scalp where the electrodes are placed for the reading of brain activity based on the International 10–20 system for EEG.

Galvanic skin response (GSR): Electrodes positioned on the index and ring fingers of the non-dominant hand. In a state of excitement, sweat glands are activated, varying the electrical resistance of the skin. A low-voltage current applied between both points allows for the detection of these variations.

Respiration (RSP): Elastomeric band located at the height of the diaphragm. It emits small electrical signals when its extension varies, so it is possible to identify inhalation and exhalation cycles. These provide information about the respiratory frequency, tidal volume, and characteristics of the respiratory cycle.

Pupillometry (PLP): Glasses equipped with eye tracking sensors which enable the identification of the fixation point of the gaze or eye movement refixation saccades. In addition to the direction of the gaze, they also provide information about the diameter of the pupil of each eye, which allows for the derivation of other parameters such as blinking frequency.
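To make the bracket notation used for the EEG electrodes explicit, the short sketch below expands each region prefix and its pair of indices into the twelve individual channel labels. The dictionary `EEG_SPEC`, the helper `expand_pairs`, and the channel names given for the non-EEG signals are hypothetical and serve only to illustrate the set of signals listed above.

```python
def expand_pairs(spec):
    """Expand {'AF': (7, 8), ...} into ['AF7', 'AF8', ...]."""
    channels = []
    for prefix, (left, right) in spec.items():
        channels.extend([f"{prefix}{left}", f"{prefix}{right}"])
    return channels


# Region prefixes and electrode indices as listed in the text.
EEG_SPEC = {
    "AF": (7, 8),   # anterior frontal
    "Fp": (1, 2),   # frontopolar
    "F": (3, 4),    # frontal
    "P": (3, 4),    # parietal
    "PO": (7, 8),   # parieto-occipital
    "O": (1, 2),    # occipital
}

EEG_CHANNELS = expand_pairs(EEG_SPEC)

# Hypothetical channel names for the remaining signals.
SIGNALS = {
    "EEG": EEG_CHANNELS,
    "GSR": ["skin_conductance"],
    "RSP": ["band_extension"],
    "PLP": ["pupil_diameter_left", "pupil_diameter_right"],
}
```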

3.4. Experimental Process

In order to ensure that the experimental phase aligns with the established objectives, it is imperative to define a set of guidelines during execution that endorse the accurate execution of the process. The tasks earmarked for experimentation are delineated below:

Participants reception: Participants are briefed about the project and the experimentation, ensuring they are informed about the purpose, the physiological signals that will be collected, and the treatment they will receive. They are assured of data privacy through pseudo-anonymization and told of their right to opt out anytime. Once they consent, they provide demographic data and complete a technology trust survey. They are then familiarized with the experimental setup and equipment to capture psychophysiological signals (see Figure 2). Participants are instructed to minimize movement during the experiment for data quality control.

Biocalibration: The biocalibration phase ensures the equipment is accurately tuned to the individuals’ varying physiological responses. This adjustment considers that, without context, a specific value cannot conclusively indicate high or low intensity. This phase helps define the participant’s normal thresholds in varied states of relaxation and excitement. After equipping the participants with the measuring gear, they perform tasks designed to both stimulate and soothe their signals, thereby minimizing uncontrolled disturbances.

Familiarization: The familiarization stage aims to ensure that participants completely understand their tasks and possible implications during the experiment. In this phase, participants repetitively interact with the machine to understand its workings, ensuring they can easily express their trust or mistrust. Unlike the experimental stage, they are made aware of the sensor’s performance, helping them form trust-based responses. This process also helps them become accustomed to the screens displaying crucial information during the interaction process.

Experimental process: During the experiment, participants interact with the machine and gauge the sensor’s trustworthiness. They are presented with a system state (“well lubricated” or “poorly lubricated”) and the default action matches the system state. They only interact if choosing to disregard the sensor. They are then informed of the real machine state and the result of their decision. This cycle repeats a hundred times with varying patterns unknown to the participants (see

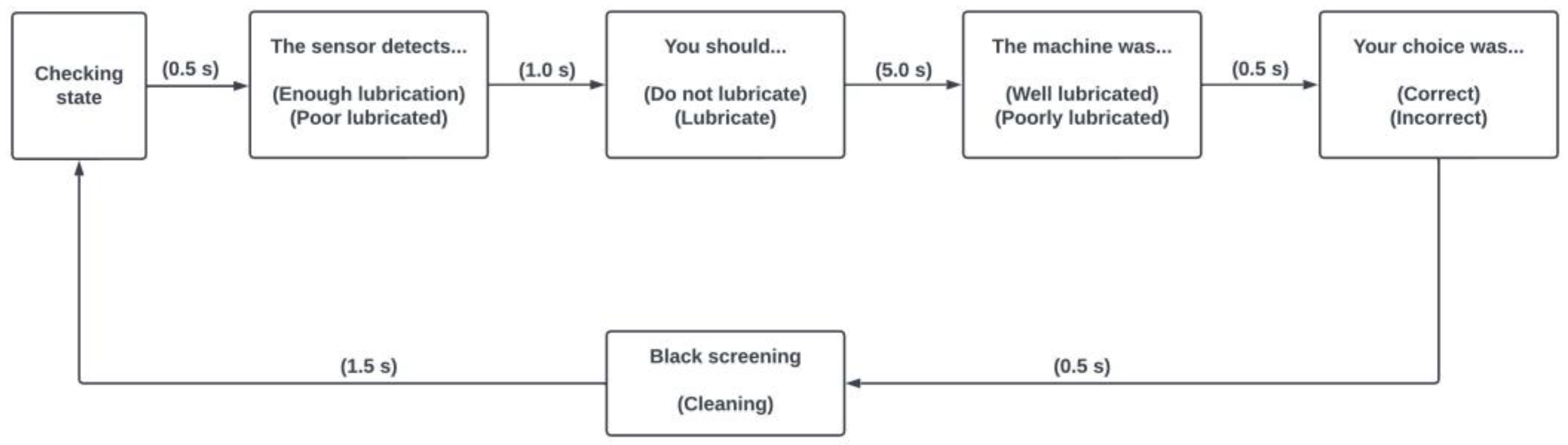

Figure 3).

Figure 3. Sequence of events in a single experimental cycle.


Informal interview: A brief interview follows the experimental phase for each participant to assess their experience, identify disruptions, and understand areas of future improvement. It is especially important for participants presenting anomalies in signal visualization or behaviour. It helps filter data from those negatively affected by conditions like discomfort with measuring instruments or misunderstanding their tasks. This interview also aids in understanding participants’ perception of the system’s reliability and identifying personal traits influencing their perception.
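The decision logic of a single experimental cycle, as described in the experimental process step above, can be sketched as follows. This is a minimal illustration under stated assumptions: the names `CycleResult` and `run_cycle` are hypothetical, and the sketch only captures the logic that the default action follows the sensor unless the participant overrides it.

```python
from dataclasses import dataclass

STATES = ("well lubricated", "poorly lubricated")


@dataclass
class CycleResult:
    sensor_reading: str     # state reported by the virtual sensor
    action: str             # state the participant finally acted on
    trusted: bool           # True if the participant did not override
    decision_correct: bool  # True if the action matched the real state


def run_cycle(sensor_reading, true_state, override):
    """Resolve one cycle: the default action follows the sensor reading,
    and the participant only intervenes to disregard it."""
    if sensor_reading not in STATES or true_state not in STATES:
        raise ValueError("unknown system state")
    if override:
        # Disregarding the sensor means acting on the opposite state.
        action = STATES[1] if sensor_reading == STATES[0] else STATES[0]
    else:
        action = sensor_reading
    return CycleResult(
        sensor_reading=sensor_reading,
        action=action,
        trusted=not override,
        decision_correct=(action == true_state),
    )


# The sensor is wrong and the participant correctly disregards it.
result = run_cycle("well lubricated", "poorly lubricated", override=True)
```

Recording the `trusted` flag for each of the hundred cycles gives the raw behavioural data from which trust rates can later be derived.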

4. Results

The following section comprises the experimental results, divided according to the research interests and main findings.

4.1. Factors Affecting Dispositional Trust

We utilized information from a concise affinity survey during the participants’ orientation to identify potential factors influencing their natural inclination to trust in a human–robot environment. These data were collected before any interaction with the designed experiment, ensuring they remain unaffected by procedural biases in the experiment and reflect the individuals’ intrinsic perspectives on trust in robot collaboration.

Additionally, each participant provided demographic information, including gender, age, and the technological intensity of their work role, along with a self-assessment of their trust in robots. At this stage, we investigated potential relationships between these variables and the ex-ante self-assessed trust in robots.

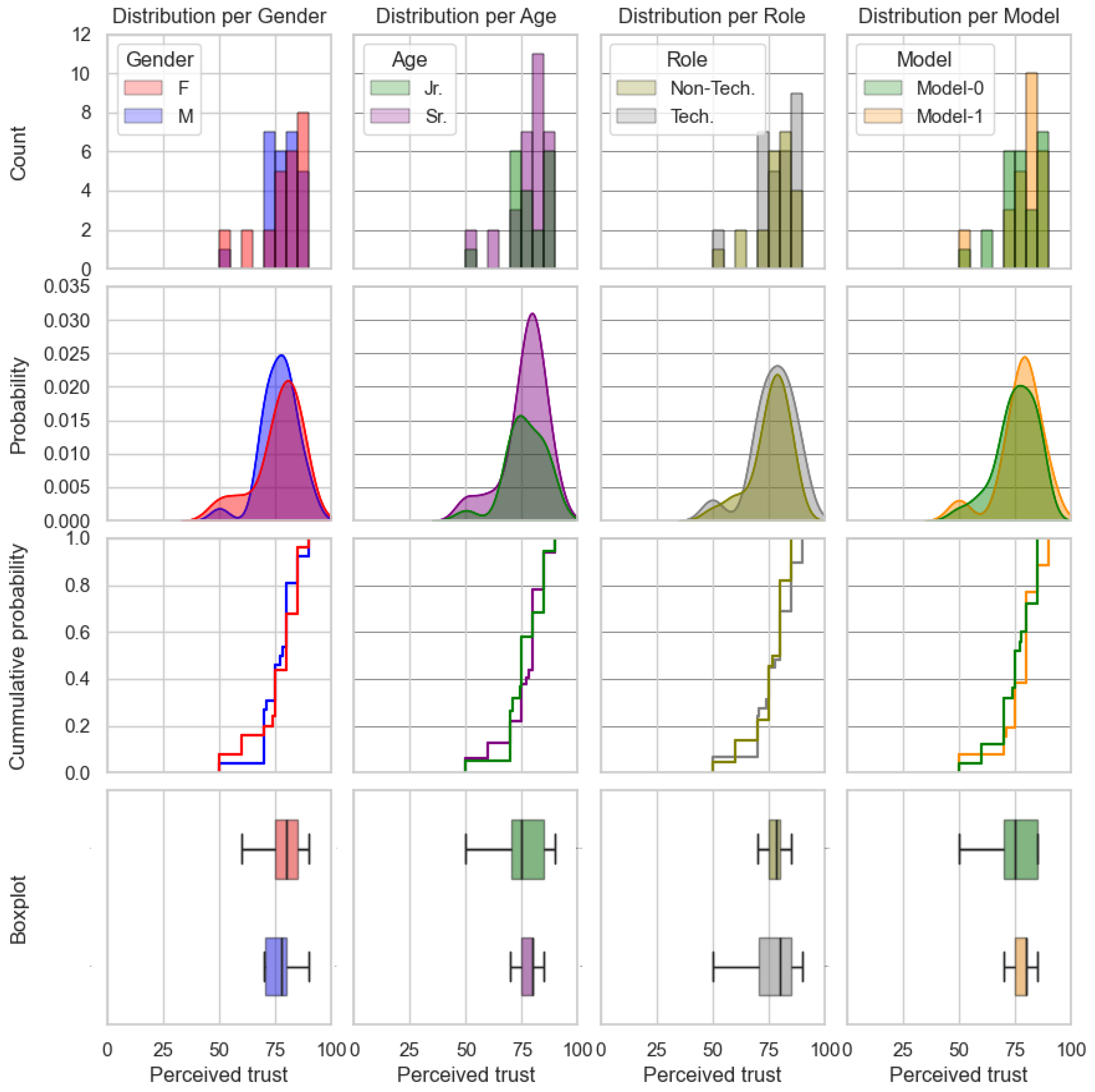

Figure 4 presents descriptive charts (histogram, probability density function—PDF—, cumulative distribution function—CDF—, and boxplot chart) illustrating dispositional trust across three dimensions of interest: gender (male versus female), age (juniors—younger than 40 years old—versus seniors—older than 40 years old), and work profile (highly technical versus non-technical).

Figure 4 highlights differences among the analyzed demographic groups. For instance, both the histogram and PDF reveal a noteworthy peak at the 70–80% trust level for females, and a similar peak is observed for juniors. This pattern is absent in their respective counterparts (males and seniors). The boxplot chart also indicates that senior individuals exhibit a slightly higher mean trust level than their younger counterparts.

To validate these perceptions, and considering the non-normal distribution of the data, we conducted a Mann–Whitney U test to check for differences in the distribution of dispositional trust between the gender, age, and role groups. Table 2 presents the results of the tests, indicating that, while differences may exist, none of the demographic factors alone reaches statistical significance.
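For readers unfamiliar with the test, the following sketch computes the Mann–Whitney U statistic and an approximate two-sided p-value for two independent groups. It is a simplified illustration, not the analysis code used in the study: it relies on the normal approximation without tie or continuity correction, the function names are hypothetical, and the example scores are invented. An established statistics library would be preferable for real analyses.

```python
import math


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U, approximate two-sided p-value) for samples x and y."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    rank_sum_x = sum(ranks[:n1])
    u1 = rank_sum_x - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    u = min(u1, u2)
    # Normal approximation of the null distribution of U (no tie correction).
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return u, p_value


# Invented self-assessed trust scores (%) for two groups.
group_a = [60, 70, 75, 80, 85]
group_b = [55, 65, 70, 75, 90]
u_stat, p_val = mann_whitney_u(group_a, group_b)
```

Because the test works on ranks rather than raw values, it does not require the trust scores to follow a normal distribution.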

4.2. Factors Affecting Perceived Trust

After the experimental session, we instructed the participants to assess the system’s trustworthiness. It is important to note that, regardless of the interaction model the participants experienced, the system behaved correctly 75% of the time, resulting in a consistent objective trust level across all cases, which allowed us to compare results.

Once more, we examined the distribution of perceived trust and explored the influence of demographic factors on this variable, mirroring the analysis conducted for dispositional trust. However, given that these assessments were ex post, we also considered the impact of the interaction model to which participants were exposed.

Figure 5 and Table 3 provide a summary of these results.

Similarly to dispositional trust, Figure 5 shows some possible differences across demographic groups, such as higher trust values for senior participants and for the subjects exposed to the experimental Model-1. However, the results of the Mann–Whitney tests compiled in Table 3 show that these differences are not statistically significant.

4.3. Influence of Past Interaction in Trust Dynamics

The experimental process exposed participants to a series of interactions with an overall 75% reliability. Two experimental models were conceived to alter the sequence in which participants faced the test. Both models alternated between a fully reliable scenario and a chaotic sequence where only 50% of the readings were correct. Model-0 began with trustworthy iterations, while Model-1 presented chaotic iterations first. This setup allows us to investigate whether the order in which participants faced the test influences the propensity to trust.

To integrate the interaction results, we counted the times participants chose to trust the sensor’s reading on each experimental stage and divided the result by the number of interactions on that stage, obtaining the trust rate for each stage. These results were aggregated according to the experimental model they interacted with.
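The trust rate described above is simply the number of times the sensor reading was accepted in a stage divided by the number of interactions in that stage. A minimal sketch of this computation for one participant follows; the function name `trust_rates`, the stage labels, and the example decisions are assumptions made for illustration.

```python
from collections import defaultdict


def trust_rates(decisions):
    """Compute the trust rate per experimental stage.

    decisions: iterable of (stage, trusted) pairs for one participant,
    where trusted is True when the sensor reading was accepted.
    """
    trusted_count = defaultdict(int)
    total_count = defaultdict(int)
    for stage, trusted in decisions:
        total_count[stage] += 1
        trusted_count[stage] += int(trusted)
    return {stage: trusted_count[stage] / total_count[stage]
            for stage in total_count}


# Invented decisions for a single participant.
example = [
    ("reliable", True), ("reliable", True),
    ("chaotic", True), ("chaotic", False),
]
rates = trust_rates(example)
```

Aggregating by experimental model then amounts to collecting these per-participant rates into one list per model and stage.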

Figure 6 shows the distribution of trust rates among the different models and experimental stages, along with the mean trust rate for each case.

To verify the impact of past interactions on the formation of trust, we performed a statistical test on the trust rate distributions of the two experimental models, both for the perfectly working sensor scenario and for the randomly working sensor scenario. Since Figure 6 shows that the trust rate distribution is not normal, we conducted the Mann–Whitney U test to check the significance of the trust rate differences between experimental models. Table 4 shows the results of these tests, emphasizing those with statistical significance.

4.4. Universality of Trust Detection Models

Deciphering trust in the intricate human–robot dynamics requires many variables. The physiological signals captured during the experiment, including 12 detailed signals related to brain activity (EEG), skin conductance response (GSR), respiratory band extension (RSP), and pupil diameter variations (PLP), offer a variety of information to train a suitable model. However, there are some considerations to ponder in order to choose the most suitable approach. We analyzed three distinct yet complementary approaches in creating a trust detection model.

The General Approach involved constructing an expansive dataset comprising all participants’ data and iterations. This method allowed us to leverage a broad dataset, providing a comprehensive overview of general trust trends. However, it introduced the challenge of potential information leaks between the training and test sets, as participant-specific information was included in both sets. Under this approach, a single model covers all the trust detection needed for every participant.

In the Leave-One-Out Approach, we addressed the risk of information leaks by training algorithms on the complete dataset except for the data of the participant under test. While this approach maintained a substantial amount of data, it sacrificed personalized information crucial for trust detection, leaving out precise data that could contain the most valuable information. This approach created a specific classifier for each left-out participant and the results were later aggregated.

The Individual Approach focused on the uniqueness of trust reactions. By exclusively utilizing each participant’s individual dataset, this method created personalized algorithms for each individual. Despite having the smallest dataset for training, the Individual Approach allowed for the detection of specific trust nuances in each participant. As in the previous case, a specific model was created for every participant.
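The difference between the three approaches lies mainly in how the training and test sets are built. The sketch below illustrates one possible reading of each scheme; the function names, the `participant` key, the 25% test fraction, and the toy samples are assumptions made for illustration and are not taken from the study.

```python
import random


def general_split(samples, test_fraction=0.25, seed=0):
    """General Approach: pool all samples and split them at random.

    The same participant can appear in both sets (possible leakage).
    """
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return [(shuffled[:cut], shuffled[cut:])]


def leave_one_out_splits(samples):
    """Leave-One-Out Approach: one split per held-out participant."""
    participants = sorted({s["participant"] for s in samples})
    return [
        ([s for s in samples if s["participant"] != p],
         [s for s in samples if s["participant"] == p])
        for p in participants
    ]


def individual_splits(samples, test_fraction=0.25, seed=0):
    """Individual Approach: one split per participant, own data only."""
    participants = sorted({s["participant"] for s in samples})
    splits = []
    for p in participants:
        own = [s for s in samples if s["participant"] == p]
        splits.extend(general_split(own, test_fraction, seed))
    return splits


# Toy dataset: 3 hypothetical participants with 8 iterations each.
samples = [{"participant": p, "iteration": i}
           for p in ("P1", "P2", "P3") for i in range(8)]

for name, splits in [("general", general_split(samples)),
                     ("leave-one-out", leave_one_out_splits(samples)),
                     ("individual", individual_splits(samples))]:
    print(name, [(len(train), len(test)) for train, test in splits])
```

The General Approach yields a single model, whereas the other two yield one model per participant, which matches the description given above.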

In summary, we created over 100 distinct models through an iterative process involving the use of a pipeline grid search to explore various algorithms, including K-Nearest Neighbors, Support Vector Machines, and Random Forests, and fine-tuning the corresponding hyperparameters to optimize the algorithmic result.

Table 5 presents key metrics, including minimum, mean, and maximum F1 scores, obtained with each training model, showcasing the strengths and nuances of each approach.
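As a reminder of how such metrics are obtained, the F1 score is the harmonic mean of precision and recall, which is equivalent to 2TP / (2TP + FP + FN). The sketch below computes it for a binary classifier and summarizes a set of per-model scores; the labels and score values are invented for illustration and do not reproduce the values in Table 5.

```python
def f1_score(y_true, y_pred, positive=1):
    """Binary F1 score: 2*TP / (2*TP + FP + FN)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def summarize(scores):
    """Minimum, mean, and maximum of a list of F1 scores."""
    return {"min": min(scores),
            "mean": sum(scores) / len(scores),
            "max": max(scores)}


# Invented labels (1 = trust, 0 = distrust) for a single model.
score = f1_score([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])

# Invented per-participant scores for one training approach.
summary = summarize([0.5, 0.75, 1.0])
print(round(score, 3), summary)
```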

4.5. Universality of Signals Used for Trust Detection

During the experiment, we recorded a large variety of signals, including brain activity, electrodermal response, respiration, and pupillometry. However, whether these signals are valuable to detect general variations of trust is unclear. We seek to discern whether these signals exhibit consistent patterns applicable to the detection of trust variations in all the participants or whether they may be referred to as key elements to detect trust variations in certain individuals.

To analyze this issue, we systematically computed the frequency with which each signal contributed to the general analysis approach and, concurrently, how frequently it featured in individualized models.
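One simple way to carry out such a frequency count is sketched below. It assumes that each trained model exposes the list of signals it relies on (for example, after a feature selection step); how contribution was actually determined is not specified here, so the function name `signal_usage` and the example signal lists are hypothetical.

```python
from collections import Counter


def signal_usage(selected_by_model):
    """Fraction of models in which each signal appears.

    `selected_by_model` holds one list of signal names per model.
    """
    counts = Counter(sig for signals in selected_by_model
                     for sig in set(signals))
    n_models = len(selected_by_model)
    return {sig: count / n_models for sig, count in counts.items()}


# Hypothetical signals retained by four individualized models.
individual_models = [
    ["PLP", "RSP"],
    ["PLP", "GSR"],
    ["PLP", "RSP", "PO7"],
    ["RSP"],
]
# Hypothetical signals retained by the general model.
general_model = ["PO7", "PO8", "GSR"]

usage = signal_usage(individual_models)
for sig in sorted(set(usage) | set(general_model)):
    print(sig, usage.get(sig, 0.0), sig in general_model)
```

Comparing the per-signal fractions with membership in the general model gives the kind of side-by-side view summarized in a figure such as Figure 7.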

Figure 7 succinctly encapsulates the outcomes, providing insights into the role of each signal in both general and individualized trust detection models.

5. Discussion

Throughout this article, we have presented the different dimensions of trust (dispositional, situational, and learned) and outlined the experimental process undertaken to determine the factors influencing them.

The literature consulted indicates a marked influence of personal factors on dispositional trust. Nevertheless, our empirical results contradicted conventional expectations by revealing no statistically significant correlations between these customary demographic indicators and the participants’ dispositional trust in automated systems. Since our analysis focused on gender, age, and occupational role, we suggest further analysis of the potential influence of unexplored demographic aspects. Variables such as social status, educational background, and nationality may yield significant results. Their potential significance in shaping dispositional trust cannot be disregarded, and further studies are needed to analyze their impact.

To analyze the evolution and dynamics of learned trust, we designed two experimental models with similar iterations arranged in different orders, thus allowing for the detection of variations in trust evolution dynamics. As indicated by statistical tests, the behavior of both groups is disparate. Specifically, participants who start with a series of iterations exhibiting random behavior (participants interacting with Model-1) experience a higher dynamic variation compared to their counterparts (participants interacting with Model-0), thus exhibiting higher trust levels when the system functions properly and lower trust levels when the system’s performance is erratic. In this context, it could be argued that the initial iterations with the system significantly influence the trajectory of future iterations. Drawing an analogy with classical mechanics, it can be asserted that the initial iterations are critical in determining the level of “trust inertia” individuals have towards automated systems and their future interactions.

Regarding the third component of trust, situational trust, its influence is not directly covered by the experimentation and analysis conducted in this research. However, a small portion of it is reflected in the link between post-trust perception and the interaction model to which each participant has been subjected. This is justified by considering that this combination incorporates elements that are external to the individual into a static value of trust and, therefore, cannot correspond to dispositional (individual) trust or learned (dynamic) trust. The analyses performed highlight a low significance in the link between these elements. This is mainly because this factor is not the main focus of the study, and thus, the experimental process is not designed to emphasize its influence.

Regarding mathematical models aimed at trust detection, we designed a common model for all participants using the generalist approach. The Leave-One-Out approach is also suitable, in general terms, for any individual and, in this regard, allows the same universalization as the general model. These models offer a moderate performance, achieving an F1 score close to 0.61–0.62. On the other hand, individualized models show much higher performance than these approaches, achieving F1 values that surpass those of the previous models in all cases, reaching an average F1 score of 0.76 and even reaching occasional values of 0.92 on this score. This indicates that, compared to generalist models, individualized models offer better performance in detecting trust violation and recovery situations. However, the implementation of this approach faces other challenges, such as the need to train independent algorithms for each subject.

The influence of the different psychophysiological signals in the proposed prediction models must also be considered. Variables such as pupil dilation or changes in breathing patterns play a fundamental role in implementing personalized trust detection models, but lack significance in the generalist model. Conversely, signals like brain activity in the parietal–occipital lobe (electrodes PO7 and PO8) contribute to the generalist model but have very little influence on personalized models. Although the cause of these phenomena has not been studied in detail, it can be inferred that signals included in the generalist model exhibit similar behavior in most individuals, but these changes are not necessarily the most sensitive to variations in trust situations.

Having outlined the scope of the results obtained, it is important to acknowledge that while this research may not fully capture the entirety of trust dynamics, it offers valuable insights that could have a significant impact if implemented in industrial environments.

The findings of our study underscore the significance of initial interactions in shaping the trajectory of trust dynamics in Human–Robot Collaboration (HRC). While it is essential to recognize the critical role of first interactions in trust formation, it is equally crucial to acknowledge the potential challenges posed if the machine’s trustworthiness diminishes over time. Monitoring trust variations in real time may offer insights into detecting deteriorating robot behavior. By incorporating mechanisms to detect and respond to changes in trust, developers can proactively address potential issues and maintain user confidence in robotic systems. These responses to changes in trust may include automatic modifications in robotic behavior, such as reduced operation speed, increased safety distance, and additional communication and feedback channels, such as alarms or flashing lights.

Additionally, another noteworthy aspect to consider is how the robot’s behavior might adapt if it receives indications that it is not trusted by the human user in its actions. This feedback loop could serve as a basis for extending trust models and developing more sophisticated algorithms for adaptive robot behavior. By incorporating feedback mechanisms that allow robots to adjust their actions based on user trust levels, we could potentially enhance the overall user experience and foster stronger collaboration between humans and robots in various contexts.

In summary, our study has clarified the intricate interplay of factors influencing trust in HRC, emphasizing the importance of initial interactions, the need for the real-time monitoring of trust dynamics, and the potential for adaptive robot behavior. These insights offer valuable guidance for developers and researchers seeking to design reliable, robust, and trusted human–robot collaborative environments.

6. Conclusions

To conclude our study, we need to review the core research questions that guided our research regarding the nature of trust in Human–Robot Collaboration.

First, we aimed to identify the fundamental factors affecting trust in HRC environments. We addressed this issue with an extensive literature review that revealed three distinct dimensions of trust: dispositional, situational, and learned. This review also stressed several demographic aspects that influence the dispositional dimension of trust.

The second question aimed to identify specific variables that could influence the previously disclosed dimensions of trust. To answer this question, we created a specific experimental process that allowed us to identify potential factors affecting dispositional and learned trust. The literature suggested a marked influence of personal factors such as gender, age, and occupational roles on dispositional trust. However, contrary to conventional expectations, our empirical findings did not reveal any statistically significant correlations between these traditional demographic markers and participants’ dispositional trust in automated systems. This unexpected result suggests that broader demographic factors (social status, educational background, and nationality), which were not explicitly considered in our study, deserve further investigation. On the other hand, the study showed that the first iterations between humans and robots play a crucial role in the evolution of trust dynamics. Presumably, this indicates that the learned dimension of trust is more sensitive and susceptible to change than the other dimensions.

The third and final research question aimed to identify crucial human signals for measuring trust in HRC and understand their contributions to the development of trust detection models. Following previous works detailed in the literature review, our exploration into psychophysiological signals encompassed brain (EEG), electrodermal (GSR), respiratory (RSP), and ocular (PLP) activities. We focused on three different approaches, varying from generic to individualized trust models. Results revealed the difficulty of extrapolating a general model of trust. On the one hand, the individualized models worked better than the general model, and, on the other hand, several individually significant psychophysiological signals showed very particular responses and, thus, proved irrelevant in the general model. This issue emphasizes the very complex and personal nature of trust.

Our study effectively addresses the research questions, shedding light on the intricate interplay of factors influencing trust, the temporal dynamics of trust evolution, and the optimal human signals for trust measurement in HRC. However, it is worth mentioning that while our research provides valuable insights into the complex dynamics of trust in Human–Robot Collaboration, there are areas that remain inconclusive. For example, our discussion does not address the potential implications of feedback mechanisms on robot behavior based on the trust levels perceived by human inspectors. Incorporating such feedback into our trust detection models could enhance the adaptability of robots in response to changing trust dynamics, thus optimizing collaboration and performance in human–robot interaction scenarios. Nevertheless, we are confident that this research will empower the design of future reliable, robust, and trusted human–robot collaborative environments.