MMDL-Net: Multi-Band Multi-Label Remote Sensing Image Classification Model

Abstract

1. Introduction

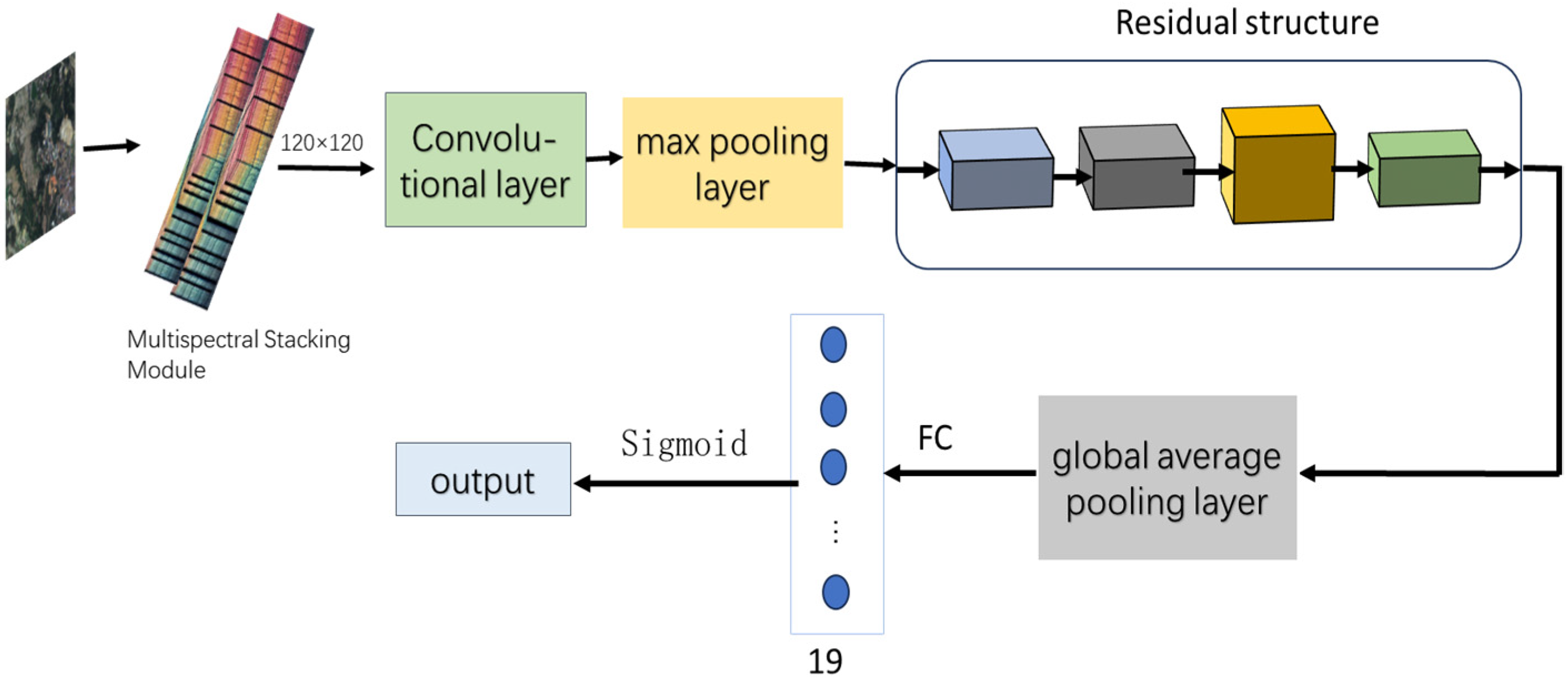

- Building on a comparison of existing remote sensing image classification methods, MMDL-Net adopts a multispectral multi-label classification strategy to extract features efficiently from high-resolution remote sensing images;

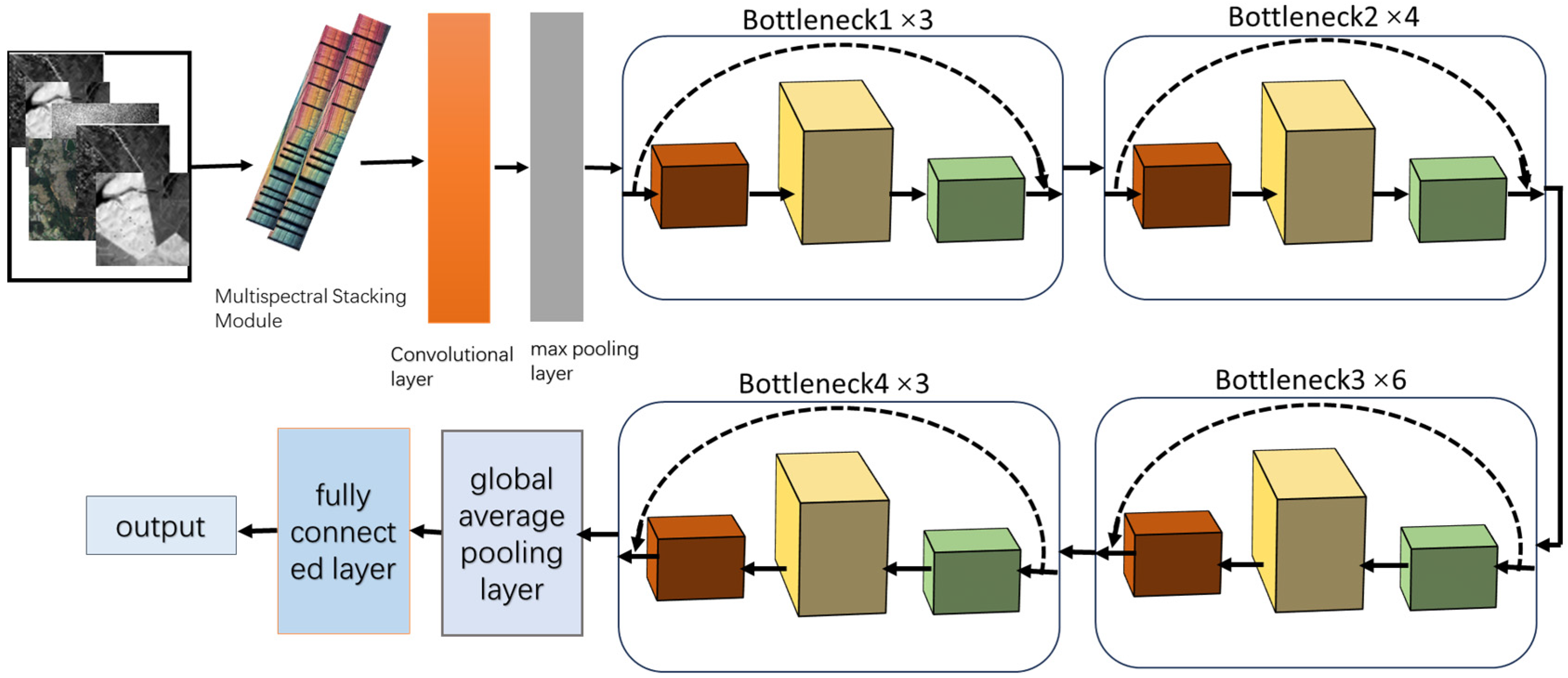

- It proposes a novel dual-number residual structure and a multi-label classification module that capture both the fine detail and the semantic information of the input images, helping the network adapt to the complex task of remote sensing image classification;

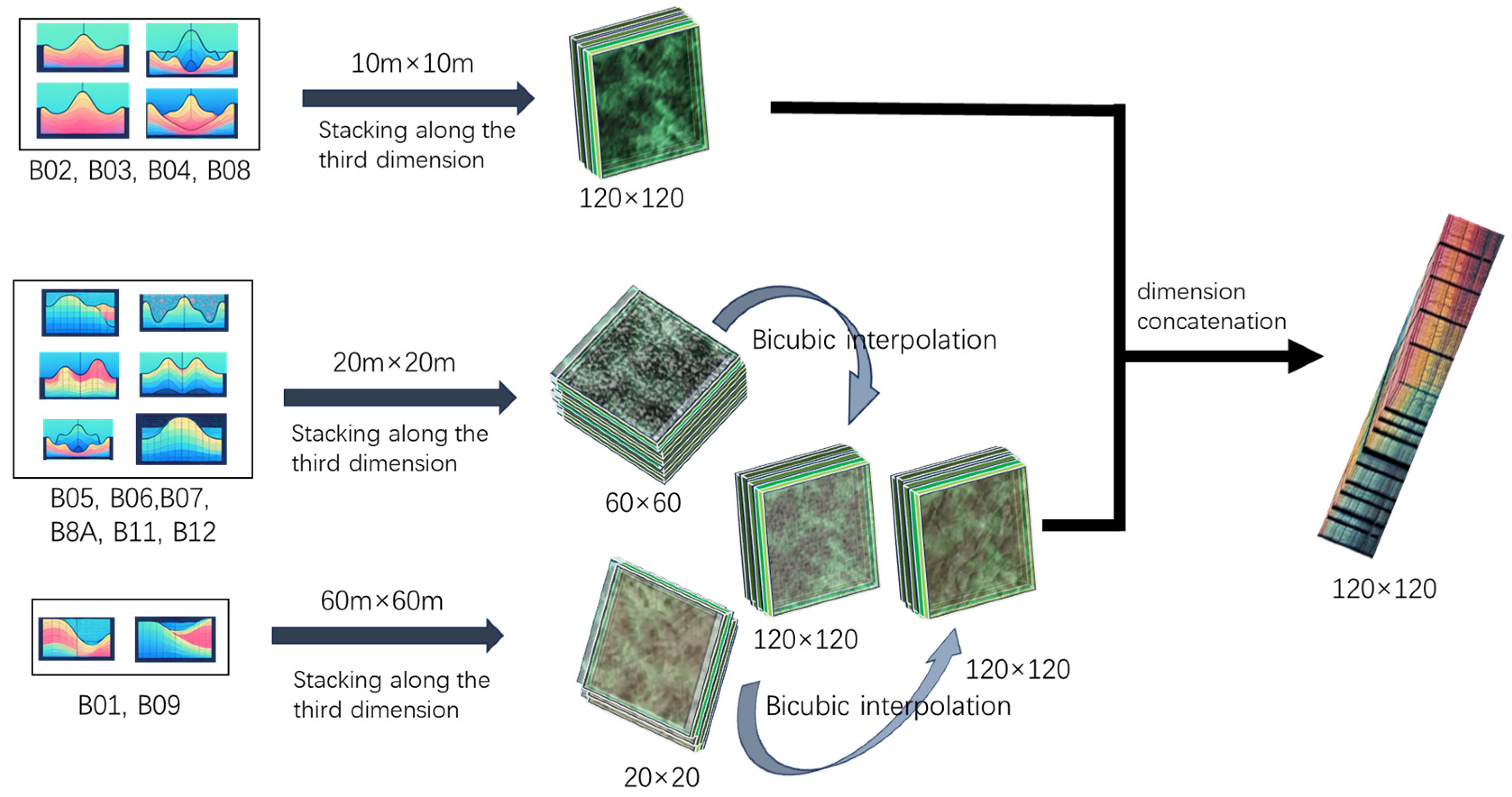

- It introduces a multispectral stacking module that effectively integrates information from bands of varying resolutions, thereby enriching the surface information available.
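The idea behind the multispectral stacking module can be illustrated with a minimal sketch: bands recorded on grids of different sizes are resampled to a common grid and then stacked as channels. This is not the authors' implementation. The function names `upsample_nearest` and `stack_bands` are hypothetical, nearest-neighbour resampling is an assumption (the interpolation scheme used by MMDL-Net is not given here), and plain Python lists stand in for the array library a real pipeline would use.

```python
from typing import Dict, List

Band = List[List[float]]  # one square band stored as rows of pixel values


def upsample_nearest(band: Band, size: int) -> Band:
    """Resize a square band to size x size by nearest-neighbour lookup."""
    src = len(band)
    return [
        [band[r * src // size][c * src // size] for c in range(size)]
        for r in range(size)
    ]


def stack_bands(bands: Dict[str, Band]) -> List[Band]:
    """Resample every band to the largest grid and stack them channel-first.

    Channels are ordered by band name so the layout is reproducible.
    """
    target = max(len(band) for band in bands.values())
    return [upsample_nearest(bands[name], target) for name in sorted(bands)]


# Toy inputs (assumed values): a 4x4 fine-resolution band and a 2x2
# coarse-resolution band that cover the same ground area.
bands = {
    "B04": [[float(r * 4 + c) for c in range(4)] for r in range(4)],
    "B05": [[1.0, 2.0], [3.0, 4.0]],
}
stacked = stack_bands(bands)
print(len(stacked), len(stacked[0]), len(stacked[0][0]))
```

Each coarse pixel is simply repeated to fill the finer grid, so no new spectral values are invented; a smoother interpolation could be swapped in without changing the stacking step.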

2. Materials and Methods

2.1. Multispectral Stacking Module

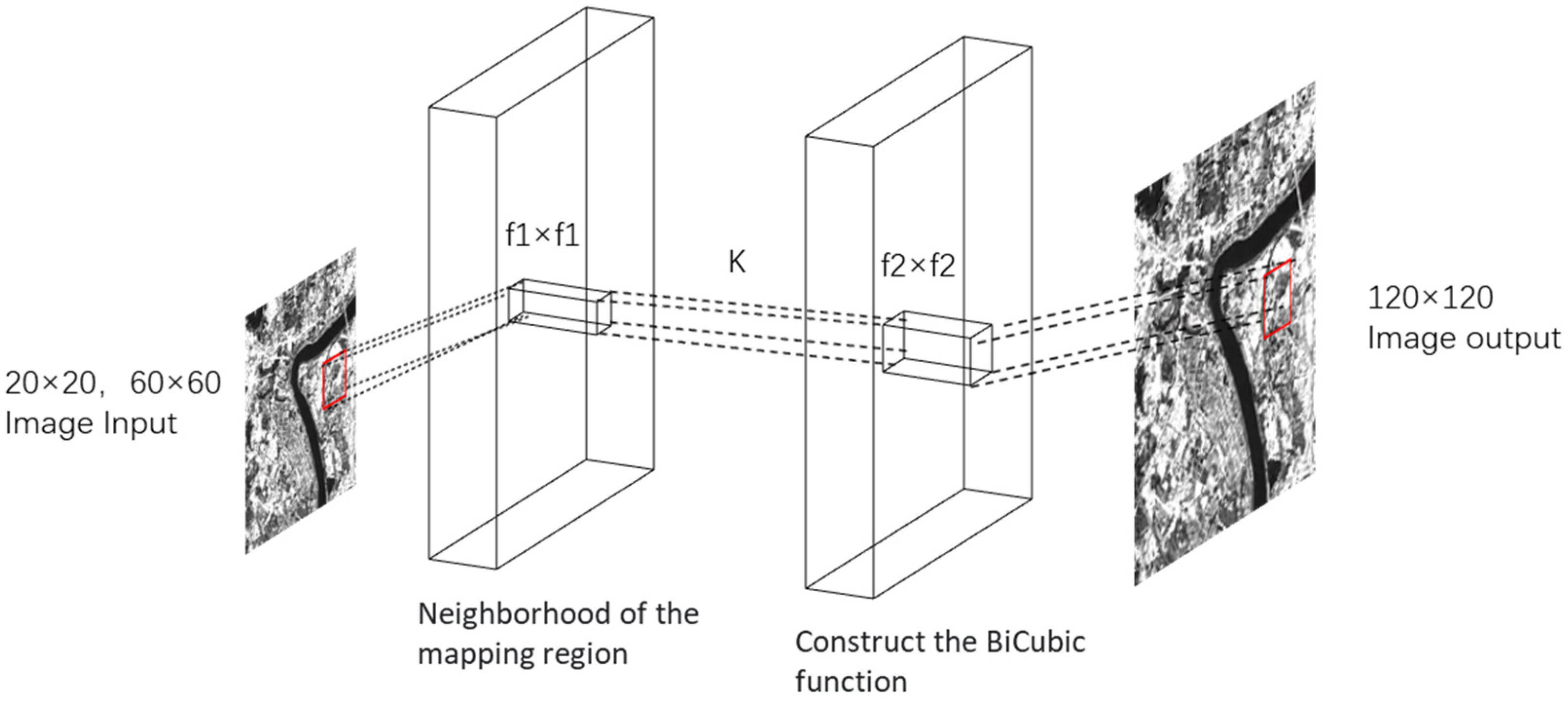

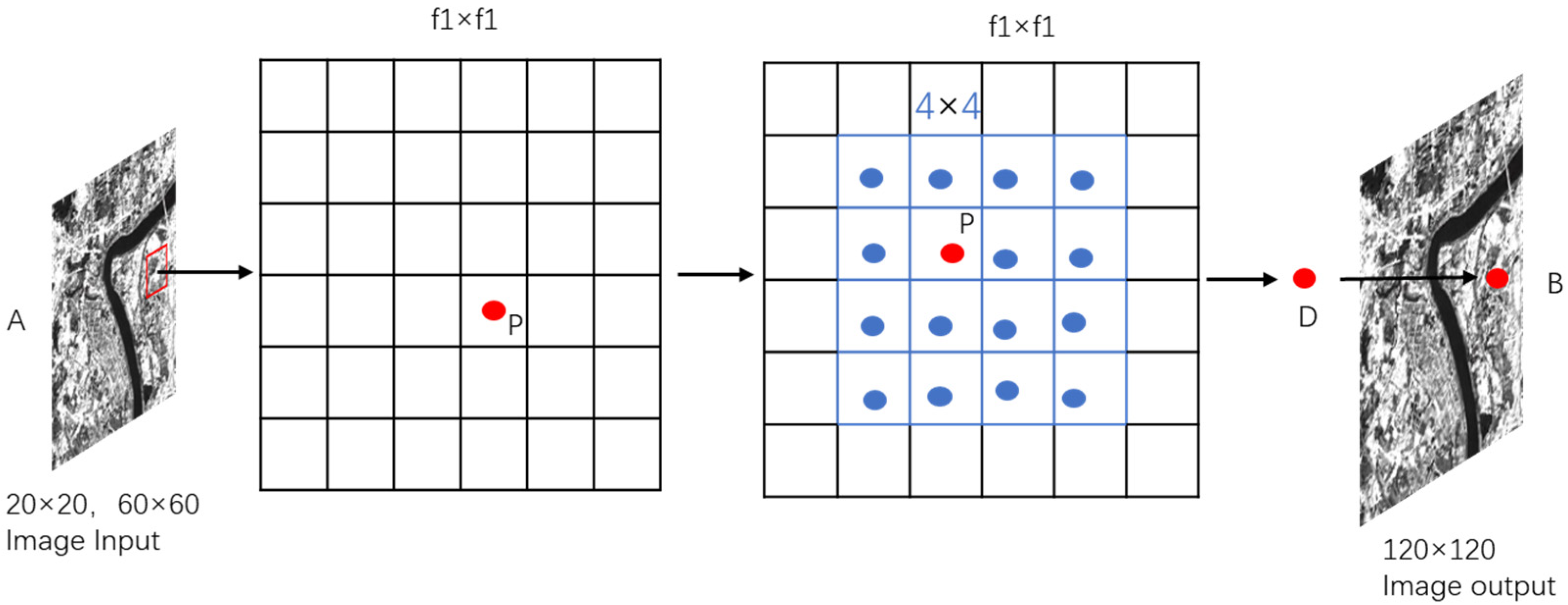

2.2. Model Input Adaptation and Interpolation in MMDL-Net

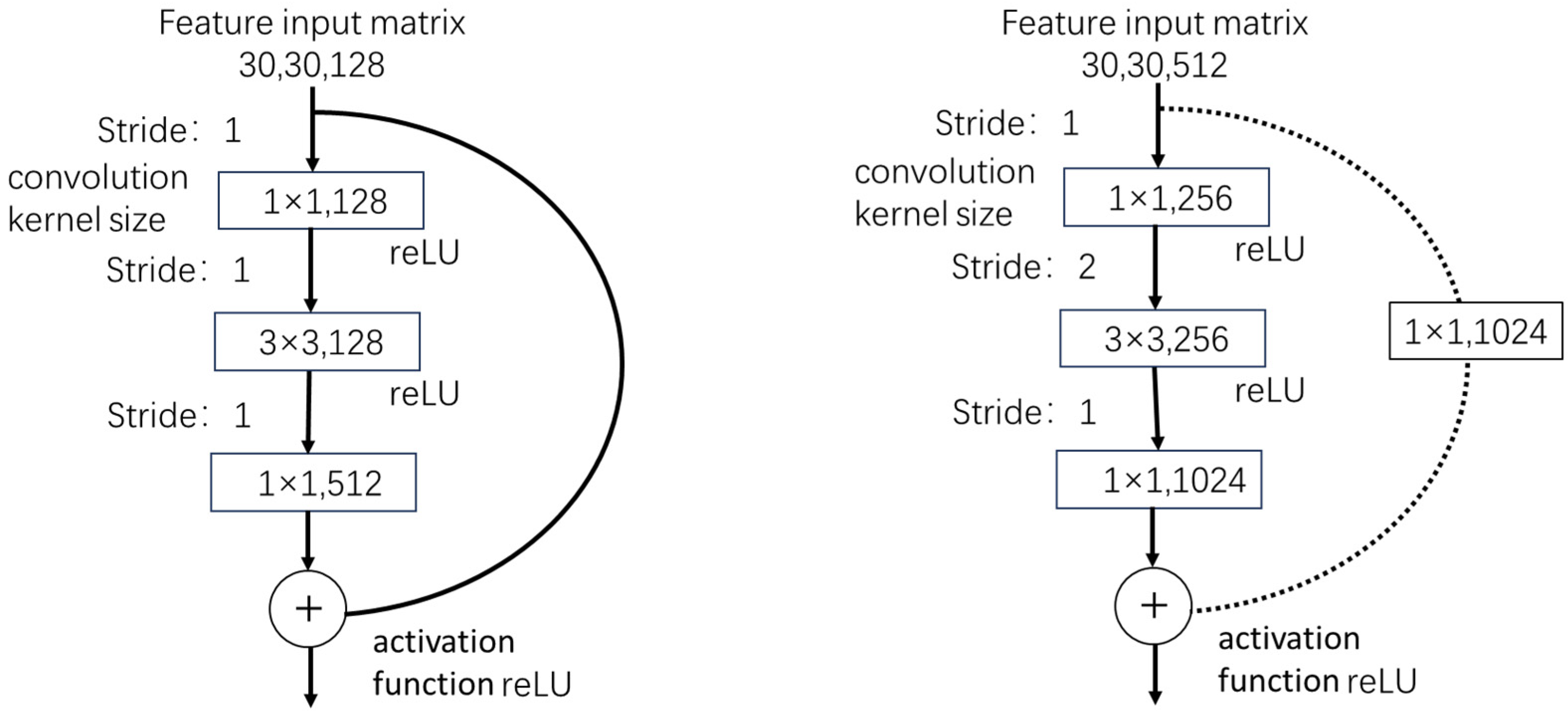

2.3. Dual-Number Residual Structure

2.4. Multi-Label Classification Module

2.5. Construction of the MMDL-Net Loss Function

3. Results

3.1. Dataset Introduction and Experimental Configuration

3.2. Evaluation Metrics

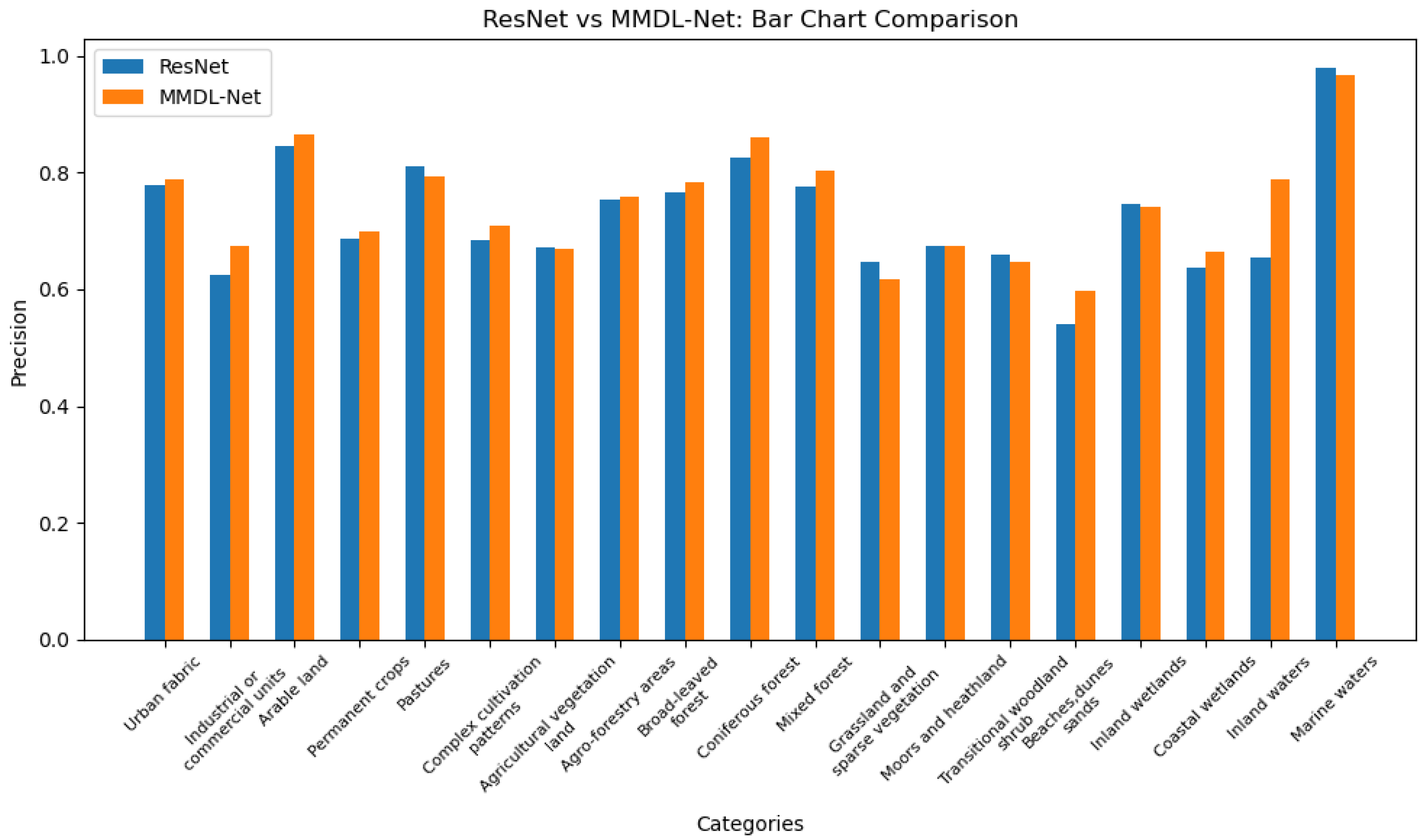

3.3. Result Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Band Number | Central Wavelength (nm) | Primary Use |
|---|---|---|
| B01 | 443 | Coastal aerosol detection and atmospheric conditions |
| B02 | 490 | Blue band–Vegetation, soil, and water bodies |
| B03 | 560 | Green band–Vegetation health and vitality |
| B04 | 665 | Red band–Chlorophyll content for plant health |
| B05 | 705 | Red edge–Vegetation characteristics and biomass |
| B06 | 740 | Red edge–Vegetation characteristics and biomass |
| B07 | 783 | Red edge–Vegetation characteristics and biomass |
| B08 | 842 | NIR–Plant health and biomass estimation |
| B8A | 865 | Narrow NIR–Improved vegetation health assessment |
| B09 | 940 | Water vapor estimation |
| B10 | 1375 | Cirrus cloud detection |
| B11 | 1610 | SWIR–Moisture content, vegetation stress |
| B12 | 2190 | SWIR–Mineral content, soil properties, heat detection |

| Configuration Item | Details |
|---|---|
| Deep Learning Library | TensorFlow |
| Software | PyCharm Professional 2019.3 |
| Server | AMAX, Fremont, CA, USA |
| Graphics Card | NVIDIA GeForce 2080 Ti, Santa Clara, CA, USA |
| Optimizer | 0.001 |
| Training Cycles | 500 epochs |

| | Positive | Negative |
|---|---|---|
| True | True Positive (TP) | True Negative (TN) |
| False | False Positive (FP) | False Negative (FN) |
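As a rough illustration of how the four outcome counts above feed the evaluation metrics, the sketch below pools TP, TN, FP and FN over every sample-label pair and applies the standard definitions of precision, recall, accuracy and F1. The pooling (micro-averaging) and the helper names `count_outcomes` and `classification_metrics` are assumptions made for this example; the exact averaging used in the paper is not stated here.

```python
from typing import Dict, List, Tuple


def count_outcomes(
    y_true: List[List[int]], y_pred: List[List[int]]
) -> Tuple[int, int, int, int]:
    """Count TP, TN, FP, FN over all sample-label pairs of 0/1 indicators."""
    tp = tn = fp = fn = 0
    for true_row, pred_row in zip(y_true, y_pred):
        for t, p in zip(true_row, pred_row):
            if t == 1 and p == 1:
                tp += 1
            elif t == 0 and p == 0:
                tn += 1
            elif t == 0 and p == 1:
                fp += 1
            else:  # t == 1 and p == 0
                fn += 1
    return tp, tn, fp, fn


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Standard metric definitions; empty denominators yield 0.0."""
    total = tp + tn + fp + fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / total if total else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "accuracy": accuracy, "f1": f1}


# Toy example: two images, three candidate labels each (assumed data).
y_true = [[1, 0, 1], [0, 1, 0]]
y_pred = [[1, 1, 0], [0, 1, 0]]
counts = count_outcomes(y_true, y_pred)
metrics = classification_metrics(*counts)
print(counts, metrics)
```

Pooling the counts before dividing weights frequent labels more heavily; averaging per-label metrics instead would give rare classes equal influence.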

| Model | Precision (%) | Accuracy (%) | Recall (%) | F1 (%) |
|---|---|---|---|---|
| MMDL-Net | 83.52 | 77.08 | 77.30 | 77.97 |
| ResNet50 | 75.56 | 60.86 | 70.30 | 70.53 |
| ResNet101 | 79.97 | 65.46 | 75.19 | 75.15 |
| ResNet152 | 80.51 | 66.01 | 75.52 | 75.63 |
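To make the gaps in the table above easier to read, the following sketch computes how many percentage points MMDL-Net leads each ResNet baseline on every metric. The values are copied from the table; the dictionary layout and the `margins` helper are illustrative choices, not part of the original work.

```python
from typing import Dict

Scores = Dict[str, float]

# Values copied from the comparison table (all in percent).
results: Dict[str, Scores] = {
    "MMDL-Net": {"precision": 83.52, "accuracy": 77.08, "recall": 77.30, "f1": 77.97},
    "ResNet50": {"precision": 75.56, "accuracy": 60.86, "recall": 70.30, "f1": 70.53},
    "ResNet101": {"precision": 79.97, "accuracy": 65.46, "recall": 75.19, "f1": 75.15},
    "ResNet152": {"precision": 80.51, "accuracy": 66.01, "recall": 75.52, "f1": 75.63},
}


def margins(table: Dict[str, Scores], reference: str = "MMDL-Net") -> Dict[str, Scores]:
    """Return reference-minus-baseline differences in percentage points."""
    ref = table[reference]
    return {
        model: {metric: round(ref[metric] - value, 2) for metric, value in scores.items()}
        for model, scores in table.items()
        if model != reference
    }


gaps = margins(results)
for model, diff in gaps.items():
    print(model, diff)
```

Against the strongest baseline, ResNet152, the lead is largest on accuracy (11.07 points) and smallest on recall (1.78 points).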

| Category | MMDL-Net | ResNet50 | ResNet101 | ResNet152 |
|---|---|---|---|---|
| Urban buildings | 78.43 | 79.31 | 76.12 | 78.21 |
| Commercial and industrial units | 65.89 | 63.36 | 60.51 | 63.17 |
| Arable land | 85.65 | 80.81 | 87.80 | 85.07 |
| Permanent crops | 72.61 | 68.53 | 69.62 | 68.13 |
| Pastures | 78.56 | 83.67 | 79.56 | 80.29 |
| Complex farming systems | 73.71 | 68.96 | 66.58 | 69.60 |
| Agricultural and vegetation land | 71.13 | 66.20 | 67.30 | 67.99 |
| Agroforestry areas | 76.82 | 79.27 | 71.12 | 75.95 |
| Broadleaf forests | 78.51 | 74.51 | 78.25 | 76.85 |
| Coniferous forests | 86.42 | 76.21 | 84.72 | 86.54 |
| Mixed forests | 81.38 | 71.44 | 81.76 | 79.56 |
| Grasslands and sparse vegetation | 63.54 | 65.47 | 66.87 | 61.98 |
| Swamps and wastelands | 65.92 | 71.35 | 65.66 | 64.92 |
| Transitional woodland and shrub | 65.03 | 68.33 | 64.59 | 64.98 |
| Beaches, dunes, sandy areas | 57.20 | 57.07 | 55.28 | 49.96 |
| Inland wetlands | 73.00 | 71.16 | 74.94 | 77.54 |
| Coastal wetlands | 67.48 | 55.75 | 72.48 | 62.56 |
| Inland water bodies | 87.85 | 60.32 | 73.14 | 72.84 |
| Marine water bodies | 96.41 | 96.98 | 98.32 | 98.39 |
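The per-category table above can be summarised by counting, for each model, the categories in which it has the highest score. The sketch below does this with values copied from the table. The table does not name the per-category metric, so the code treats the numbers as generic scores; the variable names are illustrative.

```python
from collections import Counter

MODELS = ("MMDL-Net", "ResNet50", "ResNet101", "ResNet152")

# Per-category scores copied from the table, in the column order of MODELS.
scores = {
    "Urban buildings": (78.43, 79.31, 76.12, 78.21),
    "Commercial and industrial units": (65.89, 63.36, 60.51, 63.17),
    "Arable land": (85.65, 80.81, 87.80, 85.07),
    "Permanent crops": (72.61, 68.53, 69.62, 68.13),
    "Pastures": (78.56, 83.67, 79.56, 80.29),
    "Complex farming systems": (73.71, 68.96, 66.58, 69.60),
    "Agricultural and vegetation land": (71.13, 66.20, 67.30, 67.99),
    "Agroforestry areas": (76.82, 79.27, 71.12, 75.95),
    "Broadleaf forests": (78.51, 74.51, 78.25, 76.85),
    "Coniferous forests": (86.42, 76.21, 84.72, 86.54),
    "Mixed forests": (81.38, 71.44, 81.76, 79.56),
    "Grasslands and sparse vegetation": (63.54, 65.47, 66.87, 61.98),
    "Swamps and wastelands": (65.92, 71.35, 65.66, 64.92),
    "Transitional woodland and shrub": (65.03, 68.33, 64.59, 64.98),
    "Beaches, dunes, sandy areas": (57.20, 57.07, 55.28, 49.96),
    "Inland wetlands": (73.00, 71.16, 74.94, 77.54),
    "Coastal wetlands": (67.48, 55.75, 72.48, 62.56),
    "Inland water bodies": (87.85, 60.32, 73.14, 72.84),
    "Marine water bodies": (96.41, 96.98, 98.32, 98.39),
}

# For every category, pick the model whose score is highest.
best = {
    category: MODELS[row.index(max(row))] for category, row in scores.items()
}
wins = Counter(best.values())
print(wins)
```

On these numbers MMDL-Net leads in 7 of the 19 categories, with its largest margin on inland water bodies (87.85 against 73.14 for the next best model).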


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cheng, X.; Li, B.; Deng, Y.; Tang, J.; Shi, Y.; Zhao, J. MMDL-Net: Multi-Band Multi-Label Remote Sensing Image Classification Model. Appl. Sci. 2024, 14, 2226. https://doi.org/10.3390/app14062226
