Abstract

Background: The ever-growing extended reality (XR) technologies offer unique tools for the interactive visualization of images with a direct impact on many fields, from bioinformatics to medicine, as well as education and training. However, the accelerated integration of artificial intelligence (AI) into XR applications poses substantial computational processing demands. Additionally, the intricate technical challenges associated with multilocation and multiuser interactions limit the usability and expansion of XR applications. Methods: A cloud-deployable framework (Holo-Cloud) was designed and tested as a virtual server on a public cloud platform. Holo-Cloud hosts FI3D, an augmented reality (AR) platform that renders and visualizes medical 3D imaging data, e.g., MRI images, on AR head-mounted displays and handheld devices. Holo-Cloud aims to overcome these challenges by providing on-demand computational resources for location-independent, synergetic, and interactive human-to-image data immersion. Results: We demonstrated that Holo-Cloud is easy to implement, platform-independent, reliable, and secure. Owing to its scalability, Holo-Cloud can immediately adapt to computational needs, delivering adequate processing power for the hosted AR platforms. Conclusion: Holo-Cloud shows the potential to become a standard platform that facilitates the application of interactive XR in medical diagnosis, bioinformatics, and training by providing a robust foundation for XR applications.

1. Introduction

The integration of immersive and interactive human-to-data/information interfacing has become a critical element in numerous domains, including academia, education, biomedicine, and industry [1,2,3,4,5,6,7,8,9,10,11]. Indeed, extended reality (XR) interfaces, whether in the form of virtual reality (VR), augmented reality (AR), or mixed reality (MR), are gaining widespread acceptance and are becoming a prevalent display mode [12,13,14]. These interfaces are fundamentally transforming how we perceive and engage with 3D data, finding applications in various sectors, such as medicine [7,10,11,15,16,17,18,19,20,21] and education [6,22,23,24].

In healthcare, XR technologies are used to assist in preoperative and operative processes during surgeries. This enables physicians to navigate surgeries more effectively by having patients’ anatomy and medical information projected onto their field of view (FOV) [5,10,15,25,26]. Also, XR helps patients through interactive education before and after surgery [4,24,26]. However, due to the risk inherent to medical practice, the methodologies and standards required to use these capabilities need to be optimized for security, safety, performance, robustness, and intuitiveness while ensuring a minimal learning curve. The significance of this work lies in advancing the discovery of these methodologies and standards to further introduce this technology and its advantages to the medical field and enable its use in a wider practice.

Powered by the ever-growing availability of powerful yet cost-effective hardware, new paradigms are emerging, such as the interactive immersion of several participants into holographic 3D data using different types of interfaces, such as head-mounted displays (HMD) and handheld devices (HHD) in synergetic activities or on-the-fly use of complex computational tools [15]. In addition, in a recent scholarly work, Chandler et al. [27] elaborated on the concept of immersive analytics, wherein VR serves as an important tool in the fields of analytical reasoning and, therefore, decision-making.

One of the practical challenges, and an important requirement, in deploying modern human-immersion systems is providing computational power for on-the-fly processing and analytics [15,28,29,30]. Holographic immersion systems perform a considerable number of calculations [17] to process and reconstruct a 3D mesh and hologram on holographic displays, which requires substantial processing power [15,31,32]. Also, with the increasing integration of AI-driven models for real-time data analysis and diagnosis, providing more computational resources is vital to avoid data delivery delays [29,30,33,34]. Moreover, interfaces need to provide an interactive and ergonomic experience so that the viewers' center of attention is on the actual data rather than on operating the front end of the system. Providing such an environment requires high processing power, data exchange capacity, and memory to retain the information of the holograms, which is difficult to achieve with the limited hardware of current-generation XR devices. Therefore, to address this issue, system designs [15,17,31,35,36,37] rely on external servers or processing units to perform these crucial functionalities and reduce resource utilization on the HMD or HHD.

Multiuser synergy in XR environments requires sharing a massive amount of information about the XR scene in which users interact. Digital worlds, such as the Omniverse, Metaverse, and Multiverse [38,39], provide a VR environment for digital twins [40], where users establish a virtual presence in a virtual world and interact in a shared XR scene. Providing location-independent infrastructure in this multiuser paradigm is crucial and is accomplished by leveraging local or public cloud infrastructures. An example is the immersive experience of patients who remotely use HMD or HHD in a treatment environment [40,41].

In this study, we developed a highly available, reliable, modular, scalable, and interfaceable framework called Holo-Cloud. Holo-Cloud demonstrates the feasibility of a platform-independent framework to effectively address the increasing demand for computational resources and processing needs of XR applications. To enable current and future applications, this study had four primary directives that led to corresponding contributions. First, the framework was developed for cloud deployment as an inherently location-agnostic data immersion service for concurrent, multiuser, synergetic, interactive immersion in multidimensional information. Second, server-based primary computing ensures the delivery of the required computational power for analytics and on-the-fly data processing to all users, regardless of the hardware capabilities and specifications of the locally employed HMD or HHD. Third, it is interfaceable to external physical systems, such as biomedical sensors, imaging scanners, and user interfaces, for perception (e.g., displays, HMD, HHD) and interaction (e.g., smart pointers and haptics) to enhance the viewers' immersion experience. Fourth, the system is independent of any specific platform or operating system, which is a key benefit given the rapid changes in hardware. We utilized standard, secure, and simple communication and data exchange protocols (transmission control protocol (TCP) for data exchange, remote desktop protocol (RDP) for remote management, and secure sockets layer (SSL) for security, all widely accepted communication and security protocols) to ensure rapid deployment and maximum compatibility with new HMD or HHD hardware with minimal software modification. This lets users focus on the actual on-the-cloud human immersion instead of dealing with the technical complexity of system deployment.

The presented cloud resource was designed and implemented to handle any type of multidimensional and spatio-temporal data we may encounter in the biomedical field, including 3D/4D images and renderings based on them (e.g., cardiac MRI and the segmented 4D endocardium). The data can be imported in any major medical imaging and 3D visualization format, e.g., digital imaging and communications in medicine (DICOM) and universal scene description (USD). As such, it can be used in medical training and diagnosis with virtually any medical image modality as input, such as X-rays, computed tomography (CT), magnetic resonance imaging (MRI), ultrasound, and positron emission tomography (PET). It is of particular value, however, in interventional medicine, when used with HMD to support hands-free interventions [31,42].

The primary contribution of this work is the introduction of a cloud-deployable computational framework and a proof-of-concept demonstration of (i) deploying extended reality applications for human–computer interfacing, (ii) remotely controlling medical devices via XR interfaces, and (iii) enabling interactive synergy among multiple users at multiple locations with a variety of devices. This resource directly addresses current computational and resource limitations, especially for multiuser interactions.

The remainder of this manuscript is structured as follows. In Section 2, we explain the methodology, design, and components of the proposed framework. In Section 3, we present the experimental design used to evaluate the performance of the framework, along with the results. Lastly, in Section 4, we discuss the limitations of the framework, possible use cases of the proposed method, and future directions.

2. Materials and Methods

2.1. High-Level System Design

Apart from defining the objective of the work, reviewing the literature, and listing the limitations of current research, our methodology consists of proposing a framework that overcomes these limitations and evaluating its performance using standard metrics to showcase its potential. However, owing to the heterogeneity of XR applications, a comparative study against state-of-the-art systems in a similar experimental setting needs to be performed in future work.

The Holo-Cloud system is based on the utilization of public cloud services that encompass computational resources, networking, high-availability measures, and security protocols, resulting in the establishment of a reliable, location-agnostic, and platform-independent framework. The primary goal is to facilitate the execution of the XR software core on compatible hardware without necessitating recurrent reprovisioning, reconfiguration, recompilation, and reinstallation of the software executable each time an expansion or shrinkage of the computational infrastructure is required. To actualize this objective, Holo-Cloud proposes the instantiation and configuration of the XR software on a virtualized hardware system, subsequently enabling secure public access for authorized users to the virtualized operating system.

In this architectural paradigm, the responsibility for providing the hypervisor service, a critical component, lies with the public cloud service provider. The role of the hypervisor service is to provision and manage on-demand virtual hardware, encompassing the central processing unit (CPU), random access memory (RAM), storage, video graphic acceleration (VGA), and peripheral interfaces allocated to the virtualized operating system. The virtualized operating system, in turn, operates on the hardware provided by the hypervisor, adapting seamlessly to any hardware modification, such as an elastic increase or decrease in processing resources or network throughput. Notably, the operating system ensures the continuity of software services to the XR software core, which remains unaware of any modifications to the underlying virtual infrastructure.

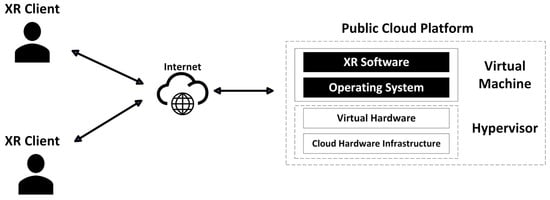

In this architectural scheme (Figure 1), the combination of the virtual operating system and the bundled XR software is denoted as a virtual machine. This virtual machine can be encapsulated, relocated, and transitioned from one hypervisor to another. This provides the flexibility to deploy the virtual machine on any public cloud platform with minimum configuration overhead, as almost all the well-known cloud service providers support virtual machine migration from external platforms [43].

Figure 1.

Holo-Cloud high-level system design. The virtual machine is transferrable to any other cloud service provider.

2.2. Cloud Deployment

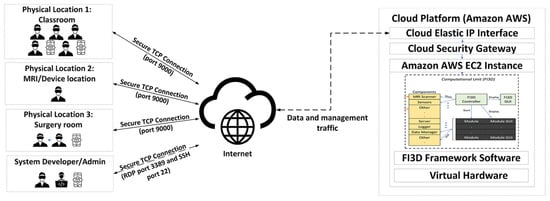

Cloud deployment encompasses the provisioning, configuration, and performance evaluation of the hypervisor, networking, and security services. Figure 2 shows the data flow diagram of the cloud deployment.

Figure 2.

Diagram of the cloud implementation and data flow. The clients communicate with the server through the internet via the client software installed on their HMD or HHD. The client software initiates the connection by sending requests to the assigned server's public IP address, and these requests are routed to the AWS cloud security gateway. The cloud security gateway inspects the incoming requests and forwards the approved requests to the EC2 virtual machine. The EC2 instance provides virtual hardware resources for the Holo-Cloud virtual machine. The FI3D server software processes each request and sends the response to the corresponding FI. Developers and cloud administrators use dedicated TCP management ports to communicate with the EC2 virtual machine.

2.3. Hypervisor

Amazon Web Services (AWS) provides a robust and highly available cloud computing infrastructure known as Amazon Elastic Compute Cloud (EC2) for running virtual machines across various operating systems and configurations [44]. EC2 offers an extensive range of preinstalled operating system images through the Amazon Machine Images (AMI) service, including well-known Linux distributions, Microsoft Windows Server systems, and nested virtualization [45].

The EC2 service offers virtual hardware configurations with multiple CPU, RAM, storage, and VGA choices. Each hardware combination is associated with a distinct price point calculated based on hourly usage. Notably, EC2 virtual machine instances are not bound to fixed hardware configurations. The elasticity feature of EC2 allows users to immediately adjust the provided virtual hardware resources to accommodate the computational needs of their applications in response to user requests. Computational resources can be increased automatically based on user-defined thresholds. For instance, the virtual machine instance can be configured such that if CPU utilization reaches a user-defined threshold, e.g., 80% of the current CPU capacity, the number of CPU cores of the virtual machine is increased to handle computational tasks efficiently. Users can also select the physical datacenter location within AWS, optimizing traffic flow based on the proximity of their clients to the cloud service datacenter.
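To make the threshold rule above concrete, the following Python sketch shows only the decision logic of such a policy. The `ScalingPolicy` class, the `next_core_count` function, the doubling/halving step, and the 20% scale-down threshold are illustrative assumptions; the sketch does not call any AWS API and is not how EC2 implements elasticity.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical scale-up/scale-down thresholds (illustrative values, not AWS defaults)."""
    scale_up_percent: float = 80.0    # CPU utilization that triggers adding cores
    scale_down_percent: float = 20.0  # CPU utilization below which cores are released
    min_cores: int = 1
    max_cores: int = 8


def next_core_count(policy: ScalingPolicy, current_cores: int, cpu_percent: float) -> int:
    """Return the number of CPU cores the policy would request for the next interval."""
    if cpu_percent >= policy.scale_up_percent:
        # Double the allocation (assumed step), capped at the ceiling.
        return min(current_cores * 2, policy.max_cores)
    if cpu_percent <= policy.scale_down_percent:
        # Halve the allocation but never drop below the floor.
        return max(current_cores // 2, policy.min_cores)
    return current_cores
```

For example, `next_core_count(ScalingPolicy(), current_cores=1, cpu_percent=85.0)` returns 2, which corresponds to the one-to-two core change between the first and second experiments in Section 3. Keeping the decision logic separate from the provisioning call makes the thresholds easy to tune without touching the deployment.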

Given that FI3D has been developed and optimized for Microsoft Windows 10 Professional OS, we configured a virtual machine image with FI3D and all the required dependencies installed and configured. This image was then imported into the AWS cloud platform as an AMI image. AMI images can be cloned and reused. Subsequently, an EC2 instance was initiated to host the FI3D virtual machine.

2.4. Public Interface

A public IP address was assigned to the FI3D EC2 virtual machine as a communication gateway to the internet and public access. For ease of use, a meaningful name in the form of a fully qualified domain name (FQDN) was assigned to the public address. This name and the corresponding public IP address are registered in the global domain name system (DNS) servers, so any client can access the server using the domain name as long as the DNS record is valid.
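The following minimal sketch shows what a client does with the FQDN before opening a TCP connection: it asks DNS for the addresses registered under that name. The function name `resolve_server` is hypothetical, and the default port 9000 is taken from the security configuration described in Section 2.5.

```python
import socket


def resolve_server(fqdn: str, port: int = 9000) -> list[str]:
    """Resolve an FQDN to the IP addresses a TCP client would try, in sorted order."""
    infos = socket.getaddrinfo(fqdn, port, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the address.
    return sorted({info[4][0] for info in infos})
```

A client would call, e.g., `resolve_server("holocloud.example.org")` (a placeholder, not the deployment's real FQDN) and then connect with `socket.create_connection((address, 9000))`. Because the name is looked up at connection time, the server's IP address can change without reconfiguring clients, provided the DNS record is updated.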

2.5. Security

At the network level, AWS manages public access to internal virtual private cloud resources through AWS security groups. These security groups control the allowance or denial of both inbound and outbound traffic to the resources with which they are associated [29].

The deployment of Holo-Cloud uses TCP for network communication. TCP is one of the most frequently used protocols in digital network communications and ensures end-to-end data delivery [46]. All outbound TCP/IP traffic originating from the FI3D server to the internet was permitted during the experiments, facilitating the necessary functions of the software and operating system. To enhance server security, only inbound TCP traffic on ports 9000 and 3389 was allowed. XR clients used TCP port 9000 as the default port for framework interface communication. Virtual machine management was performed using the Microsoft remote desktop service on the default TCP port 3389.
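The inbound policy described above can be modeled as an allow-list, which matches how AWS security groups behave: they contain only allow rules, and traffic that no rule permits is dropped. The sketch below is a hypothetical model for illustration only; `Rule`, `inbound_allowed`, and `outbound_allowed` are not part of any AWS SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One allow rule: a protocol and a single destination port."""
    protocol: str
    port: int


# Inbound allow-list from the text: XR clients on 9000, remote desktop management on 3389.
INBOUND_ALLOWED = {Rule("tcp", 9000), Rule("tcp", 3389)}


def inbound_allowed(protocol: str, port: int) -> bool:
    """Anything not explicitly permitted is denied."""
    return Rule(protocol.lower(), port) in INBOUND_ALLOWED


def outbound_allowed(protocol: str, port: int) -> bool:
    """During the experiments, all outbound traffic from the server was permitted."""
    return True
```

Under this model, a connection attempt to port 22 (SSH) or a UDP packet to port 9000 is rejected, while XR clients and administrators reach only the two services they need.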

3. Results

3.1. Experimental Design

The performance of the Holo-Cloud system was assessed in several settings to ensure satisfactory functionality. Pioneering XR and cloud studies have employed diverse experimental settings owing to the heterogeneity of their XR applications; therefore, replicating a comparable experimental setting for our work is challenging. However, the evaluation metrics used in this study align with the latest methods in the literature. The objective of the experimental design in this study is to mimic a test environment and evaluate the performance of Holo-Cloud to demonstrate its potential for further development [47]. These settings include the connection of a single client to the FI3D application hosted on the cloud and the connection of multiple clients to FI3D. In each scenario, the FI3D server software is executed on the Holo-Cloud virtual machine hosted on AWS. The server software loads images and models of a cardiac MRI. This model is a 4D reconstruction of the left ventricle of the human heart from a multislice, multiframe CINE MRI set [32]. Subsequently, the XR client software (installed on the viewers' HMD and HHD, located in different physical locations) connects to the server software through a secure internet connection. Viewers choose the loaded study data, and the client software loads the 3D MRI holographic model.

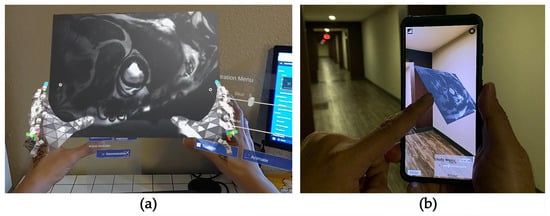

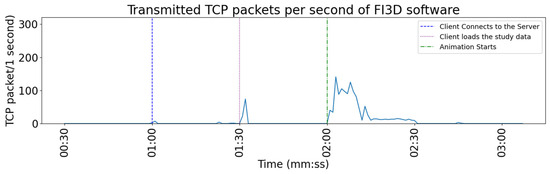

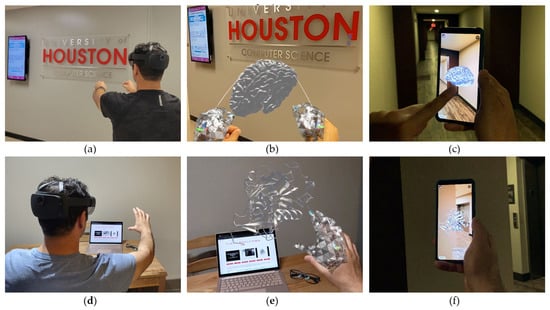

As shown in Figure 3, for HMD viewers, the holographic model is loaded into their HMD's FOV, and they can move around it and view the model from different perspectives. For HHD viewers, the model is overlaid on the camera view of the HHD screen. After the model has been viewed for a certain amount of time, the server software starts broadcasting the animated version of the study data to all currently connected clients. A scene control gesture menu was added to the system for HMD interfaces, enabling users to modify study visuals by hand gesture. Similarly, HHD users can interact with the study visuals through hand control commands (e.g., pinching and tapping) on the scene control touch menu. The overall network utilization, CPU utilization, and memory usage were measured during the study and later used to evaluate the performance of the Holo-Cloud platform.

Figure 3.

(a) This figure shows the field of view (FOV) of a Microsoft HoloLens 2 HMD user. The MRI cardiac study visuals are projected using the Microsoft HoloLens 2 HMD. The user can interact with the system by selecting the desired MRI slice, playing the CINE sequence animation using the overlaid buttons of the scene control menu, pinching the MRIs to zoom in and out, and using hand gestures to move, tilt, and rotate the hologram within the user's FOV. (b) This figure shows the visuals of the same study on an Android HHD connected to the internet over a 5G cellular connection. Android users can connect to the server from anywhere in the world using a cellular or Wi-Fi internet connection. They can also interact with the study visuals using hand control commands on the scene control touch menu.

During this process, the FI3D server software sends two sets of data about the study to the client. The first set of data is the pixel intensities and triangular meshes of the 3D models. This set of data is cached on the client's device. The second set of data, referred to as metadata, is the visual properties of the model, such as its position, orientation, size, color, and slice index. The metadata, which is smaller than the first set of data, is not cached on the client device. The server continuously delivers the models' metadata to the connected clients because the visual properties of the models (e.g., position or orientation) can be changed at any time by the server or by other clients [31].
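The split between cached bulk data and streamed metadata can be sketched with a few data classes. All class and field names below are hypothetical and do not reflect FI3D's actual message format; the sketch only illustrates why bulk data is transferred once per model, while every metadata update simply replaces the previous state (latest update wins).

```python
from dataclasses import dataclass, field


@dataclass
class ModelPayload:
    """Bulk data (pixel intensities, triangular mesh): sent once, cached on the client."""
    model_id: str
    data: bytes


@dataclass
class ModelMetadata:
    """Small visual state that can change at any time: not cached, always replaced."""
    model_id: str
    position: tuple[float, float, float]
    orientation: tuple[float, float, float, float]  # quaternion (x, y, z, w)
    size: float
    color: str
    slice_index: int


@dataclass
class ClientState:
    cache: dict[str, ModelPayload] = field(default_factory=dict)
    current: dict[str, ModelMetadata] = field(default_factory=dict)
    bytes_downloaded: int = 0

    def needs_payload(self, model_id: str) -> bool:
        return model_id not in self.cache

    def receive_payload(self, payload: ModelPayload) -> None:
        if payload.model_id in self.cache:
            return  # already cached: bulk data is never re-downloaded
        self.cache[payload.model_id] = payload
        self.bytes_downloaded += len(payload.data)

    def receive_metadata(self, meta: ModelMetadata) -> None:
        # Latest update wins: the server or another client may have changed the model.
        self.current[meta.model_id] = meta
```

In this design, a new viewer pays the bulk-transfer cost once, after which only small metadata messages flow, which is consistent with the transmission pattern described for the experiments.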

Spikes in resource utilization of the Holo-Cloud server are anticipated during each event in the experiments due to the increase in the number of received processing requests, TCP communication requests, and acknowledgments between the server software and clients. Moreover, uploading content data (models and collaborative CINE MRI sequence) to clients requires processing, memory, and network resources.

3.2. Multidimensional Models for Experiments

In each experiment, a multidimensional (multislice and multiframe) holographic model of a cardiac CINE MRI was loaded by the FI3D server software and transmitted to the HMDs and/or HHDs. In addition, brain and molecular ribbon 3D models, stored in the universal scene description (USD) file format, were provided to FI3D. USD is an open-source file format for 3D content creation and 3D scene description and is used by a multitude of platforms, e.g., Nvidia Omniverse [48]. USD support in FI3D can give Holo-Cloud access to a wide variety of 3D models available in virtual worlds, such as the Omniverse [49].

3.3. Infrastructure Location

All physical infrastructure used to implement and evaluate Holo-Cloud resides in the United States. The Holo-Cloud public cloud server instance ran in the AWS US West (Oregon) region (us-west-2), whose physical datacenter is located at an Amazon facility in the state of Oregon. The clients and remote management computers were located in Houston, Texas.

3.4. Evaluation Metrics

Because cloud-based applications are developing rapidly, appropriate testing procedures, performance metrics, and benchmarks are still at an early stage. To address the lack of such standardized means, we used the most common measurement-based metrics for evaluating the performance of cloud services [10,47,50,51].

The resource utilization of Holo-Cloud is reported for each evaluation metric in every experiment to illustrate how it changes under different experimental conditions, such as the number of connected users.

3.4.1. Processor Utilization

Central processing unit (CPU) utilization is a valuable metric for software performance evaluation in conjunction with other metrics, such as memory and network utilization. In recent years, this metric has become a benchmark for assessing the performance of cloud computing and distributed systems [51,52,53,54,55]. Measuring CPU utilization is crucial for efficiently estimating the processing power required by XR applications hosted on Holo-Cloud. The results will be used by Holo-Cloud to provide adequate processing power for the hosted applications under varying workloads. CPU utilization is the percentage of time that a CPU spends executing non-idle instructions for a given program or process [56]. In this work, CPU utilization data were collected specifically from the FI3D server software process.

This study used the Microsoft Windows Performance Monitor tool to monitor and report processor utilization. This tool ensures the correct calculation of CPU utilization percentage for multicore and multithreaded systems [56].
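The following sketch illustrates how a per-process CPU percentage can be derived from two samples of cumulative CPU time. It is an assumption about the general method, not the internal implementation of Performance Monitor. Because a multithreaded process on a multicore machine can accumulate more CPU time than elapsed wall-clock time, the raw value is divided by the number of logical cores to map it onto a 0 to 100% scale.

```python
def process_cpu_percent(cpu_start_s: float, cpu_end_s: float,
                        wall_start_s: float, wall_end_s: float,
                        logical_cores: int, normalize: bool = True) -> float:
    """CPU utilization of a single process over one sampling interval.

    cpu_*_s: cumulative CPU time (user + kernel) consumed by the process, in seconds.
    wall_*_s: wall-clock timestamps of the two samples, in seconds.
    """
    elapsed = wall_end_s - wall_start_s
    if elapsed <= 0 or logical_cores < 1:
        raise ValueError("need a positive interval and at least one core")
    percent = 100.0 * (cpu_end_s - cpu_start_s) / elapsed
    # Threads running on several cores can push the raw value above 100%;
    # dividing by the core count maps it back onto a 0-100% scale.
    return percent / logical_cores if normalize else percent
```

For example, with hypothetical values, a process that consumed 1.7 s of CPU time during a 1 s interval on a two-core machine yields approximately 85% after normalization and approximately 170% without it.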

3.4.2. Memory Usage

Understanding memory utilization data not only helps in the precise allocation of hardware resources to ensure the smooth operation of software but is also a vital part of the cloud economy. It helps estimate resource allocation in cloud platforms efficiently, maintaining a balance between cost and service performance [57,58]. Memory usage is determined as the current size, in bytes, of the random-access memory allocated exclusively to an application, which cannot be shared with other applications. Memory usage was logged using the Microsoft Windows Performance Monitor tool [59].

3.4.3. Network Bandwidth Utilization

To ensure a fast and reliable connection and content delivery between the Holo-Cloud server and the clients, a high-speed link needs to be provided to both the clients and the server [60]. The available internet access speed of the Holo-Cloud virtual machine provided by the public cloud provider and the available internet speed of the client software were measured using the Ookla network monitoring tool, which is widely used at enterprise scale [61,62]. The download and upload speeds are determined by receiving or sending chunks of data and calculating the real-time transfer speed during the session [61]. The direct server-to-client connection speed at the time of the experiments was measured and reported with the iPerf tool, which was initially developed by the National Laboratory for Applied Network Research [63,64].
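The chunk-based speed measurement described above reduces to counting the bytes moved over a timed interval. The sketch below is a simplified illustration, not the algorithm used by Ookla or iPerf; `transfer_chunk` is a hypothetical callable that would wrap a real socket send or receive.

```python
import time
from typing import Callable


def to_mbps(num_bytes: int, seconds: float) -> float:
    """Convert a byte count transferred over `seconds` into megabits per second."""
    return num_bytes * 8 / seconds / 1e6


def measure_throughput_mbps(transfer_chunk: Callable[[int], int],
                            chunk_size: int = 64 * 1024,
                            duration_s: float = 1.0) -> float:
    """Transfer fixed-size chunks until duration_s elapses and return the observed rate.

    transfer_chunk(n) must send or receive up to n bytes and return the count actually moved.
    """
    moved = 0
    start = time.perf_counter()
    while time.perf_counter() - start < duration_s:
        moved += transfer_chunk(chunk_size)
    return to_mbps(moved, time.perf_counter() - start)
```

With a connected socket `sock` and a prefilled buffer `buf`, one could pass `lambda n: sock.send(buf[:n])` as `transfer_chunk`. Because `socket.send` may send fewer bytes than requested, the callable returns the number of bytes actually moved, and only those are counted.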

In all evaluation scenarios, the available internet bandwidth and end-to-end connection speed of the Holo-Cloud server and clients exceeded the maximum send and receive rates of the FI3D software process. A connection speed between the server and client that is higher than the FI3D maximum send and receive rates must be guaranteed so that slow network infrastructure does not cause content delivery delays. Network delays may degrade content delivery and the user experience, which may be detrimental in surgical procedures that rely on XR tools.
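The requirement above can be expressed as a simple check over the measured link speeds. In this hypothetical sketch, the server's upload and each client's download must exceed the FI3D process's peak send rate, and the reverse directions must exceed its peak receive rate. Comparing each client against the aggregate server rate is deliberately conservative. The `Endpoint` class, the `bottlenecks` function, and the example speeds are illustrative assumptions, not measured values.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    name: str
    download_mbps: float  # measured available download speed
    upload_mbps: float    # measured available upload speed


def bottlenecks(server: Endpoint, clients: list[Endpoint],
                peak_send_mbps: float, peak_recv_mbps: float) -> list[str]:
    """List every link direction that is not faster than the server process's peak rates.

    Content flows up from the server and down to each client; requests flow the other way.
    Using the aggregate peak for each client over-estimates per-client demand (conservative).
    """
    problems = []
    if server.upload_mbps <= peak_send_mbps:
        problems.append(f"{server.name} upload")
    if server.download_mbps <= peak_recv_mbps:
        problems.append(f"{server.name} download")
    for client in clients:
        if client.download_mbps <= peak_send_mbps:
            problems.append(f"{client.name} download")
        if client.upload_mbps <= peak_recv_mbps:
            problems.append(f"{client.name} upload")
    return problems
```

An empty list means that, under these assumptions, the network is not the limiting factor, which is the condition the experiments were set up to satisfy.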

3.5. Experiments and Evaluation Scenarios

3.5.1. First Evaluation Scenario: Single Client Connection to the Holo-Cloud

To start the evaluation process, an Android device was connected to the FI3D framework hosted on an AWS EC2 public cloud instance. The Holo-Cloud instance used a single CPU core and 4 gigabytes of random-access memory with general-purpose, solid-state shared storage. The connection between the client software and the cloud-hosted server software was established over a 5G cellular network.

The entire process of the client software joining the server, loading the study data, viewing the overlaid model in the 3D camera environment, viewing the collaborative 4D CINE MRI, and disconnecting from the server took 90 s. In total, the server received 11.534 kilobytes of data and sent 8343 kilobytes of data to the client. The network utilization is shown in Table 1.
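For context, the totals above can be converted into an average data rate over the 90 s session. This is a worked calculation from the figures reported in the text, treating 1 kilobyte as 8 kilobits; being an average over the whole session, it understates the short transmission peaks shown in Figure 6.

```python
def average_kbit_per_s(kilobytes: float, seconds: float) -> float:
    """Mean data rate in kilobits per second (1 kilobyte = 8 kilobits)."""
    return kilobytes * 8 / seconds


# Totals reported in the text for the first experiment (90 s session).
sent_to_client = average_kbit_per_s(8343, 90)        # about 741.6 kbit/s
received_by_server = average_kbit_per_s(11.534, 90)  # about 1.03 kbit/s
```

Even the outbound average is well below 1 Mbit/s, which is consistent with the observation that the available links were not the limiting factor in this scenario.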

Table 1.

Network utilization of the Holo-Cloud platform components in the 1st experiment.

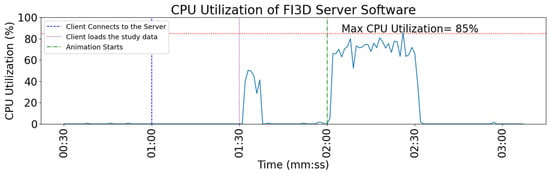

CPU utilization of the server software process in this test scenario is shown in Figure 4. Receiving a join request from the client software and replying to it did not have any noticeable impact on CPU utilization. However, loading the demo study from the hard drive into main memory and sending it to the connected client had a considerable impact on the CPU. Likewise, broadcasting the collaborative 4D CINE MRI to the client software required up to 85% of the CPU power.

Figure 4.

CPU utilization of the server software in the first experiment. The blue solid line is % CPU utilization and the red horizontal dashed line is the maximum CPU utilization.

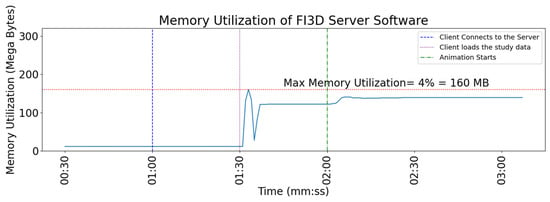

Figure 5 shows the memory utilization of the server software during the evaluation test events. Loading the study data from the hard drive into main memory caused a sharp spike in the memory utilization of the server software. However, the maximum amount of memory that the software demanded in this scenario was about 160 megabytes, which is no more than 4% of the total available system memory.
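As a quick check of the 4% figure, the share of memory used is the process allocation divided by the total. The sketch assumes binary units (MiB and GiB); with decimal units, 160 MB out of 4 GB is exactly 4%. The helper names are illustrative.

```python
MiB = 2**20
GiB = 2**30


def percent_of_total(used_bytes: float, total_bytes: float) -> float:
    """Share of total memory used by one process, in percent."""
    return 100.0 * used_bytes / total_bytes


def budget_bytes(percent: float, total_bytes: float) -> float:
    """Inverse: number of bytes corresponding to a given share of total memory."""
    return percent / 100.0 * total_bytes
```

With these helpers, `percent_of_total(160 * MiB, 4 * GiB)` returns 3.90625, and `budget_bytes(5, 4 * GiB) / MiB` gives about 204.8, i.e., the ceiling implied by the 5% figure reported for the second experiment.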

Figure 5.

Memory utilization of the server software in the first experiment. The blue solid line is memory utilization and the red horizontal dashed line is the maximum memory utilization.

Figure 6 shows the TCP packet transmission rate of the FI3D software during this experiment. The spike during transmission of the animation data is larger than the one observed when the client software loads the static study scene, because the data for the multiple MRI frames of the multislice animation must be sent to the client. After the data were cached on the client's device, metadata transmission continued until the end of the experiment.
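Performance Monitor reports packet rates directly, but the underlying idea is differencing a cumulative counter over time. The sketch below derives per-interval packets per second from hypothetical `(timestamp, cumulative_packets)` samples; it is illustrative and not the tool's implementation.

```python
def rates_per_second(samples: list[tuple[float, int]]) -> list[float]:
    """Turn (timestamp_s, cumulative_packets) samples into packets/s per interval."""
    rates = []
    for (t0, n0), (t1, n1) in zip(samples, samples[1:]):
        if t1 <= t0:
            raise ValueError("timestamps must be strictly increasing")
        rates.append((n1 - n0) / (t1 - t0))
    return rates
```

For example, hypothetical samples `[(0.0, 0), (1.0, 100), (2.0, 350), (4.0, 450)]` give rates of 100, 250, and 50 packets/s; a spike such as the one at the start of the animation appears as a single interval with a much larger difference.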

Figure 6.

TCP packet transmission rate during the first experiment. The blue solid line is transmitted TCP packets per second.

3.5.2. Second Evaluation Scenario: Multiple Clients Simultaneously Interact with the Holo-Cloud

To evaluate the performance, stability, and reliability of the Holo-Cloud platform in resource-intensive situations, connecting multiple clients is necessary. Implementing this evaluation scenario was challenging because providing numerous physical clients was prohibitively expensive. To overcome this issue, we used the Microsoft HoloLens 2 emulator [65]. The HoloLens 2 emulator is free software that provides a virtual environment to run holographic and XR applications for development, testing, and quality assurance purposes. The environmental and user input gestures are simulated from the host computer's input devices, such as the mouse, keyboard, and microphone, and then sent to the virtual sensors of the HoloLens 2 emulator. One noticeable difference between a real HoloLens 2 HMD and the HoloLens 2 emulator is that the emulator cannot receive the camera feed from the host device, so a black screen is shown as the background instead of the camera feed (Figure 7). This limitation does not affect the evaluation of the Holo-Cloud platform because the client camera feed is not processed by the FI3D server software. For this setup, a virtual machine with the software needed to run the HoloLens 2 emulator was configured and then cloned multiple times to provide multiple HoloLens 2 emulator instances.

Figure 7.

(a) Holo-Cloud test environment, including the viewer wearing a HoloLens 2 HMD at a residential complex and four HoloLens 2 emulators running on a server computer located at a remote location (MRI LAB at the University of Houston). The user had remote access to the emulators for viewing and management. (b) Field of view of the viewer from the HoloLens 2 see-through display while performing the test.

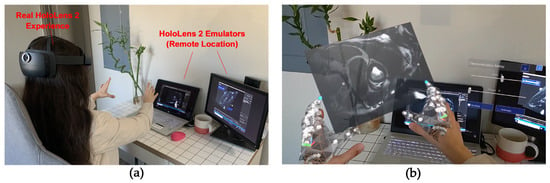

For the multiple connected client scenario, two CPU cores with 4 gigabytes of memory were allocated to the Holo-Cloud virtual machine to handle multiple connections. Increasing the number of CPU cores (compared to the resource allocation in the first scenario) is a very easy and efficient process in Holo-Cloud, owing to the cloud infrastructure features. The cloud infrastructure provider immediately provides the demanded CPU cores to the virtual machine. The Holo-Cloud performance is evaluated when the clients are connected to the cloud server, open and load the cardiac MRI study (Figure 8), and interact with the server by adjusting the CINE MRI sequence. In this scenario, we used one real Microsoft HoloLens 2 HMD and four HoloLens 2 emulator virtual machines.

Figure 8.

Screenshot of the study on the server while five clients are connected.

The network utilization of the clients and the server software process is reported in Table 2. The server software received 66.483 kilobytes of data from the clients and sent 58,395.51 kilobytes of data to the clients as a response to the requests and content. The maximum internet speed and end-to-end average data transfer speed of each end were greater than the maximum send and receive rate of the server software. This ensured no network-caused delay in the Holo-Cloud environment.

Table 2.

Network utilization of the Holo-Cloud platform components in the second experiment.

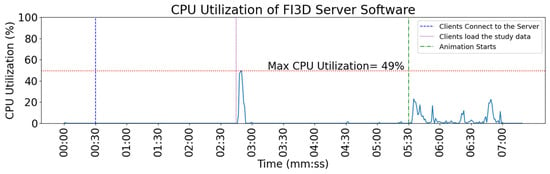

Figure 9 shows the CPU utilization of the software process in this test scenario. Loading the study data was the most demanding task, which needed almost half of the CPU processing power; however, this measurement returned to normal after the load process finished. Moreover, while playing the CINE MRI study animation, CPU utilization increased. The least demanding task was serving the clients’ request to register with the server at the beginning of the test.

Figure 9.

CPU utilization of the FI3D software in the second experiment. The blue solid line is % CPU utilization and the red horizontal dashed line is the maximum CPU utilization.

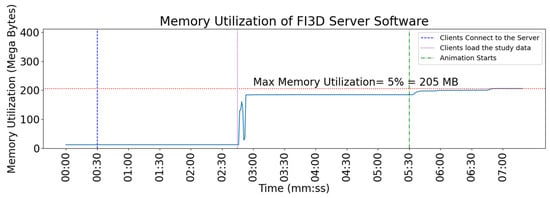

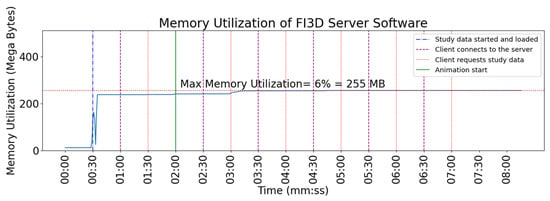

The memory utilization trend in Figure 10 is similar to that of the first test scenario: memory usage spikes only while the study data are being loaded, and serving clients' registration requests occupies minimal memory on the server. In this scenario, the server software process used at most 5% of the 4 gigabytes of memory provided by the Holo-Cloud virtual machine to keep the study data loaded.
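For scale, assuming binary units (4 gigabytes taken as 4 GiB = 4096 MiB), the 5% peak corresponds to roughly 200 MiB:

$$0.05 \times 4\ \text{GiB} = 0.05 \times 4096\ \text{MiB} = 204.8\ \text{MiB}$$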

Figure 10.

Memory utilization of FI3D software in the second experiment. Spikes in memory utilization are observed when clients load the study data and the animation sequence starts. The blue solid line is memory utilization and the red horizontal dashed line is the maximum memory utilization.

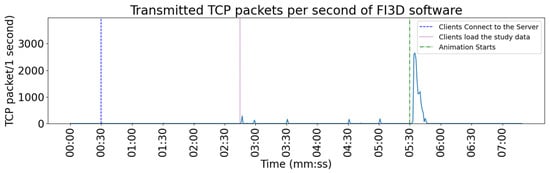

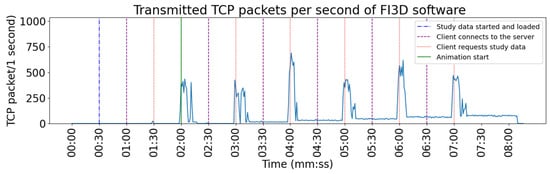

Figure 11 shows the TCP packet transmission rate of the FI3D software. A large spike occurred when the server started animating the 3D model, because all clients received the animation data simultaneously.
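Packet-rate plots of this kind are typically derived by differencing a cumulative packet counter between successive samples. A minimal sketch, assuming timestamped samples of a cumulative count of sent TCP packets; the trace values, threshold, and function names are hypothetical.

```python
def rates_per_second(samples: list[tuple[float, int]]) -> list[float]:
    """Convert (timestamp_s, cumulative_packets) samples into packets/s
    for each interval between consecutive samples."""
    rates = []
    for (t0, c0), (t1, c1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        rates.append((c1 - c0) / dt)
    return rates


def spikes(rates: list[float], threshold: float) -> list[int]:
    """Indices of intervals whose rate exceeds `threshold` packets/s."""
    return [i for i, r in enumerate(rates) if r > threshold]


# Hypothetical trace: steady traffic, then a burst when the animation starts.
trace = [(0.0, 0), (1.0, 40), (2.0, 80), (3.0, 2080), (4.0, 2120)]
r = rates_per_second(trace)
print(r)                 # [40.0, 40.0, 2000.0, 40.0]
print(spikes(r, 500.0))  # [2]
```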

Figure 11.

The packet transmission rate for the different events in the second experiment. A spike is visible when the animation starts because data needs to be delivered to all the clients simultaneously. The blue solid line is transmitted TCP packets per second.

3.5.3. Third Evaluation Scenario: Multiple Clients Sequentially Connect to the Holo-Cloud

To assess how well Holo-Cloud can serve multiple clients, we connected clients to the FI3D software one at a time and measured the framework's resource utilization per client connection in a multiuser environment. The server software first started and loaded the study data. The first client then connected, after which the server initiated the animated cardiac CINE sequence. Subsequent clients joined at 30 s intervals and received the CINE sequence data. CPU utilization, memory usage, and the packet transmission rate of the FI3D software were measured. The FI3D software received 56.28 kilobytes of data from the clients and sent 53,493 kilobytes to them. Table 3 lists the available bandwidth and network utilization.
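Because clients join on a fixed 30 s schedule, utilization samples can be grouped by the number of connected clients to estimate the incremental cost of each connection. A sketch under that assumption; the join time, samples, and function names are hypothetical.

```python
import math
from collections import defaultdict
from statistics import mean
from typing import Optional


def mean_by_client_count(
    samples: list[tuple[float, float]],
    first_join_s: float,
    interval_s: float = 30.0,
    max_clients: Optional[int] = None,
) -> dict[int, float]:
    """Group (time_s, value) samples by the number of connected clients.

    Assumes client k (1-based) joins at first_join_s + (k - 1) * interval_s,
    i.e. the fixed-interval join schedule of this scenario.
    """
    groups: dict[int, list[float]] = defaultdict(list)
    for t, value in samples:
        if t < first_join_s:
            continue  # data loading / idle period before any client connected
        n = 1 + math.floor((t - first_join_s) / interval_s)
        if max_clients is not None:
            n = min(n, max_clients)  # no further joins after the last client
        groups[n].append(value)
    return {n: mean(vals) for n, vals in sorted(groups.items())}


def increments(means: dict[int, float]) -> dict[int, float]:
    """Change in mean utilization when the client count rises from m to n."""
    keys = sorted(means)
    return {n: means[n] - means[m] for m, n in zip(keys, keys[1:])}


# Hypothetical CPU samples (time in s, % of VM capacity); first client joins at 10 s.
cpu = [(5, 9.0), (12, 2.0), (20, 2.2), (45, 2.6), (50, 2.8), (75, 3.0)]
per_n = mean_by_client_count(cpu, first_join_s=10)
print(per_n)
print(increments(per_n))
```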

Table 3.

Network utilization of the Holo-Cloud platform components in test scenario #3.

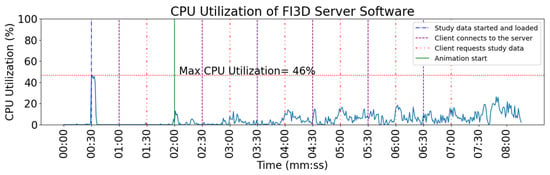

As shown in Figure 12, CPU utilization peaks when the FI3D software loads the study data from the Holo-Cloud server's disk into memory. Even this peak stays below the maximum capacity of the Holo-Cloud virtual machine, showing that the platform meets the processing needs of the FI3D server software. The processing required to handle subsequent clients' join and data requests is small compared with the capacity of the cloud infrastructure, so Holo-Cloud can readily provide it.

Figure 12.

CPU utilization spikes when the server loads and caches the study data for the first time. Holo-Cloud was able to fulfill the processing requirements. Also, minor spikes are seen when client connection and data requests are served by the server. The blue solid line is % CPU utilization and the red horizontal dashed line is the maximum CPU utilization.

The memory utilization of the FI3D software on Holo-Cloud follows the same pattern as in the previous experiments (Figure 13). Because the server software caches the study data, its memory footprint does not change appreciably when a new client connects. This makes memory requirements predictable, which simplifies estimating and procuring cloud resources.
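Since the cached study data are shared by all connections, memory demand can be modeled as a fixed part plus a small per-client overhead, which is what makes procurement planning straightforward. A sketch of such a sizing rule; the linear model, the 25% headroom factor, the list of VM sizes, and all numbers are assumptions for illustration, not measurements from this study.

```python
def required_memory_mb(base_mb: float, cache_mb: float, per_client_mb: float,
                       n_clients: int, headroom: float = 0.25) -> float:
    """Memory to provision (ASSUMED linear model): fixed process footprint
    + shared study cache + per-client overhead, scaled by a safety headroom."""
    if n_clients < 0 or headroom < 0:
        raise ValueError("n_clients and headroom must be non-negative")
    return (base_mb + cache_mb + n_clients * per_client_mb) * (1 + headroom)


def smallest_fit(required_mb: float, offerings_mb: list[int]) -> int:
    """Smallest VM memory size from a (hypothetical) list of offerings that fits."""
    fitting = [m for m in sorted(offerings_mb) if m >= required_mb]
    if not fitting:
        raise ValueError("no offering is large enough")
    return fitting[0]


# Hypothetical inputs: 150 MB process, 50 MB cached study, 1 MB per client, 5 clients.
need = required_memory_mb(base_mb=150, cache_mb=50, per_client_mb=1, n_clients=5)
print(need)                                         # 256.25
print(smallest_fit(need, [512, 1024, 2048, 4096]))  # 512
```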

Figure 13.

Memory utilization spikes occur when the first client requests study data. Then, the server caches the data. The Holo-Cloud platform provides a sufficient amount of memory to run the FI3D. The blue solid line is memory utilization and the red horizontal dashed line is the maximum memory utilization.

As shown in Figure 14, the server software sends the CINE MRI animation sequence data to each client on request. Transmission of the sequence data stops once the client has cached it; only metadata continues to be sent so that the client can apply the latest visual properties of the model. The client then plays the cached animation sequence in a loop. This caching mechanism reduces network load and prevents the system from slowing down when many clients are connected.
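The caching behavior described above can be sketched as a client-side buffer that accepts frames until one full cycle has arrived and then loops locally, after which only small metadata updates (here, a hypothetical opacity value) need to cross the network. The class, field, and method names are illustrative assumptions, not FI3D's API, and the sketch simplifies by not displaying frames until the cycle is complete.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CineCache:
    """Client-side cache for one cycle of an animated (CINE) sequence (hypothetical)."""
    n_frames: int                                        # frames per cardiac cycle
    frames: dict[int, bytes] = field(default_factory=dict)
    metadata: dict[str, float] = field(default_factory=dict)
    _cursor: int = 0

    def __post_init__(self) -> None:
        if self.n_frames < 1:
            raise ValueError("n_frames must be >= 1")

    @property
    def complete(self) -> bool:
        return len(self.frames) == self.n_frames

    def needs_frames(self) -> bool:
        """Frame data is requested only until the full cycle is cached."""
        return not self.complete

    def add_frame(self, index: int, data: bytes) -> None:
        if not 0 <= index < self.n_frames:
            raise IndexError("frame index out of range")
        self.frames[index] = data

    def update_metadata(self, **props: float) -> None:
        """Visual properties keep arriving from the server after caching."""
        self.metadata.update(props)

    def next_frame(self) -> Optional[bytes]:
        """Next frame to display, looping over the cached cycle."""
        if not self.complete:
            return None  # simplification: still receiving the first cycle
        frame = self.frames[self._cursor]
        self._cursor = (self._cursor + 1) % self.n_frames
        return frame
```

Usage: after `cache.add_frame(i, data)` has been called for every frame index, `cache.needs_frames()` returns `False` and repeated `cache.next_frame()` calls cycle through the cached frames without further frame transfers.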

Figure 14.

The server sends the study data to clients upon request, as illustrated by the spikes. Each client caches the data after receiving one full CINE MRI sequence cycle; thereafter, only metadata is transmitted. The blue solid line is transmitted TCP packets per second.

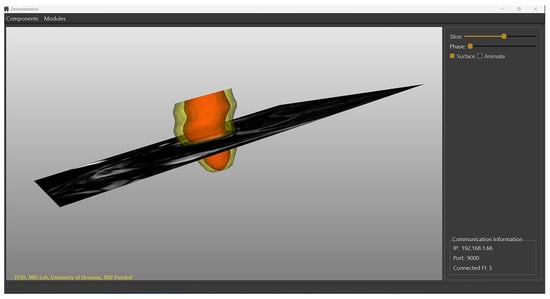

3.5.4. Fourth Evaluation Scenario: Multiuser Collaboration

In this scenario, the multiuser collaboration capability of the Holo-Cloud platform was evaluated by projecting two holographic models, a brain model and a molecular ribbon model, both provided in USD file format, to all viewers. After all viewers had loaded the models, one viewer rotated and moved the holograms using Microsoft HoloLens 2 hand gestures, and the other users, at their respective physical locations, seamlessly observed the changes in the FOV of their Microsoft HoloLens 2 HMD, HoloLens 2 emulator, or Android HHD (Figure 15). During this interaction, the coordinate changes of the brain and molecular ribbon models must be sent to the Holo-Cloud server in each frame, and the client software must receive the updated coordinates from the server to project the models on the respective device. The results showed seamless projection of the collaborative task on all client devices.
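Conceptually, each manipulation produces a transform update that the server applies to the shared scene state and relays to every other connected client. A minimal in-memory sketch of that relay, assuming a position/rotation/scale transform per model; the class names and message format are hypothetical and do not describe FI3D's actual protocol or transport.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transform:
    position: tuple[float, float, float]
    rotation: tuple[float, float, float, float]  # quaternion (x, y, z, w)
    scale: float = 1.0


class SceneRelay:
    """Server-side shared scene state with per-client outboxes (hypothetical)."""

    def __init__(self) -> None:
        self.state: dict[str, Transform] = {}
        self.outbox: dict[str, list[tuple[str, Transform]]] = {}

    def register(self, client_id: str) -> None:
        # A newly registered client first receives the current scene state.
        self.outbox[client_id] = list(self.state.items())

    def update(self, sender: str, model_id: str, t: Transform) -> None:
        """Apply one client's change and queue it for every other client;
        the sender already shows the change locally, so it gets no echo."""
        self.state[model_id] = t
        for cid, queue in self.outbox.items():
            if cid != sender:
                queue.append((model_id, t))

    def drain(self, client_id: str) -> list[tuple[str, Transform]]:
        """Return and clear the updates pending for one client."""
        pending, self.outbox[client_id] = self.outbox[client_id], []
        return pending
```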

Figure 15.

(a) The user rotates, tilts, and zooms the brain model using the Microsoft HoloLens 2 HMD hand gestures. (b) The projection of the brain model in the user’s HoloLens see-through display. (c) Projection of the brain model using another user’s Android HHD. (d) The user interacts with a molecular ribbon 3D model using Microsoft HoloLens 2 HMD hand gestures. (e) The projection of the molecular ribbon model in HoloLens 2 FOV. (f) Another user interacts with the molecular ribbon model using an Android device.

No network slowness was observed in any of the scenarios. The bandwidth requirement of the FI3D application is minimal, and, as the network utilization tables show, the internet and end-to-end bandwidth available to the cloud server and the clients were considerably higher than the application's bandwidth utilization. Interaction between the clients and the server showed no perceptible slowness, and once the client software had cached the first cycle of the animation, the 4D CINE MRI sequence played smoothly on the client devices at an acceptable frame rate, without noticeable frame drops or lag.

4. Discussion and Conclusions

In this work, we introduced and evaluated a cloud framework that hosts a server application, enabling users to connect from multiple locations and collaborate in an immersive XR environment. This platform is a first step toward showing that an efficient cloud platform can overcome current processing limitations and expand the use of XR. It also improves the user experience by removing the need to be physically present at, or close to, the application server, which could enhance the quality of medical training. We also demonstrated that the Holo-Cloud architecture is easy to implement, uses hardware resources efficiently, and is independent of the cloud platform.

Measuring CPU, memory, and network utilization is essential for evaluating the performance of cloud platforms. The experimental results show that the resource utilization of the FI3D application process hosted on the Holo-Cloud virtual machine is minimal; therefore, the Holo-Cloud model has the potential to be used in various fields, such as surgery and surgical and radiology training.

This framework has some limitations. First, because the platform can be implemented on any cloud service provider, it may be difficult for end users to choose among providers. Second, in multiuser interaction scenarios, every user registered with the system can modify the orientation and visual characteristics of the projected models, and the server updates the scene accordingly for all other users. Confusion may arise if more than one user interacts with the same object simultaneously; to avoid such conflicts, we plan to develop locking mechanisms. Beyond these, some inherent limitations may affect the user experience. Because Holo-Cloud is multiplatform and accessible via a wide range of HMDs and HHDs, users' visual experience of the projected models may differ across hardware (e.g., delays in frame updates due to device hardware limitations, and differences in spatial resolution, luminance, contrast, and color profile). Another limitation is the network quality of end users, who need uninterrupted internet connectivity for an optimal experience with the platform.
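The locking mechanism mentioned above is planned rather than implemented. One common way to realize it is a per-model ownership lease: a client must hold the lease to manipulate a model, and the lease expires after a period of inactivity so that a disconnected user cannot block others. A sketch under those assumptions; the 5 s lease duration and all names are hypothetical, and a server would call `acquire` before applying each incoming transform update.

```python
import time
from typing import Callable, Optional


class ModelLocks:
    """Per-model ownership leases that expire `lease_s` seconds after the
    last acquire/renew (hypothetical design, not part of FI3D)."""

    def __init__(self, lease_s: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.lease_s = lease_s
        self.clock = clock
        self._owners: dict[str, tuple[str, float]] = {}  # model -> (client, expiry)

    def owner(self, model_id: str) -> Optional[str]:
        entry = self._owners.get(model_id)
        if entry is None or entry[1] <= self.clock():
            return None  # free, or the previous lease has expired
        return entry[0]

    def acquire(self, model_id: str, client_id: str) -> bool:
        """Grant (or renew) the lease unless another client holds it."""
        current = self.owner(model_id)
        if current not in (None, client_id):
            return False
        self._owners[model_id] = (client_id, self.clock() + self.lease_s)
        return True

    def release(self, model_id: str, client_id: str) -> None:
        if self.owner(model_id) == client_id:
            del self._owners[model_id]
```

Injecting the clock keeps the lease logic testable without real waiting; renewing on every accepted update means an actively manipulating user keeps control, while an idle one loses it after the lease period.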

Because the objective of this study is to evaluate the Holo-Cloud framework, the FI3D software’s original deployment and parameters were not changed or reconfigured, and this software was used as is. However, multiple FI3D software parameters, such as parallel processing, can be optimized for utilization on the cloud. Also, in the future, we plan to integrate medical imaging and medical IoT devices into the Holo-Cloud framework to enable users to interact and control devices via XR through the cloud. Moreover, we plan to integrate artificial intelligence models into the Holo-Cloud platform to leverage the high-performance and on-demand resources of the cloud for real-time diagnosis and data enhancement, such as on-the-fly medical image segmentation using various methods [34]. An economic analysis of using Holo-Cloud is also planned.

Author Contributions

Conceptualization, H.N., J.D.V.-G. and N.V.T.; methodology, H.N., J.D.V.-G. and N.V.T.; software, H.N. and J.D.V.-G.; validation, H.N. and J.D.V.-G.; formal analysis, H.N.; investigation, H.N. and J.D.V.-G.; resources, N.V.T.; data curation, H.N.; writing—original draft preparation, H.N.; writing—review and editing, H.N., K.Q.T., J.D.V.-G. and N.V.T.; visualization, H.N. and K.Q.T.; supervision, J.D.V.-G. and N.V.T.; project administration, J.D.V.-G. and N.V.T.; funding acquisition, J.D.V.-G. and N.V.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Science Foundation (NSF) awards CNS-1646566 and DGE-1746046 and by the National Aeronautics and Space Administration (NASA) STTR contract # 80NSSC22PB226.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

Author Jose Daniel Velazco-Garcia was employed by the company Tietronix Software, Inc. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Capecchi, I.; Bernetti, I.; Borghini, T.; Caporali, A. CaldanAugmenty—Augmented Reality and Serious Game App for Urban Cultural Heritage Learning. In Proceedings of the Extended Reality: International Conference, XR Salento 2023, Lecce, Italy, 6–9 September 2023; pp. 339–349.

- Ayoub, A.; Pulijala, Y. The Application of Virtual Reality and Augmented Reality in Oral & Maxillofacial Surgery. BMC Oral Health 2019, 19, 238.

- Geyer, M. BMW Group Starts Global Rollout of NVIDIA Omniverse. Available online: https://blogs.nvidia.com/blog/bmw-group-nvidia-omniverse/ (accessed on 25 December 2023).

- Kukla, P.; Maciejewska, K.; Strojna, I.; Zapał, M.; Zwierzchowski, G.; Bąk, B. Extended Reality in Diagnostic Imaging—A Literature Review. Tomography 2023, 9, 1071–1082.

- Daher, M.; Ghanimeh, J.; Otayek, J.; Ghoul, A.; Bizdikian, A.J.; EL Abiad, R. Augmented Reality and Shoulder Replacement: A State-of-the-Art Review Article. JSES Rev. Rep. Tech. 2023, 3, 274–278.

- Zhu, E.; Hadadgar, A.; Masiello, I.; Zary, N. Augmented Reality in Healthcare Education: An Integrative Review. PeerJ 2014, 2, e649.

- Eckert, M.; Volmerg, J.S.; Friedrich, C.M. Augmented Reality in Medicine: Systematic and Bibliographic Review. JMIR Mhealth Uhealth 2019, 7, e10967.

- Curran, V.R.; Xu, X.; Aydin, M.Y.; Meruvia-Pastor, O. Use of Extended Reality in Medical Education: An Integrative Review. Med. Sci. Educ. 2023, 33, 275–286.

- Zhang, J.; Lu, V.; Khanduja, V. The Impact of Extended Reality on Surgery: A Scoping Review. Int. Orthop. 2023, 47, 611–621.

- Arpaia, P.; De Benedetto, E.; De Paolis, L.; D’errico, G.; Donato, N.; Duraccio, L. Performance and Usability Evaluation of an Extended Reality Platform to Monitor Patient’s Health during Surgical Procedures. Sensors 2022, 22, 3908.

- Longo, U.G.; De Salvatore, S.; Candela, V.; Zollo, G.; Calabrese, G.; Fioravanti, S.; Giannone, L.; Marchetti, A.; De Marinis, M.G.; Denaro, V. Augmented Reality, Virtual Reality and Artificial Intelligence in Orthopedic Surgery: A Systematic Review. Appl. Sci. 2021, 11, 3253.

- Kim, J.C.; Laine, T.H.; Åhlund, C. Multimodal Interaction Systems Based on Internet of Things and Augmented Reality: A Systematic Literature Review. Appl. Sci. 2021, 11, 1738.

- Schäfer, A.; Reis, G.; Stricker, D. A Survey on Synchronous Augmented, Virtual and Mixed Reality Remote Collaboration Systems. ACM Comput. Surv. 2023, 55, 1–27.

- Suh, A.; Prophet, J. The State of Immersive Technology Research: A Literature Analysis. Comput. Hum. Behav. 2018, 86, 77–90.

- Sugimoto, M.; Sueyoshi, T. Development of Holoeyes Holographic Image-Guided and Telemedicine System: Clinical Benefits of Extended Reality (Virtual Reality, Augmented Reality, Mixed Reality), The Metaverse, and Artificial Intelligence in Surgery with a Systematic Review. Med. Res. Arch. 2023, 11.

- Morimoto, T.; Hirata, H.; Ueno, M.; Fukumori, N.; Sakai, T.; Sugimoto, M.; Kobayashi, T.; Tsukamoto, M.; Yoshihara, T.; Toda, Y.; et al. Digital Transformation Will Change Medical Education and Rehabilitation in Spine Surgery. Medicina 2022, 58, 508.

- Prange, A.; Chikobava, M.; Poller, P.; Barz, M.; Sonntag, D. A Multimodal Dialogue System for Medical Decision Support in Virtual Reality. In Proceedings of the 18th Annual SIGdial Meeting on Discourse and Dialogue, Saarbrücken, Germany, 15–17 August 2017; pp. 23–26.

- Sutherland, J.; Belec, J.; Sheikh, A.; Chepelev, L.; Althobaity, W.; Chow, B.J.W.; Mitsouras, D.; Christensen, A.; Rybicki, F.J.; La Russa, D.J. Applying Modern Virtual and Augmented Reality Technologies to Medical Images and Models. J. Digit. Imaging 2019, 32, 38–53.

- Zhao, Z.; Poyhonen, J.; Chen Cai, X.; Sophie Woodley Hooper, F.; Ma, Y.; Hu, Y.; Ren, H.; Song, W.; Tsz Ho Tse, Z. Augmented Reality Technology in Image-Guided Therapy: State-of-the-Art Review. Proc. Inst. Mech. Eng. H 2021, 235, 1386–1398.

- Luxenburger, A.; Prange, A.; Moniri, M.M.; Sonntag, D. MedicaLVR. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct, Heidelberg, Germany, 12–16 September 2016; ACM: New York, NY, USA, 2016; pp. 321–324.

- Venkatesan, M.; Mohan, H.; Ryan, J.R.; Schürch, C.M.; Nolan, G.P.; Frakes, D.H.; Coskun, A.F. Virtual and Augmented Reality for Biomedical Applications. Cell Rep. Med. 2021, 2, 100348.

- Uppot, R.N.; Laguna, B.; McCarthy, C.J.; De Novi, G.; Phelps, A.; Siegel, E.; Courtier, J. Implementing Virtual and Augmented Reality Tools for Radiology Education and Training, Communication, and Clinical Care. Radiology 2019, 291, 570–580.

- Tan, Y.; Xu, W.; Li, S.; Chen, K. Augmented and Virtual Reality (AR/VR) for Education and Training in the AEC Industry: A Systematic Review of Research and Applications. Buildings 2022, 12, 1529.

- Josephng, P.S.; Gong, X. Technology Behavior Model—Impact of Extended Reality on Patient Surgery. Appl. Sci. 2022, 12, 5607.

- Morimoto, T.; Kobayashi, T.; Hirata, H.; Otani, K.; Sugimoto, M.; Tsukamoto, M.; Yoshihara, T.; Ueno, M.; Mawatari, M. XR (Extended Reality: Virtual Reality, Augmented Reality, Mixed Reality) Technology in Spine Medicine: Status Quo and Quo Vadis. J. Clin. Med. 2022, 11, 470.

- López-Ojeda, W.; Hurley, R.A. Extended-Reality Technologies: An Overview of Emerging Applications in Medical Education and Clinical Care. J. Neuropsychiatry Clin. Neurosci. 2021, 33, A4–A177.

- Chandler, T.; Cordeil, M.; Czauderna, T.; Dwyer, T.; Glowacki, J.; Goncu, C.; Klapperstueck, M.; Klein, K.; Marriott, K.; Schreiber, F.; et al. Immersive Analytics. In Proceedings of the 2015 Big Data Visual Analytics (BDVA), Hobart, Australia, 22–25 September 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1–8.

- Pi, D.; Liu, J.; Wang, Y. Review of Computer-Generated Hologram Algorithms for Color Dynamic Holographic Three-Dimensional Display. Light Sci. Appl. 2022, 11, 231.

- Huzaifa, M.; Desai, R.; Grayson, S.; Jiang, X.; Jing, Y.; Lee, J.; Lu, F.; Pang, Y.; Ravichandran, J.; Sinclair, F.; et al. Exploring Extended Reality with ILLIXR: A New Playground for Architecture Research. arXiv 2020, arXiv:2004.04643.

- Parmar, V.; Kingra, S.K.; Shakib Sarwar, S.; Li, Z.; De Salvo, B.; Suri, M. Fully-Binarized Distance Computation Based On-Device Few-Shot Learning for XR Applications. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Vancouver, BC, Canada, 17–24 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 4502–4508.

- Velazco-Garcia, J.D.; Shah, D.J.; Leiss, E.L.; Tsekos, N.V. A Modular and Scalable Computational Framework for Interactive Immersion into Imaging Data with a Holographic Augmented Reality Interface. Comput. Methods Programs Biomed. 2021, 198, 105779.

- Molina, G.; Velazco-Garcia, J.D.; Shah, D.; Becker, A.T.; Seimenis, I.; Tsiamyrtzis, P.; Tsekos, N.V. Automated Segmentation and 4D Reconstruction of the Heart Left Ventricle from CINE MRI. In Proceedings of the 2019 IEEE 19th International Conference on Bioinformatics and Bioengineering (BIBE), Athens, Greece, 28–30 October 2019; pp. 1019–1023.

- Hirzle, T.; Müller, F.; Draxler, F.; Schmitz, M.; Knierim, P.; Hornbaek, K. When XR and AI Meet-A Scoping Review on Extended Reality and Artificial Intelligence. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–45.

- Gao, S.; Zhou, H.; Gao, Y.; Zhuang, X. BayeSeg: Bayesian Modeling for Medical Image Segmentation with Interpretable Generalizability. Med. Image Anal. 2023, 89, 102889.

- Prange, A.; Barz, M.; Sonntag, D. Medical 3D Images in Multimodal Virtual Reality. In Proceedings of the 23rd International Conference on Intelligent User Interfaces Companion, Tokyo, Japan, 7–11 March 2018; ACM: New York, NY, USA, 2018; pp. 1–2.

- Morales Mojica, C.M.; Velazco-Garcia, J.D.; Pappas, E.P.; Birbilis, T.A.; Becker, A.; Leiss, E.L.; Webb, A.; Seimenis, I.; Tsekos, N.V. A Holographic Augmented Reality Interface for Visualizing of MRI Data and Planning of Neurosurgical Procedures. J. Digit. Imaging 2021, 34, 1014–1025.

- Velazco Garcia, J.D.; Navkar, N.V.; Gui, D.; Morales, C.M.; Christoforou, E.G.; Ozcan, A.; Abinahed, J.; Al-Ansari, A.; Webb, A.; Seimenis, I.; et al. A Platform Integrating Acquisition, Reconstruction, Visualization, and Manipulator Control Modules for MRI-Guided Interventions. J. Digit. Imaging 2019, 32, 420–432.

- The Metaverse Is the Future of Digital Connection|Meta. Available online: https://about.meta.com/metaverse/ (accessed on 26 December 2023).

- NVIDIA Omniverse the Platform for Connecting and Developing OpenUSD Applications. Available online: https://www.nvidia.com/en-us/omniverse/ (accessed on 26 December 2023).

- All-in-One Medical Imaging Solution for Analysis, 3D Modeling and Digital Twin. Available online: https://medicalip.com/medip/ (accessed on 26 December 2023).

- Raith, A.; Kamp, C.; Stoiber, C.; Jakl, A.; Wagner, M. Augmented Reality in Radiology for Education and Training—A Design Study. Healthcare 2022, 10, 672.

- Driver, J.; Groff, M.W. Navigation in Spine Surgery: An Innovation Here to Stay. J. Neurosurg. Spine 2022, 36, 347–349.

- White, E. Migrating Azure VM to AWS Using AWS SMS Connector for Azure. Available online: https://aws.amazon.com/blogs/compute/migrating-azure-vm-to-aws-using-aws-sms-connector-for-azure/ (accessed on 17 December 2023).

- Amazon AWS. Amazon EC2. Available online: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/concepts.html (accessed on 17 December 2023).

- Amazon AWS. Amazon Machine Images (AMI). Available online: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/AMIs.html (accessed on 17 December 2023).

- Cerf, V.G.; Kahn, R.E. A Protocol for Packet Network Intercommunication. ACM SIGCOMM Comput. Commun. Rev. 2005, 35, 71–82.

- Duan, Q. Cloud Service Performance Evaluation: Status, Challenges, and Opportunities—A Survey from the System Modeling Perspective. Digit. Commun. Netw. 2017, 3, 101–111.

- USD at NVIDIA. Available online: https://developer.nvidia.com/usd (accessed on 28 December 2023).

- Tran, K.Q.; Neeli, H.; Tsekos, N.V.; Velazco-Garcia, J.D. Immersion into 3D Biomedical Data via Holographic AR Interfaces Based on the Universal Scene Description (USD) Standard. In Proceedings of the 2023 IEEE 23rd International Conference on Bioinformatics and Bioengineering (BIBE), Dayton, OH, USA, 4–6 December 2023; pp. 354–358.

- Li, Z.; O’Brien, L.; Zhang, H.; Cai, R. On a Catalogue of Metrics for Evaluating Commercial Cloud Services. In Proceedings of the 2012 ACM/IEEE 13th International Conference on Grid Computing, Beijing, China, 20–23 September 2012; pp. 164–173.

- Li, Z.; Zhang, H.; O’Brien, L.; Cai, R.; Flint, S. On Evaluating Commercial Cloud Services: A Systematic Review. J. Syst. Softw. 2013, 86, 2371–2393.

- Brummett, T.; Sheinidashtegol, P.; Sarkar, D.; Galloway, M. Performance Metrics of Local Cloud Computing Architectures. In Proceedings of the 2nd IEEE International Conference on Cyber Security and Cloud Computing, CSCloud 2015—IEEE International Symposium of Smart Cloud, IEEE SSC 2015, New York, NY, USA, 3–5 November 2015; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2016; pp. 25–30.

- Atas, G.; Gungor, V.C. Performance Evaluation of Cloud Computing Platforms Using Statistical Methods. Comput. Electr. Eng. 2014, 40, 1636–1649.

- Kumar, S.; Maurya, V.; Gupta, R. A Distributed Load Balancing Technique for Multitenant Edge Servers with Bottleneck Resources. IEEE Trans. Reliab. 2023, 1–13.

- Velkoski, G.; Simjanoska, M.; Ristov, S.; Gusev, M. CPU Utilization in a Multitenant Cloud. In Proceedings of the Eurocon 2013, Zagreb, Croatia, 1–4 July 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 242–249.

- Tarra, H. Understanding Processor (% Processor Time) and Process (% Processor Time). Available online: https://social.technet.microsoft.com/wiki/contents/articles/12984.understanding-processor-processor-time-and-process-processor-time.aspx (accessed on 17 December 2023).

- Dittakavi, R.S.S. Deep Learning-Based Prediction of CPU and Memory Consumption for Cost-Efficient Cloud Resource Allocation. Sage Sci. Rev. Appl. Mach. Learn. 2021, 4, 45–58.

- Kumar, A.; Goswami, M. Performance Comparison of Instrument Automation Pipelines Using Different Programming Languages. Sci. Rep. 2023, 13, 18579.

- Using Performance Monitor. Available online: https://learn.microsoft.com/en-us/previous-versions/windows/it-pro/windows-server-2008-R2-and-2008/cc749115(v=ws.11)?redirectedfrom=MSDN (accessed on 14 February 2024).

- Ul Islam, S.; Khattak, H.A.; Pierson, J.M.; Din, I.U.; Almogren, A.; Guizani, M.; Zuair, M. Leveraging Utilization as Performance Metric for CDN Enabled Energy Efficient Internet of Things. Measurement 2019, 147, 106814.

- Ookla. Introducing Speedtest® CLI. Available online: https://www.ookla.com/articles/introducing-speedtest-cli (accessed on 17 December 2023).

- Abolfazli, S.; Sanaei, Z.; Wong, S.Y.; Tabassi, A.; Rosen, S. Throughput Measurement in 4G Wireless Data Networks: Performance Evaluation and Validation. In Proceedings of the 2015 IEEE Symposium on Computer Applications & Industrial Electronics (ISCAIE), Langkawi, Malaysia, 12–14 April 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 27–32.

- Rajabzadeh, P. Monitoring Network Performance with Iperf; Politecnico di Milano: Milan, Italy, 2017.

- iperf. iperf3: A TCP, UDP, and SCTP Network Bandwidth Measurement Tool. Available online: https://github.com/esnet/iperf (accessed on 17 December 2023).

- Using the HoloLens Emulator. Available online: https://learn.microsoft.com/en-us/windows/mixed-reality/develop/advanced-concepts/using-the-hololens-emulator (accessed on 17 December 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).