Abstract

Designing integrated systems for supporting, monitoring in real time, and executing complex missions is challenging, since such systems often fail due to high levels of complexity and an overwhelming volume of input data. Past attempts have resorted to “ad hoc” solutions, which cannot be updated or upgraded and are not applicable to similar missions, necessitating a complete redesign and reconstruction of the system. In the national defense and security sector, this reconstruction requirement leads to significant costs and delays. This study presents advanced methodologies for systematically organizing large-scale datasets and handling complex operational procedures, enhancing the capabilities of Decision Support Systems (DSSs). It introduces Complex Mission Support Systems (CMSSs), a novel Support System (SS) sub-component which improves accuracy and effectiveness. The CMSS covers mission conceptualization, analysis, real-time monitoring, control dynamics, execution strategies, and simulations. Thanks to their human-reasoning-centered design, these methods significantly aid engineers in developing DSSs that are highly user-friendly and operational, increasing performance and efficiency. In summary, the systematic development of data cores that support complex processes creates an adaptable and adjustable framework for a wide range of diverse missions, enhancing the overall sustainability and robustness of an integrated system.

1. Introduction

In this research, we present an advanced Decision Support System (DSS) capable of human-centric management of operational procedures, which are, in most instances, intricate [1]. These procedures are distinctly well defined, run sequentially or in parallel, and together constitute a mission in its entirety. According to specialists in operational terminology, a mission is defined as a set of operational procedures that may or may not be interconnected. These procedures are intricately linked and heavily reliant on large-scale input datasets and information.

Furthermore, in this study, a complex mission is defined as a scenario within an environment that encompasses a multitude of actors and sub-actors as integral components of a system. This definition of complexity typically refers to scenarios involving extensive integration of input data derived from both internal and external sources, coupled with a significant number of operational procedures or processes. Such missions demand an advanced DSS engineered to manage their procedures proficiently and to ensure their effective completion.

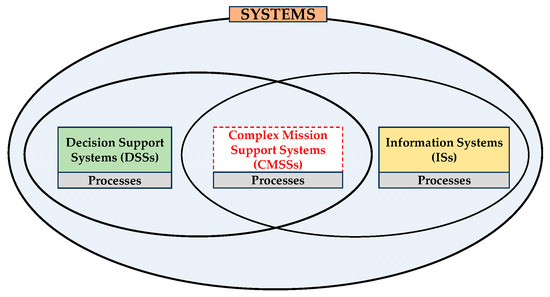

To function correctly in aiding the accurate planning and real-time execution of a mission, the DSS requires a well-structured design, including a structure for handling the necessary input data before they enter the system. This study introduces a novel SS sub-component, the Complex Mission Support System (CMSS or CMS system). Furthermore, it proposes a new DSS scheme in which a CMSS is interconnected with an Information System (IS) and the overall architecture constitutes a DSS. The CMS system is a specialized system that resides at the intersection of DSSs and ISs (see Figure 1). The structure of DSSs, including some indicative functions, encompasses:

Figure 1. CMSS as an intersection of DSS and IS.

- Decision Support System (DSS): This system plays a pivotal role in the design, monitoring, and real-time execution of missions. It includes a range of functionalities such as (a) designing the mission’s blueprint, outlining objectives, strategies, and resources, (b) monitoring the mission’s progress, ensuring adherence to the predefined plan, and (c) facilitating real-time adjustments and decision making during mission execution in response to dynamic operational conditions.

- Information System (IS): This system is integral to data/information management, with functionalities including (a) managing input and output data/information, ensuring the accuracy and relevance of the data used in decision-making processes, (b) handling data/information, encompassing storage, retrieval, and processing operations, (c) responding to Requests for Information (RFIs), ensuring timely and accurate dissemination of information, (d) implementing a computationally efficient classification of data/information to enable fast categorization and retrieval, and (e) categorizing data/information thematically, which facilitates access and analysis for specific mission aspects.

- Complex Mission Support System (CMSS): This kind of system is designed for executing intricate missions, with features such as (a) producing detailed records of the actions taken (log files), which provide a comprehensive procedure footprint for efficient analysis, (b) predicting new intermediate action nodes that are crucial for the mission, facilitating proactive planning and resource allocation, and (c) executing operational procedures efficiently, ensuring the seamless implementation of mission-critical tasks.
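The division of labor among the three systems can be sketched in code. The following is a minimal, hypothetical Python sketch, assuming an IS that stores items by theme and answers Requests for Information (RFIs), and a CMSS that executes procedures against that IS while keeping a time-stamped log (the procedure footprint). All class and method names are illustrative assumptions, not the authors’ implementation, and intermediate-node prediction is omitted for brevity.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class InformationSystem:
    """Hypothetical IS: stores data/information by theme and answers RFIs."""
    store: dict[str, list[str]] = field(default_factory=dict)

    def ingest(self, theme: str, item: str) -> None:
        # Thematic categorization of incoming data, function (e) of the IS.
        self.store.setdefault(theme, []).append(item)

    def answer_rfi(self, theme: str) -> list[str]:
        # Response to a Request for Information, function (c) of the IS.
        return list(self.store.get(theme, []))


@dataclass
class ComplexMissionSupportSystem:
    """Hypothetical CMSS: executes procedures and logs every action taken."""
    information_system: InformationSystem
    log: list[tuple[str, str]] = field(default_factory=list)

    def execute(self, procedure: str, theme: str) -> list[str]:
        data = self.information_system.answer_rfi(theme)
        timestamp = datetime.now(timezone.utc).isoformat()
        # Each log entry records what was done and how much data it used.
        self.log.append((timestamp, f"{procedure}: {len(data)} item(s) on '{theme}'"))
        return data


@dataclass
class DecisionSupportSystem:
    """The integrated DSS: a CMSS interconnected with an IS."""
    information_system: InformationSystem
    cmss: ComplexMissionSupportSystem

    @classmethod
    def build(cls) -> "DecisionSupportSystem":
        info_sys = InformationSystem()
        return cls(info_sys, ComplexMissionSupportSystem(info_sys))


# Example usage with made-up mission data.
dss = DecisionSupportSystem.build()
dss.information_system.ingest("weather", "low cloud over sector B")
result = dss.cmss.execute("plan helicopter route", "weather")
```

In this sketch the CMSS reaches data only through the IS’s `answer_rfi` entry point, so the way data are stored can change without touching the mission logic.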

At this point, it should be mentioned that the complexity of CMSS procedures has been a significant challenge for IT engineers, because it often exceeds the limits of ISs, which are unable to organize and handle further incoming information [2]. The term complex mission support system is introduced to describe a system that supports the design, simulation, monitoring, and control of any type of operational procedure (or complex mission, as many operational experts call it). In an extended sense, these systems are also called command and control support systems because of their hierarchical operational structure [3]. Indeed, CMSS construction poses a significant technical challenge but, above all, it is considered of major importance for the design and maintenance of national defense and security policy operations.

CMSSs need a large amount of information to operate properly. In particular, they usually demand georeferenced information, which can be Two-Dimensional (2D) or Three-Dimensional (3D). In realistic operational scenarios, CMS systems depend on information extracted from ill-designed data structures, either in data warehouses or in other large-scale ISs. The creation of a CMSS is an emerging scientific and engineering area of interest, and its efficient design and implementation are crucial for national defense planning [4].

The proper execution of complex procedures has been one of the most important factors affecting their outcome. The need to construct and use CMS systems in complex missions arises from multiple factors. Firstly, the internal operational procedures of a CMSS are excessively complex, an emergent property of their nature and of the volume of input data [5]. Furthermore, the number of participant actors that are an internal part of the system in a mission is increased, especially in joint operations, which inherently demand a high level of coordination and synchronization [6]. Additionally, a large volume of operational input data is needed, and these data are directly or indirectly geospatial [7]. Moreover, much of the expertise of specialists and prior knowledge has already been standardized and/or formalized, both for the design phase and for the execution of a mission, but this knowledge is scattered and has not been integrated in its totality into a single IS. Last, but not least, there is a desire to use unexploited datasets of reliable quality (e.g., updated maps, regulations, guidelines, case laws in different geographical areas, etc.), as well as successful operational procedures (e.g., successful coordination of actors in search and rescue missions, use of standardized operational methodology where available, etc.) [8].

In general terms, DSSs are capable of managing, controlling, monitoring, and executing operational procedures, either scheduled or in an emergency [9]. Such systems have already been developed for realistic cases in the civil sector, for addressing natural disasters [10], epidemic incidents [11], and the transportation of flammable or other hazardous materials [12], and in the security sector, for search and rescue missions [13], urgent air transportation of patients [14], supply missions [15], and other similar complex missions in emergency situations [16] (see Appendix A).

However, these systems are commonly designed ad hoc (i.e., for a specific purpose) and employ heuristic methodologies that may not be generalizable or applicable to other cases [17]. In the present study, it has been observed that the data required to support even simple operational procedures (e.g., search and rescue missions, transportation of sensitive or hazardous cargo, air transportation of patients and organs for transplantation, combating sea piracy, etc.) are usually of high volume, often highly complex, and, in most cases, directly or indirectly related to maps [18].

In fact, the volume of these data increases continually and has already reached relatively large amounts; as a result, a system may become unmanageable or even uncontrollable. Additionally, these data often exhibit increased internal complexity, and the information required in missions is particularly diverse, which further complicates the task of the operational officers who process these data [19].

Rapid changes in specific data (in terms of context, type, data structure, format, etc.) can make them difficult to keep up to date, potentially leading to considerable decision-making challenges for operational professionals [20]. Often, the presence of multiple autonomous entities producing distinct datasets leads to substantial issues concerning the internal coherence and compatibility of the targeted data sub-sets. This lack of internal interoperability and/or compatibility generally hampers the use of these data in operational procedures [21].

Much of the information needed by DSSs has likely already been developed, in segments and of satisfactory quality, by multiple relevant authorities (e.g., governmental institutions, organizations, etc.) [22]. The way in which Subject Matter Experts (SMEs) design and execute specific operational procedures shows significant similarities across such procedures, regardless of the kind of mission. SMEs are highly knowledgeable, trained officials performing specialized functions in given processes. Although the ability to develop solutions may differ markedly among operational officials, the fundamental principles of human reasoning used in designing missions are common to all of them. The procedures that any participant actor applies in any kind of mission present similarities (common segments). These common procedure segments have already been standardized, or even established as common procedure templates, by operational officials for a considerable number of missions [23] (see Appendix A).

To fully exploit the potential offered by these similarities, this work considered two actions necessary: (a) constructing data cores that are organized systematically to support complex operational procedures and (b) developing a general, adaptable, and adjustable framework of methodologies, based on these data cores, to support a considerable thematic range of different operational procedures.

This research introduces specific methodologies and techniques for organizing and handling large-scale information in DSSs for complex missions, with the goal of resolving the complexities associated with the diversity, polymorphism, and volume of information. These combined solutions include:

- 1st Methodology: Purpose-based organization of the incoming data in the CMS system. This methodology emphasizes purpose-driven information organization: aligning operational procedures with specific purposes enables efficient information organization by correlating each action with the data required for mission support [24].

- 2nd Methodology: Use of thematic decomposition of complex operational procedures based on their thematic content, as well as the geographical area where they take place [25].

- 3rd Methodology: Use of a novel mission design algorithm for identifying key intermediate objectives, enabling the CMSS to handle any kind of complex operational procedure easily and flexibly by following the crucial intermediate steps needed to accomplish a mission successfully.

- 4th Methodology: Use of a novel system architecture called a “teleological structure” or “thematic content map”. This architecture ensures the sustainability of a complex system because it resembles the way in which the human mind works, correlating each subject or issue that it is called upon to address with the corresponding sub-sets of data and computational methods required to solve it. Components of the teleological methodology are used, suitably adapted to CMSSs, in the three methodologies above. Thus, a CMSS that implements the teleological method gains flexibility and functionality by simplifying the operational procedures/processes without negatively influencing the successful completion of a mission [26].

- 5th Methodology: Use of an organization of geodata called “themes over maps”, which fits the teleological structure described above. The themes over maps approach is more suitable for complex missions than the frequently applied architecture called “maps over themes”, because it is aligned with the way in which the human mind works, correlating each subject or issue with the problem that it is called to solve [27,28]. Components of this structure are used throughout the system, suitably adapted to the IS that is interconnected with the CMSS. Thus, an integrated system that implements the themes over maps structure can provide any of its components with the actionable information required for the successful completion of a mission.
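The correlation at the heart of the 4th Methodology (each subject linked to its data sub-sets and computational methods), combined with the thematic and geographic decomposition of the 2nd Methodology, can be pictured as a lookup table. The Python sketch below is a minimal illustration under stated assumptions: the subjects, data sub-set names, method names, and helper functions (`decompose`, `required_data`, `required_methods`) are all hypothetical and do not reproduce the authors’ actual thematic content map.

```python
from collections.abc import Iterable
from dataclasses import dataclass


@dataclass(frozen=True)
class Purpose:
    """One entry of a hypothetical thematic content map."""
    data_subsets: tuple[str, ...]  # data sub-sets needed to address the subject
    methods: tuple[str, ...]       # computational methods used to address it


# Hypothetical teleological structure: subject -> data sub-sets and methods.
THEMATIC_CONTENT_MAP: dict[str, Purpose] = {
    "locate_casualty": Purpose(("last_known_position", "terrain", "weather"),
                               ("search_area_estimation",)),
    "plan_evacuation": Purpose(("road_network", "hospitals", "weather"),
                               ("shortest_path",)),
}


def decompose(mission: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Thematic and geographic decomposition into (area, subject) pairs."""
    return [(area, subject) for area, subjects in mission.items() for subject in subjects]


def required_data(subjects: Iterable[str]) -> set[str]:
    """Collect only the data sub-sets that serve the given subjects."""
    return {d for s in subjects for d in THEMATIC_CONTENT_MAP[s].data_subsets}


def required_methods(subjects: Iterable[str]) -> set[str]:
    """Collect the computational methods that serve the given subjects."""
    return {m for s in subjects for m in THEMATIC_CONTENT_MAP[s].methods}


# Example: a two-area mission with made-up subjects.
parts = decompose({"sector_B": ["locate_casualty"], "coast": ["plan_evacuation"]})
needed = required_data(subject for _, subject in parts)
methods = required_methods(subject for _, subject in parts)
```

In this sketch, supporting a new kind of mission means adding entries to the map rather than restructuring the code, which is one way to read the adaptability the methodologies aim at.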

These methodologies and techniques can significantly mitigate the severe issue of CMS system rejection, which stems from the system’s inability to support cases other than the one for which it was created. In practice, rejecting a CMS system leads to the redesign and construction of a new one, with the associated costs and delays.

1.1. Related Works/Existing DS System Methodologies

Following a literature review of methodologies and techniques in DS systems, the scientific field of the present study, an overview of the most prominent studies is given below. The key characteristics of various DSSs and their areas of application were identified and are presented in this state-of-the-art sub-section. For instance, the authors of [29] describe a method that uses model-based systems engineering to develop an unmanned aerial system digital twin, focusing on route optimization in military contexts with multiple attribute utility theory to improve decision making and reduce human error. Similarly, Ref. [30] presents a system for selecting surface units for search and rescue operations at sea using an Automatic Identification System (AIS) and multi-criteria decision analysis. Extending these developments, Ref. [14] proposes a DSS for Medical Evacuation (MEDEVAC) in military operations and emergencies, aimed at enhancing the efficiency of medical personnel in triaging and evacuating casualties from a region. Furthermore, Ref. [31] presents the DSS of the F-35 aircraft, designed to help pilots execute advanced tactical missions; it equips pilots with enhanced situational awareness and decision aids, facilitating critical and timely decision making. Additionally, Ref. [32] proposes a modular control solution integrated with a DSS to plan, execute, and monitor intricate missions involving multiple drones effectively. Complementing these approaches, Ref. [33] assesses the efficacy of neural networks in an Electrocardiogram (ECG) DSS for classifying heartbeats as normal or abnormal, thus helping medical professionals assess the patient’s state and proceed with the appropriate therapy. In a similar vein, the authors of [34] present a web-based DSS that uses Earth observation data and real-time weather information to predict, plan for, and respond to fire and flood events efficiently.
Moreover, the authors of [35] present a DSS design that improves police patrolling efficiency by integrating predictive policing capabilities with patrol districting models; the system aims to optimize the allocation of police resources by predicting crime risks and distributing police officers efficiently across patrol areas. The authors of [36] discuss the development and successful implementation of an advanced smart support system for operators of remotely operated vehicles, particularly vehicles designed to navigate long-distance routes synchronously, accurately, and safely. Lastly, the study presented in [37] focuses on the development and implementation of a DSS for a low-voltage grid that integrates renewable energy sources, specifically photovoltaic panels and wind turbines. This system proposes decisions for achieving an energy balance within a pilot microgrid, thereby reducing reliance on external power networks.

1.2. The Structure of the Present Study

The present manuscript is organized as follows:

- In Section 1, an introduction is provided, along with a proposal for a novel system structure and methodologies that merge a CMSS with an IS, forming an integrated DSS.

- In Section 2, the focus is on the critical challenges and methodologies involved in managing data and improving the decision-making process in complex mission scenarios, elaborating on the development and organization of CMSSs and ISs. This section is organized into several sub-sections:

  - Section 2.1 discusses the data requirements and structural design necessary for creating CMSSs.

  - Section 2.2 outlines the specific data needs of operational specialists for successfully executing complex missions using CMSSs.

  - Section 2.3 focuses on addressing the challenges associated with managing extensive datasets in ISs.

  - Section 2.4 explores the unique aspects and design challenges of SSs.

  - Section 2.5 covers the structured development of data cores for efficient information dissemination, describing five methodologies for organizing data and complex operational processes in DSSs.

- In Section 3, the methodologies and structures used in a CMSS-based mission are described comprehensively, providing insights into its design, organization, and comparative advantages. This section is organized into several sub-sections, each dedicated to a different aspect of the mission:

  - Section 3.1 introduces a mission-representative example, serving as a proof of concept for the CMSS.

  - Section 3.2 analyzes how data are structured and managed in the mission example, representing the first methodology applied within the CMSS framework.

  - Section 3.3 focuses on breaking down the mission content into themes or components, illustrating the second methodology in the CMSS approach.

  - Section 3.4 delves into the specific algorithms or processes used in designing the mission within the CMSS, highlighting the third methodology used.

  - Section 3.5 explores the goal-oriented aspects of the mission design, discussing how the mission’s objectives are structured and achieved, representing the fourth methodology.

  - Section 3.6 describes a unique approach in which thematic elements are prioritized over geographical or spatial considerations in mission planning, indicative of the fifth methodology in the CMSS.

  - Section 3.7 provides a comparative analysis of the CMSS with other existing systems or methodologies in the domain, highlighting its uniqueness and advantages.

- In Section 4, a detailed discussion explores various aspects of enhancing decision-making systems:

  - Section 4.1 emphasizes the importance of high-quality input in DSSs, CMSSs, and ISs.

  - Section 4.2 highlights the critical role and necessity of developing these systems.

  - Section 4.3 shifts focus to the efficient enhancement of data and procedural organization within these systems.

  - Section 4.4 examines the potential limitations and challenges associated with the proposed CMSS in depth.

  - Section 4.5 discusses practical considerations for implementing the CMSS framework in real-world applications.

  - Section 4.6 explores future perspectives and emerging trends in CMSS.

  - Section 4.7 explores the uncertainty in large-scale datasets and the role of fuzzy and interval data in enhancing DSSs.

- In Section 5, the conclusions of the present work are stated.

- This study is accompanied by Appendices A–E, which offer detailed insights into various facets of the subject matter (e.g., the organization and structuring of data within an IS, the development and architecture of the algorithms used in designing missions, and the mathematical equations, formulas, relationships, and expressions that enhance the understanding of the examined approaches).

- Lastly, the list of references used in the present study is given.

2. Materials and Methods

This section presents the crucial data requirements and mission algorithms necessary to support operational specialists in complex missions. It addresses the technical challenges of managing voluminous datasets within a DSS, which degrade system performance. Furthermore, it elucidates specific methodologies and techniques that leverage the reasoning capabilities of specialists to streamline the organization of data and processes within the DSS, contributing to the optimal function and performance of the algorithms that support the completion of the mission.

2.1. Identification of Input Data Requirements and Internal Frameworks for the Development of CMSSs

The data and program requirements for supporting operational procedures were examined rigorously in order to determine the characteristics of the desired data cores and programs. Representative examples of complex missions (outlined in Appendix A) were reviewed; these are among the missions most frequently encountered by operational officers in their professional experience. Additionally, extensive interviews were conducted with operational specialists from various sectors in order to examine the realistic data and program needs of the operational procedures they are responsible for handling. These interviews yielded a significant amount of critical operational information of different types, from which the data and program requirements for developing general DSSs to support complex missions of a broad thematic range were directly derived (as outlined in Appendix B).

2.2. Input Information Requirements in CMSSs for Operational Specialists to Successfully Execute Complex Missions

Through interviews with the operational specialists, who are an inextricable part of the present study, valuable operational experience was gained and actionable information was gathered towards the development of a general system for the effective support of complex missions. This information can be categorized into two main groups (see [24] and, for more details, Appendix B):

- Common information: information that usually satisfies the common needs and operational requirements of mission actors. Its most characteristic aspects are presented below:

First, there is information on time constraints. This type of information can increase the complexity of a mission and alter its structure, which may in turn require significant modifications to the required information. For instance, the time allocated for mission execution, the strategic use of timelines, obligations, and prioritization may change substantially. The analysis of a mission hinges on the limitations imposed by time and the corresponding information prerequisites [38].

Furthermore, another kind of information concerns terrain characteristics, encompassing morphology and features such as vegetation, river pathways, points of higher elevation, areas with high visibility, evacuation routes and pathways, etc.

Moreover, another type of information concerns environmental conditions such as temperature, wind speed, humidity, visibility, cloud cover, fog, etc. These factors must be taken into consideration proactively, before embarking on a mission. Special emphasis should be given to instances where weather conditions affect the landscape, especially during intense rainfall or flooding, and to cases where topography influences communication channels and the extent of visibility [39].

Information concerning the capabilities and qualifications of the actors in a mission also significantly affects the success of the entire mission. Key determinants include the number of actors, their designated duties, their level of training, their readiness to handle emergencies, and the possibility of a continuous flow of information. These factors are pivotal considerations throughout a mission.

In addition, all types of information related to available technical or specialized equipment are crucial, encompassing vehicles, communication protocols, area surveillance systems, motion detection systems, telecommunications relays, satellites, etc. The quantity and quality of the available technological resources, their adaptability to the specific needs of the mission, and their availability decisively facilitate the execution of the mission.

Information concerning vulnerabilities and potential threats is also important, since it relates to the possibility of partial or complete failure to achieve the objectives of a mission. In operational jargon, vulnerabilities encompass scenarios, procedures, and malicious actions stemming from external sources, such as disasters and sabotage, that can lead to loss of human life and damage to infrastructure, such as failures in communication and security systems and vehicle malfunctions. Other examples include congested roads, security issues, disruptions in primary or backup power supplies, ongoing construction within the mission’s geographic area, etc.

Moreover, numerous vulnerable points may arise during a mission, each requiring specific analysis. Points with a high likelihood of emergence and a high impact on mission success essentially represent threats, so experts must address them as a top priority. When specific conditions align and pertinent critical events unfold, a threat escalates into a crisis, as defined in operational terminology.

In addition, information concerning legislation introduces heightened legal duties and restrictions for the actors and operators involved, who should consider these factors and whether they align with the directives or commands issued by their superiors.

Last, but not least, further significant types of information require specific operational protocols; they encompass data capable of altering the current mission scenario in either advantageous or detrimental ways. This kind of information covers an extensive range of themes, such as demographic statistics of regions, economic and tourist activities, command-and-control center details, geographic zones of interest, transportation, communication networks, etc.

- Specialized information: information that satisfies the particular needs of actors, not only for obtaining satisfactory real-time situational awareness (the ability to perceive, understand, and effectively respond to an unexpected situation in an operational field) but also for acting appropriately, with precision and exact timing, maximizing the probability of mission success. A characteristic example is the specialized information required during any Search and Rescue (SAR) mission for an aircraft pilot. SAR missions require, among others, (a) information regarding the aircraft type, accident location, time of incident, flight trajectory towards the critical crash area, etc., (b) pilot-related information covering the pilot’s identity, equipment, potential responsibility for the aircraft’s crash, current status, etc., (c) environmental details regarding terrestrial or aquatic conditions, present and future weather conditions at low altitudes, potential obstacles to search and rescue operations, etc., and (d) details of the authorities’ response, encompassing the availability of SAR agencies, their operational capabilities, resource availability, equipment, materials, required search and rescue personnel, etc.
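The two groups of information can be represented as a simple catalogue in which every requirement carries its group and whether it is georeferenced. The Python sketch below is illustrative only: its entries paraphrase the categories listed above for a pilot SAR mission, while the `georeferenced` flags, the class names, and the helper functions are assumptions made for the example, not data from the study.

```python
from dataclasses import dataclass
from enum import Enum


class Scope(Enum):
    COMMON = "common"            # satisfies needs shared by most mission actors
    SPECIALIZED = "specialized"  # satisfies the particular needs of one mission type


@dataclass(frozen=True)
class InfoRequirement:
    name: str
    scope: Scope
    georeferenced: bool  # directly or indirectly tied to a location (assumed flags)


# Illustrative catalogue for a pilot SAR mission; the flags are assumptions.
SAR_PILOT_REQUIREMENTS = [
    InfoRequirement("time constraints", Scope.COMMON, False),
    InfoRequirement("terrain characteristics", Scope.COMMON, True),
    InfoRequirement("environmental conditions", Scope.COMMON, True),
    InfoRequirement("actor capabilities", Scope.COMMON, False),
    InfoRequirement("available equipment", Scope.COMMON, False),
    InfoRequirement("vulnerabilities and threats", Scope.COMMON, True),
    InfoRequirement("legislation", Scope.COMMON, True),
    InfoRequirement("aircraft type and flight trajectory", Scope.SPECIALIZED, True),
    InfoRequirement("pilot identity and status", Scope.SPECIALIZED, False),
    InfoRequirement("low-altitude weather at crash area", Scope.SPECIALIZED, True),
    InfoRequirement("SAR agency availability", Scope.SPECIALIZED, False),
]


def by_scope(reqs: list[InfoRequirement], scope: Scope) -> list[str]:
    """Names of the requirements that belong to one group."""
    return [r.name for r in reqs if r.scope is scope]


def georeferenced_share(reqs: list[InfoRequirement]) -> float:
    """Fraction of requirements flagged as georeferenced."""
    return sum(r.georeferenced for r in reqs) / len(reqs)
```

Tagging each requirement this way would let an IS route georeferenced items to spatial storage while keeping the common/specialized split explicit.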

Most of the actionable information mentioned above, whether common or specialized, is georeferenced, directly or indirectly, and requires particular handling. Because of its typically extensive volume and a required level of detail far beyond the capabilities of conventional SSs, acquiring and updating this information is highly costly. Further details on the actionable information required in realistic missions are presented in Appendix B.

2.3. Addressing Technical Difficulties in Managing Large-Scale Input Datasets within ISs

In most cases where large-scale ISs are developed to organize georeferenced data, the data to be organized (before they are entered into the system) typically display several notable features:

- Serious structural complexity and complicated interconnections among their components [40].

- Substantial diversity in their configuration, encompassing various definitions, contents, forms, and formats [41].

- Highly increased thematic diversity due to numerous additional actors and heterogeneous procedures [42].

- Most importantly, a notable degree of polymorphism, i.e., variability in how data are defined and in their content across different versions, each tailored to the specific purpose for which the data are used [43].
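Polymorphism can be made concrete with two versions of the same entity, each tailored to a different purpose. The following minimal Python sketch assumes two hypothetical, purpose-specific records for one road segment (all field names and values are invented) and classifies their attributes as consistent, conflicting, or present in only one version, the kind of check that would be needed before such versions could be merged in an IS.

```python
# Two hypothetical, purpose-specific versions of the same road segment.
sar_version = {"id": "R-17", "name": "Coastal road", "passable_4x4": True,
               "surface": "gravel"}
hazmat_version = {"id": "R-17", "name": "Coastal Rd.", "max_axle_load_t": 10,
                  "surface": "unpaved"}


def compare_versions(a: dict, b: dict) -> dict[str, set[str]]:
    """Classify the attributes of two versions of one entity."""
    shared = a.keys() & b.keys()  # attributes both versions define
    return {
        "consistent": {k for k in shared if a[k] == b[k]},
        "conflicting": {k for k in shared if a[k] != b[k]},
        "version_specific": set(a.keys() ^ b.keys()),  # defined in one version only
    }


report = compare_versions(sar_version, hazmat_version)
```

Here `name` and `surface` are flagged as conflicting although they differ in wording rather than necessarily in meaning, which illustrates why polymorphic data cannot be reconciled by exact matching alone.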

As a consequence of these traits, most of these systems cannot easily be adapted to different missions/operations (domain adaptation or thematic shift). They are not easily manageable, become exceedingly difficult to maintain, update, and upgrade, and eventually reach a state of unmanageability. Consequently, they are rejected as domain-agnostic solutions. The geographical nature of the data further exacerbates these challenges by significantly amplifying the data’s complexity [44].

2.4. Distinctive Traits of SSs—Difficulties Involved in Their Design

A review of the literature revealed that most available systems supporting complex operational procedures do not serve as optimal models for constructing the necessary data warehouses, for several reasons. Firstly, their design relies on ad hoc heuristic methods and internal structures that are often unsuitable for handling large volumes of multi-thematic data [45]. In addition, the methodologies used for their development and implementation cannot be standardized or applied universally to similar cases and challenges [46]. Moreover, their data cores lack the desired internal interoperability [47]. Another key point is that they encounter issues when their internal procedures are updated and upgraded, tending to become computationally unmanageable or even uncontrollable by the user [48]. Equally important, as these systems grow in size and complexity, their sustainability diminishes significantly, and they become practically impossible to expand or modify in terms of functionality. As previously stated, these systems become overburdened by this growth, and the only viable solution appears to be the complete replacement of the old system with a new one, an approach that is excessively drastic and incurs prohibitive costs. Additionally, when numerous actors are involved in designing and executing complex operational processes, challenges are highly likely to emerge in aligning these procedures and their associated input data. In military operations in particular, CMSSs struggle to receive large volumes of input data, despite international efforts to standardize input data and achieve interoperability among different data sources. Moreover, in many cases, the presence of multiple independent data sources creates significant hurdles, leading to internal interoperability issues or clashing data sub-sets that severely impede or entirely obstruct their use in operational processes [49].
Above all, the data crucial for supporting complex missions, mainly tied to maps, tend to be voluminous and complex, adding layers of complexity to their management. Furthermore, the constantly changing nature of specific data complicates the updating process, potentially causing disruptions in decision-making protocols whose efficient implementation is time dependent [50]. Last, but not least, given the vast range of complex missions, the data needed can be highly diverse and polymorphic. This diversity poses substantial computational and algorithmic challenges in organizing these data within ISs [51].

It should be emphasized that the current organization of the data does not stem from weaknesses in the capabilities of ISs; it is a remnant of the traditional, convenient method of handling georeferenced information entirely through maps. Especially in human-centric missions, however, organizing data by maps is not compatible with the human way of thinking, which organizes data primarily by the subjects to be solved and only secondarily by maps. Therefore, the traditional method of organizing georeferenced information has a clear upper limit of usefulness as the volume and complexity of the information increase.

2.5. Systematic Development of Organized and Sustainable Data Cores—Dissemination of Information

In this sub-section, methodologies and techniques are presented for achieving systematic development of well-organized and sustainable data cores in DSSs, fully leveraging human intelligence in realistic missions (e.g., intelligence rules, common similarities in operational procedures, etc.).

2.5.1. Information Organization of the Incoming Data in DSSs, Based on Mission Purposes (1st Methodology)

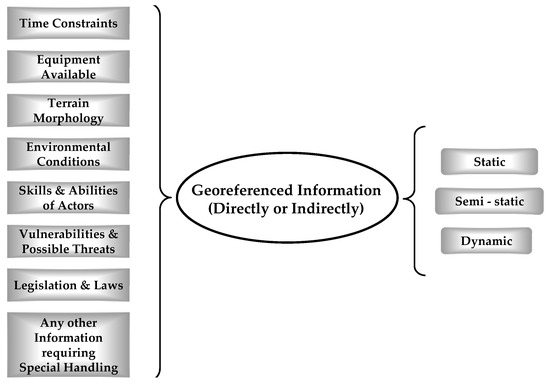

The organization of information in a CMSS should be guided and controlled by the mission purposes for which the information will be used, whether by operational specialists or by computer engineers. Additionally, the dissemination of data to the intended addressee, or eventually to the general public, must not be indiscriminate; it should follow the principle known as the need to know [52]. Likewise, the dissemination of the content of each operational procedure, which always relates to an actor and to the information needed to execute a mission, should be based on the purpose for which the procedure is carried out, which is far more practical and efficient. The purpose-based methodology for organizing information presented below is directly related to the teleology methodology. Figure 2 illustrates the classification of the data required by the operational needs of an actionable actor; it shows that most of these essential data are common across most complex missions. In the DSS proposed in the present study, an actor is characterized as actionable because it can make decisions at crucial points during the mission’s lifecycle.

Figure 2.

Categories of information needed for CMS (based on mission purposes).

In human reasoning, information is generally organized by first prioritizing the issues related to the procedures encountered in complex missions. These procedures are then associated with the information needed to support the mission (i.e., issues and sub-issues). Notably, this information is georeferenced, directly or indirectly, and is considered highly critical for the success of a mission.
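The purpose-based organization and need-to-know dissemination described above can be illustrated with a minimal Python sketch. It assumes that each incoming information item is tagged with the mission issues it serves and that each actionable actor is registered with the issues it handles; the class names, issue labels, and sample items are hypothetical and are not taken from the proposed DSS.

```python
from dataclasses import dataclass, field


@dataclass
class InfoItem:
    """An incoming information item, tagged with the issues it serves."""
    name: str
    issues: set[str]


@dataclass
class Actor:
    """An actionable actor and the mission issues it is responsible for."""
    name: str
    issues: set[str] = field(default_factory=set)


def index_by_issue(items: list[InfoItem]) -> dict[str, list[str]]:
    """Organize information by issue first, then list the items under it."""
    index: dict[str, list[str]] = {}
    for item in items:
        for issue in item.issues:
            index.setdefault(issue, []).append(item.name)
    return {issue: sorted(names) for issue, names in index.items()}


def need_to_know(actor: Actor, index: dict[str, list[str]]) -> list[str]:
    """Return only the items linked to the actor's own issues."""
    visible: set[str] = set()
    for issue in actor.issues:
        visible.update(index.get(issue, []))
    return sorted(visible)


# Hypothetical sample data.
items = [
    InfoItem("route_map", {"navigation"}),
    InfoItem("hospital_capacity", {"medical"}),
    InfoItem("threat_report", {"security", "navigation"}),
]
index = index_by_issue(items)
police = Actor("police", {"security"})
ambulance = Actor("ambulance", {"medical", "navigation"})
```

Here `need_to_know(police, index)` returns only the threat report, while the ambulance also receives the route map and the hospital capacity, because the index is keyed by issue rather than by map.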

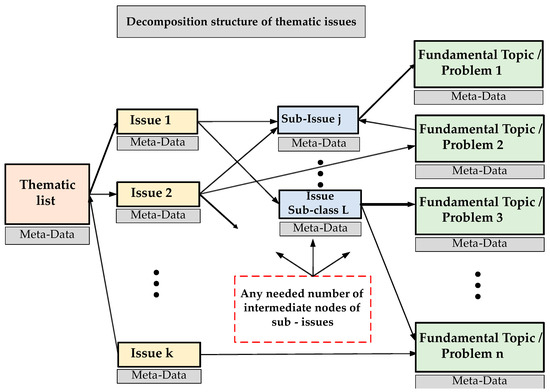

2.5.2. Thematic Decomposition of Complex Operational Procedures (2nd Methodology)

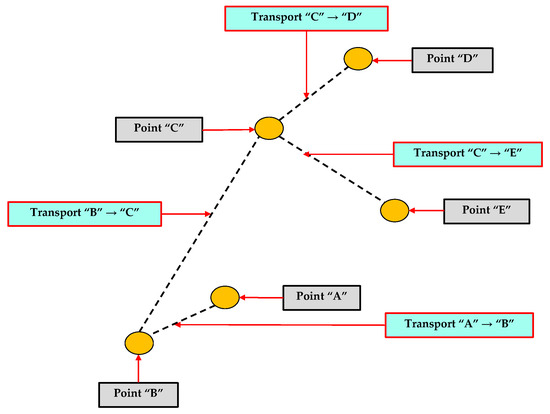

The predominant approach in the design and analysis of operational processes is the segmentation of these procedures into distinct sub-procedures (in operational terminology, an operational procedure may also be called a mission, subject, or issue). This segmentation, known as the thematic decomposition of operational procedures, is based on the thematic content of the mission and on the geographical area in which the procedures will be executed. Thematic decomposition is a systematic analysis in which each mission is divided into primary sub-missions (1st level); each of these is in turn divided into 2nd-level sub-missions, and so on down to the nth level, until no further division is possible. Each sub-mission should be precisely articulated, with its specific requirements detailed. The result is an acyclic graph resembling an nth-level tree, where n ∈ ℕ*. At the apex of this tree lies the primary mission, which branches thematically into a number of sub-missions for each actor; the hierarchical decomposition continues downwards until the initial mission is exhaustively broken down into its most elementary thematic components.

Figure 3 shows an example of the thematic decomposition of a complex mission, applied to a transport mission. The initial transport mission starts at point “A” and is divided into the following sub-missions: “transport from A to B”, “point B”, “transport from B to C”, “point C”, “transport from C to D”, and “transport from C to E”. In this example, none of the sub-missions can be divided further; in practice, this means that each one is operationally manageable, well defined, and has precisely specified requirements.

Figure 3.

Example of thematic decomposition of an initial complex mission (tree-like graph structure).
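The nth-level tree produced by thematic decomposition maps naturally onto a recursive data structure. The Python sketch below, offered only as an illustration, stores each mission or sub-mission as a node, treats childless nodes as the indivisible (fundamental) sub-missions, and rebuilds the Figure 3 example. Because the text does not describe the exact branching in Figure 3, the sketch assumes that all six sub-missions are direct children of the initial mission; the `Mission` class and its methods are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Mission:
    """A node of the thematic-decomposition tree (mission or sub-mission)."""
    name: str
    children: list[Mission] = field(default_factory=list)

    def add(self, *names: str) -> list[Mission]:
        """Decompose this mission into the given sub-missions."""
        new = [Mission(n) for n in names]
        self.children.extend(new)
        return new

    def is_fundamental(self) -> bool:
        """A leaf: no further thematic division has been made."""
        return not self.children

    def fundamental(self) -> list[str]:
        """Collect all leaves (fundamental sub-missions), left to right."""
        if self.is_fundamental():
            return [self.name]
        leaves: list[str] = []
        for child in self.children:
            leaves.extend(child.fundamental())
        return leaves

    def depth(self) -> int:
        """Number of levels of the tree rooted at this mission."""
        return 1 + max((c.depth() for c in self.children), default=0)


# Figure 3 example, assuming all six sub-missions hang directly off the root.
root = Mission("transport mission (point A)")
root.add("transport from A to B", "point B", "transport from B to C",
         "point C", "transport from C to D", "transport from C to E")
```

`root.fundamental()` lists the six sub-missions and `root.depth()` returns 2 (the root plus one level of sub-missions); a deeper decomposition is obtained by calling `add` on any child.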

The degree of thematic decomposition of a complex mission always depends on the executive capability and qualifications of the operational experts in mission design. Based on their experience and on empirical criteria such as available time, level of training, and readiness of the participating actionable actors, they decide how to break down the mission into smaller segments in a flexible and convenient way. This step is considered necessary during the mission-planning phase for achieving efficient mission analysis. The thematic decomposition of information based on mission purposes is directly related to the teleology methodology, the basic elements of which have been presented in [26].

2.5.3. Input Data Organization within IS Solution for IT Engineering Challenges

Any mission, irrespective of its magnitude, comprises sub-missions characterized by complex operational procedures, in which numerous and diverse operators and actors take part at various command and control tiers. Each of these sub-missions requires operational data that, for operational officers, are notably voluminous, complex, and diverse. As a result, managing these data requires specialized approaches, even within individual sub-missions. A major challenge for IT engineers is the disorderly structure of data warehouses and ISs, which leads to failures under heavy load and to the inability to organize and handle large volumes of data efficiently. A potential remedy could involve the following algorithmic steps:

- Step 1: Enter thematic information in the system and classify it according to mission objectives and purposes.

- Step 2: Categorize the information by theme, time, and geographic relevance, in line with the operational requirements, and enter the mandatory expert data and input information (with thematic content) into the system; the result is referred to as the thematic directory list of information.

- Step 3: Seek additional operational information essential to support the mission’s primary goals promptly and accurately.

- Step 4: If required information is inaccessible, explore alternative information sources that could indirectly aid in assessing the current situation and support mission purposes.

- Step 5: Upon identifying available information enabling rational conclusions, source this information from appropriate channels.

- Step 6: In cases where no relevant information is available, revisit the directory list of information and adjust the mission’s objectives to locate pertinent existing information facilitating an equivalent assessment.

- Step 7: In cases where finding equivalent information proves impossible, iterate through the process of adjusting the mission’s objectives to discover preexisting relevant information leading to an equivalent assessment and support mission purposes.

- Step 8: If the effort of adjusting fails to yield the desired equivalent results, revisit the list of information (directory) and further adapt the mission’s objectives to retrieve information with satisfactory assessment for supporting the mission purposes.

- Step 9: Repeat this algorithmic procedure iteratively until sufficient assessments for the mission are achieved.

The approach above is a comprehensive process that emphasizes adaptability in aligning mission objectives with the available information, providing a systematic way of handling information in a mission. Further details of this algorithmic process for data organization in an IS are given as C#-based pseudocode in Appendix C.

Appendix C also presents a detailed analysis of the algorithmic complexity of this pseudocode. The time complexity of the algorithm is estimated to be O(n log n) and the space complexity O(n), assuming the data structures selected as optimal for each step of the algorithm.
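To make Steps 1 to 9 more concrete, the following Python sketch outlines one possible implementation. It is not the C#-based pseudocode of Appendix C. It assumes that each information item carries a theme, a time slot, and an area, that the thematic directory is keyed by these three attributes, and that the mission designer supplies two hypothetical inputs: a table of alternative (indirect) keys for Step 4 and an `adjust` function that relaxes an objective for Steps 6 to 8. In this sketch, sorting the items while building the directory dominates the cost, giving O(n log n) time, in line with the estimate above.

```python
from collections import defaultdict

Key = tuple[str, str, str]  # (theme, time slot, area)


def build_directory(items: list[dict]) -> dict[Key, list[str]]:
    """Steps 1-2: classify items and index them by (theme, time, area)."""
    directory: dict[Key, list[str]] = defaultdict(list)
    for item in sorted(items, key=lambda x: (x["theme"], x["time"], x["area"])):
        directory[(item["theme"], item["time"], item["area"])].append(item["name"])
    return directory


def find_information(directory, need: Key, alternatives: dict[Key, list[Key]]):
    """Steps 3-5: try the needed key, then any alternative (indirect) keys."""
    for key in [need, *alternatives.get(need, [])]:
        if directory.get(key):
            return directory[key]
    return None


def organize(items, needs, alternatives, adjust, max_rounds=5):
    """Steps 6-9: if a need cannot be met, adjust the objective and retry."""
    directory = build_directory(items)
    result = {}
    for need in needs:
        result[need] = None  # stays None if no equivalent information is found
        current = need
        for _ in range(max_rounds):
            found = find_information(directory, current, alternatives)
            if found is not None:
                result[need] = (current, found)
                break
            current = adjust(current)
            if current is None:  # the objective cannot be relaxed any further
                break
    return result


# Hypothetical example: widen the area to "region" when local data are missing.
items = [
    {"name": "traffic_feed", "theme": "route", "time": "T0", "area": "1-3"},
    {"name": "regional_health_db", "theme": "medical", "time": "T0", "area": "region"},
]
needs = [("route", "T0", "1-3"), ("medical", "T0", "2")]
alternatives = {("route", "T0", "1-3"): [("route_news", "T0", "1-3")]}


def adjust(key: Key):
    """Hypothetical relaxation rule: broaden the area, once."""
    return (key[0], key[1], "region") if key[2] != "region" else None


result = organize(items, needs, alternatives, adjust)
```

Here the route need is satisfied directly, whereas the medical need is met only after the objective is relaxed from area “2” to the wider region, mirroring the adjust-and-retry logic of Steps 6 to 8.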

2.5.4. Mission Design Algorithm for Identifying Key Intermediate Objectives for CMSS (3rd Methodology)

When a large number of actionable actors are involved in designing and executing operational procedures, from the simplest to the most complex and extensive ones, problems in harmonizing these procedures are likely to arise. This is attributed to two main reasons:

- The purpose for which these procedures are performed varies according to each involved actionable actor.

- The definition, form, or content of each operational procedure differs among actionable actors.

Past efforts to establish interoperability among the relevant actionable actors, a common operational terminology, and harmonization of their operational procedures have not yet yielded the desired results. The harmonization of all relevant operational procedures (including the corresponding data they require) executed by the actionable actors involved in a complex mission can be attained by identifying essential intermediate objectives, which are crucial for achieving the final goal of the mission. To this end, a mission-planning algorithm has been created in which the mission undergoes thematic decomposition: based on the designer’s operational criteria, the mission is divided into smaller segments termed fundamental mission segments. Operational procedures take place within these segments, each of which contains intermediate objectives. Completing the intermediate objectives of a segment completes that segment, and completing all segments completes the entire mission. The mission segments are selected by an operational mission designer, based on experience, on the time needed to complete the mission, and on a logical thematic analysis of the mission. The algorithm’s steps are as follows:

- Step 1: Creation of a list of fundamental mission segments. In the mission design phase, each mission is divided into fundamental mission segments of appropriately selected lengths to ensure functional and operational manageability. This technique, known in engineering terminology as “divide and conquer”, allows for effective thematic sub-division of the mission [53]. Each sub-division must account for any temporal or spatial constraints that might influence the lengths of these fundamental segments.

- Step 2: Creation of a list of points of interest. Points of Interest (POIs) are defined within each fundamental mission segment for operational planning reasons, because their completion leads to the success of the mission. POIs are preplanned and considered high priority, because they require specialized handling and specific operational scrutiny during mission design and execution. POIs are selected at the discretion of the plan’s officials and are primarily map-related; however, they may also involve the concept of time or carry a logical interpretation and usefulness. These POIs require the investigation of operational procedures, e.g., communication, mission criteria, conditions, situational awareness, log files, etc. The number of POIs depends on the mission designer’s ability to decide with operational accuracy in order to achieve successful mission completion. In a sense, POIs can also be regarded as transition nodes: before reaching them and upon reaching them, the appropriate operational actions should be executed effectively throughout the mission. These actions relate to the mission’s success status, namely the total completion of the mission, the possibility of partial failure, and the potential abortion of the mission if circumstances demand it.

- Step 3: Creation of a list of fundamental mission segments with their POIs. This list is created from the outcomes of Steps 1 and 2.

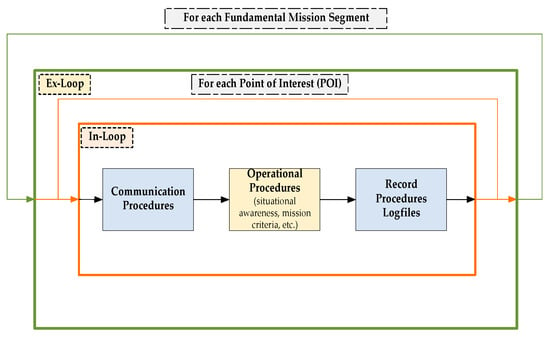

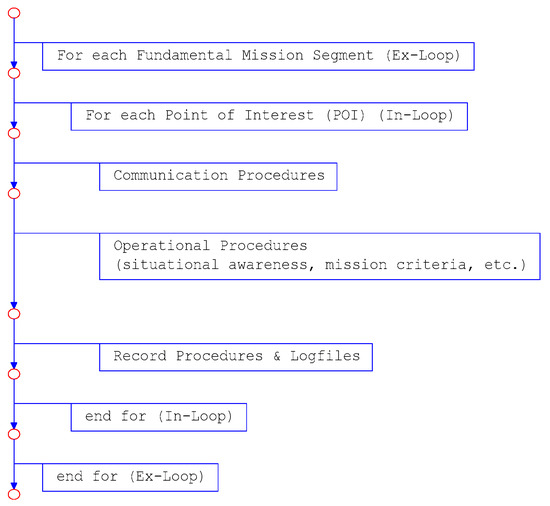

- Step 4: Creation of two iterative procedures, defined as external and internal loops. The use of fundamental mission segments and POIs leads to a novel algorithm characterized by two iterative procedures, one of which (referred to as internal, “In”) is nested within the other (referred to as external, “Ex”):

- The external iterative procedure (Ex-loop) involves transitioning from the beginning of each fundamental mission segment to the beginning of the next.

- The internal iterative procedure (In-loop), nested within the external iterative procedure, involves transitioning from one POI to another within each fundamental mission segment.

Owing to their high generality, these iterative procedures can describe any objective, intermediate or not, which strongly suggests that the algorithmic process itself is general.

- Step 5: Execution of the mission design algorithm methodology inside the CMSS. The mission design algorithm, including the transition from the external to the internal iterative procedure, is documented in C#-based pseudocode (see Appendix D for further details). Appendix D also presents a detailed analysis of the algorithmic complexity of this pseudocode: the time complexity is estimated to be O(n²) and the space complexity O(n²), assuming the data structures selected as optimal for each step of the algorithm.
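The nested Ex-loop/In-loop structure of Steps 1 to 4 can be sketched in Python as follows. This is not the C#-based pseudocode of Appendix D: the segment and POI names are hypothetical, and the operational actions executed at each POI are abstracted into a hypothetical `check` callback that reports one of the three success states named in Step 2 (completion, partial failure, or abortion). The loops themselves visit each POI once; the per-step operations analyzed in Appendix D are not modeled here.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Segment:
    """A fundamental mission segment with its ordered POIs (Steps 1-3)."""
    name: str
    pois: list[str] = field(default_factory=list)


def run_mission(segments: list[Segment],
                check: Callable[[str, str], str]) -> tuple[str, list]:
    """Step 4: external loop over segments, internal loop over POIs.

    `check(segment, poi)` stands for the operational actions at a POI and
    returns "ok", "partial", or "abort".
    """
    log = []
    partial = False
    for seg in segments:            # Ex-loop: from segment to segment
        for poi in seg.pois:        # In-loop: from POI to POI
            status = check(seg.name, poi)
            log.append((seg.name, poi, status))
            if status == "abort":
                return "aborted", log
            if status == "partial":
                partial = True
    return ("partially failed" if partial else "completed"), log


# Hypothetical two-segment mission.
segments = [
    Segment("A->B", ["A", "checkpoint 1", "B"]),
    Segment("B->C", ["B", "C"]),
]
```

With a `check` that always returns "ok", `run_mission(segments, check)` reports "completed" after visiting five POIs; an "abort" at any POI stops both loops immediately.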

Figure 4 visualizes the mission design algorithm.

Figure 4.

Representation of mission design algorithm.

Figure 5 presents a diagrammatic flow chart of the mission design algorithm, showing the external loop (“Ex-loop”) and the internal loop (“In-loop”). The flow chart of the generalized algorithm was generated with code written in MATLAB (MATLAB, version R2022b; MathWorks Inc.: Natick, MA, USA, 2022).

Figure 5.

Diagrammatic flow chart of mission design algorithm.

The significant similarities among operational procedures (common or not), regardless of the type and nature of a mission, have led Subject Matter Experts (SMEs) to design and execute complex missions by standardizing operational procedures, or even by creating templates for them, across a broad range of complex missions. This standardization defines the type of each operational procedure, including the data it needs, for achieving the objectives of complex missions. In this way, a high degree of harmonization is achieved; this is feasible because a generalized core of operational procedures (together with the data they need) can be created and applied to different complex missions.

A detailed presentation of this generalized diagrammatic algorithm, adapted for designing and executing transport missions, is in its final stage of completion by one of the authors (George Tsavdaridis) and is planned for publication in the near future.

2.5.5. Use of the Teleological Architecture for Organizing Systems (4th Methodology)

The teleological structure (also called the system content map), whose name derives from the metaphorical meaning of the ancient Greek word “telos”, i.e., purpose, is a specialized metadata architecture. It stems from the human way of thinking described earlier and correlates each subject or issue (of a mission, a procedure, etc.) with the respective sub-sets of data and the computational methods that must be used to solve that specific problem or issue. The content map is a precise representation of the sub-sets of data and metadata, along with the computational methods and programs, associated with each thematic issue or sub-issue within a system. In effect, the themes, issues, problems, concerns, or challenges that require information stored within the IS serve as a conceptual index for the data and information it holds. Hence, the content map of the system can be defined as a teleological structure, since it describes the purpose behind the existence of each data cluster and computational procedure within the system. The teleological structure resides in a distinct database and serves as an ongoing resource for organizing and accessing the core data and programs of the system. The teleology methodology has been outlined in [26,54,55]; a brief overview is provided below.

For improved readability, the metadata structure of teleology is presented in two parts (the subsections “Thematic (Mission) Decomposition of Teleological Architecture” and “Data/Indicators Cores and Computational Methods of Teleological Architecture”). The subsection “Exemplary Architecture of a System with Teleological Organization” then presents an example system structure with teleological organization.

Thematic (Mission) Decomposition of Teleological Architecture

The first part of the content map (or teleological structure) pertains to the thematic decomposition of the system. The content map of the system starts from the thematic list of the system, as Figure 6 indicates.

Figure 6.

Decomposition structure of thematic issues.

The teleological structure originates from a distinct cognitive process and aligns the various subsets of data and metadata within a system with thematic content (mission, sub-mission, issue, sub-issue, etc.). Essentially, the system’s missions and sub-missions serve as a conceptual guide for organizing the associated data within an IS. A thematic list is thus created through a comprehensive decomposition of all the subjects addressed in the mission. This list forms a tree whose leaves represent indivisible entities, or fundamental subjects, each of which leads directly to a list of corresponding indicators. Further details are available in [26].

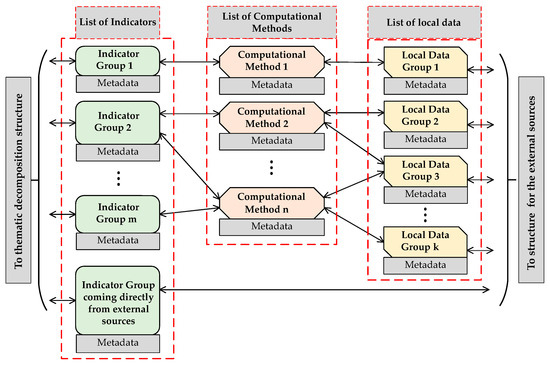

Data/Indicators Cores and Computational Methods of Teleological Architecture

In the second part of the teleological structure, a proper metadata structure describes the input data/variables and the data sources. This structure includes the descriptions of the indicators (i.e., the specific information that is needed by decision makers), as well as descriptions of the methods for computing these indicators from the input data, as shown in Figure 7.

Figure 7.

Data cores/indicators and computational methods.

In any operational procedure, effectively managing internal complexities and diverse data necessitates a meticulous thematic breakdown within the system. It is imperative to achieve clear and precise indicators that accurately capture the essence of the data. This involves ensuring that every sub-set of desired indicators corresponds directly to a set of computational methods represented by the following equation:

i = f(d)    (1)

In Equation (1), i stands for the specific indicator(s) arranged in a vector, f denotes the method utilized for computing these indicators, and d represents the input data vector essential for the computation of these specific indicators.

Adherence to this comprehensive thematic list makes the system inherently updatable and upgradeable and, hence, generally evolvable and sustainable. In the final form of the content map (teleological structure) of the system, each sub-set of input data (variables) is correlated with the data sources from which it was obtained. More extensive descriptions, omitted here for simplicity, can be found in [25].
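As an illustration of how the content map links each fundamental subject to indicators, computational methods, input data, and data sources, the Python sketch below evaluates Equation (1), i = f(d), for every indicator attached to a subject. The subject, indicator names, formulas, and sources are hypothetical; in the proposed system this information would reside in the teleological meta-database rather than in Python dictionaries.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Indicator:
    """One content-map entry: indicator name, method f, and its inputs d."""
    name: str
    method: Callable[..., float]
    inputs: tuple[str, ...]


# Hypothetical content map: fundamental subject -> indicators.
CONTENT_MAP = {
    "route to hospital": [
        Indicator("eta_min", lambda dist_km, speed_kmh: 60 * dist_km / speed_kmh,
                  ("dist_km", "speed_kmh")),
        Indicator("fuel_l", lambda dist_km, l_per_km: dist_km * l_per_km,
                  ("dist_km", "l_per_km")),
    ],
}

# Hypothetical register of the source of each input variable.
SOURCES = {
    "dist_km": "road network DB",
    "speed_kmh": "traffic feed",
    "l_per_km": "vehicle fleet DB",
}


def compute(subject: str, data: dict[str, float]) -> dict[str, float]:
    """Evaluate i = f(d) for every indicator attached to a subject."""
    return {ind.name: ind.method(*(data[v] for v in ind.inputs))
            for ind in CONTENT_MAP[subject]}


def required_sources(subject: str) -> list[str]:
    """Trace a subject back to the data sources its indicators depend on."""
    return sorted({SOURCES[v] for ind in CONTENT_MAP[subject] for v in ind.inputs})
```

For example, `compute("route to hospital", {"dist_km": 12, "speed_kmh": 60, "l_per_km": 0.5})` yields an indicator vector with `eta_min` 12.0 and `fuel_l` 6.0. Because each indicator lists its own inputs, adding an indicator or replacing a source touches only the corresponding entries, which illustrates the kind of updatability the text attributes to the teleological structure.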

For a detailed mathematical foundation of the methodology, along with a comprehensive set of mathematical formulas describing an explanatory implementation, the reader is referred to Appendix E.

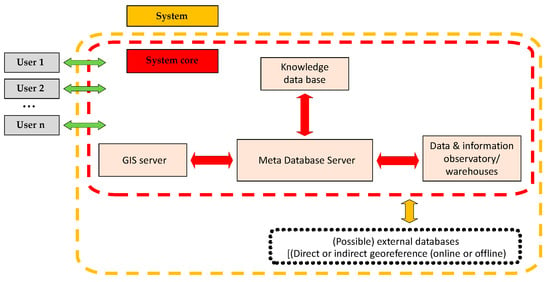

Exemplary Architecture of a System with Teleological Organization

An exemplary implementation of such a system can include several components, which are depicted in Figure 8. Further details about the teleological architecture can be found in [26].

Figure 8.

Exemplary system architecture with teleological organization.

Methodology for Bringing Order within Systems Based on Teleological Architecture

In this sub-section, based on the teleological structure described above, a precise, novel methodology is presented for creating and maintaining highly organized datasets that support complex missions and operational procedures. The methodology is described as the following sequence of steps:

- Step 1: Identify, at the highest possible level, the important missions or complex operational procedures that a support system can substantially assist.

- Step 2: Assign priorities to the identified missions or complex operational procedures. If necessary, restrict the selection of missions according to technical, financial, or other relevant criteria, and select the missions that can be supported. These first two steps are common to every level of command (e.g., national, strategic, and operational).

- Step 3: For each remaining mission or set of complex operational procedures, start a recursive decomposition into simpler sub-missions (or sub-procedures). Gradually, a tree-like structure, i.e., an acyclic graph, is created, with as many levels as the decomposition requires. Every sub-mission is well defined, detailed, and simpler than its parent in the level above. Stop the decomposition at sub-missions that (i) can no longer be divided into simpler ones and (ii) have an unambiguous description of their input (data), computational methodology (methods or programs), and output. We call these sub-missions fundamental; in the tree-like structure, each fundamental sub-mission appears as a leaf. Their output consists of indicators, i.e., the data that permit decision making.

- Step 4: Each fundamental sub-mission requires specific input data for the SS, and these input data are completely determined by the necessary indicators. Practice has shown that the following must be completely defined:

- The exact form and format, as well as the necessary characteristics, of these data, according to their intended use.

- The sources from which these data have to be acquired.

- Any necessary procedures for cleansing, filtering, transforming, or merging the acquired input data, so that the needed data can be computed. The difficulty of this sub-step can vary widely.

- Exact procedures for regular and timely updating of the data.

- Step 5: If data gaps remain, then:

- First, examine if there is a way to directly compute the missing data from other available data.

- Then, examine if there are any other available data from which it is easy to make good-quality estimations for covering the gaps of missing data.

- If good-quality estimations cannot be found, look for sub-missions similar to the fundamental ones (as defined in step 3), which may involve different indicators, computed from data, for which good-quality estimations can be obtained.

- Otherwise, try to collect new input data.

- Step 6: If data gaps still remain after Steps 4 and 5, back-track further in the tree-like structure of the decomposed mission to find a sub-mission that can be modified so that computable indicators can eventually be found, without compromising the actual aims of the mission. In a considerable number of cases, this back-tracking may involve going several levels up and actually redesigning part of the mission until all data needs are satisfied.

- Step 7: From the list of the fundamental sub-missions of the initial mission, create:

- a total indicators list,

- a total list of the computational methods (program list),

- a total data list (the input to the computational methods that produce the indicators), and

- a total list of updating procedures, in order to regularly and timely update the data cores of the system.

- Step 8: Organize all the information produced so far in a composite, advanced database governed by a meta-database of critical importance, called the teleological structure. All descriptions generated by the preceding steps must be arranged systematically within this teleological meta-database, while all remaining data are stored in the composite database.
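Steps 5 to 7 can be sketched in Python as three small functions: a cascade that tries to cover a missing data item from the available data (direct computation first, then estimation), a back-tracking helper that walks up the decomposition tree to the nearest sub-mission the designers have marked as modifiable, and an aggregation that builds the total lists of Step 7. The rule tables (`derivations`, `estimators`), the modifiability set, and all names are hypothetical; the sketch omits the search for similar sub-missions and the collection of new data, which require human judgment.

```python
def cover(item, available, derivations, estimators):
    """Step 5: try to cover a missing data item from the available data."""
    if item in available:
        return "available"
    if item in derivations and set(derivations[item]) <= available:
        return "computed from other data"
    if item in estimators and set(estimators[item]) <= available:
        return "estimated"
    return None  # gap remains: similar sub-missions or new data collection


def backtrack(path, modifiable):
    """Step 6: from a leaf (path = root ... leaf), find the nearest ancestor
    that may be redesigned; None means back-tracking cannot close the gap."""
    for node in reversed(path[:-1]):
        if node in modifiable:
            return node
    return None


def total_lists(leaves):
    """Step 7: merge the descriptions of all fundamental sub-missions."""
    totals = {"indicators": set(), "methods": set(), "data": set(), "updates": set()}
    for leaf in leaves:
        for key, values in totals.items():
            values.update(leaf.get(key, ()))
    return {key: sorted(values) for key, values in totals.items()}


# Hypothetical example data.
available = {"distance", "speed"}
derivations = {"eta": ["distance", "speed"]}
estimators = {"traffic_level": ["speed"]}
leaves = [
    {"indicators": ["eta"], "methods": ["eta_model"],
     "data": ["distance", "speed"], "updates": ["traffic feed, every 5 min"]},
    {"indicators": ["bed_availability"], "methods": ["bed_count"],
     "data": ["hospital_status"], "updates": ["hospital API, hourly"]},
]
```

In this example, "eta" is computed from available data, "traffic_level" is estimated, and an item with no rule returns `None`, which is the point at which Step 6 back-tracking would begin.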

In the previous procedure, any problems of terminology or different names used by different actors for the same sub-missions, indicators, or data have to be tackled. In case a thematic shift of the dataset is needed, one has to start by augmenting the teleological structure, as will be explained later on. Data cores, organized according to the proposed methodology, can easily and seamlessly be distributed geographically if the need arises.
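One simple way to tackle the terminology problem mentioned above is an alias register that maps each actor’s local term to a single canonical term recorded in the teleological structure. The Python sketch below assumes such a register; the actor names and terms are hypothetical.

```python
# Hypothetical alias register: (actor, local term) -> canonical term.
ALIASES = {
    ("police", "escort route"): "route",
    ("ambulance", "transfer path"): "route",
    ("ambulance", "ETA"): "estimated arrival time",
}


def canonical(actor: str, term: str) -> str:
    """Map an actor's local term to the canonical term, if one is registered."""
    return ALIASES.get((actor, term), term)


def merge_requests(requests: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group (actor, term) requests under their canonical term."""
    merged: dict[str, list[str]] = {}
    for actor, term in requests:
        merged.setdefault(canonical(actor, term), []).append(actor)
    return merged
```

With this register, a police request for the “escort route” and an ambulance request for the “transfer path” resolve to the same canonical “route” entry, so both actors are served from one data core.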

Use of Organization of Geodata Called “Themes over Maps”, Based on Teleological Structure (5th Methodology)

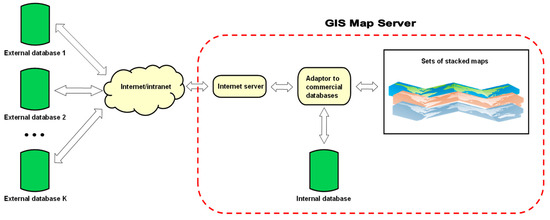

The most advanced georeferenced data architectures today resemble the abstraction scheme in Figure 9. This scheme starts from one or more sets of stacked map layers, to parts of which additional information is linked. This additional information can either be kept in an internal database or be collected in real time from external databases, whose administrators may be dispersed across the entire World Wide Web. We refer to this architecture as “maps over themes”. Although powerful, this architecture has an upper limit of usability as the volume and complexity of georeferenced information increase.

Figure 9.

“Maps over themes” architecture.

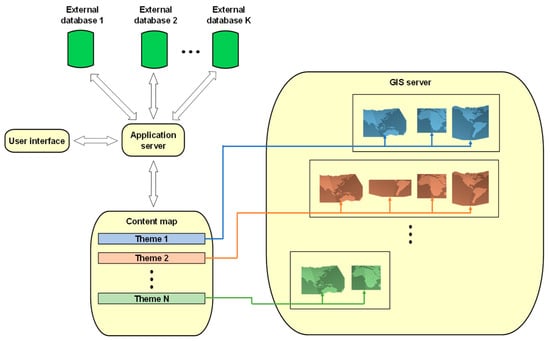

An alternative architecture, called “themes over maps”, was first presented in a number of EU-funded transportation projects, for the needs of the ETIS Agent project of the Growth Program. In this architecture, the system performs on-demand, on-the-fly joining of information retrieved from external or internal databases. The actionable actor/user selects and requests the information needed (user-selection guided), and this information is linked to the content map (teleological structure). The system then automatically, without any user intervention, produces maps containing the desired information. The “themes over maps” methodology substantially enhances the manipulation capabilities of geospatial data and integrates harmoniously with the teleological structure, or content map, of the system. The “themes over maps” architecture resembles the abstraction scheme shown in Figure 10.

Figure 10.

“Themes over maps” architecture.
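The difference between the two architectures can be illustrated with a minimal Python sketch of the “themes over maps” flow: the actor selects themes, the content map resolves them to datasets, the datasets are fetched on demand, and map layers are assembled on the fly. The themes, dataset names, coordinates, and the `fetch` stand-in for internal or external database queries are all hypothetical.

```python
# Hypothetical content map: theme -> datasets that serve it.
CONTENT_MAP = {
    "medical emergency": ["hospitals", "ambulance_positions"],
    "security": ["police_stations"],
}

# Hypothetical datasets: feature id -> (lon, lat, attributes).
DATASETS = {
    "hospitals": {"H1": (23.72, 37.98, {"beds": 12})},
    "ambulance_positions": {"A7": (23.70, 37.97, {"status": "en route"})},
    "police_stations": {"P3": (23.73, 37.99, {"units": 4})},
}


def fetch(dataset: str) -> dict:
    """Stand-in for an on-demand query to an internal or external database."""
    return DATASETS[dataset]


def themed_map(themes: list[str]) -> dict[str, list[dict]]:
    """Build map layers on the fly from the themes the actor selects."""
    layers: dict[str, list[dict]] = {}
    for theme in themes:
        for ds in CONTENT_MAP.get(theme, []):
            layers[ds] = [
                {"id": fid, "lon": lon, "lat": lat, **attrs}
                for fid, (lon, lat, attrs) in fetch(ds).items()
            ]
    return layers
```

Selecting only “medical emergency” yields the hospital and ambulance layers and nothing else, so the map is derived from the theme rather than the theme being attached to a pre-existing stack of map layers.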

The “themes over maps” architecture has been adapted to systems for the design, monitoring, control, and execution of operational procedures; additional details can be found in [27,28].

3. Results

As previously mentioned, traditional DSSs used in the design, monitoring, control, and execution of complex missions have functional limitations, e.g., in the type of mission they support and the volume of required input information. In particular, CMS systems often struggle to expand their functional capacity, because any further development of their conventional data cores requires updates, upgrades, and, ultimately, enhanced control mechanisms. Consequently, the sustainability of these data cores becomes a significant concern.

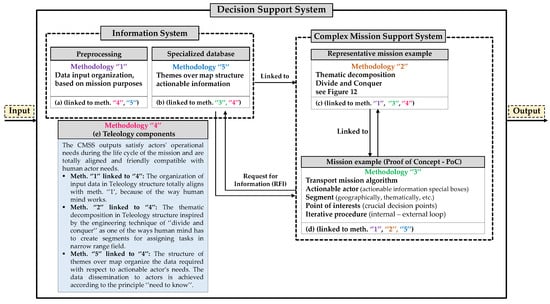

The techniques described in this work provide specific methodologies to address the issues of high volume, serious internal complexity, increased diversity, and interoperability in CMS systems. Combined, these methodologies and techniques effectively reinforce the functionality of the proposed DSS. As shown in Figure 11, the proposed DSS architecture includes an IS with two sub-systems:

Figure 11.

Overview of the proposed DS system architecture.

- The first sub-system (noted as “a” in Figure 11) concerns the preprocessing stage, which organizes the input data before they enter the system that will support a mission. It is related to methodology “1”, because it remedies the disordered data input into the system.

- The second sub-system (noted as “b” in Figure 11) concerns the stage after data preprocessing. In this phase, the preprocessed dataset undergoes more specialized organization according to the needs of the actionable actors. This phase is related to methodology “5” (“themes over maps”).

The other component, running in parallel to the IS, is the CMS system, which implements methodology “2” (noted as “c” in Figure 11), involving thematic mission decomposition through the divide and conquer technique, and methodology “3” (noted as “d” in Figure 11), encompassing the mission transport algorithm, actionable actors, segments, Points of Interest (POIs), and other elements. Data are disseminated to actors according to the “need to know” principle, under which each actionable actor requests, handles, and analyzes only the information that is strictly necessary for its operational needs, as the actor judges them, to avoid information overload. Methodologies “1”, “2”, “3”, and “5” fit optimally with methodology “4” (noted as “e” in Figure 11), the teleological structure, for supporting complex missions.

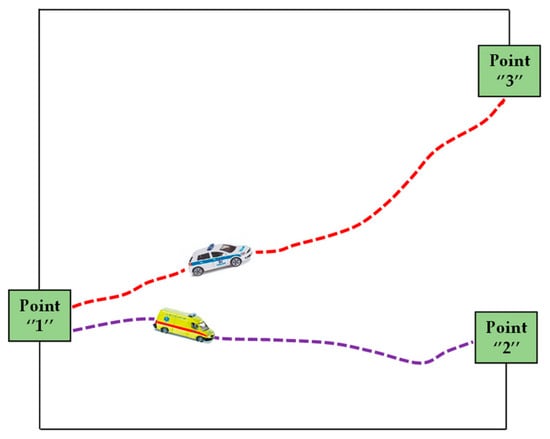

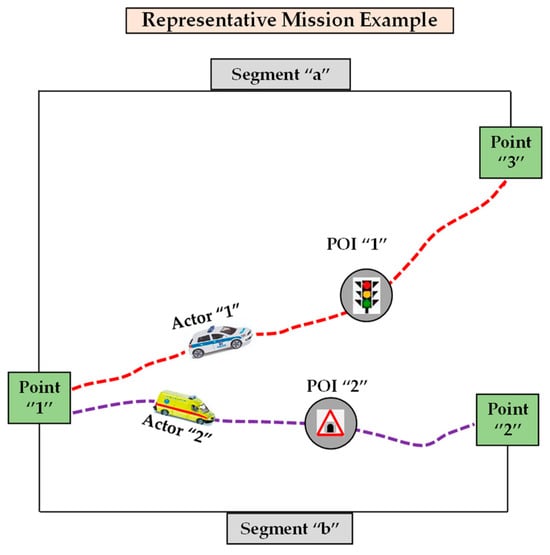

3.1. Representative Mission Example: Proof of Concept (PoC) in CMSS

Let us consider a representative example of a transport mission (see Figure 12). The mission starts at point “1”, and it branches into two sub-missions. The first, concerning the transportation of a briefcase containing classified documents via a police vehicle (actor “1”), starts from point “1” and proceeds to point “3”. The second, concerning the transportation of a patient by an ambulance to a hospital (actor “2”), starts from point “1” and terminates at point “2”. The two sub-missions are “fundamental sub-missions”. This means that each sub-mission is well-defined and there is no need for them to be further divided because their operational requirements are clear.

Figure 12. Representative example of a transport mission in CMSS.

Applying the five methodologies described in Section 2.5 for the systematic development of organized and sustainable data cores yields the PoC analysis results presented in the following sub-sections.

3.2. Data Organization in Representative Mission Example (PoC in CMSS): 1st Meth

The information available for the representative mission example was organized systematically, in line with the mission objectives, before entering the system. Methodology “1” combines with methodology “4” (teleological structure) to support complex missions. Applying the algorithmic process for classification (as described in Section 2.5.3), we obtain:

- Mission overview: objective “1”—transport of classified documents from point “1” to “3” with a police vehicle (actor “1”) and objective “2”—transport of a patient from point “1” to “2” with an ambulance (actor “2”).

- Step-by-step application of the algorithmic process for classification:

- Step 1: Enter thematic information into the system, i.e., classify objective “1” (classified document transportation) under the theme “security for documents” and objective “2” (patient transportation) under the theme “medical emergency for the patient”.

- Step 2: Categorize the information as it is input into the system by creating thematic directories. For the present representative mission, these could include security and medical directories, the immediate transport requirement, potential delays, geographic points “1”, “2”, and “3”, and route and distance assessments.

- Step 3: Seek additional operational information, e.g., traffic conditions, route security, medical facilities en route, and the availability of resources such as police and medical personnel.

- Step 4: Explore alternative information sources, e.g., if route information is lacking, check local news, traffic apps, and community alerts; if direct contact with a hospital is impossible, check regional health databases.

- Step 5: Source information from appropriate channels, e.g., use police networks for security information, and hospital records and emergency services for medical information.

- Step 6: Adjust the mission objectives if necessary, e.g., if certain routes are blocked or unsafe, consider alternative paths; if the nearest hospital is unavailable, find the next closest one.

- Step 7 (if step 6 does not work): Iterate to find equivalent information, i.e., reevaluate routes and available facilities to find new information based on real-time input data.

- Step 8 (if step 7 does not work): Adapt the mission’s objectives further, i.e., if obstacles persist, adapt the objectives according to urgency and time constraints. Prioritize one of the two sub-missions based on these criteria and on additional ones such as departure and arrival times and resource allocation.

- Step 9: Repeat iteratively (i.e., return to step 1): reassess the mission objectives, ensuring that each adjustment brings the mission closer to an achievable outcome, while correlating the required information with the adjusted objectives.
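The nine steps can be read as a loop over the mission objectives. The following Python sketch is one possible rendering under stated assumptions, not the paper's implementation: objectives arrive already tagged with their theme (Step 1), each theme has an ordered list of information sources with the appropriate channel first and alternatives after it (Steps 3 to 5), and a caller-supplied `adjust` function adapts or drops an objective whose information cannot be found (Steps 6 to 8). The source functions `police_net` and `hospital_records` and the alternative hospital at a hypothetical point “4” are invented for illustration.

```python
from typing import Callable, Dict, List, Optional, Tuple

Objective = Tuple[str, str]                   # (description, theme)
Source = Callable[[Objective], Optional[str]]  # returns info, or None if unavailable


def gather(obj: Objective, sources: List[Source]) -> Optional[str]:
    """Steps 3-5: ask the appropriate channel first, then alternative sources."""
    for source in sources:
        info = source(obj)
        if info is not None:
            return info
    return None


def classify_mission(objectives: List[Objective],
                     sources: Dict[str, List[Source]],
                     adjust: Callable[[Objective], Optional[Objective]],
                     max_rounds: int = 3) -> Dict[str, List[Tuple[str, str]]]:
    directories: Dict[str, List[Tuple[str, str]]] = {}
    pending = list(objectives)
    for _ in range(max_rounds):              # Step 9: repeat iteratively
        unresolved = []
        for desc, theme in pending:          # Step 1: objective tagged with its theme
            info = gather((desc, theme), sources.get(theme, []))
            if info is not None:
                # Step 2: file the information in its thematic directory
                directories.setdefault(theme, []).append((desc, info))
            else:
                adapted = adjust((desc, theme))  # Steps 6-8: adapt or drop
                if adapted is not None:
                    unresolved.append(adapted)   # Step 7: retry next round
        if not unresolved:
            break
        pending = unresolved
    return directories


def police_net(obj: Objective) -> Optional[str]:
    return "route 1->3 secured"  # hypothetical security channel


def hospital_records(obj: Objective) -> Optional[str]:
    # Hypothetical: the hospital at point 2 is full; others respond.
    return None if obj[0].endswith("point 2") else "bed available"


def next_hospital(obj: Objective) -> Optional[Objective]:
    # Step 6: if the nearest hospital is unavailable, target the next closest one.
    desc, theme = obj
    if desc.endswith("point 2"):
        return (desc.replace("point 2", "point 4"), theme)
    return None


sources = {
    "security for documents": [police_net],
    "medical emergency for the patient": [hospital_records],
}
objectives = [
    ("documents from point 1 to point 3", "security for documents"),
    ("patient from point 1 to point 2", "medical emergency for the patient"),
]
result = classify_mission(objectives, sources, next_hospital)
print(result)
```

Appending further callables to a theme's list (for example, a regional health database after `hospital_records`) corresponds to Step 4's alternative sources, and `max_rounds` bounds the repetition of Step 9 so the loop terminates even when no objective can be satisfied.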

The data organization technique, as applied in the representative mission example, offers distinct benefits both for the actionable actors involved in the mission and for the system’s design engineers. For the actionable actors, the benefits are as follows. Firstly, decision making is enhanced, since actors receive well-categorized, relevant information that helps them make informed decisions quickly, which is crucial in time-sensitive missions. Secondly, resource allocation becomes more efficient: clear thematic categories (e.g., security and medical) allow specialists to allocate resources (personnel, vehicles, etc.) according to the mission’s specific needs. Thirdly, the iterative process of updating and reassessing information makes the mission adaptable, enabling specialists to respond efficiently to changing circumstances, such as route alterations due to traffic or emergencies. Furthermore, the continuous integration of input data under relevant categories (e.g., traffic conditions, route security) increases operational awareness, giving actors a comprehensive understanding of the mission’s current status. In addition, because potential obstacles are considered and alternative information sources are sought, actors can foresee and mitigate risks, enhancing the mission’s safety. Moreover, communication and coordination are optimized, since the structured approach streamlines communication between different actors (e.g., police and medical teams), ensuring better coordination and collaboration.

The benefits of the data organization technique from the design engineer’s perspective are as follows. Initially, systematic data management enables the system to categorize and prioritize data, ensuring efficient handling and retrieval of mission-critical information. In addition, the system’s design supports scalability and adaptability to different mission types and sizes through various thematic categories and real-time updates. Furthermore, information processing is robust, since the system is engineered to process a wide range of data sources (e.g., local news, traffic apps), ensuring comprehensive situational awareness. Last but not least, the design improves the accuracy and reliability of the input data by drawing information from appropriate channels and sources (e.g., police networks, medical records).

In summary, this data organization technique significantly enhances mission execution by providing actors with clear, adaptable, and comprehensive situational awareness, while, from an engineer’s standpoint, the system is robust, flexible, and able to handle complex mission requirements effectively.

3.3. Thematic Decomposition in Representative Mission Example (PoC in CMSS): 2nd Meth

Through thematic decomposition, the representative mission example is divided into two thematic sub-missions: from point “1” to “3” and from point “1” to “2”. The organization and analysis of the mission are based on the geographical area in which it takes place. Thematic decomposition plays a crucial role in the success of complex missions, since it offers the following benefits:

- Clarity: By splitting the mission into two sub-missions (point “1” to “3” for document transport and point “1” to “2” for patient transport), each team (the police and the ambulance crew, respectively) has a clear understanding of its distinct objectives. This clarity ensures that each actor knows its route, its cargo (i.e., classified documents or a patient), and its specific operational requirements.

- Efficiency: Resources can be allocated specifically to each sub-mission. The police vehicle carrying the documents can be equipped for security, while the ambulance can be equipped with medical supplies. Handling each sub-mission independently prevents resource overlap and ensures that each is carried out with the appropriate means and actors.

- Risk assessment: Operating the two sub-missions separately allows for a more focused risk analysis. For the police vehicle, the primary risks might involve security threats, whereas for the ambulance, the primary risks are related to medical emergencies en route. This separation allows each team to prepare for and mitigate their specific risks effectively.

- Communication: Clear communication channels can be established for each sub-mission. The police team will have a dedicated communication line focused on route security, while the ambulance crew will maintain communication with medical personnel. This prevents misunderstandings and ensures that each team receives the information it needs.

- Resource optimization: Resources, e.g., fuel, personnel, equipment, etc., are allocated precisely according to the needs of each sub-mission. The police vehicle might require additional security personnel en route, while the ambulance may need medical staff (communicating remotely with the medical center of operations) and equipment (from local health centers). This targeted allocation prevents resource waste.

- Quality control: The flow of input information supports quality control for each thematic sub-mission; for example, document transport might require assurance of information security, while patient transport involves maintaining high standards of medical care.

- Adaptability: If, for example, there is a traffic jam en route to the hospital, the ambulance can adapt its route independently without affecting the document transport. Thus, having two distinct sub-missions ensures that changes in one do not unnecessarily complicate or delay the other.

- Problem isolation: If the police vehicle encounters a problem, such as a mechanical failure, it can be addressed without directly impacting the patient transportation. This isolation of problems prevents a domino effect, ensuring that a problem in one area does not escalate to affect the other sub-mission.

This thematic decomposition approach indeed provides a comprehensive framework for managing complex missions, enhancing effectiveness and efficiency while reducing risks and improving communication and quality control.
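The isolation properties listed above follow from keeping each sub-mission as a self-contained record. In this hypothetical sketch (the `SubMission` type and `reroute` helper are illustrative names, not part of the CMSS), each sub-mission holds its own route, resources, risks, and communication channel; rerouting the ambulance creates a new record for that sub-mission only, leaving the document-transport record untouched. The waypoint “2a” is an invented detour, not part of Figure 12.

```python
from dataclasses import dataclass, replace
from typing import Dict, Tuple


@dataclass(frozen=True)
class SubMission:
    theme: str
    actor: str
    route: Tuple[str, ...]      # ordered points from start to destination
    resources: Tuple[str, ...]  # allocated only to this sub-mission
    risks: Tuple[str, ...]      # focused risk analysis for this sub-mission
    channel: str                # dedicated communication line


def decompose() -> Dict[str, SubMission]:
    """Split the transport mission into two independent thematic sub-missions."""
    return {
        "documents": SubMission("security", "actor 1 (police vehicle)",
                                ("1", "3"), ("security personnel",),
                                ("security threats",), "route-security line"),
        "patient": SubMission("medical", "actor 2 (ambulance)",
                              ("1", "2"), ("medical staff", "medical supplies"),
                              ("medical emergency en route",), "medical line"),
    }


def reroute(subs: Dict[str, SubMission], key: str,
            new_route: Tuple[str, ...]) -> Dict[str, SubMission]:
    """Adapt one sub-mission; every other sub-mission is returned untouched."""
    updated = dict(subs)
    updated[key] = replace(subs[key], route=new_route)
    return updated


plan = decompose()
# Hypothetical traffic jam: the ambulance detours via an extra waypoint "2a".
new_plan = reroute(plan, "patient", ("1", "2a", "2"))
print(new_plan["patient"].route)                   # ('1', '2a', '2')
print(new_plan["documents"] is plan["documents"])  # True
```

Making the records immutable (`frozen=True`) means a change can only be expressed as a new record for one sub-mission, which mirrors the problem-isolation argument: nothing in the police sub-mission can be modified as a side effect of the ambulance's detour.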

3.4. Mission Design Algorithm in Representative Mission Example (PoC in CMSS): 3rd Meth

Using a mission design algorithm to identify key intermediate objectives enables the CMSS to manage any kind of complex operational procedure easily and flexibly, following successive steps that lead to the completion of the mission. Adapting the algorithm to the representative mission example considered in this study yields the following results:

- The creation of a list of fundamental mission segments. The mission is divided into two primary segments: segment “a”, the transport of classified documents from point “1” to “3” (by police vehicle, actor “1”), and segment “b”, the transport of a patient from point “1” to “2” (by ambulance, actor “2”). Each segment is independently manageable and designed around the specific requirements of its cargo.

- The creation of a list of Points of Interest (POIs). For segment “a” (document transport), potential POIs could include traffic lights or secure checkpoints, areas of heavy traffic, and locations requiring specific navigation strategies. For segment “b” (patient transport), potential POIs might include a tunnel, local health centers where the ambulance may have to make a short stopover because of the patient’s condition, and the fastest bypass routes for emergency transport. In both cases, POIs are selected based on the urgency, security needs, and operational complexity of each transport mission.

- The creation of a list of fundamental mission segments (with their POIs). The segments of the representative mission example are combined with their respective POIs as follows: (a) the path of segment “a” from point “1” to “3” with its POI “1”, a traffic light; (b) the path of segment “b” from point “1” to “2” with its POI “2”, a tunnel.

- The creation of two iterative procedures (external and internal loops). The external loop (Ex-loop) could involve transitioning from the preparation phase at point “1” to the execution of segments “a” and “b”; it involves two repetitions, one for each segment. The internal loop (In-loop) could involve transitioning between POIs from point “1” to “3” for segment “a” and from point “1” to “2” for segment “b”. For each segment scanned by the external loop, the internal loop performs as many repetitions as there are POIs along the segment’s path. In the case considered in this study, the internal loop contains a single repetition per segment, for the one POI shown in Figure 13. These loops structure the operational flow of the mission, ensuring that all necessary actions are taken at each POI.

- The execution of the mission design algorithm methodology in the CMSS. The algorithm for the representative transport mission is fully documented and executed in a CMS system, including the specifics of handling each type of cargo (classified documents and a patient), the different transport vehicles (police vehicle and ambulance), and any other logistical or operational requirements.

Figure 13. Mission design algorithm in representative mission example (PoC in CMSS).

By adapting the algorithm to this representative mission example, we address the challenges of transporting sensitive documents and a patient, ensuring that each sub-mission is effectively managed and executed. Figure 13 illustrates the mission design algorithm in the representative mission example (PoC in CMSS).
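The two nested loops map directly onto code. The sketch below assumes simple `Segment` and `POI` records (hypothetical names) populated with the segments and POIs of Figure 13, and a caller-supplied `act_at_poi` function standing in for whatever the CMSS does at each POI. It scans the segments sequentially, as the Ex-loop and In-loop describe; in the field the two vehicles move in parallel, which a real-time system would have to handle concurrently.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class POI:
    name: str
    kind: str  # e.g. "traffic light", "tunnel"


@dataclass
class Segment:
    label: str
    actor: str
    start: str
    end: str
    pois: List[POI] = field(default_factory=list)


def run_mission(segments: List[Segment],
                act_at_poi: Callable[[Segment, POI], str]) -> List[str]:
    """Walk the mission: Ex-loop over segments, In-loop over each segment's POIs."""
    log = ["prepare at point 1"]  # preparation phase at this example's common start
    for seg in segments:          # Ex-loop: one repetition per segment
        log.append(f"start segment {seg.label} ({seg.actor}): {seg.start} -> {seg.end}")
        for poi in seg.pois:      # In-loop: one repetition per POI on the path
            log.append(act_at_poi(seg, poi))
        log.append(f"segment {seg.label} complete at point {seg.end}")
    return log


segments = [
    Segment("a", "actor 1", "1", "3", [POI("POI 1", "traffic light")]),
    Segment("b", "actor 2", "1", "2", [POI("POI 2", "tunnel")]),
]


def act_at_poi(seg: Segment, poi: POI) -> str:
    # Hypothetical per-POI action (e.g. confirm passage, update the actor's display).
    return f"{seg.actor} passes {poi.name} ({poi.kind})"


for line in run_mission(segments, act_at_poi):
    print(line)
```

With one POI per segment, the In-loop runs exactly once inside each of the two Ex-loop repetitions, matching the repetition counts stated for the representative example; adding POIs to a segment's list extends its In-loop without touching the other segment.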