“I See What You Feel”: An Exploratory Study to Investigate the Understanding of Robot Emotions in Deaf Children

Abstract

1. Introduction

(RQ1) How do hearing and deaf children assess the credibility of a humanoid robot without sound and speech?

(RQ2) Are children able to recognize emotions in a humanoid robot when they are exhibited through non-verbal communication?

(RQ3) Can empathy affect the ability to recognize emotions, expressed without sound or speech, in a humanoid robot?

(RQ4) Are children who see a humanoid robot respond to a video in a congruent manner better able to recognize the robot’s emotions than children who see it respond in an incongruent manner?

2. Related Works

2.1. Social Robots

2.2. Children with Deafness

2.3. Empathy

3. Materials and Methods

3.1. Participants and Recruitment

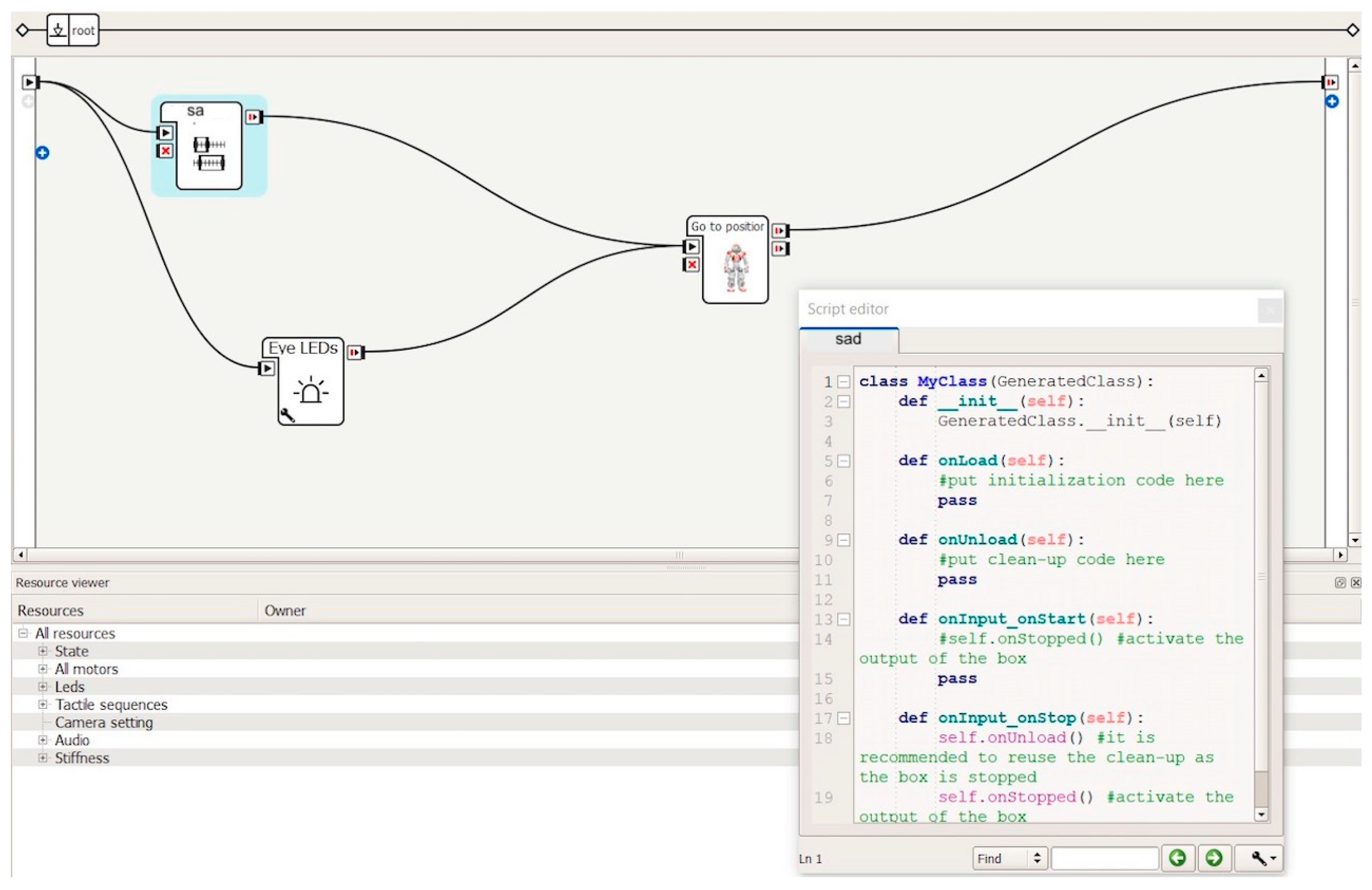

3.2. Stimulus Material

3.3. Instruments

3.3.1. Emotions in Video Clips

3.3.2. Emotion Recognition of NAO Robot

3.3.3. Empathy

3.3.4. State Empathy

3.3.5. Credibility toward the Humanoid Robot

3.4. Experimental Procedure

3.5. Statistical Analysis

4. Results

4.1. Descriptives

4.2. Credibility toward NAO

4.3. Recognition of NAO’s Emotions

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Constructs | No. of Items | M (SD) | Cronbach’s Alpha |
|---|---|---|---|
| Empathy | 18 | 2.70 (0.54) | 0.84 |
| State Empathy (total) | 12 | 3.62 (0.89) | 0.86 |
| State Empathy (affective) | 4 | 3.59 (1.09) | 0.79 |
| State Empathy (cognitive) | 4 | 3.99 (0.72) | 0.43 |
| State Empathy (associative) | 4 | 3.27 (1.30) | 0.82 |
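The reliability column above reports Cronbach’s alpha for each scale. As a minimal sketch of how such a coefficient is commonly computed, the function below applies the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of total scores). The function name `cronbach_alpha` and the toy responses are hypothetical and are not the study’s data or the authors’ analysis code.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Transpose so that each tuple holds one item's scores across respondents.
    items = list(zip(*scores))
    # Sum of the sample variances of the individual items.
    item_variance_sum = sum(variance(item) for item in items)
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical responses (rows = children, columns = items); not study data.
toy_responses = [
    [1, 2],
    [2, 1],
    [3, 3],
]
alpha = cronbach_alpha(toy_responses)
print(round(alpha, 3))
```

By a common rule of thumb, values of roughly 0.7 or higher are read as acceptable internal consistency, which is why a coefficient such as the 0.43 reported for the cognitive subscale is usually treated with caution.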

| Variable | M (SD) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 Emotion recognition (NAO) | 1.96 (1.09) | -- | | | | | | | | | | |
| 2 Emotion recognition (video) | 2.00 (0.78) | 0.639 ** | -- | | | | | | | | | |
| 3 Level of congruence 1 | 1.44 (0.51) | −0.039 | −0.097 | -- | | | | | | | | |
| 4 Hearing level 2 | 1.33 (0.48) | −0.049 | −0.102 | 0.000 | -- | | | | | | | |
| 5 Empathy | 2.70 (0.54) | −0.019 | −0.181 | −0.203 | −0.049 | -- | | | | | | |
| 6 Affective empathy | 3.59 (1.09) | 0.148 | 0.325 | 0.044 | 0.104 | −0.033 | -- | | | | | |
| 7 Associative empathy | 3.27 (1.30) | 0.102 | 0.265 | 0.148 | −0.072 | 0.131 | 0.815 ** | -- | | | | |
| 8 State empathy * | 3.62 (0.89) | 0.174 | 0.346 | 0.037 | −0.043 | 0.082 | 0.935 ** | 0.915 ** | -- | | | |
| 9 Believability | 3.84 (0.84) | 0.168 | 0.151 | −0.451 * | −0.165 | 0.293 | 0.222 | 0.428 * | 0.389 * | -- | | |
| 10 Age | 7.59 (1.50) | −0.033 | 0.196 | 0.045 | 0.569 ** | .0130 | 0.030 | 0.009 | 0.037 | −0.102 | -- | |
| 11 Sex 3 | 1.59 (0.50) | 0.534 ** | 0.196 | −0.017 | −0.053 | 0.105 | 0.177 | −0.003 | 0.133 | −0.056 | −0.178 | -- |

| Item | Min | Max | M (SD) | To a Minor Extent | To Some Extent | To a Great Extent |
|---|---|---|---|---|---|---|
| The robot perceived the content of the movie clip correctly | 2 | 5 | 4.53 (0.84) | 6.7% | 0% | 93.3% |
| It was easy to understand which emotion was expressed by the robot | 3 | 5 | 4.60 (0.63) | 0% | 6.7% | 93.3% |
| It was easy to understand what the robot was thinking about | 1 | 5 | 4.27 (1.22) | 13.3% | 0% | 86.7% |
| The robot has a personality | 1 | 5 | 3.80 (1.52) | 20.0% | 0% | 80.0% |
| The robot’s behavior drew my attention | 1 | 5 | 4.00 (1.46) | 26.7% | 0% | 73.3% |
| The robot’s behavior was predictable | 1 | 5 | 3.27 (1.75) | 40.0% | 6.7% | 53.3% |
| The behavior expressed by the robot was appropriate for the content of the movie | 4 | 5 | 4.73 (0.45) | 0% | 0% | 100% |

| Item | Min | Max | M (SD) | To a Minor Extent | To Some Extent | To a Great Extent |
|---|---|---|---|---|---|---|
| The robot perceived the content of the movie clip correctly | 1 | 5 | 3.83 (1.40) | 25.0% | 0% | 75.0% |
| It was easy to understand which emotion was expressed by the robot | 1 | 5 | 3.67 (1.44) | 16.7% | 16.7% | 66.6% |
| It was easy to understand what the robot was thinking about | 1 | 5 | 3.00 (1.41) | 41.6% | 16.7% | 41.7% |
| The robot has a personality | 1 | 5 | 3.00 (1.86) | 41.7% | 8.3% | 50.0% |
| The robot’s behavior drew my attention | 1 | 5 | 4.42 (1.65) | 8.3% | 0% | 91.7% |
| The robot’s behavior was predictable | 1 | 5 | 3.33 (1.49) | 33.4% | 8.3% | 58.3% |
| The behavior expressed by the robot was appropriate for the content of the movie | 1 | 5 | 2.75 (1.71) | 58.3% | 0% | 41.7% |

| Predictors | β | t | p |
|---|---|---|---|
| Emotion recognition (video) | 0.720 | 4.341 | <0.001 |
| Congruence level | 0.134 | 0.742 | 0.469 |
| Hearing level | 0.166 | 0.794 | 0.439 |
| Empathy | 0.341 | 1.817 | 0.088 |
| State empathy (affective) | 0.107 | 0.182 | 0.858 |
| State empathy (associative) | −0.073 | −0.179 | 0.860 |
| State empathy (total) | −0.400 | −0.511 | 0.616 |
| Credibility toward the robot | 0.221 | 1.052 | 0.308 |
| Age | −0.118 | −0.579 | 0.571 |
| Sex | 0.421 | 2.757 | 0.014 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cirasa, C.; Høgsdal, H.; Conti, D. “I See What You Feel”: An Exploratory Study to Investigate the Understanding of Robot Emotions in Deaf Children. Appl. Sci. 2024, 14, 1446. https://doi.org/10.3390/app14041446
