EEG Emotion Classification Based on Graph Convolutional Network

Abstract

1. Introduction

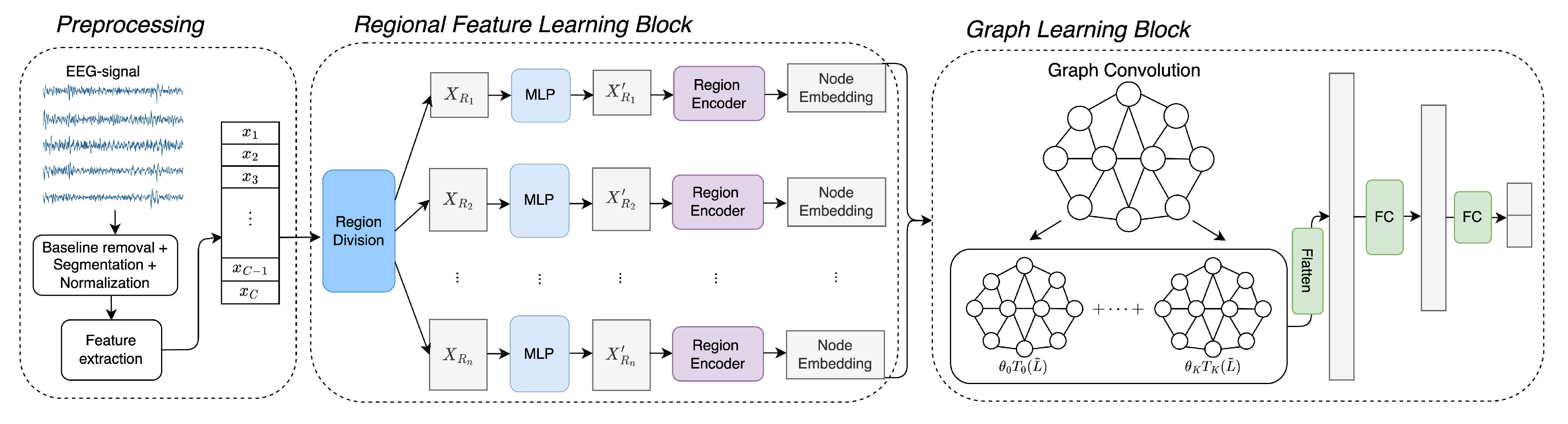

- We propose a model that performs emotion recognition on region-level representations, learning the activity within and across brain regions. From a neurological point of view, such interactions have been shown to be highly relevant to human emotional states.

- To capture the correlation between brain regions, we construct a graph on EEG signals and employ graph spectral filtering with a dynamical adjacency matrix. This approach is better suited to studying the interplay of brain areas because it is not limited to geographic closeness and offers flexibility in detecting function-level interactions.

- To thoroughly investigate how to formulate region-level characteristics, we conduct a comprehensive experimental study covering different region encoders, region division strategies, and input features. The results show that our approach outperforms many existing methods on the DEAP and Dreamer datasets.
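As a rough illustration of the second point, the sketch below applies a polynomial spectral filter to region-level node features. It assumes the Chebyshev formulation of Defferrard et al. [44], which may differ from the exact filter used in this work. The names `normalized_laplacian` and `chebyshev_filter`, the number of regions, and the random adjacency matrix are hypothetical; in a dynamical setting the adjacency would be a trainable parameter rather than a fixed random matrix.

```python
import numpy as np

def normalized_laplacian(adj):
    """L = I - D^{-1/2} A D^{-1/2} for a symmetric, non-negative adjacency."""
    deg = adj.sum(axis=1)
    # Isolated nodes (degree 0) get a zero scaling factor instead of a division by zero.
    d_inv_sqrt = np.where(deg > 0, 1.0 / np.sqrt(np.maximum(deg, 1e-12)), 0.0)
    return np.eye(adj.shape[0]) - d_inv_sqrt[:, None] * adj * d_inv_sqrt[None, :]

def chebyshev_filter(x, adj, theta):
    """Compute sum_k theta[k] * T_k(L_scaled) @ x for node features x (nodes x features)."""
    lap = normalized_laplacian(adj)
    lam_max = np.linalg.eigvalsh(lap).max()
    # Rescale the spectrum to [-1, 1]; assumes no self-loops, so lam_max >= 1.
    lap_scaled = 2.0 * lap / lam_max - np.eye(adj.shape[0])
    t_prev, t_curr = x, lap_scaled @ x
    out = theta[0] * t_prev
    if len(theta) > 1:
        out = out + theta[1] * t_curr
    for k in range(2, len(theta)):
        # Chebyshev recurrence: T_k = 2 * L_scaled * T_{k-1} - T_{k-2}
        t_prev, t_curr = t_curr, 2.0 * lap_scaled @ t_curr - t_prev
        out = out + theta[k] * t_curr
    return out

rng = np.random.default_rng(0)
n_regions, n_features = 5, 8                  # hypothetical sizes
raw = rng.standard_normal((n_regions, n_regions))
adj = np.maximum(raw + raw.T, 0.0)            # stand-in for a learned adjacency
np.fill_diagonal(adj, 0.0)                    # no self-loops
x = rng.standard_normal((n_regions, n_features))
y = chebyshev_filter(x, adj, theta=[0.5, 0.3, 0.2])
```

Because the adjacency is an ordinary matrix rather than a fixed neighbourhood map, any pair of regions can be connected, which is what allows function-level interactions between distant regions to be represented.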

2. Literature Review

2.1. Machine Learning Approach

2.2. Deep Learning Approach

3. Method

3.1. Preprocessing

3.2. Regional Feature Learning Block

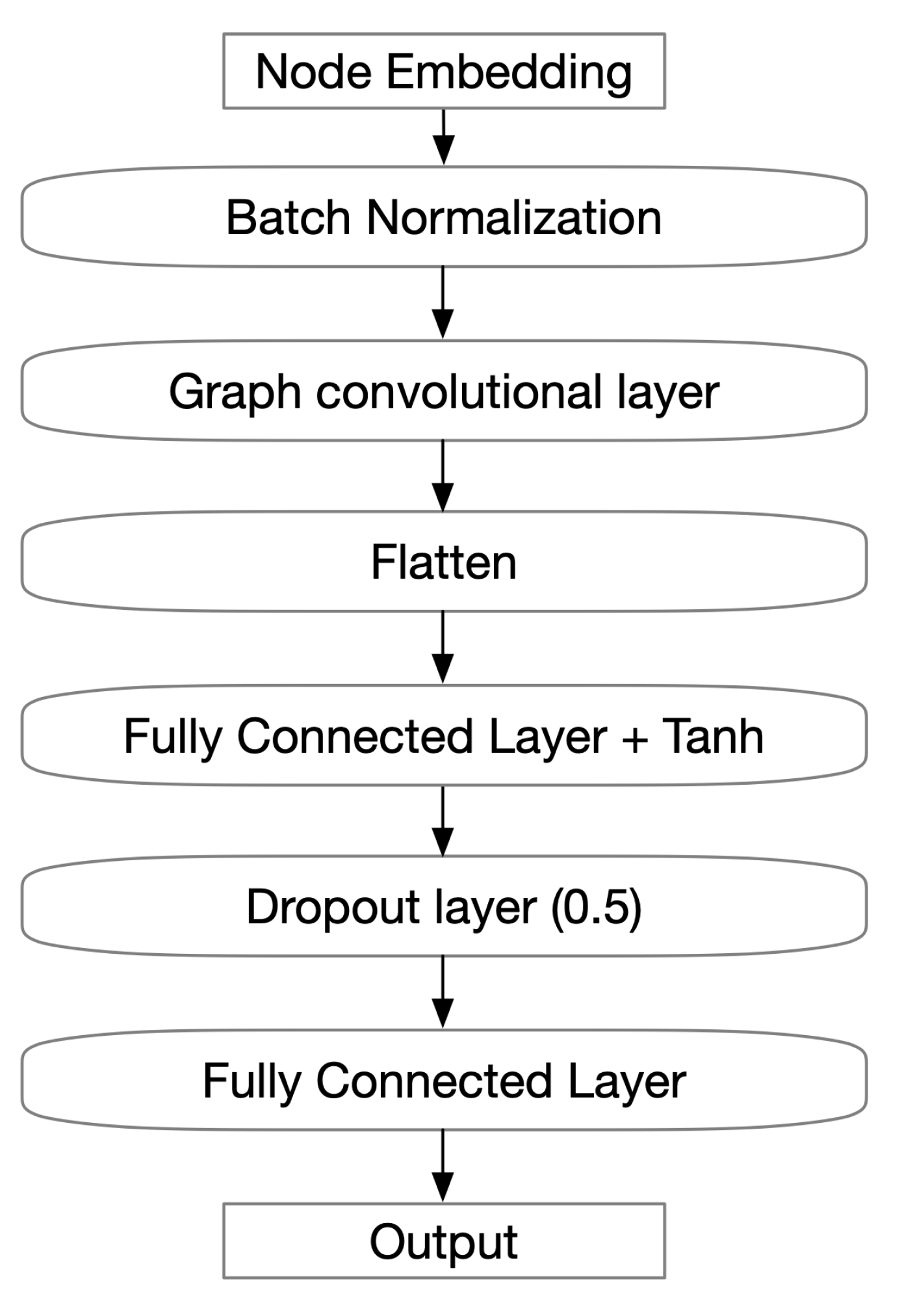

3.3. Graph Learning Block

4. Experiments and Discussions

4.1. Dataset

4.2. Training Detail

4.3. Evaluation Metric

4.4. Experiment on Region Representation

- Multi-layer perceptron (MLP) is one of the most common networks. The input is first flattened into a one-dimensional vector, and the multi-layer perceptron is then applied to generate the output.

- Convolutional neural network (CNN) is a class of neural network that is widely used in emotion recognition tasks. It slides a kernel over data arranged in a grid pattern. If the kernel size is $m$, then we can derive:

  $$y_j = f\left(\sum_{i=1}^{m} w_i \, x_{j+i-1} + b_j\right)$$

  where $y_j$ is the $j$th feature; $x$ is the input; $w_i$ is the kernel weight; $b_j$ is the offset of the $j$th feature; and $f$ is the activation function.
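The two encoders described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the configuration used in the experiments: the function names, the layer sizes, the ReLU activation, and the region shape (4 channels by 128 samples) are all assumptions.

```python
import numpy as np

def mlp_encoder(x, weights, biases):
    """Flatten a region's input and pass it through fully connected layers."""
    h = x.reshape(-1)                      # flatten to a one-dimensional vector
    for W, b in zip(weights, biases):
        h = np.maximum(W @ h + b, 0.0)     # linear layer followed by ReLU (assumed)
    return h

def conv1d_encoder(x, kernel, bias=0.0):
    """Slide a kernel of size m over a 1D signal, keeping valid positions only."""
    m = kernel.shape[0]
    n_out = x.shape[0] - m + 1
    out = np.empty(n_out)
    for j in range(n_out):
        # Weighted sum over the window starting at j, plus the offset.
        out[j] = np.dot(kernel, x[j:j + m]) + bias
    return np.maximum(out, 0.0)            # ReLU activation (assumed)

rng = np.random.default_rng(0)
region = rng.standard_normal((4, 128))     # hypothetical region: 4 channels x 128 samples
weights = [rng.standard_normal((16, 4 * 128)) * 0.01]
biases = [np.zeros(16)]
region_vec = mlp_encoder(region, weights, biases)        # shape (16,)
conv_out = conv1d_encoder(region[0], np.ones(3) / 3.0)   # shape (126,)
```

The MLP discards the channel-by-time layout when it flattens the input, whereas the convolution keeps the ordering along the axis it slides over.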

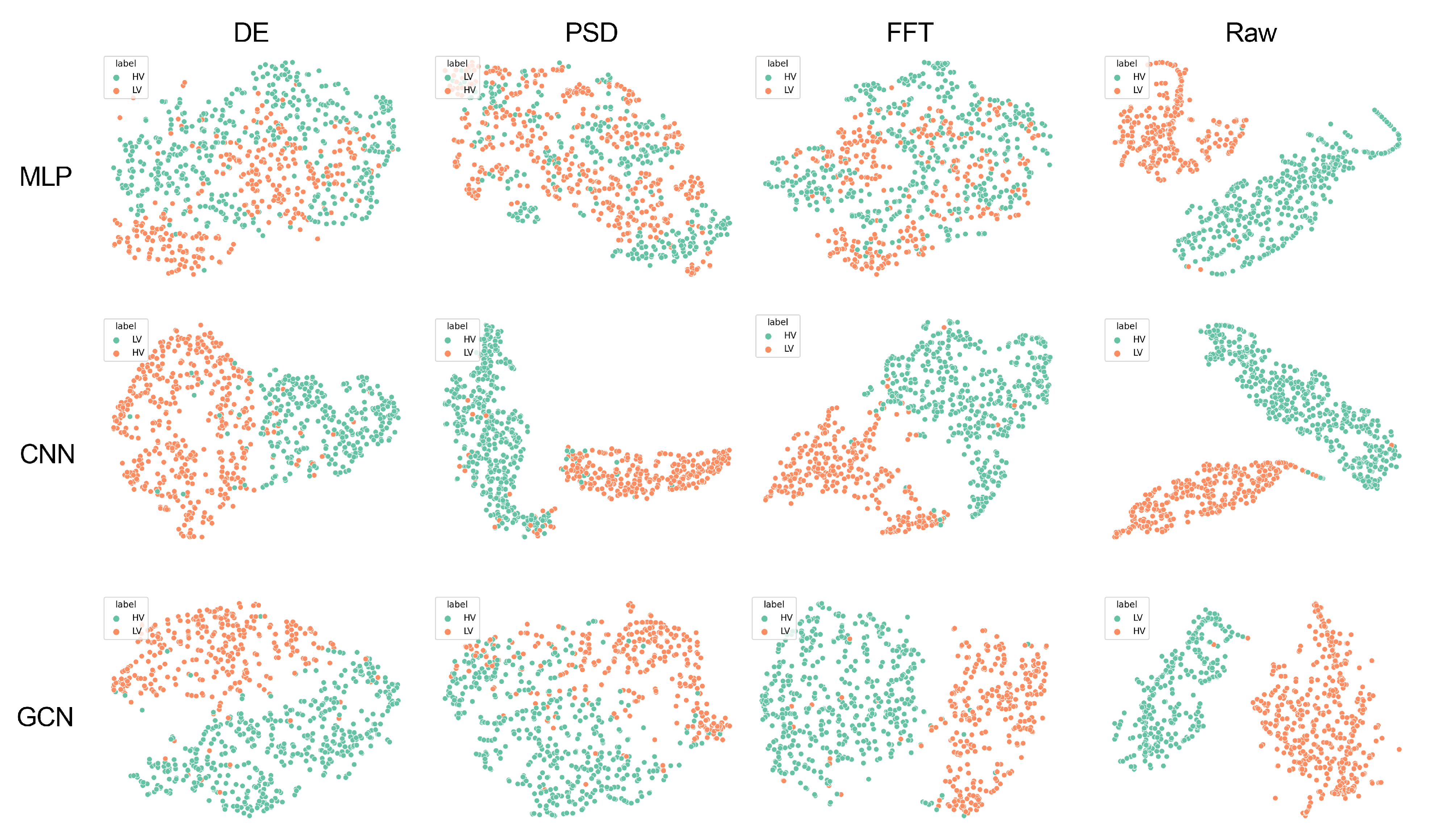

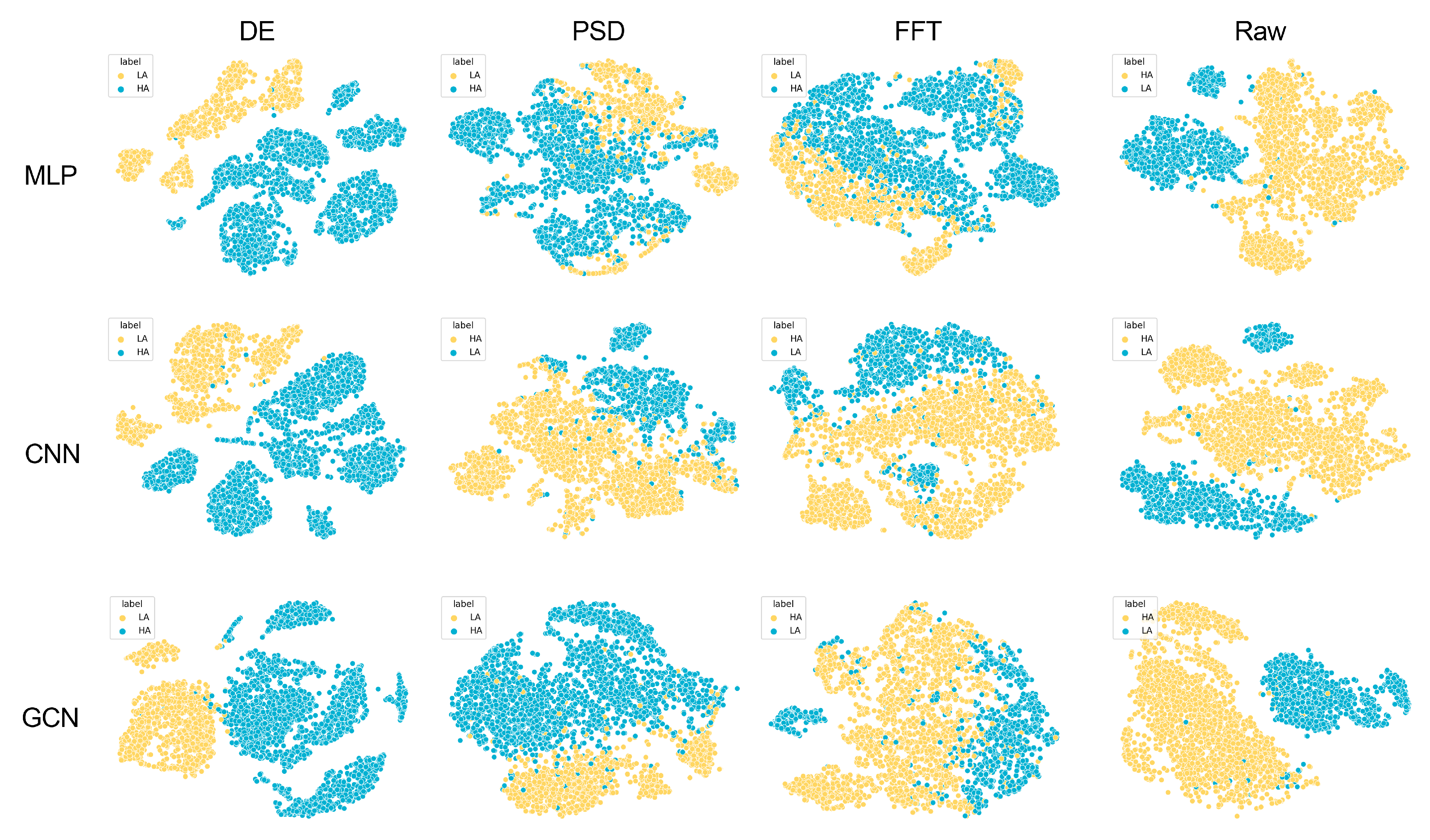

4.5. Feature Visualization

- Raw signal.

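- Differential entropy (DE) is a measure of the complexity of a continuous random variable. For a signal that follows a Gaussian distribution, it can be calculated as follows:

  $$h(X) = -\int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right) \log\left(\frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)\right) dx = \frac{1}{2}\log\left(2\pi e\sigma^2\right)$$

  where $\mu$ and $\sigma$ denote the mean and standard deviation.

- Fast Fourier transform (FFT) is an algorithm that efficiently computes the discrete Fourier transform (DFT) of a sequence of data points. The underlying transform can be defined as:

  $$X(f) = \int_{-\infty}^{\infty} x(t)\, e^{-j 2\pi f t}\, dt$$

  where $f$ is the frequency; $t$ denotes time; and $x(t)$ is the signal in the time domain.

- Power spectral density (PSD) refers to the distribution of power across the frequencies in a signal. It is calculated by taking the squared magnitude of the Fourier transform of the signal:

  $$\mathrm{PSD}(f) = \left|X(f)\right|^2$$

  where $X(f)$ is the Fourier transform of the signal.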
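The DE and PSD features above can be computed with NumPy as in the following sketch. The function names, the 128 Hz sampling rate, and the 10 Hz test tone are assumptions for illustration. The DE function relies on the Gaussian closed form, and the PSD is left unnormalized, following the squared-magnitude definition given above.

```python
import numpy as np

def differential_entropy(x):
    """DE under a Gaussian assumption: 0.5 * log(2 * pi * e * sigma^2)."""
    return 0.5 * np.log(2.0 * np.pi * np.e * np.var(x))

def power_spectral_density(x, fs):
    """Squared magnitude of the FFT, returned with the matching frequency axis."""
    spectrum = np.fft.rfft(x)                         # FFT of a real-valued signal
    freqs = np.fft.rfftfreq(x.shape[0], d=1.0 / fs)   # frequency of each bin in Hz
    psd = np.abs(spectrum) ** 2                       # unnormalized power per bin
    return freqs, psd

fs = 128                                  # assumed sampling rate in Hz
t = np.arange(2 * fs) / fs                # 2 seconds of samples
signal = np.sin(2.0 * np.pi * 10.0 * t)   # hypothetical 10 Hz test tone
freqs, psd = power_spectral_density(signal, fs)
peak = freqs[np.argmax(psd)]              # frequency bin with the most power
de = differential_entropy(signal)
```

In practice DE is often computed per frequency band after band-pass filtering; the sketch skips that step and treats the whole signal as a single band.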

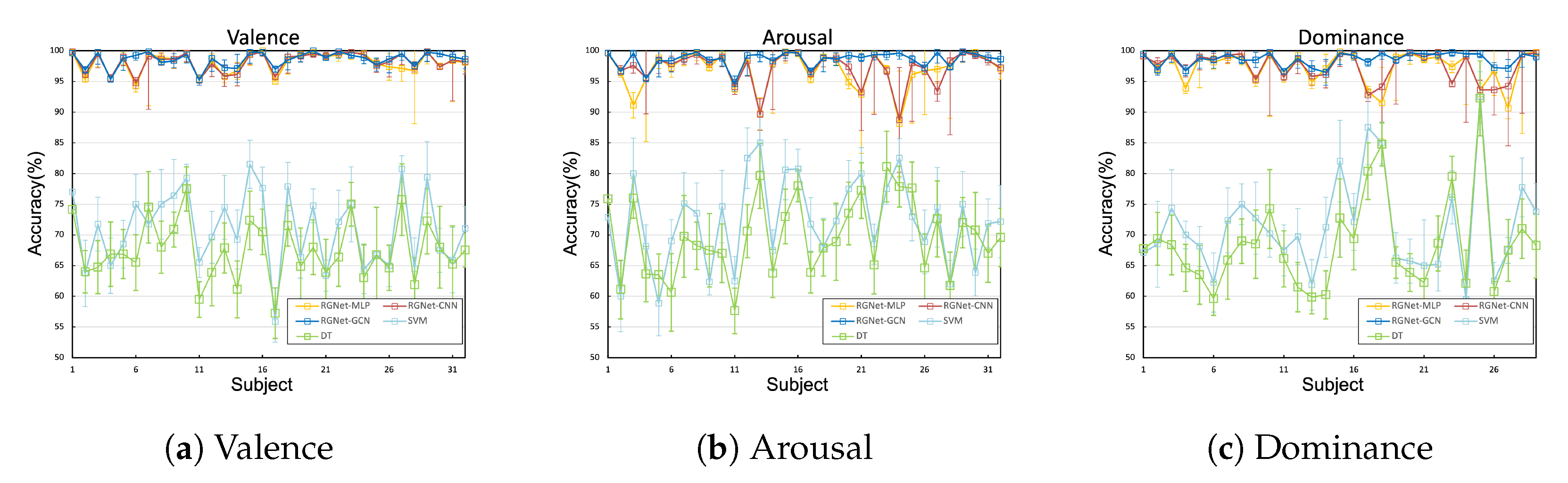

4.6. Subject-Wise Results

4.7. Comparison of Different Models

- CNN-RNN [35] is a hybrid neural network that combines a CNN and an RNN to process spatial and temporal features.

- RACNN [47] is the regional-asymmetric convolutional neural network. It first extracts time-frequency features using a 1D CNN and then captures asymmetric regional features with a 2D CNN.

- ACRNN [29] is an attention-based convolutional recurrent neural network. In the first stage, it applies a CNN to extract spatial features, with a channel-wise attention mechanism that determines the importance of different channels. The extracted features are then fed into an RNN with an extended self-attention mechanism that determines the intrinsic importance of each sample.

- DGCNN [27] is a model that utilizes a dynamical graph convolutional network, which maps multi-channel EEG signals into a graph structure by treating each channel as a node and the connections between channels as edges. It allows the model to learn the dynamical structure of the graph so that the relationships among nodes are not constrained to geographical proximity.

- CapsNet [33] uses an attention mechanism and a capsule network to conduct multi-task learning. The attention mechanism captures the importance of each channel. The capsule network consists of multiple capsule layers that learn not only the characteristics required for individual tasks but also the correlations between them.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zou, S.; Huang, X.; Shen, X.; Liu, H. Improving multimodal fusion with Main Modal Transformer for emotion recognition in conversation. Knowl.-Based Syst. 2022, 258, 109978.

2. Wen, G.; Liao, H.; Li, H.; Wen, P.; Zhang, T.; Gao, S.; Wang, B. Self-labeling with feature transfer for speech emotion recognition. Knowl.-Based Syst. 2022, 254, 109589.

3. Middya, A.I.; Nag, B.; Roy, S. Deep learning based multimodal emotion recognition using model-level fusion of audio–visual modalities. Knowl.-Based Syst. 2022, 244, 108580.

4. Zhang, L.; Mistry, K.; Neoh, S.C.; Lim, C.P. Intelligent facial emotion recognition using moth-firefly optimization. Knowl.-Based Syst. 2016, 111, 248–267.

5. Klonowski, W. Everything you wanted to ask about EEG but were afraid to get the right answer. Nonlinear Biomed. Phys. 2009, 3, 2.

6. Sohaib, A.T.; Qureshi, S.; Hagelbäck, J.; Hilborn, O.; Jerčić, P. Evaluating Classifiers for Emotion Recognition Using EEG. In Proceedings of the Foundations of Augmented Cognition; Lecture Notes in Computer Science; Schmorrow, D.D., Fidopiastis, C.M., Eds.; Springer: Berlin/Heidelberg, Germany, 2013; pp. 492–501.

7. Garg, A.; Kapoor, A.; Bedi, A.K.; Sunkaria, R.K. Merged LSTM Model for emotion classification using EEG signals. In Proceedings of the 2019 International Conference on Data Science and Engineering (ICDSE), Patna, India, 26–28 September 2019; pp. 139–143.

8. Chen, S.; Jin, Q. Multi-modal Dimensional Emotion Recognition using Recurrent Neural Networks. In Proceedings of the 5th International Workshop on Audio/Visual Emotion Challenge, Brisbane, Australia, 26 October 2015; ACM: New York, NY, USA, 2015; pp. 49–56.

9. Davidson, R.J.; Abercrombie, H.; Nitschke, J.B.; Putnam, K. Regional brain function, emotion and disorders of emotion. Curr. Opin. Neurobiol. 1999, 9, 228–234.

10. Pessoa, L. Beyond brain regions: Network perspective of cognition-emotion interactions. Behav. Brain Sci. 2012, 35, 158–159.

11. Kober, H.; Barrett, L.F.; Joseph, J.; Bliss-Moreau, E.; Lindquist, K.; Wager, T.D. Functional grouping and cortical–subcortical interactions in emotion: A meta-analysis of neuroimaging studies. NeuroImage 2008, 42, 998–1031.

12. Lindquist, K.A.; Wager, T.D.; Kober, H.; Bliss-Moreau, E.; Barrett, L.F. The brain basis of emotion: A meta-analytic review. Behav. Brain Sci. 2012, 35, 121–143.

13. Li, Y.; Zheng, W.; Wang, L.; Zong, Y.; Cui, Z. From Regional to Global Brain: A Novel Hierarchical Spatial-Temporal Neural Network Model for EEG Emotion Recognition. IEEE Trans. Affect. Comput. 2022, 13, 568–578.

14. Ding, Y.; Robinson, N.; Zeng, Q.; Chen, D.; Phyo Wai, A.A.; Lee, T.S.; Guan, C. TSception: A Deep Learning Framework for Emotion Detection Using EEG. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–7.

15. Li, Y.; Zheng, W.; Zong, Y.; Cui, Z.; Zhang, T.; Zhou, X. A Bi-Hemisphere Domain Adversarial Neural Network Model for EEG Emotion Recognition. IEEE Trans. Affect. Comput. 2021, 12, 494–504.

16. Ding, Y.; Robinson, N.; Zeng, Q.; Guan, C. LGGNet: Learning from Local-Global-Graph Representations for Brain-Computer Interface. arXiv 2022, arXiv:2105.02786.

17. Lin, Y.P.; Wang, C.H.; Jung, T.P.; Wu, T.L.; Jeng, S.K.; Duann, J.R.; Chen, J.H. EEG-Based Emotion Recognition in Music Listening. IEEE Trans. Biomed. Eng. 2010, 57, 1798–1806.

18. Wang, X.W.; Nie, D.; Lu, B.L. Emotional state classification from EEG data using machine learning approach. Neurocomputing 2014, 129, 94–106.

19. Bazgir, O.; Mohammadi, Z.; Habibi, S.A.H. Emotion Recognition with Machine Learning Using EEG Signals. In Proceedings of the 2018 25th National and 3rd International Iranian Conference on Biomedical Engineering (ICBME), Qom, Iran, 29–30 November 2018; pp. 1–5.

20. Alhalaseh, R.; Alasasfeh, S. Machine-Learning-Based Emotion Recognition System Using EEG Signals. Computers 2020, 9, 95.

21. Alhagry, S.; Aly, A.; El-Khoribi, R. Emotion Recognition based on EEG using LSTM Recurrent Neural Network. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 355–358.

22. Schirrmeister, R.T.; Springenberg, J.T.; Fiederer, L.D.J.; Glasstetter, M.; Eggensperger, K.; Tangermann, M.; Hutter, F.; Burgard, W.; Ball, T. Deep learning with convolutional neural networks for EEG decoding and visualization: Convolutional Neural Networks in EEG Analysis. Hum. Brain Mapp. 2017, 38, 5391–5420.

23. Salama, E.S.; El-Khoribi, R.A.; Shoman, M.E.; Wahby, M.A. EEG-Based Emotion Recognition using 3D Convolutional Neural Networks. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 329–337.

24. Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.M.; Hung, C.P.; Lance, B.J. EEGNet: A compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 2018, 15, 056013.

25. Priyasad, D.; Fernando, T.; Denman, S.; Sridharan, S.; Fookes, C. Affect recognition from scalp-EEG using channel-wise encoder networks coupled with geometric deep learning and multi-channel feature fusion. Knowl.-Based Syst. 2022, 250, 109038.

26. Zhong, P.; Wang, D.; Miao, C. EEG-Based Emotion Recognition Using Regularized Graph Neural Networks. IEEE Trans. Affect. Comput. 2020, 13, 1290–1301.

27. Song, T.; Zheng, W.; Song, P.; Cui, Z. EEG Emotion Recognition Using Dynamical Graph Convolutional Neural Networks. IEEE Trans. Affect. Comput. 2020, 11, 532–541.

28. Xing, X.; Li, Z.; Xu, T.; Shu, L.; Hu, B.; Xu, X. SAE+LSTM: A New Framework for Emotion Recognition From Multi-Channel EEG. Front. Neurorobotics 2019, 13, 37.

29. Tao, W.; Li, C.; Song, R.; Cheng, J.; Liu, Y.; Wan, F.; Chen, X. EEG-based Emotion Recognition via Channel-wise Attention and Self Attention. IEEE Trans. Affect. Comput. 2020, 14, 382–393.

30. Yin, Y.; Zheng, X.; Hu, B.; Zhang, Y.; Cui, X. EEG emotion recognition using fusion model of graph convolutional neural networks and LSTM. Appl. Soft Comput. 2021, 100, 106954.

31. Feng, L.; Cheng, C.; Zhao, M.; Deng, H.; Zhang, Y. EEG-based emotion recognition using spatial-temporal graph convolutional LSTM with attention mechanism. IEEE J. Biomed. Health Inform. 2022, 26, 5406–5417.

32. Moontaha, S.; Schumann, F.E.F.; Arnrich, B. Online learning for wearable eeg-based emotion classification. Sensors 2023, 23, 2387.

33. Li, C.; Wang, B.; Zhang, S.; Liu, Y.; Song, R.; Cheng, J.; Chen, X. Emotion recognition from EEG based on multi-task learning with capsule network and attention mechanism. Comput. Biol. Med. 2022, 143, 105303.

34. Li, D.; Xie, L.; Wang, Z.; Yang, H. Brain emotion perception inspired eeg emotion recognition with deep reinforcement learning. IEEE Trans. Neural Netw. Learn. Syst. 2023, 1–14.

35. Yang, Y.; Wu, Q.; Qiu, M.; Wang, Y.; Chen, X. Emotion Recognition from Multi-Channel EEG through Parallel Convolutional Recurrent Neural Network. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–7.

36. Poldrack, R.A. Mapping Mental Function to Brain Structure: How Can Cognitive Neuroimaging Succeed? Perspect. Psychol. Sci. 2010, 5, 753–761.

37. Pessoa, L. On the relationship between emotion and cognition. Nat. Rev. Neurosci. 2008, 9, 148–158.

38. Scarantino, A. Functional specialization does not require a one-to-one mapping between brain regions and emotions. Behav. Brain Sci. 2012, 35, 161–162.

39. Vytal, K.; Hamann, S. Neuroimaging Support for Discrete Neural Correlates of Basic Emotions: A Voxel-based Meta-analysis. J. Cogn. Neurosci. 2010, 22, 2864–2885.

40. Fornito, A.; Zalesky, A.; Breakspear, M. Graph analysis of the human connectome: Promise, progress, and pitfalls. NeuroImage 2013, 80, 426–444.

41. Bullmore, E.; Sporns, O. Complex brain networks: Graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 2009, 10, 186–198.

42. Fair, D.A.; Cohen, A.L.; Power, J.D.; Dosenbach, N.U.F.; Church, J.A.; Miezin, F.M.; Schlaggar, B.L.; Petersen, S.E. Functional Brain Networks Develop from a “Local to Distributed” Organization. PLoS Comput. Biol. 2009, 5, e1000381.

43. Shuman, D.I.; Narang, S.K.; Frossard, P.; Ortega, A.; Vandergheynst, P. The emerging field of signal processing on graphs: Extending high-dimensional data analysis to networks and other irregular domains. IEEE Signal Process. Mag. 2013, 30, 83–98.

44. Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. In Proceedings of the NIPS’16: Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29.

45. Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis; Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

46. Katsigiannis, S.; Ramzan, N. DREAMER: A Database for Emotion Recognition Through EEG and ECG Signals From Wireless Low-cost Off-the-Shelf Devices. IEEE J. Biomed. Health Inform. 2018, 22, 98–107.

47. Cui, H.; Liu, A.; Zhang, X.; Chen, X.; Wang, K.; Chen, X. EEG-based emotion recognition using an end-to-end regional-asymmetric convolutional neural network. Knowl.-Based Syst. 2020, 205, 106243.

48. Duan, R.N.; Zhu, J.Y.; Lu, B.L. Differential entropy feature for EEG-based emotion classification. In Proceedings of the 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER), San Diego, CA, USA, 6–8 November 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 81–84.

49. Zheng, W.L.; Lu, B.L. Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans. Auton. Ment. Dev. 2015, 7, 162–175.

50. Ribas, G.C. The cerebral sulci and gyri. Neurosurg. Focus 2010, 28, E2.

51. Klem, G.; Lüders, H.; Jasper, H.; Elger, C. The ten-twenty electrode system of the International Federation. The International Federation of Clinical Neurophysiology. Electroencephalogr. Clin. Neurophysiol. Suppl. 1999, 52, 3–6.

52. Dimond, S.J.; Farrington, L.; Johnson, P. Differing emotional response from right and left hemispheres. Nature 1976, 261, 690–692.

53. Davidson, R.J.; Ekman, P.; Saron, C.D.; Senulis, J.A.; Friesen, W.V. Approach-withdrawal and cerebral asymmetry: Emotional expression and brain physiology: I. J. Personal. Soc. Psychol. 1990, 58, 330–341.

| Region No. | MLP | CNN | GCN |
|---|---|---|---|
| DEAP | | | |
| 1. | 94.11 | 93.58 | 94.13 |
| 2. | 97.48 | 97.64 | 98.65 |
| 3. | 97.05 | 97.54 | 98.01 |
| Dreamer | | | |
| 1. | 94.25 | 94.00 | 94.29 |
| 2. | 99.12 | 99.09 | 99.15 |
| 3. | 97.11 | 96.94 | 97.03 |

| Method | Feature | Valence | Arousal | Dominance |
|---|---|---|---|---|
| DT | DE | 67.52/4.79 | 69.59/6.09 | 69.96/9.68 |
| SVM | DE | 71.05/6.11 | 72.18/6.89 | 71.85/8.38 |
| CNN-RNN | raw signal | 89.92/2.96 | 90.81/2.94 | 90.90/3.01 |
| ACRNN | raw signal | 93.72/3.21 | 93.38/3.73 | - |
| DGCNN | DE | 92.55/3.53 | 93.50/3.93 | 93.50/3.69 |
| RACNN | raw signal | 96.65/2.65 | 97.11/2.01 | - |
| MTCA-CapsNet | raw signal | 97.24/1.58 | 97.41/1.47 | 98.35/1.28 |
| RGNet-MLP | raw signal | 98.09/1.66 | 96.99/2.98 | 97.36/2.58 |
| RGNet-CNN | raw signal | 98.21/1.55 | 97.21/2.69 | 97.49/2.35 |
| RGNet-GCN | raw signal | 98.61/1.24 | 98.63/1.26 | 98.71/1.07 |

| Method | Feature | Valence | Arousal | Dominance |
|---|---|---|---|---|
| DT | DE | 76.39/6.69 | 76.62/6.91 | 76.59/6.27 |
| SVM | DE | 83.36/5.21 | 82.58/5.41 | 82.71/5.30 |
| CNN-RNN | raw signal | 79.93/6.65 | 81.48/6.33 | 80.94/5.66 |
| ACRNN | raw signal | 97.93/1.73 | 97.98/1.92 | 98.23/1.42 |
| DGCNN | DE | 89.59/5.13 | 88.93/3.93 | 88.64/5.13 |
| RACNN | raw signal | 96.65/2.18 | 97.01/2.74 | - |
| MTCA-CapsNet | raw signal | 94.96/3.60 | 95.54/3.63 | 95.52/3.78 |
| RGNet-MLP | DE | 99.16/0.75 | 99.00/1.25 | 99.20/0.72 |
| RGNet-CNN | DE | 99.13/0.82 | 98.97/1.27 | 99.18/0.78 |
| RGNet-GCN | DE | 99.17/0.85 | 99.06/1.29 | 99.23/0.89 |

| | Precision | Recall | F1-Score |
|---|---|---|---|
| Valence | 98.65 ± 1.17 | 98.72 ± 1.61 | 98.67 ± 1.31 |
| Arousal | 98.64 ± 1.33 | 98.61 ± 1.98 | 98.60 ± 1.57 |
| Dominance | 98.76 ± 1.12 | 98.85 ± 1.50 | 98.78 ± 1.19 |

| | Precision | Recall | F1-Score |
|---|---|---|---|
| Valence | 99.05 ± 0.99 | 98.93 ± 1.11 | 98.99 ± 1.03 |
| Arousal | 99.06 ± 1.36 | 98.86 ± 1.59 | 98.96 ± 1.29 |
| Dominance | 99.19 ± 0.90 | 99.35 ± 0.73 | 99.27 ± 0.80 |

| | Precision | Recall | F1-Score |
|---|---|---|---|
| Positive | 97.84 ± 2.70 | 98.28 ± 1.53 | 97.90 ± 1.97 |
| Neutral | 95.72 ± 3.30 | 94.18 ± 4.55 | 94.58 ± 3.95 |
| Positive | 94.57 ± 6.14 | 88.50 ± 9.40 | 90.31 ± 8.19 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fan, Z.; Chen, F.; Xia, X.; Liu, Y. EEG Emotion Classification Based on Graph Convolutional Network. Appl. Sci. 2024, 14, 726. https://doi.org/10.3390/app14020726
