Research on Intrusion Detection Based on an Enhanced Random Forest Algorithm

Abstract

1. Introduction

2. Research Method

2.1. Data-Level Improvement

2.2. The Random Forest Algorithm

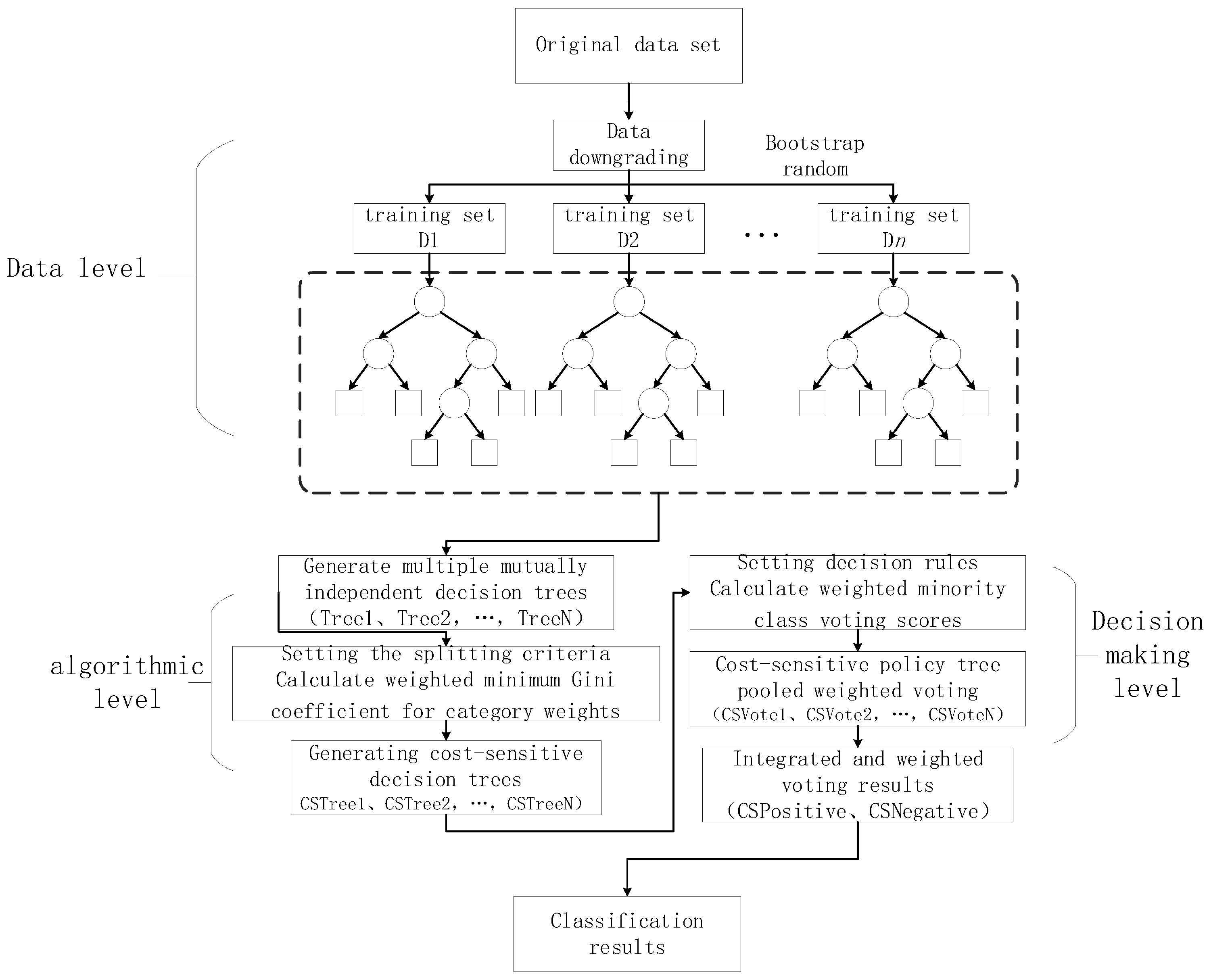

3. Cost-Sensitive Random Forest Algorithm

3.1. Introduction to Intrusion Detection

3.2. Algorithmic Enhancement

3.3. Decision-Level Enhancement

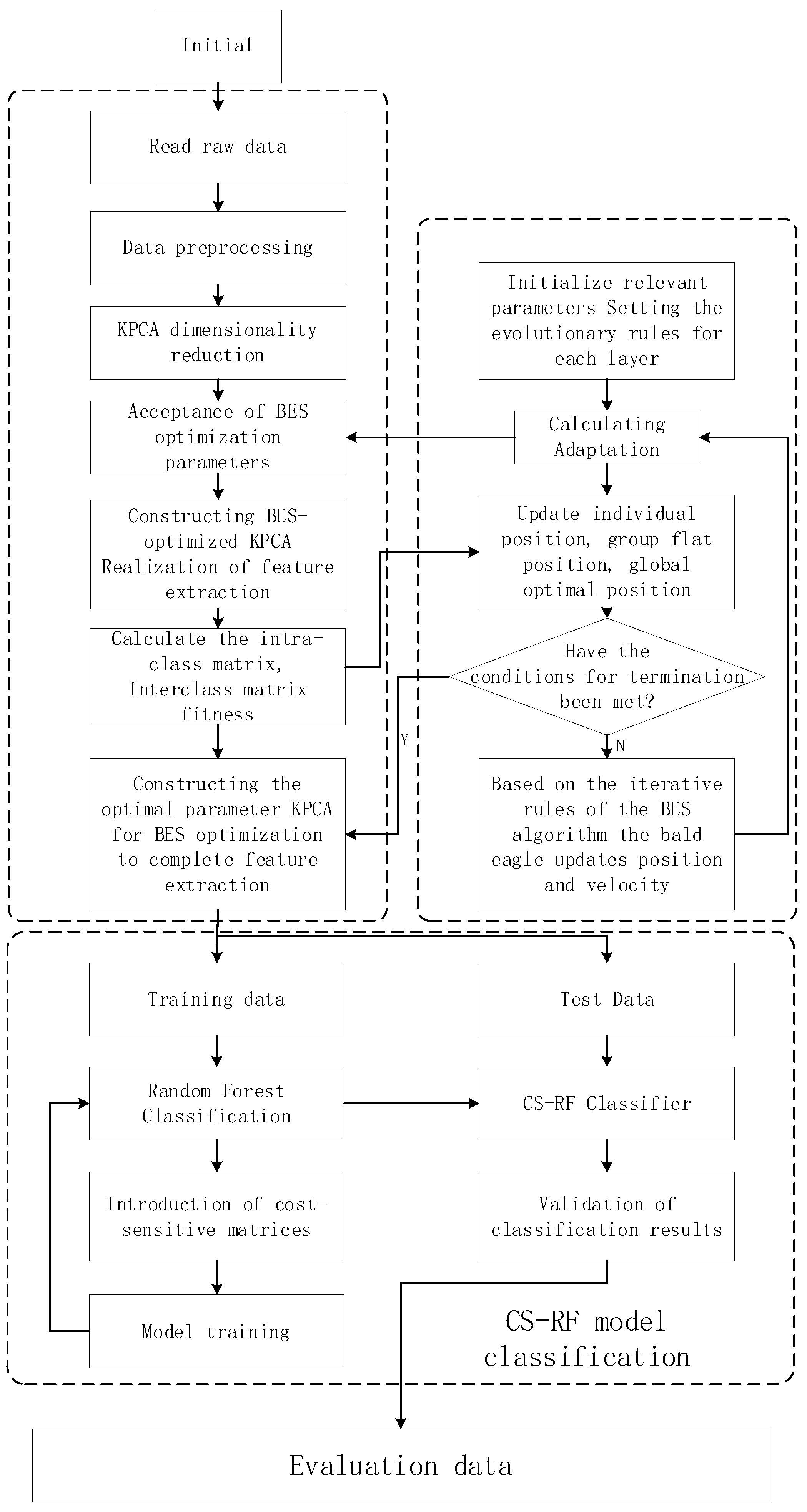

3.4. Enhanced Intrusion-Detection Algorithm Workflow

- (1) Data Preprocessing: The original dataset is preprocessed. Categorical features are one-hot encoded, which converts them into numerical features the model can process. The dataset is then normalized to reduce large differences in scale between feature values.

- (2) Data Dimensionality Reduction: The algorithm computes between-class and within-class distances to construct a fitness function. The Bald Eagle Search (BES) algorithm uses this fitness function to optimize the KPCA parameters. The optimal parameters are then used in the B-KPCA algorithm to reduce the dimensionality of the intrusion-detection dataset, producing a new feature subset.

- (3) Construction of the Cost-Sensitive Random Forest Model: Cost matrices are introduced and applied both to the Gini function of the base classifiers and to the voting step that combines the decision trees' predictions. The model is trained with these costs in place.

- (4) Model Validation: The trained model is tested on a testing dataset. Multiple metrics are used to evaluate and validate its classification performance.
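Step (3) can be illustrated with a minimal Python sketch. It assumes one common formulation of cost-sensitive learning: a Gini impurity in which each pair of classes is weighted by its misclassification cost, and a vote that picks the class with the lowest expected cost. The cost values, the `COST` dictionary, and both function names are hypothetical and chosen only for illustration; the formulation used in the paper may differ.

```python
from collections import Counter

# Hypothetical cost matrix: COST[actual][predicted] is the penalty for
# predicting `predicted` when the true class is `actual`. The values are
# illustrative assumptions, not the ones used in the paper.
COST = {
    "normal": {"normal": 0.0, "attack": 1.0},
    "attack": {"normal": 5.0, "attack": 0.0},
}


def cost_sensitive_gini(labels, cost=COST):
    """Cost-weighted Gini impurity: sum over i != j of cost[i][j] * p_i * p_j."""
    n = len(labels)
    if n == 0:
        return 0.0
    probs = {cls: count / n for cls, count in Counter(labels).items()}
    return sum(
        cost[i][j] * p_i * p_j
        for i, p_i in probs.items()
        for j, p_j in probs.items()
        if i != j
    )


def cost_sensitive_vote(tree_predictions, cost=COST):
    """Return the class with the lowest expected cost given the trees' votes."""
    n = len(tree_predictions)
    vote_share = {cls: count / n for cls, count in Counter(tree_predictions).items()}

    def expected_cost(predicted):
        # Treat each vote share as the estimated probability of that true class.
        return sum(share * cost[actual][predicted] for actual, share in vote_share.items())

    return min(cost, key=expected_cost)


# A node holding 8 normal and 2 attack samples.
node_labels = ["normal"] * 8 + ["attack"] * 2
print(cost_sensitive_gini(node_labels))

# Seven trees vote "normal" and three vote "attack".
votes = ["normal"] * 7 + ["attack"] * 3
print(cost_sensitive_vote(votes))
```

With these illustrative costs, the vote returns "attack" even though most trees voted "normal", because missing an attack is penalized five times more heavily than a false alarm. This is the kind of shift toward the minority class that a cost matrix is meant to produce.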

4. Experimental Verification and Result Analysis

4.1. Experimental Setup

- (1) Experimental environment setting

- (2) Dataset selection

- (3) Selection of evaluation metrics

4.2. Dataset Preprocessing

- (1) One-hot encoding of character-based features:

- (2) Feature data normalization:
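The two preprocessing steps can be sketched in plain Python as follows. The sketch assumes min-max scaling to [0, 1] as the normalization method, which is a common choice but is an assumption here; the function names and the sample protocol and duration values are hypothetical.

```python
def one_hot_encode(values):
    """Map each categorical value to a 0/1 vector with one slot per category."""
    categories = sorted(set(values))
    index = {cat: i for i, cat in enumerate(categories)}
    encoded = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        encoded.append(row)
    return categories, encoded


def min_max_normalize(column):
    """Rescale a numeric column to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(column), max(column)
    if hi == lo:
        return [0.0 for _ in column]
    return [(x - lo) / (hi - lo) for x in column]


# Hypothetical sample values for one categorical and one numeric feature.
protocols = ["tcp", "udp", "tcp", "icmp"]
durations = [0.0, 2.5, 5.0, 10.0]

categories, encoded = one_hot_encode(protocols)
print(categories)   # ['icmp', 'tcp', 'udp']
print(encoded)      # [[0, 1, 0], [0, 0, 1], [0, 1, 0], [1, 0, 0]]
print(min_max_normalize(durations))  # [0.0, 0.25, 0.5, 1.0]
```

Each categorical feature expands into as many columns as it has distinct values, which is one reason the dimensionality-reduction step that follows matters for this kind of data.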

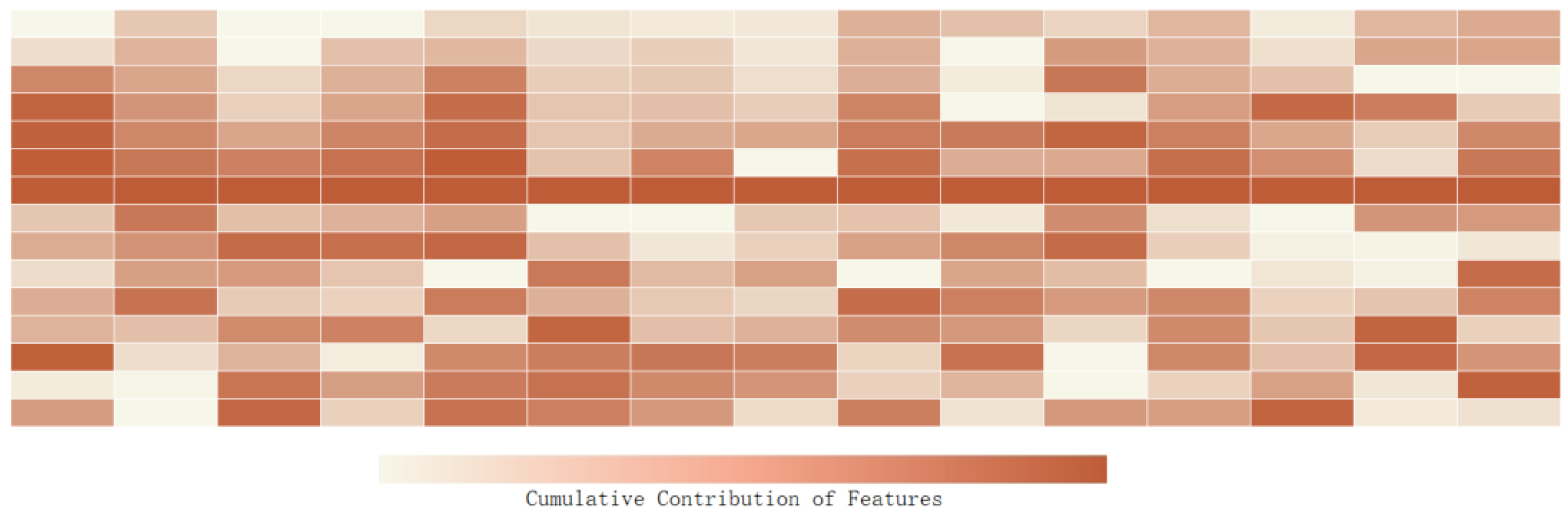

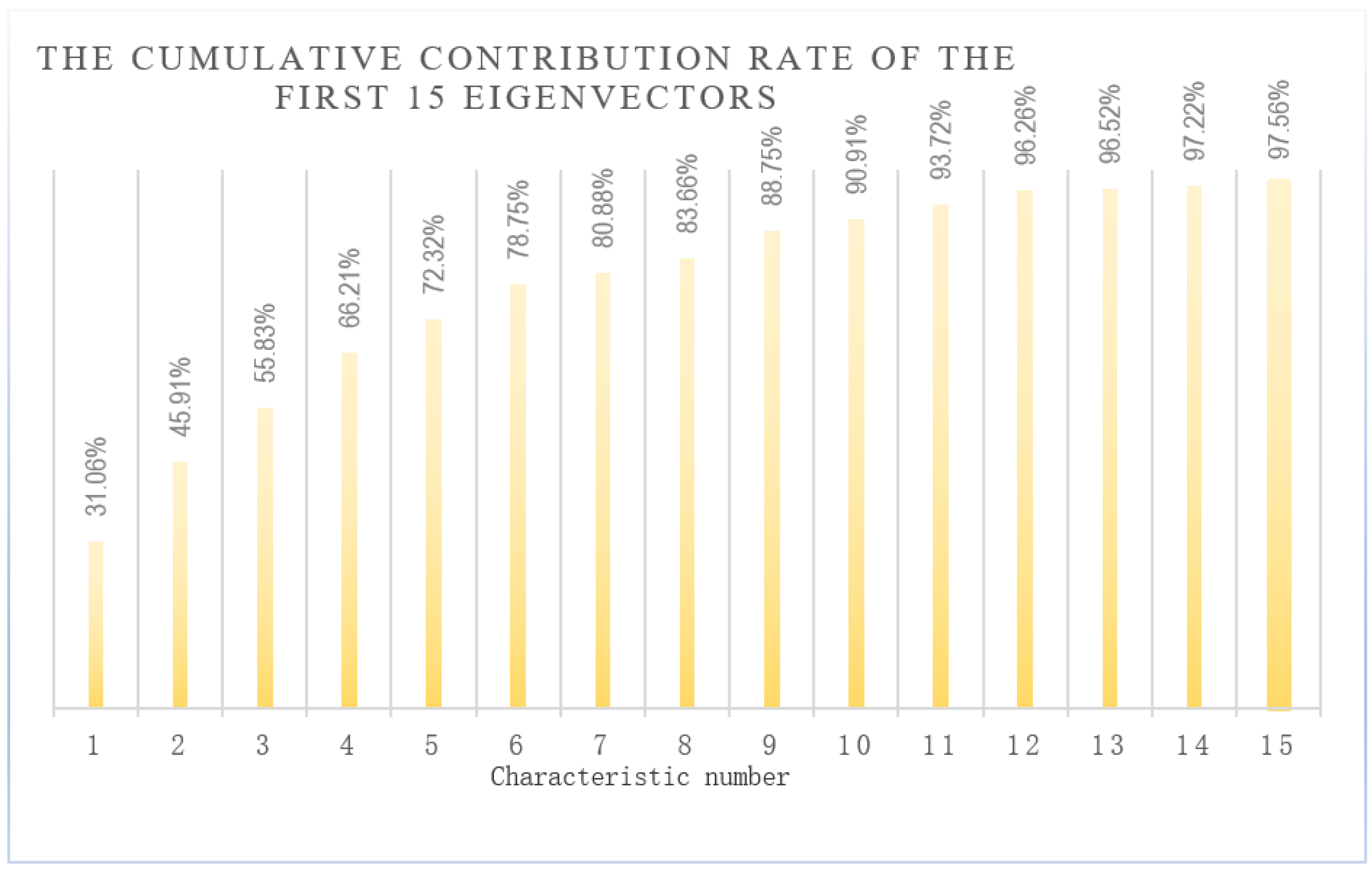

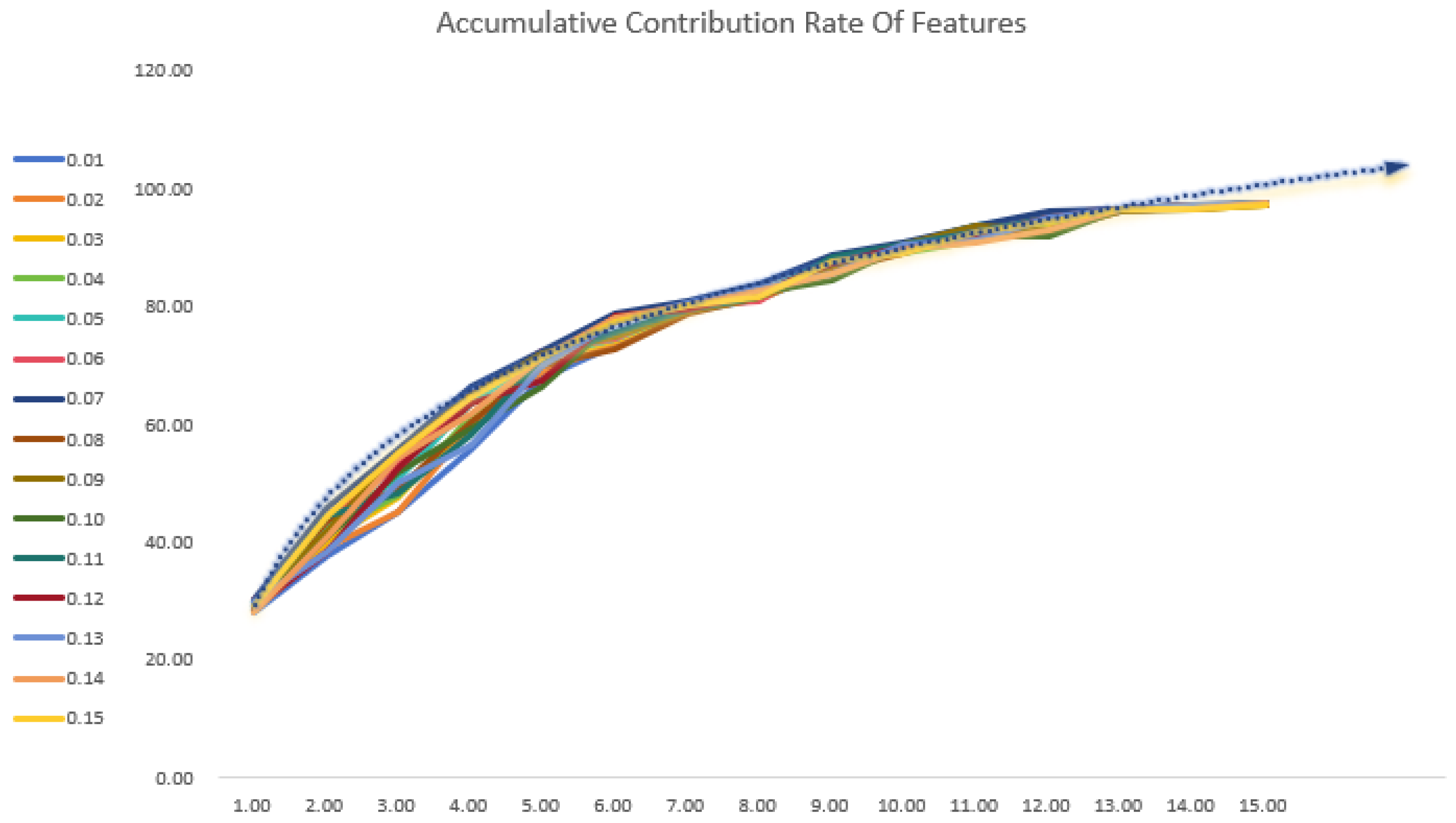

4.3. Dimension Reduction of Feature Data

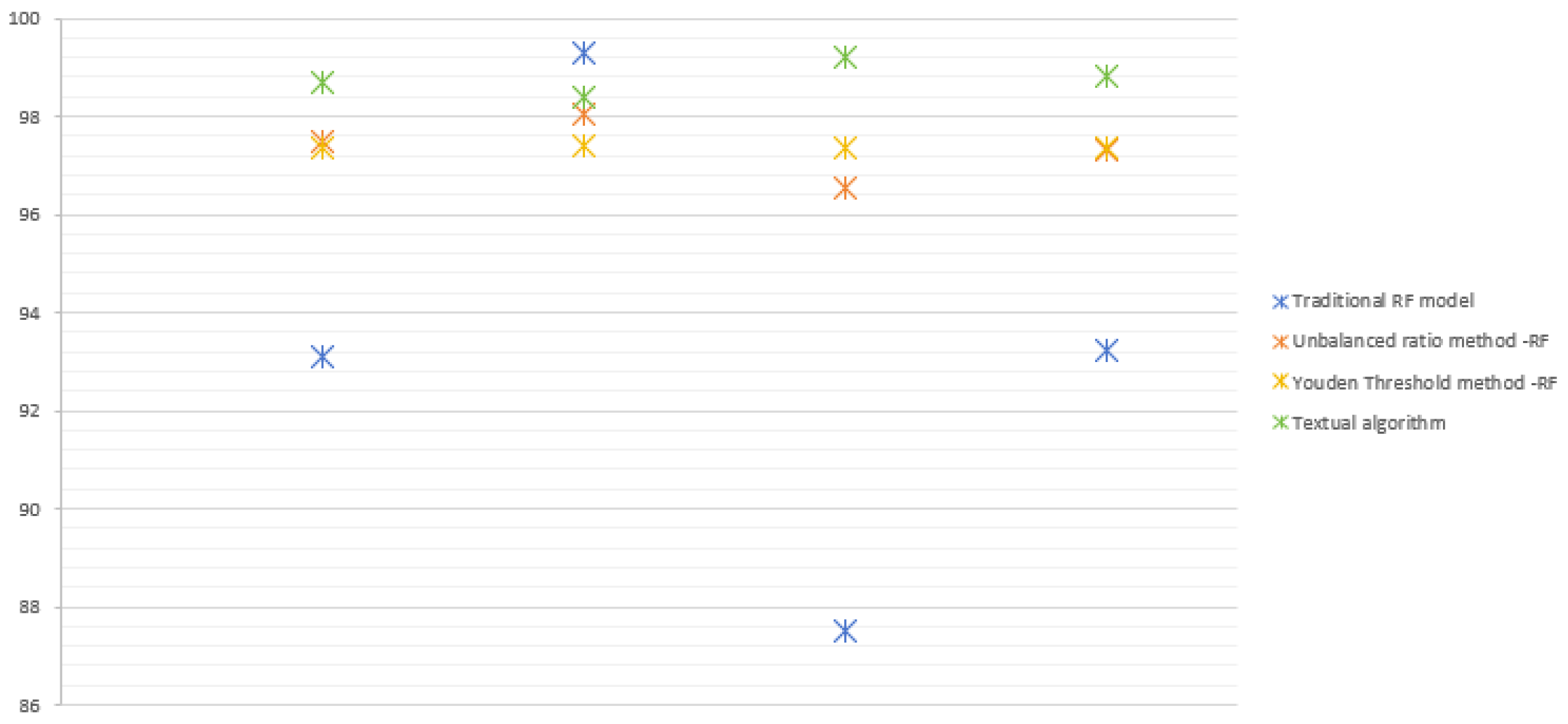

4.4. Experiment and Result Analysis

5. Conclusions

- (1) This paper proposes an improved intrusion-detection model based on the Random Forest algorithm. It addresses the high computational complexity, heavy storage consumption, and low classification accuracy that the traditional Random Forest suffers from on intrusion-detection data, which is high-dimensional and class-imbalanced. The model uses KPCA optimized by the Bald Eagle Search (BES) algorithm to reduce data dimensionality and introduces a cost-sensitive learning method into the Random Forest. Experiments verify that the proposed method outperforms the traditional method: it detects minority-class samples efficiently and in less time while maintaining a higher accuracy rate.

- (2) The experiments show that the proposed method performs better than traditional methods. In terms of data dimensionality reduction, the B-KPCA algorithm takes only 3.69 s longer than the PCA algorithm and is 20.68 s and 16.58 s faster than the ISOMAP and LLE algorithms, respectively, while improving accuracy by 7.04, 9.83, and 3.98 percentage points over PCA, ISOMAP, and LLE. Considering accuracy and running time together, the B-KPCA algorithm performs best. Moreover, compared with the traditional RF model, the imbalanced proportion method-RF, and the Youden threshold method-RF, the proposed model improves accuracy by 5.59, 1.23, and 1.33 percentage points, specificity by 11.70, 2.66, and 1.87 percentage points, and G-mean by 0.0558, 0.0150, and 0.0144, respectively. Training time falls by roughly half (12.57 s versus 23.89 s, 27.46 s, and 25.92 s), and testing time falls by more than 70% (0.25 s versus 0.87 s, 1.24 s, and 1.28 s). Taken together, these results indicate that the proposed model recognizes minority-class samples more accurately.

- (3) The experiments also revealed some limitations. The evaluation was based on publicly available datasets, and the model was not tested in a real-world environment. Future work will therefore build on the laboratory evaluation by testing and validating the model in an actual real-time environment, which will provide more realistic contexts and data for assessing its reliability and practicality.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


UNSW-NB15 Testing and Training Sets

| Category | Training Set | Testing Set |
|---|---|---|
| Normal | 56,000 | 37,000 |
| Analysis | 2000 | 677 |
| Backdoor | 1746 | 583 |
| DoS | 12,264 | 4089 |
| Exploits | 33,393 | 11,132 |
| Fuzzers | 18,184 | 6062 |
| Generic | 40,000 | 18,871 |
| Reconnaissance | 10,491 | 3496 |
| Shellcode | 1133 | 378 |
| Worms | 130 | 44 |
| Total | 175,341 | 82,332 |

| Actual Status | Judged as an Attack | Judged as Normal |
|---|---|---|
| Attack traffic | TP | FN |
| Normal traffic | FP | TN |
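The four counts combine into the evaluation metrics reported in the results (Acc, Sen, Spe, and G-Mean). The sketch below assumes the standard definitions with attack traffic as the positive class, so that TP is an attack judged as an attack, FN an attack judged as normal, FP normal traffic judged as an attack, and TN normal traffic judged as normal. The function name and the counts in the usage example are hypothetical.

```python
import math


def evaluate(tp, fn, fp, tn):
    """Compute accuracy, sensitivity, specificity, and G-mean from confusion counts.

    Attack traffic is the positive class:
    tp = attacks judged as attacks, fn = attacks judged as normal,
    fp = normal traffic judged as attacks, tn = normal traffic judged as normal.
    """
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    sensitivity = tp / (tp + fn)   # share of attacks that are detected
    specificity = tn / (tn + fp)   # share of normal traffic that is passed
    g_mean = math.sqrt(sensitivity * specificity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "g_mean": g_mean,
    }


# Hypothetical counts, for illustration only.
metrics = evaluate(tp=90, fn=10, fp=20, tn=80)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

The G-mean definition used here is consistent with the reported results: for the traditional RF model, a sensitivity of 99.30% and a specificity of 87.51% give sqrt(0.9930 × 0.8751) ≈ 0.9322, which matches the tabulated G-mean.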

| Metric | B-KPCA | PCA | ISOMAP | LLE |
|---|---|---|---|---|
| Accuracy after dimensionality reduction (%) | 97.25 | 90.21 | 87.42 | 93.27 |
| Dimensionality reduction time (s) | 9.16 | 5.47 | 29.84 | 25.74 |

| Algorithm | Acc (%) | Sen (%) | Spe (%) | G-Mean | Training Time (s) | Testing Time (s) |
|---|---|---|---|---|---|---|
| Traditional RF model | 93.11 | 99.30 | 87.51 | 0.9322 | 23.89 | 0.87 |
| Imbalanced proportion method-RF | 97.47 | 98.05 | 96.55 | 0.9730 | 27.46 | 1.24 |
| Youden threshold method-RF | 97.37 | 97.39 | 97.34 | 0.9736 | 25.92 | 1.28 |
| Proposed algorithm | 98.70 | 98.39 | 99.21 | 0.9880 | 12.57 | 0.25 |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lu, C.; Cao, Y.; Wang, Z. Research on Intrusion Detection Based on an Enhanced Random Forest Algorithm. Appl. Sci. 2024, 14, 714. https://doi.org/10.3390/app14020714
