Hybrid Clustering Algorithm Based on Improved Density Peak Clustering

Abstract

1. Introduction

- In many of the existing DPC-related literature studies, such as the work by Rodriguez and Laio (2014) [7], as well as by Du and Wang (2021) [8], density estimation is typically performed using a fixed cut-off distance approach. However, this method raises concerns regarding the reliability of density estimation, particularly when dealing with small-scale datasets. Hence, in this study, we depart from relying on the parameter dc within the DPC algorithm and instead employ the mean-shift algorithm to locate local density peaks. This strategic shift ensures that our algorithm is no longer influenced by the parameter dc, thus facilitating more accurate identification of core objects. By utilizing this approach to locate density peaks, we can precisely and efficiently identify cluster cores and even define the number of clusters as needed. This method significantly enhances the reliability and applicability of the clustering algorithm.

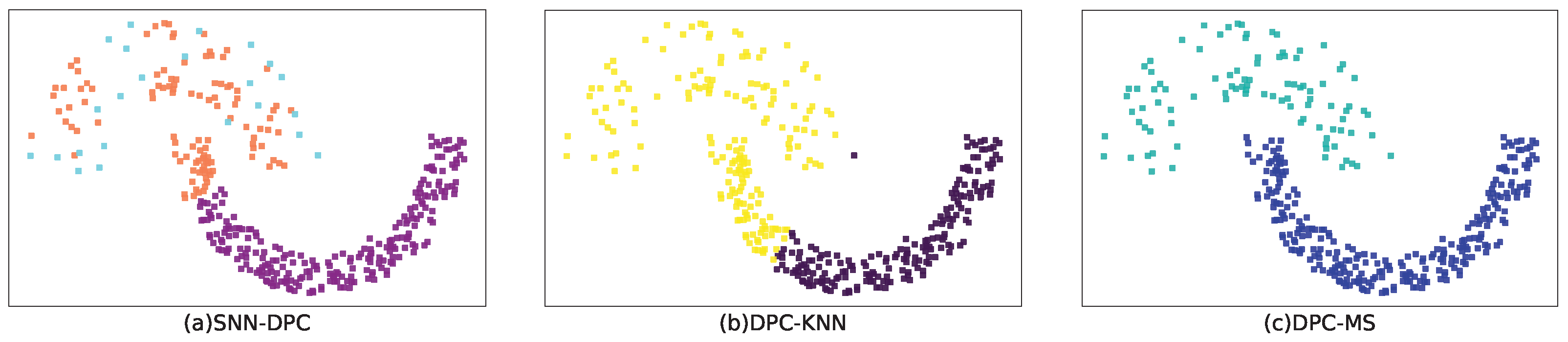

- The DPC algorithm is sensitive to parameter selection, requiring users to specify a neighborhood distance threshold. This threshold is crucial in determining both the number of peak density points and the quality of clustering results. In many existing DPC methods, such as those described by Lotfi et al. (2019) [9], commonly used distance metrics like the Euclidean distance are typically employed to assign labels to each data instance based on their proximity to the nearest cluster core. However, this label propagation method is often limited to spherical clusters. This paper introduces a novel adaptive distance metric approach aimed at identifying not only spherical clusters but also clusters of various shapes and densities. The core concept of this adaptive distance measurement involves automatically adjusting distances based on the data’s density. It accomplishes this by expanding distances between sparse points and contracting distances between dense points, enabling more precise identification of density peaks. Moreover, this approach allows us to discover clusters with complex non-spherical shapes. It enables our algorithm to adapt to clusters of different densities and shapes, thereby enhancing its practical applicability in practical scenarios.

2. Related Works

2.1. Improved Density Peak Clustering Algorithm

2.2. Hybrid Clustering Algorithm

3. Theoretical Basis

3.1. Density Peak Clustering Algorithm

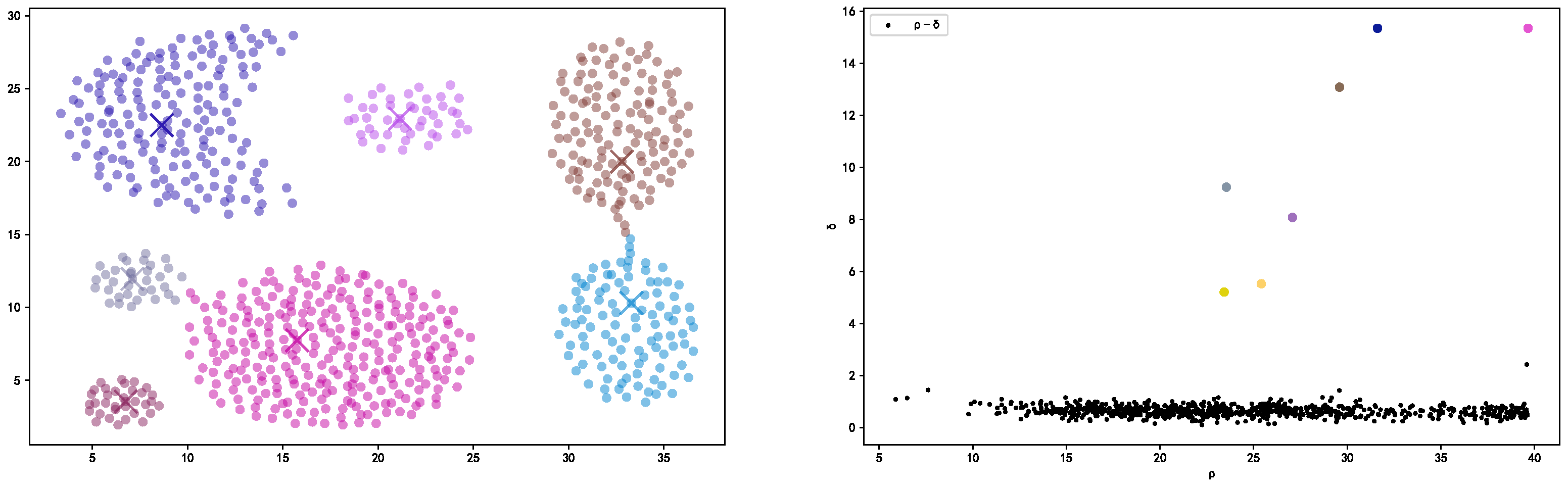

- The algorithm operates based on two underlying assumptions: (1) The local density of the cluster center (density peak point) exceeds that of its neighboring data points within a defined locality. (2) The distance separating the cluster center from other cluster centers is notably substantial. Two critical parameters play a pivotal role in this algorithm: local density () and the distance () between data points and their respective local density extreme points. The definitions are as follows:

3.2. Mean-Shift Algorithm

- For n sample points in within a d-dimensional space, represented as i = 1, …, n, and any point x within this space, the mean-shift vector of that point is defined as follows:

3.3. DPC-MS Clustering Algorithm

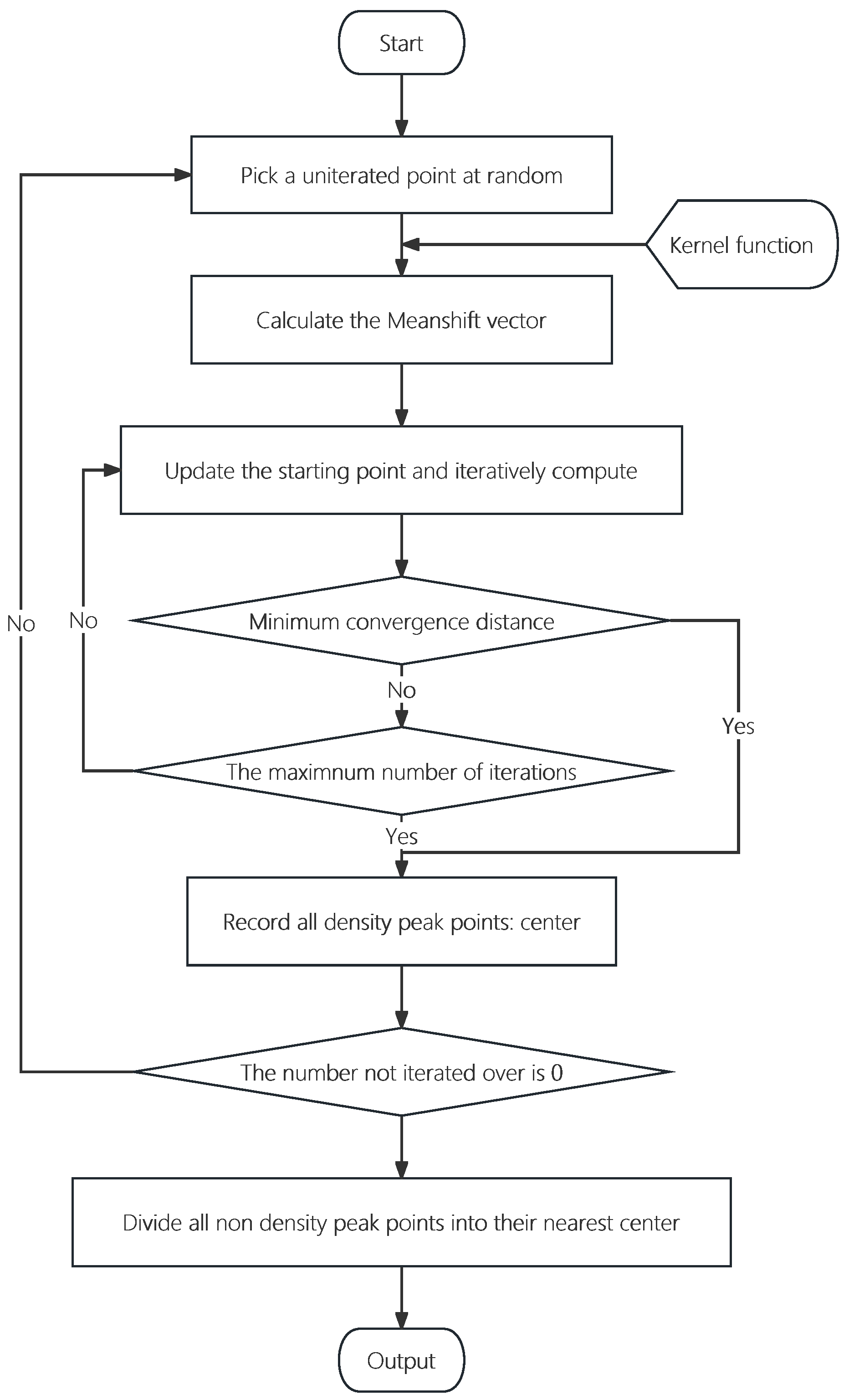

- Randomly select an unmarked data point as the initial center point.

- Utilize a Gaussian kernel function with a dynamic bandwidth: With an initial bandwidth of h = 2, calculate the number of data points with weights exceeding 10% within the range of the kernel function centered on the current point, denoted as n.

- Compute the weight vectors for all data points falling within the range of the Gaussian kernel function centered on the current point. Aggregate these weight vectors to obtain the shift vector and label the corresponding data points.

- Update the center position by moving it in the direction of the shift vector by an amount proportional to its magnitude: .

- Repeat steps 2, 3, and 4 until the magnitude of the shift vector becomes very small (indicating convergence), and mark the current as a point.

- Iterate steps 1 through 5 until all data points have been marked as accessed, and record all points.

- Calculate the adaptive distance from each data point to each point and assign it to the closest ’s class.

- Compute the weighted Euclidean distance between each pair of points. If this distance is less than a predefined threshold, merge the two classes, and merge the data points within these classes. The class of the current point set is determined based on which class has a larger number of n in its neighborhood.

| Algorithm 1 Algorithm of DPC-MS. |

|

4. Experiments

4.1. Datasets

4.2. Evaluation Metrics

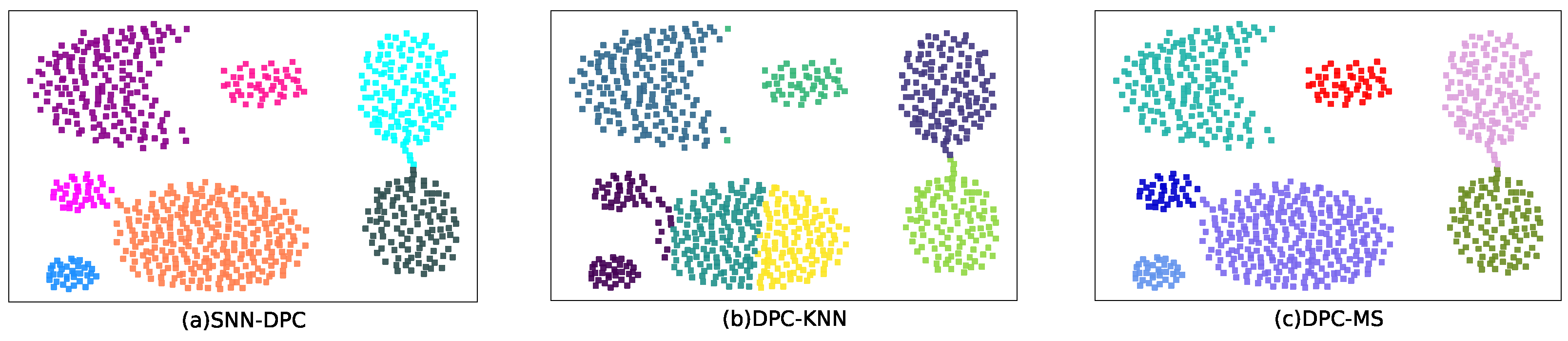

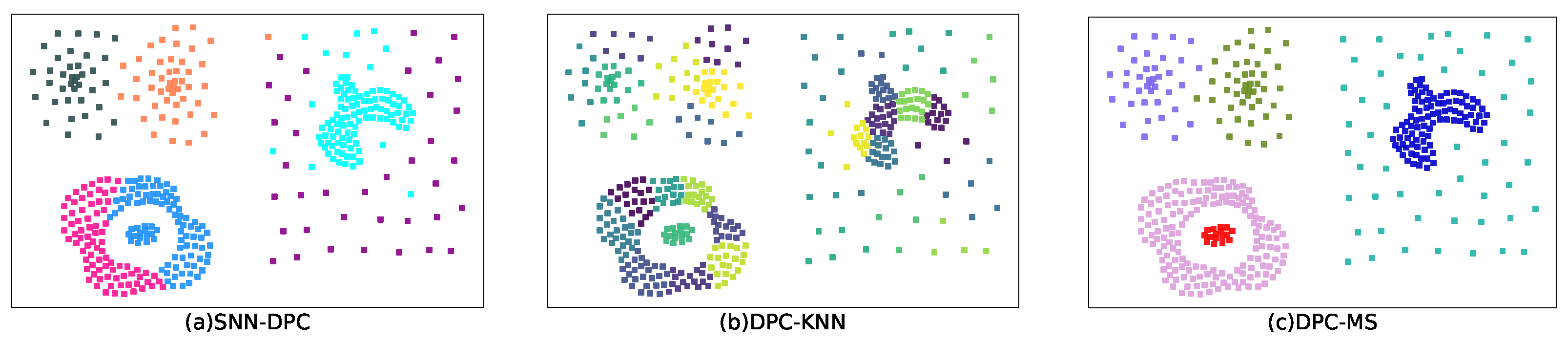

4.3. Results

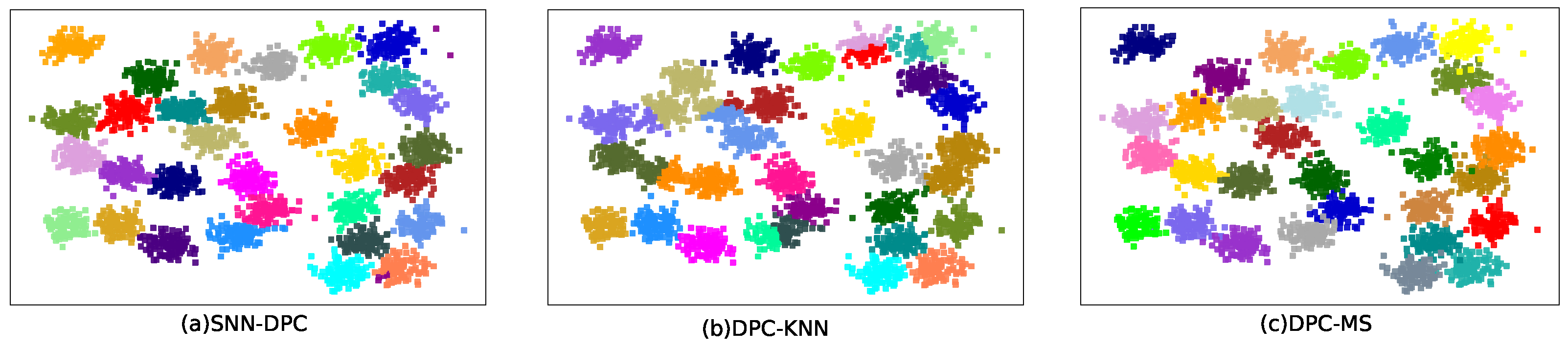

Results on Public Datasets

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A density-based algorithm for discovering clusters in large spatial databases with noise. kdd 1996, 96, 226–231. [Google Scholar]

- Chen, T.Q.; Murphey, Y.L.; Karlsen, R.; Gerhart, G. Color Image Segmentation in Color and Spatial Domain. In Proceedings of the Developments in Applied Artificial Intelligence: 16th International Conference on Industrial and Engineering Applications of Artificial Intelligence and Expert Systems, IEA/AIE 2003, Loughborough, UK, 23–26 June 2003; pp. 72–82. [Google Scholar]

- Huang, L.; Wang, G.; Wang, Y.; Pang, W.; Ma, Q. A link density clustering algorithm based on automatically selecting density peaks for overlapping community detection. Int. J. Mod. Phys. B 2016, 30, 1650167. [Google Scholar] [CrossRef]

- Mehmood, R.; Zhang, G.; Bie, R.; Dawood, H.; Ahmad, H. Clustering by fast search and find of density peaks via heat diffusion. Neurocomputing 2016, 208, 210–217. [Google Scholar] [CrossRef]

- Saini, A.; Saraswat, S.; Faujdar, N. Clustering Based Stock Market Analysis. Int. J. Control. Theory Appl. 2017, 10. [Google Scholar]

- Seyedi, S.A.; Lotfi, A.; Moradi, P.; Qader, N.N. Dynamic graph-based label spread for Density Peaks Clustering. Expert Syst. Appl. 2019, 115, 314–328. [Google Scholar] [CrossRef]

- Rodriguez, A.; Laio, A. Clustering by fast search and find of density peaks. Science 2014, 344, 1492–1496. [Google Scholar] [CrossRef] [PubMed]

- Du, H.; Ni, Y.; Wang, Z. An Improved Algorithm Based on Fast Search and Find of Density Peak Clustering for High-Dimensional Data. Wirel. Commun. Mob. Comput. 2021, 2021, 9977884. [Google Scholar] [CrossRef]

- Lotfi, A.; Seyedi, S.A.; Moradi, P. An improved density peaks method for data clustering. In Proceedings of the International Conference on Computer & Knowledge Engineering, Mashhad, Iran, 20–20 October 2016. [Google Scholar]

- Wang, Z.; Wang, Y. A New Density Peak Clustering Algorithm for Automatically Determining Clustering Centers. In Proceedings of the 2020 International Workshop on Electronic Communication and Artificial Intelligence (IWECAI), Shanghai, China, 12–14 June 2020; pp. 128–134. [Google Scholar]

- Wang, J.; Zhang, Y.; Lan, X. Automatic cluster number selection by finding density peaks. In Proceedings of the 2016 2nd IEEE International Conference on Computer and Communications (ICCC), Chengdu, China, 14–17 October 2016; pp. 13–18. [Google Scholar]

- Hou, J.; Zhang, A. Enhancing Density Peak Clustering via Density Normalization. IEEE Trans. Ind. Inform. 2020, 16, 2477–2485. [Google Scholar] [CrossRef]

- Bie, R.; Mehmood, R.; Ruan, S.; Sun, Y.; Dawood, H. Adaptive fuzzy clustering by fast search and find of density peaks. Pers. Ubiquitous Comput. 2016, 20, 785–793. [Google Scholar] [CrossRef]

- Dan, L.; Cheng, M.X.; Hao, D. A Semi-supervised Density Peak Clustering Algorithm. Geogr. Geo-Inf. Sci. 2017, 32, 69–74. [Google Scholar]

- Liang, Z.; Chen, P. Delta-density based clustering with a divide-and-conquer strategy: 3DC clustering. Pattern Recognit. Lett. 2016, 73, 52–59. [Google Scholar] [CrossRef]

- Xu, J.; Wang, G.; Deng, W. DenPEHC. Inf. Sci. 2016, 373, 200–218. [Google Scholar] [CrossRef]

- Pacifico, L.D.S.; Ludermir, T.B. Hybrid K-Means and Improved Self-Adaptive Particle Swarm Optimization for Data Clustering. In Proceedings of the 2019 International Joint Conference on Neural Networks (IJCNN), Budapest, Hungary, 14–19 July 2019; pp. 1–7. [Google Scholar]

- Drias, H.; Cherif, N.F.; Kechid, A. k-MM: A Hybrid Clustering Algorithm Based on k-Means and k-Medoids. In Advances in Nature and Biologically Inspired Computing. Advances in Intelligent Systems and Computing; Pillay, N., Engelbrecht, A., Abraham, A., du Plessis, M., Snášel, V., Muda, A., Eds.; Springer: Cham, Switzerland, 2016; Volume 419. [Google Scholar]

- Wang, S.; Yabes, J.G.; Chang, C.C.H. Hybrid Density- and Partition-based Clustering Algorithm for Data with Mixed-type Variables. J. Data Sci. 2019, 19, 15–36. [Google Scholar] [CrossRef]

- Xu, L.; Zhao, J.; Yao, Z.; Shi, A.; Chen, Z. Density Peak Clustering Based on Cumulative Nearest Neighbors Degree and Micro Cluster Merging. J. Signal Process. Syst. 2019, 91, 1219–1236. [Google Scholar] [CrossRef]

- Xue, F.; Liu, Y.; Ma, X.; Pathak, B.; Liang, P. A hybrid clustering algorithm based on improved GWO and KHM clustering. J. Intell. Fuzzy Syst. 2022, 42, 3227–3240. [Google Scholar] [CrossRef]

- Liu, R.; Wang, H.; Yu, X. Shared-nearest-neighbor-based clustering by fast search and find of density peaks. Inf. Sci. 2018, 450, 200–226. [Google Scholar] [CrossRef]

- Lichman, M. UCI Machine Learning Repository. University of California, School of Information and Computer Science. 2013. Available online: http://archive.ics.uci.edu/ml (accessed on 6 January 2024).

| Datasets | SNN-DPC | DPC-KNN | DPC-MS |

|---|---|---|---|

| Seeds | 0.8117 | 0.8177 | 0.8469 |

| Thyroid | 0.7395 | 0.6369 | 0.7566 |

| Iris | 0.8543 | 0.8668 | 0.9234 |

| Parkinson | 0.7492 | 0.6495 | 0.7844 |

| Spiral | 0.5003 | 0.5279 | 0.5643 |

| Diabetes | 0.6127 | 0.6016 | 0.6573 |

| E. coli | 0.7256 | 0.7858 | 0.7814 |

| Path-based | 0.7581 | 0.6653 | 0.8356 |

| Datasets | SNN-DPC | DPC-KNN | DPC-MS |

|---|---|---|---|

| Seeds | 0.8793 | 0.8795 | 0.8932 |

| Thyroid | 0.7692 | 0.6064 | 0.7383 |

| Iris | 0.8967 | 0.9124 | 0.9542 |

| Parkinson | 0.6269 | 0.5929 | 0.6940 |

| Spiral | 0.5376 | 0.5222 | 0.5674 |

| Diabetes | 0.5498 | 0.5830 | 0.5923 |

| E. coli | 0.8462 | 0.8681 | 0.8683 |

| Path-based | 0.7478 | 0.6507 | 0.8175 |

| Datasets | SNN-DPC | DPC-KNN | DPC-MS |

|---|---|---|---|

| Seeds | 0.7109 | 0.7277 | 0.7646 |

| Thyroid | 0.5790 | 0.2077 | 0.4582 |

| Iris | 0.7746 | 0.8015 | 0.9133 |

| Parkinson | 0.2248 | 0.1713 | 0.2586 |

| Spiral | 0.0064 | 0.0443 | 0.5643 |

| Diabetes | 0.0804 | 0.1659 | 0.1795 |

| E. coli | 0.5059 | 0.6925 | 0.6961 |

| Path-based | 0.4692 | 0.3003 | 0.6308 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Guo, L.; Qin, W.; Cai, Z.; Su, X. Hybrid Clustering Algorithm Based on Improved Density Peak Clustering. Appl. Sci. 2024, 14, 715. https://doi.org/10.3390/app14020715

Guo L, Qin W, Cai Z, Su X. Hybrid Clustering Algorithm Based on Improved Density Peak Clustering. Applied Sciences. 2024; 14(2):715. https://doi.org/10.3390/app14020715

Chicago/Turabian StyleGuo, Limin, Weijia Qin, Zhi Cai, and Xing Su. 2024. "Hybrid Clustering Algorithm Based on Improved Density Peak Clustering" Applied Sciences 14, no. 2: 715. https://doi.org/10.3390/app14020715

APA StyleGuo, L., Qin, W., Cai, Z., & Su, X. (2024). Hybrid Clustering Algorithm Based on Improved Density Peak Clustering. Applied Sciences, 14(2), 715. https://doi.org/10.3390/app14020715