1. Introduction

The increasing prevalence of high-speed wireless networks, smart mobile devices, and digital services has led to a connected and data-centric society, driving innovation and economic growth across various industries. This digital transformation poses complex challenges such as low-latency data processing, efficient bandwidth utilization, and improved service quality. In response, Multi-Access Edge Computing (MEC) systems have garnered significant attention. MEC systems deploy numerous low-capacity MEC servers (MECSs) at the network edge and use their computing and storage resources to provide low-latency services. This is crucial for applications such as autonomous vehicles, augmented reality, real-time gaming, and healthcare. MEC systems also manage network bandwidth efficiently and reduce congestion by processing data at the edge before transmitting it to central data centers. However, the benefits of an MEC system do not come without costs. As the service area of an MEC system expands, the required number of MECSs increases. The larger number of MECSs leads to higher energy consumption and, in turn, higher operational expenditure for the MEC system. In addition, networks and data centers are expected to account for 59.8% of the CO₂ emissions in the information and communications technology sector by 2030 [1]. Therefore, it is essential to increase the energy efficiency of an MEC system to reduce the operational cost and to address environmental concerns.

An MECS receives tasks offloaded from users, processes them, and transmits the results back. Consequently, the energy consumed within an MEC system is categorized into the communication energy and the computing energy of MECSs. In the task-offloading process, a user device incurs transmission power consumption. Given the typically small size of service results, the downlink transmission power of an MECS is often assumed to be negligible. However, if an MECS does not host the service needed to process a requested task, the task must be migrated to a cloud server or another MECS, and this migration constitutes a significant portion of the communication energy of an MECS. To mitigate the energy impact of task migration, various service caching methods have been proposed [2,3,4]. Since communication energy can be minimized by appropriate service caching methods, this paper concentrates on the computing energy consumption. Because an MEC system involves complex interactions among diverse elements, many approaches have been proposed to reduce computing energy consumption across various facets of MEC systems. For instance, the energy consumed in an MEC system can be reduced via an optimal offloading decision [5], resource management [6], network selection [7], and dynamic voltage and frequency scaling [8]. These methods presuppose that all MECSs within the system are in the active mode, overlooking the temporal and spatial variations in the tasks offloaded to each MECS. Generally, the workload imposed on an MEC system is unevenly distributed across MECSs. Thus, when the total system load is below the combined capacity of all MECSs, some MECSs may remain lightly loaded or idle. However, an MECS consumes a substantial amount of energy even in the idle state [9]. Therefore, the total energy consumption of an MEC system can be reduced by placing unnecessary MECSs into a sleep state. However, since only active MECSs process loads in an MEC system, the service delay increases with the number of MECSs in the sleep state. Consequently, determining the operation mode of each MECS based on the workload distribution becomes crucial for effectively reducing energy consumption while maintaining a reasonable service quality.

Various MECS sleep control methods have been proposed to balance two conflicting performance metrics: the amount of energy consumed and the service latency delivered by an MEC system. Threshold methods [10,11], commonly employed in determining the operational mode of an MECS, transition an MECS into sleep mode when its load falls below a predefined threshold. This approach, wherein each MECS decides independently based on its own workload, is straightforward to implement. However, because the load is unevenly distributed across MECSs at each time step, system-wide energy efficiency can only be optimized by considering the load distribution as a whole. To address this, cooperative sleep decision methods have been proposed [9,12], where MECSs form clusters, exchange status information, and make sleep decisions considering the status of other MECSs in the same cluster. Despite the potential enhancement in energy efficiency, such cooperative methods introduce additional signaling overhead, and when MECS decisions differ, an iterative consensus process ensues, potentially delaying decision making. To tackle these challenges, deep learning models have been used [13,14]. These approaches use the load information of the MECSs at the current time step to predict the load of each MECS in the subsequent time step. Decisions regarding which MECSs should sleep are then made based on these predictions. To forecast the MECS load, these techniques often model the MECS load as a time-series sequence and predominantly employ the LSTM (Long Short-Term Memory) model, as it is suitable for time-series prediction. In this approach, the quality of the set of sleeping MECSs determined from the predicted values depends on the precision of the load prediction for each MECS. Therefore, the sleep MECS decision problem can be reframed as a precise workload prediction problem.

However, since LSTM was originally developed for the prediction of Euclidean data, its performance may degrade when applied to non-Euclidean data with graph structures like MEC systems. Therefore, in this paper, we improve the previous method based on the LSTM predictor in two major aspects. Firstly, we improve the accuracy of the predicted task arrival rate. The task arrival rate to an MECS varies in time and space. However, LSTM exploits only the correlation in the time domain, neglecting the valuable correlation information in the space domain. We enhance the accuracy of task arrival rate prediction by using STGCN (Spatio-Temporal Graph Convolutional Network) [15]. STGCN applies convolution operations in both the spatial and temporal dimensions, so it captures information exchanged between neighboring nodes and detects temporal changes, providing enhanced capabilities in modeling spatio-temporal data. Secondly, we reduce the prediction delay. Since LSTM has a recurrent architecture, it processes inputs sequentially. Therefore, the methods based on LSTM are slow in producing predicted values. In contrast, since the STGCN model can process inputs in parallel, we can reduce the time needed to produce predicted values. We summarize our contributions as follows.

We propose a framework for the MECS sleep decision by using STGCN. We define an input graph for this framework and enhance the prediction accuracy for the workload distribution in an MEC system by utilizing not only the workload correlations in each MECS in the time domain, but also the relationships among MECSs in the space domain.

Even when the workload distribution information is available, determining the operational modes of the individual MECSs is a computationally challenging combinatorial optimization problem. To address this, we utilize a genetic algorithm (GA) to quickly search for the optimal operation mode of each MECS at the start of each time slot, taking into account both the energy consumption and the service latency of all MECSs in the system.

Comprehensive simulation studies show that our approach is better than the conventional LSTM-based method in terms of both energy efficiency and the time required for determining sleep MECSs.

The organization of this paper is as follows. We present related works in Section 2. In Section 3, we describe the system model and formulate the sleep control problem. We describe our MECS sleep decision method in Section 4. In Section 5, we verify the proposed method by evaluating its performance via extensive simulation studies. We conclude the paper with future research directions in Section 6.

2. Related Works

2.1. Preliminary: Graph Neural Networks

Graph neural network (GNN) is a term used to represent the artificial neural networks designed to process data represented as graphs. The key tasks of a GNN include node-level tasks, edge-level tasks, and graph-level tasks. In node-level tasks, a GNN learns the features of individual graph nodes by considering their attributes and connectivity. The learned features can be used to classify new nodes. For edge-level tasks, a GNN embeds edge features and uses them to predict possible links and the weights indicating the strength of each connection in a graph. Graph-level tasks involve predicting or classifying features of the entire graph. For example, a GNN can be used to identify communities within an entire social graph or to create new protein molecules. In general, a computation module in a GNN is composed of a message function, an aggregation function, an update function, and a readout function. Depending on the configuration of these functions in the computation module, various GNNs can be built [16,17]. In [18], according to the primary objectives and architectural differences of various GNNs, the authors categorize GNNs into RecGNN (Recurrent GNN), ConvGNN (Convolutional GNN), GAE (Graph AutoEncoder), and STGNN (Spatial-Temporal GNN). RecGNNs aim to learn node representations via recurrent structures, introducing the concept of message passing, where nodes exchange information with neighboring nodes until reaching a stable state. This message passing idea is adopted by ConvGNNs. ConvGNNs generalize convolution from Euclidean data space to non-Euclidean data space, providing a foundation for constructing other complex GNNs. GAEs are unsupervised learning models that encode nodes or graphs into a latent vector space and generate new graphs using the embedded graph information. STGNNs focus on learning hidden patterns of graphs that change spatio-temporally, considering the spatial and temporal correlations of graph information simultaneously. We summarize the GNN categories in Table 1. For a further review of graph neural networks, we refer the readers to [16,17,18] and the references therein.

In this paper, we aim to predict the workload of each MECS by considering the relationship among the MECSs in both the time domain and the space domain. An MEC system consists of MECSs, which are deployed at the network edge. In an MEC system, control messages are exchanged between geographically adjacent MECSs for the efficient operation of the system. Therefore, by considering MECSs as nodes and the geographical relationships between adjacent MECSs as links, the MEC system can be modeled as a graph. Since an MECS serves tasks offloaded by users, the load of an MECS is determined by the number of users and their task-offloading patterns within the MECS service area. Additionally, the locations of users change over time, and the distance a user can move during a given period is physically limited. Consequently, changes in the user set of each MECS over time affect the user sets of its neighboring MECSs, so the load of each MECS is influenced by the load of its neighbors. In other words, the load of each MECS is closely related to the load of its neighboring MECSs over time and space. Due to its ability to consider spatio-temporal correlations among related entities, STGNN is being applied to various applications. For example, in [19], STGNN is used for road traffic prediction by exploiting the complex spatio-temporal correlation of traffic flow. In [20], STGNN is used for skeleton-based human action recognition by making use of the skeleton topology information. Motivated by these research trends and the characteristics of the load distribution in an MEC system, we chose STGCN, which belongs to the STGNN category, for predicting the load distribution in an MEC system. Specifically, we extract node features that represent the spatio-temporal relationships among the loads of the MECSs by adopting STGCN. Then, by using the extracted node features, we predict the load of each MECS. Subsequently, we utilize this information to determine the optimal selection of MECSs to sleep by using a genetic algorithm.

2.2. Energy Saving in an MEC System

In line with the energy-saving objectives, studies pertaining to energy efficiency in an MEC system can be classified into two primary groups. The first group is dedicated to diminishing the energy consumption in end devices, while the second group is centered around addressing the energy consumption within an MEC system. User devices have the potential to conserve energy through the offloading of computing tasks to an MEC system. However, the task-offloading process itself entails the consumption of transmission power by the user device. Furthermore, task offloading can introduce an increase in task completion delay since the processed results are delivered to the user device after the task is sent to and processed within an MEC system. Consequently, various task-offloading decision methods are proposed to minimize the energy consumption of a user device. These methods take into account factors such as computing power, transmission power, and task completion delay [21,22,23].

To minimize the energy consumption of an MEC system while providing a reasonable quality of service to end users, various resource management methods have been proposed [24]. Load-balancing methods among MECSs are proposed in [25,26] to increase the resource efficiency of an MEC system while reducing the system cost. The authors in [27,28] propose optimal computation resource allocation methods to reduce the energy consumption of an MECS while ensuring the service delay requirement in each MECS. Content caching methods are proposed in [29,30] to accommodate the massive computation demands from users in an energy-efficient manner. Service placement schemes are proposed to reduce both service completion time and energy consumption by placing the services requested by users on their serving MECSs [31,32].

However, these studies are primarily focused on designing the intended functionalities in an energy-efficient manner. In other words, they assume that all MECSs are always in an active mode, even though servers consume a considerable amount of energy in the idle state. Therefore, if a majority of MECSs are underloaded, a potential strategy for decreasing the overall energy consumption of an MEC system is to activate only a subset of MECSs to handle tasks while putting the remaining MECSs in a sleep state.

2.3. Sleep Control Methods

Various methods have been proposed to increase the energy efficiency of an MEC system by controlling the working mode of the MECSs. These methods can be broadly categorized into distributed methods and centralized methods. Distributed methods use meta-heuristic optimizations, a bio-inspired method, and game theory. Centralized methods use the Lyapunov optimization framework, machine learning, and deep learning methods.

The authors in [33] use the particle swarm optimization (PSO) algorithm to control the operation mode of MECSs. They formulate the MECS sleep control problem as a two-dimensional optimization problem. Then, they propose a user connection matrix-based AP sleeping method by using PSO. In the work presented in [12], a bio-inspired method for controlling the sleep states of MECSs is proposed, drawing inspiration from the inter-cell signaling mechanism. At the end of each time slot, each MECS exchanges load information with its neighboring MECSs. Through a distributed process involving the comparison of relative load levels with those of neighboring MECSs, each MECS autonomously determines its operational mode. In the study presented in [34], the authors employ the minority game theory to decide the operational mode of an MECS. They tackle the distributed computation offloading problem, taking into account the determination of the MECS operation mode. The minority game is utilized to seek an equilibrium state that optimally balances the latency of user tasks and the energy consumption within the MEC system. In these distributed methods, each MECS iteratively determines its sleep mode until the optimal set of sleeping MECSs from the perspective of the MEC system is obtained. Since the iterative process takes time until a consensus among MECSs is reached, distributed methods are slow. In addition, these techniques result in an elevated signaling overhead due to the necessity of control message exchanges among MECSs.

In centralized methods, each MECS or a central server determines the sleep MECSs based on the service quality provided by the MEC system, and the amount of energy consumed in the system in an on-demand manner. The Lyapunov optimization framework is frequently employed to address optimization problems involving unknown future values in the context of long-term average cost. It transforms the long-term average cost optimization problem into a per-time-slot cost optimization problem, enabling the development of an online algorithm that utilizes only currently available information. In [35], the problem of minimizing long-term average delay under power consumption constraints is formulated. The Lyapunov optimization framework is applied to solve the formulated problem by optimizing the sleep and offloading decisions of MECSs. In [36], the problem of minimizing long-term average total energy consumption under reliability constraints is established. An online algorithm is devised using the Lyapunov optimization framework, placing MECSs into a sleep state whenever possible. In [9], the authors formulate the energy optimization problem under delay constraints. Leveraging the Lyapunov optimization framework, they convert the long-term energy minimization problem into a per-time-slot problem and propose an online sleep control method under non-uniform traffic distribution in an MEC system.

Various machine learning and deep learning methodologies have been proposed to address the MECS sleep control problem. In [13], an online MECS mode switching algorithm is introduced, leveraging a linear regression method to predict user distribution and service requests. By calculating the utility value of each MECS based on the predicted values, MECSs are selected for sleep during the next time slot by comparing their utility values with a predefined threshold. The authors in [14] present a method that optimizes the number of active MECSs using a deep learning model. They employ the LSTM model to predict the long-term workload and adjust the number of active MECSs in a heuristic manner based on the predicted workloads. For the joint optimization of task latency and energy consumption, the authors in [37] adopt a reinforcement learning approach. After casting a joint optimization problem that minimizes the weighted sum of latency and energy, they develop a solver for the formulated problem by integrating DDQN (Deep Reinforcement Learning with Double Q-learning) with the multi-knapsack algorithm.

Centralized methods leverage existing state information of the MEC system to estimate its future state and use the estimated state to control the mode of MECSs. Therefore, the precision of the predicted state information becomes the major factor that influences the performance. The Lyapunov optimization framework transforms the optimization problem of long-term average cost into a per-time-slot cost optimization problem. In general, the optimality gap caused by this transformation is not marginal. To enhance the accuracy of future state predictions, deep learning models such as LSTM are widely employed. However, since LSTM was originally developed for the prediction of Euclidean data, its performance may degrade when applied to non-Euclidean data with graph structures like MEC systems. Therefore, in this paper, we enhance the previous method based on the LSTM predictor in two major aspects. Firstly, we improve the accuracy of the predicted task arrival rate. The task arrival rate to an MECS varies in time and space. However, the previous methods that predict the task arrival rate for each MECS by using LSTM exploit only the correlation in the time domain, neglecting the valuable correlation information in the space domain. We enhance the accuracy of task arrival rate prediction by using STGCN. STGCN applies convolution operations in both the spatial and temporal dimensions. Its convolutional layers capture information exchanged between neighboring nodes and detect temporal changes, providing enhanced capabilities in modeling spatio-temporal data. Secondly, we reduce the prediction delay. LSTM has a recurrent architecture which processes the input sequentially. Therefore, the methods based on LSTM are slow in producing the predicted values. In contrast, the STGCN model that we use can process inputs in parallel. Therefore, compared to the methods based on LSTM, we can reduce the time needed to produce the predicted values.

For easy comparison of the techniques that have been proposed to control the sleep mode of MECSs, we summarize their main features in Table 2.

3. System Model

In this paper, we consider an MEC system in a wireless network composed of a set of base stations (BSs), a set of MECSs, and a remote controller. We assume that each BS is co-located with an MECS. Henceforth, we will use MECS and BS interchangeably unless stated otherwise. We divide the system time into a series of time slots of equal length $\tau$ and consider a discrete-time controller. Compared with a cloud server, an MECS is resource constrained in terms of computing power and memory size. Therefore, an MECS cannot host all the services of a cloud server at the same time. Since the issue of service caching in an MEC system is dealt with in [2,3,4], in this paper, we focus on MECS sleep control by assuming that an MECS contains the necessary services for processing the tasks offloaded to it. In other words, tasks offloaded to an MEC system are served not by a cloud server but by an MECS.

We denote the set of MECSs in the system as $\mathcal{M}$ and the task processing capacity of an MECS i as $c_i$. We also denote the service region of an MECS i as $R_i$. If there is any overlap between $R_i$ and $R_j$, MECS i and MECS j are neighboring MECSs. We denote the set of neighboring MECSs of an MECS i as $\mathcal{N}_i$. A user u offloads its task to the nearest MECS, which is called the serving MECS of u. We denote the set of users in $R_i$ during a time slot t as $\mathcal{U}_i(t)$. Each MECS has a task queue to accommodate the offloaded tasks. We denote the task queue length of an MECS i at the beginning of a time slot t as $q_i(t)$.

We introduce a control variable $x_i(t) \in \{0, 1\}$ that indicates the operation mode of an MECS i during a time slot t. Specifically, when $x_i(t) = 0$, an MECS i is in a sleep mode during a time slot t. On the contrary, $x_i(t) = 1$ represents the case where an MECS i is in an active mode during a time slot t. We denote the number of tasks generated from the users in $R_i$ during a time slot t as $\lambda_i(t)$. If $x_i(t) = 1$, the $\lambda_i(t)$ tasks are offloaded to their active serving MECS i. However, if $x_i(t) = 0$, they are offloaded to active neighbors of the MECS i. Therefore, if we denote the number of tasks newly offloaded to an MECS i during a time slot t as $a_i(t)$, it is zero if $x_i(t) = 0$. In contrast, when $x_i(t) = 1$, $a_i(t)$ becomes the sum of $\lambda_i(t)$ and the tasks offloaded to i from the sleeping neighbors of the MECS i. In other words, if we denote the amount of tasks offloaded to an active MECS i from its sleeping neighbor j during a time slot t as $\lambda_{ji}(t)$, $a_i(t) = \lambda_i(t) + \sum_{j \in \mathcal{N}_i} (1 - x_j(t))\, \lambda_{ji}(t)$. Then, the dynamics of the task queue in an MECS i is described as

$$q_i(t+1) = \max\{q_i(t) + a_i(t) - c_i \tau,\ 0\}. \tag{1}$$

Bounding the completion time for the tasks offloaded to an MEC system is crucial for delivering a satisfactory service to users. Since we assume that each task is served by an MECS once it is offloaded to an MEC system, the time spent to complete a task in an MEC system is determined by the task queuing delay and the task service delay in a serving MECS, which depends on the service scheduling policy, task size, and CPU capacity. We assume that the task service delay is constant because modern servers use dynamic frequency scaling. If we assume that each MECS processes tasks in a first-in, first-out (FIFO) manner, the queuing delay is proportional to the queue length. As shown in Equation (1), the change in the task queue length in an MECS i during a time slot t is determined by the queue length at the beginning of the time slot t, the amount of new tasks entering during the time slot t, and the service capacity of an MECS i. Among these factors, the amount of newly offloaded tasks $a_i(t)$ is unknown at the start of the time slot. Since $a_i(t)$ depends on $\lambda_i(t)$, $\lambda_{ji}(t)$, and $x_j(t)$, we do not know the characteristics of the task arrival process to each MECS. Therefore, we take a conservative approach to bound the queue length. In other words, we aim to keep each queue length $q_i(t)$ below a threshold $q_{\max}$.
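The queue recursion described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: the function names, the dictionary `redirected` (keyed by hypothetical `(sleeping_neighbor, target)` pairs), and the exact form of the recursion (backlog plus arrivals minus the work served in one slot, floored at zero) are assumptions made for illustration and may differ from the exact model used in the paper.

```python
def offloaded_tasks(i, x, own_tasks, redirected):
    """Tasks newly offloaded to MECS i in one slot (assumed model).

    x[i] is 1 if MECS i is active and 0 if it sleeps.
    own_tasks[i] is the number of tasks generated in the region of MECS i.
    redirected[(j, i)] is the number of tasks that sleeping MECS j hands to i.
    """
    if x[i] == 0:
        return 0.0  # a sleeping MECS receives no tasks
    from_sleepers = sum(
        amount
        for (j, target), amount in redirected.items()
        if target == i and x[j] == 0
    )
    return own_tasks[i] + from_sleepers


def next_queue_length(q, arrivals, capacity, slot_len):
    """Assumed recursion: backlog + arrivals - served work, never negative."""
    return max(q + arrivals - capacity * slot_len, 0.0)


# Two MECSs: MECS 0 is active, MECS 1 sleeps and redirects its 3 tasks to MECS 0.
x = [1, 0]
a0 = offloaded_tasks(0, x, own_tasks=[5.0, 3.0], redirected={(1, 0): 3.0})
q0_next = next_queue_length(q=2.0, arrivals=a0, capacity=4.0, slot_len=2.0)
```

Keeping the arrival computation separate from the queue update makes it easy to swap in predicted arrivals when the true values are not yet known.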

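The energy consumed by an MECS depends on its load. According to [9], the energy consumed by an MECS i in an active state during a time slot t is given as

$$E_i(t) = \left(\alpha P_{\max} + (1 - \alpha) P_{\max}\, u_i(t)\right) \tau, \tag{2}$$

where $P_{\max}$ is the maximum power consumed when the load is the highest, $\alpha$ determines the fraction of power consumed when an MECS is idle, $\tau$ is the length of a time slot, and $u_i(t)$ is the utilization of an MECS i during a time slot t. Since the amount of tasks that an MECS i with processing capacity $c_i$ can process during a time slot is given as $c_i \tau$, the utilization of an MECS i during a time slot t is given as

$$u_i(t) = \frac{\min\{q_i(t) + a_i(t),\ c_i \tau\}}{c_i \tau}, \tag{3}$$

where $q_i(t)$ is the queue length at the beginning of the time slot and $a_i(t)$ is the amount of tasks newly offloaded during the time slot. Therefore, the total energy consumed in an MEC system during a time slot t is given as

$$E(t) = \sum_{i \in \mathcal{M}} x_i(t)\, E_i(t), \tag{4}$$

where $\mathcal{M}$ is the set of MECSs and $x_i(t)$ is 1 if an MECS i is active and 0 if it sleeps.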
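As a rough numerical illustration of the load-dependent energy model, the following Python sketch evaluates a linear power model in which an idle server draws a fixed fraction of its peak power. The function names, the parameter values, and the assumption that a sleeping MECS consumes no energy are hypothetical choices made for this example, not values taken from the paper.

```python
def utilization(q, arrivals, capacity, slot_len):
    """Fraction of the per-slot capacity that is actually used (0 to 1)."""
    per_slot_capacity = capacity * slot_len
    return min(q + arrivals, per_slot_capacity) / per_slot_capacity


def active_energy(u, p_max, alpha, slot_len):
    """Energy of an active server: idle share plus a load-proportional share."""
    return (alpha * p_max + (1.0 - alpha) * p_max * u) * slot_len


def total_energy(x, utils, p_max, alpha, slot_len):
    """Sum over active servers only; sleeping servers are assumed to cost 0."""
    return sum(
        active_energy(u, p_max, alpha, slot_len)
        for mode, u in zip(x, utils)
        if mode == 1
    )


# Hypothetical numbers: 200 W peak power, idle draws 50% of peak, 1 s slots.
idle = active_energy(0.0, p_max=200.0, alpha=0.5, slot_len=1.0)
full = active_energy(1.0, p_max=200.0, alpha=0.5, slot_len=1.0)
system = total_energy([1, 0, 1], [0.0, 0.7, 1.0], 200.0, 0.5, 1.0)
```

The gap between `idle` and zero is what sleep control recovers: an idle but active server still pays the fixed share of the peak power.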

Our goal is to find an optimal sleep control vector $x(t) = (x_i(t))_{i \in \mathcal{M}}$ at the start of each time slot that minimizes the total energy consumption $E(t)$ in an MEC system while providing a reasonable service to the users. Thus, our MECS sleep control problem is formulated as follows.

$$\begin{aligned} \min_{x(t)} \quad & E(t) \\ \text{s.t.} \quad & q_i(t+1) \le q_{\max}, \quad \forall i \in \mathcal{M}, \\ & x_i(t) + \sum_{j \in \mathcal{N}_i} x_j(t) \ge 1, \quad \forall i \in \mathcal{M}, \\ & x_i(t) \in \{0, 1\}, \quad \forall i \in \mathcal{M}. \end{aligned} \tag{5}$$

The first constraint is that each MECS bounds its queue length. Wireless communication is constrained mainly by the distance between a user and a BS. Thus, to offload a task from a user to an MECS, the distance between them should be below a certain value. In this paper, we assume that MECSs are provisioned so that each user is able to communicate with an MECS located at a distance of two hops. Thus, the second constraint is that at least one of the neighboring MECSs of a sleeping MECS must be active. Problem (5) is a combinatorial problem and is NP-hard in general. In addition, to solve the problem at the start of each time slot, the task arrivals $\lambda_i(t)$ should be given. However, $\lambda_i(t)$ cannot be known at the time when we need to determine $x(t)$. Therefore, to resolve these issues, we adopt a deep learning model, STGCN, to predict the task arrival rate for each MECS by considering the spatio-temporal relationship among them. Given the set of predicted task arrival rates, we employ a genetic algorithm to solve problem (5) quickly.
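The two constraints described above can be checked for a candidate sleep vector with a short routine. The sketch below is a hypothetical helper, not part of the original method: `neighbors` maps each MECS index to a list of neighbor indices, `next_queues` holds the queue lengths that would result from the candidate vector, and `q_max` is an assumed name for the queue bound.

```python
def is_feasible(x, neighbors, next_queues, q_max):
    """Check the queue bound and the active-neighbor rule for a sleep vector.

    x[i] is 1 for an active MECS and 0 for a sleeping one.
    """
    for i, mode in enumerate(x):
        if next_queues[i] > q_max:
            return False  # queue length constraint violated
        if mode == 0 and not any(x[j] == 1 for j in neighbors[i]):
            return False  # sleeping MECS has no active neighbor to take over
    return True


# Three MECSs on a line: 0 - 1 - 2.
line = {0: [1], 1: [0, 2], 2: [1]}
ok = is_feasible([0, 1, 0], line, next_queues=[0, 5, 0], q_max=10)
bad = is_feasible([0, 0, 1], line, next_queues=[0, 0, 5], q_max=10)
```

Because the number of candidate vectors grows as two to the power of the number of MECSs, such a check is cheap per candidate but exhaustive enumeration is not, which is why a heuristic search is used.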

4. Deep Learning-Inspired Sleep Control Strategy

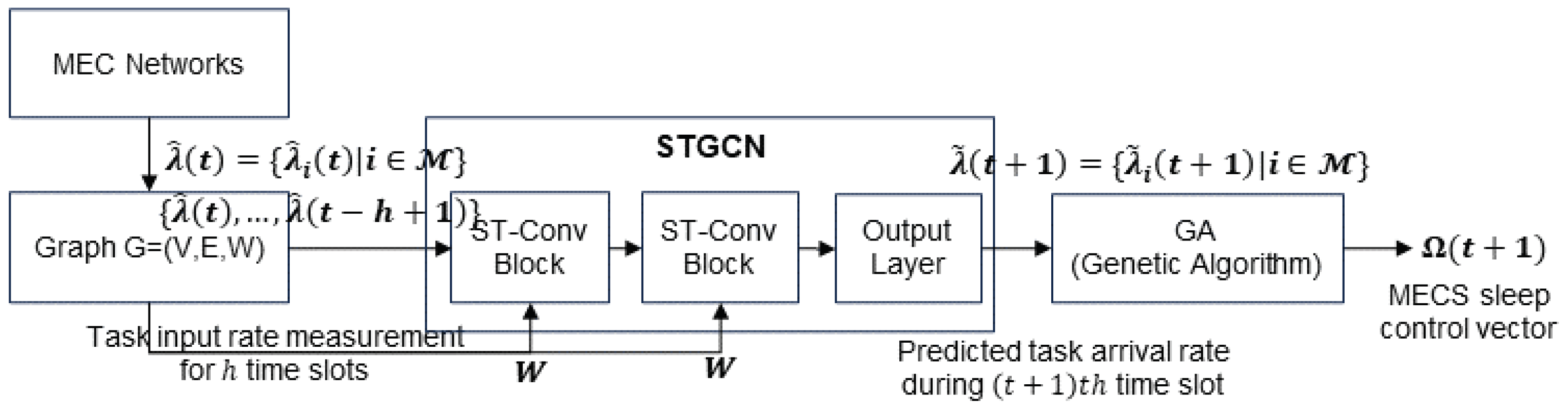

In Figure 1, we show the overall procedure of our MECS sleep control strategy. Our MECS sleep control method is composed of two modules. In the first module, at the beginning of each time slot t, a controller predicts the task arrivals $\lambda_i(t)$ by using the spatio-temporal correlations among the recent past $\lambda_i(t-h), \ldots, \lambda_i(t-1)$, where h is the length of the past task arrival rate history. We denote the predicted $\lambda_i(t)$ as $\hat{\lambda}_i(t)$. The second module uses the genetic algorithm to determine the MECS sleep control vector $x(t)$ by using the predicted arrivals $\hat{\lambda}_i(t)$, the queue length $q_i(t)$, and the processing capacity $c_i$, for all $i \in \mathcal{M}$. We explain the operation of each module in the following subsections.

4.1. Task Arrival Rate Prediction

The amount of tasks offloaded to an MECS i is determined by the number of users in its service region $R_i$ and their service preferences. Since the distance that a user can move during a time slot is limited, the user set $\mathcal{U}_i(t)$ is influenced mainly by $\mathcal{U}_i(t-1)$ and the $\mathcal{U}_j(t-1)$s, where $j \in \mathcal{N}_i$. Therefore, $\lambda_i(t)$ is affected not only by $\lambda_i(t-1)$ but also by $\lambda_j(t-1)$. Consequently, to increase the prediction accuracy, the spatio-temporal correlation among $\lambda_i(t)$ and $\lambda_j(t)$ must be exploited. To make use of the spatio-temporal relationship, we model an MEC system as a graph $G = (V, E)$. The set of nodes V is the set of MECSs $\mathcal{M}$. The elements of the edge set E are the links which reflect the adjacency between MECSs. In other words, the element $e_{ij}$ in E is 1 if $j \in \mathcal{N}_i$; otherwise, it is zero.

Our goal is to predict the amount of tasks imposed on each MECS during the next time slot. Thus, we use the history of the workload generated in each service region $R_i$ as a node feature. We denote the node feature vector at a time slot t as $\Lambda(t) = (\lambda_i(t))_{i \in \mathcal{M}}$. Therefore, our task arrival prediction problem becomes a problem to find a mapping function $f$ such that

$$\hat{\Lambda}(t) = f\big(\Lambda(t-h), \ldots, \Lambda(t-1);\ G\big),$$

where h is the length of the task-load history.

Since an MEC system has a graph structure, we adopt an STGCN model to approximate $f$ by capturing and exploiting the spatio-temporal dependency among the $\lambda_i(t')$s for all $i \in \mathcal{M}$ and $t' \in \{t-h, \ldots, t-1\}$.
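To make the graph input and the prediction task concrete, the sketch below builds a binary adjacency matrix from neighbor sets and slices a workload history into (input window, next-slot target) pairs of length h. The helper names `adjacency_matrix` and `history_windows` and the toy numbers are hypothetical and only illustrate how such inputs could be prepared; the paper's actual preprocessing may differ.

```python
def adjacency_matrix(neighbors, n):
    """Symmetric 0/1 matrix: entry (i, j) is 1 when MECS i and j are neighbors."""
    adj = [[0] * n for _ in range(n)]
    for i, neighbor_ids in neighbors.items():
        for j in neighbor_ids:
            adj[i][j] = 1
            adj[j][i] = 1
    return adj


def history_windows(series, h):
    """Pair each slot's workload vector with the h vectors that precede it.

    series[t] is the list of per-MECS task counts observed in slot t.
    """
    return [(series[t - h:t], series[t]) for t in range(h, len(series))]


# Three MECSs on a line and four observed slots (toy numbers).
adj = adjacency_matrix({0: [1], 1: [0, 2], 2: [1]}, n=3)
series = [[1, 2, 3], [2, 3, 4], [3, 4, 5], [4, 5, 6]]
samples = history_windows(series, h=2)
```

Each element of `samples` is one supervised training example: the model sees h past workload vectors together with `adj` and is asked to reproduce the vector of the following slot.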

As we can see in Figure 1, STGCN is composed of two consecutive ST-Conv blocks and one output layer. The ST-Conv block is composed of a spatial Graph-Conv module in between two temporal Gated-Conv modules (Figure 2). The Gated-Conv module is composed of a 1-D convolution unit with a kernel of width $K_t$ followed by a GLU (gated linear unit). We denote the input to the k-th temporal Gated-Conv module in the l-th ST-Conv block as $X^{l,k}$, where $k \in \{1, 2\}$. Then, for each node in G, the 1-D convolution unit performs temporal convolution on $X^{l,k}$ by exploring $K_t$ neighbors, which reduces the sequence length from h to $h - K_t + 1$. Two linear layers in the GLU take the temporal features extracted by the 1-D convolution unit and produce $P$ and $Q$, respectively. Then, the GLU outputs the gated features by computing $P \odot \sigma(Q)$, where ⊙ is the element-wise Hadamard product. Therefore, if we denote the temporal convolution kernel for the k-th temporal Gated-Conv module in the l-th ST-Conv block as $\Gamma^{l,k}$ and the temporal gated convolution operator as $*_{\mathcal{T}}$, the temporal gated convolution is expressed as

$$\Gamma^{l,k} *_{\mathcal{T}} X^{l,k} = P \odot \sigma(Q),$$

where $\sigma$ is a sigmoid function.
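The gating mechanism can be illustrated with a single-node, single-channel sketch in plain Python. The simplification to one channel, the function name `gated_temporal_conv`, and the hand-picked weights are assumptions for illustration; a real STGCN layer operates on multi-channel tensors for all nodes at once and learns its weights.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gated_temporal_conv(seq, w_p, w_q, b_p=0.0, b_q=0.0):
    """1-D convolution of width len(w_p) followed by a GLU gate.

    For every window, P and Q are two linear projections of the same inputs,
    and the output is P * sigmoid(Q). No padding is used, so the output is
    len(w_p) - 1 elements shorter than the input.
    """
    k_t = len(w_p)
    out = []
    for t in range(len(seq) - k_t + 1):
        window = seq[t:t + k_t]
        p = sum(w * v for w, v in zip(w_p, window)) + b_p
        q = sum(w * v for w, v in zip(w_q, window)) + b_q
        out.append(p * sigmoid(q))
    return out


# History of length 5 and kernel width 3 give an output of length 3.
gated = gated_temporal_conv([1.0, 2.0, 3.0, 4.0, 5.0],
                            w_p=[0.0, 0.0, 1.0],
                            w_q=[0.0, 0.0, 0.0])
```

With the gate weights set to zero, the gate is fixed at sigmoid(0) = 0.5, so the output is simply half of the last element of each window. Every window is computed independently of the others, which is what allows this layer to be evaluated in parallel, in contrast to a recurrent cell.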

The spatial Graph-Conv module is designed based on ChebNet [38], which is a spectral-based GNN. The goal of the spatial Graph-Conv module is to derive the spatial features from the temporal features extracted by the temporal Gated-Conv module. To achieve the goal, the spatial Graph-Conv module performs graph convolution on the output of the first temporal Gated-Conv module. We denote the graph convolution operator as $*_{\mathcal{G}}$ and the graph convolution kernel used by the spatial Graph-Conv module in the l-th ST-Conv block as $\Theta^{l}$. We also denote the input size and the output size of the feature maps as $C_i$ and $C_o$, respectively. Since the output of the first temporal Gated-Conv module is fed into the spatial Graph-Conv module, the graph convolution on the input $Z$, the output of the first temporal Gated-Conv module, is given as

$$\big(\Theta^{l} *_{\mathcal{G}} Z\big)_j = \sum_{c=1}^{C_i} \Theta^{l}_{c,j}(L)\, Z_c, \quad 1 \le j \le C_o,$$

where $\Theta^{l}_{c,j}$ are the Chebyshev coefficients, $Z_c$ is the c-th input feature map, and L is the normalized graph Laplacian.
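A Chebyshev-polynomial graph convolution of the kind used in ChebNet can be sketched for a single feature channel as follows. The sketch assumes an undirected graph without self-loops, rescales the normalized Laplacian with an assumed largest eigenvalue of 2 (a common approximation), and uses hypothetical function names; it illustrates the polynomial recursion only and is not the paper's implementation.

```python
import math


def normalized_laplacian(adj):
    """L = I - D^(-1/2) A D^(-1/2) for an undirected graph without self-loops."""
    n = len(adj)
    deg = [sum(row) for row in adj]
    lap = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                lap[i][j] = 1.0 if deg[i] > 0 else 0.0
            elif adj[i][j] and deg[i] > 0 and deg[j] > 0:
                lap[i][j] = -adj[i][j] / math.sqrt(deg[i] * deg[j])
    return lap


def matvec(matrix, vec):
    return [sum(a * b for a, b in zip(row, vec)) for row in matrix]


def cheb_graph_conv(adj, x, theta, lambda_max=2.0):
    """y = sum_k theta[k] * T_k(L_scaled) x, with L_scaled = 2L/lambda_max - I."""
    n = len(adj)
    lap = normalized_laplacian(adj)
    scaled = [[2.0 * lap[i][j] / lambda_max - (1.0 if i == j else 0.0)
               for j in range(n)] for i in range(n)]
    t_prev = list(x)                      # T_0(L) x = x
    out = [theta[0] * v for v in t_prev]
    if len(theta) == 1:
        return out
    t_curr = matvec(scaled, x)            # T_1(L) x = L x
    out = [o + theta[1] * v for o, v in zip(out, t_curr)]
    for k in range(2, len(theta)):
        # Chebyshev recursion: T_k = 2 L T_{k-1} - T_{k-2}
        t_next = [2.0 * a - b for a, b in zip(matvec(scaled, t_curr), t_prev)]
        out = [o + theta[k] * v for o, v in zip(out, t_next)]
        t_prev, t_curr = t_curr, t_next
    return out


# Two connected MECSs with workloads 1 and 2.
pair = [[0, 1], [1, 0]]
first_order = cheb_graph_conv(pair, [1.0, 2.0], theta=[0.0, 1.0])
```

A kernel with K coefficients mixes information from nodes up to K - 1 hops away, so the number of coefficients controls how far the load of distant MECSs can influence the extracted feature of a node.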

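Since the output of the spatial Graph-Conv module is fed into the second temporal Gated-Conv module, the final output of the l-th ST-Conv block becomes

$$X^{l+1,1} = \Gamma^{l,2} *_{\mathcal{T}} \mathrm{ReLU}\Big(\Theta^{l} *_{\mathcal{G}} \big(\Gamma^{l,1} *_{\mathcal{T}} X^{l,1}\big)\Big),$$

where $\Gamma^{l,1}$ and $\Gamma^{l,2}$ are the kernels of the first and second temporal Gated-Conv modules, $\Theta^{l}$ is the kernel of the spatial Graph-Conv module, and ReLU is the rectified linear unit function. The output of the second ST-Conv block is fed into the fully connected output layer. The output layer performs temporal convolution on the comprehensive features obtained from the two ST-Conv blocks and produces a one-step prediction $\hat{\Lambda}(t)$.

To train the STGCN, the following L2 loss function is used:

$$\mathcal{L}(W_\theta) = \sum_{t} \big\| \hat{\Lambda}(t) - \Lambda(t) \big\|^2,$$

where $W_\theta$ denotes all trainable parameters in the STGCN model, $\hat{\Lambda}(t)$ is the predicted workload vector, and $\Lambda(t)$ is the observed workload vector.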

4.2. MECS Sleep Control Vector Determination

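After collecting the queue lengths of all MECSs at the beginning of each time slot t, a controller determines an MECS sleep control vector $x(t)$ by using GA. To exploit GA, we define a fitness function $F(x)$ for a combination $x \in \{0, 1\}^{|\mathcal{M}|}$. The fitness value is evaluated when x satisfies the queue length constraint. When x violates the queue length constraint, a fixed penalty value is assigned to $F(x)$ so that x is not selected.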

We create a population

by randomly selecting

n combinations from the possible combinations of

. Among the combinations in

, we find the best combination

as follows.

To construct a crossover set from , we create a temporary set Y by selecting the best combinations from based on the fitness values of . Then, we randomly select two combinations x and y from Y and cross them over to make two children, and . Specifically, we randomly select an index k from , where is the number of MECSs in the MEC system. Then, we make a child combination by concatenating and , where denotes the first k elements in x and represents the last elements in y. We also make another child combination by concatenating and and put both and into the crossover set . To construct a mutation set , we mutate the child combinations and as follows. After randomly selecting an index , we mutate by changing from 0 to 1 or vice versa. Then, we add the mutated and to the mutation set .

After repeating the crossover process and the mutation process

times, we find the best combination

in

. If

, we replace

with

. We construct a new population

by randomly choosing

n combinations from

and replace

with

. We repeat the whole process

times and output the final

as

. We summarize the MECS sleep decision algorithm in Algorithm 1.

| Algorithm 1 MECS Sleep Decision Algorithm |

| 1: | At the end of a time slot t: |

| 2: | Input: , . |

| 3: | Output: Optimal MECS sleep control vector . |

| 4: | Init: n, . |

| 5: | Construct by randomly selecting n elements from . |

| 6: | Get . |

| 7: | |

| 8: | while do |

| 9: | Create Y by choosing the elements in with the highest fitness values. |

| 10: | . |

| 11: | . |

| 12: | for do |

| 13: | Randomly select x and y from Y. |

| 14: | Make and by crossing over x and y. |

| 15: | |

| 16: | Mutate and . |

| 17: | |

| | Find |

| 18: | if then |

| 19: | |

| 20: | Construct by randomly selecting n elements from . |

| 21: | Replace with |

| 22: | Return . |
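The following Python sketch mirrors the overall flow of Algorithm 1 under several labeled assumptions. The fitness function is passed in as a callable, and `toy_fitness` is a hypothetical stand-in (1 means active, and the score is the number of sleeping servers if at least three stay active), not the fitness function defined in this paper. The pool from which the best candidate and the next population are drawn is assumed to be the union of the current population, the crossover set, and the mutation set. All parameter names and default values are illustrative.

```python
import random

def ga_sleep_vector(fitness, num_servers, pop_size=20, elite=6,
                    pairs_per_gen=10, generations=30, seed=0):
    rng = random.Random(seed)
    population = [tuple(rng.randint(0, 1) for _ in range(num_servers))
                  for _ in range(pop_size)]
    best = max(population, key=fitness)
    for _ in range(generations):
        # Temporary set Y: the fittest members of the current population.
        parents = sorted(population, key=fitness, reverse=True)[:elite]
        crossover_set, mutation_set = [], []
        for _ in range(pairs_per_gen):
            x, y = rng.sample(parents, 2)
            k = rng.randint(1, num_servers - 1)  # single crossover point
            children = [x[:k] + y[k:], y[:k] + x[k:]]
            crossover_set.extend(children)
            for child in children:
                m = rng.randrange(num_servers)   # flip one randomly chosen bit
                mutation_set.append(child[:m] + (1 - child[m],) + child[m + 1:])
        pool = population + crossover_set + mutation_set  # assumed candidate pool
        candidate = max(pool, key=fitness)
        if fitness(candidate) > fitness(best):
            best = candidate
        population = rng.sample(pool, pop_size)
    return best

def toy_fitness(vec):
    # Hypothetical fitness: reward sleeping servers, require >= 3 active ones.
    return vec.count(0) if sum(vec) >= 3 else float("-inf")

best_vector = ga_sleep_vector(toy_fitness, num_servers=9)
```

Because the best combination is tracked separately from the population, resampling the population at random does not discard the best solution found so far.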

5. Performance Evaluation

In this section, we verify the proposed method via simulation studies. We compare the performance of our method with that of a conventional method using the LSTM model as a task arrival rate predictor.

5.1. Simulation Environment Setup

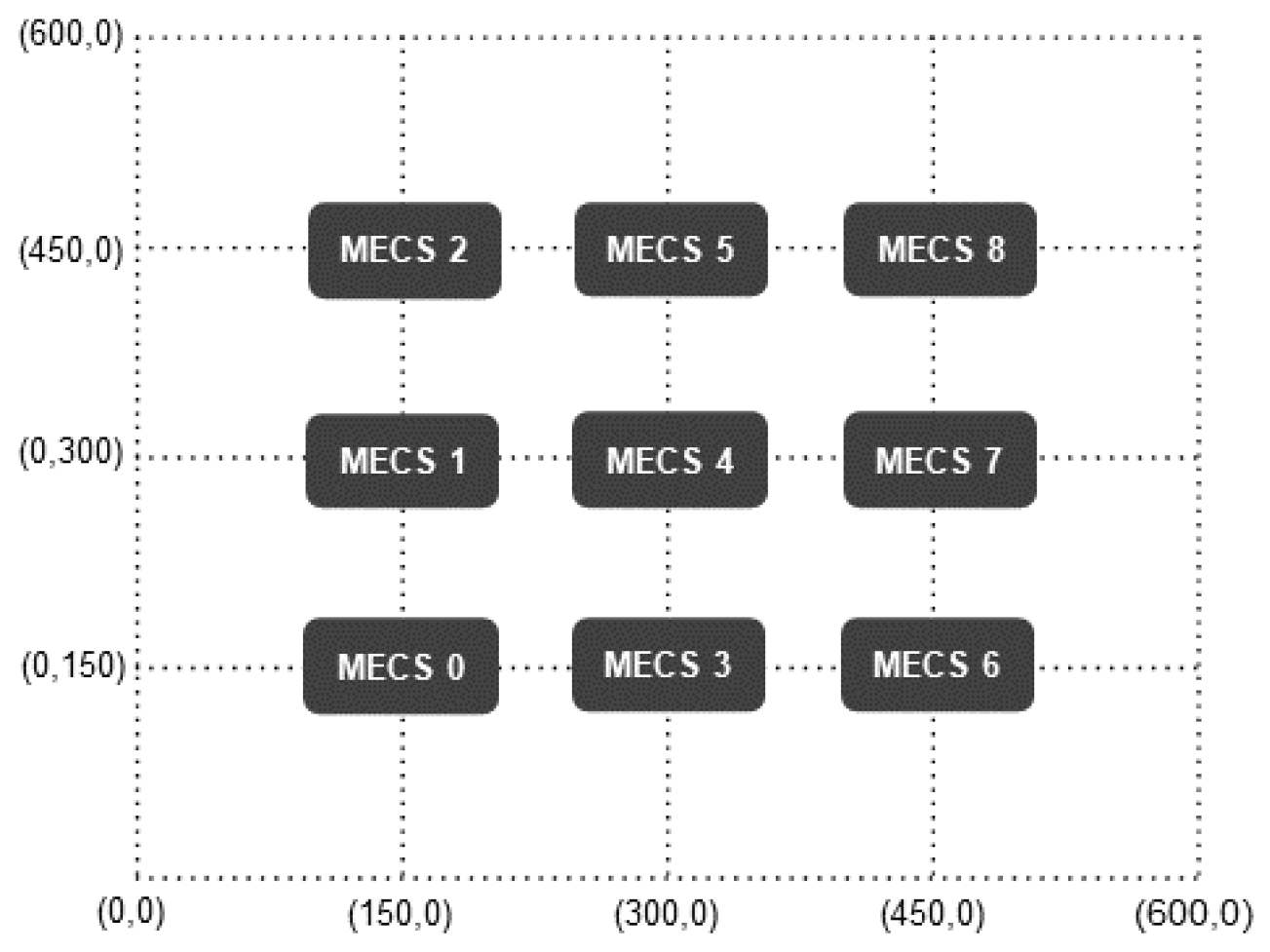

We deploy nine MECSs uniformly across a grid with dimensions of 600 m by 600 m (

Figure 3). The service region of each MECS is configured as the surrounding four grids with itself at the center. At the beginning of the simulation, we uniformly deploy 275 users on the topology. Users move around over time according to a random mobility model. In other words, at the start of each time slot, a user changes its moving direction from

, according to the uniform distribution, and selects its speed from the normal distribution with a mean of 15 m per time slot and a variance of

m per time slot. To maintain the number of users in the topology, we assume that the top and bottom, as well as left and right, of the system topology are connected. Therefore, for example, when a user moves to the right and exits the topology, we add a new user to the left side of the topology.
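One way to realize this mobility model is to treat the topology as a torus, so that a user leaving through one edge re-enters through the opposite edge. The sketch below is a minimal illustration. It assumes that the direction is drawn uniformly from [0, 2π), and it uses a placeholder `speed_std` because the variance value is not reproduced here. Both are assumptions, as are the function and parameter names.

```python
import numpy as np

def step_users(pos, rng, side=600.0, mean_speed=15.0, speed_std=5.0):
    """Advance all users by one time slot on a wrap-around square area.

    pos: (U, 2) array of user coordinates in metres
    speed_std is a placeholder value, not the setting used in the paper.
    """
    n = pos.shape[0]
    angle = rng.uniform(0.0, 2.0 * np.pi, size=n)     # new moving direction
    speed = rng.normal(mean_speed, speed_std, size=n)  # metres per time slot
    delta = np.stack([speed * np.cos(angle), speed * np.sin(angle)], axis=1)
    return (pos + delta) % side                        # opposite edges are connected

rng = np.random.default_rng(0)
users = rng.uniform(0.0, 600.0, size=(275, 2))  # initial uniform deployment
users = step_users(users, rng)
```

The modulo operation keeps the number of users constant, which has the same effect as adding a new user on the opposite side whenever one exits.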

Users offload their tasks to the nearest MECS. Initially, we configure each user to generate one task. After the initialization, we dynamically adjust the task generation rate of each user to change the total load imposed on the system over time. If for all MECSs at the start of each time slot t, we increase the task generation rate of each user. The increase rate is randomly selected from for each user. When the load imposed on the MEC system exceeds its capacity, the queue length of at least one MECS exceeds . Then, after randomly choosing the decrease rate from for each user, we reduce the task generation rate of each user by the chosen rate. As the task arrival rate decreases, the number of sleeping MECSs increases. However, in accordance with the second condition of the problem P1, at least three MECSs must remain active to serve users in the MEC system. Therefore, once the reduction in the task generation rate begins, we keep decreasing the task arrival rate every time slot until the active MECS count reaches three. At this point, we resume increasing the user’s task generation rate by randomly selecting the increase rate from for each user every time slot.

After conducting the data generation process over 50,000 time slots, we construct the training dataset using task generation rates observed in the initial 35,000 time slots. The subsequent 7500 time slots are designated as the validation dataset, and the final 7500 time slots are allocated for the test dataset. In each dataset, a data sample is defined as a pair , where . These datasets are used to train the STGCN. After completing the training of STGCN, we generate for 1000 time slots via the same data generation process. We then evaluate the performance of the proposed method by inputting them into the trained STGCN.
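The chronological split and the construction of input/target pairs can be sketched as follows. The sketch assumes that each sample pairs the most recent `history` time slots of per-MECS rates with the rates of the next slot. The function names, the `history` argument, and the array layout are illustrative assumptions.

```python
import numpy as np

def chronological_split(rates, n_train=35_000, n_val=7_500):
    """Split a (T, N) rate series into train/validation/test without shuffling."""
    train = rates[:n_train]
    val = rates[n_train:n_train + n_val]
    test = rates[n_train + n_val:]
    return train, val, test

def make_samples(rates, history):
    """Build sliding-window samples from a (T, N) rate series.

    returns inputs of shape (T - history, history, N)
            and targets of shape (T - history, N)
    """
    inputs = np.stack([rates[t - history:t] for t in range(history, rates.shape[0])])
    targets = rates[history:]
    return inputs, targets
```

Splitting before windowing keeps each sample inside one partition, so no validation or test slot appears in a training window.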

We set

,

, and

tasks per time slot. We also set the maximum queue length of each MECS (

to 75 and

. We configure the length of the task-load history as

. For the genetic algorithm, we set

and

. We configure the hyperparameters of the LSTM model and the STGCN model with publicly available values. Specifically, we use the hyperparameters in [

39] to configure the hyperparameters of the LSTM model and employ the hyperparameters in [

40] to configure the STGCN model. The LSTM model uses one hidden layer with 64 units. Since one LSTM model has 16,961 parameters and there are nine MECSs, the LSTM method uses a total of 152,649 parameters. The STGCN model is composed of two ST-Conv blocks and one output layer. The units in the first ST-Conv block are (64, 32, 64) and the units in the second ST-Conv block are (64, 32, 128). The output layer has (256, 128) units. Since one STGCN model is shared by all MECSs, the total number of parameters when STGCN is used is 193,246. For our simulation study, we use Colab Pro with CUDA version 12.0, an NVIDIA Tesla T4 GPU, and 51 GB of random access memory. We run the models with Python 3.10.12, TensorFlow 2.14.0, and PyTorch 2.1.0.

5.2. Task Arrival Rate Prediction Accuracy

To quantitatively compare the accuracy of the task arrival rate prediction, we calculate the mean absolute error (MAE) and the root mean square error (RMSE) and show the results in

Table 3. We observe that STGCN can predict the task arrival rate more accurately than LSTM regardless of the location of an MECS in the topology. The difference stems from the fact that, unlike LSTM, which independently considers the temporal correlation of the task arrival rate at each MECS, STGCN comprehensively considers the spatio-temporal relationships of the task arrival rates across all MECSs when predicting the task arrival rate. For example, compared to the LSTM predictor, the STGCN predictor decreases MAE and RMSE by

and

, respectively, when all MECSs are considered.
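For reference, the two error metrics can be computed as in the short sketch below. The function names and the toy arrays are illustrative and are not data from the simulation.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error over all entries."""
    return float(np.mean(np.abs(y_true - y_pred)))

def rmse(y_true, y_pred):
    """Root mean square error over all entries."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# Toy example with three time slots for a single MECS.
true_rates = np.array([1.0, 2.0, 3.0])
predicted_rates = np.array([1.0, 2.0, 5.0])
toy_mae = mae(true_rates, predicted_rates)    # 2/3
toy_rmse = rmse(true_rates, predicted_rates)  # sqrt(4/3)
```

RMSE weights large errors more heavily than MAE, so reporting both separates typical error from occasional large misses.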

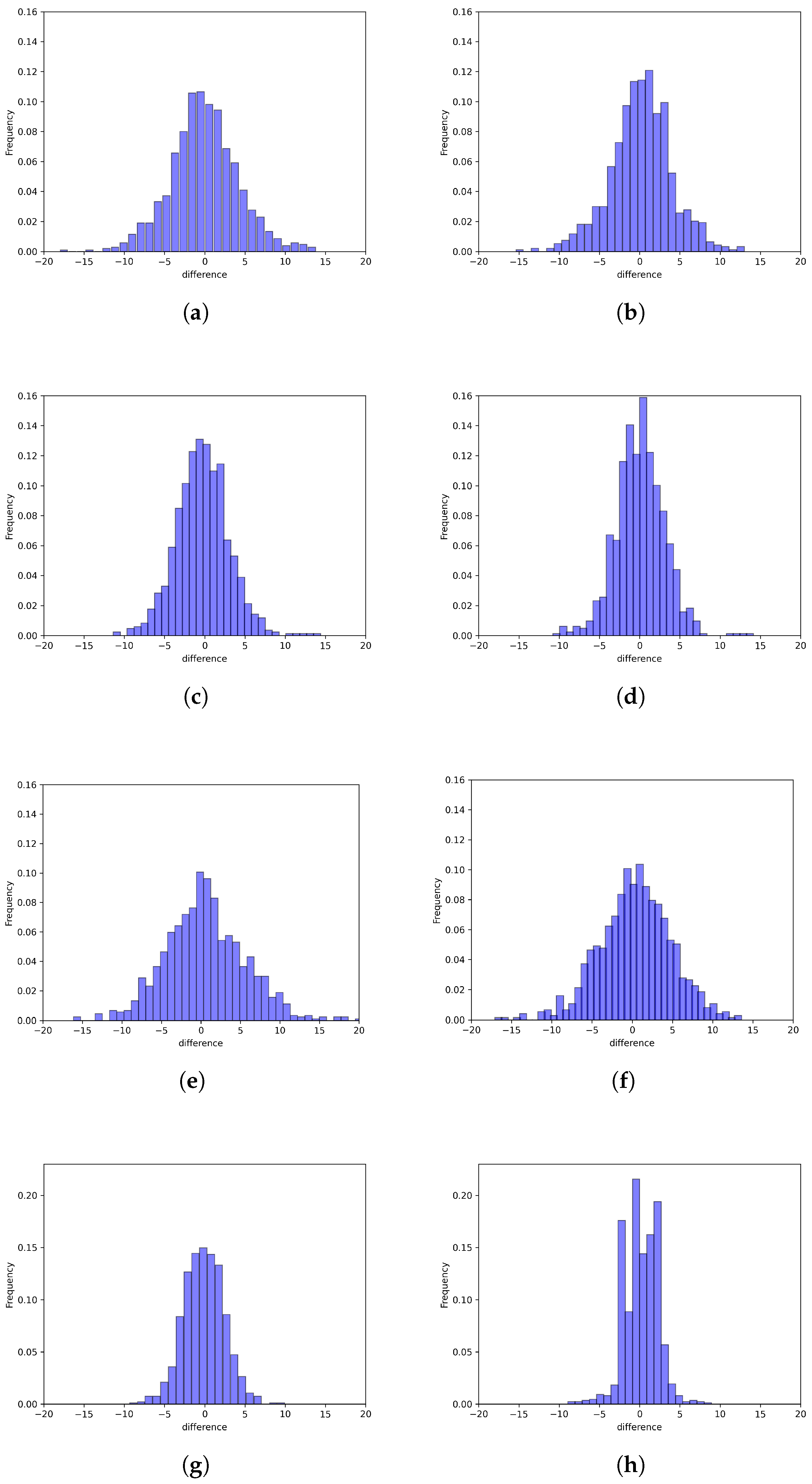

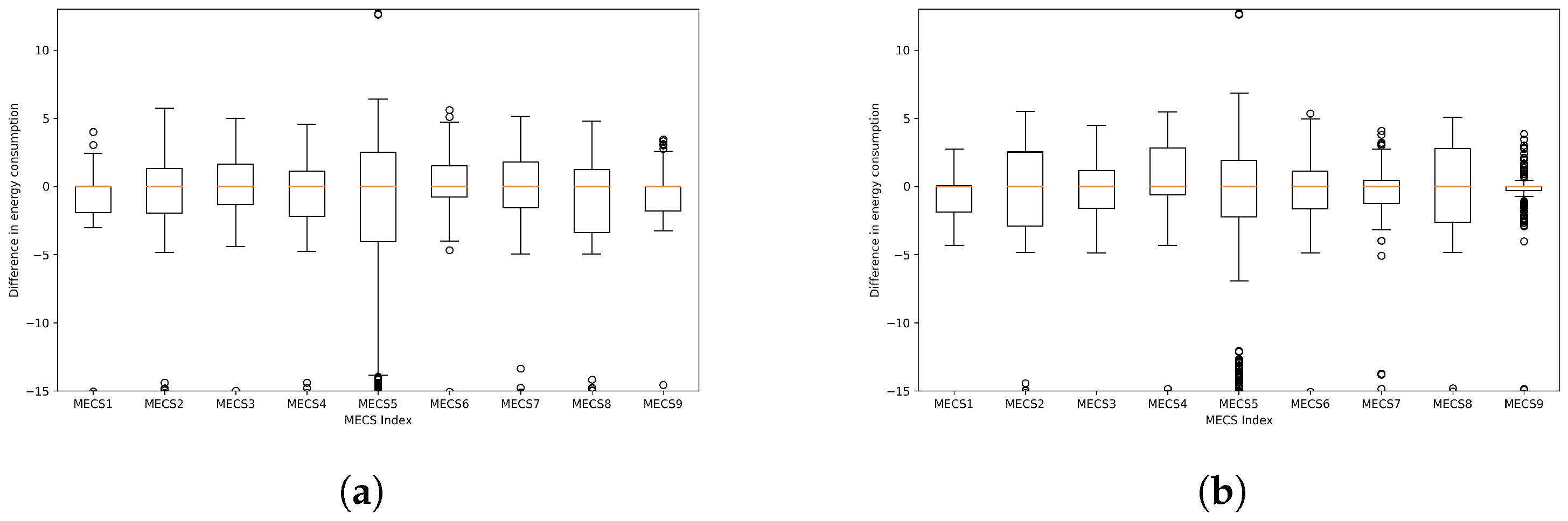

To further understand the prediction behavior, we inspect the distribution of the prediction error (i.e.,

) in

Figure 4. In this figure, for ease of visual comparison, we plot all subfigures with the same ranges for both the x-axis and y-axis. As we can see in

Figure 4, the prediction errors are more densely clustered around zero when using STGCN compared to LSTM. In addition, we observe that STGCN exhibits smaller variations in the errors compared to LSTM.

We also examine the prediction delay. Since LSTM processes inputs sequentially, the LSTM method takes an average of 450 ms to predict task arrival rates. In contrast, STGCN processes inputs in parallel by using graph convolutions. Therefore, the time required to predict task arrival rates decreases to as little as 10 ms when STGCN is used. This corresponds to a reduction compared to the LSTM method.

5.3. Performance of Sleep Control Vector Decision Method

We use GA to determine . To focus on the influence of GA on the energy consumption and the queue length, we use true task arrival rates (i.e., ) instead of the predicted task arrival rates (i.e., ) when determining at the beginning of each time slot. Given s, we compare the performance of GA and that of the exhaustive search (ES) method. Since ES explores all possible instances in the solution space, found by ES is globally optimal for the given input state.

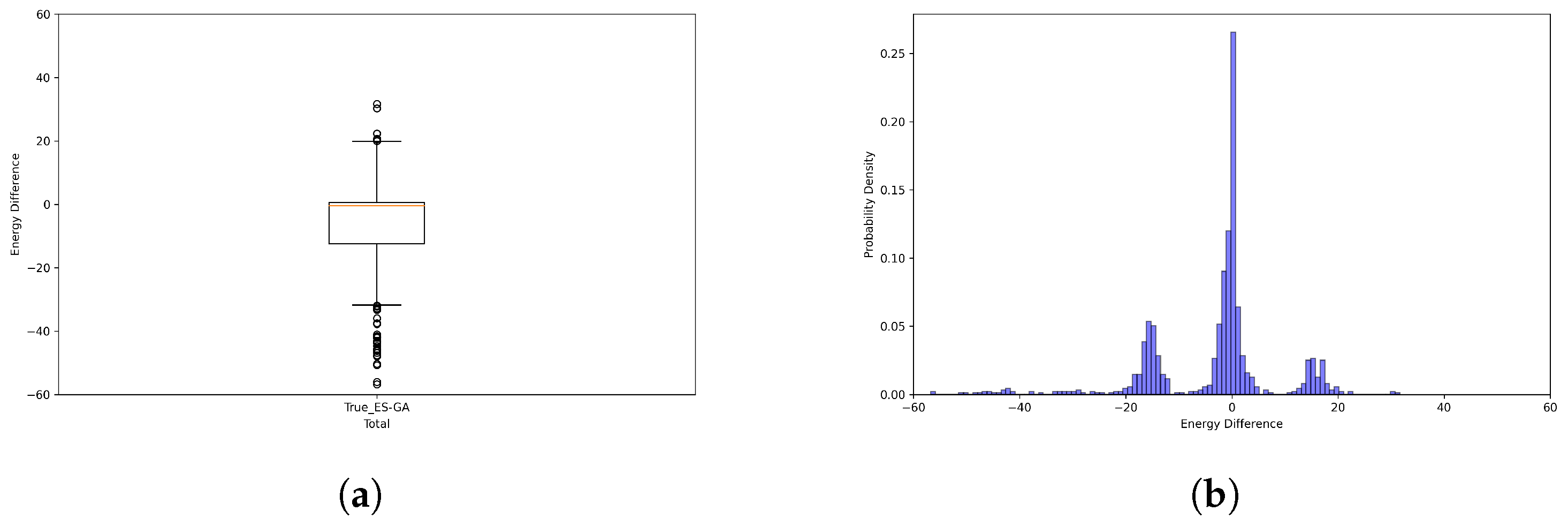

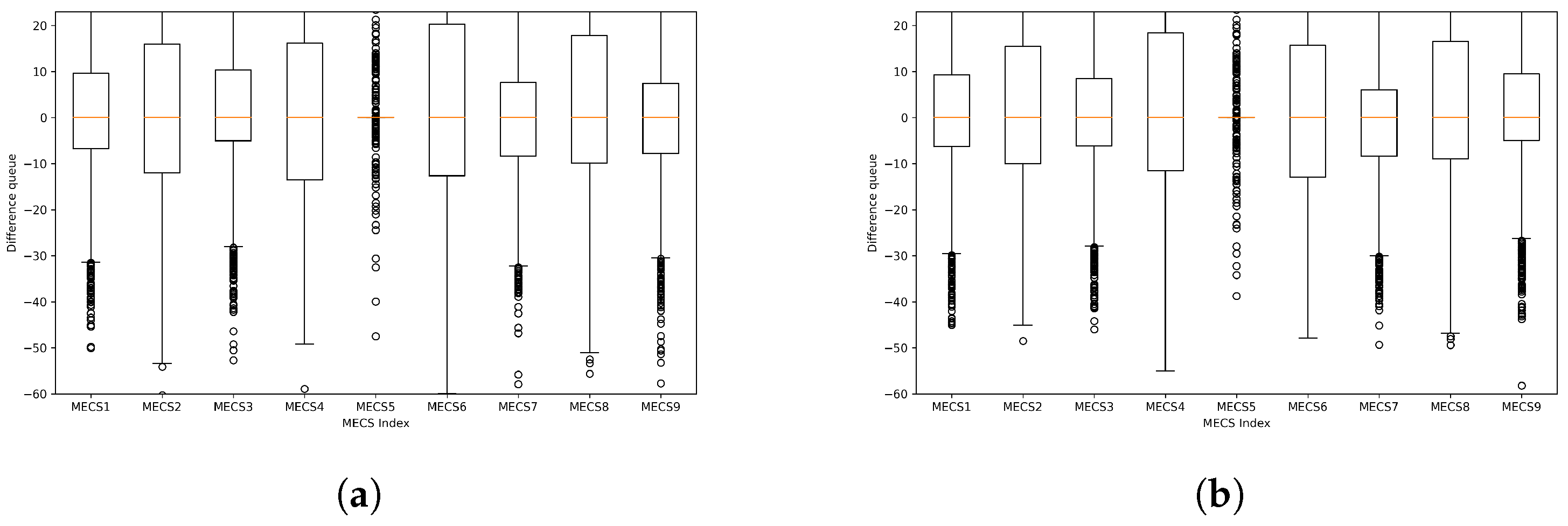

We measure the difference between

when ES is applied and that when GA is used and draw the distribution of the difference in

in

Figure 5. The circles in the box plot (

Figure 5a) represent the outliers. We observe that the distribution of

when GA is applied is very similar to that of

when ES is applied. Specifically, the

Q-values in the box plot are

,

, and

. In addition, we observe in

Figure 5b that the difference in

is densely concentrated around zero. We also examine the

Q-values of

when ES and GA are applied, respectively. When ES is used, they are

,

, and

, whereas when GA is employed, they are

,

, and

. Thus, the difference between

Q-values obtained by GA and those obtained by ES is marginal.

In

Figure 6, we show the distribution of the differences between the average queue length (i.e.,

) when ES is applied and

when GA is utilized. When compared to the ideal case where the error is zero, the median value (

) of

is 0.54. When we inspect the histogram, the average difference in

is 0.87. To further inspect the variance of

, we examine the interquartile range (IQR), which is the difference between the 75th percentile and the 25th percentile. In the case of ES, the IQR for

is 4.40, whereas with GA, the IQR is 3.91, resulting in a difference of 0.49 between them. Considering that

, such a difference can be regarded as very small.
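The quartiles and the IQR used in this comparison can be obtained as in the sketch below. It relies on NumPy's default linear interpolation between order statistics, which is an assumption because the quantile convention behind the box plots is not stated. The sample values are illustrative only.

```python
import numpy as np

def quartile_summary(samples):
    """Return (Q1, Q2, Q3, IQR) of a 1-D collection of values."""
    q1, q2, q3 = np.percentile(samples, [25, 50, 75])
    return float(q1), float(q2), float(q3), float(q3 - q1)

# Illustrative values, not simulation output.
q1, q2, q3, iqr = quartile_summary([1.0, 2.0, 3.0, 4.0, 5.0])
```

A smaller IQR means that the middle half of the samples is more tightly grouped around the median.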

We also compare ES and GA in terms of their run time. ES takes an average of 80 ms to find while GA takes an average of 29 ms. By using GA, we reduce the time needed to determine by .

5.4. Combined Effect

To evaluate the combined effects of the task arrival rate prediction and the sleep control vector determination, we compare the performance of the proposed method (STGCN-GA) with that of the following two techniques. The first method, denoted by True-ES, is an ideal method that determines via the exhaustive search by using the true task arrival rates . The second method uses GA to determine by exploiting the task arrival rate predicted by the LSTM model. Henceforth, we denote the second method as LSTM-GA.

We denote the energy consumed at an MECS

i during a time slot

t when True-ES is used as

. We also denote by

the energy consumed at an MECS

i during a time slot

t when LSTM-GA is used. We represent the energy consumed at an MECS

i by our method during a time slot

t as

. In

Figure 7, we show the box plots of

and

. To facilitate the comparison, all subfigures share the same y-axis range. We observe that the energy consumed by the proposed method is closer to the ideal value obtained by the True-ES method than the energy consumed by LSTM-GA. Specifically,

of

is −0.52 while

of

becomes −0.28. In addition, the IQR of

is 14.10 and the IQR of

decreases to 12.74.

To investigate the difference between the queue length generated by our method and LSTM-GA and the ideal queue length generated by True-ES, we introduce the following symbols. We denote the difference between

obtained by True-ES and that acquired by our method as

. We also denote the difference between

obtained by True-ES and

when LSTM-GA is used as

. Then, we show the distribution of

and

in

Figure 8. We observe that

is closer to zero than

, which means that the proposed method makes the queue length more similar to the ideal

obtained by True-ES compared to LSTM-GA. Specifically, the median of

is 0.56 while the median of

is 0.47, which corresponds to a

reduction. In addition, the IQR of

is 4.20 while the IQR of

is 3.87. Thus, the proposed method decreases the IQR of the queue length difference by

.

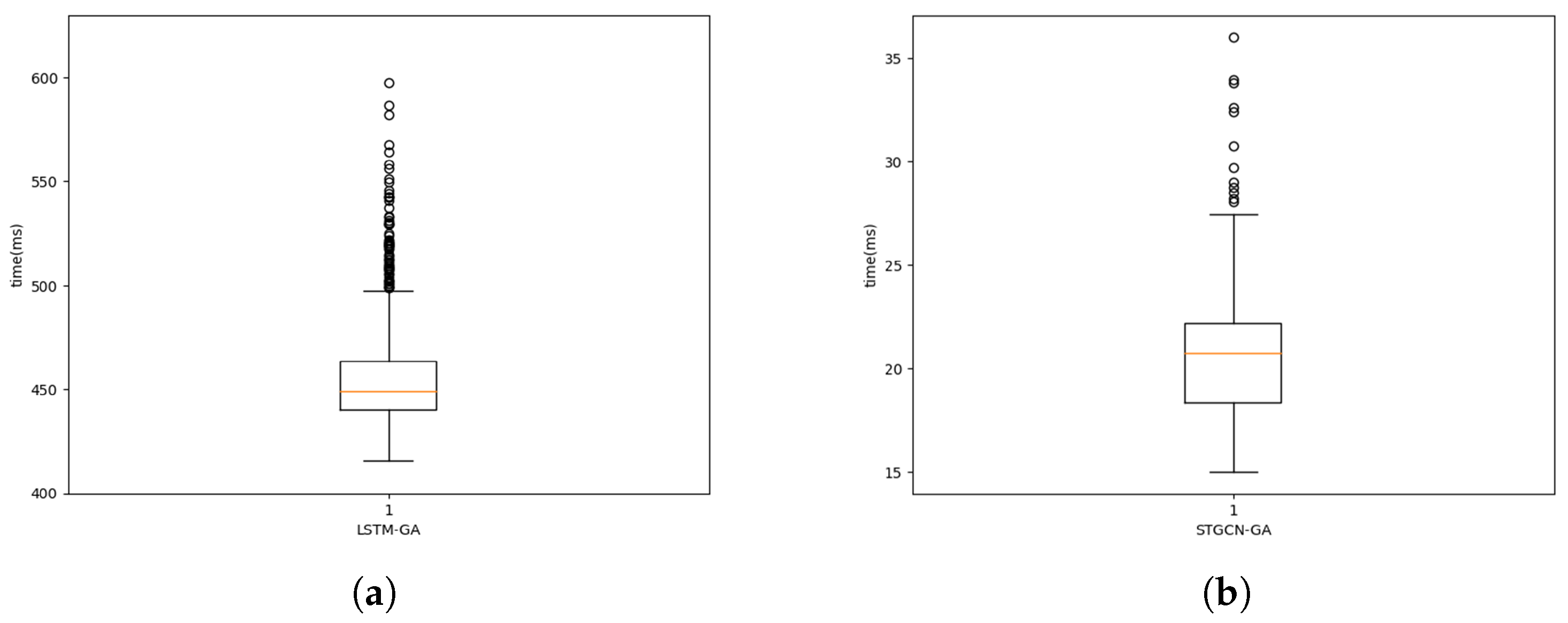

To compare the time taken for sleep control, we examine the end-to-end inference time, starting from predicting the task arrival rates to determining the sleep control vector. In

Figure 9, we show the distribution of the end-to-end inference time as the box plots. When we inspect the

Q-values, LSTM-GA obtains

ms,

ms, and

ms. The proposed method reduces the

Q-values significantly. When our method is used,

ms,

ms, and

ms. In other words, compared to LSTM-GA, the proposed method decreases the median end-to-end inference time from 449 ms to 21 ms, which is a

reduction. With respect to the IQR, our method reduces the IQR of the end-to-end inference time from 23 ms to 4 ms, which corresponds to an

decrease.
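The relative reductions discussed in Sections 5.2 to 5.4 follow from the usual formula (baseline − improved) / baseline × 100. The sketch below applies it to the value pairs quoted in the text. The helper name is illustrative. Because the inputs are the rounded figures quoted above, the results may differ slightly from percentages computed on unrounded measurements.

```python
def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return (baseline - improved) / baseline * 100.0

# (baseline, improved) pairs as quoted in the text.
quoted_pairs = {
    "prediction delay, LSTM vs. STGCN (ms)": (450.0, 10.0),
    "search time, ES vs. GA (ms)": (80.0, 29.0),
    "median queue length difference": (0.56, 0.47),
    "IQR of queue length difference": (4.20, 3.87),
    "median end-to-end inference time (ms)": (449.0, 21.0),
    "IQR of end-to-end inference time (ms)": (23.0, 4.0),
}

reductions = {name: relative_reduction(b, i) for name, (b, i) in quoted_pairs.items()}
for name, value in reductions.items():
    print(f"{name}: {value:.2f}% reduction")
```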