1. Introduction

Since the inception of animal domestication, humanity has relied on intuition, collective knowledge, and sensory signals to make effective decisions in animal husbandry. Over the centuries, these traditional methods have allowed us to achieve significant successes in livestock farming and agriculture. However, the increasing demand for food and revolutionary advancements in sensing technology have necessitated a shift towards more centralized, large-scale, and efficient forms of animal farming. This transformation is described by Neethirajan [1] as a period marked by the increasing impact of modern technologies on livestock practices. These technologies reshape farming practices such as remote monitoring, automated feeding systems, and health management.

Food security is one of the world’s most significant challenges. Global population growth and rising demand for animal products necessitate a 70% increase in animal farming production over the next 50 years [2]. According to Tilman et al. [3], the world population is projected to reach 9.2 billion by 2050, which will require a significant increase in animal production. Yitbarek [4] suggests that this will require a substantial increase in cattle, sheep, goat, pig, and poultry production. This puts additional pressure on farmers, who must provide quality care to more animals.

Considering the limited natural resources and rising production pressures, sustainable methods and increased efficiency are necessary. Animal welfare and environmental impact management are critical in modern livestock farming [5, 6]. Neethirajan [1] highlights that manual processes in animal husbandry might be inadequate and that new systems and technologies must be developed for high efficiency. In this context, Precision Livestock Farming (PLF) and Industry 4.0 technologies play a significant role in achieving efficiency and sustainability goals in livestock farming [7].

The threat posed by wild animals to farm animals is a significant issue for agricultural security. Damage caused by wildlife to agricultural production leads to severe economic losses globally. In North America and Europe, 3% of local livestock businesses are affected by such damages, while the figure rises to 18% in Africa and Asia [8]. To overcome this problem, there is a need for technological solutions that can effectively monitor and manage wildlife in addition to traditional methods.

Computer vision, as a field aimed at obtaining information about the world from images, involves the analysis of characteristics such as shape, texture, and distance [9]. Machine learning, in turn, deals with developing algorithms for extracting information from large datasets, some of which are specifically designed to solve computer vision problems [10]. Advances in deep learning have provided innovative monitoring and control capabilities in many areas, including farming [11]. Farmers generally acquire information about large numbers of animals through visual observation, which is beneficial but costly and time-consuming. Likewise, the classification of characteristics in live animals for diagnostic and imaging purposes has traditionally relied on slow and expensive methods. Computer vision systems hold the potential to automate these processes, offering high-efficiency phenotyping and extensive applications in livestock farming [12].

The primary aim of this study was to investigate the use of image processing technologies within the scope of Industry 4.0 for detecting wild animals and farm animals in precision livestock operations, with regard to meat safety and sustainability. This approach aims to reduce threats from wildlife and enhance livestock security, so that the welfare of farm animals can be protected while agricultural production losses are minimized. The specific contributions of this article are summarized as follows:

The primary contribution of this article is the utilization of deep learning-based object detection technologies to protect livestock from potential threats and improve farm safety.

Critical emphasis was placed on the ability to effectively distinguish various animal species concerning the characteristics of the model parameters. This provides valuable insights into model selection and adjustments for future studies.

We comprehensively examined the capabilities of advanced deep learning models such as YOLOv8, Yolo-NAS, and Fast-RNN to detect elements that may threaten livestock. These technologies’ effectiveness in agricultural practices can potentially revolutionize farm management and animal protection strategies.

We validated the capacity of the proposed system through a series of experiments on real-world datasets.

Detection studies were conducted on specific species, encompassing both wild and domestic animals. A notable aspect of this research is the amalgamation of farm and wild animals within a unified dataset, which enables their integration into comprehensive image detection studies.

The remaining sections of this article are organized as follows. Section 2 reviews recent research on novel technologies for observing and monitoring agriculture and wildlife. Section 3 provides the essential definitions of the system models, covering the properties of the datasets, the details of the DL approaches, the experimental environment, and the evaluation metrics. Section 4 presents the performance results of the proposed approach. Section 5 discusses the main findings and concludes the article.

2. Related Work

Numerous studies in recent years have demonstrated the impact of advanced imaging and machine learning technologies on agricultural and wildlife monitoring. These technologies have facilitated significant advancements in various applications, such as animal counting, species recognition, and individual animal tracking (Table 1).

One innovative work in this area is the machine vision system developed by Geffen et al. [13], capable of automatically counting chickens in commercial poultry farms. The system was tested in a facility housing approximately 11,000 laying hens distributed across 37 battery cages. It collected data using a camera mounted on a feed trough that traversed the length of the cages, equipped with a wide-angle lens to encompass all enclosures. The videos were processed by a Faster R-CNN, an object detection CNN fed with multi-angle images, together with algorithms designed to detect and track chicken movements within the cages. The system achieved an accuracy of 89.6% at the cage level, with an average error margin of 2.5 chickens per cage.

In the dairy sector, a system based on the YOLO algorithm developed by Shen et al. [14] achieved 96.65% accuracy in the lateral identification of dairy cows on farms. This study enhanced operational efficiency in dairy operations by providing faster and more accurate identification than traditional methods.

Focusing on breed differentiation, Barbedo et al. [15] employed CNN architectures to distinguish between Nelore and Canchim breeds. Their study encompassed 1853 images containing 8629 animal samples and tested 15 different CNN architectures, revealing that most models achieved over 95% accuracy, with some deeper architectures such as NasNet Large nearing 100% accuracy. Such models are an essential tool for managing genetic diversity and selective breeding programs.

In wildlife monitoring, the system developed by Norouzzadeh et al. [16], based on the ResNet-18 architecture, automatically classified 48 different species in the Serengeti. They trained deep neural networks to identify, count, and describe the behaviors of 48 species across 3.2 million images from the Snapshot Serengeti dataset, achieving an identification accuracy exceeding 93.8%. Moreover, when only images classified with high confidence were considered, the system could automatically identify animals in 99.3% of the data, while crowdsourced teams of human volunteers achieved an accuracy of 96.6%. This represents a valuable resource for the conservation of biodiversity and the monitoring of ecosystem health.

Studies on surveillance videos are also noteworthy. Wang et al. [17] examined the well-known Faster R-CNN algorithm and noted its inefficiency and low precision when applied directly to video surveillance. To overcome these limitations, they proposed an object detection method based on Faster R-CNN to detect dairy goats in surveillance footage, achieving twice the speed of Faster R-CNN and an average accuracy of 92.49%. Their work demonstrates how surveillance technologies can be effectively utilized in livestock management.

Unmanned Aerial Vehicle (UAV)-based studies have achieved significant successes in areas such as the management of grazing cattle [18] and the processing of dynamic herd images [19]. The authors of [18] implemented a cattle detection and counting system based on convolutional neural networks (CNNs) using aerial images captured by UAVs. Their dataset comprised 656 images of 4000 × 3000 pixels, and a second dataset from a single flight on a clear day contained 14 images. Their study enhanced detection performance through input resolution optimization using YOLOv2, achieving a precision of 0.957, a recall of 0.946, and an F-score of 0.952. The authors of [19] demonstrated the suitability of deep neural architectures for the automated detection of Holstein Friesian cattle using computer vision. They introduced a video processing pipeline composed of standard components to efficiently process dynamic herd imagery captured by UAVs, illustrating that detection and localization of Friesian cattle could be robustly performed using the R-CNN algorithm with an accuracy of 99.3%. These works highlight the potential of UAV technology for effectively monitoring and managing vast areas.

Lastly, the YOLO integration proposed by Pandey et al. [20] for preventing venomous animal attacks accelerated animal recognition and enhanced farm animal security. The deployment of YOLOv5 for snake detection and its synergistic integration with ResNet achieved a notable precision of 87% in detecting snake presence.

These studies demonstrate how imaging and machine learning technologies can revolutionize agricultural and wildlife monitoring. Our research aimed to explore further how these technologies can enhance livestock security and reduce threats from wildlife.

3. Materials and Methods

This study employed advanced image processing algorithms to differentiate wild and farm animals in diverse environments. These algorithms—YOLO v8, YOLO NAS, and Fast R-CNN—represent the forefront of machine learning techniques in object detection tasks. Each algorithm was chosen for its unique strengths in handling the complexities of animal detection in natural and controlled settings.

3.1. Animal Dataset

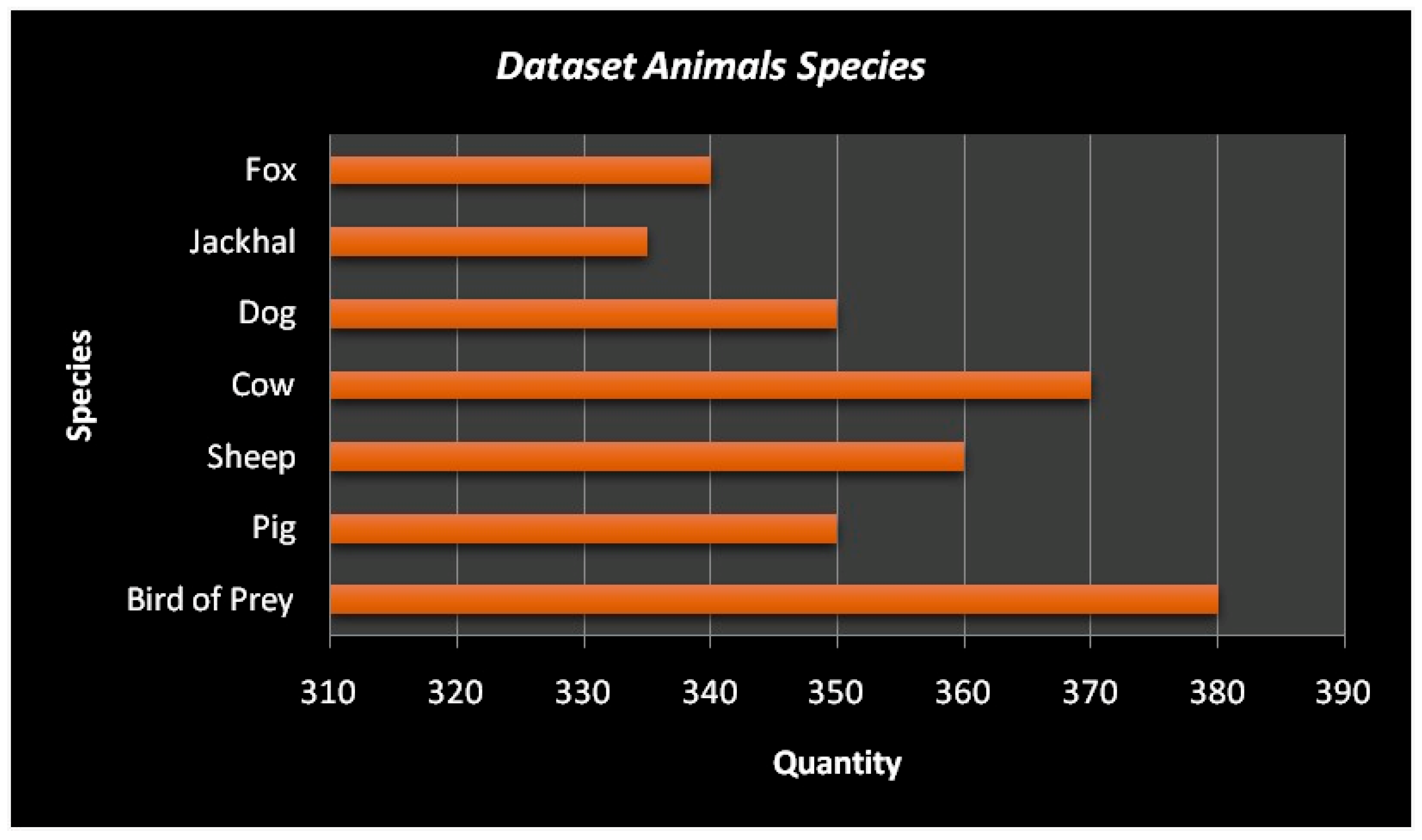

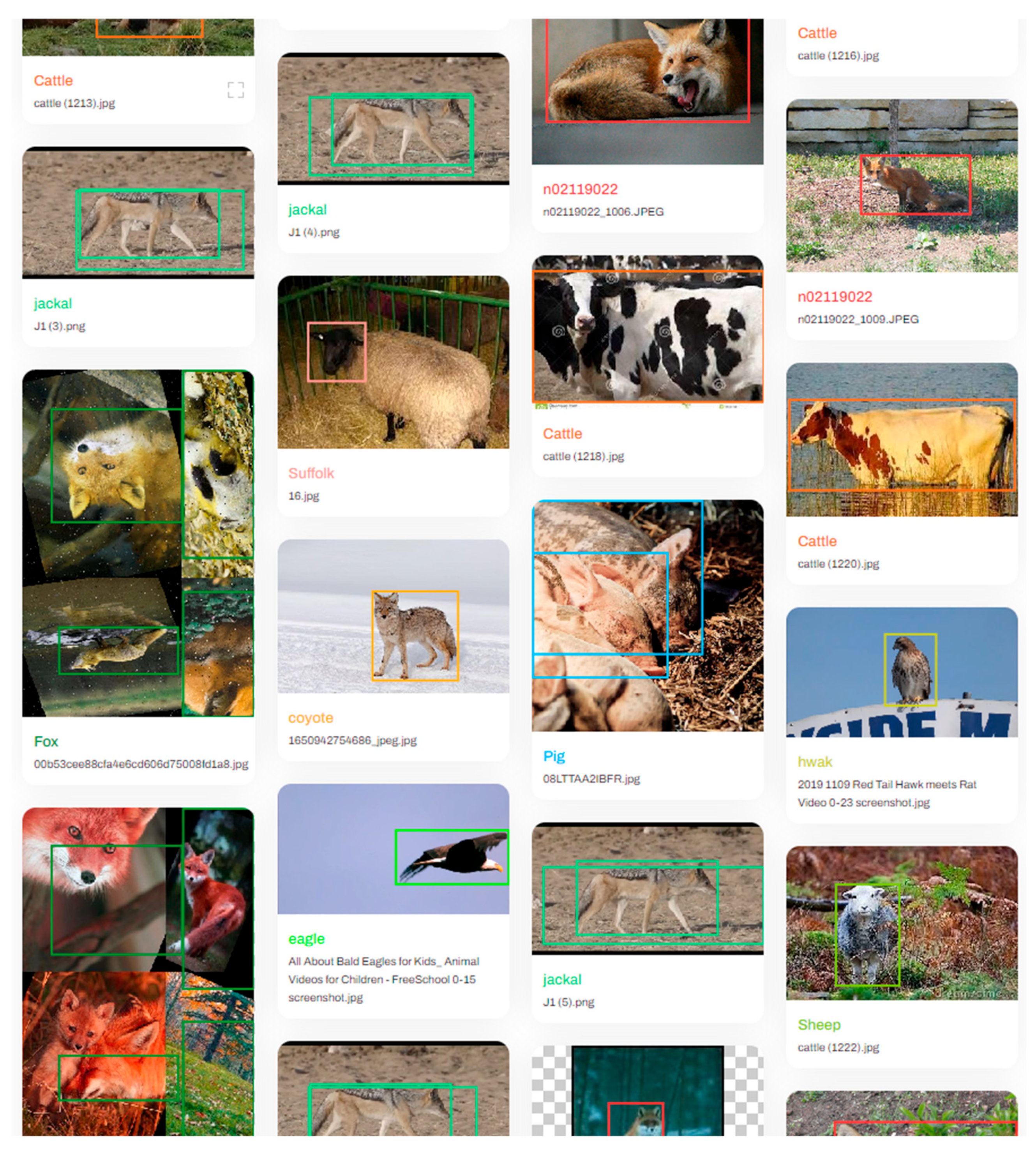

The visual dataset used in this study was selected from the open animal images of Kaggle, Roboflow, and Ultralytics [

21,

22,

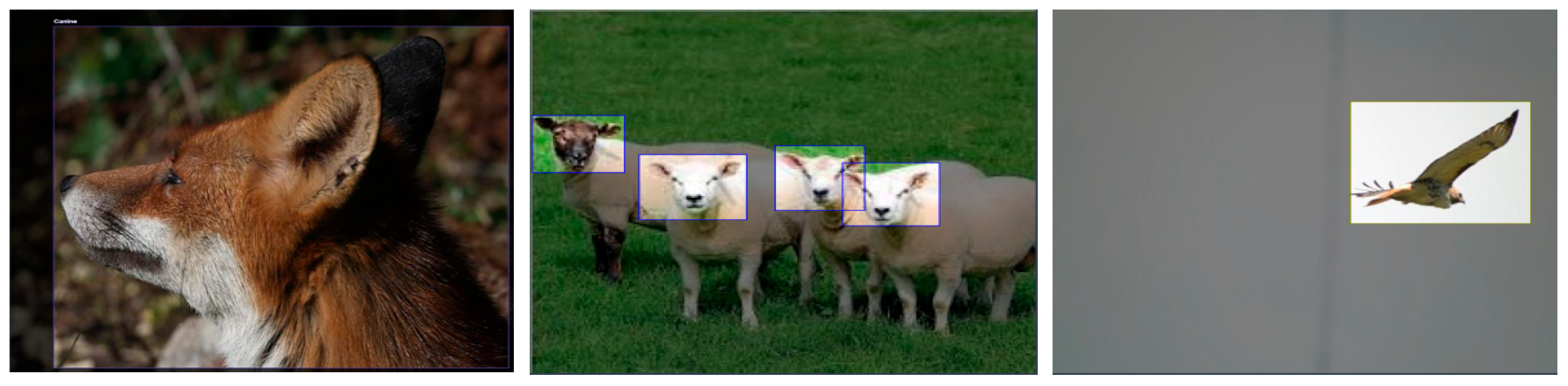

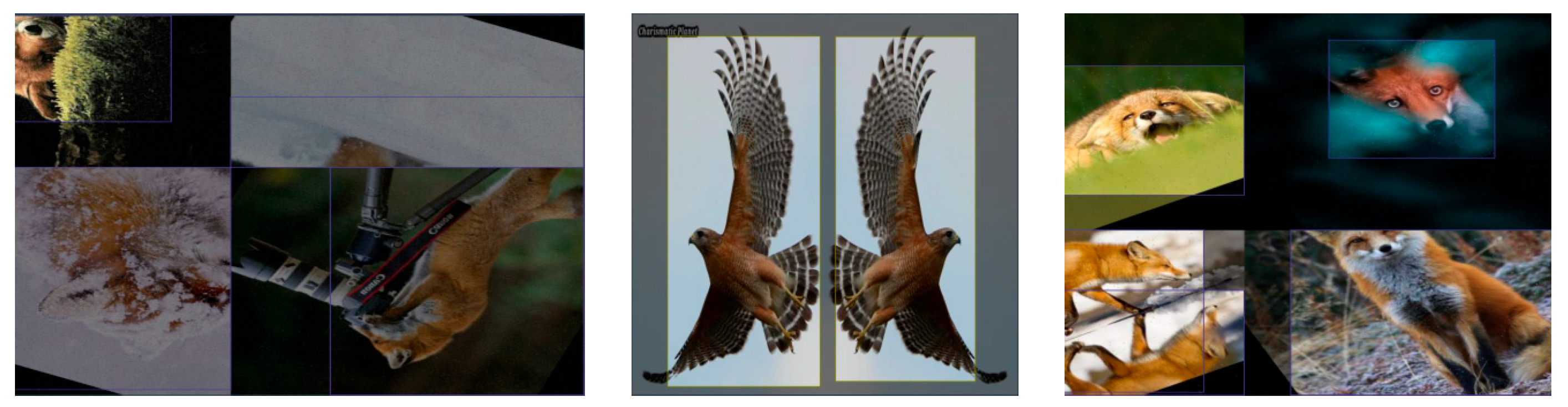

23]. In this study, a total of 2462 animal images that could potentially be present around farms were used. Care was taken to ensure that these images represented the most common species that could threaten farm animals. These species included birds of prey, pigs, cattle, sheep, foxes, jackals, and dogs. Images were collected from the free image dataset by choosing copyright-free ones (CC0) and compiled for the study. To ensure the efficient functioning of image detection, the species were included in the dataset in roughly equal numbers. Attention was paid to sourcing images taken in natural habitats. The dataset will be made available to researchers on sharing platforms. The raw form of the dataset images is presented in

Figure 1 and the distribution of animal types within the dataset is shown in

Figure 2.

After acquiring the dataset, the raw images underwent preprocessing steps for analysis using deep learning-based image processing techniques. These preprocessing steps included the below:

3.2. YOLOv8

In a 2023 study by Jocher et al. [24], YOLOv8 was introduced as the newest iteration in the YOLO series for object detection, officially released on January 10th. This edition is noted for its enhanced processing speed, which is crucial for real-time applications, without compromising the model’s accuracy. A pivotal advancement in YOLOv8 was its shift towards an anchor-free framework, which simplified the training process on varied datasets. It also incorporated a sophisticated backbone network along with multi-scale prediction capabilities, thereby significantly improving the detection of small objects. Comparative assessments have demonstrated YOLOv8’s superior accuracy over its predecessors, establishing its competitive stance among contemporary object detection models.

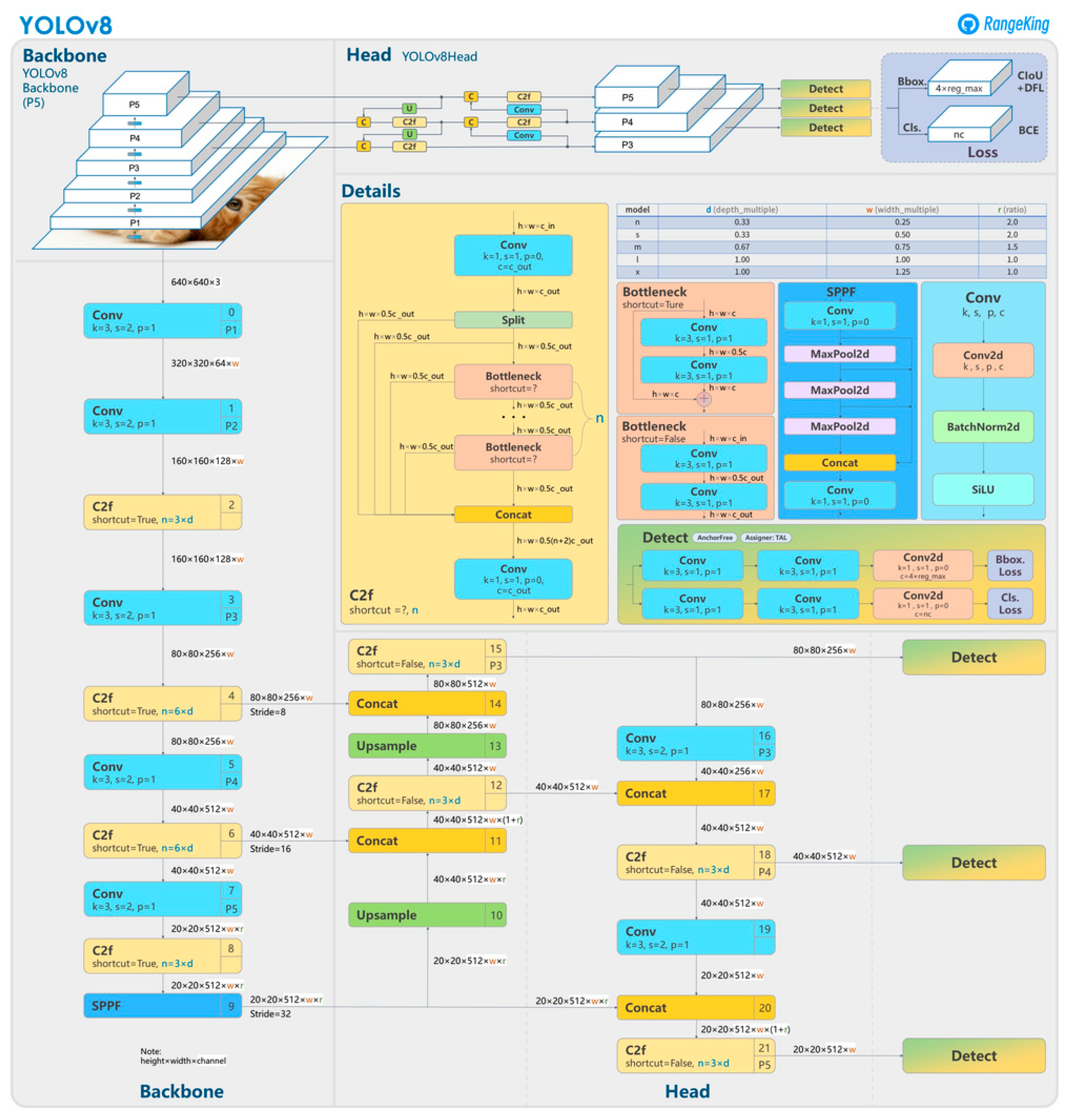

A defining feature of YOLOv8, as underscored by Hussain [25], is its single-pass detection methodology, which optimizes efficiency by combining object detection and segmentation into a single operation. This approach, together with a convolutional neural network (CNN) pre-trained on an extensive dataset, enables the model to accurately predict the presence, location, size, and class of objects within an image. YOLOv8’s architectural innovations, including an advanced backbone and an anchor-free split Ultralytics head, enhance its accuracy and detection efficiency. Furthermore, the model offers a variety of pre-trained configurations to suit different tasks and performance requirements. The YOLOv8 model architecture is shown in Figure 6.

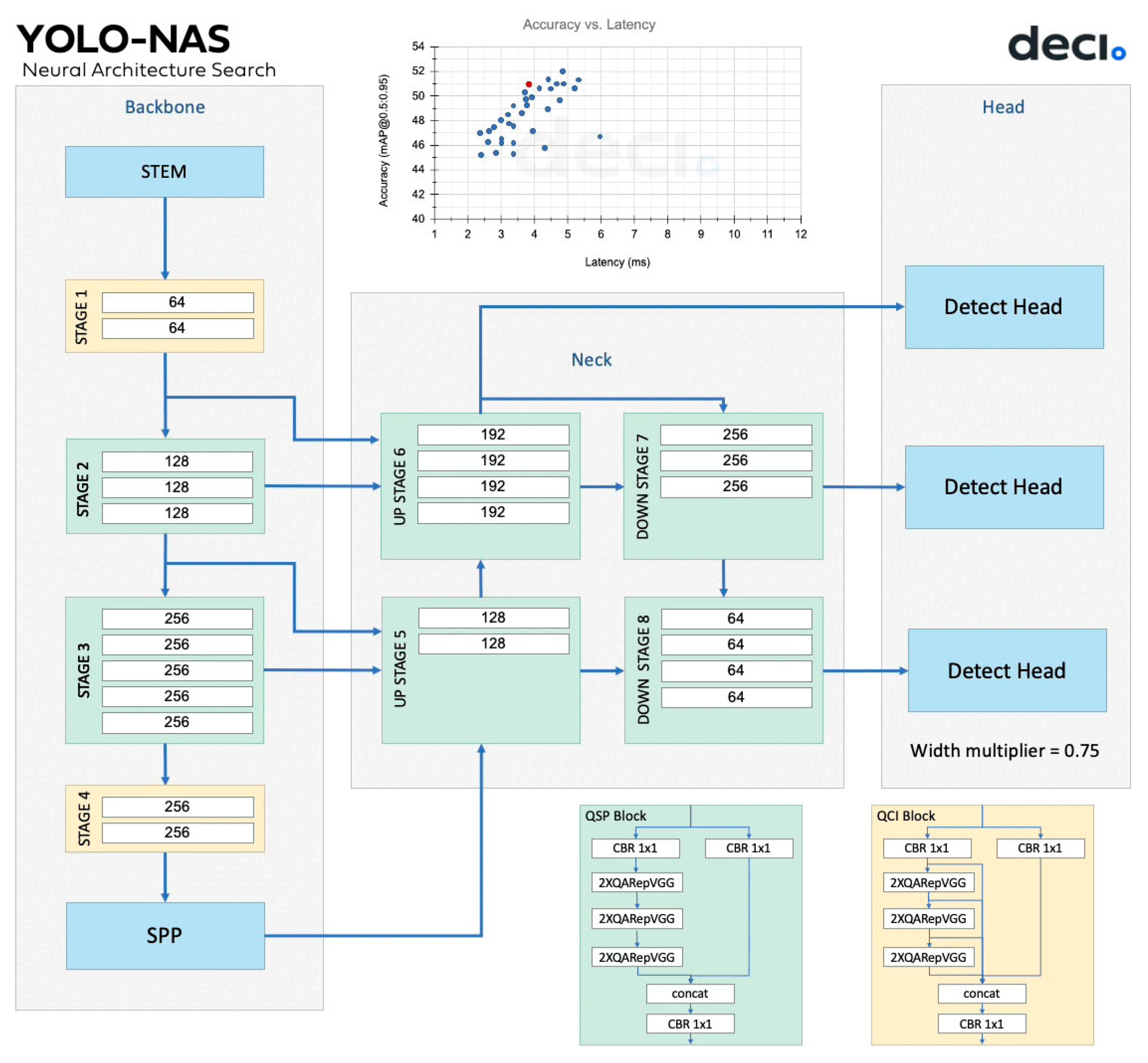

3.3. Yolo NAS

YOLO-NAS is an object detection model developed by Deci that achieves state-of-the-art (SOTA) performance compared to YOLOv5, v7, and v8. Unveiled in May 2023, YOLO-NAS represents a significant advancement in real-time object detection by integrating the “You Only Look Once” (YOLO) architecture with Neural Architecture Search (NAS). This development is particularly vital for applications demanding rapid and precise object identification, such as autonomous driving, where real-time capabilities are important for safety and efficiency [27, 28, 29].

Employing NAS, YOLO-NAS automates the optimization of neural network architecture, focusing on achieving the optimal balance between speed and accuracy. This process includes selecting stage sizes, configurations, and the number of blocks and channels based on a comprehensive search across extensive datasets.

Key to YOLO-NAS’s efficiency is its use of quantization-aware blocks and selective quantization, which minimize the computational and memory requirements during inference while maintaining high model accuracy. The training process incorporates the Objects365 and COCO 2017 datasets, enhanced by Knowledge Distillation (KD) and Distribution Focal Loss (DFL) techniques, leading to a model that excels in speed, accuracy, and applicability across various settings [30].

YOLO-NAS is distinguished by the following characteristics:

High Accuracy: Achieving leading results on diverse object detection benchmarks.

Real-Time Inference: Capable of instantaneous object detection.

Single-Stage Model: Enhancing efficiency with a one-pass detection approach.

NAS Optimization: Ensuring a finely tuned model for object detection tasks.

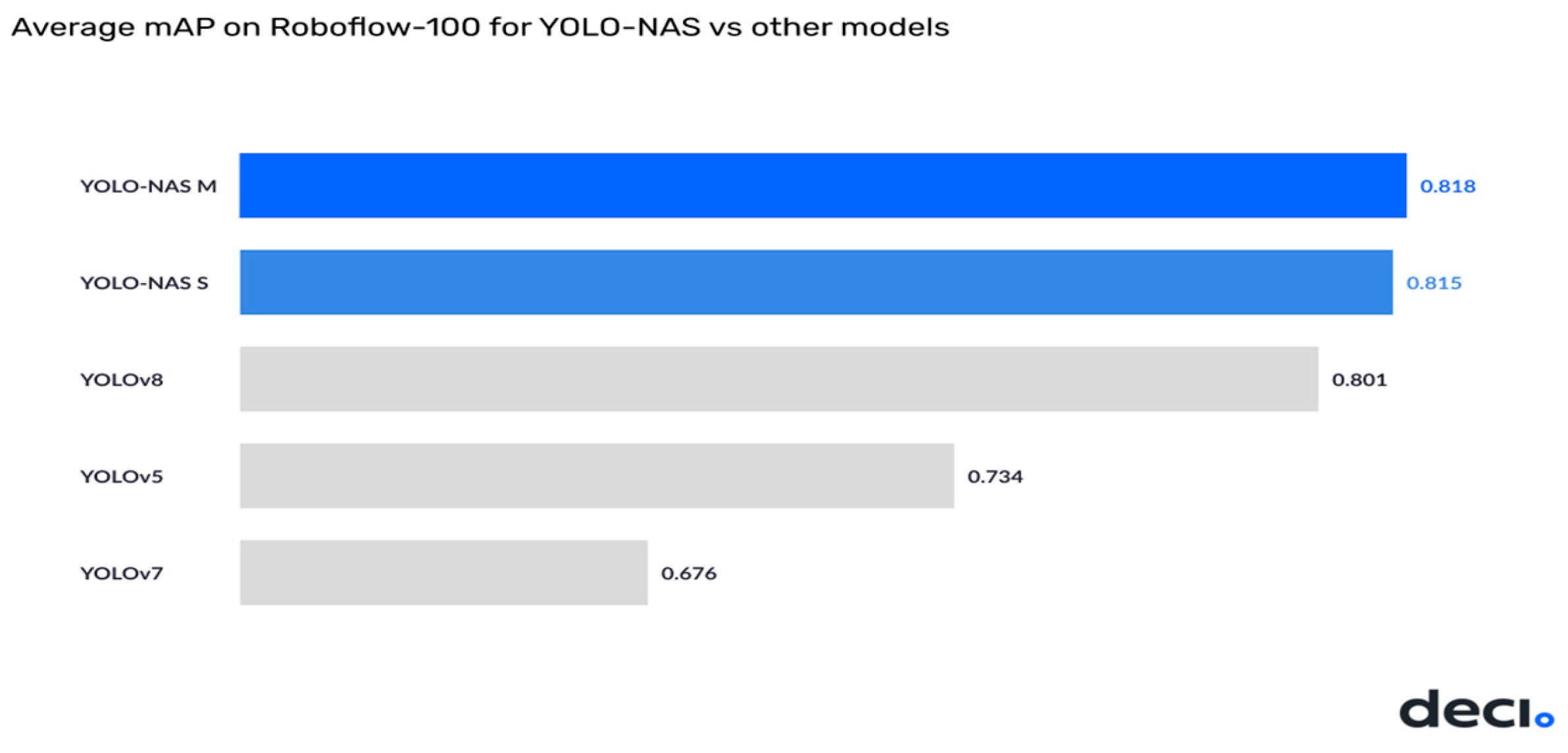

YOLO-NAS also performs well in downstream tasks. When fine-tuned on the Roboflow-100 benchmark, YOLO-NAS is reported to achieve a higher mAP than its closest competitors [31]. Figure 7 shows a comparison table with other YOLO models, and Figure 8 shows the model architecture. Good results can be obtained by fine-tuning the published YOLO-NAS code.

3.4. Fast R-CNN

Microsoft proposed FastRNN in 2018 [32]. The model builds on the idea of the residual network (ResNet) [33]. In ResNet, the network layers are not simply stacked on top of each other but form residual mappings, which are easier to optimize. ResNet follows the VGG design and modifies it by adding residual layers. ResNet has approximately 25.5 million parameters, which makes it a very heavy network. FastRNN exploits residual connections of the kind learned in ResNet and provides stable training along with comparable training accuracies at a much lower computational cost. The prediction accuracies of FastRNN are reported to be 19% higher than those of regular RNN structures and slightly lower than those of gated RNNs [32, 34].

Let $X = [x_1, \ldots, x_T]$ be the input, where $x_t \in \mathbb{R}^{D}$ denotes the t-th step feature vector. For a multi-class RNN, we aim to learn the function $F: \mathbb{R}^{D \times T} \rightarrow \{1, \ldots, L\}$, which predicts one of the L classes for a given data point X. In the standard RNN architecture, the hidden state vectors are represented as below:

$$h_t = \tanh(W x_t + U h_{t-1} + b)$$

Learning U, W in the above architecture is difficult as the gradient can have an exponentially large (in T) condition number. Unitary network-based methods can solve this problem by expanding the network size, and, in this process, they become significantly large, and the model accuracy may not remain the same.

However, FastRNN uses a simple weighted residual connection to stabilize the training by generating well-conditioned gradients. In particular, FastRNN updates the hidden state $h_t$ as follows:

$$\tilde{h}_t = \sigma(W x_t + U h_{t-1} + b), \qquad h_t = \alpha \tilde{h}_t + \beta h_{t-1}$$

Here, α and β are trainable weights that are parameterized by the sigmoid function and limited to the range 0 < α, β < 1. σ is a nonlinear function such as tanh, sigmoid, or ReLU, and can vary across datasets.
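The weighted residual update described above can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the helper names (`fastrnn_step`, `run_fastrnn`), the random initialization, the choice of tanh for σ, and the raw values used for α and β are all assumptions made for illustration only.

```python
import math
import random


def sigmoid(z):
    """Logistic function, used here to keep alpha and beta strictly inside (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def fastrnn_step(x_t, h_prev, W, U, b, alpha_raw, beta_raw):
    """One FastRNN update: h_t = alpha * tanh(W x_t + U h_prev + b) + beta * h_prev."""
    alpha = sigmoid(alpha_raw)  # trainable in practice; fixed here for illustration
    beta = sigmoid(beta_raw)
    wx = matvec(W, x_t)
    uh = matvec(U, h_prev)
    # Candidate state, i.e. the standard RNN update (sigma assumed to be tanh).
    candidate = [math.tanh(a + c + d) for a, c, d in zip(wx, uh, b)]
    # Weighted residual connection that mixes the candidate with the previous state.
    return [alpha * c + beta * h for c, h in zip(candidate, h_prev)]


def run_fastrnn(sequence, hidden_size, seed=0):
    """Run the cell over a sequence with small random weights (hypothetical setup)."""
    rng = random.Random(seed)
    input_size = len(sequence[0])
    W = [[rng.uniform(-0.1, 0.1) for _ in range(input_size)] for _ in range(hidden_size)]
    U = [[rng.uniform(-0.1, 0.1) for _ in range(hidden_size)] for _ in range(hidden_size)]
    b = [0.0] * hidden_size
    h = [0.0] * hidden_size
    for x_t in sequence:
        h = fastrnn_step(x_t, h, W, U, b, alpha_raw=-3.0, beta_raw=3.0)
    return h


final_state = run_fastrnn([[1.0, 0.5], [0.2, -0.3], [0.0, 1.0]], hidden_size=4)
print(final_state)
```

With a small α and a β close to 1, as chosen here, each step changes the hidden state only slightly, which is the intuition behind the well-conditioned gradients mentioned in the text.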

3.5. Experimental Environment

The experiments were run in a fixed computational environment to support the reproducibility of the results. The software platform was MATLAB (R2020a). The system was equipped with an NVIDIA RTX 2080 GPU (Santa Clara, CA, USA) for the parallel computations required by deep learning and image processing, 32 GB of RAM for handling large datasets, and an Intel Core i7 processor (Santa Clara, CA, USA). This combination of GPU, memory, and CPU was sufficient to train and evaluate the deep learning models and image processing algorithms used in this study.

As seen in the configuration details in Table 2, YOLOv8 utilizes an advanced backbone and an anchorless split Ultralytics head, enhancing detection efficiency and accuracy. Yolo-NAS employs Neural Architecture Search (NAS) to fine-tune the architecture for optimal balance between speed and accuracy. Fast-RNN features a simplified network structure with weighted residual connections, ensuring stable training and effective learning of temporal dynamics.

3.6. Evaluation Metrics

The results of our study were evaluated using precision, recall, mAP50, and mAP50-95, with mAP50 serving as the primary metric for comparing models. Accuracy and loss were also monitored during training. Accuracy gives an overall measure of model performance as the proportion of correctly predicted data points relative to the entire dataset. Precision is the proportion of predicted positive samples that are actually positive, so it reflects how many of the model’s detections are correct. Recall is the proportion of actual positive instances that the model correctly identifies, which is crucial for evaluating a model’s ability to capture all relevant instances. In terms of true positives (TP), false positives (FP), and false negatives (FN), the standard definitions are as follows:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

In target detection, the average precision (AP) for each target type and the mean average precision (mAP) over all targets are used to assess the detection performance of a model. AP is calculated as the area under the precision-recall curve, and mAP is the mean of the AP values over the N target classes:

$$AP = \int_0^1 P(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$
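To make the metric definitions in this section concrete, the following sketch computes precision, recall, AP as the area under the precision-recall curve, and mAP as the mean over classes. It is an illustrative assumption rather than the evaluation code used in the study: the function names are hypothetical, the all-point interpolation of the curve is one common convention, and each detection is assumed to have already been labeled as a true or false positive (for mAP50, by an IoU threshold of 0.5).

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def average_precision(detections, num_ground_truth):
    """Area under the precision-recall curve for one class.

    detections: list of (confidence, is_true_positive) pairs.
    num_ground_truth: number of annotated objects of this class.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Replace each precision by the best precision at any higher recall
    # (all-point interpolation), which removes the zigzag in the curve.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas between consecutive recall values.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class):
    """mAP is the unweighted mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Toy example: three detections of one class, two annotated objects.
toy_ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], num_ground_truth=2)
print(round(toy_ap, 4))
```

mAP50-95 follows the same procedure but repeats it for IoU thresholds from 0.50 to 0.95 in steps of 0.05 and averages the results.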

3.7. Limitations

Dataset Size and Diversity: Although the dataset comprising 2462 animal images represented a significant volume, the diversity within this dataset, particularly regarding environmental settings and animal behaviors, may not have been adequate to fully evaluate the generalizability of the deep learning models. More extensive datasets that encompass a wider range of scenarios and animal interactions could provide a more robust assessment of model performance.

Model Generalization: The models were validated using a specific dataset, which, despite its variety, represented a controlled environment. Consequently, the efficacy of these models in real-world scenarios—where factors such as lighting, weather, and landscape vary significantly—might not be as robust as indicated under controlled study conditions.

Hardware Limitations: The models’ performance was optimized for specific hardware setups that might not be universally available in practical, real-world farm settings. Dependence on high-performance GPUs for model training and inference poses significant limitations for deployment in resource-constrained environments.

Balancing Precision and Recall: While the models demonstrated commendable precision and recall rates, the balancing of these metrics is critical for practical applications. For example, the Yolo-NAS model exhibited high recall but lower precision, potentially leading to a high rate of false positives in actual deployments, which could compromise the system’s usability.

Impact of Non-Visual Factors: The current study focused exclusively on visual data for threat detection. However, in actual agricultural settings, other modalities such as acoustic signals, thermal imagery, and olfactory data could significantly enhance threat detection capabilities. Integrating these additional data types could improve detection rates and diminish the incidence of false positives.

Real-Time Processing Needs: Although the speed of the models was addressed, the real-time processing capabilities necessary in live environments—where decisions must be made swiftly to avert animal attacks—were not thoroughly tested.

Recommendations for Future Research: Addressing these limitations in future studies by broadening dataset diversity, testing models in more varied and realistic environments, integrating multimodal data, and evaluating deployment feasibility in typical farm settings with varying technological access could significantly enhance the practical applicability of the findings. Such improvements would pave a clearer pathway for deploying these technologies to bolster farm animal safety and enhance farm security.

4. Results

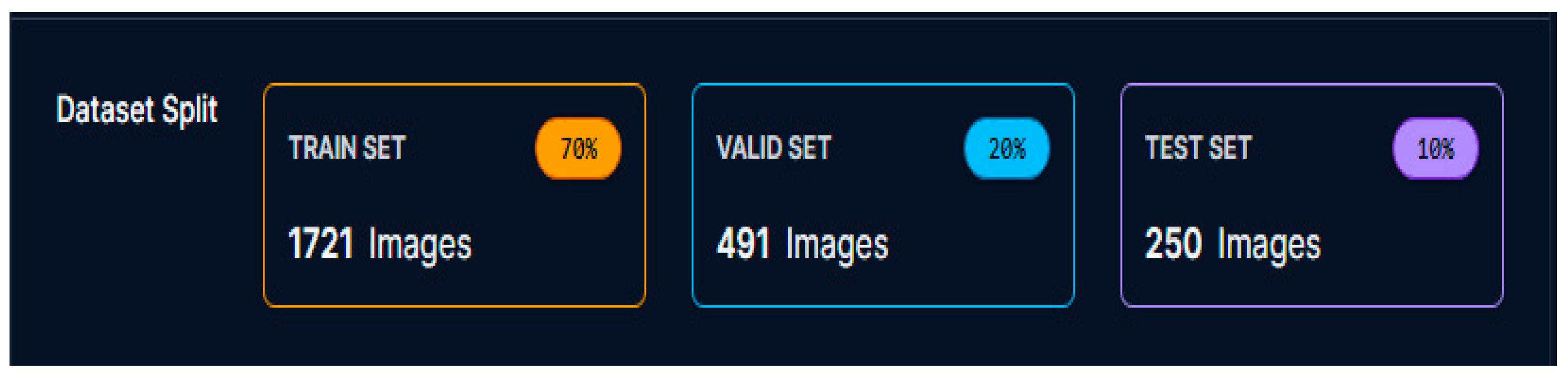

Experimental studies were conducted using the deep learning-based YOLOv8, Yolo-NAS, and Fast-RNN models. This study was based on 2462 images of animals that could pose a threat around farms. The images were selected from common species that might create risks for farm animals, including birds of prey, pigs, cattle, sheep, foxes, jackals, and dogs. The collected data were converted to a standard size of 640 × 640 pixels and divided into three main groups: training, validation, and testing. The data distribution was structured as 1721 images for the training set, accounting for 70% of the total data; 491 images for the validation set, accounting for 20%; and 250 images for the testing set, making up 10%. The numerical results of the experimental study are provided in Table 3.
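The train/validation/test partition described above can be reproduced in outline as follows. This is only a sketch under stated assumptions: the file names are placeholders, the random seed is arbitrary, and a simple shuffled split is assumed because the text does not say whether the split was stratified by species.

```python
import random


def split_dataset(items, n_train, n_val, n_test, seed=42):
    """Shuffle the items and cut them into three disjoint parts of the given sizes."""
    if n_train + n_val + n_test != len(items):
        raise ValueError("split sizes must sum to the dataset size")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Placeholder file names standing in for the 2462 images of the dataset.
image_ids = [f"img_{i:04d}.jpg" for i in range(2462)]
train, val, test = split_dataset(image_ids, n_train=1721, n_val=491, n_test=250)

for name, part in (("train", train), ("val", val), ("test", test)):
    share = 100 * len(part) / len(image_ids)
    print(f"{name}: {len(part)} images ({share:.1f}%)")
```

The printed shares (69.9%, 19.9%, and 10.2%) correspond to the rounded 70/20/10 proportions reported in the text.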

As shown in Table 3, the mean average precision values (mAP50) were 93.1% for YOLOv8, 93.1% for YOLO-NAS, and 91.2% for Fast-RNN. The YOLOv8 model exhibited high performance with 93% precision, 85.2% recall, and a 93.1% mAP50, indicating that the model mostly made accurate detections and successfully identified a large portion of the objects. Conversely, although it produced more false positives, with a lower precision of 52%, the Yolo-NAS model achieved an exceptional recall of 98.7%, detecting nearly all true objects. The Fast-RNN model demonstrated balanced performance in precision (85.2%) and recall (91.8%), obtaining an overall effective result with a mAP50 of 91.2%.
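One way to summarize the precision-recall trade-off in these numbers is the F1 score, the harmonic mean of precision and recall. The F1 score is not reported in the study; the sketch below is an added illustration that derives it from the precision and recall values quoted above.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as quoted in the text (expressed as fractions).
reported = {
    "YOLOv8": (0.930, 0.852),
    "Yolo-NAS": (0.520, 0.987),
    "Fast-RNN": (0.852, 0.918),
}

f1_by_model = {name: f1_score(p, r) for name, (p, r) in reported.items()}
for name, f1 in f1_by_model.items():
    print(f"{name}: F1 = {f1:.3f}")
```

Under this derived measure, YOLOv8 and Fast-RNN come out close to each other (roughly 0.89 and 0.88), while the low precision of Yolo-NAS pulls its F1 down to roughly 0.68 despite its very high recall.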

These findings showcase the capabilities of the developed models in detecting and tracking potential threats around the farm environment. The performance of the models was optimized for the dataset’s characteristics, which included various animal species and reflected real-world conditions. Consequently, this study highlights the potential of object detection technologies in protecting farm animals and enhancing farm security. Notably, the high precision and recall values of the YOLOv8 and Fast-RNN models make them practical candidates for farm management applications. The high recall of Yolo-NAS indicates its particular strength in detecting all potential threats, making it valuable for specific applications.

In assessing the utility of the models under different operational conditions, it was found that YOLOv8 delivered robust performance in environments characterized by high-density and complex backgrounds, thereby affirming its efficacy in crowded agricultural settings where precise and reliable detection is crucial. Concurrently, Yolo-NAS demonstrated its strengths in real-time monitoring scenarios, showcasing its capability in instantaneous threat recognition, which is essential in live monitoring systems that require immediate response to potential threats. Moreover, Fast-RNN proved to be particularly adept in resource-constrained environments, illustrating its potential applicability in edge computing devices deployed in remote farm locations where computational resources are limited. These findings highlight the adaptability of the models to varied operational demands, suggesting their broad applicability in enhancing farm security through advanced object detection technologies.

Examining the other metric values of the YOLOv8 model, it was observed that the model exhibited stable performance according to the loss parameters. The decrease in loss values by the end of training was interpreted positively. Additionally, these values indicated that the model did not overfit (memorize) the training data.

Figure 9 presents graphs of the precision, recall, mAP50, and mAP50-95 metrics and the BoxLoss, ClassLoss, and ObjectLoss values over 100 epochs of YOLOv8 model operation.

The notable improvements in precision and recall observed in the later epochs could indicate a significant advancement in the learning process, potentially due to adjustments in the learning rate or the influence of more effective feature extraction layers beginning to impact the model’s performance. The stability observed in the mAP50, alongside improvements in mAP50-95 and the recall rates for bounding boxes, suggests that the model was not only consistently detecting objects but was also becoming increasingly accurate in both the position and the classification of its detections.

The study analysis results were examined class by class. The class-based metric parameters for the YOLOv8 model were extracted and are shown in Table 4.

In Table 4, the model’s performance in detecting various animal classes is presented in detail. The model’s overall performance was impressive, with 93% precision, 85.2% recall, 93.1% mAP50, and 70.9% mAP50-95 values. Particularly for the birds of prey class, the model showed remarkable success, with 97.1% precision and 95.1% recall and recording a mAP50 of 99.2% and mAP50-95 of 83.7%. This indicated the model’s high effectiveness in distinguishing birds of prey from other species.

High precision and recall values were also observed for large mammals such as pigs, sheep, and cattle and predatory mammals like dogs, jackals, and foxes. The mAP50 values for each animal class were generally high, demonstrating the model’s ability to detect various species accurately. However, mAP50-95 values were typically lower, indicating a decrease in model performance as the IoU threshold increased.
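The gap between mAP50 and mAP50-95 noted above comes from how strictly a predicted box must overlap the annotated box to count as correct. The following sketch, using hypothetical box coordinates, computes the Intersection over Union (IoU) of two boxes and shows at which of the ten IoU thresholds from 0.50 to 0.95 the prediction would still count as a match.

```python
def iou(box_a, box_b):
    """Intersection over Union for boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def matches_at_thresholds(predicted, truth, thresholds):
    """For each IoU threshold, report whether the prediction counts as a match."""
    value = iou(predicted, truth)
    return {t: value >= t for t in thresholds}


# IoU thresholds used by mAP50-95: 0.50, 0.55, ..., 0.95.
thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]

# Hypothetical example: the prediction is shifted by 10 pixels in both directions.
truth_box = (0.0, 0.0, 100.0, 100.0)
predicted_box = (10.0, 10.0, 110.0, 110.0)

overlap = iou(predicted_box, truth_box)
matches = matches_at_thresholds(predicted_box, truth_box, thresholds)
print(f"IoU = {overlap:.3f}")
print([t for t, ok in matches.items() if ok])
```

In this example the IoU is about 0.68, so the detection counts as correct at the thresholds from 0.50 to 0.65 but is rejected from 0.70 upward, which is why a model can score high on mAP50 yet lower on mAP50-95.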

These findings show that the model successfully detected various animal types, particularly excelling with specific species such as birds of prey. Moreover, this study contributes to the development of automatic detection systems to enhance farm security and protect poultry from potential threats. These results further support the effectiveness of deep learning models in animal detection and classification.

In Figure 10, we present an example output from the YOLOv8 model applied to the task of detecting various wild and farm animals in natural and controlled environments. This figure illustrates the model’s capacity to accurately identify and differentiate between species, showcasing bounding boxes that delineate individual animals. The high detection accuracy, as demonstrated in the figure, underscores the effectiveness of YOLOv8’s enhanced deep learning architecture in handling complex image recognition tasks within diverse settings.

Table 5 provides a comprehensive comparison of the Yolo-NAS, YOLOv8, and Fast-RNN models, highlighting key parameters such as training duration and processing speed, alongside performance metrics including precision, recall, and mAP50. The results offer valuable insights into how each model performs under various operational conditions. Notably, the Fast-RNN model excels in environments with limited resources, demonstrating high processing speed and efficiency. The YOLOv8 model distinguishes itself with high accuracy in complex and densely populated backgrounds, while the Yolo-NAS model’s exceptional recall indicates its ability to detect nearly all true objects, making it particularly effective for real-time monitoring scenarios. These findings underscore the suitability of these models for enhancing farm security and animal protection applications. A detailed assessment of each model’s capabilities can assist in selecting the most appropriate model for the specific challenges encountered in diverse farm settings.

5. Discussion

This research extensively examined the capabilities of deep learning algorithms such as YOLOv8, Yolo-NAS, and Fast-RNN in detecting animal species that could potentially pose threats to farm environments. This study was conducted on various animal images comprising multiple species that could pose potential risks to farm animals. The results showed that each model could detect animals with high accuracy and reliability under real-world conditions.

According to the analysis results, the YOLOv8 model demonstrated superior performance, with a precision of 93%, a recall of 85.2%, and a mAP50 of 93.1%. This indicates that YOLOv8 can detect objects with high accuracy in most cases. On the other hand, while showing a lower precision of 52%, the Yolo-NAS model achieved a remarkable recall of 98.7%, indicating its comprehensive detection ability, especially among a broad and diverse range of species. The Fast-RNN model displayed balanced performance, with a precision of 85.2% and a recall of 91.8%, reaching an overall effective detection ability with a mAP50 of 91.2%.

The outcomes of this study highlight the need for a detailed, class-level examination of each algorithm's detection performance. Specifically, detection success was markedly higher for certain classes, such as birds of prey. This presents opportunities for customizing and optimizing the algorithms for particular animal types.

In evaluating the efficacy of various deep learning models across a multitude of animal detection studies, our research situates itself within a dynamic field characterized by significant variability in model performance, as reflected in the comparative Table 6. For instance, studies leveraging high-capacity networks like NasNet Large and AlexNet, as demonstrated by Barbedo et al. [15] and Shen et al. [14], reported accuracy levels of 95% and 96.65%, respectively, showcasing their effectiveness in structured environments with relatively uniform subjects such as cattle and dairy cows.

Conversely, Geffen et al. [13] and Ferrante et al. [41], employing Faster R-CNN and Scaled-YoloV4, achieved lower accuracies of 89.6% and 82%, respectively. These outcomes may reflect the challenges associated with more complex or heterogeneous datasets, underscoring the necessity for models that can adapt to diverse visual inputs without sacrificing accuracy. Interestingly, the perfect accuracy of 100% reported by Schütz et al. [35] using YoloV4 on a dataset of fox images illustrates the potential of finely tuned models to achieve exceptional results in highly specialized settings.

Our study, employing YoloV8 on a dataset comprising 2462 images of seven specific animal types, achieved a commendable precision of 93%. This positions our findings competitively, particularly when considering the diversity of our dataset, which included multiple animal species in various environmental contexts. This performance is notably superior to that of Krishnan et al. [39] and Ferrante et al. [41], who used other Yolo variants on larger datasets but reported lower accuracies.

This variability in accuracy across studies underscores the importance of selecting a model suited to the specific data characteristics and detection requirements. The high performance of models like YoloV4 and YoloV5 in other studies and of YoloV8 in ours suggests that advancements in the Yolo architecture have progressively enhanced the ability to handle complex detection tasks. Moreover, the wide range of accuracies observed highlights the ongoing need for improvements in model robustness and generalization, so that deep learning tools remain effective across the evolving challenges of wildlife monitoring and agricultural management.

In conclusion, this research underscores the significant potential of deep learning-based object detection technologies in protecting farm animals and enhancing farm security. The class-based performance analysis of the models demonstrates the need to tailor model parameters to the characteristics of each animal species so that the species can be distinguished effectively. This provides valuable insights for future work on model selection and adjustment.

This study provides further evidence that deep learning models for animal detection and classification offer valuable solutions for practical applications. Future research on model optimization and customization is expected to play a central role in farm management and animal protection strategies. In this context, the further development and testing of models under real-world conditions will be a focus of future research in this field.