Performance Evaluation and Optimization of 3D Models from Low-Cost 3D Scanning Technologies for Virtual Reality and Metaverse E-Commerce

Abstract

1. Introduction

2. Related Work

2.1. NeRF (Neural Radiance Field)

2.2. Photogrammetry

2.3. LiDAR Sensors

2.4. VR in the E-Commerce Field

2.5. Influence of 3D Models in E-Commerce Activities

3. Low-Cost Digitisation for VR E-Commerce

3.1. Software Tools Selection Based on Scanning Techniques

3.2. Hardware Used

- Apple iPad Pro 2022 (Ciudad Real, Spain). Dual rear camera with a 12 megapixel (MP) wide lens and a 10 MP ultra-wide lens, plus an integrated LiDAR sensor. This is the device on which we used Luma AI and the LiDAR feature of Polycam.

- Xiaomi Redmi Note 8 (Ciudad Real, Spain). Quad rear camera: 48 MP (main), 8 MP (ultra-wide), 2 MP (macro) and 2 MP (depth).

3.3. Features and Quality Metrics Used

- Size of the .obj file in MB.

- Number of polygons of the model (polygon count).

- Size of the model’s texture in MB.

- NR-3DQA 1.0 (https://github.com/zzc-1998/NR-3DQA accessed on 7 July 2024) [32]. This no-reference metric takes as input a point cloud or a 3D mesh and extracts colour and geometric features, such as curvature, sphericity or linearity. After four statistical parameters are estimated, a 64-dimensional feature vector is constructed and fed to a Support Vector Regression (SVR) model, which regresses a quality score expressed as a Mean Opinion Score (MOS). The model is trained on the public Waterloo Point Cloud (WPC) database [33], which comprises 760 point clouds: 20 original ones and 740 versions distorted through different methods. Each cloud is annotated with an MOS, which represents the visual quality with which the point cloud is perceived by the human visual system.

- MM-PCQA 1.0 (https://github.com/zzc-1998/MM-PCQA accessed on 7 July 2024), proposed by Zhang et al. [34]. This no-reference metric takes as input a point cloud in .PLY format. It first splits the point cloud into sub-models to capture local geometric distortions such as point offsets and down-sampling, and then converts the point cloud into 2D image projections to extract texture features. The sub-models are encoded with point-based neural networks, such as PointNet++ and DGCNN (Dynamic Graph CNN), and the projected images with image-based neural networks. The main innovation of this metric is a symmetric cross-modal attention mechanism that fuses the quality-aware multi-modal information; the fused features are then used to predict the quality score of the point cloud. The loss function used for training combines a term that minimises the difference between the predicted and actual quality scores with a term that preserves the quality ranking among the point clouds. As with NR-3DQA, the WPC database was used to train the neural networks.

- CMDM (Color Mesh Distortion Measure) 0.15.1 (https://github.com/MEPP-team/MEPP2 accessed on 7 July 2024) [35]. This full-reference metric evaluates the quality of a coloured 3D mesh by combining geometric and colour features to estimate perceived distortion. Features are extracted at a local level and then analysed globally at multiple scales. Geometric features include mean curvature and curvature structure comparison, while colour features are obtained by transforming vertex colours into the LAB2000HL colour space and considering luminance, chroma and hue. These features are compared between the reference mesh and the distorted (compared) mesh, and an optimised linear combination of them, with weights determined through logistic regression, yields a measure of perceived quality.

- Accuracy of the 3D model. This is obtained by comparing the triangular mesh of a reference model with that of the model being evaluated. For this purpose, we use the Root Mean Square (RMS) of the distances computed with CloudCompare 2.6.3 (https://www.danielgm.net/cc/ accessed on 7 July 2024), a tool widely used in the literature for comparing meshes and point clouds, and we follow the tool’s recommendations for performing the comparisons. Since no ground-truth model of the scanned products is available, we take as reference the 3D models produced by the most accurate scanning technology according to the results of Section 3.4.
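The geometric descriptors listed for NR-3DQA (linearity and sphericity, together with the related planarity) are commonly defined from the sorted eigenvalues λ1 ≥ λ2 ≥ λ3 of the covariance matrix of a local neighbourhood of points: linearity = (λ1 − λ2)/λ1, planarity = (λ2 − λ3)/λ1 and sphericity = λ3/λ1. The Python sketch below computes these values for one neighbourhood and summarises a feature over a model with its mean and standard deviation. It is a simplified illustration, not the NR-3DQA code: the function names, the single precomputed neighbourhood and the choice of mean and standard deviation as summary statistics are assumptions.

```python
from __future__ import annotations

import math
from statistics import mean, pstdev


def covariance(points: list[tuple[float, float, float]]) -> list[list[float]]:
    """3x3 covariance matrix of one local neighbourhood of 3D points."""
    n = len(points)
    c = [sum(p[i] for p in points) / n for i in range(3)]
    return [[sum((p[i] - c[i]) * (p[j] - c[j]) for p in points) / n
             for j in range(3)] for i in range(3)]


def sym_eigenvalues(a: list[list[float]]) -> tuple[float, float, float]:
    """Eigenvalues of a symmetric 3x3 matrix, largest first (closed-form solution)."""
    p1 = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
    if p1 == 0.0:  # already diagonal: eigenvalues are the diagonal entries
        l1, l2, l3 = sorted((a[0][0], a[1][1], a[2][2]), reverse=True)
        return l1, l2, l3
    q = (a[0][0] + a[1][1] + a[2][2]) / 3.0
    p = math.sqrt((sum((a[i][i] - q) ** 2 for i in range(3)) + 2.0 * p1) / 6.0)
    b = [[(a[i][j] - (q if i == j else 0.0)) / p for j in range(3)] for i in range(3)]
    det_b = (b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
             - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
             + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0]))
    phi = math.acos(max(-1.0, min(1.0, det_b / 2.0))) / 3.0  # clamp rounding error
    l1 = q + 2.0 * p * math.cos(phi)
    l3 = q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)
    return l1, 3.0 * q - l1 - l3, l3


def shape_features(points: list[tuple[float, float, float]]) -> tuple[float, float, float]:
    """(linearity, planarity, sphericity) of one neighbourhood."""
    l1, l2, l3 = sym_eigenvalues(covariance(points))
    if l1 <= 0.0:  # all points coincide, so the shape is undefined
        return 0.0, 0.0, 0.0
    return (l1 - l2) / l1, (l2 - l3) / l1, l3 / l1


def summarise(values: list[float]) -> tuple[float, float]:
    """Example per-model statistics for one feature (assumed: mean and std. dev.)."""
    return mean(values), pstdev(values)
```

For four points on a line, `shape_features` returns linearity 1 and planarity and sphericity 0; for the four corners of a square it returns planarity 1.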
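The MM-PCQA training objective described above combines a score-regression term with a ranking term. A minimal Python sketch of such an objective follows, assuming mean squared error for the regression part and a pairwise hinge penalty for the ranking part. The exact terms and weighting used by Zhang et al. [34] may differ, and `rank_weight` and `margin` are hypothetical parameters introduced for illustration.

```python
from __future__ import annotations


def mse_loss(pred: list[float], target: list[float]) -> float:
    """Mean squared error between predicted and subjective (MOS) scores."""
    if not pred or len(pred) != len(target):
        raise ValueError("pred and target must be non-empty and equally long")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def pairwise_rank_loss(pred: list[float], target: list[float], margin: float = 0.0) -> float:
    """Average hinge penalty over MOS-ordered pairs.

    With margin=0 only pairs predicted in the wrong order are penalised.
    """
    total, pairs = 0.0, 0
    for i in range(len(pred)):
        for j in range(i + 1, len(pred)):
            if target[i] == target[j]:
                continue  # tied scores carry no ranking information
            sign = 1.0 if target[i] > target[j] else -1.0
            total += max(0.0, margin - sign * (pred[i] - pred[j]))
            pairs += 1
    return total / pairs if pairs else 0.0


def combined_loss(pred: list[float], target: list[float], rank_weight: float = 1.0) -> float:
    """Regression term plus a weighted ranking term (hypothetical weighting)."""
    return mse_loss(pred, target) + rank_weight * pairwise_rank_loss(pred, target)
```

A prediction that is offset but correctly ordered is penalised only by the regression term, whereas swapping the order of two models also triggers the ranking term.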
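For CMDM, the last step described above reduces several feature differences to a single distortion score through a weighted linear combination. The Python sketch below illustrates only that pooling-and-combination step. It assumes that each feature’s local differences are averaged over the mesh at each scale and then across scales; the feature names and the weights in `EXAMPLE_WEIGHTS` are hypothetical placeholders, not the coefficients fitted for CMDM in [35].

```python
from __future__ import annotations

from statistics import mean

# Hypothetical weights for illustration only; CMDM's real coefficients were
# fitted by logistic regression on subjective scores and are not reproduced here.
EXAMPLE_WEIGHTS = {
    "mean_curvature": 0.3,
    "curvature_structure": 0.2,
    "lightness": 0.2,
    "chroma": 0.2,
    "hue": 0.1,
}


def pooled_difference(per_scale_local: list[list[float]]) -> float:
    """Mean over scales of the mean local difference (assumed pooling scheme)."""
    return mean(mean(values) for values in per_scale_local)


def combined_score(pooled: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted linear combination of pooled feature differences."""
    if pooled.keys() != weights.keys():
        raise ValueError("pooled features and weights must cover the same names")
    return sum(weights[name] * pooled[name] for name in pooled)
```

In this sketch a larger score means more perceived distortion, matching the reading of CMDM as a distortion measure.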
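The accuracy measure above summarises many point-to-surface distances as a single RMS value. As a simplified illustration of what that value represents, the Python sketch below uses a brute-force cloud-to-cloud variant: for each vertex of the evaluated model it takes the distance to the nearest reference vertex and reports the root mean square. This is an approximation under stated assumptions, not CloudCompare’s algorithm, which can measure distances to the triangles of the reference mesh and uses spatial indexing instead of an O(n·m) search.

```python
import math


def nearest_distance(point, reference):
    """Euclidean distance from one point to its nearest reference vertex (brute force)."""
    return min(math.dist(point, ref) for ref in reference)


def rms_distance(compared, reference):
    """Root mean square of nearest-neighbour distances from `compared` to `reference`."""
    if not compared or not reference:
        raise ValueError("both point sets must be non-empty")
    squared = [nearest_distance(p, reference) ** 2 for p in compared]
    return math.sqrt(sum(squared) / len(squared))


if __name__ == "__main__":
    reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    evaluated = [(0.0, 0.0, 3.0), (1.0, 0.0, -4.0)]
    print(rms_distance(evaluated, reference))  # sqrt((9 + 16) / 2), about 3.5355
```

The measure is asymmetric: swapping the roles of the two point sets can give a different value, which is why the choice of reference model matters.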

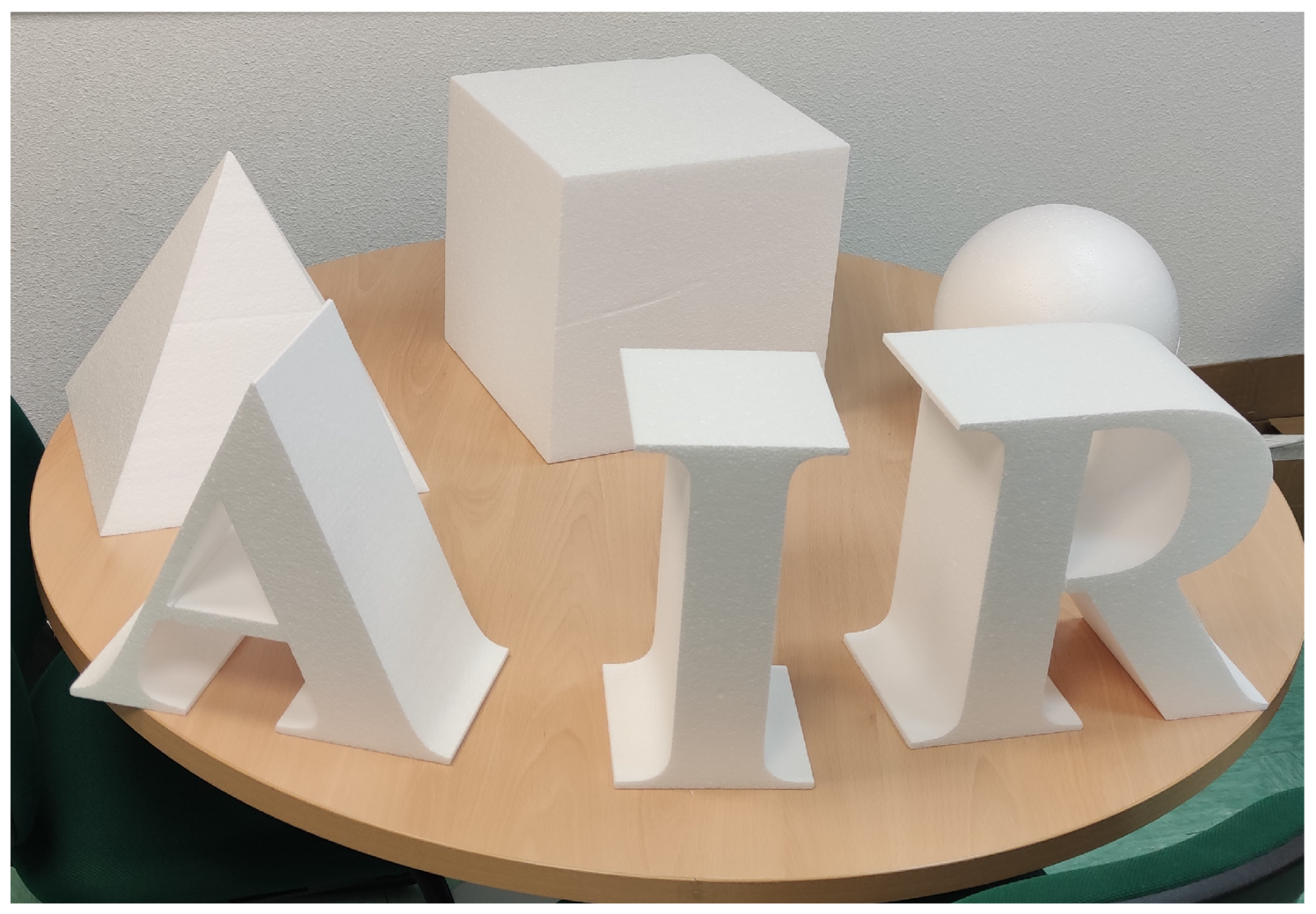

3.4. Scanning of Basic 3D Primitives

3.5. Scanning of Objects Selected for the Study

3.6. 3D Model Quality Evaluation

Discussion

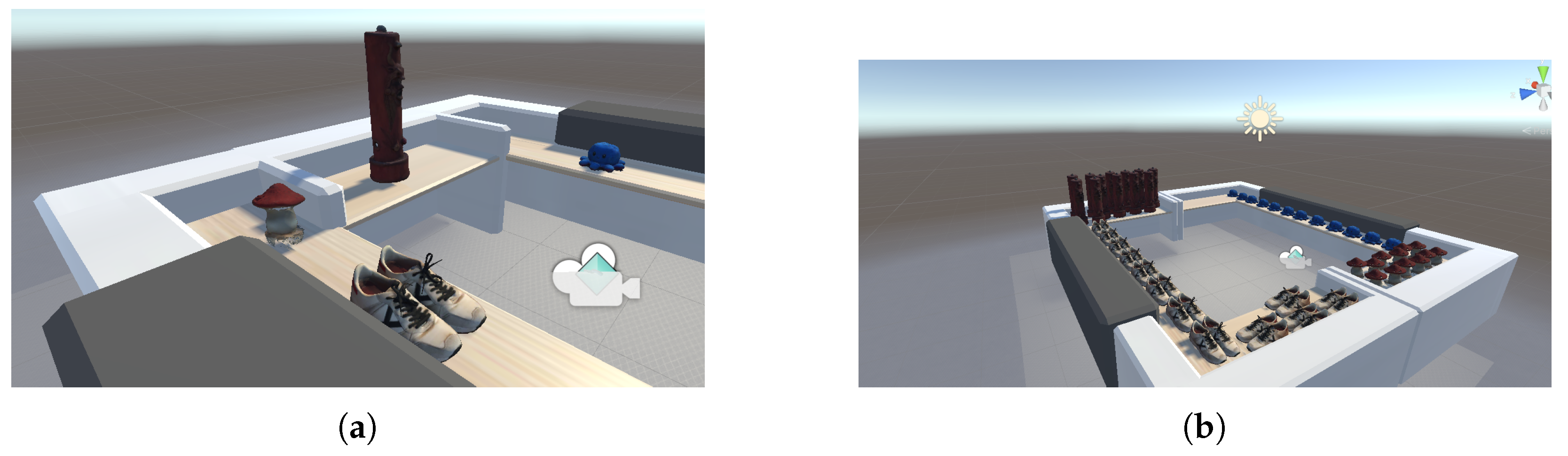

4. Performance Analysis of the Generated Models in Virtual Environments

- Meta Quest 2. Chipset: Qualcomm Snapdragon XR2, 8 cores (1 × 2.84 GHz, 3 × 2.42 GHz, 4 × 1.8 GHz); integrated GPU: Qualcomm Adreno 650 @ 587 MHz, 1.267 TFLOPS. RAM: 6 GB.

- Meta Quest Pro. Chipset: Qualcomm Snapdragon XR2+, 8 cores (1 × 2.84 GHz, 3 × 2.42 GHz, 4 × 1.8 GHz); integrated GPU: Qualcomm Adreno 650 @ 587 MHz, 1.267 TFLOPS. RAM: 12 GB.

- Meta Quest 3. Chipset: Qualcomm Snapdragon XR2 Gen 2, 6 cores (1 × 3.19 GHz, 2 × 2.8 GHz, 3 × 2.0 GHz); integrated GPU: Qualcomm Adreno 740 @ 492 MHz, 3.1 TFLOPS. RAM: 8 GB.

4.1. Data Collection Method

- Use the official Meta SDK for Unity to add to each model the components required for interaction, so that the models can be picked up with virtual hands and moved freely. This allows us to observe how a typical interaction with objects affects performance.

- Replicate the models across different scenes to estimate, for each type of model generated by Luma AI, the maximum number of products that can be included in a virtual environment while the application still runs smoothly and the user experience remains satisfactory. This was performed with the low-poly models of Luma AI and Polycam for Android, as these were the most realistic models and the ones that obtained the best results, as seen in Section 3.3. In total, 14 scenes were developed for this purpose.

- To collect performance data, the application corresponding to each scene was run for approximately 60 s, during which the user interacted with the objects in the environment and moved freely around the scene. The aim was to simulate a user’s behaviour in a VR shopping environment.
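The scene-replication procedure can be read as a search for the largest number of objects that still meets a frame-rate target. The Python sketch below applies that idea to the average FPS reported for the Meta Quest 2 low-poly scenes in the per-scene performance table. The 70 FPS threshold is an assumption chosen just below the headset’s 72 Hz default refresh rate, and the sketch ignores the other indicators analysed in Section 4.2 (stale frames, GPU and CPU utilisation, average prediction).

```python
# (number of objects, average FPS) for the Meta Quest 2 low-poly scenes,
# copied from the per-scene performance table.
QUEST2_LOW_POLY = [(4, 71.37), (24, 71.16), (48, 71.74), (72, 59.47), (96, 56.21)]


def max_objects(scenes, min_fps):
    """Largest object count reached before the first scene that falls below min_fps."""
    best = 0
    for objects, fps in sorted(scenes):
        if fps < min_fps:
            break  # stop at the first scene that misses the target
        best = objects
    return best


if __name__ == "__main__":
    print(max_objects(QUEST2_LOW_POLY, min_fps=70.0))  # prints 48
```

Stopping at the first failing scene, rather than taking any scene that happens to pass, keeps the estimate conservative if performance does not degrade monotonically with the number of objects.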

4.2. Performance Analysis of Models

4.2.1. Frame Rate

4.2.2. Stale Frames

4.2.3. GPU U (GPU Utilization)

4.2.4. Application GPU Time (App T)

4.2.5. CPU Utilization Percentage

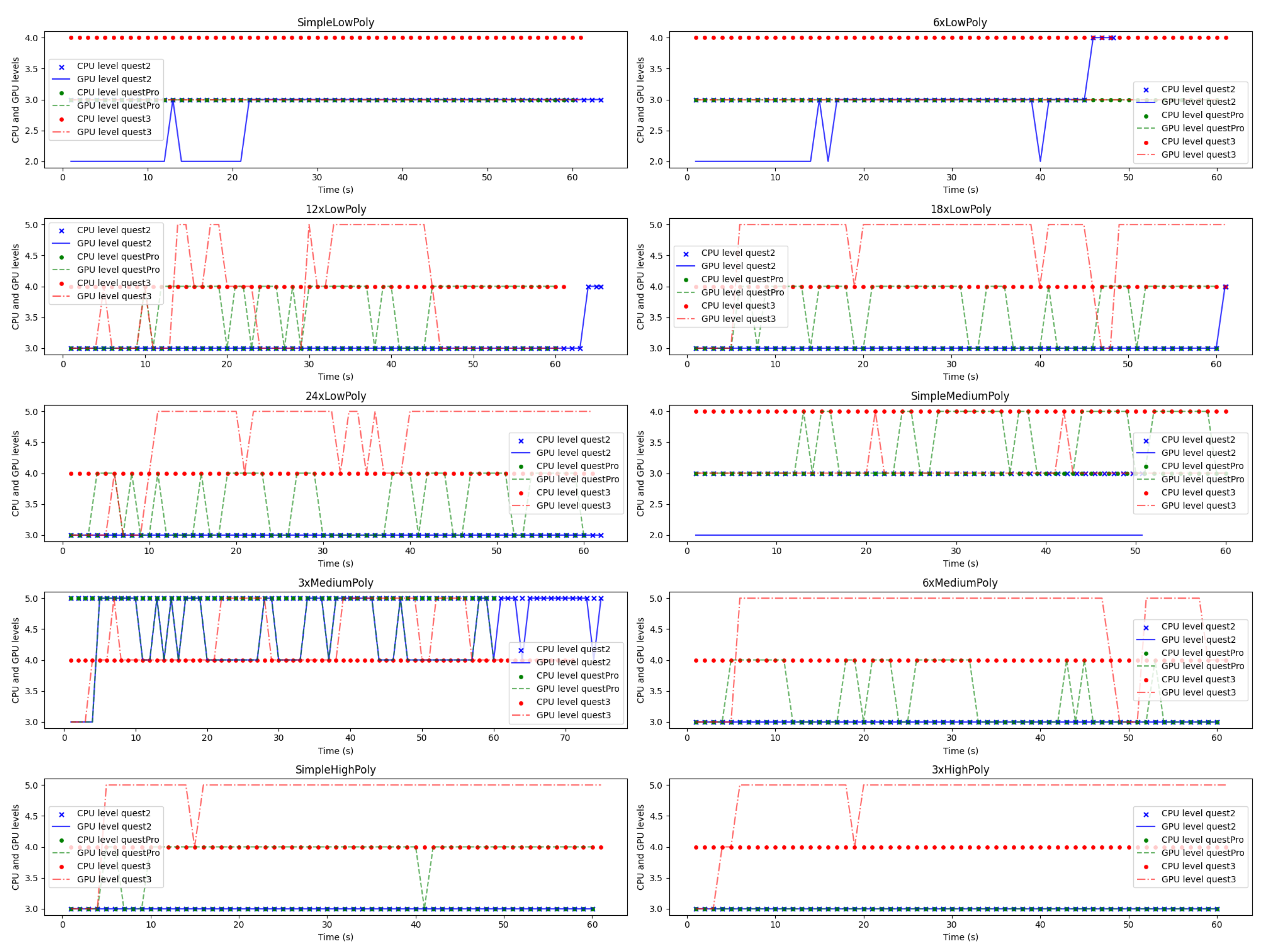

4.2.6. GPU and CPU Levels

4.2.7. Average Prediction

4.3. Discussion

5. 3D Model Optimisation of High-Poly Models for VR

5.1. Optimisation Process

- Apply the “Decimate” mesh modifier [38], a technique that reduces the number of polygons by a specified percentage, preserving detail while simplifying the mesh geometry.

- Unwrap the 3D model imported into Blender 3.5 with the Smart UV Project tool to create a 2D UV map that fits the model’s mesh, using an island margin of 0.02 to avoid overlapping islands.

- Use the “Shrinkwrap” modifier to fit the mesh of the optimised low-poly model more closely to the shape of the high-poly model.

- Bake both a normal map and a diffuse texture. The normal map transfers the surface detail of the high-poly model without adding polygons, while the diffuse bake transfers the colour detail from the model’s multiple texture files (more than 50 files in the case of the high-poly models) into a single file.
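The normal-map bake stores, for every texel of the low-poly model’s UV map, a surface normal taken from the high-poly model. In a tangent-space normal map, each component of the unit normal, in the range [−1, 1], is commonly remapped to an 8-bit colour channel, which is why flat regions appear bluish. The Python sketch below shows only that storage convention; the ray casting Blender performs to find the high-poly normals is not modelled, and conventions such as the direction of the green channel vary between tools.

```python
import math


def _normalise(v):
    """Scale a 3-component vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("normal must be non-zero")
    return tuple(c / length for c in v)


def encode_normal(n):
    """Map a tangent-space normal to 8-bit RGB: [-1, 1] -> [0, 255] per channel."""
    return tuple(int((c * 0.5 + 0.5) * 255 + 0.5) for c in _normalise(n))


def decode_normal(rgb):
    """Map 8-bit RGB back to a unit normal (quantisation makes it approximate)."""
    return _normalise([c / 255 * 2.0 - 1.0 for c in rgb])


if __name__ == "__main__":
    flat = encode_normal((0.0, 0.0, 1.0))  # surface facing straight out
    print(flat)                            # (128, 128, 255)
    print(decode_normal(flat))
```

Because the detail lives in the texture rather than in the geometry, the decimated mesh can keep a low polygon count while still shading as if it had the high-poly surface relief.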

5.2. Quality and Performance Evaluation of Optimised Models

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Chodak, G.; Ropuszyńska-Surma, E. Virtual Reality and Artificial Intelligence in e-Commerce. In Advanced Computing; Garg, D., Narayana, V.A., Suganthan, P.N., Anguera, J., Koppula, V.K., Gupta, S.K., Eds.; Springer: Cham, Switzerland, 2023; pp. 328–340.

2. Fedorko, R.; Kráľ, Š.; Bačík, R. Artificial Intelligence in E-commerce: A Literature Review. In Congress on Intelligent Systems; Saraswat, M., Sharma, H., Balachandran, K., Kim, J.H., Bansal, J.C., Eds.; Springer: Singapore, 2022; pp. 677–689.

3. Kang, H.J.; Shin, J.H.; Ponto, K. How 3D Virtual Reality Stores Can Shape Consumer Purchase Decisions: The Roles of Informativeness and Playfulness. J. Interact. Mark. 2020, 49, 70–85.

4. Pizzi, G.; Scarpi, D.; Pichierri, M.; Vannucci, V. Virtual reality, real reactions?: Comparing consumers’ perceptions and shopping orientation across physical and virtual-reality retail stores. Comput. Hum. Behav. 2019, 96, 1–12.

5. Cortinas, M.; Berne, C.; Chocarro, R.; Nilssen, F.; Rubio, N. Editorial: The Impact of AI-Enabled Technologies in E-commerce and Omnichannel Retailing. Front. Psychol. 2021, 12, 718885.

6. Miller, S.H.; Hashemian, A.; Gillihan, R.; Helms, E. A Comparison of Mobile Phone LiDAR Capture and Established Ground based 3D Scanning Methodologies. In Proceedings of the WCX SAE World Congress Experience, Detroit, MI, USA, 5–7 April 2022.

7. Skrupskaya, Y.; Skibina, V.; Taratukhin, V.; Kozlova, E. The Use of Virtual Reality to Drive Innovations. VRE-IP Experiment. In Information Systems and Design; Taratukhin, V., Matveev, M., Becker, J., Kupriyanov, Y., Eds.; Springer: Cham, Switzerland, 2022; pp. 336–345.

8. Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. arXiv 2020, arXiv:2003.08934.

9. Gao, K.; Gao, Y.; He, H.; Lu, D.; Xu, L.; Li, J. NeRF: Neural Radiance Field in 3D Vision, A Comprehensive Review. arXiv 2023, arXiv:2210.00379.

10. Deng, N.; He, Z.; Ye, J.; Duinkharjav, B.; Chakravarthula, P.; Yang, X.; Sun, Q. FoV-NeRF: Foveated Neural Radiance Fields for Virtual Reality. IEEE Trans. Vis. Comput. Graph. 2021, 28, 3854–3864.

11. Dickson, A.; Shanks, J.; Ventura, J.; Knott, A.; Zollmann, S. VRVideos: A Flexible Pipeline for Virtual Reality Video Creation; University of Otago: Dunedin, New Zealand, 2022; pp. 199–202.

12. Li, K.; Rolff, T.; Schmidt, S.; Bacher, R.; Frintrop, S.; Leemans, W.; Steinicke, F. Immersive Neural Graphics Primitives. arXiv 2022, arXiv:2211.13494.

13. Rolff, T.; Li, K.; Hertel, J.; Schmidt, S.; Frintrop, S.; Steinicke, F. Interactive VRS-NeRF: Lightning fast Neural Radiance Field Rendering for Virtual Reality. In Proceedings of the SUI ’23: ACM Symposium on Spatial User Interaction, Sydney, Australia, 13–15 October 2023; pp. 1–3.

14. Obradović, M.; Vasiljević, I.; Durić, I.; Kićanović, J.; Stojaković, V.; Obradović, R. Virtual Reality Models Based on Photogrammetric Surveys—A Case Study of the Iconostasis of the Serbian Orthodox Cathedral Church of Saint Nicholas in Sremski Karlovci (Serbia). Appl. Sci. 2020, 10, 2743.

15. Andree León Tejada, R.; Alexander Jimenez Azabache, J.; Javier Berrú Beltrán, R. Proposal of virtual reality solution using Photogrammetry techniques to enhance the heritage promotion in a tourist center of Trujillo. In Proceedings of the 2022 IEEE Engineering International Research Conference (EIRCON), Lima, Peru, 26–28 October 2022; pp. 1–4.

16. Tadeja, S.; Lu, Y.; Rydlewicz, M.; Rydlewicz, W.; Bubas, T.; Kristensson, P. Exploring gestural input for engineering surveys of real-life structures in virtual reality using photogrammetric 3D models. Multimed. Tools Appl. 2021, 80, 31039–31058.

17. Raj, T.; Hashim, F.H.; Huddin, A.B.; Ibrahim, M.F.; Hussain, A. A Survey on LiDAR Scanning Mechanisms. Electronics 2020, 9, 741.

18. Mikita, T.; Krausková, D.; Hrůza, P.; Cibulka, M.; Patočka, Z. Forest Road Wearing Course Damage Assessment Possibilities with Different Types of Laser Scanning Methods including New iPhone LiDAR Scanning Apps. Forests 2022, 13, 1763.

19. Vogt, M.; Rips, A.; Emmelmann, C. Comparison of iPad Pro®’s LiDAR and TrueDepth Capabilities with an Industrial 3D Scanning Solution. Technologies 2021, 9, 25.

20. Ferreira, V.S.; Martins, S.G.; Figueira, N.M.; Pochmann, P.G.C. The Use of a Digital Surface Model with Virtual Reality in the Amazonian Context. In Proceedings of the 2021 International Conference on Electrical, Computer and Energy Technologies (ICECET), Cape Town, South Africa, 9–10 December 2021; pp. 1–6.

21. Ricci, M.; Evangelista, A.; Di Roma, A.; Fiorentino, M. Immersive and desktop virtual reality in virtual fashion stores: A comparison between shopping experiences. Virtual Real. 2023, 27, 2281–2296.

22. Peukert, C.; Pfeiffer, J.; Meißner, M.; Pfeiffer, T.; Weinhardt, C. Shopping in Virtual Reality Stores: The Influence of Immersion on System Adoption. J. Manag. Inf. Syst. 2019, 36, 755–788.

23. Wu, H.; Wang, Y.; Qiu, J.; Liu, J.; Zhang, X.L. User-defined gesture interaction for immersive VR shopping applications. Behav. Inf. Technol. 2019, 38, 726–741.

24. Speicher, M.; Cucerca, S.; Krüger, A. VRShop: A Mobile Interactive Virtual Reality Shopping Environment Combining the Benefits of On- and Offline Shopping. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2017, 1, 102.

25. Speicher, M.; Hell, P.; Daiber, F.; Simeone, A.; Krüger, A. A virtual reality shopping experience using the apartment metaphor. In Proceedings of the AVI’18 2018 International Conference on Advanced Visual Interfaces, New York, NY, USA, 29 May–1 June 2018; pp. 1–9.

26. Shravani, D.; R, P.Y.; Atreyas, P.V.; G, S. VR Supermarket: A Virtual Reality Online Shopping Platform with a Dynamic Recommendation System. In Proceedings of the 2021 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), Taichung, Taiwan, 15–17 November 2021; pp. 119–123.

27. van Herpen, E.; van den Broek, E.; van Trijp, H.C.; Yu, T. Can a virtual supermarket bring realism into the lab? Comparing shopping behavior using virtual and pictorial store representations to behavior in a physical store. Appetite 2016, 107, 196–207.

28. Nightingale, R.; Ross, M.T.; Cruz, R.; Allenby, M.; Powell, S.K.; Woodruff, M. Frugal 3D scanning using smartphones provides an accessible framework for capturing the external ear. J. Plast. Reconstr. Aesthetic Surg. JPRAS 2021, 74, 3066–3072.

29. Luetzenburg, G.; Kroon, A.; Bjørk, A.A. Evaluation of the Apple iPhone 12 Pro LiDAR for an Application in Geosciences. Sci. Rep. 2021, 11, 22221.

30. Fawzy, H.E.D. The Accuracy of Mobile Phone Camera Instead of High Resolution Camera in Digital Close Range Photogrammetry. Int. J. Civ. Eng. Technol. (IJCIET) 2015, 6, 76–85.

31. Croce, V.; Billi, D.; Caroti, G.; Piemonte, A.; De Luca, L.; Véron, P. Comparative Assessment of Neural Radiance Fields and Photogrammetry in Digital Heritage: Impact of Varying Image Conditions on 3D Reconstruction. Remote Sens. 2024, 16, 301.

32. Zhang, Z.; Sun, W.; Min, X.; Wang, T.; Lu, W.; Zhai, G. No-Reference Quality Assessment for 3D Colored Point Cloud and Mesh Models. arXiv 2021, arXiv:2107.02041.

33. Liu, Q.; Su, H.; Duanmu, Z.; Liu, W.; Wang, Z. Perceptual Quality Assessment of Colored 3D Point Clouds. IEEE Trans. Vis. Comput. Graph. 2022, 29, 3642–3655.

34. Zhang, Z.; Sun, W.; Min, X.; Zhou, Q.; He, J.; Wang, Q.; Zhai, G. MM-PCQA: Multi-Modal Learning for No-reference Point Cloud Quality Assessment. arXiv 2023, arXiv:2209.00244.

35. Nehmé, Y.; Dupont, F.; Farrugia, J.P.; Le Callet, P.; Lavoué, G. Visual Quality of 3D Meshes With Diffuse Colors in Virtual Reality: Subjective and Objective Evaluation. IEEE Trans. Vis. Comput. Graph. 2021, 27, 2202–2219.

36. Lavoué, G. A Multiscale Metric for 3D Mesh Visual Quality Assessment. Comput. Graph. Forum 2011, 30, 1427–1437.

37. Webster, N.L. High poly to low poly workflows for real-time rendering. J. Vis. Commun. Med. 2017, 40, 40–47.

38. Schroeder, W.J.; Zarge, J.A.; Lorensen, W.E. Decimation of triangle meshes. SIGGRAPH Comput. Graph. 1992, 26, 65–70.

39. Grande, R.; Albusac, J.; Castro-Schez, J.; Vallejo, D.; Sánchez-Sobrino, S. A Virtual Reality Shopping platform for enhancing e-commerce activities of small businesses and local economies. In Proceedings of the 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Sydney, Australia, 16–20 October 2023; pp. 793–796.

| Feature | Polycam 1.3.10 | Widar 4.1.3 | Kiri Engine 4.4.0 | Luma AI 1.3.8 | MagiScan 1.9.7 | Scaniverse 3.1.2 (iOS Only) |
|---|---|---|---|---|---|---|
| Technique Supported | P, L | P, L | P | N | P, L | P, L |
| Subscription Price | USD 14.99/m | USD 9.99/m | USD 15.99/m | Free | USD 9.99/m | Free |
| Free Export Formats | .gltf | No free export | .obj | .fbx, .obj, .glb, .gltf, .usdz, .stl, .ply, .xyz, .dae | .fbx, .obj, .glb, .gltf, .usdz, .stl, .ply | .fbx, .obj, .glb, .gltf, .usdz, .stl, .ply |
| Number of Free Exports | Unlimited | No free export | 3/week | Unlimited | 3/account | Unlimited |
| Editing Tools Included | Yes | Yes | Yes | Yes | No | Yes |
| Object Masking (Photogrammetry) | Yes | No | Yes | - | No | No |
| User Rating | 4.3/5 (16,227 reviews) | 3.7/5 (2268 reviews) | 4.1/5 (1231 reviews) | 4.7/5 (1575 reviews) | 4.0/5 (4936 reviews) | 4.8/5 (7585 reviews) |

| Metric | Cube | Sphere | Pyramid | A | I | R |
|---|---|---|---|---|---|---|
| NeRF (Luma AI High-Poly Models) | | | | | | |
| Root Mean Square (RMS) | 0.00144 | 0.00751 | 0.00125 | 0.00750 | 0.00386 | 0.00394 |
| MSDM2 | 0.10303 | 0.14509 | 0.08394 | 0.12386 | 0.10182 | 0.11651 |
| Photogrammetry (Polycam Android 1.3.10) | | | | | | |
| Root Mean Square (RMS) | 0.00483 | 0.00945 | 0.00432 | 0.00221 | 0.00391 | 0.00417 |
| MSDM2 | 0.13715 | 0.18423 | 0.10411 | 0.11910 | 0.13607 | 0.11754 |
| LiDAR sensors (Polycam iOS 1.3.10) | | | | | | |
| Root Mean Square (RMS) | 0.00701 | 0.01179 | 0.00471 | 0.01045 | 0.01350 | 0.0055 |
| MSDM2 | 0.12862 | 0.24176 | 0.14615 | 0.16083 | 0.34365 | 0.17143 |

| Object | Type | Scanning Application | Device Used | Size (MB) | Polygon Count | Texture Size (MB) | NR-3DQA | MM-PCQA |
|---|---|---|---|---|---|---|---|---|
| Munich shoes | HP | LUMA AI | iPad Pro | 98.94 | 999,846 | 18.50 | 0.7272 | 86.06 |
| | MP | LUMA AI | iPad Pro | 13.67 | 149,915 | 13.10 | 0.8101 | 83.52 |
| | LP | LUMA AI | iPad Pro | 2.16 | 24,893 | 10.20 | 0.7617 | 84.85 |
| | OP | Polycam | iPad Pro | 4.04 | 39,627 | 0.42 | 0.7363 | 80.67 |
| | OP | Polycam | Xiaomi Note 8 | 1.94 | 19,424 | 2.30 | 0.8111 | 82.18 |
| Octopus teddy | HP | LUMA AI | iPad Pro | 99.47 | 999,869 | 8.60 | 0.6619 | 78.51 |
| | MP | LUMA AI | iPad Pro | 13.50 | 149,916 | 5.19 | 0.6914 | 70.92 |
| | LP | LUMA AI | iPad Pro | 2.10 | 24,920 | 3.67 | 0.6537 | 66.25 |
| | OP | Polycam | iPad Pro | 0.25 | 2689 | 0.45 | 0.4288 | 20.51 |
| | OP | Polycam | Xiaomi Note 8 | 1.84 | 18,623 | 1.99 | 0.5539 | 65.98 |
| Burner | HP | LUMA AI | iPad Pro | 53.54 | 528,442 | 4.64 | 0.6190 | 70.43 |
| | MP | LUMA AI | iPad Pro | 14.30 | 149,972 | 3.71 | 0.6622 | 69.73 |
| | LP | LUMA AI | iPad Pro | 2.30 | 24,981 | 2.77 | 0.6487 | 70.63 |
| | OP | Polycam | iPad Pro | 0.64 | 6693 | 0.29 | 0.6381 | 67.50 |
| | OP | Polycam | Xiaomi Note 8 | 1.93 | 19,678 | 1.54 | 0.6238 | 69.97 |
| Ceramic mushroom | HP | LUMA AI | iPad Pro | 98.94 | 999,731 | 2.97 | 0.7065 | 78.59 |
| | MP | LUMA AI | iPad Pro | 13.67 | 149,875 | 1.16 | 0.7948 | 61.10 |
| | LP | LUMA AI | iPad Pro | 2.16 | 24,814 | 0.87 | 0.7226 | 72.60 |
| | OP | Polycam | iPad Pro | 0.96 | 10,161 | 0.30 | 0.6748 | 42.66 |
| | OP | Polycam | Xiaomi Note 8 | 1.05 | 10,917 | 2.26 | 0.6542 | 63.071 |
| Trophy | HP | LUMA AI | iPad Pro | 39.2 | 329,208 | 6.43 | 0.7606 | 76.17 |
| | MP | LUMA AI | iPad Pro | 13.9 | 149,923 | 4.93 | 0.8001 | 73.03 |
| | LP | LUMA AI | iPad Pro | 2.2 | 24,964 | 3.97 | 0.7974 | 69.61 |
| | OP | Polycam | iPad Pro | 1.49 | 13,416 | 1.83 | 0.4474 | 69.85 |
| | OP | Polycam | Xiaomi Note 8 | 3.39 | 36,105 | 12.2 | 0.4460 | 72.90 |

| Object | Type | Types Compared | Scanning Application | RMS | CMDM |
|---|---|---|---|---|---|
| Munich shoes | MP | HP, MP | LUMA | 0.00041 | 0.132868 |
| | LP | HP, LP | LUMA | 0.00171 | 0.190245 |
| | OP | Polycam | Polycam | 0.01269 | 0.152718 |
| | OP | HP, PolyXiaomi | Polycam | 0.03012 | 0.223199 |
| | OP | HP, PolyiPad | Polycam | 0.03767 | 0.224947 |
| Octopus teddy | MP | HP, MP | LUMA | 0.00023 | 0.151651 |
| | LP | HP, LP | LUMA | 0.00098 | 0.17674 |
| | OP | Polycam | Polycam | 0.03360 | 0.204733 |
| | OP | HP, PolyXiaomi | Polycam | 0.00522 | 0.222374 |
| | OP | HP, PolyiPad | Polycam | 0.05976 | 0.318699 |
| Burner | MP | HP, MP | LUMA | 0.00009 | 0.0480725 |
| | LP | HP, LP | LUMA | 0.00040 | 0.0637373 |
| | OP | Polycam | Polycam | 0.01451 | 0.12820 |
| | OP | HP, PolyXiaomi | Polycam | 0.01568 | 0.121982 |
| | OP | HP, PolyiPad | Polycam | 0.01423 | 0.151532 |
| Ceramic mushroom | MP | HP, MP | LUMA | 0.00018 | 0.239902 |
| | LP | HP, LP | LUMA | 0.00072 | 0.290423 |
| | OP | Polycam | Polycam | 0.04873 | 0.181069 |
| | OP | HP, PolyXiaomi | Polycam | 0.03710 | 0.34902 |
| | OP | HP, PolyiPad | Polycam | 0.02446 | 0.350014 |
| Trophy | MP | HP, MP | LUMA | 0.00027 | 0.0902698 |
| | LP | HP, LP | LUMA | 0.00141 | 0.1052886 |
| | OP | Polycam | Polycam | 0.08235 | 0.239079 |
| | OP | HP, PolyXiaomi | Polycam | 0.03008 | 0.29231 |
| | OP | HP, PolyiPad | Polycam | 0.23793 | 0.390321 |

| Scene | No. of Objects (No. of Polygons) | Average FPS | Stale Frames % | App GPU Time % | GPU U ≥ 99% | Average Prediction % |
|---|---|---|---|---|---|---|
| Meta Quest 2 | | | | | | |
| SimpleLowPoly | 4 LP (99,806) | 71.37 | 6.35 | 0.00 | 0.00 | 0.00 |
| 6xLowPoly | 24 LP (597,648) | 71.16 | 10.20 | 0.00 | 0.00 | 0.00 |
| 12xLowPoly | 48 LP (1,195,296) | 71.74 | 15.15 | 0.00 | 6.06 | 0.00 |
| 18xLowPoly | 72 LP (1,792,944) | 59.47 | 62.90 | 30.71 | 32.26 | 3.22 |
| 24xLowPoly | 96 LP (2,390,592) | 56.21 | 64.52 | 48.38 | 32.26 | 11.29 |
| SimpleMediumPoly | 4 MP (599,600) | 69.22 | 16.00 | 0.00 | 0.00 | 0.00 |
| 3xMediumPoly | 12 MP (1,798,800) | 48.81 | 80.00 | 57.33 | 17.33 | 29.33 |
| 6xMediumPoly | 24 MP (3,597,600) | 29.61 | 45.00 | 61.67 | 56.66 | 8.33 |
| SimpleHighPoly | 4 HP (3,527,888) | 9.30 | 10.0 | 30.00 | 93.33 | 78.33 |
| 3xHighPoly | 12 HP (10,583,644) | 8.75 | 1.66 | 33.33 | 93.33 | 56.66 |
| Meta Quest Pro | | | | | | |
| SimpleLowPoly | 4 LP (99,806) | 71.63 | 11.66 | 0.00 | 0.00 | 0.00 |
| 6xLowPoly | 24 LP (597,648) | 71.56 | 16.66 | 0.00 | 0.00 | 0.00 |
| 12xLowPoly | 48 LP (1,195,296) | 69.28 | 43.33 | 5.00 | 11.66 | 1.67 |
| 18xLowPoly | 72 LP (1,792,944) | 60.16 | 65.00 | 35.00 | 13.33 | 5.00 |
| 24xLowPoly | 96 LP (2,390,592) | 44.56 | 78.33 | 71.67 | 30.00 | 6.67 |
| SimpleMediumPoly | 4 MP (599,600) | 71.48 | 20.00 | 0.00 | 3.33 | 0.00 |
| 3xMediumPoly | 12 MP (1,798,800) | 56.94 | 88.33 | 63.33 | 20.00 | 30.00 |
| 6xMediumPoly | 24 MP (3,597,600) | 30.62 | 35.00 | 33.33 | 61.67 | 26.66 |
| SimpleHighPoly | 4 HP (3,527,888) | 12.40 | 5.00 | 18.33 | 93.33 | 48.33 |
| 3xHighPoly | 12 HP (10,583,644) | 8.28 | 80.00 | 53.33 | 91.66 | 90.00 |
| Meta Quest 3 | | | | | | |
| SimpleLowPoly | 4 LP (99,806) | 71.52 | 4.91 | 0.00 | 0.00 | 0.00 |
| 6xLowPoly | 24 LP (597,648) | 71.95 | 6.55 | 0.00 | 0.00 | 0.00 |
| 12xLowPoly | 48 LP (1,195,296) | 71.96 | 13.11 | 3.28 | 0.00 | 0.00 |
| 18xLowPoly | 72 LP (1,792,944) | 62.60 | 45.90 | 21.31 | 0.00 | 11.47 |
| 24xLowPoly | 96 LP (2,390,592) | 55.52 | 50.82 | 31.15 | 4.92 | 21.31 |
| SimpleMediumPoly | 4 MP (599,600) | 71.85 | 16.66 | 0.00 | 0.00 | 0.00 |
| 3xMediumPoly | 12 MP (1,798,800) | 52.43 | 84.51 | 67.60 | 30.03 | 19.71 |
| 6xMediumPoly | 24 MP (3,597,600) | 48.08 | 27.87 | 45.90 | 34.43 | 14.75 |
| SimpleHighPoly | 4 HP (3,527,888) | 15.97 | 13.11 | 83.60 | 81.97 | 40.98 |
| 3xHighPoly | 12 HP (10,583,644) | 10.95 | 4.92 | 91.80 | 91.80 | 57.37 |

| Device | Average Frame Rate | Stale Frames % | App GPU Time % | GPU U ≥ 99% | CPU U % | Average Prediction % |
|---|---|---|---|---|---|---|
| Meta Quest 2 | 71.36 | 15.00 | 0.00 | 0.00 | 19.6 | 0.00 |
| Meta Quest Pro | 71.33 | 15.00 | 0.00 | 0.00 | 21.70 | 0.00 |
| Meta Quest 3 | 71.65 | 11.66 | 0.00 | 0.00 | 26.15 | 0.00 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Grande, R.; Albusac, J.; Vallejo, D.; Glez-Morcillo, C.; Castro-Schez, J.J. Performance Evaluation and Optimization of 3D Models from Low-Cost 3D Scanning Technologies for Virtual Reality and Metaverse E-Commerce. Appl. Sci. 2024, 14, 6037. https://doi.org/10.3390/app14146037
