A Review of Recent Literature on Audio-Based Pseudo-Haptics

Abstract

1. Introduction

1.1. Haptics

1.2. Haptic Devices

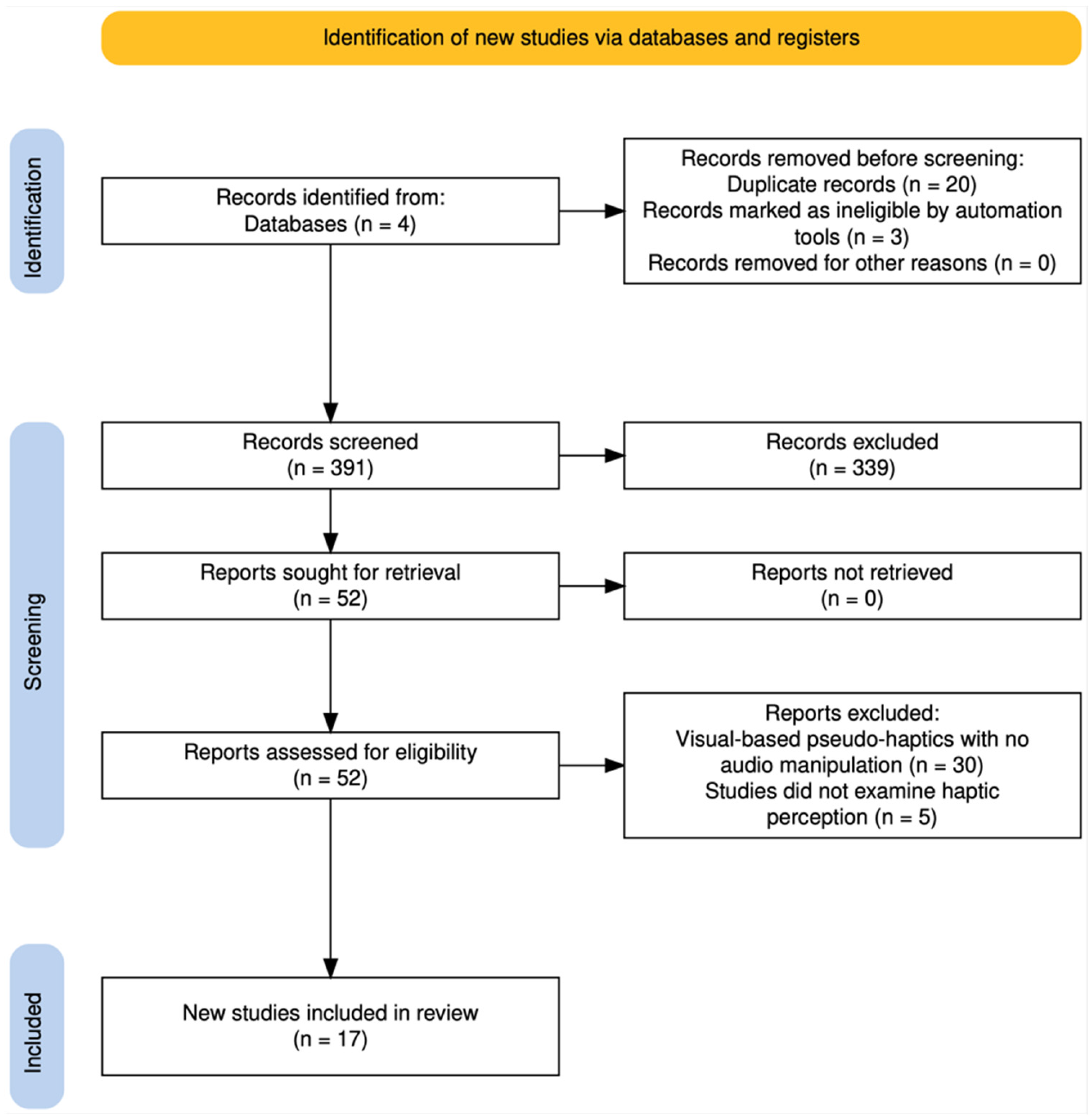

1.3. Review Details

2. The Review

2.1. Audio–Haptic Interactions

2.2. Audio-Based Pseudo-Haptics

2.3. Visual–Auditory-Based Pseudo-Haptics

3. Discussion

Applications to Medical Training

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wortley, D. The Future of Serious Games and Immersive Technologies and Their Impact on Society. In Trends and Applications of Serious Gaming and Social Media; Baek, Y., Ko, R., Marsh, T., Eds.; Gaming Media and Social Effects; Springer: Singapore, 2014; pp. 1–14. ISBN 978-981-4560-25-2.

2. Suh, A.; Prophet, J. The State of Immersive Technology Research: A Literature Analysis. Comput. Hum. Behav. 2018, 86, 77–90.

3. Hall, S.; Takahashi, R. Augmented and Virtual Reality: The Promise and Peril of Immersive Technologies. In Proceedings of the World Economic Forum, Davos-Klosters, Switzerland, 17–20 January 2017; Volume 2.

4. Brydges, R.; Campbell, D.M.; Beavers, L.; Khodadoust, N.; Iantomasi, P.; Sampson, K.; Goffi, A.; Caparica Santos, F.N.; Petrosoniak, A. Lessons Learned in Preparing for and Responding to the Early Stages of the COVID-19 Pandemic: One Simulation’s Program Experience Adapting to the New Normal. Adv. Simul. 2020, 5, 8.

5. Straits Research E-Learning Market to Reach USD 645 Billion in Market Size by 2030, Growing at a CAGR of 13%: Straits Research. Available online: https://www.infoprolearning.com/blog/elearning-localization-your-secret-code-to-reach-the-global-audience/ (accessed on 24 June 2024).

6. Zion Research Serious Games Market Size, Share, Growth Report 2030. Available online: https://www.zionmarketresearch.com/report/serious-games-market (accessed on 24 June 2024).

7. Mather, G. Foundations of Sensation and Perception; Psychology Press: London, UK, 2016; ISBN 978-1-317-37255-4.

8. Dubois, D.; Cance, C.; Coler, M.; Paté, A.; Guastavino, C. Sensory Experiences: Exploring Meaning and the Senses; John Benjamins Publishing Company: Amsterdam, The Netherlands, 2021; Volume 24.

9. Spielman, M.R.; Dumper, K.; Jenkins, W.; Lacombe, A.; Lovett, M.D.; Perlmutter, M. Psychology, H5P ed.; BCcampus: Victoria, BC, Canada, 2021.

10. Wilson, K.A. How Many Senses? Multisensory Perception Beyond the Five Senses. In Sabah Ülkesi; IGMG: Cologne, Germany, 2021; pp. 76–79. Available online: https://philpapers.org/rec/WILMP-8 (accessed on 24 June 2024).

11. Purves, A.C. Touch and the Ancient Senses; Bradley, M., Butler, S., Eds.; The Senses in Antiquity; Routledge: Abingdon, UK; New York, NY, USA, 2018; ISBN 978-1-84465-871-8.

12. Hutmacher, F. Why Is There So Much More Research on Vision Than on Any Other Sensory Modality? Front. Psychol. 2019, 10, 2246.

13. Enoch, J.; McDonald, L.; Jones, L.; Jones, P.R.; Crabb, D.P. Evaluating Whether Sight Is the Most Valued Sense. JAMA Ophthalmol. 2019, 137, 1317.

14. Classen, C. The Deepest Sense: A Cultural History of Touch; University of Illinois Press: Champaign, IL, USA, 2012; ISBN 978-0-252-03493-0.

15. El Rassi, I.; El Rassi, J.-M. A Review of Haptic Feedback in Tele-Operated Robotic Surgery. J. Med. Eng. Technol. 2020, 44, 247–254.

16. See, A.R.; Choco, J.A.G.; Chandramohan, K. Touch, Texture and Haptic Feedback: A Review on How We Feel the World around Us. Appl. Sci. 2022, 12, 4686.

17. Gallace, A.; Spence, C. In Touch with the Future: The Sense of Touch from Cognitive Neuroscience to Virtual Reality; Oxford University Press: Oxford, UK, 2014; ISBN 978-0-19-964446-9.

18. Adilkhanov, A.; Rubagotti, M.; Kappassov, Z. Haptic Devices: Wearability-Based Taxonomy and Literature Review. IEEE Access 2022, 10, 91923–91947.

19. Hannaford, B.; Okamura, A.M. Haptics. In Springer Handbook of Robotics; Siciliano, B., Khatib, O., Eds.; Springer Handbooks; Springer International Publishing: Cham, Switzerland, 2016; pp. 1063–1084. ISBN 978-3-319-32550-7.

20. Oakley, I.; McGee, M.R.; Brewster, S.; Gray, P. Putting the Feel in ‘look and Feel’. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, The Hague, The Netherlands, 1–6 April 2000; ACM: New York, NY, USA, 2000; pp. 415–422.

21. Pacchierotti, C.; Prattichizzo, D.; Kuchenbecker, K.J. Cutaneous Feedback of Fingertip Deformation and Vibration for Palpation in Robotic Surgery. IEEE Trans. Biomed. Eng. 2016, 63, 278–287.

22. Huang, Y.; Yao, K.; Li, J.; Li, D.; Jia, H.; Liu, Y.; Yiu, C.K.; Park, W.; Yu, X. Recent Advances in Multi-Mode Haptic Feedback Technologies towards Wearable Interfaces. Mater. Today Phys. 2022, 22, 100602.

23. Lederman, S.; Klatzky, R.L. Human Haptics. In Encyclopedia of Neuroscience; Academic Press: Cambridge, MA, USA, 2009; Volume 5.

24. Johansson, R.S.; Flanagan, J.R. Sensory Control of Object Manipulation. In Sensorimotor Control of Grasping: Physiology and Pathophysiology; Cambridge University Press: Cambridge, UK, 2009; pp. 141–160.

25. Klatzky, R.; Lederman, S. The Haptic Identification of Everyday Life Objects. In Touching for Knowing: Cognitive Psychology of Haptic Manual Perception; John Benjamins Publishing Company: Amsterdam, The Netherlands, 2003.

26. Goodwin, A.W.; Wheat, H.E. Sensory Signals in Neural Populations Underlying Tactile Perception and Manipulation. Annu. Rev. Neurosci. 2004, 27, 53–77.

27. Goble, D.J.; Brown, S.H. Upper Limb Asymmetries in the Matching of Proprioceptive Versus Visual Targets. J. Neurophysiol. 2008, 99, 3063–3074.

28. Lederman, S.J.; Klatzky, R.L. Haptic Perception: A Tutorial. Atten. Percept. Psychophys. 2009, 71, 1439–1459.

29. Culbertson, H.; Schorr, S.B.; Okamura, A.M. Haptics: The Present and Future of Artificial Touch Sensation. Annu. Rev. Control Robot. Auton. Syst. 2018, 1, 385–409.

30. Jafari, N.; Adams, K.D.; Tavakoli, M. Haptics to Improve Task Performance in People with Disabilities: A Review of Previous Studies and a Guide to Future Research with Children with Disabilities. J. Rehabil. Assist. Technol. Eng. 2016, 3, 205566831666814.

31. Panariello, D.; Caporaso, T.; Grazioso, S.; Di Gironimo, G.; Lanzotti, A.; Knopp, S.; Pelliccia, L.; Lorenz, M.; Klimant, P. Using the KUKA LBR Iiwa Robot as Haptic Device for Virtual Reality Training of Hip Replacement Surgery. In Proceedings of the 2019 Third IEEE International Conference on Robotic Computing (IRC), Naples, Italy, 25–27 February 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 449–450.

32. Junput, B.; Wei, X.; Jamone, L. Feel It on Your Fingers: Dataglove with Vibrotactile Feedback for Virtual Reality and Telerobotics. In Towards Autonomous Robotic Systems; Althoefer, K., Konstantinova, J., Zhang, K., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2019; Volume 11649, pp. 375–385. ISBN 978-3-030-23806-3.

33. Collins, K.; Kapralos, B. Pseudo-Haptics: Leveraging Cross-Modal Perception in Virtual Environments. Senses Soc. 2019, 14, 313–329.

34. D’Abbraccio, J.; Massari, L.; Prasanna, S.; Baldini, L.; Sorgini, F.; Airò Farulla, G.; Bulletti, A.; Mazzoni, M.; Capineri, L.; Menciassi, A.; et al. Haptic Glove and Platform with Gestural Control for Neuromorphic Tactile Sensory Feedback in Medical Telepresence. Sensors 2019, 19, 641.

35. Aldridge, R.J.; Carr, K.; England, R.; Meech, J.F.; Solomonides, T. Getting a Grasp on Virtual Reality. In Proceedings of the Conference Companion on Human Factors in Computing Systems Common Ground—CHI ’96; ACM Press: Vancouver, BC, Canada, 1996; pp. 229–230.

36. Seinfeld, S.; Feuchtner, T.; Maselli, A.; Müller, J. User Representations in Human-Computer Interaction. Hum.–Comput. Interact. 2021, 36, 400–438.

37. Argelaguet Sanz, F.; Jáuregui, D.A.G.; Marchal, M.; Lécuyer, A. Elastic Images: Perceiving Local Elasticity of Images through a Novel Pseudo-Haptic Deformation Effect. ACM Trans. Appl. Percept. 2013, 10, 1–14.

38. Yu, R.; Bowman, D.A. Pseudo-Haptic Display of Mass and Mass Distribution During Object Rotation in Virtual Reality. IEEE Trans. Vis. Comput. Graph. 2020, 26, 2094–2103.

39. Lécuyer, A.; Burkhardt, J.-M.; Tan, C.-H. A Study of the Modification of the Speed and Size of the Cursor for Simulating Pseudo-Haptic Bumps and Holes. ACM Trans. Appl. Percept. 2008, 5, 1–21.

40. Ujitoko, Y.; Ban, Y. Survey of Pseudo-Haptics: Haptic Feedback Design and Application Proposals. IEEE Trans. Haptics 2021, 14, 699–711.

41. Lécuyer, A.; Coquillart, S.; Kheddar, A.; Richard, P.; Coiffet, P. Pseudo-Haptic Feedback: Can Isometric Input Devices Simulate Force Feedback? In Proceedings of the IEEE Virtual Reality 2000 (Cat. No.00CB37048), New Brunswick, NJ, USA, 18–22 March 2000; IEEE: Piscataway, NJ, USA, 2000; pp. 83–90.

42. Kaneko, S.; Yokosaka, T.; Kajimoto, H.; Kawabe, T. A Pseudo-Haptic Method Using Auditory Feedback: The Role of Delay, Frequency, and Loudness of Auditory Feedback in Response to a User’s Button Click in Causing a Sensation of Heaviness. IEEE Access 2022, 10, 50008–50022.

43. Garlinska, M.; Osial, M.; Proniewska, K.; Pregowska, A. The Influence of Emerging Technologies on Distance Education. Electronics 2023, 12, 1550.

44. Lécuyer, A. Simulating Haptic Feedback Using Vision: A Survey of Research and Applications of Pseudo-Haptic Feedback. Presence Teleoper. Virtual Environ. 2009, 18, 39–53.

45. Oxman, A.D. Users’ Guides to the Medical Literature: VI. How to Use an Overview. JAMA 1994, 272, 1367.

46. Grant, M.J.; Booth, A. A Typology of Reviews: An Analysis of 14 Review Types and Associated Methodologies. Health Inf. Libr. J. 2009, 26, 91–108.

47. Green, B.N.; Johnson, C.D.; Adams, A. Writing Narrative Literature Reviews for Peer-Reviewed Journals: Secrets of the Trade. J. Chiropr. Med. 2006, 5, 101–117.

48. Haddaway, N.R.; Page, M.J.; Pritchard, C.C.; McGuinness, L.A. PRISMA2020: An R Package and Shiny App for Producing PRISMA 2020-compliant Flow Diagrams, with Interactivity for Optimised Digital Transparency and Open Synthesis. Campbell Syst. Rev. 2022, 18, e1230.

49. Kapralos, B.; Moussa, F.; Collins, K.; Dubrowski, A. Fidelity and Multimodal Interactions. In Instructional Techniques to Facilitate Learning and Motivation of Serious Games; Wouters, P., Van Oostendorp, H., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 79–101. ISBN 978-3-319-39296-7.

50. Melaisi, M.; Rojas, D.; Kapralos, B.; Uribe-Quevedo, A.; Collins, K. Multimodal Interaction of Contextual and Non-Contextual Sound and Haptics in Virtual Simulations. Informatics 2018, 5, 43.

51. Lu, S.; Chen, Y.; Culbertson, H. Towards Multisensory Perception: Modeling and Rendering Sounds of Tool-Surface Interactions. IEEE Trans. Haptics 2020, 13, 94–101.

52. Chan, S.; Tymms, C.; Colonnese, N. Hasti: Haptic and Audio Synthesis for Texture Interactions. In Proceedings of the 2021 IEEE World Haptics Conference (WHC), Montreal, QC, Canada, 6–9 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 733–738.

53. Maćkowski, M.; Brzoza, P.; Spinczyk, D. An Alternative Method of Audio-Tactile Presentation of Graphical Information in Mathematics Adapted to the Needs of Blind. Int. J. Hum.-Comput. Stud. 2023, 179, 103122.

54. Bosman, I.D.V.; De Beer, K.; Bothma, T.J.D. Creating Pseudo-Tactile Feedback in Virtual Reality Using Shared Crossmodal Properties of Audio and Tactile Feedback. S. Afr. Comput. J. 2021, 33, 1–21.

55. Zhexuan, W.; Zhong, W. Research on a Method of Conveying Material Sensations through Sound Effects. J. New Music Res. 2022, 51, 121–141.

56. Malpica, S.; Serrano, A.; Allue, M.; Bedia, M.G.; Masia, B. Crossmodal Perception in Virtual Reality. Multimed. Tools Appl. 2020, 79, 3311–3331.

57. Ning, G.; Grant, B.; Kapralos, B.; Quevedo, A.; Collins, K.; Kanev, K.; Dubrowski, A. Understanding Virtual Drilling Perception Using Sound, and Kinesthetic Cues Obtained with a Mouse and Keyboard. J. Multimodal User Interfaces 2023, 17, 165.

58. Speicher, M.; Ehrlich, J.; Gentile, V.; Degraen, D.; Sorce, S.; Krüger, A. Pseudo-Haptic Controls for Mid-Air Finger-Based Menu Interaction. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; ACM: New York, NY, USA, 2019; pp. 1–6.

59. Eckhoff, D.; Cassinelli, A.; Liu, T.; Sandor, C. Psychophysical Effects of Experiencing Burning Hands in Augmented Reality. In Virtual Reality and Augmented Reality; Bourdot, P., Interrante, V., Kopper, R., Olivier, A.-H., Saito, H., Zachmann, G., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; Volume 12499, pp. 83–95. ISBN 978-3-030-62654-9.

60. Haruna, M.; Noboru, K.; Ogino, M.; Koike-Akino, T. Comparison of Three Feedback Modalities for Haptics Sensation in Remote Machine Manipulation. IEEE Robot. Autom. Lett. 2021, 6, 5040–5047.

61. Kang, N.; Sah, Y.J.; Lee, S. Effects of Visual and Auditory Cues on Haptic Illusions for Active and Passive Touches in Mixed Reality. Int. J. Hum.-Comput. Stud. 2021, 150, 102613.

62. Puértolas Bálint, L.A.; Althoefer, K.; Perez Macias, L.H. Virtual Reality Percussion Simulator for Medical Student Training. In Proceedings of the 2021 IEEE 6th International Forum on Research and Technology for Society and Industry (RTSI), Naples, Italy, 6–9 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 295–299.

63. Desnoyers-Stewart, J.; Stepanova, E.R.; Liu, P.; Kitson, A.; Pennefather, P.P.; Ryzhov, V.; Riecke, B.E. Embodied Telepresent Connection (ETC): Exploring Virtual Social Touch Through Pseudohaptics. In Proceedings of the Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; ACM: New York, NY, USA, 2023; pp. 1–7.

64. Kurzweg, M.; Letter, M.; Wolf, K. Vibrollusion: Creating a Vibrotactile Illusion Induced by Audiovisual Touch Feedback. In Proceedings of the 22nd International Conference on Mobile and Ubiquitous Multimedia, Vienna, Austria, 3–6 December 2023; ACM: New York, NY, USA, 2023; pp. 185–197.

65. Lee, D.S.; Lee, K.C.; Kim, H.J.; Kim, S. Pseudo-Haptic Feedback Design for Virtual Activities in Human Computer Interface. In Virtual, Augmented and Mixed Reality; Chen, J.Y.C., Fragomeni, G., Eds.; Lecture Notes in Computer Science; Springer Nature: Cham, Switzerland, 2023; Volume 14027, pp. 253–265. ISBN 978-3-031-35633-9.

66. Lécuyer, A.; Burkhardt, J.-M.; Etienne, L. Feeling Bumps and Holes without a Haptic Interface: The Perception of Pseudo-Haptic Textures. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vienna, Austria, 24–29 April 2004; ACM: New York, NY, USA, 2004; pp. 239–246.

67. Moosavi, M.S.; Raimbaud, P.; Guillet, C.; Plouzeau, J.; Merienne, F. Weight Perception Analysis Using Pseudo-Haptic Feedback Based on Physical Work Evaluation. Front. Virtual Real. 2023, 4, 973083.

68. Mori, S.; Kataoka, Y.; Hashiguchi, S. Exploring Pseudo-Weight in Augmented Reality Extended Displays. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Christchurch, New Zealand, 12–16 March 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 703–710.

69. Costes, A.; Argelaguet, F.; Danieau, F.; Guillotel, P.; Lécuyer, A. Touchy: A Visual Approach for Simulating Haptic Effects on Touchscreens. Front. ICT 2019, 6, 1.

70. Crandall, R.; Karadoğan, E. Designing Pedagogically Effective Haptic Systems for Learning: A Review. Appl. Sci. 2021, 11, 6245.

71. Bermejo, C.; Hui, P. A Survey on Haptic Technologies for Mobile Augmented Reality. ACM Comput. Surv. 2021, 54, 1–35.

72. Bouzbib, E.; Bailly, G.; Haliyo, S.; Frey, P. “Can I Touch This?”: Survey of Virtual Reality Interactions via Haptic Solutions: Revue de Littérature Des Interactions En Réalité Virtuelle Par Le Biais de Solutions Haptiques. In Proceedings of the 32e Conférence Francophone sur l’Interaction Homme-Machine, Virtual Event, 13 April 2021; ACM: New York, NY, USA, 2021; pp. 1–16.

73. Hatzfeld, C.; Kern, T.A. Haptics as an Interaction Modality. In Engineering Haptic Devices; Kern, T.A., Hatzfeld, C., Abbasimoshaei, A., Eds.; Springer Series on Touch and Haptic Systems; Springer International Publishing: Cham, Switzerland, 2023; pp. 35–108. ISBN 978-3-031-04535-6.

74. Lim, W.N.; Yap, K.M.; Lee, Y.; Wee, C.; Yen, C.C. A Systematic Review of Weight Perception in Virtual Reality: Techniques, Challenges, and Road Ahead. IEEE Access 2021, 9, 163253–163283.

75. Pusch, A.; Lécuyer, A. Pseudo-Haptics: From the Theoretical Foundations to Practical System Design Guidelines. In Proceedings of the 13th International Conference on Multimodal Interfaces, Alicante, Spain, 14–18 November 2011; ACM: New York, NY, USA, 2011; pp. 57–64.

76. Meyer, U.; Becker, J.; Draheim, S.; von Luck, K. Sensory Simulation in the Use of Haptic Proxies: Best Practices? In Proceedings of the Conference on Human Factors in Computing Systems, Online, 8–13 May 2021.

77. Kaipel, M.; Majewski, M.; Regazzoni, P. Double-Plate Fixation in Lateral Clavicle Fractures—A New Strategy. J. Trauma Inj. Infect. Crit. Care 2010, 69, 896–900.

78. Lindenhovius, A.L.C.; Felsch, Q.; Ring, D.; Kloen, P. The Long-Term Outcome of Open Reduction and Internal Fixation of Stable Displaced Isolated Partial Articular Fractures of the Radial Head. J. Trauma Inj. Infect. Crit. Care 2009, 67, 143–146.

79. Houston, J.; Chiang, A.; Haleem, S.; Bernard, J.; Bishop, T.; Lui, D.F. Reproducibility and Reliability Analysis of the Luk Distal Radius and Ulna Classification for European Patients with Adolescent Idiopathic Scoliosis. J. Child. Orthop. 2021, 15, 166–170.

80. Dubrowski, A.; Backstein, D. The Contributions of Kinesiology to Surgical Education. J. Bone Jt. Surg.-Am. Vol. 2004, 86, 2778–2781.

81. Praamsma, M.; Carnahan, H.; Backstein, D.; Veillette, C.J.H.; Gonzalez, D.; Dubrowski, A. Drilling Sounds Are Used by Surgeons and Intermediate Residents, but Not Novice Orthopedic Trainees, to Guide Drilling Motions. Can. J. Surg. J. Can. Chir. 2008, 51, 442–446.

| Article | Brief Summary |
|---|---|
| Melaisi et al. [50] | |
| Lu et al. [51] | |
| Chan et al. [52] | |
| Maćkowski et al. [53] | |
| Bosman et al. [54] | |
| Kaneko et al. [42] | |
| Zhexuan and Zhong [55] | |
| Malpica et al. [56] | |

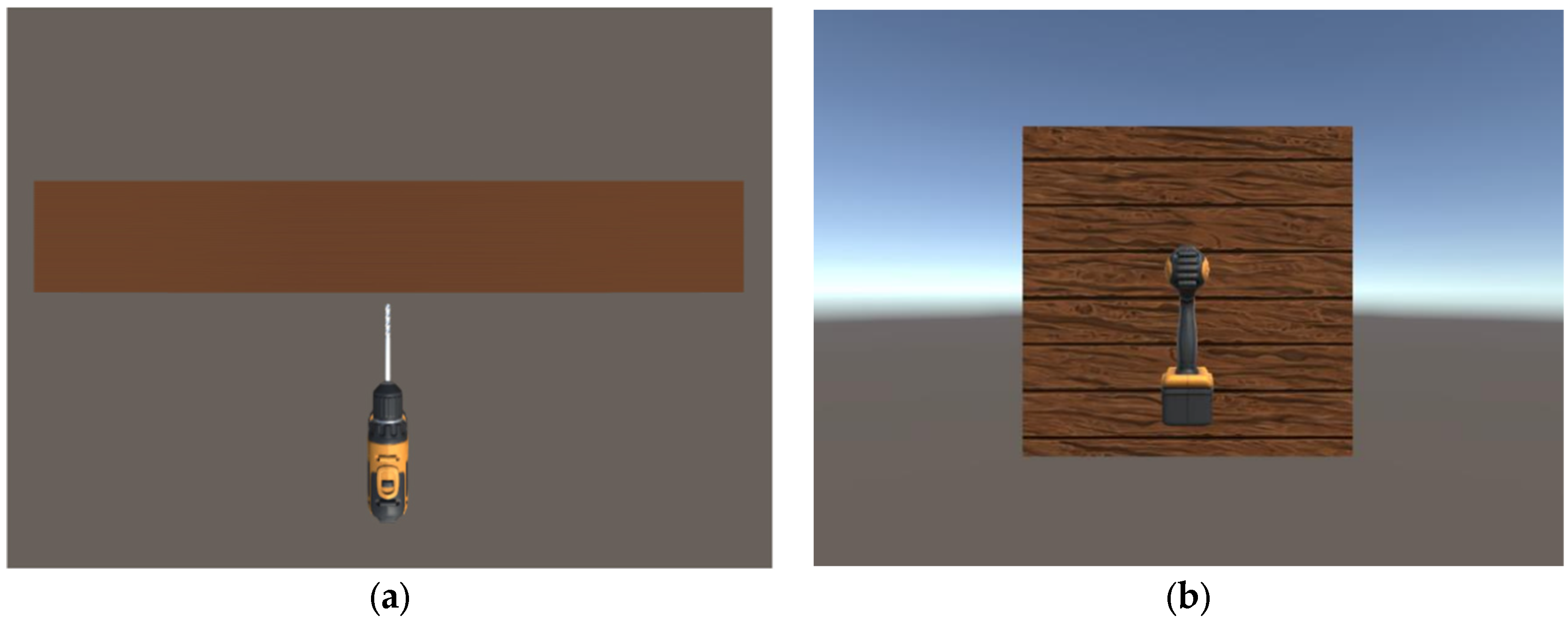

| Article | Brief Summary |
|---|---|
| Ning et al. [57] | |
| Speicher et al. [58] | |
| Eckhoff et al. [59] | |
| Haruna et al. [60] | |
| Kang et al. [61] | |
| Puértolas Bálint et al. [62] | |
| Desnoyers-Stewart et al. [63] | |
| Kurzweg et al. [64] | |
| Lee et al. [65] | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abdo, S.; Kapralos, B.; Collins, K.; Dubrowski, A. A Review of Recent Literature on Audio-Based Pseudo-Haptics. Appl. Sci. 2024, 14, 6020. https://doi.org/10.3390/app14146020
