1. Introduction

In recent years, 3D laser scanning technology has developed rapidly. Laser scanning devices obtain point cloud data by scanning objects [1]. Compared with 2D image data, point cloud data contain depth information and have a wider range of application scenarios. However, because the field of view of laser scanning devices is limited, point cloud registration is usually required to match and fuse fragmented point cloud data in applications. For example, in 3D reconstruction, point cloud data captured from different perspectives need to be registered to obtain complete point cloud data [2]; in robot grasping, point cloud registration is needed to estimate the precise pose of the workpiece [3]; in autonomous driving, point cloud registration is used to construct real-time maps and achieve high-precision positioning of the vehicle [4]; in heritage conservation, point cloud fragments are stitched together to improve the efficiency of heritage restoration while avoiding the secondary damage that manual restoration can cause [5]; in the construction of a digital city, point clouds of features obtained from multiple sites are stitched together to create a highly realistic and accurate 3D model of the urban landscape [6]. As a fundamental task, point cloud registration has important research value and broad application prospects.

The iterative closest point (ICP) algorithm [7] is the classic and most widely used algorithm for point cloud registration. It selects the points with the minimum Euclidean distance as corresponding points and obtains the optimal rigid transformation between two point clouds by minimizing the distance between corresponding points [8]. However, the ICP algorithm is highly dependent on the initial registration position and is prone to converging to local optima [9]. ICP is also computationally inefficient.

To improve ICP, Yang et al. proposed the Go-ICP algorithm, which partitions SE(3) into subspaces and removes unproductive subspaces using a branch-and-bound method to find the globally optimal transformation [10]. Although this method solves the problem of local minima and improves registration accuracy and iteration speed to some extent, it is still sensitive to initialization. Pavlov et al. introduced Anderson acceleration into the ICP algorithm to accelerate the convergence of the objective function and improve the robustness of registration [11,12], but this does not solve the problem of dependence on the initial position. Building on the work that introduced Anderson acceleration into ICP, Zhang et al. proposed a fast and robust iterative closest point (FR-ICP) algorithm [13]. They used the Welsch function as the error metric and minimized its quadratic surrogate function to obtain the optimal solution. This approach not only accelerated the iterations but also further improved the robustness of the algorithm. However, it still cannot solve the problem of dependence on the initial position.

To address the dependence on the initial position, some researchers have proposed methods that combine initial registration with the ICP algorithm to achieve fast registration. Li et al. proposed a point cloud registration algorithm based on ISS-SHOT features [14], which uses the ISS algorithm [15] to extract feature points from the point cloud and describes them with SHOT features [16]. The initial registration is completed by matching point pairs. Although this method has strong descriptiveness, it lacks robustness and easily produces erroneous point matches. Jing et al. proposed a point cloud registration method based on SIFT feature points combined with the ICP algorithm [17]. They used the scale-invariant feature transform (SIFT) algorithm to extract feature points from the point cloud and calculated fast point feature histogram (FPFH) features for the feature points [18]. In this method, the initial registration is completed by matching points. The method is computationally complex during feature extraction and is prone to erroneous matches. Wang et al. proposed an improved ICP algorithm [19] that introduces RGB-D point cloud color information to help establish accurate point correspondences and reduce the influence of noise and outliers on the registration results, thus improving the accuracy of point matching. This method requires the point clouds to have color information. Wang et al. also proposed an improved sample consensus point cloud registration algorithm [20]. By introducing a quadratic function of distance into FPFH, the weight of points in distant neighborhoods is reduced, while the weight of points in near neighborhoods is increased when matching corresponding points. This method makes the matching of corresponding point pairs more precise, but at the cost of efficiency.

The ICP algorithm and the variants mentioned above depend on the initial position of the point clouds, which can lead to poor registration results. In addition, the initial registration stage of these algorithms is computationally complex [21] and prone to erroneous matches. To solve these problems, a point cloud registration method that incorporates RGB images is proposed. First, the SIFT algorithm is used to extract feature points from the RGB images corresponding to the point clouds, and the feature points are matched with bidirectional KNN. The random sample consensus (RANSAC) algorithm [22] is then applied to eliminate incorrectly matched point pairs. The initial transformation matrix is calculated from four matched point pairs, based on the coordinate system transformation between the laser scanning device and the coaxial visible-light camera. The initial alignment is performed on the point cloud after it has been denoised with a pass-through filter [23] and downsampled with a voxel grid [24]. Finally, on the basis of the initial alignment, the FR-ICP algorithm [13] is used for precise registration. Extracting feature points directly from point clouds is computationally expensive. In this work, feature points are instead extracted from the RGB images corresponding to the point clouds, and RANSAC is used to eliminate incorrectly matched points, which reduces both the computational complexity of the initial alignment and the rate of incorrect matches.

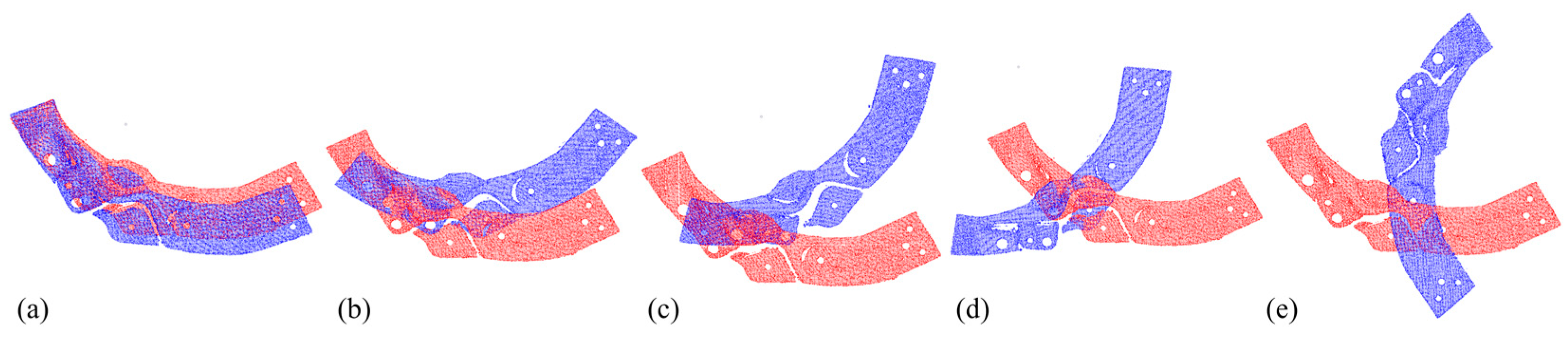

Experiments were conducted on a point cloud dataset of automotive parts collected in real scenes, and the registration error was measured under different initial angles. The proposed method was compared with ICP and FR-ICP, and the results showed that it achieves a registration error of only 0.487 mm. In the same group of point cloud experiments with comparable registration error, the proposed method improved the registration speed by up to 69% and 48% compared with ICP and FR-ICP, respectively.

2. Methods

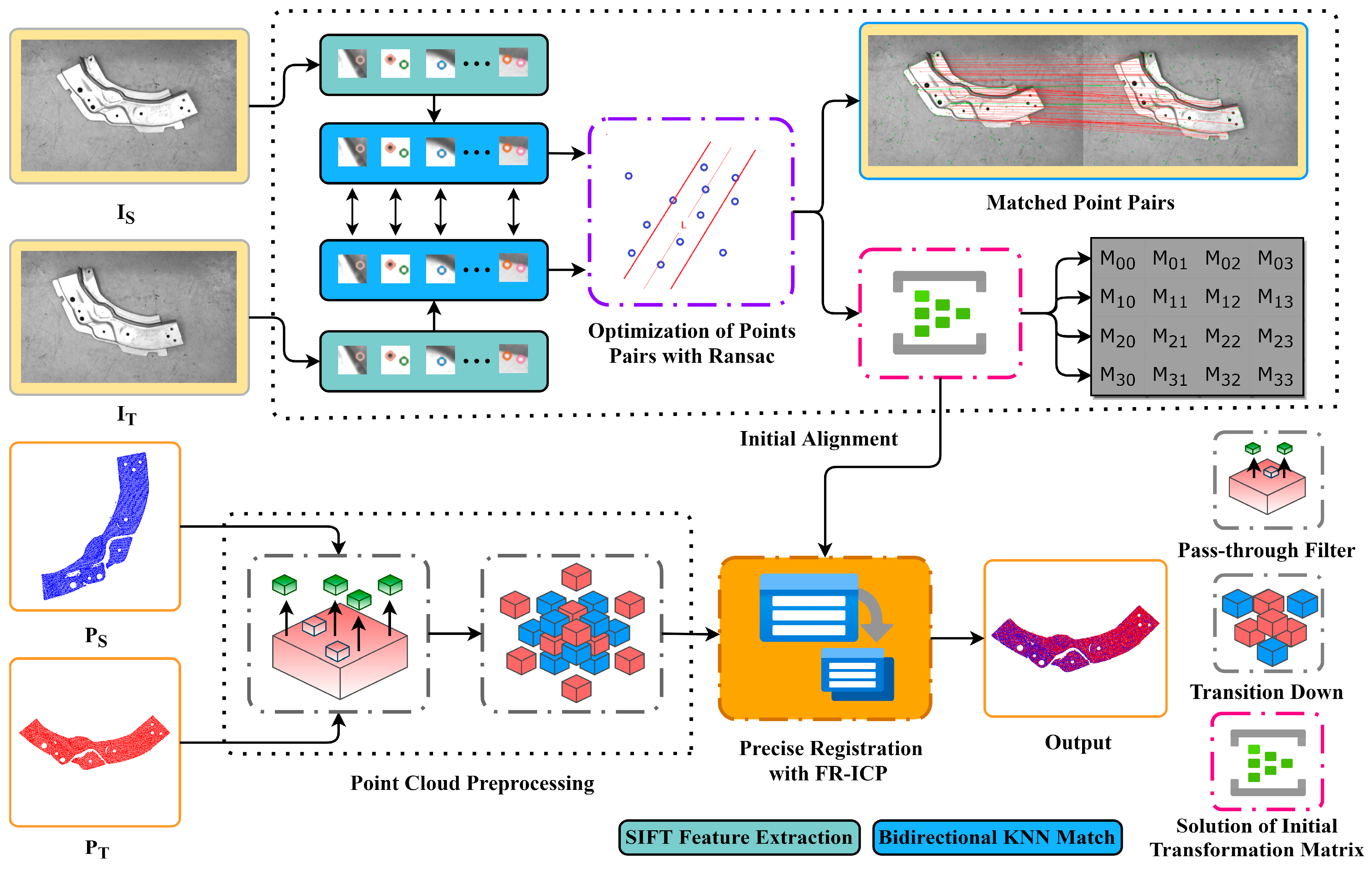

The flow of the method proposed in this article is shown in Figure 1. First, the SIFT algorithm was applied to detect feature points in the RGB images corresponding to the point clouds, and the feature descriptors were calculated. Then, a bidirectional KNN algorithm was used to match the feature point pairs based on their feature descriptors. The RANSAC algorithm was used to eliminate erroneous feature point pairs and obtain optimized feature point pairs. From the selected feature point pairs, four non-collinear pairs were chosen to solve for the homography matrix H. Based on the coordinate system transformation between the laser scanning device and the coaxial visible-light camera, the camera motion parameters decomposed from H were converted to the motion parameters of the laser scanning device to form the initial transformation matrix for point cloud registration. The initial alignment was completed by applying the initial transformation matrix, that is, a rotation and a translation, to the point cloud data that had been denoised with a pass-through filter and downsampled with a voxel grid. Finally, starting from the initial alignment, the FR-ICP algorithm, which improves ICP with Anderson acceleration, was employed for further optimization, resulting in accurate point cloud registration.

2.1. Initial Alignment Based on RGB Images

To address the strong dependence of ICP on the initial alignment position, this section introduces an initial alignment based on RGB images. Feature points are detected in the RGB scene images with SIFT and matched with bidirectional KNN. The following subsections describe how RANSAC is used to reduce the number of erroneous feature point pairs and how the transformation matrix for the initial alignment of the point cloud is solved.

2.1.1. Extraction of Feature Points with SIFT

SIFT, SURF, and ORB are commonly used algorithms for feature point detection. However, the ORB algorithm detects feature points unevenly, lacks rotation invariance, and is sensitive to noise [25]. The SURF algorithm performs poorly in detecting feature points under lighting changes and deformation [26]. Considering the good stability of the SIFT algorithm and its ability to adapt to rotation [27], scale changes, and brightness changes [28], our work uses SIFT to extract features from the RGB images for the initial alignment.

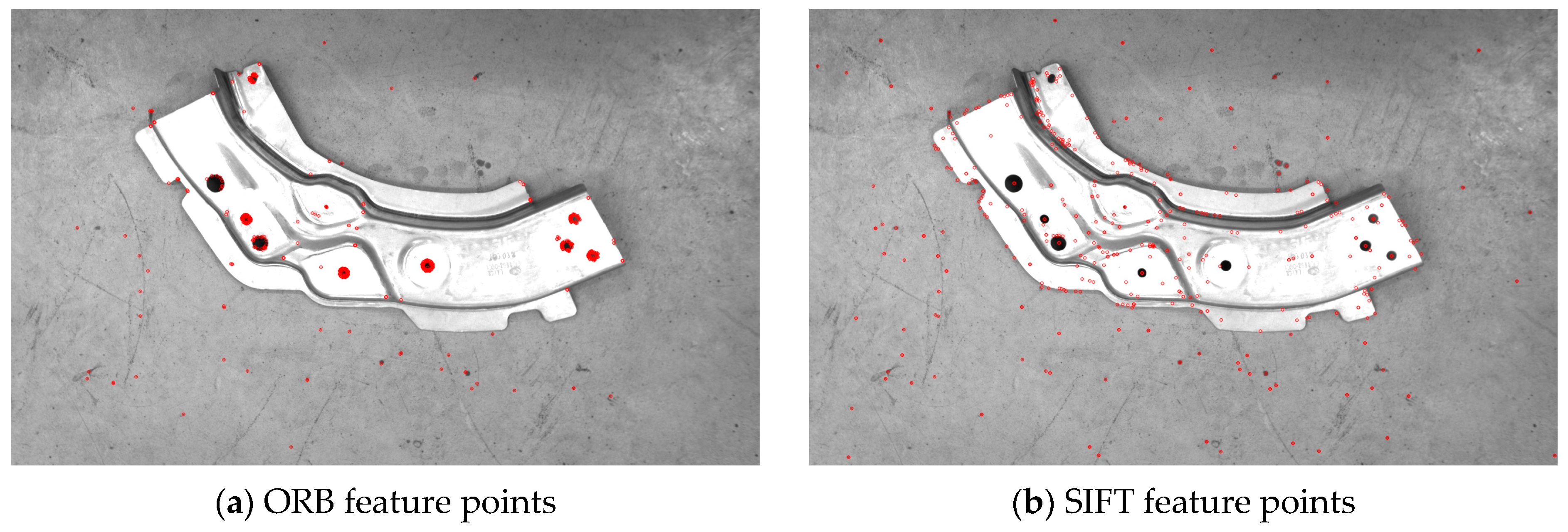

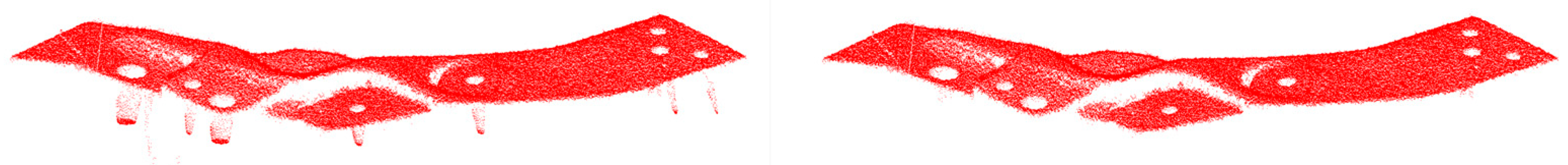

The SIFT algorithm detects extrema in the scale space by constructing a Gaussian pyramid, determines feature points from the extrema, and forms a 128-dimensional feature descriptor. To compare the performance of different feature point detection algorithms, feature points were extracted from the RGB scene images corresponding to the experimental point cloud, and the final detection results are shown in Figure 2. The left image in Figure 2 shows the feature point extraction result of ORB on the RGB scene image, in which the extracted features are unevenly distributed. The right image in Figure 2 shows the feature point extraction result of SIFT on the RGB scene image. The SIFT algorithm is sensitive to feature points in the image, and even if the scene contains only a single object, it can obtain a sufficient number of feature points, which is beneficial for subsequent feature matching.

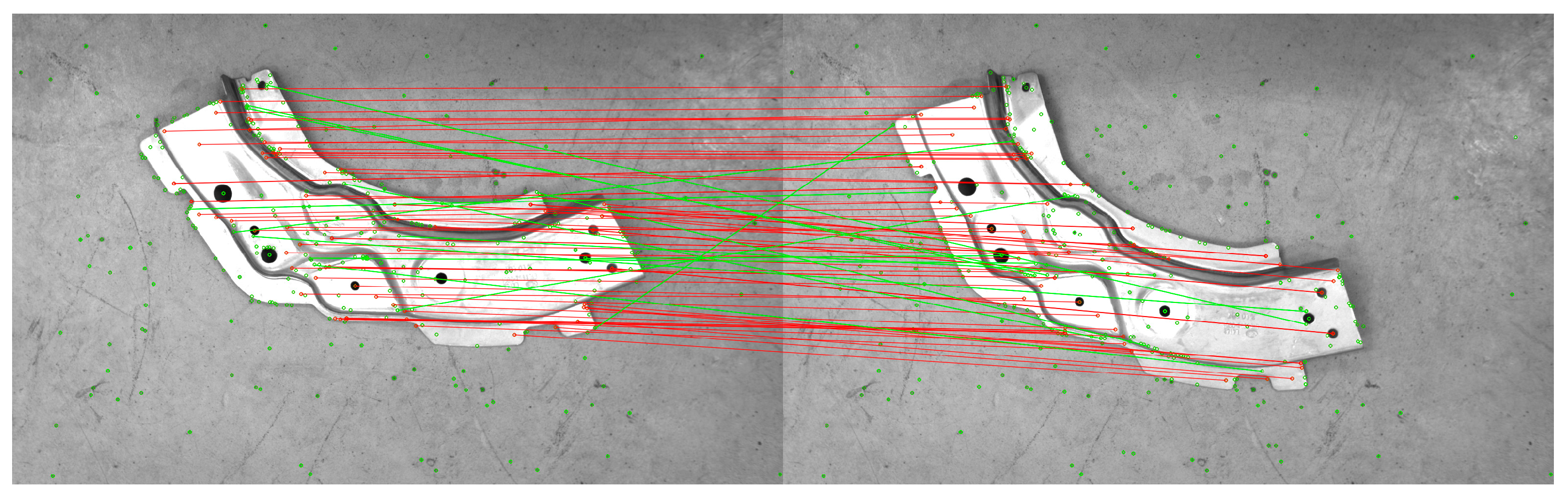

2.1.2. Feature Point Matching

Upon extraction of feature points from two RGB images, the commonly used method for matching is brute force matching. However, when the number of feature points is large, this approach can lead to a lengthy matching time and a significant number of false matches. To tackle the aforementioned issues, this paper proposes a bidirectional K-nearest neighbor (KNN) algorithm for feature point matching. Specifically, a k-d tree is constructed for both the source and target images, and KNN matching is performed independently on each of them. The common matching point pairs resulting from both matches are then obtained as the initial matching result, effectively reducing the number of false matches in the initial set.

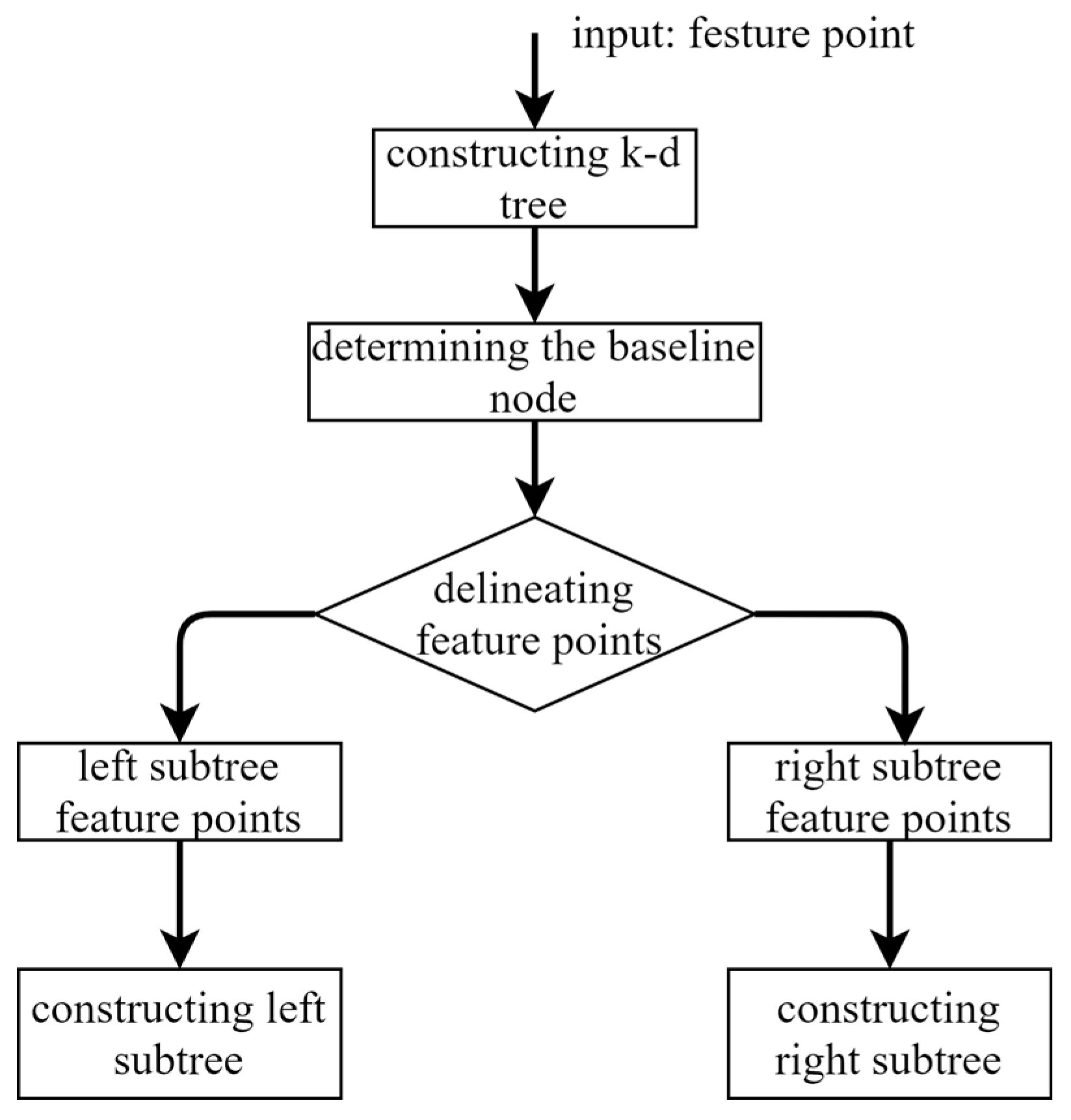

A k-d tree is constructed from the feature descriptors. At each level, the variance of the feature points along the X and Y dimensions is computed, and the dimension with the largest variance is chosen as the splitting dimension. The points are sorted along this dimension, and the median is taken as the pivot node. Points with values less than the median are assigned to the left sub-tree, while points with values greater than the median are assigned to the right sub-tree. This process is repeated recursively until all points have been assigned. Figure 3 depicts the process of constructing a k-d tree.
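The construction described above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in the experiments: the function name `build_kd_tree`, the dictionary node layout, and the toy 2D points are assumptions made for the example.

```python
from statistics import pvariance

def build_kd_tree(points):
    """Recursively build a k-d tree from a list of equal-length tuples."""
    if not points:
        return None
    dims = range(len(points[0]))
    # Split along the dimension with the largest variance.
    axis = max(dims, key=lambda d: pvariance([p[d] for p in points]))
    ordered = sorted(points, key=lambda p: p[axis])
    mid = len(ordered) // 2  # the median element becomes the pivot node
    return {
        "point": ordered[mid],
        "axis": axis,
        "left": build_kd_tree(ordered[:mid]),       # values below the median
        "right": build_kd_tree(ordered[mid + 1:]),  # values above the median
    }

# Toy 2D points (hypothetical data, not taken from the paper).
tree = build_kd_tree([(2, 3), (5, 4), (9, 6), (4, 7), (8, 1), (7, 2)])
```

Choosing the highest-variance dimension at every level, instead of cycling through the dimensions, keeps the tree balanced when the points are spread unevenly.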

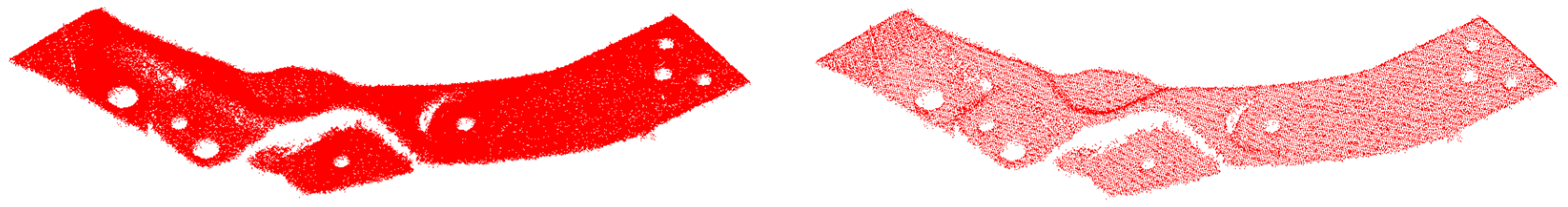

Bidirectional KNN feature matching builds k-d trees (k-d tree 1 and k-d tree 2) for the source and target images, respectively. Starting from the root node of k-d tree 1, the k nearest neighbor feature points are searched for each feature point of the target image; the same search is performed on k-d tree 2 for each feature point of the source image. The similarity between two feature points is measured by the Euclidean distance between their descriptors: the smaller the distance, the better the match. For each feature point, the top k (k = 2) most similar points are selected as its matching candidates, and the point pairs common to both matching directions are chosen as the final matching result.
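A minimal sketch of the bidirectional check is given below. It makes two simplifying assumptions that are not taken from the paper: the neighbor search is brute force instead of a k-d tree query, and a candidate is accepted with a nearest-to-second-nearest ratio test whose threshold `ratio=0.8` is a hypothetical value. The function names are likewise illustrative.

```python
import math

def knn_matches(src, dst, k=2, ratio=0.8):
    """Return {src_index: dst_index} for descriptors that pass the ratio test."""
    matches = {}
    for i, d in enumerate(src):
        # k nearest neighbours by Euclidean distance (brute force for brevity).
        nearest = sorted(range(len(dst)), key=lambda j: math.dist(d, dst[j]))[:k]
        if len(nearest) < 2:
            continue
        best, second = (math.dist(d, dst[j]) for j in nearest[:2])
        # Ratio test (an assumption): keep only clearly unambiguous matches.
        if best < ratio * second:
            matches[i] = nearest[0]
    return matches

def bidirectional_matches(desc_a, desc_b, ratio=0.8):
    """Keep only the pairs that are found in both matching directions."""
    forward = knn_matches(desc_a, desc_b, ratio=ratio)
    backward = knn_matches(desc_b, desc_a, ratio=ratio)
    return sorted((i, j) for i, j in forward.items() if backward.get(j) == i)

# Toy 2D "descriptors" (real SIFT descriptors are 128-dimensional).
desc_a = [(0.0, 0.0), (10.0, 10.0), (5.0, 5.2)]
desc_b = [(10.1, 9.9), (0.1, 0.0), (30.0, 30.0)]
pairs = bidirectional_matches(desc_a, desc_b)
```

The third descriptor of each image has no clear counterpart, so it is discarded in one direction or the other and only the two mutual matches remain.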

2.1.3. Elimination of Mismatched Pairs with RANSAC

To further improve the matching accuracy of the feature points, the mismatched point pairs need to be removed. In our work, the RANSAC algorithm was used to reject incorrectly matched point pairs [22] and improve the matching accuracy. RANSAC fits a model to a random sample of the data and evaluates it against the rest of the data; by repeating this until a preset number of samples is reached, it obtains the parametric model that is satisfied by the largest number of data points. The dataset contains both correct data and abnormal data, with the correct data viewed as inliers and the abnormal data as outliers. Noise in the data and values that deviate from the fitted model are usually regarded as outliers, and in this research the outliers are the incorrectly matched point pairs. RANSAC was performed using the following steps:

- (1) Obtain the sample set Q from the feature point matching described in Section 2.1.2, and randomly select 4 pairs of corresponding points from set Q to form set S.

- (2) Use set S to calculate the homography matrix H, denoted as model M. The method for solving the homography matrix is presented in Section 2.1.4.

- (3) Calculate the projection error between all data points in set Q and model M. If the error of a point pair is less than the preset threshold, add that point pair to the inlier set I. For a pair of matching points, the projection error is the distance between the point in the second image and the projection of the point in the first image under model M.

- (4) Randomly sample again to obtain a new set S, and repeat steps (2) and (3). If the number of elements in the current inlier set I is greater than that of the best inlier set found so far (the set with the most elements), update the best inlier set and the sample count. Continue until the number of samples reaches k (default 200).

- (5) After completing k samples, the best inlier set is selected as the dataset of the optimized feature point pairs.
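The five steps above can be sketched as follows. This is an illustrative pure-Python version under stated assumptions: the projection error is taken to be the Euclidean distance between the projected point and its match, the homography is solved from the 8 linear equations with the last entry fixed to 1, and the names `ransac_homography`, `threshold`, and `seed` are hypothetical. Only the default of 200 samples comes from the text.

```python
import math
import random

def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        if abs(m[p][c]) < 1e-12:
            raise ValueError("degenerate sample")
        m[c], m[p] = m[p], m[c]
        for r in range(n):
            if r != c:
                f = m[r][c] / m[c][c]
                m[r] = [x - f * y for x, y in zip(m[r], m[c])]
    return [m[i][n] / m[i][i] for i in range(n)]

def homography_from_pairs(pairs):
    """Estimate H (last entry fixed to 1) from 4 pairs ((x, y), (u, v))."""
    a, b = [], []
    for (x, y), (u, v) in pairs:
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y]); b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y]); b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]

def project(h, pt):
    """Apply homography h to a 2D point."""
    x, y = pt
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if abs(w) < 1e-12:
        return (math.inf, math.inf)
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)

def ransac_homography(pairs, threshold=3.0, iterations=200, seed=0):
    """Return the largest inlier set found over `iterations` random samples."""
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        sample = rng.sample(pairs, 4)                      # step (1)
        try:
            h = homography_from_pairs(sample)              # step (2)
        except ValueError:
            continue  # collinear sample, no unique model
        inliers = [(p, q) for p, q in pairs
                   if math.dist(project(h, p), q) < threshold]  # step (3)
        if len(inliers) > len(best_inliers):               # step (4)
            best_inliers = inliers
    return best_inliers                                    # step (5)

# Synthetic example: 8 consistent pairs plus 2 gross mismatches.
good = [((x, y), (2 * x + 5, 2 * y - 3))
        for x, y in [(0, 0), (10, 0), (0, 10), (10, 10), (5, 2), (3, 8), (7, 7), (1, 4)]]
bad = [((2, 2), (50, 50)), ((8, 3), (-20, 7))]
kept = ransac_homography(good + bad, threshold=0.5)
```

In practice a library routine would normally replace the hand-written solver; the sketch only makes the sampling and voting logic explicit.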

2.1.4. Solution of Initial Transformation Matrix

The optimized matched pairs of feature points are used to solve for the homography matrix H of the projection transformation. The initial transformation matrix of the point cloud is then obtained by converting the camera motion parameters decomposed from H into the relative motion parameters of the laser scanner, according to the fixed coordinate transformation relationship between the camera and the laser scanner.

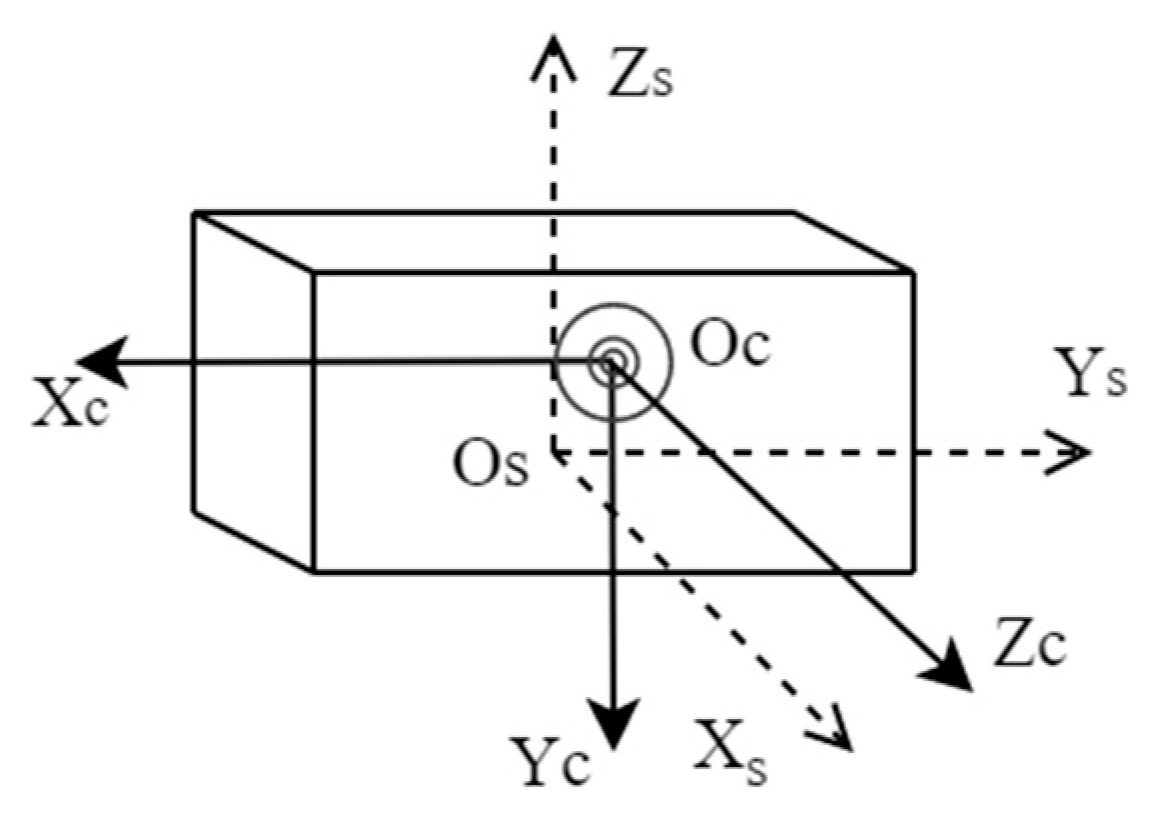

Assuming that the positions of the laser scanning device and the visible-light camera are fixed, the coordinate systems of the laser scanning device and the camera are defined as $O_L$ and $O_C$, respectively. The rotation parameter $R_{LC}$ and translation parameter $t_{LC}$ are regarded as the rigid transformation relationship between the two coordinate systems. If $P_L$ and $P_C$ are the coordinates of the same point under the two coordinate systems, then the transformation relationship between $P_L$ and $P_C$ is expressed as

$$P_C = R_{LC} P_L + t_{LC}.$$

In the position shown in Figure 4, a conventional laser scanner is placed coaxially with the visible-light camera. Because the $X_L$ and $X_C$ axes, the $Y_L$ and $Y_C$ axes, and the $Z_L$ and $Z_C$ axes of the laser scanner coordinate system and the camera coordinate system are parallel to each other, the rotation parameter between these two coordinate systems is the identity matrix:

$$R_{LC} = I_{3 \times 3}.$$

A point $p = (x, y, 1)^{T}$ in the RGB image captured by the camera can be transformed by projection to obtain the corresponding point $p' = (x', y', 1)^{T}$ in the other image, so the mapping relationship between the corresponding points of the two images can be written as

$$s\,p' = H p, \qquad H = \begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{bmatrix},$$

where $s$ is a nonzero homogeneous scale and $H$ is a 3 × 3 homography matrix. Because $H$ is defined only up to scale, $h_{33}$ is normalized to the constant 1, so there are 8 effective degrees of freedom. Extending the above homography transformation relationship, a mapping relationship for feature point matching can be established. Each pair of matching points provides two equations, so the matrix $H$ with 8 degrees of freedom can be found from the 8 equations established by 4 sets of matching points. The relative rotation parameter $R_C$ and the translation parameter $t_C$ for the camera at two positions are obtained from the SVD [29] decomposition of the matrix $H$.

The corresponding points in the two point clouds obtained by the laser scanning equipment are marked as $P_{L1}$ and $P_{L2}$, and the coordinates of the two corresponding points under the camera are $P_{C1}$ and $P_{C2}$. The transformation of these two points under the two coordinate systems is expressed as

$$P_{C1} = R_{LC} P_{L1} + t_{LC}, \qquad P_{C2} = R_{LC} P_{L2} + t_{LC}.$$

From the relative rotation parameter $R_C$ and translation parameter $t_C$ of the camera at the two positions, we have

$$P_{C2} = R_C P_{C1} + \lambda t_C,$$

where $\lambda$ is the scale factor of the translation. Substituting into the above two equations, we can get

$$R_{LC} P_{L2} + t_{LC} = R_C \left( R_{LC} P_{L1} + t_{LC} \right) + \lambda t_C.$$

With $R_{LC} = I_{3 \times 3}$, the relative rotation parameter $R$ and translation parameter $t$ of the laser scanning device at two positions can be obtained as follows:

$$R = R_C, \qquad t = \left( R_C - I_{3 \times 3} \right) t_{LC} + \lambda t_C.$$

Given that the scale factor $\lambda$ cannot be determined, the translation parameter can only be estimated from the above equation. The rotation parameter and translation parameter form the initial transformation matrix, with which the point cloud is rotated and translated to complete the initial alignment.
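The conversion from camera motion to laser scanner motion can be illustrated with a short sketch. It assumes one particular convention that the text does not fix: the extrinsics map laser coordinates to camera coordinates (x_cam = r_ext · x_laser + t_ext), and the camera motion maps the first pose to the second (x_cam2 = r_cam · x_cam1 + t_cam) with the translation scale already chosen. All function and parameter names are hypothetical.

```python
def mat_vec(m, v):
    """3x3 matrix times 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

def mat_mul(a, b):
    """3x3 matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def transpose(m):
    return [list(row) for row in zip(*m)]

def laser_motion_from_camera(r_cam, t_cam, r_ext, t_ext):
    """Convert camera motion (r_cam, t_cam) into laser scanner motion.

    Assumed conventions: x_cam = r_ext @ x_laser + t_ext at both poses,
    and x_cam2 = r_cam @ x_cam1 + t_cam between the poses.
    """
    r_ext_t = transpose(r_ext)  # the inverse of a rotation is its transpose
    r_laser = mat_mul(r_ext_t, mat_mul(r_cam, r_ext))
    shifted = [a + b - c for a, b, c in zip(mat_vec(r_cam, t_ext), t_cam, t_ext)]
    t_laser = mat_vec(r_ext_t, shifted)
    return r_laser, t_laser

# Coaxial setup: parallel axes (identity rotation) and a small fixed offset.
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
rot_z_90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
r_l, t_l = laser_motion_from_camera(rot_z_90, [1, 2, 3], identity, [0, 0.1, 0])
```

With parallel axes the laser rotation equals the camera rotation, and only the translation picks up a correction from the offset between the two sensors.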

2.2. Point Cloud Data Preprocessing

During point cloud acquisition, the point cloud data are contaminated by noise points caused by the lighting environment and equipment errors. To improve the efficiency of subsequent point cloud processing, the point cloud data are preprocessed in the experiments of Section 3.1 by removing noise and downsampling.

2.2.1. Pass-through Filter

The point cloud filter can eliminate some noisy points in the scene to improve the efficiency of subsequent point cloud registration. The pass-through filter, as one of the most common point cloud filters, reduces noise by eliminating values in a dimension that are not within a set threshold.

In this paper, the pass-through filter was used to eliminate the noise points along the Z-axis. The point cloud data in this paper cover a range of [−851, −715] on the Z-axis. After several tests, the filter threshold was set to [−790, −736]. The data points outside this range were treated as noise and removed, and the obtained results are shown in Figure 5.

The number of points in the point cloud was 157,551 before noise removal and 153,265 after noise removal. The pass-through filter does not change the shape or position of the remaining point cloud data.
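A pass-through filter reduces to a range check on one coordinate, as the following minimal sketch shows. The function name and the tuple-based point representation are assumptions for illustration; the default limits are the Z-axis thresholds quoted above.

```python
def pass_through(points, axis=2, lower=-790.0, upper=-736.0):
    """Keep points whose coordinate along `axis` lies within [lower, upper]."""
    return [p for p in points if lower <= p[axis] <= upper]

# Hypothetical points spanning the Z range reported for the dataset.
cloud = [(0.0, 0.0, -851.0), (1.0, 2.0, -790.0), (3.0, 4.0, -760.0),
         (5.0, 6.0, -736.0), (7.0, 8.0, -715.0)]
filtered = pass_through(cloud)
```

The surviving points are returned unchanged, which is why the filter leaves the shape and position of the remaining cloud intact.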

2.2.2. Downsampling with Voxel Grid

After the pass-through filter reduced the noise, the point cloud data were still relatively dense. To improve the speed of subsequent point cloud processing, the point cloud was downsampled with a voxel grid. This approach retains the basic contours and features of the point cloud while effectively reducing the amount of point cloud data.

This process involves establishing a 3D voxel grid over the point cloud, which consists of many small cells. In each cell, the data point closest to the center of gravity of the cell is used to represent all data points in that cell, and the other data points are eliminated. This approach sparsifies the data while being simple and efficient, without the need to build complex topologies. The center of gravity of each cell is calculated as

$$\bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i, \qquad \bar{y} = \frac{1}{n} \sum_{i=1}^{n} y_i, \qquad \bar{z} = \frac{1}{n} \sum_{i=1}^{n} z_i.$$

In the above equation, $n$ denotes the number of points in the cell, and $(x_i, y_i, z_i)$ denotes the points in the cell. The point cloud dataset in this paper is large, so a cubic voxel with a size of 1 m is used, where the size refers to the extent of the voxel in the X, Y, and Z directions. The results of downsampling the point cloud with the voxel grid are reported in Figure 6.

The number of points was 153,265 before downsampling and 33,094 afterwards. As shown in Figure 6, the basic contours and features are preserved after downsampling. In addition, the voxel size can be adjusted to balance the number of points against the contour information of the point cloud.
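The downsampling rule, keeping in each voxel the point closest to the center of gravity, can be sketched as follows. The function name, the `leaf` parameter, and the toy points are assumptions for the example; the cell index is obtained by flooring each coordinate divided by the voxel size.

```python
import math
from collections import defaultdict

def voxel_downsample(points, leaf=1.0):
    """Keep, per voxel of edge `leaf`, the point nearest the voxel's centroid."""
    cells = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / leaf) for c in p)  # integer voxel index
        cells[key].append(p)
    kept = []
    for members in cells.values():
        n = len(members)
        centroid = tuple(sum(p[d] for p in members) / n for d in range(3))
        kept.append(min(members, key=lambda p: math.dist(p, centroid)))
    return kept

# Three points share one voxel, the fourth lies in a neighbouring voxel.
dense = [(0.1, 0.1, 0.1), (0.2, 0.2, 0.2), (0.9, 0.9, 0.9), (1.5, 0.5, 0.5)]
sparse = voxel_downsample(dense, leaf=1.0)
```

Keeping an original point rather than the centroid itself means every output point is a measured sample, at the cost of one extra distance comparison per point.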

2.3. Accurate Registration of Point Cloud with FR-ICP

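Given two sets of point cloud data $P = \{p_1, \ldots, p_M\}$ and $Q = \{q_1, \ldots, q_N\}$, ICP aligns $P$ with $Q$ by optimizing the rigid transformation (rotation matrix $R$ and translation vector $t$) on $P$:

$$\min_{R \in SO(3),\, t} \sum_{i=1}^{M} D_i(R, t)^2,$$

where $D_i(R, t) = \min_{q \in Q} \lVert R p_i + t - q \rVert$ is expressed as the distance from the transformed point $R p_i + t$ to the point cloud $Q$. ICP solves the point cloud registration issue by alternating iterations in two steps as follows:

- (1) Correspondence step: seek the nearest corresponding point $\hat{q}_i^{(k)}$ of $p_i$ in $Q$ based on the transformation $(R^{(k)}, t^{(k)})$:

$$\hat{q}_i^{(k)} = \arg\min_{q \in Q} \lVert R^{(k)} p_i + t^{(k)} - q \rVert.$$

- (2) Alignment step: update the transformation by minimizing the Euclidean distance between the corresponding points:

$$(R^{(k+1)}, t^{(k+1)}) = \arg\min_{R \in SO(3),\, t} \sum_{i=1}^{M} \lVert R p_i + t - \hat{q}_i^{(k)} \rVert^2.$$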
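The alternation between the correspondence step and the alignment step can be illustrated with a small point-to-point ICP. To stay short and dependency-free, this sketch works in 2D, where the optimal rotation has a closed form, and uses a brute-force nearest-neighbor search; the 3D case used in the paper would typically solve the alignment step with an SVD instead. All names and the test shape are hypothetical.

```python
import math

def nearest(p, cloud):
    """Closest point of `cloud` to p (brute force)."""
    return min(cloud, key=lambda q: math.dist(p, q))

def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src[i] to dst[i]."""
    n = len(src)
    cs = [sum(p[d] for p in src) / n for d in range(2)]
    cd = [sum(q[d] for q in dst) / n for d in range(2)]
    sin_sum = cos_sum = 0.0
    for p, q in zip(src, dst):
        px, py = p[0] - cs[0], p[1] - cs[1]
        qx, qy = q[0] - cd[0], q[1] - cd[1]
        cos_sum += px * qx + py * qy   # dot products
        sin_sum += px * qy - py * qx   # 2D cross products
    theta = math.atan2(sin_sum, cos_sum)
    c, s = math.cos(theta), math.sin(theta)
    t = (cd[0] - (c * cs[0] - s * cs[1]), cd[1] - (s * cs[0] + c * cs[1]))
    return theta, t

def apply(theta, t, p):
    """Rotate p by theta and translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])

def icp_2d(source, target, iterations=20):
    theta, t = 0.0, (0.0, 0.0)
    for _ in range(iterations):
        moved = [apply(theta, t, p) for p in source]
        matched = [nearest(p, target) for p in moved]   # correspondence step
        theta, t = best_rigid_2d(source, matched)       # alignment step
    return theta, t

# Target is the source shape after a small known rotation and translation.
source = [(0, 0), (4, 0), (4, 3), (0, 3), (2, 5), (1, 1)]
target = [apply(0.05, (0.1, -0.05), p) for p in source]
theta_est, t_est = icp_2d(source, target)
```

The example converges because the initial misalignment is small compared with the point spacing; with a large initial offset the nearest-neighbor matches would be wrong, which is the sensitivity to the initial position that the initial alignment is meant to remove.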

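The FR-ICP algorithm replaces the squared-distance error of ICP with an error based on the Welsch function, and applies the Anderson-accelerated majorization-minimization (MM) [30] algorithm to speed up the minimization of the objective function. Specifically, the registration is denoted as

$$\min_{R \in SO(3),\, t} \sum_{i=1}^{M} \psi_\nu\left( D_i(R, t) \right),$$

where $D_i(R, t)$ is the distance from the transformed point $R p_i + t$ to the point cloud $Q$ and $\psi_\nu$ is the Welsch function:

$$\psi_\nu(x) = 1 - \exp\left( -\frac{x^2}{2\nu^2} \right).$$

Here, $\nu > 0$ is a user-specified parameter. The Welsch function is used as the error metric, and its quadratic surrogate function is minimized to obtain the optimal solution, so the final registration is reported as

$$(R^{(k+1)}, t^{(k+1)}) = \arg\min_{R \in SO(3),\, t} \sum_{i=1}^{M} \omega_i \lVert R p_i + t - \hat{q}_i^{(k)} \rVert^2,$$

where $\hat{q}_i^{(k)}$ is the closest point in $Q$ to $p_i$ under the current transformation $(R^{(k)}, t^{(k)})$ and

$$\omega_i = \exp\left( -\frac{\lVert R^{(k)} p_i + t^{(k)} - \hat{q}_i^{(k)} \rVert^2}{2\nu^2} \right).$$

This paper limits the parameter range of $\nu$ with $\nu_{\min}$ and $\nu_{\max}$, where $\nu_{\min}$ is computed from the median distance from each point $q \in Q$ to its six nearest points on $Q$ and $\nu_{\max}$ is computed from the median of all initial point-wise distances $\{D_i\}$.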
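The effect of the Welsch function on outliers can be seen in a short numerical sketch. It assumes the standard Welsch form, 1 − exp(−x²/(2ν²)), and the corresponding surrogate weight exp(−r²/(2ν²)); the function names and the residual values are hypothetical.

```python
import math

def welsch(x, nu):
    """Welsch error: close to x^2 / (2 nu^2) for small x, saturating at 1."""
    return 1.0 - math.exp(-x * x / (2.0 * nu * nu))

def welsch_weight(residual, nu):
    """Weight of a correspondence in the quadratic surrogate problem."""
    return math.exp(-residual * residual / (2.0 * nu * nu))

# Two well-matched points and one gross outlier (hypothetical residuals).
residuals = [0.1, 0.2, 5.0]
weights = [welsch_weight(r, nu=1.0) for r in residuals]
```

With ν = 1 the two small residuals keep weights close to 1, while the outlier at distance 5 receives a weight of about 4 × 10⁻⁶ and therefore has almost no influence on the weighted alignment step. A smaller ν makes this rejection sharper, which is why the admissible range of ν matters.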

Like ICP, the FR-ICP algorithm obtains the optimal rigid transformation between two point clouds by minimizing the distance between corresponding points in the two point sets, so it is also sensitive to the initial position. In this paper, initial alignment and FR-ICP were combined to complete the whole registration process, which avoids registration failure caused by falling into a local optimum and also improves the efficiency of the accurate registration stage. In the accurate registration stage, the result of the initial alignment of the point cloud was used as the input of FR-ICP. The default parameter settings of FR-ICP were used to register the two point sets to ensure the final accuracy.