1. Introduction

With advances in space technology, the growing number of operational spacecraft faces a severe threat from space debris, which is caused by frequent space launch activities [1,2]. As of March 2022, the U.S. Space Surveillance Network (SSN) had cataloged up to 25,000 objects, including space debris, defunct spacecraft, and active spacecraft, and the number is expected to keep increasing [3]. Collisions with large space debris could destroy an entire spacecraft, while small space debris, owing to its high velocity, could cause irreversible damage such as performance degradation or loss of function. Therefore, it is vital to observe space debris from a distance to avoid potential collisions with spacecraft. One crucial step is space debris detection, whose results can be further used for space debris tracking and cataloging.

There are currently two main categories of space debris surveillance systems: space-based systems and ground-based systems. Ground-based systems rely on large telescopes or radar installed on the ground for space debris detection and recognition. In contrast, space-based systems use onboard sensing devices to detect space debris. Space-based systems have the following advantages over ground-based systems. (1) They are not affected by weather or the day-night cycle. (2) They are not restricted to stationary observation sites. (3) They can detect millimeter-sized objects, whereas ground-based systems are aimed at centimeter-sized objects [4]. Therefore, space-based surveillance systems are an effective means of enhancing spacecraft safety. For space debris surveillance, onboard sensing devices generally include visible sensors, infrared sensors, and radars. Among these, visible sensors are a promising solution due to their high degree of autonomy and highly accurate observation data [5].

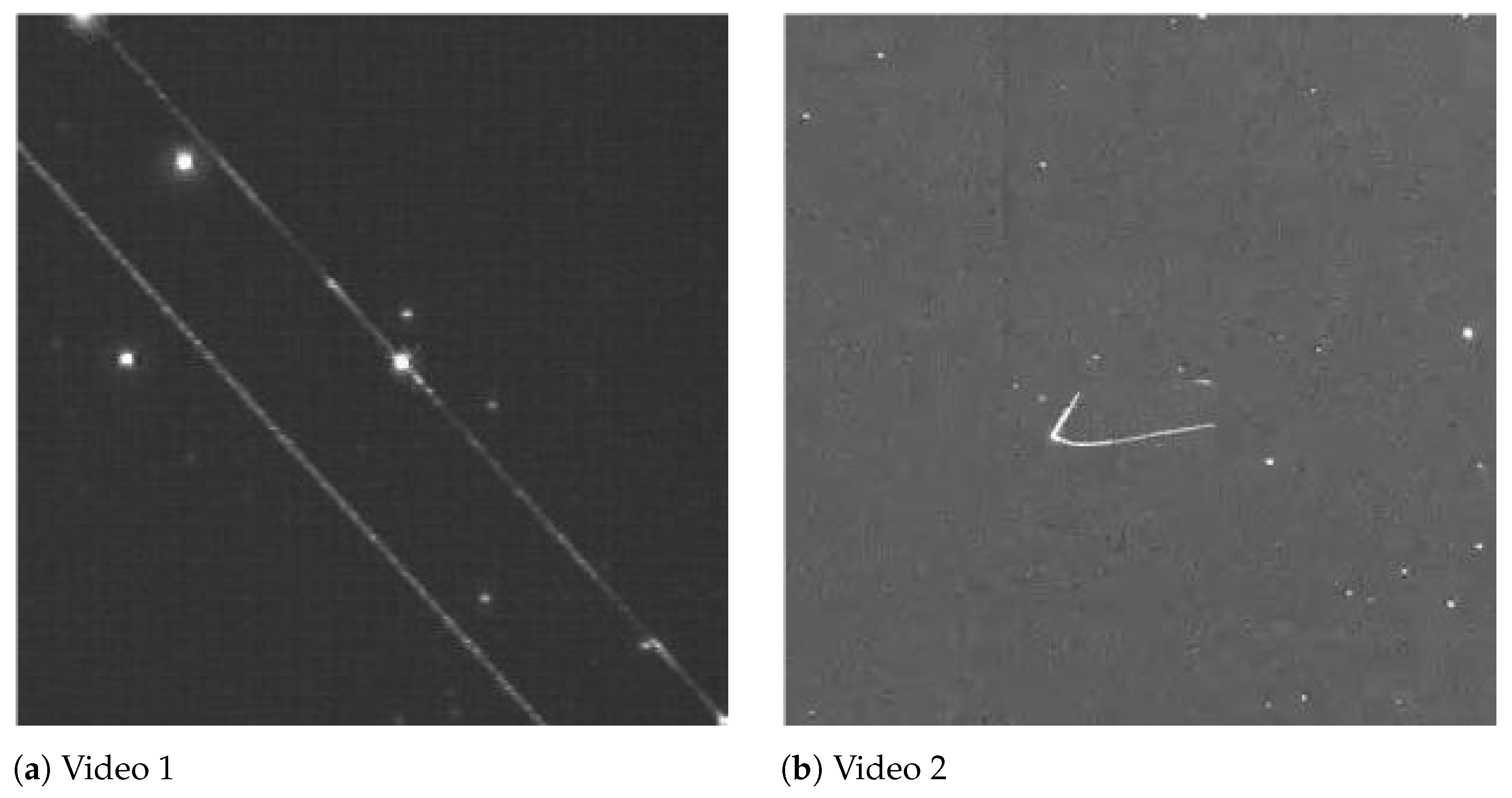

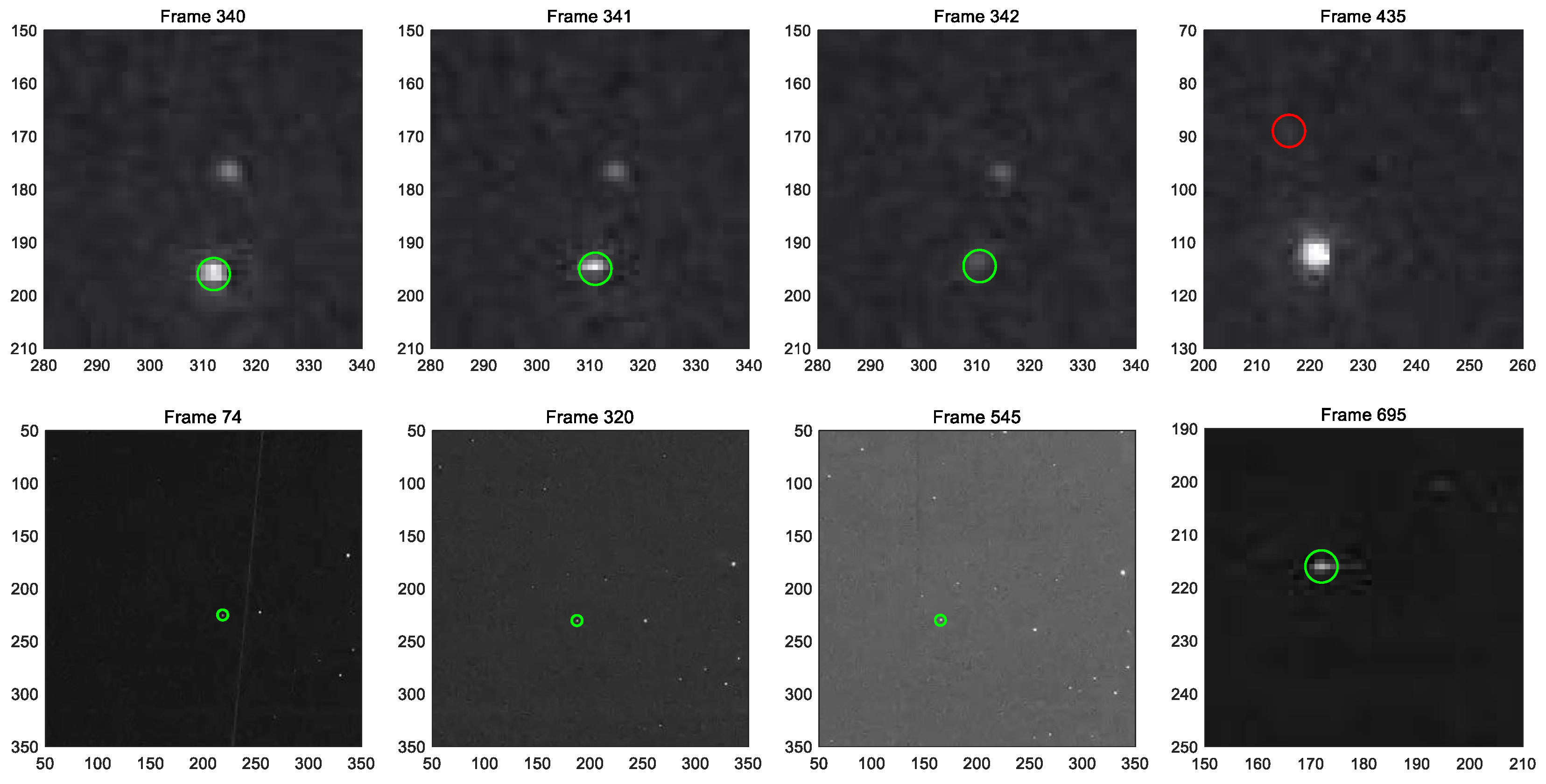

The typical procedure for detecting space debris involves object extraction and centroid computation [6,7]. The centroid can then be used for astrometry by matching the observed star field with the star catalog [8]. However, the main focus of this paper is object extraction; a simple centroid computation method was adopted to evaluate the proposed object extraction method, and the astrometry problem is not covered. Depending on the operational mode of the surveillance system, commonly used visible sensor-based space debris detection strategies can be divided into streak-like object detection and point-like object detection. When the orientation of the surveillance platform is continuously fixed to the stars, the space objects appear as streak-like regions; this operational mode is called sidereal tracking. When the surveillance platform is continuously reoriented to follow the space objects, the objects appear as point-like regions; this mode is called object tracking [9]. In both operational modes, space debris and stars can then be distinguished by their shape characteristics using only single-frame images. Typical single-frame image-based methods for space debris detection include the Hough transform method [10], feature-based methods using image moments [7], point spread function (PSF) fitting techniques [11,12], and mathematical morphological methods [13,14]. However, faint space debris cannot be detected in single-frame images because the observable magnitude is limited by charge-coupled device (CCD) sensors [15]. To deal with this problem, multi-frame image-based methods that exploit the motion information of space objects have been proposed; they can be divided into two categories: track-before-detect (TBD) methods and optical flow methods. The main idea of TBD is to improve the signal-to-noise ratio (SNR) of faint space objects by accumulating measurements, which produces more confident detections. Representative TBD-based space debris detection methods include the stacking method [16,17], the line-identifying technique [18], particle filter (PF) methods [19,20], and multistage hypothesis testing (MHT)-based methods [21,22,23]. However, these methods have many limitations. The stacking method needs to presume a number of likely movement paths and is time-consuming when analyzing observation data for uncatalogued space debris. The line-identifying technique assumes that the space debris moves with a constant velocity, and its analysis time rises exponentially with the number of candidate detections in each frame. PF-based methods require a carefully designed likelihood function to balance particle convergence against the final tracks under computational restrictions. For MHT-based methods, the number of candidate trajectories increases rapidly with the number of space objects and the amount of noise, resulting in high computational costs. Optical flow-based methods [24,25,26] can be performed without prior information, but they are also limited by their high computational complexity.
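The SNR gain that TBD-style accumulation relies on can be illustrated with a minimal shift-and-add sketch. This is only a toy under stated assumptions, not the stacking method of [16,17]: the frames are one-dimensional, the noise is Gaussian, the object moves at a constant integer velocity, and the function names (`simulate_frames`, `shift_and_stack`, `peak_snr`) are hypothetical. It also shows why the motion path has to be presumed: the frames only add coherently when the hypothesised velocity matches the true one.

```python
import random
import statistics


def simulate_frames(n_frames, length, start, velocity, amplitude, sigma, rng):
    """Toy 1-D frames: Gaussian noise plus one point source moving `velocity` px/frame."""
    frames = []
    for t in range(n_frames):
        frame = [rng.gauss(0.0, sigma) for _ in range(length)]
        frame[start + velocity * t] += amplitude
        frames.append(frame)
    return frames


def shift_and_stack(frames, velocity):
    """Undo the hypothesised motion in every frame, then average the aligned frames."""
    length = len(frames[0])
    stacked = [0.0] * length
    for t, frame in enumerate(frames):
        shift = velocity * t
        for x in range(length - shift):
            stacked[x] += frame[x + shift]
    return [value / len(frames) for value in stacked]


def peak_snr(row, peak_index, valid_length):
    """(peak - background mean) / background standard deviation."""
    background = [v for i, v in enumerate(row[:valid_length]) if i != peak_index]
    return (row[peak_index] - statistics.mean(background)) / statistics.pstdev(background)


rng = random.Random(0)
n_frames, length, start, velocity = 16, 400, 50, 3
frames = simulate_frames(n_frames, length, start, velocity, 2.0, 1.0, rng)
stacked = shift_and_stack(frames, velocity)
# Only pixels covered by all shifted frames are used for the noise estimate.
valid = length - velocity * (n_frames - 1)
print("single-frame SNR:", peak_snr(frames[0], start, length))
print("stacked SNR:     ", peak_snr(stacked, start, valid))
```

Averaging N aligned frames leaves the object amplitude unchanged while reducing the noise standard deviation by roughly a factor of √N, so 16 frames give about a fourfold SNR gain in this toy setting. When the velocity is unknown, the stacking has to be repeated for each candidate path, which is the source of the cost noted above.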

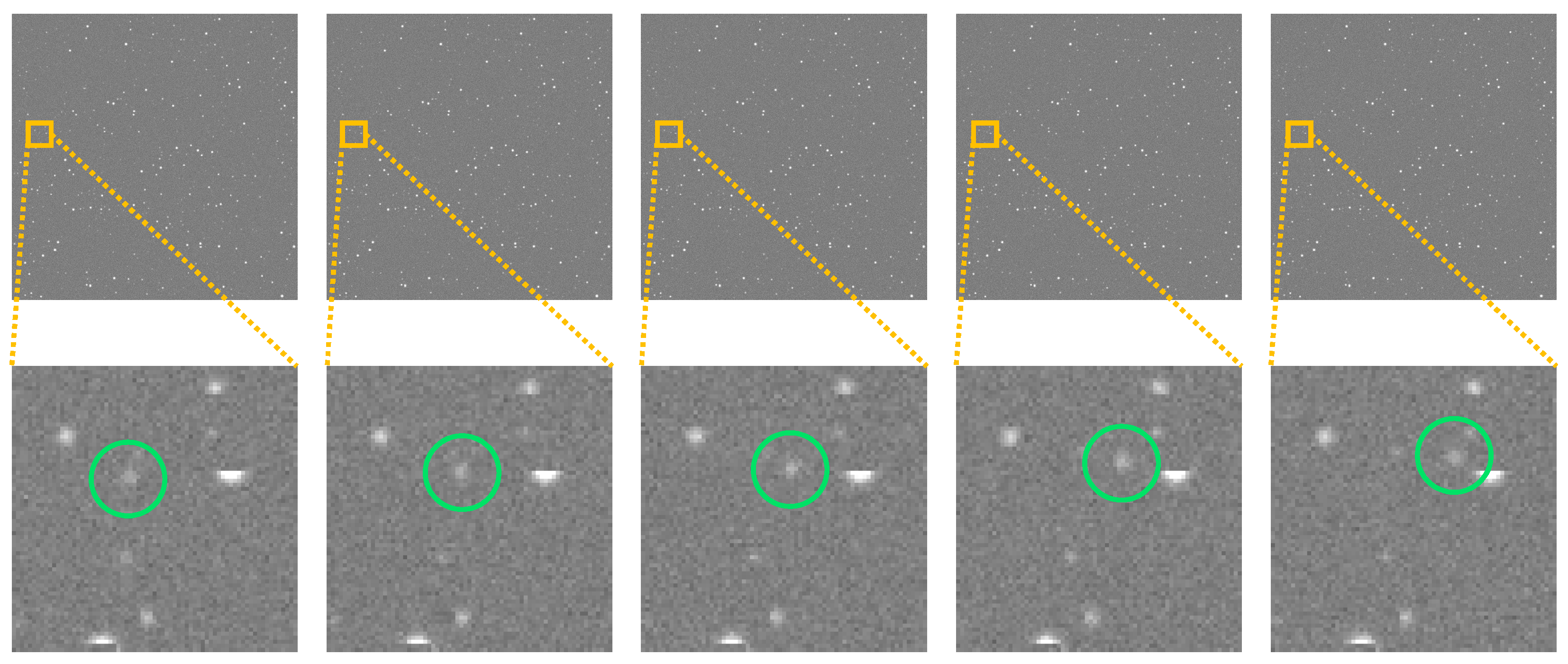

With the progress in satellite imaging technology, video satellite image data have been introduced to detect space objects in recent years [27,28]. Unlike multi-frame image sequences taken with long exposure times and long intervals, video image data capture spatial information together with more compact temporal information that reflects the continuous motion of space objects, which is vital for immediate orbital anomaly detection and continuous monitoring. Typical video satellites and their key parameters are listed in Table 1.

Zhang et al. [28] proposed a space object detection algorithm based on motion information using the Tiantuo-2 video satellite. The authors first removed the image background in each single frame based on local image properties. The space object trajectories were then associated across all frames with a Kalman filter. Finally, a trajectory was considered a space object if the mean velocity of the object over all frames was larger than a given threshold. The algorithm can detect moving space objects with brightness changes from satellite videos, but the process is not straightforward because it involves several separate steps. Moreover, many parameters must be set in advance, either empirically or experimentally. The advantages and disadvantages of the above three methods are summarized in Table 2.
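The final decision step described for the motion-based approach, accepting a trajectory when its mean velocity exceeds a threshold, can be sketched as follows. This is an illustrative sketch only, not the implementation of [28]: the track is assumed to be a list of per-frame (x, y) centroids that have already been associated (the Kalman filter step is omitted), and the function names and the threshold value are hypothetical.

```python
import math


def mean_speed(track):
    """Mean displacement per frame (pixels/frame) along a track of (x, y) positions."""
    if len(track) < 2:
        return 0.0
    steps = [math.dist(p, q) for p, q in zip(track, track[1:])]
    return sum(steps) / len(steps)


def is_moving_object(track, speed_threshold):
    """Accept the track as a space object if its mean speed exceeds the threshold."""
    return mean_speed(track) > speed_threshold


# Hypothetical tracks: a star that only jitters and an object that drifts steadily.
star_track = [(10.0, 10.0), (10.1, 10.0), (10.0, 10.1), (10.0, 10.0)]
object_track = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0), (9.0, 12.0)]
SPEED_THRESHOLD = 1.0  # pixels/frame, an assumed value chosen for this example

print(is_moving_object(star_track, SPEED_THRESHOLD))
print(is_moving_object(object_track, SPEED_THRESHOLD))
```

The threshold here is exactly the kind of parameter that has to be tuned empirically, which is one of the drawbacks noted above.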

Over the past decade, deep learning has made great advances in computer vision [33], and deep convolutional neural networks have shown significant competence in video salient object detection (VSOD) [34,35,36]. The central principle of VSOD is to learn temporal dynamic cues related to moving objects, based on the fact that long-range dependencies exist across the space and time of consecutive frames [37,38]. Yan [39] proposed a non-locally enhanced temporal module to construct the spatiotemporal connection between the features of input video frames. Chen [34] developed a nonlocal self-attention scheme to capture the global information in the video frame; the intra-frame contrastive loss helps separate the foreground and background features, and the inter-frame contrastive loss improves temporal consistency. Su [37] introduced the transformer block to capture the long-range dependencies among group-based images through the self-attention mechanism and designed an intra-MLP learning module to avoid partial activation and further enhance the network. However, using VSOD directly for space debris detection is questionable, for the following reasons. (1) Because of the large distances involved, space debris appears very small in the images and is often referred to as a “small object.” Therefore, it is difficult for deep neural networks to extract the spatial features of space debris in videos. (2) The cluttered background generated by large numbers of stars, which appear as point sources, makes it difficult to distinguish space debris from the star background. (3) Various kinds of noise exist in space surveillance platforms, such as thermal noise, shot noise, dark current noise, and stray light. The energy of space debris is therefore very faint compared to the noise background, which also poses challenges for space debris detection.
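The self-attention mechanism that these VSOD methods use to capture long-range dependencies can be illustrated with a minimal scaled dot-product sketch. It is a simplification under stated assumptions, not the architecture of any cited work: the learned query, key, and value projections are replaced by the identity (Q = K = V), each "token" stands for a small feature vector taken from some position in some frame, and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections (Q = K = V = tokens)."""
    dim = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        row = softmax(scores)
        weights.append(row)
        # Each output is a weighted average of all tokens, regardless of their position.
        outputs.append(
            [sum(w * value[d] for w, value in zip(row, tokens)) for d in range(dim)]
        )
    return outputs, weights


# Hypothetical features: tokens 0 and 2 resemble each other (e.g. the same object
# seen in two frames), tokens 1 and 3 resemble the background.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
print(weights[0])
```

Because every token attends to every other token, similar features in different frames reinforce each other even when they are far apart. The cost grows quadratically with the number of tokens, which is one motivation for restricting attention to a local neighbourhood.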

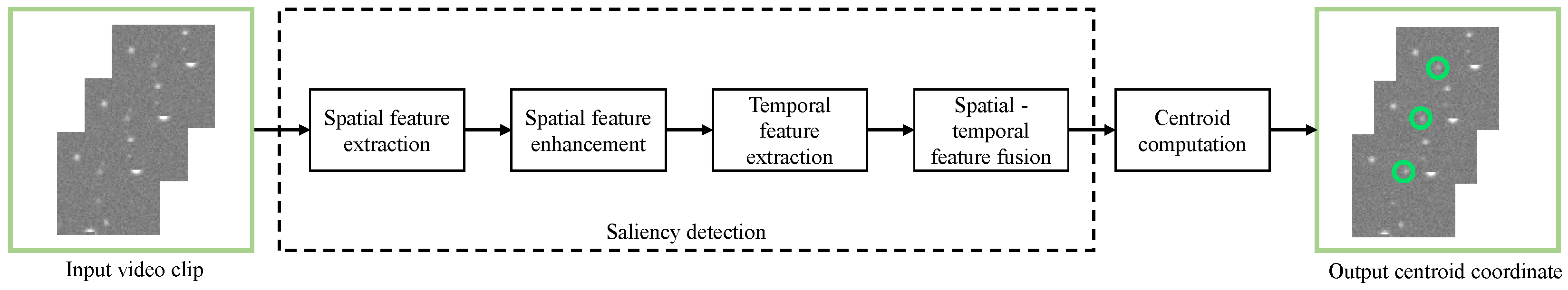

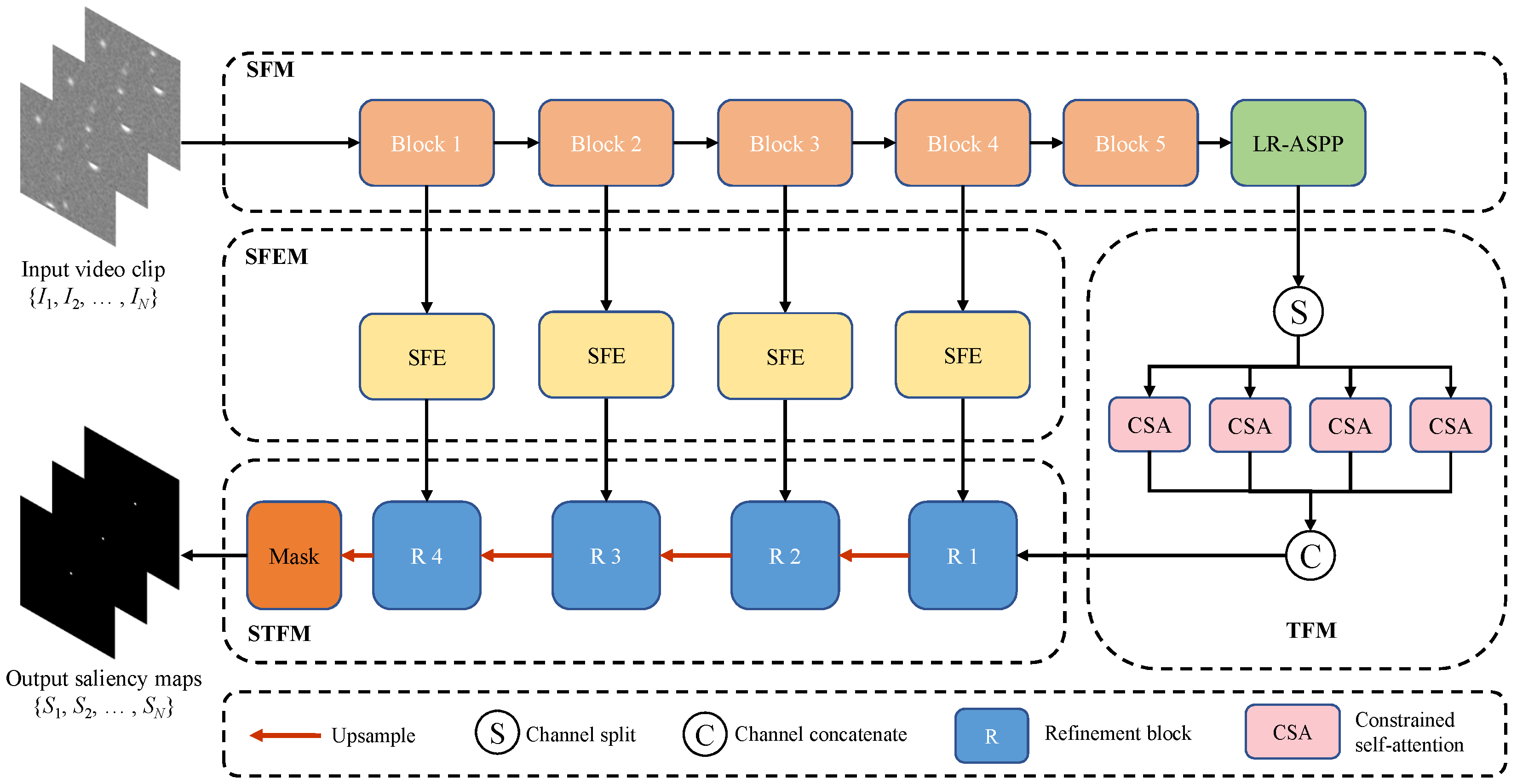

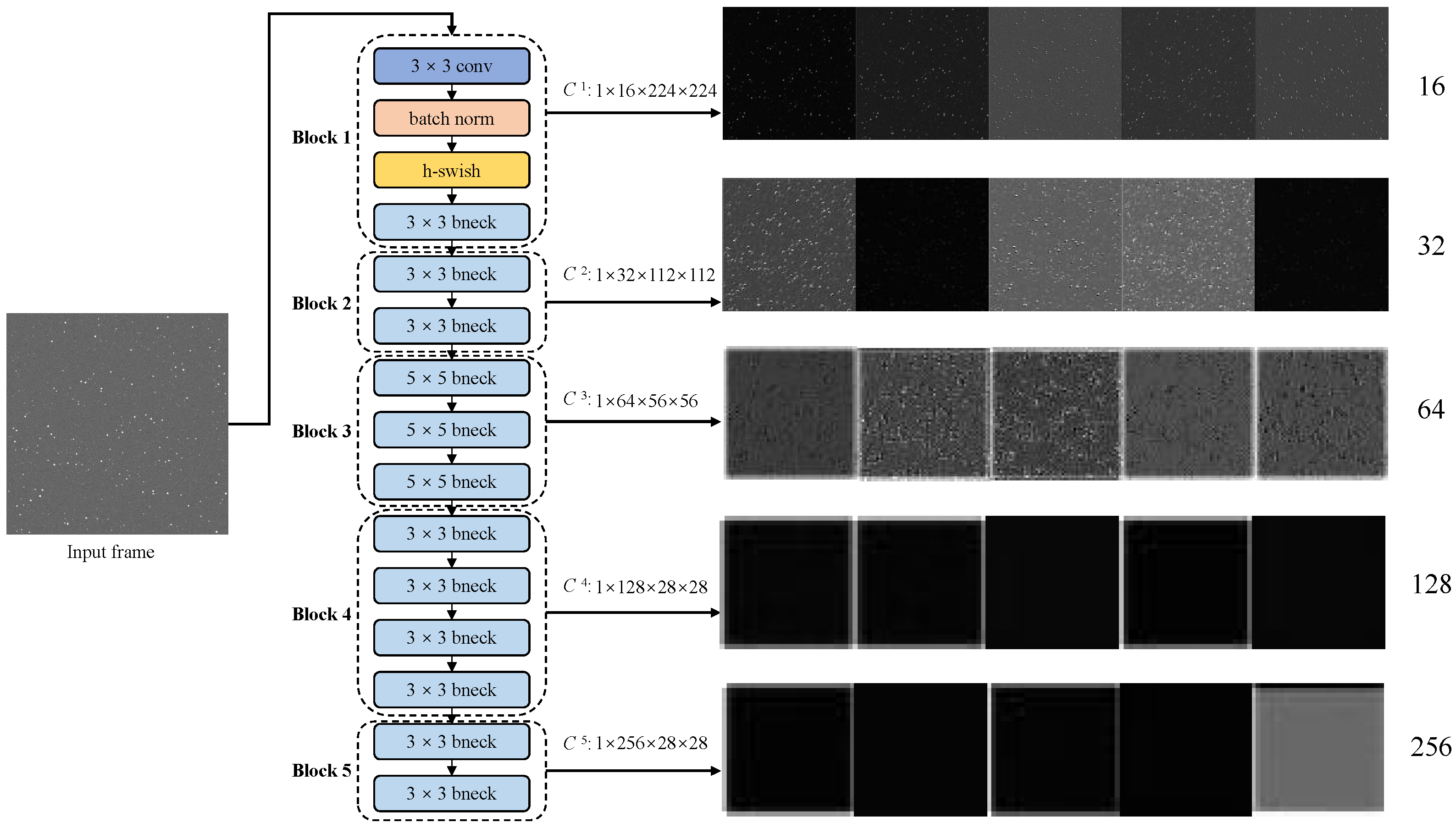

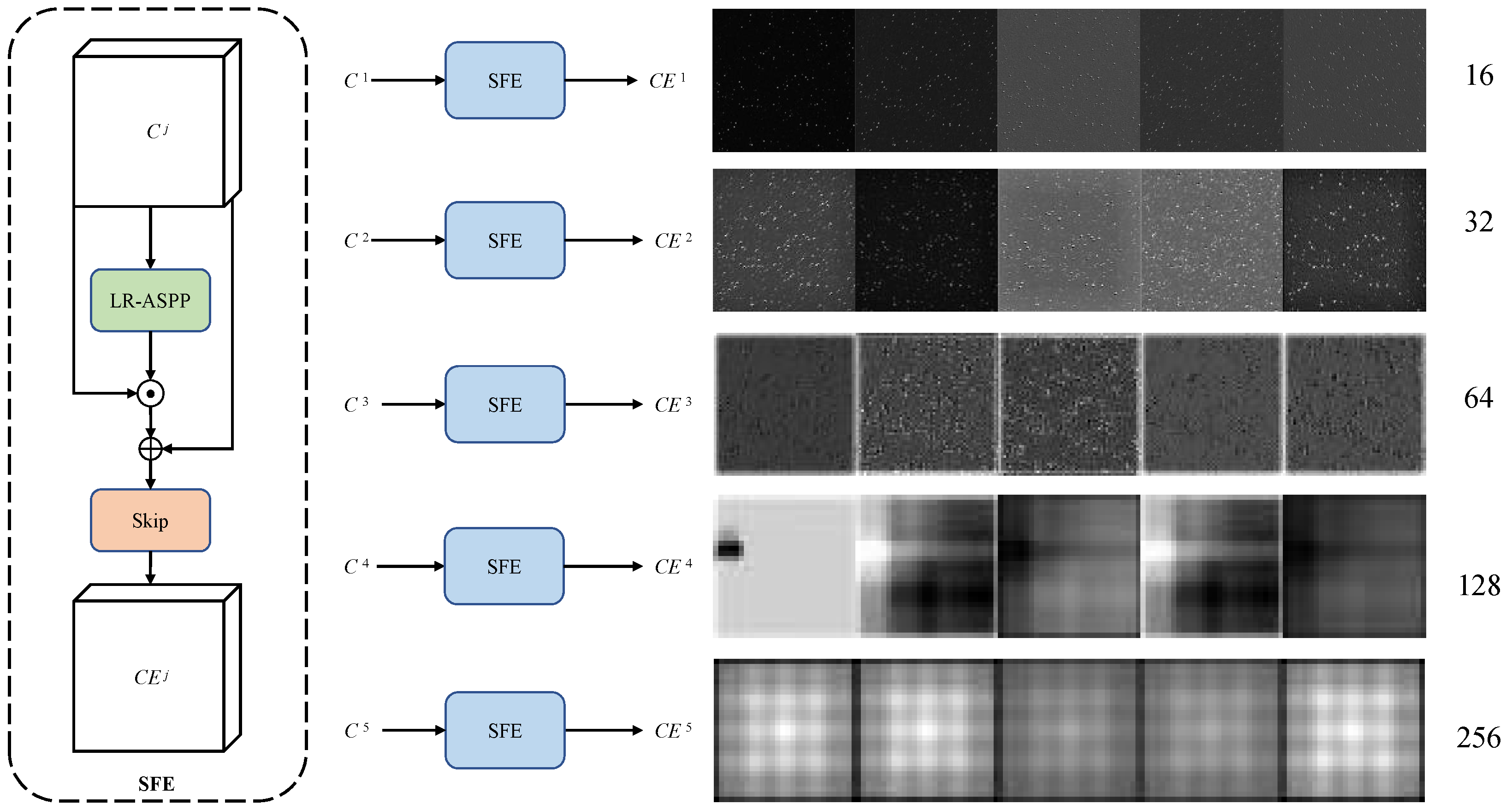

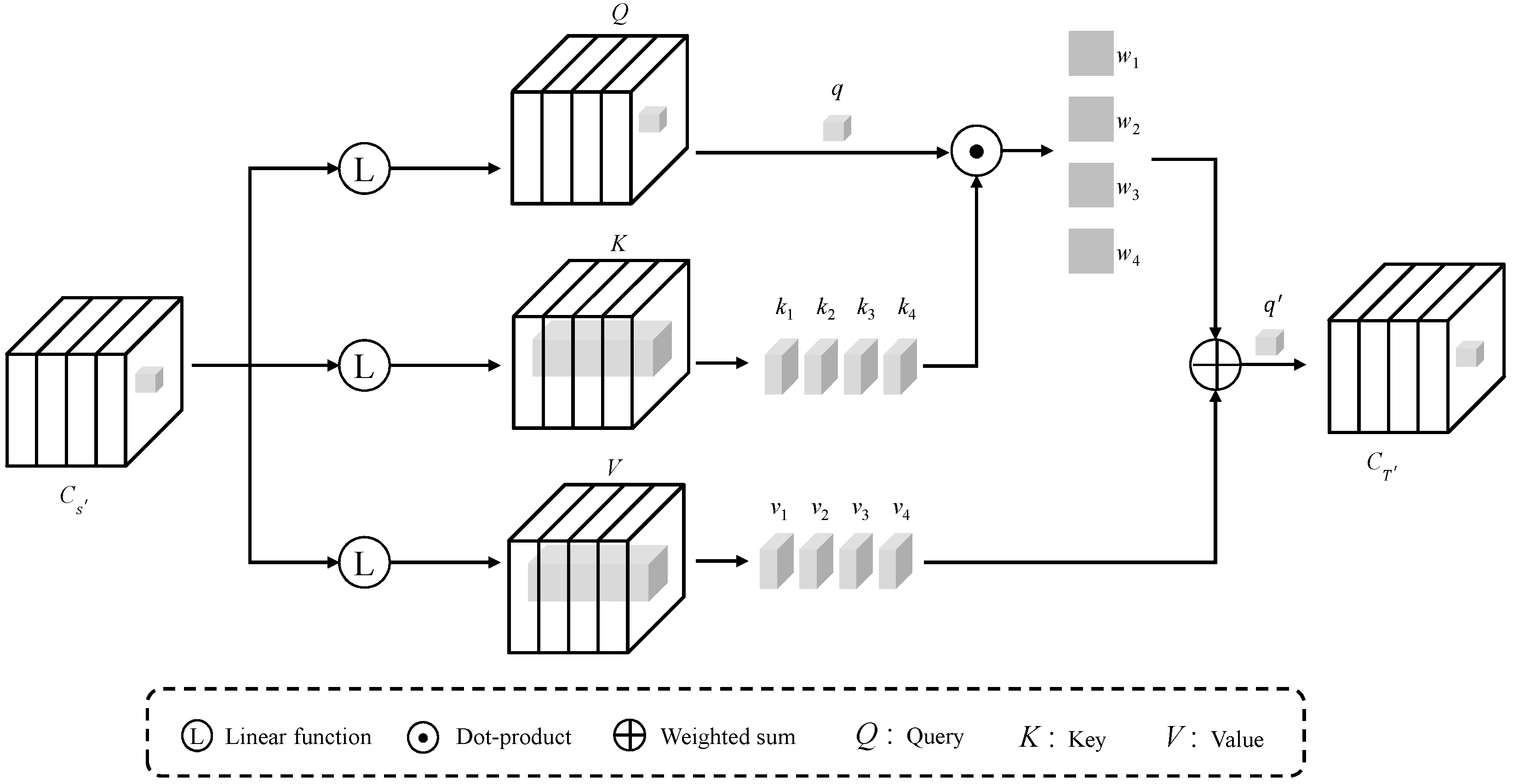

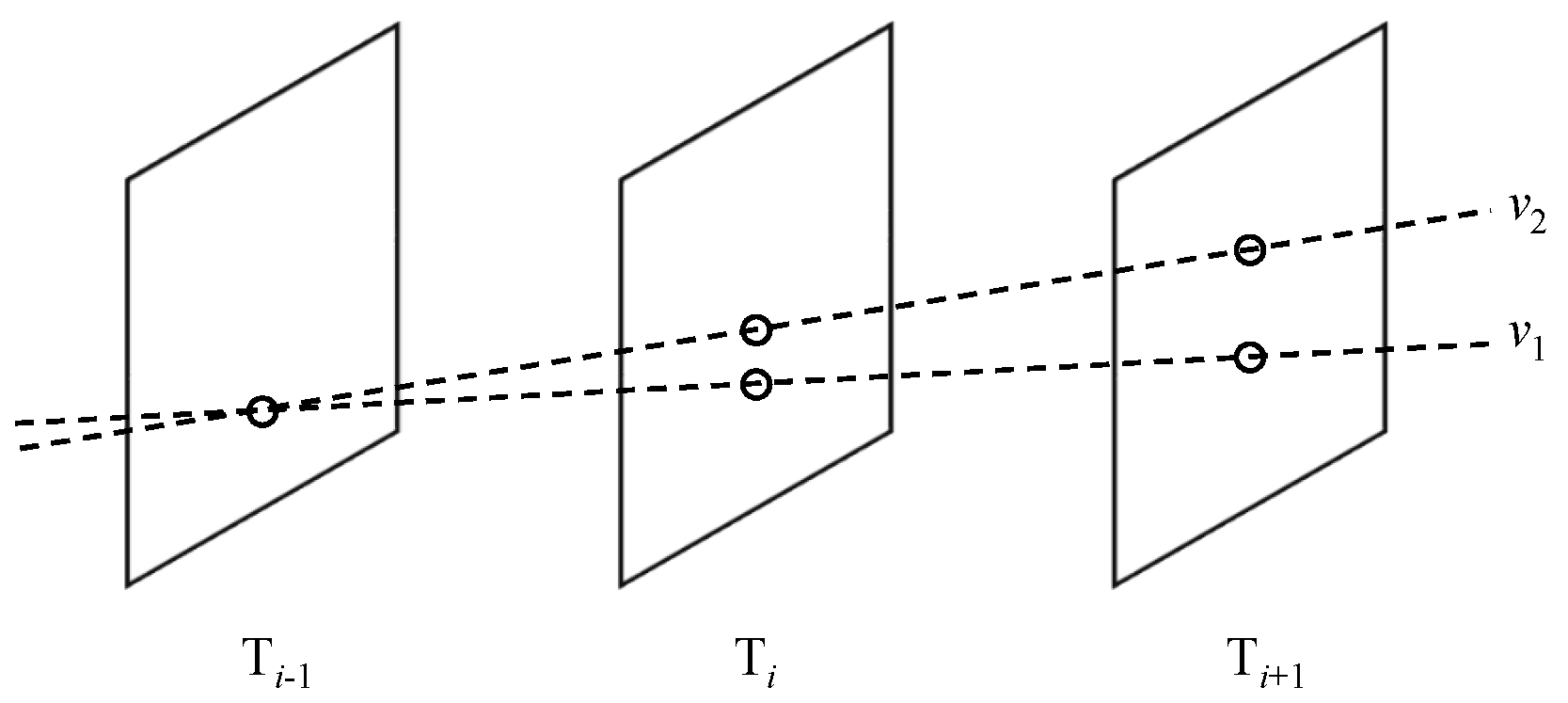

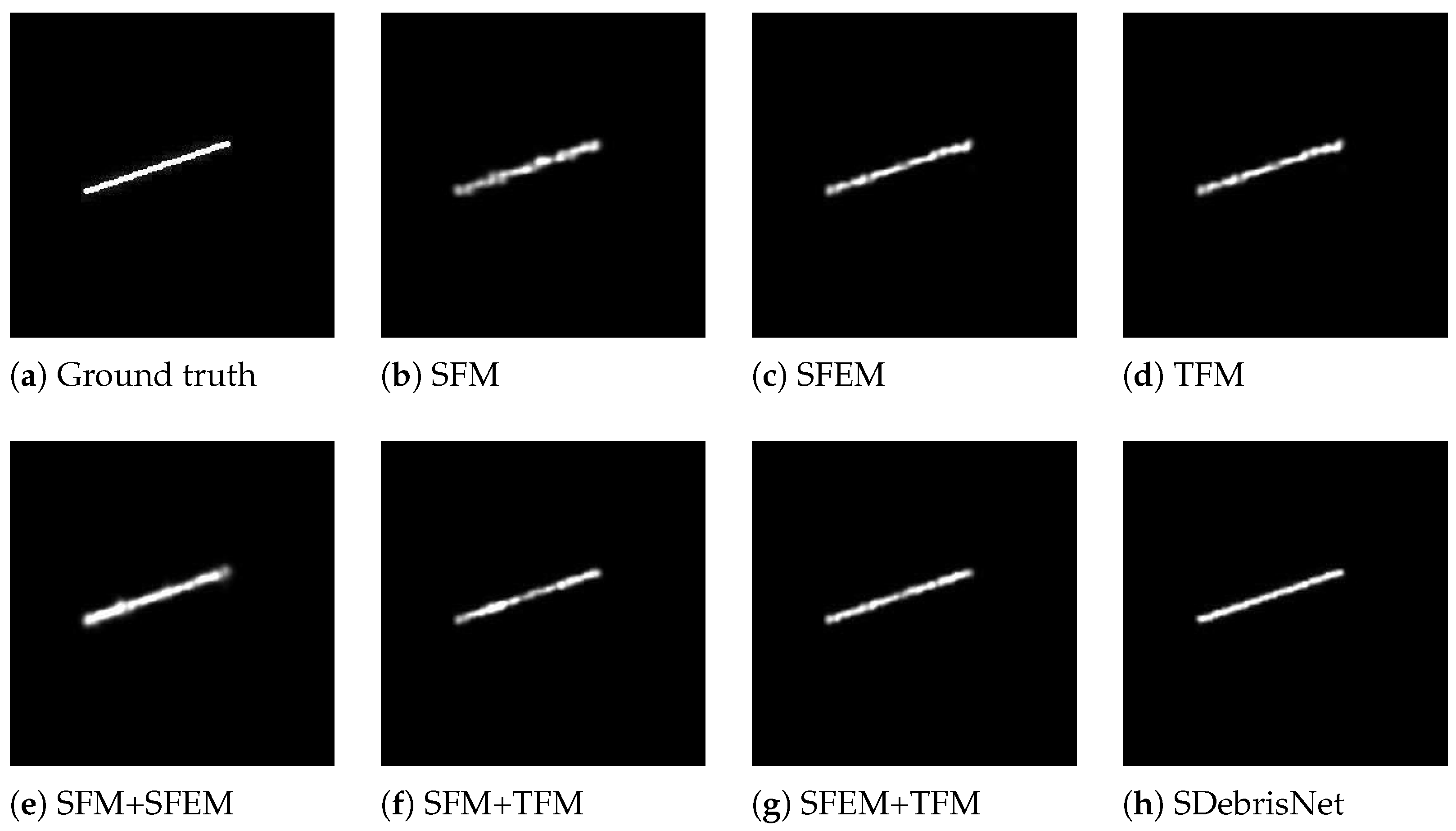

To address these difficulties, we propose a novel spatial–temporal saliency method called SDebrisNet for detecting space debris in satellite videos. It uses lightweight atrous spatial pyramid pooling and scale-enhanced structures to enhance context-aware and scale-aware features, while constrained self-attention helps capture local temporal features. First, a spatial feature extraction module (SFM) is proposed to improve the feature extraction performance for small objects; a high-resolution feature detector is added to the multi-scale feature extraction network to extract more specific features from the low-level feature map. Then, the spatial features of small objects are further strengthened by a spatial feature enhancement module (SFEM). Next, the spatial–temporal coherence is enhanced by a temporal feature extraction module (TFM). Finally, the saliency map of space debris is obtained by a spatial–temporal feature fusion module (STFM). The main contributions of this paper can be summarized as follows.

(1) In order to account for the unique characteristics of moving space debris in satellite videos, a spatial–temporal saliency framework was developed. This framework enables end-to-end space debris detection without the need for preprocessing or post-processing steps.

(2) SFM extracts the spatial features using a lightweight neural network, which is well-suited for space-based computational platforms with limited storage and computational resources.

(3) To deal with the small object detection problem, SFEM enhances both the context-aware features and the scale-aware features, which could significantly improve detection precision for objects at different scales, including small objects.

(4) TFM connects both the spatial features and the temporal features from consecutive frames. Even if the space debris data have a low signal-to-noise ratio, our method can effectively output the saliency maps.

The novelty of this paper can be summarized as follows: (1) A new space debris detection method based on spatial–temporal saliency is proposed. (2) A new saliency detection neural network based on constrained self-attention is proposed. (3) A new small object feature extraction network, including a spatial feature extraction network and a spatial feature enhancement network, is proposed.

The rest of the paper is organized as follows. Section 2 describes the proposed spatial–temporal saliency network architecture for space debris detection. The centroid computation method is introduced in Section 3. Section 4 presents the experimental setup. Section 5 presents experimental studies for verifying the proposed space debris detection method, including comparisons with current space object detection algorithms. Finally, we conclude our research in Section 6.

6. Conclusions

In this paper, a novel spatial–temporal saliency-based approach was proposed for space debris detection. The proposed approach uses deep learning techniques to output the saliency maps and compute the centroid coordinates of the space debris from satellite video sequences. The approach achieves end-to-end saliency detection of space debris without the multiple processing steps of traditional methods. Moreover, because the model generalizes well to unseen space debris, owing to the self-contained training samples, it does not require a priori motion information about the space debris.

First, a lightweight spatial feature network was established to make the inference model suitable for deployment on onboard devices with limited storage space. Based on the lightweight backbone, a spatial feature enhancement network was created by capturing both the image-level global context and the multi-scale spatial context. The experimental results show that the spatial feature enhancement network can extract more complex semantic features and more complete shape features of small objects. Most importantly, a temporal feature extraction network was introduced by establishing the pair-wise relationships among feature elements in consecutive frames. This enables our method to detect space debris with a curved motion track, owing to the highly correlated temporal information in consecutive frames of satellite video. In addition, a public satellite video dataset for space debris detection was created to evaluate the proposed method. The dataset accounts for the motion speed, motion direction, diameter, and SNR of space debris. The results of the detection performance experiments show that both the detection probability and the false alarm rate achieved by our method are much better than those of the single-frame-based method and the multi-frame-based method. In the sensitivity analysis experiment, the best video clip length for each batch in the training stage was found to be 4. The ablation study demonstrates that the model size of our method is largely reduced compared to current saliency detection models. Moreover, we also tested the robustness of the proposed method on the SDD dataset with different SNRs. When the SNR of space debris is approximately 0.1, the centroid error of the space debris extracted by our method does not exceed 0.9 pixels. Finally, we tested our method on two more challenging real video datasets. The results showed that our method works well against space backgrounds with significant intensity variations, curved motion tracks, and stray light noise. The advantages of the proposed method can be summarized as follows. (1) The proposed method achieves end-to-end space debris detection without multiple preprocessing steps. (2) It can detect both the linear and curved movements of space debris without a priori information. (3) It can detect space debris with an SNR as low as 0.1. The main disadvantage of the proposed method is that it requires the creation of a video dataset covering different SNRs and space debris movements.
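To make the centroid error figure above concrete, the following sketch computes an intensity-weighted centroid from a small saliency map and measures its Euclidean distance to a reference position. This is a common textbook formulation and only an assumption here; the centroid computation actually used in this paper is the one described in Section 3, and the function names, threshold, and toy map below are hypothetical.

```python
import math


def weighted_centroid(image, threshold=0.0):
    """Intensity-weighted centroid (x, y) of all pixels above `threshold`."""
    total = sum_x = sum_y = 0.0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value > threshold:
                total += value
                sum_x += value * x
                sum_y += value * y
    if total == 0.0:
        return None  # nothing above the threshold, so no centroid is defined
    return sum_x / total, sum_y / total


def centroid_error(estimated, truth):
    """Euclidean distance in pixels between estimated and reference centroids."""
    return math.dist(estimated, truth)


# Toy saliency map with a small symmetric blob centred on pixel (2, 2).
saliency = [
    [0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0, 0.0],
    [0.0, 1.0, 4.0, 1.0, 0.0],
    [0.0, 0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
]
estimate = weighted_centroid(saliency)
print(estimate, centroid_error(estimate, (2.0, 2.5)))
```

Because the weights come from pixel intensities, the estimate can fall between pixel centres, which is why centroid errors are reported in fractions of a pixel.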

The next step in our research will involve testing our method on an embedded platform with the same computational efficiency and storage capacity as the onboard computers.