Analysis of Airglow Image Classification Based on Feature Map Visualization

Abstract

1. Introduction

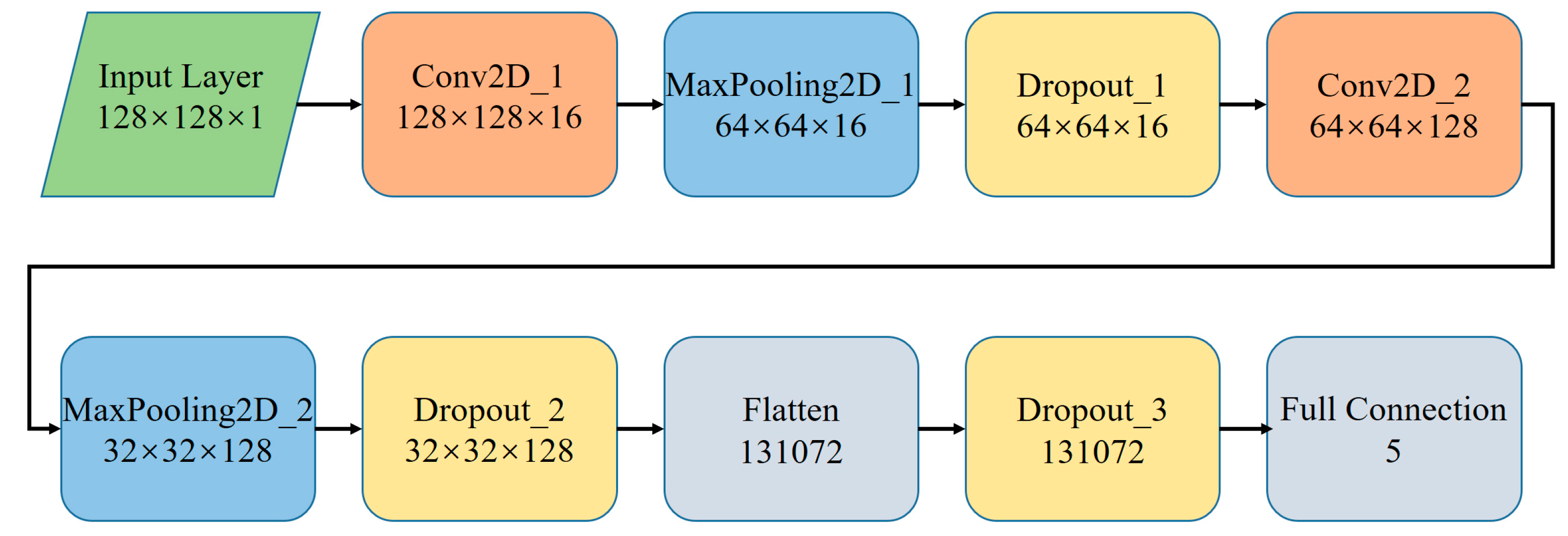

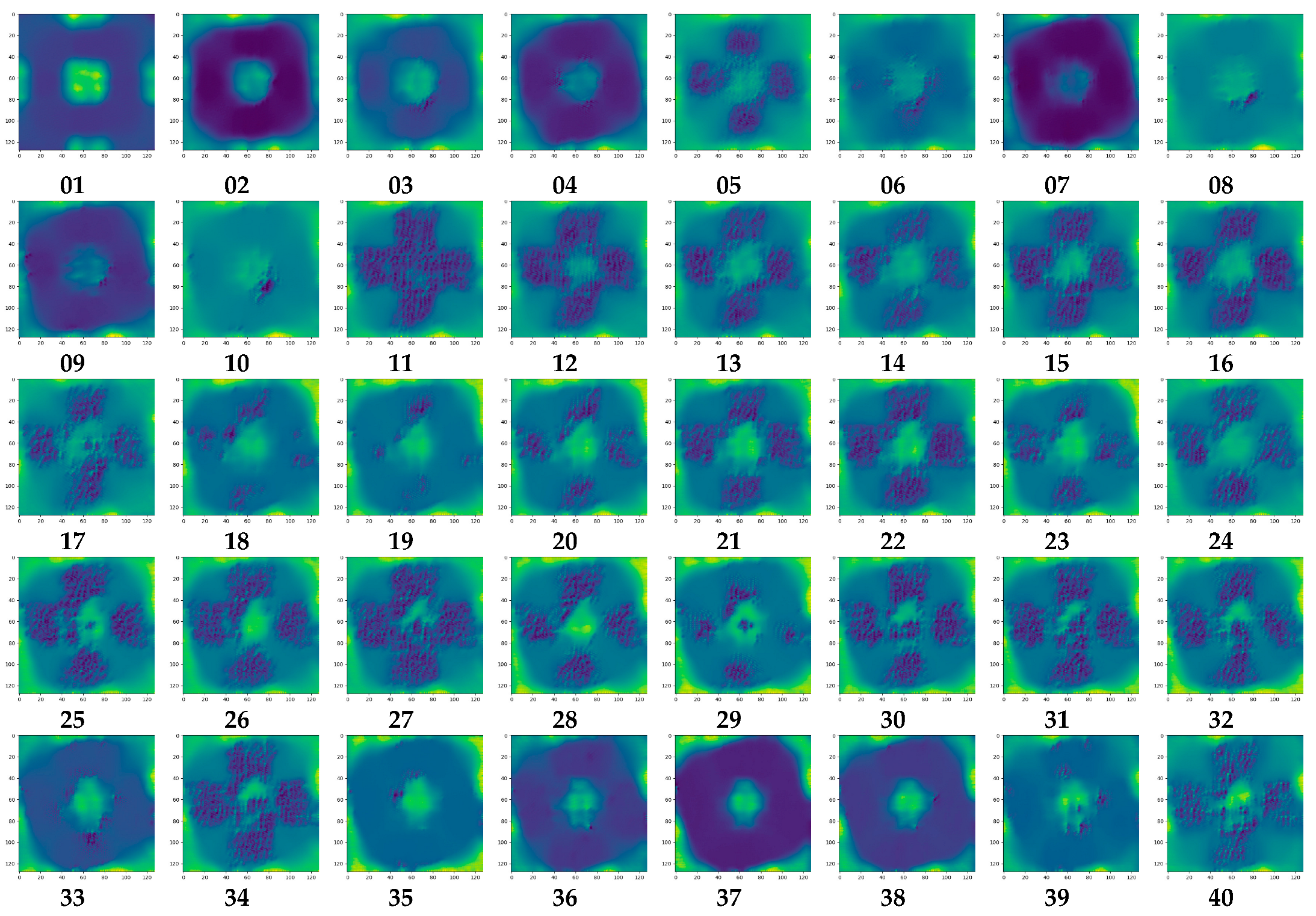

2. Structure of the Convolutional Neural Network

3. Experiment

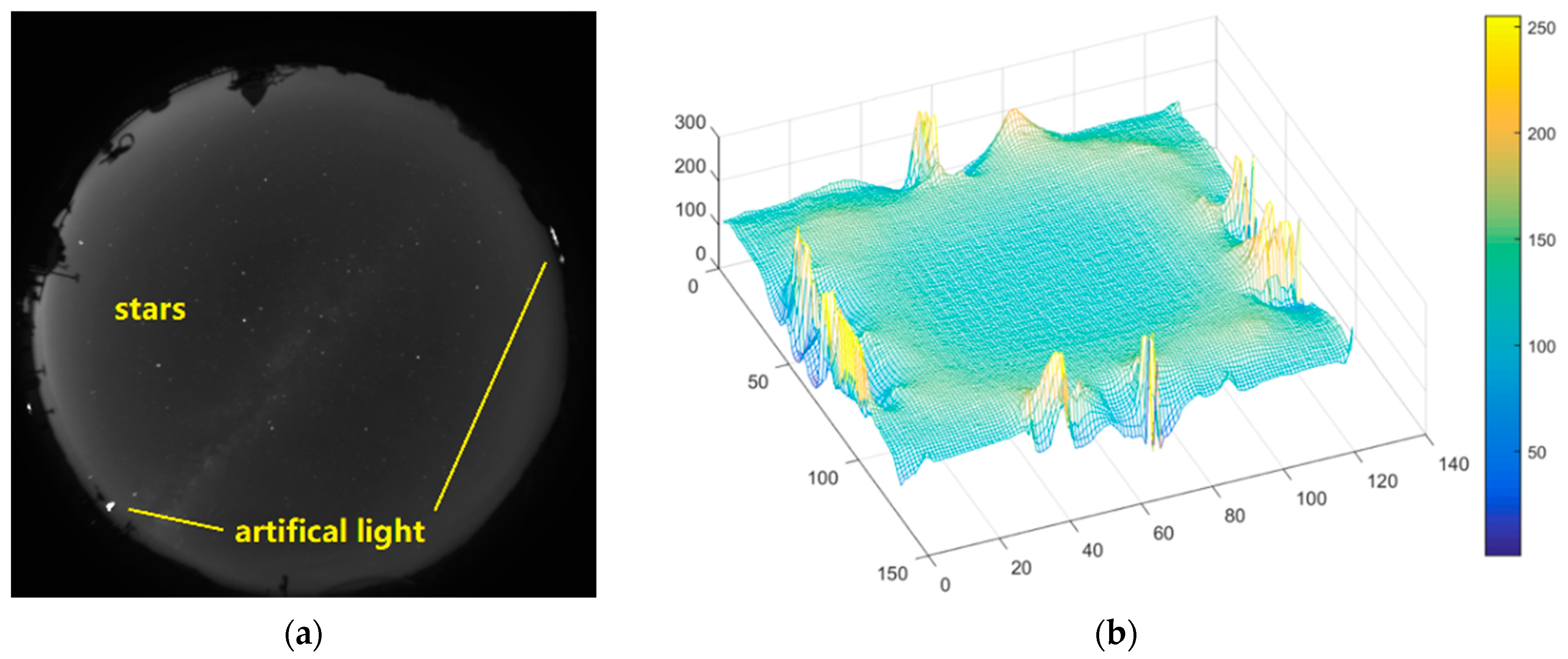

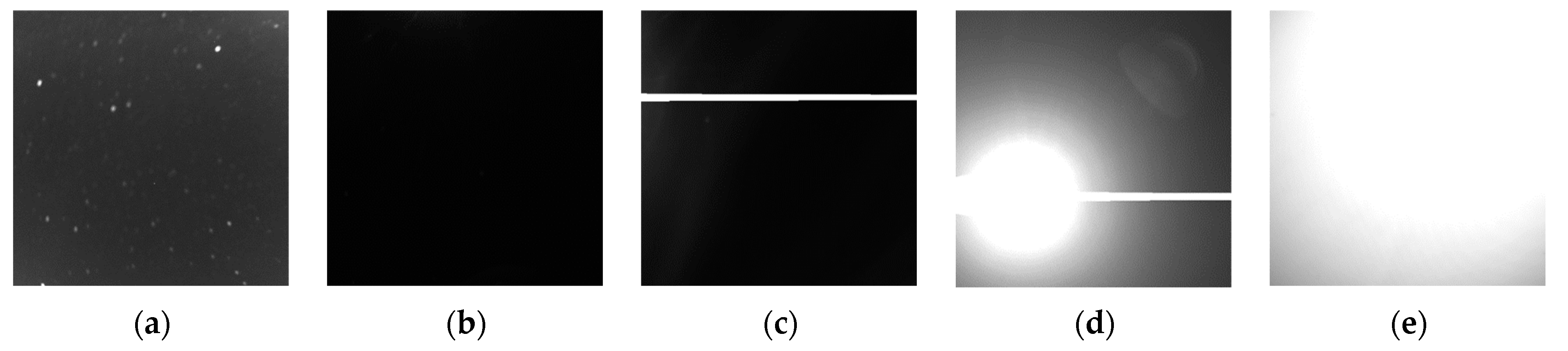

3.1. Data Sets

3.2. Learning Process

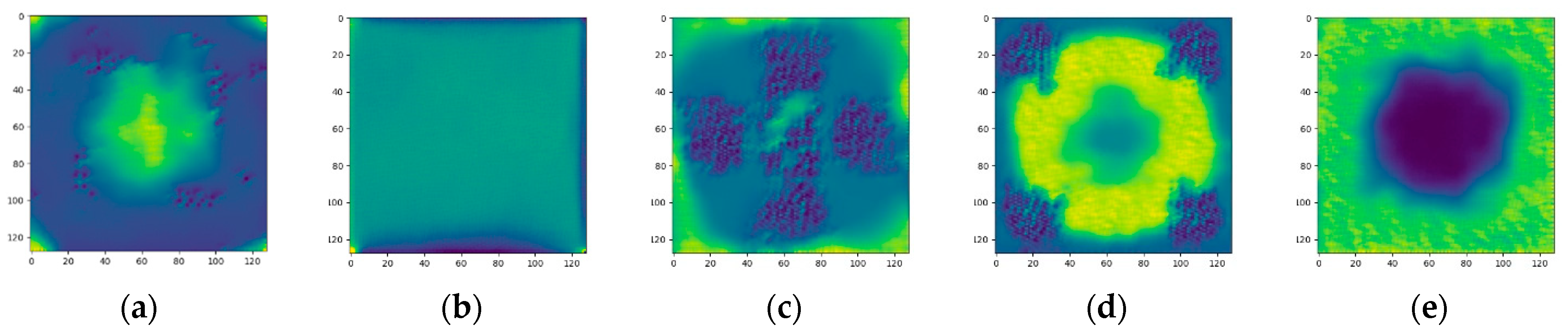

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Airglow image labels, their definitions, and the number of images in the training and validation sets.

| Label | Description | Images (Training/Validation) |
|---|---|---|
| clear night | Stars are clearly visible; there are no apparent intense light sources other than stars. | 457/105 |
| overcast sky | No light is visible; the image is completely dark. | 405/82 |
| light band | There are obvious, non-negligible intense light sources other than stars, such as a light band caused by intense moonlight. | 103/22 |
| moon | Stars are hard to discern because intense moonlight covers extensive areas, but darker areas remain in the image. | 361/72 |
| twilight | Stars cannot be recognized because of the extremely intense sunlight; the image is completely white. | 67/14 |
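The class counts in the table are imbalanced: light band and twilight images are far rarer than clear night or overcast sky images. The short sketch below is not the authors' method; it only tabulates the split and computes inverse-frequency class weights, a common heuristic for weighting a classifier's loss on imbalanced data. The names `COUNTS`, `split_summary`, and `inverse_frequency_weights` are hypothetical, and only the counts come from the table.

```python
# Per-label image counts (training, validation), transcribed from the table above.
COUNTS = {
    "clear night": (457, 105),
    "overcast sky": (405, 82),
    "light band": (103, 22),
    "moon": (361, 72),
    "twilight": (67, 14),
}


def split_summary(counts):
    """Return total training images, total validation images, and each label's validation share."""
    train_total = sum(n_train for n_train, _ in counts.values())
    val_total = sum(n_val for _, n_val in counts.values())
    val_share = {
        label: n_val / (n_train + n_val) for label, (n_train, n_val) in counts.items()
    }
    return train_total, val_total, val_share


def inverse_frequency_weights(counts):
    """Weight each label by n_train_total / (n_classes * n_train_label).

    This 'balanced' heuristic is an illustrative assumption: rarer labels get a
    larger weight, and weight * per-label count summed over labels equals n_train_total.
    """
    train_counts = {label: n_train for label, (n_train, _) in counts.items()}
    n_total = sum(train_counts.values())
    n_classes = len(train_counts)
    return {label: n_total / (n_classes * n) for label, n in train_counts.items()}


if __name__ == "__main__":
    train_total, val_total, val_share = split_summary(COUNTS)
    # prints "training: 1393, validation: 295"
    print(f"training: {train_total}, validation: {val_total}")
    for label, weight in inverse_frequency_weights(COUNTS).items():
        print(f"{label:>12}: validation share {val_share[label]:.1%}, weight {weight:.2f}")
```

Run as a script, it reports 1,393 training and 295 validation images. Each label keeps roughly 17 to 19% of its images for validation, and the twilight weight comes out about 6.8 times the clear night weight (457/67). These weights are illustrative only; the table does not indicate whether any class weighting was applied during training.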


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, Z.; Wang, Q.; Lai, C. Analysis of Airglow Image Classification Based on Feature Map Visualization. Appl. Sci. 2023, 13, 3671. https://doi.org/10.3390/app13063671
