Joint Syntax-Enhanced and Topic-Driven Graph Networks for Emotion Recognition in Multi-Speaker Conversations

Abstract

1. Introduction

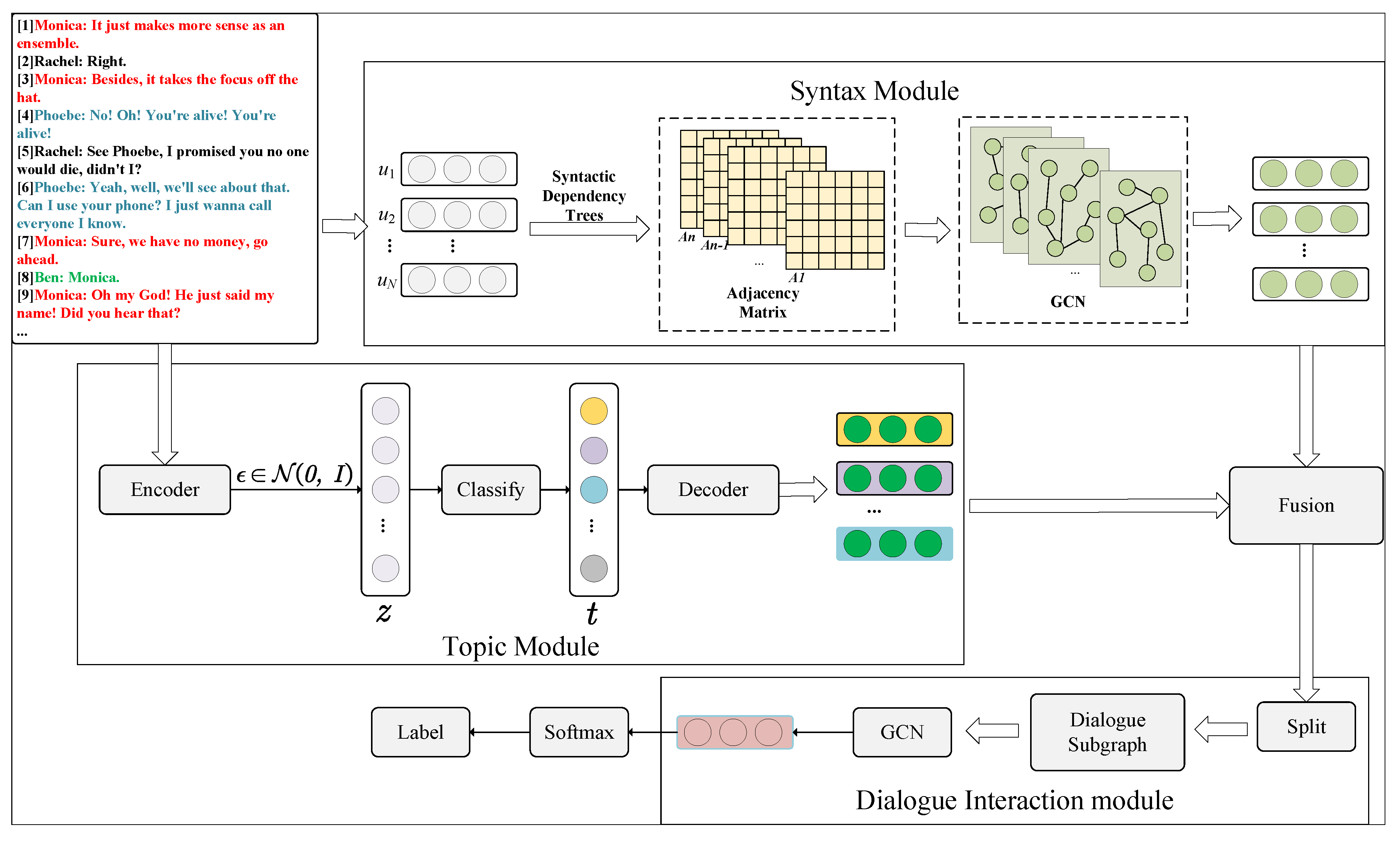

- We propose SETD-ERC, a joint syntax-enhanced and topic-driven graph network for emotion recognition in multi-speaker dialogues, which jointly learns the syntactic information and the topic information of a dialogue.

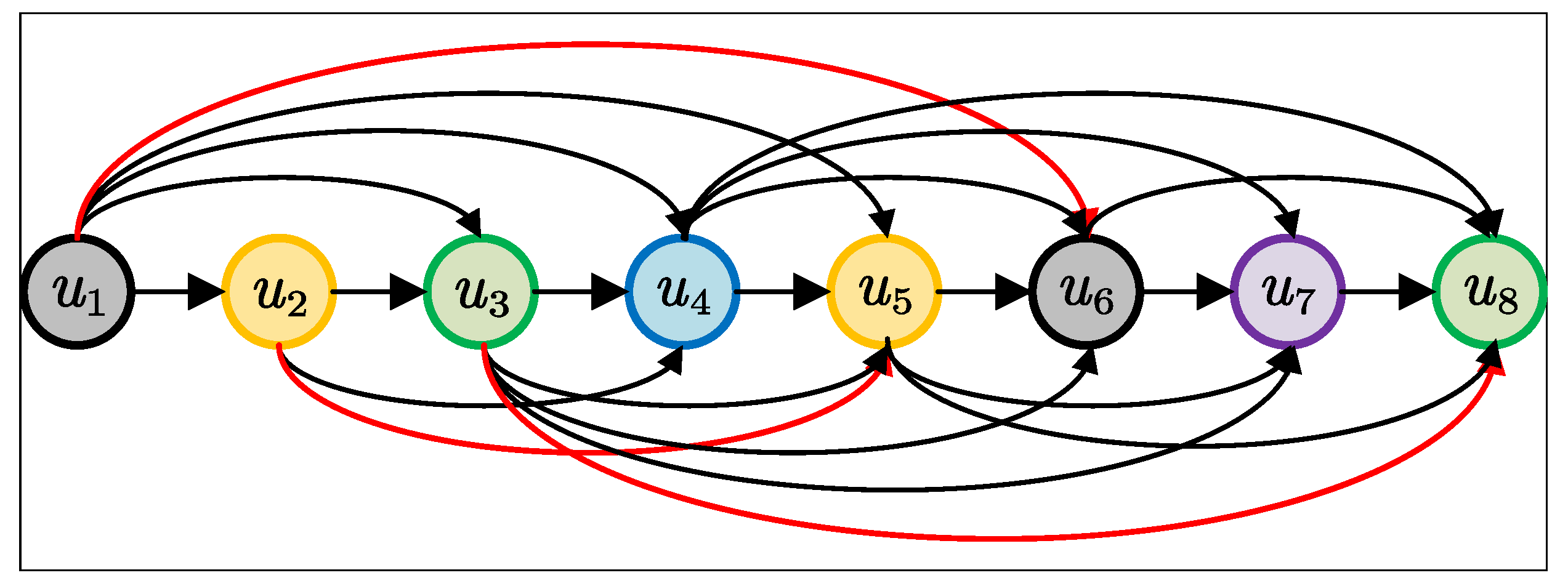

- We use topic segmentation to model each dialogue as a dialogue graph that carries both speaker-interaction information and contextual information, so that information can be propagated iteratively across the utterances of a multi-speaker conversation.

- We validate our model on four datasets (IEMOCAP, MELD, DailyDialog, and EmoryNLP). It outperforms all compared baselines on IEMOCAP, MELD, and EmoryNLP, and ranks second to DAG-ERC on DailyDialog.
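To make the idea of a dialogue graph restricted by topic segments more concrete, the following is a minimal sketch and not the construction used in the paper. The `Utterance` class, the `build_dialogue_graph` function, the `window` parameter, the two relation names, and the topic ids assigned to the toy dialogue are all assumptions introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    speaker: str
    text: str
    topic: int  # hypothetical topic-segment id produced by an upstream segmenter


def build_dialogue_graph(utterances, window=2):
    """Return directed edges (src, dst, relation) between utterance indices.

    Assumption: each utterance receives edges from at most `window` earlier
    utterances that fall in the same topic segment.
    """
    edges = []
    for dst, target in enumerate(utterances):
        linked = 0
        # Walk backwards so the closest context is linked first.
        for src in range(dst - 1, -1, -1):
            if linked == window:
                break
            source = utterances[src]
            if source.topic != target.topic:
                continue  # ignore utterances from other topic segments
            same = source.speaker == target.speaker
            edges.append((src, dst, "same_speaker" if same else "other_speaker"))
            linked += 1
    return edges


# Toy dialogue; the topic ids are made up for this example.
dialogue = [
    Utterance("Phoebe", "Hey.", topic=0),
    Utterance("Rachel", "Hey Phoebs, whatcha got there?", topic=0),
    Utterance("Phoebe", "Ok, Love Story, Brian's Song, and Terms of Endearment.", topic=0),
    Utterance("Monica", "So'd you guys have fun?", topic=1),
    Utterance("Chandler", "Your boyfriend is so cool.", topic=1),
]
edges = build_dialogue_graph(dialogue)
for edge in edges:
    print(edge)
```

In this sketch the edge relation encodes interaction information (who is replying to whom), while the topic restriction decides which earlier utterances count as context. A graph network could then use a separate set of weights per relation type.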

2. Related Work

2.1. Feature Learning in Emotion Recognition

2.2. Emotion Recognition in Conversations

3. Methodology

3.1. Problem Definition

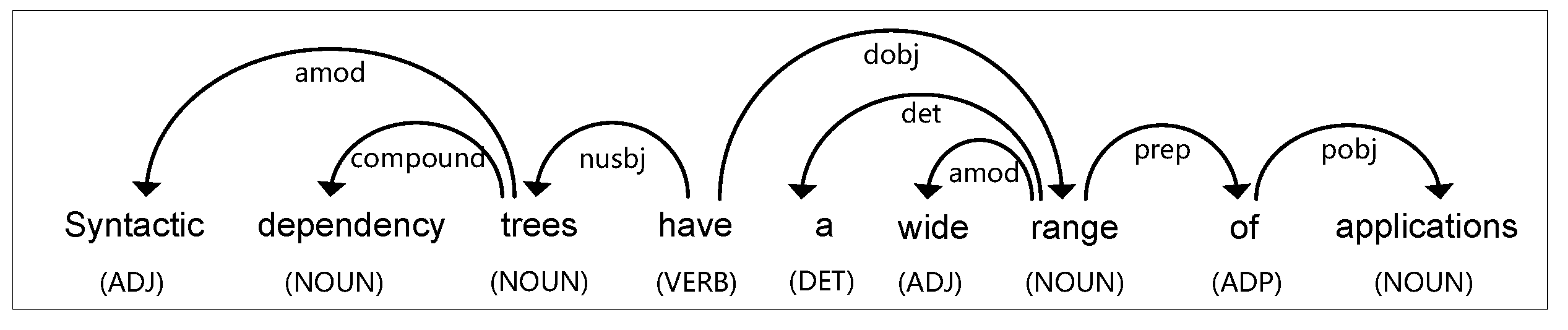

3.2. Syntax Module

Algorithm 1. Syntactic information construction algorithm.

3.3. Topic Module

3.4. Dialogue Interaction Module

Algorithm 2. Conversation subgraph construction algorithm.

3.5. Objective Function

4. Experimental Setup

4.1. Datasets

4.2. Experimental Details

4.3. Baselines

5. Experimental Results and Analysis

5.1. Analysis of Comparative Experimental Results

5.2. Ablation Study

5.3. Case Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Token | Part of Speech | Tag | Relationship Type |
|---|---|---|---|
| NOUN | Noun: words that specify a person, place, thing, animal, or idea. | amod | adjectival modifier |
| ADJ | Adjective: words that typically modify nouns. | compound | compound word |
| VERB | Verb: words that signal events and actions. | nsubj | nominal subject |
| DET | Determiner: articles and other words that specify a particular noun phrase. | dobj | direct object |
| ADP | Adposition: the head of a prepositional or postpositional phrase. | det | determiner |
| | | prep | prepositional modifier |
| | | pobj | prepositional object |
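Dependency relations such as `amod`, `det`, and `dobj` can be turned into a token-level adjacency matrix that a graph network consumes. The sketch below illustrates that step under stated assumptions: the parse is written by hand rather than produced by a parser, and the function name `syntactic_adjacency`, the undirected edges, and the self-loops are illustrative choices, not details confirmed by the paper.

```python
# Hand-written dependency parse (an assumption for illustration, not parser output).
# Each entry: (token, part-of-speech tag, index of the head token, dependency label).
parse = [
    ("The", "DET", 2, "det"),
    ("tired", "ADJ", 2, "amod"),
    ("actor", "NOUN", 3, "nsubj"),
    ("read", "VERB", 3, "ROOT"),  # the root token points to itself
    ("the", "DET", 6, "det"),
    ("whole", "ADJ", 6, "amod"),
    ("script", "NOUN", 3, "dobj"),
]


def syntactic_adjacency(parse, self_loops=True):
    """Build a symmetric 0/1 adjacency matrix from head-dependent arcs."""
    n = len(parse)
    adj = [[0] * n for _ in range(n)]
    for child, (_, _, head, label) in enumerate(parse):
        if label != "ROOT":
            # Treat each dependency arc as an undirected edge.
            adj[child][head] = 1
            adj[head][child] = 1
    if self_loops:
        for i in range(n):
            adj[i][i] = 1  # let every token keep its own features
    return adj


adj = syntactic_adjacency(parse)
for (token, *_), row in zip(parse, adj):
    print(f"{token:>7}", row)
```

Each row marks the tokens that are syntactically connected to the row's token, so "actor" is linked to "The", "tired", and "read" even though "The" and "actor" are not adjacent in the sentence.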

| Dataset | Conversations (Train) | Conversations (Val) | Conversations (Test) | Utterances (Train) | Utterances (Val) | Utterances (Test) |
|---|---|---|---|---|---|---|
| IEMOCAP | 120 (Train + Val) | | 31 | 5810 (Train + Val) | | 1623 |
| MELD | 1038 | 114 | 280 | 9989 | 1109 | 2610 |
| DailyDialog | 11,118 | 1000 | 1000 | 87,170 | 8069 | 7740 |
| EmoryNLP | 713 | 99 | 85 | 9934 | 1344 | 1328 |

| Model | IEMOCAP (Weighted-Avg-F1) | DailyDialog (Micro-F1) | MELD (Weighted-Avg-F1) | EmoryNLP (Weighted-Avg-F1) |
|---|---|---|---|---|
| HiGRU (2019) | 59.79 | 52.01 | 56.92 | 31.88 |
| DialogueRNN (2019) | 62.75 | 50.65 | 57.03 | 31.27 |
| COSMIC (2020) | 63.05 | 56.16 | 64.28 | 37.10 |
| DAG-ERC (2021) | 68.03 | 59.33 | 63.65 | 39.02 |
| ERMC-DisGCN (2021) | 64.1 | * | 64.22 | 36.38 |
| DialogueGCN (2019) | 64.18 | * | 58.1 | 33.85 |
| DialogXL (2021) | 65.94 | 54.93 | 62.41 | 34.73 |
| DialogueCRN (2021) | 66.2 | * | 58.39 | * |
| CoG-BART (2022) | 66.18 | 56.29 | 64.81 | 39.04 |
| Ours | 70.92 | 57.07 | 66.13 | 40.97 |
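The two metrics in the table aggregate per-class results differently: weighted-average F1 averages the per-class F1 scores weighted by class support, while micro-F1 pools true positives, false positives, and false negatives before computing a single F1. The sketch below shows both on made-up labels. The function names and the toy data are assumptions, and the optional `exclude` argument is included only because some evaluations leave out a majority class; this text does not state whether that applies here.

```python
from collections import Counter


def per_label_counts(gold, pred):
    """Count true positives, false positives, and false negatives per label."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # predicted label was wrong
            fn[g] += 1  # gold label was missed
    return tp, fp, fn


def f1(tp, fp, fn):
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def weighted_f1(gold, pred):
    """Per-class F1 averaged with weights equal to each class's support."""
    tp, fp, fn = per_label_counts(gold, pred)
    support = Counter(gold)
    total = sum(support[c] * f1(tp[c], fp[c], fn[c]) for c in support)
    return total / len(gold)


def micro_f1(gold, pred, exclude=()):
    """F1 over pooled counts, optionally ignoring the labels in `exclude`."""
    tp, fp, fn = per_label_counts(gold, pred)
    labels = (set(gold) | set(pred)) - set(exclude)
    return f1(sum(tp[c] for c in labels),
              sum(fp[c] for c in labels),
              sum(fn[c] for c in labels))


# Toy labels, invented for this example.
gold = ["neutral", "neutral", "joyful", "mad", "mad", "sad"]
pred = ["neutral", "joyful", "joyful", "mad", "neutral", "sad"]

print(round(weighted_f1(gold, pred), 4))
print(round(micro_f1(gold, pred), 4))
print(round(micro_f1(gold, pred, exclude=["neutral"]), 4))
```

Because the two metrics weight classes differently, scores reported with different metrics are not directly comparable across the table's columns.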

| Model | IEMOCAP (Weighted-Avg-F1) | DailyDialog (Micro-F1) | MELD (Weighted-Avg-F1) | EmoryNLP (Weighted-Avg-F1) |
|---|---|---|---|---|
| Ours | 70.92 | 57.07 | 66.13 | 40.97 |
| -syntax | 69.02 | 55.14 | 64.98 | 39.75 |
| -topic | 67.10 | 55.89 | 65.16 | 37.52 |
| -syntax, topic | 66.63 | 54.93 | 64.04 | 37.01 |
| -dialogue graph | 61.81 | 55.18 | 63.37 | 35.59 |
| | 59.45 | 53.12 | 56.21 | 33.15 |
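The ablation scores are easier to compare as drops relative to the full model. The short sketch below computes those differences from the values in the table. The variable names are illustrative, and the final unlabeled row is left out because its label is not recoverable from the table.

```python
# Scores copied from the ablation table (F1, in percentage points).
full = {"IEMOCAP": 70.92, "DailyDialog": 57.07, "MELD": 66.13, "EmoryNLP": 40.97}
ablations = {
    "-syntax": {"IEMOCAP": 69.02, "DailyDialog": 55.14, "MELD": 64.98, "EmoryNLP": 39.75},
    "-topic": {"IEMOCAP": 67.10, "DailyDialog": 55.89, "MELD": 65.16, "EmoryNLP": 37.52},
    "-syntax, topic": {"IEMOCAP": 66.63, "DailyDialog": 54.93, "MELD": 64.04, "EmoryNLP": 37.01},
    "-dialogue graph": {"IEMOCAP": 61.81, "DailyDialog": 55.18, "MELD": 63.37, "EmoryNLP": 35.59},
}

# Drop = full-model score minus ablated score, rounded to two decimals.
drops = {
    name: {ds: round(full[ds] - score, 2) for ds, score in scores.items()}
    for name, scores in ablations.items()
}

for name, per_dataset in drops.items():
    print(f"{name:<16}", per_dataset)

# Which labeled ablation hurts most on each dataset?
largest = {ds: max(drops, key=lambda name: drops[name][ds]) for ds in full}
print(largest)
```

Among the labeled variants, this makes it visible that removing the dialogue graph costs the most on IEMOCAP, MELD, and EmoryNLP, whereas on DailyDialog the largest drop comes from removing syntax and topic together.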

| Num | ID | Speaker | Text | Emotion | Prediction |
|---|---|---|---|---|---|
| 1 | 08 | Joey Tribbiani | I know...watch Green Acres the way it was meant to be seen. | Joyful | Joyful |
| | 09 | Joey Tribbiani | Uh-huh. | Joyful | Joyful |
| 2 | 03 | Chandler Bing | Get out! | Mad | Mad |
| | ... | | | | |
| | 07 | Chandler Bing | Uh-huh. | Mad | Mad |
| 3 | 03 | Casting Guy | Excuse me, that’s 50 bucks? | Mad | Mad |
| | ... | | | | |
| | 06 | Joey Tribbiani | Ohh, you know what it is? ...they’d send over the whole script on real paper and everything. | Peaceful | Peaceful |
| | 07 | Casting Guy | That’s great. | Mad | Peaceful |
| 4 | 02 | Phoebe Buffay | Hey. | Neutral | Neutral |
| | 03 | Rachel Green | Hey Phoebs, whatcha got there? | Neutral | Neutral |
| | 04 | Phoebe Buffay | Ok, Love Story, Brian’s Song, and Terms of Endearment. | Neutral | Neutral |
| | 05 | Monica Geller | Wow, all you need now is The Killing Fields and some guacamole and you’ve got yourself a part-ay. | Joyful | Joyful |
| | 06 | Phoebe Buffay | Yeah, I talked to my grandma about the Old Yeller incident, and she told me that my mom used to not show the ends of sad movies to shield us from the pain and sadness. You know, before she killed herself. | Sad | Sad |
| | 07 | Chandler Bing | Hey. | Neutral | Neutral |
| | 08 | Joey Tribbiani | Hey. | Neutral | Neutral |
| | 09 | Rachel Green | Hey. | Neutral | Neutral |
| | 10 | Monica Geller | Hey. Where is he, where’s Richard? Did you ditch him? | Neutral | Neutral |
| | 11 | Joey Tribbiani | Yeah right after we stole his lunch money and gave him a wedgie. What’s the matter with you, he’s parking the car. | Mad | Mad |
| | 12 | Monica Geller | So’d you guys have fun? | Neutral | Neutral |
| | 13 | Chandler Bing | Your boyfriend is so cool. | Joyful | Joyful |
| | 14 | Joey Tribbiani | Really? | Neutral | Neutral |
| | 15 | Chandler Bing | Yeah, he let us drive his Jaguar. Joey for 12 blocks, me for 15. | Neutral | Neutral |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yu, H.; Ma, T.; Jia, L.; Al-Nabhan, N.; Wahab, M.M.A. Joint Syntax-Enhanced and Topic-Driven Graph Networks for Emotion Recognition in Multi-Speaker Conversations. Appl. Sci. 2023, 13, 3548. https://doi.org/10.3390/app13063548
