Temporal Extraction of Complex Medicine by Combining Probabilistic Soft Logic and Textual Feature Feedback

Abstract

1. Introduction

- We atomize and regularize complex medical time expressions. After analyzing medical time texts, we decompose them into seven basic features. Then, drawing on the textual concepts of complex medical time defined in the CTO, we combine these basic features with first-order predicates and propose eleven extraction rules.

- We apply probabilistic soft logic (PSL) to text feature recognition. PSL, a probabilistic inference framework over first-order logic predicates, is combined with rules that define each time type, yielding a distance to satisfaction for each of the eleven time types. This distance is used as a feedback factor to improve the effectiveness of the text-feature-recognition model.

- We add a text feedback mechanism to the entity recognition model. The output of the entity recognition model is validated against the trained text feature model, and a positive feedback factor based on the validation results is added, which improves the complex time entity recognition model.
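The distance to satisfaction mentioned in the second contribution can be illustrated with the standard Lukasiewicz relaxation that PSL uses for logical rules. The following is a minimal sketch, not the model described in this paper: the predicate names `HasClockTime` and `HasFuzzyWord` and the truth values are hypothetical, chosen only to show how a rule of the form body → head is scored.

```python
from functools import reduce
from typing import Sequence


def luk_and(a: float, b: float) -> float:
    """Lukasiewicz conjunction of two truth values in [0, 1]."""
    return max(0.0, a + b - 1.0)


def luk_or(a: float, b: float) -> float:
    """Lukasiewicz disjunction of two truth values in [0, 1]."""
    return min(1.0, a + b)


def luk_not(a: float) -> float:
    """Lukasiewicz negation of a truth value in [0, 1]."""
    return 1.0 - a


def distance_to_satisfaction(body: Sequence[float], head: float) -> float:
    """Distance to satisfaction of the rule (body_1 AND ... AND body_n) -> head.

    The rule is fully satisfied (distance 0) when the head is at least as
    true as the conjunction of the body atoms.
    """
    body_truth = reduce(luk_and, body, 1.0)  # 1.0 is the neutral element
    return max(0.0, body_truth - head)


# Hypothetical grounding of an illustrative rule (not one of the paper's rules):
#   HasClockTime(x) AND HasFuzzyWord(x) -> FIST(x)
body = [0.9, 0.8]  # assumed truth values of the two body atoms
print(distance_to_satisfaction(body, head=0.4))  # roughly 0.3: rule is violated
print(distance_to_satisfaction(body, head=1.0))  # 0.0: rule is satisfied
```

A smaller distance means that the predicted time type agrees better with the rule, which is why such a value can serve as a feedback signal.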

2. Related Work

2.1. CTO

2.2. Time Entity Recognition

3. Methodology

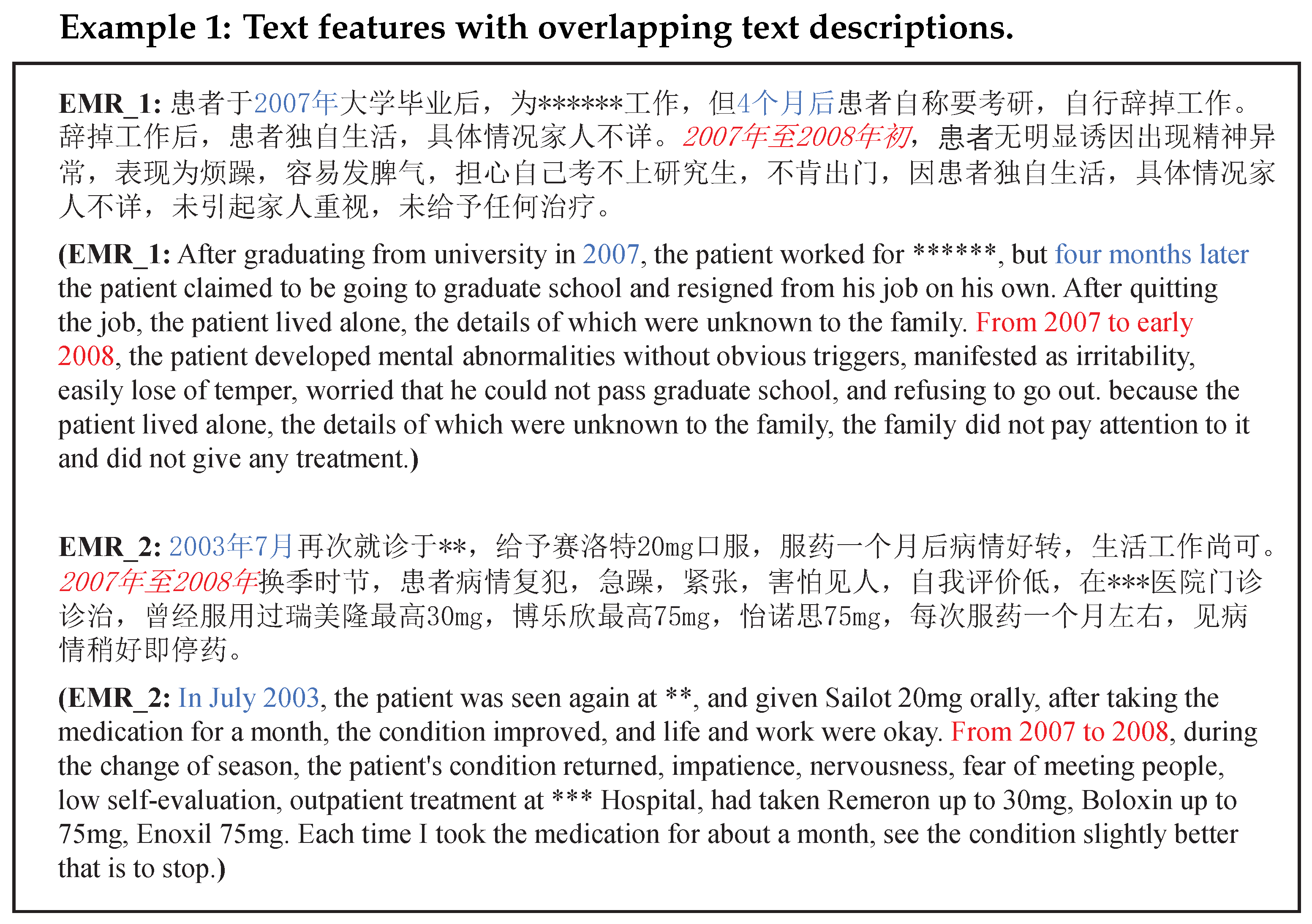

3.1. Basic Extraction Rules for Complex Medical Time

3.2. Feedback Factor Calculation Based on Probabilistic Soft Logic

3.3. Text Feature Feedback Mechanism

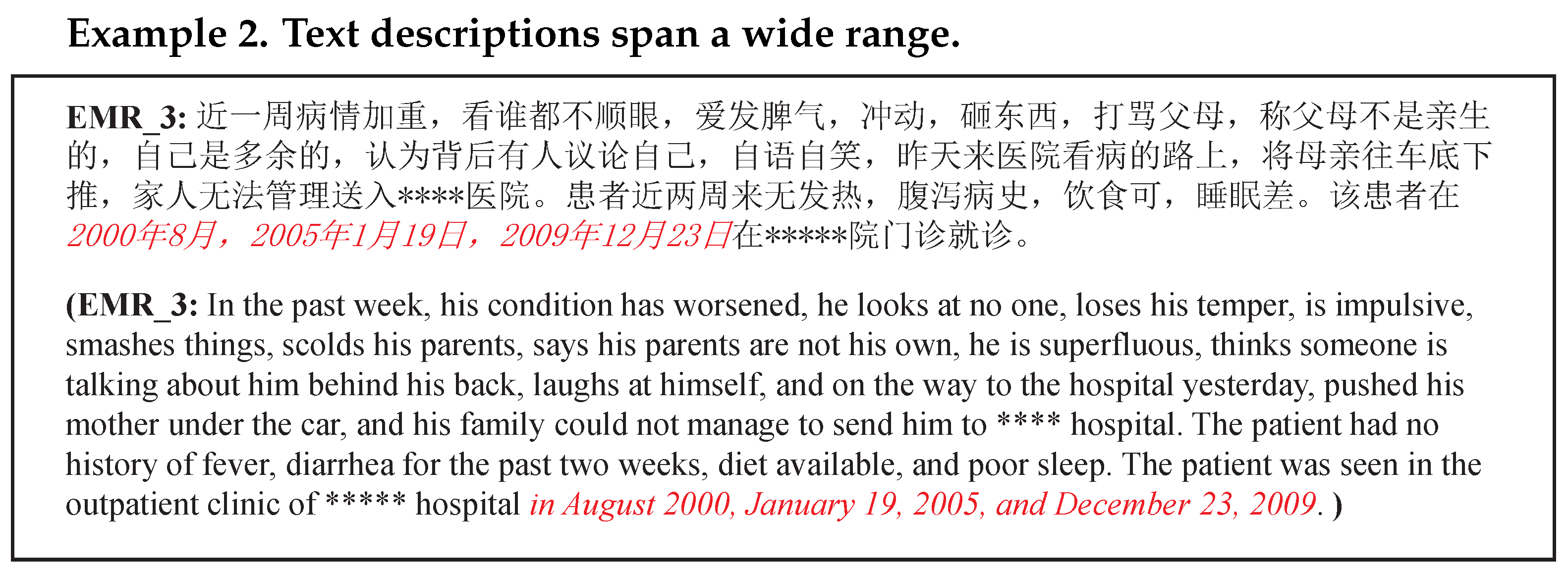

3.3.1. Negative Feedback Mechanism

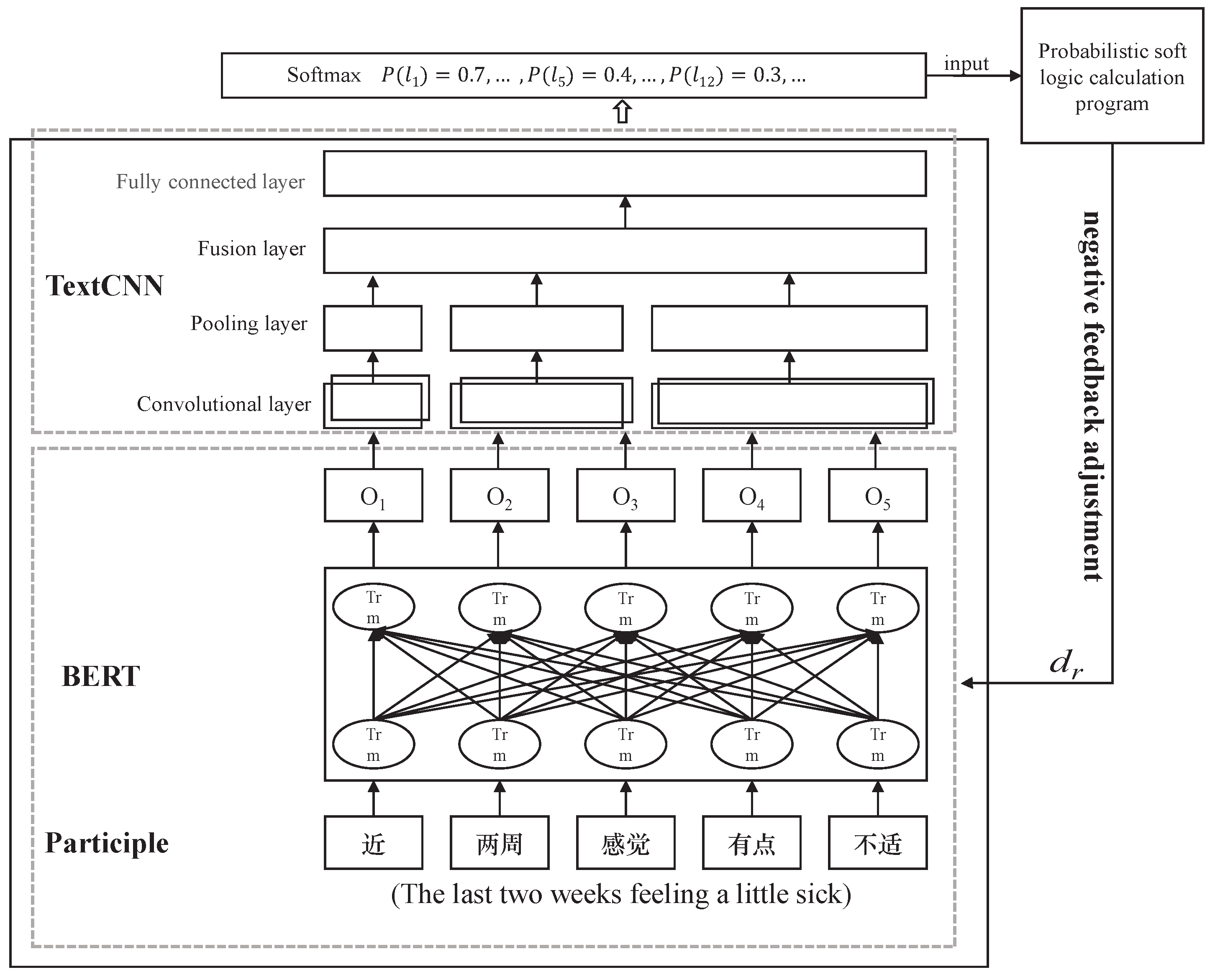

3.3.2. Positive Feedback Mechanism

4. Experimental Results and Discussion

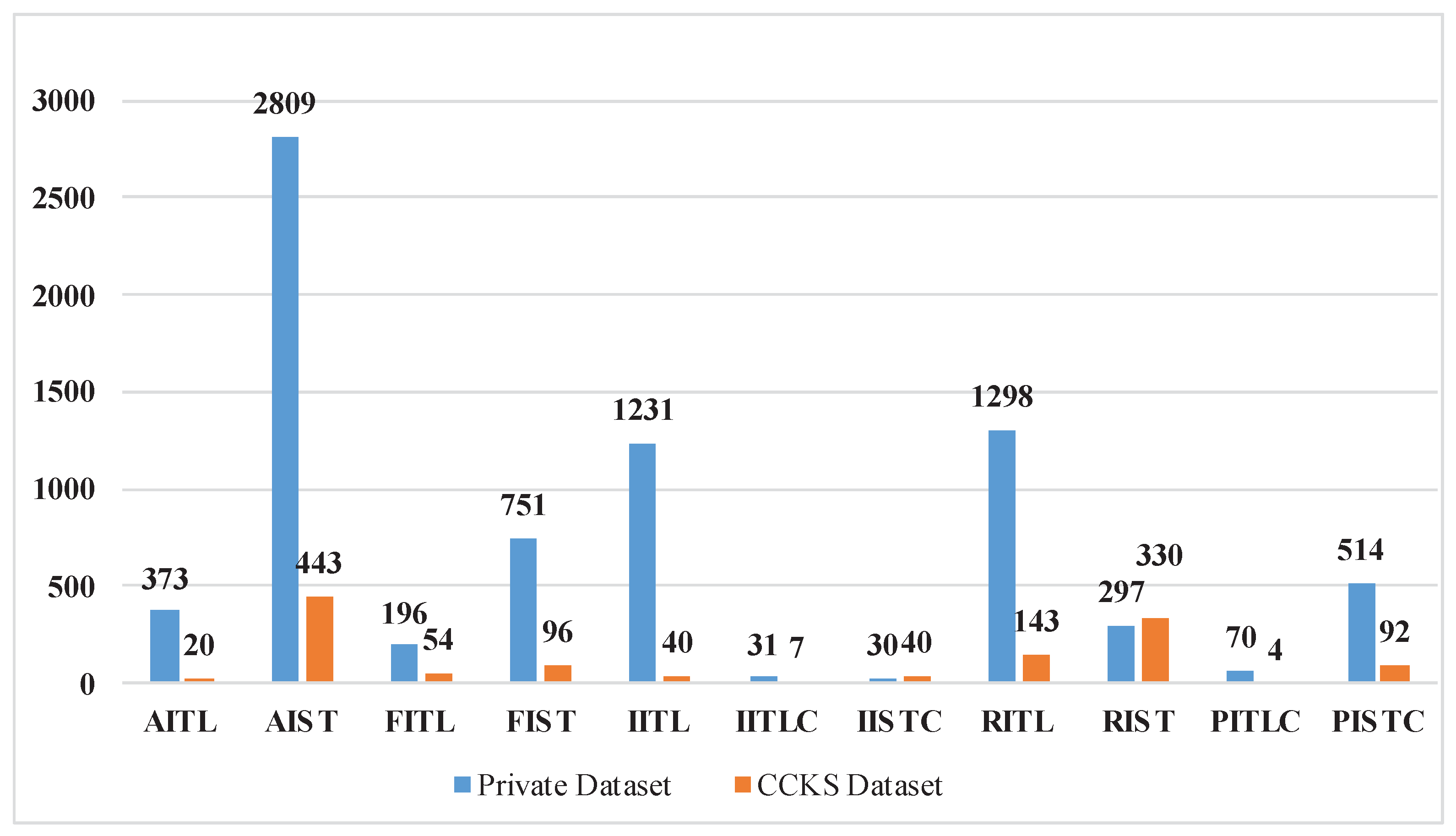

4.1. Datasets

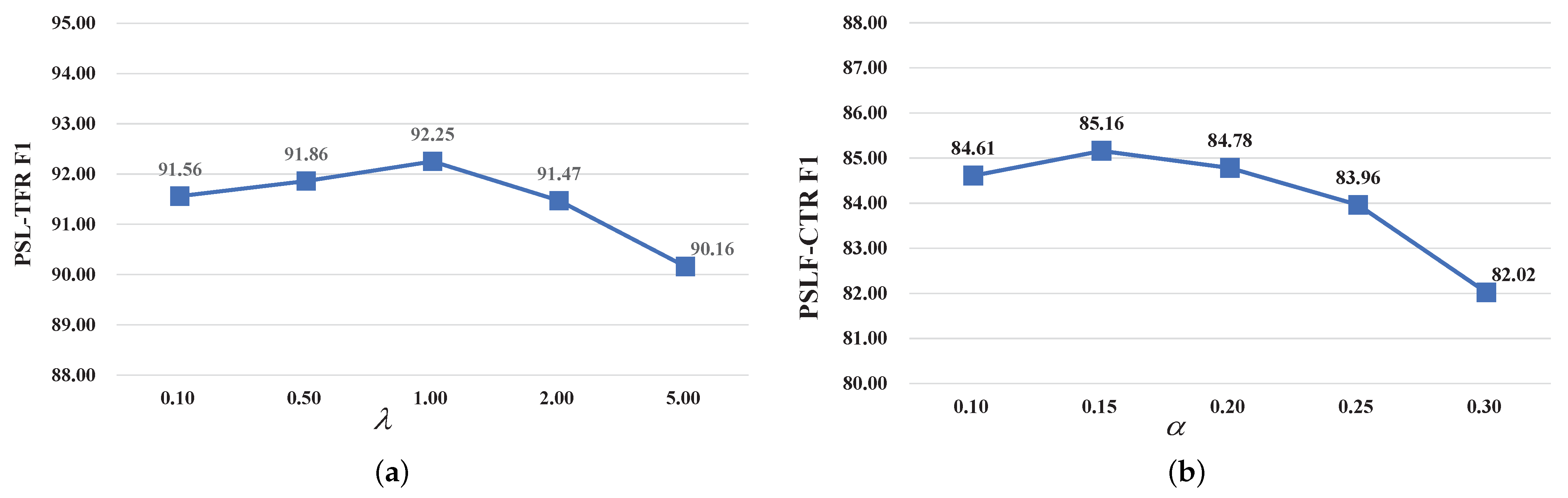

4.2. Experimental Settings and Metrics

4.3. Results and Analyses

4.3.1. Comparison Experiments

4.3.2. Ablation Study

5. Conclusions

- The data used are not a publicly available, normalized dataset. We will therefore study how to construct a Chinese medical time dataset with normalized annotation of temporal entities and temporal relationships while protecting privacy.

- The model in this paper has only been applied to a Chinese dataset. In the future, we will verify whether this method can be used for other languages and explore multilingual temporal recognition models.

- This paper only applies feedback adjustment after the model obtains each training result. Another promising research direction is to embed the rules in the model during training.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Syntactic Forms | Semantic Concepts | Acronyms | Examples | English Examples |
|---|---|---|---|---|
| Instant | Absolute | AIST | 2021年12月4日 | 4 December 2021 |
| Instant | Relative | RIST | 昨天 | Yesterday |
| Instant | Fuzzy | FIST | 6点左右 | Around 6 o'clock |
| Interval | Absolute | AITL | 2021年11月至12月 | November to December 2021 |
| Interval | Relative | RITL | 昨天早6点到9点 | Yesterday from 6:00 a.m. to 9:00 a.m. |
| Interval | Fuzzy | FITL | 大概下午5点开始8点结束 | Starts at about 5:00 p.m. and ends at 8:00 p.m. |
| Interval | Incomplete | IITL | 昨天结束服药 | Finished taking medication yesterday |
| Collection | Periodic Instant | PISTC | 每天下午三点 | Daily at 3:00 p.m. |
| Collection | Irregular Instant | IISTC | 10点、11点、20点分别有抽搐发作 | Seizures at 10:00, 11:00, and 20:00 |
| Collection | Periodic Interval Collection | PITLC | 每年3至5月 | March to May every year |
| Collection | Irregular Interval | IITLC | 在昨天下午9点至12点、今天早上5点至6点、下午4点至11点分别进行抢救 | Resuscitation took place yesterday from 9:00 p.m. to midnight, this morning from 5:00 a.m. to 6:00 a.m., and from 4:00 p.m. to 11:00 p.m. |
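For readers who want to work with this taxonomy programmatically, the table above can be encoded as a small lookup structure. This is only an illustrative sketch: the class name `TimeType` and the helper `group_by_form` are hypothetical, and the labels are copied from the table as printed.

```python
from collections import defaultdict
from enum import Enum


class TimeType(Enum):
    """The eleven time types, each stored as (syntactic form, semantic concept)."""

    AIST = ("Instant", "Absolute")
    RIST = ("Instant", "Relative")
    FIST = ("Instant", "Fuzzy")
    AITL = ("Interval", "Absolute")
    RITL = ("Interval", "Relative")
    FITL = ("Interval", "Fuzzy")
    IITL = ("Interval", "Incomplete")
    PISTC = ("Collection", "Periodic Instant")
    IISTC = ("Collection", "Irregular Instant")
    PITLC = ("Collection", "Periodic Interval Collection")
    IITLC = ("Collection", "Irregular Interval")

    @property
    def form(self) -> str:
        """Syntactic form: Instant, Interval, or Collection."""
        return self.value[0]

    @property
    def concept(self) -> str:
        """Semantic concept within the syntactic form."""
        return self.value[1]


def group_by_form() -> dict[str, list[str]]:
    """Group the acronyms by syntactic form, preserving table order."""
    groups: dict[str, list[str]] = defaultdict(list)
    for time_type in TimeType:
        groups[time_type.form].append(time_type.name)
    return dict(groups)


print(group_by_form()["Collection"])  # the four collection-type acronyms
print(TimeType["FIST"].concept)       # semantic concept of the FIST type
```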

| Parameter | Value |
|---|---|
| Max length | 256 |
| Learning rate | 0.001 |
| Batch size | 32 |
| Dropout | 0.1 |
| | 1 |
| | 0.15 |

| Type | BERT+BiLSTM+CRF P | BERT+BiLSTM+CRF R | BERT+BiLSTM+CRF F1 | BERT+FLAT+CRF P | BERT+FLAT+CRF R | BERT+FLAT+CRF F1 | PSLF-CTR P | PSLF-CTR R | PSLF-CTR F1 |
|---|---|---|---|---|---|---|---|---|---|
| AIST | 92.88 | 95.83 | 94.33 | 93.81 | 96.54 | 95.15 | 93.84 (0.96) | 95.81 (−0.02) | 94.82 (0.49) |
| RIST | 85.13 | 85.67 | 85.40 | 85.53 | 86.90 | 86.21 | 89.14 (4.01) | 89.14 (3.47) | 89.14 (3.74) |
| FIST | 81.86 | 77.67 | 79.71 | 80.65 | 79.91 | 80.28 | 81.17 (−0.69) | 82.65 (4.98) | 81.90 (2.19) |
| AITL | 86.40 | 85.71 | 86.06 | 85.83 | 86.51 | 86.17 | 88.00 (1.60) | 87.30 (1.59) | 87.65 (1.59) |
| RITL | 75.89 | 79.55 | 77.68 | 76.83 | 80.11 | 78.43 | 78.75 (2.86) | 81.97 (2.42) | 80.33 (2.62) |
| FITL | 68.60 | 81.38 | 74.45 | 69.19 | 81.51 | 74.84 | 76.92 (8.32) | 82.19 (0.81) | 79.47 (5.02) |
| IITL | 78.57 | 91.67 | 84.62 | 77.27 | 91.89 | 83.95 | 79.76 (1.19) | 90.54 (−1.13) | 84.81 (0.19) |
| PISTC | 60.33 | 68.46 | 64.14 | 62.13 | 48.98 | 48.98 | 64.41 (4.08) | 70.95 (2.49) | 67.52 (3.38) |
| IISTC | 47.37 | 69.23 | 56.25 | 52.94 | 69.23 | 60.00 | 64.29 (16.92) | 69.23 (0.00) | 66.67 (10.42) |
| PITLC | 44.44 | 52.17 | 48.00 | 51.85 | 60.87 | 56.00 | 53.85 (9.41) | 60.87 (8.70) | 57.14 (9.14) |
| IITLC | 71.43 | 71.43 | 71.43 | 77.78 | 77.78 | 77.78 | 77.78 (6.35) | 77.78 (6.35) | 77.78 (6.35) |
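As a reading aid for the table above, the sketch below recomputes F1 from precision and recall and reproduces the values in parentheses. It assumes, based on the numbers themselves rather than on any statement in the text, that each parenthesized value is the difference between PSLF-CTR and the BERT+BiLSTM+CRF baseline in the same row. The function names are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def delta(value: float, baseline: float) -> float:
    """Difference from the baseline, rounded to two decimals as in the table."""
    return round(value - baseline, 2)


# AIST row, BERT+BiLSTM+CRF columns: P = 92.88, R = 95.83, reported F1 = 94.33
print(round(f1_score(92.88, 95.83), 2))

# AIST row, PSLF-CTR versus BERT+BiLSTM+CRF: reported as (0.96), (-0.02), (0.49)
print(delta(93.84, 92.88), delta(95.81, 95.83), delta(94.82, 94.33))
```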

| Type | BERT+BiLSTM+CRF P | BERT+BiLSTM+CRF R | BERT+BiLSTM+CRF F1 | BERT+FLAT+CRF P | BERT+FLAT+CRF R | BERT+FLAT+CRF F1 | PSLF-CTR P | PSLF-CTR R | PSLF-CTR F1 |
|---|---|---|---|---|---|---|---|---|---|
| AIST | 93.92 | 94.13 | 94.02 | 94.59 | 94.81 | 94.70 | 94.52 (0.60) | 93.45 (−0.68) | 93.98 (−0.04) |
| RIST | 91.89 | 92.73 | 92.31 | 92.17 | 92.73 | 92.45 | 91.34 (−0.55) | 92.73 (0.00) | 92.03 (−0.28) |
| FIST | 85.39 | 79.17 | 82.16 | 87.36 | 79.17 | 83.06 | 87.64 (2.25) | 81.25 (2.08) | 84.32 (2.16) |
| AITL | 84.62 | 55.00 | 66.67 | 80.00 | 60.00 | 68.57 | 92.31 (7.69) | 60.00 (5.00) | 72.73 (6.06) |
| RITL | 68.38 | 65.03 | 66.67 | 75.76 | 69.93 | 72.73 | 76.15 (7.77) | 69.23 (4.20) | 72.53 (5.86) |
| FITL | 72.88 | 79.63 | 76.11 | 72.41 | 77.78 | 77.19 | 73.77 (0.89) | 83.33 (3.70) | 78.26 (2.15) |
| IITL | 85.00 | 85.00 | 85.00 | 84.44 | 95.00 | 89.41 | 86.05 (1.05) | 92.50 (7.50) | 89.16 (4.16) |
| PISTC | 45.71 | 52.17 | 48.73 | 46.15 | 52.17 | 48.98 | 46.60 (0.89) | 52.17 (0.00) | 49.23 (0.50) |
| IISTC | 79.17 | 95.00 | 86.36 | 82.61 | 95.00 | 88.37 | 84.44 (5.27) | 95.00 (0.00) | 89.41 (3.05) |
| PITLC | 50.00 | 50.00 | 50.00 | 50.00 | 50.00 | 50.00 | 50.00 (0.00) | 50.00 (0.00) | 50.00 (0.00) |
| IITLC | 36.36 | 70.00 | 47.86 | 38.46 | 71.43 | 50.00 | 41.67 (5.31) | 71.43 (1.43) | 52.63 (4.77) |

| BERT+textCNN P | BERT+textCNN R | BERT+textCNN F1 | PSL-CTR P | PSL-CTR R | PSL-CTR F1 |
|---|---|---|---|---|---|
| 99.81 | 96.89 | 98.33 | 99.34 (−0.47) | 94.23 (−2.66) | 96.72 (−1.61) |
| 97.42 | 86.64 | 91.72 | 97.18 (−0.24) | 87.22 (0.58) | 91.93 (0.21) |
| 96.97 | 91.10 | 94.65 | 97.75 (0.78) | 89.21 (−1.89) | 93.29 (−1.36) |
| 78.79 | 55.32 | 65.00 | 80.25 (1.46) | 61.41 (6.09) | 69.58 (4.58) |
| 96.64 | 93.98 | 95.29 | 98.73 (2.08) | 92.38 (−1.60) | 95.45 (0.16) |
| 97.60 | 92.64 | 95.06 | 97.59 (−0.01) | 92.87 (0.23) | 95.17 (0.11) |
| 96.84 | 64.04 | 77.09 | 92.97 (−3.87) | 65.58 (1.54) | 76.91 (−0.18) |

| BERT+textCNN P | BERT+textCNN R | BERT+textCNN F1 | PSL-CTR P | PSL-CTR R | PSL-CTR F1 |
|---|---|---|---|---|---|
| 90.39 | 93.99 | 92.15 | 97.93 (7.54) | 96.08 (2.09) | 97.00 (4.84) |
| 81.63 | 77.16 | 79.33 | 92.20 (10.57) | 91.58 (14.42) | 91.89 (12.56) |
| 73.08 | 77.98 | 75.45 | 85.93 (12.85) | 82.96 (4.98) | 84.42 (8.97) |
| 90.53 | 82.13 | 86.13 | 93.88 (3.35) | 94.64 (12.51) | 94.26 (8.13) |
| 68.56 | 71.60 | 70.05 | 82.47 (13.91) | 79.74 (8.14) | 81.08 (11.03) |
| 83.24 | 70.86 | 76.55 | 92.35 (9.11) | 80.10 (9.24) | 85.79 (9.24) |
| 66.67 | 76.00 | 71.03 | 86.68 (20.01) | 80.91 (4.91) | 83.70 (12.67) |
| 82.13 | 83.40 | 82.76 | 93.11 (10.98) | 86.77 (3.37) | 89.83 (7.07) |
| 60.86 | 58.23 | 59.52 | 83.93 (23.07) | 67.14 (8.91) | 74.60 (15.09) |
| 47.23 | 47.84 | 47.53 | 63.64 (16.41) | 67.74 (19.90) | 65.63 (18.09) |
| 45.00 | 44.00 | 44.49 | 58.62 (13.62) | 56.67 (12.67) | 57.63 (13.13) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gu, J.; Wang, D.; Hu, D.; Gao, F.; Xu, F. Temporal Extraction of Complex Medicine by Combining Probabilistic Soft Logic and Textual Feature Feedback. Appl. Sci. 2023, 13, 3348. https://doi.org/10.3390/app13053348