Inferior Alveolar Canal Automatic Detection with Deep Learning CNNs on CBCTs: Development of a Novel Model and Release of Open-Source Dataset and Algorithm

Abstract

Featured Application

1. Introduction

2. Literature Review

2.1. The Inferior Alveolar Canal: Clinical Insights

2.2. Cone Beam Computed Tomography and Conventional Radiology

2.3. CBCT Image Processing

2.4. Automatic Segmentation of the Inferior Alveolar Canal

3. Materials and Methods

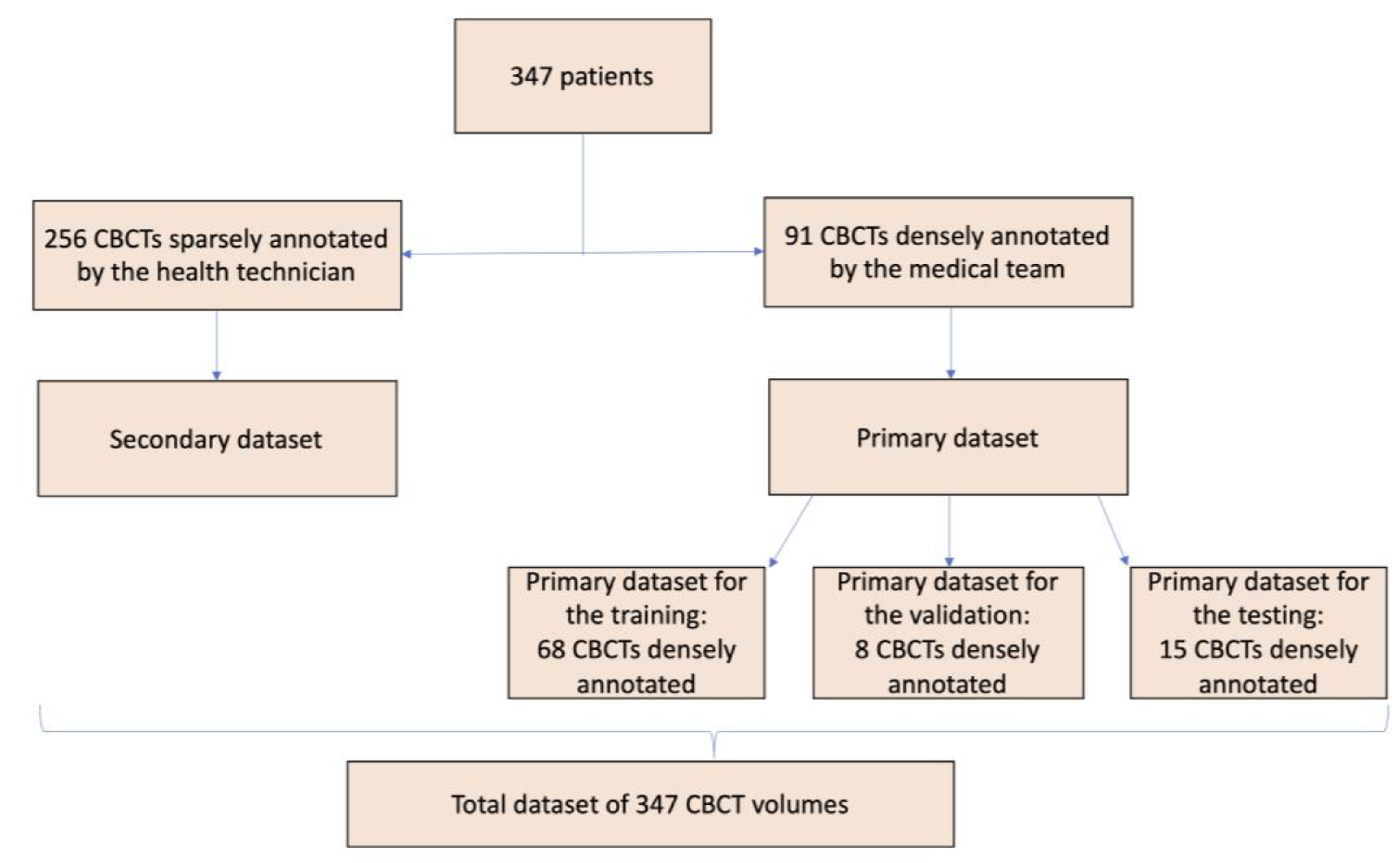

3.1. Dataset Generation and Annotation

- Both male and female patients older than 12 years;

- Patients whose identification of the IAC on CBCT images was performed either by a radiology technician or by a radiologist.

- Unclear or unreadable CBCT images;

- Presence of gross anatomical anomalies of the mandible, including those related to previous oncological or resective surgery.

- Selection of the axial slice on which to base the subsequent extraction of the simil-OPG coronal slice (Figure 3a);

- Generation of the coronal slice, similar to the OPG. This is the curved plane perpendicular to the axial plane and containing the base curve (Figure 3c);

- Bidimensional annotation of the IAC course on the coronal plane (Figure 3d).

- Selection of the axial slice, on which to base the subsequent extraction of the simil-OPG coronal slice (Figure 3a);

- Generation of the coronal slice, similar to the OPG. This is the curved plane perpendicular to the axial plane and containing the base curve (Figure 3c);

- Bidimensional annotation of the IAC course on the coronal plane (Figure 3d);

- Automatic generation of Cross-Sectional Lines (CSLs), i.e., lines perpendicular to the base curve and always lying on the axial plane (Figure 3e);

- Generation and annotation of the Cross-Sectional Views (CSVs), obtained on the basis of the CSLs already described (Figure 3f). These views are planes containing the CSLs and perpendicular to the direction of the canal, which is derived from the bidimensional annotation previously obtained (Figure 3e);

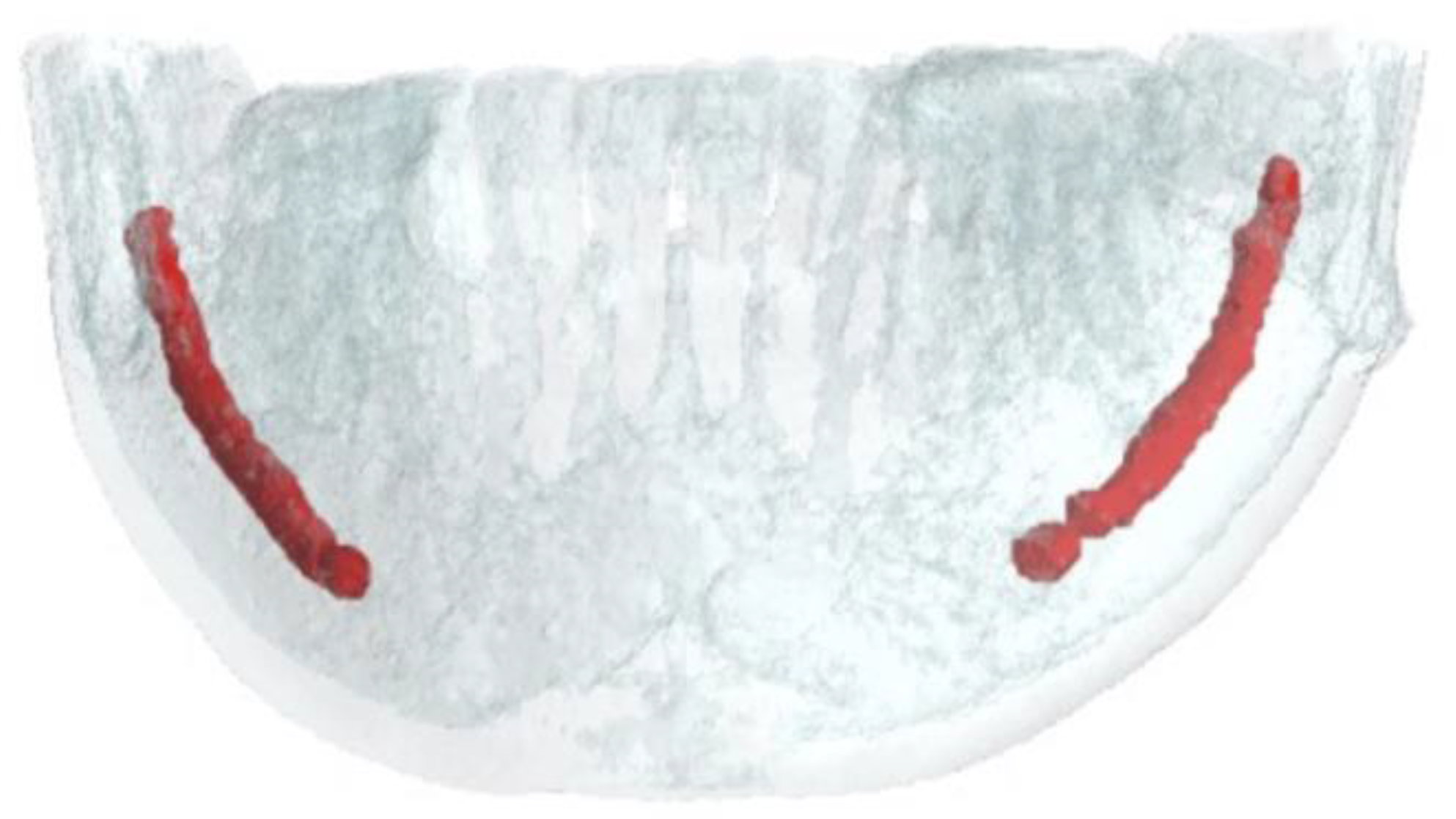

- Generation of 3D volumes (Figure 3g).
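The Cross-Sectional Line step in the list above can be illustrated with a minimal sketch. It assumes the base curve is available as a polyline of (x, y) points on the axial slice; the function name `cross_sectional_lines` and the `half_length` parameter are hypothetical and are not taken from the annotation tool.

```python
import math


def cross_sectional_lines(base_curve, half_length=10.0):
    """Return one segment per base-curve point, perpendicular to the curve.

    base_curve: list of (x, y) points on the axial plane (assumed polyline).
    half_length: half the segment length in pixels (illustrative value).
    """
    lines = []
    last = len(base_curve) - 1
    for i, (x, y) in enumerate(base_curve):
        # Estimate the tangent from the neighbouring points (central
        # difference inside the curve, one-sided at the two ends).
        x0, y0 = base_curve[max(i - 1, 0)]
        x1, y1 = base_curve[min(i + 1, last)]
        tx, ty = x1 - x0, y1 - y0
        norm = math.hypot(tx, ty)
        if norm == 0.0:
            continue  # repeated point: no direction available
        # Rotate the unit tangent by 90 degrees to get the in-plane normal.
        nx, ny = -ty / norm, tx / norm
        start = (x - half_length * nx, y - half_length * ny)
        end = (x + half_length * nx, y + half_length * ny)
        lines.append((start, end))
    return lines
```

Because both the tangent and the normal are computed in 2D, every segment lies on the axial plane by construction, which matches the description of the CSLs given in the list.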

- A secondary dataset: it presents only sparse annotations, thus only showing the descriptive curve of the IAC course on the axial slice and the 2D canal identification on a coronal slice, similar to the OPG;

- A primary dataset: it has both sparse and dense annotations. The latter are all the 3D annotations of the canal, including those performed on the CSVs.

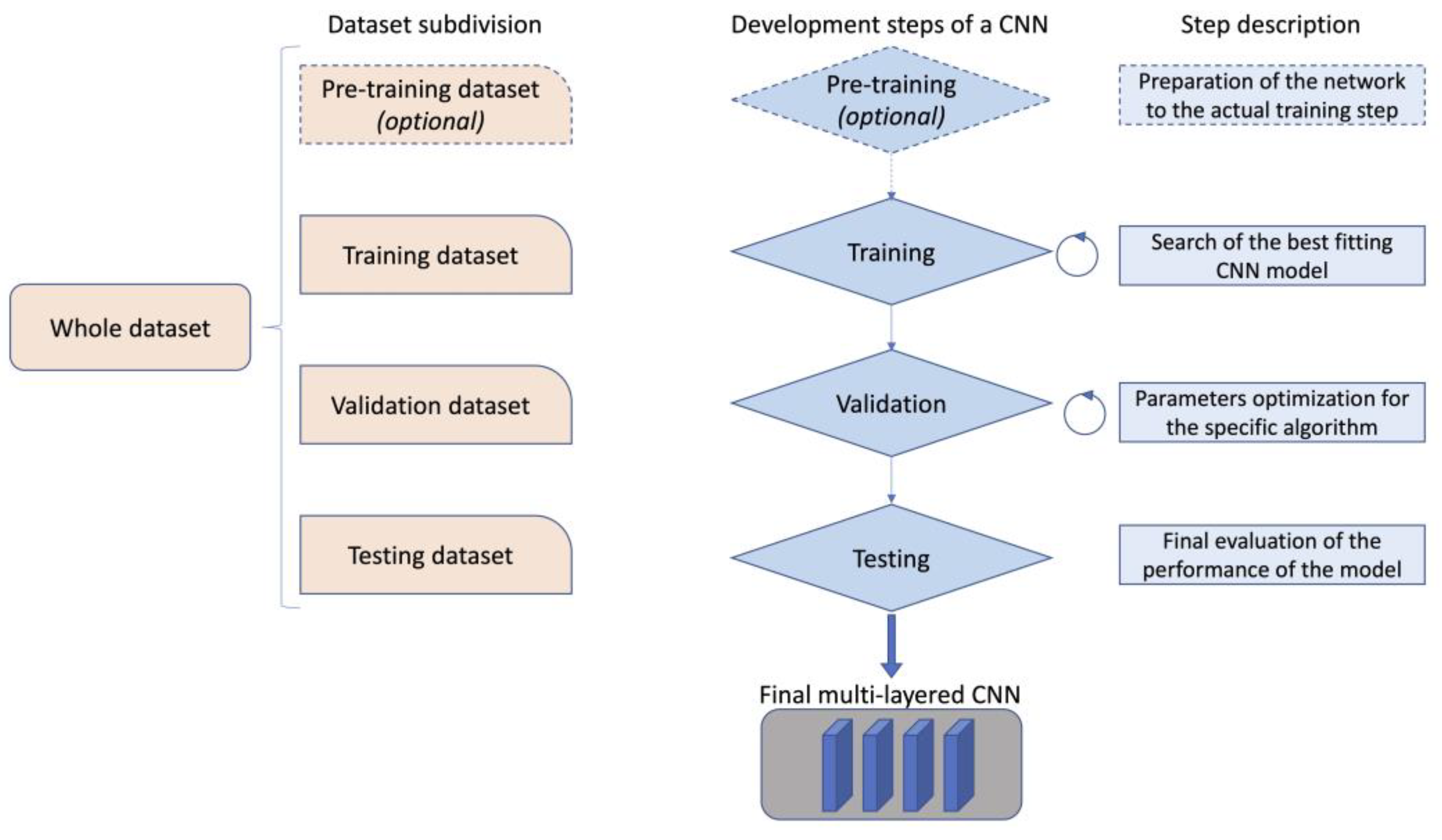

3.2. Model Description and Primary End-Point Measurement

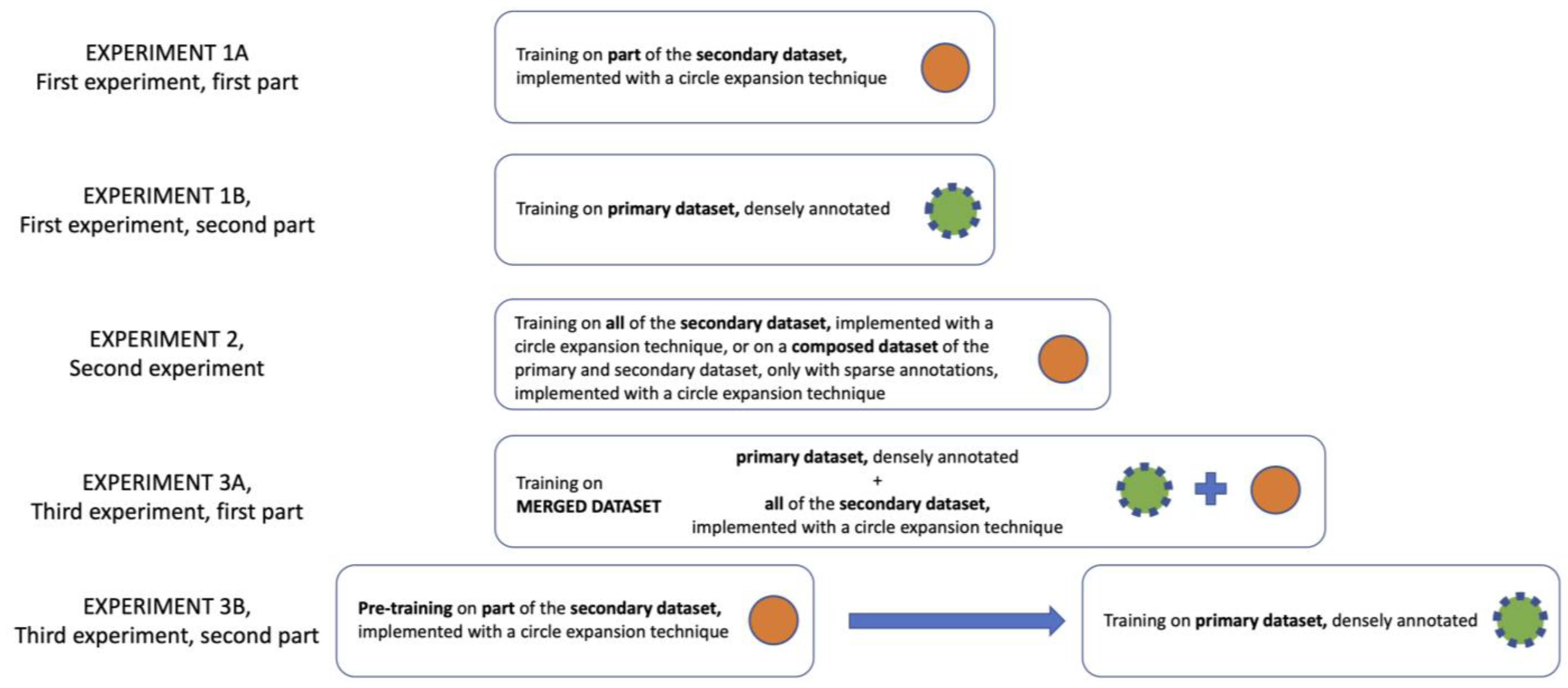

- In the first experiment, the CNN was trained twice on the primary dataset. First, it was trained using only the sparse annotations extended with the circular expansion technique (experiment 1A). Second, it was trained on the same number of volumes, this time using dense annotations (experiment 1B). The results in terms of IoU and Dice score were then compared.

- In the second experiment, the results of the CNN obtained with two different techniques were compared (experiment 2). In one case, the CNN was trained using only the secondary dataset with circular expansion. In the second case, the CNN was trained with a dataset composed of the whole secondary dataset and of the sparse annotations on the primary dataset, both undergoing a circular expansion.

- In the third experiment, two attempts were made. In the first case, the CNN was trained on a cumulative dataset which included both the primary (with only dense annotations) and secondary datasets (experiment 3A). In the second case, the CNN was first pre-trained on the secondary dataset and subsequently trained on the primary dataset with 3D dense annotations only (experiment 3B).
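The experiments above are compared in terms of IoU and Dice score. As a reminder of how the two metrics are defined, here is a minimal sketch. Representing each binary mask as a set of voxel coordinates is an assumption made for brevity, and the function name `iou_and_dice` is hypothetical.

```python
def iou_and_dice(prediction, target):
    """Compute IoU and Dice between two binary masks.

    Both masks are sets of (z, y, x) voxel coordinates labelled as canal;
    this representation is an assumption of the sketch.
    """
    intersection = len(prediction & target)
    union = len(prediction | target)
    total = len(prediction) + len(target)
    if total == 0:
        return 1.0, 1.0  # both masks empty: treated as a perfect match here
    iou = intersection / union
    dice = 2.0 * intersection / total
    return iou, dice


# Example: two three-voxel masks that share two voxels.
pred = {(0, 0, 0), (0, 0, 1), (0, 0, 2)}
gt = {(0, 0, 1), (0, 0, 2), (0, 0, 3)}
iou, dice = iou_and_dice(pred, gt)
```

For a single pair of masks the two metrics are linked by Dice = 2·IoU / (1 + IoU), so they always rank two predictions on the same volume in the same order. The identity does not hold exactly once scores are averaged over several volumes.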

- For each point in the sparse annotation, the direction of the canal is first determined using the coordinates of the next point.

- A 1.6 voxel-long radius is computed orthogonal to the direction of the canal at that point, and a circle is drawn.

- The radius length is set to ensure a circle diameter of 3 mm in real-world measurements. This unit can differ due to the diverse voxel spacing specified in the patient DICOM files (0.3 mm for each dimension, in our data).

- The previous step produces a hollow, pipe-shaped 3D structure, which is finally filled with traditional computer vision algorithms.
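The circular expansion steps above can be sketched as follows. This is a simplified illustration, not the released implementation. It assumes the sparse annotation is an ordered list of 3D voxel coordinates with distinct consecutive points. It derives the radius in voxels from the stated 3 mm diameter and 0.3 mm spacing. It also draws filled discs directly, instead of drawing a hollow pipe and filling it in a separate step. All names (`circular_expansion`, `angles`, and the helpers) are hypothetical.

```python
import math


def _unit(v):
    """Scale a 3D vector to unit length (assumes v is not the zero vector)."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def _cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def circular_expansion(points, diameter_mm=3.0, spacing_mm=0.3, angles=72):
    """Expand a sparse 3D polyline into a set of disc voxels."""
    radius = (diameter_mm / 2.0) / spacing_mm  # radius in voxels
    rings = max(int(math.ceil(2 * radius)), 1)  # about half a voxel apart
    voxels = set()
    direction = None
    for i, p in enumerate(points):
        if i + 1 < len(points):
            # Canal direction from the current point to the next one.
            nxt = points[i + 1]
            direction = _unit(tuple(b - a for a, b in zip(p, nxt)))
        # The last point reuses the previous direction (sketch assumption).
        if direction is None:
            continue  # a single point has no direction
        # Build an orthonormal basis (u, v) of the plane orthogonal to the
        # direction, starting from a helper axis that is not parallel to it.
        helper = (1.0, 0.0, 0.0) if abs(direction[0]) < 0.9 else (0.0, 1.0, 0.0)
        u = _unit(_cross(direction, helper))
        v = _cross(direction, u)
        for ring in range(rings + 1):
            r = radius * ring / rings
            for k in range(angles):
                t = 2.0 * math.pi * k / angles
                voxels.add(tuple(
                    int(round(p[j] + r * (math.cos(t) * u[j] + math.sin(t) * v[j])))
                    for j in range(3)))
    return voxels
```

Sampling concentric rings is a shortcut that keeps the sketch free of dependencies. It does not guarantee a hole-free result between widely spaced annotation points, which is the gap the separate filling step described above is meant to close.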

3.3. Secondary End Point

4. Results

4.1. Demographic Data Collected

4.2. Radiographic Data and Subdivision of the Datasets

- A primary dataset for the training, made of 68 CBCTs;

- A primary dataset for the validation, made of 8 CBCTs;

- A primary dataset for the testing, made of 15 CBCTs.

4.3. Results of the Experiments

4.3.1. Primary Endpoints

4.3.2. Optimization of the Algorithm

- During training, the CNN is fed with implicit information about areas close to the edges of the scan where the IAN is very unlikely to be present.

- Information about cut positions helps the network to better shape the output: sub-volumes located close to the mental foramen generally present a much thinner canal than those located in the mandibular foramen.
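How the position of each sub-volume could be derived is sketched below. The exact encoding used by the network is not described in this list, so the patch size, the non-overlapping tiling, and the normalisation to the range [0, 1] are all assumptions, and `patch_positions` is a hypothetical name.

```python
from itertools import product


def patch_positions(volume_shape, patch_shape):
    """List the origin of every sub-volume and its normalised position."""
    axes = []
    for size, patch in zip(volume_shape, patch_shape):
        # Non-overlapping cuts along this axis.
        starts = list(range(0, max(size - patch, 0) + 1, patch))
        # If the size is not a multiple of the patch, add a final cut that
        # is shifted back so that it stays inside the volume.
        if starts[-1] + patch < size:
            starts.append(size - patch)
        axes.append(starts)
    positions = []
    for origin in product(*axes):
        # 0.0 marks a cut at the start of an axis, 1.0 a cut at its end.
        normalised = tuple(
            o / max(s - p, 1)
            for o, s, p in zip(origin, volume_shape, patch_shape))
        positions.append((origin, normalised))
    return positions


# Example with illustrative sizes: a 160 x 160 x 200 scan, 80-voxel patches.
cuts = patch_positions((160, 160, 200), (80, 80, 80))
```

Each normalised tuple could be passed to the network alongside the corresponding sub-volume, so that a cut near the edge of the scan can be told apart from one in the middle of the mandible.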

4.3.3. Secondary Endpoint

5. Discussion

- The surgeon has access to a three-dimensional annotation of the IAC and can therefore better visualize the data and plan surgical procedures (e.g., positioning a dental implant or approaching an impacted tooth).

- The radiologist can examine the CBCT volume and describe the IAC course in greater detail.

- The standard of care would be improved, providing the patient with a safer and more predictable morphological diagnosis.

- The time spent by the radiology technician is minimized, while the amount of information provided to the clinicians is maximized.

- The radiology center would increase the cost-effectiveness of the CBCT exam.

6. Conclusions

- EXP1A-B: training on a dataset with dense annotations yields more accurate results than training on a dataset that uses only the circular expansion technique;

- EXP2: the circular expansion technique can yield limited results;

- EXP3A-B: a sparsely annotated dataset extended with the circular expansion technique can be helpful to pre-train a CNN. The algorithm was further improved by applying our deep label propagation method to the secondary dataset in order to enhance the pre-training of the CNN. This crucial step made it possible to achieve the best Dice score recorded so far in the segmentation of the IAC. The results were also obtained with a considerably lower number of CBCT volumes than in previously published papers in this field.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Layer | Input Channels | Output Channels | Skip Connections |
|---|---|---|---|
| 3D Conv. Block 0 | 1 | 32 | No |
| 3D Conv. Block 1 + MaxPool | 32 | 64 | Yes |
| 3D Conv. Block 2 | 64 | 64 | No |
| 3D Conv. Block 3 + MaxPool | 64 | 128 | Yes |
| 3D Conv. Block 4 | 128 | 128 | No |
| 3D Conv. Block 5 + MaxPool | 128 | 256 | Yes |
| 3D Conv. Block 6 | 256 | 256 | No |
| 3D Conv. Block 7 + MaxPool | 256 | 512 | No |
| Transpose Conv. 0 | 513 | 512 | No |
| 3D Conv. Block 8 | 512 + 256 | 256 | Yes |
| 3D Conv. Block 9 | 256 | 256 | No |
| Transpose Conv. 1 | 256 | 256 | No |
| 3D Conv. Block 10 | 256 + 128 | 128 | Yes |
| 3D Conv. Block 11 | 128 | 128 | No |
| Transpose Conv. 2 | 128 | 128 | No |
| 3D Conv. Block 12 | 128 + 64 | 64 | Yes |
| 3D Conv. Block 13 | 64 | 64 | No |
| 3D Conv. Block 14 | 64 | 1 | No |
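The channel counts in the table above can be checked mechanically. The sketch below transcribes the table and verifies that each layer's input matches the previous layer's output, pairing decoder skip inputs with encoder skip outputs in last-in, first-out order. That pairing rule is the usual U-Net convention and is assumed here rather than stated in the table. The names `LAYERS` and `check_channels` are hypothetical.

```python
# (layer name, input channels, output channels, uses skip connection)
# A tuple of input channels means "upsampled features + skip features".
LAYERS = [
    ("3D Conv. Block 0", 1, 32, False),
    ("3D Conv. Block 1 + MaxPool", 32, 64, True),
    ("3D Conv. Block 2", 64, 64, False),
    ("3D Conv. Block 3 + MaxPool", 64, 128, True),
    ("3D Conv. Block 4", 128, 128, False),
    ("3D Conv. Block 5 + MaxPool", 128, 256, True),
    ("3D Conv. Block 6", 256, 256, False),
    ("3D Conv. Block 7 + MaxPool", 256, 512, False),
    ("Transpose Conv. 0", 513, 512, False),
    ("3D Conv. Block 8", (512, 256), 256, True),
    ("3D Conv. Block 9", 256, 256, False),
    ("Transpose Conv. 1", 256, 256, False),
    ("3D Conv. Block 10", (256, 128), 128, True),
    ("3D Conv. Block 11", 128, 128, False),
    ("Transpose Conv. 2", 128, 128, False),
    ("3D Conv. Block 12", (128, 64), 64, True),
    ("3D Conv. Block 13", 64, 64, False),
    ("3D Conv. Block 14", 64, 1, False),
]


def check_channels(layers):
    """Return the layers whose input does not match the preceding output."""
    mismatches = []
    skips = []  # output channels saved by encoder layers marked as skip
    previous_out = None
    for name, cin, cout, skip in layers:
        if isinstance(cin, tuple):
            # Decoder block: upsampled features plus the most recent skip.
            expected, actual = (previous_out, skips.pop()), cin
        else:
            expected, actual = previous_out, cin
            if skip:
                skips.append(cout)
        if previous_out is not None and expected != actual:
            mismatches.append((name, expected, actual))
        previous_out = cout
    return mismatches


mismatches = check_channels(LAYERS)
```

Under these assumptions the only layer flagged is Transpose Conv. 0, which takes 513 channels although the preceding block outputs 512. The table does not explain the extra channel. It may carry the sub-volume position information discussed in Section 4.3.2, but that reading is an assumption.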

| Experiment | IoU Score | Dice Score |
|---|---|---|
| Experiment 1A: Training on part of the secondary dataset, implemented with a circle expansion technique | 0.39 | 0.56 |
| Experiment 1B: Training on primary dataset, densely annotated | 0.52 | 0.67 |
| Experiment 2: Training on all of the secondary dataset, implemented with a circle expansion technique, or on a composed dataset of the primary and secondary dataset, only with sparse annotations, implemented with a circle expansion technique | 0.45 | 0.62 |
| Experiment 3A: Training on a merged dataset composed of all of the primary dataset, with dense annotations, and all of the secondary dataset, implemented with a circle expansion technique | 0.45 | 0.62 |
| Experiment 3B: Pre-training on all of the secondary dataset, implemented with a circle expansion technique, followed by a proper training on primary dataset, densely annotated | 0.54 | 0.69 |
| Optimization of the results of experiment 3B: Pre-training on all of the secondary dataset, implemented with the deep label propagation method, followed by a proper training on primary dataset, densely annotated | 0.64 | 0.79 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Di Bartolomeo, M.; Pellacani, A.; Bolelli, F.; Cipriano, M.; Lumetti, L.; Negrello, S.; Allegretti, S.; Minafra, P.; Pollastri, F.; Nocini, R.; et al. Inferior Alveolar Canal Automatic Detection with Deep Learning CNNs on CBCTs: Development of a Novel Model and Release of Open-Source Dataset and Algorithm. Appl. Sci. 2023, 13, 3271. https://doi.org/10.3390/app13053271
