Multi-Information Fusion Indoor Localization Using Smartphones

Abstract

1. Introduction

- How can the tradeoff between cost and precision be mitigated in indoor environments? High precision usually comes at a high cost, yet users in real applications expect high precision at low cost. Therefore, achieving high precision at low cost is a core research topic in localization.

- How can device heterogeneity be handled? The application scope of indoor localization depends on how well a system tolerates heterogeneous devices, so a practical method must work across different devices. Therefore, device heterogeneity remains a major challenge.

- How can the generality of indoor localization be achieved? Both the environment and human behavior strongly influence positioning. Therefore, eliminating this interference remains a challenge for localization.

- A dynamic improved PDR method. In this article, we propose a dynamic improved PDR method in which the previous two step lengths are used to estimate the current step length. Because the sensors themselves introduce errors into the collected data, we also introduce a compensation factor. A maximum influence factor is imposed on the previous two steps so that the current step retains the greatest weight in the step length estimate. The experiments show that the proposed method provides more location information and achieves better performance than the traditional method.

- An error correction method for heading direction. To mitigate equipment heterogeneity during improved PDR estimation, we propose a heading direction correction method. The experimental results demonstrate that this method effectively addresses equipment heterogeneity.

- A fusion localization framework based on acoustic signals. Considering compatibility with ultrasonic signals, we propose an indoor localization system that fuses the CHAN algorithm with the improved PDR (CHAN-IPDR-ILS). We conducted experiments with different devices and pedestrians at two sites. The experimental results demonstrate that the fusion localization system achieves comparable performance, generality, and flexibility for application.

2. Related Work

3. System Workflow

4. Fusion Localization Architecture

4.1. Overview

| Algorithm 1: Procedure of fusion localization |
|---|
| Input: The acoustic signal and IMU data from the smartphone. |
| Output: The target location. |
| 1: Access data from the smartphone. |
| 2: Calculate the CHAN location estimate as in Section 4.2. |
| 3: Perform peak and valley detection as in Section 4.3. |
| 4: Perform threshold judgment as in Section 4.3. |
| 5: Perform time interval detection as in Section 4.3. |
| 6: Count the steps. |
| 7: for each step do |
| 8: Estimate the step length as in Section 4.4. |
| 9: Estimate the heading direction as in Section 4.5. |
| 10: Calculate the PDR location estimate at time m. |
| 11: end for |
| 12: Perform fusion localization as in Section 4.6. |
| 13: if the CHAN estimation exceeds the threshold then |
| 14: Discard the CHAN estimation; the location at time m − 1 is . |
| 15: else |
| 16: The location at time m − 1 is . |
| 17: end if |
| 18: Determine the location and heading with the motion model in Equation (26). |
| 19: Return to step 2. |
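Steps 12–17 of Algorithm 1 gate the CHAN estimate with a threshold before it is fused with the PDR estimate. The sketch below illustrates one way such a gate could work, under stated assumptions: the gate compares the distance between the CHAN fix and the PDR prediction with the distance threshold, and accepted fixes are blended with weights proportional to two confidence levels. The function name `fuse_location`, the parameters `conf_chan` and `conf_pdr`, and the weighting rule are hypothetical and are not the paper's fusion equations.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def fuse_location(chan: Point, pdr: Point, conf_chan: float,
                  conf_pdr: float, threshold: float) -> Point:
    """Gate a CHAN (ultrasonic) fix against the PDR prediction, then blend.

    Hypothetical rule: if the CHAN fix lies more than `threshold` metres from
    the PDR prediction, it is treated as an outlier and discarded; otherwise
    the two estimates are averaged with weights proportional to their
    confidence levels.
    """
    if math.dist(chan, pdr) > threshold:
        return pdr  # discard the CHAN estimate, keep the PDR prediction
    w_chan = conf_chan / (conf_chan + conf_pdr)
    w_pdr = 1.0 - w_chan
    return (w_chan * chan[0] + w_pdr * pdr[0],
            w_chan * chan[1] + w_pdr * pdr[1])


# A CHAN fix 0.2 m from the PDR prediction passes the gate and is blended;
# a fix 5 m away is rejected and the PDR prediction is returned unchanged.
print(fuse_location((1.2, 2.0), (1.0, 2.0), 3.0, 1.0, threshold=1.0))
print(fuse_location((6.0, 2.0), (1.0, 2.0), 3.0, 1.0, threshold=1.0))
```

Gating on the distance to the PDR prediction is a common way to reject multipath or NLOS outliers in acoustic ranging, because the PDR track is smooth over short intervals even when it drifts over long ones.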

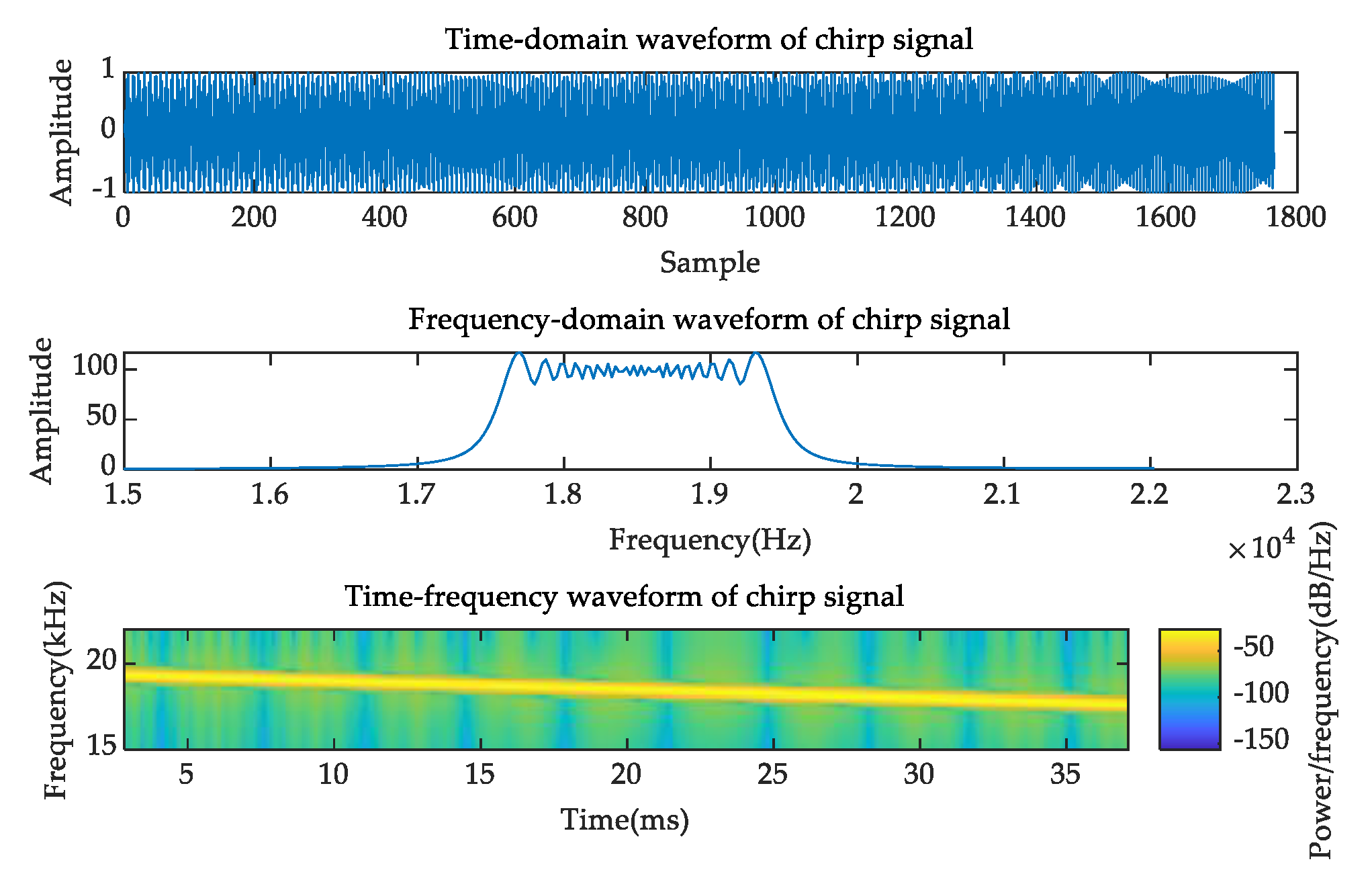

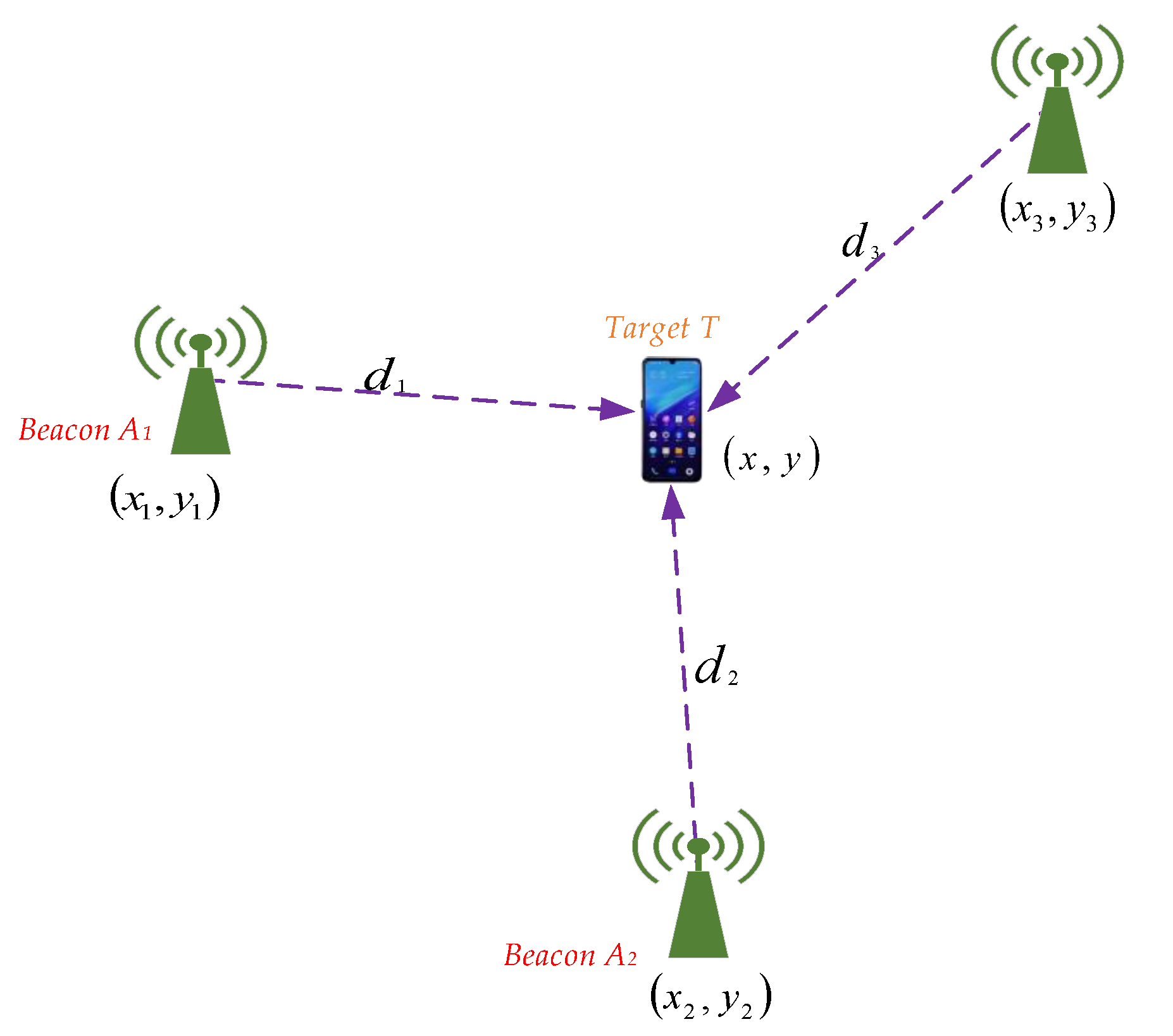

4.2. Location Initialization

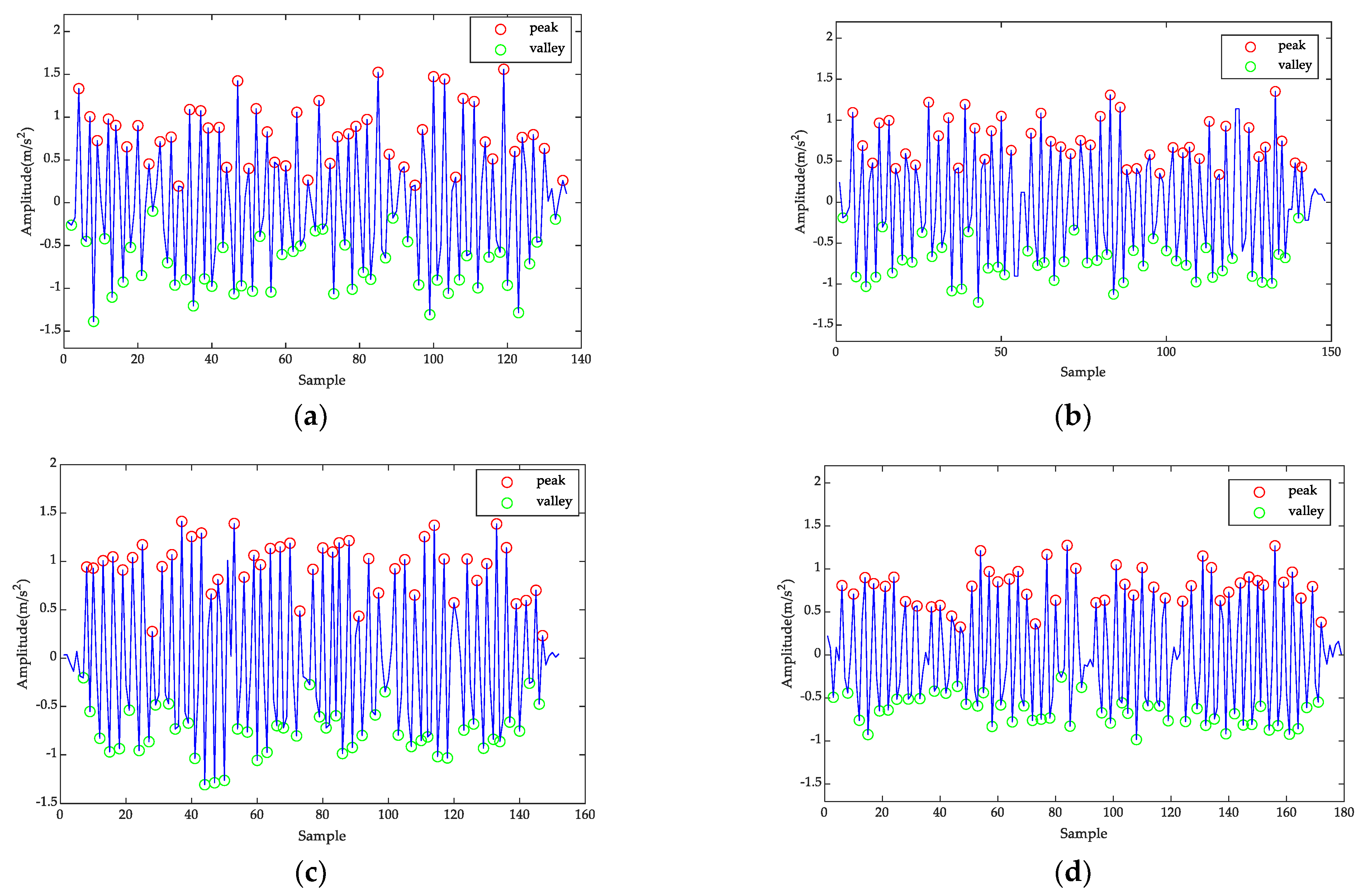
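Section 4.2 initializes the location with the CHAN estimator (Chan and Ho, listed in the References), which the Abbreviations describe through the coordinate differences between the i-th beacon and the first beacon, the sums of squared coordinates, and the range differences to the target. The sketch below shows only the first, unweighted stage of that method, solved by ordinary least squares; it omits the covariance-weighted step and the second refinement stage. NumPy is assumed, and the function name `chan_first_stage` is hypothetical.

```python
import numpy as np


def chan_first_stage(beacons: np.ndarray, range_diffs: np.ndarray) -> np.ndarray:
    """Closed-form 2-D TDOA fix: first (unweighted) stage of Chan's method.

    beacons:     (N, 2) beacon coordinates; row 0 is the reference beacon A1.
    range_diffs: (N-1,) range differences r_{i,1} = r_i - r_1 for i = 2..N.
    The unknowns are z = [x, y, r_1], so at least N = 4 beacons are needed.
    """
    x1, y1 = beacons[0]
    xi1 = beacons[1:, 0] - x1              # x_{i,1}: horizontal offsets
    yi1 = beacons[1:, 1] - y1              # y_{i,1}: vertical offsets
    k = np.sum(beacons ** 2, axis=1)       # K_i = x_i^2 + y_i^2
    # Linearised TDOA equations: -x_{i,1} x - y_{i,1} y - r_{i,1} r_1
    #                            = 0.5 * (r_{i,1}^2 - K_i + K_1)
    G = -np.column_stack((xi1, yi1, range_diffs))
    h = 0.5 * (range_diffs ** 2 - k[1:] + k[0])
    z, *_ = np.linalg.lstsq(G, h, rcond=None)  # ordinary least squares
    return z[:2]


# Noise-free check: four corner beacons, target at (3, 4).
anchors = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0], [10.0, 10.0]])
ranges = np.linalg.norm(anchors - np.array([3.0, 4.0]), axis=1)
print(chan_first_stage(anchors, ranges[1:] - ranges[0]))
```

With noise-free range differences the linear system is consistent and the solution recovers the true position; with noisy TDOA measurements, the full method re-weights this solution using the error covariance.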

4.3. Step-Counting Detection

- Peak and valley detection:

  - If $a(m) > a(m-1)$ and $a(m) > a(m+1)$, then $a(m)$ is a peak.

  - If $a(m) < a(m-1)$ and $a(m) < a(m+1)$, then $a(m)$ is a valley.

  - Here, $a(m)$, $a(m-1)$, and $a(m+1)$ are the acceleration values at times m, m − 1, and m + 1, respectively.

- Threshold judgment:

  - Every detected peak must be greater than the preset peak threshold; otherwise, it is discarded.

  - Every detected valley must be less than the preset valley threshold; otherwise, it is discarded.

- Time interval detection:

  - The acceleration at time m is accepted as a peak or valley only if its time interval from the adjacent detected extremum satisfies the preset time-interval condition; otherwise, it is discarded.
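The three checks of Section 4.3 can be sketched as a single pass over the acceleration trace. This is a minimal illustration, assuming that `acc` holds acceleration magnitudes sampled at times `t` (in seconds) and that the time-interval check requires at least `min_interval` seconds since the previous accepted extremum of the same kind; the paper's exact interval condition and threshold values are not reproduced here, and `detect_steps` is a hypothetical name.

```python
from typing import List, Sequence, Tuple


def detect_steps(acc: Sequence[float], t: Sequence[float], peak_thr: float,
                 valley_thr: float, min_interval: float) -> Tuple[List[int], List[int]]:
    """Return the indices of accepted peaks and valleys in an acceleration trace.

    Assumed rules: a local maximum is kept if it exceeds `peak_thr`, a local
    minimum if it is below `valley_thr`, and either is kept only if it lies at
    least `min_interval` seconds after the previous accepted extremum of the
    same kind.
    """
    peaks: List[int] = []
    valleys: List[int] = []
    for m in range(1, len(acc) - 1):
        if acc[m] > acc[m - 1] and acc[m] > acc[m + 1]:        # local maximum
            if acc[m] > peak_thr and (not peaks or t[m] - t[peaks[-1]] >= min_interval):
                peaks.append(m)
        elif acc[m] < acc[m - 1] and acc[m] < acc[m + 1]:      # local minimum
            if acc[m] < valley_thr and (not valleys or t[m] - t[valleys[-1]] >= min_interval):
                valleys.append(m)
    return peaks, valleys


# Synthetic trace sampled at 10 Hz; the small bump at index 5 fails the peak threshold.
acc = [9.8, 11.5, 9.8, 8.2, 9.8, 10.1, 9.8, 11.6, 9.8, 8.0, 9.8]
t = [0.1 * i for i in range(len(acc))]
print(detect_steps(acc, t, peak_thr=10.5, valley_thr=9.0, min_interval=0.3))
# → ([1, 7], [3, 9])
```

Counting one step per accepted peak, this trace contains two steps; raising `min_interval` above the 0.6 s gap between them would merge them into one, which is why the interval bound must match realistic walking cadence.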

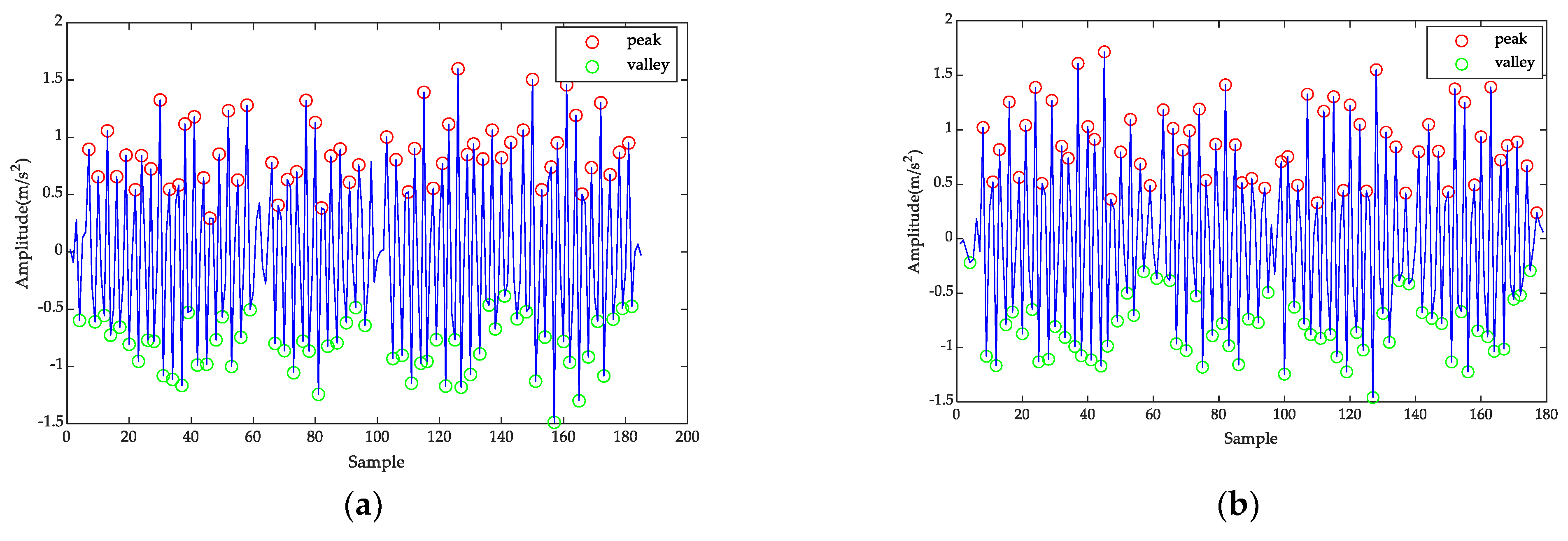

4.4. Improved Adaptive Step Length Estimation

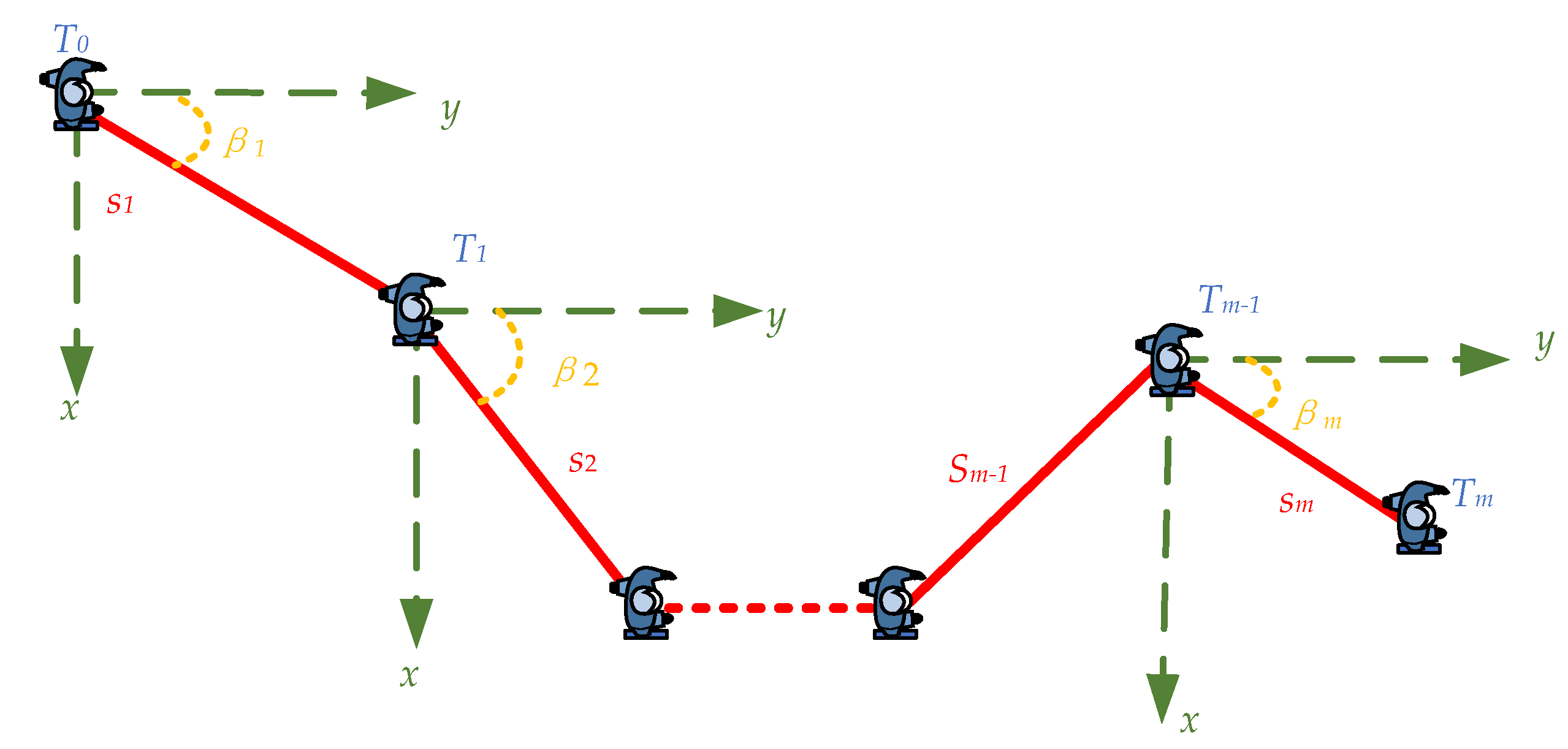

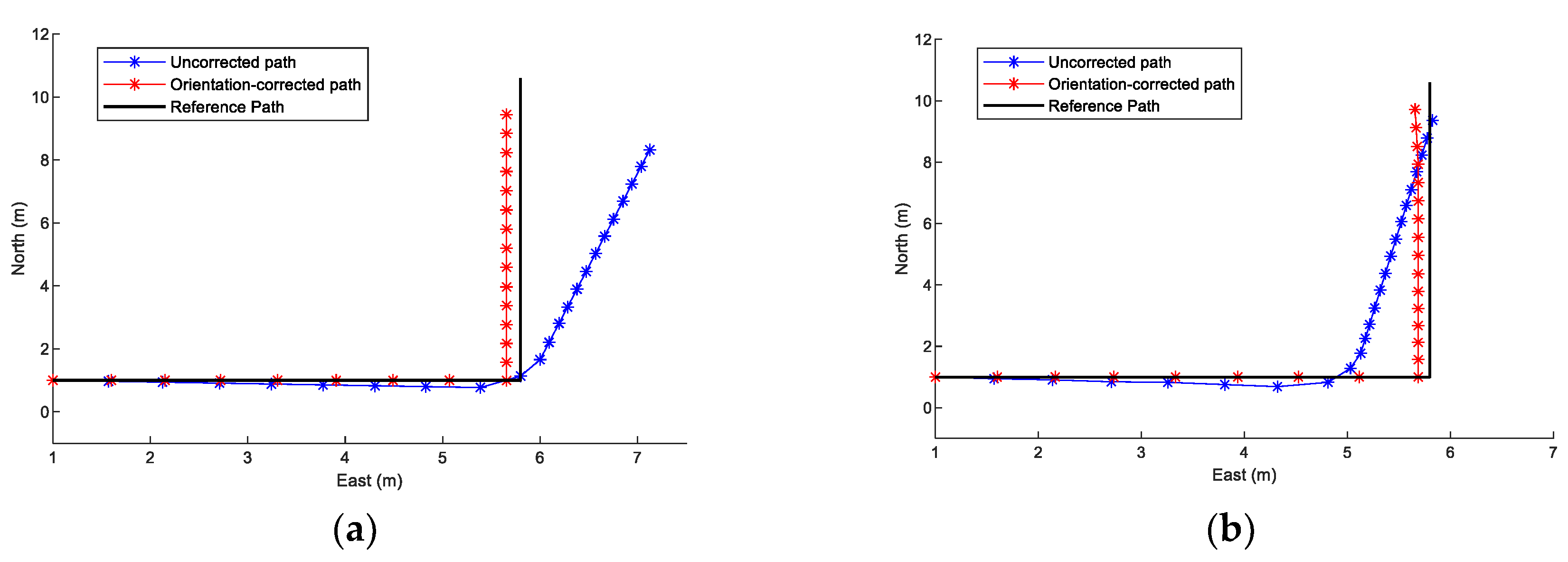
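The Introduction describes the idea behind the improved adaptive step length: the current estimate is combined with the previous two step lengths, a compensation factor absorbs sensor error, and the influence of the previous steps is capped so that the current step dominates. The paper's own equations are not reproduced in this text, so the sketch below is a hypothetical weighting scheme that only illustrates those three ingredients. The weights `w1` and `w2`, the cap `cap`, the `compensation` term, and the function name are all assumptions.

```python
from typing import List, Sequence


def dynamic_step_length(raw: float, history: Sequence[float],
                        w1: float = 0.2, w2: float = 0.1, cap: float = 0.4,
                        compensation: float = 0.0) -> float:
    """Blend the current raw step length with up to two previous ones.

    Hypothetical scheme (not the paper's equation): w1 and w2 weight the most
    recent and second most recent steps; their total is capped at `cap`
    (< 0.5), so the current step always keeps the dominant weight.
    `compensation` is an additive correction for sensor bias.
    """
    prev = list(history[-2:])[::-1]           # most recent step first
    weights = [w1, w2][:len(prev)]
    total = sum(weights)
    if total > cap:                           # enforce the maximum influence
        weights = [w * cap / total for w in weights]
        total = cap
    blended = (1.0 - total) * raw + sum(w * s for w, s in zip(weights, prev))
    return blended + compensation


# Usage with placeholder raw estimates (m), e.g. from a Weinberg-type model.
lengths: List[float] = []
for raw in [0.62, 0.55, 0.60, 0.71]:
    lengths.append(dynamic_step_length(raw, lengths))
print([round(s, 3) for s in lengths])
```

Smoothing against the previous two steps damps single-step outliers in the raw estimate, while the cap keeps the filter responsive when the pedestrian genuinely changes pace.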

4.5. Improved Heading Direction Estimation
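The Abbreviations list a measured heading, a compensation error, and a corrected heading for each step. The sketch below assumes the simplest relation between them, an additive correction (corrected heading = measured heading + compensation error), and then applies the standard PDR position update $x_m = x_{m-1} + L_m \sin\theta_m$, $y_m = y_{m-1} + L_m \cos\theta_m$, with the heading measured clockwise from north. Both the additive correction and the angle convention are assumptions, and the function names are hypothetical.

```python
import math
from typing import Tuple


def wrap_angle(theta: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (theta + math.pi) % (2.0 * math.pi) - math.pi


def pdr_update(pos: Tuple[float, float], step_length: float,
               measured_heading: float, compensation: float) -> Tuple[float, float]:
    """Advance a PDR position by one step.

    Assumptions: heading in radians, clockwise from north (the +y axis), and
    the corrected heading is the measured heading plus a device-specific
    compensation error.
    """
    heading = wrap_angle(measured_heading + compensation)
    x, y = pos
    return (x + step_length * math.sin(heading),
            y + step_length * math.cos(heading))


# A phone that under-reads heading by 0.1 rad: the compensation restores due east.
print(pdr_update((0.0, 0.0), 0.6, math.pi / 2 - 0.1, 0.1))
```

Because a constant heading bias rotates the whole trajectory, a per-device compensation term is a natural place to absorb differences between phone models.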

4.6. Fusion Localization

5. Experimental Verification and Analysis

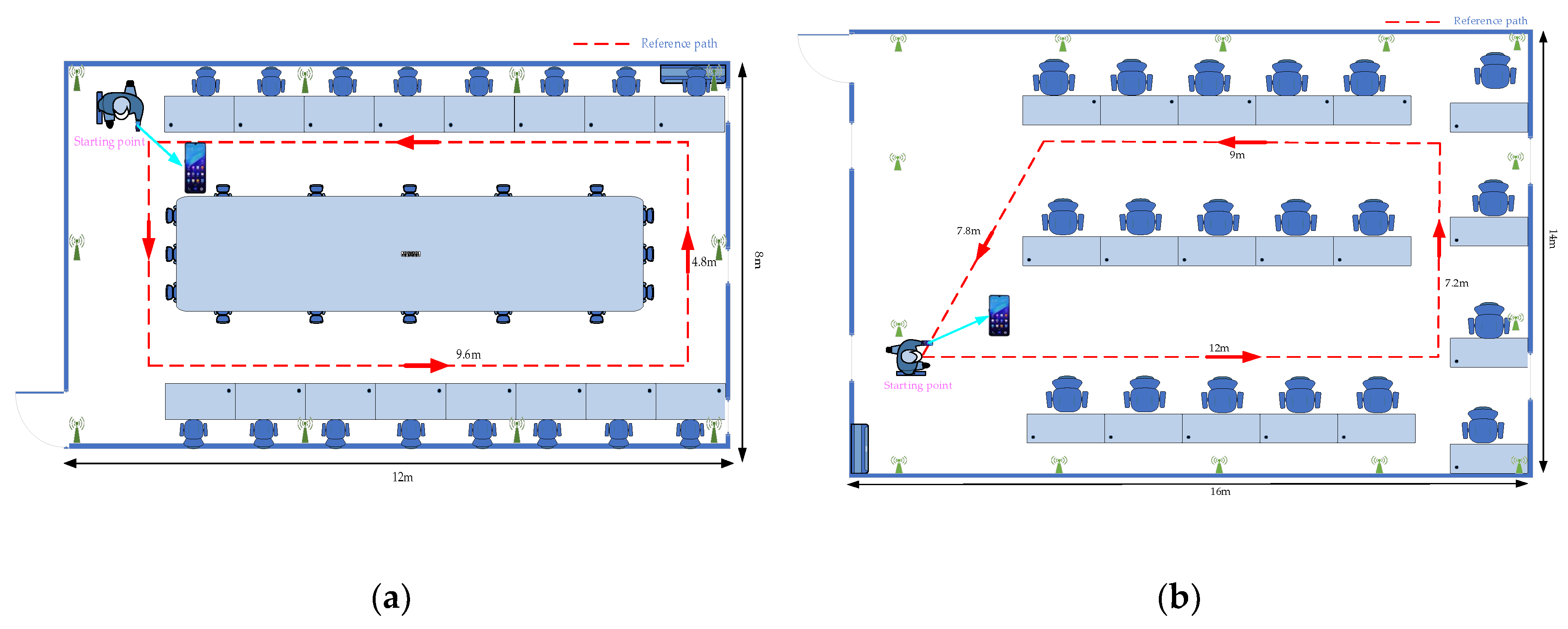

5.1. Experimental Setup

5.2. Discussion and Analysis

5.2.1. Step-Counting Detection

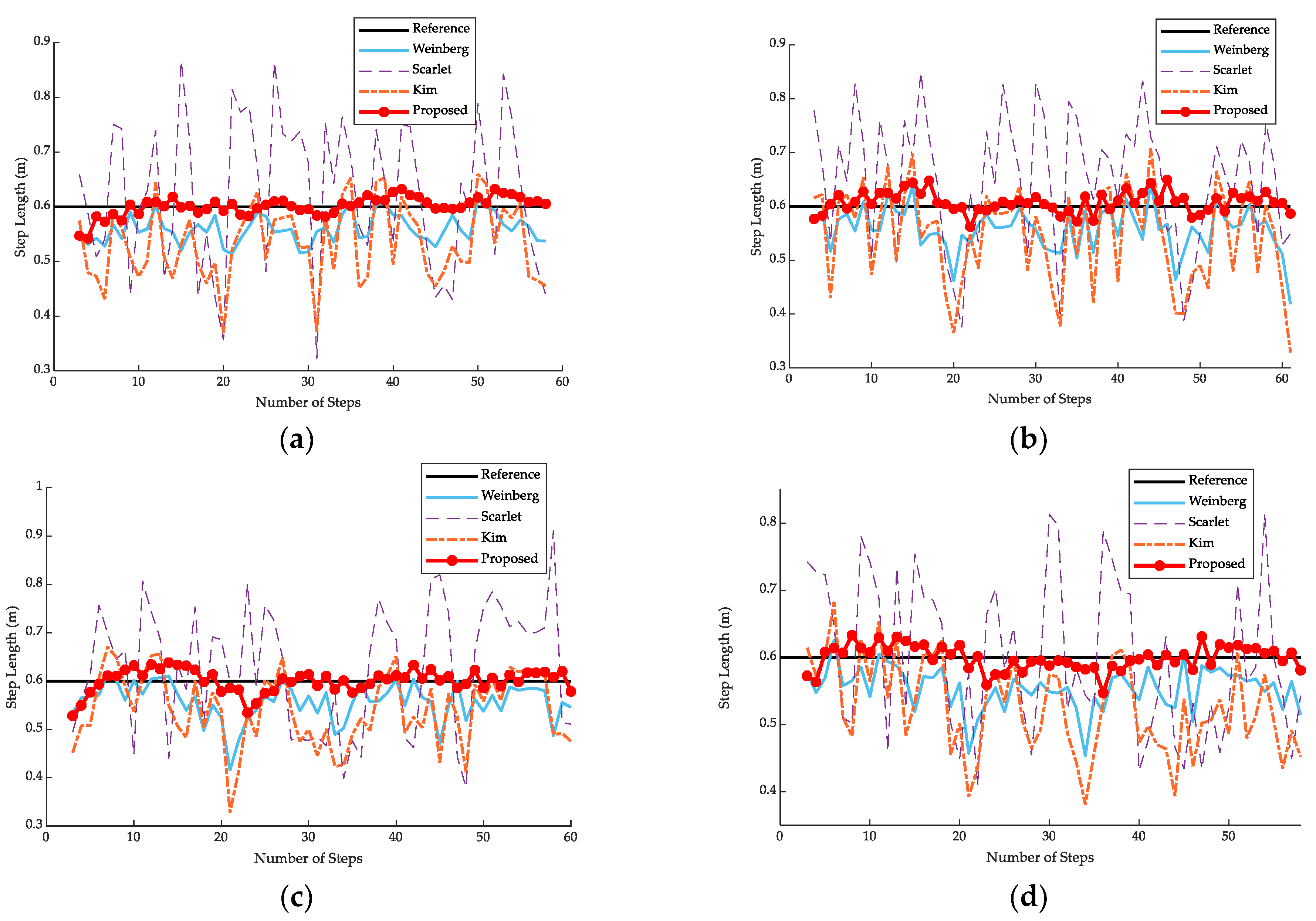

5.2.2. Step Length Estimation

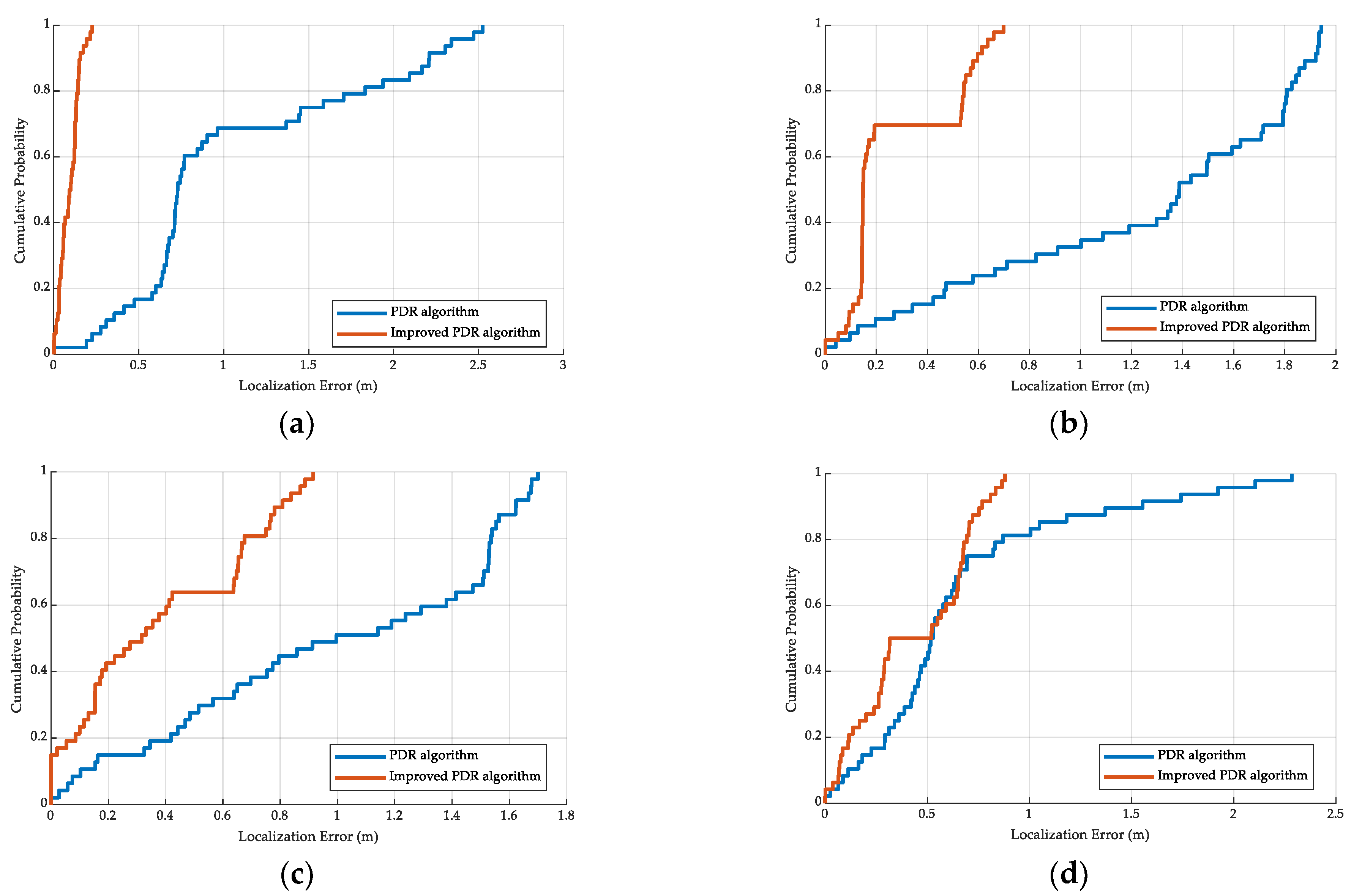

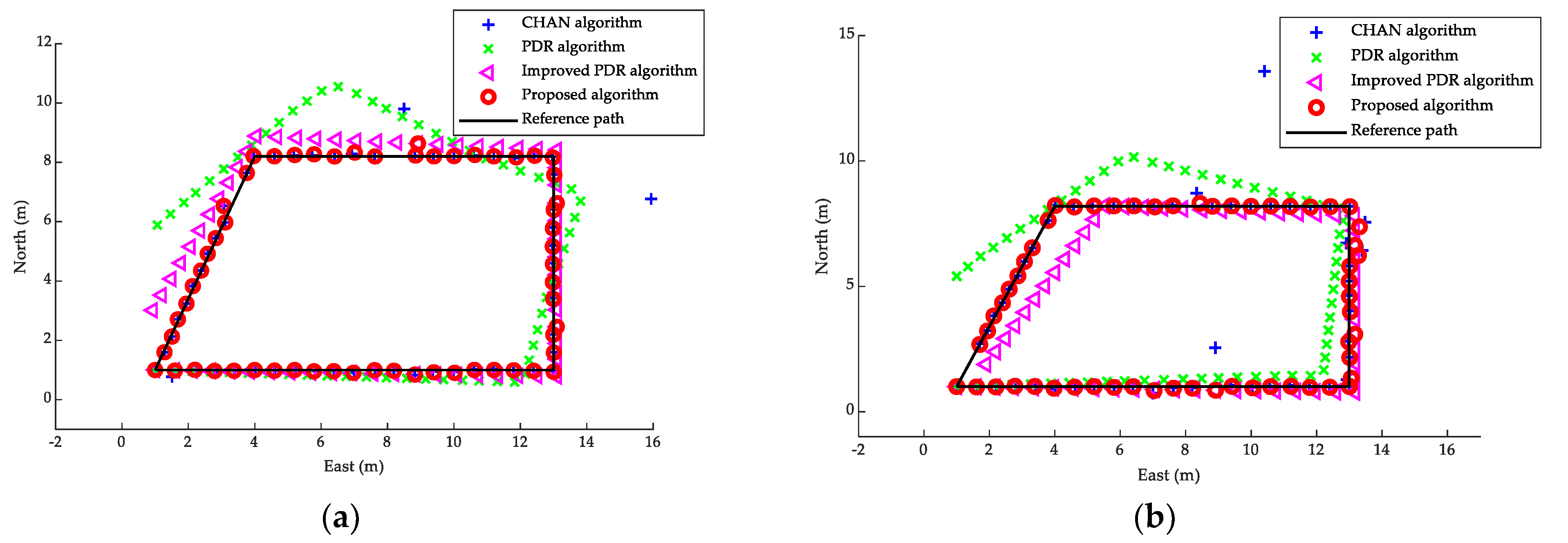

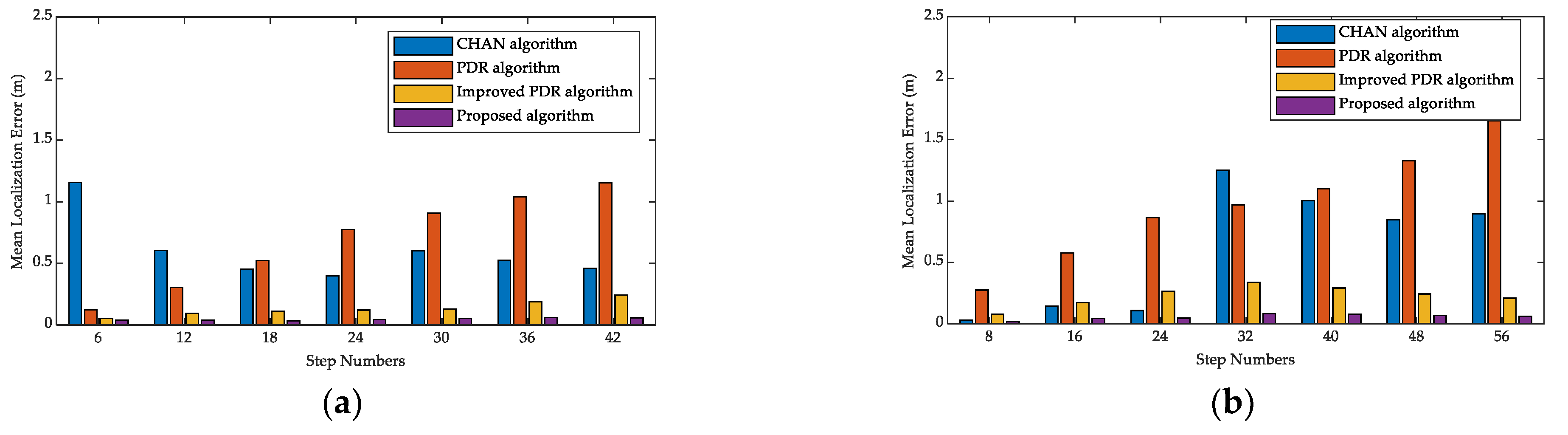

5.2.3. Improved Dynamic PDR Results

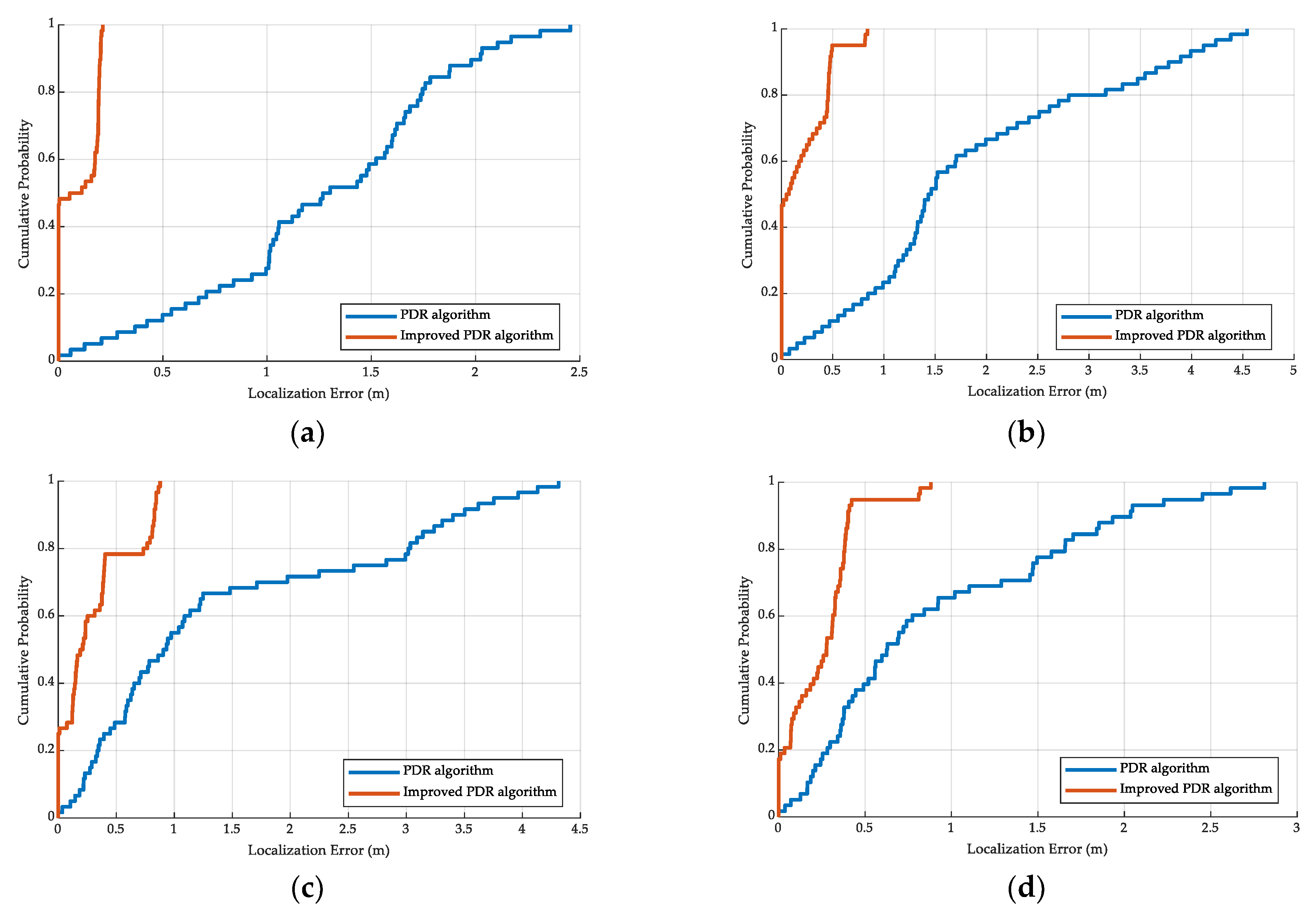

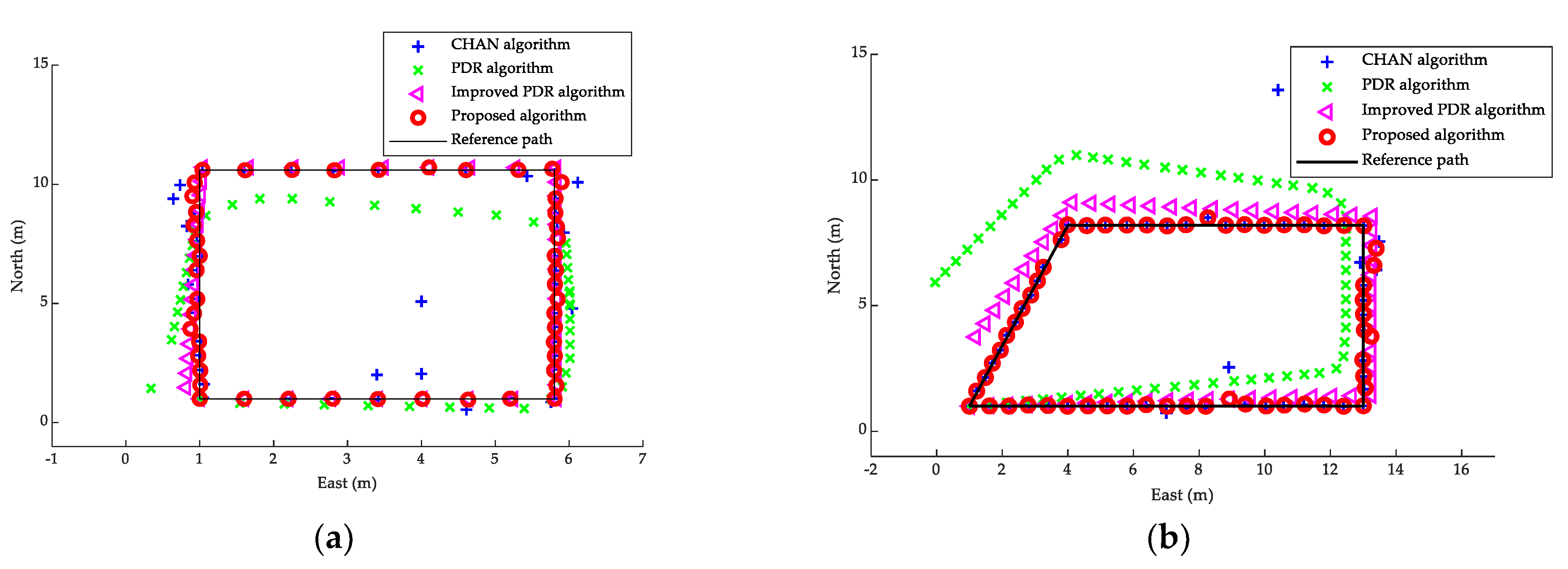

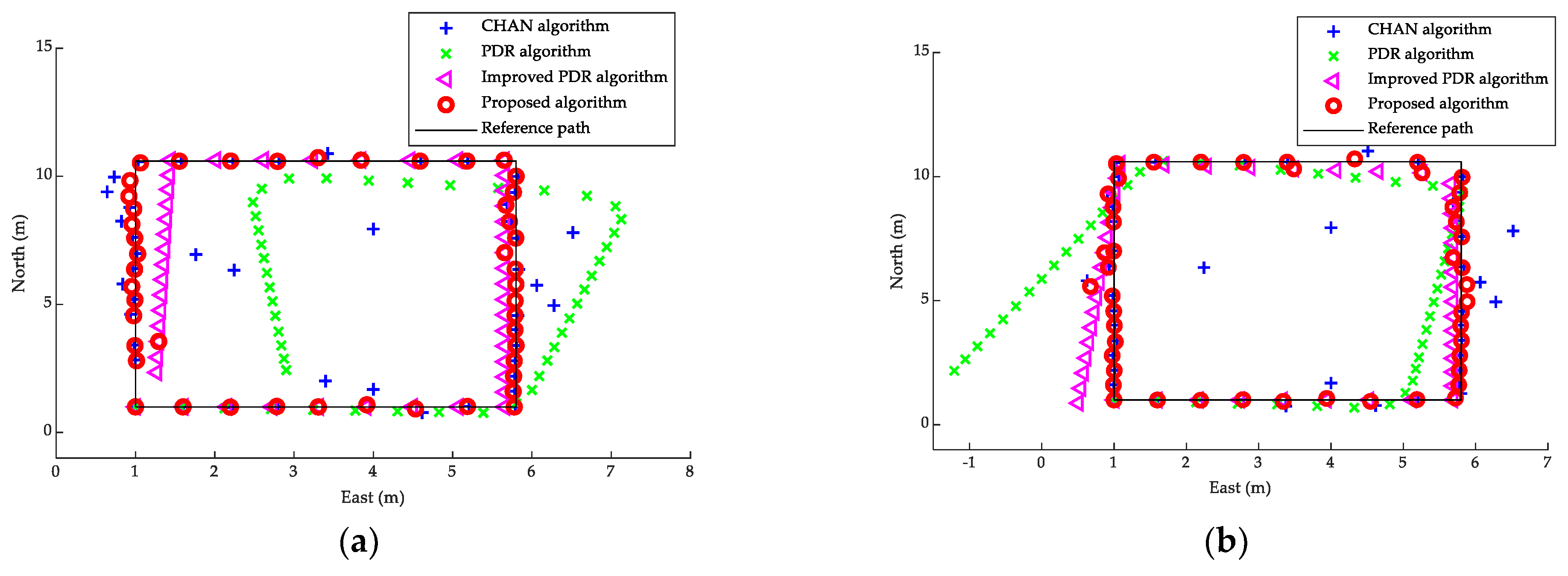

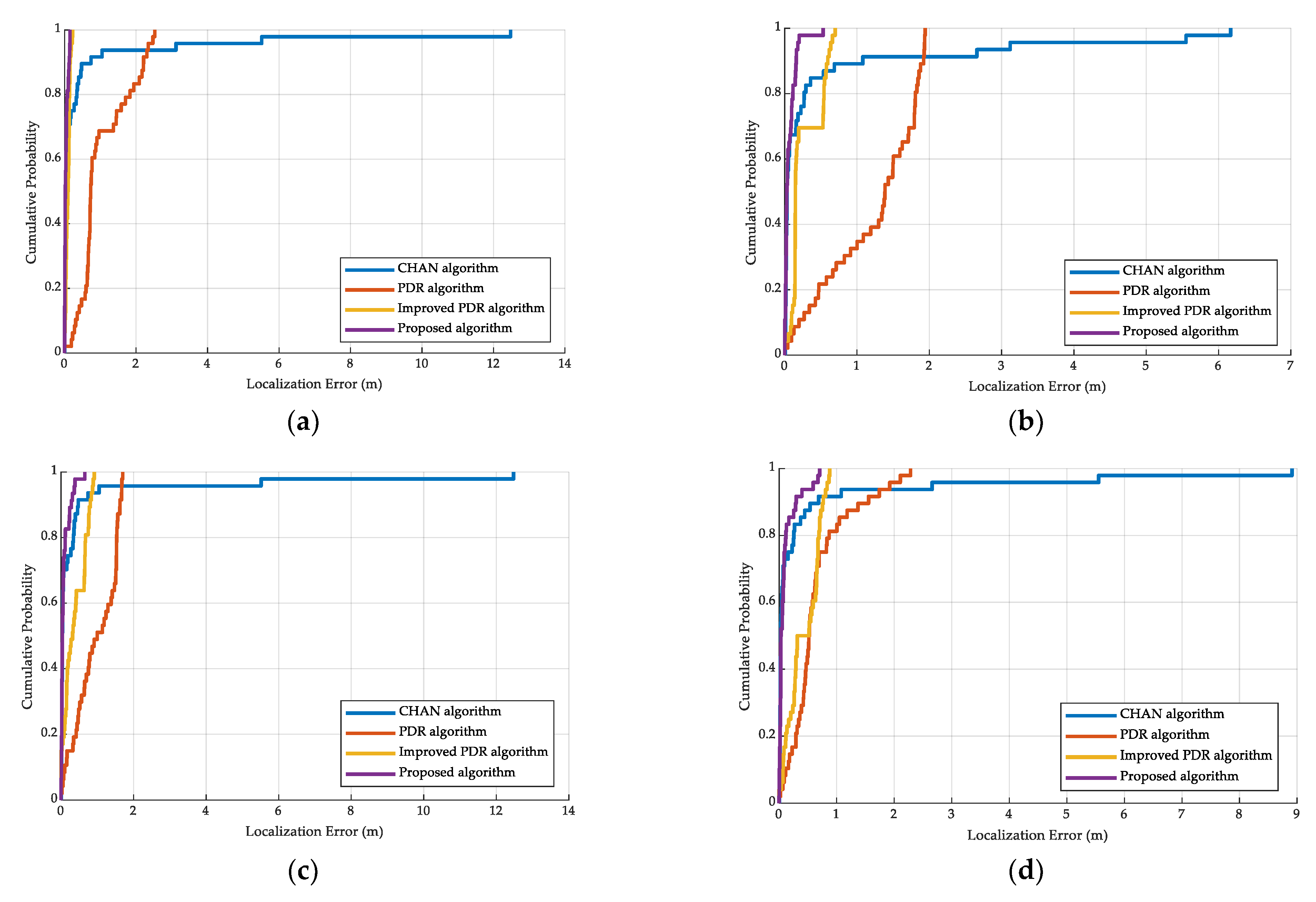

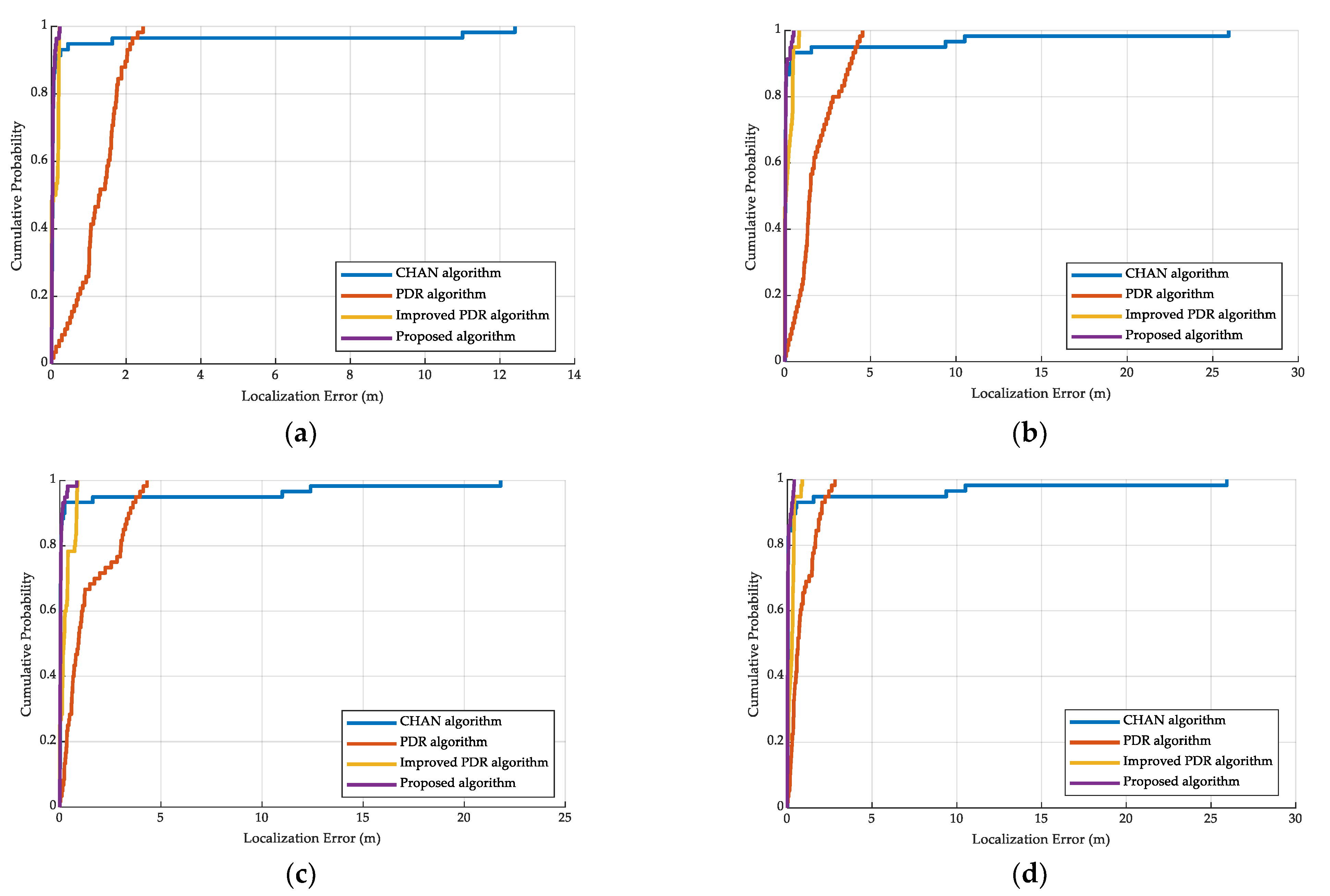

5.3. Localization Performance

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Symbol | Quantity |
|---|---|
| | Step length of the m-th step |
| | Heading direction of the m-th step after correction |
| | Measured heading direction using smartphone |
| | Compensation error of heading direction |
| | Difference between the distances from beacons Ai and Aj to the target |
| | Distance between beacon Ai and target M |
| | Error vector |
| | Covariance matrix |
| | Ordinary least squares |
| | Ultrasonic-based localization estimate |
| | Difference between the horizontal coordinates of the i-th beacon and the first beacon |
| | Difference between the vertical coordinates of the i-th beacon and the first beacon |
| | Sum of the squares of the horizontal and vertical coordinates of point i |
| | Model parameter |
| | Weight vector |
| | Location estimate at time m |
| | Location estimate using the PDR method at time m |
| | Distance confidence level for ultrasonic-based estimation |
| | Distance confidence level for improved PDR estimation |
| | Distance threshold |

References

- Yassin, A.; Nasser, Y.; Awad, M.; Al-Dubai, A.; Liu, R.; Yuen, C.; Raulefs, R.; Aboutanios, E. Recent Advances in Indoor Localization: A Survey on Theoretical Approaches and Applications. IEEE Commun. Surv. Tutor. 2017, 19, 1327–1346.

- Li, Z.; Wang, R.; Gao, J.; Wang, J. An approach to improve the positioning performance of GPS/INS/UWB integrated system with two-step filter. Remote Sens. 2017, 10, 19.

- Zheng, Y.; Zeng, Q.; Lv, C.; Yu, H.; Ou, B. Mobile Robot Integrated Navigation Algorithm Based on Template Matching VO/IMU/UWB. IEEE Sens. J. 2021, 21, 27957–27966.

- Li, J.; Xue, J.; Fu, D.; Gui, C.; Wang, X. Position Estimation and Error Correction of Mobile Robots Based on UWB and Multisensors. J. Sens. 2022, 2022, 1–18.

- Wu, W.; Fu, S.; Luo, Y. Practical Privacy Protection Scheme In WiFi Fingerprint-based Localization. In Proceedings of the 2020 IEEE 7th International Conference on Data Science and Advanced Analytics (DSAA), Sydney, Australia, 6–9 October 2020; pp. 699–708.

- Poulose, A.; Han, D.S. Hybrid Deep Learning Model Based Indoor Positioning Using Wi-Fi RSSI Heat Maps for Autonomous Applications. Electronics 2021, 10, 2.

- Want, R.; Hopper, A.; Falcao, V.; Gibbons, J. The Active Badge Location System. ACM Trans. Inf. Syst. 1992, 10, 91–102.

- Zhu, J.; Xu, H. Review of RFID-based indoor positioning technology. In International Conference on Innovative Mobile and Internet Services in Ubiquitous Computing (IMIS); Abertay University: Matsue, Japan, 2018; pp. 632–641.

- Yang, B.Y.; Yang, E.F. A Survey on Radio Frequency based Precise Localisation Technology for UAV in GPS-denied Environment. J. Intell. Robot. Syst. 2021, 103, 1–30.

- Florio, A.; Avitabile, G.; Coviello, G. A Linear Technique for Artifacts Correction and Compensation in Phase Interferometric Angle of Arrival Estimation. Sensors 2022, 22, 1427.

- Florio, A.; Avitabile, G.; Coviello, G. Multiple Source Angle of Arrival Estimation Through Phase Interferometry. IEEE Trans. Circuits Syst. II Express Briefs 2022, 69, 674–678.

- Paulino, N.; Pessoa, L.M. Self-Localization via Circular Bluetooth 5.1 Antenna Array Receiver. IEEE Access 2023, 11, 365–395.

- Zhou, C.; Yuan, J.Z.; Liu, H.Z.; Qiu, J. Bluetooth Indoor Positioning Based on RSSI and Kalman Filter. Wirel. Pers. Commun. 2017, 96, 4115–4130.

- Yao, Y.B.; Bao, Q.J.; Han, Q.; Yao, R.L.; Xu, X.R.; Yan, J.R. BtPDR: Bluetooth and PDR-Based Indoor Fusion Localization Using Smartphones. KSII Trans. Internet Inf. Syst. 2018, 12, 3657–3682.

- Jiang, C.; Liu, J. A smartphone-based indoor geomagnetic positioning system. GNSS World China 2018, 43, 9–16.

- Wang, Q.; Zhou, J. Simultaneous localization and mapping method for geomagnetic aided navigation. Optik 2018, 171, 437–445.

- Elloumi, W.; Latoui, A.; Canals, R.; Chetouani, A.; Treuillet, S. Indoor Pedestrian Localization With a Smartphone: A Comparison of Inertial and Vision-Based Methods. IEEE Sens. J. 2016, 16, 5376–5388.

- Fischer, G.; Bordoy, J.; Schott, D.J.; Xiong, W.X.; Gabbrielli, A.; Hoflinger, F.; Fischer, K.; Schindelhauer, C.; Rupitsch, S.J. Multimodal Indoor Localization: Fusion Possibilities of Ultrasonic and Bluetooth Low-Energy Data. IEEE Sens. J. 2022, 22, 5857–5868.

- Dai, S. Design and implementation of ultrasonic-based indoor positioning system. Southwest Jiaotong Univ. 2017.

- Gentner, C.; Ulmschneider, M.; Jost, T. Cooperative simultaneous localization and mapping for pedestrians using low-cost ultra-wideband system and gyroscope. In Proceedings of the 2018 IEEE/ION Position, Location and Navigation Symposium (PLANS), Monterey, CA, USA, 23–26 April 2018; pp. 1197–1205.

- Hauschildt, D.; Kirchhof, N. Advances in thermal infrared localization: Challenges and solutions. In Proceedings of the 2010 International Conference on Indoor Positioning and Indoor Navigation, Zurich, Switzerland, 15–17 September 2010; pp. 1–8.

- Ajroud, C.; Hattay, J.; Machhout, M. Holographic Multi-Reader RFID Localization Method for Static Tags. In Proceedings of the 2022 8th International Conference on Control, Decision and Information Technologies (CoDIT), Istanbul, Turkey, 17–20 May 2022; pp. 1393–1396.

- Song, S.; Feng, F.; Xu, J. Review of Geomagnetic Indoor Positioning. In Proceedings of the 2020 IEEE 4th International Conference on Frontiers of Sensors Technologies (ICFST), Shanghai, China, 6–9 November 2020; pp. 30–33.

- Xing, H.; Guo, S.; Shi, L.; Hou, X.; Liu, Y.; Hu, Y.; Xia, D.; Li, Z. Quadrotor vision-based localization for amphibious robots in amphibious area. In Proceedings of the 2019 IEEE International Conference on Mechatronics and Automation (ICMA), Tianjin, China, 4–7 August 2019; pp. 2469–2474.

- Yanovsky, F.J.; Sinitsyn, R.B. Application of wideband signals for acoustic localization. In Proceedings of the 2016 8th International Conference on Ultrawideband and Ultrashort Impulse Signals (UWBUSIS), Odessa, Ukraine, 5–11 September 2016; pp. 27–35.

- Lu, Y.; Wei, D.; Yuan, H. A Magnetic-Aided PDR Localization Method Based on the Hidden Markov Model. In Proceedings of the 30th International Technical Meeting of the Satellite Division of The Institute of Navigation (ION GNSS+ 2017), Portland, OR, USA, 25–29 September 2017; pp. 3331–3339.

- Mazlan, A.B.; Ng, Y.H.; Tan, C.K. A Fast Indoor Positioning Using a Knowledge-Distilled Convolutional Neural Network (KD-CNN). IEEE Access 2022, 10, 65326–65338.

- Mazlan, A.B.; Ng, Y.H.; Tan, C.K. Teacher-Assistant Knowledge Distillation Based Indoor Positioning System. Sustainability 2022, 14, 4652.

- Gu, H.; Zhao, K.; Yu, C.; Zheng, Z. High resolution time of arrival estimation algorithm for B5G indoor positioning. Phys. Commun. 2022, 50, 101494.

- Yao, S.; Su, Y.; Zhu, X. High Precision Indoor Positioning System Based on UWB/MINS Integration in NLOS Condition. J. Electr. Eng. Technol. 2022, 17, 1–10.

- Zhang, S.; Wang, W.; Jiang, T. Wi-Fi-inertial indoor pose estimation for microaerial vehicles. IEEE Trans. Ind. Electron. 2020, 68, 4331–4340.

- Zhu, Q.; Niu, K.; Dong, C.; Wang, Y. A novel angle of arrival (AOA) positioning algorithm aided by location reliability prior information. In Proceedings of the 2021 IEEE Wireless Communications and Networking Conference (WCNC), Nanjing, China, 29 March–1 April 2021; pp. 1–6.

- Deng, Z.; Wang, H.; Zheng, X.; Fu, X.; Yin, L.; Tang, S.; Yang, F. A closed-form localization algorithm and GDOP analysis for multiple TDOAs and single TOA based hybrid positioning. Appl. Sci. 2019, 9, 4935.

- Qu, J.; Shi, H.; Qiao, N.; Wu, C.; Su, C.; Razi, A. New three-dimensional positioning algorithm through integrating TDOA and Newton’s method. EURASIP J. Wirel. Commun. Netw. 2020, 2020, 1–8.

- Fang, B.T. Simple solutions for hyperbolic and related position fixes. IEEE Trans. Aerosp. Electron. Syst. 1990, 26, 748–753.

- Chan, Y.T.; Ho, K.C. A simple and efficient estimator for hyperbolic location. IEEE Trans. Signal Process. 1994, 42, 1905–1915.

- Poulose, A.; Han, D.S. UWB indoor localization using deep learning LSTM networks. Appl. Sci. 2020, 10, 6290.

- Kolakowski, M. Improving accuracy and reliability of Bluetooth Low-Energy-Based localization systems using proximity sensors. Appl. Sci. 2019, 9, 4081.

- Zhang, F. Fusion positioning algorithm of indoor WiFi and bluetooth based on discrete mathematical model. J. Ambient. Intell. Humaniz. Comput. 2020, 1–11.

- Jia, B.; Huang, B.Q.; Gao, H.P.; Li, W.; Hao, L.F. Selecting Critical WiFi APs for Indoor Localization Based on a Theoretical Error Analysis. IEEE Access 2019, 7, 36312–36321.

- Liu, K.K.; Liu, X.X.; Li, X.L. Guoguo: Enabling Fine-Grained Smartphone Localization via Acoustic Anchors. IEEE Trans. Mob. Comput. 2016, 15, 1144–1156.

- Luo, X.N.; Wang, H.C.; Yan, S.Q.; Liu, J.M.; Zhong, Y.R.; Lan, R.S. Ultrasonic localization method based on receiver array optimization schemes. Int. J. Distrib. Sens. Netw. 2018, 14, 1–13.

- Li, L.; Liu, Z. Analysis of TDOA Algorithm about Rapid Moving Target with UWB Tag. In Proceedings of the 2017 9th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 26–27 August 2017; pp. 406–409.

- Lee, G.T.; Seo, S.B.; Jeon, W.S. Indoor localization by Kalman filter based combining of UWB-positioning and PDR. In Proceedings of the 2021 IEEE 18th Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 9–12 January 2021; pp. 1–6.

- Wang, H.; Luo, X.; Zhong, Y.; Lan, R.; Wang, Z. Acoustic signal positioning and calibration with IMU in NLOS environment. In Proceedings of the 2019 Eleventh International Conference on Advanced Computational Intelligence (ICACI), Guilin, China, 7–9 June 2019; pp. 223–228.

- Yan, S.; Wu, C.; Deng, H.; Luo, X.; Ji, Y.; Xiao, J. A Low-Cost and Efficient Indoor Fusion Localization Method. Sensors 2022, 22, 5505.

- Liu, R.; Yuen, C.; Do, T.N.; Tan, U.X. Fusing Similarity-Based Sequence and Dead Reckoning for Indoor Positioning Without Training. IEEE Sens. J. 2017, 17, 4197–4207.

- Zhu, Y.; Luo, X.; Guan, S.; Wang, Z. Indoor positioning method based on WiFi/Bluetooth and PDR fusion positioning. In Proceedings of the 2021 13th International Conference on Advanced Computational Intelligence (ICACI), Wanzhou, China, 14–16 May 2021; pp. 233–238.

- Weinberg, H. Using the ADXL202 in pedometer and personal navigation applications. Analog Devices AN-602 Appl. Note 2002, 2, 1–6.

- Scarlett, J. Enhancing the performance of pedometers using a single accelerometer. Appl. Note Analog Devices 2007, 41, 1–16.

- Kim, J.W.; Jang, H.J.; Hwang, D.H.; Park, C. A Step, Stride and Heading Determination for the Pedestrian Navigation System. J. Glob. Position. Syst. 2004, 3, 273–279.

- Zhao, Q.; Zhang, B.; Wang, J. Improved method of step length estimation based on inverted pendulum mode. Int. J. Distrib. Sens. Netw. 2007, 13, 1–13.

- Song, H. Research and Implementation of Indoor Navigation System Based on Pedestrian Dead Reckoning. Univ. Electron. Sci. Technol. China 2018.

| Technical Information | VivoY85a | Honor60 |
|---|---|---|
| Operating system | Android 8.1.0 | Android 11 |
| CPU | Snapdragon 450 | Snapdragon 778 |
| RAM + ROM | 4 GB + 64 GB | 8 GB + 256 GB |
| Screen size | 6.26 inch | 6.67 inch |
| Image resolution | 2280 × 1080 | 2400 × 1080 |
| Battery capacity | 3260 mAh | 4800 mAh |

| Method | Volunteer #1 (m) | Volunteer #2 (m) |
|---|---|---|
| Scarlett (VivoY85a) | 0.6621 | 0.6453 |
| Scarlett (Honor60) | 0.6190 | 0.5619 |
| Kim (VivoY85a) | 0.5375 | 0.5238 |
| Kim (Honor60) | 0.5418 | 0.4859 |
| Weinberg (VivoY85a) | 0.5532 | 0.5514 |
| Weinberg (Honor60) | 0.5608 | 0.5494 |
| Proposed method (VivoY85a) | 0.6078 | 0.5956 |
| Proposed method (Honor60) | 0.6066 | 0.5952 |

| Method | Volunteer #1 (m) | Volunteer #2 (m) |
|---|---|---|
| Scarlett (VivoY85a) | 0.6245 | 0.6490 |
| Scarlett (Honor60) | 0.6280 | 0.6018 |
| Kim (VivoY85a) | 0.5351 | 0.5466 |
| Kim (Honor60) | 0.5422 | 0.5272 |
| Weinberg (VivoY85a) | 0.5602 | 0.5583 |
| Weinberg (Honor60) | 0.5576 | 0.5548 |
| Proposed method (VivoY85a) | 0.6013 | 0.6075 |
| Proposed method (Honor60) | 0.6009 | 0.5992 |
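The two step-length tables compare the proposed method with the Scarlett, Kim, and Weinberg models, all listed in the References. For reference, their commonly cited forms are $L = K\,(a_{\max} - a_{\min})^{1/4}$ (Weinberg), $L = K\,\big(\tfrac{1}{N}\sum_k |a_k|\big)^{1/3}$ (Kim), and $L = K\,(\bar{a} - a_{\min})/(a_{\max} - a_{\min})$ (Scarlett), each evaluated over the acceleration samples of one step. The sketch below implements them directly; the calibration constant `k` is a per-user parameter, and the values in the usage example are placeholders rather than the constants used in the experiments.

```python
from typing import Sequence


def weinberg(acc: Sequence[float], k: float) -> float:
    """Weinberg (2002): L = k * (a_max - a_min) ** (1/4)."""
    return k * (max(acc) - min(acc)) ** 0.25


def kim(acc: Sequence[float], k: float) -> float:
    """Kim et al. (2004): L = k * (mean |a|) ** (1/3)."""
    return k * (sum(abs(a) for a in acc) / len(acc)) ** (1.0 / 3.0)


def scarlett(acc: Sequence[float], k: float) -> float:
    """Scarlett (2007): L = k * (a_mean - a_min) / (a_max - a_min)."""
    a_max, a_min = max(acc), min(acc)
    a_mean = sum(acc) / len(acc)
    return k * (a_mean - a_min) / (a_max - a_min)


# Acceleration samples (m/s^2) within one step; the k values are placeholders.
step = [9.0, 10.5, 12.0, 10.5, 9.0, 8.0, 9.0]
print(weinberg(step, 0.45), kim(step, 0.28), scarlett(step, 1.2))
```

All three models depend only on within-step acceleration statistics, so they cannot correct a biased step on their own; that is the gap the proposed dynamic method targets by drawing on the previous steps.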

| Distance | Trial | Weinberg Method: Distance Estimation (m) | Weinberg Method: Absolute Error (m) | Proposed Method: Distance Estimation (m) | Proposed Method: Absolute Error (m) |
|---|---|---|---|---|---|
| 15 m | 1 | 14.1103 | 0.8897 | 15.1936 | 0.1936 |
| 15 m | 2 | 13.9190 | 1.0810 | 14.7965 | 0.2035 |
| 15 m | 3 | 13.8630 | 1.1370 | 15.0210 | 0.0210 |
| 24 m | 1 | 22.1598 | 1.8402 | 23.9690 | 0.0310 |
| 24 m | 2 | 22.4331 | 1.5669 | 24.2047 | 0.2047 |
| 24 m | 3 | 22.3525 | 1.6475 | 23.8515 | 0.1485 |
| 33 m | 1 | 30.8524 | 2.1476 | 33.0497 | 0.0497 |
| 33 m | 2 | 30.5610 | 2.4390 | 33.0742 | 0.0742 |
| 33 m | 3 | 30.8507 | 2.1493 | 33.4002 | 0.4002 |
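Each absolute error in the distance table above is |estimated distance − true distance|. Averaging over the three trials gives one summary figure per path length; the sketch below does this arithmetic using only the estimates copied from the table. The dictionary and function names are illustrative.

```python
from typing import Dict, List

# Distance estimates (m) copied from the table: true distance -> three trials.
WEINBERG: Dict[int, List[float]] = {15: [14.1103, 13.9190, 13.8630],
                                    24: [22.1598, 22.4331, 22.3525],
                                    33: [30.8524, 30.5610, 30.8507]}
PROPOSED: Dict[int, List[float]] = {15: [15.1936, 14.7965, 15.0210],
                                    24: [23.9690, 24.2047, 23.8515],
                                    33: [33.0497, 33.0742, 33.4002]}


def mean_abs_error(estimates: Dict[int, List[float]]) -> Dict[int, float]:
    """Mean of |estimate - true distance| for each true distance."""
    return {d: sum(abs(e - d) for e in es) / len(es) for d, es in estimates.items()}


w_err, p_err = mean_abs_error(WEINBERG), mean_abs_error(PROPOSED)
for d in sorted(w_err):
    print(f"{d} m: Weinberg {w_err[d]:.4f} m, proposed {p_err[d]:.4f} m")
```

The Weinberg error grows roughly in proportion to the path length (about 1.04 m at 15 m, 1.68 m at 24 m, and 2.25 m at 33 m), which is consistent with a systematic underestimate of each step, whereas the proposed method's mean error stays below 0.18 m at all three lengths.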

| Method | 90th Percentile (Volunteer #1) | 90th Percentile (Volunteer #2) |
|---|---|---|
| CHAN (VivoY85a) | 0.7405 | 1.0800 |
| CHAN (Honor60) | 0.4742 | 0.6856 |
| PDR (VivoY85a) | 2.2100 | 1.9215 |
| PDR (Honor60) | 1.6223 | 1.5540 |
| Improved PDR (VivoY85a) | 0.1556 | 0.5968 |
| Improved PDR (Honor60) | 0.8085 | 0.7678 |
| Proposed method (VivoY85a) | 0.1337 | 0.1597 |
| Proposed method (Honor60) | 0.2852 | 0.2956 |

| Method | 90th Percentile (Volunteer #1) | 90th Percentile (Volunteer #2) |
|---|---|---|
| CHAN (VivoY85a) | 0.1745 | 0.3967 |
| CHAN (Honor60) | 0.2443 | 0.4873 |
| PDR (VivoY85a) | 2.0231 | 3.8940 |
| PDR (Honor60) | 3.5036 | 2.0372 |
| Improved PDR (VivoY85a) | 0.2014 | 0.4758 |
| Improved PDR (Honor60) | 0.8283 | 0.4026 |
| Proposed method (VivoY85a) | 0.0861 | 0.1387 |
| Proposed method (Honor60) | 0.1305 | 0.2571 |
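The 90th-percentile columns report the position error below which about 90% of the errors along a trajectory fall. The text does not state which percentile definition was used, so the sketch below assumes linear interpolation between order statistics (the same rule as NumPy's default `linear` method) and computes each error as the Euclidean distance to ground truth. The function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def position_errors(est: Sequence[Point], truth: Sequence[Point]) -> List[float]:
    """Euclidean error of each estimated point against its ground-truth point."""
    return [math.dist(e, t) for e, t in zip(est, truth)]


def percentile(errors: Sequence[float], q: float) -> float:
    """q-th percentile (0-100) with linear interpolation between order statistics."""
    xs = sorted(errors)
    pos = (len(xs) - 1) * q / 100.0   # fractional rank into the sorted list
    lo = math.floor(pos)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (pos - lo) * (xs[hi] - xs[lo])


errs = position_errors([(0.1, 0.0), (1.0, 1.2), (2.3, 2.0)],
                       [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)])
print(percentile(errs, 90))
```

Unlike the mean, the 90th percentile ignores the worst 10% of points, so it characterizes typical worst-case accuracy without being dominated by a handful of outliers.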

| Volunteer | Method | Mean Error | RMS Error |
|---|---|---|---|
| #1 | CHAN (VivoY85a) | 0.4563 | 1.8913 |
| #1 | CHAN (Honor60) | 0.4050 | 1.8565 |
| #1 | PDR (VivoY85a) | 1.0417 | 1.2482 |
| #1 | PDR (Honor60) | 0.9708 | 1.1240 |
| #1 | Improved PDR (VivoY85a) | 0.0921 | 0.1083 |
| #1 | Improved PDR (Honor60) | 0.3755 | 0.4822 |
| #1 | Proposed method (VivoY85a) | 0.0432 | 0.0632 |
| #1 | Proposed method (Honor60) | 0.0904 | 0.1574 |
| #2 | CHAN (VivoY85a) | 0.4780 | 1.3898 |
| #2 | CHAN (Honor60) | 0.4195 | 1.5492 |
| #2 | PDR (VivoY85a) | 1.2213 | 1.3730 |
| #2 | PDR (Honor60) | 0.6565 | 0.8382 |
| #2 | Improved PDR (VivoY85a) | 0.2681 | 0.3416 |
| #2 | Improved PDR (Honor60) | 0.4304 | 0.5097 |
| #2 | Proposed method (VivoY85a) | 0.0670 | 0.1112 |
| #2 | Proposed method (Honor60) | 0.1054 | 0.1956 |

| Volunteer | Method | Mean Error | RMS Error |
|---|---|---|---|
| #1 | CHAN (VivoY85a) | 0.2400 | 1.4719 |
| #1 | CHAN (Honor60) | 0.2586 | 1.4613 |
| #1 | PDR (VivoY85a) | 1.2592 | 1.3911 |
| #1 | PDR (Honor60) | 1.4259 | 1.9098 |
| #1 | Improved PDR (VivoY85a) | 0.0937 | 0.1322 |
| #1 | Improved PDR (Honor60) | 0.3030 | 0.4273 |
| #1 | Proposed method (VivoY85a) | 0.0390 | 0.0580 |
| #1 | Proposed method (Honor60) | 0.0643 | 0.1406 |
| #2 | CHAN (VivoY85a) | 0.2391 | 1.2526 |
| #2 | CHAN (Honor60) | 0.2543 | 1.2768 |
| #2 | PDR (VivoY85a) | 1.8299 | 2.1867 |
| #2 | PDR (Honor60) | 0.9014 | 1.1565 |
| #2 | Improved PDR (VivoY85a) | 0.1942 | 0.3075 |
| #2 | Improved PDR (Honor60) | 0.2479 | 0.3193 |
| #2 | Proposed method (VivoY85a) | 0.0610 | 0.1227 |
| #2 | Proposed method (Honor60) | 0.0615 | 0.1176 |
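The mean and RMS columns summarize the same per-point errors in two ways. Assuming that "Mean Error" is the mean of the absolute position errors, the RMS error can never be smaller than the mean for the same set of errors, and a large gap between them, as in the CHAN rows, is consistent with a few large outliers. The minimal sketch below computes both statistics; the function name and the sample errors are illustrative.

```python
import math
from typing import Sequence, Tuple


def mean_and_rms(errors: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, root-mean-square) of a list of non-negative position errors."""
    n = len(errors)
    mean = sum(errors) / n
    rms = math.sqrt(sum(e * e for e in errors) / n)
    return mean, rms


# Nine small errors and one 3 m outlier: the RMS reacts far more than the mean.
errors = [0.1] * 9 + [3.0]
print(mean_and_rms(errors))
```

Here the mean is 0.39 m while the RMS is about 0.95 m, so reporting both, as the tables do, separates consistently moderate error from occasional large failures.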

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yan, S.; Wu, C.; Luo, X.; Ji, Y.; Xiao, J. Multi-Information Fusion Indoor Localization Using Smartphones. Appl. Sci. 2023, 13, 3270. https://doi.org/10.3390/app13053270
