A Review of Recent Advances and Challenges in Grocery Label Detection and Recognition

Abstract

1. Introduction

2. Technological Review

2.1. Barcode-Based System

2.2. Label-Based System

3. Image Pre-Processing and Selection

4. Related Datasets

4.1. Datasets for Grocery Product Detection and Recognition

4.2. Scene Text Recognition Datasets

| Dataset | #Images | #Classes | Objective | Labels | Language | Resolution |
|---|---|---|---|---|---|---|
| Products-6K [8] | 12,917 R / 373 Q | 6348 R / 104 Q | Classify products on the shelf or in hand using a matching system. | SKU class, image class, textual information | Greek | 800 × 800 R / 3024 × 4032 Q |
| GroZi-120 [47] | 676 R / 4973 Q | 120 | Detect and classify products on the shelf using a matching system. | Image class, bounding box | Swiss | 183 × 162 |
| GroZi-3.2k [45] | 3235 R / 680 Q | 27 | Detect and classify products on the shelf using a matching system. | Object superclass, bounding box | Swiss | 421 × 500 R / 3264 × 2448 Q |
| Grocery Store [46] | 1745 | 31 | Classify product subcategories, mainly hand-held, and retrieve detailed product information. | Image subclass, short description and detailed information | Swedish | 348 × 348 |
| Freiburg [48] | 4947 | 25 | Classify product super-categories, mainly on the shelf. Each photo contains one category. | Image class | German | 256 × 256 |
| D2S-Densely Segmented [49] | 4380 R / 19,620 Q | 60 | Designed for automatic checkout or inventory systems. Detect and classify products on a table. | Instance segmentation, bounding box, object superclass, object subclass | German | 1920 × 1440 |
| Unitail-Det [50] | 11,744 + 500 | 1,740,037 + 37,071 | Detect all products displayed in a supermarket. | Quadrilateral bounding boxes | English | 1216 × 1600, 3024 × 4032 |
| Unitail-OCR [50] | 1454 R / 10,000 Q | 1454 | Classify cropped product images in three steps: text detection, text recognition and product matching. | Image class, quadrilateral text location, text transcription | English | 194 × 504 |
| RPC [5] | 53,739 R / 30,000 Q | 200 | Designed for automatic checkout or inventory systems. Detect and classify products on a table. | Bounding box, SKU class, hierarchical classes | Chinese | 1817 × 1817 |
| CAPG Grocery [44] | 18 R / 236 Q | 18 | Detect and classify products on the shelf using a matching system. | Bounding box, SKU class | Chinese | 261 × 600 R / 4032 × 3024 Q |
| RP2K [51] | 384,311 | 2395 | Classify cropped product images captured on the shelf. | SKU class | Chinese | 153 × 251 |
| Store images for Retail [52] | 300 R / 3153 Q | 100 | Grocery product classification | No labels | English | 2272 × 1704 R / 757 × 568 Q |
| Open Food Facts dataset [16] | +2.5 M | +2.5 M | Image classification and extensive product information | SKU class, additional information such as common name, allergens, Nutri-score, Nova score | Multi-lingual | width × 400 |
| SKU-110K [3] | 11,762 | 110,712 | Detect all objects on shelves | Bounding box | Multi-lingual | - |
| WebMarket [53] | 300 | - | Detect all objects on shelves | Bounding box | English | 2272 × 1704 |

5. Product Detection and Recognition

5.1. Product Detection Based on Deep Learning

5.2. Product Recognition Based on Deep Learning

5.3. End-to-End Product Classification Based on Deep Learning

| Method | System | Classification | Dataset | Score | Metric |
|---|---|---|---|---|---|
| Goldman and Goldberger [85] | Sequence of products on the rack | Fine-grained/ultrafine-grained | Private dataset | 0.129 | Mean Error |
| Wang, Y. et al. [87] | Matching | Fine-grained | Private dataset | 0.422 | Avg Accuracy |
| Santra et al. [88] | Matching | Fine-grained | Grocery Products | 0.797 | F1 |
| Santra et al. [88] | Matching | Fine-grained | WebMarket | 0.740 | F1 |
| Santra et al. [88] | Matching | Fine-grained | GroZi-120 | 0.440 | F1 |
| Klasson et al. [46] | Matching | Hierarchical | Grocery Store | 0.804 | Accuracy |
| Ciocca et al. [90] (supervised) | Matching | Hierarchical | Grocery Products | 0.904 | Accuracy |
| Ciocca et al. [90] (unsupervised) | Matching | Hierarchical | Grocery Products | 0.929 | Accuracy |
| Wang, W. et al. [93] (VGG-16) | Matching | Fine-grained | RPC | 0.787 | Accuracy |
| Wang, W. et al. [93] (ResNet-50) | Matching | Fine-grained | RPC | 0.814 | Accuracy |
| Domingo et al. [92] | Matching (Siamese) | Fine-grained | Grocery Store | 0.891 | F1 |
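Most systems in the table above are labelled "Matching". As a rough illustration only, and not the pipeline of any cited work, the sketch below assigns each query embedding the label of its most similar reference embedding and then scores the result with accuracy. The toy 3-D vectors, the product labels and the function names `cosine_similarity`, `match_query` and `accuracy` are all assumptions made for this example; in practice the embeddings would come from a trained network.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def match_query(query, references):
    """Return the label of the reference embedding closest to the query.

    `references` is a list of (label, embedding) pairs.
    """
    best_label, _ = max(
        references, key=lambda item: cosine_similarity(query, item[1])
    )
    return best_label


def accuracy(predicted, expected):
    """Fraction of queries whose predicted label equals the expected one."""
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)


# Hypothetical reference gallery: one embedding per product class.
references = [
    ("cereal", [1.0, 0.0, 0.0]),
    ("milk", [0.0, 1.0, 0.0]),
    ("coffee", [0.0, 0.0, 1.0]),
]

# Hypothetical query embeddings, e.g. from shelf or in-hand photos.
queries = [[0.9, 0.1, 0.0], [0.1, 0.2, 0.9], [0.2, 0.8, 0.1]]

predicted = [match_query(q, references) for q in queries]
print(predicted)  # ['cereal', 'coffee', 'milk']
print(accuracy(predicted, ["cereal", "coffee", "milk"]))  # 1.0
```

Because classification reduces to a nearest-neighbour lookup, a new product can be supported by adding a reference embedding rather than retraining a classifier.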

6. Product Label Analysis

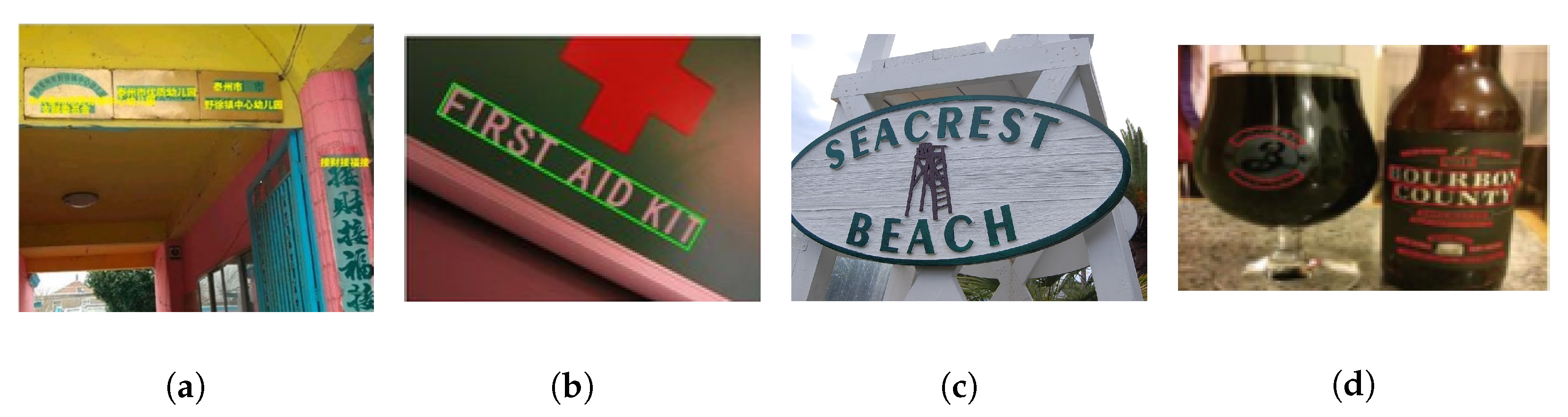

6.1. Text Detection

6.1.1. Regression-Based Methods

6.1.2. Segmentation-Based Methods

| Category | Method | ICDAR2015 Recall | ICDAR2015 Precision | ICDAR2015 F-measure | ICDAR2015 FPS | SCUT-CTW1500 Recall | SCUT-CTW1500 Precision | SCUT-CTW1500 F-measure | SCUT-CTW1500 FPS | Multi-orientation | Curved |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Regression-based | R2CNN [109] | 79.68% | 85.62% | 82.54% | 0.44 | - | - | - | - | √ | × |
| Regression-based | RRPN [110] | 73.23% | 82.17% | 77.44% | - | - | - | - | - | √ | × |
| Regression-based | RRD [111] | 79.00% | 85.60% | 82.20% | 6.5 | - | - | - | - | √ | × |
| Regression-based | RRD+MS [111] | 80.00% | 88.00% | 83.80% | - | - | - | - | - | √ | × |
| Regression-based | EAST+PVANET2x [113] | 73.47% | 83.57% | 78.20% | 13.2 | - | - | - | - | √ | × |
| Regression-based | TextBoxes++ [112] | 76.70% | 87.20% | 81.70% | 11.6 | - | - | - | - | √ | × |
| Regression-based | TextBoxes++_MS [112] | 78.50% | 87.80% | 82.90% | 2.3 | - | - | - | - | √ | × |
| Regression-based | MOST [118] | 87.30% | 89.10% | 88.20% | 10 | - | - | - | - | √ | × |
| Regression-based | SegLink [105] | 76.80% | 73.10% | 75.00% | - | - | - | - | - | √ | × |
| Regression-based | SegLink++ [114] | 80.30% | 83.70% | 82.00% | 7.1 | 79.80% | 82.80% | 81.30% | - | √ | √ |
| Regression-based | ContourNet [115] | 86.10% | 87.60% | 86.90% | 3.5 | 84.10% | 83.70% | 83.90% | 4.5 | √ | √ |
| Regression-based | Zhang et al. [116] | 84.69% | 88.53% | 86.56% | - | 83.02% | 85.93% | 84.45% | - | √ | √ |
| Regression-based | FCENet [117] | 84.20% | 85.10% | 84.60% | - | 80.70% | 85.70% | 83.10% | - | √ | √ |
| Regression-based | FCENet+DCN [117] | 82.60% | 90.10% | 86.20% | - | 83.40% | 87.60% | 85.50% | - | √ | √ |
| Segmentation-based | TextSnake [119] | 80.40% | 84.90% | 82.60% | 1.1 | 63.40% | 65.40% | 64.40% | - | √ | √ |
| Segmentation-based | LOMO [120] | 83.50% | 91.30% | 87.20% | - | 69.60% | 89.20% | 78.40% | - | √ | √ |
| Segmentation-based | LOMO+MS [120] | 87.60% | 87.80% | 87.70% | - | 76.50% | 85.70% | 80.80% | - | √ | √ |
| Segmentation-based | PSENet-1s [121] | 84.50% | 86.90% | 85.70% | 1.6 | 79.70% | 84.80% | 82.20% | 8.4 | √ | √ |
| Segmentation-based | CRAFT [122] | 84.30% | 89.80% | 86.90% | - | 81.10% | 86.00% | 83.50% | - | √ | √ |
| Segmentation-based | PAN [123] | 81.90% | 84.00% | 82.90% | 26.1 | 81.50% | 85.50% | 83.50% | 58.1 | √ | √ |
| Segmentation-based | DBNet [124] | 83.20% | 91.80% | 87.30% | 12 | 80.20% | 86.90% | 83.40% | 22 | √ | √ |
| Segmentation-based | DBNet++ [125] | 83.90% | 90.90% | 87.30% | 10 | 82.80% | 87.90% | 85.30% | 21 | √ | √ |
| Segmentation-based | MOSTL [126] | 84.56% | 92.50% | 88.35% | 5 | - | - | - | - | √ | √ |
| Segmentation-based | RSCA [127] | 82.70% | 87.20% | 84.90% | 23.3 | 83.30% | 86.60% | 85.00% | 30.4 | √ | √ |
| Segmentation-based | Wang et al. [128] | - | - | - | - | 80.52% | 86.91% | 83.59% | 25.1 | √ | √ |
| Segmentation-based | SAST [129] | 87.09% | 86.72% | 86.91% | - | 77.05% | 85.31% | 80.97% | 27.63 | √ | √ |
| Segmentation-based | SAST+MS [129] | 87.34% | 87.55% | 87.44% | - | 81.71% | 81.19% | 81.45% | - | √ | √ |
| Segmentation-based | Long et al. [130] | - | - | - | - | 87.44% | 84.56% | 85.97% | - | √ | √ |
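The F-measure column in the table above is the harmonic mean of recall and precision, F = 2PR / (P + R). The short sketch below recomputes it for three ICDAR2015 rows as a sanity check. The helper name `f_measure` and the choice of rows are assumptions for illustration; the recall, precision and reported values are copied from the table.

```python
def f_measure(recall, precision):
    """Harmonic mean of recall and precision (same unit in, same unit out)."""
    if recall + precision == 0:
        return 0.0
    return 2 * recall * precision / (recall + precision)


# (recall %, precision %, reported F-measure %) on ICDAR2015, from the table.
rows = {
    "EAST+PVANET2x [113]": (73.47, 83.57, 78.20),
    "RRPN [110]": (73.23, 82.17, 77.44),
    "DBNet [124]": (83.20, 91.80, 87.30),
}

for name, (recall, precision, reported) in rows.items():
    computed = f_measure(recall, precision)
    print(f"{name}: computed {computed:.2f}, reported {reported:.2f}")
```

Small differences in the last digit are expected, since the published recall and precision values are themselves rounded.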

6.2. Text Recognition

6.3. Text Spotting

7. Challenges and Opportunities

- Poor image quality, such as blurring and perspective distortion, can be mitigated with image preprocessing techniques.

- The lack of datasets can be overcome by creating synthetic datasets, applying data augmentation, or adopting one-shot or unsupervised learning.

- Domain shift between training and test data requires strategies such as GANs, data augmentation, and transfer learning.

- New classes are added regularly; incremental learning or one-shot learning avoids retraining the model from scratch.
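Data augmentation appears in two of the points above. As a minimal sketch under stated assumptions, the code below generates variants of a small grayscale image with random brightness and contrast jitter followed by a random crop. The function names, the jitter ranges and the synthetic 8 × 8 image are illustrative choices, not taken from any cited work. Horizontal flips are deliberately left out because they would mirror the label text.

```python
import random


def adjust_brightness_contrast(image, brightness, contrast):
    """Apply pixel' = contrast * pixel + brightness, clipped to [0, 255]."""
    return [
        [min(255, max(0, round(contrast * px + brightness))) for px in row]
        for row in image
    ]


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a random position inside the image."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def augment(image, rng):
    """Return one randomly jittered and cropped copy of the image."""
    brightness = rng.uniform(-30, 30)   # assumed jitter range
    contrast = rng.uniform(0.8, 1.2)    # assumed jitter range
    jittered = adjust_brightness_contrast(image, brightness, contrast)
    return random_crop(jittered, len(image) - 2, len(image[0]) - 2, rng)


rng = random.Random(0)  # fixed seed so runs are repeatable

# Synthetic 8 x 8 grayscale image standing in for a product photo.
image = [[(r * 8 + c) * 4 % 256 for c in range(8)] for r in range(8)]

variants = [augment(image, rng) for _ in range(3)]
print(len(variants), len(variants[0]), len(variants[0][0]))  # 3 6 6
```

Real pipelines usually add geometric transforms such as small rotations or perspective warps, which relate more directly to the distortions listed above.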

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Wei, Y.; Tran, S.N.; Xu, S.; Kang, B.H.; Springer, M. Deep Learning for Retail Product Recognition: Challenges and Techniques. Comput. Intell. Neurosci. 2020, 2020, 8875910.

- Franco, A.; Maltoni, D.; Papi, S. Grocery product detection and recognition. Expert Syst. Appl. 2017, 81, 163–176.

- Goldman, E.; Herzig, R.; Eisenschtat, A.; Goldberger, J.; Hassner, T. Precise detection in densely packed scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 5227–5236.

- Tonioni, A.; Serra, E.; Di Stefano, L. A deep learning pipeline for product recognition on store shelves. In Proceedings of the 2018 IEEE International Conference on Image Processing, Applications and Systems (IPAS), Sophia Antipolis, France, 12–14 December 2018; pp. 25–31.

- Wei, X.S.; Cui, Q.; Yang, L.; Wang, P.; Liu, L. RPC: A Large-Scale Retail Product Checkout Dataset. arXiv 2019, arXiv:1901.07249.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Oucheikh, R.; Pettersson, T.; Löfström, T. Product verification using OCR classification and Mondrian conformal prediction. Expert Syst. Appl. 2022, 188, 115942.

- Georgiadis, K.; Kordopatis-Zilos, G.; Kalaganis, F.; Migkotzidis, P.; Chatzilari, E.; Panakidou, V.; Pantouvakis, K.; Tortopidis, S.; Papadopoulos, S.; Nikolopoulos, S.; et al. Products-6K: A Large-Scale Groceries Product Recognition Dataset. In Proceedings of the 14th PErvasive Technologies Related to Assistive Environments Conference, Corfu, Greece, 29 June–2 July 2021; pp. 1–7.

- Klasson, M.; Zhang, C.; Kjellström, H. Using variational multi-view learning for classification of grocery items. Patterns 2020, 1, 100143.

- Wang, J.; Min, W.; Hou, S.; Ma, S.; Zheng, Y.; Jiang, S. LogoDet-3K: A Large-scale Image Dataset for Logo Detection. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2020, 18, 1–19.

- Melek, C.G.; Sonmez, E.B.; Albayrak, S. A survey of product recognition in shelf images. In Proceedings of the 2017 International Conference on Computer Science and Engineering (UBMK), Antalya, Turkey, 5–7 October 2017; pp. 145–150.

- Santra, B.; Mukherjee, D.P. A comprehensive survey on computer vision based approaches for automatic identification of products in retail store. Image Vis. Comput. 2019, 86, 45–63.

- Kulyukin, V.; Gharpure, C.; Nicholson, J. RoboCart: Toward robot-assisted navigation of grocery stores by the visually impaired. In Proceedings of the 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems, Edmonton, AB, Canada, 2–6 August 2005; pp. 2845–2850.

- Seeing-AI. Available online: https://www.microsoft.com/en-us/ai/seeing-ai (accessed on 30 December 2022).

- Yuka. Available online: https://yuka.io/en/ (accessed on 30 December 2022).

- Open Food Facts. Available online: https://github.com/openfoodfacts (accessed on 30 December 2022).

- Lookout—Assisted Vision. Available online: https://play.google.com/store/apps/details?id=com.google.android.apps.accessibility.reveal&hl=en_GB&gl=US (accessed on 30 December 2022).

- Identify Products with Your Echo Show. Available online: https://www.amazon.com/gp/help/customer/display.html?nodeId=G5723QKAVR8Z9S26 (accessed on 30 December 2022).

- OrCam MyEye. Available online: https://www.orcam.com/en/ (accessed on 30 December 2022).

- Wine-Searcher. Available online: https://www.wine-searcher.com/wine-searcher (accessed on 30 December 2022).

- Amazon Go. Available online: https://www.amazon.com/ref=footer_us (accessed on 30 December 2022).

- Varga, L.A.; Koch, S.; Zell, A. Comprehensive Analysis of the Object Detection Pipeline on UAVs. Remote Sens. 2022, 14, 5508.

- Minh, T.N.; Sinn, M.; Lam, H.T.; Wistuba, M. Automated Image Data Preprocessing with Deep Reinforcement Learning. arXiv 2018, arXiv:1806.05886.

- Chen, C.; Chen, Q.; Xu, J.; Koltun, V. Learning to See in the Dark. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3291–3300.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Dudhane, A.; Zamir, S.W.; Khan, S.; Khan, F.S.; Yang, M.H. Burst Image Restoration and Enhancement. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–20 June 2022; pp. 5749–5758.

- Bhat, G.; Danelljan, M.; Van Gool, L.; Timofte, R. Deep Burst Super-Resolution. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 9205–9214.

- Bhat, G.; Danelljan, M.; Yu, F.; Van Gool, L.; Timofte, R. Deep Reparametrization of Multi-Frame Super-Resolution and Denoising. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 2440–2450.

- Wronski, B.; Garcia-Dorado, I.; Ernst, M.; Kelly, D.; Krainin, M.; Liang, C.K.; Levoy, M.; Milanfar, P. Handheld Multi-Frame Super-Resolution. ACM Trans. Graph. 2019, 38, 1–18.

- Godard, C.; Matzen, K.; Uyttendaele, M. Deep Burst Denoising. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 560–577.

- Lecouat, B.; Eboli, T.; Ponce, J.; Mairal, J. High Dynamic Range and Super-Resolution from Raw Image Bursts. ACM Trans. Graph. 2022, 41, 1–21.

- Luo, Z.; Li, Y.; Cheng, S.; Yu, L.; Wu, Q.; Wen, Z.; Fan, H.; Sun, J.; Liu, S. BSRT: Improving Burst Super-Resolution with Swin Transformer and Flow-Guided Deformable Alignment. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 19–20 June 2022; IEEE Computer Society: Los Alamitos, CA, USA, 2022; pp. 997–1007.

- Deudon, M.; Kalaitzis, A.; Goytom, I.; Arefin, M.R.; Lin, Z.; Sankaran, K.; Michalski, V.; Kahou, S.E.; Cornebise, J.; Bengio, Y. HighRes-net: Recursive Fusion for Multi-Frame Super-Resolution of Satellite Imagery. arXiv 2020, arXiv:2002.06460.

- Mehta, N.; Dudhane, A.; Murala, S.; Zamir, S.W.; Khan, S.; Khan, F.S. Adaptive Feature Consolidation Network for Burst Super-Resolution. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 19–20 June 2022; pp. 1278–1285.

- An, T.; Zhang, X.; Huo, C.; Xue, B.; Wang, L.; Pan, C. TR-MISR: Multiimage Super-Resolution Based on Feature Fusion With Transformers. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1373–1388.

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H.; Shao, L. Learning Enriched Features for Real Image Restoration and Enhancement. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 492–511.

- Nguyen, T.P.H.; Cai, Z.; Nguyen, K.; Keth, S.; Shen, N.; Park, M. Pre-processing Images using Brightening, CLAHE and RETINEX. arXiv 2020, arXiv:2003.10822.

- Mehrnejad, M.; Albu, A.B.; Capson, D.; Hoeberechts, M. Towards Robust Identification of Slow Moving Animals in Deep-Sea Imagery by Integrating Shape and Appearance Cues. In Proceedings of the 2014 ICPR Workshop on Computer Vision for Analysis of Underwater Imagery, Stockholm, Sweden, 24 August 2014; pp. 25–32.

- Reza, A.M. Realization of the Contrast Limited Adaptive Histogram Equalization (CLAHE) for Real-Time Image Enhancement. J. VLSI Signal Process. Syst. Signal, Image Video Technol. 2004, 38, 35–44.

- Parthasarathy, S.; Sankaran, P. An automated multi Scale Retinex with Color Restoration for image enhancement. In Proceedings of the 2012 National Conference on Communications (NCC), Kharagpur, India, 3–5 February 2012; pp. 1–5.

- Jiang, Y.; Gong, X.; Liu, D.; Cheng, Y.; Fang, C.; Shen, X.; Yang, J.; Zhou, P.; Wang, Z. EnlightenGAN: Deep Light Enhancement Without Paired Supervision. IEEE Trans. Image Process. 2021, 30, 2340–2349.

- Loh, Y.P.; Chan, C.S. Getting to know low-light images with the Exclusively Dark dataset. Comput. Vis. Image Underst. 2019, 178, 30–42.

- Koshy, A.; MJ, N.B.; Shyna, A.; John, A. Preprocessing Techniques for High Quality Text Extraction from Text Images. In Proceedings of the 2019 1st International Conference on Innovations in Information and Communication Technology (ICIICT), Chennai, India, 25–26 April 2019; pp. 1–4.

- Geng, W.; Han, F.; Lin, J.; Zhu, L.; Bai, J.; Wang, S.; He, L.; Xiao, Q.; Lai, Z. Fine-Grained Grocery Product Recognition by One-Shot Learning. In Proceedings of the 26th ACM International Conference on Multimedia, MM ’18, Seoul, Republic of Korea, 22–26 October 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1706–1714.

- George, M.; Floerkemeier, C. Recognizing products: A per-exemplar multi-label image classification approach. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 440–455.

- Klasson, M.; Zhang, C.; Kjellström, H. A Hierarchical Grocery Store Image Dataset with Visual and Semantic Labels. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019.

- Merler, M.; Galleguillos, C.; Belongie, S. Recognizing Groceries in situ Using in vitro Training Data. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Jund, P.; Abdo, N.; Eitel, A.; Burgard, W. The Freiburg Groceries Dataset. arXiv 2016, arXiv:1611.05799.

- Follmann, P.; Böttger, T.; Härtinger, P.; König, R.; Ulrich, M. MVTec D2S: Densely Segmented Supermarket Dataset. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 581–597.

- Chen, F.; Zhang, H.; Li, Z.; Dou, J.; Mo, S.; Chen, H.W.; Zhang, Y.; Ahmed, U.; Zhu, C.; Savvides, M. Unitail: Detecting, Reading, and Matching in Retail Scene. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–24 October 2022.

- Peng, J.; Xiao, C.; Wei, X.; Li, Y. RP2K: A Large-Scale Retail Product Dataset for Fine-Grained Image Classification. arXiv 2020, arXiv:2006.12634.

- India, A. Store Shelf Images and Product Images for Retail. 2022. Available online: https://www.kaggle.com/datasets/amanindiamuz/store-shelf-images-and-product-images-for-retial?select=url (accessed on 30 December 2022).

- WebMarket. Available online: https://www.kaggle.com/datasets/manikchitralwar/webmarket-dataset (accessed on 30 December 2022).

- Wang, J.; Min, W.; Hou, S.; Ma, S.; Zheng, Y.; Wang, H.; Jiang, S. Logo-2K+: A Large-Scale Logo Dataset for Scalable Logo Classification. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

- Hou, Q.; Min, W.; Wang, J.; Hou, S.; Zheng, Y.; Jiang, S. FoodLogoDet-1500: A Dataset for Large-Scale Food Logo Detection via Multi-Scale Feature Decoupling Network. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021.

- Su, H.; Gong, S.; Zhu, X. WebLogo-2M: Scalable logo detection by deep learning from the web. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 270–279.

- Jaderberg, M.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Synthetic Data and Artificial Neural Networks for Natural Scene Text Recognition. In Proceedings of the Workshop on Deep Learning, NIPS, Montréal, QC, Canada, 12–13 December 2014.

- Gupta, A.; Vedaldi, A.; Zisserman, A. Synthetic data for text localisation in natural images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2315–2324.

- Sun, Y.; Ni, Z.; Chng, C.K.; Liu, Y.; Luo, C.; Ng, C.C.; Han, J.; Ding, E.; Liu, J.; Karatzas, D.; et al. ICDAR 2019 Competition on Large-Scale Street View Text with Partial Labeling—RRC-LSVT. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 1557–1562.

- Yao, C.; Bai, X.; Liu, W.; Ma, Y.; Tu, Z. Detecting texts of arbitrary orientations in natural images. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1083–1090.

- Risnumawan, A.; Shivakumara, P.; Chan, C.S.; Tan, C.L. A robust arbitrary text detection system for natural scene images. Expert Syst. Appl. 2014, 41, 8027–8048.

- Liu, Y.; Jin, L.; Zhang, S.; Luo, C.; Zhang, S. Curved scene text detection via transverse and longitudinal sequence connection. Pattern Recognit. 2019, 90, 337–345.

- Mishra, A.; Karteek, A.; Jawahar, C.V. Scene Text Recognition using Higher Order Language Priors. In Proceedings of the BMVC, Surrey, UK, 3–7 September 2012.

- Wang, K.; Babenko, B.; Belongie, S.J. End-to-end scene text recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1457–1464.

- Lucas, S.M.M.; Panaretos, A.; Sosa, L.; Tang, A.; Wong, S.; Young, R. ICDAR 2003 robust reading competitions. In Proceedings of the Seventh International Conference on Document Analysis and Recognition, Edinburgh, UK, 6 August 2003; pp. 682–687.

- Karatzas, D.; Shafait, F.; Uchida, S.; Iwamura, M.; Bigorda, L.G.i.; Mestre, S.R.; Mas, J.; Mota, D.F.; Almazàn, J.A.; de las Heras, L.P. ICDAR 2013 Robust Reading Competition. In Proceedings of the 2013 12th International Conference on Document Analysis and Recognition, Washington, DC, USA, 25–28 August 2013; pp. 1484–1493.

- de Campos, T.E.; Babu, B.R.; Varma, M. Character recognition in natural images. In Proceedings of the International Conference on Computer Vision Theory and Applications, Lisbon, Portugal, 5–8 February 2009.

- Karatzas, D.; Gomez-Bigorda, L.; Nicolaou, A.; Ghosh, S.; Bagdanov, A.; Iwamura, M.; Matas, J.; Neumann, L.; Chandrasekhar, V.R.; Lu, S.; et al. ICDAR 2015 competition on Robust Reading. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Tunis, Tunisia, 23–26 August 2015; pp. 1156–1160.

- Phan, T.Q.; Shivakumara, P.; Tian, S.; Tan, C.L. Recognizing Text with Perspective Distortion in Natural Scenes. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 569–576.

- Veit, A.; Matera, T.; Neumann, L.; Matas, J.; Belongie, S.J. COCO-Text: Dataset and Benchmark for Text Detection and Recognition in Natural Images. arXiv 2016, arXiv:1601.07140.

- Chng, C.K.; Chan, C.S. Total-Text: A Comprehensive Dataset for Scene Text Detection and Recognition. In Proceedings of the 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 935–942.

- Nguyen, N.; Nguyen, T.; Tran, V.; Tran, M.T.; Ngo, T.D.; Nguyen, T.H.; Hoai, M. Dictionary-Guided Scene Text Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 7383–7392.

- Nayef, N.; Patel, Y.; Busta, M.; Chowdhury, P.N.; Karatzas, D.; Khlif, W.; Matas, J.; Pal, U.; Burie, J.C.; Liu, C.L.; et al. ICDAR2019 Robust Reading Challenge on Multi-lingual Scene Text Detection and Recognition—RRC-MLT-2019. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, NSW, Australia, 20–25 September 2019; pp. 1582–1587.

- Chng, C.K.; Liu, Y.; Sun, Y.; Ng, C.C.; Luo, C.; Ni, Z.; Fang, C.; Zhang, S.; Han, J.; Ding, E.; et al. ICDAR2019 Robust Reading Challenge on Arbitrary-Shaped Text (RRC-ArT). arXiv 2019, arXiv:1909.07145.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Baz, I.; Yoruk, E.; Cetin, M. Context-aware hybrid classification system for fine-grained retail product recognition. In Proceedings of the 2016 IEEE 12th Image, Video, and Multidimensional Signal Processing Workshop (IVMSP), Bordeaux, France, 11–12 July 2016; pp. 1–5.

- Yörük, E.; Öner, K.T.; Akgül, C.B. An efficient Hough transform for multi-instance object recognition and pose estimation. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 1352–1357.

- Santra, B.; Shaw, A.K.; Mukherjee, D.P. An end-to-end annotation-free machine vision system for detection of products on the rack. Mach. Vis. Appl. 2021, 32, 56.

- Zhang, J.; Marszałek, M.; Lazebnik, S.; Schmid, C. Local features and kernels for classification of texture and object categories: A comprehensive study. Int. J. Comput. Vis. 2007, 73, 213–238.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Qiao, S.; Shen, W.; Qiu, W.; Liu, C.; Yuille, A. ScaleNet: Guiding object proposal generation in supermarkets and beyond. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1791–1800.

- Santra, B.; Shaw, A.K.; Mukherjee, D.P. Graph-based non-maximal suppression for detecting products on the rack. Pattern Recognit. Lett. 2020, 140, 73–80.

- Huang, L.; Yang, Y.; Deng, Y.; Yu, Y. DenseBox: Unifying Landmark Localization with End to End Object Detection. arXiv 2015, arXiv:1509.04874.

- Osokin, A.; Sumin, D.; Lomakin, V. OS2D: One-Stage One-Shot Object Detection by Matching Anchor Features. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020.

- Goldman, E.; Goldberger, J. CRF with deep class embedding for large scale classification. Comput. Vis. Image Underst. 2020, 191, 102865.

- Goldman, E.; Goldberger, J. Large-Scale Classification of Structured Objects using a CRF with Deep Class Embedding. arXiv 2017, arXiv:1705.07420.

- Wang, Y.; Song, R.; Wei, X.S.; Zhang, L. An adversarial domain adaptation network for cross-domain fine-grained recognition. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 1228–1236.

- Santra, B.; Shaw, A.K.; Mukherjee, D.P. Part-based annotation-free fine-grained classification of images of retail products. Pattern Recognit. 2022, 121, 108257.

- Wang, W.; Lee, H.; Livescu, K. Deep Variational Canonical Correlation Analysis. arXiv 2016, arXiv:1610.03454.

- Ciocca, G.; Napoletano, P.; Locatelli, S.G. Multi-task learning for supervised and unsupervised classification of grocery images. In Proceedings of the International Conference on Pattern Recognition, Milan, Italy, 10–15 January 2021; pp. 325–338.

- Dueck, D.; Frey, B.J. Non-metric affinity propagation for unsupervised image categorization. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

- Duque Domingo, J.; Medina Aparicio, R.; González Rodrigo, L.M. Improvement of One-Shot-Learning by Integrating a Convolutional Neural Network and an Image Descriptor into a Siamese Neural Network. Appl. Sci. 2021, 11, 839.

- Wang, W.; Cui, Y.; Li, G.; Jiang, C.; Deng, S. A self-attention-based destruction and construction learning fine-grained image classification method for retail product recognition. Neural Comput. Appl. 2020, 32, 14613–14622.

- Karlinsky, L.; Shtok, J.; Tzur, Y.; Tzadok, A. Fine-grained recognition of thousands of object categories with single-example training. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4113–4122.

- Gothai, E.; Bhatia, S.; Alabdali, A.; Sharma, D.; Raj, B.; Dadheech, P. Design Features of Grocery Product Recognition Using Deep Learning. Intell. Autom. Soft Comput. 2022, 34, 1231–1246.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Tonioni, A.; Di Stefano, L. Domain invariant hierarchical embedding for grocery products recognition. Comput. Vis. Image Underst. 2019, 182, 81–92.

- Sinha, A.; Banerjee, S.; Chattopadhyay, P. An Improved Deep Learning Approach For Product Recognition on Racks in Retail Stores. arXiv 2022, arXiv:2202.13081.

- George, M.; Mircic, D.; Soros, G.; Floerkemeier, C.; Mattern, F. Fine-grained product class recognition for assisted shopping. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015; pp. 154–162.

- Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

- Pettersson, T.; Oucheikh, R.; Lofstrom, T. NLP Cross-Domain Recognition of Retail Products. In Proceedings of the 2022 7th International Conference on Machine Learning Technologies (ICMLT), Rome, Italy, 11–13 March 2022; pp. 237–243.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019, arXiv:1810.04805.

- BERT. Available online: https://huggingface.co/docs/transformers/model_doc/bert (accessed on 30 December 2022).

- Georgieva, P.; Zhang, P. Optical character recognition for autonomous stores. In Proceedings of the 2020 IEEE 10th International Conference on Intelligent Systems (IS), Varna, Bulgaria, 28–30 August 2020; pp. 69–75. [Google Scholar]

- Shi, B.; Bai, X.; Belongie, S. Detecting oriented text in natural images by linking segments. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2550–2558. [Google Scholar]

- Selvam, P.; Koilraj, J.A.S. A Deep Learning Framework for Grocery Product Detection and Recognition. Food Anal. Methods 2022, 15, 3498–3522. [Google Scholar] [CrossRef]

- Jocher, G. ultralytics/yolov5: V3.1—Bug Fixes and Performance Improvements. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 20 February 2023). [CrossRef]

- Litman, R.; Anschel, O.; Tsiper, S.; Litman, R.; Mazor, S.; Manmatha, R. SCATTER: Selective context attentional scene text recognizer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11962–11972. [Google Scholar]

- Jiang, Y.; Zhu, X.; Wang, X.; Yang, S.; Li, W.; Wang, H.; Fu, P.; Luo, Z. R2CNN: Rotational Region CNN for Orientation Robust Scene Text Detection. arXiv 2017, arXiv:1706.09579. [Google Scholar]

- Ma, J.; Shao, W.; Ye, H.; Wang, L.; Wang, H.; Zheng, Y.; Xue, X. Arbitrary-oriented scene text detection via rotation proposals. IEEE Trans. Multimed. 2018, 20, 3111–3122. [Google Scholar] [CrossRef]

- Liao, M.; Zhu, Z.; Shi, B.; Xia, G.S.; Bai, X. Rotation-sensitive regression for oriented scene text detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5909–5918. [Google Scholar]

- Liao, M.; Shi, B.; Bai, X. TextBoxes++: A Single-Shot Oriented Scene Text Detector. IEEE Trans. Image Process. 2018, 27, 3676–3690. [Google Scholar] [CrossRef] [PubMed]

- Zhou, X.; Yao, C.; Wen, H.; Wang, Y.; Zhou, S.; He, W.; Liang, J. EAST: An efficient and accurate scene text detector. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5551–5560. [Google Scholar]

- Tang, J.; Yang, Z.; Wang, Y.; Zheng, Q.; Xu, Y.; Bai, X. SegLink++: Detecting Dense and Arbitrary-shaped Scene Text by Instance-aware Component Grouping. Pattern Recognit. 2019, 96, 106954. [Google Scholar] [CrossRef]

- Wang, Y.; Xie, H.; Zha, Z.; Xing, M.; Fu, Z.; Zhang, Y. ContourNet: Taking a Further Step Toward Accurate Arbitrary-Shaped Scene Text Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11750–11759. [Google Scholar]

- Zhang, S.X.; Zhu, X.; Hou, J.B.; Liu, C.; Yang, C.; Wang, H.; Yin, X.C. Deep relational reasoning graph network for arbitrary shape text detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9699–9708. [Google Scholar]

- Zhu, Y.; Chen, J.; Liang, L.; Kuang, Z.; Jin, L.; Zhang, W. Fourier Contour Embedding for Arbitrary-Shaped Text Detection. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 21–25 June 2021; pp. 3122–3130. [Google Scholar]

- He, M.; Liao, M.; Yang, Z.; Zhong, H.; Tang, J.; Cheng, W.; Yao, C.; Wang, Y.; Bai, X. MOST: A multi-oriented scene text detector with localization refinement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8813–8822. [Google Scholar]

- Long, S.; Ruan, J.; Zhang, W.; He, X.; Wu, W.; Yao, C. TextSnake: A flexible representation for detecting text of arbitrary shapes. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 20–36. [Google Scholar]

- Zhang, C.; Liang, B.; Huang, Z.; En, M.; Han, J.; Ding, E.; Ding, X. Look More Than Once: An Accurate Detector for Text of Arbitrary Shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; pp. 10552–10561. [Google Scholar]

- Wang, W.; Xie, E.; Li, X.; Hou, W.; Lu, T.; Yu, G.; Shao, S. Shape robust text detection with progressive scale expansion network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9336–9345. [Google Scholar]

- Baek, Y.; Lee, B.; Han, D.; Yun, S.; Lee, H. Character Region Awareness for Text Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9357–9366. [Google Scholar]

- Wang, W.; Xie, E.; Song, X.; Zang, Y.; Wang, W.; Lu, T.; Yu, G.; Shen, C. Efficient and accurate arbitrary-shaped text detection with pixel aggregation network. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8440–8449. [Google Scholar]

- Liao, M.; Wan, Z.; Yao, C.; Chen, K.; Bai, X. Real-time scene text detection with differentiable binarization. In Proceedings of the AAAI conference on artificial intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11474–11481. [Google Scholar]

- Liao, M.; Zou, Z.; Wan, Z.; Yao, C.; Bai, X. Real-Time Scene Text Detection With Differentiable Binarization and Adaptive Scale Fusion. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 919–931. [Google Scholar] [CrossRef]

- Naiemi, F.; Ghods, V.; Khalesi, H. MOSTL: An Accurate Multi-Oriented Scene Text Localization. Circuits Syst. Signal Process. 2021, 40, 4452–4473. [Google Scholar] [CrossRef]

- Li, J.; Lin, Y.; Liu, R.; Ho, C.M.; Shi, H. RSCA: Real-time Segmentation-based Context-Aware Scene Text Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2349–2358. [Google Scholar]

- Wang, Z.; Silamu, W.; Li, Y.; Xu, M. A Robust Method: Arbitrary Shape Text Detection Combining Semantic and Position Information. Sensors 2022, 22, 9982. [Google Scholar] [CrossRef] [PubMed]

- Wang, P.; Zhang, C.; Qi, F.; Huang, Z.; En, M.; Han, J.; Liu, J.; Ding, E.; Shi, G. A Single-Shot Arbitrarily-Shaped Text Detector based on Context Attended Multi-Task Learning. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019. [Google Scholar]

- Long, S.; Qin, S.; Panteleev, D.; Bissacco, A.; Fujii, Y.; Raptis, M. Towards End-to-End Unified Scene Text Detection and Layout Analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1049–1059. [Google Scholar]

- Shi, B.; Yang, M.; Wang, X.; Lyu, P.; Yao, C.; Bai, X. ASTER: An attentional scene text recognizer with flexible rectification. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 2035–2048. [Google Scholar] [CrossRef]

- Shi, B.; Wang, X.; Lyu, P.; Yao, C.; Bai, X. Robust scene text recognition with automatic rectification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4168–4176. [Google Scholar]

- Yang, M.; Guan, Y.; Liao, M.; He, X.; Bian, K.; Bai, S.; Yao, C.; Bai, X. Symmetry-constrained rectification network for scene text recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9147–9156. [Google Scholar]

- Liao, M.; Zhang, J.; Wan, Z.; Xie, F.; Liang, J.; Lyu, P.; Yao, C.; Bai, X. Scene Text Recognition from Two-Dimensional Perspective. Proc. AAAI Conf. Artif. Intell. 2019, 33, 8714–8721. [Google Scholar] [CrossRef]

- Long, S.; Guan, Y.; Bian, K.; Yao, C. A new perspective for flexible feature gathering in scene text recognition via character anchor pooling. In Proceedings of the 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain (virtual), 4–8 May 2020; pp. 2458–2462. [Google Scholar]

- Li, H.; Wang, P.; Shen, C.; Zhang, G. Show, attend and read: A simple and strong baseline for irregular text recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 28–29 January 2019; Volume 33, pp. 8610–8617. [Google Scholar]

- Wan, Z.; He, M.; Chen, H.; Bai, X.; Yao, C. TextScanner: Reading Characters in Order for Robust Scene Text Recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020. [Google Scholar]

- Yu, D.; Li, X.; Zhang, C.; Liu, T.; Han, J.; Liu, J.; Ding, E. Towards accurate scene text recognition with semantic reasoning networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12113–12122. [Google Scholar]

- Fu, Z.; Xie, H.; Jin, G.; Guo, J. Look Back Again: Dual Parallel Attention Network for Accurate and Robust Scene Text Recognition. In Proceedings of the 2021 International Conference on Multimedia Retrieval (ICMR ’21), Taipei, Taiwan, 21–24 August 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 638–644. [Google Scholar] [CrossRef]

- Zheng, T.; Chen, Z.; Fang, S.; Xie, H.; Jiang, Y.G. CDistNet: Perceiving Multi-Domain Character Distance for Robust Text Recognition. arXiv 2021, arXiv:2111.11011. [Google Scholar]

- Na, B.; Kim, Y.; Park, S. Multi-modal text recognition networks: Interactive enhancements between visual and semantic features. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 446–463. [Google Scholar]

- He, Y.; Chen, C.; Zhang, J.; Liu, J.; He, F.; Wang, C.; Du, B. Visual Semantics Allow for Textual Reasoning Better in Scene Text Recognition. arXiv 2021, arXiv:2112.12916. [Google Scholar] [CrossRef]

- Bautista, D.; Atienza, R. Scene Text Recognition with Permuted Autoregressive Sequence Models. In Proceedings of the Computer Vision—ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Proceedings, Part XXVIII. Springer: Berlin/Heidelberg, Germany, 2022; pp. 178–196. [Google Scholar] [CrossRef]

- Cai, H.; Sun, J.; Xiong, Y. Revisiting Classification Perspective on Scene Text Recognition. arXiv 2021, arXiv:2102.10884. [Google Scholar]

- Liu, X.; Liang, D.; Yan, S.; Chen, D.; Qiao, Y.; Yan, J. FOTS: Fast oriented text spotting with a unified network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5676–5685. [Google Scholar]

- Naiemi, F.; Ghods, V.; Khalesi, H. A novel pipeline framework for multi oriented scene text image detection and recognition. Expert Syst. Appl. 2021, 170, 114549. [Google Scholar] [CrossRef]

- Feng, W.; He, W.; Yin, F.; Zhang, X.Y.; Liu, C.L. TextDragon: An End-to-End Framework for Arbitrary Shaped Text Spotting. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9075–9084. [Google Scholar]

- Liu, Y.; Chen, H.; Shen, C.; He, T.; Jin, L.; Wang, L. ABCNet: Real-time scene text spotting with adaptive Bezier-curve network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9809–9818. [Google Scholar]

- Liu, Y.; Shen, C.; Jin, L.; He, T.; Chen, P.; Liu, C.; Chen, H. ABCNet v2: Adaptive Bezier-Curve Network for Real-Time End-to-End Text Spotting. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 8048–8064. [Google Scholar] [CrossRef]

- Zhong, H.; Tang, J.; Wang, W.; Yang, Z.; Yao, C.; Lu, T. ARTS: Eliminating Inconsistency between Text Detection and Recognition with Auto-Rectification Text Spotter. arXiv 2021, arXiv:2110.10405. [Google Scholar]

- Lyu, P.; Liao, M.; Yao, C.; Wu, W.; Bai, X. Mask TextSpotter: An End-to-End Trainable Neural Network for Spotting Text with Arbitrary Shapes. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 43, 532–548. [Google Scholar]

- Liao, M.; Pang, G.; Huang, J.; Hassner, T.; Bai, X. Mask TextSpotter v3: Segmentation proposal network for robust scene text spotting. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 706–722. [Google Scholar]

- Qin, S.; Bissacco, A.; Raptis, M.; Fujii, Y.; Xiao, Y. Towards Unconstrained End-to-End Text Spotting. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4703–4713. [Google Scholar]

- Qiao, L.; Chen, Y.; Cheng, Z.; Xu, Y.; Niu, Y.; Pu, S.; Wu, F. MANGO: A Mask Attention Guided One-Stage Scene Text Spotter. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020. [Google Scholar]

- Zhang, X.; Su, Y.; Tripathi, S.; Tu, Z. Text Spotting Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 9519–9528. [Google Scholar]

- Wang, W.; Liu, X.; Ji, X.; Xie, E.; Liang, D.; Yang, Z.; Lu, T.; Shen, C.; Luo, P. AE TextSpotter: Learning visual and linguistic representation for ambiguous text spotting. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 457–473. [Google Scholar]

- Huang, M.; Liu, Y.; Peng, Z.; Liu, C.; Lin, D.; Zhu, S.; Yuan, N.J.; Ding, K.; Jin, L. SwinTextSpotter: Scene Text Spotting via Better Synergy between Text Detection and Text Recognition. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 4583–4593. [Google Scholar]

- Hao, Y.; Fu, Y.; Jiang, Y.G.; Tian, Q. An End-to-End Architecture for Class-Incremental Object Detection with Knowledge Distillation. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; pp. 1–6. [Google Scholar]

- Yang, D.; Zhou, Y.; Zhang, A.; Sun, X.; Wu, D.; Wang, W.; Ye, Q. Multi-view correlation distillation for incremental object detection. Pattern Recognit. 2022, 131, 108863. [Google Scholar] [CrossRef]

- Zhang, L.; Du, D.; Li, C.; Wu, Y.; Luo, T. Iterative Knowledge Distillation for Automatic Check-Out. IEEE Trans. Multimed. 2021, 23, 4158–4170. [Google Scholar] [CrossRef]

- Capozzi, L.; Barbosa, V.; Pinto, C.; Pinto, J.R.; Pereira, A.; Carvalho, P.M.; Cardoso, J.S. Toward Vehicle Occupant-Invariant Models for Activity Characterization. IEEE Access 2022, 10, 104215–104225. [Google Scholar] [CrossRef]

- Bhojanapalli, S.; Chakrabarti, A.; Glasner, D.; Li, D.; Unterthiner, T.; Veit, A. Understanding Robustness of Transformers for Image Classification. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10211–10221. [Google Scholar]

- Long, S.; Yao, C. UnrealText: Synthesizing Realistic Scene Text Images from the Unreal World. arXiv 2020, arXiv:2003.10608. [Google Scholar]

- Luo, C.; Lin, Q.; Liu, Y.; Jin, L.; Shen, C. Separating Content from Style Using Adversarial Learning for Recognizing Text in the Wild. Int. J. Comput. Vis. 2020, 129, 960–976. [Google Scholar] [CrossRef]

- Coates, A.; Carpenter, B.; Case, C.; Satheesh, S.; Suresh, B.; Wang, T.; Wu, D.J.; Ng, A.Y. Text detection and character recognition in scene images with unsupervised feature learning. In Proceedings of the 2011 International Conference on Document Analysis and Recognition, Beijing, China, 18–21 September 2011; pp. 440–445. [Google Scholar]

- Gupta, A.; Vedaldi, A.; Zisserman, A. Learning to Read by Spelling: Towards Unsupervised Text Recognition. In Proceedings of the 11th Indian Conference on Computer Vision, Graphics and Image Processing, Hyderabad, India, 18–22 December 2018. [Google Scholar]

| Applications | Barcode Detection | Label Detection | Guidance | Mode | Portable |

|---|---|---|---|---|---|

| RoboCart [13] | √ | × | × | RFID | × |

| Seeing AI [14] | √ | × | √ | Both | √ |

| OrCam MyEye [19] | √ | √ | √ | Offline | √ |

| Yuka [15] | √ | × | × | Both | √ |

| Open Food Facts [16] | √ | × | × | Online | √ |

| Lookout [17] | √ | √ | √ | Both | √ |

| Alexa Echo Show [18] | √ | √ | √ | Online | × |

| Wine Searcher [20] | × | √ | √ | Online | √ |

| Amazon GO [21] | × | √ | × | Online | × |

| Datasets | #Training / Test Images | #Text Instances | Annotation | Orientation | Curved | Language |

|---|---|---|---|---|---|---|

| MJSynth [57] | ∼8.9 M | ∼8.9 M | Word | Multi-oriented | Not curved | English |

| SynthText [58] | ∼800 k | ∼8 M | Text-string/ Word/ Character | Multi-oriented | Not curved | English |

| IIIT5K-Words [63] | 380/740 | 2000/3000 | Word/ Character | Horizontal | Not curved | English |

| Street View Text [64] | 101/249 | 211/514 | Word | Horizontal | Not curved | English |

| ICDAR2003 [65] | 258/251 | 1156/1110 | Word/ Character | Horizontal | Not curved | English |

| ICDAR2013 [66] | 420/141 | 3564/1439 | Word/ Character | Horizontal | Not curved | English |

| Char74k [67] | - | ∼78,000 | Character | Horizontal | Few curved | Latin |

| ICDAR2015 [68] | 1000/500 | 4468/2077 | Word | Multi-oriented | Few curved | English |

| MSRA-TD500 [60] | 300/200 | - | Word | Multi-oriented | Few curved | Chinese and English |

| SVT Perspective [69] | 238 | 639 | Word | Multi-oriented | Few curved | English |

| VinText [72] | 2000 | ∼56,000 | Word | Multi-oriented | Few curved | Vietnamese |

| CUTE80 [61] | 80 | 288 | Word | Multi-oriented | Curved | English |

| COCO-Text [70] | 43,686/20,000 | 118,309/27,550 | Word | Multi-oriented | Curved | English |

| Total-Text [71] | 1555/300 | 111,666/293 | Word | Multi-oriented | Curved | English |

| SCUT-CTW1500 [62] | 1000/500 | 7683/3068 | Word | Multi-oriented | Curved | Chinese and English |

| ICDAR2017—MLT [73] | 9000/9000 | 84,868/97,619 | Word | Multi-oriented | Curved | 9 Languages |

| ICDAR2019—MLT [73] | 10,000/10,000 | 89,177/102,462 | Word | Multi-oriented | Curved | 10 Languages |

| ICDAR2019—Art [74] | 6603/4563 | 50,029/48,426 | Word | Multi-oriented | Curved | Chinese and English |

| ICDAR2019—LSVT [59] | 430,000/20,000 | - | Word | Multi-oriented | Curved | Chinese and English |

| Methods | Annotations | Dataset | Scores | Metrics |

|---|---|---|---|---|

| ScaleNet [81] | Mask based/segmentation | MS COCO | 0.578 | AR@1k |

| Goldman et al. [3] | Bounding box | SKU-110K | 0.492 | AP |

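| Santra et al. [82] | Bounding box | GroZi-3.2k | 0.802 | F1 |

| Santra et al. [82] | Bounding box | WebMarket | 0.755 | F1 |

| Santra et al. [82] | Bounding box | GroZi-120 | 0.448 | F1 |

| RetailDet [50] | Bounding box | SKU-110K | 0.590 | mAP |

| RetailDet [50] | Bounding box | Unitail-Det | OD: 0.587, CD: 0.509 | mAP |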

| OS2D [84] | Bounding box | GroZi-3.2k | 0.850 | mAP |

| Methods | System | GroZi-3.2K (AP) | GroZi-3.2K (AR) | SKU-110K (AP) | SKU-110K (AR) |

|---|---|---|---|---|---|

| Karlinsky et al. [94] | Region matching | 0.447 | - | - | - |

| Geng et al. [44] | Matching | 0.739 | - | - | - |

| Tonioni et al. [4] | Matching | 0.735 | 0.827 | - | - |

| Tonioni et al. [97] | Matching | 0.842 | - | - | - |

| Sinha et al. [98] | Multi-scale CNN-based | 0.478 | 0.581 | - | - |

| Gothai et al. [95] | Matching | - | - | 0.580 | 0.710 |

| Methods | Anno. | ICDAR15 | SVTP | CUTE80 |

|---|---|---|---|---|

| ASTER [131] | word | 0.761 | 0.785 | 0.795 |

| ScRN [133] | word, char | 0.784 | 0.811 | 0.906 |

| CA-FCN+data [134] | word, char | - | - | 0.799 |

| CAPNet [135] | word, char | 0.766 | 0.788 | 0.868 |

| SAR [136] | word | 0.788 | 0.864 | 0.896 |

| TextScanner [137] | word, char | 0.835 | 0.848 | 0.916 |

| SRN [138] | word | 0.827 | 0.851 | 0.878 |

| DPAN [139] | word | 0.855 | 0.890 | 0.919 |

| CDistNet [140] | word, char | 0.860 | 0.887 | 0.934 |

| MATRN model [141] | word, char | 0.828 | 0.906 | 0.935 |

| S-GTR [142] | word | 0.873 | 0.906 | 0.947 |

| PARSeq [143] | word | 0.896 | 0.957 | 0.983 |

| STN-CSTR [144] | word | 0.820 | 0.862 | - |

| Methods | ICDAR15 End-to-End (S) | ICDAR15 End-to-End (W) | ICDAR15 End-to-End (G) |

|---|---|---|---|

| FOTS [145] | 0.811 | 0.759 | 0.608 |

| TextDragon [147] | 0.825 | 0.783 | 0.651 |

| ABCNet+MS [148] | - | - | - |

| ABCNet V2 [149] | 0.827 | 0.785 | 0.730 |

| ARTS [150] | 0.815 | 0.773 | 0.687 |

| Mask TextSpotter [151] | 0.830 | 0.777 | 0.735 |

| Mask TextSpotter V3 [152] | 0.833 | 0.781 | 0.742 |

| Unconstrained [153] | 0.855 | 0.819 | 0.699 |

| MANGO [154] | 0.818 | 0.789 | 0.673 |

| TESTR [155] | 0.852 | 0.794 | 0.736 |

| AE TextSpotter [156] | - | - | - |

| SwinTextSpotter [157] | 0.839 | 0.773 | 0.705 |

| Methods | Detection (F-Measure) | End-to-End (None) | End-to-End (Full) | FPS |

|---|---|---|---|---|

| FOTS [145] | 0.440 | 0.322 | 0.359 | - |

| TextDragon [147] | 0.803 | 0.488 | 0.748 | - |

| ABCNet+MS [148] | - | 0.695 | 0.784 | 6.9 |

| ABCNet V2 [149] | 0.870 | 0.704 | 0.781 | 10 |

| ARTS-RT [150] | 0.803 | 0.659 | 0.781 | 28.0 |

| ARTS-S [150] | 0.865 | 0.771 | 0.851 | 10.5 |

| Mask TextSpotter [151] | 0.613 | 0.529 | 0.718 | 4.8 |

| Mask TextSpotter V3 [152] | - | 0.712 | 0.784 | - |

| Unconstrained [153] | 0.864 | 0.707 | - | - |

| MANGO [154] | - | 0.729 | 0.836 | 4.3 |

| TESTR-Bezier [155] | 0.880 | 0.716 | 0.833 | 5.5 |

| TESTR-Polygon [155] | 0.869 | 0.733 | 0.839 | 5.3 |

| AE TextSpotter [156] | - | - | - | - |

| SwinTextSpotter [157] | 0.880 | 0.743 | 0.841 | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guimarães, V.; Nascimento, J.; Viana, P.; Carvalho, P. A Review of Recent Advances and Challenges in Grocery Label Detection and Recognition. Appl. Sci. 2023, 13, 2871. https://doi.org/10.3390/app13052871