Robots in Inspection and Monitoring of Buildings and Infrastructure: A Systematic Review

Abstract

1. Introduction

- Based on their locomotion, what types of robots have been studied in the literature on robotic inspection of buildings and infrastructure?

- What are the prevalent application domains for the robotic inspection of buildings and infrastructure?

- What are the prevalent research areas in the robotic inspection of buildings and infrastructure?

- What research gaps currently exist in the robotic inspection of buildings and infrastructure?

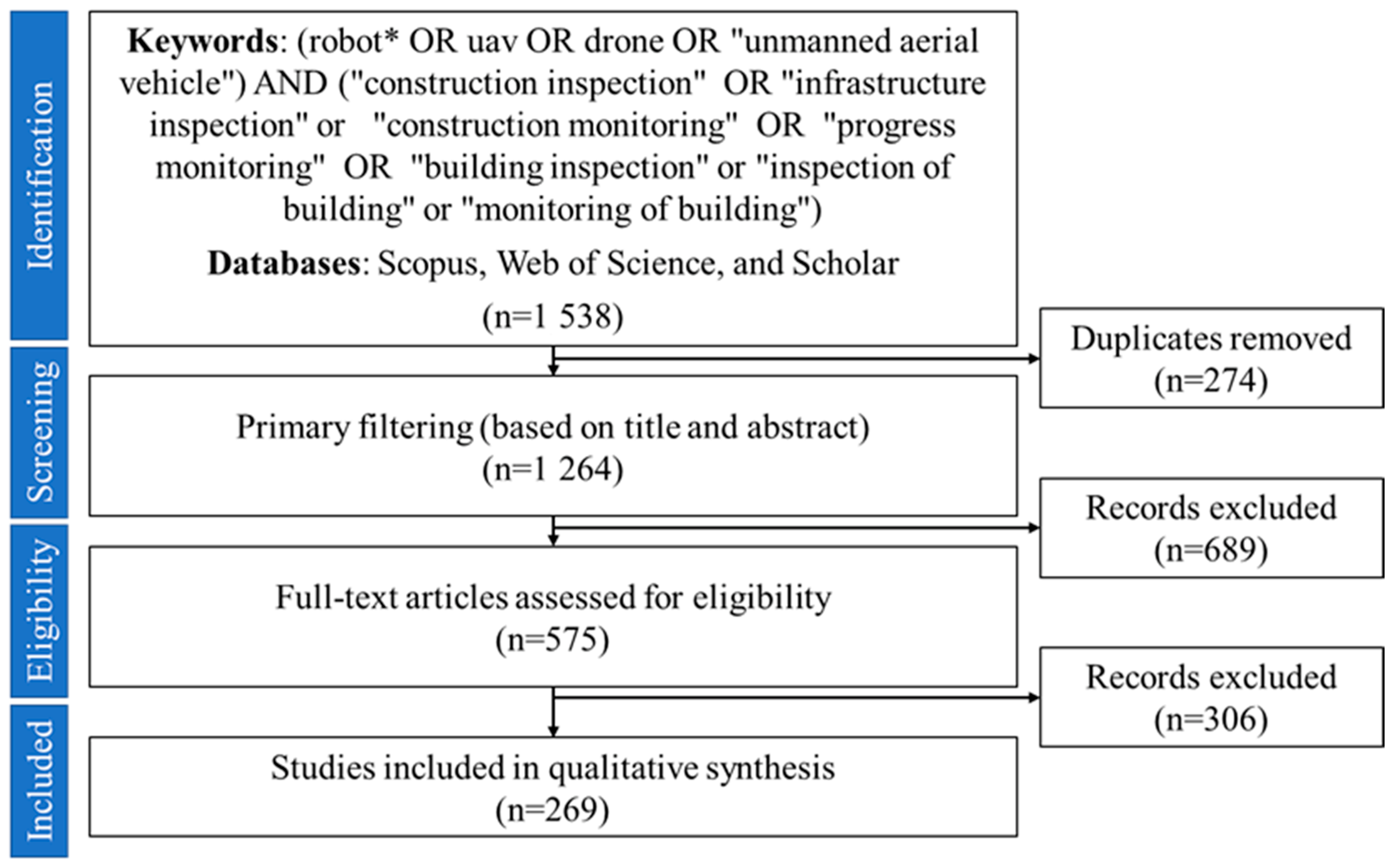

2. Research Methodology

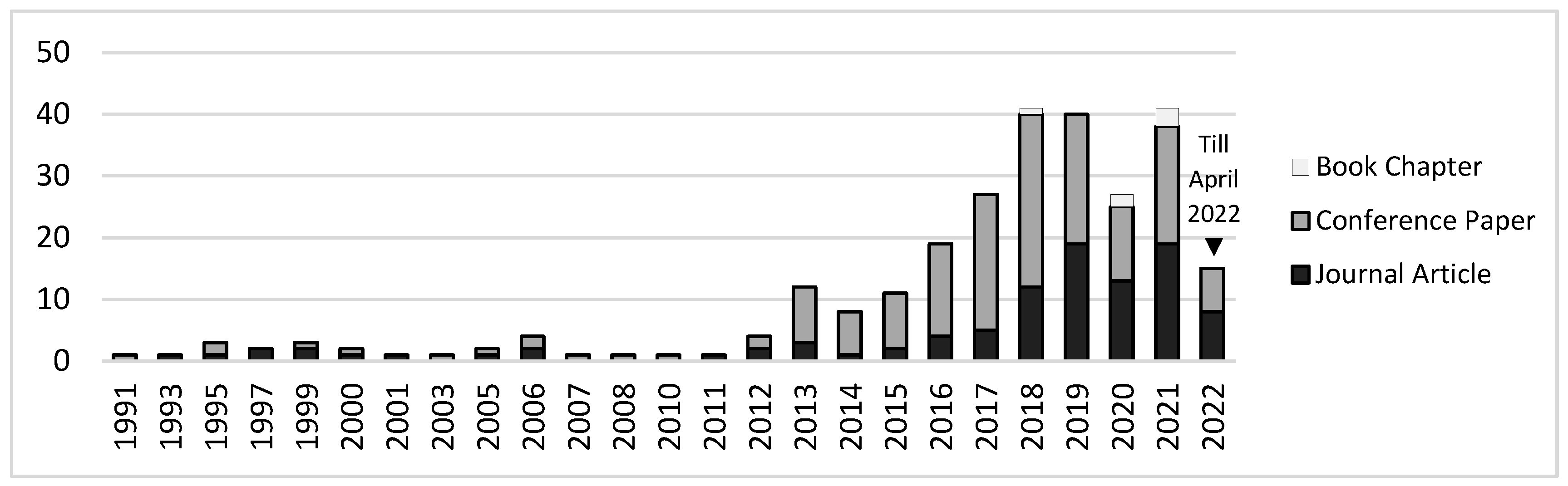

3. Bibliometric Analysis

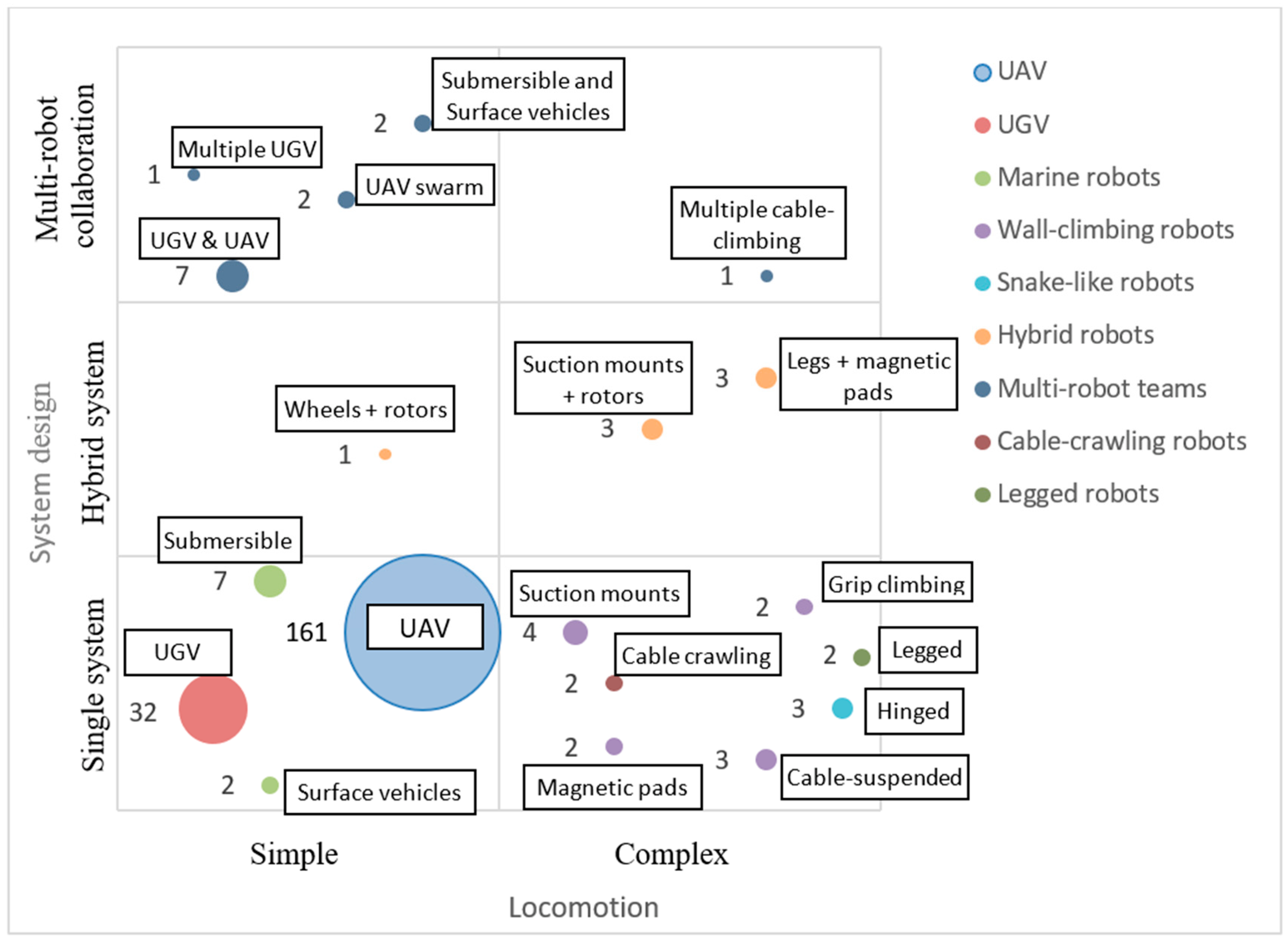

4. Types of Robots

4.1. Unmanned Aerial Vehicles (UAV)

- UAVs can hover at almost any position to take photographs.

- UAVs can zoom in and focus on a small region of interest.

- UAV operation should be simple enough that operators can get started without professional training.

- UAVs should have sufficient flight time to ensure operational efficiency.

- UAVs should be capable of autonomous flight.

- UAVs should be small enough to be easily transported and maintained.

4.2. Unmanned Ground Vehicles (UGV)

4.3. Wall-Climbing Robots

4.4. Cable-Crawling Robots

4.5. Marine Robots

4.6. Hinged Microbots

4.7. Legged Robots

4.8. Hybrid Robots

4.9. Multi-Robot Systems

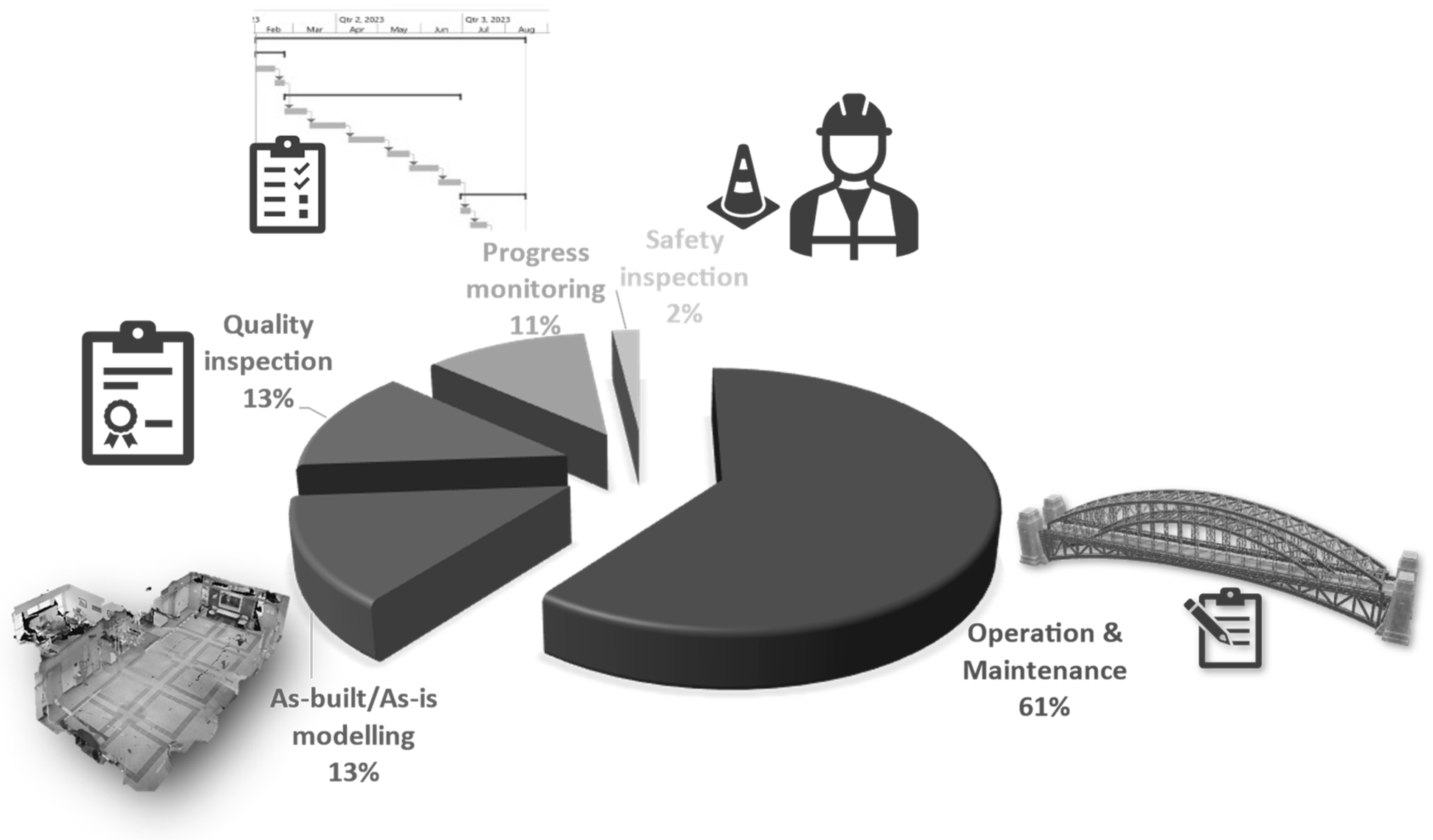

5. Application Domain

5.1. Maintenance Inspection

5.2. Construction Quality Inspection

5.3. Progress Monitoring

5.4. As-Built/As-Is Modeling

5.5. Safety Inspection

6. Research Areas

6.1. Autonomous Navigation

6.1.1. Localization

6.1.2. Path Planning

6.1.3. Navigation

6.2. Knowledge Extraction

6.3. Motion Control System

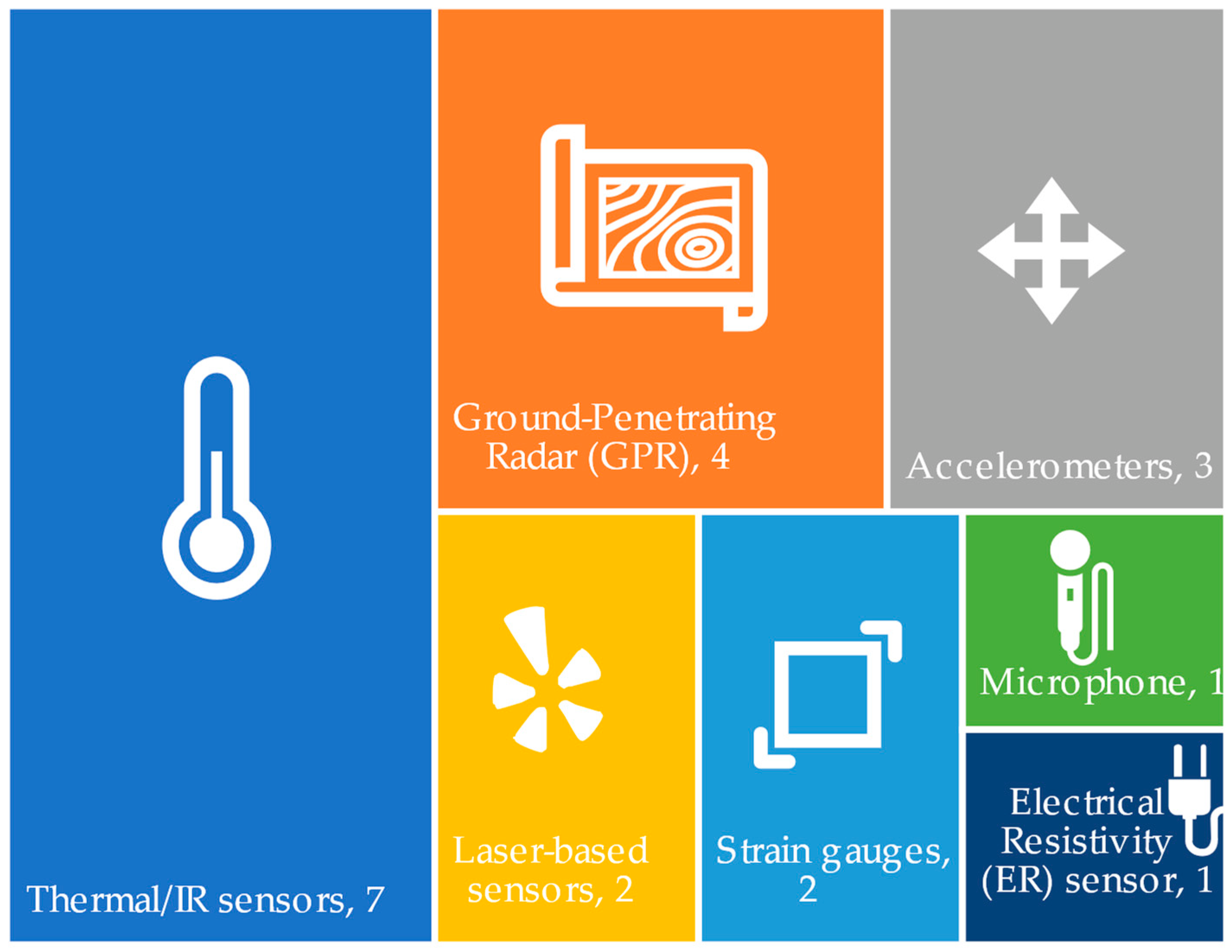

6.4. Sensing

6.5. Multi-Robot Collaboration

6.6. Safety Implications

6.7. Data Transmission

6.8. Human Factors

7. Future Research Directions

7.1. Autonomous Navigation

7.2. Knowledge Extraction

7.3. Motion Control System

7.4. Sensing

7.5. Multi-Robot Collaboration

7.6. Safety Implications

7.7. Data Transmission

7.8. Human Factors

8. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Vo-Tran, H.; Kanjanabootra, S. Information Sharing Problems among Stakeholders in the Construction Industry at the Inspection Stage: A Case Study. In Proceedings of the CIB World Building Congres: Construction and Society, Brisbane, Australia, 5–9 May 2013; pp. 50–63. [Google Scholar]

- Hallermann, N.; Morgenthal, G.; Rodehorst, V. Unmanned Aerial Systems (UAS)—Case Studies of Vision Based Monitoring of Ageing Structures. In Proceedings of the International Symposium Non-Destructive Testing in Civil Engineering (NDT-CE), Berlin, Germany, 15–17 September 2015; pp. 15–17. [Google Scholar]

- Lattanzi, D.; Miller, G. Review of Robotic Infrastructure Inspection Systems. J. Infrastruct. Syst. 2017, 23, 04017004. [Google Scholar] [CrossRef]

- Pinto, L.; Bianchini, F.; Nova, V.; Passoni, D. Low-Cost Uas Photogrammetry for Road Infrastructures’ Inspection. ISPRS—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43B2, 1145–1150. [Google Scholar] [CrossRef]

- Bulgakov, A.; Sayfeddine, D. Air Conditioning Ducts Inspection and Cleaning Using Telerobotics. Procedia Eng. 2016, 164, 121–126. [Google Scholar] [CrossRef]

- Wang, Y.; Su, J. Automated Defect and Contaminant Inspection of HVAC Duct. Autom. Constr. 2014, 41, 15–24. [Google Scholar] [CrossRef]

- Inzartsev, A.; Eliseenko, G.; Panin, M.; Pavin, A.; Bobkov, V.; Morozov, M. Underwater Pipeline Inspection Method for AUV Based on Laser Line Recognition: Simulation Results. In Proceedings of the 2019 IEEE Underwater Technology (UT), Kaohsiung, Taiwan, 16–19 April 2019. [Google Scholar]

- Kreuzer, E.; Pinto, F.C. Sensing the Position of a Remotely Operated Underwater Vehicle. In Proceedings of the Theory and Practice of Robots and Manipulators; Morecki, A., Bianchi, G., Jaworek, K., Eds.; Springer: Vienna, Austria, 1995; pp. 323–328. [Google Scholar]

- Brunete, A.; Hernando, M.; Torres, J.E.; Gambao, E. Heterogeneous Multi-Configurable Chained Microrobot for the Exploration of Small Cavities. Autom. Constr. 2012, 21, 184–198. [Google Scholar] [CrossRef]

- Tan, Y.; Li, S.; Liu, H.; Chen, P.; Zhou, Z. Automatic Inspection Data Collection of Building Surface Based on BIM and UAV. Autom. Constr. 2021, 131, 103881. [Google Scholar] [CrossRef]

- Torok Matthew, M.; Golparvar-Fard, M.; Kochersberger Kevin, B. Image-Based Automated 3D Crack Detection for Post-Disaster Building Assessment. J. Comput. Civ. Eng. 2014, 28, A4014004. [Google Scholar] [CrossRef]

- Khan, F.; Ellenberg, A.; Mazzotti, M.; Kontsos, A.; Moon, F.; Pradhan, A.; Bartoli, I. Investigation on Bridge Assessment Using Unmanned Aerial Systems. In Proceedings of the Structures Congress 2015, Portland, OR, USA, 23–25 April 2015; pp. 404–413. [Google Scholar] [CrossRef]

- Siciliano, B.; Khatib, O. Springer Handbook of Robotics; Springer: Berlin, Germany, 2016; ISBN 978-3-319-32552-1. [Google Scholar]

- Birk, A.; Pfingsthorn, M.; Bulow, H. Advances in Underwater Mapping and Their Application Potential for Safety, Security, and Rescue Robotics (SSRR). In Proceedings of the 2012 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), College Station, TX, USA, 5–8 November 2012. [Google Scholar]

- Ruiz, R.D.B.; Lordsleem Jr, A.C.; Rocha, J.H.A.; Irizarry, J. Unmanned Aerial Vehicles (UAV) as a Tool for Visual Inspection of Building Facades in AEC+FM Industry. Constr. Innov. 2021, 22, 1155–1170. [Google Scholar] [CrossRef]

- Agnisarman, S.; Lopes, S.; Chalil Madathil, K.; Piratla, K.; Gramopadhye, A. A Survey of Automation-Enabled Human-in-the-Loop Systems for Infrastructure Visual Inspection. Autom. Constr. 2019, 97, 52–76. [Google Scholar] [CrossRef]

- Halder, S.; Afsari, K.; Chiou, E.; Patrick, R.; Hamed, K.A. Construction Inspection & Monitoring with Quadruped Robots in Future Human-Robot Teaming. J. Build. Eng. 2023, 65, 105814. [Google Scholar]

- Lim, R.S.; La, H.M.; Shan, Z.; Sheng, W. Developing a Crack Inspection Robot for Bridge Maintenance. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 6288–6293. [Google Scholar]

- Kim, I.-H.; Jeon, H.; Baek, S.-C.; Hong, W.-H.; Jung, H.-J. Application of Crack Identification Techniques for an Aging Concrete Bridge Inspection Using an Unmanned Aerial Vehicle. Sensors 2018, 18, 1881. [Google Scholar] [CrossRef] [PubMed]

- Lee, J.H.; Park, J.; Jang, B. Design of Robot Based Work Progress Monitoring System for the Building Construction Site. In Proceedings of the 2018 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 17–19 October 2018; pp. 1420–1422. [Google Scholar]

- Kim, D.; Liu, M.; Lee, S.; Kamat, V.R. Remote Proximity Monitoring between Mobile Construction Resources Using Camera-Mounted UAVs. Autom. Constr. 2019, 99, 168–182. [Google Scholar] [CrossRef]

- Rakha, T.; Gorodetsky, A. Review of Unmanned Aerial System (UAS) Applications in the Built Environment: Towards Automated Building Inspection Procedures Using Drones. Autom. Constr. 2018, 93, 252–264. [Google Scholar] [CrossRef]

- Bock, T.; Linner, T. Construction Robots: Elementary Technologies and Single-Task Construction Robots; Cambridge University Press: Cambridge, UK, 2016; Volume 3, ISBN 978-1-107-07599-3. [Google Scholar]

- Pal, A.; Hsieh, S.-H. Deep-Learning-Based Visual Data Analytics for Smart Construction Management. Autom. Constr. 2021, 131, 103892. [Google Scholar] [CrossRef]

- Tang, S.; Shelden, D.R.; Eastman, C.M.; Pishdad-Bozorgi, P.; Gao, X. A Review of Building Information Modeling (BIM) and the Internet of Things (IoT) Devices Integration: Present Status and Future Trends. Autom. Constr. 2019, 101, 127–139. [Google Scholar] [CrossRef]

- Huang, Y.; Yan, J.; Chen, L. A Bird’s Eye of Prefabricated Construction Based on Bibliometric Analysis from 2010 to 2019. In Proceedings of the IOP Conf. Series: Materials Science and Engineering, Ulaanbaatar, Mongolia, 10–13 September 2020; p. 052011. [Google Scholar]

- Aria, M.; Cuccurullo, C. Bibliometrix: An R-Tool for Comprehensive Science Mapping Analysis. J. Informetr. 2017, 11, 959–975. [Google Scholar] [CrossRef]

- Li, P.; Lu, Y.; Yan, D.; Xiao, J.; Wu, H. Scientometric Mapping of Smart Building Research: Towards a Framework of Human-Cyber-Physical System (HCPS). Autom. Constr. 2021, 129, 103776. [Google Scholar] [CrossRef]

- Sawada, J.; Kusumoto, K.; Maikawa, Y.; Munakata, T.; Ishikawa, Y. A Mobile Robot for Inspection of Power Transmission Lines. IEEE Trans. Power Deliv. 1991, 6, 309–315. [Google Scholar] [CrossRef]

- Patel, T.; Suthar, V.; Bhatt, N. Application of Remotely Piloted Unmanned Aerial Vehicle in Construction Management BT-Recent Trends in Civil Engineering; Pathak, K.K., Bandara, J.M.S.J., Agrawal, R., Eds.; Springer: Singapore, 2021; pp. 319–329. [Google Scholar]

- Phung, M.D.; Quach, C.H.; Dinh, T.H.; Ha, Q. Enhanced Discrete Particle Swarm Optimization Path Planning for UAV Vision-Based Surface Inspection. Autom. Constr. 2017, 81, 25–33. [Google Scholar] [CrossRef]

- Whang, S.-H.; Kim, D.-H.; Kang, M.-S.; Cho, K.; Park, S.; Son, W.-H. Development of a Flying Robot System for Visual Inspection of Bridges; International Society for Structural Health Monitoring of Intelligent Infrastructure (ISHMII): Winnipeg, MB, Canada, 2007. [Google Scholar]

- Asadi, K.; Kalkunte Suresh, A.; Ender, A.; Gotad, S.; Maniyar, S.; Anand, S.; Noghabaei, M.; Han, K.; Lobaton, E.; Wu, T. An Integrated UGV-UAV System for Construction Site Data Collection. Autom. Constr. 2020, 112, 103068. [Google Scholar] [CrossRef]

- Kim, P.; Park, J.; Cho, Y.K.; Kang, J. UAV-Assisted Autonomous Mobile Robot Navigation for as-Is 3D Data Collection and Registration in Cluttered Environments. Autom. Constr. 2019, 106, 102918. [Google Scholar] [CrossRef]

- Kamagaluh, B.; Kumar, J.S.; Virk, G.S. Design of Multi-Terrain Climbing Robot for Petrochemical Applications. In Proceedings of the Adaptive Mobile Robotics, Baltimore, MD, USA, 23–26 July 2012; pp. 639–646. [Google Scholar]

- Katrasnik, J.; Pernus, F.; Likar, B. A Climbing-Flying Robot for Power Line Inspection. In Climbing and Walking Robots; Miripour, B., Ed.; IntechOpen: Rijeka, Croatia, 2010; pp. 95–110. [Google Scholar]

- Mattar, R.A.; Kalai, R. Development of a Wall-Sticking Drone for Non-Destructive Ultrasonic and Corrosion Testing. Drones 2018, 2, 8. [Google Scholar] [CrossRef]

- Irizarry, J.; Costa, D. Exploratory Study of Potential Applications of Unmanned Aerial Systems for Construction Management Tasks. J. Manag. Eng. 2016, 32, 05016001. [Google Scholar] [CrossRef]

- Razali, S.N.M.; Kaamin, M.; Razak, S.N.A.; Hamid, N.B.; Ahmad, N.F.A.; Mokhtar, M.; Ngadiman, N.; Sahat, S. Application of UAV and Csp1 Matrix for Building Inspection at Muzium Negeri, Seremban. Int. J. Innov. Technol. Explor. Eng. 2019, 8, 1366–1372. [Google Scholar] [CrossRef]

- Moore, J.; Tadinada, H.; Kirsche, K.; Perry, J.; Remen, F.; Tse, Z.T.H. Facility Inspection Using UAVs: A Case Study in the University of Georgia Campus. Int. J. Remote Sens. 2018, 39, 7189–7200. [Google Scholar] [CrossRef]

- Biswas, S.; Sharma, R. Goal-Aware Navigation of Quadrotor UAV for Infrastructure Inspection. In Proceedings of the AIAA Scitech 2019 Forum, American Institute of Aeronautics and Astronautics, San Diego, CA, USA, 7–11 January 2019. [Google Scholar]

- Mao, Z.; Yan, Y.; Wu, J.; Hajjar, J.F.; Padlr, T. Towards Automated Post-Disaster Damage Assessment of Critical Infrastructure with Small Unmanned Aircraft Systems. In Proceedings of the 2018 IEEE International Symposium on Technologies for Homeland Security (HST), Woburn, MA, USA, 23–24 October 2018. [Google Scholar]

- Walczyński, M.; Bożejko, W.; Skorupka, D. Parallel Optimization Algorithm for Drone Inspection in the Building Industry. AIP Conf. Proc. 2017, 1863, 230014. [Google Scholar] [CrossRef]

- Vacca, G.; Furfaro, G.; Dessì, A. The Use of the Uav Images for the Building 3D Model Generation. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences—ISPRS Archives, Dar es Salaam, TN, USA, 29 August 2018; Volume 42, pp. 217–223. [Google Scholar]

- Taj, G.; Anand, S.; Haneefi, A.; Kanishka, R.P.; Mythra, D.H.A. Monitoring of Historical Structures Using Drones; IOP Publishing Ltd.: Bristol, UK, 2020; Volume 955. [Google Scholar]

- Saifizi, M.; Syahirah, N.; Mustafa, W.A.; Rahim, H.A.; Nasrudin, M.W. Using Unmanned Aerial Vehicle in 3D Modelling of UniCITI Campus to Estimate Building Size; IOP Publishing Ltd.: Bristol, UK, 2021; Volume 1962. [Google Scholar]

- Hament, B.; Oh, P. Unmanned Aerial and Ground Vehicle (UAV-UGV) System Prototype for Civil Infrastructure Missions. In Proceedings of the 2018 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 12–14 January 2018; Mohanty, S., Corcoran, P., Li, H., Sengupta, A., Lee, J., Eds.; IEEE: Piscataway, NJ, USA, 2018. [Google Scholar]

- Roca, D.; Lagüela, S.; Díaz-Vilariño, L.; Armesto, J.; Arias, P. Low-Cost Aerial Unit for Outdoor Inspection of Building Façades. Autom. Constr. 2013, 36, 128–135. [Google Scholar] [CrossRef]

- Lin, J.J.; Han, K.K.; Golparvar-Fard, M. A Framework for Model-Driven Acquisition and Analytics of Visual Data Using UAVs for Automated Construction Progress Monitoring. In Proceedings of the Computing in Civil Engineering, Austin, TX, USA, 21–23 June 2015; pp. 156–164. [Google Scholar]

- Keyvanfar, A.; Shafaghat, A.; Awanghamat, A. Optimization and Trajectory Analysis of Drone’s Flying and Environmental Variables for 3D Modelling the Construction Progress Monitoring. Int. J. Civ. Eng. 2021, 20, 363–388. [Google Scholar] [CrossRef]

- Freimuth, H.; König, M. Planning and Executing Construction Inspections with Unmanned Aerial Vehicles. Autom. Constr. 2018, 96, 540–553. [Google Scholar] [CrossRef]

- Hamledari, H.; Davari, S.; Azar, E.R.; McCabe, B.; Flager, F.; Fischer, M. UAV-Enabled Site-to-BIM Automation: Aerial Robotic- and Computer Vision-Based Development of As-Built/As-Is BIMs and Quality Control. In Proceedings of the Construction Research Congress 2018: Construction Information Technology—Selected Papers from the Construction Research Congress 2018, New Orleans, LA, USA, 2–4 April 2018; American Society of Civil Engineers (ASCE): Reston, VA, USA, 2018; pp. 336–346. [Google Scholar]

- Kayhani, N.; McCabe, B.; Abdelaal, A.; Heins, A.; Schoellig, A.P. Tag-Based Indoor Localization of UAVs in Construction Environments: Opportunities and Challenges in Practice. In Proceedings of the Construction Research Congress 2020, Tempe, AZ, USA, 8–10 March 2020; pp. 226–235. [Google Scholar]

- Previtali, M.; Barazzetti, L.; Brumana, R.; Roncoroni, F. Thermographic Analysis from Uav Platforms for Energy Efficiency Retrofit Applications. J. Mob. Multimed. 2013, 9, 66–82. [Google Scholar]

- Teixeira, J.M.; Ferreira, R.; Santos, M.; Teichrieb, V. Teleoperation Using Google Glass and Ar, Drone for Structural Inspection. In Proceedings of the 2014 XVI Symposium on Virtual and Augmented Reality, Piata Salvador, Brazil, 12–15 May 2014; pp. 28–36. [Google Scholar]

- Esfahan, N.R.; Attalla, M.; Khurshid, K.; Saeed, N.; Huang, T.; Razavi, S. Employing Unmanned Aerial Vehicles (UAV) to Enhance Building Roof Inspection Practices: A Case Study. In Proceedings of the Canadian Society for Civil Engineering Annual Conference, London, ON, Canada, 1–4 June 2016; Volume 1, pp. 296–305. [Google Scholar]

- Chen, K.; Reichard, G.; Xu, X. Opportunities for Applying Camera-Equipped Drones towards Performance Inspections of Building Facades. In Proceedings of the ASCE International Conference on Computing in Civil Engineering 2019, Atlanta, GA, USA, 17–19 June 2019; pp. 113–120. [Google Scholar]

- Oudjehane, A.; Moeini, S.; Baker, T. Construction Project Control and Monitoring with the Integration of Unmanned Aerial Systems with Virtual Design and Construction Models; Canadian Society for Civil Engineering: Vancouver, BC, Canada, 2017; Volume 1, pp. 381–406. [Google Scholar]

- Choi, J.; Yeum, C.M.; Dyke, S.J.; Jahanshahi, M.; Pena, F.; Park, G.W. Machine-Aided Rapid Visual Evaluation of Building Façades. In Proceedings of the 9th European Workshop on Structural Health Monitoring, Manchester, UK, 10–13 July 2018. [Google Scholar]

- Mavroulis, S.; Andreadakis, E.; Spyrou, N.-I.; Antoniou, V.; Skourtsos, E.; Papadimitriou, P.; Kasssaras, I.; Kaviris, G.; Tselentis, G.-A.; Voulgaris, N.; et al. UAV and GIS Based Rapid Earthquake-Induced Building Damage Assessment and Methodology for EMS-98 Isoseismal Map Drawing: The June 12, 2017 Mw 6.3 Lesvos (Northeastern Aegean, Greece) Earthquake. Int. J. Disaster Risk Reduct. 2019, 37, 101169. [Google Scholar] [CrossRef]

- Daniel Otero, L.; Gagliardo, N.; Dalli, D.; Otero, C.E. Preliminary SUAV Component Evaluation for Inspecting Transportation Infrastructure Systems. In Proceedings of the 2016 Annual IEEE Systems Conference (SysCon), Orlando, FL, USA, 18–21 April 2016. [Google Scholar]

- Pereira, F.C.; Pereira, C.E. Embedded Image Processing Systems for Automatic Recognition of Cracks Using UAVs. IFAC-PapersOnLine 2015, 28, 16–21. [Google Scholar] [CrossRef]

- Watanabe, K.; Moritoki, N.; Nagai, I. Attitude Control of a Camera Mounted-Type Tethered Quadrotor for Infrastructure Inspection. In Proceedings of the IECON 2017-43rd Annual Conference of the IEEE Industrial Electronics Society, Beijing, China, 29 October–1 November 2017; pp. 6252–6257. [Google Scholar]

- Murtiyoso, A.; Koehl, M.; Grussenmeyer, P.; Freville, T. Acquisition and Processing Protocols for Uav Images: 3d Modeling of Historical Buildings Using Photogrammetry. In Proceedings of the 26th International CIPA Symposium 2017, Ottawa, ON, Canada, 28 August–1 September 2017; Volume 4, pp. 163–170. [Google Scholar]

- Saifizi, M.; Azani Mustafa, W.; Syahirah Mohammad Radzi, N.; Aminudin Jamlos, M.; Zulkarnain Syed Idrus, S. UAV Based Image Acquisition Data for 3D Model Application. IOP Conf. Ser. Mater. Sci. Eng. 2020, 917, 012074. [Google Scholar] [CrossRef]

- Khaloo, A.; Lattanzi, D. Integrating 3D Computer Vision and Robotic Infrastructure Inspection. In Proceedings of the 11th International Workshop on Structural Health Monitoring (IWSHM), Stanford, CA, USA, 12–14 September 2017; Volume 2, pp. 3202–3209. [Google Scholar]

- Kersten, J.; Rodehorst, V.; Hallermann, N.; Debus, P.; Morgenthal, G. Potentials of Autonomous UAS and Automated Image Analysis for Structural Health Monitoring. In Proceedings of the IABSE Symposium 2018, Nantes, France, 19–21 September 2018; pp. S24–S119. [Google Scholar]

- Kakillioglu, B.; Velipasalar, S.; Rakha, T. Autonomous Heat Leakage Detection from Unmanned Aerial Vehicle-Mounted Thermal Cameras. In Proceedings of the 12th International Conference, Jeju, Republic of Korea, 25–28 October 2018. [Google Scholar]

- Gomez, J.; Tascon, A. A Protocol for Using Unmanned Aerial Vehicles to Inspect Agro-Industrial Buildings. Inf. Constr. 2021, 73, e421. [Google Scholar] [CrossRef]

- Dergachov, K.; Kulik, A. Impact-Resistant Flying Platform for Use in the Urban Construction Monitoring. In Methods and Applications of Geospatial Technology in Sustainable Urbanism; IGI Global: Pennsylvania, PA, USA, 2021; pp. 520–551. [Google Scholar]

- Kucuksubasi, F.; Sorguc, A.G. Transfer Learning-Based Crack Detection by Autonomous UAVs. In Proceedings of the 35th International Symposium on Automation and Robotics in Construction (ISARC), Berlin, Germany, 20–25 July 2018; Teizer, J., Ed.; International Association for Automation and Robotics in Construction (IAARC): Taipei, Taiwan, 2018; pp. 593–600. [Google Scholar]

- Bruggemann, T. Automated Feature-Driven Flight Planning for Airborne Inspection of Large Linear Infrastructure Assets. IEEE Trans. Autom. Sci. Eng. 2022, 19, 804–817. [Google Scholar] [CrossRef]

- Nakata, K.; Umemoto, K.; Kaneko, K.; Ryusuke, F. Development and Operation Of Wire Movement Type Bridge Inspection Robot System ARANEUS. In Proceedings of the International Symposium on Applied Science, Bali, Indonesia, 24–25 October 2019; Kalpa Publications in Engineering: Manchester, UK, 2020; pp. 168–174. [Google Scholar]

- Lyu, J.; Zhao, T.; Xu, G. Research on UAV’s Fixed-Point Cruise Method Aiming at the Appearance Defects of Buildings. In Proceedings of the ACM International Conference Proceeding Series; Association for Computing Machinery: New York, NY, USA, 2021; pp. 783–787. [Google Scholar]

- Vazquez-Nicolas, J.M.; Zamora, E.; Gonzalez-Hernandez, I.; Lozano, R.; Sossa, H. Towards Automatic Inspection: Crack Recognition Based on Quadrotor UAV-Taken Images. In Proceedings of the 2018 International Conference on Unmanned Aircraft Systems (ICUAS), Dallas, TX, USA, 12–15 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 654–659. [Google Scholar]

- Choi, Y.; Payan, A.P.; Briceno, S.I.; Mavris, D.N. A Framework for Unmanned Aerial Systems Selection and Trajectory Generation for Imaging Service Missions. In Proceedings of the 2018 Aviation Technology, Integration, and Operations Conference, Atlanta, GA, USA, 25–29 June 2018. [Google Scholar]

- Zhenga, Z.J.; Pana, M.; Pan, W. Virtual Prototyping-Based Path Planning of Unmanned Aerial Vehicles for Building Exterior Inspection. In Proceedings of the 2020 37th ISARC, Kitakyushu, Japan, 27–28 October 2020; pp. 16–23. [Google Scholar]

- Debus, P.; Rodehorst, V. Multi-Scale Flight Path Planning for UAS Building Inspection; Lecture Notes in Civil Engineering; Springer: Berlin, Germany, 2021; Volume 98, p. 1085. [Google Scholar]

- Freimuth, H.; Müller, J.; König, M. Simulating and Executing UAV-Assisted Inspections on Construction Sites. In Proceedings of the 2017 34rd ISARC, Taipei, Taiwan, 28 June–1 July 2017; pp. 647–654. [Google Scholar]

- Sa, I.; Corke, P. Vertical Infrastructure Inspection Using a Quadcopter and Shared Autonomy Control; Springer Tracts in Advanced Robotics; Springer: Berlin, Germany, 2014; Volume 92, p. 232. [Google Scholar]

- Hamledari, H.; Davari, S.; Sajedi, S.O.; Zangeneh, P.; McCabe, B.; Fischer, M. UAV Mission Planning Using Swarm Intelligence and 4D BIMs in Support of Vision-Based Construction Progress Monitoring and as-Built Modeling. In Proceedings of the Construction Research Congress 2018: Construction Information Technology-Selected Papers from the Construction Research Congress 2018, New Orleans, LA, USA, 2–4 April 2018; American Society of Civil Engineers (ASCE): Reston, VA, USA, 2018; pp. 43–53. [Google Scholar]

- Kayhani, N.; Zhao, W.; McCabe, B.; Schoellig, A.P. Tag-Based Visual-Inertial Localization of Unmanned Aerial Vehicles in Indoor Construction Environments Using an on-Manifold Extended Kalman Filter. Autom. Constr. 2022, 135, 104112. [Google Scholar] [CrossRef]

- Vanegas, F.; Gaston, K.; Roberts, J.; Gonzalez, F. A Framework for UAV Navigation and Exploration in GPS-Denied Environments. In Proceedings of the 2019 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2019. [Google Scholar]

- Usenko, V.; von Stumberg, L.; Stückler, J.; Cremers, D. TUM Flyers: Vision—Based MAV Navigation for Systematic Inspection of Structures; Springer Tracts in Advanced Robotics; Springer: Berlin, Germany, 2020; Volume 136, p. 209. [Google Scholar]

- Rea, P.; Ottaviano, E.; Castillo-Garcia, F.; Gonzalez-Rodriguez, A. Inspection Robotic System: Design and Simulation for Indoor and Outdoor Surveys; Machado, J., Soares, F., Trojanowska, J., Yildirim, S., Eds.; Springer: Berlin, Germany, 2022; pp. 313–321. [Google Scholar]

- Gibb, S.; La, H.M.; Le, T.; Nguyen, L.; Schmid, R.; Pham, H. Nondestructive Evaluation Sensor Fusion with Autonomous Robotic System for Civil Infrastructure Inspection. J. Field Robot. 2018, 35, 988–1004. [Google Scholar] [CrossRef]

- Nitta, Y.; Nishitani, A.; Iwasaki, A.; Watakabe, M.; Inai, S.; Ohdomari, I. Damage Assessment Methodology for Nonstructural Components with Inspection Robot. Key Eng. Mater. 2013, 558, 297–304. [Google Scholar] [CrossRef]

- Watanabe, A.; Even, J.; Morales, L.Y.; Ishi, C. Robot-Assisted Acoustic Inspection of Infrastructures-Cooperative Hammer Sounding Inspection. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 5942–5947. [Google Scholar]

- Phillips, S.; Narasimhan, S. Automating Data Collection for Robotic Bridge Inspections. J. Bridge Eng. 2019, 24, 04019075. [Google Scholar] [CrossRef]

- Asadi, K.; Jain, R.; Qin, Z.; Sun, M.; Noghabaei, M.; Cole, J.; Han, K.; Lobaton, E. Vision-Based Obstacle Removal System for Autonomous Ground Vehicles Using a Robotic Arm. In Proceedings of the ASCE International Conference on Computing in Civil Engineering 2019, Atlanta, GA, USA, 17–19 June 2019; pp. 328–335. [Google Scholar]

- Kim, P.; Chen, J.; Kim, J.; Cho, Y.K. SLAM-Driven Intelligent Autonomous Mobile Robot Navigation for Construction Applications. In Proceedings of the Advanced Computing Strategies for Engineering, Lausanne, Switzerland, 10–13 June 2018; Smith, I.F.C., Domer, B., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 254–269. [Google Scholar]

- Davidson, N.C.; Chase, S.B. Initial Testing of Advanced Ground-Penetrating Radar Technology for the Inspection of Bridge Decks: The HERMES and PERES Bridge Inspectors. In Proceedings of the SPIE Proceedings, Newport Beach, CA, USA, 1 February 1999; Volume 3587, pp. 180–185. [Google Scholar] [CrossRef]

- Dobmann, G.; Kurz, J.H.; Taffe, A.; Streicher, D. Development of Automated Non-Destructive Evaluation (NDE) Systems for Reinforced Concrete Structures and Other Applications. In Non-Destructive Evaluation of Reinforced Concrete Structures; Maierhofer, C., Reinhardt, H.-W., Dobmann, G., Eds.; Woodhead Publishing: Sawston, UK, 2010; Volume 2, pp. 30–62. ISBN 978-1-84569-950-5. [Google Scholar]

- Gibb, S.; Le, T.; La, H.M.; Schmid, R.; Berendsen, T. A Multi-Functional Inspection Robot for Civil Infrastructure Evaluation and Maintenance. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 2672–2677. [Google Scholar]

- Massaro, A.; Savino, N.; Selicato, S.; Panarese, A.; Galiano, A.; Dipierro, G. Thermal IR and GPR UAV and Vehicle Embedded Sensor Non-Invasive Systems for Road and Bridge Inspections. In Proceedings of the 2021 IEEE International Workshop on Metrology for Industry 4.0 & IoT (MetroInd4.0&IoT), Rome, Italy, 7–9 June 2021; pp. 248–253. [Google Scholar]

- Kanellakis, C.; Fresk, E.; Mansouri, S.; Kominiak, D.; Nikolakopoulos, G. Towards Visual Inspection of Wind Turbines: A Case of Visual Data Acquisition Using Autonomous Aerial Robots. IEEE Access 2020, 8, 181650–181661. [Google Scholar] [CrossRef]

- McCrea, A.; Chamberlain, D.A. Towards the Development of a Bridge Inspecting Automated Device. In Proceedings of the Automation and Robotics in Construction X, Houston, TX, USA, 26 May 1993; Watson, G.H., Tuccker, R.L., Walters, J.K., Eds.; International Association for Automation and Robotics in Construction (IAARC): Chennai, India, 1993; pp. 269–276. [Google Scholar]

- Mu, H.; Li, Y.; Chen, D.; Li, J.; Wang, M. Design of Tank Inspection Robot Navigation System Based on Virtual Reality. In Proceedings of the 2021 IEEE International Conference on Robotics and Biomimetics (ROBIO), Sanya, China, 27–31 December 2021; pp. 1773–1778. [Google Scholar]

- Moselhi, O.; Shehab-Eldeen, T. Automated Detection of Surface Defects in Water and Sewer Pipes. Autom. Constr. 1999, 8, 581–588. [Google Scholar] [CrossRef]

- Liu, K.P.; Luk, B.L.; Tong, F.; Chan, Y.T. Application of Service Robots for Building NDT Inspection Tasks. Ind. Robot 2011, 38, 58–65. [Google Scholar] [CrossRef]

- Barry, N.; Fisher, E.; Vaughan, J. Modeling and Control of a Cable-Suspended Robot for Inspection of Vertical Structures. In Proceedings of the Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2016; Volume 744, p. 12071. [Google Scholar]

- Aracil, R.; Saltarén, R.J.; Almonacid, M.; Azorín, J.M.; Sabater, J.M. Climbing Parallel Robots Morphologies. IFAC Proc. Volume 2000, 33, 471–476. [Google Scholar] [CrossRef]

- Esser, B.; Huston, D.R.; Esser, B.; Huston, D.R. Versatile Robotic Platform for Structural Health Monitoring and Surveillance. Smart Struct. Syst. 2005, 1, 325. [Google Scholar] [CrossRef]

- Schempf, H. Neptune: Above-Ground Storage Tank Inspection Robot System. In Proceedings of the 1994 IEEE International Conference on Robotics and Automation, San Diego, CA, USA, 8–13 May 1994; IEEE: Piscataway, NJ, USA, 1994; Volume 2, pp. 1403–1408. [Google Scholar]

- Caccia, M.; Robino, R.; Bateman, W.; Eich, M.; Ortiz, A.; Drikos, L.; Todorova, A.; Gaviotis, I.; Spadoni, F.; Apostolopoulou, V. MINOAS a Marine INspection RObotic Assistant: System Requirements and Design. IFAC Proc. Vol. 2010, 43, 479–484. [Google Scholar] [CrossRef]

- Longo, D.; Muscato, G.; Sessa, S. Simulator for Locomotion Control of the Alicia3 Climbing Robot. In Climbing and Walking Robots; Tokhi, M.O., Virk, G.S., Hossain, M.A., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 843–850. [Google Scholar]

- Kouzehgar, M.; Krishnasamy Tamilselvam, Y.; Vega Heredia, M.; Rajesh Elara, M. Self-Reconfigurable Façade-Cleaning Robot Equipped with Deep-Learning-Based Crack Detection Based on Convolutional Neural Networks. Autom. Constr. 2019, 108, 102959. [Google Scholar] [CrossRef]

- Hernando, M.; Brunete, A.; Gambao, E. ROMERIN: A Modular Climber Robot for Infrastructure Inspection. IFAC-Paper 2019, 52, 424–429. [Google Scholar] [CrossRef]

- Hou, S.; Dong, B.; Wang, H.; Wu, G. Inspection of Surface Defects on Stay Cables Using a Robot and Transfer Learning. Autom. Constr. 2020, 119, 103382. [Google Scholar] [CrossRef]

- Kajiwara, H.; Hanajima, N.; Kurashige, K.; Fujihira, Y. Development of Hanger-Rope Inspection Robot for Suspension Bridges. J. Robot. Mechatron. 2019, 31, 855–862. [Google Scholar] [CrossRef]

- Boreyko, A.A.; Moun, S.A.; Scherbatyuk, A.P. Precise UUV Positioning Based on Images Processing for Underwater Construction Inspection | Pacific/Asia Offshore Mechanics Symposium | OnePetro. In Proceedings of the The Eighth ISOPE Pacific/Asia Offshore Mechanics Symposium, Bangkok, Thailand, 10–14 November 2008; pp. 14–20. [Google Scholar]

- Atyabi, A.; MahmoudZadeh, S.; Nefti-Meziani, S. Current Advancements on Autonomous Mission Planning and Management Systems: An AUV and UAV Perspective. Annu. Rev. Control 2018, 46, 196–215. [Google Scholar] [CrossRef]

- Shimono, S.; Toyama, S.; Nishizawa, U. Development of Underwater Inspection System for Dam Inspection: Results of Field Tests. In Proceedings of the OCEANS 2016 MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; pp. 1–4. [Google Scholar]

- Shojaei, A.; Moud, H.I.; Flood, I. Proof of Concept for the Use of Small Unmanned Surface Vehicle in Built Environment Management. In Proceedings of the Construction Research Congress, New Orleans, LA, USA, 2–4 April 2018; pp. 116–126. [Google Scholar]

- Masago, H. Approaches towards Standardization of Performance Evaluation of Underwater Infrastructure Inspection Robots: Establishment of Standard Procedures and Training Programs. In Proceedings of the 2021 IEEE International Conference on Intelligence and Safety for Robotics (ISR), Tokoname, Japan, 4–6 March 2021; pp. 244–247. [Google Scholar]

- Cymbal, M.; Tao, H.; Tao, J. Underwater Inspection with Remotely Controlled Robot and Image Based 3D Structure Reconstruction Techniques. In Proceedings of the Transportation Research Board 95th Annual Meeting, Washington, DC, USA, 10–14 January 2016; pp. 1–10. [Google Scholar]

- Ma, Y.; Ye, R.; Zheng, R.; Geng, L.; Yang, Y. A Highly Mobile Ducted Underwater Robot for Subsea Infrastructure Inspection. In Proceedings of the 2016 IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER), Chengdu, China, 19–22 June 2016; pp. 397–400. [Google Scholar]

- Shojaei, A.; Moud, H.I.; Flood, I. Proof of Concept for the Use of Small Unmanned Surface Vehicle in Built Environment Management. In Proceedings of the Construction Research Congress 2018: Construction Information Technology-Selected Papers from the Construction Research Congress 2018, New Orleans, LA, USA, 2–4 April 2018; American Society of Civil Engineers (ASCE): Reston, VA, USA, 2018; pp. 116–126. [Google Scholar]

- Shojaei, A.; Izadi Moud, H.; Razkenari, M.; Flood, I.; Hakim, H. Feasibility Study of Small Unmanned Surface Vehicle Use in Built Environment Assessment. In Proceedings of the Institute of Industrial and Systems Engineers (IISE) Annual Conference, Orlando, FL, USA, 19 May 2018. [Google Scholar]

- Paap, K.L.; Christaller, T.; Kirchner, F. A Robot Snake to Inspect Broken Buildings. In Proceedings of the 2000 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2000) (Cat. No.00CH37113), Takamatsu, Japan, 31 October–5 November 2000; Volume 3, pp. 2079–2082. [Google Scholar]

- Lakshmanan, A.; Elara, M.; Ramalingam, B.; Le, A.; Veerajagadeshwar, P.; Tiwari, K.; Ilyas, M. Complete Coverage Path Planning Using Reinforcement Learning for Tetromino Based Cleaning and Maintenance Robot. Autom. Constr. 2020, 112, 103078. [Google Scholar] [CrossRef]

- Lin, J.-L.; Hwang, K.-S.; Jiang, W.-C.; Chen, Y.-J. Gait Balance and Acceleration of a Biped Robot Based on Q-Learning. IEEE Access 2016, 4, 2439–2449. [Google Scholar] [CrossRef]

- Afsari, K.; Halder, S.; King, R.; Thabet, W.; Serdakowski, J.; Devito, S.; Ensafi, M.; Lopez, J. Identification of Indicators for Effectiveness Evaluation of Four-Legged Robots in Automated Construction Progress Monitoring. In Proceedings of the Construction Research Congress, Arlington, VA, USA, 9–12 March 2022; American Society of Civil Engineers: Reston, VA, USA, 2022; pp. 610–620. [Google Scholar]

- Faigl, J.; Čížek, P. Adaptive Locomotion Control of Hexapod Walking Robot for Traversing Rough Terrains with Position Feedback Only. Robot. Auton. Syst. 2019, 116, 136–147. [Google Scholar] [CrossRef]

- Afsari, K.; Halder, S.; Ensafi, M.; DeVito, S.; Serdakowski, J. Fundamentals and Prospects of Four-Legged Robot Application in Construction Progress Monitoring. In Proceedings of the ASC International Proceedings of the Annual Conference, Virtual, 5–8 April 2021; pp. 271–278. [Google Scholar]

- Halder, S.; Afsari, K.; Serdakowski, J.; DeVito, S. A Methodology for BIM-Enabled Automated Reality Capture in Construction Inspection with Quadruped Robots. In Proceedings of the International Symposium on Automation and Robotics in Construction, Bogotá, Colombia, 13–15 July 2021; pp. 17–24. [Google Scholar]

- Shin, J.-U.; Kim, D.; Jung, S.; Myung, H. Dynamics Analysis and Controller Design of a Quadrotor-Based Wall-Climbing Robot for Structural Health Monitoring. In Structural Health Monitoring 2015; Stanford University: Stanford, CA, USA, 2015. [Google Scholar]

- Shin, J.-U.; Kim, D.; Kim, J.-H.; Jeon, H.; Myung, H. Quadrotor-Based Wall-Climbing Robot for Structural Health Monitoring. Struct. Health Monit. 2013, 2, 1889–1894. [Google Scholar]

- Kriengkomol, P.; Kamiyama, K.; Kojima, M.; Horade, M.; Mae, Y.; Arai, T. Hammering Sound Analysis for Infrastructure Inspection by Leg Robot. In Proceedings of the 2015 IEEE International Conference on Robotics and Biomimetics (ROBIO), Zhuhai, China, 6–9 December 2015; pp. 887–892. [Google Scholar]

- Prieto, S.A.; Giakoumidis, N.; García de Soto, B. AutoCIS: An Automated Construction Inspection System for Quality Inspection of Buildings. In Proceedings of the 2021 38th ISARC, Dubai, United Arab Emirates, 2–4 November 2021. [Google Scholar]

- Kim, P.; Price, L.C.; Park, J.; Cho, Y.K. UAV-UGV Cooperative 3D Environmental Mapping. In Proceedings of the ASCE International Conference on Computing in Civil Engineering 2019, Atlanta, GA, USA, 17–19 June 2019; ASCE: Atlanta, GA, USA, 2019; pp. 384–392. [Google Scholar]

- Kim, P.; Park, J.; Cho, Y. As-Is Geometric Data Collection and 3D Visualization through the Collaboration between UAV and UGV. In Proceedings of the 36th International Symposium on Automation and Robotics in Construction (ISARC), Banff, AB, Canada, 21–24 May 2019; Al-Hussein, M., Ed.; International Association for Automation and Robotics in Construction (IAARC): Banff, AB, Canada, 2019; pp. 544–551. [Google Scholar]

- Khaloo, A.; Lattanzi, D.; Jachimowicz, A.; Devaney, C. Utilizing UAV and 3D Computer Vision for Visual Inspection of a Large Gravity Dam. Front. Built Environ. 2018, 4, 31. [Google Scholar] [CrossRef]

- Mansouri, S.S.; Kanellakis, C.; Fresk, E.; Kominiak, D.; Nikolakopoulos, G. Cooperative UAVs as a Tool for Aerial Inspection of the Aging Infrastructure. In Proceedings of the Field and Service Robotics; Hutter, M., Siegwart, R., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 177–189. [Google Scholar]

- Mansouri, S.S.; Kanellakis, C.; Fresk, E.; Kominiak, D.; Nikolakopoulos, G. Cooperative Coverage Path Planning for Visual Inspection. Control Eng. Pract. 2018, 74, 118–131. [Google Scholar] [CrossRef]

- Ueda, T.; Hirai, H.; Fuchigami, K.; Yuki, R.; Jonghyun, A.; Yasukawa, S.; Nishida, Y.; Ishii, K.; Sonoda, T.; Higashi, K.; et al. Inspection System for Underwater Structure of Bridge Pier. Proc. Int. Conf. Artif. Life Robot. 2019, 24, 521–524. [Google Scholar] [CrossRef]

- Yang, Y.; Hirose, S.; Debenest, P.; Guarnieri, M.; Izumi, N.; Suzumori, K. Development of a Stable Localized Visual Inspection System for Underwater Structures. Adv. Robot. 2016, 30, 1415–1429. [Google Scholar] [CrossRef]

- Sulaiman, M.; AlQahtani, A.; Liu, H.; Binalhaj, M. Utilizing Wi-Fi Access Points for Unmanned Aerial Vehicle Localization for Building Indoor Inspection. In Proceedings of the 2021 IEEE 12th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 1–4 December 2021; Paul, R., Ed.; IEEE: Piscataway, NJ, USA, 2021; pp. 775–779. [Google Scholar]

- Kuo, C.H.; Hsiung, S.H.; Peng, K.C.; Peng, K.C.; Yang, T.H.; Hsieh, Y.C.; Tsai, Y.D.; Shen, C.C.; Kuang, C.Y.; Hsieh, P.S.; et al. Unmanned System and Vehicle for Infrastructure Inspection–Image Correction, Quantification, Reliabilities and Formation of Façade Map. In Proceedings of the 9th European Workshop on Structural Health Monitoring (EWSHM 2018), Manchester, UK, 10–13 July 2018. [Google Scholar]

- Jo, J.; Jadidi, Z.; Stantic, B. A Drone-Based Building Inspection System Using Software-Agents; Ivanovic, M., Badica, C., Dix, J., Jovanovic, Z., Malgeri, M., Savic, M., Eds.; Springer: Berlin, Germany, 2018; Volume 737, pp. 115–121. [Google Scholar]

- Wang, R.; Xiong, Z.; Chen, Y.L.; Manjunatha, P.; Masri, S.F. A Two-Stage Local Positioning Method with Misalignment Calibration for Robotic Structural Monitoring of Buildings. J. Dyn. Syst. Meas. Control Trans. ASME 2019, 141, 061014. [Google Scholar] [CrossRef]

- Falorca, J.F.; Lanzinha, J.C.G. Facade Inspections with Drones–Theoretical Analysis and Exploratory Tests. Int. J. Build. Pathol. Adapt. 2021, 39, 235–258. [Google Scholar] [CrossRef]

- Grosso, R.; Mecca, U.; Moglia, G.; Prizzon, F.; Rebaudengo, M. Collecting Built Environment Information Using UAVs: Time and Applicability in Building Inspection Activities. Sustainability 2020, 12, 4731. [Google Scholar] [CrossRef]

- Kuo, C.; Leber, A.; Kuo, C.; Boller, C.; Eschmann, C.; Kurz, J. Unmanned Robot System for Structure Health Monitoring and Non-Destructive Building Inspection, Current Technologies Overview and Future Improvements. In Proceedings of the 9th International Workshop on Structural Health Monitoring, Stanford, CA, USA, 10–13 September 2013; Chang, F., Ed.; pp. 1–8. [Google Scholar]

- Perry, B.J.; Guo, Y.; Atadero, R.; van de Lindt, J.W. Tracking Bridge Condition over Time Using Recurrent UAV-Based Inspection. In Bridge Maintenance, Safety, Management, Life-Cycle Sustainability and Innovations; CRC Press: Boca Raton, FL, USA, 2021; pp. 286–291. [Google Scholar]

- Liu, Y.; Lin, Y.; Yeoh, J.K.W.; Chua, D.K.H.; Wong, L.W.C.; Ang, M.H., Jr.; Lee, W.L.; Chew, M.Y.L. Framework for Automated UAV-Based Inspection of External Building Façades; Advances in 21st Century Human Settlements; Springer: Berlin, Germany, 2021; p. 194. [Google Scholar]

- Fujihira, Y.; Buriya, K.; Kaneko, S.; Tsukida, T.; Hanajima, N.; Mizukami, M. Evaluation of Whole Circumference Image Acquisition System for a Long Cylindrical Structures Inspection Robot. In Proceedings of the 2017 56th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Kanazawa, Japan, 19–22 September 2017; pp. 1108–1110. [Google Scholar]

- Levine, N.M.; Spencer, B.F., Jr. Post-Earthquake Building Evaluation Using UAVs: A BIM-Based Digital Twin Framework. Sensors 2022, 22, 873. [Google Scholar] [CrossRef] [PubMed]

- Yusof, H.; Ahmad, M.; Abdullah, A. Historical Building Inspection Using the Unmanned Aerial Vehicle (UAV). Int. J. Sustain. Constr. Eng. Technol. 2020, 11, 12–20. [Google Scholar] [CrossRef]

- Mita, A.; Shinagawa, Y. Response Estimation of a Building Subject to a Large Earthquake Using Acceleration Data of a Single Floor Recorded by a Sensor Agent Robot. In Proceedings of the Sensors and Smart Structures Technologies for Civil, Mechanical, and Aerospace Systems 2014, San Diego, CA, USA, 10–13 March 2014; International Society for Optics and Photonics: Bellingham, WA, USA, 2014; Volume 9061, p. 90611G. [Google Scholar]

- Ghosh Mondal, T.; Jahanshahi, M.R.; Wu, R.; Wu, Z.Y. Deep Learning-based Multi-class Damage Detection for Autonomous Post-disaster Reconnaissance. Struct. Control Health Monit. 2020, 27, e2507. [Google Scholar] [CrossRef]

- Mader, D.; Blaskow, R.; Westfeld, P.; Weller, C. Potential of UAV-Based Laser Scanner and Multispectral Camera Data in Building Inspection. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 1135–1142. [Google Scholar] [CrossRef]

- Mirzabeigi, S.; Razkenari, M. Automated Vision-Based Building Inspection Using Drone Thermography. In Proceedings of the Construction Research Congress 2022, Arlington, VA, USA, 9–12 March 2022; Jazizadeh, F., Shealy, T., Garvin, M., Eds.; pp. 737–746. [Google Scholar]

- Jung, H.-J.; Lee, J.-H.; Yoon, S.; Kim, I.-H. Bridge Inspection and Condition Assessment Using Unmanned Aerial Vehicles (UAVs): Major Challenges and Solutions from a Practical Perspective. Smart Struct. Syst. 2019, 24, 669–681. [CrossRef]

- Schober, T. CLIBOT: A Rope Climbing Robot for Building Surface Inspection. Bautechnik 2010, 87, 81–85. [Google Scholar] [CrossRef]

- Lee, J.; Hwang, I.; Lee, H. Development of Advanced Robot System for Bridge Inspection and Monitoring. IABSE Symp. Rep. 2007, 93, 9–16. [Google Scholar] [CrossRef]

- Adán, A.; Prieto, S.A.; Quintana, B.; Prado, T.; García, J. An Autonomous Thermal Scanning System with Which to Obtain 3D Thermal Models of Buildings. In Proceedings of the Advances in Informatics and Computing in Civil and Construction Engineering, Chicago, IL, USA, 9 October 2018; Mutis, I., Hartmann, T., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 489–496. [Google Scholar]

- Martínez de Dios, J.R.; Ollero, A. Automatic Detection of Windows Thermal Heat Losses in Buildings Using UAVs. In Proceedings of the 2006 World Automation Congress, Budapest, Hungary, 24–26 July 2006; pp. 1–6. [Google Scholar]

- Wang, C.; Cho, Y.K.; Gai, M. As-Is 3D Thermal Modeling for Existing Building Envelopes Using a Hybrid LIDAR System. J. Comput. Civ. Eng. 2013, 27, 645–656. [Google Scholar] [CrossRef]

- Peng, X.; Zhong, X.; Chen, A.; Zhao, C.; Liu, C.; Chen, Y. Debonding Defect Quantification Method of Building Decoration Layers via UAV-Thermography and Deep Learning. Smart Struct. Syst. 2021, 28, 55–67. [Google Scholar] [CrossRef]

- Pini, F.; Ferrari, C.; Libbra, A.; Leali, F.; Muscio, A. Robotic Implementation of the Slide Method for Measurement of the Thermal Emissivity of Building Elements. Energy Build. 2016, 114, 241–246. [Google Scholar] [CrossRef]

- Bohari, S.N.; Amran, A.U.; Zaki, N.A.M.; Suhaimi, M.S.; Rasam, A.R.A. Accuracy Assessment of Detecting Cracks on Concrete Wall at Different Distances Using Unmanned Autonomous Vehicle (UAV) Images; IOP Publishing Ltd.: Bristol, UK, 2021; Volume 620. [Google Scholar]

- Yan, Y.; Mao, Z.; Wu, J.; Padir, T.; Hajjar, J.F. Towards Automated Detection and Quantification of Concrete Cracks Using Integrated Images and Lidar Data from Unmanned Aerial Vehicles. Struct. Control Health Monit. 2021, 28, e2757. [Google Scholar] [CrossRef]

- Liu, D.; Xia, X.; Chen, J.; Li, S. Integrating Building Information Model and Augmented Reality for Drone-Based Building Inspection. J. Comput. Civ. Eng. 2021, 35, 04020073. [Google Scholar] [CrossRef]

- Wang, J.; Luo, C. Automatic Wall Defect Detection Using an Autonomous Robot: A Focus on Data Collection. In Proceedings of the ASCE International Conference on Computing in Civil Engineering 2019, Atlanta, GA, USA, 17–19 June 2019; pp. 312–319. [Google Scholar]

- Vazquez-Nicolas, J.M.; Zamora, E.; González-Hernández, I.; Lozano, R.; Sossa, H. PD+SMC Quadrotor Control for Altitude and Crack Recognition Using Deep Learning. Int. J. Control Autom. Syst. 2020, 18, 834–844. [Google Scholar] [CrossRef]

- Lorenc, S.J.; Yeager, M.; Bernold, L.E. Field Test Results of the Remotely Controlled Drilled Shaft Inspection System (DSIS). In Proceedings of the Robotics 2000, Albuquerque, NM, USA, 27 February–2 March 2000; pp. 120–125. [Google Scholar]

- Shutin, D.; Malakhov, A.; Marfin, K. Applying Automated and Robotic Means in Construction as a Factor for Providing Constructive Safety of Buildings and Structures. MATEC Web Conf. 2018, 251, 3005. [Google Scholar] [CrossRef]

- Hamledari, H.; McCabe, B.; Davari, S. Automated Computer Vision-Based Detection of Components of under-Construction Indoor Partitions. Autom. Constr. 2017, 74, 78–94. [Google Scholar] [CrossRef]

- Hussung, D.; Kurz, J.; Stoppel, M. Automated Non-Destructive Testing Technology for Large-Area Reinforced Concrete Structures. Concr. Reinf. Concr. Constr. 2012, 107, 794–804. [Google Scholar] [CrossRef]

- Qu, T.; Zang, W.; Peng, Z.; Liu, J.; Li, W.; Zhu, Y.; Zhang, B.; Wang, Y. Construction Site Monitoring Using UAV Oblique Photogrammetry and BIM Technologies. In Proceedings of the 22nd International Conference of the Association for Computer-Aided Architectural Design Research in Asia (CAADRIA) 2017, Yunlin, Taiwan, 5–8 April 2017; The Association for Computer-Aided Architectural Design Research in Asia (CAADRIA): Hong Kong, China, 2017; pp. 655–663. [Google Scholar]

- Samsami, R.; Mukherjee, A.; Brooks, C.N. Application of Unmanned Aerial System (UAS) in Highway Construction Progress Monitoring Automation; American Society of Civil Engineers: Arlington, VA, USA, 2022; pp. 698–707. [Google Scholar]

- Han, D.; Lee, S.B.; Song, M.; Cho, J.S. Change Detection in Unmanned Aerial Vehicle Images for Progress Monitoring of Road Construction. Buildings 2021, 11, 150. [Google Scholar] [CrossRef]

- Shen, H.; Li, X.; Jiang, X.; Liu, Y. Automatic Scan Planning and Construction Progress Monitoring in Unknown Building Scene. In Proceedings of the 2021 IEEE International Conference on Robotics and Biomimetics (ROBIO), Sanya, China, 27–31 December 2021; pp. 1617–1622. [Google Scholar]

- Jacob-Loyola, N.; Muñoz-La Rivera, F.; Herrera, R.F.; Atencio, E. Unmanned Aerial Vehicles (UAVs) for Physical Progress Monitoring of Construction. Sensors 2021, 21, 4227. [Google Scholar] [CrossRef]

- Perez, M.A.; Zech, W.C.; Donald, W.N. Using Unmanned Aerial Vehicles to Conduct Site Inspections of Erosion and Sediment Control Practices and Track Project Progression. Transp. Res. Rec. 2015, 2528, 38–48. [Google Scholar] [CrossRef]

- Serrat, C.; Banaszek, S.; Cellmer, A.; Gilbert, V.; Banaszek, A. UAV, Digital Processing and Vectorization Techniques Applied to Building Condition Assessment and Follow-Up. Teh. Glas.-Tech. J. 2020, 14, 507–513. [Google Scholar] [CrossRef]

- Halder, S.; Afsari, K. Real-Time Construction Inspection in an Immersive Environment with an Inspector Assistant Robot. In Proceedings of the ASC2022. 58th Annual Associated Schools of Construction International Conference, Atlanta, GA, USA, 20–23 April 2022; Leathem, T., Collins, W., Perrenoud, A., Eds.; EasyChair: Atlanta, GA, USA, 2022; Volume 3, pp. 389–397. [Google Scholar]

- Bang, S.; Kim, H.; Kim, H. UAV-Based Automatic Generation of High-Resolution Panorama at a Construction Site with a Focus on Preprocessing for Image Stitching. Autom. Constr. 2017, 84, 70–80. [Google Scholar] [CrossRef]

- Asadi, K.; Ramshankar, H.; Pullagurla, H.; Bhandare, A.; Shanbhag, S.; Mehta, P.; Kundu, S.; Han, K.; Lobaton, E.; Wu, T. Vision-Based Integrated Mobile Robotic System for Real-Time Applications in Construction. Autom. Constr. 2018, 96, 470–482. [Google Scholar] [CrossRef]

- Ibrahim, A.; Sabet, A.; Golparvar-Fard, M. BIM-Driven Mission Planning and Navigation for Automatic Indoor Construction Progress Detection Using Robotic Ground Platform. Proc. 2019 Eur. Conf. Comput. Constr. 2019, 1, 182–189. [Google Scholar] [CrossRef]

- Shirina, N.V.; Kalachuk, T.G.; Shin, E.R.; Parfenyukova, E.A. Use of UAV to Resolve Construction Disputes; Lecture Notes in Civil Engineering; Springer: Berlin, Germany, 2021; Volume 151, p. 246. [Google Scholar]

- Shang, Z.; Shen, Z. Real-Time 3D Reconstruction on Construction Site Using Visual SLAM and UAV. Constr. Res. Congr. 2018, 44, 305–315. [Google Scholar]

- Freimuth, H.; König, M. A Toolchain for Automated Acquisition and Processing of As-Built Data with Autonomous UAVs; University College Dublin: Dublin, Ireland, 2019. [Google Scholar]

- Hamledari, H. IFC-Enabled Site-to-BIM Automation: An Interoperable Approach Toward the Integration of Unmanned Aerial Vehicle (UAV)-Captured Reality into BIM. In Proceedings of the BuildingSMART Int. Student Project Award 2017, London, UK, 15 August 2017. [Google Scholar]

- Tian, J.; Luo, S.; Wang, X.; Hu, J.; Yin, J. Crane Lifting Optimization and Construction Monitoring in Steel Bridge Construction Project Based on BIM and UAV. Adv. Civ. Eng. 2021, 2021, 5512229. [Google Scholar] [CrossRef]

- Freimuth, H.; König, M. A Framework for Automated Acquisition and Processing of As-Built Data with Autonomous Unmanned Aerial Vehicles. Sensors 2019, 19, 4513. [Google Scholar] [CrossRef]

- Wang, J.; Huang, S.; Zhao, L.; Ge, J.; He, S.; Zhang, C.; Wang, X. High Quality 3D Reconstruction of Indoor Environments Using RGB-D Sensors. In Proceedings of the 2017 12th IEEE Conference on Industrial Electronics and Applications (ICIEA), Siem Reap, Cambodia, 18–20 June 2017; pp. 1739–1744. [Google Scholar]

- Coetzee, G.L. Smart Construction Monitoring of Dams with UAVs: Neckartal Dam Water Project Phase 1; ICE Publishing: London, UK, 2018; pp. 445–456. [Google Scholar]

- Golparvar-Fard, M.; Peña-Mora, F.; Savarese, S. D4AR: A 4-Dimensional Augmented Reality Model for Automating Construction Progress Monitoring Data Collection, Processing and Communication. J. Inf. Technol. Constr. 2009, 14, 129–153. [Google Scholar]

- Lattanzi, D.; Miller, G.R. 3D Scene Reconstruction for Robotic Bridge Inspection. J. Infrastruct. Syst. 2015, 21, 04014041. [Google Scholar] [CrossRef]

- De Winter, H.; Bassier, M.; Vergauwen, M. Digitisation in Road Construction: Automation of As-Built Models; Copernicus GmbH: Göttingen, Germany, 2022; Volume 46, pp. 69–76. [Google Scholar]

- Kim, P.; Chen, J.; Cho, Y.K. SLAM-Driven Robotic Mapping and Registration of 3D Point Clouds. Autom. Constr. 2018, 89, 38–48. [Google Scholar] [CrossRef]

- Bang, S.; Kim, H.; Kim, H. Vision-Based 2D Map Generation for Monitoring Construction Sites Using UAV Videos. In Proceedings of the 2017 34th ISARC, Taipei, Taiwan, 1 July 2017; Chiu, K.C., Ed.; IAARC: Taipei, Taiwan, 2017; pp. 830–833. [Google Scholar]

- Kim, S.; Irizarry, J.; Costa, D.B. Field Test-Based UAS Operational Procedures and Considerations for Construction Safety Management: A Qualitative Exploratory Study. Int. J. Civ. Eng. 2020, 18, 919–933. [Google Scholar] [CrossRef]

- Gheisari, M.; Esmaeili, B. Applications and Requirements of Unmanned Aerial Systems (UASs) for Construction Safety. Saf. Sci. 2019, 118, 230–240. [Google Scholar] [CrossRef]

- Gheisari, M.; Rashidi, A.; Esmaeili, B. Using Unmanned Aerial Systems for Automated Fall Hazard Monitoring. In Proceedings of the Construction Research Congress 2018, New Orleans, LA, USA, 2–4 April 2018; pp. 62–72. [Google Scholar]

- Asadi, K.; Chen, P.; Han, K.; Wu, T.; Lobaton, E. Real-Time Scene Segmentation Using a Light Deep Neural Network Architecture for Autonomous Robot Navigation on Construction Sites. In Proceedings of the Computing in Civil Engineering 2019: Data, Sensing, and Analytics, Reston, VA, USA, 17–19 June 2019; American Society of Civil Engineers: Reston, VA, USA, 2019; pp. 320–327. [Google Scholar]

- Mantha, B.R.; García de Soto, B. Designing a Reliable Fiducial Marker Network for Autonomous Indoor Robot Navigation. In Proceedings of the 36th International Symposium on Automation and Robotics in Construction (ISARC), Banff, AB, Canada, 21–24 May 2019; pp. 21–24. [Google Scholar]

- Raja, A.K.; Pang, Z. High Accuracy Indoor Localization for Robot-Based Fine-Grain Inspection of Smart Buildings. In Proceedings of the 2016 IEEE International Conference on Industrial Technology (ICIT), Taipei, Taiwan, 14–17 March 2016; pp. 2010–2015. [Google Scholar]

- Myung, H.; Jung, J.; Jeon, H. Robotic SHM and Model-Based Positioning System for Monitoring and Construction Automation. Adv. Struct. Eng. 2012, 15, 943–954. [Google Scholar] [CrossRef]

- Chen, K.; Reichard, G.; Akanmu, A.; Xu, X. Geo-Registering UAV-Captured Close-Range Images to GIS-Based Spatial Model for Building Façade Inspections. Autom. Constr. 2021, 122, 103503. [Google Scholar] [CrossRef]

- Cesetti, A.; Frontoni, E.; Mancini, A.; Ascani, A.; Zingaretti, P.; Longhi, S. A Visual Global Positioning System for Unmanned Aerial Vehicles Used in Photogrammetric Applications. J. Intell. Robot. Syst. 2011, 61, 157–168. [Google Scholar] [CrossRef]

- Paterson, A.M.; Dowling, G.R.; Chamberlain, D.A. Building Inspection: Can Computer Vision Help? Autom. Constr. 1997, 7, 13–20. [Google Scholar] [CrossRef]

- Shang, Z.; Shen, Z. Vision Model-Based Real-Time Localization of Unmanned Aerial Vehicle for Autonomous Structure Inspection under GPS-Denied Environment. In Proceedings of the Computing in Civil Engineering 2019, Atlanta, GA, USA, 17–19 June 2019; pp. 292–298. [Google Scholar]

- Sugimoto, H.; Moriya, Y.; Ogasawara, T. Underwater Survey System of Dam Embankment by Remotely Operated Vehicle. In Proceedings of the 2017 IEEE Underwater Technology (UT), Busan, Republic of Korea, 21–24 February 2017; pp. 1–6. [Google Scholar]

- Ibrahim, A.; Roberts, D.; Golparvar-Fard, M.; Bretl, T. An Interactive Model-Driven Path Planning and Data Capture System for Camera-Equipped Aerial Robots on Construction Sites. In Proceedings of the ASCE International Workshop on Computing in Civil Engineering 2017, Seattle, WA, USA, 25–27 June 2017; American Society of Civil Engineers (ASCE): Seattle, WA, USA, 2017; pp. 117–124. [Google Scholar]

- Mantha, B. Navigation, Path Planning, and Task Allocation Framework For Mobile Co-Robotic Service Applications in Indoor Building Environments. Ph.D. Thesis, University of Michigan, Ann Arbor, MI, USA, 2018. [Google Scholar]

- Lin, S.; Kong, X.; Wang, J.; Liu, A.; Fang, G.; Han, Y. Development of a UAV Path Planning Approach for Multi-Building Inspection with Minimal Cost; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin, Germany, 2021; Volume 12606, p. 93. [Google Scholar]

- González-Desantos, L.M.; Frías, E.; Martínez-Sánchez, J.; González-Jorge, H. Indoor Path-Planning Algorithm for UAV-Based Contact Inspection. Sensors 2021, 21, 642. [Google Scholar] [CrossRef]

- Shi, L.; Mehrooz, G.; Jacobsen, R.H. Inspection Path Planning for Aerial Vehicles via Sampling-Based Sequential Optimization. In Proceedings of the 2021 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 15–18 June 2021; pp. 679–687. [Google Scholar]

- Asadi, K.; Ramshankar, H.; Pullagurla, H.; Bhandare, A.; Shanbhag, S.; Mehta, P.; Kundu, S.; Han, K.; Lobaton, E.; Wu, T. Building an Integrated Mobile Robotic System for Real-Time Applications in Construction. In Proceedings of the 35th International Symposium on Automation and Robotics in Construction (ISARC), Berlin, Germany, 20–25 July 2018; Teizer, J., Ed.; International Association for Automation and Robotics in Construction (IAARC): Taipei, Taiwan, 2018; pp. 453–461. [Google Scholar]

- Warszawski, A.; Rosenfeld, Y.; Shohet, I. Autonomous Mapping System for an Interior Finishing Robot. J. Comput. Civ. Eng. 1996, 10, 67–77. [Google Scholar] [CrossRef]

- Zhang, H.; Chi, S.; Yang, J.; Nepal, M.; Moon, S. Development of a Safety Inspection Framework on Construction Sites Using Mobile Computing. J. Manag. Eng. 2017, 33, 04016048. [Google Scholar] [CrossRef]

- Al-Kaff, A.; Moreno, F.; Jose, L.; Garcia, F.; Martin, D.; de la Escalera, A.; Nieva, A.; Garcea, J. VBII-UAV: Vision-Based Infrastructure Inspection-UAV; Rocha, A., Correia, A., Adeli, H., Reis, L., Costanzo, S., Eds.; Springer: Berlin, Germany, 2017; Volume 570, pp. 221–231. [Google Scholar]

- Seo, J.; Han, S.; Lee, S.; Kim, H. Computer Vision Techniques for Construction Safety and Health Monitoring. Adv. Eng. Inform. 2015, 29, 239–251. [Google Scholar] [CrossRef]

- Li, Y.; Li, X.; Wang, H.; Wang, S.; Gu, S.; Zhang, H. Exposed Aggregate Detection of Stilling Basin Slabs Using Attention U-Net Network. KSCE J. Civ. Eng. 2020, 24, 1740–1749. [Google Scholar] [CrossRef]

- Giergiel, M.; Buratowski, T.; Małka, P.; Kurc, K.; Kohut, P.; Majkut, K. The Project of Tank Inspection Robot. In Proceedings of the Structural Health Monitoring II, Online, 12 July 2012; Trans Tech Publications Ltd.: Bäch, Switzerland, 2012; Volume 518, pp. 375–383. [Google Scholar]

- Munawar, H.; Ullah, F.; Heravi, A.; Thaheem, M.; Maqsoom, A. Inspecting Buildings Using Drones and Computer Vision: A Machine Learning Approach to Detect Cracks and Damages. Drones 2022, 6, 5. [Google Scholar] [CrossRef]

- Alipour, M.; Harris, D.K. Increasing the Robustness of Material-Specific Deep Learning Models for Crack Detection across Different Materials. Eng. Struct. 2020, 206, 110157. [Google Scholar] [CrossRef]

- Chen, K.; Reichard, G.; Xu, X.; Akanmu, A. Automated Crack Segmentation in Close-Range Building Facade Inspection Images Using Deep Learning Techniques. J. Build. Eng. 2021, 43, 102913. [Google Scholar] [CrossRef]

- Kuo, C.-M.; Kuo, C.-H.; Lin, S.-P.; Manuel, M.; Lin, P.T.; Hsieh, Y.-C.; Lu, W.-H. Infrastructure Inspection Using an Unmanned Aerial System (UAS) With Metamodeling-Based Image Correction. In Proceedings of the ASME 2016 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Charlotte, NC, USA, 21–24 August 2016; American Society of Mechanical Engineers Digital Collection: New York, NY, USA, 2016. [Google Scholar]

- Peng, K.C.; Feng, L.; Hsieh, Y.C.; Yang, T.H.; Hsiung, S.H.; Tsai, Y.D.; Kuo, C. Unmanned Aerial Vehicle for Infrastructure Inspection with Image Processing for Quantification of Measurement and Formation of Facade Map. In Proceedings of the 2017 International Conference on Applied System Innovation (ICASI), Sapporo, Japan, 13–17 May 2017; pp. 1969–1972. [Google Scholar]

- Costa, D.B.; Mendes, A.T.C. Lessons Learned from Unmanned Aerial System-Based 3D Mapping Experiments. In Proceedings of the 52nd ASC Annual International Conference, Provo, UT, USA, 13–16 April 2016. [Google Scholar]

- Choi, S.; Kim, E. Image Acquisition System for Construction Inspection Based on Small Unmanned Aerial Vehicle. In Advanced Multimedia and Ubiquitous Engineering; Park, J.J., Jong, H., Chao, H.-C., Arabnia, H., Yen, N.Y., Eds.; Springer: Berlin/Heidelberg, Germany, 2015; pp. 273–280. [Google Scholar]

- Yan, R.-J.; Kayacan, E.; Chen, I.-M.; Tiong, L.; Wu, J. QuicaBot: Quality Inspection and Assessment Robot. IEEE Trans. Autom. Sci. Eng. 2018, 16, 506–517. [Google Scholar] [CrossRef]

- Ham, Y.; Kamari, M. Automated Content-Based Filtering for Enhanced Vision-Based Documentation in Construction toward Exploiting Big Visual Data from Drones. Autom. Constr. 2019, 105, 102831. [Google Scholar] [CrossRef]

- Heffron, R.E. The Use of Submersible Remotely Operated Vehicles for Inspection of Water-Filled Pipelines and Tunnels. In Proceedings of the Pipelines in the Constructed Environment, San Diego, CA, USA, 23–27 August 1998; pp. 397–404. [Google Scholar]

- Longo, D.; Muscato, G. Adhesion Control for the Alicia3 Climbing Robot. In Proceedings of the Climbing and Walking Robots, Madrid, Spain, 22–24 September 2004; Springer: Berlin/Heidelberg, Germany, 2005; pp. 1005–1015. [Google Scholar]

- Kuo, C.-H.; Kanlanjan, S.; Pagès, L.; Menzel, H.; Power, S.; Kuo, C.-M.; Boller, C.; Grondel, S. Effects of Enhanced Image Quality in Infrastructure Monitoring through Micro Aerial Vehicle Stabilization. In Proceedings of the European Workshop on Structural Health Monitoring, Nantes, France, 8–11 July 2014; pp. 710–717. [Google Scholar]

- González-deSantos, L.M.; Martínez-Sánchez, J.; González-Jorge, H.; Ribeiro, M.; de Sousa, J.B.; Arias, P. Payload for Contact Inspection Tasks with UAV Systems. Sensors 2019, 19, 3752. [Google Scholar] [CrossRef]

- Kuo, C.; Kuo, C.; Boller, C. Adaptive Measures to Control Micro Aerial Vehicles for Enhanced Monitoring of Civil Infrastructure. In Proceedings of the World Congress on Structural Control and Monitoring, Barcelona, Spain, 15–17 July 2014. [Google Scholar]

- Andriani, N.; Maulida, M.D.; Aniroh, Y.; Alfafa, M.F. Pressure Control of a Wheeled Wall Climbing Robot Using Proporsional Controller. In Proceedings of the 2016 International Conference on Information Communication Technology and Systems (ICTS), Surabaya, Indonesia, 12 October 2016; pp. 124–128. [Google Scholar]

- Lee, N.; Mita, A. Sensor Agent Robot with Servo-Accelerometer for Structural Health Monitoring. In Proceedings of the Sensors and Smart Structures Technologies for Civil, Mechanical, and Aerospace Systems 2012, San Diego, CA, USA, 12–15 March 2012; Tomizuka, M., Yun, C.-B., Lynch, J.P., Eds.; International Society for Optics and Photonics: Bellingham, WA, USA, 2012; Volume 8345, pp. 753–759. [Google Scholar]

- Elbanhawi, M.; Simic, M. Robotics Application in Remote Data Acquisition and Control for Solar Ponds. Appl. Mech. Mater. 2013, 253–255, 705–715. [Google Scholar] [CrossRef]

- Huston, D.R.; Esser, B.; Gaida, G.; Arms, S.W.; Townsend, C.P. Wireless Inspection of Structures Aided by Robots. Proc. SPIE 2001, 4337, 147–154. [Google Scholar] [CrossRef]

- Carrio, A.; Pestana, J.; Sanchez-Lopez, J.-L.; Suarez-Fernandez, R.; Campoy, P.; Tendero, R.; García-De-Viedma, M.; González-Rodrigo, B.; Bonatti, J.; Gregorio Rejas-Ayuga, J.; et al. UBRISTES: UAV-Based Building Rehabilitation with Visible and Thermal Infrared Remote Sensing. Adv. Intell. Syst. Comput. 2016, 417, 245–256. [Google Scholar] [CrossRef]

- Rakha, T.; El Masri, Y.; Chen, K.; Panagoulia, E.; De Wilde, P. Building Envelope Anomaly Characterization and Simulation Using Drone Time-Lapse Thermography. Energy Build. 2022, 259, 111754. [Google Scholar] [CrossRef]

- Martínez De Dios, J.R.; Ollero, A.; Ferruz, J. Infrared Inspection of Buildings Using Autonomous Helicopters. IFAC Proc. Vol. 2006, 4, 602–607.

- Ortiz-Sanz, J.; Gil-Docampo, M.; Arza-García, M.; Cañas-Guerrero, I. IR Thermography from UAVs to Monitor Thermal Anomalies in the Envelopes of Traditional Wine Cellars: Field Test. Remote Sens. 2019, 11, 1424.

- Eschmann, C.; Kuo, C.-M.; Kuo, C.-H.; Boller, C. High-Resolution Multisensor Infrastructure Inspection with Unmanned Aircraft Systems. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, XL-1/W2, 125–129.

- Caroti, G.; Piemonte, A.; Zaragoza, I.M.-E.; Brambilla, G. Indoor Photogrammetry Using UAVs with Protective Structures: Issues and Precision Tests. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 137–142.

- Cocchioni, F.; Pierfelice, V.; Benini, A.; Mancini, A.; Frontoni, E.; Zingaretti, P.; Ippoliti, G.; Longhi, S. Unmanned Ground and Aerial Vehicles in Extended Range Indoor and Outdoor Missions. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 374–382.

- Causa, F.; Fasano, G.; Grassi, M. Improving Autonomy in GNSS-Challenging Environments by Multi-UAV Cooperation. In Proceedings of the 2019 IEEE/AIAA 38th Digital Avionics Systems Conference (DASC), San Diego, CA, USA, 8–12 September 2019; pp. 1–10.

- Mantha, B.; Menassa, C.; Kamat, V. Multi-Robot Task Allocation and Route Planning for Indoor Building Environment Applications. In Proceedings of the Construction Research Congress 2018, New Orleans, LA, USA, 2–4 April 2018; American Society of Civil Engineers (ASCE): New Orleans, LA, USA, 2018; Volume 2018-April, pp. 137–146.

- Mantha, B.R.K.; Menassa, C.C.; Kamat, V.R. Task Allocation and Route Planning for Robotic Service Networks in Indoor Building Environments. J. Comput. Civ. Eng. 2017, 31, 04017038.

- Moud, H.I.; Zhang, X.; Flood, I.; Shojaei, A.; Zhang, Y.; Capano, C. Qualitative and Quantitative Risk Analysis of Unmanned Aerial Vehicle Flights on Construction Job Sites: A Case Study. Int. J. Adv. Intell. Syst. 2019, 12, 135–146.

- Federal Aviation Administration Part 107 Waiver. Available online: https://www.faa.gov/uas/commercial_operators/part_107_waivers (accessed on 19 June 2022).

- Occupational Safety and Health Administration (OSHA). OSHA’s Use of Unmanned Aircraft Systems in Inspections, 11/10/2016. Available online: https://www.osha.gov/dep/memos/use-of-unmanned-aircraft-systems-inspection_memo_05182018.html (accessed on 20 January 2021).

- Moud, H.I.; Flood, I.; Shojaei, A.; Zhang, Y.; Zhang, X.; Tadayon, M.; Hatami, M. Qualitative Assessment of Indirect Risks Associated with Unmanned Aerial Vehicle Flights over Construction Job Sites. In Proceedings of the Computing in Civil Engineering 2019, Atlanta, GA, USA, 17–19 June 2019; pp. 83–89.

- Paes, D.; Kim, S.; Irizarry, J. Human Factors Considerations of First Person View (FPV) Operation of Unmanned Aircraft Systems (UAS) in Infrastructure Construction and Inspection Environments. In Proceedings of the 6th CSCE-CRC International Construction Specialty Conference, Vancouver, BC, Canada, 31 May–3 June 2017.

- Izadi Moud, H.; Razkenari, M.A.; Flood, I.; Kibert, C. A Flight Simulator for Unmanned Aerial Vehicle Flights Over Construction Job Sites. In Advances in Informatics and Computing in Civil and Construction Engineering; Mutis, I., Hartmann, T., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 609–616.

- Petukhov, A.; Rachkov, M. Video Compression Method for On-Board Systems of Construction Robots. In Proceedings of the 20th International Symposium on Automation and Robotics in Construction ISARC 2003 – The Future Site; Maas, G., Van Gassel, F., Eds.; International Association for Automation and Robotics in Construction (IAARC): Eindhoven, The Netherlands, 2003; pp. 443–447.

- Schilling, K. Telediagnosis and Teleinspection Potential of Telematic Techniques. Adv. Eng. Softw. 2000, 31, 875–879.

- Yang, Y.; Nagarajaiah, S. Robust Data Transmission and Recovery of Images by Compressed Sensing for Structural Health Diagnosis. Struct. Control Health Monit. 2017, 24, e1856.

- Li, Z.; Zhang, B. Research on Application of Robot and IOT Technology in Engineering Quality Intelligent Inspection. In Proceedings of the 2020 2nd International Conference on Robotics, Intelligent Control and Artificial Intelligence, Shanghai, China, 17–19 October 2020; pp. 17–22.

- Mahama, E.; Walpita, T.; Karimoddini, A.; Eroglu, A.; Goudarzi, N.; Cavalline, T.; Khan, M. Testing and Evaluation of Radio Frequency Immunity of Unmanned Aerial Vehicles for Bridge Inspection. In Proceedings of the 2021 IEEE Aerospace Conference, Virtual, 6–20 March 2021; pp. 1–8.

- Xia, P.; Xu, F.; Qi, Z.; Du, J. Human Robot Comparison in Rapid Structural Inspection. In Proceedings of the Construction Research Congress, Arlington, VA, USA, 9–12 March 2022; American Society of Civil Engineers: Reston, VA, USA, 2022; pp. 570–580.

- Van Dam, J.; Krasner, A.; Gabbard, J. Augmented Reality for Infrastructure Inspection with Semi-Autonomous Aerial Systems: An Examination of User Performance, Workload, and System Trust. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 743–744.

- Li, Y.; Karim, M.; Qin, R. A Virtual-Reality-Based Training and Assessment System for Bridge Inspectors with an Assistant Drone. IEEE Trans. Hum.-Mach. Syst. 2022, 52, 591–601.

- Van Dam, J.; Krasner, A.; Gabbard, J.L. Drone-Based Augmented Reality Platform for Bridge Inspection: Effect of AR Cue Design on Visual Search Tasks. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 201–204.

- Eiris, R.; Albeaino, G.; Gheisari, M.; Benda, W.; Faris, R. InDrone: A 2D-Based Drone Flight Behavior Visualization Platform for Indoor Building Inspection. Smart Sustain. Built Environ. 2021, 10, 438–456.

- Cheng, W.; Shen, H.; Chen, Y.; Jiang, X.; Liu, Y. Automatical Acquisition of Point Clouds of Construction Sites and Its Application in Autonomous Interior Finishing Robot. In Proceedings of the 2019 IEEE International Conference on Robotics and Biomimetics (ROBIO), Dali, China, 6–8 December 2019; pp. 1711–1716.

- Salaan, C.J.; Tadakuma, K.; Okada, Y.; Ohno, K.; Tadokoro, S. UAV with Two Passive Rotating Hemispherical Shells and Horizontal Rotor for Hammering Inspection of Infrastructure. In Proceedings of the 2017 IEEE/SICE International Symposium on System Integration (SII), Taipei, Taiwan, 11–14 December 2017; pp. 769–774.

- Dorafshan, S.; Thomas, R.J.; Maguire, M. SDNET2018: An Annotated Image Dataset for Non-Contact Concrete Crack Detection Using Deep Convolutional Neural Networks. Data Brief 2018, 21, 1664–1668.

- Moud, H.I.; Shojaei, A.; Flood, I.; Zhang, X. Monte Carlo Based Risk Analysis of Unmanned Aerial Vehicle Flights over Construction Job Sites. In Proceedings of the 8th International Conference on Simulation and Modeling Methodologies, Technologies and Applications, Porto, Portugal, 29–31 July 2018; pp. 451–458.

- Karnik, N.; Bora, U.; Bhadri, K.; Kadambi, P.; Dhatrak, P. A Comprehensive Study on Current and Future Trends towards the Characteristics and Enablers of Industry 4.0. J. Ind. Inf. Integr. 2022, 27, 100294.

- Brosque, C.; Galbally, E.; Khatib, O.; Fischer, M. Human-Robot Collaboration in Construction: Opportunities and Challenges. In Proceedings of the 2020 International Congress on Human-Computer Interaction, Optimization and Robotic Applications (HORA), Ankara, Turkey, 26–27 June 2020; pp. 1–8.

- Salmi, T.; Ahola, J.M.; Heikkilä, T.; Kilpeläinen, P.; Malm, T. Human-Robot Collaboration and Sensor-Based Robots in Industrial Applications and Construction. In Robotic Building; Bier, H., Ed.; Springer International Publishing: Cham, Switzerland, 2018; pp. 25–52. ISBN 978-3-319-70866-9.

- Reardon, C.; Fink, J. Air-Ground Robot Team Surveillance of Complex 3D Environments. In Proceedings of the 2016 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Lausanne, Switzerland, 23–27 October 2016; Melo, K., Ed.; IEEE: Piscataway, NJ, USA, 2016; pp. 320–327.

- Alonso-Martin, F.; Malfaz, M.; Sequeira, J.; Gorostiza, J.F.; Salichs, M.A. A Multimodal Emotion Detection System during Human–Robot Interaction. Sensors 2013, 13, 15549–15581.

- Adami, P.; Rodrigues, P.B.; Woods, P.J.; Becerik-Gerber, B.; Soibelman, L.; Copur-Gencturk, Y.; Lucas, G. Effectiveness of VR-Based Training on Improving Construction Workers’ Knowledge, Skills, and Safety Behavior in Robotic Teleoperation. Adv. Eng. Inform. 2021, 50, 101431.

| Description | Results |
|---|---|
| Timespan | 1991–2022 |
| Sources (Journals, Books, etc.) | 185 |
| Documents | 269 |
| Average years from publication | 5.25 |
| Average citations per document | 11.13 |
| Average citations per year per document | 1.591 |
| References | 463 |
| DOCUMENT TYPES | |
| Article | 100 |
| Book chapter | 6 |
| Conference paper | 163 |
| DOCUMENT CONTENTS | |
| Author’s Keywords (DE) | 515 |
| AUTHORS | |
| Authors | 663 |
| Author Appearances | 823 |
| Authors of single-authored documents | 70 |
| Authors of multi-authored documents | 593 |
| AUTHORS COLLABORATION | |
| Single-authored documents | 70 |
| Documents per Author | 0.406 |
| Authors per Document | 2.46 |
| Co-Authors per Document | 3.06 |
| Collaboration Index | 2.98 |
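The four collaboration ratios at the bottom of the table follow from the raw counts above them, and recomputing them is a quick consistency check on the summary. The sketch below assumes the ratio definitions used by the descriptive summary of the bibliometrix R package (the "(DE)" keyword field tag suggests that toolkit, though this section does not say so explicitly); the helper name `collaboration_indicators` is hypothetical, and the counts are copied from the table.

```python
# Consistency check for the bibliometric summary table.
# Assumption: ratio definitions follow the bibliometrix descriptive summary;
# `collaboration_indicators` is a hypothetical helper, not part of any library.

DOCUMENTS = 269
AUTHORS = 663
AUTHOR_APPEARANCES = 823
SINGLE_AUTHORED_DOCUMENTS = 70
AUTHORS_OF_MULTI_AUTHORED_DOCUMENTS = 593
DOCUMENT_TYPES = {"Article": 100, "Book chapter": 6, "Conference paper": 163}


def collaboration_indicators(documents: int, authors: int, appearances: int,
                             single_authored_docs: int,
                             multi_doc_authors: int) -> dict[str, float]:
    """Return the four ratio indicators, rounded to the precision shown in the table."""
    multi_authored_docs = documents - single_authored_docs  # documents with 2+ authors
    return {
        "documents_per_author": round(documents / authors, 3),
        "authors_per_document": round(authors / documents, 2),
        # Appearances count an author once for every document they co-wrote.
        "coauthors_per_document": round(appearances / documents, 2),
        # Collaboration Index: authors of multi-authored documents
        # divided by the number of multi-authored documents.
        "collaboration_index": round(multi_doc_authors / multi_authored_docs, 2),
    }


# The document-type breakdown should account for every document.
assert sum(DOCUMENT_TYPES.values()) == DOCUMENTS

indicators = collaboration_indicators(DOCUMENTS, AUTHORS, AUTHOR_APPEARANCES,
                                      SINGLE_AUTHORED_DOCUMENTS,
                                      AUTHORS_OF_MULTI_AUTHORED_DOCUMENTS)
for name, value in indicators.items():
    print(f"{name}: {value}")
```

Under these assumed definitions, each recomputed ratio matches the reported value (0.406, 2.46, 3.06 and 2.98), and the three document types sum to the 269 documents in the corpus.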

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Halder, S.; Afsari, K. Robots in Inspection and Monitoring of Buildings and Infrastructure: A Systematic Review. Appl. Sci. 2023, 13, 2304. https://doi.org/10.3390/app13042304
