Assessing the Relationship between Cognitive Workload, Workstation Design, User Acceptance and Trust in Collaborative Robots

Abstract

1. Introduction

2. Literature Review

3. Materials and Methods

3.1. Participants

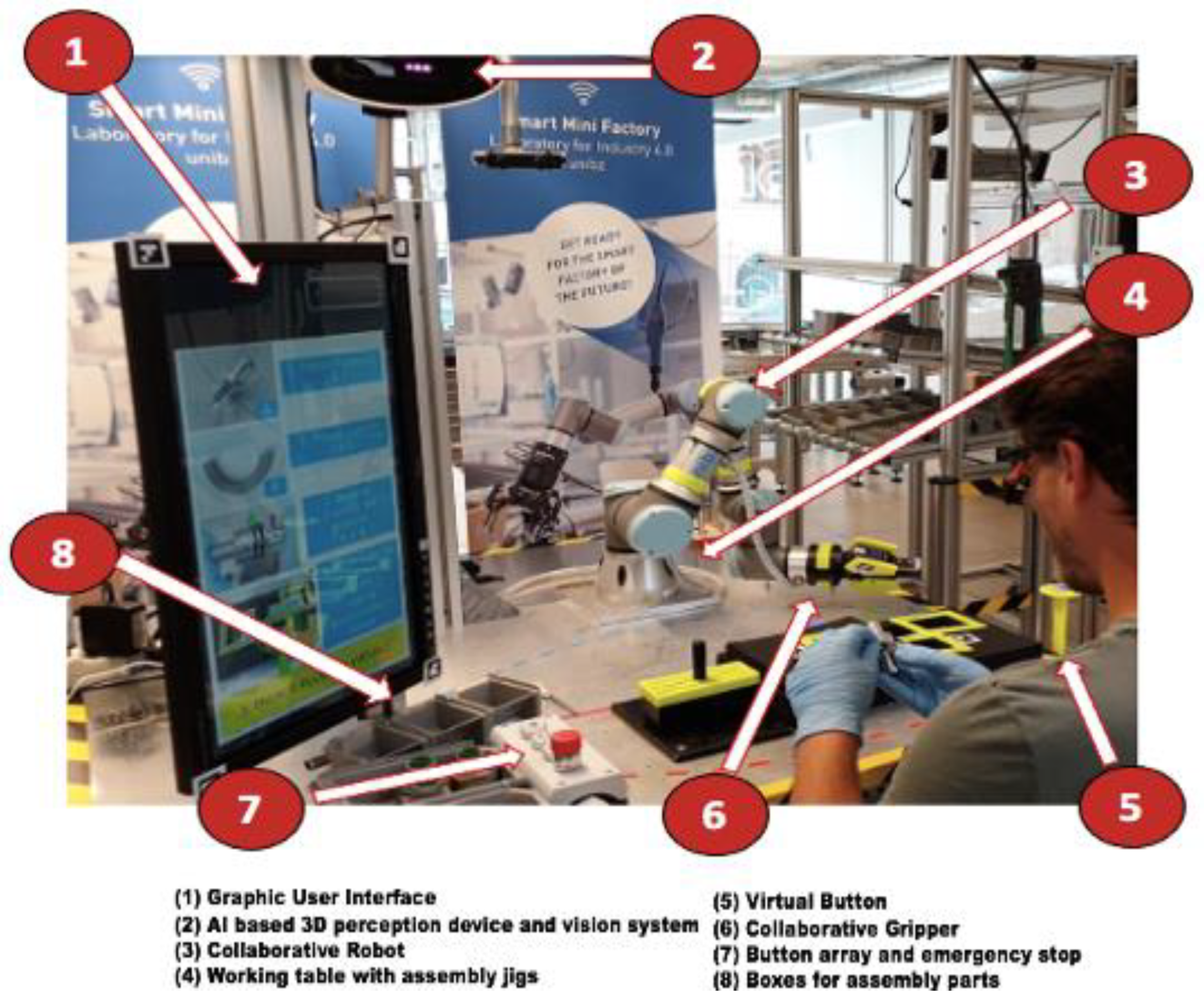

3.2. Experimental Setup

3.3. Measures

3.3.1. Cognitive Workload

3.3.2. User Acceptance

3.3.3. Perceived Stress

3.3.4. Trust

3.4. Data Analysis

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

User acceptance: semantic differential items (6 items)

- Useful—Unuseful

- Pleasant—Unpleasant

- Nice—Annoying

- Effective—Superfluous

- Irritating—Likable

- Raising alertness—Sleep-inducing

User acceptance: Likert-scale items (5 items)

- I think that I would like to use the robot frequently.

- I found the robot unnecessarily complex.

- I found that the robot was performing its tasks in a good way.

- I found that the robot was not functioning according to the task to be performed.

- I found that the robot was difficult to be used.

Cognitive workload (1 item)

- How mentally demanding was the task?

Perceived stress: semantic differential items (5 items)

- Irritated—Serene

- Worried—Carefree

- Motivated to complete the task—Unmotivated

- Competent—Unskilled

- Comfortable—Uncomfortable

Trust (9 items)

- The way in which the robot moved made me feel uncomfortable.

- The speed with which the robot picked and released the components made me feel uneasy.

- I trusted that the robot was safe to cooperate with.

- I was comfortable the robot would not hurt me.

- I felt safe interacting with the robot.

- I knew the gripper would not drop the components.

- The robot gripper did not look reliable.

- The gripper seemed like it could be trusted.

- I felt I could rely on the robot to do what it was supposed to do.

References

- Kadir, B.A.; Broberg, O. Human-centered design of work systems in the transition to industry 4.0. Appl. Ergon. 2021, 92.

- Wang, L.; Törngren, M.; Onori, M. Current status and advancement of cyber-physical systems in manufacturing. J. Manuf. Syst. 2015, 37, 517–527.

- Oztemel, E.; Gursev, S. Literature review of Industry 4.0 and related technologies. J. Intell. Manuf. 2020, 31, 127–182.

- Kolbeinsson, A.; Lagerstedt, E.; Lindblom, J. Foundation for a classification of collaboration levels for human-robot cooperation in manufacturing. Prod. Manuf. Res. 2019, 7, 448–471.

- Huang, Z.; Xi, F.; Huang, T.; Dai, J.S.; Sinatra, R. Lower-mobility parallel robots: Theory and applications. Adv. Mech. Eng. 2010, 2, 927930.

- ISO/9241-210; Ergonomics of Human-System Interaction—Human-Centered Design for Interactive Systems. International Organization for Standardization: Geneva, Switzerland, 2010.

- Cherubini, A.; Passama, R.; Crosnier, A.; Lasnier, A.; Fraisse, P. Collaborative Manufacturing with Physical Human-Robot Interaction. Robot. Comput.-Integr. Manuf. 2016, 40, 1–13.

- Villani, V.; Sabattini, L.; Czerniak, J.; Mertens, A.; Vogel-Heuser, B.; Fantuzzi, C. Towards Modern Inclusive Factories: A Methodology for the Development of Smart Adaptive Human-Machine Interfaces. In Proceedings of the IEEE International Conference on Emerging Technologies and Factory Automation, Limassol, Cyprus, 12–15 September 2017; pp. 1–7.

- Messeri, C. Enhancing the Quality of Human-Robot Cooperation Through the Optimisation of Human Well-Being and Productivity; Special Topics in Information Technology. SpringerBriefs in Applied Sciences and Technology; Springer: Cham, Switzerland, 2023.

- Oliveira, R.; Arriaga, P.; Alves-Oliveira, P.; Correia, F.; Petisca, S.; Paiva, A. Friends or Foes? In Proceedings of the 2018 ACM/IEEE International Conference on Human-Robot Interaction, Chicago, IL, USA, 5–8 March 2018; pp. 279–288.

- Weiss, A.; Huber, A.; Minichberger, J.; Ikeda, M. First application of robot teaching in an existing industry 4.0 environment: Does it really work? Societies 2016, 6, 20.

- Fink, J. Anthropomorphism and human likeness in the design of robots and human-robot interaction. In International Conference on Social Robotics; Springer: Berlin/Heidelberg, Germany, 2012; pp. 199–208.

- Michalos, G.; Makris, S.; Tsarouchi, P.; Guasch, T.; Kontovrakis, D.; Chryssolouris, G. Design considerations for safe human-robot collaborative workplaces. Procedia CIRP 2015, 37, 248–253.

- Lindblom, J.; Wang, W. Towards an evaluation framework of safety, trust, and operator experience in different demonstrators of human-robot collaboration. Adv. Manuf. Technol. 2018, 32, 145–150.

- Baumgartner, M.; Kopp, T.; Kinkel, S. Analysing Factory Workers’ Acceptance of Collaborative Robots: A Web-Based Tool for Company Representatives. Electronics 2022, 11, 145.

- Cardoso, A.; Colim, A.; Bicho, E.; Braga, A.C.; Menozzi, M.; Arezes, P. Ergonomics and Human Factors as a Requirement to Implement Safer Collaborative Robotic Workstations: A Literature Review. Safety 2021, 7, 71.

- Hopko, S.; Wang, J.; Mehta, R. Human Factors Considerations and Metrics in Shared Space Human-Robot Collaboration: A Systematic Review. Front. Robot. AI 2022, 9, 799522.

- Kalakoski, V.; Selinheimo, S.; Valtonen, T.; Turunen, J.; Käpykangas, S.; Ylisassi, H.; Toivio, P.; Järnefelt, H.; Hannonen, H.; Paajanen, T. Effects of a cognitive ergonomics workplace intervention (CogErg) on cognitive strain and well-being: A cluster-randomised controlled trial. A study protocol. BMC Psychol. 2020, 8, 1.

- Cascio, W.F.; Montealegre, R. How technology is changing work and organisations. Annu. Rev. Organ. Psychol. 2016, 3, 349–375.

- Czerniak, J.N.; Brandl, C.; Mertens, A. Designing human-machine interaction concepts for machine tool controls regarding ergonomic requirements. IFAC-PapersOnLine 2017, 50, 1378–1383.

- Kong, F. Development of metric method and framework model of integrated complexity evaluations of production process for ergonomics workstations. Int. J. Prod. Res. 2019, 57, 2429–2445.

- Sadrfaridpour, B.; Burke, J.; Wang, Y. Human and robot collaborative assembly manufacturing: Trust dynamics and control. In RSS 2014 Workshop on Human-Robot Collaboration for Industrial Manufacturing; Springer: Boston, MA, USA, 2014.

- Wickens, C.D. Mental workload: Assessment, prediction and consequences. In Human Mental Workload: Models and Applications; Springer: Cham, Switzerland, 2017; pp. 18–29.

- Gualtieri, L.; Fraboni, F.; De Marchi, M.; Rauch, E. Development and evaluation of design guidelines for cognitive ergonomics in human-robot collaborative assembly systems. Appl. Ergon. 2022, 104, 103807.

- Wickens, C.D.; Lee, J.D.; Liu, Y.; Becker, S.E.G. An Introduction to Human Factors Engineering; Pearson Education: London, UK, 2004.

- Habib, K.; Gouda, M.; El-Basyouny, K. Calibrating Design Guidelines using Mental Workload and Reliability Analysis. Transp. Res. Rec. J. Transp. Res. Board 2020, 2674, 360–369.

- Longo, L. Experienced mental workload, perception of usability, their interaction and impact on task performance. PLoS ONE 2018, 13, e0199661.

- Gaillard, A.W.K. Concentration, Stress and Performance. In Performance Under Stress; Ashgate: Aldershot, UK, 2008; pp. 59–75.

- Alsuraykh, N.H.; Wilson, M.L.; Tennent, P.; Sharples, S. How stress and mental workload are connected. In Proceedings of the 13th EAI International Conference on Pervasive Computing Technologies for Healthcare, Trento, Italy, 20–23 May 2019; pp. 371–376.

- Biondi, F.N.; Cacanindin, A.; Douglas, C.; Cort, J. Overloaded and at Work: Investigating the Effect of Cognitive Workload on Assembly Task Performance. Hum. Factors 2021, 63, 813–820.

- Liu, J.C.; Li, K.A.; Yeh, S.L.; Chien, S.Y. Assessing Perceptual Load and Cognitive Load by Fixation-Related Information of Eye Movements. Sensors 2022, 22, 1187.

- Lazarus, R.S.; Folkman, S. Stress, Appraisal and Coping; Springer: New York, NY, USA, 1984.

- Rojas, R.A.; Garcia, M.A.R.; Wehrle, E.; Vidoni, R. A variational approach to minimum-jerk trajectories for psychological safety in collaborative assembly stations. IEEE Robot. Autom. Lett. 2019, 4, 823–829.

- Arai, T.; Kato, R.; Fujita, M. Assessment of operator stress induced by robot collaboration in assembly. CIRP Ann. Manuf. Technol. 2010, 59, 5–8.

- Lagomarsino, M.; Lorenzini, M.; Balatti, P.; Momi, E.D.; Ajoudani, A. Pick the Right Co-Worker: Online Assessment of Cognitive Ergonomics in Human-Robot Collaborative Assembly. IEEE Trans. Cogn. Dev. Syst. 2022; online ahead of print.

- Bortot, D.; Born, M.; Bengler, K. Directly or on detours? How should industrial robots approximate humans? In Proceedings of the 2013 8th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Tokyo, Japan, 3–6 March 2013; pp. 89–90.

- Koppenborg, M.; Nickel, P.; Naber, B.; Lungfiel, A.; Huelke, M. Effects of movement speed and predictability in human–robot collaboration. Hum. Factors Ergon. Manuf. Serv. Ind. 2017, 27, 197–209.

- Dillon, A. User acceptance of information technology. In Encyclopedia of Human Factors and Ergonomics; Taylor and Francis: London, UK, 2001.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Kuz, S.; Mayer, M.P.; Müller, S.; Schlick, C.M. Using anthropomorphism to improve the human-machine interaction in industrial environments (part I). In Digital Human Modeling and Applications in Health, Safety, Ergonomics and Risk Management; Springer: Berlin/Heidelberg, Germany, 2013; pp. 76–85.

- Mayer, M.P.; Kuz, S.; Schlick, C.M. Using anthropomorphism to improve the human-machine interaction in industrial environments (part II). In Digital Human Modeling and Applications in Health, Safety, Ergonomics and Risk Management; Springer: Berlin/Heidelberg, Germany, 2013; pp. 93–100.

- Flash, T.; Hogan, N. The coordination of arm movements: An experimentally confirmed mathematical model. J. Neurosci. 1985, 5, 1688–1703.

- Kokabe, M.; Shibata, S.; Yamamoto, T. Modeling of handling motion reflecting emotional state and its application to robots. In Proceedings of the 2008 SICE Annual Conference, Chofu, Japan, 20–22 August 2008; pp. 495–501.

- Gopinath, V.; Ore, F.; Johansen, K. Safe assembly cell layout through risk assessment–an application with hand guided industrial robot. Procedia CIRP 2017, 63, 430–435.

- Tang, G.; Webb, P.; Thrower, J. The development and evaluation of Robot Light Skin: A novel robot signalling system to improve communication in industrial human–robot collaboration. Robot. Comput. Integr. Manuf. 2019, 56, 85–94.

- De Visser, E.J.; Peeters, M.M.; Jung, M.F.; Kohn, S.; Shaw, T.H.; Pak, R.; Neerincx, M.A. Towards a theory of longitudinal trust calibration in human–robot teams. Int. J. Soc. Robot. 2020, 12, 459–478.

- Kuz, S.; Schlick, C.; Lindgaard, G.; Moore, D. Anthropomorphic motion control for safe and efficient human-robot cooperation in assembly system. In Proceedings of the 19th Triennial Congress of the IEA, Melbourne, Australia, 9–14 August 2015.

- Petruck, H.; Faber, M.; Giese, H.; Geibel, M.; Mostert, S.; Usai, M.; Mertens, A.; Brandl, C. Human-robot collaboration in manual assembly—A collaborative workplace. In Congress of the International Ergonomics Association; Springer: Cham, Switzerland, 2018; pp. 21–28.

- Hancock, P.A.; Billings, D.R.; Schaefer, K.E.; Chen, J.Y.C.; de Visser, E.J.; Parasuraman, R. A meta-analysis of factors affecting trust in human-robot interaction. Hum. Factors 2011, 53, 517–527.

- De Marchi, M.; Gualtieri, L.; Rojas, R.A.; Rauch, E.; Cividini, F. Integration of an Artificial Intelligence Based 3D Perception Device into a Human-Robot Collaborative Workstation for Learning Factories. In Proceedings of the Conference on Learning Factories (CLF), Graz, Austria, 10 June 2021.

- Kassner, M.; Patera, W.; Bulling, A. Pupil: An open source platform for pervasive eye tracking and mobile gaze-based interaction. arXiv 2014, arXiv:1405.0006.

- Van Der Laan, J.D.; Heino, A.; De Waard, D. A simple procedure for the assessment of acceptance of advanced transport telematics. Transp. Res. Part C Emerg. Technol. 1997, 5, 1–10.

- Lewis, J.J.R.; Sauro, J. Revisiting the Factor Structure of the System Usability Scale. J. Usability Stud. 2017, 12, 183–192.

- NASA. National Aeronautics and Space Administration Task Load Index (TLX): Computerised Version; Human Research Performance Group NASA Ames Research Center: Mountain View, CA, USA, 1986.

- Helton, W.S. Validation of a Short Stress State Questionnaire. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2004, 48, 1238–1242.

- Charalambous, G.; Fletcher, S.; Webb, P. The development of a scale to evaluate trust in industrial human-robot collaboration. Int. J. Soc. Robot. 2016, 8, 193–209.

- Hayes, A.F. Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-Based Approach; Guilford: New York, NY, USA, 2017.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1988.

- Hackman, J.R.; Oldham, G.R. Motivation through the Design of Work: Test of a Theory. Organ. Behav. Hum. Perform. 1976, 16, 250–279.

- Deci, E.L.; Ryan, R.M. The “what” and “why” of goal pursuits: Human needs and the self-determination of behavior. Psychol. Inq. 2000, 11, 227–268.

- Jetter, H.; Reiterer, H.; Geyer, F. Blended interaction: Understanding natural human–computer interaction in post-WIMP interactive spaces. Pers. Ubiquitous Comput. 2013, 18, 1139–1158.

- Haider, J.D.; Pohl, M.; Fröhlich, P. Defining Visual User Interface Design Recommendations for Highway Traffic Management Centres. In Proceedings of the 17th International Conference on Information Visualisation, London, UK, 16–18 July 2013; pp. 204–209.

- Spence, C.; Driver, J. Cross-modal links in attention between audition, vision, and touch: Implications for interface design. Int. J. Cogn. Ergon. 1997, 1, 351–373.

- Schlienger, C.; Conversy, S.; Chatty, S.; Anquetil, M.; Mertz, C. Improving users’ comprehension of changes with animation and sound: An empirical assessment. In IFIP Conference on Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2007; pp. 207–220.

- Parasuraman, A. Technology Readiness Index (TRI) a multiple-item scale to measure readiness to embrace new technologies. J. Serv. Res. 2000, 2, 307–320.

- Lin, C.H.; Shih, H.Y.; Sher, P.J. Integrating technology readiness into technology acceptance: The TRAM model. Psychol. Mark. 2007, 24, 641–657.

- Wang, W.; Benbasat, I. Trust in and adoption of online recommendation agents. J. Assoc. Inf. Syst. 2005, 6, 72–101.

- Petzoldt, C.; Niermann, D.; Maack, E.; Sontopski, M.; Vur, B.; Freitag, M. Implementation and Evaluation of Dynamic Task Allocation for Human–Robot Collaboration in Assembly. Appl. Sci. 2022, 12, 12645.

| Feature | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|
| Robot’s speed | Lower than nominal values | Higher than nominal values | Set by participants (nominal, slower, higher) |
| Robot’s autonomy | Low | High | Intermediate |
| Robot’s trajectories | Point-to-point trapezoidal velocity profile trajectories | Point-to-point trapezoidal velocity profile trajectories | Minimum-jerk trajectories |
| Type of commands | Touch button | Gesture recognition system | Set by participants (touch or gesture recognition) |
| Notifications | Only instructions | Instructions and robot’s status | Instructions and robot’s status and speed |
| Training on safety | No info to participants | Basic training | Full training (including commands and GUI) |
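
The two trajectory types named in the table can be contrasted with a short sketch. It uses the standard minimum-jerk polynomial for a rest-to-rest point-to-point move (as in Flash and Hogan's model) and a symmetric trapezoidal velocity profile. The function names, the one-dimensional setting, and the `accel_fraction` parameter are illustrative assumptions, not the configuration used in the study.

```python
def _clamp01(value):
    """Clamp a normalised time to the interval [0, 1]."""
    return min(max(value, 0.0), 1.0)


def minimum_jerk_position(x0, xf, duration, t):
    """Position at time t on a rest-to-rest minimum-jerk move from x0 to xf."""
    tau = _clamp01(t / duration)
    blend = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return x0 + (xf - x0) * blend


def minimum_jerk_velocity(x0, xf, duration, t):
    """Velocity at time t on the same move (time derivative of the position)."""
    tau = _clamp01(t / duration)
    blend_rate = (30 * tau**2 - 60 * tau**3 + 30 * tau**4) / duration
    return (xf - x0) * blend_rate


def trapezoidal_velocity(x0, xf, duration, t, accel_fraction=0.25):
    """Velocity at time t on a symmetric trapezoidal profile.

    accel_fraction (hypothetical parameter) is the share of the total
    duration spent accelerating; the same share is spent decelerating.
    It must lie in (0, 0.5].
    """
    ramp = accel_fraction * duration
    # Peak velocity chosen so the area under the trapezoid equals xf - x0.
    v_peak = (xf - x0) / (duration - ramp)
    if t <= 0.0 or t >= duration:
        return 0.0
    if t < ramp:
        return v_peak * t / ramp
    if t > duration - ramp:
        return v_peak * (duration - t) / ramp
    return v_peak


if __name__ == "__main__":
    # Sample both velocity profiles over a 1 m move lasting 2 s.
    for step in range(9):
        t = step * 0.25
        print(t,
              round(minimum_jerk_velocity(0.0, 1.0, 2.0, t), 4),
              round(trapezoidal_velocity(0.0, 1.0, 2.0, t), 4))
```

The minimum-jerk velocity rises and falls smoothly with zero acceleration at both ends, whereas the trapezoidal profile changes acceleration abruptly at the corners of the ramp; that smoothness is the property usually cited when minimum-jerk motion is described as more human-like.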

| Variable | Method | Reference | Number of Items |
|---|---|---|---|
| User acceptance | Semantic differential | Reduced version of the “System Acceptance Scale” [52] | 6 items |
| User acceptance | Likert scale | Adapted version of the “System Usability Scale” [53] | 5 items |
| Cognitive workload | Likert-type scale | Reduced version of “NASA-TLX” [54] | 1 item |
| Perceived stress | Semantic differential | “Short Stress Questionnaire” [55] | 5 items |
| Trust | Likert scale | Adapted version of the “Trust in Industrial Human-Robot Interaction Questionnaire” [56] | 9 items |

| Variable | Method | Measures | Unit of Measurement |
|---|---|---|---|
| Gaze behaviour | Eye tracker | Number of fixations and fixation duration | ms (for fixation duration) |
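
As a rough illustration of the two gaze measures listed above, the sketch below derives the number of fixations and the fixation duration in milliseconds from a list of fixation records. The `Fixation` record with start and end timestamps in seconds is a hypothetical format chosen for the example; it is not the export format of the eye tracker used in the study, and the study's own processing pipeline is not described here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Fixation:
    """Hypothetical fixation record: start and end timestamps in seconds."""
    start_s: float
    end_s: float


def fixation_metrics(fixations):
    """Return the fixation count plus mean and total duration in milliseconds."""
    durations_ms = [(f.end_s - f.start_s) * 1000.0 for f in fixations]
    return {
        "count": len(durations_ms),
        "mean_duration_ms": mean(durations_ms) if durations_ms else 0.0,
        "total_duration_ms": sum(durations_ms),
    }


if __name__ == "__main__":
    # Two made-up fixations, used only to exercise the function.
    sample = [Fixation(0.0, 0.25), Fixation(1.0, 1.5)]
    print(fixation_metrics(sample))
```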

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Panchetti, T.; Pietrantoni, L.; Puzzo, G.; Gualtieri, L.; Fraboni, F. Assessing the Relationship between Cognitive Workload, Workstation Design, User Acceptance and Trust in Collaborative Robots. Appl. Sci. 2023, 13, 1720. https://doi.org/10.3390/app13031720
