This section combines the prediction model of user preference with the STSA method. An aggregate function based on MPP is constructed to coordinate the user preference objective with other objectives, such as structural weight. On this basis, a cost function is designed that also accounts for the design constraints. Finally, an innovative design method named STSA-P is proposed.

4.1. Physical Programming (PP) Synopsis

PP is a user-friendly multi-objective optimization method that eliminates the need to assign subjective weights. In PP, the users' expectations for the design objectives are classified into four classes: smaller is better (Class 1), larger is better (Class 2), value is better (Class 3), and range is better (Class 4). Each class has two cases, hard (H) and soft (S), depending on how sharp the expectations are [31]. In the soft classes, users specify several ranges for each objective to express degrees of satisfaction. A class function is then built to map each objective into a non-dimensional space, where all objectives share the same numerical scale regardless of their physical meanings and orders of magnitude.

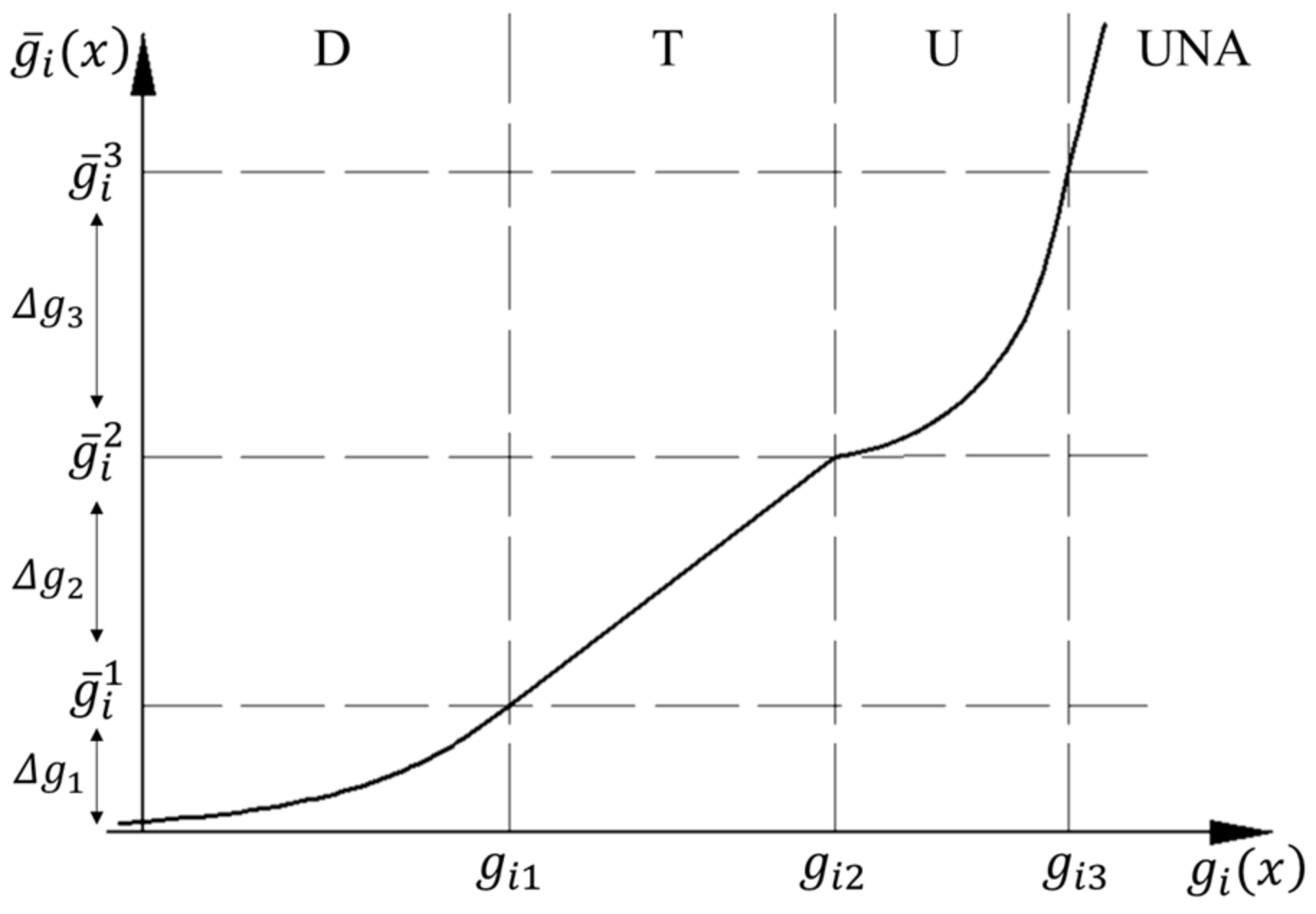

Figure 8 shows one possible class function of type 1-S (the soft case of Class 1).

The objective function is plotted on the horizontal axis, and the corresponding class function on the vertical axis. Six ranges are defined for the objective: highly desirable (HD), desirable (D), tolerable (T), undesirable (U), highly undesirable (HU), and unacceptable (UNA). The five boundary values between these ranges on the horizontal axis are specified by users and directly reflect their expectations. To ensure the same scale for every class function, the corresponding class-function values at these boundaries on the vertical axis should be identical for all class functions. These values can be calculated as:

where

$n_{sc}$ = number of objective functions, and

$\beta$ = convexity parameter, generally greater than 1.
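To make the scaling idea concrete, the sketch below assumes the recursion used in Messac's original PP formulation: the class-function value at each boundary is the previous value multiplied by β·nsc, starting from a small positive constant. The function name, the starting value 0.1, and the example β are assumptions, and Equations (12)–(14) of this paper may differ in detail. The geometric growth ensures that each range increment exceeds (nsc − 1) times the previous increment, so worsening one objective by one range outweighs improving all the others by one range.

```python
def class_boundary_values(n_sc: int, beta: float, z_first: float = 0.1, count: int = 5) -> list[float]:
    """Hypothetical sketch: class-function values at successive range boundaries.

    Assumes the recursion z[k] = beta * n_sc * z[k-1] (Messac-style PP),
    starting from a small positive value z_first. count = 5 gives one value
    per boundary of the six ranges in Figure 8.
    """
    if n_sc < 1 or beta <= 1.0:
        raise ValueError("expected n_sc >= 1 and beta > 1")
    values = [z_first]
    for _ in range(count - 1):
        # Each boundary value grows geometrically by the factor beta * n_sc
        values.append(beta * n_sc * values[-1])
    return values


# Three objectives (weight, displacement, preference) with an assumed beta of 1.5
z = class_boundary_values(n_sc=3, beta=1.5)
print(z)
```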

The curve of the 1-S class function has two parts. The first part, in the HD range, is a decaying exponential. The second part, covering the D, T, U, and HU ranges, takes the form of spline segments. The class function must have a continuous first derivative and a strictly positive second derivative [30]. These properties help gradient-based methods search for the optimal solution [47], but they also make the class function complicated to compute.

The aggregate function $G(x)$ is formed by combining all the class functions (Equation (15)). In this way, an MOP is transformed into an SOP.

subject to
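For orientation, the aggregate in Messac's original PP formulation is commonly written as the base-10 logarithm of the mean class-function value. The sketch below assumes that form; `pp_aggregate` is a hypothetical name, and the function is not necessarily identical to Equation (15). Because the logarithm is monotonic, it does not change which design is optimal; it only compresses the wide numerical range of the class-function values.

```python
import math
from typing import Sequence


def pp_aggregate(class_values: Sequence[float]) -> float:
    """Sketch of a PP-style aggregate: log10 of the mean class-function value.

    Assumes the log10-of-mean form of Messac's PP; class_values holds the
    class-function value of each objective for one candidate design.
    """
    if not class_values or any(v <= 0 for v in class_values):
        raise ValueError("class-function values must be positive")
    return math.log10(sum(class_values) / len(class_values))


print(pp_aggregate([0.2, 1.0, 4.5]))
```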

4.2. Coordination of Multiple Objectives with MPP

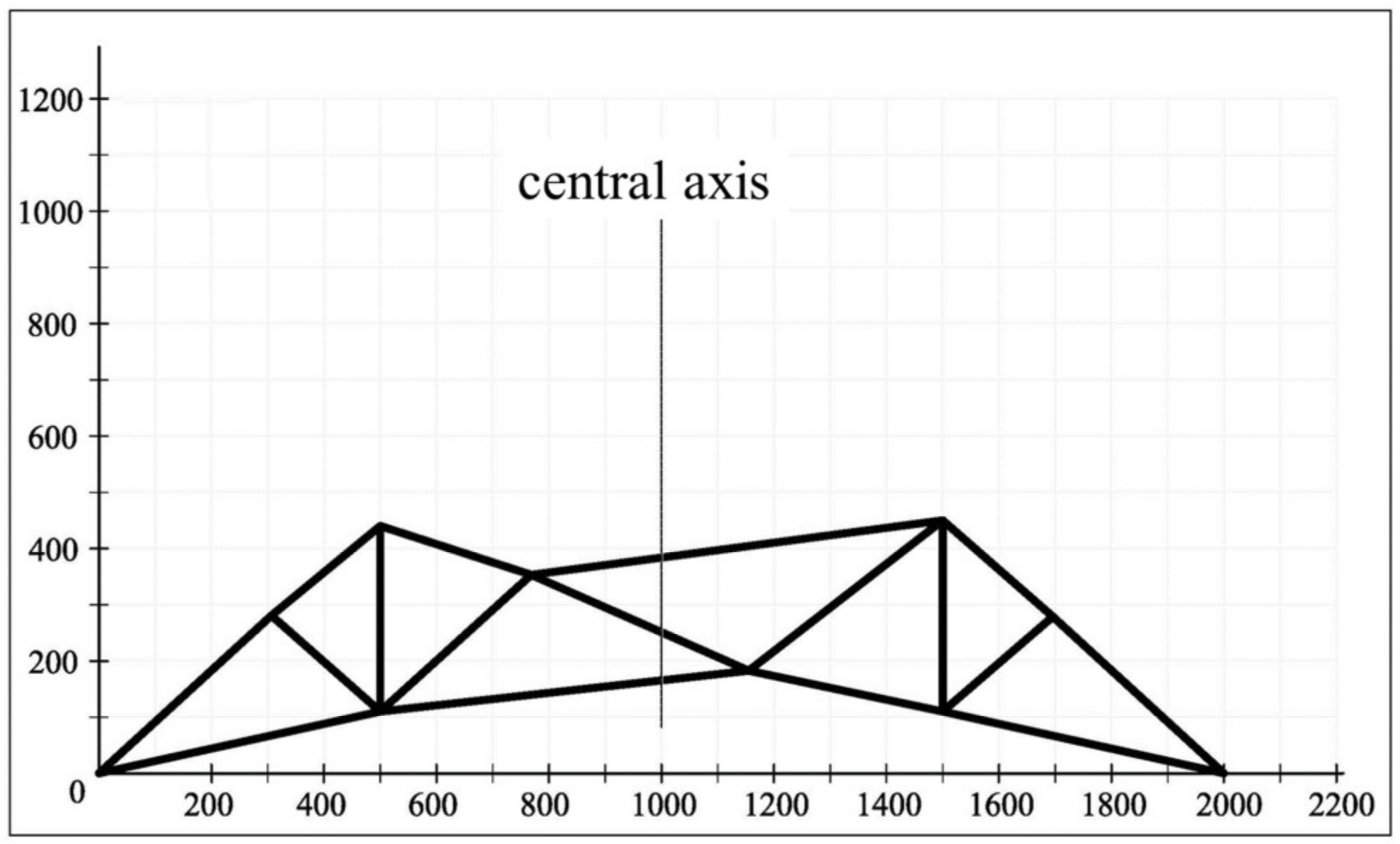

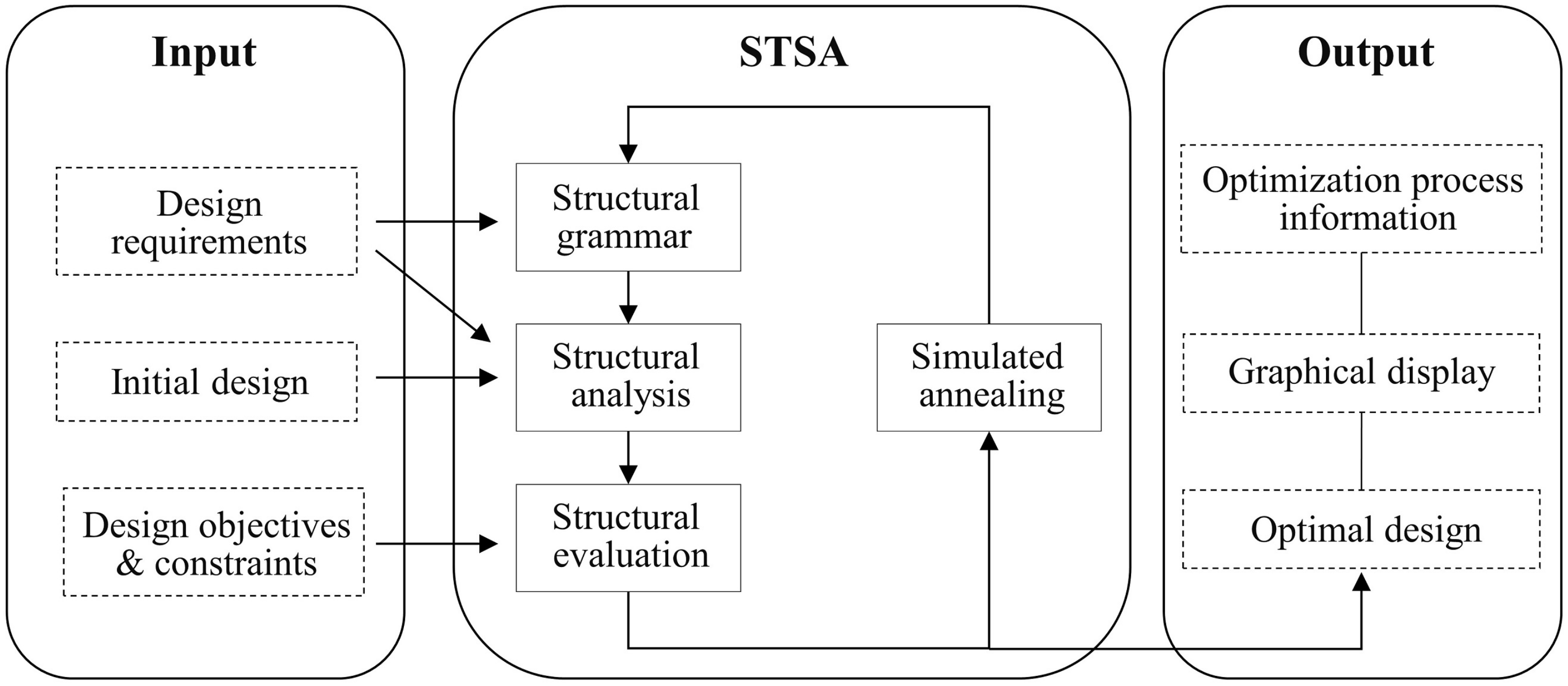

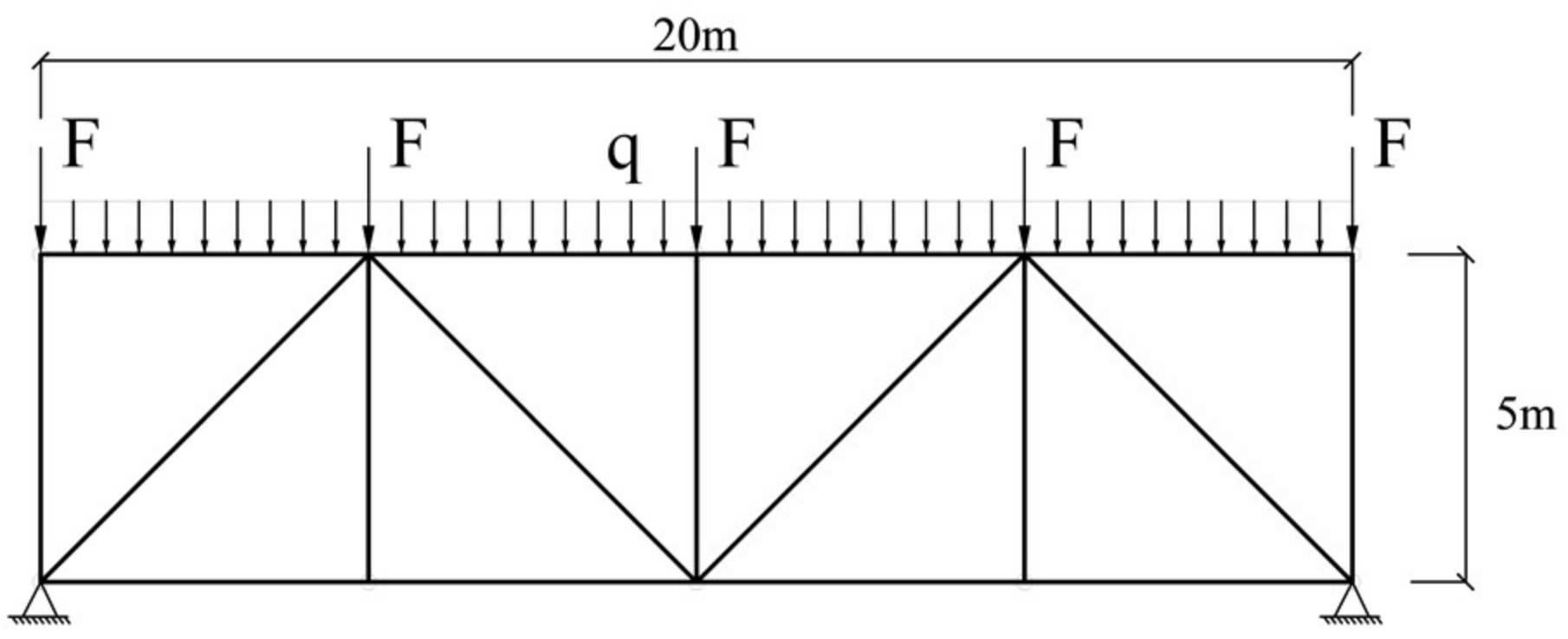

The prediction model of user preference is integrated into the STSA method to guide the generation of a satisfactory structure. The STSA method combines structural grammar and performance evaluation with a stochastic optimization technique (simulated annealing, SA) [

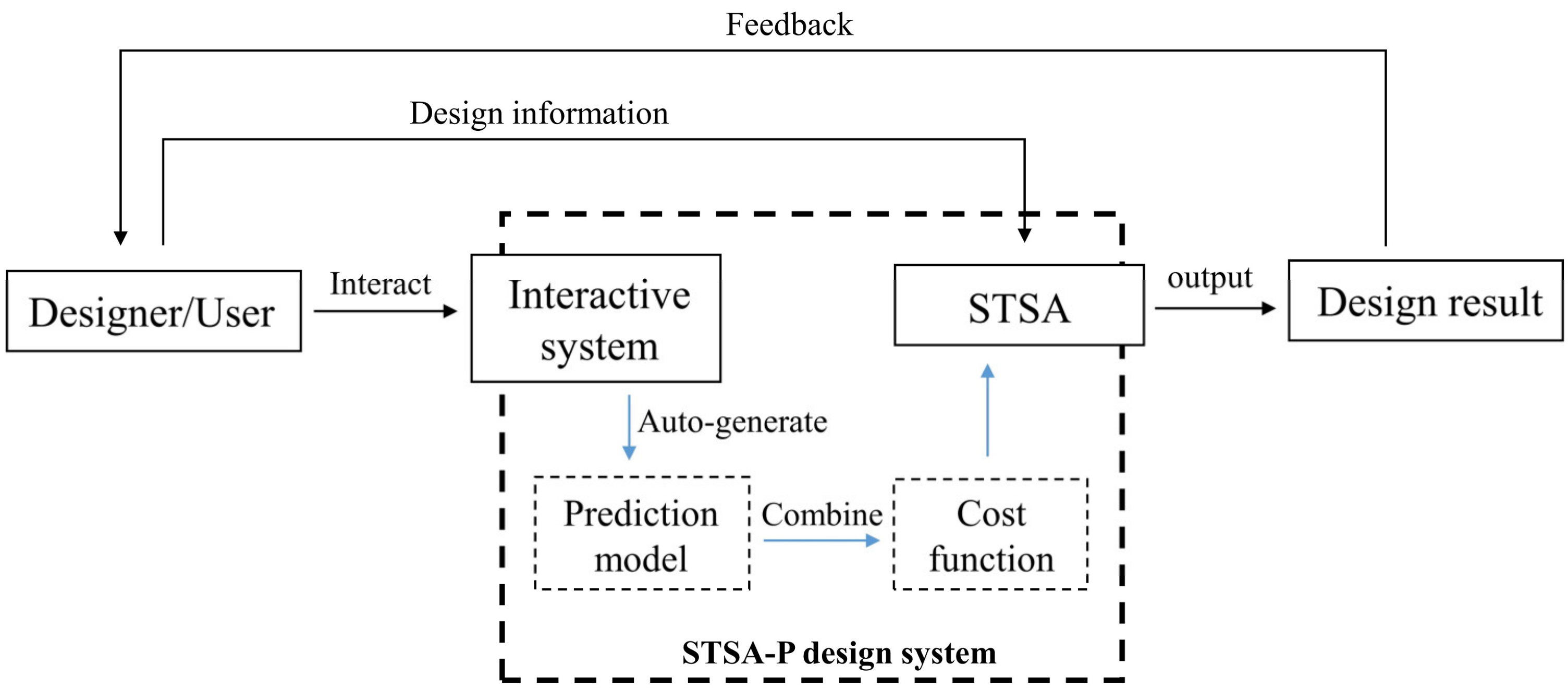

1]. It can be used for topology generation and optimization of discrete structures. Structural grammar defines the basic units (e.g., triangles in 2D, tetrahedrons in 3D) and rules for the structural modification in sectional size, global shape, and topology, which can modify and transform the structures more flexibly. Similarly to human languages, the basic units can form a structure based on structural grammar. STSA improves the initial design continuously with structural grammar based on the feedback from performance evaluation until the final design meets the design goals of safety, efficiency, and economy. The workflow is shown in

Figure 9 [

1].
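The loop in Figure 9 follows the usual simulated-annealing pattern: propose a modified design, evaluate it, and accept or reject it with the Metropolis criterion while the temperature decreases. The generic sketch below is not the STSA implementation of [1]; the `perturb` callback is a hypothetical stand-in for applying a structural grammar rule, `cost` stands in for performance evaluation, and the geometric cooling schedule and the toy integer problem are assumptions chosen only for illustration.

```python
import math
import random
from typing import Callable, TypeVar

S = TypeVar("S")


def simulated_annealing(
    initial: S,
    cost: Callable[[S], float],
    perturb: Callable[[S, random.Random], S],
    t0: float = 10.0,
    cooling: float = 0.99,
    steps: int = 2000,
    seed: int = 0,
) -> tuple[S, float]:
    """Generic SA loop; returns the best design seen and its cost."""
    rng = random.Random(seed)
    current, current_cost = initial, cost(initial)
    best, best_cost = current, current_cost
    temperature = t0
    for _ in range(steps):
        candidate = perturb(current, rng)      # stand-in for a grammar rule
        candidate_cost = cost(candidate)       # stand-in for performance evaluation
        delta = candidate_cost - current_cost
        # Metropolis criterion: always accept improvements, sometimes accept worse designs
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = current, current_cost
        temperature *= cooling                 # geometric cooling (assumed)
    return best, best_cost


# Toy problem: an integer "design" x whose cost is minimal at x = 3
best_x, best_c = simulated_annealing(
    initial=20,
    cost=lambda x: (x - 3) ** 2,
    perturb=lambda x, rng: x + rng.choice((-1, 1)),
)
print(best_x, best_c)
```

Accepting some worse designs while the temperature is high lets the search leave local optima early on, which matches the exploratory role SA plays in STSA.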

Many design factors, such as weight, displacement, stiffness, stress, and frequency, can serve as design objectives when STSA is applied to structural design. In this study, weight and displacement are selected as the first and second design objectives, and user preference, evaluated with the prediction model, is introduced as the third objective. These objective functions are defined as follows:

where

$\rho$ = material density,

$n$ = number of members,

$l_i$ = length of the $i$th member,

$s_i$ = sectional area of the $i$th member,

$m$ = number of nodes,

$\mathrm{disp}_j$ = displacement of the $j$th node, and

$\mathrm{pref}$ = preference prediction value of the structure.

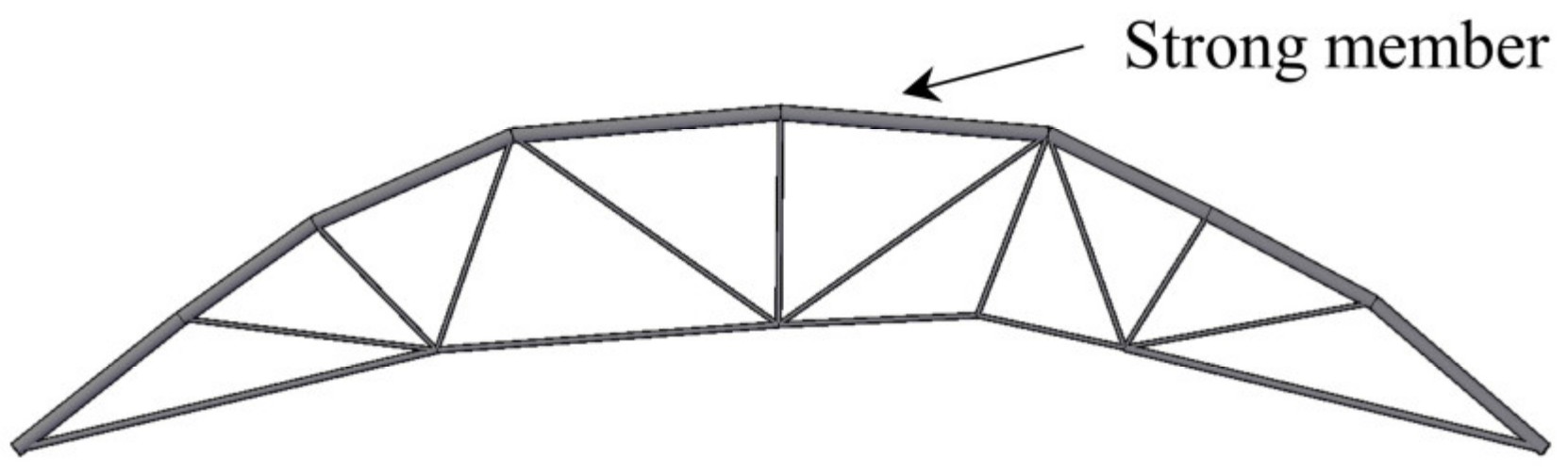

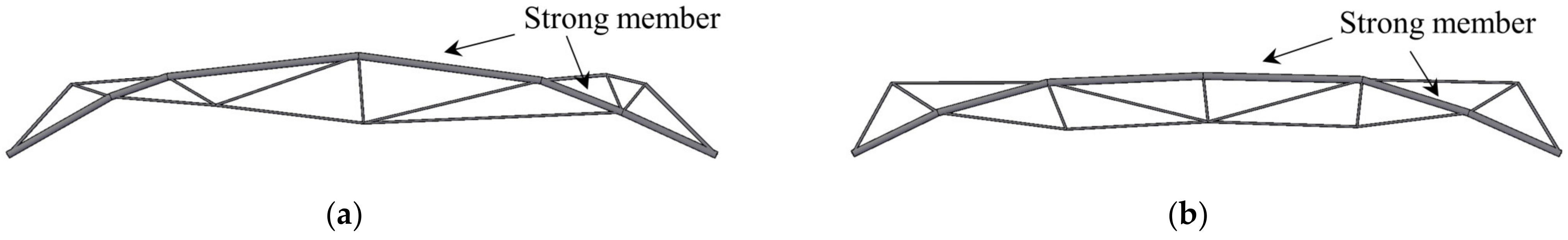

Structural weight (Equation (17)) is a classical design objective in structural optimization: the smaller the weight, the better the structure. The node displacement objective (Equation (18)) minimizes the maximum displacement over all structural nodes. Similarly, the user preference objective (Equation (19)) minimizes the preference prediction value of the generated structures, where a smaller value means higher user satisfaction with the structural appearance. All three objectives are of the "smaller is better" type and can therefore be represented by class 1-S.
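Read directly from the variable definitions above, Equations (17)–(19) amount to $f_1 = \rho\sum_{i=1}^{n} l_i s_i$, $f_2 = \max_{1\le j\le m} \mathrm{disp}_j$, and $f_3 = \mathrm{pref}$. The sketch below assumes that reading. It also assumes that displacements may be supplied as signed values, so it compares their magnitudes, and it takes the preference value as a number already produced by the prediction model. All function names and the three-member truss data are hypothetical.

```python
from typing import Sequence


def weight_objective(rho: float, lengths: Sequence[float], areas: Sequence[float]) -> float:
    """f1: structural weight, rho * sum(l_i * s_i) over the n members."""
    if len(lengths) != len(areas):
        raise ValueError("each member needs one length and one sectional area")
    return rho * sum(l * s for l, s in zip(lengths, areas))


def displacement_objective(displacements: Sequence[float]) -> float:
    """f2: largest nodal displacement magnitude over the m nodes (abs() is an assumption)."""
    return max(abs(d) for d in displacements)


def preference_objective(pref: float) -> float:
    """f3: preference prediction value returned by the prediction model."""
    return pref


# Hypothetical three-member truss: density in kg/m^3, lengths in m, areas in m^2
f1 = weight_objective(7850.0, [2.0, 2.0, 2.828], [1e-3, 1e-3, 5e-4])
f2 = displacement_objective([0.0, -0.004, 0.0025])
f3 = preference_objective(1.8)
print(f1, f2, f3)
```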

For the objectives of structural weight and node displacement, the class function of the PP method is redesigned as shown in Figure 10.

The number of ranges for each objective is reduced to four (D, T, U, UNA), where D, T, and U correspond to the evaluation options "Preferred", "Acceptable", and "Disliked" in the interactive system, respectively. The curve of the new class function extends into the UNA range, which the PP method originally treats as the infeasible region. This allows the optimal structure to be explored fully, especially in the early optimization phase. The new class function consists of three expressions. The first is a continuous quadratic function covering the U and UNA ranges: its large slope in the UNA range drives the objective value quickly from the UNA range into the U range, and its slope becomes smaller toward the left boundary of the U range to reduce the influence of the current objective, which helps all objectives to be optimized evenly. The second expression, in the T range, is a linear function that keeps a high slope throughout the range, so that the objective values move quickly toward the D range. The third expression, in the D range, is an exponential function. The curves in the D and T ranges are continuous and have the same slope at their common boundary. The new class function is defined as:

The boundary values on the horizontal axis need to be specified by users, whereas the corresponding values on the vertical axis are calculated by Equations (12)–(14).
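The sketch below builds one hypothetical piecewise function with the qualitative shape described above; it is not Equation (20). It assumes a linear T segment between the D/T and T/U boundaries, an exponential D segment chosen to match both the value and the slope of the T segment at their common boundary, and a single quadratic over U and UNA with zero slope at the T/U boundary, so that its slope is small at the left edge of U and keeps increasing into UNA. The function name and the example vertical values are assumptions; the horizontal boundaries 1.5, 2.5, and 3 are those used for the preference objective.

```python
import math


def redesigned_class_function(
    g: float,
    bounds: tuple[float, float, float],
    z: tuple[float, float, float],
) -> float:
    """Hypothetical 4-range class function (D, T, U, UNA); not Equation (20).

    bounds = (b1, b2, b3): boundaries D|T, T|U and U|UNA on the objective axis.
    z = (z1, z2, z3): class-function values at those boundaries.
    """
    b1, b2, b3 = bounds
    z1, z2, z3 = z
    k_t = (z2 - z1) / (b2 - b1)          # constant slope of the linear T segment
    if g <= b1:
        # D range: exponential with value z1 and slope k_t at b1
        return z1 * math.exp(k_t / z1 * (g - b1))
    if g <= b2:
        # T range: straight line from (b1, z1) to (b2, z2)
        return z1 + k_t * (g - b1)
    # U and UNA ranges: quadratic through (b2, z2) and (b3, z3) with zero slope at b2
    a = (z3 - z2) / (b3 - b2) ** 2
    return z2 + a * (g - b2) ** 2


print(redesigned_class_function(2.0, (1.5, 2.5, 3.0), (0.1, 0.45, 2.025)))
```

Because the quadratic keeps growing beyond the U/UNA boundary, a design in the UNA range still receives a finite, steeply rising value instead of being discarded, which is what lets the search pass through that region early on.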

For the user preference objective, the class function uses the same expressions as the weight and displacement objectives in the D, T, and U ranges, but a special expression in the UNA range. The three boundary values on the horizontal axis are set to 1.5, 2.5, and 3 according to the evaluation options and the rounding rule. The prediction model runs only when all the feature values of the current structure lie within the Permissible Feature Range (PFR, defined in Section 3.4) of the training data. Therefore, the class function in the UNA range is constructed from the differences between the feature values and the PFR:

where

$N_f$ = number of features, excluding redundant features,

$\xi$ = penalty constant,

$\eta$ = penalty coefficient,

$x_j$ = value of the $j$th feature of the current structure,

$x_j^{\max}$ = maximum value of the $j$th feature among the samples in the training group, and

$x_j^{\min}$ = minimum value of the $j$th feature among the samples in the training group.

Equation (22) calculates the difference between the $j$th feature of the current structure and the PFR. The result is then substituted into Equation (21) to calculate the value of the class function in the UNA range. The parameters $\xi$ and $\eta$ generally take large values to penalize structures whose feature values lie outside the PFR. The class function of the user preference objective is defined as:

A simplified form is adopted for the aggregate function:

subject to

where

$n_r$ = number of constraint functions, each of which is an inequality constraint.

In the original PP design process, if the final design or the performance of an objective fails to meet the user's needs, the user can improve it by adjusting or resetting the boundary values. However, this approach has drawbacks. First, the adjusted boundary values may contradict the user's original expectations. Second, the boundary values of some objectives cannot be changed; for example, the boundary values of the user preference objective are fixed by the rounding rule and the evaluation options of the interactive system. To solve this problem, an adjustment coefficient α is introduced so that the boundary values need not be modified. The modified aggregate function $G(x)$ is then given as:

subject to

Each objective has its own adjustment coefficient, with a default value of 1. A user can improve the performance of an objective by increasing its coefficient, which is equivalent to increasing the weight of that objective. To verify the effectiveness of the coefficient, a simple numerical example is presented:

subject to

where

The class functions of the three objectives are calculated by Equation (20), with the boundary values given in Table 6. The SA method is used to solve the optimization problem, and the results are shown in Equations (33) and (34).

According to Equation (34) and Table 6, the three objective values lie in the U, D, and T ranges, respectively. The first objective, $g_1(x)$, lies in the U range, which is not satisfactory, so the user can increase its adjustment coefficient. The new results are shown in Equations (35) and (36).

The three objective values now lie in the T, D, and T ranges, respectively. Compared with the initial results, the first objective has moved from the U range to the T range, whereas the other two objective values remain in good ranges. Thus, the adjustment coefficient improves the result effectively without changing the boundary values.
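The effect of the adjustment coefficient can be illustrated with a toy problem. The sketch assumes that the simplified aggregate is a sum of class-function values, each multiplied by its coefficient α; this form, the identity class functions, the two quadratic objectives, and the brute-force grid search are all hypothetical and do not reproduce the numerical example above.

```python
from typing import Callable, Sequence


def adjusted_aggregate(class_values: Sequence[float], alphas: Sequence[float]) -> float:
    """Hypothetical aggregate: sum of class-function values, each scaled by its alpha."""
    if len(class_values) != len(alphas):
        raise ValueError("one adjustment coefficient per objective")
    return sum(a * v for a, v in zip(alphas, class_values))


def grid_minimize(objective: Callable[[float], float], lo: float, hi: float, n: int = 401) -> float:
    """Brute-force 1-D minimization, sufficient for a toy illustration."""
    xs = [lo + (hi - lo) * i / (n - 1) for i in range(n)]
    return min(xs, key=objective)


def toy_g1(x: float) -> float:
    return x ** 2            # first objective, best at x = 0


def toy_g2(x: float) -> float:
    return (x - 2.0) ** 2    # second objective, best at x = 2


def make_objective(alphas: Sequence[float]) -> Callable[[float], float]:
    # Class functions are taken as the identity here to keep the example small
    return lambda x: adjusted_aggregate([toy_g1(x), toy_g2(x)], alphas)


x_default = grid_minimize(make_objective([1.0, 1.0]), -1.0, 3.0)
x_boosted = grid_minimize(make_objective([3.0, 1.0]), -1.0, 3.0)
print(x_default, toy_g1(x_default))   # default coefficients
print(x_boosted, toy_g1(x_boosted))   # after raising alpha_1 to 3
```

Raising α1 from 1 to 3 moves the compromise from x = 1 to x = 0.5, improving the first objective at some cost to the second, while the boundary values themselves are untouched.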

4.3. Treatment of Design Constraints

Many design constraints, such as stress and geometric constraints, must be considered when designing truss structures. Generally, these constraints are treated as hard constraints, i.e., they cannot be violated. This study instead treats them as soft constraints, which may be violated during the optimization process, although the final result must satisfy them. This allows the optimal result to be explored more fully.

The penalty parameter $r$ of constraint violation (Equation (37)) is linked to the iteration step. In the early stage of optimization, the penalty for constraint violation is small, i.e., $r$ is small. As the optimization progresses, $r$ gradually increases, imposing a larger penalty on constraint violations. In the late stage, the penalty is large enough to force the final result to satisfy the constraints.

where

$T$ = index of the current STSA iteration step.
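Since only the qualitative behaviour of Equation (37) is described (small early, large late), the sketch below uses one hypothetical schedule: $r$ grows geometrically from a small starting value to a large final value as the step index $T$ (here `step`) advances through the run. The geometric form, the start and end values, and the total number of steps are assumptions.

```python
def penalty_parameter(step: int, total_steps: int, r_start: float = 0.1, r_end: float = 100.0) -> float:
    """Hypothetical schedule for r: geometric growth from r_start to r_end.

    step corresponds to T in the text; steps beyond total_steps keep r at r_end.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    fraction = min(max(step / total_steps, 0.0), 1.0)   # progress through the run, clamped to [0, 1]
    return r_start * (r_end / r_start) ** fraction


print([round(penalty_parameter(t, 1000), 3) for t in (0, 250, 500, 750, 1000)])
```

A geometric rather than linear ramp keeps the penalty mild for most of the early search and then tightens it quickly, which is one way to obtain the "small early, large late" behaviour; other monotonically increasing schedules would serve the same purpose.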

For the $i$th constraint, the constraint violation $v_i(x)$ is defined as the proportion by which the current constraint value exceeds the constraint limit:

where

$\mathrm{val}_k$ = value of the constraint function for the $k$th unit, e.g., the tensile stress of the $k$th member, and

$\mathrm{val}_{limit}$ = constraint limit.
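A minimal sketch of the constraint violation follows, assuming that each unit contributes its relative excess over the limit, $(\mathrm{val}_k - \mathrm{val}_{limit})/\mathrm{val}_{limit}$ when positive and zero otherwise, and that these contributions are summed over all units. Whether Equation (38) sums or takes the maximum over units is not stated here, so the summation is an assumption, as is the function name.

```python
from typing import Sequence


def constraint_violation(values: Sequence[float], limit: float) -> float:
    """Hypothetical v_i(x): summed relative excess of each unit's value over the limit.

    values: e.g. the tensile stresses of all members (val_k); limit: val_limit > 0.
    Units within the limit contribute nothing.
    """
    if limit <= 0:
        raise ValueError("limit must be positive")
    return sum(max(0.0, (val - limit) / limit) for val in values)


# Three hypothetical member stresses against a limit of 250 (same units)
print(constraint_violation([100.0, 250.0, 300.0], 250.0))
```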

Thus, the penalty term $V(x)$ of constraint violation is defined by Equation (39):

The new cost function (Equation (40)) is obtained by multiplying

V(

x) and

G(

x), which describes the quality of a new design during the optimization process. It combines the prediction model with the STSA method. On this basis, a new automated design method named STSA-P is established for the plane trusses, which applies user preference information. The design process using STSA-P is shown in

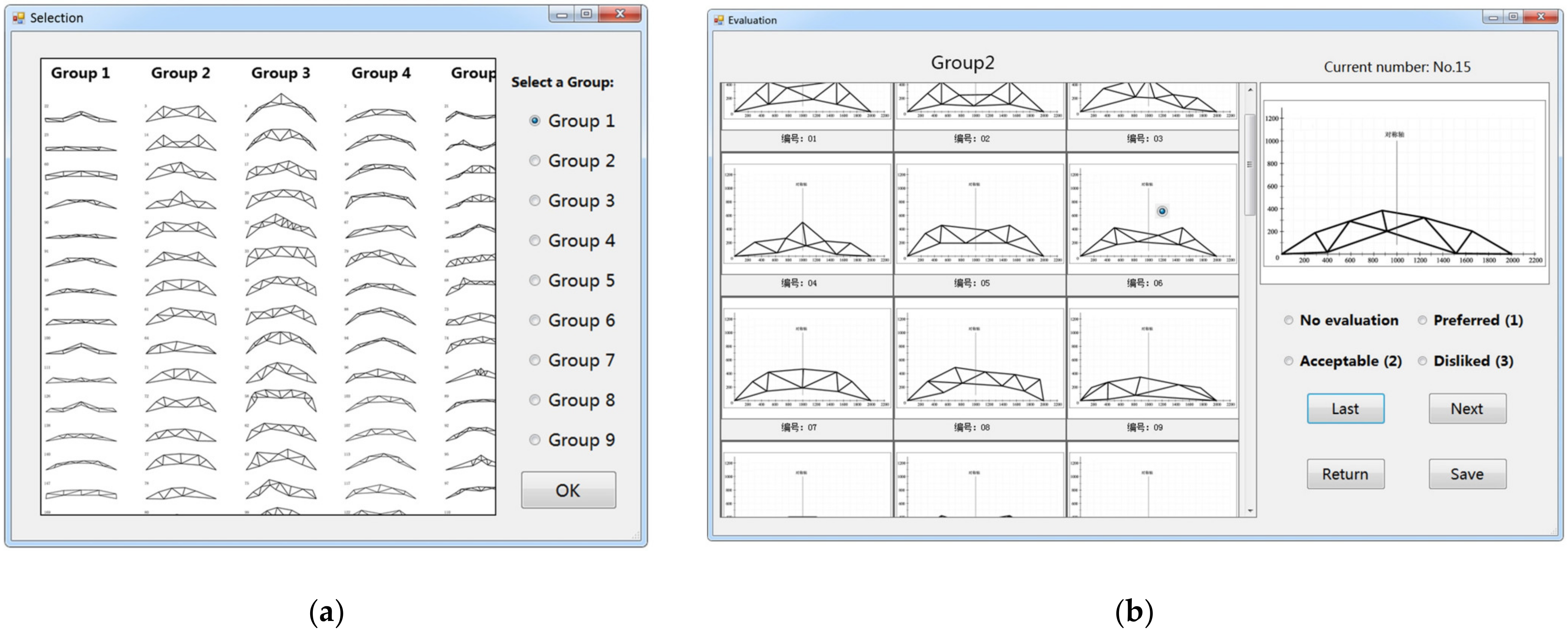

Figure 11.
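The multiplicative combination can be sketched as follows, assuming that the penalty term has the form $V(x) = 1 + r\sum_i v_i(x)$, so that a feasible design keeps its aggregate value unchanged. This form of $V(x)$ and the function names are hypothetical. A multiplicative penalty only raises the cost when $G(x) > 0$, so the sketch rejects non-positive aggregate values.

```python
from typing import Sequence


def penalty_term(violations: Sequence[float], r: float) -> float:
    """Hypothetical V(x): 1 for a feasible design, growing with r and the total violation."""
    return 1.0 + r * sum(violations)


def cost(aggregate_g: float, violations: Sequence[float], r: float) -> float:
    """Cost = V(x) * G(x); assumes G(x) > 0 so that violations always increase the cost."""
    if aggregate_g <= 0:
        raise ValueError("multiplicative penalty assumes a positive aggregate value")
    return penalty_term(violations, r) * aggregate_g


# The same slightly infeasible design, judged early (small r) and late (large r)
print(cost(2.5, [0.2], r=0.1), cost(2.5, [0.2], r=100.0))
```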

The interactive system and the STSA algorithm together form the STSA-P design system. When designing a truss structure with this system, designers or users can preset some design information, such as the boundary values, and complete the evaluation in the interactive system. The prediction model of user preference is then developed automatically and combined with the cost function, after which the optimization process starts and the design result is obtained. If the result fails to meet the user's needs, the designer or user can improve it by modifying the adjustment coefficients until a satisfactory result is achieved.