Abstract

Hemerocallis citrina Baroni at different maturity levels has different food and medicinal uses and therefore different economic benefits and sales value. However, it grows quickly, its harvesting cycle is short, and maturity identification depends entirely on experience, so harvesting efficiency is low, the dependence on manual labor is heavy, and the identification standard is not uniform. In this paper, we propose GCB YOLOv7, a Hemerocallis citrina Baroni maturity detection method based on a lightweight neural network and an attention mechanism. First, lightweight Ghost convolution is introduced to simplify feature extraction and decrease the number of computations and parameters of the model. Second, the CBAM mechanism is added between the feature extraction backbone network and the feature fusion network to extract features independently in the channel and spatial dimensions, which improves the tendency of the feature extraction and enhances the expressive ability of the model. Last, in the feature fusion network, Bi FPN is used instead of the concatenate feature fusion method, which increases the information fusion channels, decreases the number of edge nodes, and realizes cross-channel information fusion. The experimental results show that the improved GCB YOLOv7 algorithm reduces the number of parameters and floating-point operations by about 2.03 million and 7.3 G, respectively. The training time is reduced by about 0.122 h, and the model volume is compressed from 74.8 M to 70.8 M. In addition, the average precision is improved from 91.3% to 92.2%, mAP@0.5 and mAP@0.5:0.95 are improved by about 1.38% and 0.20%, respectively, and the detection efficiency reaches 10 ms/frame, which meets the real-time performance requirements. The improved GCB YOLOv7 algorithm is therefore not only lightweight but also more precise.

1. Introduction

In the construction of smart agriculture, with the development of artificial intelligence and deep learning, the use of computer vision and image processing to solve problems of low efficiency and quality in agriculture has become a current research hotspot.

Hemerocallis citrina Baroni is the pillar industry of economic development in the Datong Yunzhou area of northern Shanxi and is also key to lifting local farmers out of poverty and raising their incomes. However, Hemerocallis citrina Baroni has a fast growth rate and a short picking cycle, and both maturity identification and picking depend heavily on manual labor. As a result, the picking efficiency is low, and the standard for identifying maturity is not uniform.

Currently, deep learning and target detection techniques are widely applied to the maturity discrimination of fruits and vegetables. In the literature [1], the authors introduced the CBAM (convolutional block attention module) mechanism into the YOLOv5 algorithm and constructed a high-resolution slicing method for detecting the seedlings of Solanum rostratum Dunal, which realized the multi-scale training of the model. In the literature [2], the authors detected the growth of small apple fruits as follows: first, the YOLOv5s model was compressed; second, the model was pre-trained by transfer learning [3]; and, finally, the model structure was simplified to ensure the detection efficiency. The precision and recall of the model are 95.8% and 87.6%, respectively, and the model volume is only 1.4 M, but the real-time performance is poor. Similarly, in the literature [4], the authors optimized the model by pruning [5], which realized the fast detection of three kinds of apple blossoms. After the final pruning operation, the volume of the model was reduced by about 231.51 M and the detection time by about 39.47%, but the average precision also dropped by about 0.24%.

In addition, computer vision technology can monitor the growth status of crops in real time. In the literature [6], the authors detected different types of weeds among soybean crops based on deep learning models. Likewise, in the literature [7], the authors optimized the deep learning algorithm to achieve hybrid detection of multiple weeds in the field of smart agriculture. Deep learning target detection is also widely applied in fruit and vegetable cultivation, for example, in apple rot detection [8] and aphid detection during the growth of sugarcane [9]. Such detection enables intelligent pesticide spraying and drip irrigation, which effectively protect the growth of fruits and vegetables. In the literature [10], the authors compressed the model based on the YOLOv4 algorithm in order to realize the intelligent planting and management of figs, simplified the model structure, and realized fig detection in complex scenes, but the training process is redundant, and the model structure can still be further optimized. A multisensory deep learning approach was proposed in the literature [11] to achieve the detection and counting of cotton bolls. The authors improved the extraction of boll feature details by introducing a multiscale residual module and an attention mechanism, thus improving the detection precision. An average precision mAP@0.5 of 92.75% was finally achieved.

Unlike the studies above, in the literature [12], the authors analyzed the external environmental conditions that can affect the transmission of visual data during the construction of smart agriculture and the remote monitoring of fruit and vegetable growth. By comprehensively considering factors such as species classification, plant diseases [13], yield, weather, and soil [14], they realized the recognition and detection of different parts of cabbage using convolutional neural networks and image processing technology. The detection precision reached 97%, but the lightweight advantage of the model is not obvious. In the literature [15], the authors used the YOLO algorithm to automatically identify the stomata of corn leaves, which compensated for the shortcomings of manual observation, improved the detection efficiency, reduced the false detection rate, and saved observation time, but the loss function convergence of the proposed model was unsatisfactory. The growth characteristics of Camellia sinensis are similar to those of Hemerocallis citrina Baroni; the plants are dense and need to be picked selectively at regular intervals. Therefore, in the literature [16], a highly integrated and lightweight deep learning model is proposed using multiple residual networks as the main framework, which is capable of efficiently detecting chamomile, and, finally, an average precision of 92.49% was achieved.

Most importantly, computer vision and target detection are also widely used in the field of yellow flowers. In the literature [17], based on the YOLOv4 algorithm, the authors designed the dense-YOLOv4 network structure with the K-means++ clustering algorithm to address the shortcomings of the algorithm in terms of precision and speed for the detection of Hemerocallis citrina Baroni. Similarly, in the literature [18], for Hemerocallis citrina Baroni maturity detection, a lightweight network model was constructed in the YOLOv7 target detection algorithm using the MobileOne network as the backbone feature extraction network and incorporating the coordinate attention mechanism in the neck network to improve the detection of samples. However, this method has low detection precision for complex backgrounds. In the literature [19], the authors used detection algorithms to identify Hemerocallis citrina Baroni diseases, improving the ability to control them in the early stages of development.

The development of convolutional neural networks has gradually matured, and their performance has steadily improved, allowing real-time, high-precision detection in many fields and scenarios. However, model structures are becoming more and more complex, and neural networks are developing in the direction of large models and deep networks. Therefore, to address the multi-branch structure, heavy computation, and low efficiency of the YOLOv7 [20] model in Hemerocallis citrina Baroni maturity detection, this paper proposes the GCB (Ghost-CBAM-Bi directional feature pyramid network) YOLOv7 algorithm. The main innovations are as follows:

- (1) The efficient layer aggregation network (ELAN) module in the feature extraction network and the feature fusion network is lightened; linear transformation is introduced; and Ghost convolution is used to replace the traditional convolution to reduce the redundancy of feature extraction and simplify the model structure.

- (2) Between the feature extraction backbone network and the feature fusion network, the CBAM mechanism is added, which improves the tendency of feature extraction and enhances the detection precision and efficiency of the model.

- (3) The cross-channel multi-source information fusion method is optimized; the Bi FPN is applied instead of concatenate to reduce the edge information nodes, increase the cross-phase information fusion channels, and realize node adaptive weight adjustment.

The remainder of this paper is organized as follows: Section 2 introduces the applied modules and presents the structure of the GCB YOLOv7 algorithm. Section 3 describes the experimental platform and parameters, analyzes the experiments, and compares the detection results. Finally, conclusions and future work are given in Section 4.

2. GCB YOLOv7 Detection Method

2.1. Lightweight Neural Networks

During convolutional neural network feature extraction, a lot of redundant and similar information is produced, which significantly increases model computation. Introducing a lightweight neural network can therefore not only reduce the occupation of computational resources but also increase the inference speed and the generalization and transfer ability of the model. With limited computation and resources, lightweight networks can achieve higher precision and efficiency than traditional neural networks.

In Hemerocallis citrina Baroni maturity detection, the improved GCB YOLOv7 algorithm uses Ghost convolution [21] to make the neural network lightweight. It adopts the idea of grouped convolution and reduces the computational complexity by combining linear transformation with traditional convolution, yielding a better and faster lightweight model.

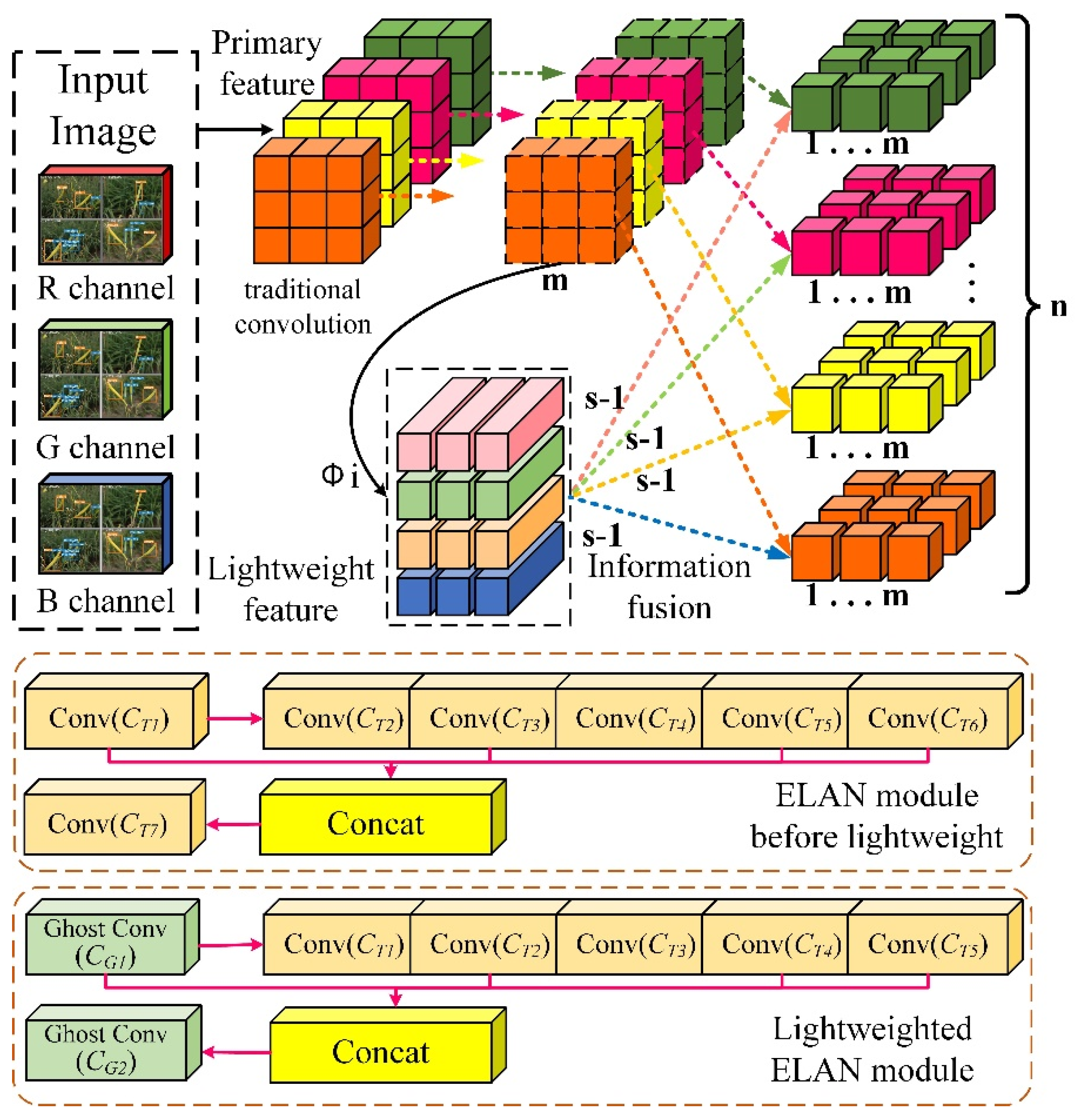

As shown in Figure 1, Ghost Conv exploits the redundancy of feature maps. It first generates a set of intrinsic feature maps through a small number of traditional convolutions and then applies low-cost linear operations to each intrinsic feature map, so that each intrinsic feature map produces additional new feature maps. Finally, the intrinsic feature maps and the new feature maps are concatenated to complete the lightweight convolution operation.

Figure 1. Lightweight Ghost conv.

The computational amount of each convolution is determined by its number of input channels, its number of output channels, the k × k customized convolutional kernel size, and the sizes of the input and output images. Analyzed in conjunction with Figure 1, the ELAN module after lightweighting consists of two Ghost convolutions and five traditional convolutions. Before lightweighting, the computational amount of the ELAN module (Equation (1)) is made up entirely of the computational amount of traditional convolutions.

Similarly, after lightweighting, the computational amount of the ELAN module (Equation (2)) combines the computation of the remaining traditional convolutions with that of the Ghost convolutions.

The ratio of the computational amount of traditional convolution to Ghost convolution is given in Equation (3). It shows that the computation of a Ghost convolution is only a fraction of that of a traditional convolution.

Therefore, using Ghost convolution, with its lower computational effort, instead of traditional convolution can effectively reduce the computational effort of the ELAN module, which, in turn, simplifies the feature extraction process and reduces the number of model parameters and the amount of computation.
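The cost comparison above can be illustrated with a minimal Python sketch. It is not the authors' derivation: it assumes the standard Ghost-module cost model, in which a traditional convolution produces `c_out // s` intrinsic maps and a cheap `d × d` per-map operation produces the rest. The parameters `s` and `d`, the layer shape, the count of seven traditional convolutions before lightweighting, and the simplification that every convolution in the ELAN module has the same shape are all assumptions made here for illustration.

```python
def conv_macs(c_in, c_out, k, h_out, w_out):
    """Multiply-accumulate count of one traditional k x k convolution (bias ignored)."""
    return c_in * c_out * k * k * h_out * w_out


def ghost_macs(c_in, c_out, k, h_out, w_out, s=2, d=3):
    """Multiply-accumulate count of one Ghost convolution.

    Assumed structure: a traditional convolution yields c_out // s intrinsic
    maps, and each intrinsic map yields (s - 1) further maps through a cheap
    d x d per-map linear operation.
    """
    intrinsic = c_out // s
    primary = conv_macs(c_in, intrinsic, k, h_out, w_out)
    cheap = (s - 1) * intrinsic * d * d * h_out * w_out
    return primary + cheap


def elan_macs(n_traditional, n_ghost, **shape):
    """Cost of an ELAN-like block, assuming every convolution has the same shape."""
    return n_traditional * conv_macs(**shape) + n_ghost * ghost_macs(**shape)


# Hypothetical layer shape, chosen only for illustration.
shape = dict(c_in=128, c_out=128, k=3, h_out=80, w_out=80)
before = elan_macs(7, 0, **shape)  # seven traditional convolutions (assumed)
after = elan_macs(5, 2, **shape)   # five traditional + two Ghost convolutions
ratio = conv_macs(**shape) / ghost_macs(**shape)

print(f"ELAN before: {before:,} MACs, after: {after:,} MACs")
print(f"traditional / Ghost cost ratio: {ratio:.3f}")
```

Under these assumptions, with `s = 2` each Ghost convolution costs roughly half of the traditional convolution it replaces, so the saving for the whole module scales with the number of convolutions that are swapped.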

2.2. Attention Mechanisms

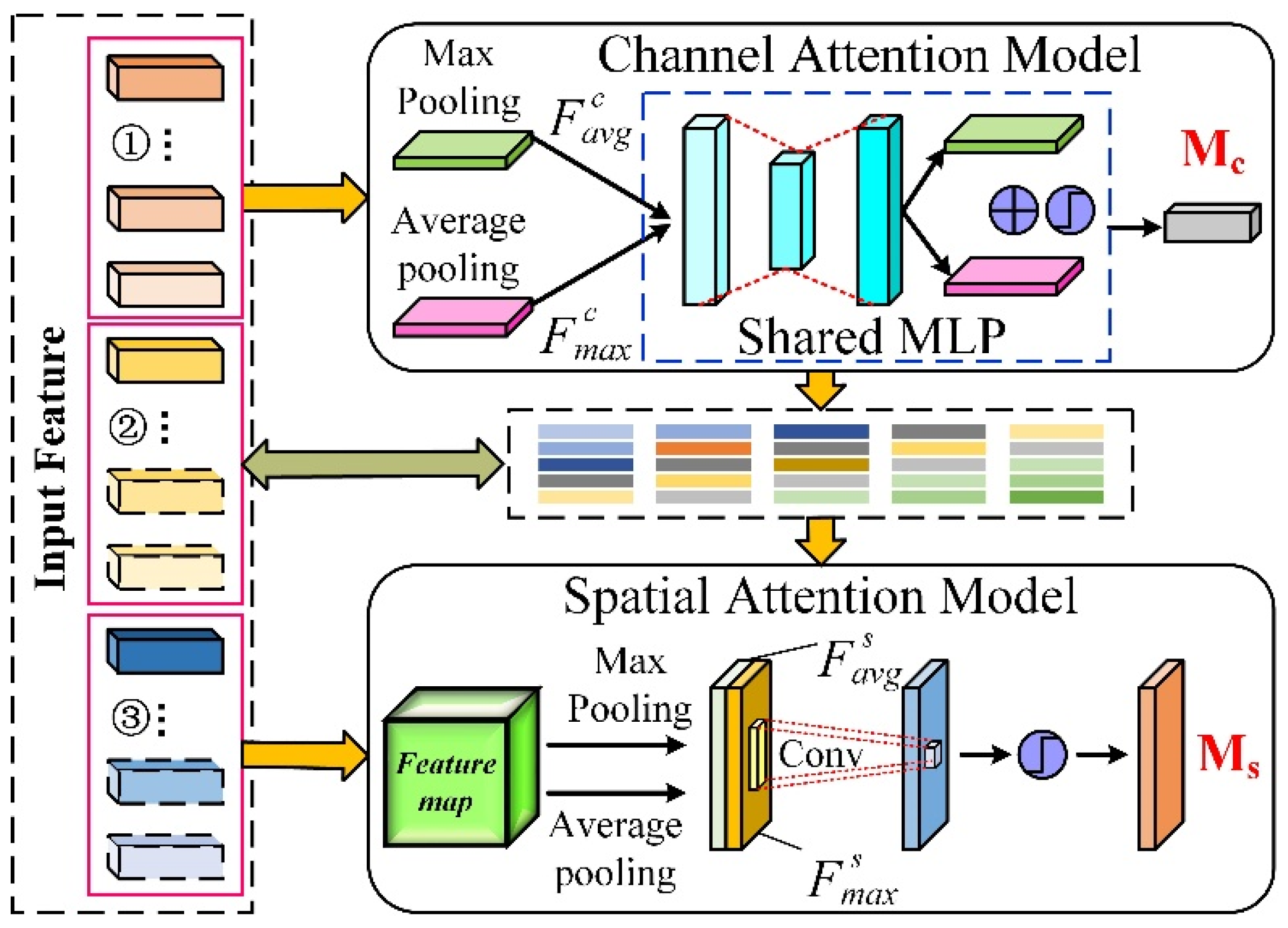

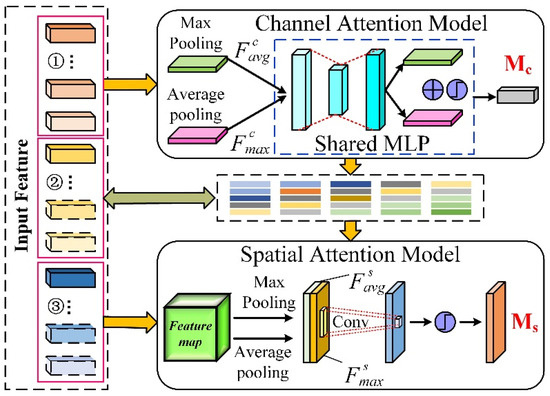

The CBAM mechanism [22] is lightweight and plug-and-play. It allocates the resources of the convolutional network according to the importance of the extracted features, thus changing the tendency of feature extraction. It reduces the interference of invalid features and improves the detection of the target.

The CBAM mechanism is shown in Figure 2. First, in the channel attention module, the input feature map undergoes parallel max pooling and average pooling, and the pooled results pass through a shared multilayer perceptron to output the channel attention features $M_c$. Second, $M_c$ is multiplied with the input feature map, and the resulting transition features are passed to the spatial attention module. Finally, in the spatial attention module, the transition features are processed by a convolution and a sigmoid function, and the resulting weights are multiplied with the features to output the spatial features $M_s$.

Figure 2. CBAM mechanism.

Given an input intermediate feature map $F \in \mathbb{R}^{C \times H \times W}$, CBAM completes channel feature extraction and spatial feature extraction in turn, generating a 1D channel attention map $M_c \in \mathbb{R}^{C \times 1 \times 1}$ and a 2D spatial attention map $M_s \in \mathbb{R}^{1 \times H \times W}$, respectively. Thus, the overall attention process of channel feature extraction and spatial feature extraction can be summarized as follows:

$$F' = M_c(F) \otimes F, \qquad F'' = M_s(F') \otimes F'$$

where $F'$ is an intermediate feature; $\otimes$ denotes element-wise multiplication; and $F''$ is the final refined output.

In Hemerocallis citrina Baroni maturity detection, the CBAM mechanism is able to allocate attention to both spatial and channel dimensions simultaneously, which enhances the detection effect of the model.

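The CBAM mechanism utilizes the feature relationships between channels to generate feature mappings. Considering the feature information of every channel at the same time requires a lot of computation. Therefore, the channel computation can be represented as follows:

$$M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big) = \sigma\big(W_1(W_0(F^c_{avg})) + W_1(W_0(F^c_{max}))\big)$$

where $\sigma$ denotes the sigmoid function, $F^c_{avg}$ and $F^c_{max}$ denote the average-pooled and max-pooled channel features, $W_0 \in \mathbb{R}^{C/r \times C}$ and $W_1 \in \mathbb{R}^{C \times C/r}$ denote the weights of the shared network applied after pooling, and $r$ is the channel reduction ratio.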

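In the spatial attention module, to aggregate spatial information, two 2D maps $F^s_{avg} \in \mathbb{R}^{1 \times H \times W}$ and $F^s_{max} \in \mathbb{R}^{1 \times H \times W}$ are generated by applying average pooling and max pooling along the channel axis, and the two maps are concatenated to form an efficient feature descriptor. Then, a convolution layer is applied to generate a spatial attention map $M_s(F)$. In short, spatial attention is computed as follows:

$$M_s(F) = \sigma\big(f^{7 \times 7}([\mathrm{AvgPool}(F); \mathrm{MaxPool}(F)])\big) = \sigma\big(f^{7 \times 7}([F^s_{avg}; F^s_{max}])\big)$$

where $f^{7 \times 7}$ represents a convolution operation with the filter size of $7 \times 7$.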

In the Hemerocallis citrina Baroni maturity detection model, inserting the CBAM mechanism between the feature extraction and the feature fusion network enables the model to focus on the information that is more critical to the current task among the numerous information inputs, reduces attention to other information, and even filters out irrelevant information. It can also solve the problem of information overload and improve the efficiency and precision of image processing.
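The two attention steps described in this section can be sketched in plain Python. This is a minimal illustration of the general CBAM idea (channel attention followed by spatial attention), not the implementation used in the GCB YOLOv7 model; the tensor sizes, the reduction ratio `r`, the 7 × 7 kernel, the zero padding, and the random weights are all assumptions made for the example, and biases are omitted.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def channel_attention(F, W0, W1):
    """One weight per channel: sigmoid(MLP(avg-pooled) + MLP(max-pooled))."""
    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in F]
    mx = [max(max(row) for row in ch) for ch in F]

    def mlp(v):
        hidden = [max(0.0, h) for h in matvec(W0, v)]  # ReLU
        return matvec(W1, hidden)

    return [sigmoid(a + m) for a, m in zip(mlp(avg), mlp(mx))]


def spatial_attention(F, kernel):
    """One weight per pixel: sigmoid(conv([channel-avg; channel-max])), zero padded."""
    C, H, W = len(F), len(F[0]), len(F[0][0])
    avg = [[sum(F[c][i][j] for c in range(C)) / C for j in range(W)] for i in range(H)]
    mx = [[max(F[c][i][j] for c in range(C)) for j in range(W)] for i in range(H)]
    maps = [avg, mx]
    k = len(kernel[0])
    pad = k // 2
    out = [[0.0] * W for _ in range(H)]
    for i in range(H):
        for j in range(W):
            acc = 0.0
            for m in range(2):
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < H and 0 <= x < W:
                            acc += kernel[m][di][dj] * maps[m][y][x]
            out[i][j] = sigmoid(acc)
    return out


def cbam(F, W0, W1, kernel):
    """Apply channel attention, then spatial attention, to a C x H x W feature map."""
    Mc = channel_attention(F, W0, W1)
    F1 = [[[Mc[c] * v for v in row] for row in ch] for c, ch in enumerate(F)]
    Ms = spatial_attention(F1, kernel)
    return [[[Ms[i][j] * ch[i][j] for j in range(len(ch[0]))]
             for i in range(len(ch))] for ch in F1]


# Hypothetical sizes and random weights, for illustration only.
random.seed(0)
C, H, W, r, k = 4, 5, 5, 2, 7
F = [[[random.uniform(-1, 1) for _ in range(W)] for _ in range(H)] for _ in range(C)]
W0 = [[random.uniform(-1, 1) for _ in range(C)] for _ in range(C // r)]
W1 = [[random.uniform(-1, 1) for _ in range(C // r)] for _ in range(C)]
kernel = [[[random.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(k)] for _ in range(2)]
refined = cbam(F, W0, W1, kernel)
print(len(refined), len(refined[0]), len(refined[0][0]))
```

Because both attention maps pass through a sigmoid, every weight lies between 0 and 1, so the module can only attenuate features; the learned weights decide which channels and positions are attenuated least.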

2.3. Cross-Stage Information Fusion Mechanisms

A feature fusion network is the link between feature extraction and prediction output. It fuses the shallow features with the deep features obtained by convolution and completes the prediction output.

Shallow features pass through fewer convolutions, so they have high resolution and carry location and detail information, but they are less semantic and noisier. Deep features, after many convolutions and feature extraction steps, carry more semantic information, but their resolution is lower and their ability to perceive details is poor. Therefore, the feature fusion network combines shallow and deep features to improve the recognition and detection performance of the model.

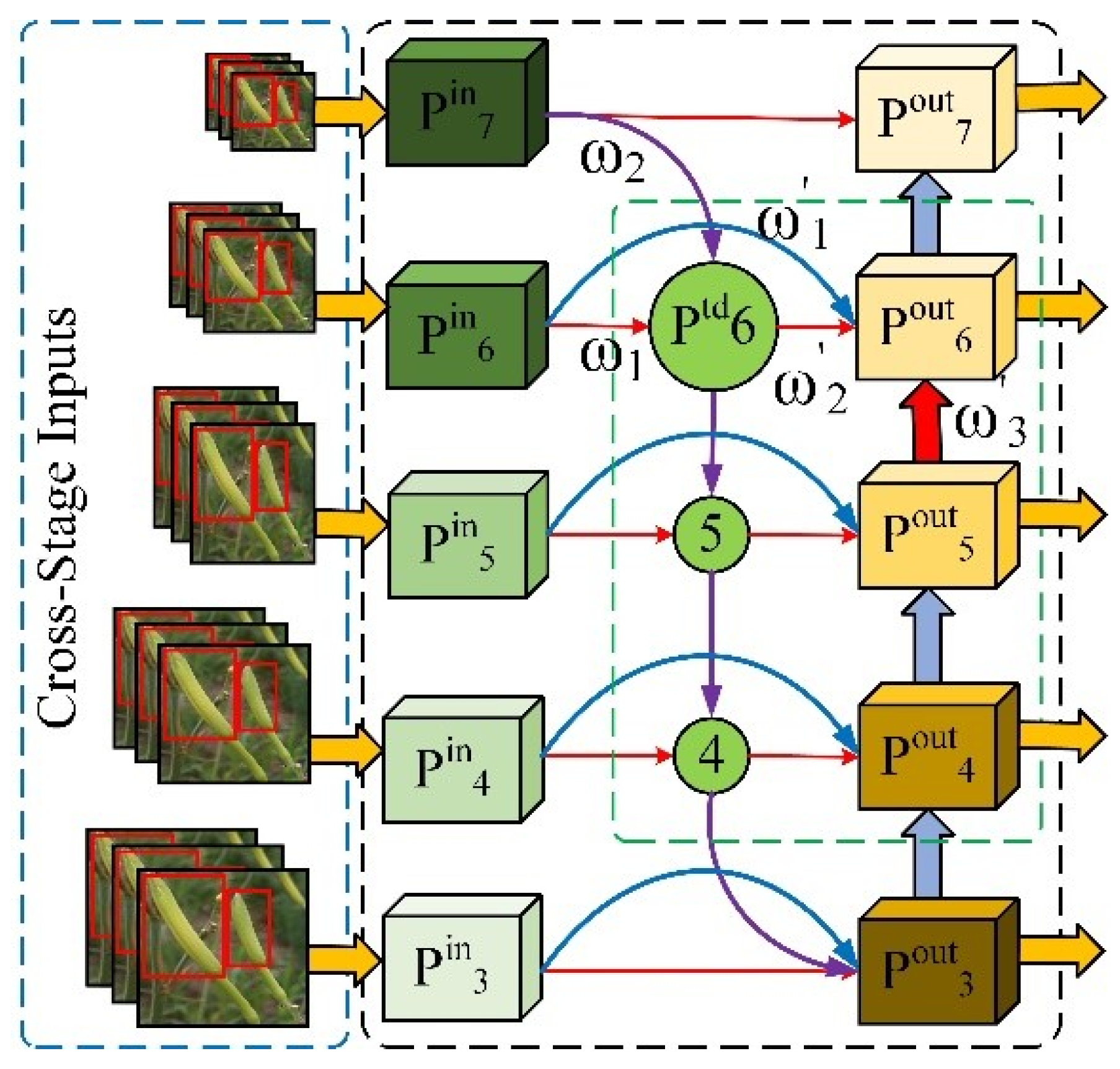

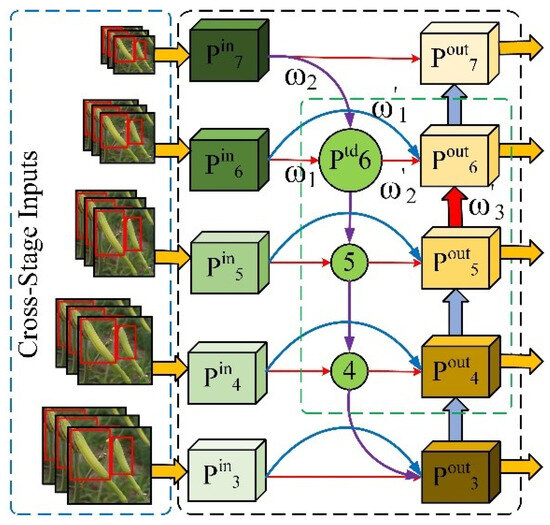

In the Hemerocallis citrina Baroni maturity detection model, the Bi FPN (Bi directional feature pyramid network) information fusion mechanism [23] is shown in Figure 3. This module is not only simple and lightweight but also gives different weights to different resolution features, so the information fusion ability is strong.

Figure 3. Bi FPN information fusion mechanism.

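Taking the level 6 input node as an example, the Bi FPN information fusion mechanism after adjustment by introducing weights can be expressed as follows:

$$P_6^{td} = \mathrm{Conv}\left(\frac{w_1 \cdot P_6^{in} + w_2 \cdot \mathrm{Resize}(P_7^{in})}{w_1 + w_2 + \epsilon}\right)$$

$$P_6^{out} = \mathrm{Conv}\left(\frac{w'_1 \cdot P_6^{in} + w'_2 \cdot P_6^{td} + w'_3 \cdot \mathrm{Resize}(P_5^{out})}{w'_1 + w'_2 + w'_3 + \epsilon}\right)$$

where $P^{in}$ is the input of different levels, $P^{td}$ and $P^{out}$ are the transition and output features, respectively, and $w$ and $w'$ are the feature weights in different paths. $\mathrm{Resize}$ denotes the up-sampling or down-sampling operation in the resolution adjustment process, $\mathrm{Conv}$ is the convolution operation in the feature extraction process, and $\epsilon$ is a small nonzero constant that keeps the denominator from vanishing.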

It can be seen that Bi FPN, through multi-level feature fusion and dynamic feature weight assignment, improves the precision and efficiency of target detection. Additionally, the information bottleneck and feature distortion problems existing in the feature pyramid network are effectively solved. In Hemerocallis citrina Baroni maturity detection, using Bi FPN instead of concatenate can solve the problem of Hemerocallis citrina Baroni detection in different environments, especially in cloudy and rainy weather.
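The weighted fusion at a single node can be sketched as follows. This is a minimal Python illustration of normalized weighted fusion, assuming that the features have already been resized to a common resolution and flattened into lists; the resizing and convolution steps are omitted, and the feature values, weights, and the `eps` default are hypothetical choices made for the example.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-length feature lists as sum(w_i * f_i) / (sum(w_i) + eps)."""
    w = [max(0.0, wi) for wi in weights]  # ReLU keeps every weight non-negative
    total = sum(w) + eps
    return [sum(wi * f[i] for wi, f in zip(w, features)) / total
            for i in range(len(features[0]))]


# Hypothetical flattened features at the level-6 resolution.
p6_in = [1.0, 2.0, 3.0]
p7_resized = [0.5, 0.5, 0.5]   # level-7 input after resizing (assumed done elsewhere)
p5_resized = [2.0, 2.0, 2.0]   # level-5 output after resizing (assumed done elsewhere)

# Transition feature: fuse the level-6 input with the resized level-7 input.
p6_td = fast_normalized_fusion([p6_in, p7_resized], [1.0, 1.0])
# Output feature: fuse the input, the transition feature, and the resized level-5 output.
p6_out = fast_normalized_fusion([p6_in, p6_td, p5_resized], [1.0, 1.0, 1.0])
print(p6_td, p6_out)
```

With equal weights the fusion reduces to a plain average; during training the weights would be learned, so that more informative resolutions contribute more to each node.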

2.4. GCB YOLOv7 Model Structure

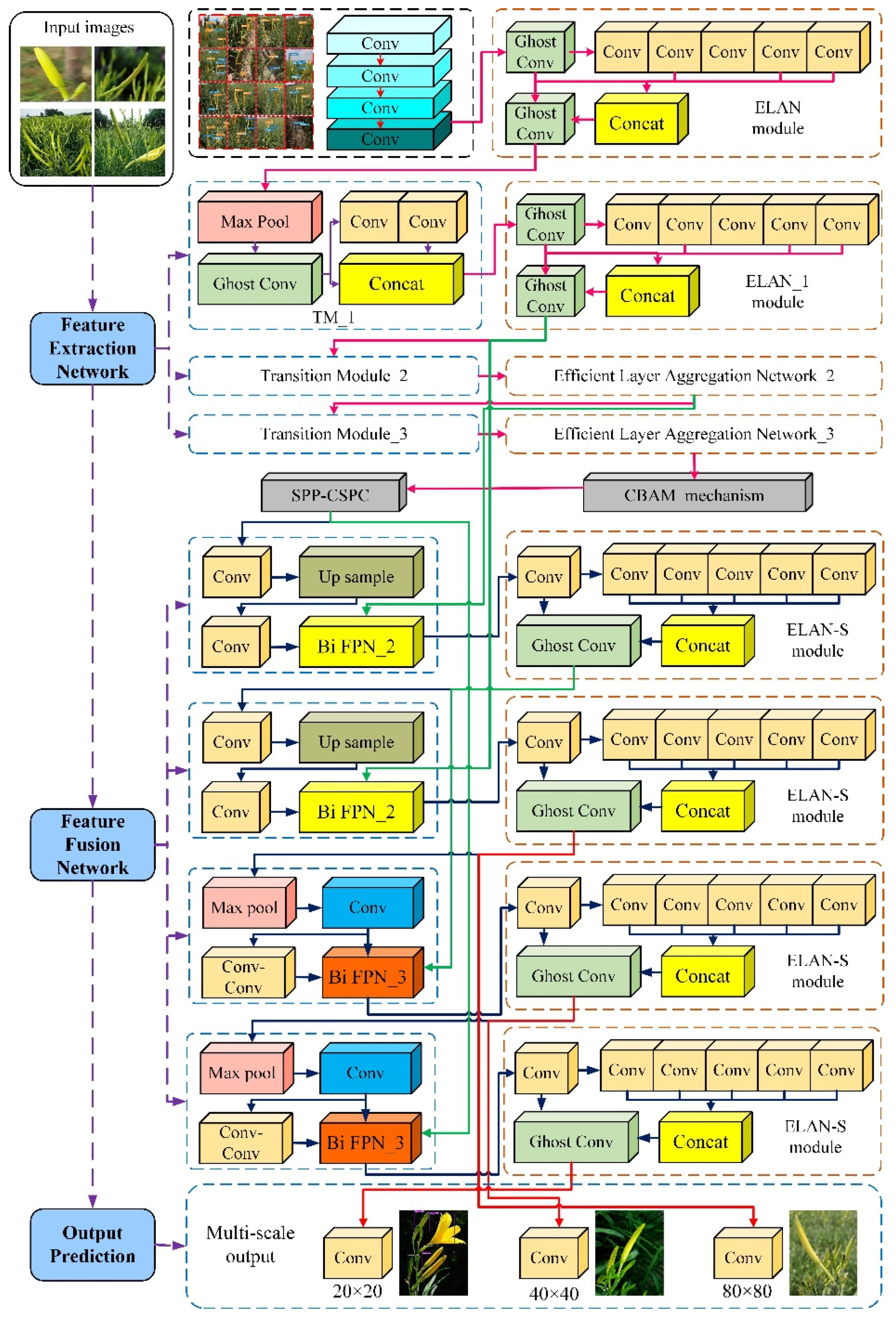

The YOLOv7 algorithm consists of the convolution, pooling, up-sampling, ELAN module, transition module (TM), and spatial pyramid pooling cross-stage partial channel (SPP-CSPC) modules. The model has three branching paths: a main path running through the whole model and two branching paths in the ELAN module and the TM module, respectively.

The structure of the improved GCB YOLOv7 model is shown in Figure 4. As can be seen from the figure, the GCB YOLOv7 algorithm mainly uses the ELAN and TM modules to accomplish feature extraction alternately, and the ELAN module mainly consists of traditional convolution. The ELAN module in the feature extraction and feature fusion networks is different since it has four and six convolutional branches, respectively. Additionally, the transition module contains pooling and up-sampling operations with the aim of changing the size of the feature map to achieve cross-stage information fusion [23].

Figure 4. GCB YOLOv7 model structure.

The improved GCB YOLOv7 algorithm optimizes the convolutions in the ELAN and TM modules according to their relative importance and replaces selected traditional convolutions with the less computationally intensive Ghost convolution. This preserves the stability of the model while reducing the amount of model computation.

In addition, the CBAM mechanism is introduced to complete the information transition, and the Bi FPN information fusion mechanism improves the model's performance. In the feature fusion network, feature fusion is achieved by 2-channel and 3-channel information fusion methods, and the visualization output is formed so that the detection results contain localization, classification, and confidence information.

As a result, the detection precision and recall are improved while the model is made lightweight, and the detection of Hemerocallis citrina Baroni maturity is accomplished more accurately and efficiently [24].

3. Experimental Analysis

3.1. Experimental Platform and Model Training

This experiment was performed in a Python 3.8.5 and CUDA 11.3 environment. The hardware consisted of an Intel Core i9-10900K @ 3.7 GHz CPU and an NVIDIA GeForce RTX 3080 10 G GPU with dual-channel DDR4 3600 MHz memory.

In this paper, the GCB YOLOv7 algorithm and the YOLOv7 algorithm use the same parameter settings. In terms of the image dataset, there are 800 images with a size of 6960 × 4640, among which 597 and 148 images are used in the training and validation sets, respectively, and 55 images are used in the test set. The algorithm parameter settings are as follows: the learning rate is 0.01; the cosine annealing hyperparameter is 0.1; the weight decay coefficient is 0.0005; and the momentum parameter in the gradient descent with momentum is 0.937. A total of 200 epochs and a batch size of 10 are used during training.
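The training settings above can be collected in a short Python sketch. The dictionary keys and the schedule function are illustrative names, not taken from the paper. In particular, interpreting the cosine annealing hyperparameter 0.1 as the ratio of the final learning rate to the initial one, as is done in common YOLO training code, is an assumption.

```python
import math

# Settings as reported in the text; the key names are hypothetical.
config = {
    "lr0": 0.01,            # initial learning rate
    "lrf": 0.1,             # cosine annealing hyperparameter (assumed: final lr = lr0 * lrf)
    "weight_decay": 0.0005,
    "momentum": 0.937,
    "epochs": 200,
    "batch_size": 10,
}
split = {"train": 597, "val": 148, "test": 55}


def cosine_lr(epoch, epochs=config["epochs"], lr0=config["lr0"], lrf=config["lrf"]):
    """Cosine decay from lr0 at epoch 0 to lr0 * lrf at the final epoch."""
    cosine = (1 - math.cos(math.pi * epoch / epochs)) / 2  # rises from 0 to 1
    return lr0 * (1.0 + cosine * (lrf - 1.0))


total = sum(split.values())
for name, count in split.items():
    print(f"{name}: {count} images ({100 * count / total:.1f}%)")
for epoch in (0, 100, 200):
    print(f"epoch {epoch}: lr = {cosine_lr(epoch):.4f}")
```

Under this assumed schedule the learning rate falls smoothly from 0.01 to 0.001 over the 200 epochs, and the three subsets account for all 800 images.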

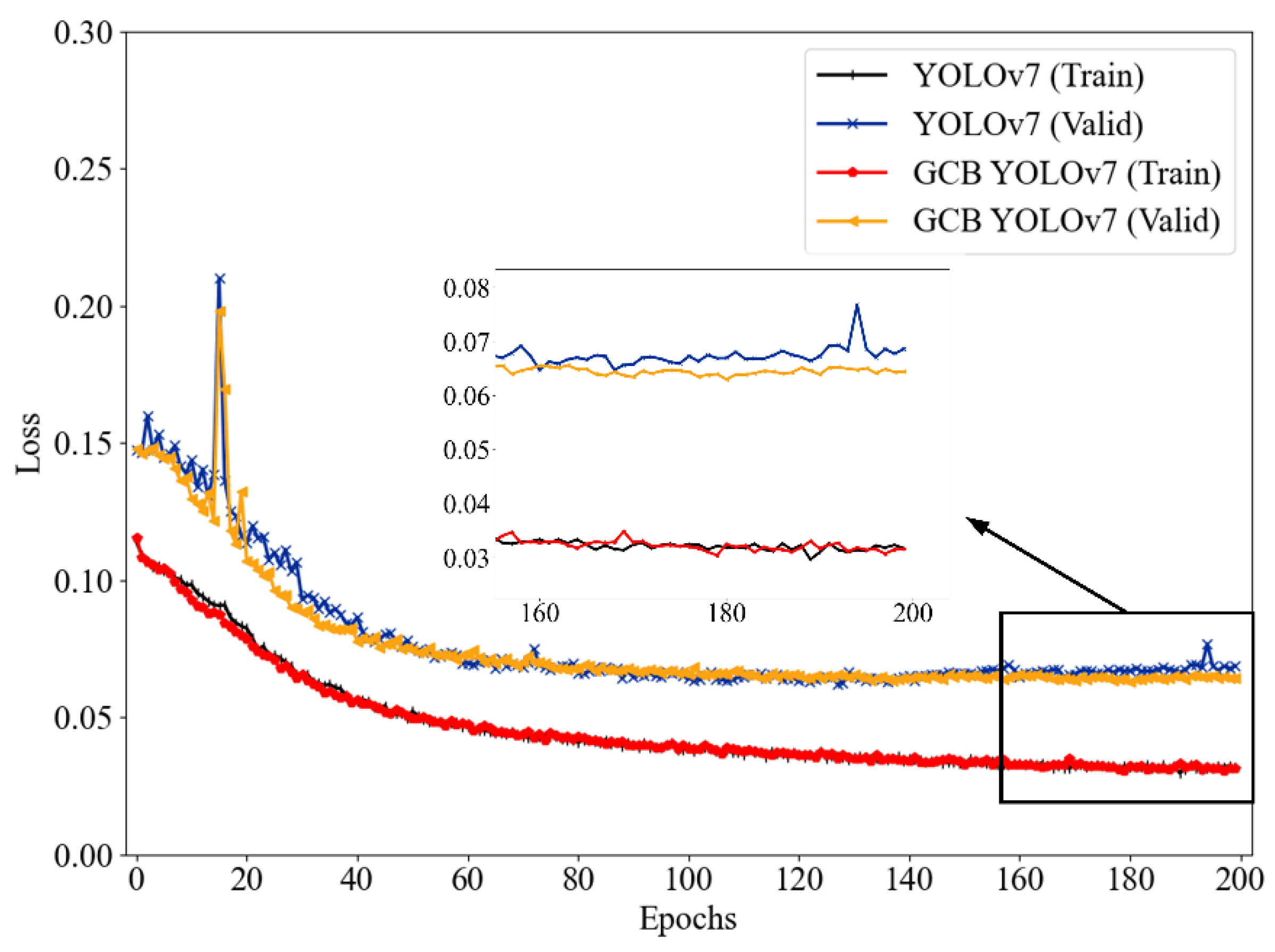

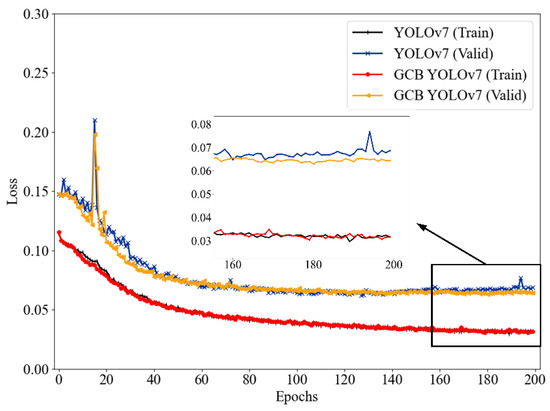

In deep neural networks, the loss function is used as an objective function to evaluate the difference between the model prediction and the target value. When the value of the loss function is smaller, the model prediction is closer to the target value and the model precision is higher, and vice versa. The loss function before and after the algorithm improvement is shown in Figure 5.

Figure 5. Loss function convergence curve.

As shown in the figure, during model training the GCB YOLOv7 algorithm converges faster than the original algorithm in the early training period [25]. In addition, the final convergence values of the YOLOv7 and GCB YOLOv7 algorithms are 0.03169 and 0.03154, respectively, so the two maintain approximately the same convergence trend.

However, the improved GCB YOLOv7 algorithm shows more obvious advantages during model validation. The specific advantages are as follows: (1) Influenced by the structure of the model, the GCB YOLOv7 algorithm has a lower starting value for the loss function and less volatility. (2) The GCB YOLOv7 algorithm converges faster and more efficiently in the first 40 training epochs. (3) The final loss function value is lower after 200 epochs of training: the original YOLOv7 and GCB YOLOv7 algorithms have loss function values of 0.6855 and 0.6442, respectively.

It can be seen that after the integration of the lightweight neural network and attention mechanism in Hemerocallis citrina Baroni maturity detection, the GCB YOLOv7 model has higher detection precision, the validation results are closer to the labeling result values, and the model performance is better.

3.2. Model Lightweight Analysis

In machine learning, especially in the context of complex deep neural networks, ablation experiments can effectively verify the necessity of individual modules [26]. By adding or deleting different modules, they guide the improvement and optimization of the neural network and help enhance the model's performance.

The ablation experiments of the GCB YOLOv7 model are shown in Table 1. As can be seen from Table 1, Models 1 to 3 are based on the YOLOv7 algorithm, and the lightweight Ghost convolution, the CBAM mechanism, and the information fusion Bi FPN mechanism are introduced sequentially based on the previous model.

Table 1. Ablation experiment.

In the model lightweight analysis, the number of model network layers, parameters, and floating-point operations, as well as the training time and model volume, are the main parameters for judging the lightweight of the model, so the structural parameters of each ablation experimental model are shown in Table 2. In order to highlight the advantages of the GCB YOLOv7 algorithm proposed in this paper, the YOLOv5 algorithm is added to the comparative analysis experiments for comparison.

Table 2. Model lightweight analysis.

Compared with the YOLOv5 algorithm, the YOLOv7 algorithm has about 53 fewer network layers. The number of parameters and floating-point operations are reduced by about 8.94 million and 3.1 G, respectively, and the model volume is compressed from 92.7 M to 74.8 M. Across these metrics, YOLOv7, which uses multi-branch stacking, is lighter than YOLOv5.

Compared with the YOLOv7 algorithm, the improved GCB YOLOv7 shows superior performance with more obvious lightweight advantages. First, in terms of model structure, the number of network layers increases from 415 to 511 for the GCB YOLOv7 model due to the introduction of linear transformation, CBAM, and Bi FPN. However, the number of parameters and floating-point operations are reduced by about 5.46% and 6.96%, respectively. Secondly, the model training duration is reduced from 8.025 h to 7.903 h, which is about 1.52%. Finally, affected by the model structure, the model volume obtained from GCB YOLOv7 training is only 70.8 M, which is reduced by about 4 M.
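The relative changes quoted above follow from simple arithmetic, which the short Python check below reproduces from the values given in the text. The helper name `relative_reduction` is introduced here for illustration only.

```python
def relative_reduction(before, after):
    """Percentage by which `after` is smaller than `before` (negative means an increase)."""
    return (before - after) / before * 100.0


training_time = relative_reduction(8.025, 7.903)  # hours, YOLOv7 vs. GCB YOLOv7
model_volume = relative_reduction(74.8, 70.8)     # M, YOLOv7 vs. GCB YOLOv7
layers = relative_reduction(415, 511)             # negative: the layer count grows

print(f"training time: {training_time:.2f}% shorter")
print(f"model volume:  {model_volume:.2f}% smaller")
print(f"network layers: {-layers:.2f}% more")
```

The check makes the trade-off explicit: the network has more layers, yet the training time and the stored model are both smaller.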

The model lightweight analysis shows that introducing lightweight convolution, the attention mechanism, and cross-stage information fusion into the original YOLOv7 algorithm simplifies the model structure, reduces the amount of model computation, and compresses the model training time and volume.

3.3. Average Precision Analysis

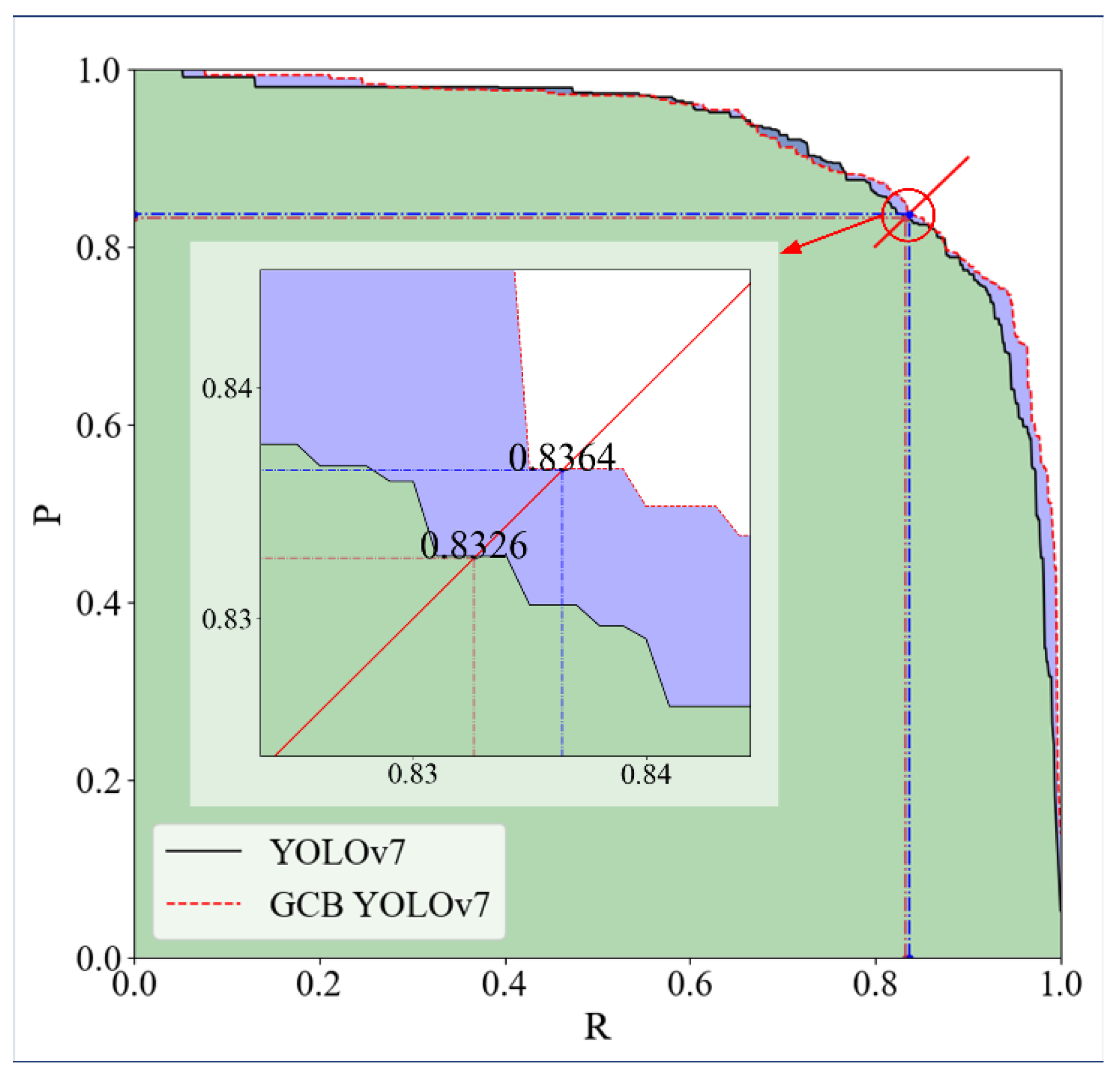

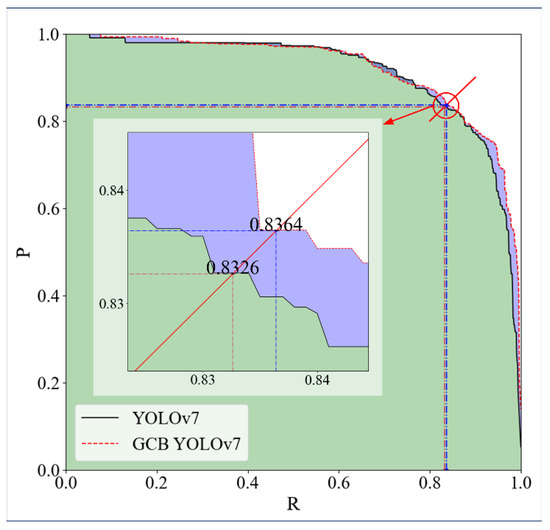

The R-P curve shows the relationship between model precision (P) and recall (R) and enables the evaluation of model performance. The classification threshold is swept from 0.001 to 1.000 over a total of 1000 threshold nodes, and the corresponding P and R of the model are obtained at each node. Using these 1000 (R, P) pairs, the R-P curve is plotted with recall on the horizontal axis and precision on the vertical axis. The area under the resulting R-P curve is the average precision (AP) of the model.
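The area computation can be sketched in Python as follows. This is a simplified illustration that integrates the curve with the trapezoidal rule; detection toolkits usually smooth and interpolate the curve before integrating, so the function below is an assumption-laden stand-in rather than the evaluation code used in the experiments, and the sample points are made up.

```python
def average_precision(recalls, precisions):
    """Area under the R-P curve by the trapezoidal rule (points sorted by recall)."""
    points = sorted(zip(recalls, precisions))
    area = 0.0
    for (r0, p0), (r1, p1) in zip(points, points[1:]):
        area += (r1 - r0) * (p0 + p1) / 2.0
    return area


# The 1000 threshold nodes described in the text: 0.001, 0.002, ..., 1.000.
thresholds = [i / 1000 for i in range(1, 1001)]

# Hypothetical (R, P) samples, for illustration only.
recalls = [0.0, 0.5, 0.8, 1.0]
precisions = [1.0, 0.95, 0.85, 0.4]
print(f"{len(thresholds)} thresholds, AP = {average_precision(recalls, precisions):.4f}")
```

A curve that stays close to precision 1 over a wider range of recall encloses a larger area, which is why a larger area is read as better detection performance.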

The R-P curves of YOLOv7 and GCB YOLOv7 are shown in Figure 6. As can be seen from the figure, the area under the curve of the GCB YOLOv7 model is larger than that of the YOLOv7 algorithm, so its average precision is higher. In Hemerocallis citrina Baroni maturity detection, the average precision of the YOLOv7 and GCB YOLOv7 models is 0.913 and 0.922, respectively, so the improved GCB YOLOv7 algorithm is superior.

Figure 6. R-P curve.

In the figure, the balance line (red solid line) is plotted by connecting the points (0,0) and (1,1). It intersects the R-P curves of the YOLOv7 and GCB YOLOv7 algorithms at (0.8326, 0.8326) and (0.8364, 0.8364), respectively. From the enlarged view inside the red circle, we can see that when P and R are balanced in Hemerocallis citrina Baroni maturity detection, the GCB YOLOv7 algorithm achieves the higher value of both, so its balanced performance is more desirable.

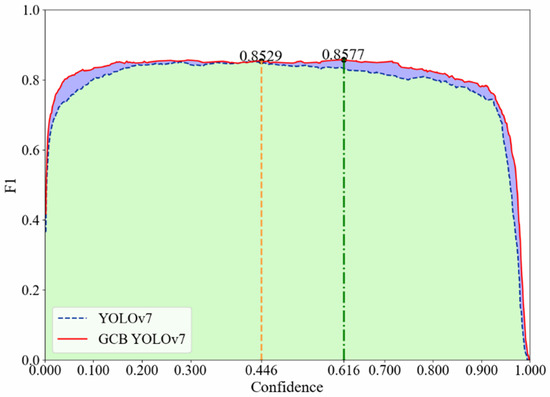

3.4. Harmonized Mean Performance Analysis

The harmonic mean performance (F1) is the harmonic mean of model precision and recall. Hemerocallis citrina Baroni maturity detection has to consider both: precision, to accurately recognize and classify whether a Hemerocallis citrina Baroni is mature, and recall, to avoid missed detections that would overlook Hemerocallis citrina Baroni meeting the picking standard.

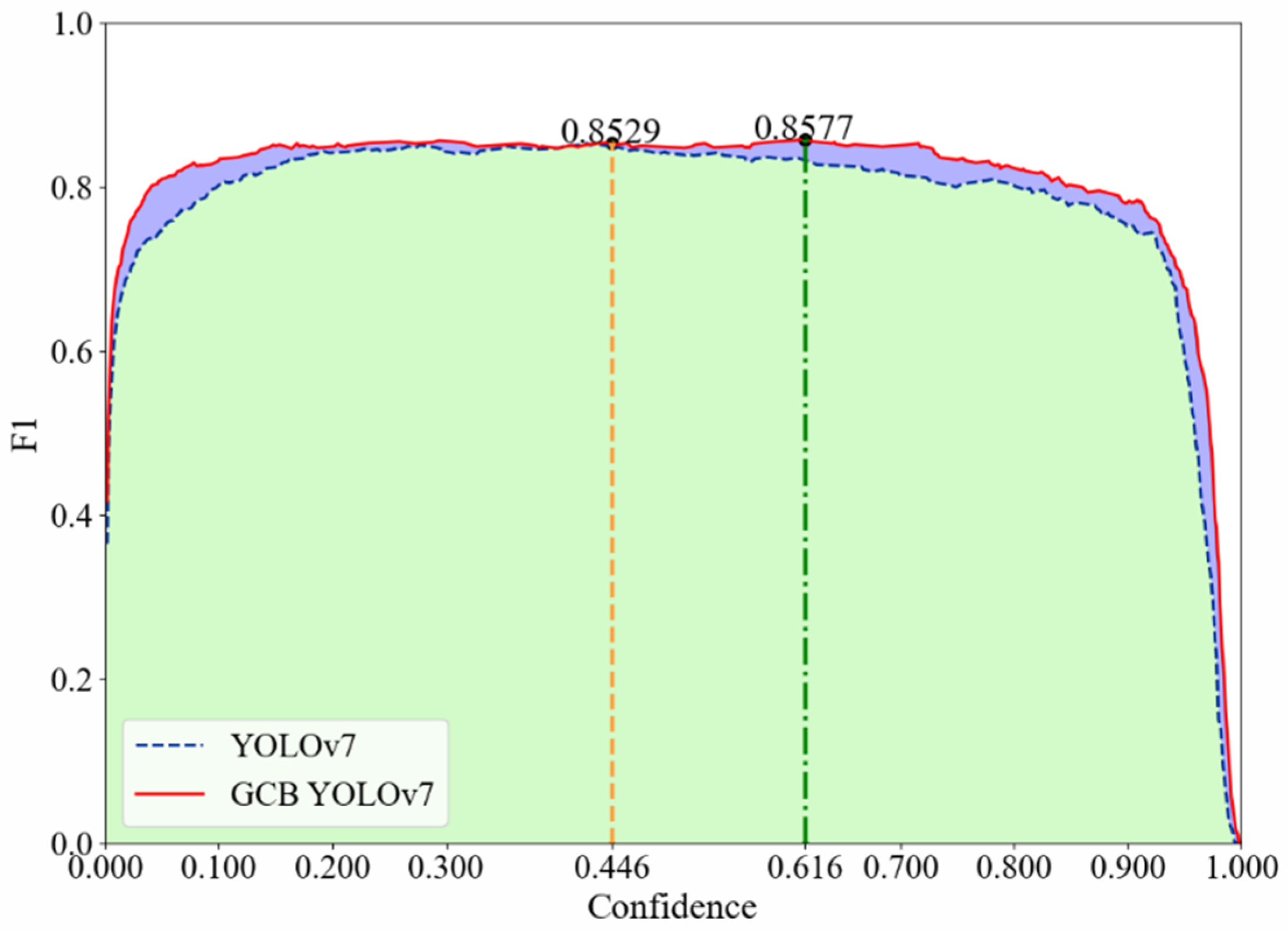

The harmonic mean performance curve varies with the confidence threshold, because precision and recall differ under different confidence thresholds. The harmonic mean performance curves before and after algorithm improvement in Hemerocallis citrina Baroni maturity detection are shown in Figure 7.

Figure 7. F1 harmonized mean curve.

As can be seen from the figure, under different confidence thresholds, the harmonic mean curve of the GCB YOLOv7 algorithm is more desirable than that of the original YOLOv7 algorithm. The F1 value of the YOLOv7 model peaks at 0.8529 at a confidence threshold of 0.446 (yellow dotted line), while the F1 value of the GCB YOLOv7 algorithm peaks at 0.8577 at a confidence threshold of 0.616 (green dotted line), which is 0.0048 higher than that of the original algorithm.
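The harmonic mean and the choice of the best threshold can be sketched in Python as below. The function names and the toy precision and recall values are hypothetical; only the two peak F1 values and the balance-point value are taken from the text.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def best_f1(thresholds, precisions, recalls):
    """Return the (threshold, F1) pair with the highest F1 on a sampled curve."""
    scores = [f1_score(p, r) for p, r in zip(precisions, recalls)]
    i = max(range(len(scores)), key=scores.__getitem__)
    return thresholds[i], scores[i]


# When precision equals recall, F1 equals that common value.
print(f1_score(0.8364, 0.8364))

# Hypothetical sampled curve, for illustration only.
print(best_f1([0.2, 0.5, 0.8], [0.60, 0.80, 0.95], [0.95, 0.85, 0.50]))

# Gap between the two peak F1 values reported in the text.
print(round(0.8577 - 0.8529, 4))
```

Because the harmonic mean is dominated by the smaller of its two inputs, a threshold that trades a lot of recall for a little precision (or the reverse) lowers F1, which is why the peak lies at an intermediate confidence threshold.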

It can be seen that the model GCB YOLOv7 has the ability to improve the feature extraction tendency and self-update the weights after incorporating the CBAM mechanism and multi-information fusion, and the comprehensive performance of the model is better.

3.5. Real-Time Performance Analysis

In Hemerocallis citrina Baroni maturity detection, the non-maximum suppression strategy is added to the detection model obtained from training, so the detection efficiency is higher and the detection process is more stable [27].

In this experiment, 25 images of Hemerocallis citrina Baroni from different scenes were detected. The YOLOv7 algorithm took 230 ms, of which 20 ms were used to perform the NMS inference strategy alone. Therefore, the real-time performance of the YOLOv7 algorithm was 108.7 FPS. The GCB YOLOv7 algorithm took 250 ms, and no separate inference was performed; therefore, the real-time performance was 100.0 FPS.
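The frame rates above follow directly from the image count and the total detection time, as the short Python check below shows. The helper names are introduced here for illustration.

```python
def frames_per_second(n_images, total_ms):
    """Throughput in frames per second for n_images processed in total_ms milliseconds."""
    return n_images / (total_ms / 1000.0)


def ms_per_frame(n_images, total_ms):
    """Average latency per image in milliseconds."""
    return total_ms / n_images


print(f"YOLOv7:     {frames_per_second(25, 230):.1f} FPS")
print(f"GCB YOLOv7: {frames_per_second(25, 250):.1f} FPS, "
      f"{ms_per_frame(25, 250):.0f} ms/frame")
```

The 10 ms/frame figure for GCB YOLOv7 is simply the reciprocal view of its 100.0 FPS throughput.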

The analysis of real-time performance shows that the lightweight design of the GCB YOLOv7 algorithm does not noticeably degrade detection speed, and both algorithms meet the real-time performance requirements.

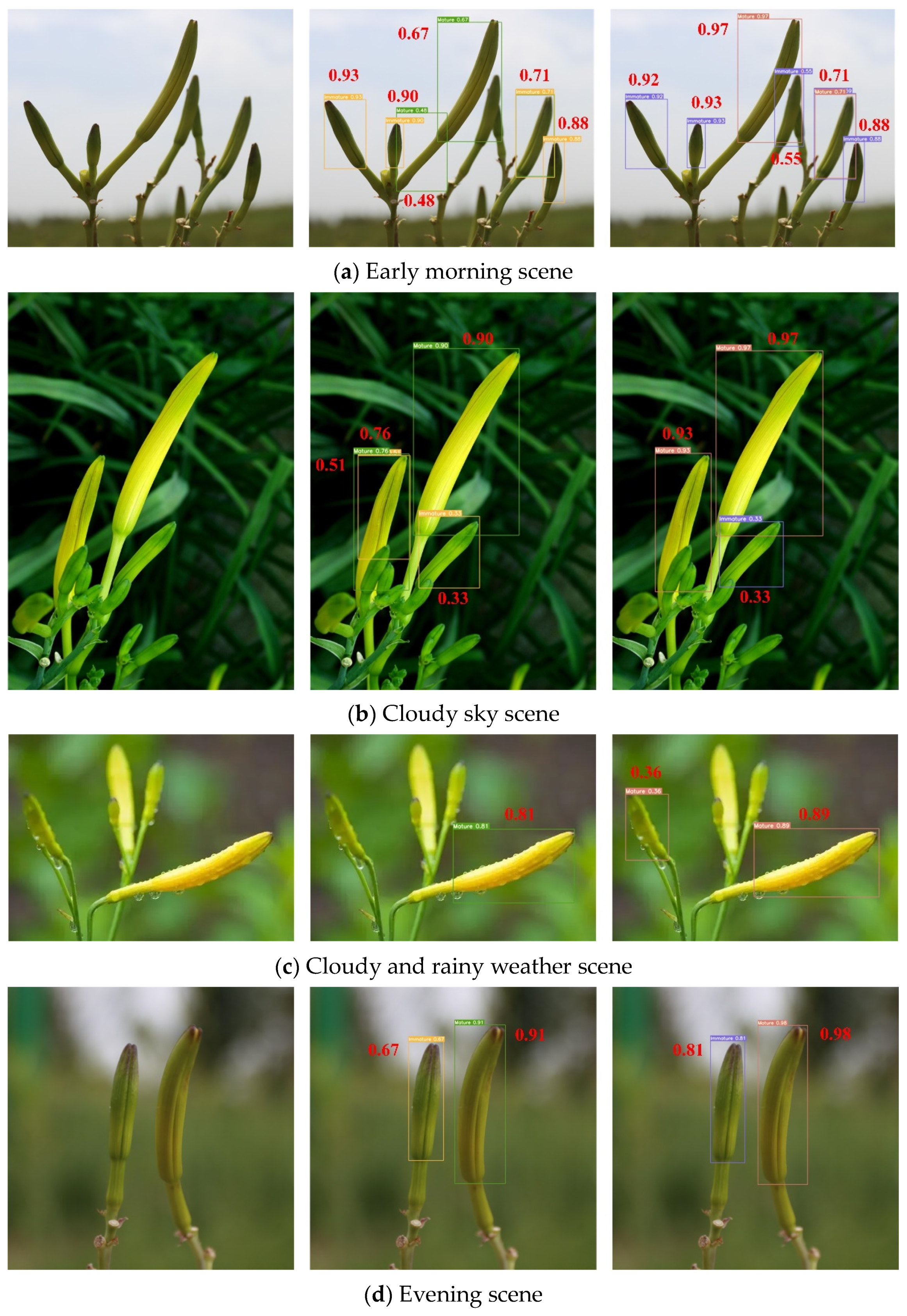

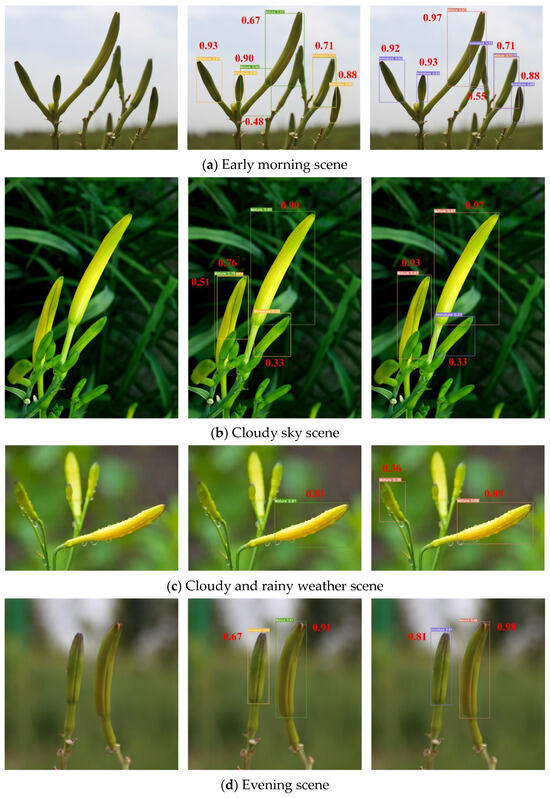

3.6. Comparison of Test Results

In the process of Hemerocallis citrina Baroni maturity detection, the same parameters were set for both models: the IOU threshold and the confidence threshold were both 0.2. Because NMS is added to both the YOLOv7 and GCB YOLOv7 algorithms, the non-maximum suppression effect is obvious. This largely reduces duplicate detections and improves the local search and comparison abilities of the model [28].
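The role of the two thresholds can be illustrated with a minimal greedy NMS sketch in Python. It is a generic textbook version, not the routine used in the experiments; the boxes are hypothetical, and only the threshold values of 0.2 come from the text.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thres=0.2, conf_thres=0.2):
    """Greedy NMS: drop low-confidence boxes, then keep the best of each overlapping group."""
    order = sorted((i for i, s in enumerate(scores) if s >= conf_thres),
                   key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thres for j in keep):
            keep.append(i)
    return keep


# Hypothetical detections: two overlapping boxes on one flower, one separate
# flower, and one low-confidence box.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140), (200, 200, 220, 220)]
scores = [0.76, 0.51, 0.93, 0.10]
print(nms(boxes, scores))
```

In this toy case the lower-scoring of the two overlapping boxes is suppressed and the box below the confidence threshold is discarded, leaving one detection per flower.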

Because of the growth and harvesting characteristics of Hemerocallis citrina Baroni, harvesting is mainly carried out in the early morning, in the evening, or in rainy weather. Therefore, this experimental comparison was performed under conditions consistent with actual Hemerocallis citrina Baroni picking. The comparison of the results of the YOLOv7 and GCB YOLOv7 models for the detection of Hemerocallis citrina Baroni maturity is shown in Figure 8.

Figure 8. Test results.

As can be seen from Figure 8a, the maturity recognition of Hemerocallis citrina Baroni in the early morning scene is more satisfactory with the improved model. The YOLOv7 algorithm mistakenly recognizes plant rhizomes as Hemerocallis citrina Baroni with a recognition confidence of 0.48. Compared to the original algorithm, the improved GCB YOLOv7 algorithm does not show any misdetections in this image and has a higher detection confidence.

In the cloudy sky scene for group (b), with the same NMS strategy applied to the same Hemerocallis citrina Baroni, the YOLOv7 algorithm gives two detections with confidences of 0.51 and 0.76, while the GCB YOLOv7 algorithm gives a single detection with a confidence of 0.93. The GCB YOLOv7 algorithm therefore has a significant advantage in NMS effect.

In the cloudy and rainy weather scene for group (c), which is affected by the resolution of the detected images, especially the blurred edge resolution in the same image, the GCB YOLOv7 algorithm detected two Hemerocallis citrina Baroni, while the original YOLOv7 algorithm detected only one Hemerocallis citrina Baroni. Also, the YOLOv7 algorithm has a much lower detection confidence for the same Hemerocallis citrina Baroni of only 0.36.

In the evening scene for group (d), both the YOLOv7 and GCB YOLOv7 algorithms demonstrate excellent detection results for the single bouquet detection environment. However, the improved GCB YOLOv7 algorithm has better detection results, with confidence levels of 0.81 and 0.98 for the two Hemerocallis citrina Baroni, which are 0.24 and 0.07 higher than those of the original algorithm, respectively.

In addition, three different algorithms, YOLOv5, YOLOv7, and YOLOv8, were each used to detect Hemerocallis citrina Baroni maturity, and the experimental results are shown in Table 3.

Table 3. Comparison of experimental results.

From Table 3, it can be seen that the floating-point operations of the YOLOv5 and YOLOv8 algorithms, 107.9 G and 165.4 G, respectively, are larger than those of the YOLOv7 algorithm. At the same time, the real-time performance of the two algorithms is poorer, at only 64.16 FPS and 80.75 FPS, respectively. In addition, YOLOv5 has the highest accuracy but also the highest number of parameters, so a Hemerocallis citrina Baroni maturity detection device based on it may require larger computing hardware.

In addition, the models in the literature [29,30] were reproduced for comparative experiments. Both are built on the standard YOLOv5 model and were evaluated on the same yellow flower dataset.

The experimental results show that, compared with the original YOLOv5, the CBAM-YOLOv5 model proposed in the literature [29] improves detection efficiency, raising real-time performance from 64.16 FPS to 71.72 FPS, but its detection accuracy decreases to varying degrees. The YOLO-CBAM model proposed in the literature [30], in contrast, achieves a further improvement in performance but remains slightly inferior to YOLOv7.

Meanwhile, as Table 3 shows, the models in the literature [29,30] improve on YOLOv5, while the GCB YOLOv7 proposed in this paper improves on the YOLOv7 algorithm. Although both of those models introduce the CBAM attention mechanism, the YOLOv5 algorithm they build on does not perform as well as YOLOv7 in terms of either model parameters or detection performance.

Built on the YOLOv7 algorithm, the improved GCB YOLOv7 algorithm has fewer parameters, fewer floating-point operations, and a smaller model size, which suits the future needs of intelligent harvesting equipment. In addition, its real-time performance reaches 100.00 FPS, surpassing the YOLOv5 and YOLOv8 algorithms.
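The frame rates quoted here and the per-frame detection time quoted in the abstract (10 ms/frame) are two views of the same quantity. The short sketch below shows the conversion, assuming frames are processed one at a time with no batching or pipelining; the function names are illustrative and do not come from the paper.

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Frames per second for a given per-frame latency in milliseconds."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


def latency_ms_from_fps(fps: float) -> float:
    """Per-frame latency in milliseconds for a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# 10 ms/frame (abstract) corresponds to the 100.00 FPS reported for GCB YOLOv7.
gcb_fps = fps_from_latency_ms(10.0)

# Per-frame latency implied by the FPS values quoted in the text.
reported_fps = {"YOLOv5": 64.16, "CBAM-YOLOv5 [29]": 71.72, "YOLOv8": 80.75}
implied_latency_ms = {name: latency_ms_from_fps(f) for name, f in reported_fps.items()}
```

Under this assumption, the 64.16 FPS and 80.75 FPS figures correspond to roughly 15.6 ms and 12.4 ms per frame, against 10 ms for GCB YOLOv7.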

As Table 3 shows, the accuracy of the GCB YOLOv7 model meets the needs of detection, and its real-time performance meets the requirements of target detection. Therefore, the improved YOLOv7 model is suitable for Hemerocallis citrina Baroni maturity detection.

In summary, the improved GCB YOLOv7 algorithm has clear lightweight advantages: it reduces model computation, compresses the model volume, and simplifies the model structure. At the same time, detection precision, recall, average precision, and the harmonic mean of precision and recall all improve to varying degrees. In addition, the detection efficiency meets the requirements of real-time performance. The GCB YOLOv7 algorithm proposed in this paper can therefore meet the requirements of Hemerocallis citrina Baroni maturity detection [31].
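As a reminder of how these metrics relate, the sketch below computes precision, recall, and their harmonic mean from detection counts. It assumes the "harmonic mean" in the text is the usual F1 score; the counts are hypothetical and are not results from the paper.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and their harmonic mean (F1) from detection counts.

    tp: correct detections, fp: false detections, fn: missed targets.
    """
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    denom = precision + recall
    f1 = 2.0 * precision * recall / denom if denom > 0 else 0.0
    return precision, recall, f1


# Hypothetical counts, for illustration only.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a model cannot score well on it by trading recall away for precision or the reverse, which is why it is reported alongside the two separate values.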

4. Conclusions

To address the complex structure and the low detection precision and recall of deep learning models in smart agriculture construction, this paper proposes the GCB YOLOv7 Hemerocallis citrina Baroni maturity detection method, which combines a lightweight neural network with an attention mechanism. The method simplifies the model's structure by fusing a lightweight network, improves the tendency of feature extraction by combining the CBAM mechanism, and reduces unnecessary network nodes by fusing cross-channel information. It thereby realizes adaptive updating of the model weights, balances the model's precision and recall, and resolves the mismatch between these performance measures [32,33].

The Hemerocallis citrina Baroni maturity detection results show that the proposed GCB YOLOv7 algorithm improves detection precision and reduces the missed-detection rate, and that its lightweight advantage over YOLOv5 and YOLOv7 is clear. In addition, simplifying the model structure to make it lightweight also improves the model's performance, which resolves the tension between model structure and performance.

Author Contributions

Conceptualization, B.S., L.W. and N.Z.; methodology, B.S., L.W. and N.Z.; software, B.S. and N.Z.; validation, L.W. and N.Z.; investigation, N.Z.; resources, L.W.; data curation, B.S.; writing—original draft preparation, B.S., L.W. and N.Z.; writing—review and editing, L.W. and N.Z.; visualization, B.S.; supervision, L.W. and N.Z.; project administration, N.Z.; funding acquisition, L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Shanxi Datong University Research Program (No. 2020Q13, No. 2022K1 and No. 2022K06), the 2022 Teaching Reform and Innovation Program of Shanxi Datong University (No. XJG2022216), and the 2023 Shanxi Province Higher Education Institutions Science and Technology Innovation Program Various Projects (No. 2023L276).

Institutional Review Board Statement

The research does not require the consent or approval of any authority.

Informed Consent Statement

The study did not involve animal or human experimentation.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors would like to thank the reviewers for their careful reading of our paper and for their valuable suggestions for revision, which helped us present our paper better.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Introduction to Hemerocallis Citrina Baroni

Hemerocallis citrina Baroni, a daylily (an angiosperm of the family Liliaceae), is hardy, drought-tolerant, and semi-shade-tolerant, and has low soil requirements. The plants are generally tall, with 7 to 20 green leaves that are 50 to 130 cm long and 6 to 25 mm wide. It takes about 40 to 60 days from flowering to seed maturity, and the flowering and fruiting period runs from May to September. Before blooming, the fruits of Hemerocallis citrina Baroni can be used as a vegetable and medicinal herb, and the fresh fruits can also be canned and eaten. After blooming, the economic value of Hemerocallis citrina Baroni decreases sharply, and it has fewer uses. A picture of Hemerocallis citrina Baroni is shown in Figure 8.

References

- Wang, Q.F.; Cheng, M.; Huan, S.; Cai, Z.; Zhang, J.; Yuan, H. A deep learning approach incorporating YOLO v5 and attention mechanisms for field real-time detection of the invasive weed Solanum rostratum Dunal seedlings. Comput. Electron. Agric. 2022, 199, 107194.

- Wang, D.D.; He, J. Channel pruned YOLOV5s-based deep learning approach for rapid and accurate apple fruitlet detection before fruit thinning. Biosyst. Eng. 2021, 210, 271–281.

- Tummapudi, S.; Sadhu, S.S.; Simhadri, S.N.; Damarla, S.N.T.; Bhukya, M. Deep Learning Based Weed Detection and Elimination in Agriculture. In Proceedings of the 2023 International Conference on Inventive Computation Technologies (ICICT), Lalitpur, Nepal, 26–28 April 2023; pp. 147–151.

- Wu, D.H.; Lv, S.C.; Jiang, M. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742.

- Susa, J.B.; Nombrefia, W.C.; Abustan, A.S.; Macalisang, J.; Maaliw, R.R. Deep Learning Technique Detection for Cotton and Leaf Classification Using the YOLO Algorithm. In Proceedings of the 2022 International Conference on Smart Information Systems and Technologies (SIST), Nur-Sultan, Kazakhstan, 28–30 April 2022; pp. 1–6.

- Razfar, N.; True, J.L.; Bassiouny, R. Weed detection in soybean crops using custom lightweight deep learning models. J. Agric. Food Res. 2022, 8, 100308.

- Alrowais, F.; Mashael, M.A.; Alabdan, R.; Marzouk, R.; Hilal, A.M.; Gupta, D. Hybrid leader based optimization with deep learning driven weed detection on internet of things enabled smart agriculture environment. Comput. Electr. Eng. 2022, 104, 108411.

- Stasenko, N.; Shukhratov, I.; Savinov, M.; Shadrin, D.; Somov, A. Deep Learning in Precision Agriculture: Artificially Generated VNIR Images Segmentation for Early Postharvest Decay Prediction in Apples. Entropy 2023, 25, 987.

- Xu, W.Y.; Xu, T.; Thomasson, A.J. A lightweight SSV2-YOLO based model for detection of sugarcane aphids in unstructured natural environments. Comput. Electron. Agric. 2023, 211, 107961.

- Wu, Y.J.; Yang, Y.; Wang, X.-F.; Jian, C.; Li, X. Fig Fruit Recognition Method Based on YOLO v4 Deep Learning. In Proceedings of the 2021 18th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology (ECTI-CON), Chiang Mai, Thailand, 19–22 May 2021; pp. 303–306.

- Liu, H.Q.; Zhang, Y.; Yang, G.P. Small unopened cotton boll counting by detection with MRF-YOLO in the wild. Comput. Electron. Agric. 2023, 204, 107576.

- Alom, M.; Ali, M.Y.; Islam, M.T.; Uddin, A.H.; Rahman, W. Species classification of brassica napus based on flowers, leaves, and packets using deep neural networks. J. Agric. Food Res. 2023, 14, 100658.

- Jeevanantham, R.; Vignesh, D.; Abdul, R.A.; Angeljulie, J. Deep Learning Based Plant Diseases Monitoring and Detection System. In Proceedings of the 2023 International Conference on Sustainable Computing and Data Communication Systems (ICSCDS), Erode, India, 23–25 March 2023; pp. 360–365.

- Amin, U.; Shahzad, M.I.; Shahzad, A.; Shahzad, M.; Khan, U.; Mahmood, Z. Automatic Fruits Freshness Classification Using CNN and Transfer Learning. Appl. Sci. 2023, 13, 8087.

- Zhang, F.; Ren, F.T.; Li, J.P. Automatic stomata recognition and measurement based on improved YOLO deep learning model and entropy rate superpixel algorithm. Ecol. Inform. 2022, 68, 101521.

- Qi, C.; Gao, G.F.; Pearson, S.; Harman, H.; Chen, K.; Shu, L. Tea chrysanthemum detection under unstructured environments using the TC-YOLO model. Expert Syst. Appl. 2022, 193, 116473.

- Huo, J.Q. Research on Yellow Cauliflower Bud Target Recognition and Localization Based on Machine Vision; Shanxi Agricultural University: Shanxi, China, 2022.

- Jin, H.J.; Ma, G.Y.; Tang, M.Y.; Chen, J.M.; Zhang, Y.P.; Ge, X.F. Identifying daylily in complex environment using YOLOv7-MOCA model. IEEE Trans. Chin. Soc. Agric. Eng. 2023, 39, 181–188.

- Li, S. Classification and Recognition of Major Diseases of Brassicas Based on Faster R-CNN; Shanxi Agricultural University: Shanxi, China, 2022.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

- Han, K.; Wang, Y.H.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features from Cheap Operations. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Su, X.; Wang, Y.; Duan, S.; Ma, J. Detecting Chaos from Agricultural Product Price Time Series. Entropy 2014, 16, 6415–6433.

- Zhang, L.; Wu, L.; Liu, Y. Hemerocallis citrina Baroni Maturity Detection Method Integrating Lightweight Neural Network and Dual Attention Mechanism. Electronics 2022, 11, 2743.

- Yao, R.; Guo, C.; Deng, W.; Zhao, H. A novel mathematical morphology spectrum entropy based on scale-adaptive techniques. ISA Trans. 2022, 126, 691–702.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

- Perugachi-Diaz, Y.; Tomczak, J.M.; Bhulai, S. Deep learning for white cabbage seedling prediction. Comput. Electron. Agric. 2021, 184, 106059.

- Patel, A.; Lee, W.S.; Peres, N.A. Strawberry plant wetness detection using computer vision and deep learning. Smart Agric. Technol. 2021, 1, 100013.

- Hao, S.; Zhang, X.; Ma, X.; Sun, S.Y.; Wen, H.; Wang, J.; Bai, Q. Foreign object detection in coal mine conveyor belt based on CBAM-YOLOv5. J. China Coal Soc. 2022, 47, 4147–4156.

- Zhang, M.; Shi, H.; Zhang, Y.; Yu, Y.; Zhou, M. Deep learning-based damage detection of mining conveyor belt. Measurement 2021, 175, 109130.

- Haq, S.I.U.; Tahir, M.N.; Lan, Y. Weed Detection in Wheat Crops Using Image Analysis and Artificial Intelligence (AI). Appl. Sci. 2023, 13, 8840.

- López-Martínez, M.; Díaz-Flórez, G.; Villagrana-Barraza, S.; Solís-Sánchez, L.O.; Guerrero-Osuna, H.A.; Soto-Zarazúa, G.M.; Olvera-Olvera, C.A. A High-Performance Computing Cluster for Distributed Deep Learning: A Practical Case of Weed Classification Using Convolutional Neural Network Models. Appl. Sci. 2023, 13, 6007.

- Wang, K.; Chen, K.; Du, H.; Liu, S.; Xu, J.; Zhao, J.; Chen, H.; Liu, Y.; Liu, Y. New image dataset and new negative sample judgment method for crop pest recognition based on deep learning models. Ecol. Inform. 2022, 69, 101620.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).