Machine Learning Methods in Weather and Climate Applications: A Survey

Abstract

1. Introduction

- Limited Scope: Existing surveys predominantly focus on either short-term weather forecasting or medium-to-long-term climate prediction; comprehensive surveys that bridge these two time scales are notably absent. In addition, current investigations tend to focus narrowly on specific methods, such as simple neural networks, and neglect combinations of methods.

- Lack of Model Details: Many existing studies offer only generalized viewpoints and lack a systematic analysis of the specific models employed in weather and climate prediction. This absence creates a barrier for researchers aiming to understand the intricacies and efficacy of individual methods.

- Neglect of Recent Advances: Despite rapid developments in machine learning and computational techniques, existing surveys have not kept pace with these advancements. The paucity of information on cutting-edge technologies stymies the progression of research in this interdisciplinary field.

- Comprehensive Scope: Unlike studies that restrict their inquiry to a single temporal scale, our survey provides a comprehensive analysis that combines short-term weather forecasting with medium- and long-term climate prediction. In total, 20 models were surveyed, of which eight were selected for in-depth scrutiny. These models represent the current state of the art and serve as references for researchers. For instance, the PanGu model shows remarkable agreement with actual observations, illustrating the caliber of the models included in our analysis.

- In-Depth Analysis: This study dissects the operating mechanisms of the eight focal models, distinguishing the differences in their approaches and summarizing the commonalities in their methods through comparison. This comparison helps readers gain a deeper understanding of the efficacy and applicability of each model and provides a reference for choosing the most appropriate model for a given scenario.

- Identification of Contemporary Challenges and Future Work: The survey identifies pressing challenges currently facing the field, such as the limited dataset of chronological seasons and complex climate change effects, and suggests directions for future work, including simulating datasets and physics-based constraint models. These recommendations not only add a forward-looking dimension to our research but also act as a catalyst for further research and development in climate prediction.

2. Background

3. Related Work

3.1. Statistical Method

3.2. Physical Models

4. Taxonomy of Climate Prediction Applications

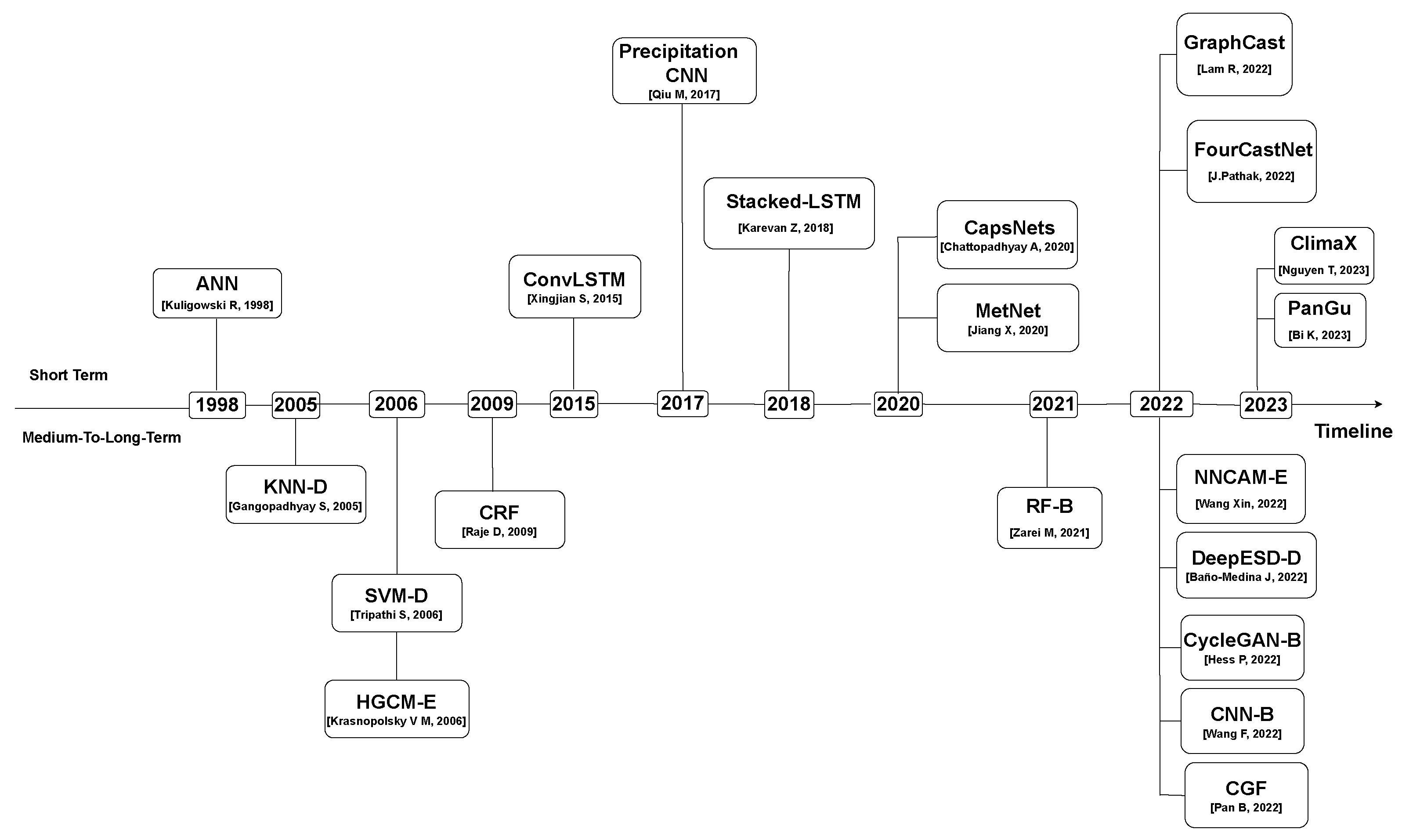

4.1. Climate Prediction Milestone Based on Machine-Learning

4.2. Classification of Climate Prediction Methods

5. Short-Term Weather Forecast

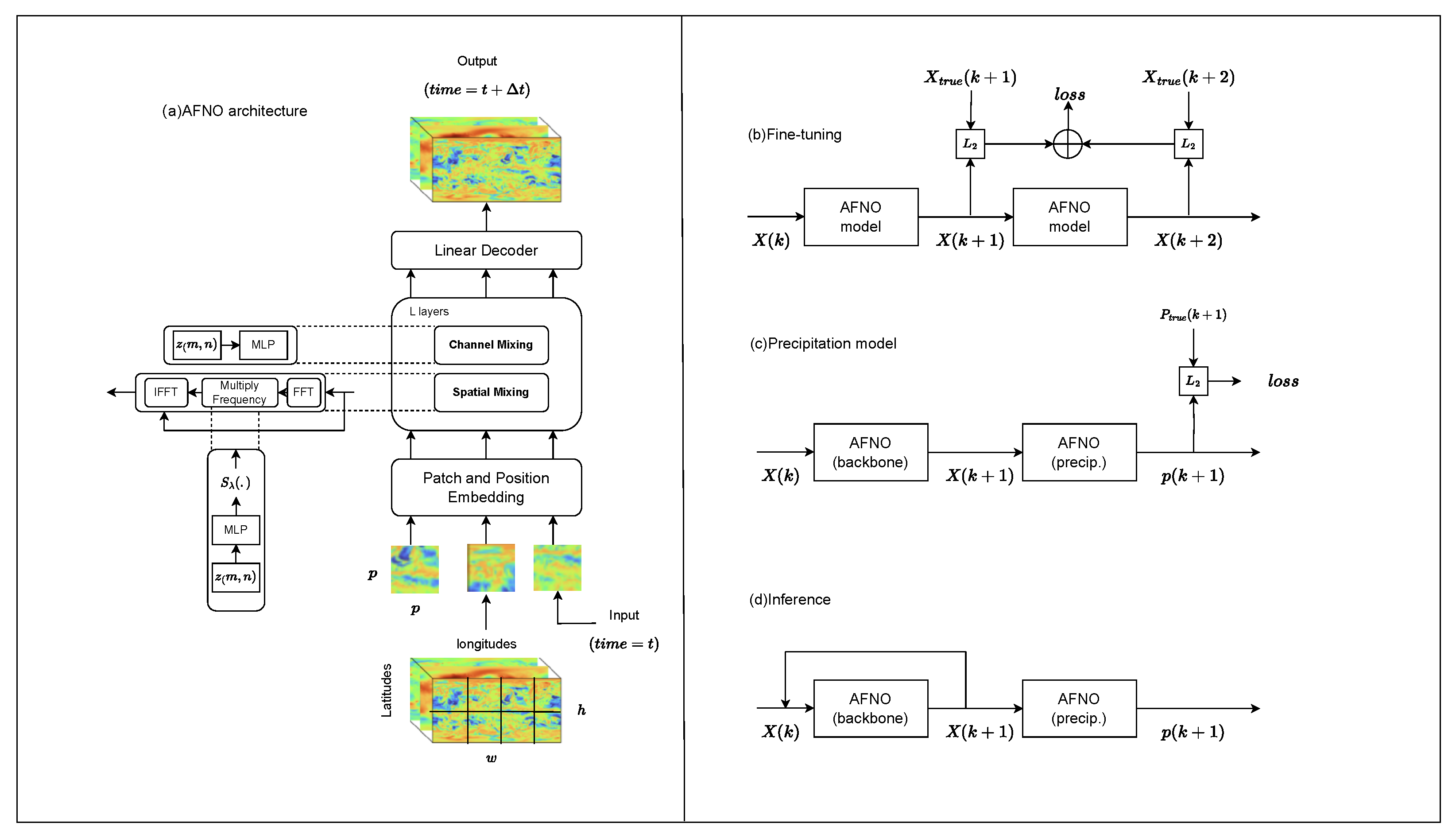

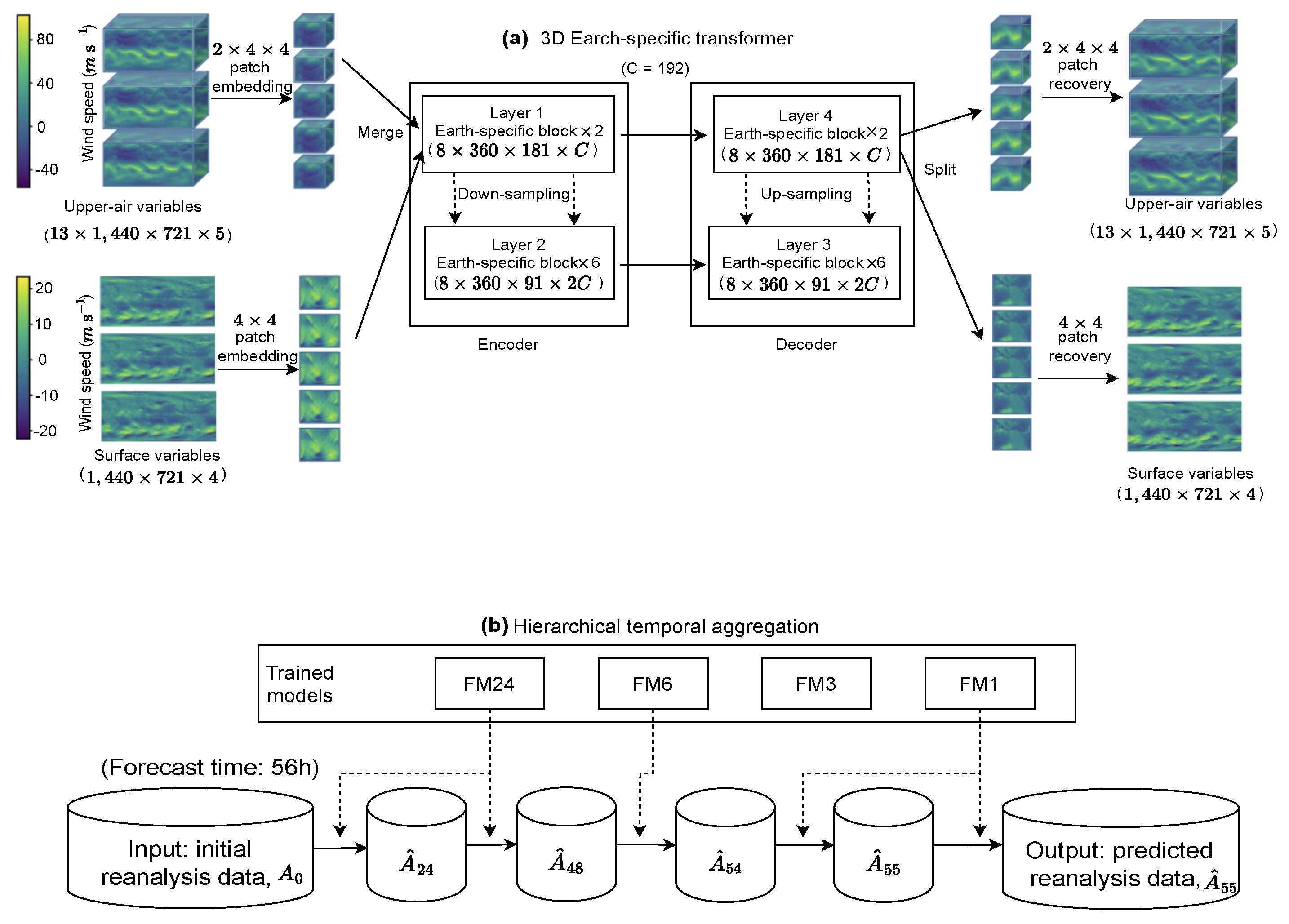

5.1. Model Design

- The Navier-Stokes Equations [73]: As the fundamental description of fluid motion, these equations govern the mechanics underlying atmospheric flow.

- The Thermodynamic Equations [74]: These equations relate the temperature, pressure, and humidity of the atmosphere, offering insights into the state and transitions of atmospheric energy.

- Shortwave and Longwave Radiation Transfer Equations: These describe the absorption, scattering, and emission of both solar and terrestrial radiation, which in turn influence atmospheric temperature and dynamics.

- Empirical or Semi-Empirical Convection Parameterization Schemes: These simulate vertical atmospheric motions initiated by local instabilities, helping to capture weather phenomena such as thunderstorms.

- Boundary-Layer Dynamics: This component concentrates on the exchanges of momentum, energy, and matter between the Earth’s surface and the atmosphere, which are crucial for the accurate representation of surface conditions in the model.

- Land Surface and Soil/Ocean Interaction Modules: These simulate the exchange of energy, moisture, and momentum between the surface and the atmosphere, while also accounting for terrestrial and aquatic influences on atmospheric conditions.
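For orientation, the momentum balance behind the first item can be written in its textbook incompressible form with constant viscosity. This is a sketch only: operational atmospheric models use compressible formulations with additional terms such as the Coriolis force. The symbols $\mathbf{v}$, $t$, $p$, and $\mathbf{g}$ follow the symbol table of this survey; $\rho$ for fluid density and $\mu$ for dynamic viscosity are conventional choices assumed here.

```latex
\rho \left( \frac{\partial \mathbf{v}}{\partial t}
          + (\mathbf{v} \cdot \nabla)\,\mathbf{v} \right)
  = -\nabla p + \mu \nabla^{2} \mathbf{v} + \rho\,\mathbf{g}
```

The left-hand side is the inertia of a fluid parcel; the right-hand side collects the pressure-gradient, viscous, and gravitational forces.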

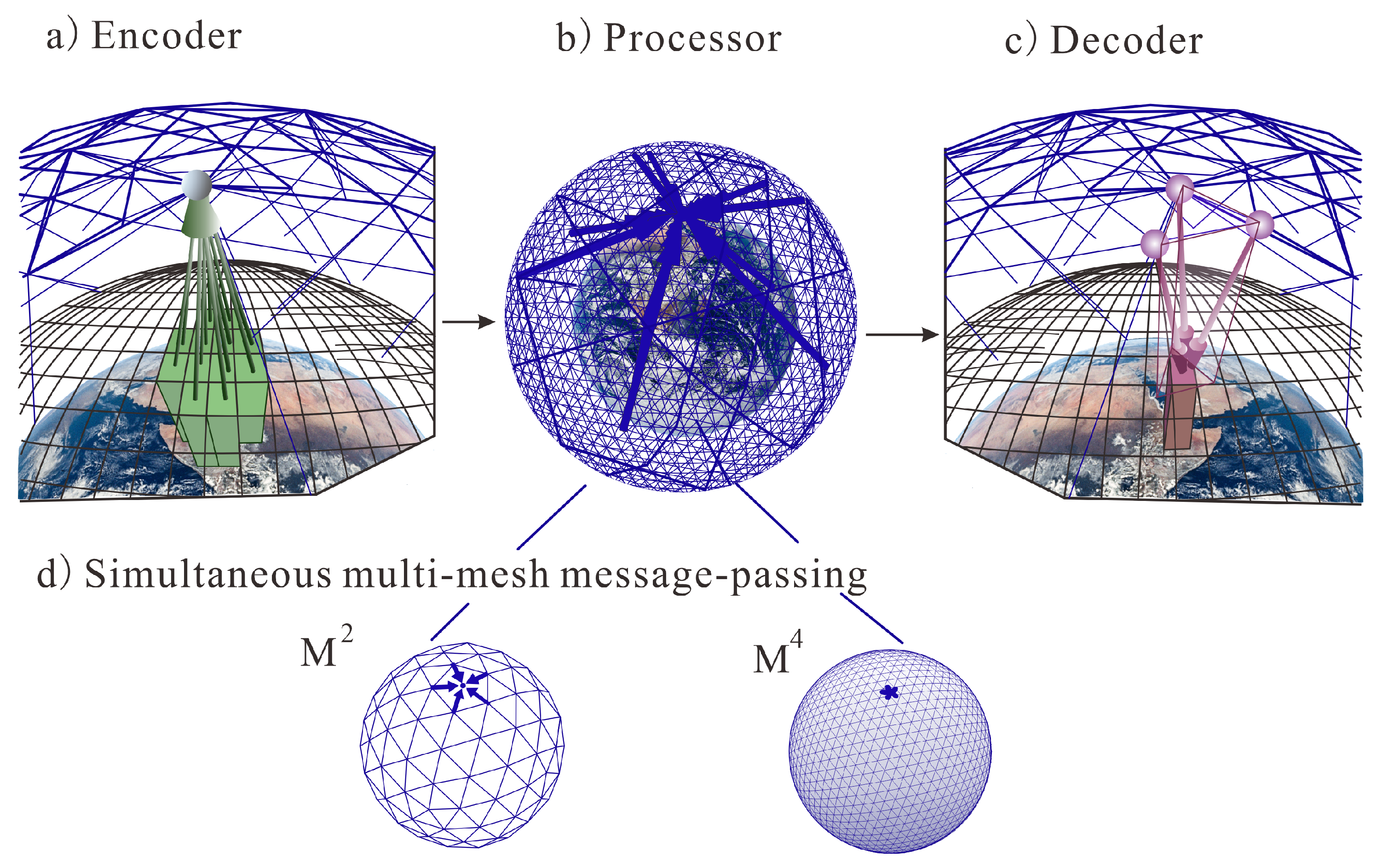

- Encoder: The encoder maps local regions of the input data (on the original latitude-longitude grid) onto the nodes of the multi-mesh graph representation. Specifically, it maps two consecutive input frames of the latitude-longitude grid, with numerous variables per grid point, into a multi-scale internal mesh representation. This mapping helps the model capture spatial dependencies in the data, allowing for more accurate predictions of future weather conditions.

- Processor: The processor performs several rounds of message passing on the multi-mesh, where edges can span short or long ranges, facilitating efficient communication without requiring an explicit hierarchy. The multi-mesh is a graph structure that represents the spatial structure of the Earth’s surface efficiently: nodes may represent specific regions of the Earth’s surface, while edges may represent spatial relationships between these regions. In this way, the model can capture spatial dependencies on a global scale and use graph neural networks (GNNs) to analyze and predict weather changes.

- Decoder: The decoder maps the multi-mesh representation back to the latitude-longitude grid as a prediction for the next time step.
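The encoder, processor, and decoder stages above can be illustrated with a deliberately small sketch. It is not the published model: the learned neural networks are replaced by fixed averaging, the grid is a flat list, and the names `encode`, `process`, `decode`, `forecast_step`, `grid_to_mesh`, and `mix` are hypothetical. It only shows how data flows from grid points to mesh nodes, across short and long edges, and back to the grid.

```python
def encode(grid_values, grid_to_mesh, n_mesh):
    """Encoder stand-in: average the grid points assigned to each mesh node."""
    sums = [0.0] * n_mesh
    counts = [0] * n_mesh
    for value, node in zip(grid_values, grid_to_mesh):
        sums[node] += value
        counts[node] += 1
    return [s / c if c else 0.0 for s, c in zip(sums, counts)]


def process(node_values, edges, rounds=2, mix=0.5):
    """Processor stand-in: repeated message passing over directed edges.

    Each round, a node blends its own value with the mean of the values
    sent along its incoming edges. Nodes without incoming edges keep
    their value.
    """
    for _ in range(rounds):
        incoming = [[] for _ in node_values]
        for src, dst in edges:
            incoming[dst].append(node_values[src])
        node_values = [
            (1 - mix) * v + mix * (sum(msgs) / len(msgs)) if msgs else v
            for v, msgs in zip(node_values, incoming)
        ]
    return node_values


def decode(node_values, grid_to_mesh):
    """Decoder stand-in: copy each mesh node's value back to its grid points."""
    return [node_values[node] for node in grid_to_mesh]


def forecast_step(grid_values, grid_to_mesh, n_mesh, edges, rounds=2):
    """One encode -> process -> decode pass, i.e. one predicted time step."""
    nodes = encode(grid_values, grid_to_mesh, n_mesh)
    nodes = process(nodes, edges, rounds=rounds)
    return decode(nodes, grid_to_mesh)


# Toy multi-mesh: short-range edges between neighbouring nodes plus one
# long-range pair (0 <-> 3) that lets distant regions exchange information.
short_edges = [(0, 1), (1, 0), (1, 2), (2, 1), (2, 3), (3, 2)]
long_edges = [(0, 3), (3, 0)]
grid_to_mesh = [0, 0, 1, 1, 2, 2, 3, 3]
next_state = forecast_step(
    [280.0, 281.0, 283.0, 284.0, 286.0, 287.0, 289.0, 290.0],
    grid_to_mesh, 4, short_edges + long_edges,
)
```

Mixing short and long edges in one edge list mirrors the point made in the processor item: information can travel far in a few rounds without an explicit hierarchy of meshes.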

5.2. Result Analysis

6. Medium-to-Long-Term Climate Prediction

6.1. Model Design

- Problem Definition: The goal is to approximate the target distribution, a task challenged by high-dimensional geospatial data, data inhomogeneity, and a large dataset.

- Model Specification:

- Random Variable z: A latent variable with a fixed standard Gaussian distribution.

- Parametric Functions: Neural networks for transforming z and approximating the target and posterior distributions.

- Objective Function: Maximization of the Evidence Lower Bound (ELBO).

- Training Procedure:

- parametric functions .

- Training Objective (Maximize ELBO) [98]: The ELBO comprises terms for reconstruction, regularization, and residual error.

- Optimization: Utilize variational inference, Monte Carlo reparameterization, and Gaussian assumptions.

- Forecasting: Generate forecasts by sampling , the likelihood of , and using the mean of for an average estimate.
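The optimization ingredients listed above (Monte Carlo reparameterization and Gaussian assumptions) can be sketched in a few lines. This is an illustration under stated assumptions, not the surveyed model: it uses the standard two-term ELBO of a variational autoencoder [98] (expected log-likelihood minus a KL regularizer against the fixed standard Gaussian prior on z) and does not model the residual-error term mentioned in the text. The function names and the `log_likelihood` callback are hypothetical.

```python
import math
import random


def reparameterize(mu, sigma, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1), element-wise.

    Sampling eps instead of z keeps z a deterministic function of
    (mu, sigma), which is what allows gradients to flow in training.
    """
    return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]


def kl_to_standard_normal(mu, sigma):
    """Closed-form KL( N(mu, diag(sigma^2)) || N(0, I) ); requires sigma > 0."""
    return 0.5 * sum(
        m * m + s * s - 1.0 - math.log(s * s) for m, s in zip(mu, sigma)
    )


def elbo_estimate(log_likelihood, mu, sigma, n_samples=16, seed=0):
    """Monte Carlo ELBO: mean log-likelihood over sampled z, minus the KL term."""
    rng = random.Random(seed)
    reconstruction = sum(
        log_likelihood(reparameterize(mu, sigma, rng)) for _ in range(n_samples)
    ) / n_samples
    return reconstruction - kl_to_standard_normal(mu, sigma)
```

In a real model, `mu` and `sigma` would be outputs of the neural networks named under Model Specification, and the estimate would be maximized with a gradient-based optimizer.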

- Two Generators: The CycleGAN model includes two generators. Generator G learns the mapping from the simulated domain to the real domain, and generator F learns the mapping from the real domain to the simulated domain [100].

- Two Discriminators: There are two discriminators, one for the real domain and one for the simulated domain. The real-domain discriminator encourages generator G to generate samples that resemble samples in the real domain, and the simulated-domain discriminator encourages generator F to generate samples that resemble samples in the simulated domain.

- Cycle Consistency Loss: To ensure that the mappings are consistent, the model enforces the following condition through a cycle consistency loss: if a sample is mapped from the simulated domain to the real domain and then mapped back to the simulated domain, the result should be similar to the original simulated sample. Similarly, if a sample is mapped from the real domain to the simulated domain and then mapped back to the real domain, the result should be similar to the original real sample.

- Training Process: The model is trained to learn the mapping between these two domains by minimizing the adversarial loss and cycle consistency loss between the generators and discriminators.

- Application to Prediction: Once trained, these mappings can be used for various tasks, such as transforming simulated precipitation data into forecasts that resemble observed data.
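The cycle consistency condition described above can be made concrete with a toy example. The sketch assumes an L1 distance, as in the original CycleGAN formulation [100], and uses simple arithmetic functions in place of trained generators; `l1_distance`, `cycle_consistency_loss`, and the example mappings are illustrative names, not part of any surveyed model.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized samples."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, simulated_batch, real_batch):
    """Penalize round trips that do not return to their starting sample.

    G maps simulated -> real and F maps real -> simulated, so
    F(G(x)) should recover x and G(F(y)) should recover y.
    """
    forward = sum(
        l1_distance(F(G(x)), x) for x in simulated_batch
    ) / len(simulated_batch)
    backward = sum(
        l1_distance(G(F(y)), y) for y in real_batch
    ) / len(real_batch)
    return forward + backward


# Stand-in "generators": G rescales and shifts, F undoes it exactly.
def G(x):
    return [2 * v + 1 for v in x]


def F(y):
    return [(v - 1) / 2 for v in y]


simulated = [[1.0, 2.0]]
real = [[3.0, 7.0]]
perfect = cycle_consistency_loss(G, F, simulated, real)
broken = cycle_consistency_loss(G, lambda y: list(y), simulated, real)
```

Here `perfect` is zero because `F` inverts `G` exactly, while `broken` is positive because an identity mapping cannot undo `G`. During training, this term would be added to the adversarial losses with a weighting factor, as listed in the symbol table.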

- Reference Model: SPCAM. SPCAM serves as the foundational GCM and is embedded with Cloud-Resolving Models (CRMs) to simulate microscale atmospheric processes like cloud formation and convection. SPCAM is employed to generate “target simulation data”, which serves as the training baseline for the neural networks. The use of CRMs is inspired by recent advancements in data science, demonstrating that machine learning parameterizations can potentially outperform traditional methods in simulating convective and cloud processes.

- Neural Networks: ResDNNs, a specialized form of deep neural networks, are employed for their ability to approximate complex, nonlinear relationships. The network comprises multiple residual blocks, each containing two fully connected layers with Rectified Linear Unit (ReLU) activations. ResDNNs are designed to address the vanishing and exploding gradient problems in deep networks through residual connections, offering a stable and effective gradient propagation mechanism. This makes them well-suited for capturing the complex and nonlinear nature of atmospheric processes.

- Subgrid-Scale Physical Simulator: Traditional parameterizations often employ simplified equations to model subgrid-scale processes, which may lack accuracy. In contrast, the ResDNNs are organized into a subgrid-scale physical simulator that operates independently within each model grid cell. This simulator takes atmospheric states as inputs and outputs physical quantities at the subgrid scale, such as cloud fraction and precipitation rate.
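A single residual block of the kind described above (two fully connected layers with ReLU activations plus a skip connection) might look as follows. This is a minimal sketch in plain Python: the weights are passed in explicitly rather than learned, the placement of the skip connection after the second activation is an assumption, and the names `dense`, `relu`, and `residual_block` are illustrative.

```python
def relu(v):
    """Rectified Linear Unit applied element-wise."""
    return [max(0.0, x) for x in v]


def dense(v, weights, bias):
    """Fully connected layer: one output per weight row, plus its bias."""
    return [
        sum(w * x for w, x in zip(row, v)) + b
        for row, b in zip(weights, bias)
    ]


def residual_block(x, w1, b1, w2, b2):
    """Two dense + ReLU layers whose output is added back to the input.

    The skip connection (x + h) gives gradients a direct path through
    the block, which is the mechanism credited with stabilizing deep
    networks. Input and output widths must match for the addition.
    """
    h = relu(dense(x, w1, b1))
    h = relu(dense(h, w2, b2))
    return [xi + hi for xi, hi in zip(x, h)]


identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
no_bias = [0.0, 0.0]
```

With all-zero weights the block reduces to the identity mapping, which illustrates why stacking many such blocks does not by itself degrade the signal passed between layers.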

6.2. Result Analysis

7. Discussion

7.1. Overall Comparison

7.2. Challenge

7.3. Future Work

- Simulating datasets using statistical or physical methods.

- Combining statistical knowledge with machine learning methods to enhance the interpretability of patterns.

- Introducing physics-based constraints into deep learning models to produce more accurate and reliable results.

- Accelerating physical model prediction with machine learning knowledge.
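One common way to introduce a physics-based constraint is to add a penalty term to the training loss. The sketch below assumes a hypothetical constraint, namely that predicted precipitation must be non-negative. The constraint, the function names, and the `weight` parameter are illustrative choices and are not taken from any surveyed model.

```python
def mse(pred, target):
    """Mean squared error between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def nonnegativity_penalty(pred):
    """Mean squared size of negative predictions; zero if none are negative."""
    return sum(min(0.0, p) ** 2 for p in pred) / len(pred)


def constrained_loss(pred, target, weight=1.0):
    """Data-fit term plus a weighted penalty for violating the constraint."""
    return mse(pred, target) + weight * nonnegativity_penalty(pred)
```

Raising `weight` trades some data fit for stricter adherence to the constraint; other constraints, such as conservation of mass or energy, would follow the same pattern with a different penalty function.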

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Symbol | Definition |
|---|---|
| v | velocity vector |
| t | time |
| | fluid density |
| p | pressure |
| | dynamic viscosity |
| g | gravitational acceleration vector |
| | expectation under the variational distribution |
| z | latent variable |
| | observed data |
| | joint distribution of observed and latent variables |
| | variational distribution |
| G, F | Generators for mappings from simulated to real domain and vice versa. |
| D, D | Discriminators for real and simulated domains. |
| | Cycle consistency loss and Generative Adversarial Network loss. |
| X, Y | Data distributions for simulated and real domains. |
| | Weighting factor for the cycle consistency loss. |

References

- Abbe, C. The physical basis of long-range weather. Mon. Weather Rev. 1901, 29, 551–561.

- Zheng, Y.; Capra, L.; Wolfson, O.; Yang, H. Urban computing: Concepts, methodologies, and applications. ACM Trans. Intell. Syst. Technol. TIST 2014, 5, 1–55.

- Gneiting, T.; Raftery, A.E. Weather forecasting with ensemble methods. Science 2005, 310, 248–249.

- Agapiou, A. Remote sensing heritage in a petabyte-scale: Satellite data and heritage Earth Engine applications. Int. J. Digit. Earth 2017, 10, 85–102.

- Bendre, M.R.; Thool, R.C.; Thool, V.R. Big data in precision agriculture: Weather forecasting for future farming. In Proceedings of the 2015 1st International Conference on Next Generation Computing Technologies (NGCT), Dehradun, India, 4–5 September 2015; pp. 744–750.

- Zavala, V.M.; Constantinescu, E.M.; Krause, T. On-line economic optimization of energy systems using weather forecast information. J. Process Control 2009, 19, 1725–1736.

- Nurmi, V.; Perrels, A.; Nurmi, P.; Michaelides, S.; Athanasatos, S.; Papadakis, M. Economic value of weather forecasts on transportation–Impacts of weather forecast quality developments to the economic effects of severe weather. EWENT FP7 Project. 2012, Volume 490. Available online: http://virtual.vtt.fi/virtual/ewent/Deliverables/D5/D5_2_16_02_2012_revised_final.pdf (accessed on 8 September 2023).

- Russo, J.A., Jr. The economic impact of weather on the construction industry of the United States. Bull. Am. Meteorol. Soc. 1966, 47, 967–972.

- Badorf, F.; Hoberg, K. The impact of daily weather on retail sales: An empirical study in brick-and-mortar stores. J. Retail. Consum. Serv. 2020, 52, 101921.

- De Freitas, C.R. Tourism climatology: Evaluating environmental information for decision making and business planning in the recreation and tourism sector. Int. J. Biometeorol. 2003, 48, 45–54.

- Smith, K. Environmental Hazards: Assessing Risk and Reducing Disaster; Routledge: London, UK, 2013.

- Hammer, G.L.; Hansen, J.W.; Phillips, J.G.; Mjelde, J.W.; Hill, H.; Love, A.; Potgieter, A. Advances in application of climate prediction in agriculture. Agric. Syst. 2001, 70, 515–553.

- Guedes, G.; Raad, R.; Raad, L. Welfare consequences of persistent climate prediction errors on insurance markets against natural hazards. Estud. Econ. Sao Paulo 2019, 49, 235–264.

- McNamara, D.E.; Keeler, A. A coupled physical and economic model of the response of coastal real estate to climate risk. Nat. Clim. Chang. 2013, 3, 559–562.

- Kleerekoper, L.; Esch, M.V.; Salcedo, T.B. How to make a city climate-proof, addressing the urban heat island effect. Resour. Conserv. Recycl. 2012, 64, 30–38.

- Kaján, E.; Saarinen, J. Tourism, climate change and adaptation: A review. Curr. Issues Tour. 2013, 16, 167–195.

- Dessai, S.; Hulme, M.; Lempert, R.; Pielke, R., Jr. Climate prediction: A limit to adaptation. Adapt. Clim. Chang. Threshold. Values Gov. 2009, 64, 78.

- Ham, Y.-G.; Kim, J.-H.; Luo, J.-J. Deep Learning for Multi-Year ENSO Forecasts. Nature 2019, 573, 568–572.

- Howe, L.; Wain, A. Predicting the Future; Cambridge University Press: Cambridge, UK, 1993; Volume V, pp. 1–195.

- Hantson, S.; Arneth, A.; Harrison, S.P.; Kelley, D.I.; Prentice, I.C.; Rabin, S.S.; Archibald, S.; Mouillot, F.; Arnold, S.R.; Artaxo, P.; et al. The status and challenge of global fire modelling. Biogeosciences 2016, 13, 3359–3375.

- Racah, E.; Beckham, C.; Maharaj, T.; Ebrahimi Kahou, S.; Prabhat, M.; Pal, C. ExtremeWeather: A large-scale climate dataset for semi-supervised detection, localization, and understanding of extreme weather events. Adv. Neural Inf. Process. Syst. 2017, 30, 3402–3413.

- Gao, S.; Zhao, P.; Pan, B.; Li, Y.; Zhou, M.; Xu, J.; Zhong, S.; Shi, Z. A nowcasting model for the prediction of typhoon tracks based on a long short term memory neural network. Acta Oceanol. Sin. 2018, 37, 8–12.

- Ren, X.; Li, X.; Ren, K.; Song, J.; Xu, Z.; Deng, K.; Wang, X. Deep Learning-Based Weather Prediction: A Survey. Big Data Res. 2021, 23, 100178.

- Reichstein, M.; Camps-Valls, G.; Stevens, B.; Jung, M.; Denzler, J.; Carvalhais, N.; Prabhat, F. Deep learning and process understanding for data-driven Earth system science. Nature 2019, 566, 195–204.

- Stockhause, M.; Lautenschlager, M. CMIP6 data citation of evolving data. Data Sci. J. 2017, 16, 30.

- Hsieh, W.W. Machine Learning Methods in the Environmental Sciences: Neural Networks and Kernels; Cambridge University Press: Cambridge, UK, 2009.

- Krasnopolsky, V.M.; Fox-Rabinovitz, M.S.; Chalikov, D.V. New Approach to Calculation of Atmospheric Model Physics: Accurate and Fast Neural Network Emulation of Longwave Radiation in a Climate Model. Mon. Weather Rev. 2005, 133, 1370–1383.

- Krasnopolsky, V.M.; Fox-Rabinovitz, M.S.; Belochitski, A.A. Using ensemble of neural networks to learn stochastic convection parameterizations for climate and numerical weather prediction models from data simulated by a cloud resolving model. Adv. Artif. Neural Syst. 2013, 2013, 485913.

- Chevallier, F.; Morcrette, J.-J.; Chéruy, F.; Scott, N.A. Use of a neural-network-based long-wave radiative-transfer scheme in the ECMWF atmospheric model. Q. J. R. Meteorol. Soc. 2000, 126, 761–776.

- Krasnopolsky, V.M.; Fox-Rabinovitz, M.S.; Hou, Y.T.; Lord, S.J.; Belochitski, A.A. Accurate and fast neural network emulations of model radiation for the NCEP coupled climate forecast system: Climate simulations and seasonal predictions. Mon. Weather Rev. 2010, 138, 1822–1842.

- Tolman, H.L.; Krasnopolsky, V.M.; Chalikov, D.V. Neural network approximations for nonlinear interactions in wind wave spectra: Direct mapping for wind seas in deep water. Ocean Model. 2005, 8, 253–278.

- Markakis, E.; Papadopoulos, A.; Perakakis, P. Spatiotemporal Forecasting: A Survey. arXiv 2018, arXiv:1808.06571.

- Box, G.E.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control; John Wiley & Sons: Hoboken, NJ, USA, 2015.

- He, Y.; Kolovos, A. Spatial and Spatio-Temporal Geostatistical Modeling and Kriging. In Wiley StatsRef: Statistics Reference Online; John Wiley & Sons: Hoboken, NJ, USA, 2015.

- Lu, H.; Fan, Z.; Zhu, H. Spatiotemporal Analysis of Air Quality and Its Application in LASG/IAP Climate System Model. Atmos. Ocean. Sci. Lett. 2011, 4, 204–210.

- Chatfield, C. The Analysis of Time Series: An Introduction, 7th ed.; CRC Press: Boca Raton, FL, USA, 2016.

- Stull, R. Meteorology for Scientists and Engineers, 3rd ed.; Brooks/Cole: Pacific Grove, CA, USA, 2015.

- Yuval, J.; O’Gorman, P.A. Machine Learning for Parameterization of Moist Convection in the Community Atmosphere Model. Proc. Natl. Acad. Sci. USA 2020, 117, 12–20.

- Gagne, D.J.; Haupt, S.E.; Nychka, D.W. Machine Learning for Spatial Environmental Data. Meteorol. Monogr. 2020, 59, 9.1–9.36.

- Xu, Z.; Li, Y.; Guo, Q.; Shi, X.; Zhu, Y. A Multi-Model Deep Learning Ensemble Method for Rainfall Prediction. J. Hydrol. 2020, 584, 124579.

- Kuligowski, R.J.; Barros, A.P. Localized precipitation forecasts from a numerical weather prediction model using artificial neural networks. Weather Forecast. 1998, 13, 1194–1204.

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. arXiv 2015, arXiv:1506.04214.

- Qiu, M.; Zhao, P.; Zhang, K.; Huang, J.; Shi, X.; Wang, X.; Chu, W. A short-term rainfall prediction model using multi-task convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Data Mining (ICDM), New Orleans, LA, USA, 18–21 November 2017; IEEE: New York, NY, USA, 2017; pp. 395–404.

- Karevan, Z.; Suykens, J.A. Spatio-temporal stacked lstm for temperature prediction in weather forecasting. arXiv 2018, arXiv:1811.06341.

- Chattopadhyay, A.; Nabizadeh, E.; Hassanzadeh, P. Analog Forecasting of extreme-causing weather patterns using deep learning. J. Adv. Model. Earth Syst. 2020, 12, e2019MS001958.

- Sønderby, C.K.; Espeholt, L.; Heek, J.; Dehghani, M.; Oliver, A.; Salimans, T.; Kalchbrenner, N. MetNet: A Neural Weather Model for Precipitation Forecasting. arXiv 2020, arXiv:2003.12140.

- Pathak, J.; Subramanian, S.; Harrington, P.; Raja, S.; Chattopadhyay, A.; Mardani, M.; Anandkumar, A. FourCastNet: A Global Data-Driven High-Resolution Weather Model Using Adaptive Fourier Neural Operators. arXiv 2022, arXiv:2202.11214.

- Lam, R.; Sanchez-Gonzalez, A.; Willson, M.; Wirnsberger, P.; Fortunato, M.; Pritzel, A.; Battaglia, P. GraphCast: Learning skillful medium-range global weather forecasting. arXiv 2022, arXiv:2212.12794.

- Bi, K.; Xie, L.; Zhang, H.; Chen, X.; Gu, X.; Tian, Q. Accurate Medium-Range Global Weather Forecasting with 3D Neural Networks. Nature 2023, 619, 533–538.

- Nguyen, T.; Brandstetter, J.; Kapoor, A.; Gupta, J.K.; Grover, A. ClimaX: A foundation model for weather and climate. arXiv 2023, arXiv:2301.10343.

- Gangopadhyay, S.; Clark, M.; Rajagopalan, B. Statistical Down-scaling using K-nearest neighbors. In Water Resources Research; Wiley Online Library: Hoboken, NJ, USA, 2005; Volume 41.

- Tripathi, S.; Srinivas, V.V.; Nanjundiah, R.S. Down-scaling of precipitation for climate change scenarios: A support vector machine approach. J. Hydrol. 2006, 330, 621–640.

- Krasnopolsky, V.M.; Fox-Rabinovitz, M.S. Complex hybrid models combining deterministic and machine learning components for numerical climate modeling and weather prediction. Neural Netw. 2006, 19, 122–134.

- Raje, D.; Mujumdar, P.P. A conditional random field–based Down-scaling method for assessment of climate change impact on multisite daily precipitation in the Mahanadi basin. In Water Resources Research; Wiley Online Library: Hoboken, NJ, USA, 2009; Volume 45.

- Zarei, M.; Najarchi, M.; Mastouri, R. Bias correction of global ensemble precipitation forecasts by Random Forest method. Earth Sci. Inform. 2021, 14, 677–689.

- Andersson, T.R.; Hosking, J.S.; Pérez-Ortiz, M.; Paige, B.; Elliott, A.; Russell, C.; Law, S.; Jones, D.C.; Wilkinson, J.; Phillips, T.; et al. Seasonal Arctic Sea Ice Forecasting with Probabilistic Deep Learning. Nat. Commun. 2021, 12, 5124.

- Wang, X.; Han, Y.; Xue, W.; Yang, G.; Zhang, G. Stable climate simulations using a realistic general circulation model with neural network parameterizations for atmospheric moist physics and radiation processes. Geosci. Model Dev. 2022, 15, 3923–3940.

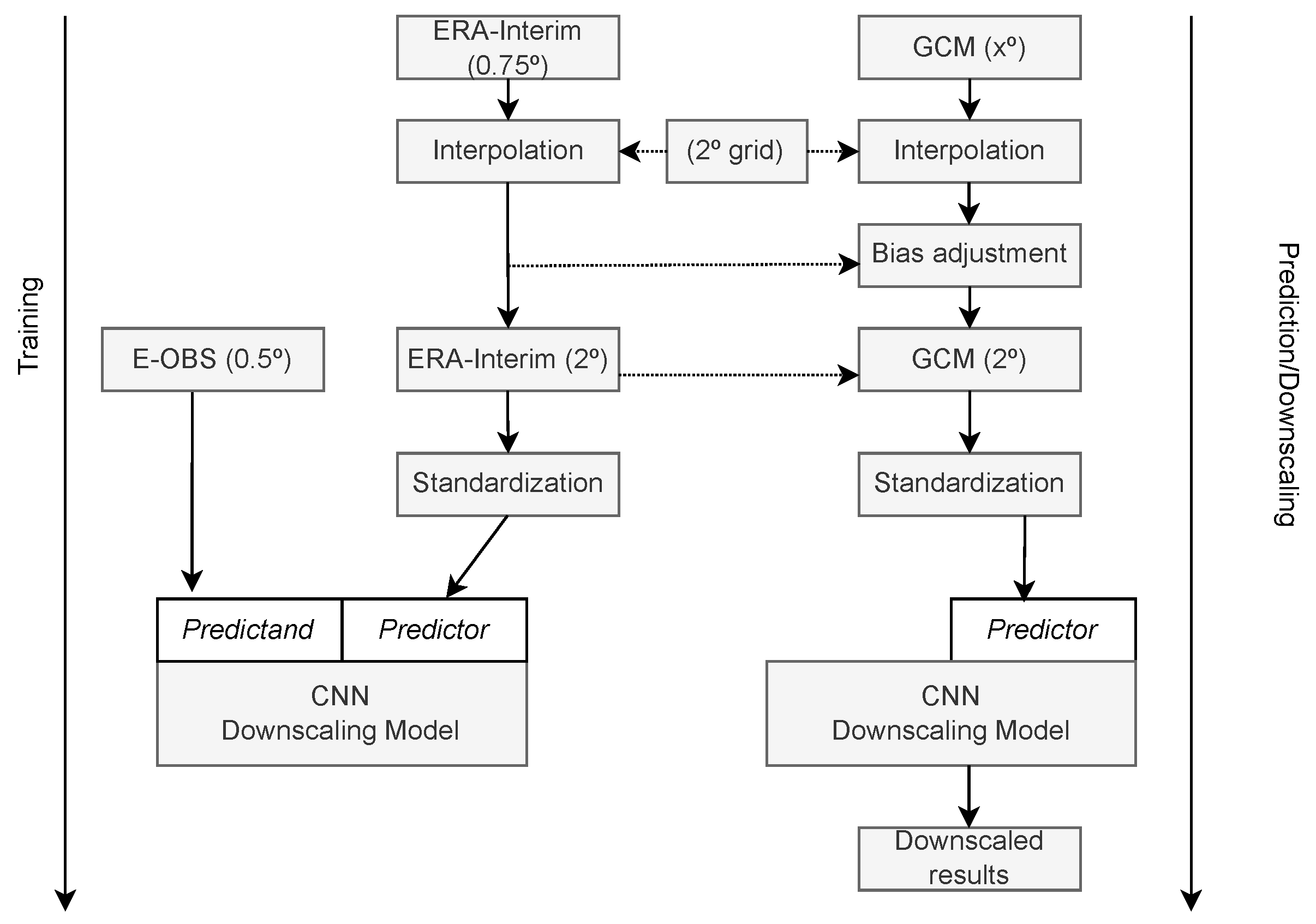

- Baño-Medina, J.; Manzanas, R.; Cimadevilla, E.; Fernández, J.; González-Abad, J.; Cofiño, A.S.; Gutiérrez, J.M. Down-scaling Multi-Model Climate Projection Ensembles with Deep Learning (DeepESD): Contribution to CORDEX EUR-44. Geosci. Model Dev. 2022, 15, 6747–6758.

- Hess, P.; Lange, S.; Boers, N. Deep Learning for bias-correcting comprehensive high-resolution Earth system models. arXiv 2022, arXiv:2301.01253.

- Wang, F.; Tian, D. On deep learning-based bias correction and Down-scaling of multiple climate models simulations. Clim. Dyn. 2022, 59, 3451–3468.

- Pan, B.; Anderson, G.J.; Goncalves, A.; Lucas, D.D.; Bonfils, C.J.W.; Lee, J. Improving Seasonal Forecast Using Probabilistic Deep Learning. J. Adv. Model. Earth Syst. 2022, 14, e2021MS002766.

- Hu, Y.; Chen, L.; Wang, Z.; Li, H. SwinVRNN: A Data-Driven Ensemble Forecasting Model via Learned Distribution Perturbation. J. Adv. Model. Earth Syst. 2023, 15, e2022MS003211.

- Chen, L.; Zhong, X.; Zhang, F.; Cheng, Y.; Xu, Y.; Qi, Y.; Li, H. FuXi: A cascade machine learning forecasting system for 15-day global weather forecast. arXiv 2023, arXiv:2306.12873.

- Lin, H.; Gao, Z.; Xu, Y.; Wu, L.; Li, L.; Li, S.Z. Conditional local convolution for spatio-temporal meteorological forecasting. Proc. AAAI Conf. Artif. Intell. 2022, 36, 7470–7478.

- Chen, K.; Han, T.; Gong, J.; Bai, L.; Ling, F.; Luo, J.J.; Chen, X.; Ma, L.; Zhang, T.; Su, R.; et al. FengWu: Pushing the Skillful Global Medium-range Weather Forecast beyond 10 Days Lead. arXiv 2023, arXiv:2304.02948.

- De Burgh-Day, C.O.; Leeuwenburg, T. Machine Learning for numerical weather and climate modelling: A review. EGUsphere 2023, 2023, 1–48.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Scherer, D.; Müller, A.; Behnke, S. Evaluation of pooling operations in convolutional architectures for object recognition. In Proceedings of the International Conference on Artificial Neural Networks 2010, Thessaloniki, Greece, 15–18 September 2010; pp. 92–101.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Liu, Y.; Racah, E.; Correa, J.; Khosrowshahi, A.; Lavers, D.; Kunkel, K.; Wehner, M.; Collins, W. Application of deep convolutional neural networks for detecting extreme weather in climate datasets. arXiv 2016, arXiv:1605.01156.

- Goodfellow, I.; Warde-Farley, D.; Mirza, M.; Courville, A.; Bengio, Y. Maxout networks. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 1319–1327.

- Marion, M.; Temam, R. Navier-Stokes equations: Theory and approximation. Handb. Numer. Anal. 1998, 6, 503–689.

- Iacono, M.J.; Mlawer, E.J.; Clough, S.A.; Morcrette, J.-J. Impact of an improved longwave radiation model, RRTM, on the energy budget and thermodynamic properties of the NCAR community climate model, CCM3. J. Geophys. Res. Atmos. 2000, 105, 14873–14890.

- Guo, Y.; Shao, C.; Su, A. Comparative Evaluation of Rainfall Forecasts during the Summer of 2020 over Central East China. Atmosphere 2023, 14, 992.

- Guo, Y.; Shao, C.; Su, A. Investigation of Land–Atmosphere Coupling during the Extreme Rainstorm of 20 July 2021 over Central East China. Atmosphere 2023, 14, 1474.

- Bauer, P.; Thorpe, A.; Brunet, G. The Quiet Revolution of Numerical Weather Prediction. Nature 2015, 525, 47–55.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. In Proceedings of the NeurIPS, Long Beach, CA, USA, 4–9 December 2017.

- Wang, H.; Zhu, Y.; Green, B.; Adam, H.; Yuille, A.; Chen, L.C. Axial-DeepLab: Stand-Alone Axial-Attention for Panoptic Segmentation. arXiv 2019, arXiv:2003.07853.

- Schmit, T.J.; Griffith, P.; Gunshor, M.M.; Daniels, J.M.; Goodman, S.J.; Lebair, W.J. A closer look at the ABI on the GOES-R series. Bull. Am. Meteorol. Soc. 2017, 98, 681–698.

- Li, Z.; Kovachki, N.; Azizzadenesheli, K.; Liu, B.; Bhattacharya, K.; Stuart, A.; Anandkumar, A. Fourier Neural Operator for Parametric Partial Differential Equations. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual Event, 3–7 May 2021.

- Guibas, J.; Mardani, M.; Li, Z.; Tao, A.; Anandkumar, A.; Catanzaro, B. Adaptive Fourier Neural Operators: Efficient token mixers for transformers. In Proceedings of the International Conference on Learning Representations, Virtual Event, 25–29 April 2022.

- Rasp, S.; Thuerey, N. Purely data-driven medium-range weather forecasting achieves comparable skill to physical models at similar resolution. arXiv 2020, arXiv:2008.08626.

- Weyn, J.A.; Durran, D.R.; Caruana, R.; Cresswell-Clay, N. Sub-seasonal forecasting with a large ensemble of deep-learning weather prediction models. arXiv 2021, arXiv:2102.05107.

- Rasp, S.; Dueben, P.D.; Scher, S.; Weyn, J.A.; Mouatadid, S.; Thuerey, N. Weatherbench: A benchmark data set for data-driven weather forecasting. J. Adv. Model. Earth Syst. 2020, 12, e2020MS002203.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the International Conference on Computer Vision, Virtual, 11–17 October 2021; IEEE: New York, NY, USA, 2021; pp. 10012–10022.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Váňa, F.; Düben, P.; Lang, S.; Palmer, T.; Leutbecher, M.; Salmond, D.; Carver, G. Single precision in weather forecasting models: An evaluation with the IFS. Mon. Weather Rev. 2017, 145, 495–502.

- IPCC. Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change; Cambridge University Press: Cambridge, UK; New York, NY, USA, 2013.

- Flato, G.; Marotzke, J.; Abiodun, B.; Braconnot, P.; Chou, S.C.; Collins, W.; Cox, P.; Driouech, F.; Emori, S.; Eyring, V.; et al. Evaluation of Climate Models. In Climate Change 2013: The Physical Science Basis. Contribution of Working Group I to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change; Cambridge University Press: Cambridge, UK; New York, NY, USA, 2013.

- Washington, W.M.; Parkinson, C.L. An Introduction to Three-Dimensional Climate Modeling; University Science Books: Beijing, China, 2005.

- Giorgi, F.; Gutowski, W.J. Regional Dynamical Down-scaling and the CORDEX Initiative. Annu. Rev. Environ. Resour. 2015, 40, 467–490.

- Randall, D.A.; Wood, R.A.; Bony, S.; Colman, R.; Fichefet, T.; Fyfe, J.; Kattsov, V.; Pitman, A.; Shukla, J.; Srinivasan, J.; et al. Climate Models and Their Evaluation. In Climate Change 2007: The Physical Science Basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change; Cambridge University Press: Cambridge, UK; New York, NY, USA, 2007.

- Taylor, K.E.; Stouffer, R.J.; Meehl, G.A. An overview of CMIP5 and the experiment design. Bull. Am. Meteorol. Soc. 2012, 93, 485–498. [Google Scholar] [CrossRef]

- Miao, C.; Shen, Y.; Sun, J. Spatial–temporal ensemble forecasting (STEFS) of high-resolution temperature using machine learning models. J. Adv. Model. Earth Syst. 2019, 11, 2961–2973. [Google Scholar]

- Mukkavilli, S.; Perone, C.S.; Rangapuram, S.S.; Müller, K.R. Distribution regression forests for probabilistic spatio-temporal forecasting. In Proceedings of the International Conference on Machine Learning (ICML), Vienna, Austria, 12–18 July 2020. [Google Scholar]

- Walker, G.; Charlton-Perez, A.; Lee, R.; Inness, P. Challenges and progress in probabilistic forecasting of convective phenomena: The 2016 GFE/EUMETSAT/NCEP/SPC severe convective weather workshop. Bull. Am. Meteorol. Soc. 2016, 97, 1829–1835. [Google Scholar]

- Kingma, D.P.; Welling, M. Auto-encoding variational bayes. arXiv 2013, arXiv:1312.6114. [Google Scholar]

- Krasting, J.P.; John, J.G.; Blanton, C.; McHugh, C.; Nikonov, S.; Radhakrishnan, A.; Zhao, M. NOAA-GFDL GFDL-ESM4 model output prepared for CMIP6 CMIP. Earth Syst. Grid Fed. 2018, 10. [Google Scholar] [CrossRef]

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232. [Google Scholar]

- Brands, S.; Herrera, S.; Fernández, J.; Gutiérrez, J.M. How well do CMIP5 Earth System Models simulate present climate conditions in Europe and Africa? Clim. Dynam. 2013, 41, 803–817. [Google Scholar] [CrossRef]

- Vautard, R.; Kadygrov, N.; Iles, C. Evaluation of the large EURO-CORDEX regional climate model ensemble. J. Geophys. Res.-Atmos. 2021, 126, e2019JD032344. [Google Scholar] [CrossRef]

- Boé, J.; Somot, S.; Corre, L.; Nabat, P. Large discrepancies in summer climate change over Europe as projected by global and regional climate models: Causes and consequences. Clim. Dynam. 2020, 54, 2981–3002. [Google Scholar] [CrossRef]

- Baño-Medina, J.; Manzanas, R.; Gutiérrez, J.M. Configuration and intercomparison of deep learning neural models for statistical downscaling. Geosci. Model Dev. 2020, 13, 2109–2124.

- LeCun, Y.; Bengio, Y. Convolutional Networks for Images, Speech, and Time-Series. Handb. Brain Theory Neural Netw. 1995, 336, 1995.

- Dee, D.P.; Uppala, S.M.; Simmons, A.J.; Berrisford, P.; Poli, P.; Kobayashi, S.; Andrae, U.; Balmaseda, M.A.; Balsamo, G.; Bauer, D.P.; et al. The ERA-Interim reanalysis: Configuration and performance of the data assimilation system. Q. J. Roy Meteor. Soc. 2011, 137, 553–597.

- Cornes, R.C.; van der Schrier, G.; van den Besselaar, E.J.M.; Jones, P.D. An Ensemble Version of the E-OBS Temperature and Precipitation Data Sets. J. Geophys. Res.-Atmos. 2018, 123, 9391–9409.

- Baño-Medina, J.; Manzanas, R.; Gutiérrez, J.M. On the suitability of deep convolutional neural networks for continental-wide downscaling of climate change projections. Clim. Dynam. 2021, 57, 1–11.

- Maraun, D.; Widmann, M.; Gutiérrez, J.M.; Kotlarski, S.; Chandler, R.E.; Hertig, E.; Wibig, J.; Huth, R.; Wilcke, R.A. VALUE: A framework to validate downscaling approaches for climate change studies. Earth's Future 2015, 3, 1–14.

- Vrac, M.; Ayar, P. Influence of Bias Correcting Predictors on Statistical Downscaling Models. J. Appl. Meteorol. Clim. 2016, 56, 5–26.

- Williams, P.M. Modelling Seasonality and Trends in Daily Rainfall Data. In Advances in Neural Information Processing Systems 10, Proceedings of the Neural Information Processing Systems (NIPS): Denver, Colorado, USA, 1997; MIT Press: Cambridge, MA, USA, 1998; pp. 985–991. ISBN 0-262-10076-2.

- Cannon, A.J. Probabilistic Multisite Precipitation Downscaling by an Expanded Bernoulli–Gamma Density Network. J. Hydrometeorol. 2008, 9, 1284–1300.

- Schoof, J.T.; Pryor, S.C. Downscaling temperature and precipitation: A comparison of regression-based methods and artificial neural networks. Int. J. Climatol. 2001, 21, 773–790.

- Maraun, D.; Widmann, M. Statistical Downscaling and Bias Correction for Climate Research; Cambridge University Press: Cambridge, UK, 2018; ISBN 9781107588783.

- Vrac, M.; Stein, M.; Hayhoe, K.; Liang, X.-Z. A general method for validating statistical downscaling methods under future climate change. Geophys. Res. Lett. 2007, 34, L18701.

- San-Martín, D.; Manzanas, R.; Brands, S.; Herrera, S.; Gutiérrez, J.M. Reassessing Model Uncertainty for Regional Projections of Precipitation with an Ensemble of Statistical Downscaling Methods. J. Clim. 2017, 30, 203–223.

- Quesada-Chacón, D.; Barfus, K.; Bernhofer, C. Climate change projections and extremes for Costa Rica using tailored predictors from CORDEX model output through statistical downscaling with artificial neural networks. Int. J. Climatol. 2021, 41, 211–232.

| Time Scale | Domains | Applications |
|---|---|---|
| Short Term | Agriculture | The timing for sowing and harvesting; irrigation and fertilization plans [5]. |
| | Energy | Predicts output for wind and solar energy [6]. |
| | Transportation | Road traffic safety; rail transport; aviation and maritime industries [7]. |
| | Construction | Project plans and timelines; safe operations [8]. |
| | Retail and Sales | Adjusts inventory based on weather forecasts [9]. |
| | Tourism and Entertainment | Operations of outdoor activities and tourist attractions [10]. |
| | Environment and Disaster Management | Early warnings for floods, fires, and other natural disasters [11]. |
| Medium-to-Long Term | Agriculture | Long-term land management and planning [12]. |
| | Insurance | Preparations for future increases in types of disasters, such as floods and droughts [13]. |
| | Real Estate | Assessment of future sea-level rise or other climate-related factors [14]. |
| | Urban Planning | Water resource management [15]. |
| | Tourism | Long-term investments and planning, such as deciding which regions may become popular tourist destinations in the future [16]. |
| | Public Health | Long-term climate changes may impact the spread of diseases [17]. |

| Time Scale | Spatial Scale | Type | Model | Technology | Name | Event |
|---|---|---|---|---|---|---|
| Short-term weather prediction | Global | ML | Special DNN Models | AFNO | FourCastNet [47] | Extreme Events |
| | | | | 3D Neural Network | PanGu [49] | |
| | | | | Vision Transformers | ClimaX [50] | Temperature & Extreme Event |
| | | | | SwinTransformer | SwinVRNN [62] | Temperature & Precipitation |
| | | | | U-Transformer | FuXi [63] | |
| | | | Single DNN Models | GNN | CLCRN [64] | Temperature |
| | | | | | GraphCast [48] | |
| | | | | Transformer | FengWu [65] | Extreme Events |
| | Regional | | | | CapsNet [45] | |
| | | | | CNN | Precipitation Convolution prediction [43] | Precipitation |
| | | | | ANN | Precipitation Neural Network prediction [41] | |
| | | | | LSTM | Stacked-LSTM-Model [44] | Temperature |
| | | | Hybrid DNN Models | LSTM + CNN | ConvLSTM [42] | Precipitation |
| | | | | | MetNet [46] | |
| Medium-to-long-term climate prediction | Global | | Single DNN Models | Probabilistic deep learning | Conditional Generative Forecasting [61] | Temperature & Precipitation |
| | | ML Enhanced | | CNN | CNN-Bias-correction model [60] | Temperature & Extreme Event |
| | | | | GAN | Cycle GAN [59] | Precipitation |
| | | | | NN | Hybrid-GCM-Emulation [53] | |
| | | | | ResDNN | NNCAM-emulation [57] | |
| | Regional | | | CNN | DeepESD-Down-scaling model [58] | Temperature |
| | | | Non-Deep-Learning Model | Random forest (RF) | RF-bias-correction model [55] | Precipitation |
| | | | | Support vector machine (SVM) | SVM-Down-scaling model [52] | |
| | | | | K-nearest neighbor (KNN) | KNN-Down-scaling model [51] | |
| | | | | Conditional random field (CRF) | CRF-Down-scaling model [54] | |

| Model | Forecast Timeliness | Z500 RMSE (7 Days) | Z500 ACC (7 Days) | Training Complexity | Forecasting Speed |
|---|---|---|---|---|---|
| MetNet [46] | 8 h | - | - | 256 Google TPU accelerators (16 days of training) | A few seconds |
| FourCastNet [47] | 7 days | 595 | 0.762 | 4 A100 GPUs | 24-h forecast for 100 members in 7 s |
| GraphCast [48] | 9.75 days | 460 | 0.825 | 32 Cloud TPU v4 (21 days of training) | 10-day prediction within 1 min |
| PanGu [49] | 7 days | 510 | 0.872 | 192 V100 GPUs (16 days of training) | 24-h global prediction in 1.4 s per GPU |
| IFS [88] | 8.5 days | 439 | 0.85 | - | - |
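The Z500 RMSE and ACC columns above are reported without a formula. As a minimal sketch, assuming the cosine-latitude-weighted definitions commonly used in global forecast benchmarks (an assumption; the surveyed papers may differ in details such as the climatology or the averaging over forecast times), the two scores for a single forecast field could be computed as follows. The function names and the `[lat][lon]` list layout are illustrative, not taken from any of the surveyed models.

```python
import math


def latitude_weights(lats_deg):
    """Cosine-latitude weights, normalised so their mean over latitudes is 1."""
    cos_lat = [math.cos(math.radians(lat)) for lat in lats_deg]
    mean_cos = sum(cos_lat) / len(cos_lat)
    return [c / mean_cos for c in cos_lat]


def weighted_rmse(forecast, truth, lats_deg):
    """Latitude-weighted root-mean-square error over a [lat][lon] grid."""
    w = latitude_weights(lats_deg)
    n_lat, n_lon = len(lats_deg), len(forecast[0])
    total = 0.0
    for j in range(n_lat):
        for i in range(n_lon):
            total += w[j] * (forecast[j][i] - truth[j][i]) ** 2
    return math.sqrt(total / (n_lat * n_lon))


def weighted_acc(forecast, truth, climatology, lats_deg):
    """Latitude-weighted anomaly correlation coefficient.

    Anomalies are taken relative to a climatology field on the same grid
    (hypothetical input; how it is built is outside this sketch).
    """
    w = latitude_weights(lats_deg)
    num = f_var = t_var = 0.0
    for j in range(len(lats_deg)):
        for i in range(len(forecast[0])):
            f_anom = forecast[j][i] - climatology[j][i]
            t_anom = truth[j][i] - climatology[j][i]
            num += w[j] * f_anom * t_anom
            f_var += w[j] * f_anom ** 2
            t_var += w[j] * t_anom ** 2
    return num / math.sqrt(f_var * t_var)
```

Under these definitions a lower RMSE and an ACC closer to 1 both indicate a better forecast, which is the sense in which the rows of the table can be compared.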

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, L.; Han, B.; Wang, X.; Zhao, J.; Yang, W.; Yang, Z. Machine Learning Methods in Weather and Climate Applications: A Survey. Appl. Sci. 2023, 13, 12019. https://doi.org/10.3390/app132112019
