Abstract

Bridge inspection methods using unmanned vehicles have been attracting attention. In this study, we devised an efficient and reliable method for visually inspecting bridges using unmanned vehicles. For this purpose, we developed the BIRD U-Net algorithm, an evolution of the U-Net algorithm that analyzes images taken by unmanned vehicles. Unlike the original U-Net, BIRD U-Net is trained for 120 epochs and uses the Adam optimization algorithm. In addition, a bilateral filter was applied to highlight the damaged areas of the bridge, and a different color was used for each of the five types of abnormalities detected, such as cracks. We trained and tested the algorithm on 135,696 images of exterior bridge damage, including concrete delamination, water leakage, and exposed rebar. The analysis confirmed that the method yields an average detection recall of more than 95%. For validation, we trained the existing U-Net and ResNet algorithms with the same method and images and compared their detection recall with that of BIRD U-Net. The algorithm developed in this study is expected to yield objective results through automatic damage analysis. It can be applied to regular inspections that involve unmanned mobile vehicles in the field of bridge maintenance, thereby reducing the associated time and cost.

1. Introduction

Infrastructure, such as buildings, bridges, and paved roads, should be periodically inspected to ensure its reliability and structural integrity. Gradual stress and wear can be visually observed through cracking and depression, which can lead to failure or decay if cracks occur in important positions, such as load-supporting joints [1]. Maintaining concrete structures in Korea is difficult due to the continuous increase in the number of facilities to be managed and the decrease in the number of personnel involved. In practice, visual inspection methods are time-consuming and expensive because they require inspectors to directly observe the structure to identify damage. They also increase the risk of accidents when inspectors work on structures that are difficult to access, such as suspension bridges, dams, and bridge decks. Systematic management is particularly crucial for bridges with poor accessibility. Existing bridge maintenance systems involve manual inspections, which are performed at least once every half year in accordance with the Special Act on Safety and Maintenance. Visual inspections involve examining the exterior of the structure [2]. However, this is inefficient in terms of time and cost because separate inspection equipment, such as ladder trucks and articulated aerial lifts, is required in environments with poor accessibility. Various studies to develop methods for efficiently inspecting and maintaining structures are underway. While AI crack detection can be a powerful and efficient way to monitor the structural health of bridges, this approach has some drawbacks and difficulties. Image quality can vary due to factors such as weather conditions, lighting, and camera specifications, and poor-quality images may reduce the accuracy of crack detection algorithms [3]. UAVs can capture a large volume of data, which may require significant storage and processing capabilities. Handling and analyzing these data can be challenging, especially for large bridge networks. In addition, annotating UAV images for crack detection can be a time-consuming and costly process, because cracks may need to be marked manually to create a labeled dataset for training AI models. In particular, research on defect inspection in bridges and concrete structures using unmanned mobile vehicles is being actively conducted at home and abroad. In this paper, we aimed to solve existing problems in the industry, as well as those identified in a theoretical review of facility safety diagnosis and maintenance, and to identify the current status of bridges. Furthermore, we propose an objective damage-type classification method that applies artificial intelligence algorithms to bridge image data acquired using drones. The method is based on BIRD U-Net, which complements the U-Net algorithm.

2. Previous Research

2.1. Reviewing Prior Research

Prior research on bridge safety inspection using drones has been conducted. Lovelace et al. (2015) [4] used an unmanned aerial vehicle (UAV) to visually inspect bridges and acquired images of bridge defects using infrared and conventional cameras. Chanda et al. (2014) [5] proposed an automated bridge inspection approach that utilizes wavelet-based image features in conjunction with support vector machines to identify cracks in bridges and inspect bridge conditions. Irizarry et al. (2012) [6] evaluated the use of drone technology as a safety inspection tool and proposed autonomous navigation, voice interaction, a high-resolution camera, and a collaborative user interface environment for unmanned vehicles as a tool for safety checks by managers at construction sites. De Melo et al. (2017) [7] reviewed the applicability of unmanned aerial systems (UASs) for safety inspections and developed a set of procedures and guidelines for collecting, processing, and analyzing safety requirements using UAS visual information for safety management. Jung et al. (2016) [8] proposed a smart maintenance plan utilizing advanced convergence technologies, such as drones, IoT, and big data, considering that aging infrastructure threatens safety and increases maintenance demand. Lee et al. (2017) [9] found that an overhaul of the Saemangeum sea wall, a large-scale facility, took two to three months for a conventional visual inspection but one or two days for a drone inspection. Kang et al. (2021) [10] performed 3D mapping using drones to estimate the amount of construction work required because existing facility management methods are risky and require a large amount of time, manpower, and equipment. Their method achieved an accuracy rate of 90.5% compared to the pre-planned amount of construction work.

Wu et al. (2023) [11] introduced an enhanced U-Net network that incorporates multi-scale feature prediction fusion. Additionally, they introduced an improved parallel attention module to enhance the identification of concrete cracks. This multi-scale feature prediction fusion combines multiple U-Net features generated by intermediate layers for aggregated prediction, thus utilizing global information from different scales. The improved parallel attention module is used to process the U-Net decoded output of multi-scale feature prediction fusion, allowing it to assign more weight to the target region in the image and capture global contextual information to improve recognition accuracy.

Similarly, Hadinata et al. (2023) [12] focused on implementing a pixel-level convolutional neural network for detecting concrete surface damage in complex image settings. Given the presence of various types of damage on concrete surfaces, the convolutional neural network model was trained to identify three specific types of damage: cracks, spalling, and voids. The training architecture utilized both the U-Net and DeepLabV3+ models, and a comparative evaluation was performed using various evaluation metrics to assess the predicted results.

Kang et al. (2016) [13] proposed a method for maintaining facilities by photographing the bridge inspection area using a drone and checking for cracks, efflorescence, or leaks using Context Capture, as the visual inspection of bridges using manpower and mounted vehicles is inefficient. Similarly, studies on facility maintenance using drones have shown that existing methods of bridge safety inspection and diagnostic surveys are inefficient. As an alternative, drones are being used. However, research on objectively classifying and detecting bridge damage based on the acquired data is lacking. Jeon et al. (2019) [14] noted that deep learning algorithms are open-sourced by global IT companies such as Google, making them readily available to anyone, and can be used by both industry and academia to solve a variety of problems across a range of industries, including civil engineering, manufacturing, steel, automotive, and infrastructure businesses. However, because most of the research in civil engineering aims to identify accident hazards, perform real-time situation analysis, and locate defects based on data acquired from CCTV or fixed sensors, the emphasis is on research and development by utilizing various types of data in the field of civil engineering. Kim et al. (2021) [15] proposed a multi-damage-type detection system for bridge structures using a CNN- and pixel-based watershed region segmentation algorithm considering the problems in objectivity and efficiency arising from trained inspectors making visual judgments in the field during structure safety inspection under current methods. Hong et al. (2021) [16] proposed a deep learning-based image preprocessing and bridge damage object automation method for automating bridge damage inspection, considering that bridge surface defects are manually inspected and intuitive judgment is the evaluation factor.

Cardellicchio (2023) [17] endeavored to amalgamate visual inspections with automated defect recognition for evaluating the condition of heritage bridges, particularly reinforced concrete (RC) structures. The research compiled images of common defects and applied convolutional neural networks (CNNs) for defect identification. Notably, the study prioritized rendering the outcomes comprehensible and applicable by employing class activation maps (CAMs) and explainable artificial intelligence (XAI) techniques. It also assessed the efficacy of different CNN models and introduced innovative metrics for reliability. These findings hold practical significance for road management entities and public organizations involved in gauging the health of road networks.

Recently, different organizations have been promoting the use of unmanned mobile vehicles for the efficient maintenance of concrete facilities, conducting pilot operations and applicability reviews to acquire exterior images of bridge facilities. Accordingly, the inspection time for the structure exterior has been shortened compared to that of a manual inspection. However, damage inspection during a safety review is still performed by a human. Therefore, inspections using unmanned mobile vehicles need not be limited to simply photographing the exterior but can be extended to detecting damage from the acquired images. Furthermore, they can overcome the problems of manpower requirements and limited access, which limit the safety inspection of the exterior of the structure under current methods. As a key technology, this method can be a step toward an effective maintenance system by reducing the high manpower, time, and cost requirements of processing the acquired exterior images. To inspect bridge facilities using unmanned vehicles, an unmanned vehicle control system and an operation automation system suitable for the railway operation environment are required. The technologies needed for practical and efficient inspection must also be utilized, such as those that address GPS inaccuracies in shadowed areas for existing unmanned vehicles and those that automate damage detection and analysis from acquired images.

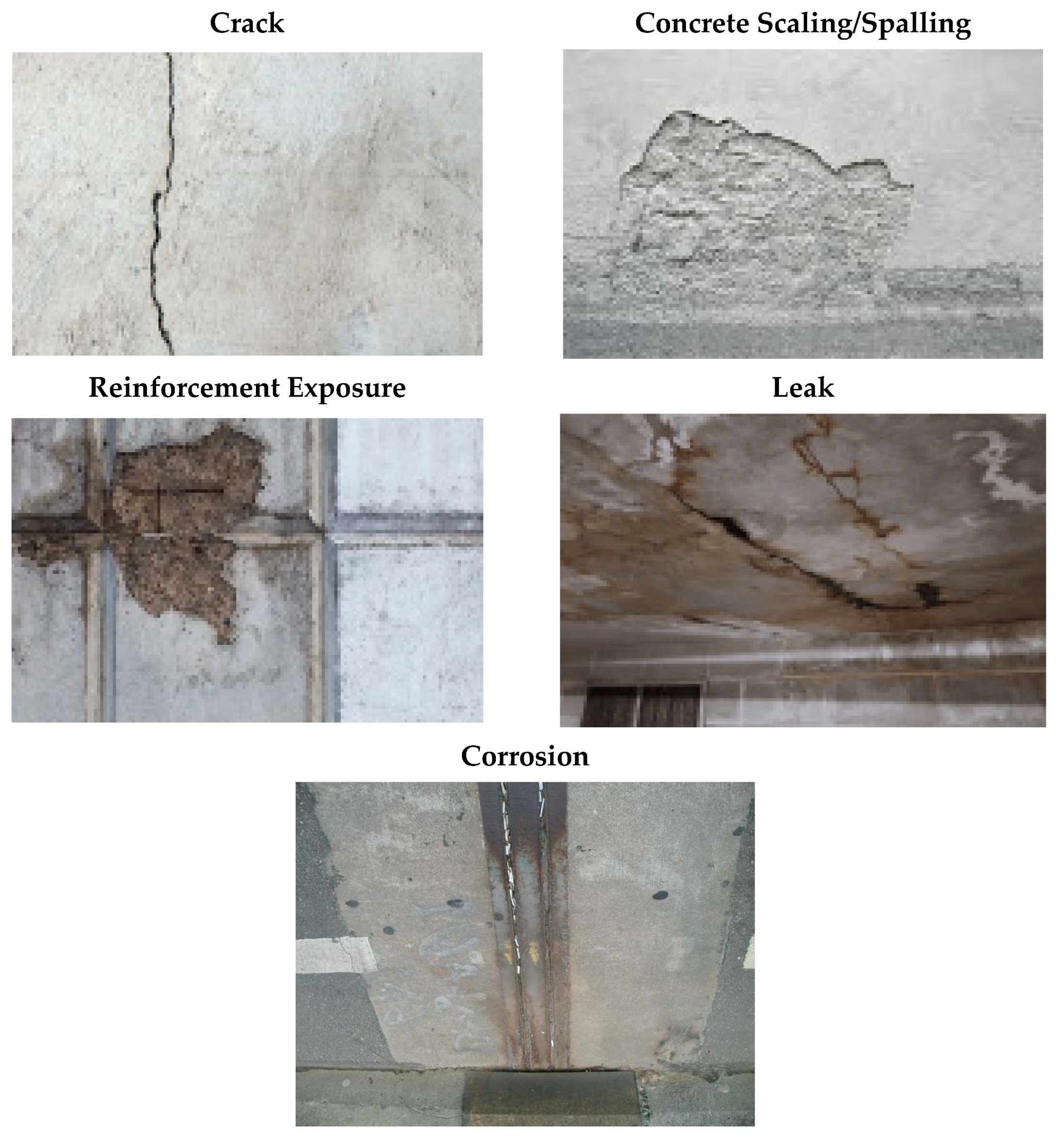

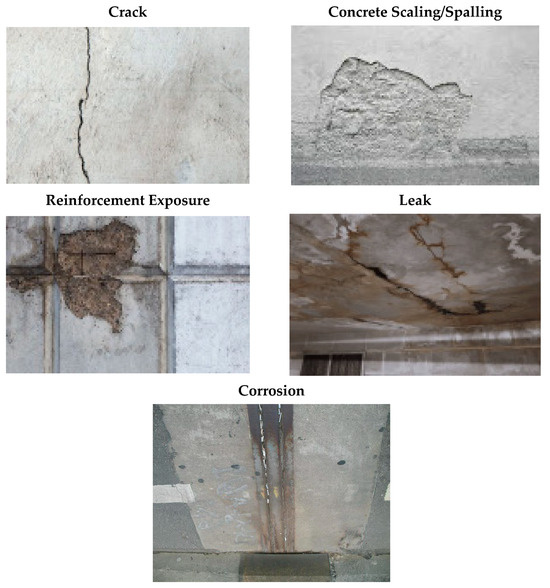

In this study, the damage items to be learned and analyzed through deep learning-based image analysis technology were first defined from the exterior damage of bridges, which represent one of the vulnerable access facilities. Figure 1 demonstrates the damage items that can be collected using unmanned mobile vehicles. The images in Figure 1 were collected by UAVs, and they were carefully chosen to highlight clear differences in each type of damage. Various types of damage can occur in bridges, such as surface cracks, concrete scaling/spalling, reinforcement exposure, or leaks. Image classes were chosen for each type to train the AI model to recognize specific characteristics of cracks, with images having been captured from different angles, distances, and perspectives. Designating image classes based on these perspectives can enhance the model’s ability to detect cracks in diverse situations.

Figure 1.

Example photos of collected data.

Deep learning-based technology allows you to visually inspect and identify cracks while reducing the need for direct human observation. Ground robots and UAVs are gaining popularity as modern infrastructure inspection and monitoring systems, owing to their advantages over traditional inspection methods, such as improved safety, efficiency, and accuracy [18]. These systems, equipped with sensors and cameras, can provide real-time data and imagery of infrastructure components, allowing for more accurate and efficient inspections [19]. These systems are expected to become increasingly crucial as technology in infrastructural inspection and monitoring advances [20].

2.2. Reviewing Unmanned Aerial Vehicles for Bridge Exterior Image Acquisition

Drones, also known as unmanned aerial vehicles, are broadly categorized as fixed-wing and rotorcraft. Fixed-wing drones can be operated for relatively long periods, have low vibration, and are operated at high altitudes, making them suitable for capturing the top of terrains or structures, such as during aerial photography. For this study, a rotary-wing drone was more suitable than a fixed-wing drone for capturing the exterior of concrete bridges, including piers, abutments, slab sides, and the underside. Drones have the advantage of being able to take off and land vertically, even in small spaces, and can acquire images at close range when photographing structures, enabling precise image acquisition for damage analysis. In addition, the damage analysis performance of the acquired images depends on their quality. Therefore, the drone was mounted on a module with hovering and attitude control functions and a gimbal to reduce vibration. The environmental conditions for bridge photography in this study required a drone with high wind resistance and a camera capable of capturing high-resolution images. We used Intel’s Falcon 8+ industrial drone and DJI’s Phantom and Mavic drones, which meet these conditions.

2.3. Image Acquisition Equipment Selection and Shooting Conditions

To detect cracks, the smallest of the damage items targeted in this study, a high-specification camera was selected as the mission equipment mounted on the drone. As shown in Figure 2, the camera used in this study, the Sony A7R, was a full-frame camera with a 36 MP image resolution capable of observing fine details. It was also equipped with a 35 mm full-frame sensor with an ISO50-25600 low-pass filter.

Figure 2.

A7R-mounted drone.

2.4. Selecting a Bridge Exterior Image Acquisition Target and Collecting Damage Images

To develop a technique to automatically detect damage in bridges, it is necessary to apply supervised learning to analyze the damage. Therefore, images were taken of the Yi Sunshin Bridge in Yeosu, Jeollanam-do, a facility that is expected to have relatively high damage due to high traffic volume. According to the Special Act on Safety Management of Facilities, facilities rated C or lower should be subjected to a regular inspection at least once every half year, a detailed inspection at least once every two years, and a detailed safety diagnosis once every five years. The visually inspected items in these facilities include cracks, concrete delamination/decay, exposed rebar, water leakage, and efflorescence. In general, cracks 0.3 mm or more and less than 0.5 mm should be less than 10% of the total cracks, and non-cracks are classified as Grade C if the surface damage area is less than 2–10%.

A total of 33,924 images were collected through drone photography, including images containing various and complex damage. Table 1 lists the quantity of each damage item among the acquired image data. Noticeably, the frequency of leakage/whitening, scaling/spalling, and corrosion is relatively low compared to that of cracks [2]. Each class has been designed for separate evaluation, ensuring that the comparison of each class among the BIRD U-Net, U-Net, and ResNet algorithms remains valid. For deep learning analysis, the larger the number of training objects, the higher the classification and detection accuracy tends to be. Therefore, we augmented the 33,924 images collected for each damage item using up–down symmetry (FLIP_TOP_BOTTOM) and left–right symmetry (FLIP_LEFT_RIGHT), and 135,696 images were ultimately used for analysis.
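The augmentation count can be checked with a small sketch. The final total of 135,696 is exactly four times 33,924, which is consistent with each image yielding four variants. The sketch below assumes these are the original, the up–down flip, the left–right flip, and both flips combined; the fourth variant is an assumption, since the text names only the two flip operations. Images are represented as plain nested lists, and the function names are illustrative.

```python
def flip_top_bottom(img):
    """Up-down symmetry: reverse the order of the rows."""
    return [row[:] for row in reversed(img)]


def flip_left_right(img):
    """Left-right symmetry: reverse the pixels within each row."""
    return [list(reversed(row)) for row in img]


def augment(img):
    """Return four variants of one image (the combined flip is an assumption)."""
    return [
        [row[:] for row in img],                # original
        flip_top_bottom(img),                   # up-down symmetry
        flip_left_right(img),                   # left-right symmetry
        flip_left_right(flip_top_bottom(img)),  # both flips (assumed fourth variant)
    ]


sample = [[1, 2],
          [3, 4]]
variants = augment(sample)

collected = 33924
augmented_total = collected * len(variants)  # 33,924 x 4 = 135,696
```

The constant names FLIP_TOP_BOTTOM and FLIP_LEFT_RIGHT in the text match the transpose constants of the Pillow imaging library, which would be a natural choice for real image files.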

Table 1.

Collected data.

No-damage data are scarce in the dataset because damage presents itself in various shapes, sizes, and depths. Owing to this diversity, the same damage can be captured multiple times from different angles, under different lighting conditions, and in different situations. In contrast, images without damage tend to have a relatively consistent appearance, so a relatively small number of images is representative. In this study, we limited the representation of no-damage data because our focus was primarily on characterizing the different types of damage.

2.5. Acquisition Image Preprocessing and Feature Point Extraction

Images acquired from a fixed mount in outdoor environments, where lighting is insufficient and focusing is difficult, are vulnerable to problems with illumination, contrast, and noise. Therefore, an image filter algorithm was applied to improve the damage analysis performance on images of the exterior of the railway bridge. Among several filter algorithms, the bilateral filter was used to remove noise from the image data so that the deep learning model could learn from and analyze data of optimal quality. This filter is nonlinear: it removes noise while preserving edges by replacing the intensity value at each pixel with a weighted average of the neighboring pixels. As a result, boundaries become more distinct and the rest of the image becomes smoother, which improves accuracy.
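The weighted average described above can be illustrated with a minimal sketch of a bilateral filter on a grayscale image stored as nested lists. The window radius and the two Gaussian widths (`sigma_space`, `sigma_range`) are illustrative assumptions, not the settings used in this study. In practice, an optimized library routine would be used instead of pure Python loops.

```python
import math


def bilateral_filter(img, radius=1, sigma_space=1.0, sigma_range=25.0):
    """Replace each pixel with a weighted average of its neighbors.

    The weight combines spatial closeness and intensity similarity, so
    neighbors across a strong edge contribute almost nothing.
    """
    height, width = len(img), len(img[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            center = img[y][x]
            weighted_sum = 0.0
            weight_total = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < height and 0 <= nx < width:
                        neighbor = img[ny][nx]
                        spatial = math.exp(-(dx * dx + dy * dy) / (2 * sigma_space ** 2))
                        similarity = math.exp(-((neighbor - center) ** 2) / (2 * sigma_range ** 2))
                        weight = spatial * similarity
                        weighted_sum += weight * neighbor
                        weight_total += weight
            out[y][x] = weighted_sum / weight_total
    return out


# A noisy step edge: dark region (about 10) next to a bright region (about 200).
noisy_edge = [[10, 12, 10, 200, 198, 200] for _ in range(4)]
smoothed = bilateral_filter(noisy_edge)
```

In this toy input, the small fluctuations inside each region are averaged out, while the jump between the dark and bright regions remains sharp because pixels on the other side of the edge receive negligible weight.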

3. Damage Detection Methods

3.1. Review of Deep Learning-Based Analytics

We studied the ResNet and U-Net algorithms, which are two of the most commonly used artificial neural network techniques for deep learning image analysis. Each algorithm can be analyzed in two ways. The LH-RCNN technique detects damage in the image in the form of a box based on the damage data trained by the detection method. For example, in the case of a crack, the range covered by the crack is detected as a box region, and further analysis is required to analyze the exact damage area. Lu et al. (2019) [21] proposed a novel two-stage detection method for faster and more accurate analysis by combining the RoI-based analysis techniques of Faster-RCNN and R-FCN. A study was conducted to significantly improve the analysis speed based on ResNet while maintaining the analysis performance of the existing RCNN. Bui et al. (2016) [22] found that converting the images to grayscale increases the CNN performance during object recognition. Similarly, Xie and Richmond (2018) [23] found that grayscale images improve the CNN performance in lung disease classification. For crack detection, Shahriar et al. (2020) [24] proposed improving images to determine the image quality and preprocessing data to improve the results.

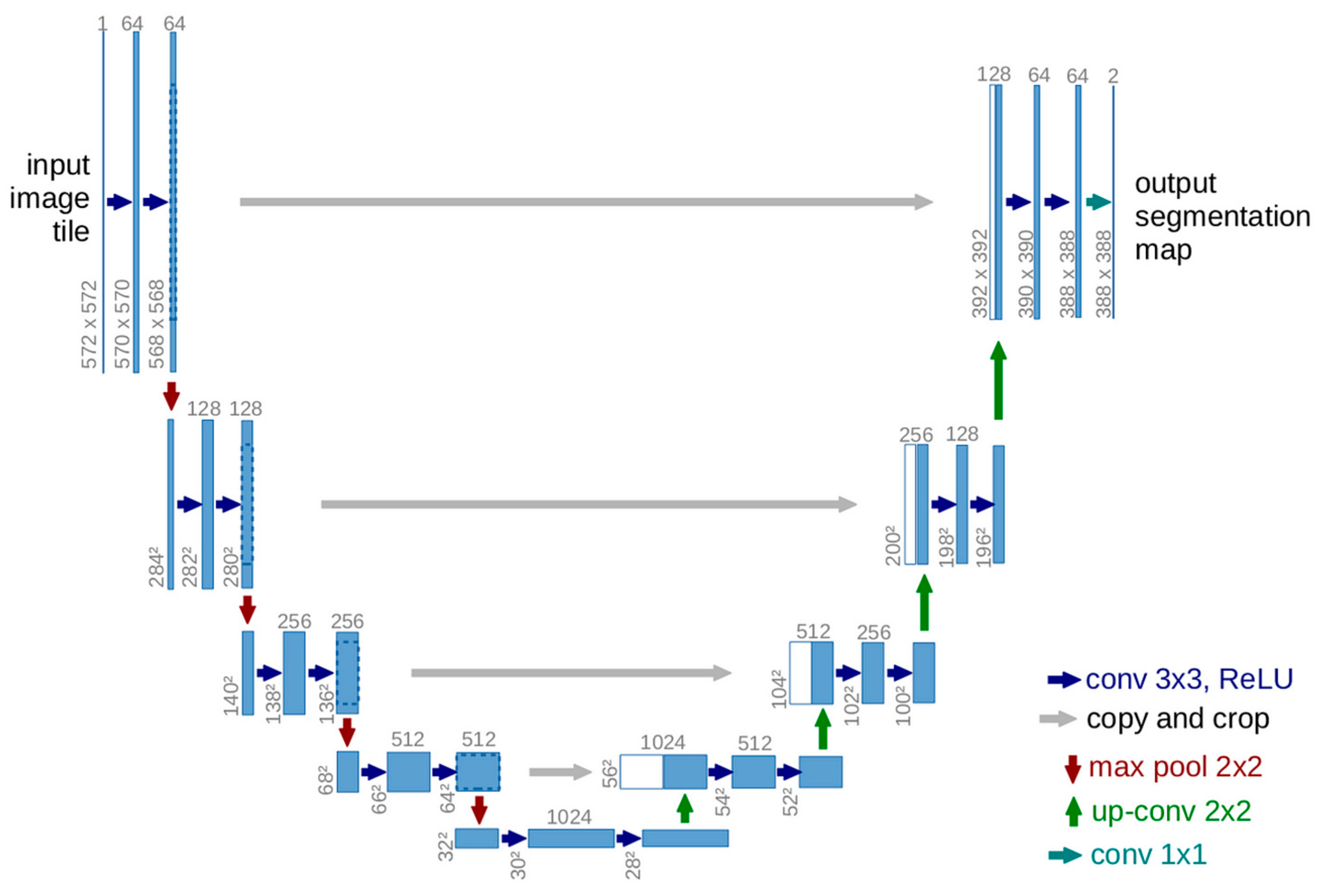

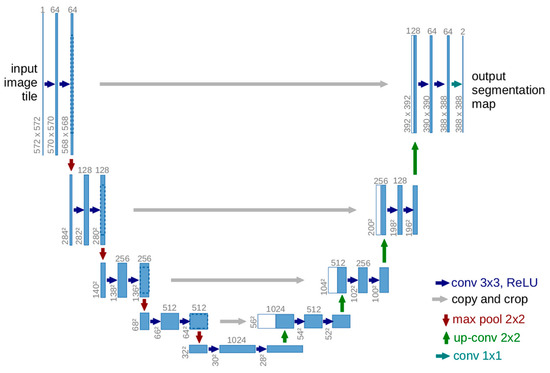

The U-Net method is an end-to-end fully convolutional network-based model proposed for image segmentation in the medical field. Image work in the medical field previously relied only on expert analysis, but with the advent of deep learning models, the analysis of X-rays, MRI images, and so on in the medical field is increasing with the help of deep learning. In medical research, automated microscopy experiments generate a large amount of image data, and for each image, cells and cell components are classified using semantic segmentation methods. Therefore, they achieve excellent performance on several biomedical image segmentation problems using data augmentation with only a small amount of training data by utilizing the U-Net structure. Long et al. (2015) [25] applied contemporary classification networks (AlexNet, VGGnet, and GoogLeNet) to fully convolutional networks and studied techniques to obtain fine-grained trained results through segmentation tasks. Ronneberger et al. (2015) [26] termed the U-Net technique U-Net because the shape of the algorithm configuration resembles a “U” and has the configuration shown in Figure 3. The technique consists of a symmetrical network for obtaining the overall contextual information of the image and a network for accurate localization. The network on the left is configured to detect the context of the input image with a contracting path and has a VGG-based architecture similar to FCNs. The network on the right is the expanding path, which is configured for detailed localization and combines high-dimensional up-sampling with thin-layer feature maps.

Figure 3.

U-Net architecture.

When training the actual network, because U-Net is a network for segmentation, we used Softmax on a pixel-wise basis. Softmax is an activation function used in multiclass classification in which three or more classes are classified, and it has the property of the input values all being normalized to values between 0 and 1 as the output. Moreover, the sum of the output values is always 1. Given n classes to be classified, an n-dimensional vector is input to estimate the probability of belonging to each class. Equation (1) is a Softmax that finds the probability value for every pixel, allowing the probability value for each pixel to be predicted.

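$$p_k(x) = \frac{\exp\left(a_k(x)\right)}{\sum_{k'=1}^{n} \exp\left(a_{k'}(x)\right)} \tag{1}$$

- $p_k(x)$: probability that the pixel at position $x$ belongs to class $k$;

- $n$: number of classes;

- $a_k(x)$: activation value at position x of channel k.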
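As a hedged illustration of the pixel-wise Softmax, the sketch below applies the standard definition, exp(a_k(x)) divided by the sum of exp(a_k'(x)) over all classes, independently at every pixel. The layout `activations[k][y][x]` and the function name are assumptions made for this example. Subtracting the per-pixel maximum is a common numerical-stability step and does not change the result.

```python
import math


def pixelwise_softmax(activations):
    """Convert per-class activation maps into per-class probability maps.

    activations[k][y][x] is the activation of channel (class) k at pixel (y, x).
    """
    num_classes = len(activations)
    height, width = len(activations[0]), len(activations[0][0])
    probs = [[[0.0] * width for _ in range(height)] for _ in range(num_classes)]
    for y in range(height):
        for x in range(width):
            scores = [activations[k][y][x] for k in range(num_classes)]
            peak = max(scores)  # subtracting the maximum avoids overflow in exp
            exps = [math.exp(s - peak) for s in scores]
            total = sum(exps)
            for k in range(num_classes):
                probs[k][y][x] = exps[k] / total
    return probs


# Two classes on a 1 x 2 image.
activations = [
    [[0.0, 2.0]],  # class 0
    [[0.0, 0.0]],  # class 1
]
probabilities = pixelwise_softmax(activations)
```

At the first pixel both classes have equal activations, so each receives probability 0.5. At the second pixel class 0 receives about 0.88, and the probabilities at every pixel sum to 1.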

When we compared the accuracy of U-Net and ResNet, we found that U-Net outperformed ResNet by an average of 20.7 percentage points across all types of bridge damage, as demonstrated in Section 3.4 (Analysis Results). As a result, our primary objective in this study was to enhance the original U-Net. We accomplished this through several improvements, including setting the number of epochs, applying the Adam optimization algorithm, implementing a bilateral filter, and using distinct colors to mark the different anomalies detected. The significant advantage of this algorithm is its ability to function effectively with a minimal amount of data. This is especially valuable for facilities where acquiring substantial damage data is challenging. We harnessed these improvements to devise an automated damage diagnosis method.

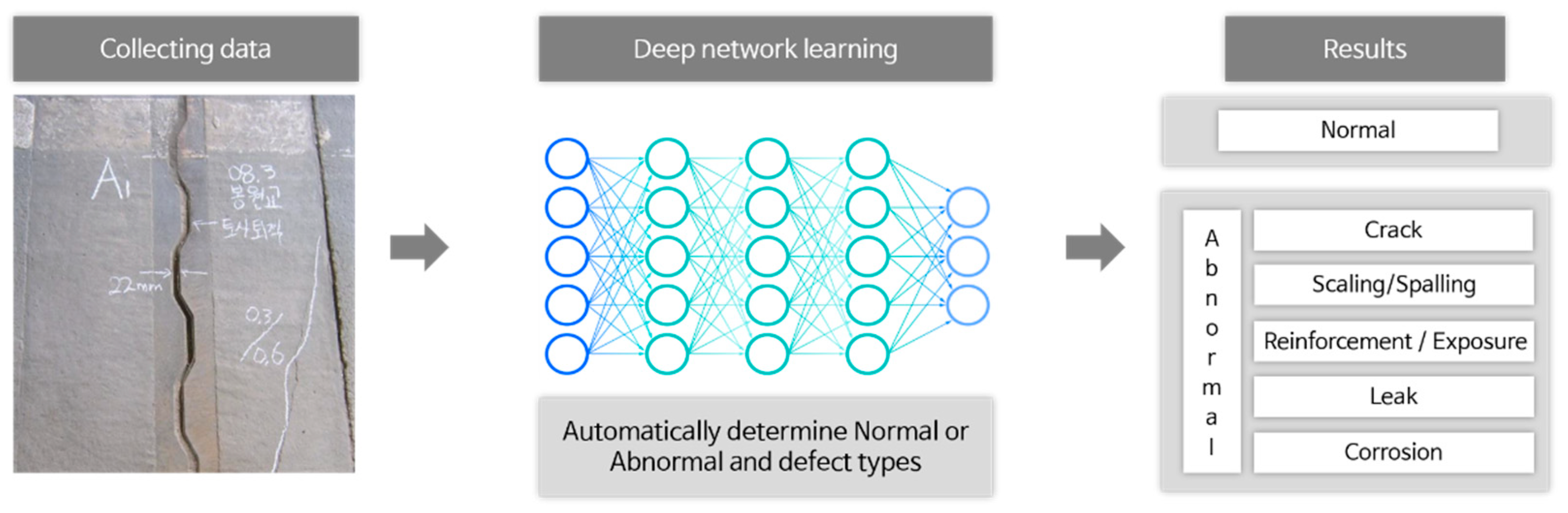

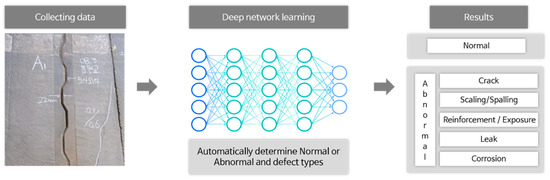

3.2. Crack Detection Algorithms

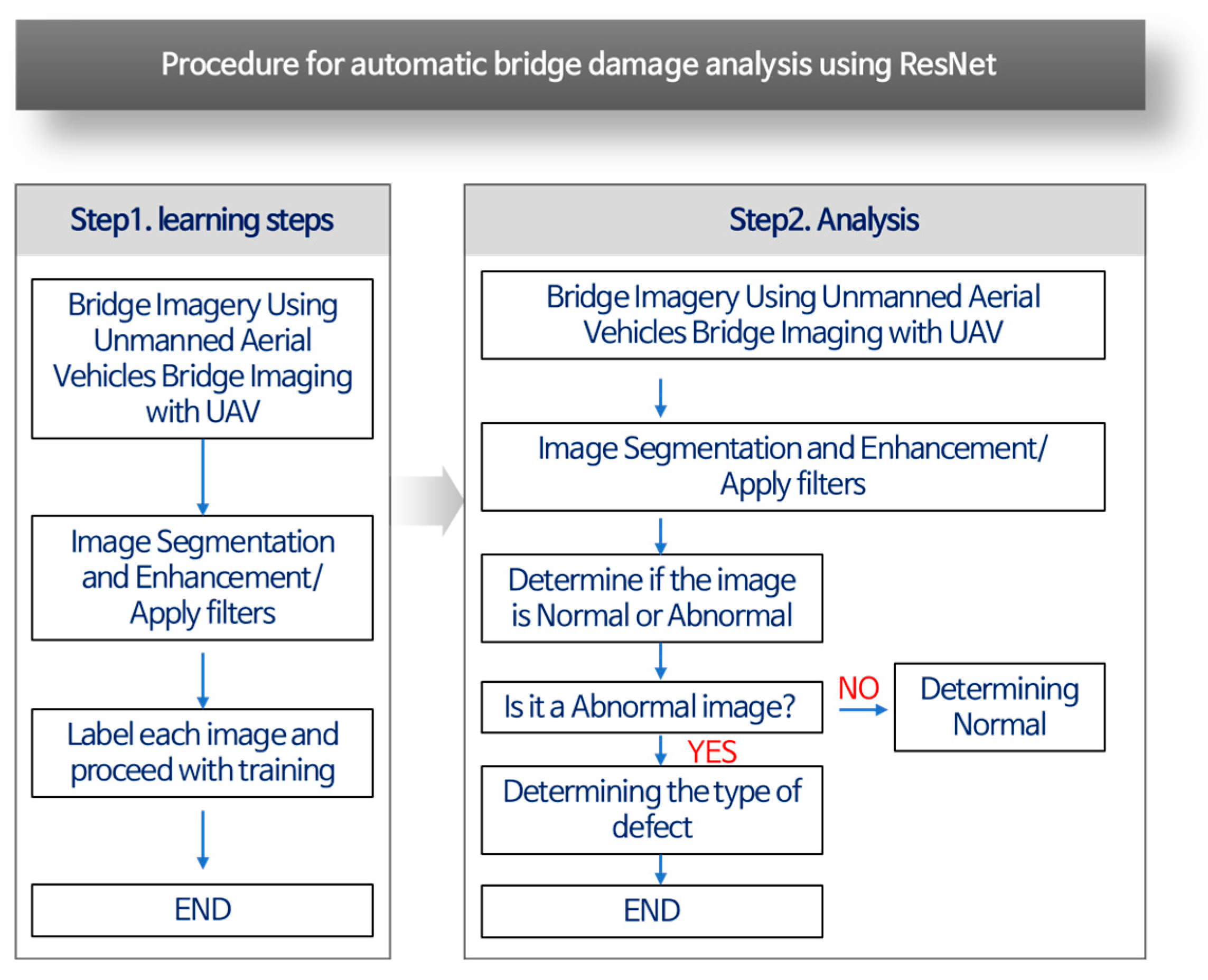

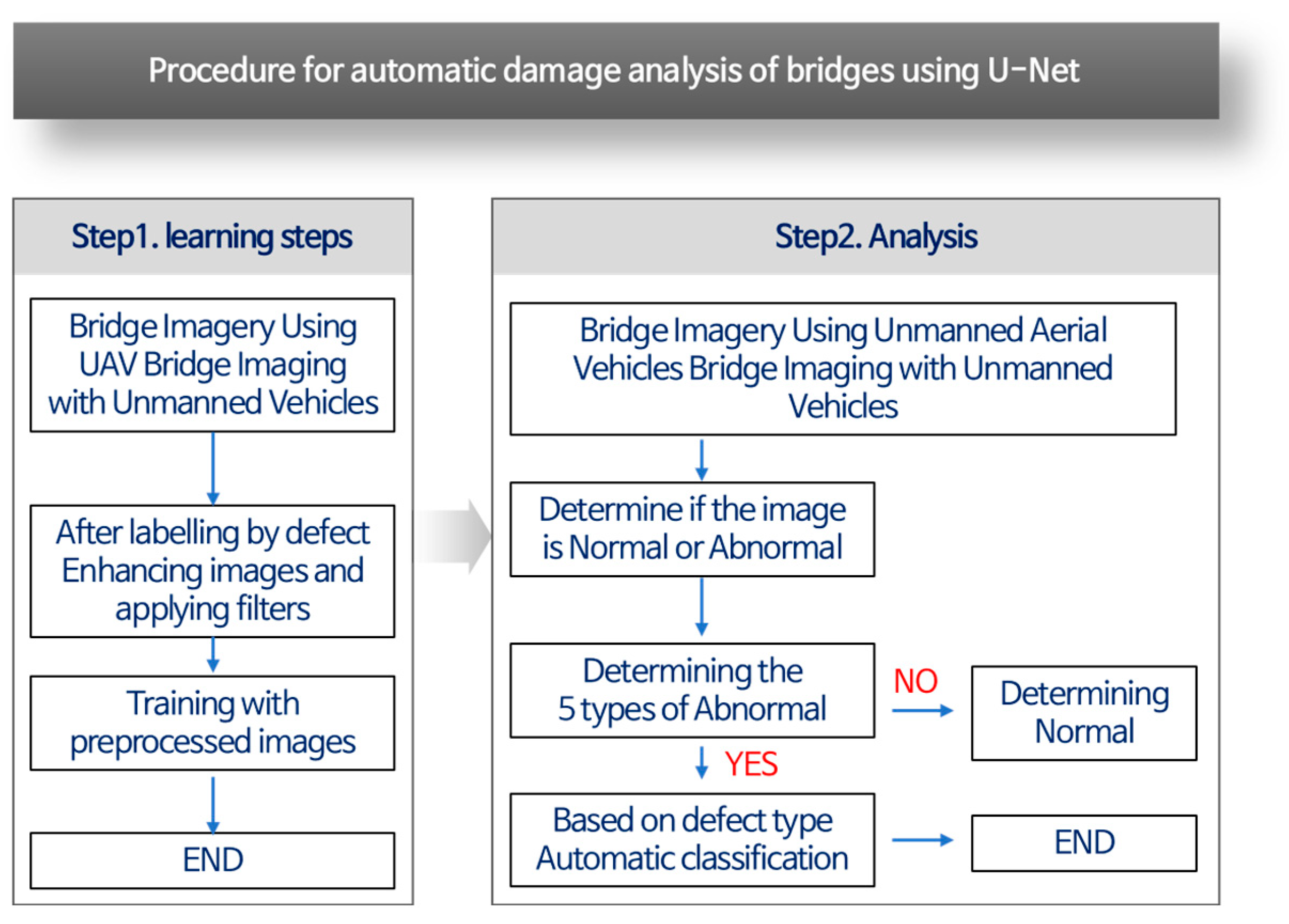

In the defect classification algorithm using U-Net (including BIRD U-Net) and ResNet, the input image was passed through all five networks in order, and if no defects were detected in all networks, the image was considered normal. In this study, the damage types were classified as cracks, delamination, efflorescence/leakage, corrosion, and exposed rebar, which are the five important defects of bridges, as previously mentioned, following the advice of current safety inspection experts. Learning other damage types, such as deterioration and plastic deformation, was difficult due to the lack of data, and finding more important defects for demonstration and commercialization was deemed important. The algorithm classifies the damage type when the input image passes through the network, as shown in Figure 4.

Figure 4.

Algorithm process.

Table 2 summarizes the experimental environment. Python 3.9.12 was used in the experiments, and the model was implemented using Torch 1.1.0, a Python-based deep learning library. The experiments were run on Windows 11 Home with an Intel i9-12900KS CPU, 64 GB of RAM, and a GeForce RTX 3090 Ti graphics card. Additional libraries used were NumPy (ver. 1.14 or later), Pandas, and Matplotlib. Among the hyperparameters used in the experiments, the number of training epochs was set to 120.

Table 2.

Experimental environments.

To improve the accuracy of classifying the five damage types, we developed an algorithm that passes through five networks that detect each damage type to finally classify the five damage types rather than detect all of the damage types by passing through one network.
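The five-network design can be sketched as follows. The image passes through one binary detector per damage type, and it is labeled normal only if none of them reports a defect. The stub detectors and their 0.5 threshold are hypothetical stand-ins for the trained networks, and the dictionary-based image is only a placeholder for real pixel data.

```python
DAMAGE_TYPES = (
    "crack",
    "delamination",
    "efflorescence/leakage",
    "corrosion",
    "exposed rebar",
)


def classify_damage(image, networks):
    """Pass the image through each per-damage network in order.

    Returns the damage types detected, or ["normal"] if no network fires.
    """
    detected = []
    for damage_type in DAMAGE_TYPES:
        if networks[damage_type](image):
            detected.append(damage_type)
    return detected if detected else ["normal"]


def make_stub_network(damage_type, threshold=0.5):
    """Hypothetical stand-in for a trained network: thresholds a precomputed score."""
    return lambda image: image.get(damage_type, 0.0) >= threshold


networks = {name: make_stub_network(name) for name in DAMAGE_TYPES}

cracked = classify_damage({"crack": 0.9, "corrosion": 0.2}, networks)
clean = classify_damage({}, networks)
```

Because each detector is independent, an image containing several kinds of damage can receive several labels, which matches the description of images with various and complex damage.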

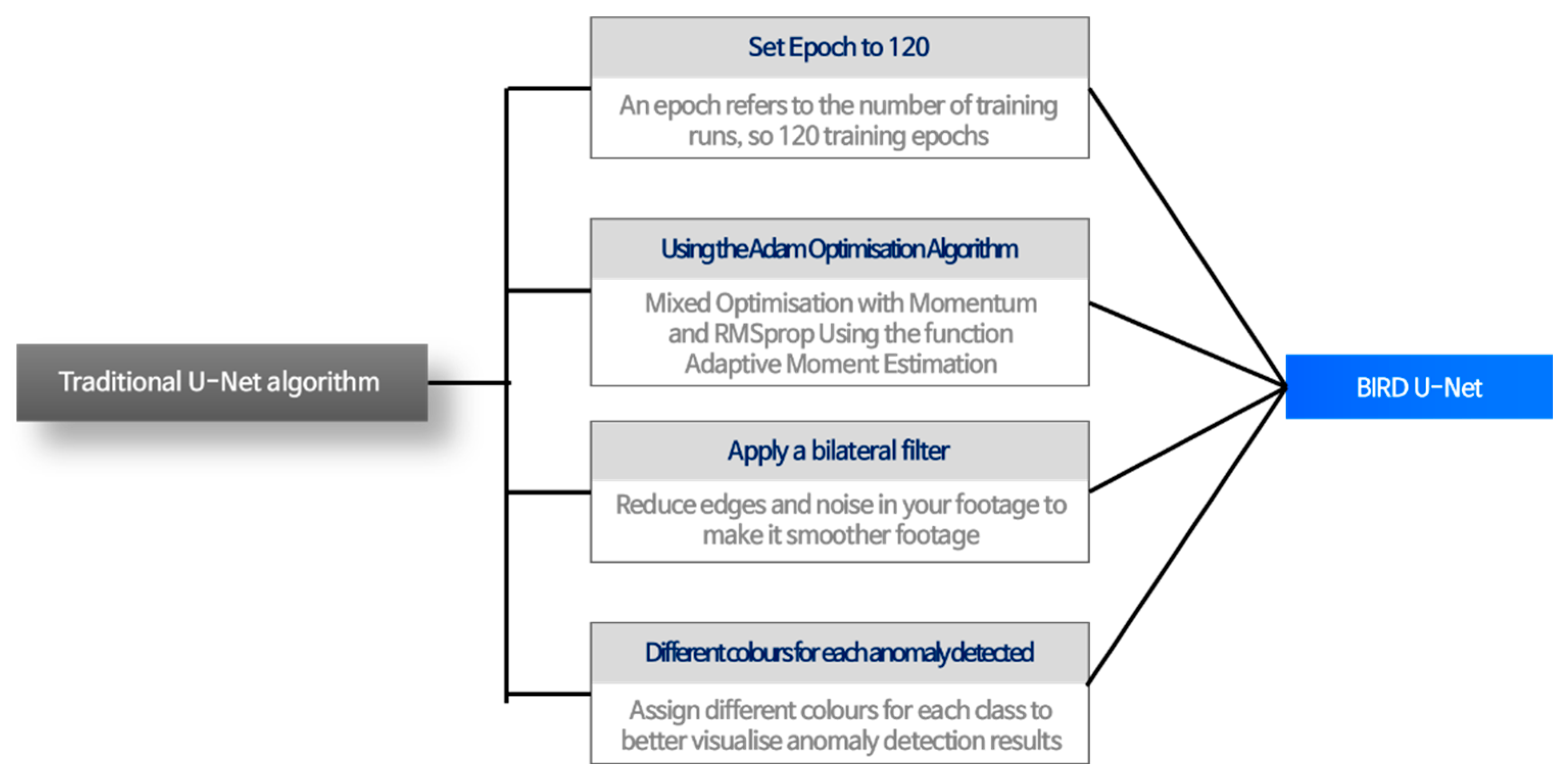

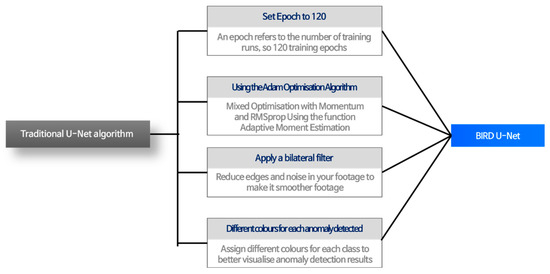

Furthermore, we added four points to the existing U-Net to implement the BIRD U-Net algorithm, which is specialized for bridges, as shown in Figure 5. First, the number of epochs was set. The learning rate determines the update rate of a parameter and ranges between 0 and 1. The default learning rate for the Adam algorithm is 0.001, which generally serves as a good starting point. In addition, the Adam algorithm has the advantage of adapting the effective learning rate to the update magnitude of each parameter. A study by Xiaodong Yu (2023) [27], similar to this paper, applied the Adam optimizer in PyTorch 1.10.0 to train the network. In that study, the learning rate was initially set to 1 × 10⁻⁴ and dynamically tuned with exponential decay, the number of epochs was set to 120, and batch_size was set to 8. The authors observed that the loss began to saturate at around epoch 20, at which point training can be terminated to prevent overfitting and improve generalization. By epoch 100, the loss had reached 0.04 and stabilized.

Figure 5.

BIRD U-Net characteristics.

Second, the Adam function was used to optimize the U-Net model. Adam is a gradient descent algorithm with an adaptive learning rate. It updates the weights of the model efficiently, which yields good performance marked by fast convergence. Third, bilateral filtering was applied to remove noise from the image and improve sharpness. This filter considers both spatial and color information when smoothing the image, which helps in detecting cracks and anomalies.
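To make the adaptive learning rate concrete, the following is a minimal sketch of the standard Adam update rule applied to a one-parameter toy problem. The defaults `beta1=0.9`, `beta2=0.999`, and `eps=1e-8` are the commonly published Adam defaults; the toy objective, the step count, and the larger demonstration learning rate are assumptions for illustration and are unrelated to the training configuration of this study.

```python
import math


def adam_minimize(grad_fn, theta, lr=0.001, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=120):
    """Minimize a function with Adam, given a function returning its gradient."""
    theta = list(theta)
    m = [0.0] * len(theta)  # running mean of gradients (first moment)
    v = [0.0] * len(theta)  # running mean of squared gradients (second moment)
    for t in range(1, steps + 1):
        grads = grad_fn(theta)
        for i, g in enumerate(grads):
            m[i] = beta1 * m[i] + (1 - beta1) * g
            v[i] = beta2 * v[i] + (1 - beta2) * g * g
            m_hat = m[i] / (1 - beta1 ** t)  # bias correction
            v_hat = v[i] / (1 - beta2 ** t)
            # The per-parameter step shrinks where squared gradients are large.
            theta[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta


# Toy objective f(x) = (x - 3)^2 with gradient 2 * (x - 3).
result = adam_minimize(lambda th: [2 * (th[0] - 3.0)], [0.0], lr=0.1, steps=500)
```

Dividing by the square root of the second-moment estimate gives each parameter its own effective step size, which is the property the text refers to as adapting the learning rate to the update intensity of each parameter.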

Finally, we used different colors for each anomaly detected, such as cracks, delamination, efflorescence, and exposed rebar. Different colors were assigned to each class to visualize the anomaly detection results clearly, making interpreting the results easier.

When a network detected a defect, it was displayed on the input image. For the five damage types, the ratio of training to test data was 8 to 2, and the training portion was further divided into training and validation data at a ratio of 8 to 2. Overall, the ratio of the number of datapoints was training data/validation data/test data = 64:16:20.
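The two nested 8:2 splits can be sketched as below. Holding out 20% for testing and then 20% of the remainder for validation gives 0.8 × 0.8 = 64% training, 0.8 × 0.2 = 16% validation, and 20% test. The function name, the fixed seed, and the use of integer IDs in place of image files are assumptions for this example.

```python
import random


def split_dataset(items, test_fraction=0.2, val_fraction=0.2, seed=0):
    """Split into train/validation/test using two nested 8:2 splits."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    test_set = shuffled[:n_test]
    remaining = shuffled[n_test:]
    n_val = round(len(remaining) * val_fraction)
    val_set = remaining[:n_val]
    train_set = remaining[n_val:]
    return train_set, val_set, test_set


train_set, val_set, test_set = split_dataset(range(1000))
split_sizes = (len(train_set), len(val_set), len(test_set))  # (640, 160, 200)
```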

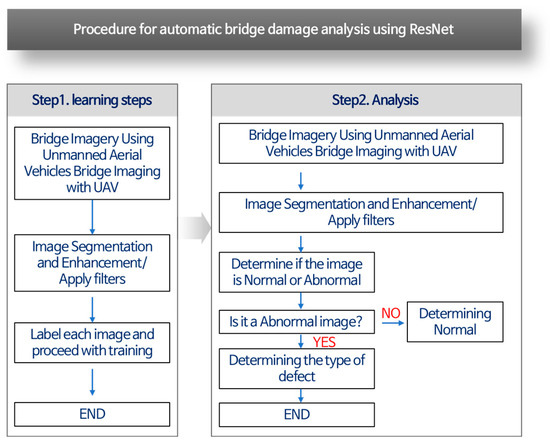

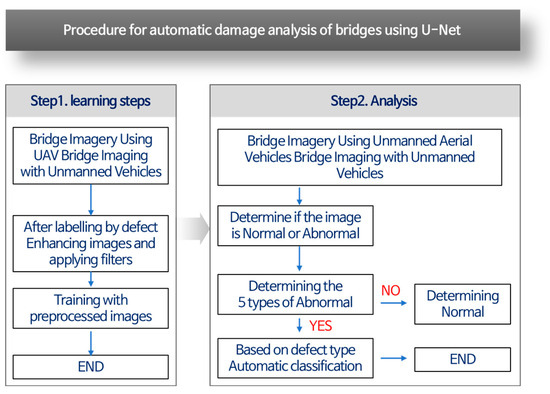

When the amount of data is limited, data augmentation is used to enlarge the dataset. However, augmentation is performed with the train/test/validation division in mind: the dataset is intentionally divided before augmentation so that variants of the same image are not used for both training and validation. Figure 6 shows the automatic bridge damage analysis procedure using ResNet. Figure 7 shows the learning and analysis steps using U-Net. Data augmentation is only carried out within the train/test/validation groups, as follows: (1) acquiring bridge images using an unmanned aerial vehicle, (2) dividing the acquired images into train/test/validation sets, (3) applying image augmentation and filters to the training set images, and (4) training on the augmented training data.

Figure 6.

ResNet process.

Figure 7.

U-Net process.

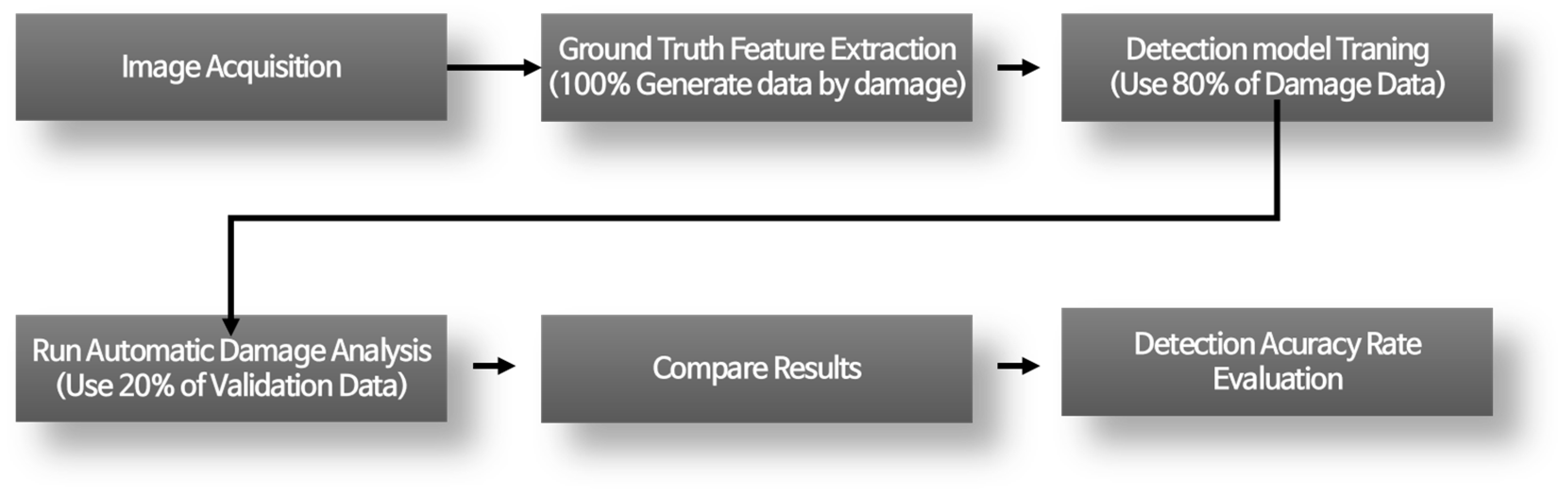

3.3. How to Validate Crack Detection Algorithms

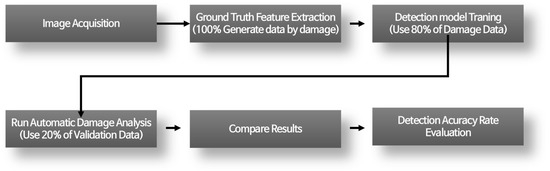

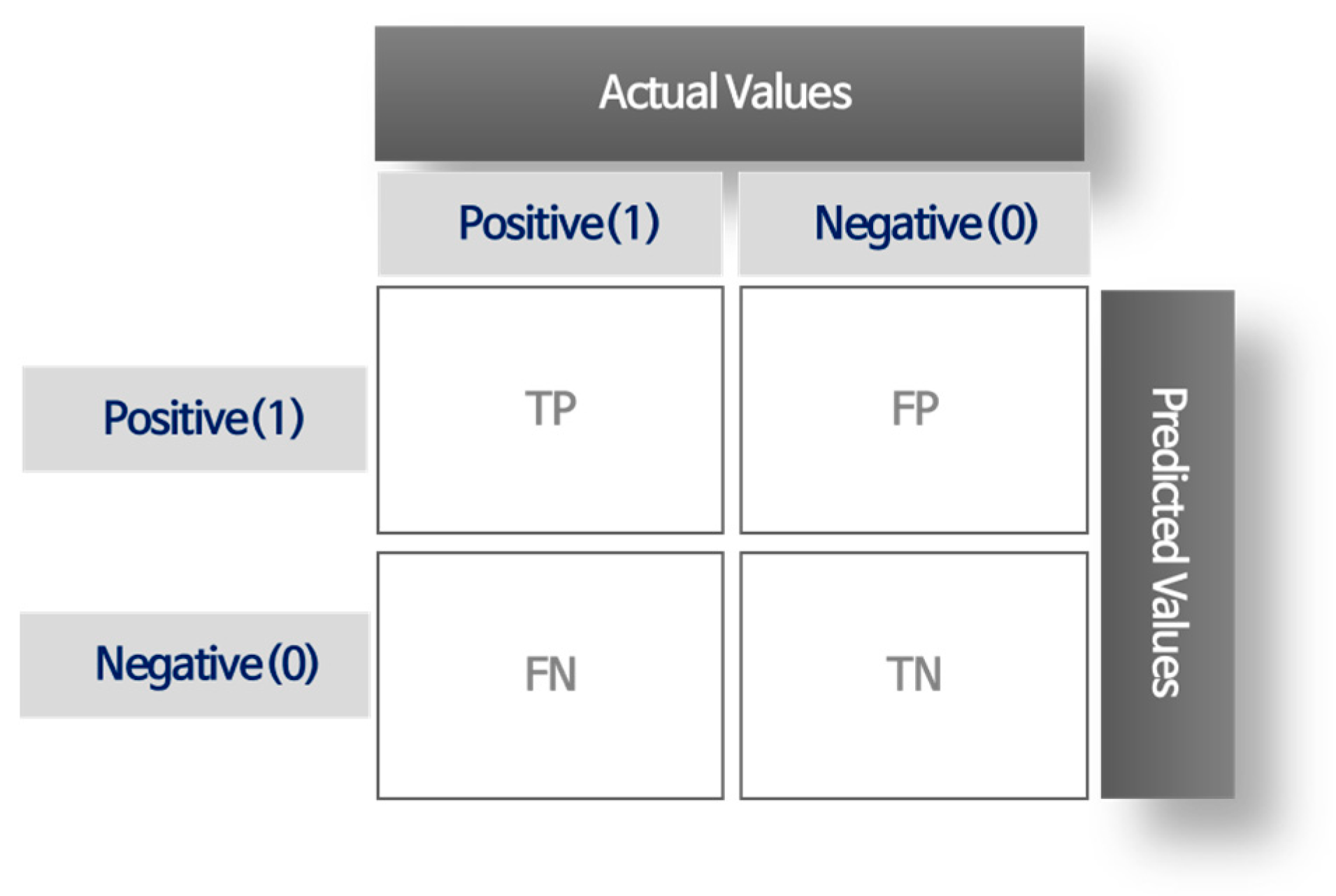

Based on the models generated via the proposed deep learning-based damage analysis methods, we examined how accurately damage can be detected from untrained damage images. This allowed us to verify the performance of the proposed process and validate it for damage diagnosis of the target structure, which is the goal of this study. Figure 8 illustrates the verification process for an image in the AI algorithm. Model validation methods for AI algorithms include determining recall and accuracy, and the defect recognition rate of the algorithm in this study was validated using the confusion matrix-based model evaluation method.

Figure 8.

Verification process.

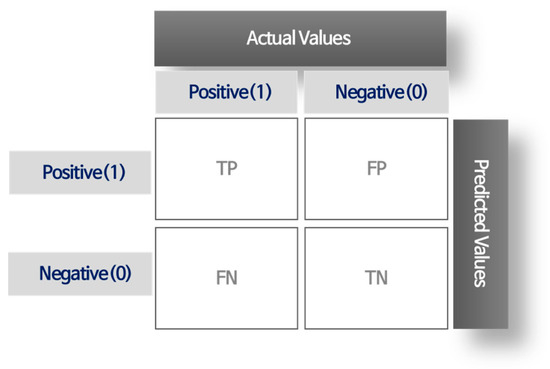

The confusion matrix arranges true and predicted values so that the true values defined for each damage item can be compared with the damage determined from the analysis. To better understand and evaluate the performance of the model, the matrix can be used to separate cases of correct damage detection in images where damage is present, non-detection cases, and false detection cases where no crack is present. The confusion matrix is a table with the true value (True Label) on the horizontal axis and the predicted value (Predicted Label) on the vertical axis, where the main diagonal entries represent the correctly detected damage cases and the off-diagonal entries represent the detection errors. Figure 9 shows the accuracy-based model validation method. The confusion matrix summarizes four situations by frequency: (1) True positive (TP): The actual value is true, and the model’s predicted value is also true. (2) True negative (TN): The actual value is false, and the model’s predicted value is also false. (3) False positive (FP): The actual value is false, but the model’s predicted value is true. (4) False negative (FN): The actual value is true, but the model’s predicted value is false. Accuracy in Equation (2) represents the likelihood that a prediction aligns with reality. It is determined by placing all prediction results in the denominator and the successful predictions, both true positives and true negatives, in the numerator. Accuracy values range from 0 to 1, with high accuracy indicating that predictions are frequently correct. Algorithms with high accuracy are generally considered highly reliable.

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{2}$$
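A short sketch shows how the four counts are tallied and turned into metrics. Accuracy follows the description above. Recall, TP / (TP + FN), is the standard definition of the detection recall reported in the results. The labels below are made-up toy data, not results from this study.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, and FN for binary labels (1 = damage, 0 = no damage)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def accuracy(tp, tn, fp, fn):
    """Share of all predictions that match the true label."""
    return (tp + tn) / (tp + tn + fp + fn)


def recall(tp, fn):
    """Share of truly damaged cases that the model detected."""
    return tp / (tp + fn)


# Toy labels for ten images.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 0, 1, 0]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)  # 4, 4, 1, 1
toy_accuracy = accuracy(tp, tn, fp, fn)            # 8 / 10 = 0.8
toy_recall = recall(tp, fn)                        # 4 / 5 = 0.8
```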

Figure 9.

Confusion matrix.

3.4. Analysis Results

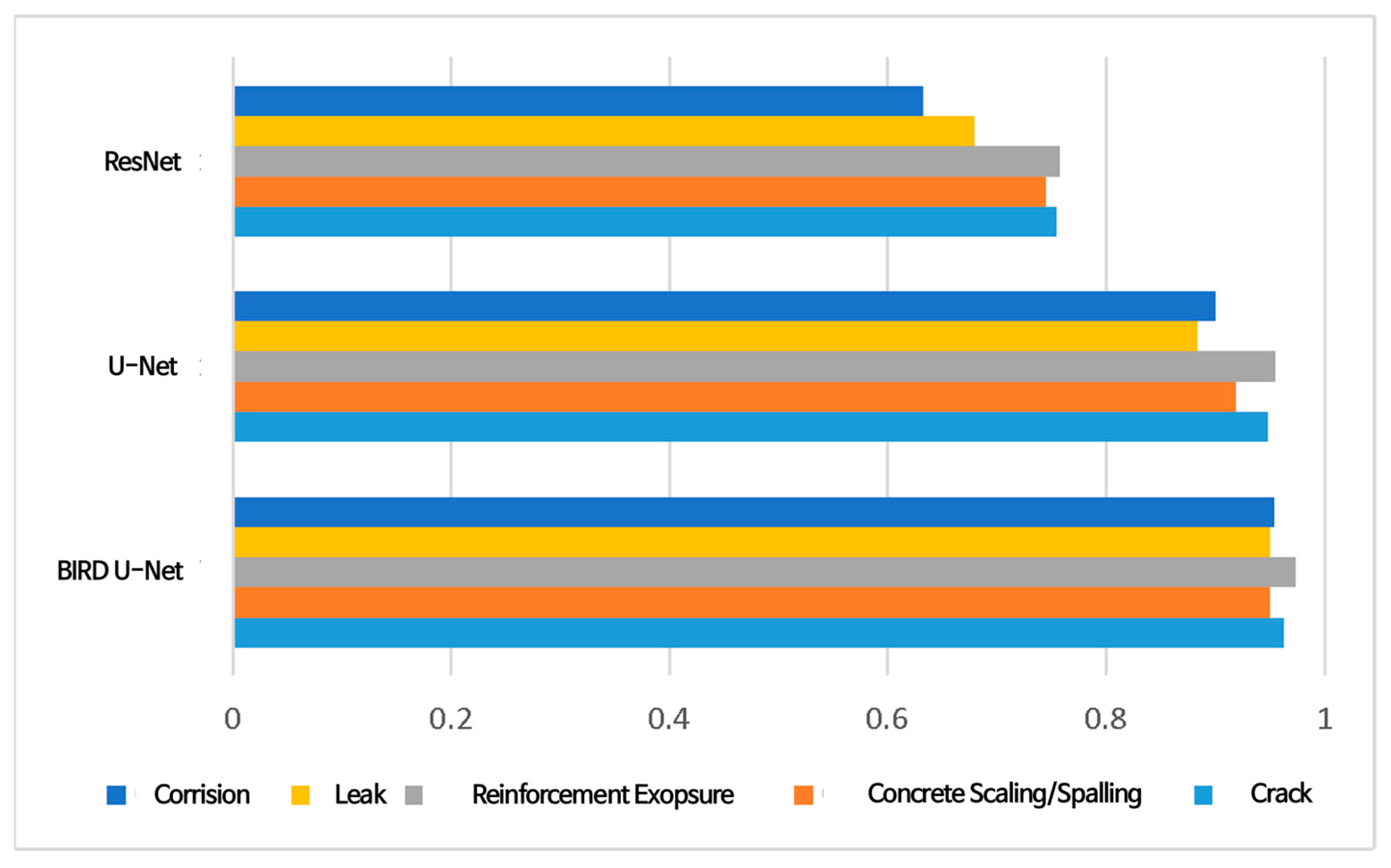

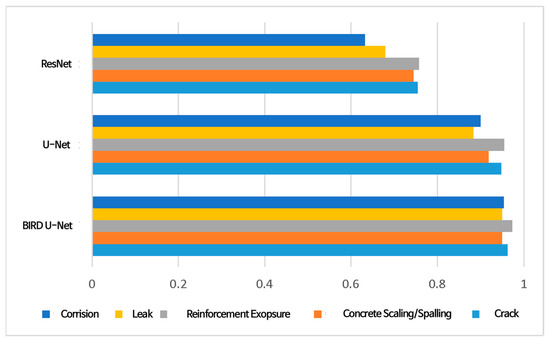

The damage detection analysis was performed on 27,139 images, comprising 20% of the total 135,696 images used in this study. Figure 10 compares the detection rates of the algorithms we tested. BIRD U-Net exhibited a higher detection recall than U-Net and ResNet for every damage item, with 96.31% for cracks, 94.98% for delamination, 97.32% for rebar exposure, 94.98% for leakage/efflorescence, and 95.39% for corrosion.

Figure 10.

Detection rate comparison.

For U-Net, detection recalls of 94.83% for cracks, 91.86% for delamination, 95.51% for rebar exposure, 88.30% for leakage/efflorescence, and 90.04% for corrosion were verified. The recall was lower than that of BIRD U-Net in all five categories.

When the ResNet algorithm was used to verify the pixel-by-pixel classification for the five categories (cracks, delamination, rebar exposure, leakage/efflorescence, and corrosion), it had a considerably lower detection rate than BIRD U-Net, with 75.47% for cracks, 74.52% for delamination, 75.75% for rebar exposure, 67.92% for leakage/efflorescence, and 63.22% for corrosion.

The recall, error, and detection rates of the deep learning analysis methods reviewed in this study are demonstrated in Table 3. Noticeably, the segmentation method demonstrated better performance in detecting damage than the detection method, and the overall recall rate was lower in the case of relatively small leaks in the collected damage images.

Table 3.

Analysis results for each type of damage.

The performance was then evaluated using a confusion matrix-based model evaluation method to determine the suitability of the technology for deep learning-based automatic damage analysis. The BIRD U-Net, U-Net, and ResNet algorithms were trained under the same conditions on the same training data so that the performance of the three models could be compared. BIRD U-Net exhibited an average recall of more than 95%, whereas U-Net exhibited an average recall of more than 92%. ResNet exhibited an average recall of 71%, so BIRD U-Net outperformed it by more than 24 percentage points. Relative to U-Net, BIRD U-Net showed the largest gains in detection recall for efflorescence (about 7 percentage points) and corrosion (about 5 percentage points), whereas the gains for cracks, delamination, and rebar exposure averaged about 2 percentage points.
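The averages and differences quoted in this section can be reproduced from the per-class recall values reported above. The following is a minimal sketch: the numbers are those stated in the text, while the dictionary layout and function names are hypothetical, and the averages are assumed to be unweighted means over the five damage types.

```python
from statistics import mean

# Per-class detection recall (%) as reported in Section 3.4.
DAMAGE_TYPES = ["cracks", "delamination", "rebar exposure",
                "leakage efflorescence", "corrosion"]
RECALL = {
    "BIRD U-Net": [96.31, 94.98, 97.32, 94.98, 95.39],
    "U-Net":      [94.83, 91.86, 95.51, 88.30, 90.04],
    "ResNet":     [75.47, 74.52, 75.75, 67.92, 63.22],
}

def average_recall(model):
    """Unweighted mean recall over the five damage types."""
    return mean(RECALL[model])

def gain_over(baseline, model="BIRD U-Net"):
    """Per-class recall difference, in percentage points, of model over baseline."""
    return {d: m - b
            for d, m, b in zip(DAMAGE_TYPES, RECALL[model], RECALL[baseline])}

for name in RECALL:
    print(f"{name}: average recall {average_recall(name):.2f}%")

for baseline in ("U-Net", "ResNet"):
    print(f"BIRD U-Net gain over {baseline} (percentage points):")
    for damage, gain in gain_over(baseline).items():
        print(f"  {damage}: {gain:.2f}")
```

Under these assumptions the means are 95.80% for BIRD U-Net, 92.11% for U-Net, and 71.38% for ResNet. The gains over U-Net are 6.68 points for leakage efflorescence and 5.35 points for corrosion, and the gains over ResNet are 32.17 points for corrosion and 27.06 points for leakage efflorescence, consistent with the rounded figures in the text.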

It is important to note that, like many other deep learning models, the BIRD U-Net algorithm does not require a fixed number of images to operate effectively. The number of images necessary for training depends on multiple factors, including the complexity of the problem, the diversity of the dataset, and the desired model performance. There is no rigid rule for the number of images, and data requirements can vary significantly from one project to another. We are convinced that the U-Net algorithm is a superior choice for bridge damage detection and are therefore committed to further research aimed at enhancing its performance.

4. Conclusions

In this study, building on the existing U-Net deep learning algorithm, we implemented a bridge-specific BIRD U-Net algorithm through epoch setting, the Adam optimization algorithm, and bilateral filtering. We also evaluated the performance of the BIRD U-Net, U-Net, and ResNet algorithms using a confusion matrix-based model evaluation method. The results demonstrated that BIRD U-Net has a higher detection recall than U-Net and ResNet in all test cases, with averages of 96% for BIRD U-Net, 92% for U-Net, and 71% for ResNet. Compared with U-Net, BIRD U-Net exhibited the largest gains in detection recall for efflorescence (7 percentage points) and corrosion (5 percentage points), whereas cracks, delamination, and rebar exposure averaged a gain of 2 percentage points. Compared with ResNet, BIRD U-Net exhibited the largest gains for corrosion (32 percentage points) and efflorescence (27 percentage points), whereas cracks, delamination, and rebar exposure averaged a gain of 21 percentage points. This result is attributable to ResNet having a segmentation feature that detects the complete shape of the bridge by judging the shape and color of the bridge, in contrast to the BIRD U-Net algorithm.

Maintaining domestic facilities is difficult due to the continuous increase in the number of facilities to be managed and the decrease in the number of personnel in charge. Most facilities require thorough management because of natural disasters and aging structures, and it is important to avoid disasters caused by poor management. In addition, bridges, which are difficult to access, require systematic management because they are major civil engineering structures for railways. Visual inspection is the primary method for investigating the appearance of structures. However, inspecting the exterior of a structure for damage is expensive and time-consuming because separate survey equipment must be operated in environments that are hard to access. In this study, various deep learning-based automatic damage analysis techniques were reviewed to automate the damage inspection of 33,924 images taken by a UAV, with the aim of inspecting the exterior of bridges more efficiently and reliably. Damage items were defined as cracks, efflorescence/leakage, delamination, corrosion, and rebar exposure, based on the images acquired by operating a commercial UAV equipped with a high-resolution camera, and deep learning analysis models were created by extracting damage as training data. Damage images acquired directly with the commercial UAV, together with other image data on damage occurring in various concrete structures, were used to develop the deep learning models. The results revealed that BIRD U-Net has a higher detection recall than U-Net and ResNet for every damage item, with an average of 96% compared with averages of 92% for U-Net and 71% for ResNet.

By applying automatic damage analysis technology, as reviewed above, more objective and accurate damage detection can be achieved compared to previous visual inspection. Moreover, an efficient maintenance system can be established. In addition to bridges, the BIRD U-Net algorithm can be used to perform image analysis on other concrete structures. Therefore, the automatic damage analysis technique for bridges developed through this research can provide objective damage inspection results for regular inspections using unmanned mobile vehicles in the field of concrete structure maintenance while reducing the time and cost compared to utilizing the existing system.

Various methods other than the U-Net-based algorithm proposed in this study are being explored for damage inspection using UAVs, and additional research is needed to develop more effective algorithms. To create and apply an algorithm for selecting an optimal maintenance method, the factors that influence accuracy must be improved beyond their current levels. These factors may include collecting a sufficient amount of damage data. Furthermore, selecting the optimal algorithm requires applying and analyzing a wider range of machine learning algorithms.

Author Contributions

Data curation, T.S. and D.K.; methodology, M.N.; project administration, J.L.; resources, B.K.; software, K.K.; supervision, M.C.; writing—original draft, K.J.; writing—review and editing, J.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology Policy Expert Development and Support Program through the Ministry of Science and ICT of the Korean government, grant number S2022A066700001. The funder was not involved in the study design, collection, analysis, interpretation of data, the writing of this article or the decision to submit it for publication.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

The authors would like to express their gratitude to all the participants who generously devoted their time and effort to contribute to this research.

Conflicts of Interest

Author Kyungil Kwak was employed by the company Neongkul Mobile. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Golding, V.P.; Gharineiat, Z.; Munawar, H.S.; Ullah, F. Crack Detection in Concrete Structures using Deep Learning. Sustainability 2022, 14, 8117.

- Na, Y.H.; Park, M.Y. A Study of Railway Bridge Automatic Damage Analysis Method using Unmanned Aerial Vehicle and Deep Learning-Based Image Analysis Technology. J. Soc. Disaster Inf. 2021, 17, 556–567.

- Kaur, R.; Karmakar, G.; Xia, F.; Imran, M. Deep learning: Survey of environmental and camera impacts on internet of things images. Artif. Intell. Rev. 2023, 56, 9605–9638.

- Lovelace, B.; Zink, J. Unmanned Aerial Vehicle Bridge Inspection Demonstration Project; Research Project Final Report; Minnesota Department of Transportation Research Services & Library: St. Paul, MN, USA, 2015; Report no. 40.

- Chanda, S.; Bu, G.; Guan, H.; Jo, J.; Pal, U.; Loo, Y.; Blumenstein, M. Automatic Bridge Crack Detection–a Texture Analysis-Based Approach. In Proceedings of the Artificial Neural Networks in Pattern Recognition: 6th IAPR TC 3 International Workshop, ANNPR 2014, Montreal, QC, Canada, 6–8 October 2014; Proceedings 6. pp. 193–203.

- Irizarry, J.; Gheisari, M.; Walker, B.N. Usability Assessment of Drone Technology as Safety Inspection Tools. J. Inf. Technol. Constr. (ITcon) 2012, 17, 194–212.

- De Melo, R.R.S.; Costa, D.B.; Álvares, J.S.; Irizarry, J. Applicability of Unmanned Aerial System (UAS) for Safety Inspection on Construction Sites. Saf. Sci. 2017, 98, 174–185.

- Jung, I.-W.; Park, J.-B.; Kwon, J.-H. Smart facility maintenance using unmanned vehicles. Water Future J. Korean Water Resour. Soc. 2016, 49, 96–99.

- Lee, R.; Shon, H.; Kim, R. Case Study on the Safety Inspection using Drones. KSCE Mag. 2017, 65, 75–79.

- Kang, S.C.; Kim, C.S.; Jung, J.I.; Kim, E.J.; Park, J.W. A Study on the Method of Facility Management and the Effectiveness of 3D Mapping using Drone in Large Areas. Korean Assoc. Comput. Educ. 2021, 25, 223–226.

- Wu, Q.; Song, Z.; Chen, H.; Lu, Y.; Zhou, L. A Highway Pavement Crack Identification Method Based on an Improved U-Net Model. Appl. Sci. 2023, 13, 7227.

- Hadinata, P.N.; Simanta, D.; Eddy, L.; Nagai, K. Multiclass Segmentation of Concrete Surface Damages Using U-Net and DeepLabV3+. Appl. Sci. 2023, 13, 2398.

- Kang, J.O.; Lee, Y.C. Preliminary Research for Drone Based Visual-Safety Inspection of Bridge. Proc. Korean Soc. Geospat. Inf. Sci. 2016, 2016, 207–210.

- Jeon, S.-H.; Jung, D.-H.; Kim, J.-H.; Kevin, R. State-of-the-art deep learning algorithms: Focusing on civil engineering applications. J. Korean Soc. Civ. Eng. 2019, 67, 90–94.

- Kim, Y.N.; Bae, H.J.; Kim, Y.M.; Jang, K.J.; Kim, J.P. Deep learning based multiple damage type detection system for bridge structures. Proc. Korean Inf. Sci. Assoc. 2021, 48, 582–584.

- Hong, S.S.; Hwang, C.; Kim, H.K.; Kim, B.K. A Deep Learning-Based Bridge Image Pretreatment and Damaged Objects Automatic Detection Model for Bridge Damage Management. Converg. Inf. Serv. Technol. 2021, 10, 497–511.

- Cardellicchio, A.; Ruggieri, S.; Nettis, A.; Renò, V.; Uva, G. Physical interpretation of machine learning-based recognition of defects for the risk management of existing bridge heritage. Eng. Fail. Anal. 2023, 149, 107237.

- Rao, A.S.; Nguyen, T.; Palaniswami, M.; Ngo, T. Vision-Based Automated Crack Detection using Convolutional Neural Networks for Condition Assessment of Infrastructure. Struct. Health Monit. 2021, 20, 2124–2142.

- Dais, D.; Bal, I.E.; Smyrou, E.; Sarhosis, V. Automatic Crack Classification and Segmentation on Masonry Surfaces using Convolutional Neural Networks and Transfer Learning. Autom. Constr. 2021, 125, 103606.

- Macaulay, M.O.; Shafiee, M. Machine Learning Techniques for Robotic and Autonomous Inspection of Mechanical Systems and Civil Infrastructure. Auton. Intell. Syst. 2022, 2, 8.

- Lu, M.; Hu, Y.; Lu, X. Dilated Light-Head R-CNN using Tri-Center Loss for Driving Behavior Recognition. Image Vis. Comput. 2019, 90, 103800.

- Bui, H.M.; Lech, M.; Cheng, E.; Neville, K.; Burnett, I.S. Using Grayscale Images for Object Recognition with Convolutional-Recursive Neural Network. In Proceedings of the 2016 IEEE Sixth International Conference on Communications and Electronics (ICCE), Ha Long, Vietnam, 27–29 July 2016; pp. 321–325.

- Xie, Y.; Richmond, D. Pre-Training on Grayscale Imagenet Improves Medical Image Classification. In Computer Vision—ECCV 2018 Workshops. ECCV 2018. Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018.

- Shahriar, M.T.; Li, H. A Study of Image Pre-Processing for Faster Object Recognition. arXiv 2020, arXiv:2011.06928.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; Part III 18; pp. 234–241.

- Yu, X.; Kuan, T.-W.; Tseng, S.-P.; Chen, Y.; Chen, S.; Wang, J.-F.; Gu, Y.; Chen, T. EnRDeA U-Net Deep Learning of Semantic Segmentation on Intricate Noise Roads. Entropy 2023, 25, 1085.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).