A Novel Interpretable Deep Learning Model for Ozone Prediction

Abstract

1. Introduction

2. Materials and Methods

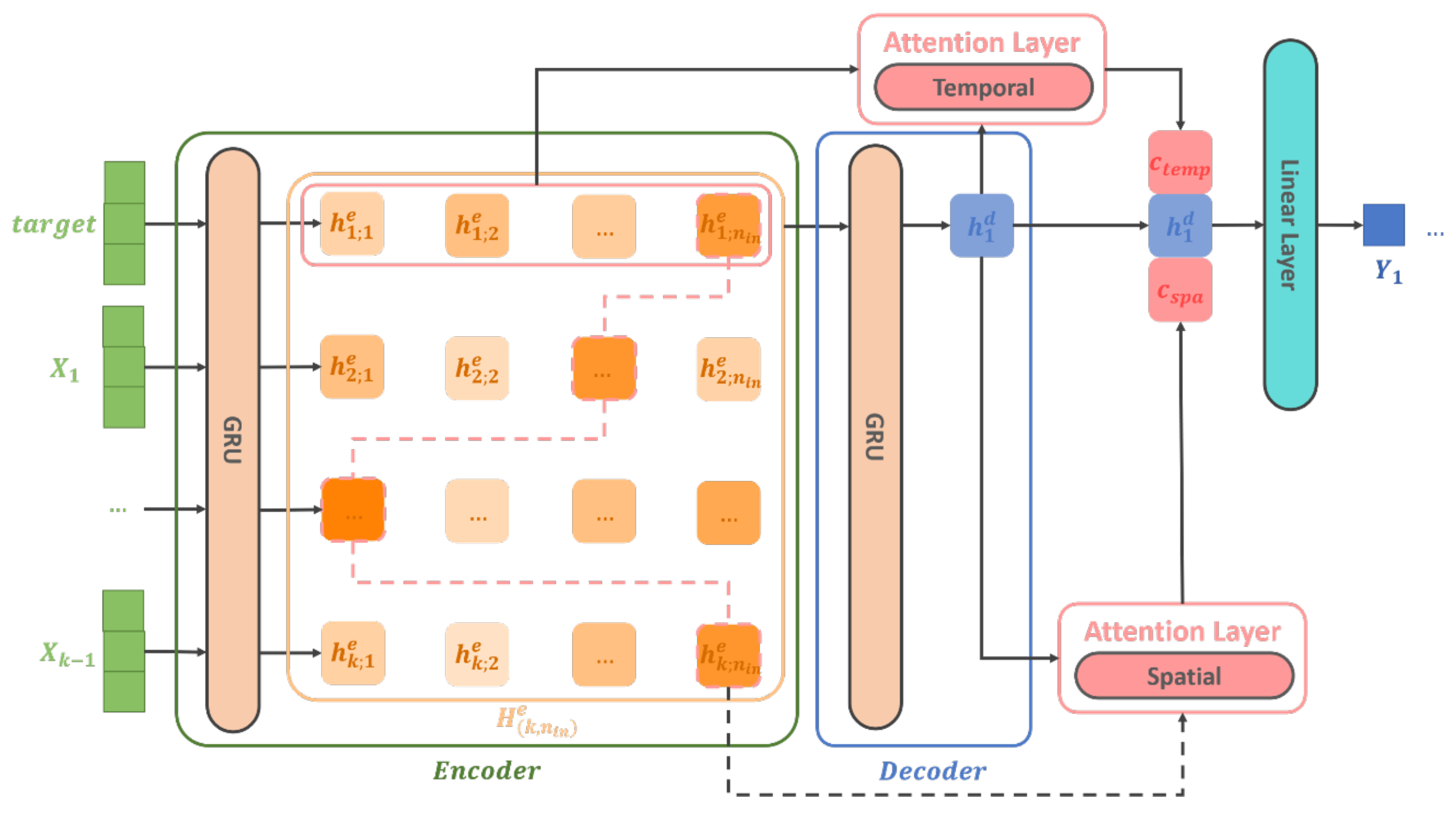

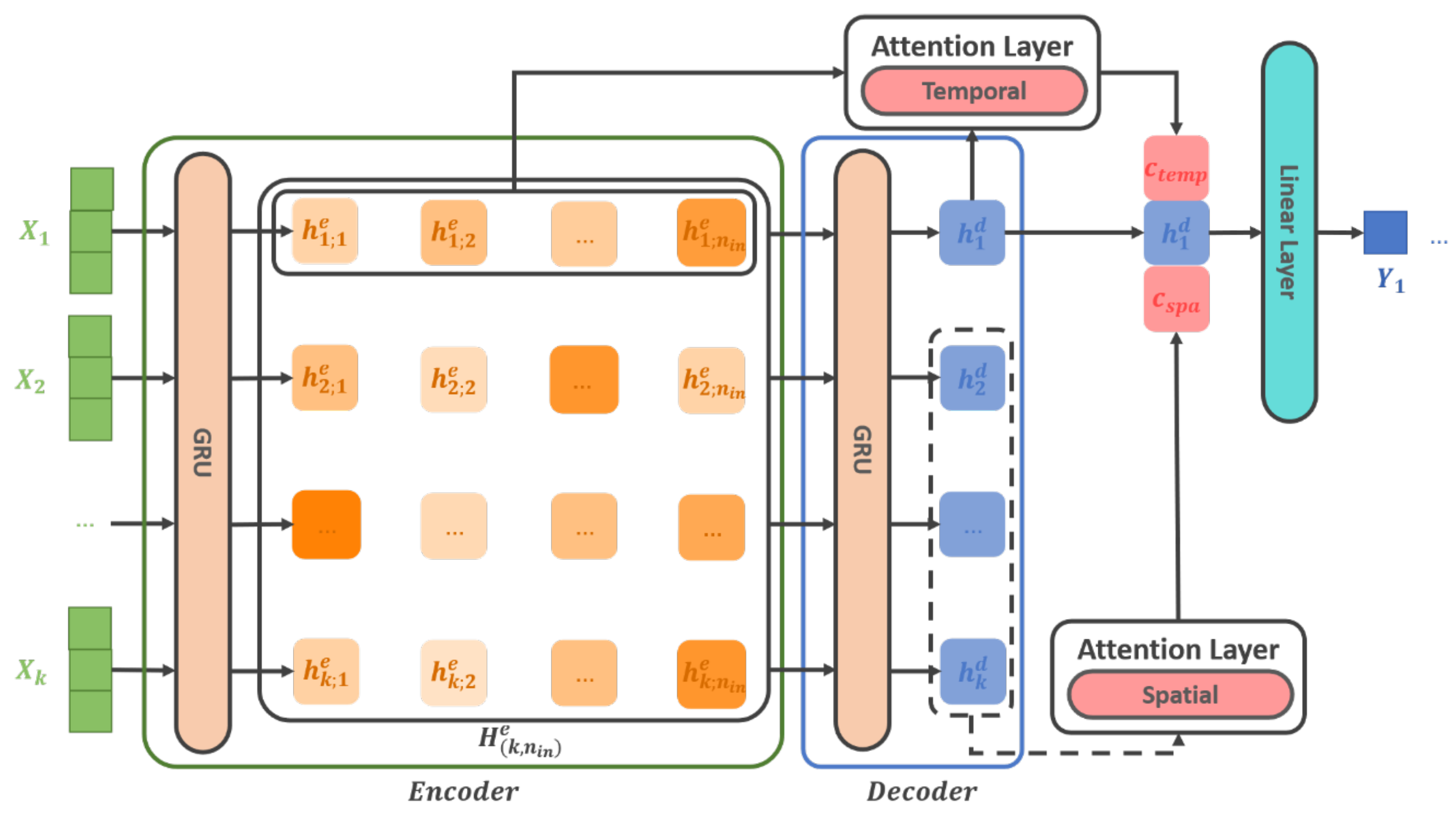

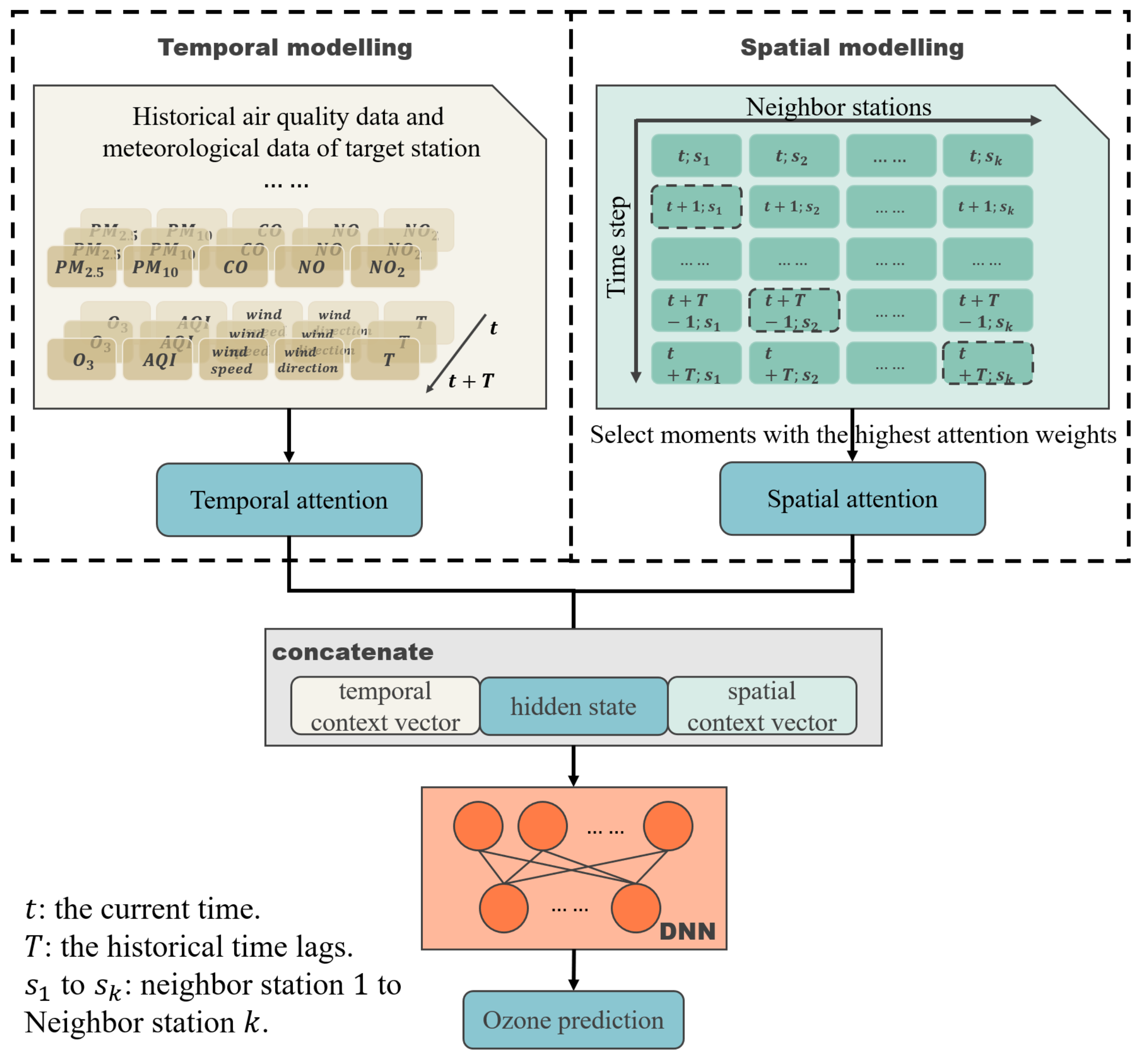

2.1. Methods

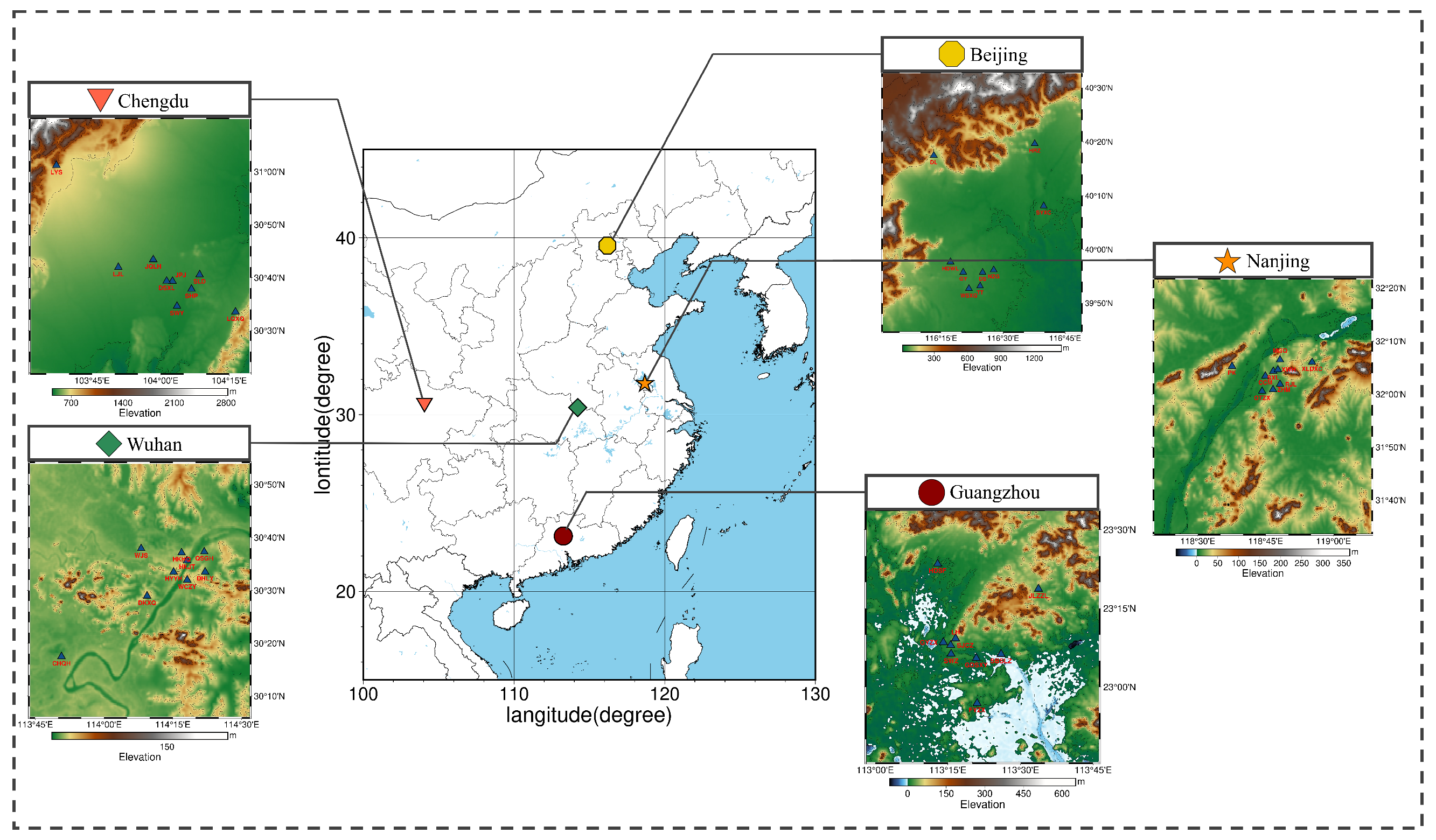

2.2. Data

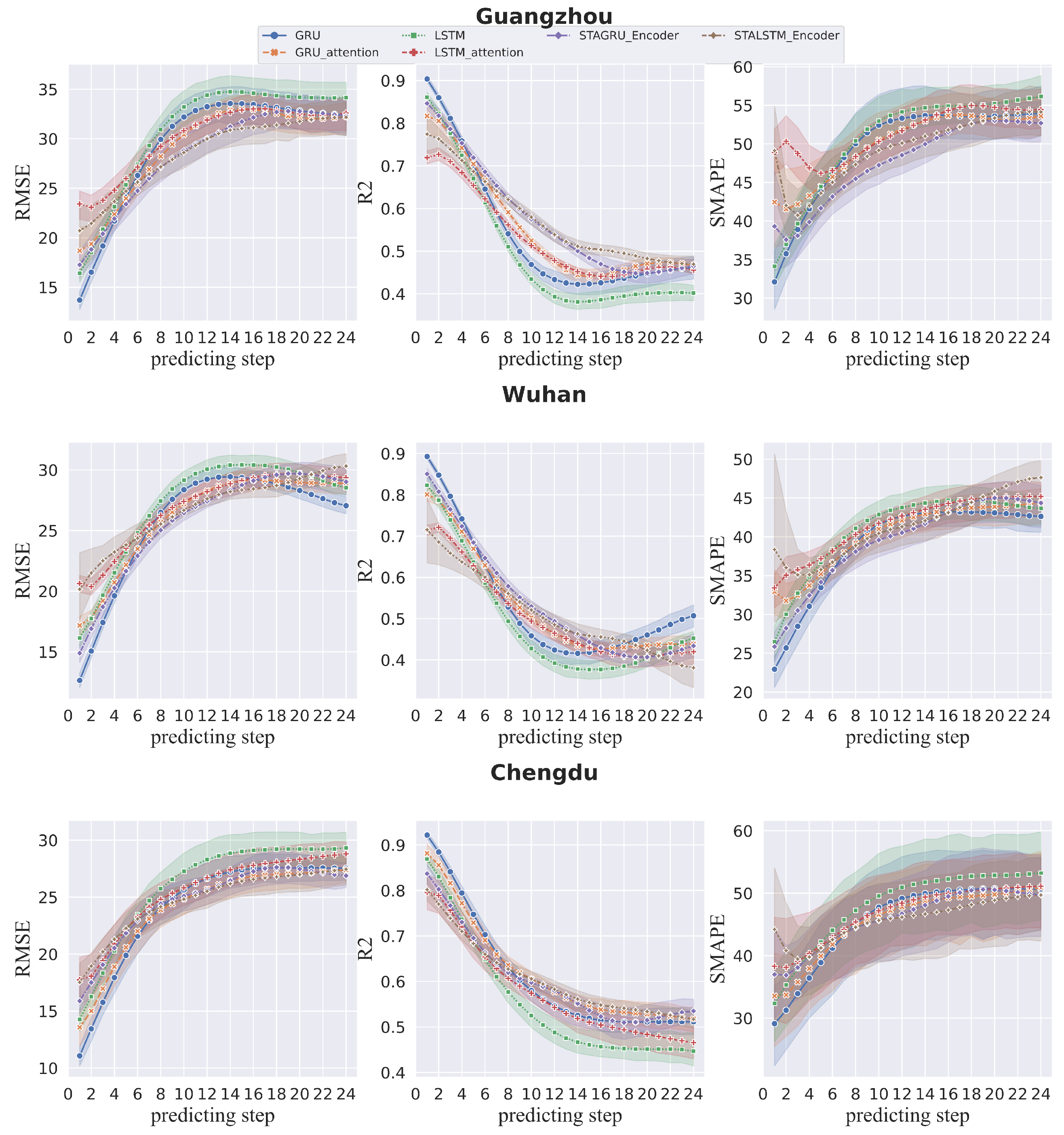

2.2.1. Study Regions and Datasets

2.2.2. Evaluation Metrics

2.2.3. Experimental Design

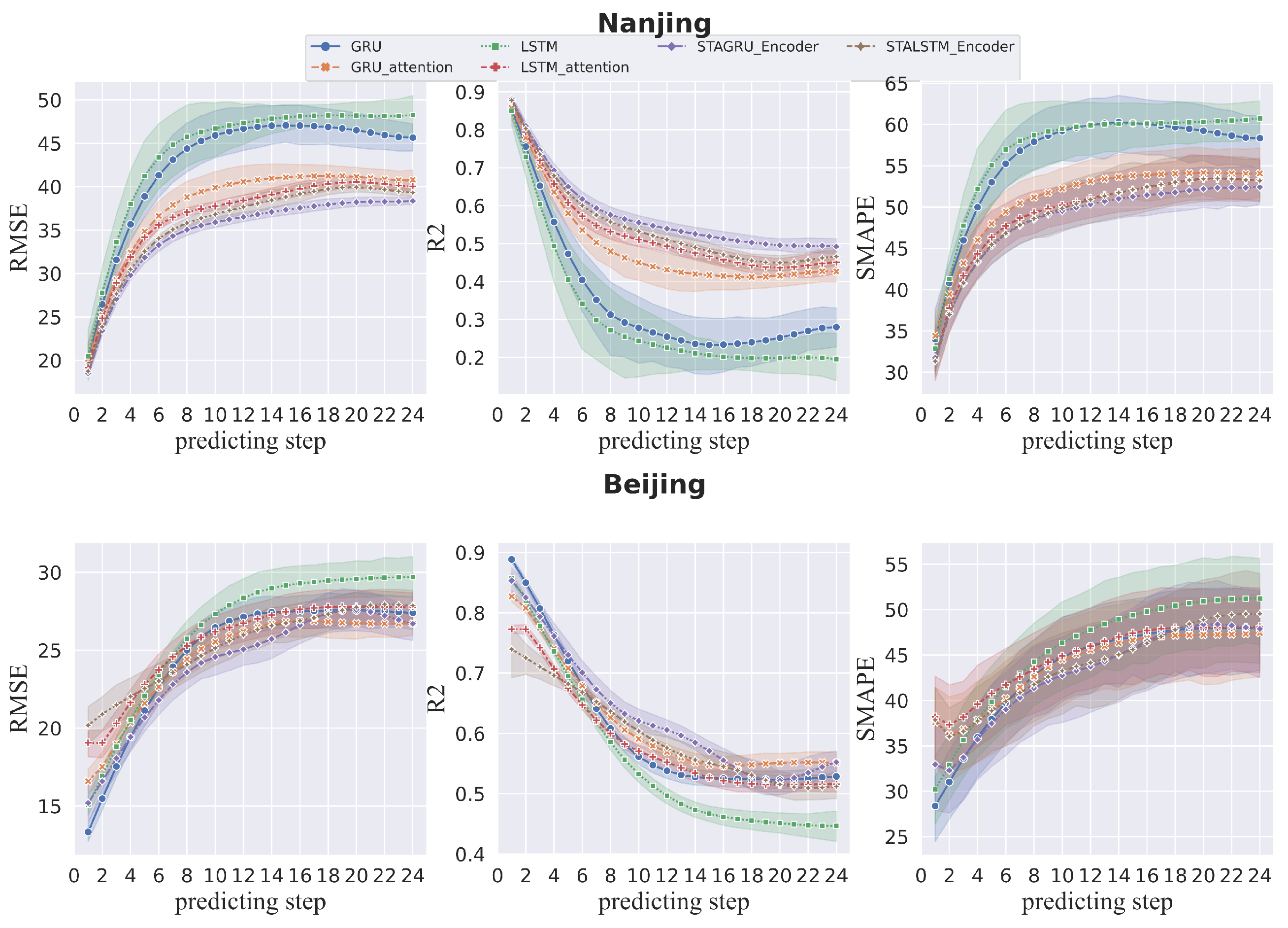

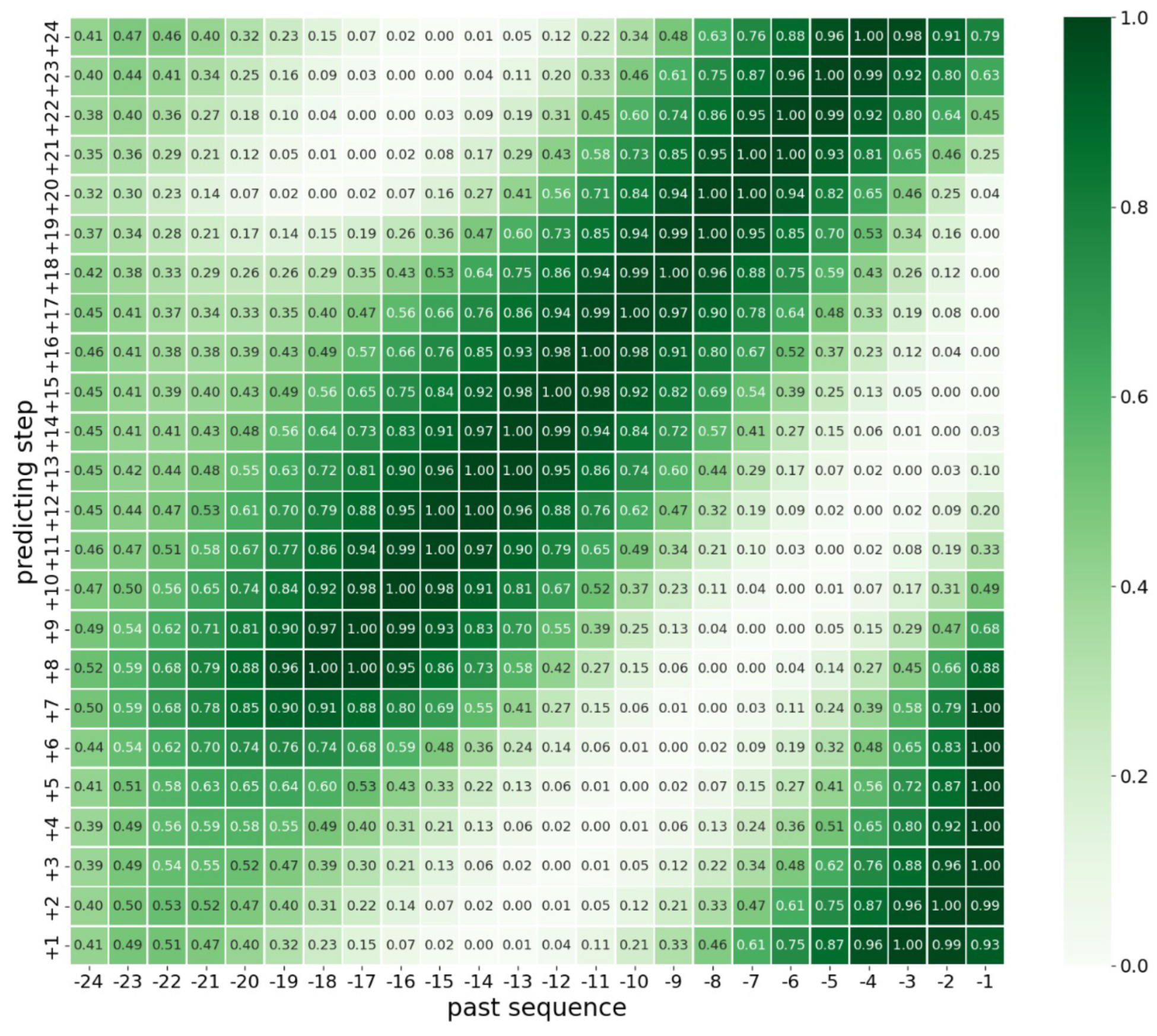

3. Results

4. Discussion

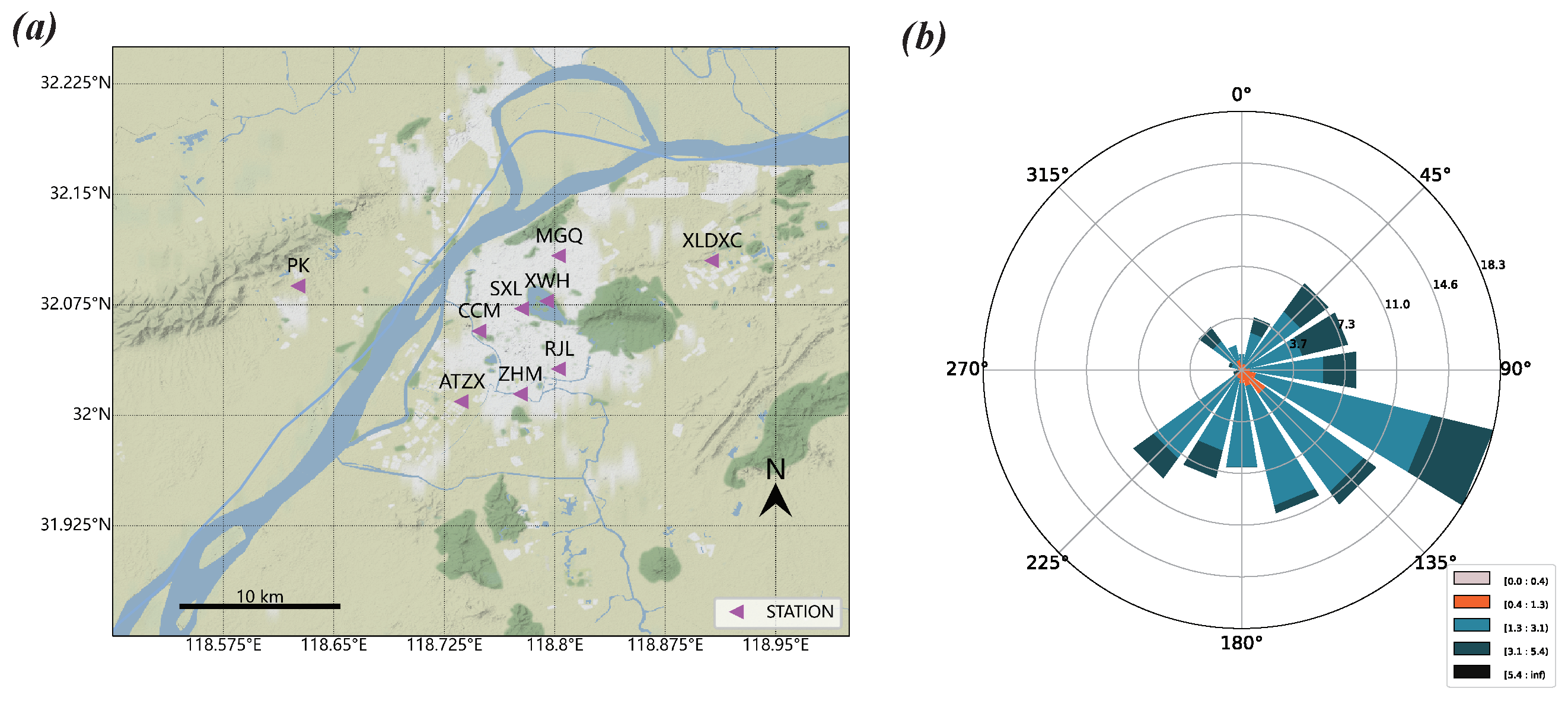

4.1. Interpretability Discussion

4.2. Derivative Model Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| City | Station | Longitude (°E) | Latitude (°N) | Station | Longitude (°E) | Latitude (°N) |
|---|---|---|---|---|---|---|
| Nanjing | ATZX | 118.737 | 32.009 | RJL | 118.803 | 32.031 |
| | CCM | 118.749 | 32.057 | SXL | 118.778 | 32.072 |
| | MGQ | 118.803 | 32.108 | XLDXC | 118.907 | 32.105 |
| | PK | 118.626 | 32.088 | XWH | 118.795 | 32.078 |
| | ZHM | 118.777 | 32.014 | | | |
| Beijing | WSXG | 116.3621 | 39.8784 | DL | 116.2202 | 40.2915 |
| | DS | 116.4174 | 39.9289 | TT | 116.4072 | 39.8863 |
| | NZG | 116.462 | 39.9365 | GY | 116.3392 | 39.9295 |
| | HDWL | 116.2878 | 39.9611 | SYXC | 116.6636 | 40.135 |
| | HRZ | 116.6275 | 40.3275 | | | |
| Chengdu | JQLH | 103.9728 | 30.7236 | SLD | 104.1419 | 30.6764 |
| | SWY | 104.0594 | 30.5767 | SHP | 104.1122 | 30.6306 |
| | JPJ | 104.0431 | 30.6556 | LYS | 103.6202 | 31.0201 |
| | DSXL | 104.0219 | 30.6558 | LQXQ | 104.2725 | 30.5589 |
| | LJL | 103.8458 | 30.6994 | | | |
| Guangzhou | GYZX | 113.2347 | 23.1423 | SWZ | 113.2612 | 23.105 |
| | GDSXY | 113.3478 | 23.0916 | SBSLZ | 113.4332 | 23.1047 |
| | FYZX | 113.3505 | 22.9483 | HDSF | 113.2146 | 23.3916 |
| | SJCZ | 113.2597 | 23.1331 | JLZZL | 113.5618 | 23.312 |
| | LH | 113.2765 | 23.1544 | | | |
| Wuhan | DHLY | 114.3677 | 30.5584 | HYYH | 114.2529 | 30.558 |
| | HKHQ | 114.282 | 30.6189 | WCZY | 114.3025 | 30.5332 |
| | QSGH | 114.3646 | 30.6217 | DKXQ | 114.1566 | 30.4825 |
| | HKJT | 114.3014 | 30.5944 | WJS | 114.135 | 30.6319 |
| | CHQH | 113.8454 | 30.2917 | | | |


| Experiment Category | Experiment Name | Description |
|---|---|---|
| Seq2Seq | Seq2Seq_LSTM | Encoder–Decoder framework with recurrent component LSTM/GRU. |
| | Seq2Seq_GRU | |
| Seq2Seq+Attention | Seq2Seq_LSTM+Attention | Seq2Seq_LSTM/GRU with single attention mechanism. |
| | Seq2Seq_GRU+Attention | |
| Spatiotemporal attentive | STALSTM | Spatiotemporal attentive model with recurrent component LSTM/GRU. |
| | STAGRU | |
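To make the "single attention mechanism" row of the table concrete, the following is a minimal sketch of one attention step over encoder hidden states. It assumes plain dot-product scoring; the scoring function actually used in the paper is not given in this excerpt, and the function names and toy inputs (`dot_product_attention`, `encoder_states`, `decoder_state`) are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(decoder_state, encoder_states):
    """Return (weights, context) for a single decoder step.

    decoder_state: list of floats of length d (the query).
    encoder_states: list of T lists, each of length d (one per input time step).
    Assumption: scores are plain dot products; a trained model may use a
    learned scoring function instead.
    """
    # One score per encoder time step.
    scores = [sum(q * k for q, k in zip(decoder_state, h)) for h in encoder_states]
    # Normalise scores into attention weights that sum to 1.
    weights = softmax(scores)
    # Context vector: attention-weighted sum of the encoder states.
    dim = len(decoder_state)
    context = [
        sum(w * h[i] for w, h in zip(weights, encoder_states)) for i in range(dim)
    ]
    return weights, context


# Hypothetical toy inputs: three encoder time steps, hidden size 2.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [1.0, 1.0]
weights, context = dot_product_attention(decoder_state, encoder_states)
```

The weights form a distribution over input time steps, so they can be read as a rough indication of which steps the decoder draws on for a given forecast step.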


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, X.; Li, Y.; Xu, X.; Shao, M. A Novel Interpretable Deep Learning Model for Ozone Prediction. Appl. Sci. 2023, 13, 11799. https://doi.org/10.3390/app132111799
