1. Introduction

The incidence of impacted teeth is relatively high and varies among ethnic populations and countries, from 5.6% to 38%. Impacted teeth can develop pathologies that result in carious lesions, resorption of adjacent teeth, periodontal disease, marginal bone loss, cysts, or tumours. The teeth most frequently impacted in the maxilla are the canines, with an incidence of 0.2–3.58%. Alignment of impacted teeth in the dental arch is becoming a standard treatment in orthodontic practice. Determining the exact location of the affected tooth is part of the diagnostic process and is essential for successful treatment [1,2].

This information can be partially obtained from conventional two-dimensional (2D) X-rays, which reveal the presence of an impacted tooth and its approximate position [3]. Cone beam computed tomography (CBCT) is an advanced imaging technology that overcomes many of the limitations of conventional 2D imaging. Through VisLab 20.10.1 imaging software, it is possible to segment the DICOM data provided by the CBCT and convert them into a three-dimensional (3D) file [4], making it possible to evaluate the bone structures and produce a high-resolution 3D model useful for diagnosing impacted teeth [5]. CBCT has been shown to locate a tooth more accurately and is, therefore, necessary for correct treatment planning in the disimpaction of a tooth [6]. Three-dimensional digital radiological images also allow the assessment of a tooth’s exact position relative to other teeth and the identification of complications that may arise from orthodontic movement of the tooth, such as root resorption of adjacent teeth. Treatment for tooth disimpaction requires teamwork, especially between the orthodontist and the oral surgeon. The therapeutic approach generally involves surgical exposure of the impacted tooth, which is performed by preparing a flap and removing the cortical bone around the crown [7].

This procedure provides optimal conditions for the orthodontist. Surgical exposure allows the orthodontist to bond an attachment to the impacted tooth in the correct position, depending on the intended direction of movement, so that the proper traction forces can be applied for alignment. Several parameters should be considered when planning surgical exposure of an impacted tooth. Flap design and the correct position of the osteotomy site are key points for successful treatment. Oral surgeons should be as precise and non-invasive as possible to minimise post-operative patient stress; only as much bone as necessary should be removed [8,9].

New software is being used to help the clinician identify the position of impacted teeth precisely, both pre- and intra-operatively. Digital medicine is increasingly focused on developing protocols that help the surgeon visualise internal structures of the human body, acquired from CBCT, directly on the patient [10].

The technology that allows this is Augmented Reality (AR), which aims to enrich the perception of reality through digital processing. AR software uses algorithms to recognise shapes and objects. Clinicians upload digital diagnostic imaging into the software and superimpose it onto a real-time video frame from a camera to view the patient and their internal structures [11].

AR software is in constant development and is expected to reveal its full potential in oral treatment in the near future. AR software relies on two processes. The technical process of recognising a target in an image or a single video frame is called “object detection”. Object detection only works if the target is visible in the input. If the target object is hidden by any interference, the software cannot detect it.

Following the detected structures from frame to frame is known as “object tracking”. Video tracking is the task of following a moving object across the frames of a video. This process is one of the most important tasks in computer vision.
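The relationship between the two processes can be illustrated with a toy example. The sketch below is not the algorithm of any AR product; the function name `run_tracker` and the frame format (a position, or `None` when the target is hidden) are hypothetical and serve only to show that detection must succeed before tracking can start, and that an occlusion forces a new detection.

```python
from typing import List, Optional


def run_tracker(frames: List[Optional[float]]) -> List[str]:
    """Label each frame of a toy video as 'searching', 'detected' or 'tracked'.

    Each frame holds the target position, or None when the target is hidden.
    The frame format and the state names are hypothetical.
    """
    states = []
    tracking = False
    for position in frames:
        if position is None:
            # Target hidden: neither detection nor tracking can succeed.
            tracking = False
            states.append("searching")
        elif not tracking:
            # Target visible but not yet followed: object detection.
            tracking = True
            states.append("detected")
        else:
            # Target was already found in the previous frame: object tracking.
            states.append("tracked")
    return states


frames = [None, 10.0, 10.5, 11.0, None, 11.2]
print(run_tracker(frames))
# ['searching', 'detected', 'tracked', 'tracked', 'searching', 'detected']
```

In this toy sequence the fifth frame simulates an occlusion, after which the target has to be detected again before tracking resumes.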

This article describes an AR-assisted approach for surgical exposure of an impacted maxillary canine. It reports, as a technical note, how the crown of the impacted tooth was visualised in alignment with the patient’s mouth in order to carry out a precise and less invasive surgical procedure.

2. Materials and Methods

A 27-year-old male patient visited our clinic seeking treatment for his malocclusion. Following clinical examination and collection of radiographs, photographs, and a CBCT scan, the patient was diagnosed with class II malocclusion and a deep bite, with impaction of the upper right canine. The possibility, not without risks, of recovering the impacted upper right canine and aligning it in the arch was assessed. The operational difficulty was due to the deep retention of the tooth (vestibular side) and its close relationship with the roots of the adjacent teeth.

The patient was informed of all the risks arising from this close proximity (including tooth ankylosis and resorption of the roots of the incisors), and an agreement was reached with the patient to proceed with this treatment. The planned procedure involved aligning the canine in the upper arch to re-establish adequate occlusion and aesthetics.

This was achieved through traction of the canine after surgical exposure, using a trans-palatal bar supported by bone-anchored screws. Skeletal anchorage by palatal mini-screws was chosen to dissipate the reaction forces onto the bone bases, minimising possible unwanted dental effects.

Computer-Aided Design and Computer-Aided Manufacturing (CAD-CAM) technology was used to plan the site and size of the mini-screws to be inserted into the palate and to build a surgical template for insertion (Figure 1).

After insertion of the two mini-screws and the trans-palatal bar using a printed tooth-supported drill guide (Figure 2), we proceeded with surgical exposure of the canine to perform the tooth traction.

In the vestibular-apical zone of the lateral incisor, the impacted canine was not clinically detectable via palpation. To gain clinical certainty about the canine position, it was decided to use AR to superimpose the CBCT information onto the surgical site in the operator’s visual field. This technology aims both to display the flap design for surgical access directly on the patient and to minimise error in locating the osteotomy point, avoiding iatrogenic damage to the roots of the adjacent teeth.

The first step towards visualising the patient’s impacted tooth in AR was to create a 3D file that the software could easily detect. It was decided to use the patient’s teeth as software identification markers.

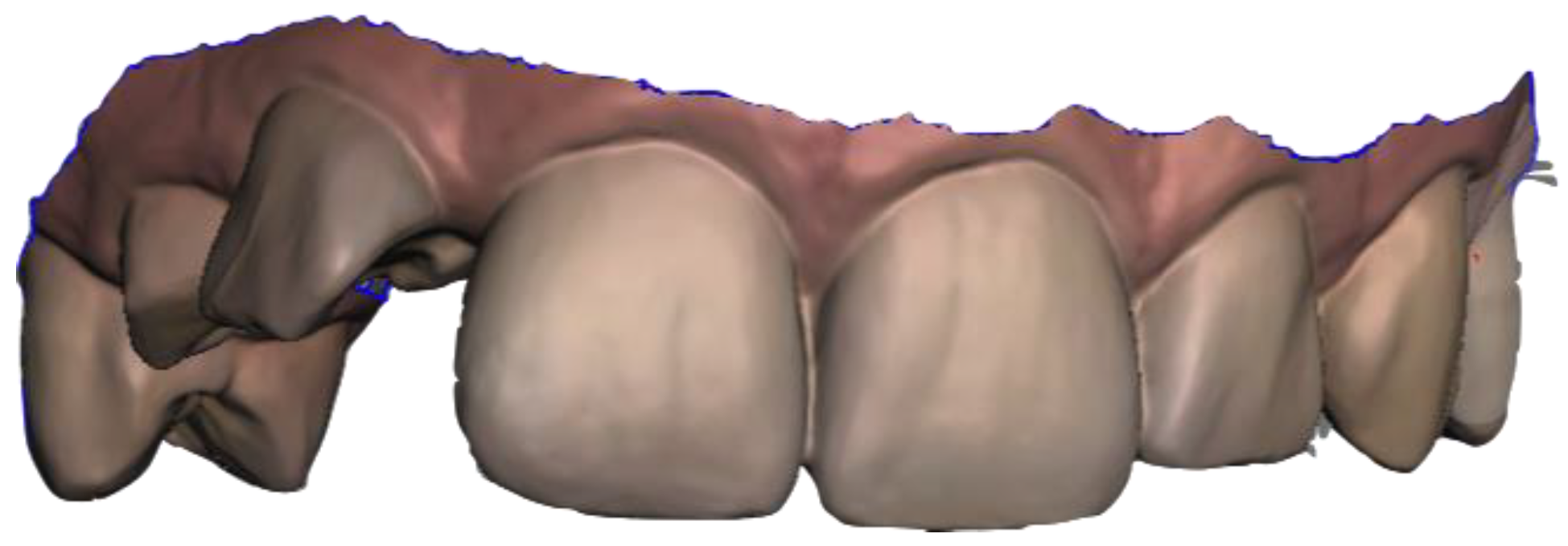

The maxillary arch was scanned using an optical intraoral scanner (TRIOS 4; 3Shape A/S, Copenhagen, Denmark). The file was exported in Polygon File Format (PLY). Using open-source CAD software (Meshmixer, Autodesk, Inc., San Francisco, CA, USA), the part of the upper arch from the first premolar to the other premolars was selected. The anterior sector’s teeth, incisal edges, and cusps were the anatomical markers required to match the patient’s mouth and perform object recognition (

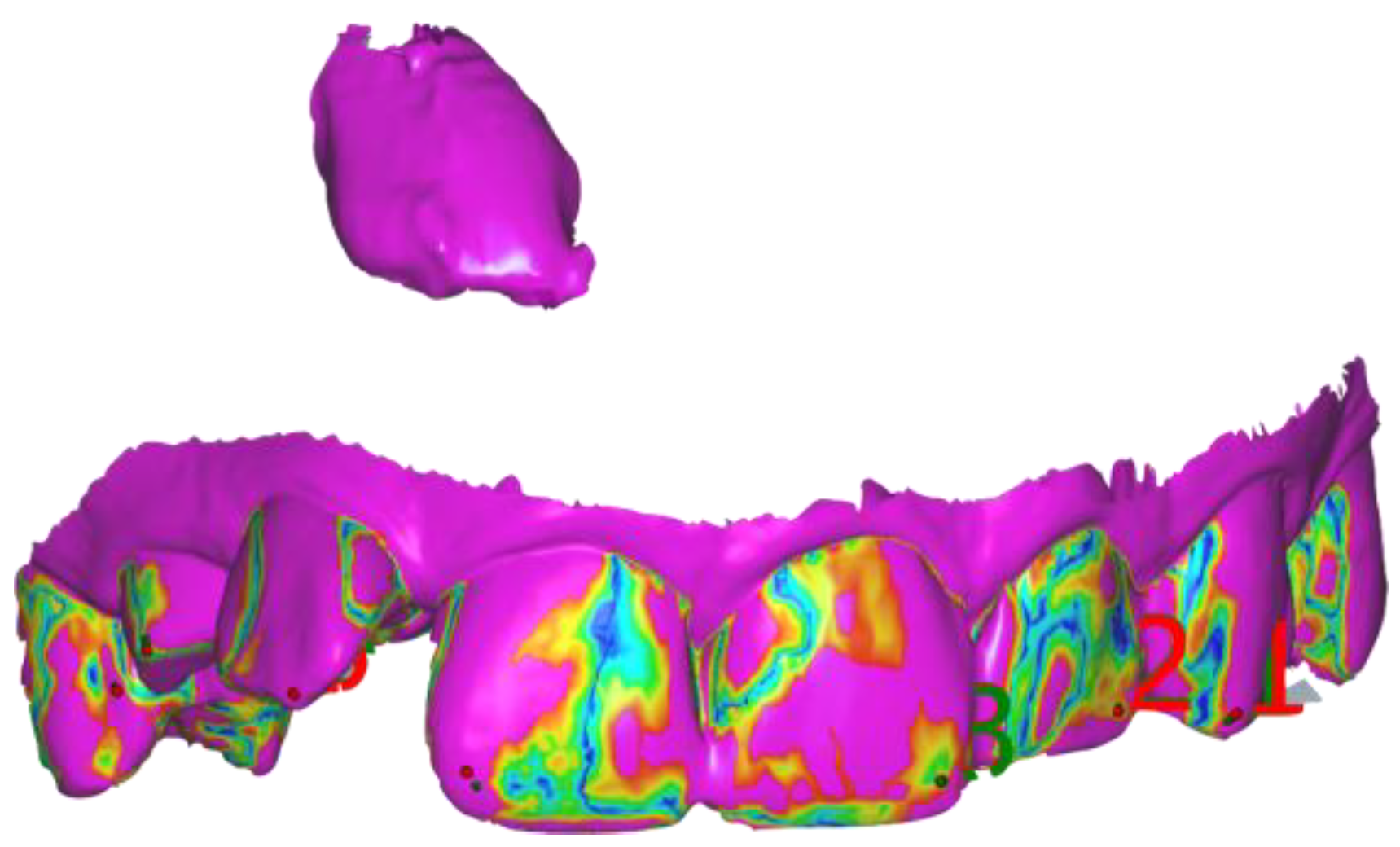

Figure 3).

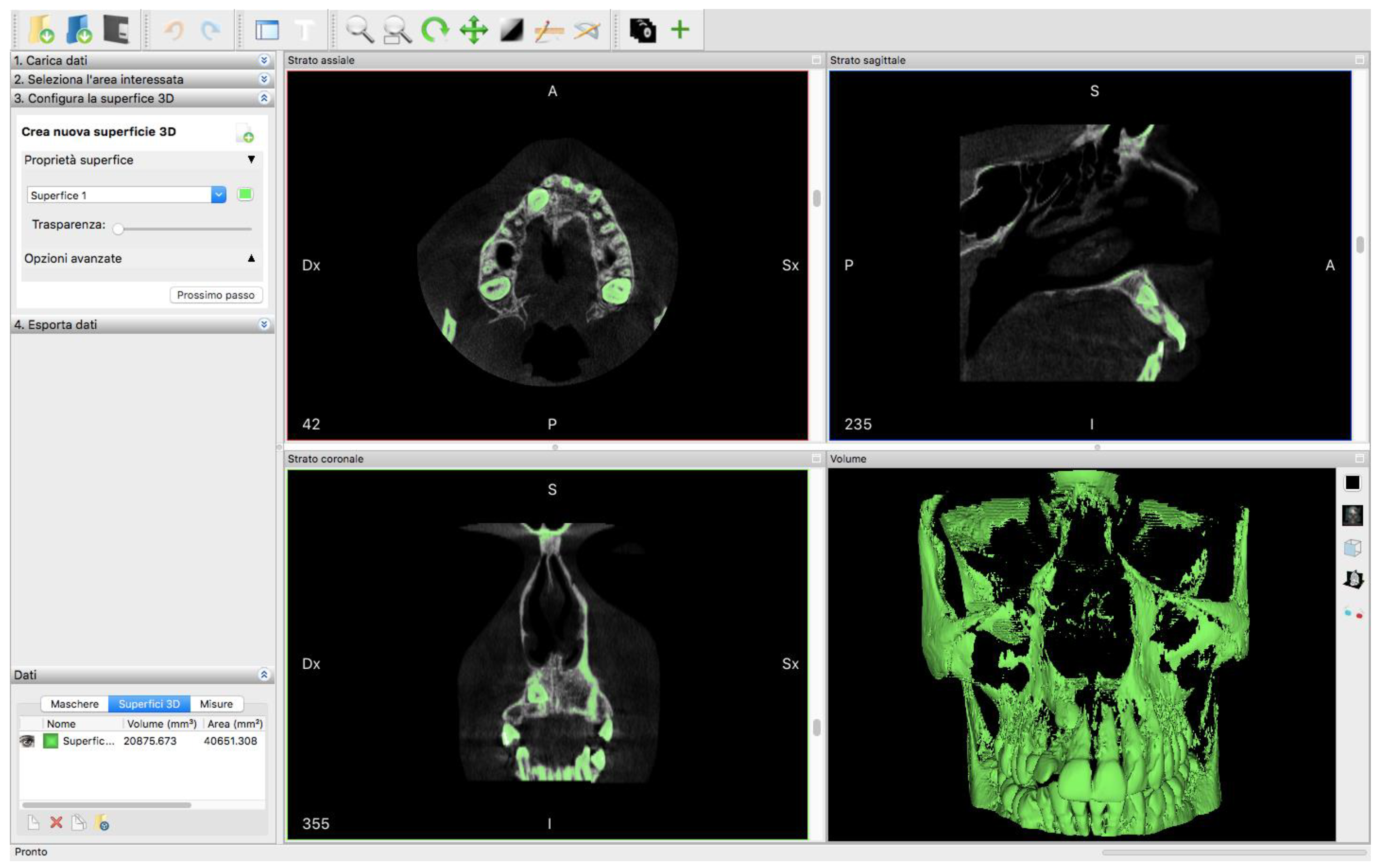

To this file, which corresponded faithfully to reality, we added the required digital information: the position of the crown of the impacted canine. From the maxillary CBCT, Digital Imaging and Communications in Medicine (DICOM) files were imported into open-source medical software (InVesalius, CTI, Campinas, Brazil) to segment and export an STL file of the area of interest (Figure 4).

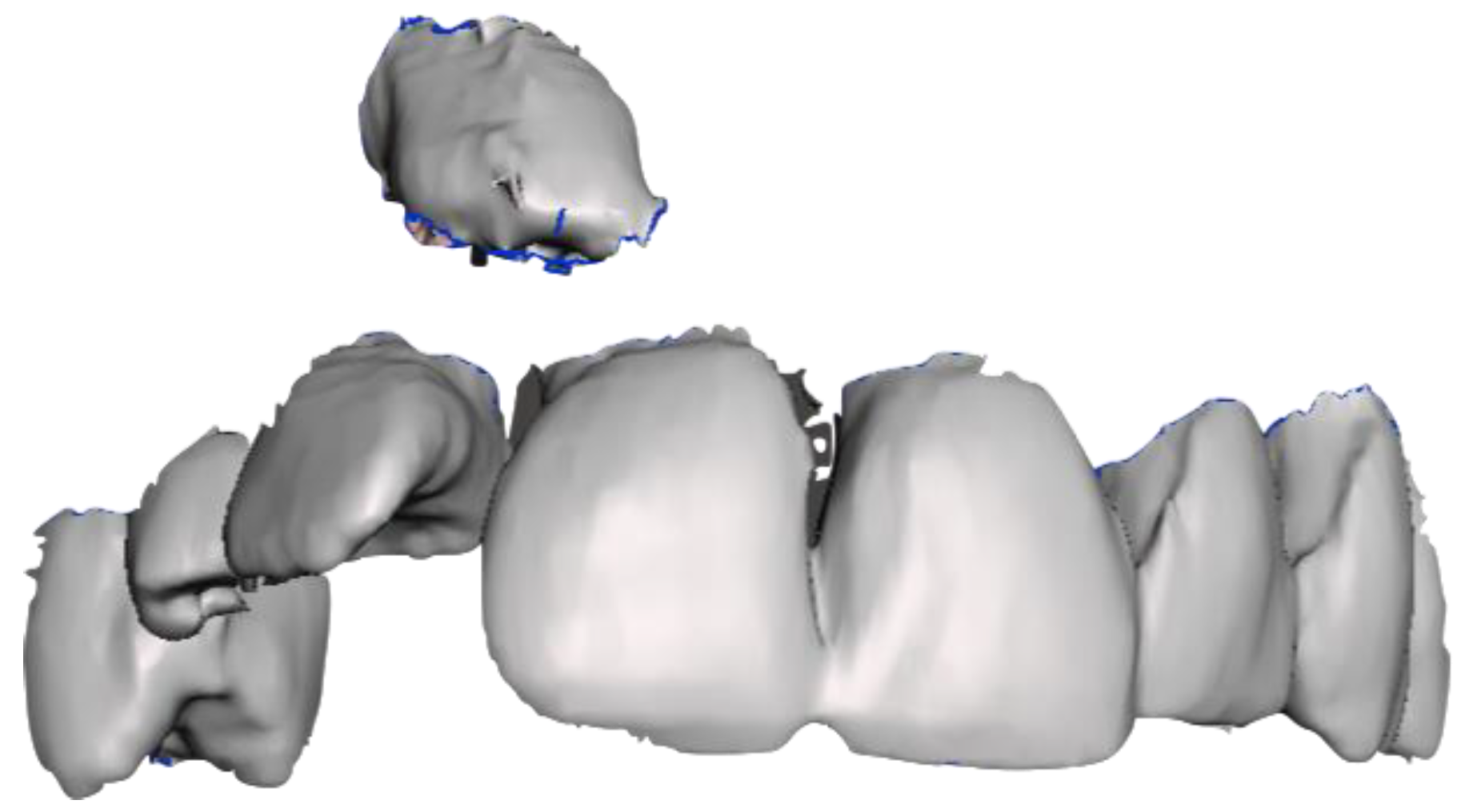

Only the six anterior teeth and the crown of the impacted tooth were selected from the 3D file (Figure 5).

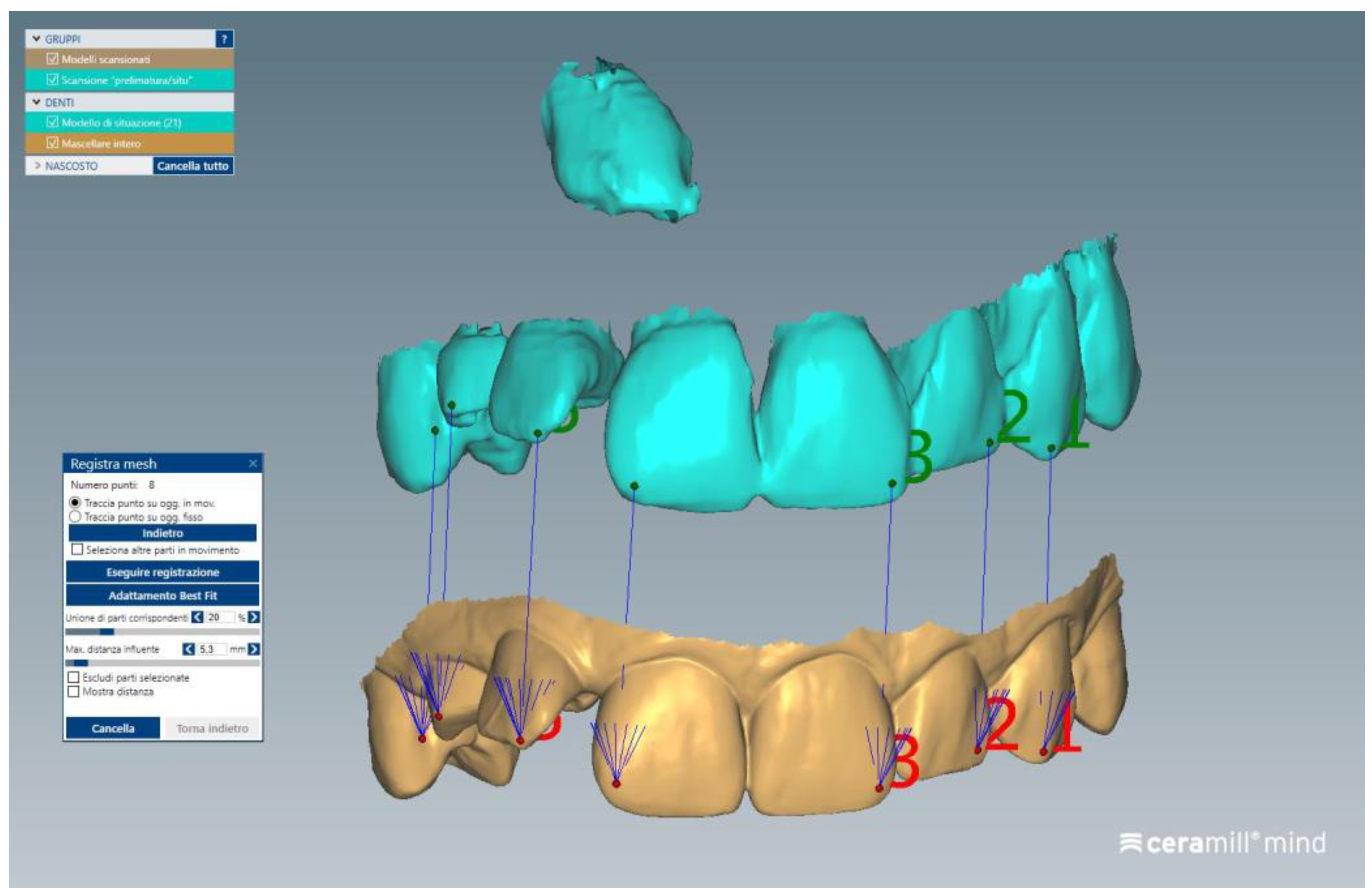

The two 3D files were imported into CAD-CAM design software (Ceramill Mind; Amann Girrbach AG, Koblach, Austria) for superimposition. Matching was achieved by marking six common points (Figure 6) and combining the files. For this alignment, the “Best fit matching” function of the software was used, which aligns the two meshes algorithmically. The six points were placed on areas of the teeth where edges were present, as recommended by the software manufacturer.
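The principle behind point-based matching of two meshes can be sketched with a standard least-squares rigid fit (the Kabsch method). This is an illustration only: the internal algorithm of the “Best fit matching” function is not documented here, and the landmark coordinates, the rotation, and the translation below are invented for the example. The sketch assumes NumPy is available.

```python
import numpy as np


def rigid_fit(source, target):
    """Least-squares rotation R and translation t mapping source landmarks onto target."""
    src = np.asarray(source, dtype=float)
    tgt = np.asarray(target, dtype=float)
    src_centroid = src.mean(axis=0)
    tgt_centroid = tgt.mean(axis=0)
    # Cross-covariance of the centred landmark sets.
    H = (src - src_centroid).T @ (tgt - tgt_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection so that R is a proper rotation.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_centroid - R @ src_centroid
    return R, t


def rms_error(source, target, R, t):
    """Root-mean-square distance between transformed source and target landmarks."""
    moved = np.asarray(source, dtype=float) @ R.T + t
    diff = moved - np.asarray(target, dtype=float)
    return float(np.sqrt(np.mean(np.sum(diff ** 2, axis=1))))


# Six hypothetical landmarks (mm) picked on one mesh.
scan_points = np.array([
    [-15.0, 0.0, 0.0], [-9.0, 6.0, 1.0], [-3.0, 9.0, 2.0],
    [3.0, 9.0, 2.0], [9.0, 6.0, 1.0], [15.0, 0.0, 0.0],
])

# The same landmarks on the other mesh, simulated here by a known
# rotation of 10 degrees about the z axis plus a translation.
angle = np.radians(10.0)
true_R = np.array([
    [np.cos(angle), -np.sin(angle), 0.0],
    [np.sin(angle), np.cos(angle), 0.0],
    [0.0, 0.0, 1.0],
])
true_t = np.array([2.0, -1.0, 5.0])
cbct_points = scan_points @ true_R.T + true_t

R, t = rigid_fit(scan_points, cbct_points)
print(rms_error(scan_points, cbct_points, R, t))  # close to 0 for this noise-free example
```

With real landmarks the residual error would not be zero, and a surface-based refinement over the whole mesh would typically follow the initial point-based fit.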

A 3D file of the anterior sector of the intraoral scan plus the endosseous position of the crown of the impacted canine was exported (Figure 7).

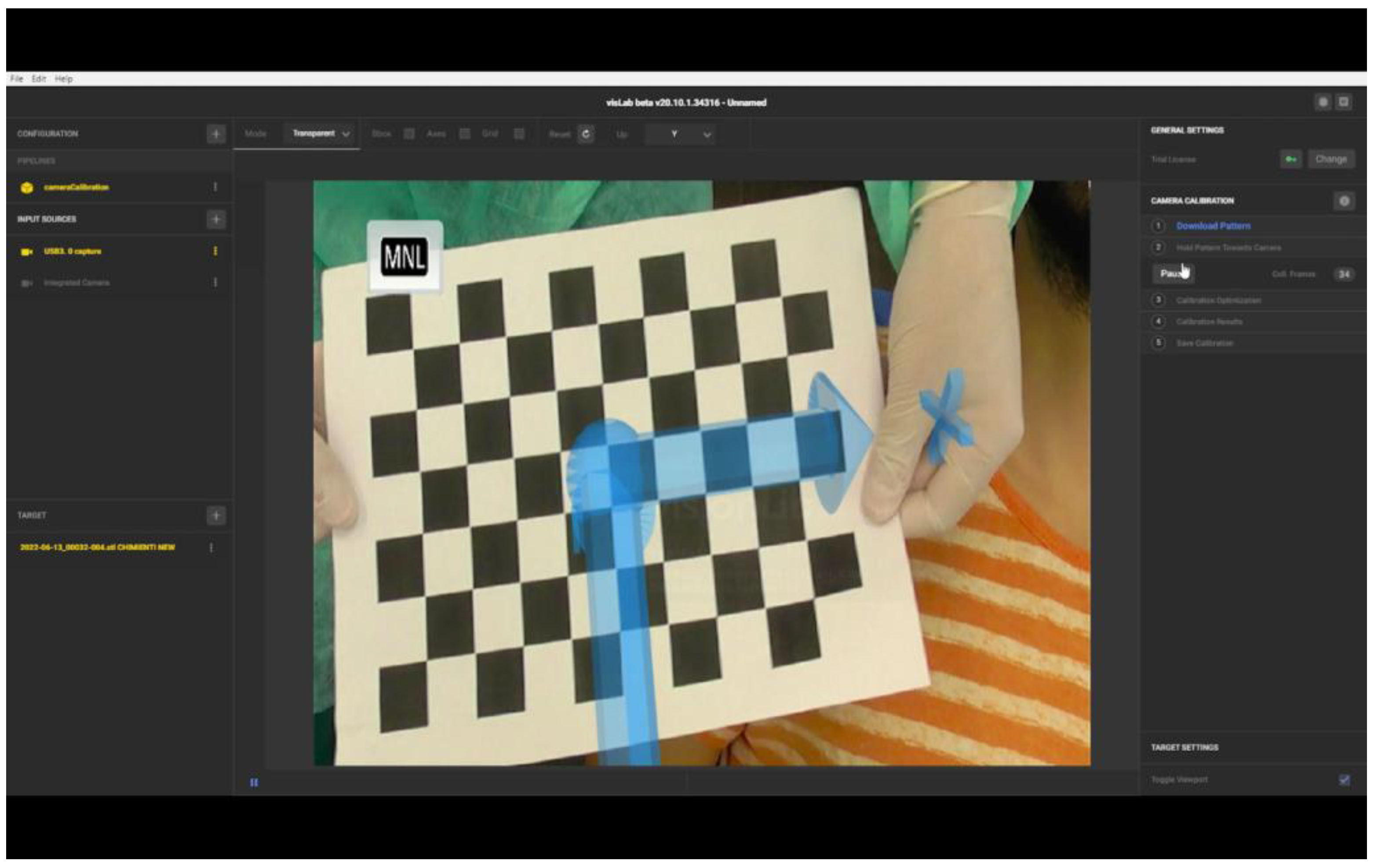

A full HD video camera was positioned in front of the patient’s mouth and connected via an HDMI cable to a laptop. The AR software (VisionLib; Visometry, Darmstadt, Germany) was started and the video camera was designated as the input source. Camera calibration was then performed using a chessboard pattern (Figure 8).

Camera calibration was essential because of its impact on tracking quality and stability: a correct calibration improves the quality of the superimposition of 3D content. Calibration was performed using a dedicated chessboard pattern printed on A4 paper. The chessboard was placed 40 cm from the camera. After starting the calibration in the software, 300 images were collected. All corners of the chessboard were visible in the camera image during calibration. For a correct calibration, the pattern must not be distorted. To avoid distortion and a consequently altered calibration, the printed chessboard was glued onto a rigid plastic panel so that it could be used without bending the pattern.
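The requirement that all chessboard corners remain visible can be illustrated with the pinhole camera model that underlies calibration. The sketch below is not the calibration routine of the AR software. It only projects the inner corners of a flat board, facing the camera at a given distance, into pixel coordinates and checks that they fall inside a full HD frame. The board layout (9 × 6 inner corners, 25 mm squares) and the camera intrinsics (focal length of 1400 pixels, principal point at the image centre) are assumptions chosen for the example; only the 40 cm distance and the full HD resolution come from the text.

```python
from typing import List, Tuple


def chessboard_corners(cols: int, rows: int, square_mm: float) -> List[Tuple[float, float]]:
    """Inner-corner coordinates (mm) of a chessboard centred on the optical axis."""
    x0 = -(cols - 1) * square_mm / 2.0
    y0 = -(rows - 1) * square_mm / 2.0
    return [(x0 + c * square_mm, y0 + r * square_mm)
            for r in range(rows) for c in range(cols)]


def project(point_mm, distance_mm, fx, fy, cx, cy):
    """Pinhole projection of a point on a plane facing the camera, in pixels."""
    x, y = point_mm
    return (fx * x / distance_mm + cx, fy * y / distance_mm + cy)


def all_corners_visible(corners, distance_mm, fx, fy, cx, cy, width, height):
    """True if every projected corner lies inside the image."""
    for corner in corners:
        u, v = project(corner, distance_mm, fx, fy, cx, cy)
        if not (0 <= u < width and 0 <= v < height):
            return False
    return True


# Hypothetical board: 9 x 6 inner corners, 25 mm squares (fits on A4 paper).
corners = chessboard_corners(cols=9, rows=6, square_mm=25.0)

# Hypothetical intrinsics for a full HD camera; board at 40 cm (400 mm).
fx = fy = 1400.0
cx, cy = 960.0, 540.0
print(all_corners_visible(corners, 400.0, fx, fy, cx, cy, 1920, 1080))  # True
print(project(corners[0], 400.0, fx, fy, cx, cy))  # (610.0, 321.25)
```

Under these assumed values the board fills roughly the central third of the frame at 40 cm. Moving it much closer would push the outer corners out of the image, which is the situation the visibility requirement is meant to exclude.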

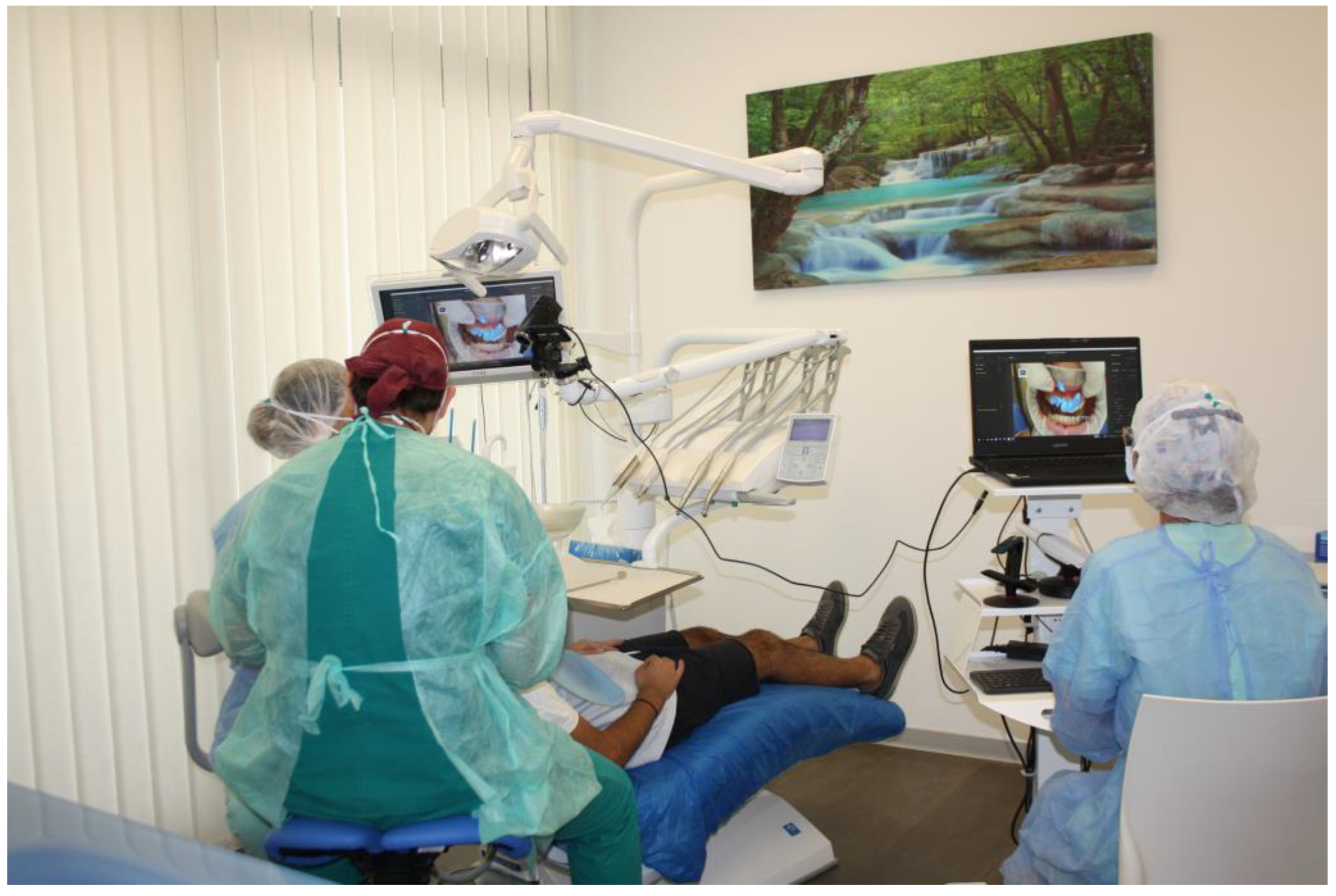

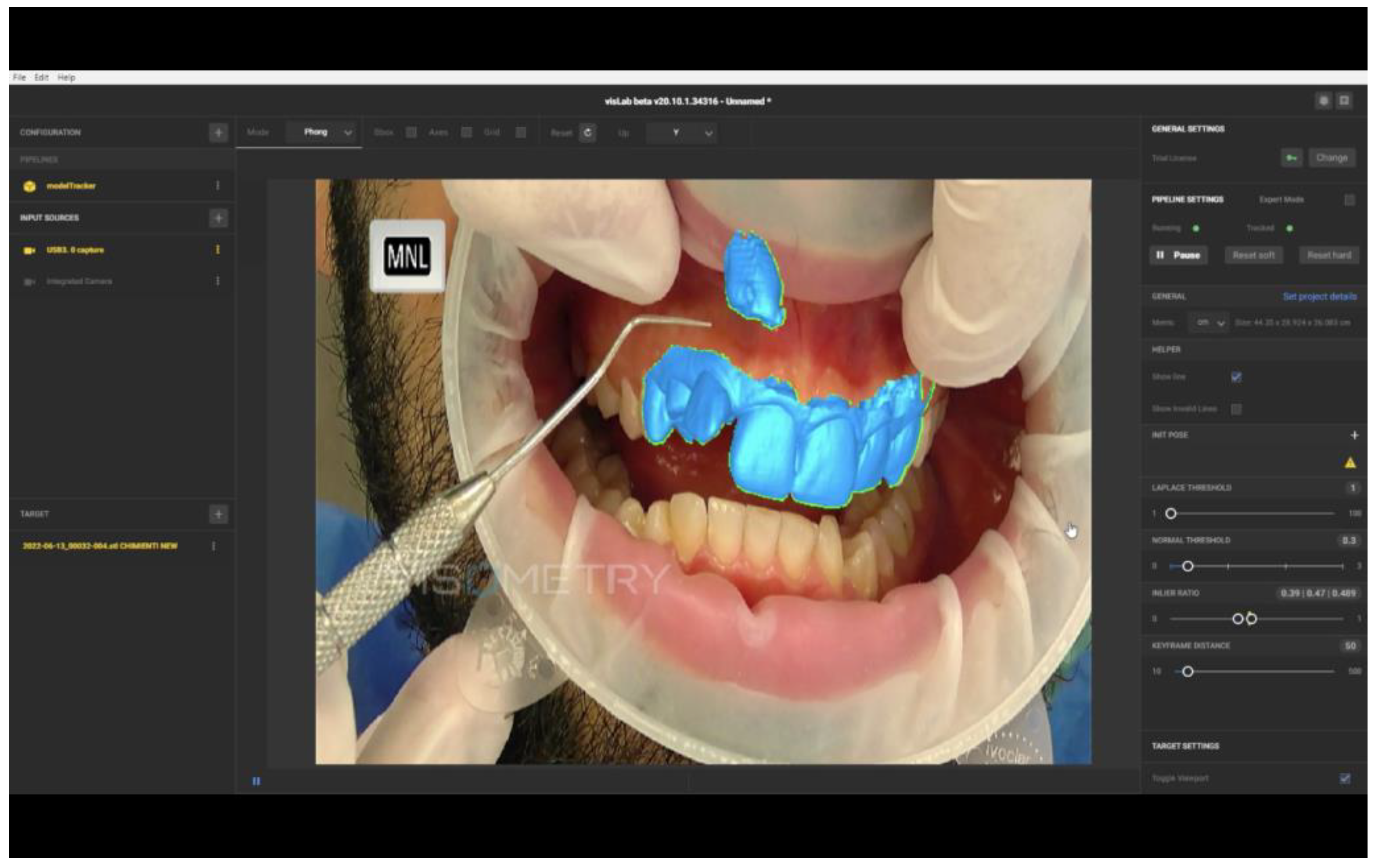

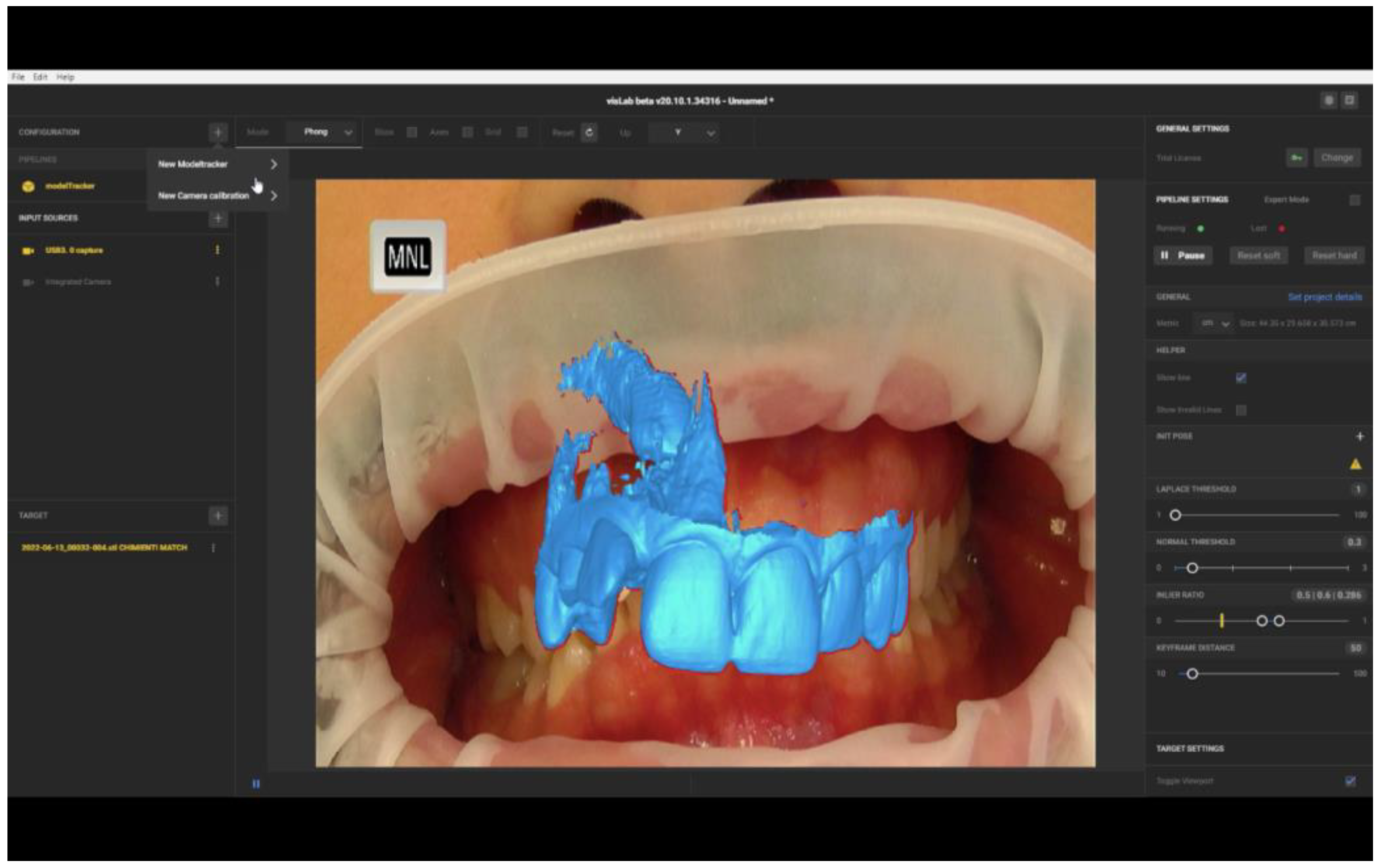

The 3D file was uploaded to the software. The patient’s lips were spread apart with a mouth opener, and the assistant, who was trained in using the software, aligned the image of the file with the video image of the patient’s teeth (Figure 9).

The software recognises the edges of the file in the images transmitted from the camera, and the border colour changes accordingly: the outline turns from red to green, signalling that the object is correctly overlaid on the video images.

By observing the real-time alignment on the PC screen, it was possible to evaluate the exact position of the canine crown and make marks on the gum to define the incision lines and the osteotomy site for the surgical exposure (Figure 10).

Margins of 3 mm from the canine crown were generally maintained for the flap design and to ensure optimal direct vision when performing the osteotomy.

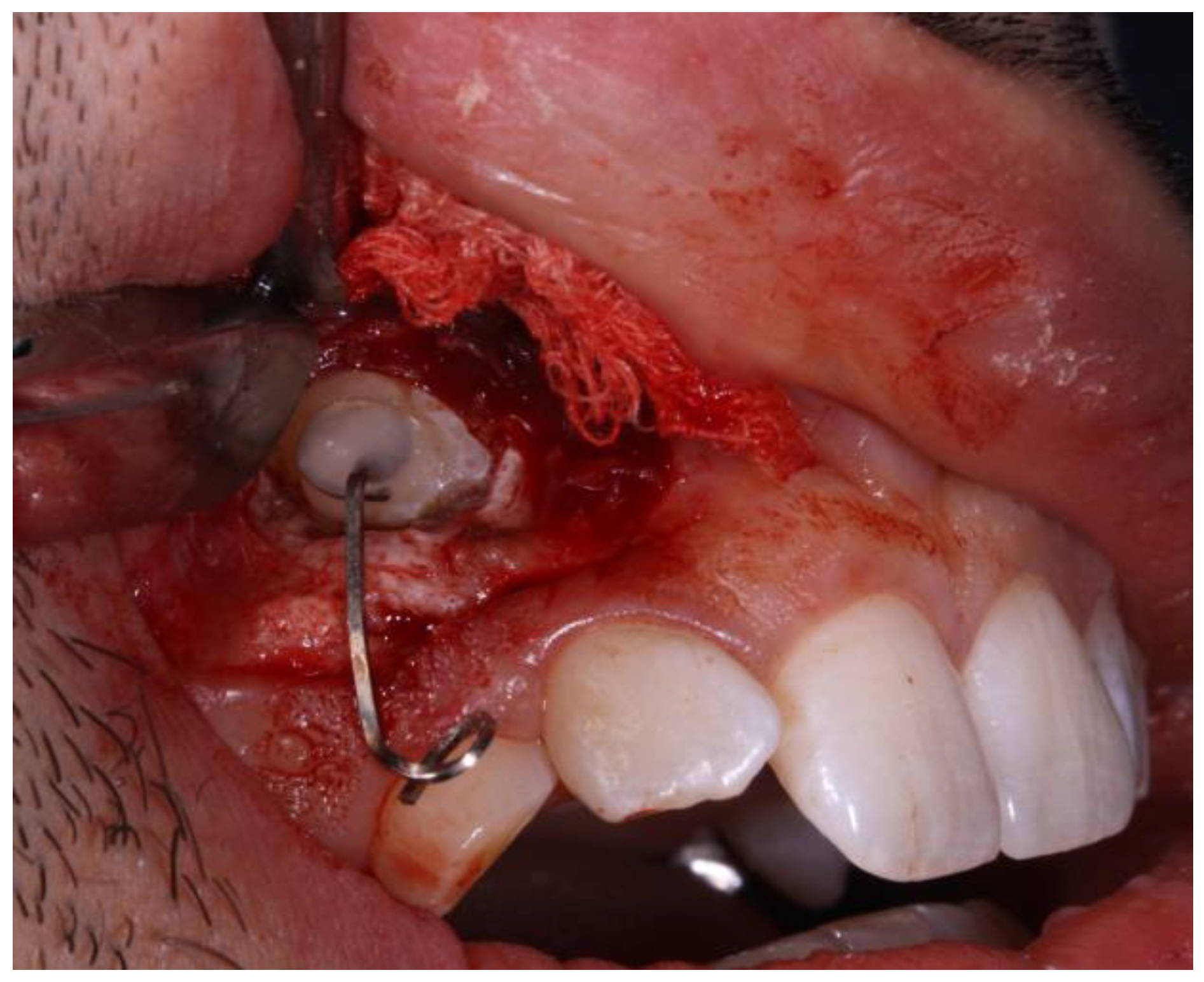

The tooth was exposed by removing the buccal cortical bone and, after haemostasis was obtained with gauze soaked in tranexamic acid, the eyelet was bonded with composite (Figure 11).

After successful wound healing, the eyelet was connected by a wire to the trans-palatal bar, which was tied to the upper first molars and fixed to the palate with mini-screws, to perform the orthodontic traction and bring the impacted canine into the dental arch.

3. Results

From the three-dimensional analysis of the CBCT, it was possible to ascertain that the impacted canine was in contact with the vestibular part of the root of the lateral incisor and the palatine part of the root of the central incisor. Considering the apical position of the crown and contact with the adjacent roots, accurate surgical exposure was essential to avoid iatrogenic damage to the adjacent teeth. Augmented Reality was, therefore, used to minimise the possibility of surgical error.

We used AR software to perform AR-assisted surgery through the real-time superimposition of the 3D file image onto the video frame acquired by the camera positioned in front of the patient’s mouth.

The final 3D model was created by combining the six anterior teeth taken from the intraoral scan with the same teeth plus the impacted canine taken from the CBCT segmentation. Preparing the files was quick and easy; the time needed to create the file uploaded into the Augmented Reality software was around 15 min.

The 3D file was uploaded into the AR software and manually positioned onto the image of the patient’s teeth.

Model tracking was straightforward to set up without prior registration of targets or surroundings. Model tracking in the software used the 3D file and its anatomical markers as references for the patient.

Automatic file recognition is very efficient if the uploaded object closely matches reality. Because the superimposed 3D object corresponded exactly to the video image, the software was able to recognise the edges of the object very quickly and tracked it very accurately, even when it moved.

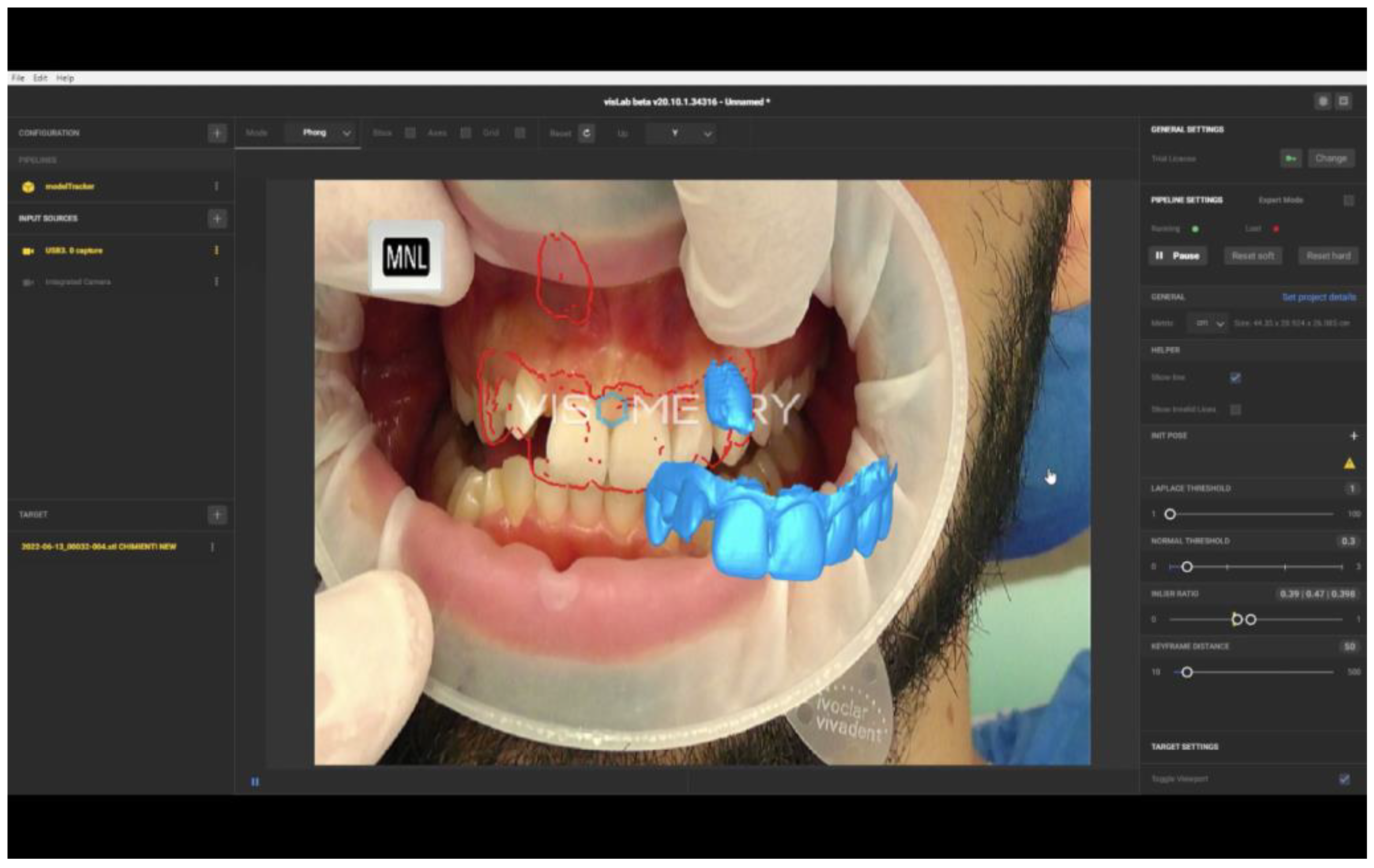

When a 3D file containing other digital information (the impacted canine and the roots of the adjacent teeth) was inserted, the software was less able to superimpose the object onto the video image. Any failure to align the 3D file with the video frame was marked by the red margin (Figure 12).

For this reason, the image of the impacted canine was reduced to just the vestibular portion of the crown to optimise recognition of the file by the software.

One drawback found during real-time video tracking was caused by displacement of the patient’s head, which can interrupt object tracking (Figure 13).

It was, therefore, necessary to manually reposition the file image onto the patient’s teeth. Furthermore, if an instrument was placed between the teeth and the camera lens, the system did not maintain the position of the 3D file, which drifted. This is an occlusion phenomenon in which the object in the foreground disturbs the algorithm so that the tracking process loses the target.

An assistant was required to reposition the file every time the software lost tracking, which made it challenging to use Augmented Reality during surgery.

The software produces a ‘line model’ that corresponds to the edges of the final virtual data. Lines in different 3D directions are important for determining a good camera position. The software quantified tracking accuracy through the inlier ratio, which expresses the proportion of edge parts of the model that were found in the camera image; values therefore range from 0 to 1. A model tracking inlier ratio of 0.39–0.48 was recorded during the process. A high inlier ratio (>0.4) means that many edges were found in the camera image, making the tracking result robust. It was possible to extend model tracking with feature tracking to enhance its robustness, achieving significantly higher inlier ratio values.
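As a worked illustration of this metric, the sketch below computes an inlier ratio as the fraction of sampled model edge parts that were found in the camera image and compares it with the 0.4 threshold mentioned above. It is a simplified reading of the definition given in the text, not the implementation used by the AR software; the function names and the representation of edge parts as a list of booleans are hypothetical.

```python
from typing import Sequence


def inlier_ratio(edge_found: Sequence[bool]) -> float:
    """Fraction of sampled model edge parts that were found in the camera image."""
    if not edge_found:
        raise ValueError("at least one edge sample is required")
    return sum(edge_found) / len(edge_found)


def is_robust(ratio: float, threshold: float = 0.4) -> bool:
    """True if the inlier ratio exceeds the threshold quoted in the text (>0.4)."""
    return ratio > threshold


# Hypothetical frame: 48 of 100 sampled edge parts were matched.
samples = [True] * 48 + [False] * 52
ratio = inlier_ratio(samples)
print(ratio)             # 0.48
print(is_robust(ratio))  # True
print(is_robust(0.39))   # False
```

Applied to the range recorded in this case, the upper value (0.48) lies above the threshold and the lower value (0.39) lies just below it.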

The clinical advantage perceived by the surgeon is proportional to the time dedicated to creating files to upload to the software. AR using anatomical references reduces the operation time and costs compared to other surgically-assisted techniques that require surgical or extraoral templates.

According to the authors, AR in oral surgery has enormous potential to support the surgeon in identifying anatomical information collected by the CBCT concerning the structures involved in the intervention. This type of AR could help in managing the exposure of impacted teeth and achieving lower invasiveness.

4. Discussion

In this pilot study, an impacted canine required surgical exposure so that it could be orthodontically aligned in the upper arch.

From the three-dimensional analysis of the CBCT, the impacted canine was in contact with the vestibular part of the root of the lateral incisor and the palatine part of the root of the central incisor [12,13,14]. Considering the apical position of the crown and contact with the adjacent roots, accurate surgical exposure was essential to avoid iatrogenic damage to the adjacent teeth [14,15,16]. Augmented Reality was, therefore, used to minimise the possibility of surgical error.

Through software that uses algorithms for object recognition and object tracking, real-time superimposition of the 3D file image onto the video frame acquired by the camera positioned in front of the patient’s mouth was possible.

The final 3D model was created by combining the six anterior teeth taken from the intraoral scan with the same teeth plus the impacted canine taken from the CBCT segmentation. The 3D file was uploaded into the software and manually positioned onto the image of the patient’s teeth. Model tracking was straightforward to set up without prior registration of targets or surroundings. Model tracking in the software used the 3D file and its anatomical markers as references for the patient.

When a 3D file that contains other digital information is inserted (in this case, the impacted canine and the roots of the adjacent teeth), the software is less able to superimpose the object onto the video image. Any failure to align the 3D file with the video frame was marked by the red margin. For this reason, the image of the impacted canine was reduced to just the vestibular portion of the crown to optimise recognition of the file by the software.

Using tooth-supported extraoral templates could aid current software in the recognition and tracking phases. A preclinical study on a phantom, in which an extraoral template was used as a recognition marker, reported encouraging preliminary results in visualising a plan for maxillofacial surgery [17].

The patient’s teeth were tracked directly and standard digital RGB cameras were adopted, with visible light as the source of information. An assistant is required to reposition the file and restart the registration every time the software loses tracking, either after fast displacement of the patient’s head or when an instrument is placed between the teeth and the camera lens, which makes it challenging to use Augmented Reality during surgery.

Displaying the images on a PC screen as the AR display could make viewing less ergonomic for the clinician: the surgeon must turn their gaze from the patient’s mouth to the PC to view the AR image. Smart glasses with Video See-Through (VST) or Optical See-Through (OST) modes could be advantageous for this technology, as the operator would not need to turn to the screen to see the software image [18,19,20].

However, direct projection into the field of view with smart glasses could be uncomfortable, given the weight and bulk of the glasses currently on the market.

In a recent review documenting the use of AR in dentistry, the authors collected some unfavourable data on the time it takes: three out of six dentists reported an increase of at least 1 h when using AR [21]. The preparation time of digital files clearly depends on numerous variables, including the software used and the references chosen for object recognition. However, preparing the files for this case report was quick and easy; the time needed to create the file uploaded into the Augmented Reality software was around 15 min. Other AR approaches need a surgical template as a marker or a 3D sensor positioned on suitable tools; using AR with anatomical markers is an economic advantage because it does not require printing or milling any templates or using many tools [21].

5. Conclusions

Using AR software, it was possible to visualise the endosseous position of the crown of an impacted tooth to plan the surgical exposure in a precise and minimally invasive manner.

Automatic detection of the 3D file to enable AR proved satisfactory for carrying out the clinical case. The clinical advantage is proportional to the time dedicated to creating the files to upload to the software. AR using anatomical references reduces the operation time and costs compared to other surgically-assisted techniques that require surgical or extraoral templates.

AR in oral surgery has enormous potential to support the surgeon in identifying anatomical information collected by the CBCT concerning the structures involved in the intervention. However, further studies are needed to quantify the accuracy and precision of this digital workflow.

The improvement of software that uses algorithms for anatomical recognition and tracking will play a pivotal role in advancing the use of Augmented Reality in oral surgery. Improving the accuracy, precision and real-time capabilities of these algorithms can significantly enhance surgical guidance and personalised treatment planning in the surgical exposure of impacted teeth.