3D Position Estimation of Objects for Inventory Management Automation Using Drones

Abstract

1. Introduction

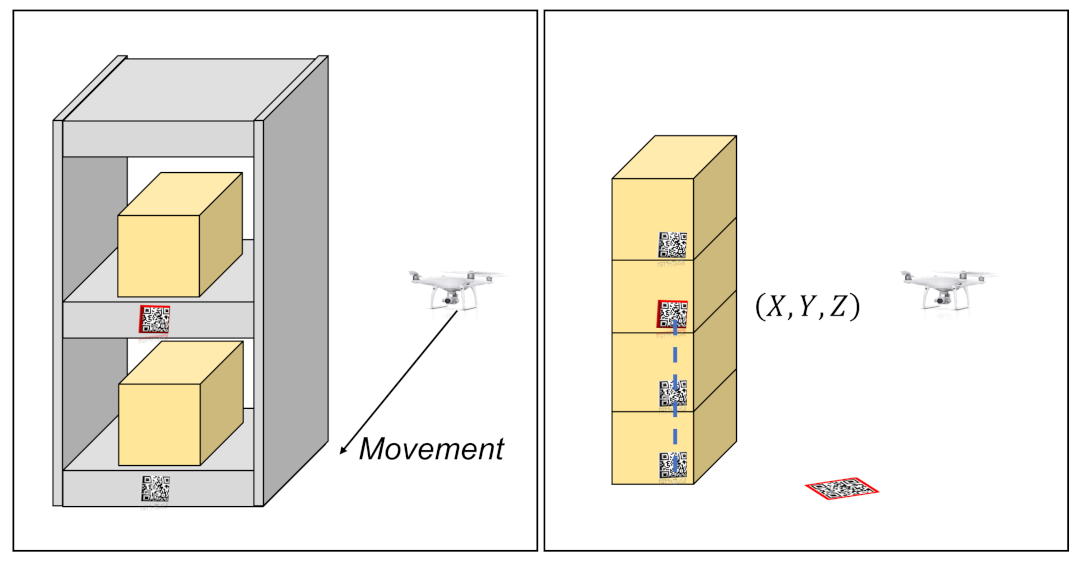

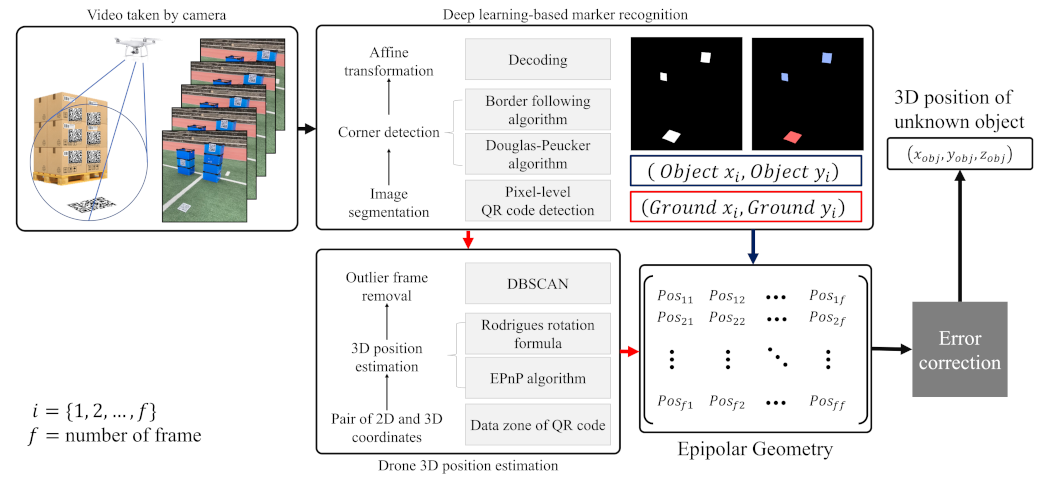

- 3D position estimation of inventory using a deep learning model and a drone. We propose a framework that estimates the 3D position of inventory items from QR codes captured by a drone-mounted monocular camera. The framework estimates the 3D position of the inventory from video frames using a deep learning model trained on a dataset we generated.

- Correction method for the estimated 3D inventory position. We improve the accuracy of 3D inventory position estimation by removing frames in which the estimated drone position is an outlier. In addition, we propose a method that corrects the estimated 3D inventory position using the geometric relationship between captured video frames.

- Performance analysis and comparison of the proposed model through an ablation study. We performed an ablation study of the proposed framework to verify that the proposed correction method improves 3D inventory position estimation. Estimation performance was evaluated on videos captured in various real environments.

2. Literature Review

2.1. Inventory Management Using Drones

2.2. Position Estimation Based on QR Code Recognition

2.3. Image Segmentation

3. Methodology

3.1. Architecture

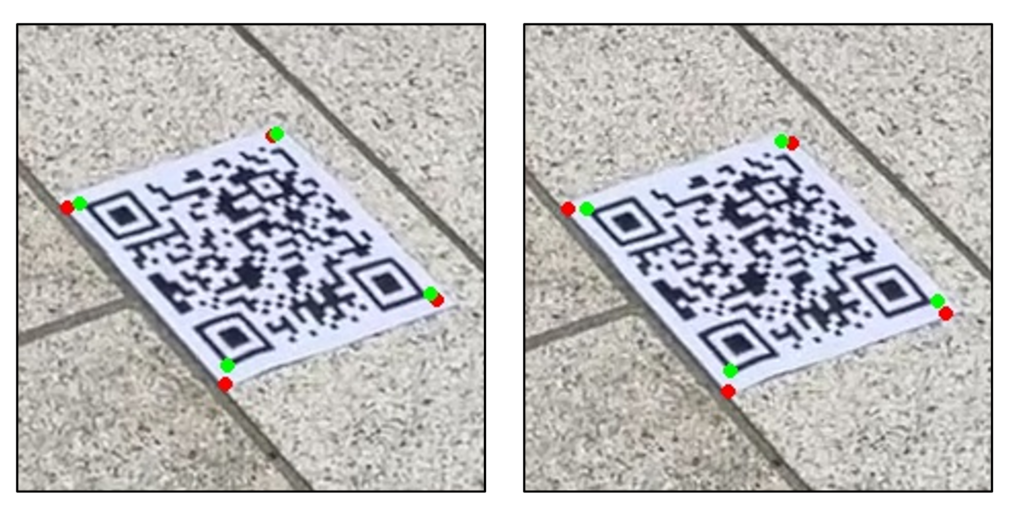

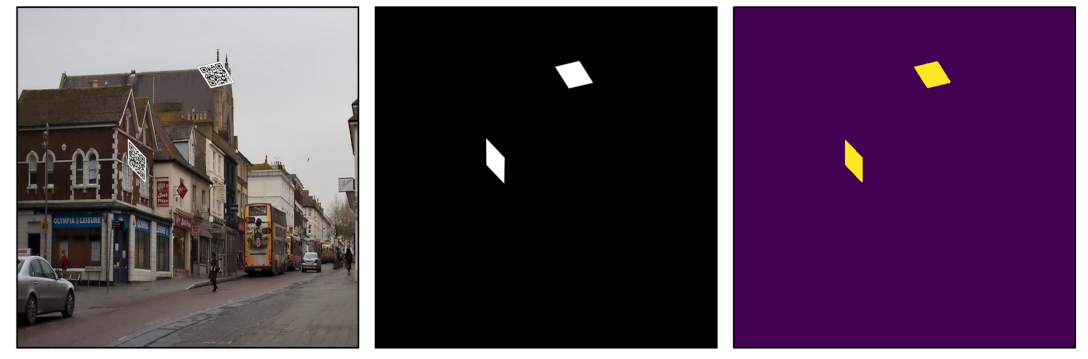

3.2. QR Code Segmentation and Decoding

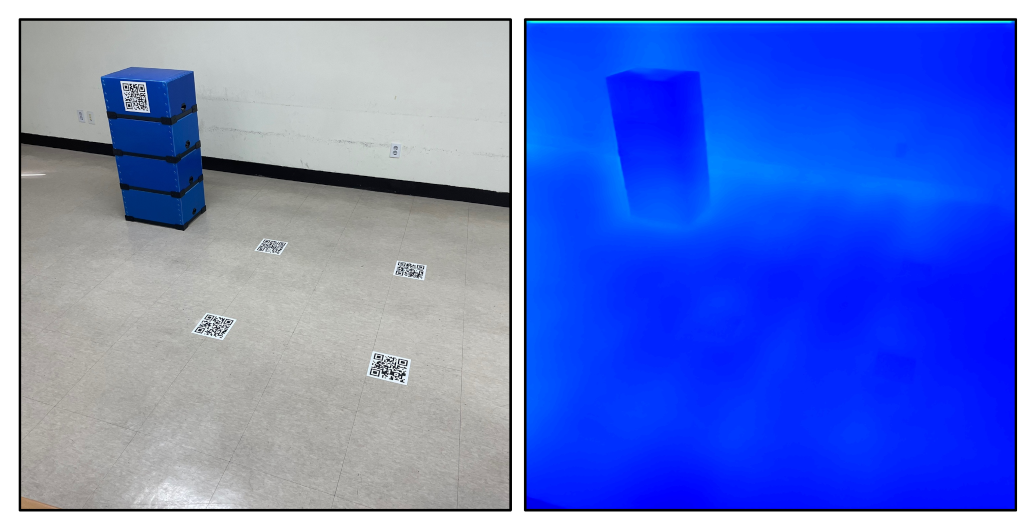

3.3. Drone 3D Position Estimation and Outlier Frame Removal

Algorithm 1 DBSCAN pseudocode

Require: set of points, D; minimum number of points, MinPts; neighborhood radius, ε

Ensure: clustered points C and un-clustered (noise) points

1: for each sample point p in D do

2:   if p is not visited then

3:     mark p as visited

4:     N ← sample points in the ε-neighborhood of p

5:     if |N| < MinPts then

6:       mark p as noise

7:     else

8:       add p to new cluster C

9:       for each sample point p′ in N do

10:        if p′ is not visited then

11:          mark p′ as visited

12:          N′ ← sample points in the ε-neighborhood of p′

13:          if |N′| ≥ MinPts then

14:            N ← N ∪ N′

15:          end if

16:        end if

17:        if p′ is not yet a member of any cluster then

18:          add p′ to cluster C

19:        end if

20:      end for

21:    end if

22:  end if

23: end for
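Algorithm 1 is standard DBSCAN. The following minimal Python sketch mirrors its steps so the expansion loop can be traced by hand. The names `dbscan`, `region_query`, `eps` (the radius) and `min_pts` (the minimum number of points), as well as the use of Euclidean distance over the per-frame estimated drone positions, are our assumptions rather than the authors' code; in practice a library implementation such as scikit-learn's `sklearn.cluster.DBSCAN(eps=..., min_samples=...)`, whose `labels_` attribute also marks noise with -1, would likely be preferred.

```python
import math
from typing import List, Optional, Sequence

NOISE = -1  # label for un-clustered points (same convention as scikit-learn)


def region_query(points: Sequence[Sequence[float]], idx: int, eps: float) -> List[int]:
    """Indices of all points within Euclidean distance eps of points[idx], itself included."""
    return [j for j, q in enumerate(points) if math.dist(points[idx], q) <= eps]


def dbscan(points: Sequence[Sequence[float]], eps: float, min_pts: int) -> List[int]:
    """Assign each point a cluster id (0, 1, ...) or NOISE, following Algorithm 1."""
    n = len(points)
    visited = [False] * n
    labels: List[Optional[int]] = [None] * n  # None = not yet a member of any cluster
    cluster_id = -1
    for i in range(n):
        if visited[i]:
            continue
        visited[i] = True
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            continue  # provisionally noise; a later cluster may still claim it as a border point
        cluster_id += 1
        labels[i] = cluster_id
        k = 0
        while k < len(neighbors):  # the list grows while we iterate (N <- N ∪ N')
            j = neighbors[k]
            if not visited[j]:
                visited[j] = True
                j_neighbors = region_query(points, j, eps)
                if len(j_neighbors) >= min_pts:  # j is a core point: expand through it
                    neighbors.extend(j_neighbors)
            if labels[j] is None:  # claim unassigned points, including earlier "noise"
                labels[j] = cluster_id
            k += 1
    return [NOISE if lab is None else lab for lab in labels]
```

For example, `dbscan([(0, 0), (0, 0.1), (0.1, 0), (10, 10)], eps=0.5, min_pts=3)` returns `[0, 0, 0, -1]`: the three nearby positions form one cluster and the distant one is noise. The neighborhood query is a brute-force O(n²) scan, which keeps the sketch short and is adequate for the number of frames in a single video.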

Algorithm 2 Outlier frame removal pseudocode

Require: video frames, V = {v_1, …, v_n}; clusters, C

Ensure: extracted frames, F

1: maxSize ← 0

2: for each cluster c in C do

3:   if |c| > maxSize then

4:     maxSize ← |c|

5:     c_max ← c

6:   end if

7: end for

8: for each video frame v_i, i = 1 to n do

9:   if the estimated drone position of v_i belongs to c_max then

10:    F ← F ∪ {v_i}

11:  end if

12: end for

13: return F
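Algorithm 2 takes the video frames and the DBSCAN clusters and returns the extracted frames. The minimal Python sketch below assumes, as the structure of the algorithm suggests (a loop over clusters that tracks a running maximum, then a filtering loop over frames), that the retained frames are those whose estimated drone position falls in the largest cluster. That interpretation, the function name `remove_outlier_frames`, and the per-frame label input (with -1 for noise, as in scikit-learn's DBSCAN) are assumptions, not the authors' implementation.

```python
from collections import Counter
from typing import List, Optional, Sequence, TypeVar

Frame = TypeVar("Frame")
NOISE = -1  # DBSCAN label for points that belong to no cluster


def remove_outlier_frames(frames: Sequence[Frame], labels: Sequence[int]) -> List[Frame]:
    """Keep the frames whose estimated drone position lies in the largest cluster.

    labels[i] is the DBSCAN cluster label of the drone position estimated from frames[i].
    """
    if len(frames) != len(labels):
        raise ValueError("expected exactly one cluster label per frame")
    cluster_sizes = Counter(lab for lab in labels if lab != NOISE)

    # Steps 1-7: find the largest cluster (the first one encountered wins a tie).
    max_size = 0
    largest: Optional[int] = None
    for cluster, size in cluster_sizes.items():
        if size > max_size:
            max_size, largest = size, cluster

    # Steps 8-12: keep only frames assigned to that cluster (none if every frame was noise).
    return [f for f, lab in zip(frames, labels) if largest is not None and lab == largest]
```

For example, with labels `[0, 0, 1, 0, -1, 1]` for six frames, cluster 0 is the largest, so only the first, second and fourth frames are kept; the noise frame and the smaller cluster are discarded as outliers.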

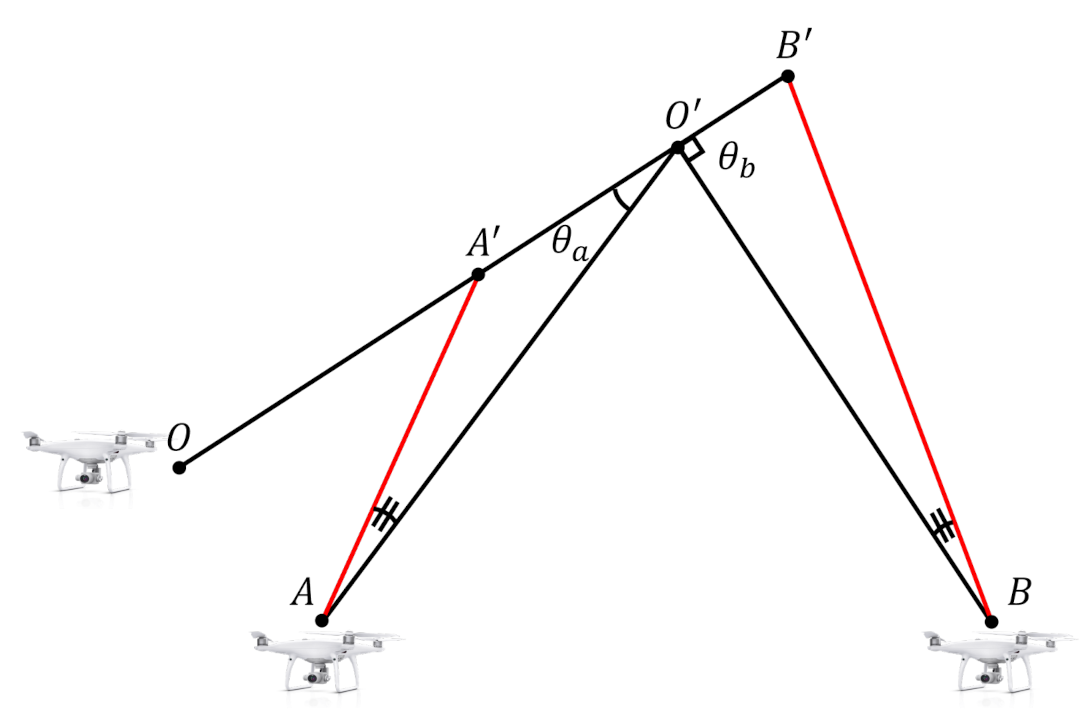

3.4. Epipolar Geometry-Based Object 3D Position Estimation with Error Correction

4. Results and Discussions

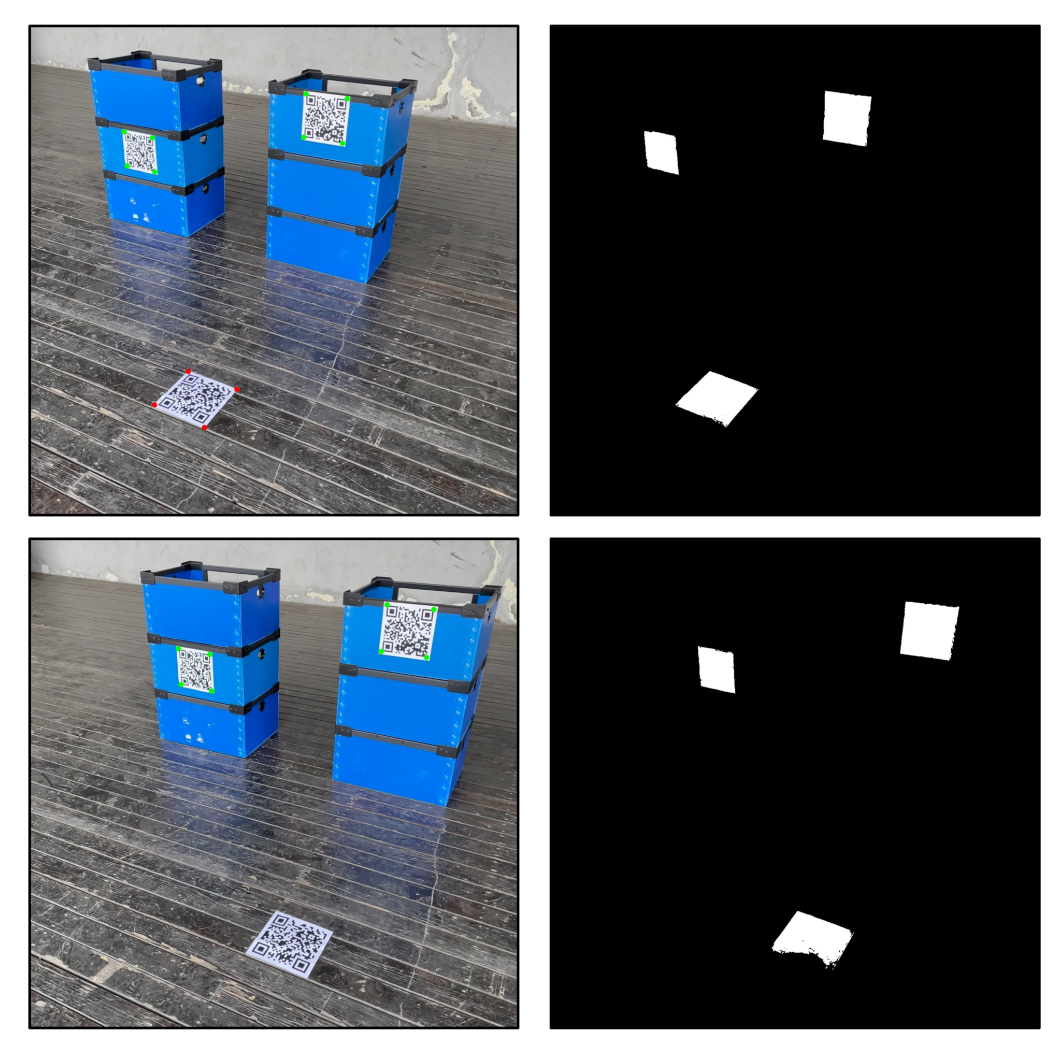

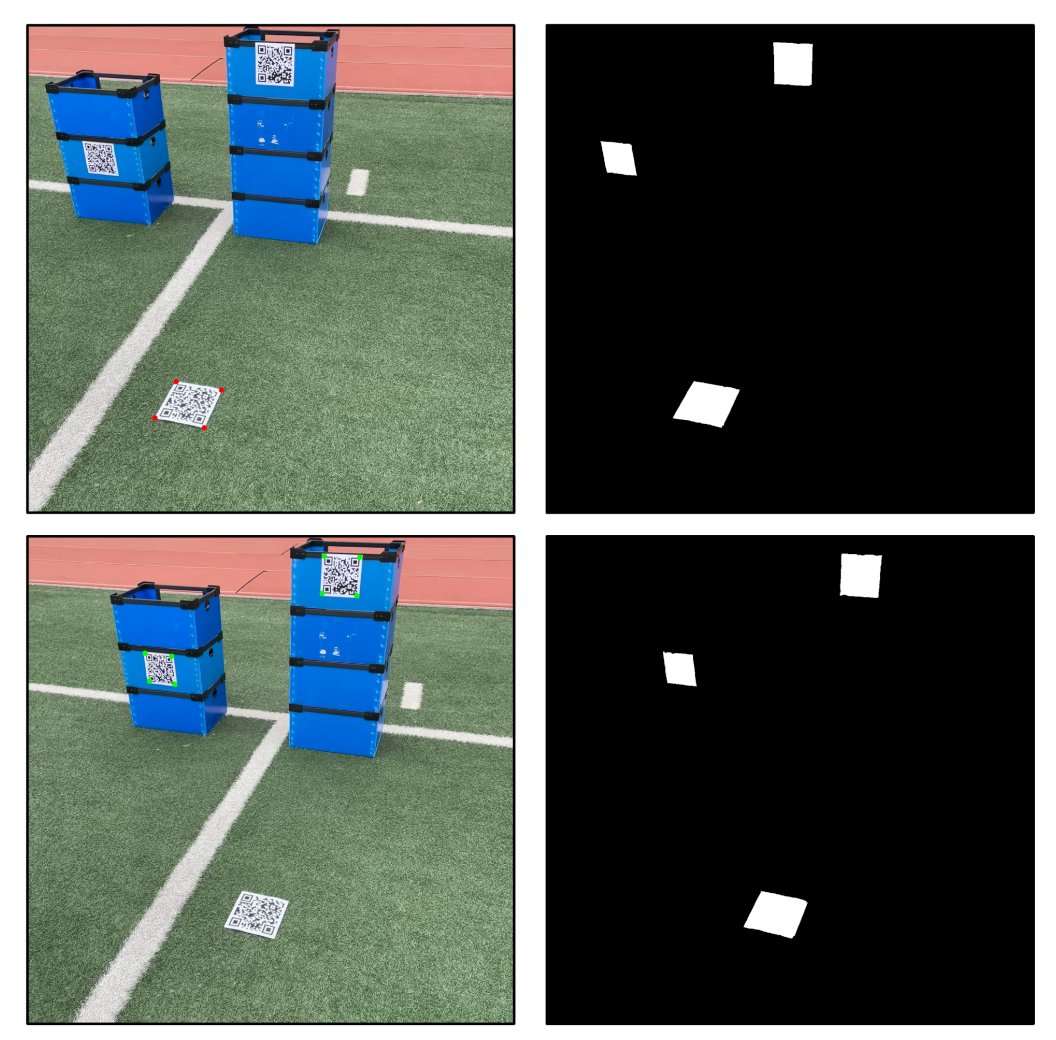

4.1. Dataset

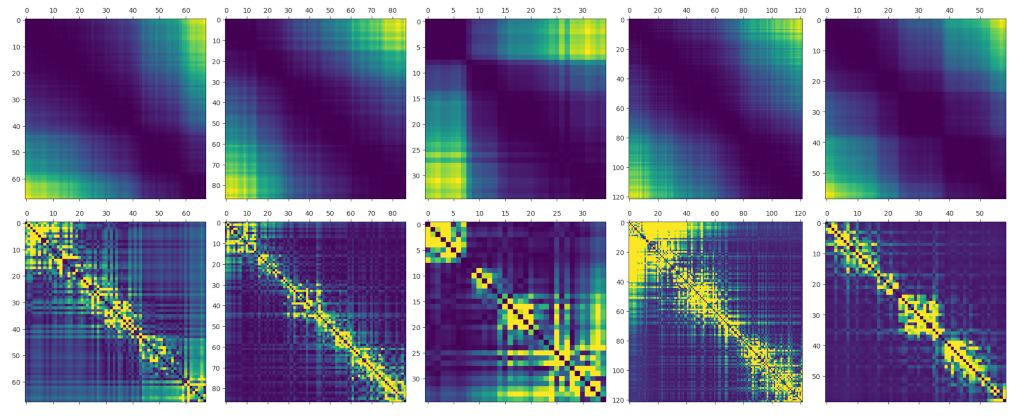

4.2. Segmentation-Based QR Code Decoding Test

4.3. Comparison of 3D Position Estimation Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Decoder | Encoder | IoU | Decoding Rate |
|---|---|---|---|
| U-Net | ResNet101 | 0.9869 | 98.71% |
| U-Net++ | ResNet101 | 0.9894 | 98.71% |
| PSPNet | ResNet101 | 0.9798 | 85.91% |
| DeepLabv3 | ResNet101 | 0.9815 | 91.77% |
| DeepLabv3+ | ResNet101 | 0.9736 | 97.82% |
| U-Net | Xception | 0.9890 | 98.81% |
| U-Net++ | Xception | 0.9898 | 99.07% |
| PSPNet | Xception | 0.9544 | 75.87% |

| Method | Quad (Mean) | Quad (SD) | Data Zone (Mean) | Data Zone (SD) |
|---|---|---|---|---|
| Base | 0.2040 | 0.1081 | 0.1440 | 0.1080 |
| Proposed | 0.1945 | 0.0935 | 0.1193 | 0.0744 |
| w/o DBSCAN | 0.2416 | 0.1487 | 0.1798 | 0.1494 |
| w/o Correction | 0.2022 | 0.0967 | 0.1463 | 0.1106 |
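As a quick arithmetic check on the ablation table, the following sketch computes the relative change in mean error of each variant against Base. It assumes the reported values are position errors (so lower is better); the dictionary layout and the function name `relative_reduction` are only for illustration.

```python
# Mean errors copied from the ablation table (Quad and Data Zone columns).
MEAN_ERROR = {
    "Base": {"Quad": 0.2040, "Data Zone": 0.1440},
    "Proposed": {"Quad": 0.1945, "Data Zone": 0.1193},
    "w/o DBSCAN": {"Quad": 0.2416, "Data Zone": 0.1798},
    "w/o Correction": {"Quad": 0.2022, "Data Zone": 0.1463},
}


def relative_reduction(method: str, baseline: str = "Base") -> dict:
    """Percent reduction in mean error of `method` versus `baseline` (positive = lower error)."""
    base = MEAN_ERROR[baseline]
    return {
        region: 100.0 * (base[region] - err) / base[region]
        for region, err in MEAN_ERROR[method].items()
    }


if __name__ == "__main__":
    for method in MEAN_ERROR:
        if method == "Base":
            continue
        r = relative_reduction(method)
        print(f"{method:<15} Quad {r['Quad']:+6.2f}%  Data Zone {r['Data Zone']:+6.2f}%")
```

Under that assumption, Proposed lowers the mean error by about 4.7% (Quad) and 17.2% (Data Zone) relative to Base, whereas dropping the DBSCAN step raises it by about 18.4% and 24.9%.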

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yoon, B.; Kim, H.; Youn, G.; Rhee, J. 3D Position Estimation of Objects for Inventory Management Automation Using Drones. Appl. Sci. 2023, 13, 10830. https://doi.org/10.3390/app131910830