Abstract

Stylish fonts are widely utilized in social networks, driving research interest in stylish Chinese font generation. Most existing methods for generating Chinese characters rely on GAN-based deep learning approaches. However, persistent issues of mode collapse and missing structure in CycleGAN-generated results make font generation a formidable task. This paper introduces a unique semi-supervised model specifically designed for generating stylish Chinese fonts. By incorporating a small amount of paired data and stroke encoding as information into CycleGAN, our model effectively captures both the global structural features and local characteristics of Chinese characters. Additionally, an attention module has been added and refined to improve the connection between strokes and character structures. To enhance the model’s understanding of the global structure of Chinese characters and stroke encoding reconstruction, two loss functions are included. The integration of these components successfully alleviates mode collapse and structural errors. To assess the effectiveness of our approach, comprehensive visual and quantitative assessments are conducted, comparing our method with benchmark approaches on six diverse datasets. The results clearly demonstrate the superior performance of our method in generating stylish Chinese fonts.

1. Introduction

Stylish Chinese fonts have become a popular means of expressing individuality, attracting significant attention from users. However, considering the extensive character repertoire in Chinese, designing each character by hand poses a significant challenge for designers. Recently, there has been increased interest in the generation of stylish Chinese fonts, making it an intriguing research area.

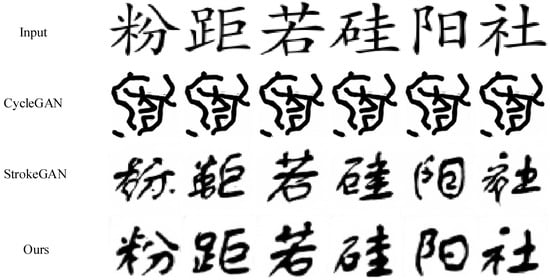

The fundamental methods of Chinese character generation primarily involve reconstruction techniques based on the structural features of characters [1]. These methods initially analyze the font’s skeleton structure to extract stroke and radical information, which is then utilized to compose new Chinese characters. However, the diverse stroke types and intricate structure of Chinese characters often necessitate manual extraction, a labor-intensive and time-consuming process. Consequently, the practical application of these methods is severely limited. With the advancements in deep learning [2,3], generative adversarial networks (GANs) [4] and their variants have become the most commonly employed approaches [5]. These methods facilitate the integration of feature extraction and generation processes, enabling end-to-end training for Chinese font generation. Among these techniques, CycleGAN [6] is a widely used approach in Chinese character generation, as it leverages unpaired training data. Nevertheless, CycleGAN often encounters challenges such as mode collapse and structural distortions in Chinese characters. To address this, StrokeGAN [7] incorporates explicit stroke feature information during the training phase of Chinese font generation. By utilizing stroke information, StrokeGAN captures the mode details of Chinese characters and preserves their integrity, effectively mitigating mode collapse. However, the distinctive characteristics of Chinese character images, coupled with their inherent structural complexity and substantial similarities, often result in a higher incidence of structural omissions during the model’s generation process, as illustrated in Figure 1.

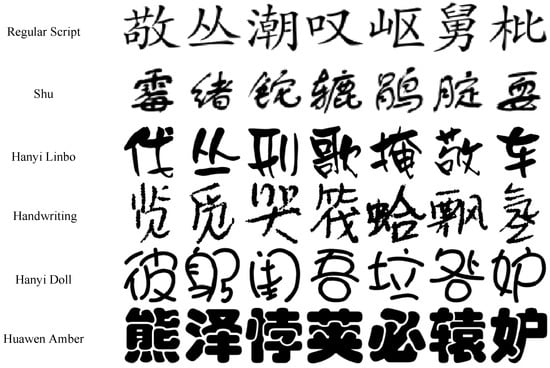

Figure 1.

CycleGAN suffers from mode collapse when generating Chinese fonts. StrokeGAN, which adds stroke encoding, largely resolves the mode collapse but still produces missing and redundant strokes. Our method preserves the integrity of Chinese characters while also mitigating mode collapse.

We propose a semi-supervised model that incorporates a small amount of paired data to achieve an effect close to that of supervised training with conventional GANs. Stroke encoding (SE) is used as additional conditional information for generating Chinese characters, addressing the structurally incomplete characters produced by CycleGAN. Unlike natural images, Chinese character images exhibit a high degree of structural complexity, and minor alterations in stroke positions can substantially change the overall structure of a character. Supervising the model with a limited amount of paired data therefore provides structural information that helps it distinguish between character images. Furthermore, we introduce an attention module, built upon the self-attention network [8] and modified to highlight the interplay between strokes as well as the interdependence between strokes and the overall structure. To guide the model in accurately capturing stroke and structure information from these character images, we incorporate two additional loss functions.

This paper makes the following contributions:

- This paper proposes a semi-supervised method for generating Chinese characters with a more complete structure. Stroke encoding is employed to capture fine-grained details, while a small quantity of paired data is integrated to offer supervised information regarding the overall structural characteristics of Chinese characters. This enables the model to effectively capture the structural intricacies of Chinese characters. To assist the model in accurately capturing stroke and structure information from these character images, we incorporate two additional loss functions.

- This paper introduces an attention module into the generator. To strengthen the model’s ability to capture the relationship between local features and overall structure, while keeping the module lightweight and Chinese character generation efficient, we modify the module in two ways: pooling operations extract information from the feature map, and the connection between the resulting features and the original features is reinforced.

- This paper compares and evaluates the model on six font datasets for generating Chinese characters, using several methods to assess the quality of the images produced by Chinese character style conversion. Quantitative results show that incorporating stroke encoding and the semi-supervised scheme alleviates mode collapse and structural errors. Furthermore, the attention module captures the interplay between individual strokes and the overall structure of Chinese characters more precisely.

The rest of this paper is organized as follows. Section 2 reviews related work in the field of Chinese character generation. Section 3 describes our model in detail. Section 4 presents a series of experiments that validate the proposed model. Section 5 concludes the paper.

2. Related Work

Over the past few years, most applications for generating Chinese fonts have been based on machine learning methods [1,9]. These methods first analyze the font’s skeleton structure to extract stroke and radical information, which is then used to compose new Chinese characters; however, they depend on labor-intensive manual extraction, which limits their practical use. With the widespread use of deep learning, numerous deep-learning approaches have emerged for generating stylish Chinese fonts [10,11,12]. For example, Tian et al. [13] utilized the pix2pix model, originally introduced by Isola et al. [3], to generate Chinese fonts. They also introduced the zi2zi method, which utilizes paired training data, where each character in the source style domain corresponds to a character in the target style domain during training. Chen et al. [14] expanded on this concept by generating Chinese characters from a specific font to multiple diverse fonts. CalliGAN [15] also took advantage of prior knowledge of Chinese character structure to handle multi-class image-to-image conversion, i.e., generation from multiple fonts to multiple fonts. Liu et al. [16] improved the quality of generated results by employing a decoupling mechanism that separates character images into a style representation and a content representation. This approach synthesized artistic text with specified properties, resulting in improved output quality. Additionally, various other methods have been proposed for Chinese font generation, all of which rely on paired training data [17,18,19,20,21,22]. With the advancement of deep learning, deep neural networks have made significant progress in extracting valuable feature information from Chinese characters, encompassing strokes, radicals, and structures. These features can be efficiently integrated into GAN models, offering vital supervisory information [23,24,25,26].

However, collecting paired training data is time-consuming and laborious. To tackle this challenge, Chang et al. [6] utilized the CycleGAN method developed by Zhu et al. [2], enabling image style transfer based on unpaired training data for Chinese font generation. Nevertheless, font style transformations often necessitate a substantial number of training samples. To overcome this limitation, Tang et al. [27] focused on generating new fonts from a limited number of examples, leveraging the combined representation of radicals, parts, and strokes of Chinese characters to depict the glyph’s style. Zhang et al. [28] utilized attention modules within style encoders to extract both shallow and deep style features. In addition, novel linguistic complexity-aware skip connections are employed to generate Chinese characters. Moreover, ZiGAN [29] was trained simultaneously on a reduced number of samples, facilitating Chinese font generation.

Regarding training methods using unpaired data, AGT-GAN [30] combined global and local glyph style modeling to generate characters, incorporating stroke-aware texture transfer and adversarial learning mechanisms to achieve improved results. The CycleGAN-based approach proposed by Chang et al. [6] is prone to the mode collapse problem, where the value domain of successive mappings becomes highly concentrated on a specific connected branch. To address this issue, StrokeGAN [7] incorporated explicit stroke feature information during the training phase of Chinese font generation. The stroke information can capture the mode information of Chinese characters and preserve the character’s integrity, effectively mitigating the mode collapse problem. Because mode collapse still occurred frequently, Zeng et al. [31] proposed a few-shot semi-supervised scheme. Nevertheless, although these methods mitigate mode collapse, issues persist concerning the overall structure of Chinese characters and stroke fluency. Unlike natural images, Chinese character images exhibit a high degree of structural complexity, and minor alterations in stroke positions can have a substantial impact on the overall structure of Chinese characters.

To address the structural incompleteness of generated Chinese characters, we propose a semi-supervised model for stylish Chinese font generation that incorporates stroke encoding and adds an attention module to the generator. The semi-supervised scheme utilizes the global structural information of Chinese characters, while stroke encoding serves as local conditional information, enabling our method to better emphasize both the local stroke features and the global structural features of Chinese characters. Two new loss functions are also integrated into the model. The attention mechanism, widely utilized in computer vision [32,33,34], improves network performance and efficiency by highlighting essential components and mitigating training-related challenges. To accurately capture the dependency between strokes and structures, several enhancements have been made to the attention module. The proposed method improves on previous approaches in the structural completeness of generated characters and can be applied to a variety of tasks related to Chinese character generation.

3. Stylish Chinese Font Generation

In this section, we present a comprehensive description of our proposed method, elucidating its key components and functionalities in detail. Our model’s fundamental concept revolves around addressing the issue of missing structures. Unlike previous research efforts that primarily emphasize increasing model complexity, our goal is to attain simpler yet more efficient supervision within a relatively straightforward GAN model, thereby optimizing its functionality.

3.1. Font Generation Model Architecture

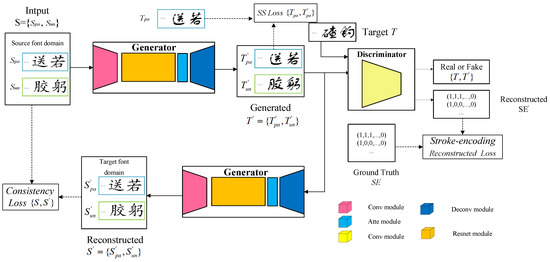

The method follows the typical conditional CycleGAN framework, with the main model structure depicted in Figure 2. First, the stroke encoding of the characters in the source domain is generated using one-bit stroke encoding. The datasets in the source font domain are then divided into partially paired data and unpaired data. These datasets S and the stroke encoding generated based on S are used as inputs to generator G in the model. Generator G generates fake characters that resemble the style of the target domain. The generated fake characters are then fed to the discriminator, along with the ground truth characters from the target domain. The discriminator not only determines the authenticity of the input data but also produces a reconstructed stroke code for the fake characters. Subsequently, the generated characters are passed to the second generator, which aims to reconstruct them as characters in the source font style. This process forms a cycle and is controlled through loss functions. Note that the discriminator in this method differs from the discriminator in traditional CycleGAN. The generator and loss functions are described in detail in later sections.

where represents the probability distribution of the source font domain, while represents the probability distribution of the SE for a given Chinese font image x.

Figure 2.

Overall framework diagram of the proposed model.
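The data flow described in this subsection can be sketched with placeholder callables. This is only an illustration under stated assumptions: the function and argument names (`forward_cycle`, `gen_s2t`, `gen_t2s`, `disc_t`) are hypothetical, the discriminator is assumed to return a pair (realness score, reconstructed stroke encoding), and the second generator is assumed to take only the generated image as input.

```python
def forward_cycle(x, se, gen_s2t, gen_t2s, disc_t):
    """One forward pass through the cycle described in the text.

    All three callables are hypothetical stand-ins for the real networks:
      gen_s2t(x, se) -> fake character in the target style
      disc_t(img)    -> (realness score, reconstructed stroke encoding)
      gen_t2s(img)   -> character mapped back to the source style (assumed signature)
    """
    fake = gen_s2t(x, se)             # source character + SE -> target-style character
    realness, se_rec = disc_t(fake)   # discriminator also reconstructs the stroke code
    cycled = gen_t2s(fake)            # second generator closes the cycle
    return {"fake": fake, "realness": realness, "se_rec": se_rec, "cycled": cycled}


# Toy usage with stub "networks" that operate on plain lists.
out = forward_cycle(
    x=[1.0, 2.0],
    se=[1, 0],
    gen_s2t=lambda x, se: [v * 2 for v in x],
    gen_t2s=lambda y: [v / 2 for v in y],
    disc_t=lambda y: (0.5, [1, 0]),
)
```

In an actual training loop, `fake`, `se_rec`, and `cycled` would feed the adversarial, SE reconstruction, and cycle consistency losses, respectively.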

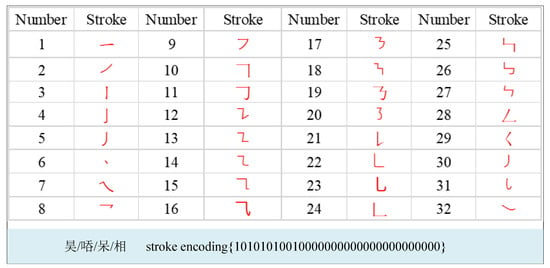

3.2. SE and Semi-Supervised Scheme

The SE serves as conditional information for the model, allowing it to focus more precisely on the local stroke information in the Chinese character structure. The stroke encoding uses a simple one-bit scheme: the position in the vector corresponding to a stroke is 1 if that stroke exists in a given Chinese character, and 0 otherwise. As shown in Figure 3, there are 32 kinds of basic strokes in Chinese characters, so the SE of each Chinese character, c, is defined as a binary vector of length 32. In this paper, the encoding acts only as an indicator function of whether a stroke is present and does not record the exact number of strokes in a given Chinese character. It is therefore worth noting that certain Chinese characters can possess the same one-bit stroke encoding but exhibit vastly different structures, as depicted in Figure 3.

As depicted in Figure 1, although StrokeGAN, which uses only stroke encoding as conditional information, alleviates the mode collapse problem of the original method, its generated characters still exhibit a significant number of missing strokes. This indicates that stroke encoding alone is insufficient conditional information for the model to generate better results. To tackle this challenge, we propose a semi-supervised scheme. Considering the unique structure of Chinese characters and the relatively small number of structural categories compared to the total number of strokes, we randomly select a percentage of commonly used Chinese characters from the available data. These paired data are then incorporated as additional supervised data to provide extra information about the global structure of the entire character image. This approach helps alleviate poor model performance when generating different characters with the same stroke encoding.

Figure 3.

The strokes above are the basic strokes that form Chinese characters. Below them are examples of Chinese characters that have distinct composition structures but share common basic strokes.
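The one-bit stroke encoding can be illustrated with a few lines of Python. This is a minimal sketch: the mapping from stroke types to indices 0 to 31 is hypothetical (the text fixes only the number of stroke types), and the two example stroke lists do not correspond to real characters.

```python
NUM_STROKES = 32  # number of basic stroke types stated in the text


def stroke_encoding(stroke_ids):
    """Return a length-32 vector of 0/1 flags marking which stroke types occur.

    `stroke_ids` holds integer indices in [0, 32); the index assignment is a
    hypothetical convention chosen for this sketch.
    """
    code = [0] * NUM_STROKES
    for idx in stroke_ids:
        if not 0 <= idx < NUM_STROKES:
            raise ValueError(f"stroke index out of range: {idx}")
        code[idx] = 1  # presence only; repeated strokes are not counted
    return code


# Two made-up characters that use the same stroke types, with different
# counts and orderings, receive identical encodings.
enc_a = stroke_encoding([0, 1, 1, 5])
enc_b = stroke_encoding([5, 0, 1])
```

Because `enc_a` and `enc_b` are equal, the encoding alone cannot tell the two characters apart, which is the limitation the semi-supervised scheme is meant to address.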

3.3. Improved Attention Module

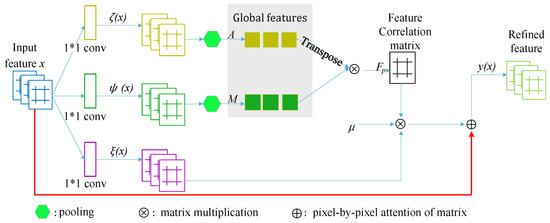

To improve stylish Chinese font style conversion and focus the network on the relationship between the position of strokes and the overall structure of Chinese characters, we incorporate an attention module in this paper. We improve the attention module by adding average pooling and maximum pooling operations that extract partial information from the feature map. To strengthen the connection with the original features, we sum the resulting non-local feature map with the original features, yielding the final perceptually enhanced feature map. The module learns by capturing the dependency between Chinese character features and stroke features, as well as the interconnections among stroke features, thereby better preserving the crucial stroke information of characters. The improved attention module has fewer parameters than the self-attention mechanism and focuses more strongly on stroke information, as illustrated in Figure 4. Details of the attention module and its refinements are presented below.

Figure 4.

Framework diagram of the improved attention module.

- Three embedded feature spaces are used in this module, each constructed by a 1 × 1 convolution. Here, n is the size of the feature map, and c is the number of channels.

- To reduce the parameters of the attention module, speed up the model, and extract feature information about Chinese characters and strokes, average pooling and maximum pooling are applied to the feature map: average pooling is applied in one of the feature spaces and maximum pooling in another.

- The feature correlation matrix F is obtained by simple matrix multiplication of the two pooled features obtained earlier. Each element of the feature correlation matrix signifies the association between an average feature and a significant (maximum) feature. In this context, each channel map can be considered a response specific to Chinese character features, and these feature responses are correlated with one another. Consequently, the feature correlation matrix carries physical meaning: row i represents the statistical correlation between channel i and all other channels. By exploiting these inter-dependencies, we can highlight the interdependent feature maps.

- The feature map m is derived by matrix multiplication between the feature correlation matrix and the third feature space. This matrix measures the response of each location to the overall characteristics present in the Chinese character image, which links the strokes to the overall structure of the Chinese character image. The mechanism augments the channel features at every position by aggregating all channels and integrating the inter-dependencies among them. Consequently, with the attention module included in the model, the feature map can go beyond its local receptive field and discern the holistic structural characteristics of the Chinese character by gauging the response to global features.

- So that the attention module also attends to the original features, and inspired by the residual network, we sum the original feature x with the non-local feature map m to yield the perceptually enhanced features. To keep the attention module from affecting the pre-trained model while regulating the relative weight of the two features, we apply a coefficient to the non-local feature map. This coefficient is adjusted automatically as the model is trained.

Because Chinese character images differ from natural images, we modified the attention module accordingly. The module attends well to the features of Chinese characters while reducing the number of module parameters and improving the efficiency of model generation. Moreover, it has been shown in the literature [35] that nonlinear operations are not essential in attention modules.
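The steps above can be sketched in NumPy for a single feature map of shape (c, h, w). This is an illustrative reading of the description, not the authors' implementation. The names `w_avg`, `w_max`, `w_val`, and `gamma` are hypothetical, the 2 × 2 pooling window is an assumption (the text does not state the window size), and the 1 × 1 convolutions are assumed to keep the channel count at c.

```python
import numpy as np


def conv1x1(x, weight):
    """1 x 1 convolution: mixes channels independently at every position."""
    return np.einsum("oc,chw->ohw", weight, x)


def pool2x2(x, mode):
    """Non-overlapping 2 x 2 pooling over the spatial axes of a (c, h, w) array."""
    c, h, w = x.shape
    blocks = x.reshape(c, h // 2, 2, w // 2, 2)
    if mode == "avg":
        return blocks.mean(axis=(2, 4))
    return blocks.max(axis=(2, 4))


def improved_attention(x, w_avg, w_max, w_val, gamma):
    """Sketch of the pooled, softmax-free attention described in the text.

    x: feature map (c, h, w) with even h and w; each weight is (c, c).
    gamma: coefficient on the non-local branch (learnable in a real model).
    """
    c, h, w = x.shape
    avg_feat = pool2x2(conv1x1(x, w_avg), "avg").reshape(c, -1)  # (c, n/4)
    max_feat = pool2x2(conv1x1(x, w_max), "max").reshape(c, -1)  # (c, n/4)
    val = conv1x1(x, w_val).reshape(c, -1)                       # (c, n)
    corr = avg_feat @ max_feat.T         # (c, c) channel correlation matrix F
    m = (corr @ val).reshape(c, h, w)    # non-local feature map m
    return x + gamma * m                 # residual sum with the original features


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8))
weights = [rng.standard_normal((4, 4)) for _ in range(3)]
out_identity = improved_attention(x, *weights, gamma=0.0)
out_attended = improved_attention(x, *weights, gamma=0.1)
```

With `gamma` set to zero the module returns its input unchanged, which is one way a newly added attention branch can leave a pre-trained model unaffected at the start of training. The zero initial value is an assumption, since the text only says that the coefficient is adjusted during training.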

3.4. Training Loss for Our Model

The training loss for our method is composed of four components: adversarial loss, cycle consistency loss, semi-supervised loss, and SE reconstruction loss.

3.4.1. Adversarial Loss

The definition of adversarial losses typically follows the following formulation:

where refers to the synthetic character in the target style produced by the generator using the input character x, while the discriminator’s role is to discern the authenticity of the generated synthetic character.
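As a point of reference, the adversarial loss of a conditional GAN is commonly written in the form below. The notation is an assumption made for illustration: $G$ denotes the source-to-target generator, $D$ the real/fake output of the discriminator, $x$ a source character with stroke encoding $c$, and $y$ a real character from the target domain.

$$
\mathcal{L}_{adv}(G, D) = \mathbb{E}_{y}\left[\log D(y)\right] + \mathbb{E}_{x, c}\left[\log\left(1 - D(G(x, c))\right)\right]
$$

In this form the discriminator tries to maximize the expression, while the generator tries to minimize it.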

3.4.2. Cycle Consistency Loss

The cycle consistency loss ensures that the stroke labels associated with the generated characters from the generator can accurately reconstruct the characters in the source font domain. More precisely, this component of the loss can be represented as follows:

where denotes the character generated by the generator G, while corresponds to the character after undergoing reconstruction by the generator.
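A cycle consistency loss of this kind is commonly written with an L1 penalty, as sketched below. The notation is assumed for illustration: $G$ maps a source character $x$ with stroke encoding $c$ to the target style, and $G'$ is the second generator, which maps the result back to the source style. The L1 norm follows the usual CycleGAN convention and is an assumption here.

$$
\mathcal{L}_{cyc}(G, G') = \mathbb{E}_{x, c}\left[\left\lVert x - G'\left(G(x, c)\right)\right\rVert_{1}\right]
$$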

3.4.3. SE Reconstruction Loss

SE was not emphasized in the previous two losses. As previously mentioned, strokes are the fundamental building blocks of Chinese characters, and the absence of even a single stroke significantly compromises the character’s integrity. Consequently, strokes play a crucial role in model training as essential information. To be more specific, this component of the loss can be defined as follows:

where refers to the SE generated by the discriminator upon receiving the generated fake character . This loss is employed to guide the network model in accurately reconstructing the stroke encoding, thereby retaining the basic information of Chinese characters more effectively.
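One plausible form of the SE reconstruction loss is sketched below. The notation is assumed for illustration: $D_{se}$ denotes the stroke-encoding output of the discriminator, $G(x, c)$ is the fake character generated from source character $x$ with stroke encoding $c$, and the L2 norm is an illustrative choice.

$$
\mathcal{L}_{se}(G, D_{se}) = \mathbb{E}_{x, c}\left[\left\lVert c - D_{se}\left(G(x, c)\right)\right\rVert_{2}\right]
$$

The loss is zero only when the stroke encoding recovered from the generated character matches the encoding of the input character.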

3.4.4. Semi-Supervised Loss

In this paper, the semi-supervised scheme is used to enhance the learning of the model, and the corresponding semi-supervised loss can be expressed as follows:

where and y are the characters generated by the semi-supervised training samples through the generator and the ground truth characters in the target domain, respectively.
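A semi-supervised loss of this kind can be sketched as a pixel-wise distance over the paired subset. The notation is assumed for illustration: $\mathcal{P}$ is the set of paired samples, each consisting of a source character $x$, its stroke encoding $c$, and the ground truth target character $y$, and the L1 norm is an illustrative choice.

$$
\mathcal{L}_{semi}(G) = \mathbb{E}_{(x, c, y) \in \mathcal{P}}\left[\left\lVert y - G(x, c)\right\rVert_{1}\right]
$$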

3.4.5. Total Training Loss

Combining several of these losses, the full objective of our model is as follows:

where , , and are tuning hyper-parameters. Considering the previously defined total loss , the objective is:
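For orientation, a weighted sum of the four losses and the resulting objective could take the form below. The symbols $\lambda_{cyc}$, $\lambda_{se}$, and $\lambda_{semi}$ are hypothetical names for the three tuning hyper-parameters, and the grouping of the players in the min-max problem is an assumption: the real/fake output $D$ of the discriminator maximizes, while the generators $G$, $G'$ and the stroke-encoding output $D_{se}$ minimize.

$$
\mathcal{L} = \mathcal{L}_{adv} + \lambda_{cyc}\,\mathcal{L}_{cyc} + \lambda_{se}\,\mathcal{L}_{se} + \lambda_{semi}\,\mathcal{L}_{semi},
\qquad
\min_{G,\, G',\, D_{se}} \; \max_{D} \; \mathcal{L}
$$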

4. Experiments

All experiments in this section were performed in the PyTorch environment for Linux with an Intel® Xeon® Platinum 8255C 12-core processor and a GeForce RTX 2080Ti GPU.

4.1. Experimental Setting

4.1.1. Data Preparation

Stylish art fonts vary widely in style and, unlike calligraphy fonts, do not fall into fixed categories. To verify the generalization ability of the model, the experimental study in this paper uses six characteristic fonts, which fall into three categories: a handwritten font, Regular Script, and four personalized art fonts. The handwritten font is among the most readily available. These stylish art fonts are frequently used in daily life and have unique, distinctive styles. Figure 5 illustrates the different styles of fonts used in this study. The first row represents the standard print font, while the next five rows show different styles of personalized fonts.

Figure 5.

The six fonts above are the dataset used in this paper. Each font has a different style and, in general, reflects the individuality and exquisiteness of artistic fonts.

The Handwriting dataset is built from CASIA-HWDB1.1 and contains 3755 characters. Regular Script is used as the standard font and contains 3757 characters. The other font datasets are those used in StrokeGAN: Huawen Amber, Shu, Hanyi Doll, and Hanyi Lingbo, which contain 3596, 2595, 3213, and 3673 characters, respectively. For all experiments, the training set comprises 80% of the samples, and the remaining 20% is allocated to the test set. Given the difficulty of gathering paired datasets and considering the distinctive structure of Chinese radicals, we used 20% of the data as paired semi-supervised samples. Figure 6 shows the five personalized fonts generated using Regular Script as the source domain.
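The split described above can be sketched as follows. This is an illustration only: the function name `split_dataset` is hypothetical, and the paired semi-supervised fraction is assumed to be taken from the training set, since the text does not say whether the 20% refers to the training set or to the full dataset.

```python
import random


def split_dataset(chars, train_frac=0.8, paired_frac=0.2, seed=0):
    """Split characters into (paired, unpaired, test) subsets.

    train_frac follows the 80/20 train/test split in the text; paired_frac is
    applied to the training portion, which is an assumption of this sketch.
    """
    rng = random.Random(seed)       # fixed seed for a reproducible split
    shuffled = list(chars)
    rng.shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    train, test = shuffled[:n_train], shuffled[n_train:]
    n_paired = round(len(train) * paired_frac)
    paired, unpaired = train[:n_paired], train[n_paired:]
    return paired, unpaired, test


# Toy usage with 1000 placeholder character ids.
paired, unpaired, test = split_dataset(range(1000))
```

The paired subset is the only part of the training data for which ground truth target characters would be supplied to the semi-supervised loss.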

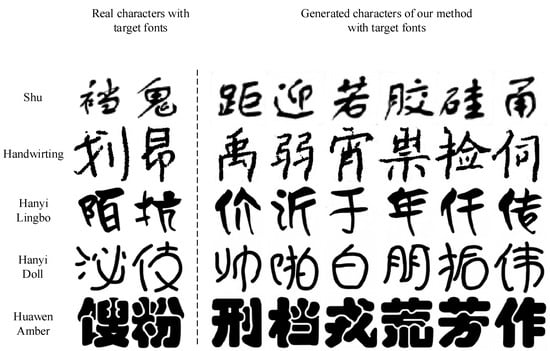

Figure 6.

The generated results of our method on different fonts.

4.1.2. Network Framework and Optimizer

The method uses two generators and two discriminators with the same network structure as the CycleGAN used for Chinese font generation. The two generators have identical structures, as do the two discriminators. The down-sampling module in the generator contains 2 convolutional layers. The residual network contains 9 residual modules, each containing 2 convolutional layers. The up-sampling module contains 2 de-convolutional layers. The discriminator network closely resembles PatchGAN [4], comprising 6 hidden convolutional layers and 2 convolutional layers in the output module. To ensure stability, batch normalization [36] is applied to all layers. The widely used Adam optimizer [37] was employed with parameters (β1, β2) = (0.5, 0.999) to optimize the model in our stylish Chinese font experiments. For the cycle consistency loss, a penalty parameter of 10 was set, while the SE reconstruction loss adopted a penalty parameter of 0.18. The hyper-parameters were tuned manually. The architecture of the generator is presented in Table 1.
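The reported settings can be gathered into a small configuration object, as in the sketch below. The class and field names are hypothetical. The Adam parameters, the two penalty parameters, and the number of residual modules come from the text, while the weight on the semi-supervised loss is not reported there and is set to 1.0 purely as a placeholder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    adam_betas: tuple = (0.5, 0.999)  # Adam parameters reported in the text
    lambda_cyc: float = 10.0          # cycle consistency penalty (from the text)
    lambda_se: float = 0.18           # SE reconstruction penalty (from the text)
    lambda_semi: float = 1.0          # ASSUMED placeholder; not reported in the text
    n_res_blocks: int = 9             # residual modules in the generator (from the text)


def total_loss(l_adv, l_cyc, l_se, l_semi, cfg):
    """Weighted sum of the four loss terms using the penalties in cfg."""
    return (
        l_adv
        + cfg.lambda_cyc * l_cyc
        + cfg.lambda_se * l_se
        + cfg.lambda_semi * l_semi
    )


cfg = TrainConfig()
example = total_loss(l_adv=1.0, l_cyc=0.5, l_se=2.0, l_semi=0.25, cfg=cfg)
```

Keeping the penalties in one frozen object makes it harder to change a weight accidentally between runs when tuning by hand.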

Table 1.

The architecture of the generative network.

4.1.3. Evaluation Metrics

To ensure the reliability and validity of our evaluation, we employed two commonly used objective metrics. The first is content accuracy [2], a widely adopted measure of the quality of generated art fonts. In our case, we used a pre-trained HCCG-GoogLeNet [38] model to compute the recognition accuracy of the generated stylish Chinese characters. The second is the stroke error: we calculate the absolute value of the difference between the number of strokes in the generated character image and the actual number of strokes in that character, and divide this value by the actual number of strokes. The stroke error therefore signifies the proportion of incorrect strokes relative to the total number of strokes.

Thus, a smaller stroke error means better stroke retention in the character. Together, these evaluation metrics allow us to assess the quality and fidelity of the generated Chinese characters.
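Both metrics reduce to short computations, sketched below. The function names are hypothetical, the stroke counts are assumed to be supplied by some external stroke counter (the text does not say how strokes in a generated image are counted), and the predicted labels are assumed to come from a pre-trained recognizer.

```python
def stroke_error(n_generated, n_true):
    """|generated stroke count - true stroke count| / true stroke count."""
    if n_true <= 0:
        raise ValueError("n_true must be positive")
    return abs(n_generated - n_true) / n_true


def content_accuracy(predicted_labels, true_labels):
    """Fraction of generated characters that the recognizer labels correctly."""
    if len(predicted_labels) != len(true_labels) or not true_labels:
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(p == t for p, t in zip(predicted_labels, true_labels))
    return correct / len(true_labels)


err_missing = stroke_error(7, 8)     # one of eight strokes missing
err_redundant = stroke_error(10, 8)  # two redundant strokes
acc = content_accuracy(["永", "木", "水"], ["永", "本", "水"])
```

Note that the stroke error treats missing and redundant strokes symmetrically, so it measures how far the stroke count deviates but not in which direction.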

4.2. Experimental Results

The validity of our model is examined in the first part of the experiments. The improved attention module and the combination of the semi-supervised scheme and SE are then verified through ablation experiments. The effectiveness of our model is further substantiated through experimental comparisons with StrokeGAN, CycleGAN, and zi2zi (which relies on paired training data).

4.2.1. Effectiveness of Our Model

We proposed a semi-supervised model to alleviate the incomplete character structures that appear in the results of models trained on unpaired data. The attention module was specifically enhanced to preserve character integrity and stroke smoothness. To validate our approach, we conducted experiments using six different fonts, designating the Regular Script font as the source font and the remaining five fonts as target style fonts.

The experimental outcomes, depicted in Figure 6, illustrate the success of our proposed method in generating stylish Chinese fonts. The results demonstrate significant advancements in producing diverse styles of artistic Chinese characters that closely resemble the target fonts in terms of style and font structure. Despite the presence of some hyphenation in the target domain, the readability of the generated characters remains unaffected. Overall, our proposed model exhibits clear advantages in generating the five fonts considered in this study. It effectively preserves stroke details while acquiring the stylistic attributes of the target domain, resulting in improved accuracy and realism of the generated characters. These outcomes serve as compelling evidence of the efficacy of our method in producing stylish Chinese fonts.

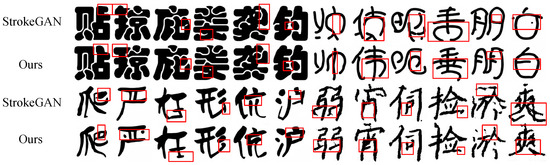

StrokeGAN was used as the baseline for comparison with our proposed method, and the generated results are depicted in Figure 7. Notably, the Handwriting font generated by StrokeGAN exhibited noticeable stroke omissions and noise. Similarly, the Hanyi Doll font generated by StrokeGAN suffered from significant stroke errors, leading to illegible characters. Although the StrokeGAN-generated Huawen Amber font had a small amount of stroke redundancy, it did not significantly affect character recognition. However, the other two generated fonts exhibited excessive stroke redundancy and noise, resulting in unreadable characters overall. In contrast, our approach maintained the overall structure of Chinese characters while enhancing the coherence and fluidity of the strokes within the characters. Table 2 compares the performance of our method and StrokeGAN in generating stylish fonts, where our method substantially outperforms StrokeGAN.

Figure 7.

Comparison of our method with StrokeGAN for generating results on different fonts. The red rectangles mark some of the contrasts in the generated characters.

Table 2.

Comparison of the performance of our method and StrokeGAN in different art font generation tasks.

Furthermore, we compared our proposed method with a model that includes a self-attention module to validate our improvements to the attention module, as shown in Figure 8. Several conclusions can be drawn from the comparison. The model incorporating the self-attention module generates characters that closely resemble the target domain, with high readability and retention of most strokes. However, it still suffers from missing strokes. In contrast, our method performs better at Chinese font style transfer and generates more realistic Chinese characters. Table 3 compares the performance of our method with that of the model integrating the self-attention module in Chinese font generation. The results further highlight the advantages of our method: it achieves more accurate Chinese font generation and alleviates the issue of missing strokes. These findings support the efficacy of our proposed approach in generating stylish Chinese fonts.

Figure 8.

Comparison of the results generated by the proposed method and by a model with an added self-attention mechanism. The red rectangles mark images that are structurally incomplete or have incorrect strokes.

Table 3.

Numerical comparison between the proposed method and the model with an added self-attention mechanism.

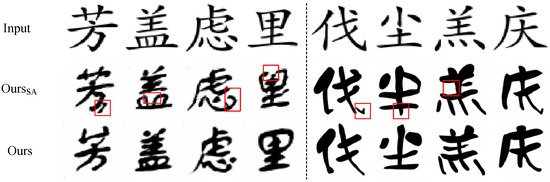

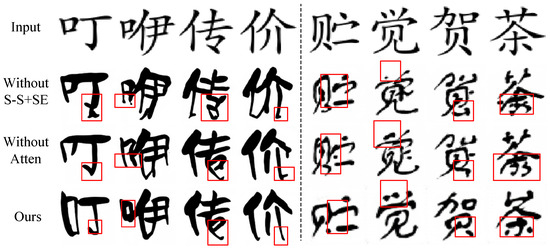
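For readers unfamiliar with the baseline in this comparison, the following is a minimal sketch of a generic self-attention operation of the kind used in self-attention GANs. It is not the improved attention module proposed in this paper. As a simplifying assumption, the learned projections of a real module are replaced by the identity, and the names `attention_weights`, `self_attention`, and `gamma` are illustrative only.

```python
import math
from typing import List

Matrix = List[List[float]]  # one row of channel values per spatial position


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(features: Matrix) -> Matrix:
    """Row j holds how strongly position j attends to every position i.

    Assumption: queries and keys are the raw features (identity projections).
    """
    scores = [
        [sum(a * b for a, b in zip(query, key)) for key in features]
        for query in features
    ]
    return [softmax(row) for row in scores]


def self_attention(features: Matrix, gamma: float) -> Matrix:
    """Return gamma * attended_features + features (residual connection)."""
    weights = attention_weights(features)
    channels = len(features[0])
    output = []
    for j, weight_row in enumerate(weights):
        attended = [
            sum(w * features[i][c] for i, w in enumerate(weight_row))
            for c in range(channels)
        ]
        output.append([gamma * a + x for a, x in zip(attended, features[j])])
    return output


if __name__ == "__main__":
    toy = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 positions, 2 channels
    print(attention_weights(toy))
    print(self_attention(toy, gamma=0.5))
```

Because every position attends to every other position, distant strokes can influence each other. With `gamma` set to zero the output equals the input, so the attention branch only contributes once its weight moves away from zero.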

4.2.2. Ablation Experiments

Taking the conversion of the Regular Script font into the Hanyi Doll and Shu fonts as an example, we compared our full method with two reduced variants: one without the attention module and one without the combination of the semi-supervised scheme and stroke encoding. The results of the ablation experiment are shown in Figure 9, and several conclusions can be drawn from them. First, without the semi-supervised scheme and stroke encoding, the model struggled to capture both the local stroke information and the global structural features of Chinese characters. Its outputs showed severe stroke misalignment and missing strokes, which made the generated characters hard to read. Second, without the attention module, the generated results had fewer stroke errors and a more complete font structure than those produced without the semi-supervised scheme and stroke encoding, but they still contained considerable noise and many stroke errors. With both components included, the model generated more artistic and accurate fonts with less stroke redundancy. This supports combining the attention mechanism with the semi-supervised scheme and stroke encoding. The numerical results in Table 4 likewise underline the importance of the semi-supervised scheme and the attention module and illustrate the effectiveness of the method in generating artistic Chinese fonts.

Figure 9.

Results of the ablation experiments on our model. Red rectangles mark the differences between the results generated in the different experiments.

Table 4.

Comparison of numerical results of ablation experiments.

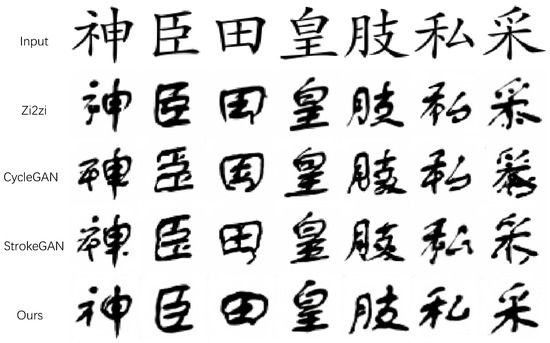
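The three configurations compared in this ablation can be written down explicitly. The sketch below is only an illustration of the experimental design described above. The class `AblationVariant`, its field names, and the helper functions are hypothetical and do not come from the authors' code.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AblationVariant:
    """One model configuration in the ablation study (hypothetical names)."""
    name: str
    use_attention: bool
    # The semi-supervised scheme and stroke encoding are removed together.
    use_semi_supervised_stroke: bool


def ablation_variants() -> List[AblationVariant]:
    """Return the two reduced variants and the full model."""
    return [
        AblationVariant("w/o semi-supervised scheme + stroke encoding", True, False),
        AblationVariant("w/o attention module", False, True),
        AblationVariant("full model", True, True),
    ]


def removed_components(variant: AblationVariant) -> List[str]:
    """List the components that a variant leaves out."""
    removed = []
    if not variant.use_attention:
        removed.append("attention module")
    if not variant.use_semi_supervised_stroke:
        removed.append("semi-supervised scheme + stroke encoding")
    return removed


if __name__ == "__main__":
    for variant in ablation_variants():
        print(variant.name, "->", removed_components(variant) or "nothing removed")
```

Each reduced variant removes exactly one component group, so any change in the metrics relative to the full model can be attributed to that group.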

4.2.3. Comparison with Advanced Methods

In this experimental section, we compared our proposed method with several alternative generative models. Since the zi2zi method relies on paired datasets, we manually constructed two paired datasets for this paper. Because of the large number of Chinese characters, acquiring paired training data was challenging. Therefore, in these experiments, we focused solely on the task of generating Regular Script and Shu font styles from standard print. Figure 10 shows several examples of the generated artistic characters. The CycleGAN model produced notable stroke errors. Although StrokeGAN mitigated mode collapse and preserved stroke information, it still exhibited stroke redundancy. The zi2zi method produced better results thanks to its paired training data but still suffered from noise and missing strokes. In contrast, our improved model compensated for the missing and redundant strokes seen with StrokeGAN and generated more realistic Chinese fonts than the other methods.

Figure 10.

Comparison between our method and the other three methods in generating fonts from Regular Script to Shu.

Table 5 presents the performance of the four methods. As expected, zi2zi, which uses paired data, produces more recognizable results with lower stroke error rates than the other two baselines (CycleGAN and StrokeGAN). However, our method surpasses all three on these metrics, generating higher-quality artistic Chinese fonts with the lowest stroke error rate. Importantly, our method achieves these results with only a limited amount of paired training data, confirming its effectiveness in generating high-quality artistic Chinese fonts.

Table 5.

Evaluation of the performance of our method by comparison with several commonly used methods. The evaluation considered performance metrics such as content accuracy and stroke error.
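To make the two metrics concrete, the following minimal sketch shows one plausible way to compute them. It assumes that content accuracy is the fraction of generated characters that a pretrained recognizer labels correctly, and that stroke error is the number of missing plus redundant strokes divided by the number of true strokes, computed over binary stroke encodings. These definitions and the function names are assumptions for illustration; the exact definitions used for the tables are those given in the experimental setup of this paper.

```python
from typing import Sequence


def content_accuracy(predicted: Sequence[str], expected: Sequence[str]) -> float:
    """Fraction of generated characters whose recognized label is correct."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")
    if not expected:
        raise ValueError("at least one character is required")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)


def stroke_error(
    generated: Sequence[Sequence[int]], target: Sequence[Sequence[int]]
) -> float:
    """(missing + redundant strokes) / true strokes over binary encodings.

    Assumption: each encoding is a 0/1 vector with one entry per stroke type.
    """
    if len(generated) != len(target):
        raise ValueError("generated and target must have the same length")
    mismatched = 0
    total_true = 0
    for gen_code, true_code in zip(generated, target):
        if len(gen_code) != len(true_code):
            raise ValueError("stroke encodings must have equal length")
        # A 0 where the target has 1 is a missing stroke; the reverse is redundant.
        mismatched += sum(g != t for g, t in zip(gen_code, true_code))
        total_true += sum(true_code)
    if total_true == 0:
        raise ValueError("target encodings contain no strokes")
    return mismatched / total_true


if __name__ == "__main__":
    # Toy data: recognizer labels for four generated characters.
    print(content_accuracy(["永", "水", "火", "木"], ["永", "永", "火", "木"]))
    # Toy 4-bit stroke encodings: one missing and one redundant stroke.
    print(stroke_error([[1, 1, 0, 0], [1, 0, 1, 1]], [[1, 1, 1, 0], [1, 0, 1, 0]]))
```

In the toy data, three of four characters are recognized correctly, and two of five true strokes are affected, giving an accuracy of 0.75 and a stroke error of 0.4. Higher content accuracy and lower stroke error both indicate better generation quality.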

5. Conclusions

In this paper, a reasonably efficient font generation method is proposed that directly transforms fonts in the source domain into art fonts in the style of the target domain. The model preserves stroke encoding and introduces a semi-supervised scheme and an improved attention module to address the mode collapse, missing structures, and stroke errors that occur during the generation of Chinese fonts. By incorporating stroke encoding and the semi-supervised scheme, the model gains an improved ability to capture the local stroke information and global structural features of the characters. The attention module further tightens the connection between local stroke information and global structural features, making the generated strokes more fluent and natural. The improved attention module also better preserves the key pattern information of the stylish Chinese characters, improving the performance and reliability of the system. The reliability of the proposed model is validated on six representative font datasets. In a comparative analysis with existing methods, including the zi2zi approach that relies on paired data, our model consistently outperforms them. Quantitative evaluations confirm this: the results generated by our model reach a content recognition accuracy of up to 93.8% and the lowest stroke error, 0.0307. Moreover, visual inspection shows that the generated fonts are more recognizable than those generated by other models.

Author Contributions

Conceptualization, X.T. and F.Y.; Methodology, X.T. and F.Y.; Writing—original draft, X.T.; Writing—review & editing, X.T., F.Y. and F.T.; Visualization, X.T. and F.T.; Project administration, X.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Lin, J.W.; Hong, C.Y.; Chang, R.I.; Wang, Y.C.; Lin, S.Y.; Ho, J.M. Complete font generation of Chinese characters in personal handwriting style. In Proceedings of the 2015 IEEE 34th International Performance Computing and Communications Conference (IPCCC), Nanjing, China, 14–16 December 2015; pp. 1–5.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. Stat 2014, 1050, 10.

- Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

- Chang, B.; Zhang, Q.; Pan, S.; Meng, L. Generating handwritten chinese characters using cyclegan. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 199–207.

- Zeng, J.; Chen, Q.; Liu, Y.; Wang, M.; Yao, Y. Strokegan: Reducing mode collapse in chinese font generation via stroke encoding. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 3270–3277.

- Zhang, H.; Goodfellow, I.; Metaxas, D.; Odena, A. Self-attention generative adversarial networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 7354–7363.

- Xu, S.; Lau, F.C.; Cheung, W.K.; Pan, Y. Automatic generation of artistic Chinese calligraphy. IEEE Intell. Syst. 2005, 20, 32–39.

- Chang, J.; Gu, Y. Chinese typography transfer. arXiv 2017, arXiv:1707.04904.

- Jiang, Y.; Lian, Z.; Tang, Y.; Xiao, J. Scfont: Structure-guided Chinese font generation via deep stacked networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 4015–4022.

- Zhang, J.; Chen, D.; Han, G.; Li, G.; He, J.; Liu, Z.; Ruan, Z. SSNet: Structure-Semantic Net for Chinese typography generation based on image translation. Neurocomputing 2020, 371, 15–26.

- Tian, Y. zi2zi: Master Chinese Calligraphy with Conditional Adversarial Networks. 2017, Volume 3, p. 2. Available online: https://github.com/kaonashi-Tyc/zi2zi (accessed on 16 January 2023).

- Chen, J.; Ji, Y.; Chen, H.; Xu, X. Learning one-to-many stylised Chinese character transformation and generation by generative adversarial networks. IET Image Process. 2019, 13, 2680–2686.

- Wu, S.J.; Yang, C.Y.; Hsu, J.Y.J. Calligan: Style and structure-aware chinese calligraphy character generator. arXiv 2020, arXiv:2005.12500.

- Liu, X.; Meng, G.; Xiang, S.; Pan, C. Handwritten Text Generation via Disentangled Representations. IEEE Signal Process. Lett. 2021, 28, 1838–1842.

- Jiang, Y.; Lian, Z.; Tang, Y.; Xiao, J. DCFont: An end-to-end deep Chinese font generation system. In SIGGRAPH Asia 2017 Technical Briefs; ACM Digital Library: New York, NY, USA, 2017; pp. 1–4.

- Kong, Y.; Luo, C.; Ma, W.; Zhu, Q.; Zhu, S.; Yuan, N.; Jin, L. Look Closer to Supervise Better: One-Shot Font Generation via Component-Based Discriminator. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13482–13491.

- Chen, X.; Xie, Y.; Sun, L.; Lu, Y. DGFont++: Robust Deformable Generative Networks for Unsupervised Font Generation. arXiv 2022, arXiv:2212.14742.

- Liu, W.; Liu, F.; Ding, F.; He, Q.; Yi, Z. XMP-Font: Self-Supervised Cross-Modality Pre-training for Few-Shot Font Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7905–7914.

- Zhu, X.; Lin, M.; Wen, K.; Zhao, H.; Sun, X. Deep deformable artistic font style transfer. Electronics 2023, 12, 1561.

- Xue, M.; Ito, Y.; Nakano, K. An Art Font Generation Technique using Pix2Pix-based Networks. Bull. Netw. Comput. Syst. Softw. 2023, 12, 6–12.

- Yuan, S.; Liu, R.; Chen, M.; Chen, B.; Qiu, Z.; He, X. Se-gan: Skeleton enhanced gan-based model for brush handwriting font generation. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 18–22 July 2022; pp. 1–6.

- Qin, M.; Zhang, Z.; Zhou, X. Disentangled representation learning GANs for generalized and stable font fusion network. IET Image Process. 2022, 16, 393–406.

- Gao, Y.; Wu, J. Gan-based unpaired chinese character image translation via skeleton transformation and stroke rendering. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 646–653.

- Wen, C.; Pan, Y.; Chang, J.; Zhang, Y.; Chen, S.; Wang, Y.; Han, M.; Tian, Q. Handwritten Chinese font generation with collaborative stroke refinement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2021; pp. 3882–3891.

- Tang, L.; Cai, Y.; Liu, J.; Hong, Z.; Gong, M.; Fan, M.; Han, J.; Liu, J.; Ding, E.; Wang, J. Few-Shot Font Generation by Learning Fine-Grained Local Styles. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7895–7904.

- Zhang, Y.; Man, J.; Sun, P. MF-Net: A Novel Few-shot Stylized Multilingual Font Generation Method. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10 October 2022; pp. 2088–2096.

- Wen, Q.; Li, S.; Han, B.; Yuan, Y. Zigan: Fine-grained chinese calligraphy font generation via a few-shot style transfer approach. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual Event, 20–24 October 2021; pp. 621–629.

- Huang, H.; Yang, D.; Dai, G.; Han, Z.; Wang, Y.; Lam, K.M.; Yang, F.; Huang, S.; Liu, Y.; He, M. AGTGAN: Unpaired Image Translation for Photographic Ancient Character Generation. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10 October 2022; pp. 5456–5467.

- Zeng, J.; Wang, Y.; Chen, Q.; Liu, Y.; Wang, M.; Yao, Y. StrokeGAN+: Few-Shot Semi-Supervised Chinese Font Generation with Stroke Encoding. arXiv 2022, arXiv:2211.06198.

- Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3156–3164.

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual dense network for image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2480–2495.

- Wang, Z.; Li, J.; Song, G.; Li, T. Less memory, faster speed: Refining self-attention module for image reconstruction. arXiv 2019, arXiv:1905.08008.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, PMLR, Lille, France, 7–9 July 2015; pp. 448–456.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. arXiv 2014, arXiv:1409.4842.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).