Author Contributions

Conceptualization, S.M.A. and A.Y.M.; methodology, S.M.A. and A.Y.M.; software, S.M.A., A.G., M.A.H. and A.Y.M.; validation, S.M.A., A.G., M.A.H. and A.Y.M.; formal analysis, S.M.A., A.G., M.A.H. and A.Y.M.; investigation, S.M.A., A.G., M.A.H. and A.Y.M.; resources, S.M.A., A.G., M.A.H. and A.Y.M.; data curation, S.M.A., A.G., M.A.H. and A.Y.M.; writing—original draft preparation, S.M.A., A.G., M.A.H. and A.Y.M.; writing—review and editing, S.M.A., A.G. and A.Y.M.; visualization, S.M.A., A.G. and A.Y.M.; supervision S.M.A., A.G. and A.Y.M.; project administration, S.M.A. and A.G.; funding acquisition, S.M.A. and A.G. All authors have read and agreed to the published version of the manuscript.

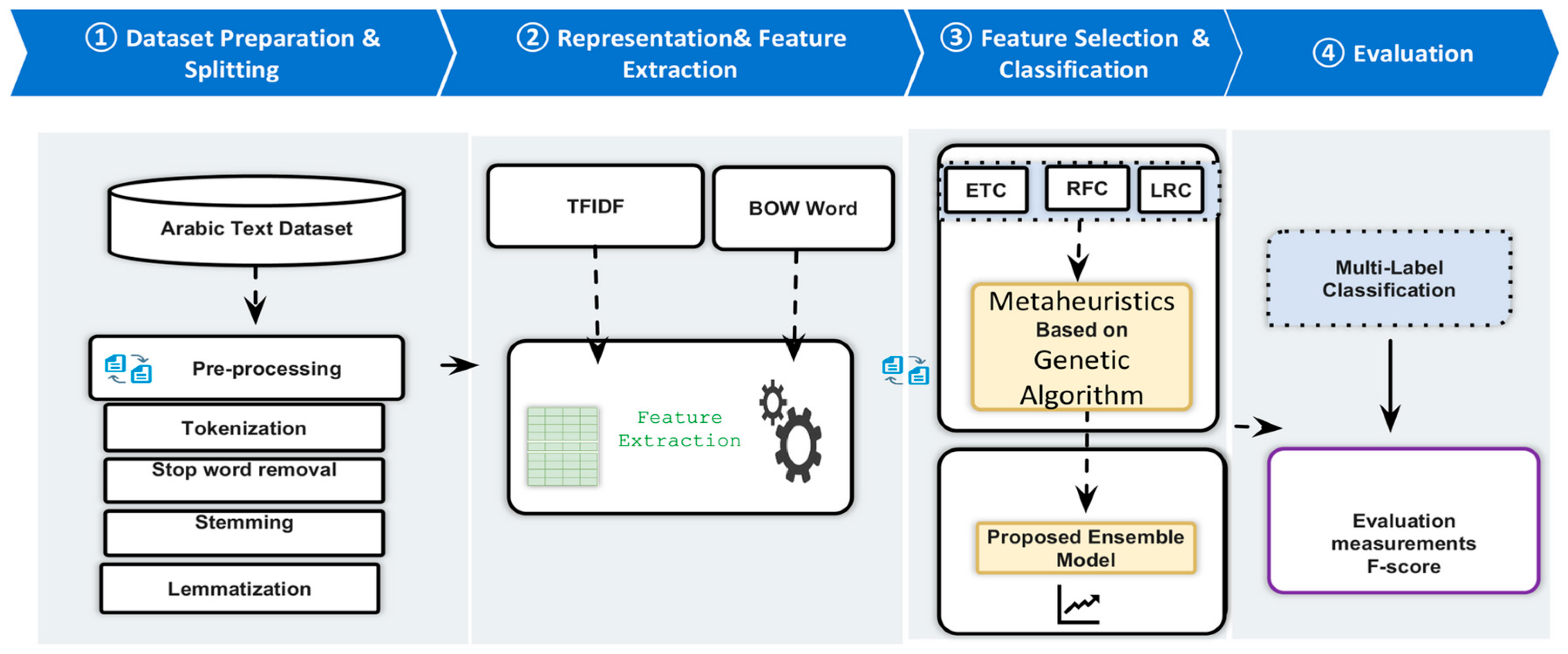

Figure 1.

Architecture model for multilabel classification of Arabic text.

Figure 1.

Architecture model for multilabel classification of Arabic text.

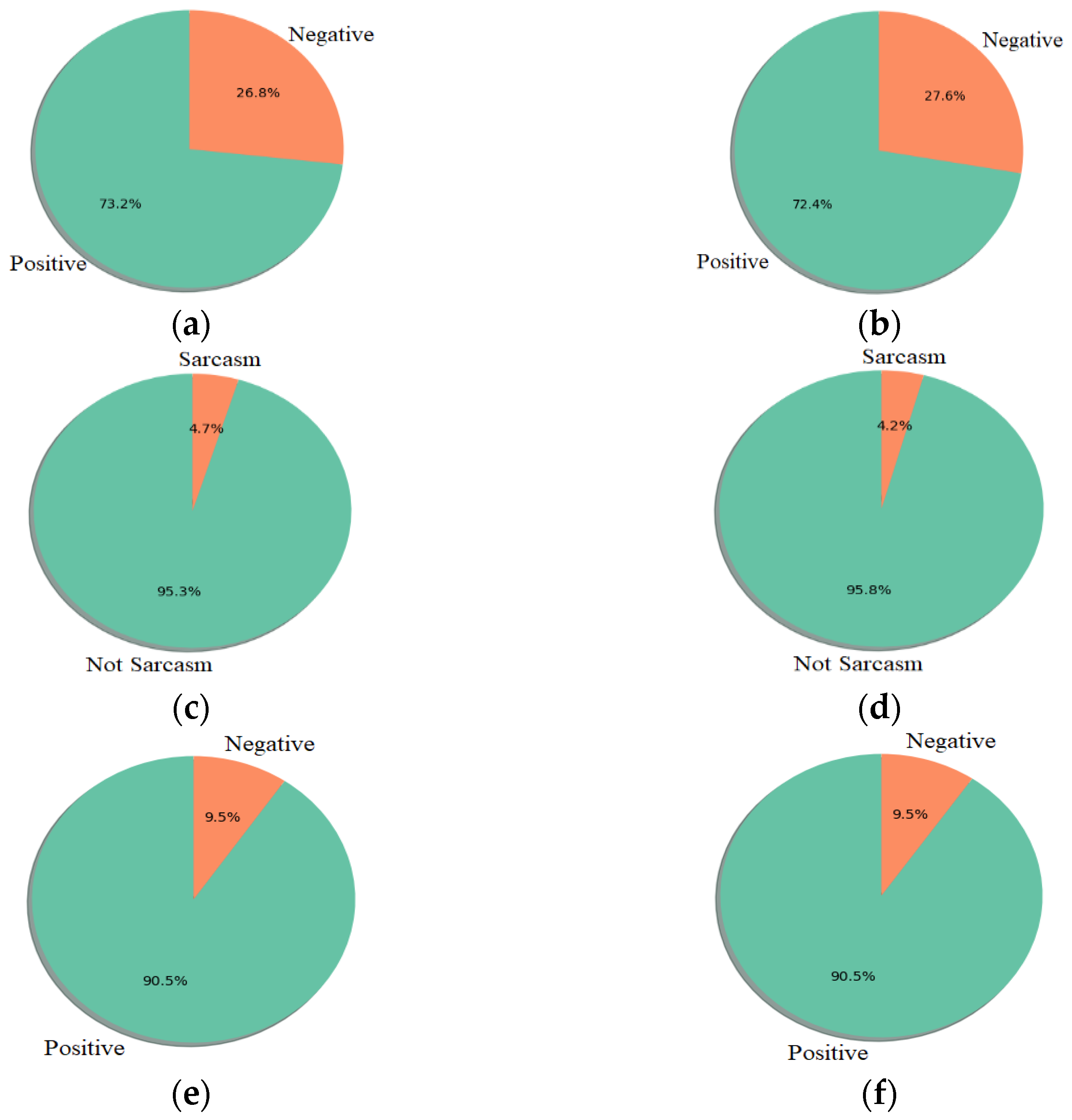

Figure 2.

Distribution of classes in the training and test sets, respectively: (a,b) are the distribution of sentiment classes; (c,d) are the distribution of sarcasm classes; and (e,f) are the distribution of stance classes.

Figure 2.

Distribution of classes in the training and test sets, respectively: (a,b) are the distribution of sentiment classes; (c,d) are the distribution of sarcasm classes; and (e,f) are the distribution of stance classes.

Figure 3.

Distribution of text length: (a) the distribution of length texts in the training set and (b) the distribution of text length in the test set.

Figure 3.

Distribution of text length: (a) the distribution of length texts in the training set and (b) the distribution of text length in the test set.

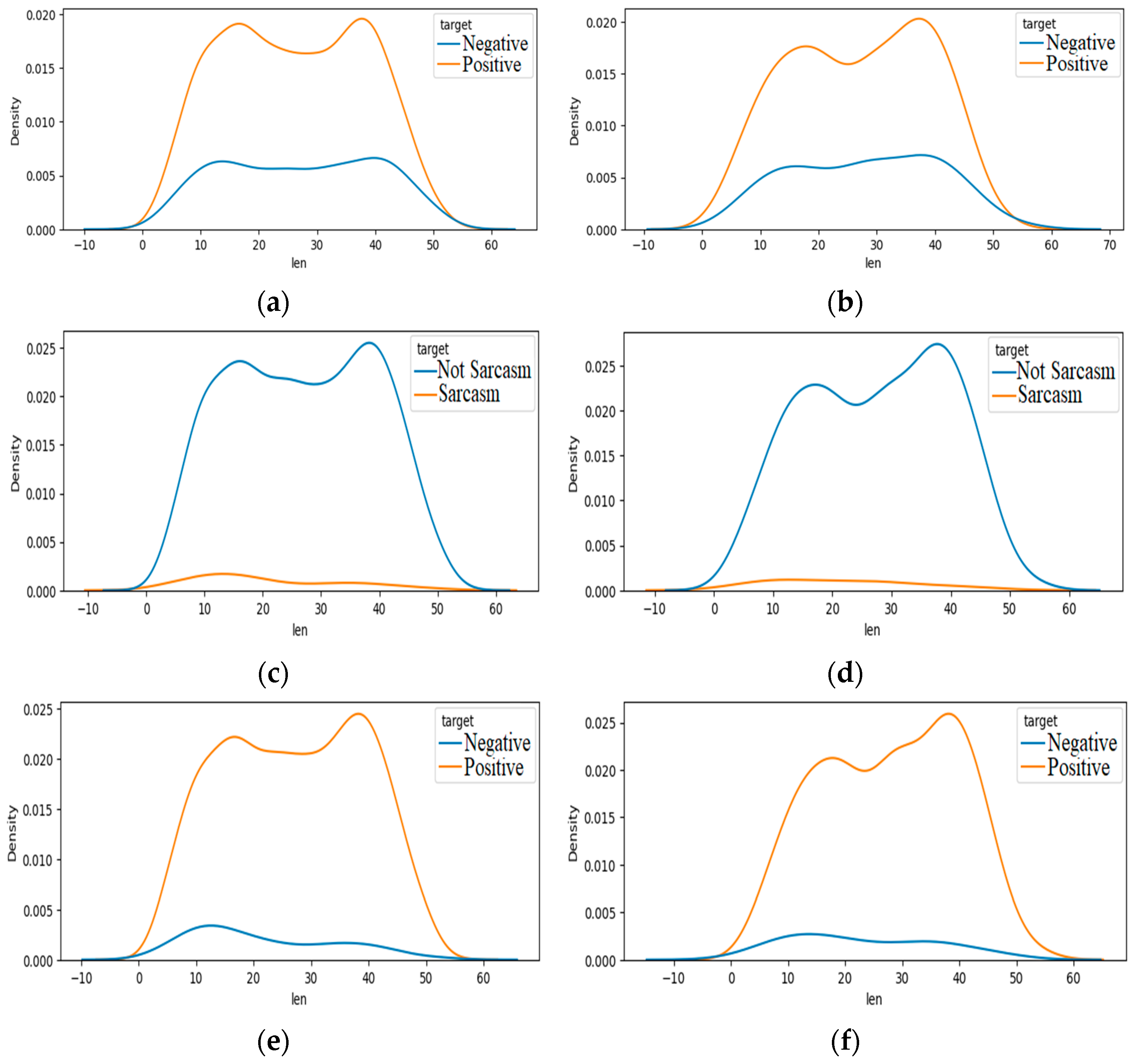

Figure 4.

Density distribution of text length for each topic’s class label of the training and test sets, respectively: (a,b) are the density distribution of sentiment classes; (c,d) are the density distribution of sarcasm classes; and (e,f) are the density distribution of stance classes.

Figure 4.

Density distribution of text length for each topic’s class label of the training and test sets, respectively: (a,b) are the density distribution of sentiment classes; (c,d) are the density distribution of sarcasm classes; and (e,f) are the density distribution of stance classes.

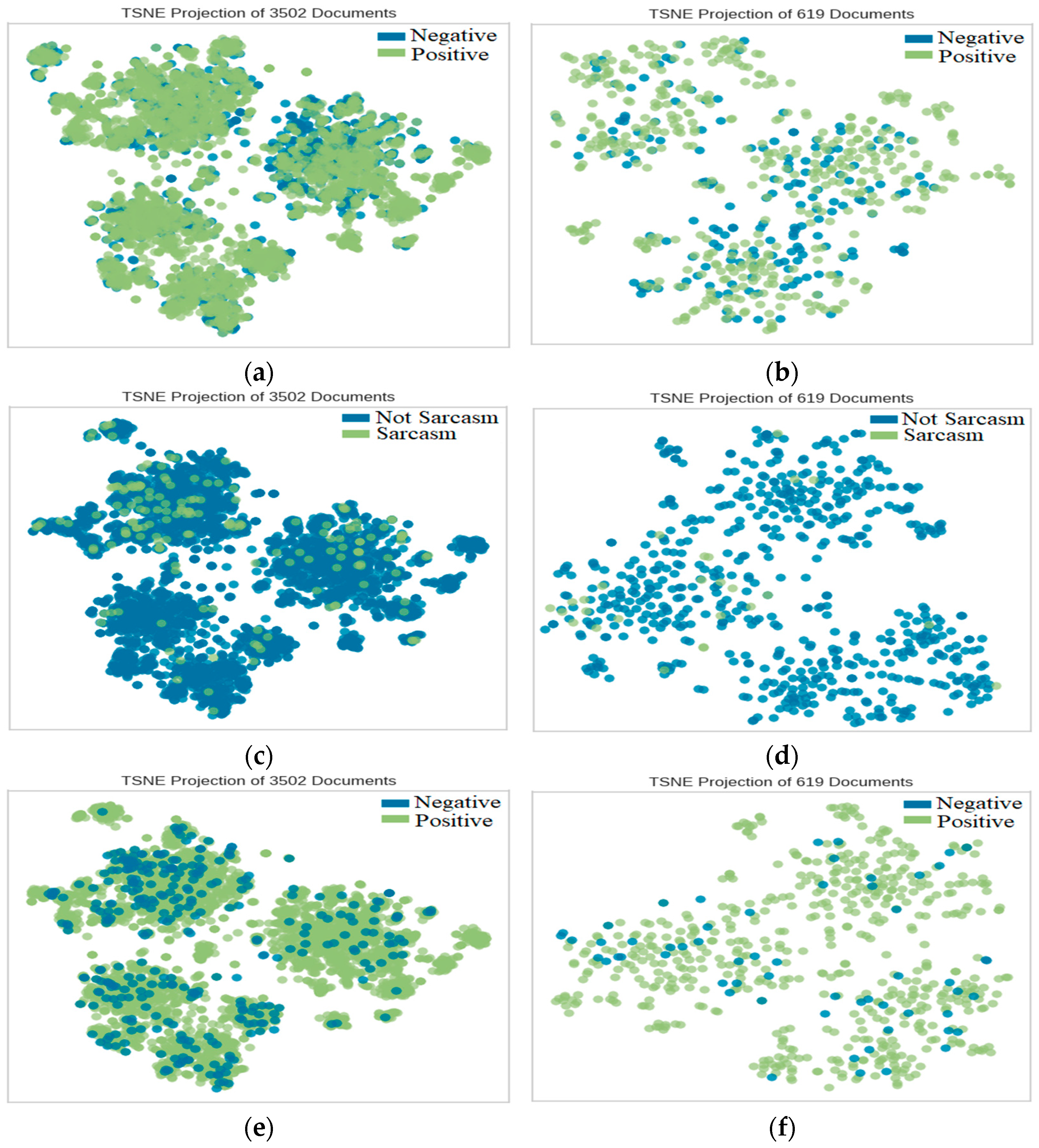

Figure 5.

t-SNE charts of the training and test sets, respectively: (a,b) are the t-SNE charts of the sentiment classes; (c,d) are the t-SNE charts of the sarcasm classes; and (e,f) are the t-SNE charts of the stance classes.

Figure 5.

t-SNE charts of the training and test sets, respectively: (a,b) are the t-SNE charts of the sentiment classes; (c,d) are the t-SNE charts of the sarcasm classes; and (e,f) are the t-SNE charts of the stance classes.

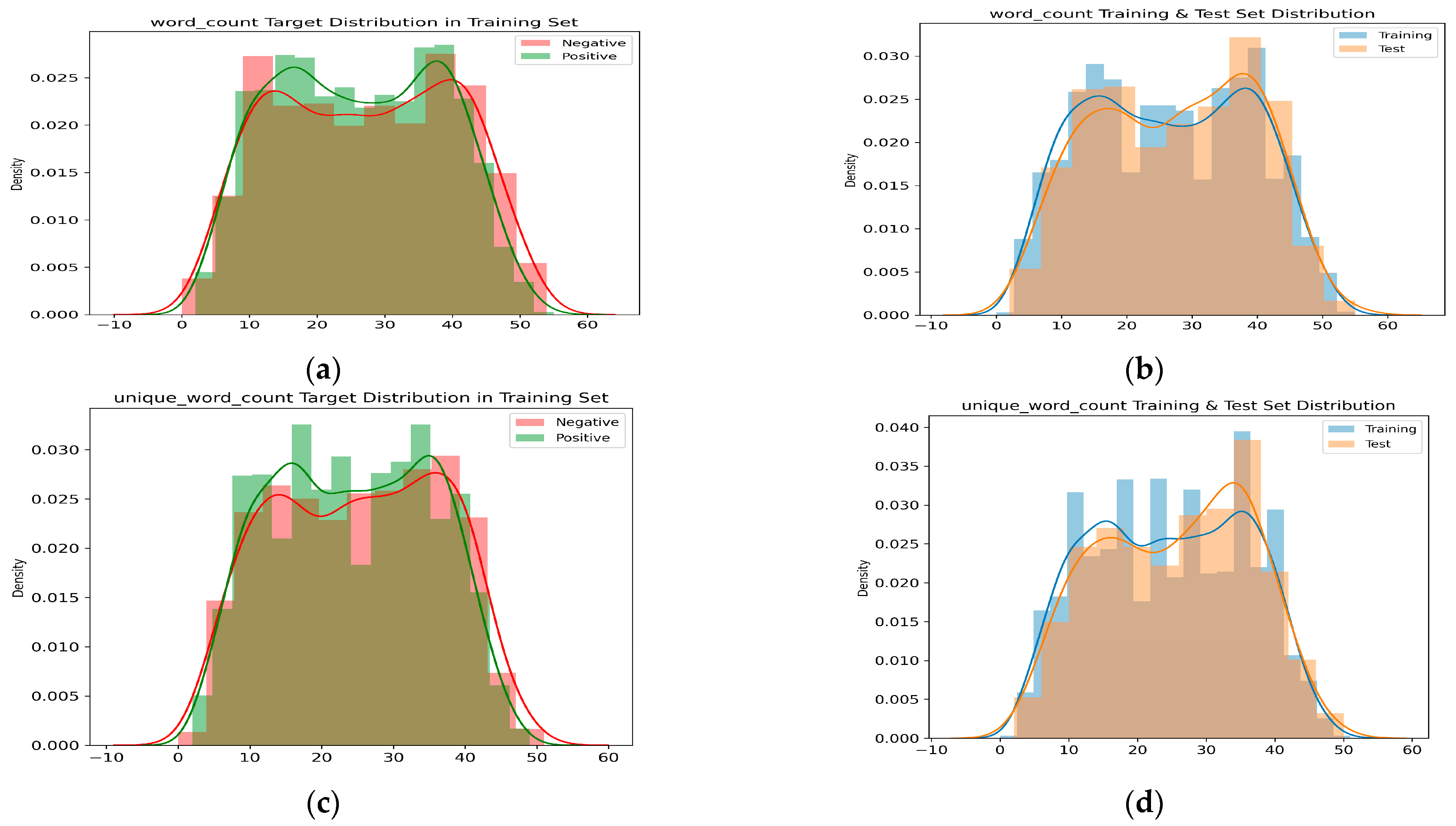

Figure 6.

Density distribution of the word counts and unique word counts of the sentiment data texts: (a,c) are the density distribution of the word counts and unique word counts of the training set regarding the sentiment classes; (b,d) are the density distribution of the word counts and unique word counts of the training and test sets.

Figure 6.

Density distribution of the word counts and unique word counts of the sentiment data texts: (a,c) are the density distribution of the word counts and unique word counts of the training set regarding the sentiment classes; (b,d) are the density distribution of the word counts and unique word counts of the training and test sets.

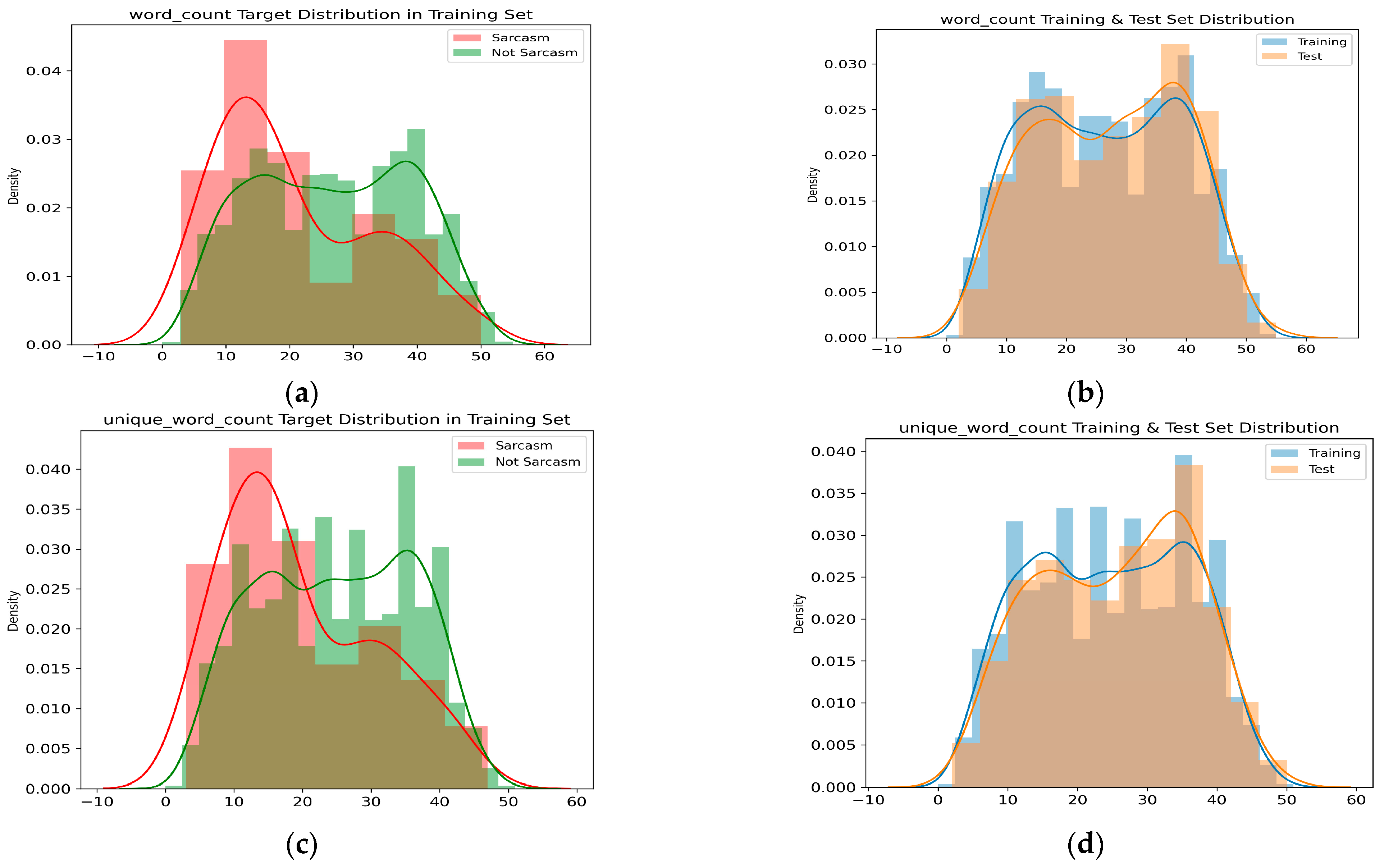

Figure 7.

Density distribution of the word counts and unique word counts of the sarcasm data texts: (a,c) are the density distribution of the word counts and unique word counts of the training set regarding the sarcasm classes; (b,d) are the density distribution of the word counts and unique word counts of the training and test sets.

Figure 7.

Density distribution of the word counts and unique word counts of the sarcasm data texts: (a,c) are the density distribution of the word counts and unique word counts of the training set regarding the sarcasm classes; (b,d) are the density distribution of the word counts and unique word counts of the training and test sets.

Figure 8.

Density distribution of the word counts and unique word counts of the stance data texts: (a,c) are the density distribution of the word counts and unique word counts of the training set regarding the stance classes; (b,d) are the density distribution of the word counts and unique word counts of the training and test sets.

Figure 8.

Density distribution of the word counts and unique word counts of the stance data texts: (a,c) are the density distribution of the word counts and unique word counts of the training set regarding the stance classes; (b,d) are the density distribution of the word counts and unique word counts of the training and test sets.

Table 1.

Distribution of the MAWQIF dataset.

Table 1.

Distribution of the MAWQIF dataset.

| No. | Topic of Tweets | موضوع التغريدات | No. of Arabic Tweets | Training (80%) | Testing (20%) |

|---|

| 1. | COVID-19 Vaccine | تحصين كويد -19 | 1373 | 1167 | 206 |

| 2. | Digital Transformation | موقف التحول الرقمي | 1348 | 1145 | 203 |

| 3. | Women Empowerment | موقف من النساء | 1400 | 1190 | 210 |

| Total | | 4121 | 3502 | 619 |

Table 2.

Examples of multilabel Arabic text by the MAWQIF dataset.

Table 2.

Examples of multilabel Arabic text by the MAWQIF dataset.

| No. | Original Arabic Text | Translated To English Text * | Sentiment | Sarcasm | Stance |

|---|

| 1 | كما أشكر خادم الحرمين الشريفين وصاحب السمو ولي العهد على اهتمامهما بصحة المواطن، كما أشكر وزير الصحة على تنظيمه الرائع واستقباله الجيد. | I also thank the Custodian of the Two Holy Mosques and His Highness the Crown Prince for their concern for the health of the citizen, and I also thank the Minister of Health for his distinguished organization and good reception. | Negative/Positive | Sarcasm/Not Sarcasm | Negative/Positive |

Table 3.

The hyper-parameters of feature selection with cross-validation module.

Table 3.

The hyper-parameters of feature selection with cross-validation module.

| Hyper-Parameter | Description |

|---|

| doc_list | Python list with text documents |

| use_class_weight = True | Python list with Y labels |

| save_data = True | Boolean value representing if you want to apply class weight before training classifiers |

| label_list | The list of labels |

| use_class_weight = True | The use of class weight |

| n_crossvalidation = total_cross_val validation_done | The number of cross-validation |

| n_crossvalidation = total_cross | How many cross-validation samples |

| stop_words | Stop words for count and TF-IDF vectors |

| pickle_path | Path where base model |

| base_model_list | List of machine learning algorithms to be trained |

| vector_list | Type of text vectors from sklearn to be used |

| feature_list | Type of features to be used for ensembling |

| method | Which method you want to specify for metaheuristics feature selection |

Table 4.

Selected default values of metaheuristic hyper-parameters.

Table 4.

Selected default values of metaheuristic hyper-parameters.

| Hyper-Parameter | Value |

|---|

| classification_models | ETC, RFC, and LRC |

| n_jobs | −1 |

| random_state | 1 |

| cost_function | f1_score |

| cost_function_type | micro-averaged |

| cost_function_improvement | increase |

Table 5.

Selected default values of genetic algorithm hyper-parameters.

Table 5.

Selected default values of genetic algorithm hyper-parameters.

| Hyper-Parameter | Value |

|---|

| number of generations | 100 |

| number of population | 150 |

| probability of crossover | 0.9 |

| probability of mutation | 0.1 |

| run_time | 120 min |

Table 6.

ANOVA test results of training set targets for the three topics.

Table 6.

ANOVA test results of training set targets for the three topics.

| Target | Sum_sq | Df | F | PR (>F) |

|---|

| Sentiment | 679.471926 | 1.0 | 4.376833 | 0.036502 |

| Sarcasm | 5243.281393 | 1.0 | 34.060803 | 5.827878 × 10−9 |

| Stance | 7626.842878 | 1.0 | 49.764778 | 2.079751 × 10−12 |

Table 7.

ANOVA test results of test set targets for the three topics.

Table 7.

ANOVA test results of test set targets for the three topics.

| Target | Sum_sq | Df | F | PR (>F) |

|---|

| Sentiment | 130.569338 | 1.0 | 0.88228 | 0.347946 |

| Sarcasm | 896.219770 | 1.0 | 6.107121 | 0.013733 |

| Stance | 1221.586594 | 1.0 | 8.354293 | 0.003983 |

Table 8.

The results of the five configurations settings for the sentiment task scenario with and without the feature selection and optimization methods.

Table 8.

The results of the five configurations settings for the sentiment task scenario with and without the feature selection and optimization methods.

| Classifier Model | Feature | Vector | F1-Score without Feature Selection (%) | F1-Score with Feature Selection (%) |

|---|

| ETC | Unigram | CountVectorizer | 79.17 | 80.88 |

| ETC | Unigram | TfidfVectorizer | 77.12 | 77.38 |

| LRC | Unigram | CountVectorizer | 75.01 | 75.32 |

| RFC | Unigram | CountVectorizer | 75.81 | 77.26 |

| RFC | Unigram | TfidfVectorizer | 75.86 | 76.86 |

Table 9.

The results of the five configuration settings for the augmented sentiment task scenario with and without the feature selection and optimization method.

Table 9.

The results of the five configuration settings for the augmented sentiment task scenario with and without the feature selection and optimization method.

| Classifier Model | Feature | Vector | F1-Score with Feature Selection (%) |

|---|

| ETC | Unigram | CountVectorizer | 86.12 |

| ETC | Unigram | TfidfVectorizer | 86.26 |

| LRC | Unigram | CountVectorizer | 82.21 |

| RFC | Unigram | CountVectorizer | 86.51 |

| RFC | Unigram | TfidfVectorizer | 86.22 |

Table 10.

The results of the five configuration settings for the sarcasm task scenarios with and without the feature selection and optimization method.

Table 10.

The results of the five configuration settings for the sarcasm task scenarios with and without the feature selection and optimization method.

| Classifier Model | Feature | Vector | F1-Score without Feature Selection (%) | F1-Score with Feature

Selection (%) |

|---|

| ETC | Unigram | CountVectorizer | 95.00 | 95.38 |

| ETC | Unigram | TfidfVectorizer | 95.20 | 95.38 |

| LRC | Unigram | CountVectorizer | 93.52 | 94.21 |

| RFC | Unigram | CountVectorizer | 95.38 | 95.38 |

| RFC | Unigram | TfidfVectorizer | 95.32 | 95.38 |

Table 11.

The results of the five configuration settings for the augmented sarcasm task scenario with and without the feature selection and optimization method.

Table 11.

The results of the five configuration settings for the augmented sarcasm task scenario with and without the feature selection and optimization method.

| Classifier Model | Feature | Vector | F1-Score with Feature Selection (%) |

|---|

| ETC | Unigram | CountVectorizer | 96.52 |

| ETC | Unigram | TfidfVectorizer | 96.22 |

| LRC | Unigram | CountVectorizer | 94.21 |

| RFC | Unigram | CountVectorizer | 96.22 |

| RFC | Unigram | TfidfVectorizer | 96.12 |

Table 12.

The results of the five configuration settings for the stance task scenario with and without the feature selection and optimization method.

Table 12.

The results of the five configuration settings for the stance task scenario with and without the feature selection and optimization method.

| Classifier Model | Feature | Vector | F1-Score without Feature

Selection (%) | F1-Score with Feature

Selection (%) |

|---|

| ETC | Unigram | CountVectorizer | 90.73 | 94.11 |

| ETC | Unigram | TfidfVectorizer | 90.41 | 90.41 |

| LRC | Unigram | CountVectorizer | 87.42 | 88.25 |

| RFC | Unigram | CountVectorizer | 89.79 | 89.79 |

| RFC | Unigram | TfidfVectorizer | 90.44 | 90.44 |

Table 13.

The results of the five configuration settings for the augmented stance task scenario with and without the feature selection and optimization method.

Table 13.

The results of the five configuration settings for the augmented stance task scenario with and without the feature selection and optimization method.

| Classifier Model | Feature | Vector | F1-Score with Feature Selection (%) |

|---|

| ETC | Unigram | CountVectorizer | 95.50 |

| ETC | Unigram | TfidfVectorizer | 95.10 |

| LRC | Unigram | CountVectorizer | 94.54 |

| RFC | Unigram | CountVectorizer | 95.23 |

| RFC | Unigram | TfidfVectorizer | 95.44 |

Table 14.

The results of the five configuration settings for the multilabel scenario with and without feature the selection and optimization method.

Table 14.

The results of the five configuration settings for the multilabel scenario with and without feature the selection and optimization method.

| Classifier Model | Feature | Vector | F1-Score without

Feature Selection (%) | F1-Score with Feature

Selection (%) |

|---|

| ETC | Unigram | CountVectorizer | 65.62 | 68.76 |

| ETC | Unigram | TfidfVectorizer | 64.79 | 64.79 |

| LRC | Unigram | CountVectorizer | 62.03 | 63.71 |

| RFC | Unigram | CountVectorizer | 64.02 | 64.54 |

| RFC | Unigram | TfidfVectorizer | 64.91 | 64.99 |

Table 15.

The results of the five configuration settings for the augmented multilabel scenario with and without the feature selection and optimization method.

Table 15.

The results of the five configuration settings for the augmented multilabel scenario with and without the feature selection and optimization method.

| Classifier Model | Feature | Vector | F1-Score with Feature Selection (%) |

|---|

| ETC | Unigram | CountVectorizer | 68.80 |

| ETC | Unigram | TfidfVectorizer | 66.54 |

| LRC | Unigram | CountVectorizer | 65.43 |

| RFC | Unigram | CountVectorizer | 65.00 |

| RFC | Unigram | TfidfVectorizer | 66.78 |

Table 16.

The highest F1-score results of each topic and multilabel classes with and without feature selection and optimization method.

Table 16.

The highest F1-score results of each topic and multilabel classes with and without feature selection and optimization method.

| Task Scenario | F1-Score without Feature Selection (%) | F1-Score with Feature

Selection (%) | F1-Score with Feature Selection and Data Augmentation (%) |

|---|

| Sentiment | 79.17 | 80.88 | 86.51 |

| Sarcasm | 95.38 | 95.38 | 96.52 |

| Stance | 90.73 | 94.11 | 95.50 |

| Multilabel | 65.62 | 68.76 | 68.80 |

Table 17.

Comparison with current related work on the new dataset.

Table 17.

Comparison with current related work on the new dataset.

| Author and Ref. | Year | Model | Multilabel | No. of Topics | F1-Score (%) |

|---|

| Alturayeif et al. [30] | 2022 | Single Model | No | 3 | 61.90 |

| This work | 2023 | Single Model | Yes | 65.62 |

| Ensemble Model | Yes | 68.76 |