Expanded Scene Image Preprocessing Method for the Shack–Hartmann Wavefront Sensor

Abstract

1. Introduction

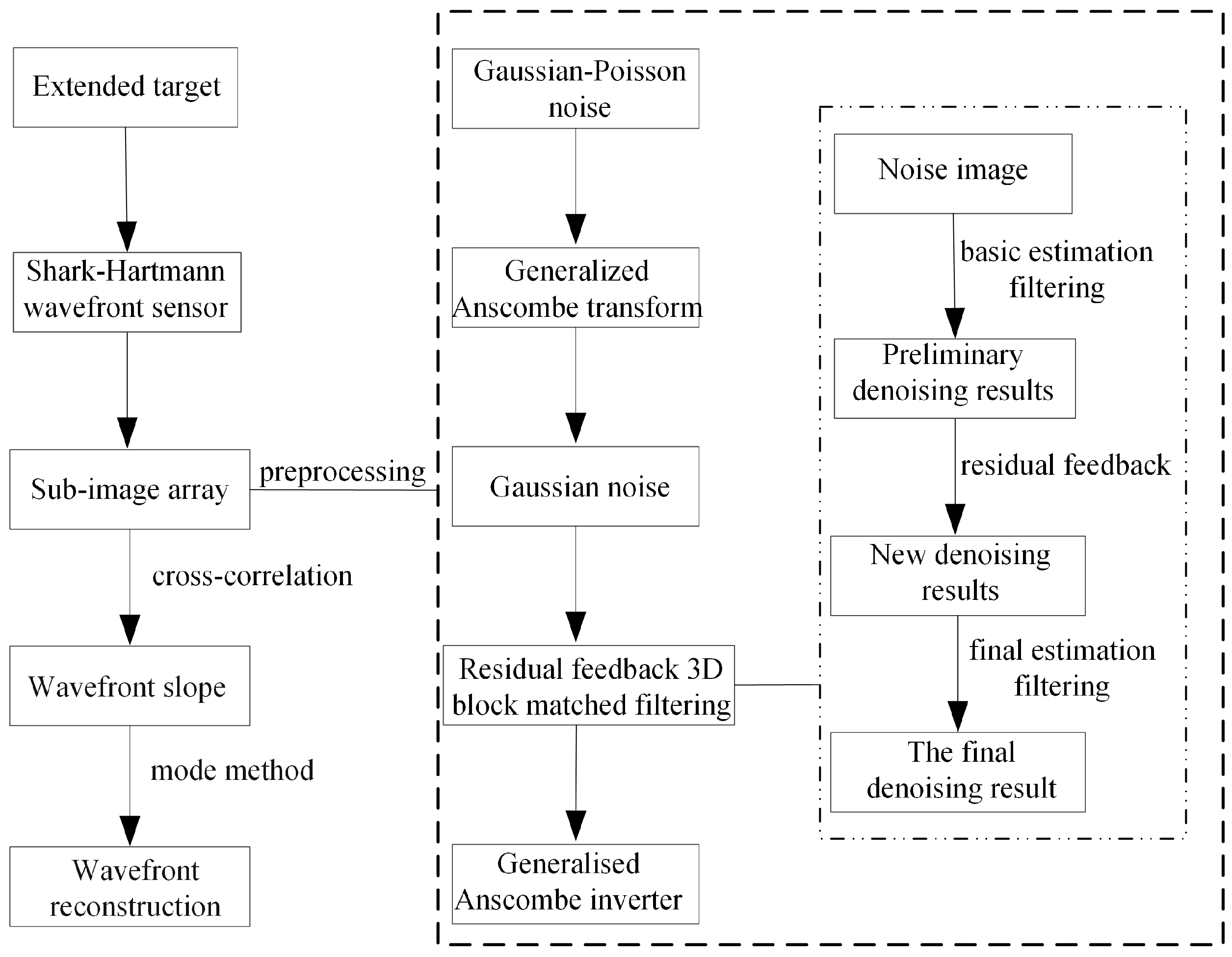

2. Methods

2.1. Principles of Wavefront Detection for Extended Target Shack–Hartmann Wavefront Sensors

2.2. The Extended Target Image Preprocessing Method with a Low Signal-to-Noise Ratio

2.3. Generalized Anscombe Transform
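As a minimal sketch of the transform named in this heading, the standard generalized Anscombe transform for mixed Poisson–Gaussian noise can be written as below. The noise model `z = gain * p + n` and the parameter names `gain`, `sigma`, and `mu` are assumptions chosen for illustration; they are not taken from the paper, whose own notation and settings may differ.

```python
import math


def generalized_anscombe(z, gain=1.0, sigma=0.0, mu=0.0):
    """Forward generalized Anscombe transform of a single pixel value.

    Assumed noise model (illustrative, not from the source):
    z = gain * p + n, where p is Poisson distributed and n is Gaussian
    with mean mu and standard deviation sigma.
    """
    arg = gain * z + 0.375 * gain ** 2 + sigma ** 2 - gain * mu
    # The transform is defined as zero wherever the argument is not positive.
    if arg <= 0.0:
        return 0.0
    return (2.0 / gain) * math.sqrt(arg)


def transform_image(image, gain=1.0, sigma=0.0, mu=0.0):
    """Apply the transform to every pixel of an image given as nested lists."""
    return [[generalized_anscombe(v, gain, sigma, mu) for v in row]
            for row in image]
```

With `gain=1`, `sigma=0`, and `mu=0` this reduces to the classical Anscombe transform `2 * sqrt(z + 3/8)`. After the transform the noise is approximately Gaussian with unit variance, which is what allows a Gaussian denoiser such as BM3D to be applied afterwards.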

2.4. Generalized Anscombe transform

2.5. Inverse Generalized Anscombe Transform
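The inversion step can be sketched with the plain algebraic inverse of the generalized Anscombe transform, shown below. This is an assumption about the form of the inverse: the paper may instead use an asymptotically or exactly unbiased inverse, which is generally preferred at low photon counts because the algebraic inverse is biased there. The parameter names `gain`, `sigma`, and `mu` are hypothetical and follow the assumed model `z = gain * p + n`.

```python
import math


def inverse_generalized_anscombe(d, gain=1.0, sigma=0.0, mu=0.0):
    """Algebraic inverse of the generalized Anscombe transform.

    Solves d = (2 / gain) * sqrt(gain*z + 3/8*gain**2 + sigma**2 - gain*mu)
    for z. Parameter names are illustrative assumptions, not the paper's.
    """
    return (gain / 4.0) * d ** 2 - 0.375 * gain - sigma ** 2 / gain + mu


def invert_image(image, gain=1.0, sigma=0.0, mu=0.0):
    """Apply the algebraic inverse to every pixel of a nested-list image."""
    return [[inverse_generalized_anscombe(v, gain, sigma, mu) for v in row]
            for row in image]
```

For example, with `gain=2.0`, `sigma=1.5`, and `mu=0.3`, a stabilized value of `math.sqrt(13.15)` maps back to a pixel value of 5.0, which is the value that produces it under the forward transform with the same parameters.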

3. Results

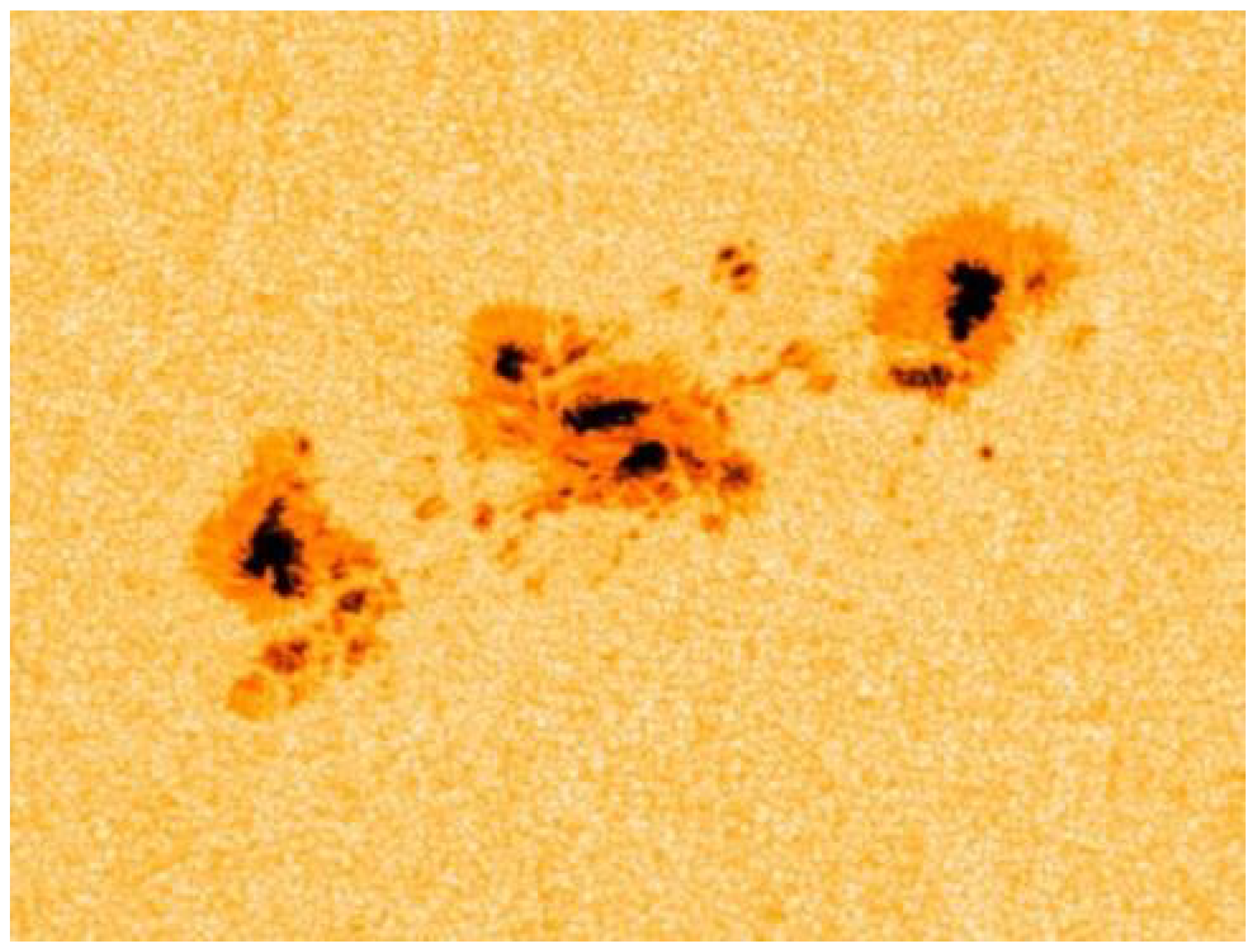

3.1. Simulation-Related Parameters

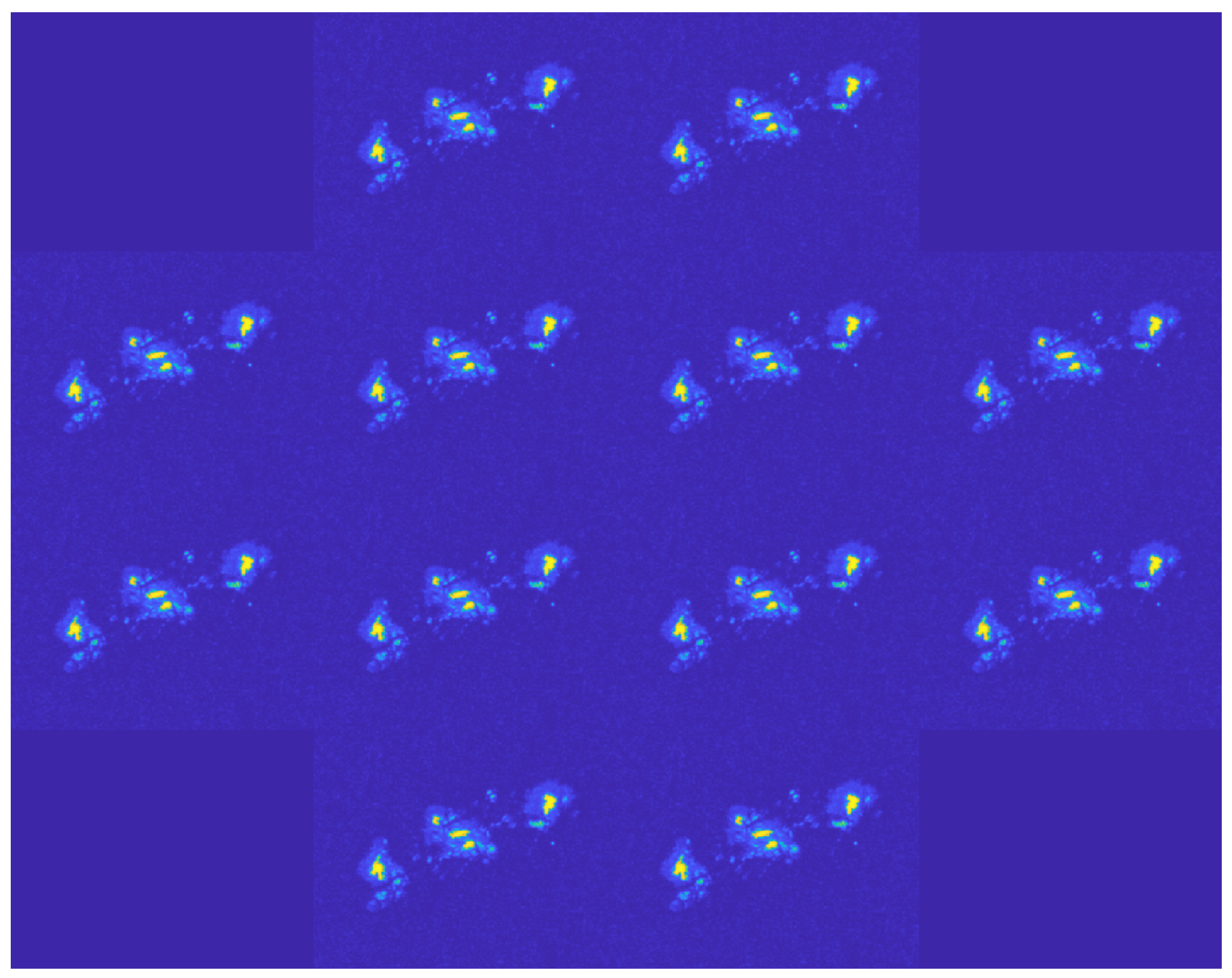

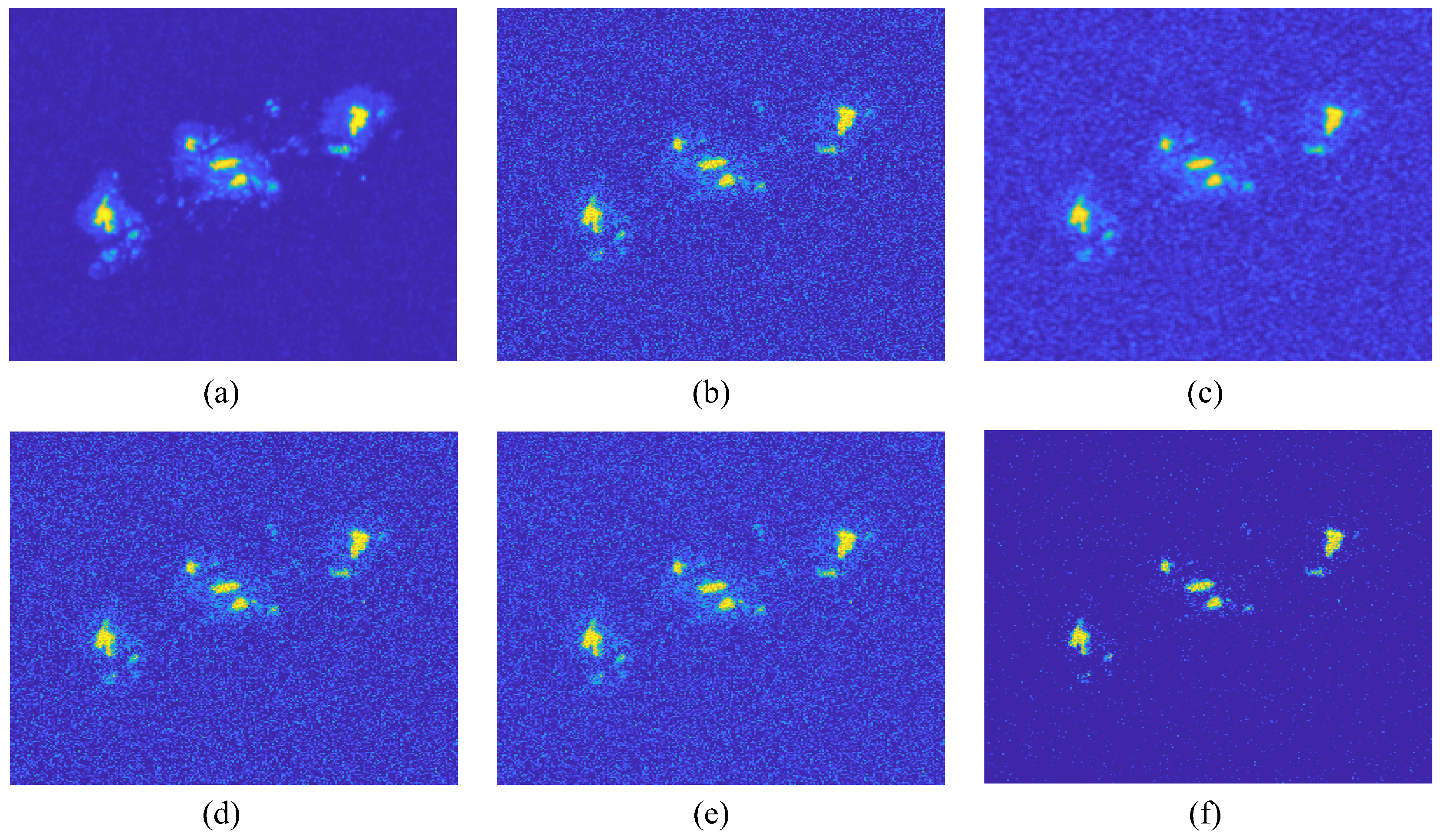

3.2. Subaperture Image Noise Reduction Simulation

3.3. Subaperture Offset Detection Simulation

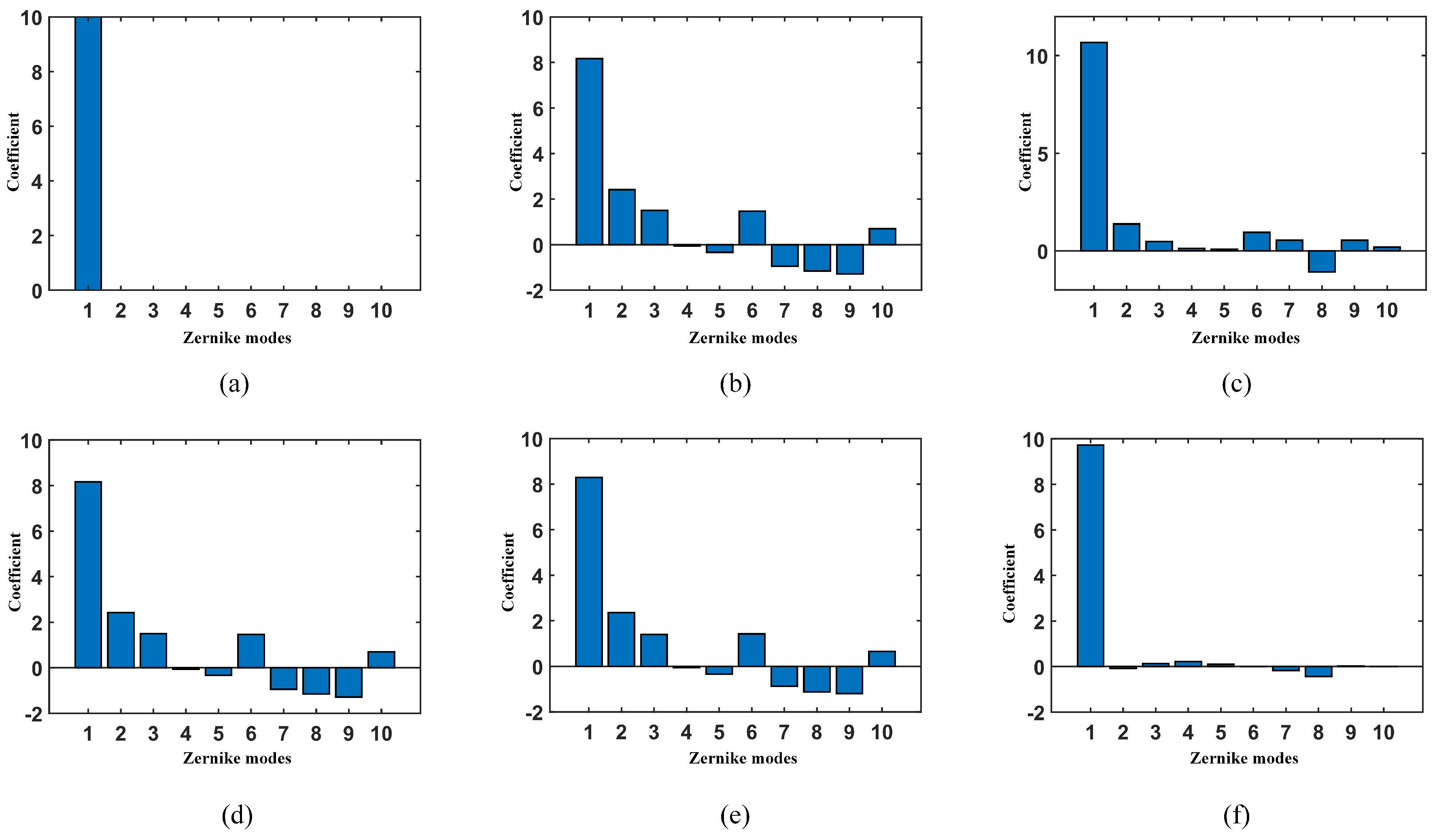

3.4. Simulation and Analysis of the Wavefront Reconstruction

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Method | Average Offset Calculation Error |
|---|---|
| Noisy image | 7.78 |
| Mean filtering | 5.4865 |
| Gaussian filtering | 7.7843 |
| BM3D | 7.60 |
| GFBM3D | 3.76 |

| Method | Root Mean Square Error |
|---|---|
| Noisy image | 1.3440 |
| Mean filtering | 0.7251 |
| Gaussian filtering | 1.3440 |
| BM3D | 1.2827 |
| GFBM3D | 0.1941 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, B.; Jia, J.; Zhou, Y.; Zhang, Y.; Li, Z. Expanded Scene Image Preprocessing Method for the Shack–Hartmann Wavefront Sensor. Appl. Sci. 2023, 13, 10004. https://doi.org/10.3390/app131810004
