1. Introduction

The development of individualized three-dimensionally printed upper limb prostheses has been a significant area of research in the field of medical technology [1], with many advancements made in recent years regarding additive manufacturing of such devices [1,2,3]. Prosthetic devices aim to improve the quality of life for individuals with limb loss by restoring lost functionality and enhancing their mobility and independence. However, designing and fabricating prosthetic devices that meet the unique needs and preferences of each patient remains a complex challenge. In recent years, the emergence of virtual reality (VR), augmented reality (AR), and mixed reality (MR) technologies has shown promising potential in revolutionizing the design of various medical devices, including prosthetics [4].

The field of upper limb prosthetics has witnessed remarkable advancements over the years. Traditional manufacturing processes for prostheses often involve time-consuming and labor-intensive techniques, resulting in standardized devices that may not fully meet the unique anatomical and functional requirements of individual patients [5,6]. This limitation has spurred the exploration of innovative technologies, such as three-dimensional printing, to address the customization challenges and to enable the production of personalized prostheses [1,5,6], although the modern process is not without its own issues to solve [3,7]. One example of such innovative technologies for the rapid production of individualized prostheses is the AutoMedPrint system [6]. It allows one to build inexpensive three-dimensionally printed prostheses for functional use in a very short time, thanks to the automation of the data processing and design stages [6]. In the AutoMedPrint system, AR and VR technologies are part of the process, supporting work with the patient in customizing the product [8].

Virtual reality, an immersive technology that simulates a computer-generated environment, has gained significant attention in various domains, including medicine and rehabilitation [9]. Mixed reality and augmented reality, technologies that blend virtual elements with the real world, are also used in many contexts in engineering [10] and medicine [11]. MR devices, such as Microsoft HoloLens and Magic Leap, overlay digital content onto the user’s real-world environment, creating a seamless integration between the virtual and physical realms. This enables users to interact with virtual objects, often with their own hands by means of hand motion tracking, and to receive real-time feedback [12]. AR, on the other hand, can be utilized on everyday devices, such as tablets and cellphones, requiring only markers (such as QR codes) to present the digital content overlaid on the real world. As such, AR applications can be easily disseminated and remain accessible to many people, facilitating education [13], as well as the improvement of many engineering processes [14]. Augmented reality and mixed reality operate on a similar principle; the difference lies in the spatial context (as defined in [15]), which is present only in AR and absent in MR. In other words, digital images in AR are linked spatially with specific real-world objects, while MR visualizations are mostly disconnected and able to freely move around and interact in the blended virtual-real scene.

Virtual, mixed, and augmented reality are together referred to as Extended Reality (XR) technologies in today’s nomenclature, spanning the whole simulated reality continuum, as defined three decades ago by Milgram [16]. The current trend is the integration of the three, both on the hardware and the software level, and, frequently, applications can blend between virtual and mixed and between mixed and augmented [17], even using a single device having all these capabilities (examples of such devices are Meta Quest Pro or Varjo XR-3 headsets, available on the market since 2022).

From the engineering viewpoint, XR technologies are considered to be an integral part of the Industry 4.0 concept and a vital part of the smart factory concept [18]. Numerous researchers have investigated the use of Augmented and Virtual Reality in various stages of the product lifecycle, including design, assembly, quality control, and manufacturing simulation [19]. It is now generally accepted that all the XR technologies can be successfully implemented in industrial production and that they can greatly help in modern product development.

The medical domain, as well as medical production, is a prominent area of application of XR. For example, VR and MR technologies offer novel possibilities for preoperative planning and patient education. Virtual, augmented, and mixed reality, used together with haptics, can also be of great help in remote patient examination [20].

As regards medical production, VR technology offers compelling opportunities for the design phase. By utilizing VR, designers and engineers can create virtual models of patient-specific devices and iterate upon them in a virtual space. This allows for rapid customization and modification, as well as the ability to incorporate patient-specific anatomical data [21]. For example, VR allows for the precise measurement and modeling of residual limbs, ensuring optimal fit and functionality of the prosthesis. By incorporating VR-based design processes, researchers and practitioners can develop individualized upper limb prostheses that are tailored to each patient’s unique requirements. VR-based design also enables collaboration among multidisciplinary teams, including clinicians, engineers, and patients, fostering a more comprehensive and patient-centered approach to prosthetic development [22].

For individuals who require upper limb amputation, VR can simulate the expected outcomes of different prosthetic designs and aid in the decision-making process. Furthermore, VR-based environments can provide a platform for patients to familiarize themselves with their future prostheses and practice activities of daily living, thus reducing anxiety and improving the overall acceptance and adoption of the devices [4].

Virtual and mixed reality technologies also offer immense potential in the training and rehabilitation of individuals using upper limb prostheses. With VR, users can engage in immersive training simulations that replicate real-world activities, allowing them to practice and refine their prosthetic control and manipulation skills [4,23]. These simulations provide a safe and controlled environment for patients to develop confidence and proficiency in using their prostheses, ultimately enhancing their overall rehabilitation experience. Furthermore, mixed reality (MR) devices, such as Microsoft HoloLens, overlay digital content onto the user’s real-world environment, enabling real-time feedback and guidance during training sessions [11].

In addition to design and training, virtual and mixed reality technologies have other promising applications in the medical domain. VR can be used to simulate the expected outcomes of different prosthetic designs, aiding in the decision-making process for individuals requiring upper limb amputation [24]. Patients can virtually visualize and interact with different prosthetic options, allowing them to make informed choices based on their preferences and functional requirements. Moreover, VR-based environments can also be utilized to reduce anxiety and to improve patient acceptance and adoption of prostheses. By providing a platform for patients to practice activities of daily living in a virtual space, VR fosters familiarity and confidence in using the prosthetic device [25].

In summary, the integration of virtual and mixed reality technologies in the development of individualized three-dimensionally printed upper limb prostheses has the potential to revolutionize the field. By leveraging these technologies, researchers and practitioners can create customized prosthetic designs, provide immersive training experiences, and enhance patient acceptance and rehabilitation outcomes. The state-of-the-art analysis presented in this section highlights the advancements and possibilities offered by VR and MR in the specific context of upper limb prosthetics. It establishes the foundation for the experimental evaluation that will be conducted in this research.

To date, there has been limited exploration of the combined potential of the entire spectrum of these technologies in the lifecycle and development of rapidly manufactured personalized upper limb prosthetics. Studies presenting expert evaluation and comparison of various technologies from the XR continuum in such use are practically non-existent in the current literature. As such, the use of these technologies is still quite limited and mostly experimental, and, in the authors’ opinion, this is in spite of their huge potential. Recent study examples include a comparison of AR versus VR in driver assistance [26] and a general selection of XR methods for production based on case studies [27]. In these and other examples, academic experts were asked for their opinions in order to test and validate various technologies in certain use cases. However, in the available literature, there are no attempts at testing and validating all three XR technologies in the case of a personalized medical product. As such, the authors believe that the proposed approach is novel and not directly comparable with other methods.

This scientific paper aims to explore and evaluate the applications of VR and MR technologies as a part of the lifecycle of individualized upper limb prostheses. By presenting the results of the performed experimental studies, the authors seek to provide valuable insights for scientists, researchers, and practitioners in the field, ultimately contributing to the advancement of personalized prosthetic solutions and incorporating XR technologies into the design and production processes.

2. Materials and Methods

2.1. Research Concept and Plan

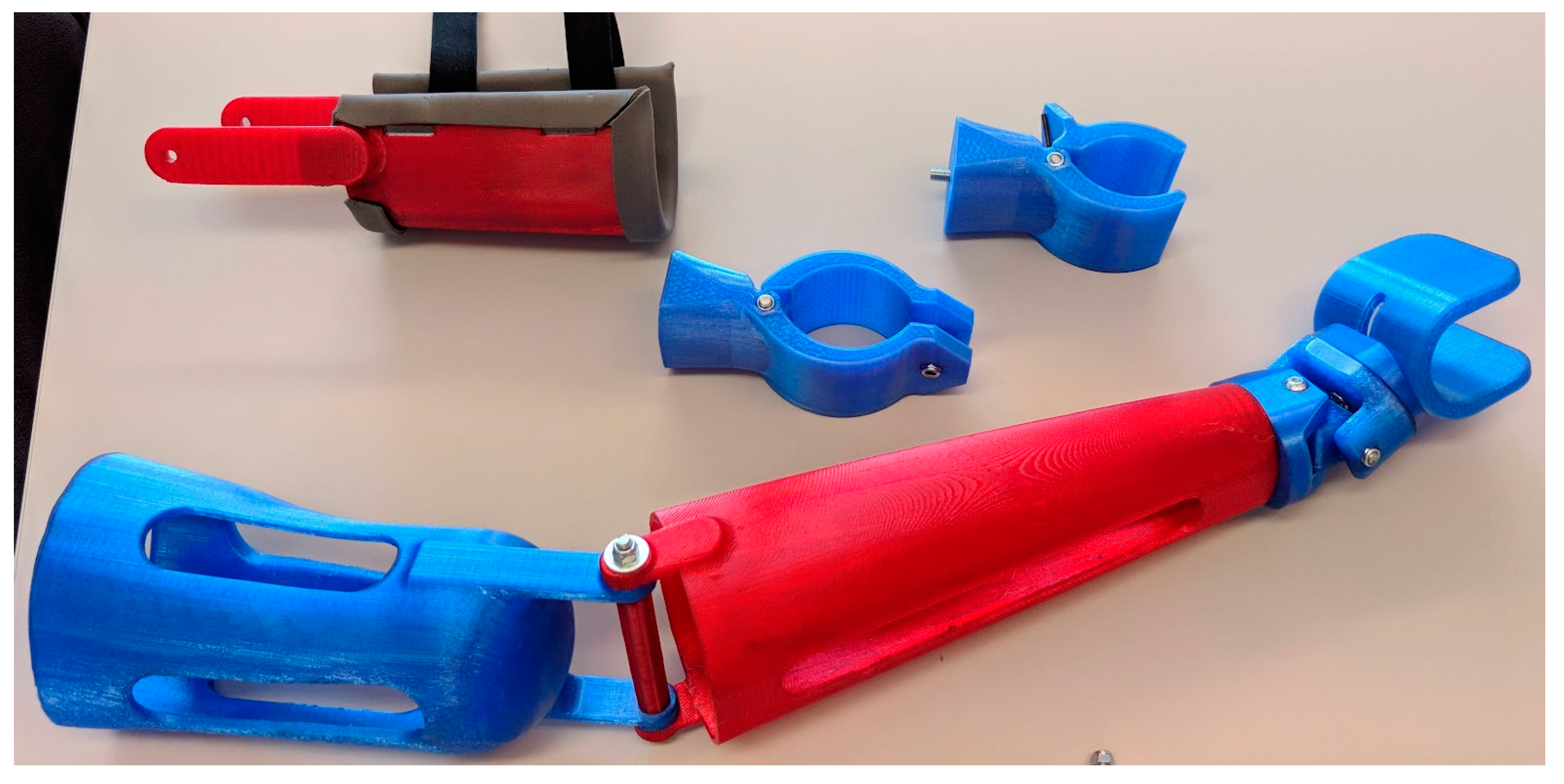

The aim of the presented research is to evaluate and compare all three basic XR technologies (Virtual Reality, Augmented Reality, and Mixed Reality) in the process of development of a customized, individually designed medical product, namely a modular prosthesis manufactured with 3D printing technologies (Figure 1).

The research is realized using the following assumptions:

The prosthesis model is prepared in a CAD system, using available archive anatomical data as gathered in some previous studies [28]. It is exported to an appropriate filetype for visualization in a 3D engine. Two different versions of the model are created: one for the configuration process (AR and MR) and the other for the assembly process (VR).

The models are imported into the 3D engine, with Unity game engine being employed for all the applications, and subsequent preparation of the applications takes place. Each application is created using different frameworks, with their own algorithms and scripting for interaction implementation. However, only free-to-use plugins and software development kits are used to ensure enhanced reproducibility of the process, while duly considering their inherent limitations.

The applications are tested among the group of purposefully selected experts. The experts all have an engineering background and expertise in the design and manufacturing of customized medical products. However, the majority of experts lack extensive prior experience with XR technologies, leaving them uncertain about what to anticipate from the technology. The expert group is also diversified in terms of age, gender, and ethnicity.

After testing, experts fill out an anonymous survey for the evaluation of a given technology. After testing all three applications, the experts fill out another survey, aimed at comparing technologies and their potential. All the answers, except for the open questions, are given on a 5-point Likert scale for easier comparison of the results. The experts are also free to exchange opinions among themselves and to ask any questions related to the technology, so as to be able to fully evaluate its potential in the product lifecycle.

In the final stage, results of testing, juxtaposed with researchers’ observations, are used to draw the final conclusions and recommendations of the use of XR technologies in the medical product development process.

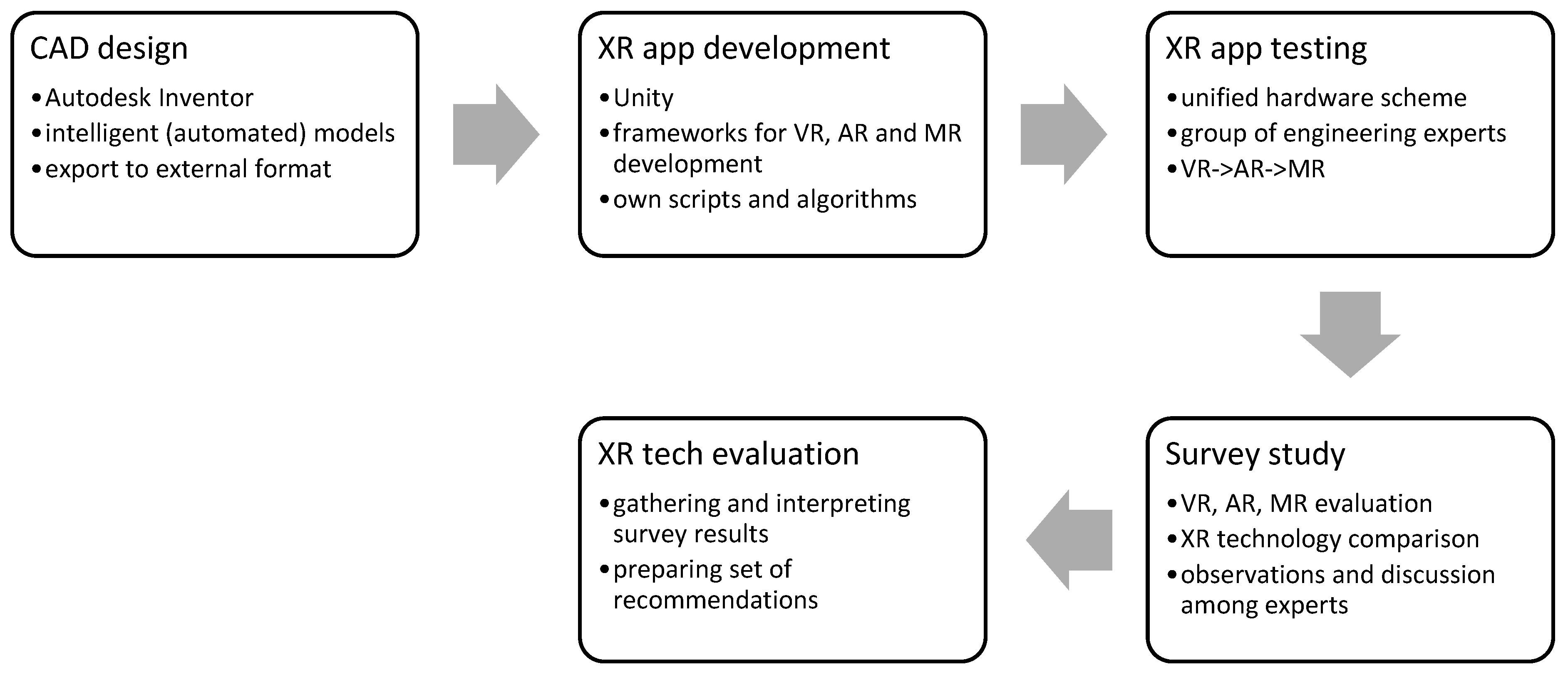

The scheme of research as described above is presented in Figure 2. More details are given in the subsequent technical sections.

2.2. Background and Motivation—AutoMedPrint System

The initial concept of the modular prosthesis, which is the basis of all the applications, was developed as part of the project “Automation of design and rapid production of individualized orthopedic and prosthetic products based on data from anthropometric measurements”, serving the development of the prototype AutoMedPrint system [28,29] and continuing previous long-term studies by the authors. The prosthesis design was created and iteratively improved in a number of studies [30], resulting in successful implementations for various patients, shown for illustration in Figure 3 (not part of this study).

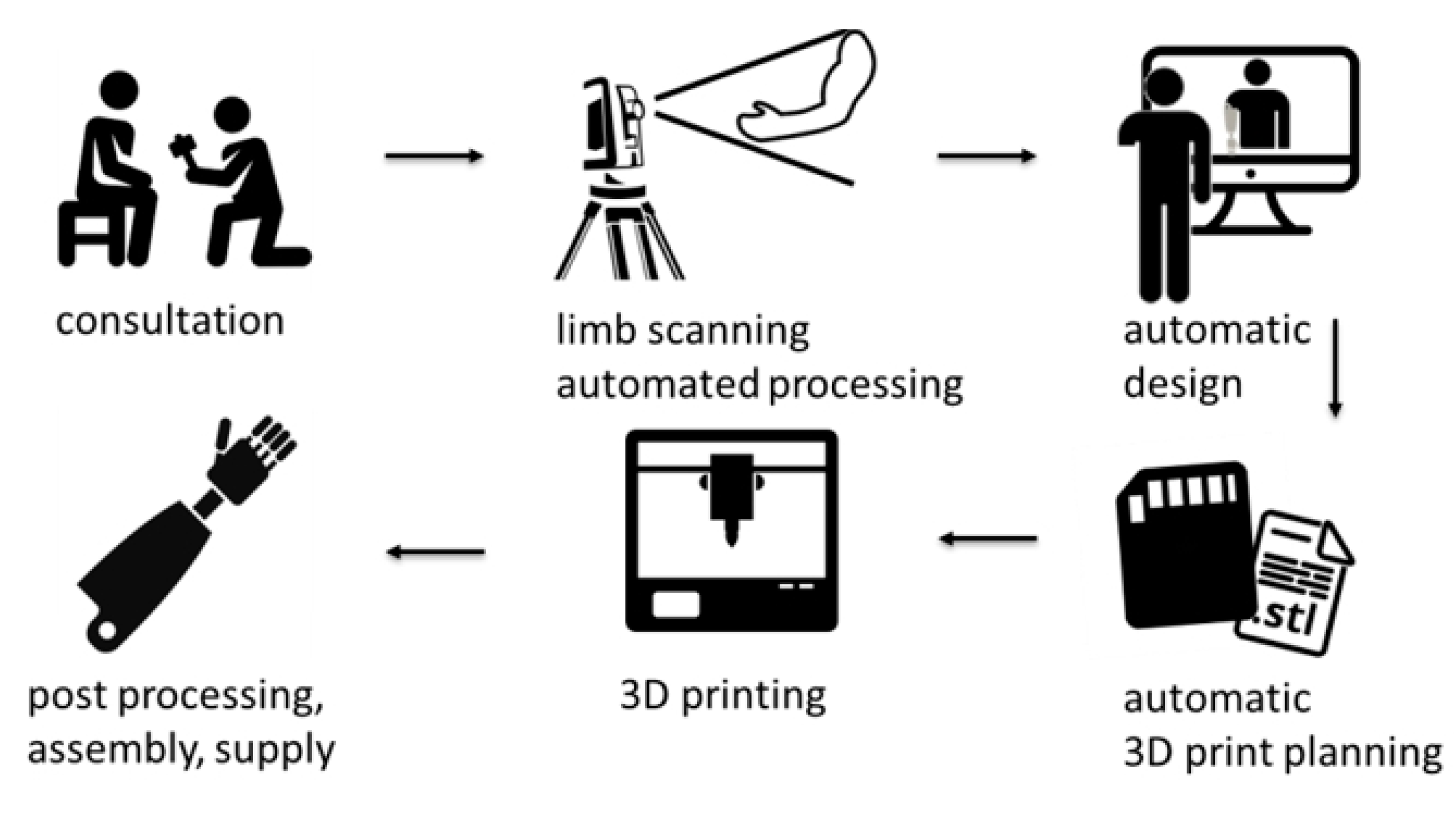

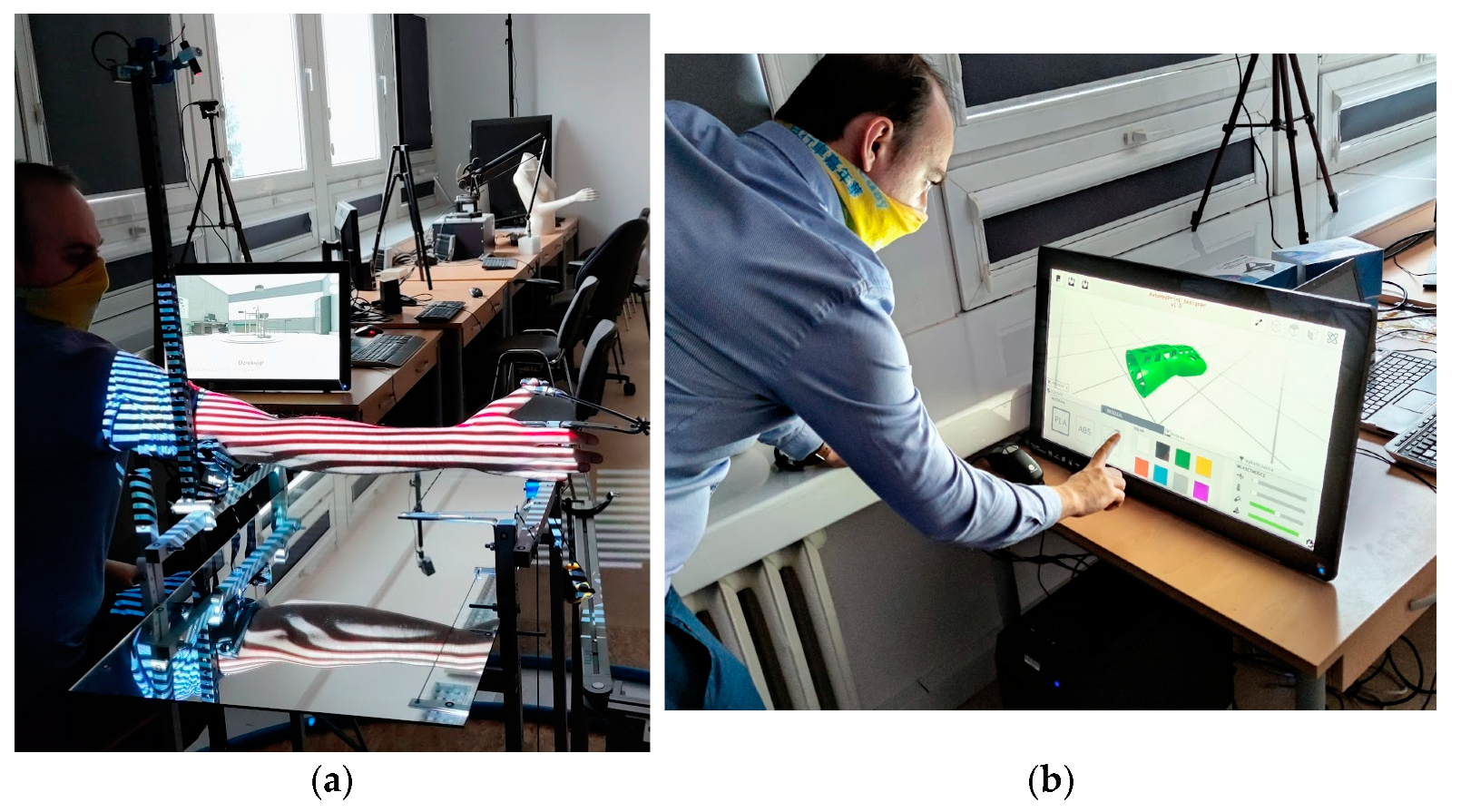

The AutoMedPrint system (awarded as the Polish Product of the Future in the year 2022) itself is a complex solution, containing hardware and software for realization of a full cycle of design of customized orthopedic and prosthetic devices. Apart from 3D scanning, CAD, and 3D printing modules, the AutoMedPrint system also contains a visualization module, which is used for real-time communication with the patients using the system. The scheme in Figure 4 presents how the system operates, while Figure 5 presents how it looks in its current, prototype phase. Currently, the system is not yet directly available as a commercial solution, and it is treated as a research tool. One of the authors’ overarching goals in their studies, including this one, is the creation of a solution for commercial purposes to popularize the automated design and production approach utilized in the system prototype.

It was noted by the authors that XR technologies could be successfully used in aiding the development of customized products, helping patients to familiarize themselves with the designed devices, to virtually configure and try them on, or even to assemble them in virtual space. Therefore, the underlying motivation of the studies discussed in this paper is to assess, among a group of experts, how particular XR technologies could be effectively integrated into the design and development process. The objective is to potentially achieve improved outcomes and increased user satisfaction, ultimately leading to more efficient utilization of custom-designed medical products in therapy. This aspect remains unresolved in the current literature. The obtained results will be used to refine the process of working with the system, both with child and adult patients, especially regarding the prosthetic devices.

2.3. XR Applications Development

2.3.1. Application Types and Data Preparation

The first stage of development was a selection of technologies and their matchup with basic application types used in the product lifecycle. The following decisions are made:

VR technology is assigned to one of the manufacturing process elements—the manual assembly process of the 3D printed prosthesis. In order to obtain more complex visualization and level of difficulty, it is decided to consider a mechatronic prosthesis, equipped with microcontrollers and sensors. It is decided to create a training application compatible with any PC VR headset, operated using a standard pair of goggles with controllers.

AR technology is assigned to the simplified configuration process, allowing one to visualize the prosthesis and change its basic modules and colors in the real environment. To ensure simplicity, it is decided to use a tablet device with the Android operating system, with interactions by use of touchscreen and with simple image markers.

MR technology is assigned to a mixed type of application, involving both the configuration process and some interactions performed with the prosthesis, such as virtual try-on, exploded view, and other manipulations. It is decided to create the application using a popular VR headset with a passthrough image of the real world and hand tracking interactions, thus creating an MR experience.

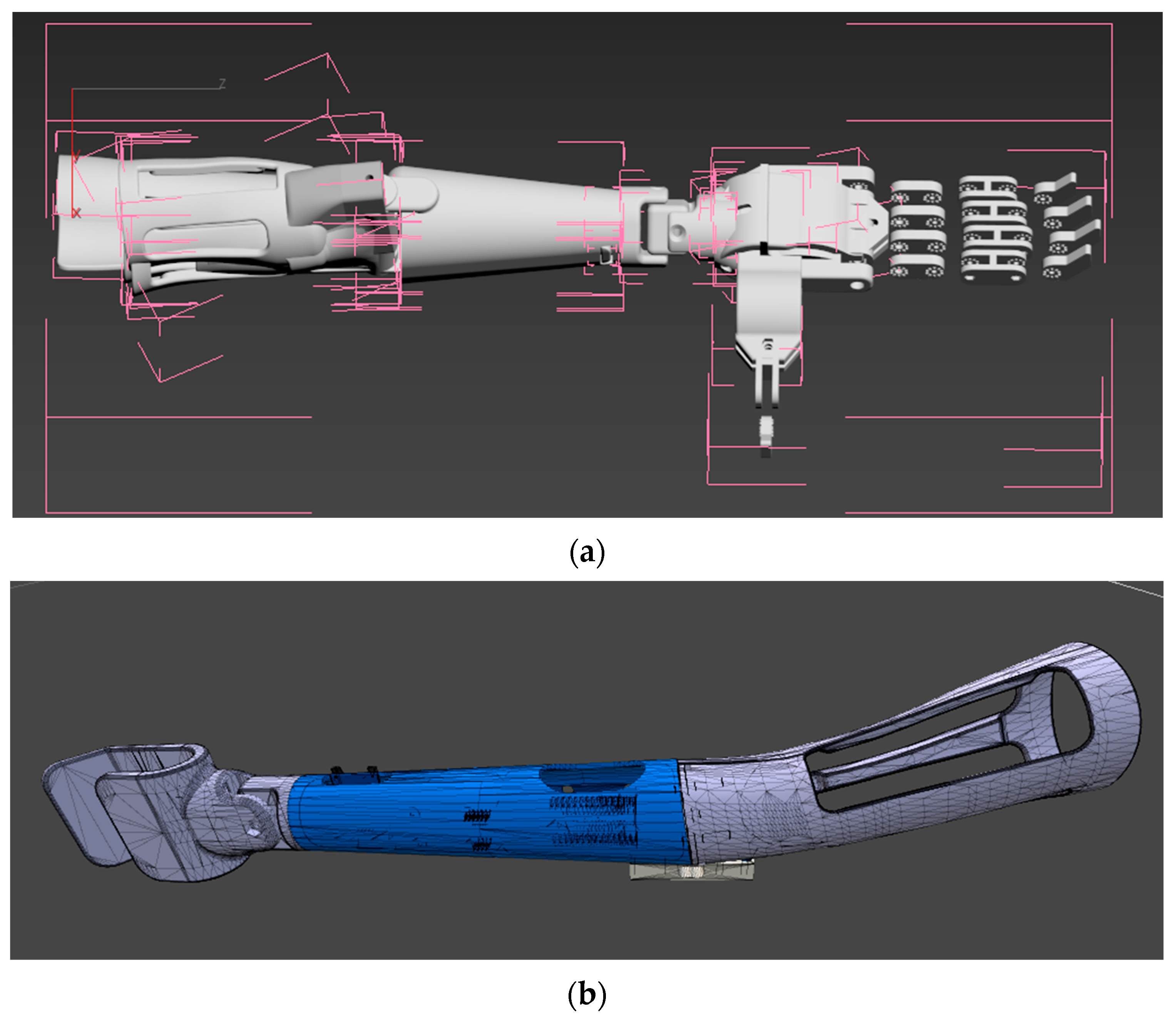

The initial data preparation work involves use of the modular CAD model [31] to prepare a set of 3D models, with some demonstrative modularity capabilities for the configuration process. The same model is also used to develop a single variant of a prosthesis for assembly instruction. Both models are presented in Figure 6.

Apart from the 3D model data of the products, the following other data is used in building the applications:

UI icons, created by the authors,

3D models and textures of environment (for the VR application), downloaded from multiple free sources and/or created by the authors in previous research,

scripts in the C# language, created in the previous research for scientific or didactic purposes.

When data packages are prepared, the actual work of development commences. All applications are created using the Unity engine (Unity Software Inc., San Francisco, CA, USA), with a free educational license granted to higher education institutions, in version 2019.4.37f1.

2.3.2. Virtual Reality—Assembly Training

The VR application was built using the aforementioned Unity Engine. The fundamental approach to incorporate VR functionalities involves transforming the basic Unity project into a VR-compatible version by leveraging the OpenVR library (integrated within Unity) and the SteamVR plugin for Unity, specifically version 2.7.3 (Valve Corporation, Bellevue, WA, USA). The SteamVR plugin is free for use, and that is why it was selected for further work. It allows one to create PC VR applications, compatible with all VR devices that work with the SteamVR desktop application (also produced by Valve). Therefore, most PC VR headsets are compatible with the created application (e.g., Meta Quest, HTC Vive, HP Reverb G2, and many others).

All the interactions are programmed either using SteamVR’s implemented code or the authors’ own scripts. Third party scripts are not modified. For visualization, a simulated texture of 3D printed “staircase” surface is applied as a combination of proper color and normal map settings.

The following assumptions are made for making the application:

The user starts inside a 3D visualization of a medical product development laboratory, which is created for this purpose by the authors.

In the beginning of the simulation, the product (prosthesis) is disassembled into pieces, and it is the user’s task to put them together—manually—to create a final product.

Movement in the working space is realized in two ways: using the user’s own movements and positional tracking (“on own legs”) or—for longer distances—via teleportation, operated by an analog stick of the VR controllers.

The user sees his simulated hands in the simulation. Interactions are realized using VR controllers—grasping the objects (parts) can be realized by touching them and holding either the “trigger” or the “grip” button of a controller representing a given hand.

Operations requiring use of tools in real life are simplified, and they happen automatically when the user puts a given part in a specific zone at the assembly basis.

It is possible to show helpers in the application—after interacting with a 3D cube in the work environment, assembly instructions are displayed, along with the names of all the parts.

It is impossible for the user to perform the assembly process in a wrong way due to imposed logical constraints. Additionally, it is not possible to disassemble the prosthesis after assembling it.

After finalizing the assembly, the user is congratulated, and the process ends. The user can operate the resulting prosthesis to try it on and to simulate movements.
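The logical constraints described in the assumptions above (a part is accepted only in its designated zone, wrong placements are ignored, and disassembly is blocked) can be illustrated with a short language-agnostic sketch. The Python code below is not the authors’ Unity/C# implementation; the part names, zone names, and the strictly linear assembly order are assumptions made only for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AssemblyStep:
    part: str  # hypothetical part name
    zone: str  # hypothetical snap zone on the assembly base


@dataclass
class AssemblySession:
    steps: list[AssemblyStep]
    completed: list[str] = field(default_factory=list)

    @property
    def finished(self) -> bool:
        return len(self.completed) == len(self.steps)

    def try_place(self, part: str, zone: str) -> bool:
        """Accept a part only if it is the next expected part in its zone."""
        if self.finished:
            return False
        expected = self.steps[len(self.completed)]
        if (part, zone) != (expected.part, expected.zone):
            return False  # wrong part or wrong zone: state is unchanged
        self.completed.append(part)
        return True

    def try_remove(self, part: str) -> bool:
        """Disassembly is never allowed once a part has been attached."""
        return False


def demo_session() -> AssemblySession:
    # Assumed three-step sequence; the real prosthesis has more parts.
    return AssemblySession([
        AssemblyStep("socket", "zone_a"),
        AssemblyStep("forearm", "zone_b"),
        AssemblyStep("end_effector", "zone_c"),
    ])
```

Because every rejected action leaves the state untouched, the user cannot reach an invalid assembly, which mirrors the behavior described for the training application.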

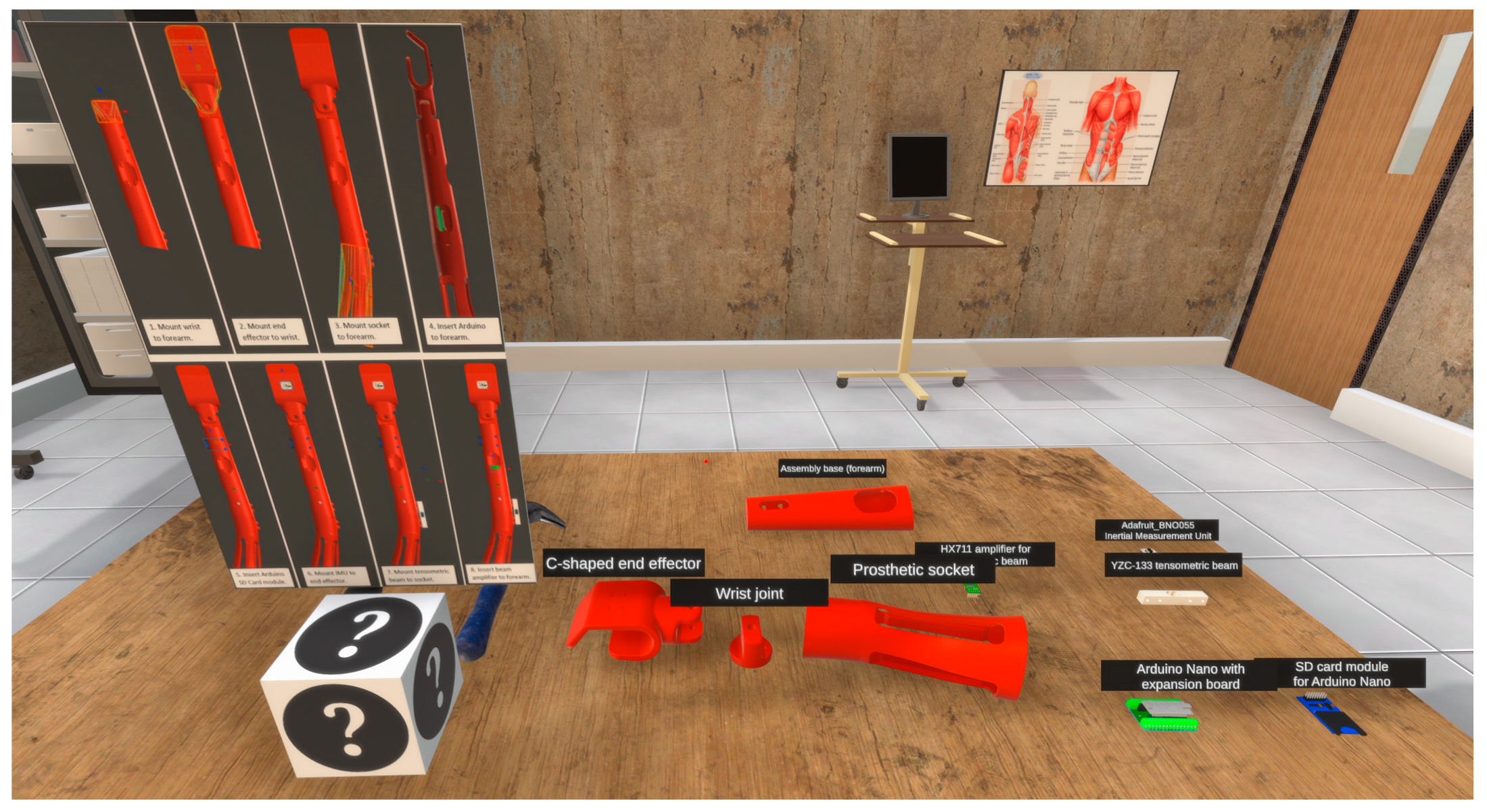

Figure 7 shows the simulated environment, as well as the user’s starting location and initial state of the parts.

Figure 8 shows the user while in the middle of the assembly procedure, aided with helpers.

Figure 9 shows the completion of the assembly and a congratulation message for the user.

The application was built as an executable Windows application and was tested in that form during the experimental phase of the research, using Meta Quest/Quest 2 headsets.

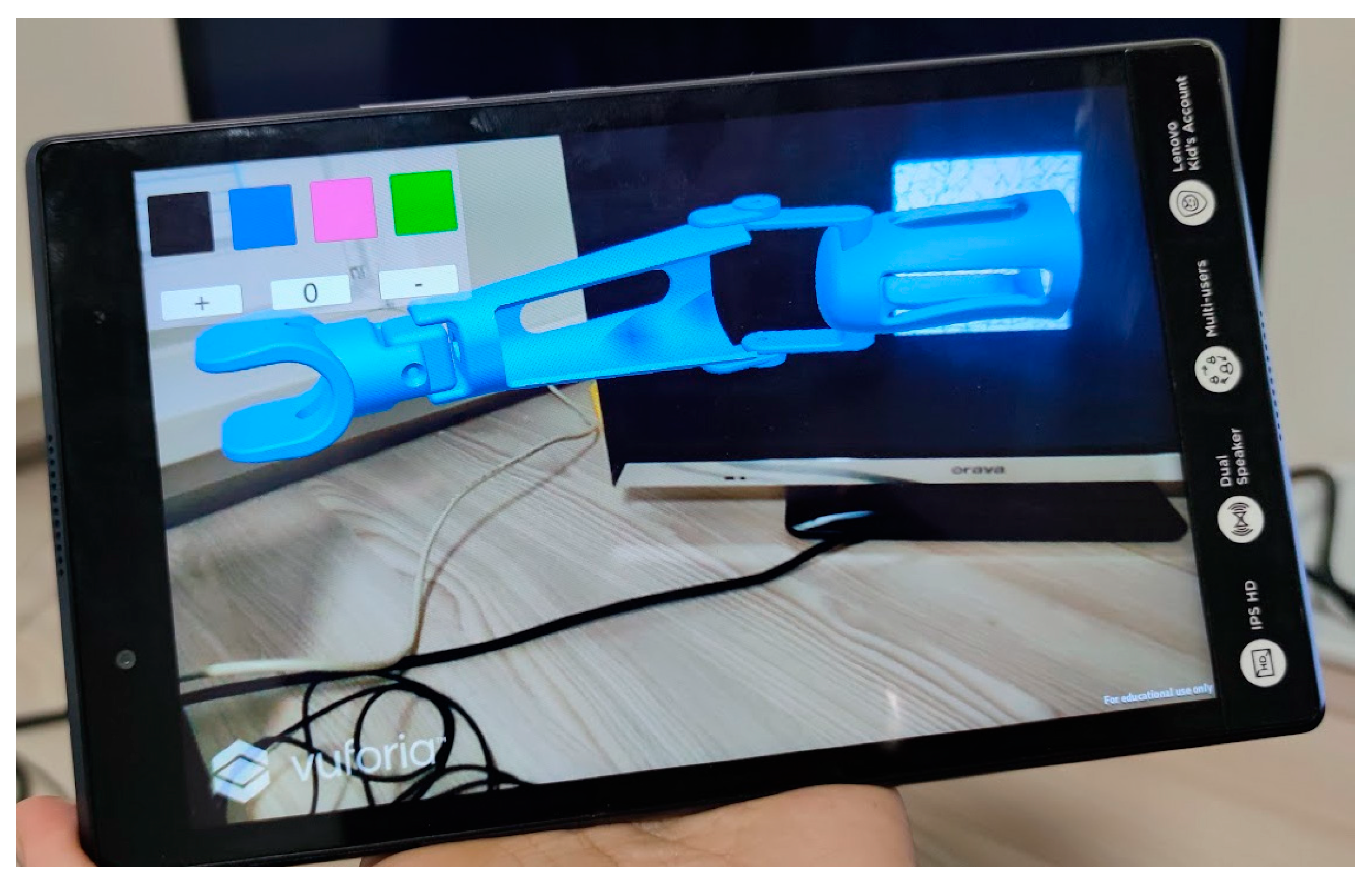

2.3.3. Augmented Reality—Configurator

For the AR application, the Android operating system was selected as a target platform, with a tablet or a cellphone as the target devices. For basic AR functions, such as marker recognition, the free Vuforia package (PTC, Boston, MA, USA) was installed from inside the Unity package manager. Vuforia is a package that handles the target device’s built-in camera, recognizes markers, and renders 3D content over them. It needs a license, but one can be obtained for free for educational purposes.

The application is built with the following assumptions:

The prosthesis is rendered over a single graphic marker, having the form of a randomized grayscale image (Figure 10).

When the prosthesis 3D model is visible, the user can operate a simple graphical user interface, with icons for prosthesis color change and additional icons for simple rotational animation in the elbow axis (Figure 10).

A second randomized graphic marker is used to render a 3D interface, with 3D icons enabling switching of modules of the prosthesis; once the icons are visible, the user can touch them to switch between different end effectors and sockets of the prosthesis (Figure 11).

The markers can be printed or displayed horizontally or vertically. Once a marker with the prosthesis is recognized, the prosthesis will stay in the camera’s field of view as long as the environment does not change, so the prosthesis model can be rendered even when the marker is not directly visible.

The markers can also be moved, and the prosthesis and 3D UI models will move along with them. For virtual try-on, a marker can be placed on the user’s limb (or their stump, if an actual patient is using it).

The application has been deployed to some Android devices—7″ and 11″ tablets. For the actual experiments, the larger device is used for a better clarity of image.
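The configurator behavior described above (icons that change the color and switch end effectors and sockets) amounts to a small piece of state that the UI callbacks update and the renderer reads. A minimal Python sketch is given below; it is not the authors’ C# code, and the option lists, the cyclic switching, and all names are hypothetical, since the paper does not enumerate the available colors or modules.

```python
from dataclasses import dataclass

# Hypothetical option lists, used only for illustration.
COLORS = ("white", "blue", "red")
END_EFFECTORS = ("hook", "gripper", "hand")
SOCKETS = ("short", "long")


@dataclass
class ProsthesisConfig:
    color: int = 0
    end_effector: int = 0
    socket: int = 0

    def next_color(self) -> None:
        # Called when a color icon is touched; wraps around at the end.
        self.color = (self.color + 1) % len(COLORS)

    def next_end_effector(self) -> None:
        self.end_effector = (self.end_effector + 1) % len(END_EFFECTORS)

    def next_socket(self) -> None:
        self.socket = (self.socket + 1) % len(SOCKETS)

    def describe(self) -> dict:
        # The rendering layer would read this to show the matching 3D models.
        return {
            "color": COLORS[self.color],
            "end_effector": END_EFFECTORS[self.end_effector],
            "socket": SOCKETS[self.socket],
        }
```

Keeping the configuration separate from the marker tracking means the same state could, in principle, drive both the tablet view and a headset view.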

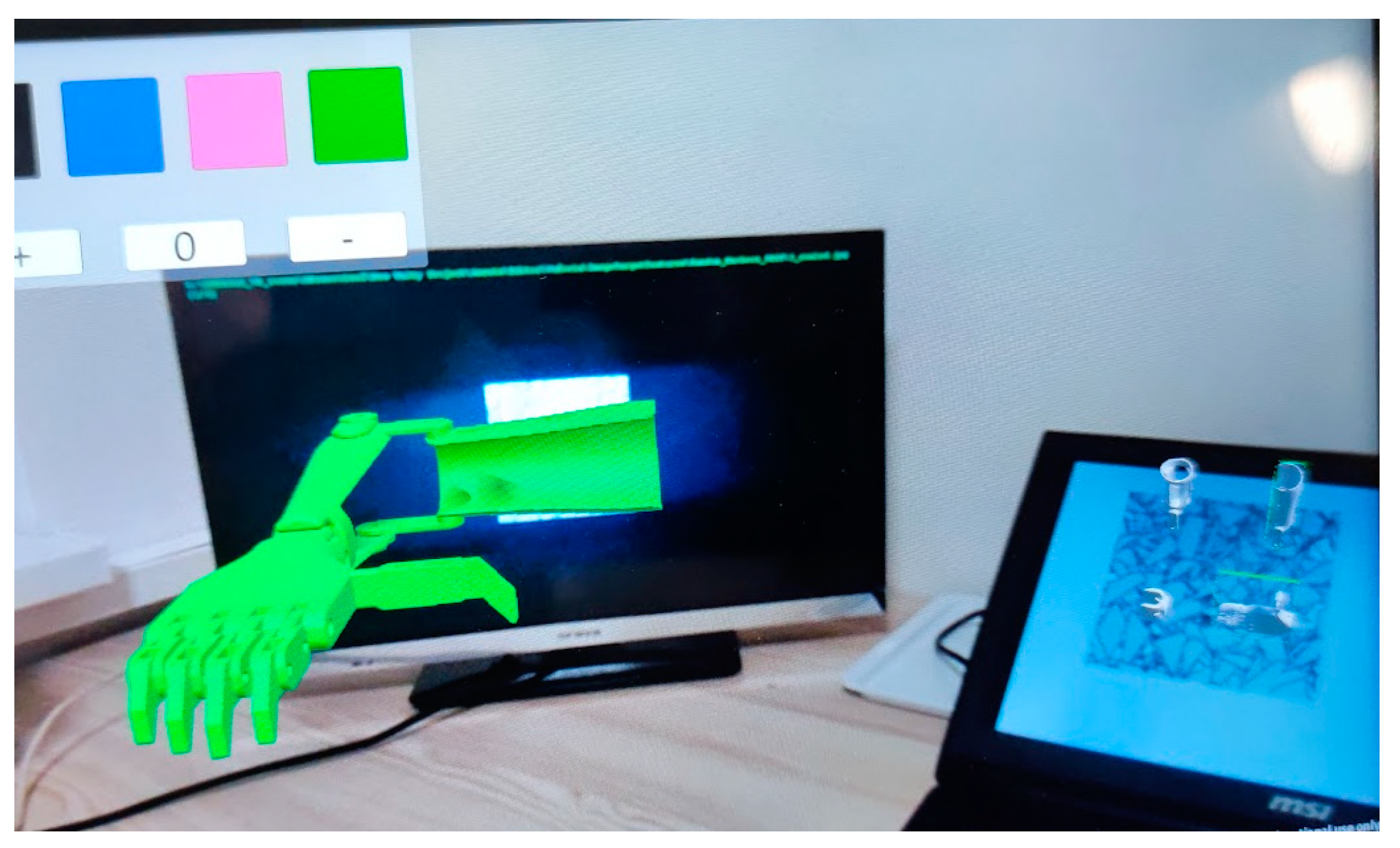

2.3.4. Mixed Reality—Configurator and Try-On

Like the two previously described applications, the MR application is built using the Unity engine. Just like the AR application, it targets the Android platform. However, this time, a headset is selected as the device of choice. The application is designed, deployed, and tested using the Meta Quest 2 goggles (Meta Platforms, Inc., Menlo Park, CA, USA), leveraging their feature of displaying the user’s real-world surroundings through the built-in cameras on the device’s screens, known as passthrough. The view is in grayscale, but it is enough for the user to remain fully aware of their surroundings, including their own body.

For building the application, a free Mixed Reality Toolkit package—MRTK (Microsoft Corporation, Redmond, WA, USA)—was used. The empty Unity project is converted to a Mixed Reality project by installing the MRTK package, along with hand interaction examples. Moreover, the Oculus Integration package by Meta was installed into the project, and the basic scene is prepared accordingly to enable passthrough in the Meta Quest device.

The application is built with the following assumptions:

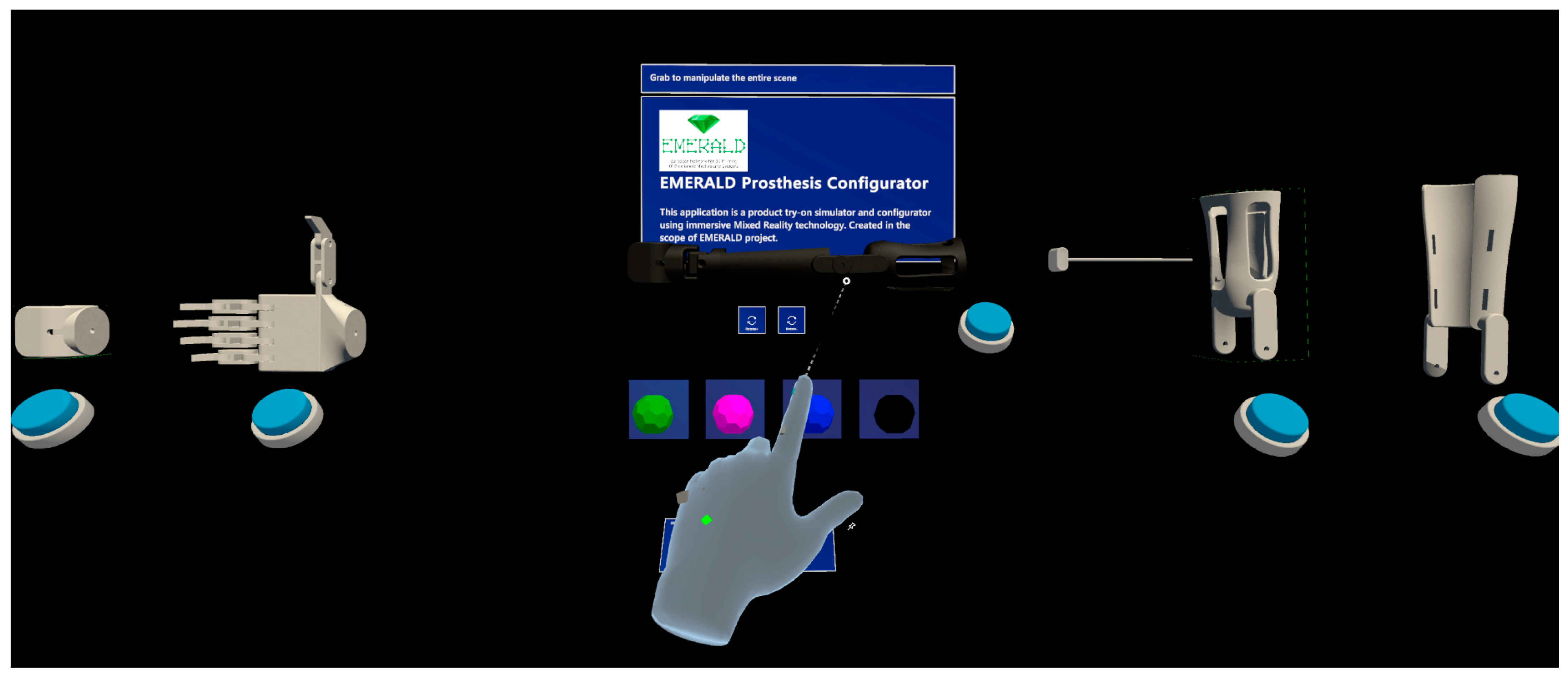

The user starts in front of a 3D visualization of a product, surrounded by various user interface elements (both 3D and 2D icons), as shown in Figure 12. The user can always see the passthrough layer (i.e., his real-world surroundings), but this image cannot be captured by means of screen or video capture; that is why, in all the Figures depicting MR, the background is black.

Using the user interface (UI), the user is able to make configuration changes—identical to the AR app (colors, modules)—but all the icons are 3D and operated through touch gestures.

Movement in the working space is realized using the user’s own movements and positional tracking (“on own legs”)—and, as the real world is all visible, no teleportation is possible. However, the user can “reset” his starting point to see the UI at the center of his location again (by using the Meta Quest context menu) or drag the whole scene contents with him. As such, there are no positional constraints, and the virtual prosthesis can be “taken” anywhere in the physical world.

The user sees his simulated hands in the simulation, superimposed on real hands (the rendered hands can be turned off). Interactions are realized using hand tracking and natural movements (grasping, pointing at objects from a distance, tapping, etc.). VR controllers are also an option if, for some reason, hand tracking does not work properly for a given user. If they are turned on and in the field of view, hand tracking is automatically disabled, and it is enabled once again when the controllers are put away.

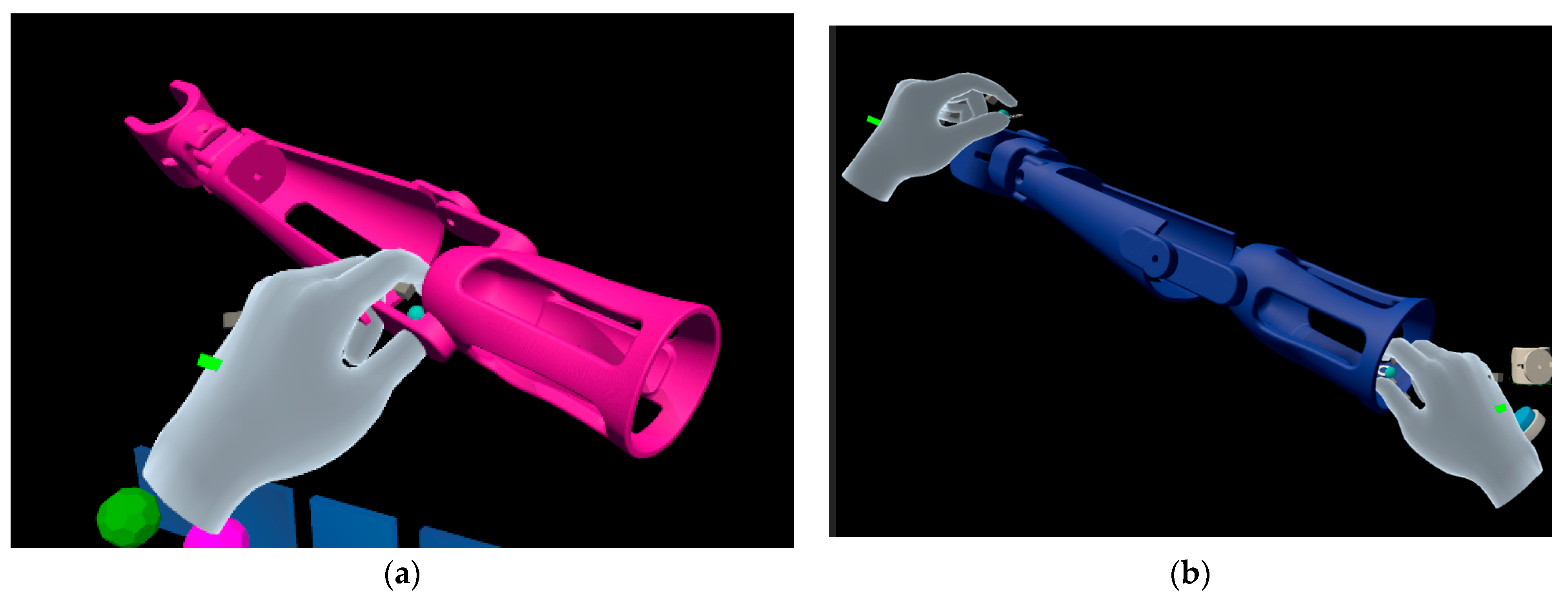

The main 3D model (the prosthesis) can be operated with one hand or two hands. One-hand interaction (Figure 13) allows one to drag around and rotate the object (the pivot depends on the grasping point), while two-hand interaction enables scaling (some limits were set to prevent the user from obtaining extreme sizes of the prosthesis).

Using the UI, it is also possible to animate the rotating elbow of the prosthesis, as well as making an “exploded” view by using a 3D slider.

The application is deployed to a couple of Meta Quest 2 devices (set in developer’s mode to enable that) and tested only using that particular device type.
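The two-hand scaling with size limits mentioned in the assumptions above can be expressed as a simple rule: the scale follows the ratio of the current to the initial distance between the hands and is then clamped. The Python sketch below illustrates this rule only; it is not the toolkit’s or the authors’ implementation, and the limit values and function name are assumptions.

```python
import math

# Assumed limits; the paper states only that "some limits were set".
MIN_SCALE = 0.5
MAX_SCALE = 2.0


def two_hand_scale(start_scale, start_left, start_right, left, right,
                   min_scale=MIN_SCALE, max_scale=MAX_SCALE):
    """Return the new uniform scale of the grabbed object.

    start_left/start_right are the hand positions (x, y, z) at grab time,
    left/right are the current hand positions.
    """
    start_distance = math.dist(start_left, start_right)
    if start_distance == 0:
        return start_scale  # degenerate grab: keep the current scale
    raw = start_scale * math.dist(left, right) / start_distance
    # Clamp so the prosthesis cannot become extremely small or large.
    return max(min_scale, min(max_scale, raw))
```

For instance, moving the hands from 0.2 m to 0.3 m apart scales an object at scale 1.0 by a factor of 1.5, while spreading them much further stops at the upper limit.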

2.4. The Course of the Experimental Study

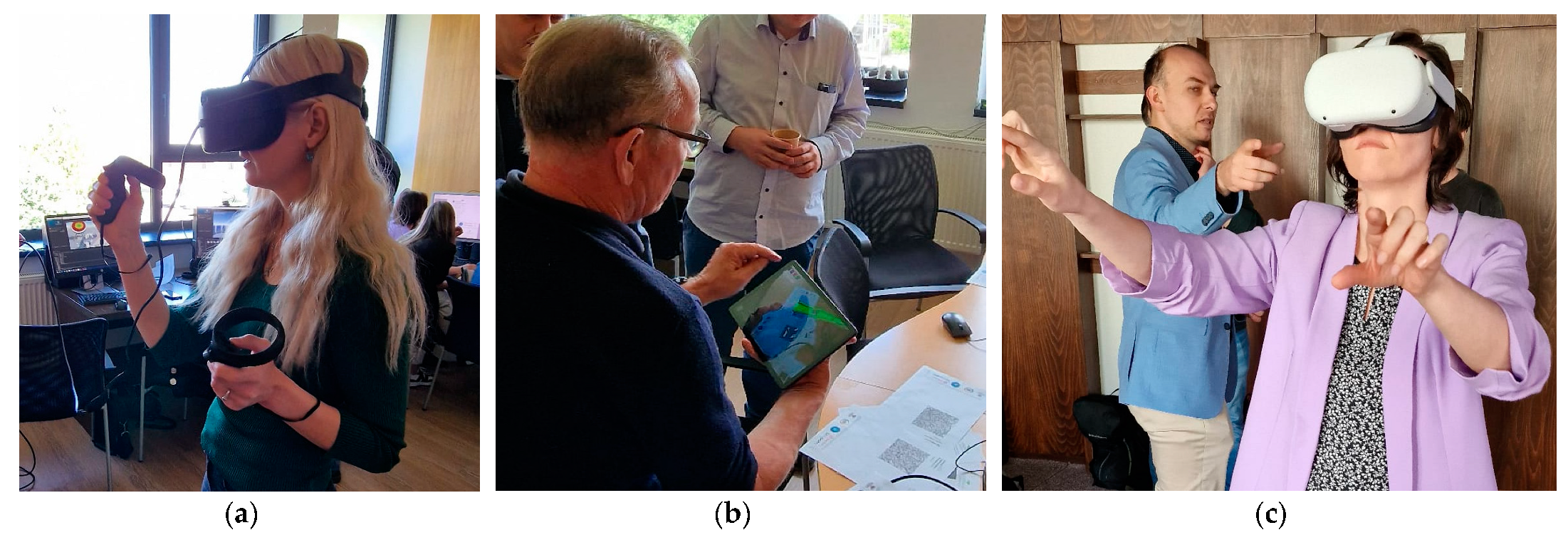

The experimental studies involved conducting organized tests on the capabilities of all three applications. These tests were carried out by an international group of experts who possess expertise, including engineering degrees, in areas relevant to design, mechatronics, and the development of medical products. The group was made of 14 people, aged between 22 and 65. The studies were organized as follows:

The first stage was the presentation of the application. The idea of the studies was presented to the experts, as well as the types of applications and their capabilities.

The experts could then familiarize themselves with all the XR technologies, playing with the devices and various interactive scenarios, if necessary (mostly for experts with no prior XR technology knowledge).

Following the experts’ familiarization with the technology, the actual testing phase was initiated. Each expert individually tested all the technologies sequentially, in the following order: VR → AR → MR. It was required for the entire group to complete testing of one application before moving on to the next.

In the VR application, Meta Quest goggles were primarily used for testing. The assembly scenario was realized once, until the final message, and the testers were able to stay a bit longer in VR space if they wanted to (time was not measured or otherwise constrained).

For AR application, an 11″ tablet was used for testing. The experts played with the application using all its options and testing various arrangements of printed and displayed markers. No time constraints were imposed.

Regarding the MR application, Meta Quest 2 goggles were used for testing, with full utilization of the hand-tracking technique. The experts played with the application until all the features were thoroughly tested (again, there were no time constraints).

After testing each technology, a dedicated survey was filled in. After all the tests were completed, a fourth survey was filled out (a comparison of different XR technology capabilities). The surveys contained mostly closed questions, using 5-point Likert scales for better visual comparison. Apart from that, there were open questions at the end of each survey.

During and after finalizing the whole testing procedure, various aspects were discussed, and the observations of experts were recorded.

Between testing particular technologies, certain pauses were made to allow for the possible effects of cyber sickness to wear off. The whole procedure of testing was realized during a single working day (8 h).

Figure 14 illustrates the particular phases of testing.

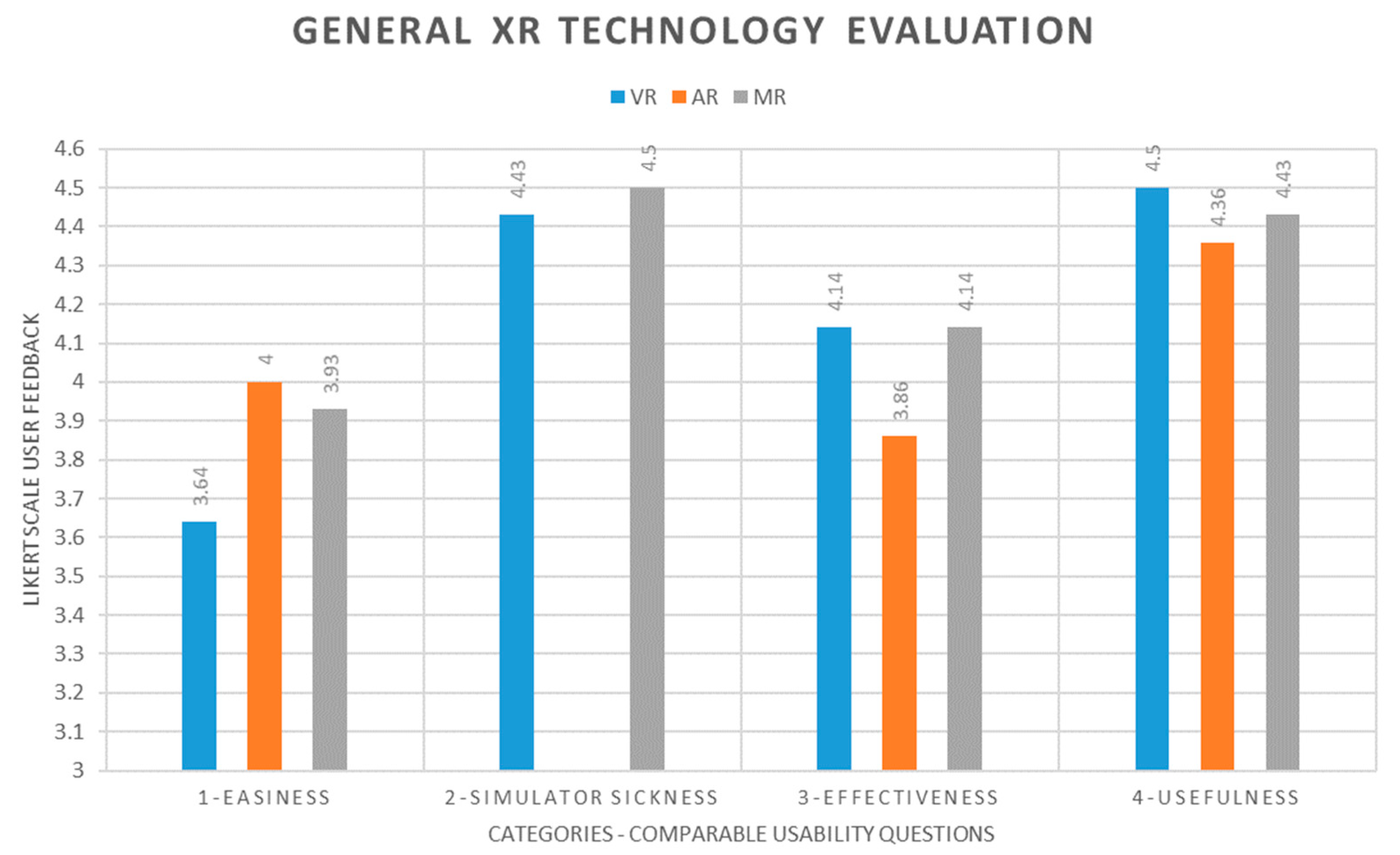

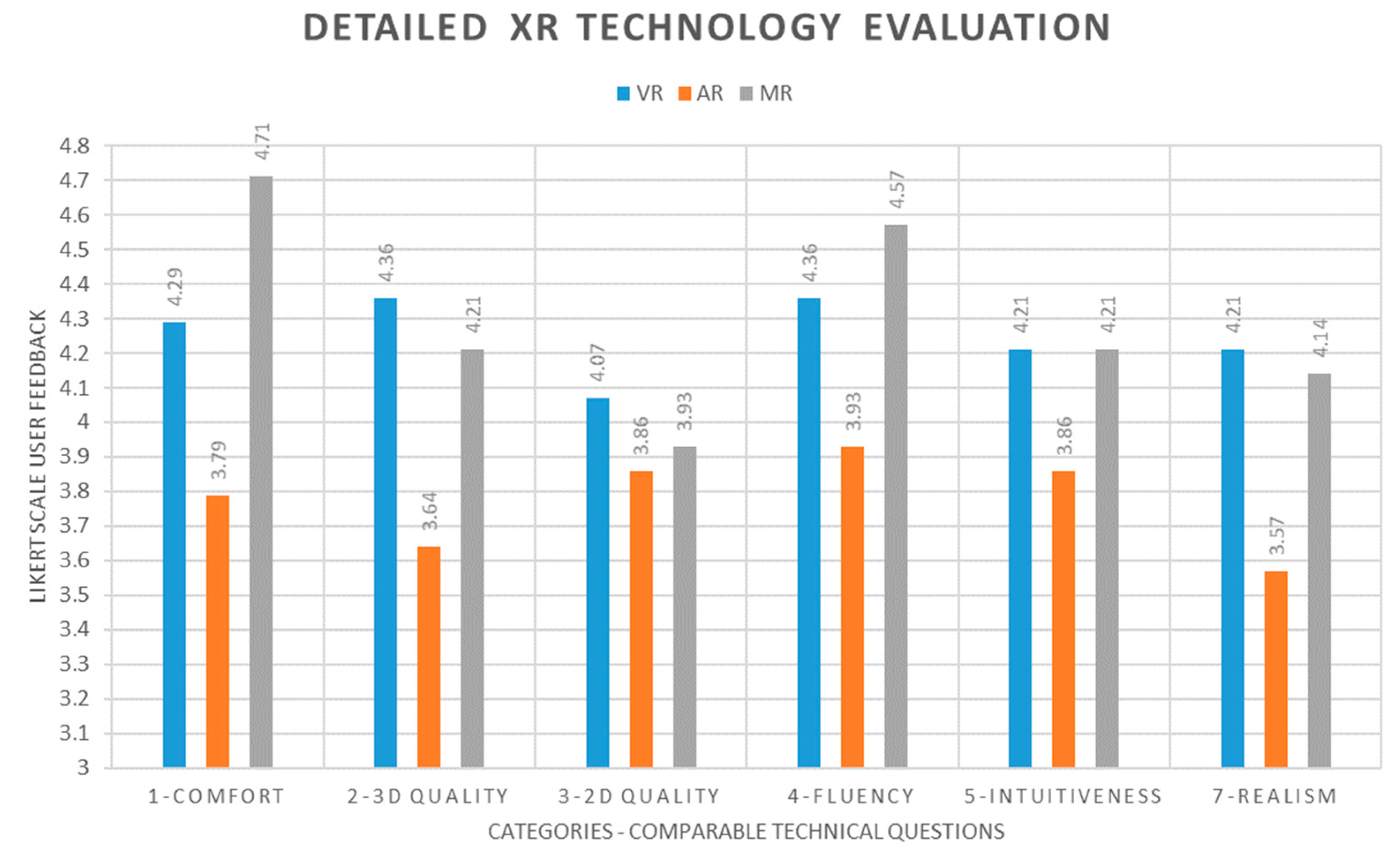

The first three surveys pertaining to particular technologies are divided into two parts:

general questions (four or three) to evaluate difficulty, effectiveness, usefulness, and simulator sickness symptoms (the last question is not asked in the case of AR, as no headset is used).

detailed technical questions, a bit different to each application, to evaluate, among other things, the quality of 3D and 2D graphics, fluency, intuitiveness, realism, and other interaction features.

Quantitatively, the surveys contain 11 (VR, MR) or 10 (AR) closed, 5-point Likert scale questions, with one open question (optional comments).
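Closed questions on a 5-point Likert scale are typically summarized per question by the number of answers, a central value, and the distribution of answers. The Python sketch below shows one possible way of doing this; it is not the authors’ analysis procedure, and the function name and the data layout (a mapping from question label to a list of answers) are assumptions.

```python
from statistics import mean, median


def summarize_likert(responses):
    """Summarize 5-point Likert answers.

    responses: dict mapping a question label to a list of integers 1..5.
    Returns, per question, the answer count, mean, median, and the
    number of answers for each scale value (index 0 is value 1).
    """
    summary = {}
    for question, answers in responses.items():
        if not answers:
            raise ValueError(f"no answers for {question!r}")
        if any(a not in (1, 2, 3, 4, 5) for a in answers):
            raise ValueError(f"answers for {question!r} must be in 1..5")
        summary[question] = {
            "n": len(answers),
            "mean": mean(answers),
            "median": median(answers),
            "counts": [answers.count(value) for value in range(1, 6)],
        }
    return summary
```

Since Likert data are ordinal, the median and the answer distribution are usually reported alongside the mean rather than the mean alone.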

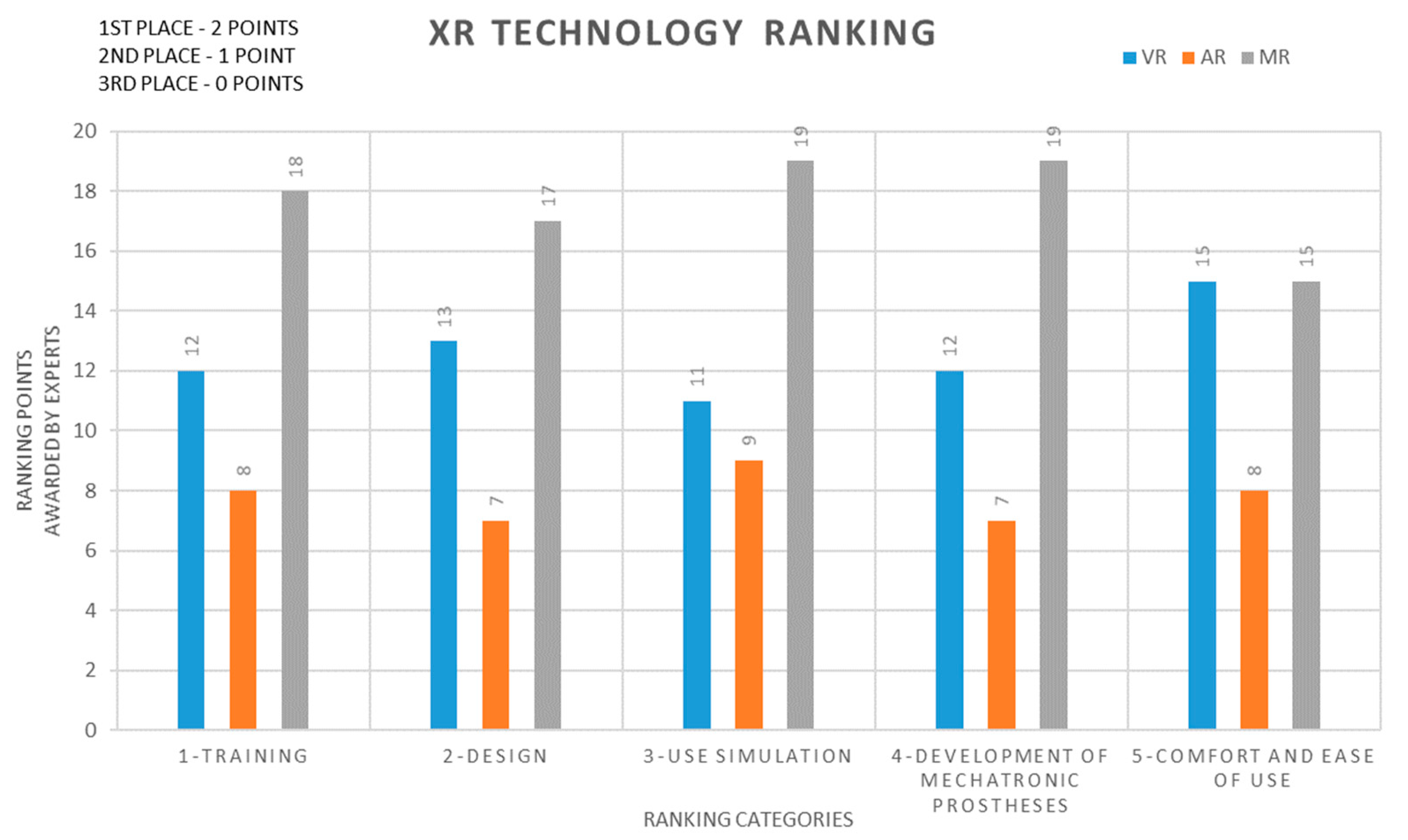

The fourth survey, for comparison of XR technologies, contains five questions. Each requires the expert to produce a ranking by writing the numbers 1, 2, and 3 next to the names of the technologies. The first four questions evaluate the potential in training, product design and configuration, product use simulation, and the development of biomimetic mechatronic devices. The fifth question ranks the XR technologies by their general comfort and ease of use.
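Rankings of this kind can be aggregated, for example, by the mean rank of each technology and by the number of first places it receives. The Python sketch below illustrates such an aggregation for a single question; it is not the authors’ procedure, it assumes that rank 1 denotes the best technology, and the function name and data layout are hypothetical.

```python
TECHNOLOGIES = ("VR", "AR", "MR")


def aggregate_rankings(ballots):
    """Aggregate expert rankings for one question.

    ballots: list of dicts mapping each technology to its rank (1, 2, or 3),
    where rank 1 is assumed to be the best.
    Returns (mean_rank, first_places, order), with order sorted best to worst.
    """
    if not ballots:
        raise ValueError("at least one ballot is required")
    for ballot in ballots:
        if sorted(ballot[t] for t in TECHNOLOGIES) != [1, 2, 3]:
            raise ValueError("each ballot must use ranks 1, 2, and 3 exactly once")
    mean_rank = {
        t: sum(b[t] for b in ballots) / len(ballots) for t in TECHNOLOGIES
    }
    first_places = {
        t: sum(1 for b in ballots if b[t] == 1) for t in TECHNOLOGIES
    }
    order = sorted(TECHNOLOGIES, key=lambda t: mean_rank[t])
    return mean_rank, first_places, order
```

A lower mean rank indicates a technology that the experts placed higher on average; ties in the mean rank keep the VR, AR, MR listing order.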

The surveys (full texts of questions as presented to the experts) are contained in Appendix A of this paper.

4. Discussion

In the performed experiments, the VR and MR technologies gathered more positive and enthusiastic feedback than the AR technology. This could be attributed to their real efficiency and capabilities, as well as to the limitations of the simple AR technology implemented (a tablet with simple image marker recognition). Part of that increased score, in the authors’ opinion, comes from the so-called “wow effect” of immersive technologies on inexperienced users. However, this does not mean that the expert evaluation is less valid, especially in the presented use case scenario (inexperienced factory workers training in VR, or inexperienced clients using MR).

The XR technologies are still relatively unknown to the general public, and, as such, their use will often be met with the “wow” effect, bringing a more positive evaluation of the technology itself, as well as a more enthusiastic approach to the presented product. That is very important when working with prosthetic patients, as many of them (especially children) do not accept their prostheses and have a hard time working with them and using them, which can further deteriorate their health and wellbeing [32]. That is why using simple applications, even in the form of games or visualizations with the “fun factor”, combined with immersive equipment (VR/MR goggles), can potentially increase the acceptance and use rate of prosthetic devices among younger patients.

It was apparent from the technology comparison and ranking that Mixed Reality made the best impression on all the experts. This might be caused by many factors: the standalone aspect of the application (mobility), maintaining contact with the real world, intuitive and enjoyable manipulation by hand tracking, simplicity, etc.

A very important aspect of the tested applications is their ease of use. It was noted that all the experts were able to utilize all the features of the presented applications, albeit with some minor difficulties. In terms of immersive technologies (VR/MR), it was noted that switching to hand tracking, as opposed to the use of controllers, had a positive effect on both perceived effectiveness and usefulness, as well as on the fluency and intuitiveness of interaction. It was also noted by the researchers that the time required to familiarize oneself with the MR technology with hand tracking was considerably shorter than in the case of VR with controllers and their buttons. In the actual experiment, the difference was very apparent, but in the scores resulting from the survey it is not that significant (VR is slightly worse, but there is no large difference in numbers).

However, it must be mentioned that some experts indicated that the quality of two-dimensional elements could be improved. Some of them also experienced slight or (rarely) moderate symptoms of cyber sickness while using immersive equipment. The surveys were anonymous, but from the authors’ observations, a relationship with age and “digital” experience is visible: older users, with less experience in digital technologies, tended to have more problems with perceiving user interface elements and were more prone to cyber sickness symptoms. However, the sample was too small to demonstrate this statistically in the study; further, more detailed experiments with larger groups of users are required.

In the general evaluation of XR technology, based on the study results, it is worth noting that the experts were very positive about the prospect of XR technologies replacing the traditional ways in which many processes in a personalized product lifecycle are carried out. More than half of the respondents indicated that either VR or MR could entirely replace traditional training or configuration with the use of physical products. Such answers were also given (albeit more rarely) in the case of AR. That is a very promising direction for the development of XR technology, but the observed reality is far from that: although XR is present in the media and known by many, it is still relatively rarely encountered in the lifecycle of many products, especially in prosthetics.

The results of the studies presented in this paper should be treated as an indication that this situation might be changing soon and that the technology is almost ready to be popularized and included as often as possible, especially as a means of communication between designers, manufacturers, doctors, patients, and salesmen. The experiments therefore confirm that, in the view of the experts, XR is a technology that can be used for real work, not only as an attractor and a marketing device. According to the experts, and in the authors’ opinion, the XR technologies presented in this paper will be used in the future in all medical centers, as well as in the educational field.

5. Conclusions

This paper presented an experimental evaluation of the applications of virtual, augmented, and mixed reality (VR, AR, MR) technologies in the context of individualized three-dimensionally printed upper limb prostheses. Through complex and advanced applications, subjected to experimental verification, the authors aimed to provide a comprehensive understanding of the benefits, challenges, and future directions of these technologies in the field. It has been found that each XR technology has the potential to be used efficiently in the development of biomechatronic prosthetic devices. However, the assessment is also quite personal, as the experts had various opinions on each technology and its potential (most Likert scale questions in the surveys gathered answers between 2 and 5). According to the authors’ observations, the most efficient technology is VR used for training purposes, but due to its many technical issues, it requires long familiarization. On the other hand, AR and MR are quick to dive into and could be interesting in producer-customer relations, even more so than VR, but their performance and effectiveness may not be as high, especially in their current state of development. It is, therefore, important to select the technology for each given case and to fully consider the use scenarios before deciding on one of them. It is equally important that all of them are considered and thought of as a standard part of the product development process.

The presented studies might be some of the first steps in that direction, which will be further explored in future studies, also involving real patients. By disseminating this knowledge, the authors hope to stimulate further research and innovation, fostering advancements in the development of individualized prosthetic solutions and ultimately benefiting patients worldwide. Advances in artificial intelligence, augmented reality, and virtual reality create fresh AR/VR design opportunities for designers. Recognizing the innovations that are occurring in medicine and in industry will help designers work smarter and make more informed creative decisions.

In future studies, the authors will focus on a more detailed approach regarding particular XR technologies. In the case of VR technology, its training capabilities for the manufacturing and assembly of personalized prosthetics and orthotics will be compared with real-life training scenarios. In the case of AR technology, more advanced object recognition methods will be explored, as opposed to the simple marker approach presented in the basic studies. In the case of MR technology, video see-through will be compared against the optical see-through approach. For all technologies, it is also planned to explore the potential benefits of a collaborative approach. Long-term studies are planned that will hopefully continue the development of XR technologies in medical production. Integration with a fully immersive hapto-audio-visual framework may also be considered in the future [33,34].