Impact of Negation and AnA-Words on Overall Sentiment Value of the Text Written in the Bosnian Language

Abstract

1. Introduction

2. State of the Art

2.1. Sentiment Analysis in the Bosnian/Croatian/Serbian/Slovenian Language

2.2. Negation in Sentiment Analysis

2.3. AnA-words in Sentiment Analysis

2.4. Intensifiers and Negation in the Bosnian/Croatian/Serbian/Slovenian Language

- LBM0: tweets are classified using only the positive and negative words from the sentiment lexicon.

- LBM1: tweets are additionally classified by reversing the sentiment of the first word that follows a negation signal.

- LBM2: combines the previous methods with rules for detecting and processing negation.
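The difference between LBM0 and LBM1 can be illustrated with a minimal Python sketch. It assumes a lexicon that maps words to −1 or 1, lowercase whitespace-tokenized tweets, and a small hypothetical set of Bosnian negation signals (`NEGATION_SIGNALS`). The function name `lbm_classify` is illustrative and not part of the published method, and the additional negation rules of LBM2 are not modeled.

```python
from typing import Dict, List

# Hypothetical subset of Bosnian negation signals, for illustration only.
NEGATION_SIGNALS = {"ne", "nije", "nisam", "nema"}


def lbm_classify(tokens: List[str], lexicon: Dict[str, int], negate_next: bool = False) -> int:
    """Return -1, 0 or 1 for a tokenized tweet.

    negate_next=False behaves like LBM0 (plain lexicon sum);
    negate_next=True behaves like LBM1 (the sentiment of the first word
    after a negation signal is reversed).
    """
    total = 0
    pending_negation = False
    for token in tokens:
        if negate_next and token in NEGATION_SIGNALS:
            pending_negation = True
            continue
        value = lexicon.get(token, 0)
        if pending_negation:
            value = -value  # reverse only the first word after the signal
            pending_negation = False
        total += value
    # Sign of the total: positive -> 1, negative -> -1, zero -> 0.
    return (total > 0) - (total < 0)


lexicon = {"dobar": 1, "loš": -1}
tweet = "film nije dobar".split()
print(lbm_classify(tweet, lexicon))                    # LBM0: 1
print(lbm_classify(tweet, lexicon, negate_next=True))  # LBM1: -1
```

The toy tweet "film nije dobar" ("the film is not good") shows why negation handling matters: the plain lexicon sum sees only "dobar" and returns a positive label.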

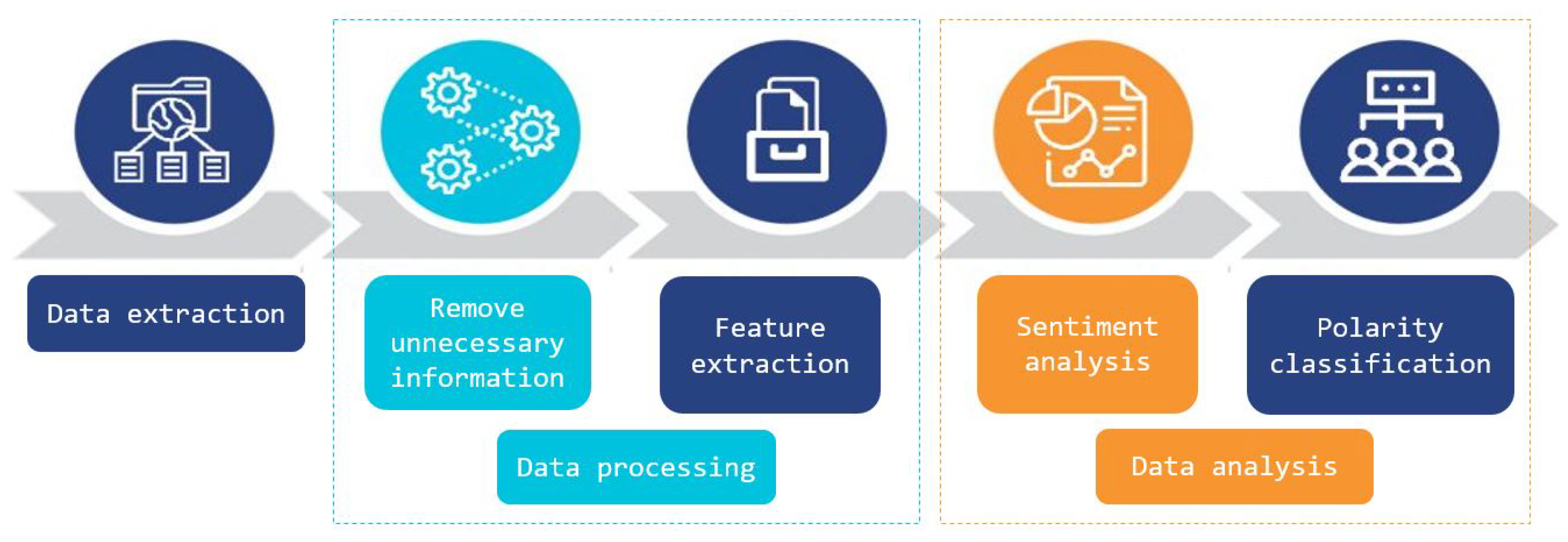

3. Methodology

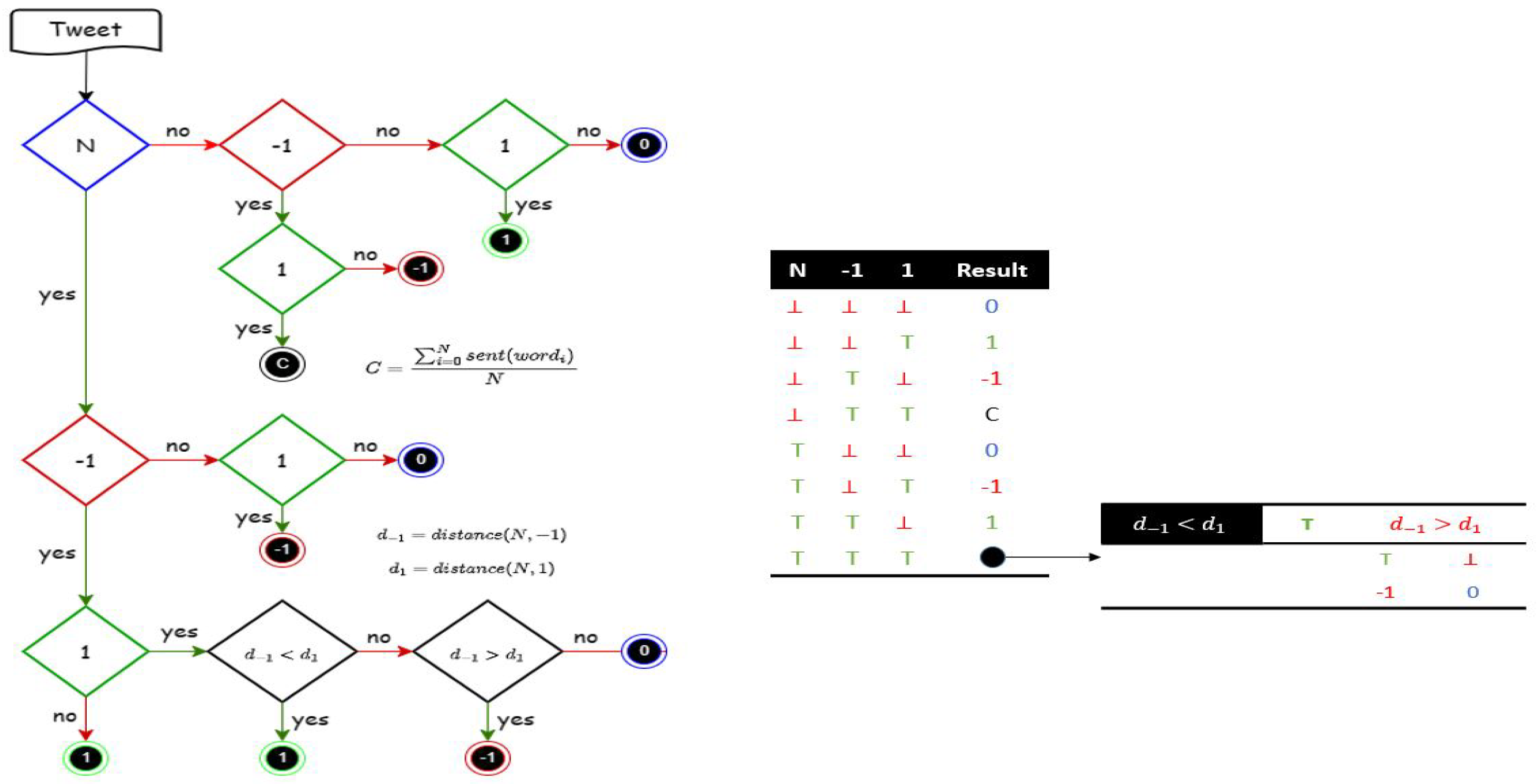

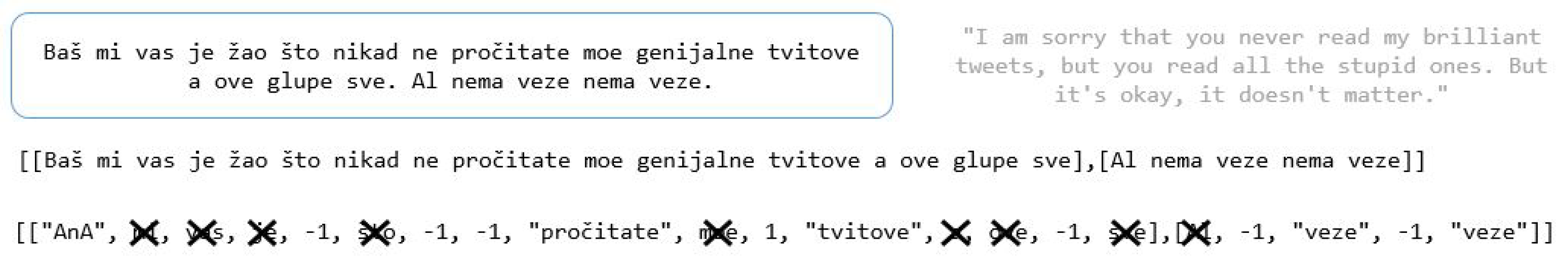

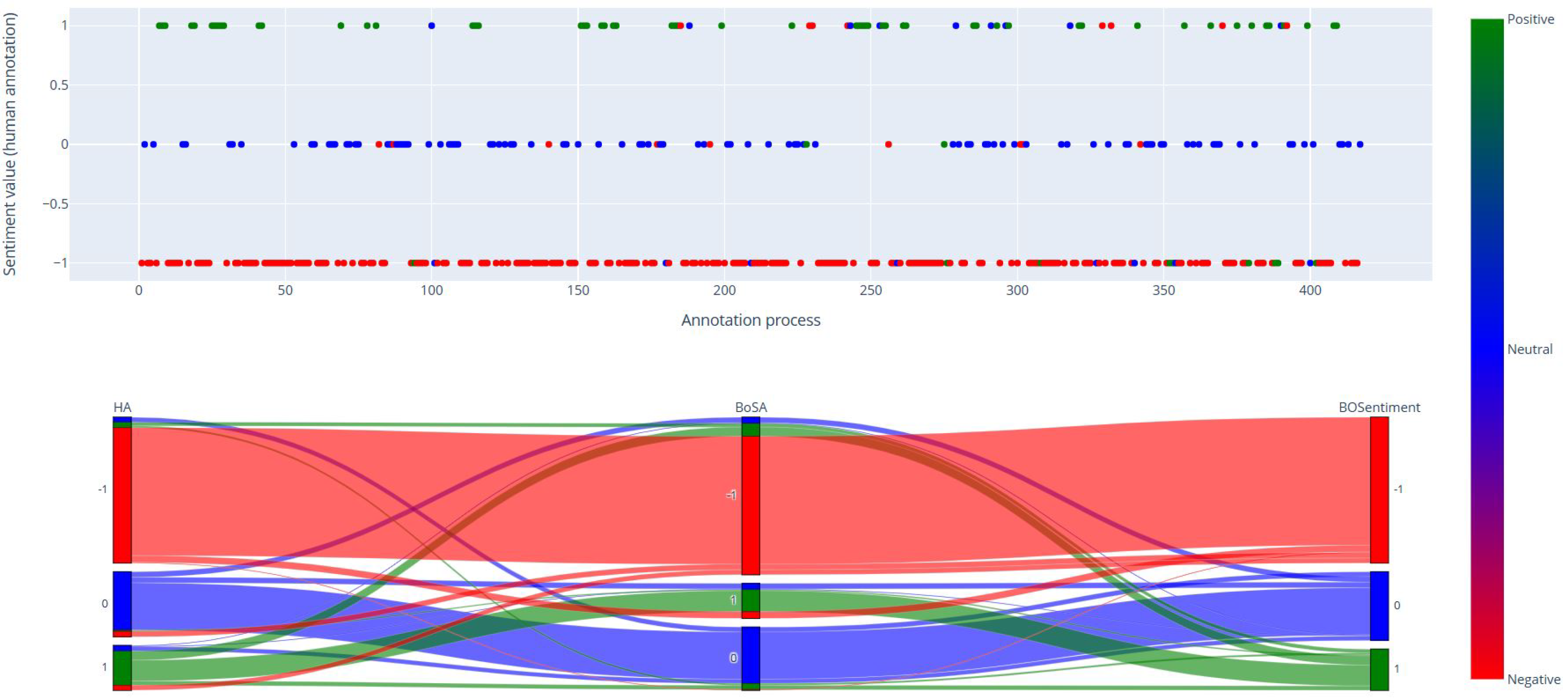

- The BoSA model, which considers the presence of negation and lexicon terms in the sentence (see Figure 1). If a tweet contains neither negation nor lexicon terms, the final sentiment value is 0 (neutral). If there is no negation and only a positive word from the lexicon is present, the final sentiment value is 1 (positive); conversely, if only a negative word from the lexicon is present, the sentiment value is −1 (negative). When both positive and negative lexicon words are present, the sentiment value is calculated using Equation (1). If a tweet contains negation but no words carrying sentiment, its final sentiment value is 0. If negation and a positive word are both present, the sentiment value is reversed, resulting in a value of −1; conversely, if a negative word is present alongside negation, the sentiment value is 1. Finally, if negation is present together with both positive and negative words, the sentiment value of the tweet is determined by which word is closer to the negation: if the positive word is closer, the sentiment value is −1; otherwise, it is 1.

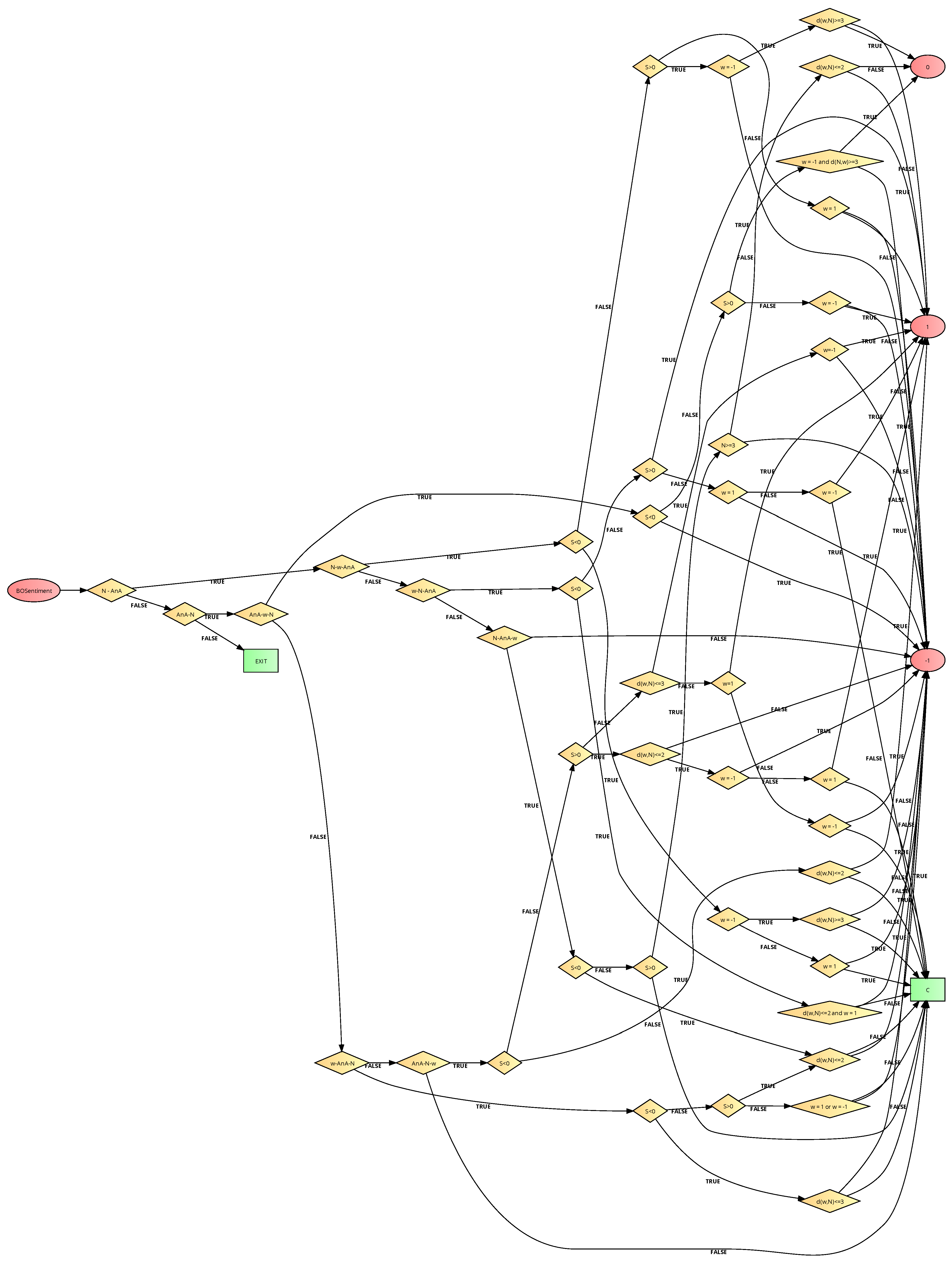
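The BoSA decision rules described above can be written as a small Python function. This is a sketch under stated assumptions: lexicon entries are −1 or 1, only the first negation in a tweet is used for the distance comparison, and Equation (1), which is not reproduced here, is assumed to be the sign of the sum of word labels (the same rule the text describes for the BOSentiment fallback). The names `bosa_classify` and `sign_of_sum` are hypothetical.

```python
from typing import Dict, List, Set


def sign_of_sum(tokens: List[str], lexicon: Dict[str, int]) -> int:
    """Assumed form of Equation (1): sum word labels (unknown words count as 0), take the sign."""
    total = sum(lexicon.get(t, 0) for t in tokens)
    return (total > 0) - (total < 0)


def bosa_classify(tokens: List[str], lexicon: Dict[str, int], negators: Set[str]) -> int:
    negations = [i for i, t in enumerate(tokens) if t in negators]
    positives = [i for i, t in enumerate(tokens) if lexicon.get(t, 0) > 0]
    negatives = [i for i, t in enumerate(tokens) if lexicon.get(t, 0) < 0]

    if not negations:
        if positives and negatives:
            return sign_of_sum(tokens, lexicon)
        if positives:
            return 1
        if negatives:
            return -1
        return 0  # no negation and no lexicon terms: neutral

    if positives and negatives:
        # The sentiment word closer to the (first) negation decides; ties go to 1.
        n = negations[0]
        d_pos = min(abs(i - n) for i in positives)
        d_neg = min(abs(i - n) for i in negatives)
        return -1 if d_pos < d_neg else 1
    if positives:
        return -1  # negated positive word
    if negatives:
        return 1   # negated negative word
    return 0       # negation without sentiment words
```

For example, `bosa_classify(["nije", "dobar"], {"dobar": 1}, {"nije"})` returns −1, because the only sentiment word is positive and negated.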

- The BOSentiment model, which examines the position of negation and AnA-word terms in relation to all other words in the text (see Figure 2). The BOSentiment classifier works by examining the order of Negation, AnA-words, and lexicon words, which gives the following possible positions:

  - Negation before AnA-words;
  - AnA-words before Negation.

The lexicon word can be found at the beginning, at the end, or in the middle between the AnA-word and the Negation. The BOSentiment classifier shown in Figure 2 checks for these positions and calculates the final sentiment score accordingly. The sentiment score can be −1, 0, or 1, where −1 represents negative, 0 neutral, and 1 positive sentiment.

The model first checks whether Negation comes before the AnA-word or vice versa. Depending on the order, it then checks the position of the lexicon word relative to Negation and the AnA-word. If all three elements (Negation, AnA-word, and lexicon word) are present in a tweet, the classifier applies a set of conditions on the positions of and relationships between these elements to determine the final sentiment value. For example, if Negation comes before the AnA-word, the lexicon word is found between Negation and the AnA-word, and the sentiment value is negative, the classifier assigns a sentiment score of −1. Similarly, if the AnA-word comes before Negation, the lexicon word is found at the beginning, and the sentiment value is positive, the classifier assigns a sentiment score of 1. If any of the three elements is missing from a tweet, the classifier returns an exit code.

When we were uncertain about the impact of negation and AnA-words on the sentiment of a tweet, we employed the simple method of Equation (1), in which all words not labeled −1 or 1 were labeled 0. If the resulting value was less than 0, the tweet was assigned a sentiment value of −1; if it was greater than 0, the tweet received a sentiment value of 1; and if it was exactly 0, the tweet was deemed neutral. This approach served as a fallback when the more complex analysis involving negation and AnA-words did not produce a clear sentiment value. While less nuanced than the full approach, it still provides a rough estimate of a tweet's sentiment.
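The order and position checks described above can be sketched in Python. The text gives only two of the scoring conditions, so this sketch stops at identifying the configuration (which element comes first and where the lexicon word sits) and leaves the mapping from configuration to score open. It assumes that the first occurrence of each element is used and represents the exit code as `None`. The function names and the word sets in the usage check (including `ipak` as an AnA-word) are hypothetical placeholders and are not taken from the published AnA-word list.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple

EXIT_CODE = None  # hypothetical stand-in for the exit code mentioned in the text


def first_index(tokens: List[str], predicate: Callable[[str], bool]) -> Optional[int]:
    """Index of the first token satisfying predicate, or None."""
    return next((i for i, t in enumerate(tokens) if predicate(t)), None)


def bosentiment_configuration(
    tokens: List[str],
    lexicon: Dict[str, int],
    negators: Set[str],
    ana_words: Set[str],
) -> Optional[Tuple[str, str]]:
    """Return (order, lexicon_position), or EXIT_CODE if an element is missing."""
    neg = first_index(tokens, lambda t: t in negators)
    ana = first_index(tokens, lambda t: t in ana_words)
    lex = first_index(tokens, lambda t: lexicon.get(t, 0) != 0)
    if neg is None or ana is None or lex is None:
        return EXIT_CODE
    order = "negation_before_ana" if neg < ana else "ana_before_negation"
    left, right = min(neg, ana), max(neg, ana)
    if lex < left:
        position = "beginning"
    elif lex > right:
        position = "end"
    else:
        position = "middle"
    return order, position


def fallback_score(tokens: List[str], lexicon: Dict[str, int]) -> int:
    """Equation (1) as described: unlabeled words count as 0; return the sign of the sum."""
    total = sum(lexicon.get(t, 0) for t in tokens)
    return (total > 0) - (total < 0)
```

A full classifier would dispatch on the returned pair; per the text, for instance, `("negation_before_ana", "middle")` with a negative sentiment value yields −1.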

- In the first case, the final sentiment value (computed without modifiers) is below 0, which means that this text is classified as negative.

- In the second case, the final sentiment value for the given text (computed with modifiers and with a shift applied in the case of negation) is also below 0, which means that this text is also classified as negative.
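The contrast between reversing a negated word's polarity and shifting it can be sketched in Python. This assumes a SO-CAL-style shift negation in the spirit of Taboada et al. (a negated value is moved a fixed amount toward the opposite polarity instead of having its sign flipped) and multiplicative intensifiers. The shift amount, the intensifier weight, the toy lexicon and the function name `modified_score` are hypothetical placeholders, not the values used in the two cases above.

```python
from typing import Dict, List, Optional, Set


def modified_score(
    tokens: List[str],
    lexicon: Dict[str, float],
    intensifiers: Dict[str, float],
    negators: Set[str],
    shift: Optional[float] = None,
) -> float:
    """Sum lexicon scores with intensifier and negation modifiers.

    An intensifier multiplies the score of the next lexicon word.
    A negator affects the next lexicon word: with shift=None its sign is flipped,
    otherwise it is moved `shift` units toward the opposite polarity.
    """
    total = 0.0
    multiplier = 1.0
    negated = False
    for token in tokens:
        if token in negators:
            negated = True
        elif token in intensifiers:
            multiplier *= intensifiers[token]
        elif token in lexicon:
            value = lexicon[token] * multiplier
            if negated:
                if shift is None:
                    value = -value
                elif value > 0:
                    value -= shift
                else:
                    value += shift
            total += value
            multiplier, negated = 1.0, False  # modifiers apply to one word only
    return total
```

With `lexicon={"dobar": 1.0}`, `intensifiers={"jako": 1.5}` and `negators={"nije"}`, the phrase "nije jako dobar" ("is not very good") scores −1.5 when polarity is flipped and −0.5 with `shift=2.0`: it stays negative, but weaker than the flipped reading.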

- The BoSA model, which considers the presence of negation and lexicon terms in the sentence;

- The BOSentiment model, which examines the position of negation and AnA-words terms in relation to all other words in the text.

- CNN (as a pure neural network model) and

- CNNBOSentiment model as a model that combines the BOSentiment model as a pre-model and CNN network as a main model to predict sentiment values.

- Embedding maps each word to a 32-dimensional vector. The number 5000 represents the total number of words in the vocabulary, and input_length represents the maximum length of input sequences that will be processed. In this case, the maximum sequence length is set to 32.

- Conv1D performs convolution on the input sequence using filters of size 5. The number 64 is the number of filters used in the layer, and 'relu' indicates that the Rectified Linear Unit (ReLU) activation function is applied after convolution.

- MaxPooling1D with pool_size=4 is applied after the Conv1D layer. It applies the max-pooling operation to each feature dimension of the 3D input tensor (batch_size, steps, features), where steps is the length of the sequence and features is the number of features at each step. A pool_size of 4 means that the operation pools four adjacent values at a time and returns their maximum. This reduces the sequence length by a factor of 4 while retaining the strongest activations. The output of MaxPooling1D is a 3D tensor with shape (batch_size, new_steps, features), where new_steps is the original number of steps divided by pool_size, rounded down.

- Flatten is applied after the MaxPooling1D layer. It takes the 3D tensor output by the previous layer and reshapes each sample into a 1D vector that can be used as input to a fully connected (Dense) layer. The batch dimension is preserved, so the output shape is (batch_size, new_steps × features).
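The shape bookkeeping described in these bullets can be checked with plain arithmetic. The sketch below assumes the Keras defaults for Conv1D and MaxPooling1D (stride 1 and stride equal to pool_size, respectively, both with `padding='valid'`) and uses the values given in the text: a vocabulary of 5000, an embedding size of 32, a sequence length of 32, 64 filters of size 5, and a pool_size of 4. The helper names are hypothetical.

```python
def conv1d_steps(steps: int, kernel_size: int, stride: int = 1) -> int:
    """Output length of a 'valid' 1D convolution."""
    return (steps - kernel_size) // stride + 1


def maxpool1d_steps(steps: int, pool_size: int) -> int:
    """Output length of 'valid' max pooling with stride equal to pool_size."""
    return (steps - pool_size) // pool_size + 1


vocab, emb_dim, maxlen = 5000, 32, 32
filters, kernel, pool = 64, 5, 4

conv_steps = conv1d_steps(maxlen, kernel)          # Conv1D output: (batch, 28, 64)
pooled_steps = maxpool1d_steps(conv_steps, pool)   # MaxPooling1D output: (batch, 7, 64)
flat_len = pooled_steps * filters                  # Flatten output: (batch, 448)

embedding_params = vocab * emb_dim                 # one 32-d vector per word index
conv_params = (kernel * emb_dim + 1) * filters     # kernel weights plus one bias per filter

print(conv_steps, pooled_steps, flat_len)          # 28 7 448
print(embedding_params, conv_params)               # 160000 10304
```

Under these assumptions, each tweet reaches the first Dense layer as a 448-dimensional vector.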

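Listing 1. CNN model with 7 layers.

```python
from tensorflow.keras.layers import Conv1D, Dense, Dropout, Embedding, Flatten, MaxPooling1D
from tensorflow.keras.models import Sequential

maxlen = 32  # maximum input sequence length, as stated for the Embedding layer

model = Sequential()
model.add(Embedding(5000, 32, input_length=maxlen))  # 5000-word vocabulary, 32-d vectors
model.add(Conv1D(64, 5, activation='relu'))          # 64 filters of size 5
model.add(MaxPooling1D(pool_size=4))
model.add(Flatten())
model.add(Dense(64, activation='relu'))
model.add(Dropout(0.4))
model.add(Dense(3, activation='softmax'))            # negative / neutral / positive
```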

- 1st Dense has 64 neurons and uses the ReLU activation function (Rectified Linear Unit, a simple and computationally efficient function that returns its input if it is positive and 0 otherwise). It transforms the flattened feature vector into a new vector of length 64, which allows the model to learn more complex combinations of the convolutional features.

- Dropout is a regularization technique used in neural networks to prevent overfitting. During training, the Dropout layer randomly selects some of the neurons and "drops" them, meaning they are ignored during that particular forward and backward pass. This forces the remaining neurons to learn more robust features that are relevant to the classification task. The probability of dropping a neuron is a hyperparameter; Listing 1 uses a rate of 0.4.

- 2nd Dense has 3 neurons and uses the softmax activation function, whose output can be interpreted as the predicted probability of each class; the predicted class is the one with the highest probability. This layer maps the output of the previous layer to a probability distribution over the three classes (positive, negative, and neutral), allowing the model to predict which class the input sequence belongs to.
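The dropout and softmax operations described above can be sketched with NumPy. The dropout function follows the "inverted dropout" convention that Keras uses: kept activations are scaled by 1/(1 − rate) during training, and the layer is the identity at inference time. The class order in `CLASSES` and the example logits are hypothetical.

```python
import numpy as np

CLASSES = ["negative", "neutral", "positive"]  # hypothetical label order


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the max keeps exp() numerically stable."""
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)


def dropout(x: np.ndarray, rate: float, rng: np.random.Generator, training: bool = True) -> np.ndarray:
    """Zero each unit with probability `rate`; rescale survivors so the expected value is unchanged."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return np.where(keep, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(0)
hidden = np.ones(8)                      # stand-in for the 64-unit Dense output
print(dropout(hidden, rate=0.4, rng=rng))

probs = softmax(np.array([2.0, 0.5, -1.0]))
print(probs.round(3), CLASSES[int(np.argmax(probs))])
```

Each surviving unit becomes 1/0.6 ≈ 1.667 and each dropped unit becomes 0. The softmax output sums to 1, and its largest entry selects the predicted class.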

- Conv2D adds a 2D convolutional layer with 32 filters of size 3 × 3. The activation function used is ReLU;

- MaxPooling2D((2,2)) adds a max pooling layer that reduces the spatial dimensions of the output of the previous layer. The parameter (2,2) specifies the size of the pooling window, so the layer keeps the maximum value of each 2 × 2 window of its input.

- Flatten() adds a Flatten layer that converts the output of the previous layer to a 1D array per sample. This is necessary because the next layer in the model is a Dense layer, which requires a 1D input.

- 1st Dense (fully connected) layer,

- Dropout (Dropout(0.4) in the CNNBOSentiment configurations listed in the hyperparameter table),

- 2nd Dense (Dense(3, activation='softmax')).
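The 2 × 2 max pooling step can be illustrated on a single-channel feature map with NumPy. The sketch mirrors MaxPooling2D((2,2)) with its default stride (equal to the pool size) and 'valid' padding, where a trailing odd row or column is dropped. The function name is hypothetical, and the input is a toy array rather than an actual CNNBOSentiment activation.

```python
import numpy as np


def max_pool_2x2(feature_map: np.ndarray) -> np.ndarray:
    """Non-overlapping 2x2 max pooling of a 2D array ('valid' padding)."""
    h, w = feature_map.shape
    h2, w2 = h // 2, w // 2
    trimmed = feature_map[: h2 * 2, : w2 * 2]  # drop an odd trailing row/column
    # Split each axis into (blocks, 2) and take the max inside every 2x2 block.
    return trimmed.reshape(h2, 2, w2, 2).max(axis=(1, 3))


fm = np.arange(16).reshape(4, 4)
print(max_pool_2x2(fm))
```

On the 4 × 4 array `0..15`, the result is `[[5, 7], [13, 15]]`, so each spatial dimension is halved.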

4. Results

5. Discussion and Further Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AnA | A word from the AnA-words list |
| AnA-words | Affirmative and non-affirmative words |
| BCMS | Bosnian-Croatian-Montenegrin-Serbian language |
| BERT | Bidirectional Encoder Representations from Transformers |
| CNN | Convolutional Neural Network |
| CRF | Conditional Random Fields |
| LBM | Lexicon-based method |
| NLTK | Natural Language Toolkit |
| RF | Random Forest |
| SVM | Support Vector Machine |

References

- The editors of Encyclopaedia Britannica. Bosnian-Croatian-Montenegrin-Serbian Language Summary. 2021. Available online: https://www.britannica.com/summary/Bosnian-Croatian-Montenegrin-Serbian-language (accessed on 1 June 2023).

- Čušić, T. D1.36: Report on the Bosnian Language. 2023. Available online: https://european-language-equality.eu/wp-content/uploads/2022/03/ELE___Deliverable_D1_36__Language_Report_Bosnian_.pdf (accessed on 1 June 2023).

- Agency for Statistics of Bosnia and Herzegovina. Census of Population, Households and Dwellings in Bosnia and Herzegovina, 2013: Final Results. 2013. Available online: https://dataspace.princeton.edu/handle/88435/dsp0176537424z (accessed on 1 June 2023).

- Pang, B.; Lee, L. Opinion mining and sentiment analysis. Found. Trends Inf. Retr. 2008, 2, 1–135.

- Liu, B. Sentiment Analysis and Opinion Mining; Morgan & Claypool Publishers: San Rafael, CA, USA, 2012.

- Gunasekaran, K.P. Exploring Sentiment Analysis Techniques in Natural Language Processing: A Comprehensive Review. arXiv 2023, arXiv:2305.14842.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All You Need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 6000–6010.

- Catelli, R.; Pelosi, S.; Esposito, M. Lexicon-based vs. Bert-based sentiment analysis: A comparative study in Italian. Electronics 2022, 11, 374.

- Cambria, E.; Hussain, A.; Havasi, C.; Eckl, C. A new approach to sentic computing: Ontology-based representation of natural language semantics. In Proceedings of the IEEE International Conference on Granular Computing, Beijing, China, 13 December 2013; pp. 397–402.

- Storey, V.C.; O’Leary, D.E. Text analysis of evolving emotions and sentiments in COVID-19 Twitter communication. Cogn. Comput. 2022, 1–24.

- Pak, A.; Paroubek, P. Twitter as a corpus for sentiment analysis and opinion mining. In Proceedings of the LREC, Valletta, Malta, 19 May 2010; Volume 10, pp. 1320–1326.

- Agarwal, A.; Xie, B.; Vovsha, I.; Rambow, O.; Passonneau, R. Sentiment analysis of Twitter data. In Proceedings of the Workshop on Languages in Social Media, Portland, OR, USA, 23 June 2011; Association for Computational Linguistics: Baltimore, MD, USA, 2011; pp. 30–38.

- Go, A.; Bhayani, R.; Huang, L. Twitter sentiment classification using distant supervision. In Proceedings of the 22nd International Conference on Computational Linguistics-Volume 2; Association for Computational Linguistics: Baltimore, MD, USA, 2009; pp. 1–4.

- Joachims, T. Text categorization with support vector machines: Learning with many relevant features. In Proceedings of the Machine learning: ECML-98, Chemnitz, Germany, 21 April 1998; Volume 1398, pp. 137–142.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Kurani, A.; Doshi, P.; Vakharia, A.; Shah, M. A comprehensive comparative study of artificial neural network (ANN) and support vector machines (SVM) on stock forecasting. Ann. Data Sci. 2023, 10, 183–208.

- McCallum, A.; Nigam, K. A comparison of event models for naive Bayes text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, AAAI-98 Workshop on Learning Text Categorization, Madison, WI, USA, 26 July 1998; Volume 752, pp. 41–48.

- Rish, I. An empirical study of the naive Bayes classifier. In Proceedings of the IJCAI 2001 Workshop on Empirical Methods in Artificial Intelligence, Seattle, WA, USA, 4 August 2001; Volume 3, pp. 41–46.

- Reddy, E.M.K.; Gurrala, A.; Hasitha, V.B.; Kumar, K.V.R. Introduction to Naive Bayes and a Review on Its Subtypes with Applications. In Bayesian Reasoning and Gaussian Processes for Machine Learning Applications; CRC: Boca Raton, FL, USA, 2022; pp. 1–14.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Wang, F.; Zhang, C.; Liu, X.; Zhang, Y. Sentiment classification based on random forests. Expert Syst. Appl. 2011, 38, 7677–7683.

- Mardjo, A.; Choksuchat, C. HyVADRF: Hybrid VADER–Random Forest and GWO for Bitcoin Tweet Sentiment Analysis. IEEE Access 2022, 10, 101889–101897.

- Kim, Y. Convolutional neural networks for sentence classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25 October 2014; pp. 1746–1751.

- Severyn, A.; Moschitti, A. Twitter sentiment analysis with deep convolutional neural networks. In Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval, Santiago, Chile, 9–13 August 2015; ACM: Hong Kong, China, 2015; pp. 959–962.

- Wang, L.; Tang, R.; Zhao, S.; Zhang, Y.; Zhang, Y. Sentiment Analysis of Twitter Data: A Comprehensive Study. In Proceedings of the 2020 International Conference on Data Science and Information Technology (DSIT), Xiamen, China, 24 July 2020; pp. 243–248.

- Pang, B.; Lee, L.; Vaithyanathan, S. Thumbs up? Sentiment Classification using Machine Learning Techniques. In Proceedings of the 2002 Conference on Empirical Methods in Natural Language Processing (EMNLP 2002), Philadelphia, PA, USA, 6 July 2002; Association for Computational Linguistics: Stroudsburg, PA, USA; pp. 79–86.

- Nigam, N.; Yadav, D. Lexicon-Based Approach to Sentiment Analysis of Tweets Using R Language. In Proceedings of the ICACDS 2018: Advances in Computing and Data Sciences; Springer: Singapore, 2018; pp. 154–164.

- Taboada, M.; Brooke, J.; Tofiloski, M.; Voll, K.; Stede, M. Lexicon-Based Methods for Sentiment Analysis. Comput. Linguist. 2011, 37, 267–307.

- Osgood, C.E.; Suci, G.J.; Tannenbaum, P.H. The Measurement of Meaning; University of Illinois Press: Urbana, IL, USA, 1957.

- Bruce, R.; Wiebe, J. Recognizing Subjectivity: A Case Study of Manual Tagging. Nat. Lang. Eng. 2000, 5, 187–205.

- Hu, M.; Liu, B. Mining and Summarizing Customer Reviews. In Proceedings of the Tenth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Seattle, WA, USA, 22 August 2004; pp. 168–177.

- Kim, S.M.; Hovy, E. Determining the Sentiment of Opinions. In Proceedings of the 20th International Conference on Computational Linguistics, Geneva, Switzerland, 23 August 2004; p. 1367-es.

- Stone, P.; Dunphy, D.; Smith, M.; Ogilvie, D. The General Inquirer: A Computer Approach to Content Analysis; The MIT Press: Cambridge, MA, USA, 1966; Volume 4.

- Jahić, S.; Vičič, J. Sentiment Polarity Lexicon of Bosnian Language. 2023. Available online: https://zenodo.org/record/7520809 (accessed on 1 June 2023).

- Kapukaranov, B.; Nakov, P. Fine-Grained Sentiment Analysis for Movie Reviews in Bulgarian. In Proceedings of the International Conference Recent Advances in Natural Language Processing, Hissar, Bulgaria, 7 September 2015; pp. 266–274.

- Glavaš, G.; Šnajder, J.; Dalbelo Bašić, B. Semi-supervised Acquisition of Croatian Sentiment Lexicon. In Proceedings of the International Conference on Text, Speech and Dialogue, Brno, Czech Republic, 3–7 September 2012; Volume 7499, pp. 166–173.

- Veselovská, K. Czech Subjectivity Lexicon: A Lexical Resource for Czech Polarity Classification. In Proceedings of the 7th International Conference, Slovko, Bratislava, 4 July 2013; pp. 279–284.

- Jovanoski, D.; Pachovski, V.; Nakov, P. Sentiment Analysis in Twitter for Macedonian. In Proceedings of the International Conference Recent Advances in Natural Language Processing, Hissar, Bulgaria, 7 September 2015; pp. 249–257.

- Wawer, A. Extracting emotive patterns for languages with rich morphology. Int. J. Comput. Linguist. Appl. 2012, 3, 11–24.

- Okruhlica, A. Slovak Sentiment Lexicon Induction in Absence of Labeled Data. Master’s Thesis, Comenius University Bratislava, Bratislava, Slovakia, 2013.

- Kadunc, K. Določanje Sentimenta Slovenskim Spletnim Komentarjem s Pomočjo Strojnega Učenja; K. Kadunc: Washington, DC, USA, 2016.

- Jahić, S.; Vičič, J. Determining Sentiment of Tweets Using First Bosnian Lexicon and (AnA)-Affirmative and Non-affirmative Words. In Advanced Technologies, Systems, and Applications V: Papers Selected by the Technical Sciences Division of the Bosnian-Herzegovinian American Academy of Arts and Sciences 2020; Springer International Publishing: Cham, Switzerland, 2021; pp. 361–373.

- Jahić, S.; Vičič, J. Annotated lexicon for sentiment analysis in the Bosnian language. In Proceedings of the ALTNLP The International Conference and workshop on Agglutinative Language Technologies as a Challenge of Natural Language Processing, Koper, Slovenia, 7–8 June 2022; Volume 3315, pp. 9–19.

- Tadić, M.; Brozović-Rončević, D.; Kapetanović, A. The Croatian Language in the Digital Age; Springer: Berlin/Heidelberg, Germany, 2012; p. 93.

- Pelicon, A.; Pranjić, M.; Miljković, D.; Škrlj, B.; Pollak, S. Sentiment Annotated Dataset of Croatian News. Slovenian Language Resource Repository CLARIN.SI. 2020. Available online: https://www.clarin.si/repository/xmlui/handle/11356/1342 (accessed on 1 June 2023).

- Mozetič, I.; Grčar, M.; Smailović, J. Twitter sentiment for 15 European languages, 2016. Slovenian language resource repository CLARIN.SI.

- Pavić Pintarić, A.; Frleta, Z. Upwards Intensifiers in the English, German and Croatian Language. J. Foreign Lang. 2014, 6, 31–48.

- Krstev, C.; Pavlovic-Lazetic, G.; Vitas, D.; Obradović, I. Using Textual and Lexical Resources in Developing Serbian Wordnet. Rom. J. Inf. Sci. Technol. 2004, 7, 147–161.

- Mladenovic, M.; Mitrović, J.; Krstev, C.; Vitas, D. Hybrid Sentiment Analysis Framework for a Morphologically Rich Language. J. Intell. Inf. Syst. JIIS 2015, 46, 599–620.

- Batanović, V.; Nikolić, B.; Milosavljević, M. Reliable Baselines for Sentiment Analysis in Resource-Limited Languages: The Serbian Movie Review Dataset. In Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16), Portorož, Slovenia, 5 May 2016; pp. 2688–2696.

- Kovacevic, J.; Graovac, J. Application of a Structural Support Vector Machine method to N-gram based text classification in Serbian. Infotheca J. Digit. Humanit. 2015, 16, 1–2.

- Drašković, D.; Zečević, D.; Nikolić, B. Development of a Multilingual Model for Machine Sentiment Analysis in the Serbian Language. Mathematics 2022, 10, 3236.

- Ljajić, A.; Stanković, M.; Marovac, U. Detection of Negation in the Serbian Language. In Proceedings of the 8th International Conference on Web Intelligence, Mining and Semantics, New York, NY, USA, 5 July 2018.

- Ljajić, A.B. Obrada Negacije u Kratkim Neformalnim Tekstovima u Cilju Poboljšanja Klasifikacije Sentimenta/Processing Negation in Short Informal Text for Improving the Sentiment Classification. Ph.D. Thesis, University of Niš, Niš, Serbia, 2019.

- Bučar, J.; Žnidaršič, M.; Povh, J. Annotated news corpora and a lexicon for sentiment analysis in Slovene. Lang. Resour. Eval. 2018, 52, 895–919.

- Mozetič, I.; Grčar, M.; Smailović, J. Multilingual Twitter Sentiment Classification: The Role of Human Annotators. PLoS ONE 2016, 11, 1–26.

- Fišer, D.; Smailović, J.; Erjavec, T.; Mozetič, I.; Grčar, M. Sentiment annotation of Slovene user-generated content. In Proceedings of the Zbornik Konference Jezikovne Tehnologije in Digitalna Humanistika, Ljubljana, Slovenija, 29 September–1 October 2016; Erjavec, T., Fišer, D., Eds.; Znanstvena Založba Filozofske Fakultete = Ljubljana University Press: Ljubljana, Slovenija, 2016.

- Moilanen, K.; Pulman, S. Sentiment Composition. In Proceedings of the Recent Advances in Natural Language Processing International Conference (RANLP-2007), Borovets, Bulgaria, 27 September 2007; pp. 378–382.

- Singh, P.K.; Paul, S. Deep learning approach for negation handling in sentiment analysis. IEEE Access 2021, 9, 102579–102592.

- Councill, I.; McDonald, R.; Velikovich, L. What’s great and what’s not: Learning to classify the scope of negation for improved sentiment analysis. In Proceedings of the Workshop on Negation and Speculation in Natural Language Processing, Uppsala, Sweden, 5 September 2010; pp. 51–59.

- Morante, R.; Daelemans, W. A Metalearning Approach to Processing the Scope of Negation. In Proceedings of the Thirteenth Conference on Computational Natural Language Learning (CoNLL-2009), Boulder, CO, USA, 9 June 2009; pp. 21–29.

- Reitan, J.; Faret, J.; Gambäck, B.; Bungum, L. Negation Scope Detection for Twitter Sentiment Analysis. In Proceedings of the 6th Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis, Lisboa, Portugal, 7 September 2015; pp. 99–108.

- Jia, L.; Yu, C.; Meng, W. The Effect of Negation on Sentiment Analysis and Retrieval Effectiveness. In Proceedings of the 18th ACM Conference on Information and Knowledge Management, CIKM ’09, Hong Kong, China, 2 November 2009; pp. 1827–1830.

- Wiegand, M.; Balahur, A.; Roth, B.; Klakow, D.; Montoyo, A. A survey on the role of negation in sentiment analysis. In Proceedings of the NeSp-NLP@ACL, Uppsala, Sweden, 10 July 2010.

- Polanyi, L.; Zaenen, A. Contextual Valence Shifters. In Computing Attitude and Affect in Text: Theory and Applications; Springer: Dordrecht, The Netherlands, 2006; pp. 1–10.

- Kennedy, A.; Inkpen, D. Sentiment Classification of Movie Reviews Using Contextual Valence Shifters. Comput. Intell. 2006, 22, 110–125.

- Zhu, X.; Guo, H.; Mohammad, S.; Kiritchenko, S. An Empirical Study on the Effect of Negation Words on Sentiment. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Baltimore, MD, USA, 6 June 2014; pp. 304–313.

- Horn, L.R. A Natural History of Negation; University of Chicago Press: Chicago, IL, USA, 1989.

- Wilson, T.; Wiebe, J.; Hoffmann, P. Recognizing Contextual Polarity in Phrase-Level Sentiment Analysis. In Proceedings of the Human Language Technology Conference and Conference on Empirical Methods in Natural Language Processing, Vancouver, BC, Canada, 7 October 2005; pp. 347–354.

- Merima, O. A Contribution to the Classification of Intensifiers in English and Bosnian. In Književni Jezik 21/2; Institut za Jezik: Sarajevo, Bosnia and Herzegovina, 2003; pp. 50–62.

- Patra, B.; Mazumdar, S.; Das, D.; Rosso, P.; Bandyopadhyay, S. A Multilevel Approach to Sentiment Analysis of Figurative Language in Twitter. In Proceedings of the Computational Linguistics and Intelligent Text Processing, Konya, Turkey, 3 April 2018; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 281–291.

- Kennedy, G. Amplifier Collocations in the British National Corpus: Implications for English Language Teaching. TESOL Q. 2003, 37, 467–487.

- Recski, L. “… It’s Really Ultimately Very Cruel …”: Contrasting English intensifier collocations across EFL writing and academic spoken discourse. DELTA Doc. Estud. Lingüística Teórica Apl. 2004, 20, 211–234.

- Quirk, R.; Greenbaum, S.; Leech, G.; Svartvik, J. A Comprehensive Grammar of the English Language; Longman: London, UK, 1985.

- Jahić, S.; Vičič, J. The Lists of AnAwords and Stopwords are Publicly Available on the Zenodo Repository. 2023. Available online: https://zenodo.org/record/8021150 (accessed on 1 June 2023).

- Ljajić, A.; Marovac, U. Improving sentiment analysis for twitter data by handling negation rules in the Serbian language. Comput. Sci. Inf. Syst. 2019, 16, 289–311.

- Bird, S.; Klein, E.; Loper, E. Natural Language Processing with Python: Analyzing Text with the Natural Language Toolkit; O’Reilly Media, Inc.: Newton, MA, USA, 2009.

- Sauri, R. A Factuality Profiler for Eventualities in Text. Ph.D. Thesis, Brandeis University, Waltham, MA, USA, 2008.

- Derbyshire, J. (Ed.) Prime Obsession: Bernhard Riemann and the Greatest Unsolved Problem in Mathematics; The National Academies Press: Washington, DC, USA, 2003.

- Hossin, M.; Sulaiman, M.N. A Review on Evaluation Metrics for Data Classification Evaluations. Int. J. Data Min. Knowl. Manag. Process. 2015, 5, 1–11.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Madjarov, G.; Kocev, D.; Gjorgjevikj, D.; Džeroski, S. An extensive experimental comparison of methods for multi-label learning. Pattern Recognit. 2012, 45, 3084–3104.

- Ting, K.M. Confusion Matrix. In Encyclopedia of Machine Learning; Springer: Boston, MA, USA, 2010; p. 209.

| ID_Model | Short ID | Conv. layers (cl) | Filters (f) | Filter size (fs) | Dropout (d) | Epochs (e) | Batch size (b) |
|---|---|---|---|---|---|---|---|
| CNN | | | | | | | |
| cnn_cl1_f64-fs5_d5_e10_b16 | CNN1 | 1 | 64 | 5 | 0.5 | 10 | 16 |
| cnn_cl1_f64-fs5_d5_e10_b32 | CNN1b32 | 1 | 64 | 5 | 0.5 | 10 | 32 |
| cnn_cl1_f64-fs5_d5_e10_b64 | CNN1b64 | 1 | 64 | 5 | 0.5 | 10 | 64 |
| cnn_cl1_f64-fs5_d5_e7_b16 | CNN1e7 | 1 | 64 | 5 | 0.5 | 7 | 16 |
| cnn_cl1_f64-fs5_d5_e12_b16 | CNN1e12 | 1 | 64 | 5 | 0.5 | 12 | 16 |
| cnn_cl1_f64-fs5_d3_e7_b16 | CNN1d3e7 | 1 | 64 | 5 | 0.3 | 7 | 16 |
| cnn_cl1_f64-fs5_d4_e10_b16 | CNN1d4 | 1 | 64 | 5 | 0.4 | 10 | 16 |
| cnn_cl1_f64-fs3_d4_e10_b16 | CNN1e3 | 1 | 64 | 3 | 0.4 | 10 | 16 |
| cnn_cl1_f128-fs3_d4_e10_b16 | CNN1f128 | 1 | 128 | 3 | 0.4 | 10 | 16 |
| cnn_cl1_f256-fs3_d4_e10_b16 | CNN1f256 | 1 | 256 | 3 | 0.4 | 10 | 16 |
| cnn_cl2_f64-fs34_d4_e10_b16 | CNN2f34 | 2 | 64 | 3, 4 | 0.4 | 10 | 16 |
| cnn_cl2_f64-fs45_d4_e10_b16 | CNN2f45 | 2 | 64 | 4, 5 | 0.4 | 10 | 16 |
| cnn_cl3_f64-fs345_d4_e10_b16 | CNN3f345 | 3 | 64 | 3, 4, 5 | 0.4 | 10 | 16 |
| CNNBOSentiment | | | | | | | |
| cnnBOS_cl1_f32_fs33_d4_e10_b16 | CNNBOS1 | 1 | 32 | 3 × 3 | 0.4 | 10 | 16 |
| cnnBOS_cl1_f32_fs33_d4_e10_b32 | CNNBOSb32 | 1 | 32 | 3 × 3 | 0.4 | 10 | 32 |
| cnnBOS_cl1_f32_fs33_d4_e10_b64 | CNNBOSb64 | 1 | 32 | 3 × 3 | 0.4 | 10 | 64 |
| cnnBOS_cl1_f32_fs44_d4_e10_b16 | CNNBOSfs44 | 1 | 32 | 4 × 4 | 0.4 | 10 | 16 |
| cnnBOS_cl1_f64_fs44_d4_e10_b16 | CNNBOSf64 | 1 | 64 | 4 × 4 | 0.4 | 10 | 16 |
| cnnBOS_cl1_f128_fs44_d4_e10_b16 | CNNBOSf128 | 1 | 128 | 4 × 4 | 0.4 | 10 | 16 |
| cnnBOS_cl1_f256_fs44_d4_e10_b16 | CNNBOSf256 | 1 | 256 | 4 × 4 | 0.4 | 10 | 16 |

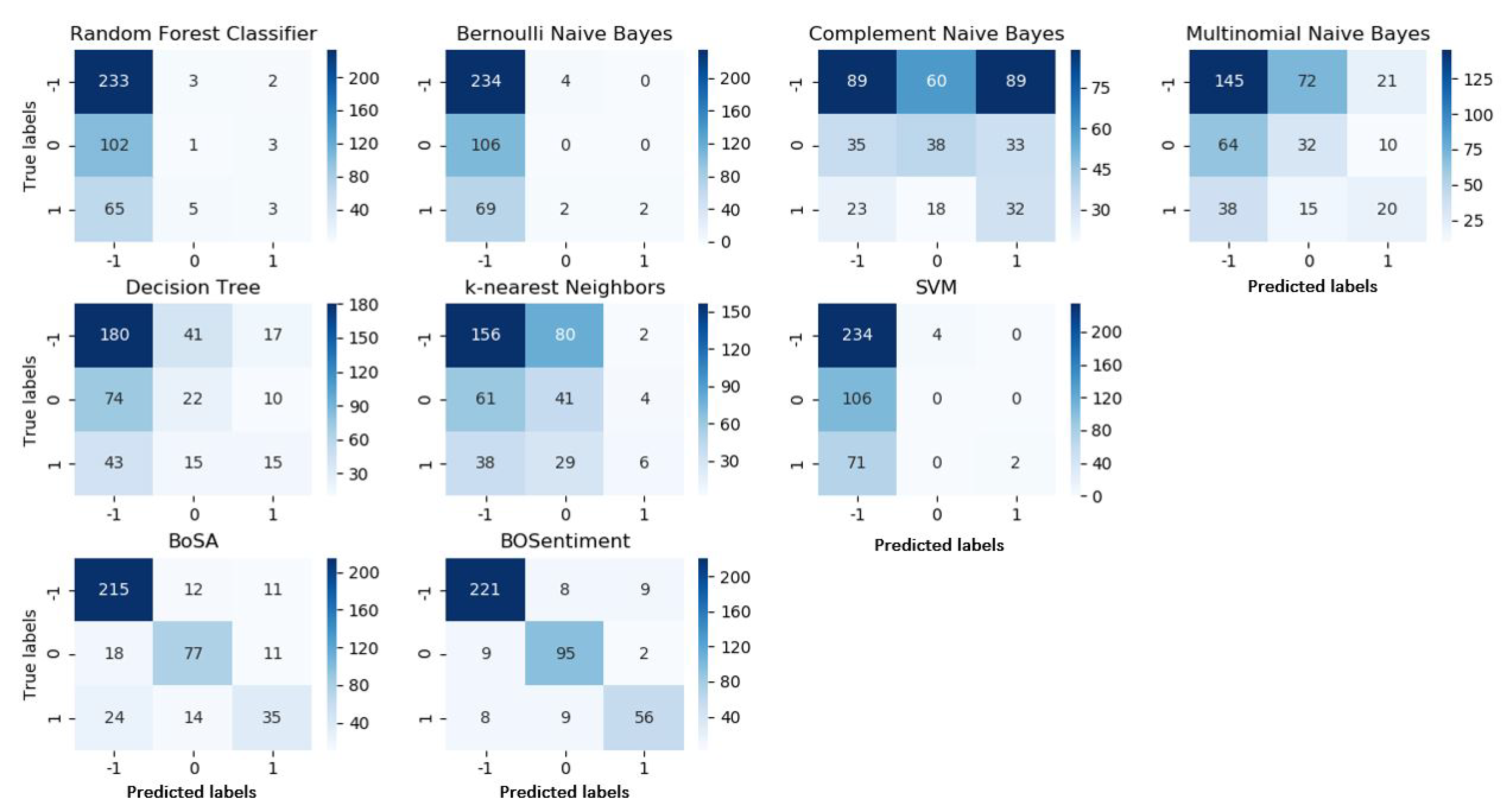

| Classifier | Class | Precision | Recall | F1 Score (Class) | Avg. Score (Model) | Accuracy Score | | | Hamming Loss Score |
|---|---|---|---|---|---|---|---|---|---|
| Random Forest | −1 | 0.58 | 0.98 | 0.73 | 0.44 | 0.58 | 0.25 | 0.32 | 0.32 |
| | 0 | 0.25 | 0.01 | 0.02 | | | | | |
| | 1 | 0.50 | 0.07 | 0.12 | | | | | |
| Bernoulli Naive Bayes | −1 | 0.57 | 0.98 | 0.72 | 0.42 | 0.57 | 0.88 | 0.18 | 0.43 |
| | 0 | 0.00 | 0.00 | 0.00 | | | | | |
| | 1 | 1.00 | 0.03 | 0.05 | | | | | |
| Complement NB | −1 | 0.61 | 0.37 | 0.46 | 0.40 | 0.38 | 0.37 | 0.85 | 0.43 |
| | 0 | 0.33 | 0.36 | 0.34 | | | | | |
| | 1 | 0.21 | 0.44 | 0.28 | | | | | |
| Multinomial NB | −1 | 0.59 | 0.61 | 0.60 | 0.47 | 0.47 | 0.33 | 0.70 | 0.43 |
| | 0 | 0.27 | 0.30 | 0.28 | | | | | |
| | 1 | 0.39 | 0.27 | 0.32 | | | | | |
| Decision Tree | −1 | 0.61 | 0.71 | 0.66 | 0.49 | 0.52 | 0.37 | 0.71 | 0.32 |
| | 0 | 0.34 | 0.28 | 0.31 | | | | | |
| | 1 | 0.30 | 0.22 | 0.25 | | | | | |
| k-Nearest Neighbors | −1 | 0.61 | 0.66 | 0.63 | 0.47 | 0.49 | 0.78 | 0.55 | 0.32 |
| | 0 | 0.27 | 0.39 | 0.32 | | | | | |
| | 1 | 0.50 | 0.08 | 0.14 | | | | | |
| SVM | −1 | 0.57 | 0.98 | 0.72 | 0.42 | 0.57 | 0.35 | 0.17 | 0.35 |
| | 0 | 0.00 | 0.00 | 0.00 | | | | | |
| | 1 | 1.00 | 0.03 | 0.05 | | | | | |
| BoSA | −1 | 0.84 | 0.90 | 0.87 | 0.78 | 0.78 | 0.47 | 0.72 | 0.22 |
| | 0 | 0.75 | 0.73 | 0.74 | | | | | |
| | 1 | 0.61 | 0.48 | 0.54 | | | | | |
| BOSentiment | −1 | 0.93 | 0.93 | 0.93 | 0.89 | 0.89 | 0.23 | 0.75 | 0.11 |
| | 0 | 0.85 | 0.90 | 0.87 | | | | | |
| | 1 | 0.84 | 0.77 | 0.80 | | | | | |

| Human annotation (rows) vs. BOSentiment (columns) | Negative | Neutral | Positive | Total |
|---|---|---|---|---|
| Negative | 221 | 8 | 9 | 238 |
| Neutral | 9 | 95 | 2 | 106 |
| Positive | 8 | 9 | 56 | 73 |
| Total | 238 | 112 | 67 | 417 |
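The per-class figures reported for BOSentiment can be recomputed from this confusion matrix. The sketch below uses plain Python, treats rows as human annotation and columns as BOSentiment predictions, and computes precision, recall, F1, accuracy and Hamming loss (which, for single-label multi-class data, equals 1 − accuracy). The variable names are illustrative.

```python
LABELS = ["negative", "neutral", "positive"]
# Rows: human annotation; columns: BOSentiment prediction (values from the table above).
MATRIX = [
    [221, 8, 9],
    [9, 95, 2],
    [8, 9, 56],
]

total = sum(sum(row) for row in MATRIX)
correct = sum(MATRIX[i][i] for i in range(len(LABELS)))
accuracy = correct / total
hamming_loss = 1 - accuracy  # fraction of misclassified tweets

per_class = {}
for i, label in enumerate(LABELS):
    tp = MATRIX[i][i]
    predicted = sum(MATRIX[r][i] for r in range(len(LABELS)))  # column sum
    actual = sum(MATRIX[i])                                     # row sum
    precision = tp / predicted
    recall = tp / actual
    f1 = 2 * precision * recall / (precision + recall)
    per_class[label] = (round(precision, 2), round(recall, 2), round(f1, 2))

print(f"accuracy={accuracy:.2f} hamming_loss={hamming_loss:.2f}")
for label, scores in per_class.items():
    print(label, scores)
```

The recomputed values (precision, recall and F1 of 0.93/0.93/0.93, 0.85/0.90/0.87 and 0.84/0.77/0.80, an accuracy of 0.89 and a Hamming loss of 0.11) agree with the BOSentiment rows of the classifier comparison table.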

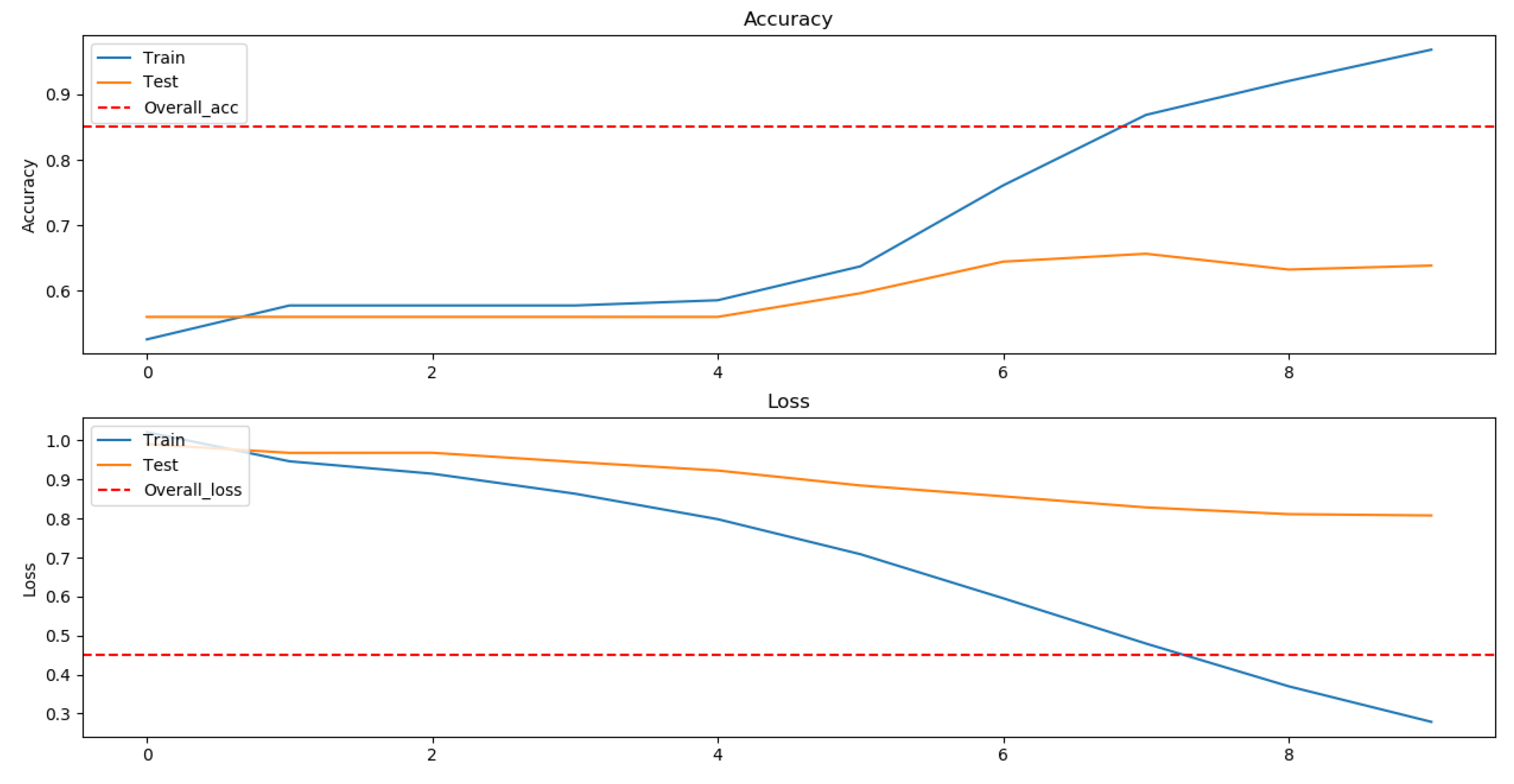

| Model | Training acc | Testing acc (40:60) | Testing acc (30:70) | Testing acc (20:80) |
|---|---|---|---|---|
| CNN | 0.6386 | 0.7938 | 0.8609 | 0.9376 |
| CNNBOSentiment | 0.7349 | 0.8153 | 0.8082 | 0.8345 |

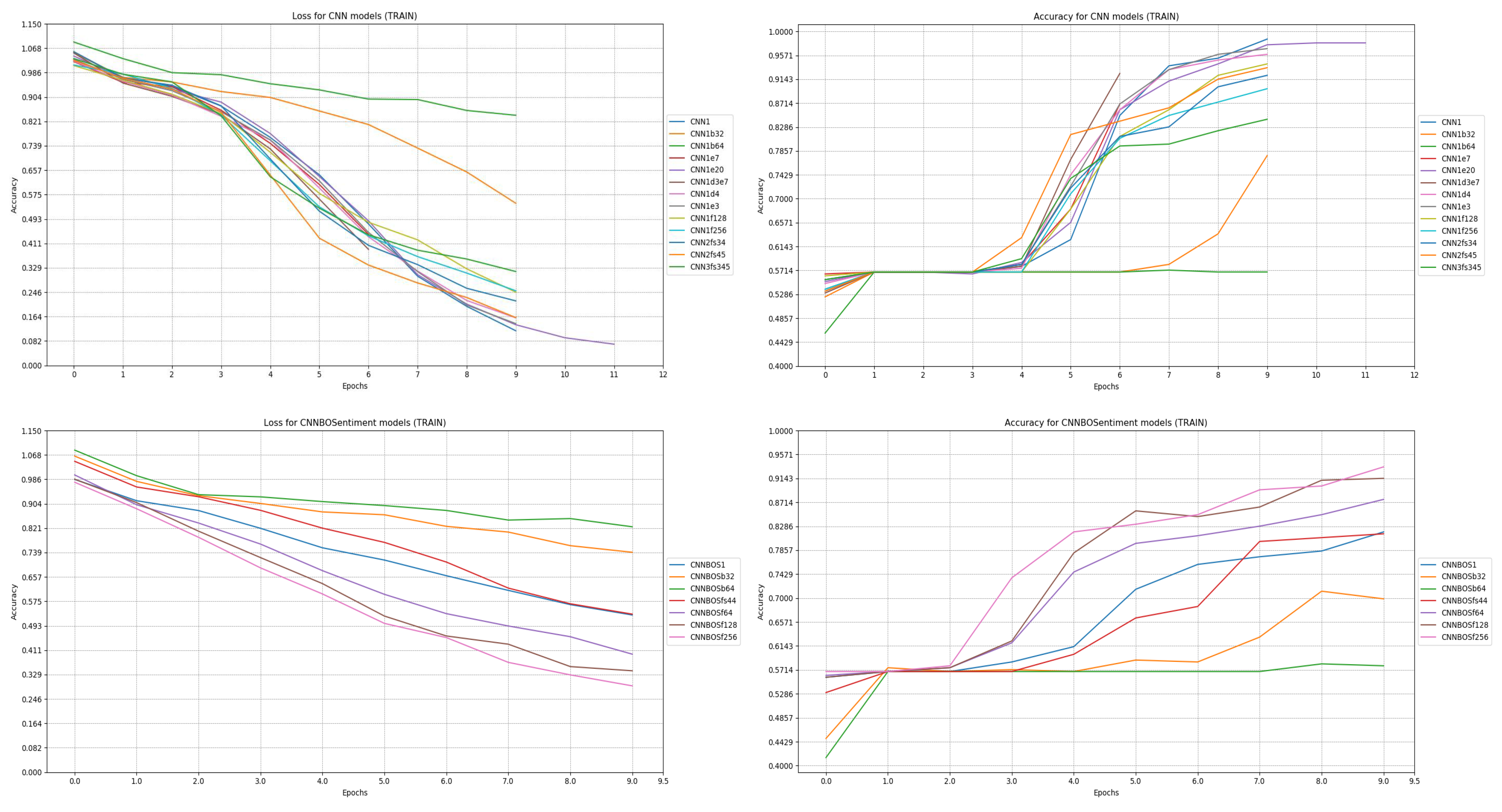

| Model | Train Loss | Train Accuracy | Test Loss | Test Accuracy |
|---|---|---|---|---|
| CNN | | | | |
| CNN1 | 0.1165 | 0.9863 | 0.8828 | 0.6480 |
| CNN1b32 | 0.5457 | 0.7774 | 0.5868 | 0.8273 |
| CNN1b64 | 0.8420 | 0.5685 | 0.8346 | 0.5707 |
| CNN1e7 | 0.4413 | 0.8699 | 0.4750 | 0.8465 |
| CNN1e12 | 0.0715 | 0.9795 | 0.3556 | 0.8801 |
| CNN1d3e7 | 0.3905 | 0.9247 | 0.4477 | 0.8801 |
| CNN1d4 | 0.1602 | 0.9589 | 0.3222 | 0.8633 |
| CNN1e3 | 0.1405 | 0.9692 | 0.3287 | 0.8585 |
| CNN1f128 | 0.2465 | 0.9418 | 0.4095 | 0.8753 |
| CNN1f256 | 0.2511 | 0.8973 | 0.4530 | 0.8633 |
| CNN2fs34 | 0.2171 | 0.9212 | 0.5986 | 0.8417 |
| CNN2fs45 | 0.1608 | 0.9349 | 0.5301 | 0.8537 |
| CNN3fs345 | 0.3160 | 0.8425 | 1.0663 | 0.7314 |
| CNNBOSentiment | | | | |
| CNNBOS1 | 0.5290 | 0.8185 | 0.5524 | 0.8273 |
| CNNBOSb32 | 0.7401 | 0.6986 | 0.7128 | 0.7002 |
| CNNBOSb64 | 0.8260 | 0.5788 | 0.8133 | 0.5731 |
| CNNBOSfs44 | 0.5319 | 0.8151 | 0.5167 | 0.8345 |
| CNNBOSf64 | 0.3972 | 0.8767 | 0.4693 | 0.8681 |
| CNNBOSf128 | 0.3412 | 0.9144 | 0.3981 | 0.8969 |
| CNNBOSf256 | 0.2905 | 0.9349 | 0.3513 | 0.9137 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jahić, S.; Vičič, J. Impact of Negation and AnA-Words on Overall Sentiment Value of the Text Written in the Bosnian Language. Appl. Sci. 2023, 13, 7760. https://doi.org/10.3390/app13137760
